
I’ve decided to learn the clarinet and on one hand wow learning an instrument as an adult is so much easier than as a kid?? I’m two days in and feel confident with the fingerings of two octaves of G major (tone is... a work in progress).

On the other hand, coming from a flute, the register key and the SHEER NUMBER of keys on this thing break my brain. Why can I press two different sets of keys to open or close the same exact holes? I mean? Sure, it’s convenient while my hands are learning what to do but also WHY was that necessary in the first place? And this whole tipping your hand to cover one hole but hit a different key simultaneously? Then there’s the register key nonsense? You mean you use the same fingerings, plus opening this tiny little hole, to raise the pitch??
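The transpose-by-semitones arithmetic mentioned in the tags can be sketched in a few lines of Java. This assumes a standard B♭ soprano clarinet, which sounds 2 semitones below the written note (an A clarinet would use 3). It uses sharp note names only. It also models the register key, which on a clarinet raises the pitch by a twelfth (19 semitones) rather than the octave a flute overblows to. The class and method names are made up for illustration.

```java
import java.util.Arrays;

// Sketch: transposition arithmetic for a B-flat clarinet (sharp names only).
class ClarinetMath {
    private static final String[] NOTES =
        {"C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"};

    // ASSUMPTION: B-flat clarinet. It sounds a major second (2 semitones) below
    // the written note, so the written note is concert pitch + 2 semitones.
    static final int BB_CLARINET_SEMITONES = 2;

    // The clarinet register key overblows at a twelfth (octave + fifth = 19 semitones).
    static final int TWELFTH = 19;

    // Move a note name up (positive) or down (negative) by some semitones.
    static String shift(String note, int semitones) {
        int index = Arrays.asList(NOTES).indexOf(note);
        if (index < 0) {
            throw new IllegalArgumentException("Unknown note: " + note);
        }
        return NOTES[Math.floorMod(index + semitones, 12)];
    }

    // Which written note to play so the clarinet sounds the given concert (flute) pitch.
    static String writtenForConcert(String concert) {
        return shift(concert, BB_CLARINET_SEMITONES);
    }

    // What a written clarinet note actually sounds like at concert pitch.
    static String concertForWritten(String written) {
        return shift(written, -BB_CLARINET_SEMITONES);
    }

    public static void main(String[] args) {
        System.out.println(writtenForConcert("C"));  // D
        System.out.println(concertForWritten("C"));  // A# (B-flat)
        System.out.println(shift("E", TWELFTH));     // B: low E plus the register key
    }
}
```

So to sound the C you'd play on a flute, a B♭ clarinetist reads and fingers a D.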

#Personal#flute#clarinet#and yes I have experience with music theory#and yes I understand the concept of transposing#and transposing instruments#But I've only ever played C instruments#And it FRUSTRATES me that if I play a C on my flute it does not sound the same as a C on the clarinet#I can do the whole transpose up by x semitones#or transpose from key of x to key of y#but for SOME REASON#I can't actually wrap my head around it with an actual instrument#I get half way there then break completely


Dancing in the rain [Bang Chan]

Fandom: Stray Kids

Pairing: [Chan x GN! Reader]

Warnings: mentions of thunder, but it's depicted in a more serene view and not scary

Word count: 1.5k

Requested by: SKZ8BLACKPINK4 on Quotev

Prompts: “Thanks, it’s the insomnia.”

Chan blinked awake when he heard the soft closing of the door to your shared room. He tiredly reached a hand towards your side of the bed and came up empty, but still slightly warm. It was one of those miraculous nights where the two of you were able to fall asleep at the same time, but it seemed like you had other plans.

He let out a yawn before sitting up in bed and looking around through nearly closed eyes as he tried to figure out what was happening. It was still dark, and turning on his phone (through even more squinted eyes) told him it was a little after 4 am. He let out a groan from the fatigue still weighing down on his bones before falling face-first into his pillows. He was sure you’d come back; you were probably just getting water.

His eyes closed fully and he readjusted himself to get more comfortable, but it was no good. His mind was already awake. Felix had said he was going to swing by later to drop off some cookies. And he needed to pester Changbin about sending him a sample of the rap he was putting together. What were you doing? He couldn’t hear the sounds of anything awake in the rest of your shared apartment. A cool beat started playing in his head, and he tried to commit it to memory so he could transpose it once he woke up. Maybe it was a beat that Han could use; he could imagine Han doing some lyrical rapping to it. Were you okay? It had been a while. Oh, and was that thunder outside?

A groan of defeat emitted from his throat as he sat up fully and pulled himself out of bed. If he was awake, may as well try and do something productive. The carpeted floor was soft against his feet as he pulled open the door to the bedroom and headed out into the living area. He was going to head into the kitchen to grab a drink, but he noticed the door to the patio open, and that stopped him in his tracks.

He nearly let out a shriek when he saw someone on the balcony, but his brain caught up at the last second and realized it was just you. A warm smile spread across his face as he crossed the living room. Aided by the fact that you had left the door open, he silently slipped his arms around you and rested his chin on your shoulder in a back hug. “Sorry,” you said, rubbing the arms around your waist. “Did I wake you up?”

“It’s okay,” he murmured back in his warm, low voice. “What are you doing out here? It’s like 4 am.”

“As if you can argue against that,” you teased with a smile. “Mr. Disastrous Sleep Schedule.”

He rolled his eyes at your jab. “Thanks, it’s the insomnia. But,” he pressed a quick kiss to your cheek, “I’m still asking.” There was a flash of light followed by a rolling rumble that made him realize that yes, it was thunderstorming outside.

“It’s raining.” He hummed in agreement and nuzzled his face into your neck. “It’s wonderful.” You took a deep breath and smiled as you inhaled the smell of wet earth. The gentle pattering of the rain, interrupted by thunder, was all you could hear; down below, there was hardly a car on the street. There was another flash of lightning, and you gasped as you saw it fork through the sky before a crack of thunder followed. “I kind of want to dance in it.”

That got Chan to open his eyes fully as he looked at you with concern and shock barely illuminated on his face. “You want to dance in it?” he repeated, not sure that he heard it in his still-waking-up state.

You stuck a hand beyond the balcony to let raindrops cover it and you were pleased to find it cool, but not hard. “It’s not bad. And tomorrow’s Saturday anyway.” Chan himself wasn’t super keen on getting wet in his clothes, especially because it was cold and he was definitely not going to wear swim trunks out there. “Do you want to dance with me?” But he was totally whipped for you. “It’ll be less than five minutes...probably.” He couldn't say no.

“Right now?” he couldn’t help but ask as he glanced down at his outfit to confirm that he was shirtless and in a pair of sweatpants.

You glanced at him with a bit of a spark in your eye as you nudged him. “After you put on a shirt.” You turned back to the rain wistfully. “It might pass soon.”

He gently tugged you back inside, and when you looked at him in confusion, he said, “Grab your keys.” You grinned at his small confirmation as you hurried to put on one of the light jackets you had left on the couch and grab your keys from the little box by the door. Chan emerged from your room with a light jacket covering his bare chest, and the two of you slipped out of your apartment.

When you got outside, you let out a giggle of delight as you spun around under the downpour. It might have gotten harder, but you didn’t mind. Your sandal-clad feet splashed through several puddles, and your joyful shouts seemed out of place in the otherwise silent night. You noticed Chan still standing apprehensively under the overhang in front of your apartment complex. “May I have this dance?” you asked, extending a hand to him.

His face melted into a smile as he looked at you, nearly perfectly illuminated under one of the streetlights. “Can’t leave you hanging, can I?” he asked as he confidently stepped out to take your hand. “That wouldn’t be very gentlemanly of me.”

You smiled as the two of you did a janky version of the waltz under the falling rain. Your feet were soaked as you breezed through puddles, and the sky continued to be punctuated with bursts of lightning and thunder. “Thanks, Chan,” you murmured as you leaned into his chest, the two of you swaying in place.

“Anything for you, babe.”

In the end, it was a gust of wind, and the sneeze it caused, that finally pulled the two of you back into the safety of the building. Your fingers were cold and clumsy as you shoved the key into the lock of your apartment, and you couldn’t help but burst into laughter once the door shut.

“What?” Chan asked gently as he pulled you into another wet hug.

“Nothing,” you said with a smile. “Just happy.”

Chan felt a smile grow on his face as well; your joy was just infectious like that. “I’m glad you’re happy.” He started steering the two of you toward the bathroom. “But let’s get dried off.”

It wasn’t long until the two of you were in dry, warm clothes and back in bed. You cooed as you ran your hand through his hair, which had gone curly from the rain and the somewhat aggressive towel-drying. “Felix is coming by later today,” Chan reported as the two of you cuddled and began to drift off to sleep.

“K.”

Luckily for Chan, he was able to fall asleep. It felt like only minutes, though, before he was woken up by rapid knocking and his phone vibrating wildly.

“Is that Felix?” you squinted as you stared at his phone. Your room was a lot brighter now that the sun had risen.

“Probably.” Chan groaned as he pulled himself out of bed. “You can go back to bed,” he protested when he noticed you were also crawling out.

“I’ll make breakfast.” You smiled as the two of you emerged from your room to answer the door.

“Took you long enough,” Changbin couldn’t help but bite in annoyance when Chan finally opened the door.

“You’re not Felix.”

Changbin scoffed. “Feeling the welcome.”

“Sorry,” Chan replied as he rubbed his face. “I thought Felix was coming over today.”

“He is,” Changbin agreed. “He was baking enough cookies to feed an army earlier. Hey, (y/n)!” Your guest waved as he noticed you in the kitchen making coffee.

“Hey Changbin, how’ve you been?”

“Did the two of you just wake up?” he asked, looking between the two of you and taking in the slight eye bags and general ‘I just woke up’ vibes.

“Yeah,” Chan replied as he searched the apartment for his laptop.

“Rough night?” Changbin asked with a smirk.

“Seo Changbin!” you shouted, throwing a pot holder at him, but he easily ducked and ran away laughing.


AN: Oops, I did not mean to disappear for over a month. I'm sorry!! Haha but inspiration struck (as usual) in the middle of the night, so apologies if this is a little messy as it's completely unedited (I didn't want to leave you guys hanging ^^;). On another note more related to skz, NOEASY! So excited!! I'm loving Felix's big hat and Chan's been getting way too comfortable with crop tops. Are you sure you're foive sir?? xD

#stray kids#stray kids fanfiction#stray kids fic#stray kids fanfic#stray kids imagine#skz#stray kids x y/n#stray kids x reaer#x reader#reader insert#bang chan#bang chan x you#skz bang chan#skz chan#chan x reader#skz chan x reader#stray kids fluff#dancing in the rain#kimi's writing#skz imagine#skz fanfic#skz au#skz x reader#skz x you#skz x y/n#bang chan x reader#bang chan x y/n#stray kids bang chan#bang chan imagine#bang christopher chan


Okay... you have no idea what a complicated question you just asked. Therefore, I’m gonna give this one its own post, for simplicity’s sake.

So! My language ciphers are all English-based, because that’s my native language, and are usually constructed based on the placement of keyboard letters. (I say usually, because there are at least two exceptions.)

.............................................................................................................................

That said: Hello and Welcome to a Quick Guide to the DL Languages!

Labrynnian - Use the phonetic alphabet, but backwards. Insert an ā after letters a-e, ē for f-j, ī for k-o, ō for p-t, and ū for u-z. In case of excessive vowels, insert a ł. There is a specific verb-possessive relationship, however, where something like 'My name is Changeling' becomes 'Name-my is Changeling'.

Holodese - Move one key to the right. If at any point it seems like you'd trip over your tongue trying to pronounce the result, insert an apostrophe to give your mouth a breather. Only use one apostrophe per word, since more than that makes it hard to read; for all other necessary mouth-break moments, use an ᾳ as a filler vowel. In case of excessive vowels, use an ӽ. Contractions and possessives are indicated with a hyphen in place of the English apostrophe.

Subrosian - Move one key to the right, except in the case of vowels. All vowels stay the same. The apostrophe and hyphen rules from Holodese also apply here, though much less frequently - same with the filler consonant and vowel if necessary. Subrosian is intended as a subset of Holodese, hence the similarities.

Koholish - Move one key to the left. Insert an 'e' between any letters that do not make phonetic sense. In the case of an 'r' transposing into an 'e', that 'e' becomes an ë in order to differentiate. There are no contractions in this one, so words like 'don't' become 'do not', except that it's actually 'donot'. Possessives are in progress.
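Holodese, Subrosian and Koholish are all keyboard shifts, so the mechanical part of each can be sketched in Java. This assumes a QWERTY layout. It also assumes that moving past the end of a row wraps around to the other end of the same row, since the post doesn't say what happens to P, L or M. The apostrophes, filler letters and Koholish 'e' insertions depend on pronunciation, so they are left out. All names are illustrative.

```java
import java.util.Locale;

// Sketch of the keyboard-shift ciphers. ASSUMPTION: shifts wrap within a QWERTY row
// (moving right from 'p' gives 'q', moving left from 'a' gives 'l').
// Pronunciation-based extras (apostrophes, fillers, Koholish 'e') are not modeled.
class KeyboardShift {
    private static final String[] ROWS = {"qwertyuiop", "asdfghjkl", "zxcvbnm"};
    private static final String VOWELS = "aeiou";

    // Move one letter `step` keys along its row (+1 = right, -1 = left).
    static char shiftKey(char c, int step) {
        for (String row : ROWS) {
            int i = row.indexOf(c);
            if (i >= 0) {
                return row.charAt(Math.floorMod(i + step, row.length()));
            }
        }
        return c; // spaces, digits and punctuation pass through unchanged
    }

    // Holodese: every letter moves one key to the right.
    static String holodese(String text) {
        StringBuilder out = new StringBuilder();
        for (char c : text.toLowerCase(Locale.ROOT).toCharArray()) {
            out.append(shiftKey(c, 1));
        }
        return out.toString();
    }

    // Subrosian: like Holodese, but vowels stay the same.
    static String subrosian(String text) {
        StringBuilder out = new StringBuilder();
        for (char c : text.toLowerCase(Locale.ROOT).toCharArray()) {
            out.append(VOWELS.indexOf(c) >= 0 ? c : shiftKey(c, 1));
        }
        return out.toString();
    }

    // Koholish: every letter moves one key to the left. An 'r' lands on 'e',
    // so it is written as e-with-umlaut to keep it distinct from the filler 'e'.
    static String koholish(String text) {
        StringBuilder out = new StringBuilder();
        for (char c : text.toLowerCase(Locale.ROOT).toCharArray()) {
            out.append(c == 'r' ? '\u00EB' : shiftKey(c, -1));
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(holodese("dog"));   // fph
        System.out.println(subrosian("dog"));  // foh
        System.out.println(koholish("rat"));   // ëlr
    }
}
```

Before any of the pronunciation fixes, 'dog' comes out as 'fph' in Holodese and 'foh' in Subrosian, and 'rat' comes out as 'ëlr' in Koholish.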

Divinet - Take your sentence and remove all the consonants, leaving only the vowels. Fill in the former consonants with either 'w' or 'y', depending on whether each was a hard or a soft sound. Hard sounds: B, C, D, K, L, M, N, P, Q, T, V, X. Soft sounds: F, G, H, J, R, S, Z, TH, SH. For any 'y' that is pre-existing, use a ẏ to differentiate; same for 'w' with ẇ. The filler vowel is æ, and the filler consonant is ḣ.
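The Divinet consonant swap can be sketched in Java as well. The sketch assumes that hard consonants become 'w' and soft ones become 'y'. The post pairs "'w' or 'y'" with "hard or a soft" in that order, but doesn't say so outright. It also treats only a, e, i, o, u as vowels and reads TH and SH as single soft sounds. The æ/ḣ fillers are left out, and the names are illustrative.

```java
import java.util.Locale;

// Sketch of Divinet. ASSUMPTION: hard -> 'w', soft -> 'y'; TH and SH each become one 'y'.
class Divinet {
    private static final String HARD = "bcdklmnpqtvx";
    private static final String SOFT = "fghjrsz";
    private static final String VOWELS = "aeiou";

    static String encode(String text) {
        String s = text.toLowerCase(Locale.ROOT);
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            if ((c == 't' || c == 's') && i + 1 < s.length() && s.charAt(i + 1) == 'h') {
                out.append('y'); // TH / SH count as one soft sound
                i++;             // skip the 'h'
            } else if (VOWELS.indexOf(c) >= 0) {
                out.append(c);   // vowels are kept
            } else if (c == 'y') {
                out.append('\u1E8F'); // y with dot above marks an original 'y'
            } else if (c == 'w') {
                out.append('\u1E87'); // w with dot above marks an original 'w'
            } else if (HARD.indexOf(c) >= 0) {
                out.append('w');
            } else if (SOFT.indexOf(c) >= 0) {
                out.append('y');
            } else {
                out.append(c);   // spaces and punctuation pass through
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(encode("the cat")); // ye waw
        System.out.println(encode("show"));    // yoẇ
    }
}
```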

Darkling - Take your words and rearrange them by their alphabetical pronunciation. Apply the Koholish Rule of playing nice with phonetics, but add õ instead. In the event of excessive vowels, insert an ñ to make them play nice.

Archaic - Might be Ancient Hylian, haven't decided yet. Take the alphabet and numerize it, then apply those numbers to the keyboard: 1 is Q, 2 is W, 3 is E, etc. Then take your word, find the alphabet number of each letter, and apply those numbers to the keyboard pattern established above. Add û between anything that doesn't make phonetic sense (the Koholish Rule).
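The two numbering steps in Archaic collapse into a single substitution: the nth letter of the alphabet becomes the nth key on a QWERTY keyboard, read row by row. A minimal Java sketch follows. The names are illustrative, and the û insertions are left out because they depend on pronunciation.

```java
import java.util.Locale;

// Sketch of Archaic: letter number n (A = 1) becomes keyboard key number n (1 = Q, 2 = W, ...).
class Archaic {
    // QWERTY letters read row by row: key 1 is 'q', key 26 is 'm'.
    private static final String KEYS = "qwertyuiopasdfghjklzxcvbnm";

    static String encode(String text) {
        StringBuilder out = new StringBuilder();
        for (char c : text.toLowerCase(Locale.ROOT).toCharArray()) {
            if (c >= 'a' && c <= 'z') {
                out.append(KEYS.charAt(c - 'a')); // c - 'a' is the zero-based alphabet number
            } else {
                out.append(c); // non-letters pass through
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(encode("cat")); // eqz
    }
}
```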

.............................................................................................................................

Not all of these have been used in DL yet, for various reasons, but I’m sure you recognize the ones which have. The only languages that I don’t have ciphers for yet are Firetongue, which thus far consists of growling noises and is understandably hard to craft for, and Jabber (the Picori language), which has an in-game cipher of sorts that I’ve yet to crack completely.

Also, because I know that the words ‘Alphabetical Alphabet’ are confusing, here is the appropriate reference.

Aich - H

Arr - R

Ay - A

Aye - I

Bee - B

Dee - D

Djee - G

Double-you - W

Ee - E

Eff - F

El - L

Em - M

En - N

Ess - S

Ex - X

Jay - J

Kay - K

Kew - Q

Oh - O

Pee - P

See - C

Tee - T

Vee - V

Wye - Y

You - U

Zee - Z
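The reference list above is just the 26 letter names sorted alphabetically, so it can be rebuilt mechanically. Here is a minimal Java sketch using the names from the list. The post doesn't spell out exactly how Darkling applies this ordering, so the sketch only rebuilds the ordering itself.

```java
import java.util.Map;
import java.util.TreeMap;

// Rebuilds the "alphabetical alphabet": letters ordered by the spelling of their spoken names.
class AlphabeticalAlphabet {
    private static final String[][] NAMES = {
        {"Ay", "A"}, {"Bee", "B"}, {"See", "C"}, {"Dee", "D"}, {"Ee", "E"},
        {"Eff", "F"}, {"Djee", "G"}, {"Aich", "H"}, {"Aye", "I"}, {"Jay", "J"},
        {"Kay", "K"}, {"El", "L"}, {"Em", "M"}, {"En", "N"}, {"Oh", "O"},
        {"Pee", "P"}, {"Kew", "Q"}, {"Arr", "R"}, {"Ess", "S"}, {"Tee", "T"},
        {"You", "U"}, {"Vee", "V"}, {"Double-you", "W"}, {"Ex", "X"},
        {"Wye", "Y"}, {"Zee", "Z"}
    };

    static String order() {
        // A TreeMap keeps its keys (the spoken names) in sorted order.
        Map<String, String> byName = new TreeMap<>();
        for (String[] pair : NAMES) {
            byName.put(pair[0], pair[1]);
        }
        return String.join("", byName.values());
    }

    public static void main(String[] args) {
        System.out.println(order()); // HRAIBDGWEFLMNSXJKQOPCTVYUZ
    }
}
```

The result, HRAIBDGWEFLMNSXJKQOPCTVYUZ, matches the list from Aich to Zee.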

Hope that answers your questions!

#Changling Answers#DL Questions#The DL Languages#and all the ciphers I use for them#if anything doesn't make sense then I'm very sorry#but it makes sense to me and I don't know how to explain it better#Dimensional Links


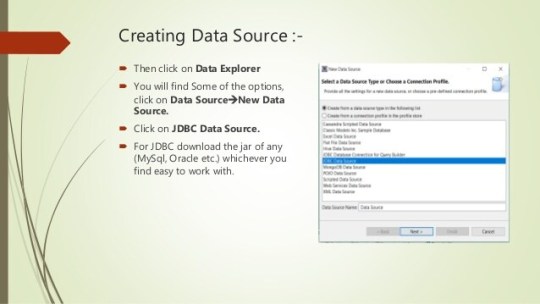

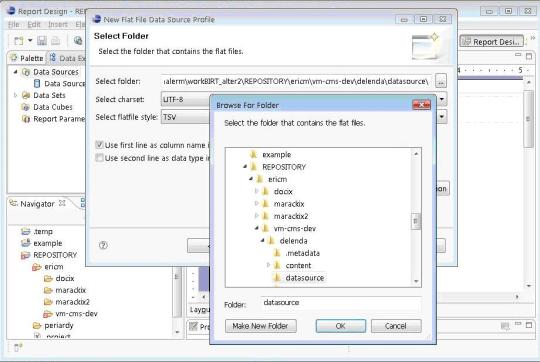

Birt Pojo Data Source


Another option is to use esProc with BIRT through an SPL script. That way, your BIRT reports can query two data sources, whatever kind of database each one is, and perform other computations that are inconvenient in BIRT.

Using a POJO Data Source in BIRT 4.3

BIRT 4.3 supports a POJO data source, which we can use to create a report. To use it, we need to create a data set class.
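A minimal sketch of such a data set class follows. It rests on an assumption about the BIRT 4.3 POJO data set convention, which should be checked against the BIRT documentation. Under that assumption, BIRT calls three public methods on the class by reflection: `open(Object appContext, Map<String, Object> dataSetParamValues)`, `next()`, which returns the next row object or `null` when done, and `close()`. Each row is a plain object whose getters become columns. `Employee`, `EmployeeDataSet` and the sample rows are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical row class: the POJO data set reads columns through public getters.
class Employee {
    private final String name;
    private final double salary;

    Employee(String name, double salary) {
        this.name = name;
        this.salary = salary;
    }

    public String getName() { return name; }
    public double getSalary() { return salary; }
}

// Hypothetical data set class. ASSUMPTION: BIRT 4.3 finds open/next/close by
// reflection, so no BIRT interface or import is needed here.
class EmployeeDataSet {
    private Iterator<Employee> rows;

    // Called once before reading; this sketch ignores the context and parameters.
    public void open(Object appContext, Map<String, Object> dataSetParamValues) {
        List<Employee> data = new ArrayList<>();
        data.add(new Employee("Alice", 5200.0)); // illustrative sample rows
        data.add(new Employee("Bob", 4800.0));
        rows = data.iterator();
    }

    // Returns the next row, or null once the data set is exhausted.
    public Object next() {
        return (rows != null && rows.hasNext()) ? rows.next() : null;
    }

    public void close() {
        rows = null;
    }

    // Mimics the open / next-until-null / close cycle outside BIRT.
    public static void main(String[] args) {
        EmployeeDataSet ds = new EmployeeDataSet();
        ds.open(null, Collections.emptyMap());
        Object row;
        while ((row = ds.next()) != null) {
            Employee e = (Employee) row;
            System.out.println(e.getName() + " " + e.getSalary());
        }
        ds.close();
    }
}
```

In the report design you would then point the POJO data set at the data set class and map its columns to the row getters.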

BIRT is an open source engine to create data visualizations that can be integrated into Java web applications. It's a top-level software project within the Eclipse Foundation and leverages contributions by IBM and Innovent Solutions. It was started and sponsored by Actuate at the end of 2004.


Data: databases, web services, and Java objects can all supply data to your BIRT report. BIRT provides JDBC, XML, Web Services, and Flat File support, as well as support for using code to get at other sources of data. BIRT's use of the Open Data Access (ODA) framework allows anyone to build new UI and runtime support for any kind of tabular data.


BIRT (146)

Build (4)

101416 Incorrect font format in BIRT Developer Guide in Help menu (closed/fixed)

103303 The chart engine.jar in the runtime distribution is the wrong file (closed/fixed)

105638 Rotated text report item displays in palette by default (closed/fixed)

106247 Eclpse Distro Path (closed/fixed)

Chart (46)

102417 Chart title is set to Chinese, can not be previewed correctly.(resolved/wontfix)

103130 Chart title is overlapped when previewed.(closed/worksforme)

103143 Data format doesn't work in Chart (closed/invalid)

103153 Scatter chart, if tick transposed, image does not be changed.(closed/fixed)

103168 X Axis data is lost when transposed.(closed/fixed)

103298 X series in a pie chart in wrong order (resolved/fixed)

103438 Scatter chart is displayed differently when it is transferred from another chart type.(closed/fixed)

103439 Steps in chart builder can't be restored to default setting when cleared out.(closed/fixed)

103453 Scale for 'datetime' type in chart builder doesn't work.(closed/fixed)

103460 Multiple x axis are not supported.(closed/fixed)

103463 Datetime marker line can't be set.(closed/worksforme)

103595 Datetime data in Chart axis of example are displayed inconsistently in layout.(closed/invalid)

103599 Resizing chart results in Eclipse hang up.(closed/fixed)

103602 Exception is thrown when setting chart height or width.(closed/worksforme)

103613 Linking chart by parameter causes error when a NULL param value is reached (resolved/fixed)

103617 if Label positioin is changed, then can not return initial state.(closed/fixed)

103618 Bar Chart , Label position is lack of inside item.(closed/fixed)

103770 don't use hyperlink (resolved/invalid)

103780 Chart is not displayed in layout view when transposed.(closed/fixed)

103782 Attached chart design file can't be previewed.(closed/fixed)

103787 Add a new Y-axis and set it's title to visible will cause chartException.(closed/fixed)

103960 If x axis type is 'Linear', scale should be grayed out.(closed/fixed)

103961 Marker and line doesn't work for X Axis.(closed/fixed)

103963 If there is no data for series, it should pop up a friendly error message to remind.(closed/fixed)

104248 Axis types on Chart dialog are not displayed in localized language.(verified/fixed)

104252 Sort option on Chart X-Series dialog is not displayed in localized language.(verified/fixed)

104254 Type and Action value on Chart Y-Series are not displayed in localized language.(verified/fixed)

104278 Values in Tick Style list box are not displayed in localized language.(verified/fixed)

104283 Value for Label Position on Chart dialog are not displayed in localized language.(verified/fixed)

104290 Hard coded strings on Chart Attributes>Y Series dialog (verified/fixed)

104313 Set the image to the chart label background, system throws exception (closed/fixed)

104315 Plot background image can not always take effort .(closed/worksforme)

104450 If plot background is set, data set binding is lost.(closed/fixed)

104465 Data values of Y-series cannot be displayed correctly (closed/invalid)

104613 Steps changed after chart is transposed.(closed/invalid)

104628 Chart Major.Minor Grid line style won't display in layout (closed/wontfix)

104631 If set a long title to chart X Axis,Axis type will be truncated (closed/fixed)

99331 Eclipse hangs when closing 'Chart Dialog' (resolved/fixed)

100746 Whole chart should display smaller on scale, not only display title and legend after resize (closed/invalid)

101039 Series colors do not have different default values (closed/fixed)

101179 Unfriendly error message display when preview chart with invalid data set (closed/fixed)

101806 Chart axis label background is not displayed properly in layout view.(closed/fixed)

101827 Exception should be processed before written to error log or some error message should pop up to warn user (closed/fixed)

101855 Chart title top.bottom inset should display right in layout view (closed/fixed)

101868 series value format can display as setting (closed/fixed)

102455 Pie Chart is not round (closed/fixed)

Data (22)

94542 Grouping versus Sorting (closed/invalid)

99479 After Update Birt 1.0 error Cannot load JDBC Driver class (resolved/fixed)

101553 Report Parameters are not working (resolved/duplicate)

101864 NullPointerException throws out when setting the parameter type as auto (closed/fixed)

101865 Try to set report parameter's value in beforeOpen method of data source,error occurred when save but preview was correct.(closed/duplicate)

103135 Change the name of one computed column which are used in dataset filter will cause the dataset filter invalid.(closed/fixed)

103151 When a data set parameter is generated automatically, error message doesn't always pop up.(closed/fixed)

103292 No error message when group key dismatches the interval (closed/fixed)

103296 Data set column doesn't work when it is entered by keyboard in data set filter page.(closed/fixed)

103346 Weekly interval groups by 7 day increments, not by week (resolved/fixed)

103625 Database URL will be refreshed when editing JAR files in Manage drivers dialog (closed/fixed)

104174 If I re-select csv file name, columns selected before in right pane disappeared.(closed/fixed)

104178 Linux:No file listed for '*.*' filter when new a flat file data source (closed/fixed)

104185 An additional column is generated when create a script data set (closed/fixed)

104204 Test connection fail when try to connect birt derby sample db.(closed/fixed)

104397 JDBC Data Set Editor showing empty.system schemas (resolved/fixed)

104452 IllegalArgumentException thrown out when double click on data set after change flatfile data source charset (closed/fixed)

104578 German labels are truncated on Manage JDBC drivers dialog.(verified/fixed)

104611 Smoke Test: Jdbcodbc data source can't be connected.(closed/fixed)

104616 A sql statement with parameter can not be changed if you go out of 'Edit DataSet'->'Query' (closed/fixed)

106250 POJO Data Source (closed/fixed)

103802 Aggregate function in a group footer using Total.OVERALL fails (resolved/fixed)

Data Access (16)

99872 Implementing the ODA Log Configuration in BIRT ODA Drivers (resolved/fixed)

100090 JDBC driver loaded either by data explorer or report viewer (resolved/fixed)

100495 'next' button is grayed out in 'new data source' window when create a data source.(closed/fixed)

100501 NPE thrown out when new a flat file data set (closed/fixed)

101185 NullPointerException thrown out after click on Finish in data set dailog (closed/fixed)

101372 Limit the data set to a particular schema for JDBC connection (resolved/fixed)

102405 Broken display names when Qry has Dup col names (resolved/fixed)

103531 Change data set type from Flatfile Data Set to Flat File Data Set (resolved/fixed)

103533 Change Flatfile Data Source to Flat File Data Source (resolved/fixed)

103544 Allow any filename extension for CSV files (resolved/fixed)

103598 Flat file - Use second line as data type indicator only works for String (resolved/invalid)

103600 Change spelling in error message (resolved/fixed)

103942 Cannot create a JDBC connection (resolved/invalid)

104306 ODA Consumer handling of a null argument for IQuery.prepare (resolved/fixed)

104630 Column icons don't show up in connecting derby database (closed/fixed)

105112 ODA Log Configuration's Optional Attributes (resolved/fixed)

Documentation (3)

101582 Smoke Test: NullPointerException is thrown out when open an existing report design file in which there is grid.(closed/invalid)

101969 Wrong reference in BIRT Developer Guide (closed/fixed)

101977 API document is inconsistent with real implementation (closed/fixed)

Report (7)

87022 Use preservelastmodified in Ant Copy scripts (closed/fixed)

92091 rom.def - allowsUserProperties set to false for Styles, and other entries (resolved/fixed)

101825 Set bold style to grid in property editor and it will be reflected in grid highlight box when you add a highlight rule but will not when you modify it.(closed/fixed)

102496 onRender of Data item isn't executed (resolved/fixed)

102725 DimensionHandle can not parse '1,2in' (resolved/fixed)

103517 Cannot load 'Driver' class (resolved/fixed)

104769 org.eclipse.birt.report.model.metadata.ExtensionException found in meta.log (resolved/fixed)

Report Designer (28)

87803 Data Explorer view doesn't show new data source or data set (resolved/fixed)

87804 Incorrect rendering in BIRT property editor (closed/fixed)

87830 NullPointerException in org.eclipse.birt.report.designer.internal.ui.editors.schematic.ReportDesigner.selectionChanged (resolved/fixed)

88935 Wrong string formatting (upper and lower) (resolved/fixed)

100354 '%datasource.name' is listed in data sources list when create a data source.(closed/fixed)

100964 Provide Support for the Eclipse 3.1 Platform Release (resolved/fixed)

100965 Create a RCP version of BIRT Report Designer (resolved/fixed)

100999 Ctrl+Z.Y doesn't work in expression builder (closed/fixed)

101000 Font is not sorted in order.(closed/fixed)

101586 Exception throw out when new a table group with invalid group field (closed/fixed)

101973 Digit number for ruler displays partially when setting bigger value (closed/fixed)

102598 i18n bug mulitplies numbers by 10 (resolved/fixed)

102713 Undo.Redo can't be refreshed right away after setting hyperlink.(closed/fixed)

102969 Paste should be disabled when nothing is copied (closed/wontfix)

102973 Table group interval shouldn't change after preview (closed/fixed)

103126 hyperlink content in property editor can't be cleared (closed/fixed)

103158 NPE throw out when click on edit group in cheat sheet when delete table group (closed/fixed)

103171 edit the dynamic text won't restore last expression to expression builder (closed/invalid)

103526 New Data Set dialog box has red square on right side (resolved/fixed)

103788 Display inconsistantly in BIRT GUI (closed/fixed)

103962 RCP:Project icon can not displayed (closed/wontfix)

104184 The button in Dataset.Filters can not work (closed/fixed)

104307 when group on a double type field, set interval less than zero should be permitted (closed/fixed)

104617 In chinese testing environment, translation need to be improved.(closed/fixed)

104623 Highlight preview doesn't work when change two highlight rules order.(closed/fixed)

104764 Acceptance Test: New Report repeatly produces same name of file (closed/fixed)

101403 Smoke Test: Property editor view doesn't work.(closed/fixed)

101407 NullPointerException when selecting Save As in top menu (closed/fixed)

Report Engine (14)

96357 Projects contain errors when opened in Eclipse (resolved/worksforme)

101361 Bugs in org.eclipse.birt.report.engine.extension.internal.ExtensionManager (resolved/fixed)

101685 Unable to use the Report Item Extension framework, when no query exists (resolved/fixed)

101751 Enhance IImagehandler interface to allow full customization of birt image handling mechanism (resolved/fixed)

103050 PDF Hyperlinks (resolved/fixed)

103106 Generates incompatible FOP files (resolved/fixed)

103120 Hyperlink file can't be retrived when click it in PDF page (closed/invalid)

103169 Format number with Symbol prefix should display right size when preview in Linux (closed/wontfix)

103449 Log BIRT extension loading details information (resolved/fixed)

103622 Inline for two grids doesn't work in layout view and pdf preview.(closed/duplicate)

104172 Blank pages will be generated when set Page Break to always.left.right.(closed/invalid)

104239 Value-Of Problem (resolved/fixed)

104457 Set table drop to all, preview does not take effect.(closed/worksforme)

104629 Generating report in custom plugin cause exception fop.xml malformed URL (resolved/fixed)

Report Viewer (5)


100596 DateTime parameters not functioning as report parameters (resolved/invalid)

104177 Spaces in parameter value which is entered are trimmed when previewed in html.(closed/wontfix)

104462 There is a parameter in a parameter group, 'show report parameters' button is always grayed out when previewed.(closed/fixed)

104759 Image imported from a related path in file system can't be previewed.(closed/invalid)

104962 Smoke Test: Data can't be displayed when previewed if data source type is 'sample datasource' or 'JDBC Data Source' except 'JDBCODBC driver'.(closed/fixed)

Test Suite (1)

100968 Provide Daily Build Test Reports on eclipse.org.birt Web Site (closed/fixed)

In a previous blog post I created a skeleton class for rendering a report using the BIRT runtime. You pass it the report parameters, the report definition (rptdesign) and an OutputStream, and it renders HTML to that stream.

If your report definition contains graphs, we run into a problem. Normally, in HTML, an image is a separate resource: BIRT will generate the images containing your graphs in a temporary directory and link to them in your HTML. For this to work, you will have to configure the Platform to write the images to a publicly accessible directory and to write the links using the correct domains. Furthermore, you’ll probably need some process to clean up the images after the reports have been viewed. If your reports are generated on the fly for a website, deciding when that has happened is most likely quite difficult. Maybe when the user logs out?

Luckily, in modern browsers we can embed the images in the same stream, bypassing the need for a temporary directory. The following trick encodes the image with base64 and embeds it directly into the HTML stream as a data URI. This works in most modern browsers, but of course Internet Explorer lags a bit behind: Internet Explorer 8 supports PNG data URIs only up to 32 KB and does not support SVG at all. Internet Explorer 9 works fine, as do the other major browsers.
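The encoding step itself needs nothing from BIRT. The following minimal, self-contained Java sketch builds the data URI with `java.util.Base64` (Java 8+) and checks it against the Internet Explorer 8 limit of 32,768 characters. The class and method names are made up for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Builds "data:<mime>;base64,<payload>" URIs, the string shape an inlining image handler returns.
class DataUri {
    // Internet Explorer 8 rejects data URIs longer than 32 KB (32,768 characters).
    static final int IE8_MAX_LENGTH = 32 * 1024;

    static String encode(String mimeType, byte[] data) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(data);
    }

    static boolean fitsInIe8(String dataUri) {
        return dataUri.length() <= IE8_MAX_LENGTH;
    }

    public static void main(String[] args) {
        String uri = encode("text/plain", "hi".getBytes(StandardCharsets.UTF_8));
        System.out.println(uri);            // data:text/plain;base64,aGk=
        System.out.println(fitsInIe8(uri)); // true
    }
}
```

Checking the length before choosing between an inline image and a linked file is one way to keep IE8 working for small charts.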

So how does it work? First, we explicitly tell the render engine to use PNG or SVG. SVG provides sharper images but will not work in Internet Explorer 8 as mentioned above. Next, we inject our own HTMLServerImageHandler which encodes the image data to base64.


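```java
import java.io.OutputStream;
import java.util.Base64;

import org.eclipse.birt.report.engine.api.HTMLRenderOption;
import org.eclipse.birt.report.engine.api.HTMLServerImageHandler;
import org.eclipse.birt.report.engine.api.IImage;

// "ReportRenderer" is a placeholder name for the rendering class from the earlier post.
public class ReportRenderer {

    private HTMLRenderOption getHtmlRenderOptions(OutputStream outs) {
        HTMLRenderOption options = new HTMLRenderOption();
        // Each call replaces the previous value, so pick one format.
        // "SVG" gives sharper charts but does not work in Internet Explorer 8.
        // options.setSupportedImageFormats("SVG");
        options.setSupportedImageFormats("PNG");
        setupImageHandler(options);
        options.setOutputFormat("html");
        options.setOutputStream(outs); // render the HTML into the caller's stream
        return options;
    }

    private void setupImageHandler(final HTMLRenderOption options) {
        options.setImageHandler(new HTMLServerImageHandler() {
            @Override
            protected String handleImage(IImage image, Object context, String prefix, boolean needMap) {
                // Inline the image as a base64 data URI instead of writing a temp file.
                // java.util.Base64 (Java 8+) replaces the unspecified Base64 class of the original.
                String embeddedImage = Base64.getEncoder().encodeToString(image.getImageData());
                return "data:" + image.getMimeType() + ";base64," + embeddedImage;
            }
        });
    }
}
```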


Pidge is actually trying to take this a tiny bit seriously. Last night, while she was moving her workstation into the makeshift pharmaceutical laboratory she’s set up for herself over the last few months, she put together a presentation, as if this were a business pitch or a grant funding exercise. Still, it’s easier to illustrate her point when she has diagrams to go off of. The fancy little holograms from her PADD can even be manipulated in real time in three-dimensional space, for added cool factor.

It also means she can keep her thoughts together as she goes through the theoretical aspects of this with @swordsedge Ulaz. Before she begins, she takes a shot glass, fills it with the Olkari root extract she’s come to love so much, and knocks it back like it’s so much liquor. That should keep her going for the next eight or so hours and stave off the fluorescent-inspired headache she’s guaranteed to get if she works down here too long. She offers some to the Galra in front of her, but he declines. Reasonable. He doesn’t know what it is, and she could have tampered with it, so she’s not offended.

They’d had a brief conversation last night, as well, about how to structure this upcoming week. Pidge had asked Ulaz what the Galra Empire would do for someone who had a genetic degenerative disease. The answer, unsurprisingly, was a mercy cull. For an empire driven by expansion at all costs, a disabled life is not one that can be afforded. Ulaz did show the correct amount of disgust as he explained, at least, which reassured Pidge that he was here for the right reasons, to do the right thing. What wasn’t so reassuring was that he hadn’t actually encountered this specific problem before, as a medical officer.

Tilting her PADD against her empty glass so the holograms can project onto the table, Pidge launches into her explanation. “so, you understand what we need to do here,” Pidge reminds Ulaz. “this is different from just keeping shiro in stasis and keeping disease from progressing. this is total genomic overhaul.” She flicks the first diagram out from her screen to the table, starts spinning it--a puffy little X shape made of squiggles. “what we’re working with is the x chromosome, a location on the short arm called p21.2-p21.1.” When she zooms in with her fingers, there's a noticeable length difference between the two top arms of the chromosome. “there’s a deletion here--not one of the worst, but not in a good place, either. this codes for dystrophin: the protein that builds human muscle. without it, the muscles we’re born with can’t be effectively re-built when they’re damaged. usually, you’d have a backup on the other matching pair in your chromosome set, so your body could just use the one that works and ignore the one that doesn’t, but shiro can’t do that, because he doesn’t have a second x chromosome, he has a y chromosome. which, don’t tell it i said this, but it’s pretty useless, aside from sry. poor little thing. smallest in the human genome.”

This is probably stuff Ulaz already knows. Based on what Pidge surmises about Galra, just from pure conjecture surrounding the fact of Keith’s existence, they also must have a similarly-based biology, with double-helix DNA, ACGT pairs, X/Y sex chromosomes, even the same number and arrangement of chromosomes. Otherwise, Galra wouldn’t be able to reproduce with humans, or proliferate so far with so many other alien races. Still, it helps to start from the common denominator and build up to more complex premises.

Pidge pinches her fingers together, then spreads them to zoom in on her DNA diagram--to the portion that’s missing. “there’s maintaining the dystrophin shiro still has, and there’s teaching his body how to make it for himself. two different things. he already had weakness in his legs, to be expected, but now you’re telling me he’s having trouble breathing. that means his diaphragm can’t repair itself. he’s too weak to work his own lungs. that’s... that’s advanced. the only way it could be worse is if it was in his heart, and we don't know that it's not. so, we can’t just plug this with pharmaceutical intervention. giving him the actual dystrophin protein isn’t, by itself, going to get him where he needs to go. he needs to do it for himself, and he needs to be able to rebuild what’s been lost on top of it. that means...”

Another diagram flicks next to the first. This one's the clip of what's missing. “i have to get this, here, but... everywhere. as far as you're telling me, this is something the galra weren’t even interested in devoting resources to. it’s something humans haven’t quite been able to achieve, even with crispr, our most advanced gene splicing engineering technology. altean alchemy isn’t suited to this, and i can’t see that they've ever attempted a genetic cure, just an amino acid replacement. the olkari seem to find it anathema to attempt it, even with their advanced biohacking abilities. but i’m--we’re not dealing with just one set of medicine. we’re not limited here. i can use all of this accumulated knowledge and make something bigger than the sum of its parts. i just need to run this by you, theoretically speaking, to see if it’s even possible in practice.”

Dismissing the first diagram to focus on the second, she twists her two hands, pulls them apart, and it zooms in on the individual molecules making up the DNA helix: red adenine paired with green thymine, yellow cytosine paired with blue guanine, clumped in threes (that’s a slight liberty with the illustration, but it works for these purposes). “coran’s taught me how to use this lab to make pharmaceutical compounds i thought would be impossible with the materials we have. apparently all you have to do is ask these atoms and molecules nicely to create their bonds. so far i’ve been... moderately successful in using it.” That’s false modesty. Pidge has been able to synthesize a full medication line for Shiro by now, from advanced corticosteroids to muscle relaxers, from gene-targeted therapies to painkillers. “but, i mean, dna is just a bunch of molecules, when you get down to it. huge, snarled-together molecules, but molecules all the same. the backbone of the helix is the same. the a, c, g, t are the same. if i can teach the lab to make the individual components, it’s just an issue of putting the building blocks in the right order and making them stick together. that part, actually making the gene i need, that’s the part i have the most confidence in. i know i can do it. what i don’t know is how much time it’s going to take, or if i can accelerate it by redirecting non-essential ship power to this one resource. and i won’t know for sure until i get started on it. but, the good news is, i know what i need to make and how i need to make it. easy.” Relatively speaking, of course.

The next image Pidge pulls up is entirely new. “this--this part’s more complicated. this little device is crispr. technically it’s a repeating genome sequence that humans synthesized from a bacteria, but you can use it for genome modification. depending on what kind of rna you attach to it, you can use it to snip out genes entirely, or cut and paste from one mis-transposed location to another. notice i didn’t say insert. it needs to get the material from somewhere to insert it in the first place, and creating the right sequence out of nothing was always a little too difficult to stabilize in human trials. plus, there were ethical concerns with using it on stem cell lines. no such worries here. if i use altean alchemy to create the missing piece, and if i use the right rna to point it at xp21.2 through .1, it should plunk it right into place. and there’s no medico-ethical dilemma present for doing this with a full-grown person, like there would be if we were trying to fix it in a zygote. it doesn’t even generate the should-we argument. now, getting the rna to target the right location, and getting the delivery mechanism to be stable, and getting it to lock into place, that’ll be a little more difficult.”

What flashes into the set of images Pidge is using, this time, is a series of ones and zeroes. “that's where the olkari technology comes in. their tiaras use human brainwaves, sent as binary code, to modify messenger rna, to redirect plants on what genes they should be expressing at any given time. it unlocks a gene’s potential. this should be the key to not only targeting the right location for the gene insertion, but also in making sure that it’s getting used correctly to code for dystrophin. the question you’re probably about to ask is, how does this work with dna when dna isn’t written in binary? but it’s not about reading it, it’s about finding it. rna will read it for itself, pull the correct amino acids, and make dystrophin. cells are pretty smart that way.”

Dismissing all those prior symbols, Pidge finally pulls up a diagram of the human body. “so, congratulations. using a series of increasingly unstable chemical reactions pulling from the most advanced medicine, science, engineering, and coding from three different starfaring species, we created, spliced in, and activated exactly one copy of the dystrophin-coding gene, into one cell.” The hologram zooms in to some generic muscle strand of the forearm. “that cell could die before undergoing mitosis. even if it survives, that’s no guarantee that the new, fixed genome will propagate very far, even within the same physical location of the body.” A red flash, indicating failure.

“but, if i’m understanding your research correctly, there’s something you can do with filtered quintessence to not just make it stick around, but to get it to actually change the whole body genome. this is the part that i’m the most skeptical about,” just in case Ulaz couldn't tell from her tone. “i don’t know how quintessence works at the best of times. as far as i care, though, if it does what you say it will, then it can be literal space magic--as long as it works by a set of fixed principles. if you’re saying we can wash out the old genome and, i guess, dye the new one into place by steeping shiro in enough quintessence, it’s worth a try.”

Presentation over. Pidge collapses her diagrams, puts her PADD face-down on the table. When she catches Ulaz’s face, his expression is unreadable. Just like always, really. “so, after all that, i have two questions for you. one, does that sound like something we can, theoretically, even do? i don’t want to waste time or energy on research if it’s not going to pan out in real life. and even if it does, question number two, how much quintessence would it actually take to do something like that? are we talking on the level of a d-cell battery, car battery, aircraft engine, starship-class balmera crystal, the type of energy it would take to hold strand in stasis for eons--what do we need, and can we actually get it?”


Tarot Tips for New Readers

(I wrote this last year in response to an Ask, figured I would repost it since it comes up often!)

First of all (and this might be late if you’ve already purchased a deck, but for anyone reading this who is looking for their first deck), get a deck that is fairly detailed. There are some incredibly beautiful minimalist decks, but personally I find a detailed and figurative (not abstract) deck is best to start with. Even better if you have people with different faces, expressions, positions, etc.

Tip 1: Don’t memorize the cards, and don’t read the book. Obviously you can read the book at some point, but my recommendation is not to start there. Infer your own meanings from the cards first, and then complete them with the book as needed. I’ll get into more detail on this further down.

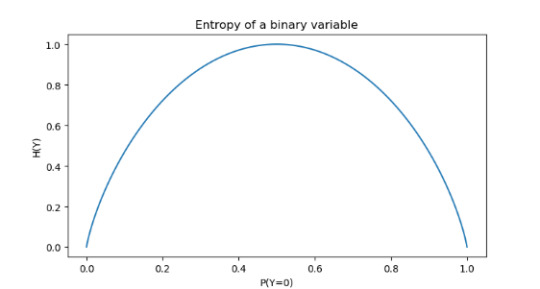

Tip 2: Keep a tarot journal. Or use your grimoire to keep notes if you don’t mind having them all in the same spot, but definitely you want some kind of reference for yourself. This is about keeping your initial impressions on the cards, but also how your impressions evolve over the course of your time with the deck. Even beyond reading the book and making your original inferences, I can guarantee your ideas on what means what will change over time (and that’s not a bad thing). Tarot is like learning a language and as your understanding of the language evolves, your original notes of “x means y” will change and become more nuanced.

Tip 3: Learn the characteristics first, and piece them together to form the meanings of individual cards. Think of the language example again. In the English language, about 300 words make up roughly 65% of all written works. There are over 170 000 words currently used (not counting multiple meanings, different dialects, etc), so fewer than 1% of words (300 out of 170 000 is under 0.2%) account for most written English text. This principle can be transposed, to an extent, to most languages… and that includes tarot. My point is: it’s not about learning all the words right off the bat, but about learning the right ones. This is continued in tips 4 through 8.

Tip 4: Learn the Major Arcana last. These cards represent moments of clarity or change. They are normally big events, decisions, etc, and mark a very clear break between “before” and “after”. I know this is the exact opposite of what most people recommend when it comes to tarot, but personally I find the Minor Arcana cards to be a lot more useful for day to day stuff, and the best way to learn tarot is to use it often for small questions that don’t necessarily require Major Arcana cards. If you start with only the Major Arcana cards and ask “should I do my dishes today” and get the Death card, it’s kinda like… super fucking dramatic, you know? I enjoy being as extra as the next guy, but sometimes it’s like powering off your PC with a sledgehammer... there’s a better way to do it.

Tip 5: The suits. Learn the suits as categories by assigning meaning to them on a personal level. If you’re working with a more traditional deck with swords, cups, pentacles, and wands: what do each of these elements mean to you? Go beyond the obvious and get into the details, the backstory, whatever comes to mind. Write down the key words and brainstorm on each of them. For example, when I see swords I think of something more cerebral and intellectual, a decision or action that requires aim and consideration or planning. I also think of the people who tend to hold swords and how they use them (can be used defensively or as an attack). I think about knights, and the sense of duty that a sword can represent. Et cetera for each suit.

Tip 6: The numbers. Do the same as above, but with each number. What does the number two represent for you? What ideas does it conjure? What are its upsides and downsides? Another example: the number four reminds me of stability, like a chair with four legs, but it also reminds me of being restrained or walled in. Stability can be good, but can also lead to feeling trapped... and so on.

Tip 7: Court cards. Court cards are normally representative of a person, personality type or archetype, or a cluster of characteristics (physical, emotional, or psychological). Personally, I don’t take these cards literally as far as gender, hair colour, etc go (but that’s up to you). For example, a knight with a “male” looking figure doesn’t necessarily mean an adult man. Take hints from the other elements in the card to discern what type of person it could be depicting, and write down your impressions. Again, try to think of the upsides and downsides of this personality type.

Tip 8: Describe the card and put it all together. And I really do mean “describe the card” on a literal level. What is happening in the card? What are the characters doing, if there are any? What’s the story? Think about how the visual action goes with the characteristics you’ve gained from the suit and number in the card. If there are any other symbols or colours that have personal meaning to you, take those into account as well (for example, if the colour blue is dominant and you associate it with calm).

Tip 9: Context clues. Again with the language example, use your context clues. What position is the card in? What was the question asked? How does this card relate to the other cards around it? Pulling together from our previous examples: I have the four of swords in the “what I should do” position of a spread, and the question is regarding finances. The card depicts a person with their head resting on the hilt of two swords, asleep and looking peaceful. The colour palette is predominantly blue. I might interpret this card as saying to rest on my intellect in regard to this matter. If figures on separate cards are looking forward, backward, at another card... these should all be taken into account. The way I describe it when I’m coaching people on tarot reading is to see the cards like panels in a comic strip. They individually have meaning, but they need to be read in context of each other.

Tip 10: The infamous reversed card. In movies, inverted cards always seem to be accompanied by a loud gasp and wide eyes, but this is also commonly called the “advice” position. Meaning, this card is not so much a prediction as it is a suggestion on what you should be doing or improving, or a warning. Use your context clues to determine which of these is most applicable.

Practice! Practice practice practice!!

This is probably the most obvious thing in the world, but I can’t say it enough. You can spend all day staring at lists of vocabulary and memorizing, but nothing will help you learn faster than going out into the world and using it. Use tarot for ridiculous little things, and big things, and just whenever! Take notes on how you interpreted the card at the time, and then go back to your notes and see where you were right and where you had misread. You’ll be absolutely amazed how often the answer was in the cards and you just didn’t see it. I highly recommend picking a card in the morning, writing down your interpretation, and then comparing it to how your day actually went. See where your interpretation was correct, and where you need to adjust.

As a final note, if you would like to learn more about tarot, I HIGHLY recommend checking out biddytarot.com.

Honestly, there is nothing I could possibly say that Bridget hasn’t already said (and likely a lot more eloquently). She has an amazing set of articles, a fantastic podcast (and a lovely voice), and on top of all that there is a forum where you can do readings for people and get feedback from them. She even has tips and suggestions on how to read tarot professionally. I’m not affiliated with her in any way, but if I had heard her podcast when I first started reading tarot I would have learned it about five years faster than I did.

As always, my inbox is open if anyone has questions about tarot!


“You’re going to make this awkward, aren’t you?” - Roger x fem Reader (smut)

Summary: It’s 1983 and Queen are recording their next album at the studio where you work. Things get a little heated between you and their drummer.

Part 1 | Part 2 | Part 3

In this “episode”: Things start to get a bit more serious than Reader would like during a night alone with Roger at the studio.

Word count: ~4.8k

Warning: age gap (Reader is 21, he’s 35), language, and smut, so 18+ please

Tagging: @fixedonroger @a19103 @ginabaker1666 @thickthighsandbasicbrowneyes @culturefiendtrashqueen @imaginesandideas

(Let me know if you want to be tagged!)

[A/N: Thank you for voting! This concept was thanks to 17384 anon suggestions. 🥁 And you’ll even get a little math lesson with Brian in this one.]

One month and 3 days, you think to yourself as you sit at your desk and look through the window into the studio, watching Roger sitting at the soundboard with headphones on, deeply concentrating on whatever it is he’s listening to. Brian’s sitting next to him scribbling something and John’s fiddling around with his bass. You’re not paying them any attention. Your eyes are fixed on Roger, and you can’t turn them away, no matter how hard you try. Stop it, you fuss at yourself. Turn away right now and do the stupid bookwork before Kevin comes back tomorrow and fires you because you’re too busy daydreaming. You force yourself to turn around and focus on the task in front of you. Three months of bank statements he couldn’t be bothered to balance out, and he just threw it at you, expecting you to fix everything. “This isn’t what I signed up for,” you mumble, not realizing you had company.

“Well, you didn’t sign up for that either, but you sure are doing it,” Jack laughs, pointing at the window when you glare up at him. “You’ve got yourself a big distraction now, don’t you?” You keep glaring at him, wishing he would just shut up. “Usually they’re the ones distracted by you,” he laughs as he sits on your desk. “Remember when Kevin gave you those two weeks off when…”

“Shut up, Jack,” you say, completely frustrated and not wanting to recount the time that fucking creep from that band you don’t even want to think about didn’t know how to keep his hands or his filthy thoughts to himself. “I have a ton of work to do and you’re not helping.”

“Cheer up, kid,” he says as he stands up and pats you on the shoulder. “You’ll get it done.” He walks back into the studio and taps on the window to get your attention. When you look back, he’s got a big, cheesy grin on his face as he waves and closes the blinds, making sure you don’t get distracted again.

It didn’t help. Not in the slightest. Roger was in your head and he wouldn’t leave. You keep reflecting on everything that happened the past few weeks. Of course, sex happened – but it wasn’t just that anymore. You were enjoying each other’s company without the sex. He’d come over and you’d watch a movie, and you’d fall asleep on the sofa, and he’d cover you with the blanket and let you sleep, sometimes even staying there until he fell asleep too. Or you’d go to the Santa Monica Pier, just to get out and have a fun time somewhere he was able to blend in. One night you stayed out on your balcony until almost 3 in the morning doing nothing more than sharing silly childhood stories. It didn’t complicate anything but your feelings, and that wasn’t supposed to happen. Feelings weren’t ever supposed to be involved, but it was hard for them not to be now. Not now that he knew your birthday, and you knew each other’s favorite colors, and you knew about things he did to cause trouble when he was 13, and he knew your sister’s name… Things casual hookups don’t know about each other. He’s picked up on your habit of pushing your hair behind your ear when you’re deep in thought, just like you picked up on his nervous habit of rubbing his hand on his shoulder to calm himself down.

You’ve finally balanced almost half of the books, an hour after you started, when Freddie walks in with his manager, that creep who follows him around like a shadow. “Got tired of being gawked at all the time?” he giggles when he sees the window is closed. “Or did you get tired of doing the gawking?”

You purse your lips into a grin, trying not to giggle back, but you end up giggling anyway. “Hi, Freddie. Glad to see you could make it.”

“Not you too!” he dramatically cries out. “I don’t need another person nagging me.” You smirk and turn your attention back to your work as he walks into the studio.

“Nice to see you, Fred,” you can hear Roger yell out while the door was open. “Hey, who closed the window?” Took him long enough to notice, you grunted to yourself as you rolled your eyes. “That’s blocking my view…” you hear him say as the door closes, which gives you a little grin.

Two hours. For two hours you’ve been working on this stupid bank balance and you don’t think your brain can handle any more. How it could get so messed up you don’t know, but you couldn’t look at another number right now or you were going to lose it. You slam your head down on the stack of paper that’s lying in front of you, wanting to cry, when the studio door opens and everyone starts walking out, laughing and talking without a care in the world. You don’t even want to look up at them. You’re enjoying your momentary zone out.

“We’re going to grab lunch, kiddo,” Jack yells at you. “Wanna come?”

You look up, completely drained. “It’s only lunch time?” you ask, almost whining.

“It’s almost 3:00,” he tells you. “Want me to bring you something?”

You shake your head no and start to look back at the numbers. “I need to figure this out. I can’t get the damn thing right. I hate math.”

Brian calmly walks over and peeks at what you’re doing. You look up at him, confused, and he points at the total you have and the one you should have. “You transposed numbers somewhere when you were adding,” he tells you with a smile. “Add the numbers in this together.” He pushes the calculator over to you, and before you finish he says, “They’re all going to add up to 9.” He squats down to get even with you and talks you through it. “Add the 7 and 2 in that 72 together, and you get… 9.” You glare over at him and he’s smiling. “Now add this all up again. Carefully, this time,” he chuckles. And what do you know – it balanced. “Just a trick I learned some time ago,” he smiled as he stood up. “Now you can come with us.”

Roger’s standing in front of you with his arms crossed, shaking his head in amusement. “Well aren’t you the fucking hero,” he sarcastically quips at Brian before looking at you. “Come,” he tells you, holding out his hand. “I insist.”

Everyone decided on the greasy diner across the street from the studio and crowded into a booth, leaving Jack to sit in a chair on the end. It was an uncomfortable arrangement, but you didn’t complain too much, since this was the most contact you had with Roger all day. He sat with his arm behind you on the seat, not even realizing that he kept rubbing your shoulder. He was watching you closely as you ate the strawberry pancakes you cheekily ordered (and yes, he found it incredibly funny), and when you were leaning over the table to be able to hear John better when he was talking to you, showing you the lyrics he had jotted down for his song they were going to be working on tomorrow.

“Do you think they flow?” he asked you. “I think this sounds stupid but I don’t know. It may work.”

You read the part he was pointing to and smiled. “I think it’s perfect. Simple, to the point, and perfect.”

The discussion you were having with John went on for a little while, just the two of you, until Roger apparently got tired of you ignoring him and cleared his throat while tapping you on your shoulder to get your attention. “You’ve a key, right? For the studio?”

“Yeah?” you say, questioning why he wants to know.

He starts to nudge you out of the booth. “Let’s go. Need to get something out of my head.” You hurry and jump up and the two of you head back across the street.

When you open the door, he hurries and locks it back and keeps the shades closed on the windows, grabbing your arm and pulling you back to him. “Hey,” he says with a grin.

“Hey, you,” you grin back. “What’s in your head that you have to get out?”

“My ex,” he started. “She’s coming here. To Los Angeles. For a few days.” You look at him, wondering why he’s so fidgety when he’s telling you this, and why he’s even telling you this in the first place. “I told her she can stay at mine…” Now he’s looking at you wondering why you don’t seem to be bothered by any of this, and his brows start to furrow.

You start to giggle. “Were you expecting me to start screaming at you? Become some unhinged lunatic or something?”

“A little bit, yeah,” he smiled. “But you’re too relaxed and I don’t know how to handle it.”

You pull him down by his shirt to get close to you and whisper deeply in his ear. “Does she taste as good as me?”

He leans back and looks you in the eye with an intensity that sends shockwaves through your entire body. “No one tastes as good as you.”

“Then I have nothing to worry about,” you tell him with the same intensity he’s giving you right now, as you reach down to cup his crotch. “And you said this was all mine.”

“It is,” he whispered in your ear as he chuckles deeply and moves his hand to your chest. “You have no idea what I’m going to do to you later.” He started to say more, but he’s interrupted by a knock on the door. You can hear everyone outside talking. You roll your eyes and start to walk away so you can open the door, but he grabs you and holds you back. “You’re all I can think about, Y/N.” You gaze up to him, your mouth slightly open with shock. There’s another knock on the door but you’re ignoring it. “You have a hold over me...” He cups your face in his hands. “I can’t…”

He’s interrupted by a louder knock on the door, followed by Jack yelling. “Open the goddamn door, Y/N! Why is it locked?”

You slide away to open the door and everyone pours in and heads directly to the recording room. “Later,” Roger comes and whispers in your ear before joining them, “we’ll finish this conversation later.”

You were internally a jumbled mess for the rest of the day. Every nerve you had seemed to puddle right there in the pit of your stomach. We’ll finish this conversation later? Your brain wasn’t helping calm you down at all. None of this was ever supposed to get to where you’d have conversations later. This was just supposed to be sex and nothing more. End it. End it, you kept telling yourself. He doesn’t mean anything he says, you try to convince yourself. He just wants to make sure I don’t… Your thoughts are interrupted by a knock on the recording room window.

Jack waves you over and you quietly walk in. “They’re working late and I can’t stay. Kid’s got some school thing. Can you stay to lock up?” You really didn’t want to. You’ve had such an exhausting day already, but you agree to stay.

You ended up falling asleep around 8:00, having been bored for the past hour and giving up all hope for a quiet evening at home in front of the television with a bowl of ice cream. Everyone was frustrated – them in the studio and you out in the office. Their frustration is why you stayed out of there. You weren’t in the mood to listen to any bickering. Freddie woke you up when they were leaving – well, all of them were leaving except for Roger, who was still sitting behind his drum kit trying to work out a beat. “He needs your help with the playback,” John told you. “Think you can handle that?”

“All I have to do is press buttons,” you grinned. “I think I can do that.” When they left, you quietly walked in the studio and sat down at the controls.

He didn’t notice you. He was focused on his drums, so you didn’t disturb him. You could see the annoyance in his face until he looked up and saw you sitting there. “Hey there,” he said through the mic. You smiled and gave a small wave. “Play that tape and listen to this…” And you did. Ten times, ten different cadences, and you recorded them all for him. That was about the extent of your abilities – pressing three buttons. “Come in here,” he said with a sigh.

When you walk in he turns on his stool and holds his arms out, beckoning you for a hug. You smile and go to him, and he wraps his arms around your hips and rests his head against your stomach. “Rough day?” you joke as you run your fingers through his hair. Well this is sweet, you tell yourself. You’re supposed to be ending it, dumbass.

“So frustrating,” he mumbles. “Nothing went right after we stopped for lunch.” He closed his eyes and started chuckling deep in his throat. “That feels nice.”

You lean down and kiss him on top of his head. “Just relax,” you mumble. What are you doing, Y/N? you fuss at yourself. He squeezes you tighter, and the two of you stay just like this for a couple of minutes before he looks up at you and smiles and puckers his lips, which you gladly lean down and give a quick peck to. You’re such an idiot, Y/N.

He lets go of his hold and pats his lap. “Sit,” he tells you, and you do, and he holds your waist as he spins his stool around and grabs two drumsticks. “Ever played before?”

“Oh yeah, all the time,” you say sarcastically. “In fact, I’m so good that when Keith Moon died, The Who wanted me to be his replacement.” He poked at you with one of the sticks and started laughing. “Never even held a drumstick before.”

He hands them to you and you take them. “Well that’s going to change,” he tells you as he adjusts them in your hands. He rests his chin on your shoulder and you start to giggle. “You’re going to make this awkward, aren’t you?”

You can’t stop giggling as he holds your hands and starts moving them to hit the drum heads while he glides them around. You aren’t paying much attention. His foot stomping on the bass drum pedal is, well… creating a vibration. “I’m sorry,” you tell him, still giggling. “I’m not a very good student. My teacher is quite a distraction.”

He stops, and he’s giggling now too. “Well it’s hard to be a good teacher when my student is also a distraction.” He rubs his hands up your arms before wrapping himself around you and squeezing you gently into him. He clears his throat, his chin still resting on your shoulder. “I need you to let me all the way in, Y/N.” You turned your head quickly and looked at him, totally confused. “What are you feeling? Right now. Right this second. What are you feeling?”

“Nervous,” you whisper and you both start to giggle.

“That’s not what I mean, silly,” he says as he pokes you jokingly. “I mean, what are you feeling about… us?” You turn your head to him quickly again, looking confused again, and your mouth opens but no words are coming out. “I feel like there’s more to this.” Still, no words can come out of your mouth. “I feel like you’re scared…” You hold a hand up to his mouth, hoping he’ll stop, but instead he moves your hand away. “It’s okay,” he whispers, “because I’m scared too.” For the first time in your life, you’re completely speechless. “But I’m not willing to let that stop me.”

You look up to the ceiling, understanding everything he’s trying to tell you right now, trying to find words. You seem to have forgotten all words. “Roger,” you sigh. “Please…”

“Don’t tell me not to feel, Y/N,” he whispers. “I can’t do that.” He holds two fingers under your chin and turns you to face him. “Look at me,” he whispers, and you do. And you completely surrender. “I know you feel it. You can’t tell me you don’t.” Your heart is beating so fast you’re scared it’s going to burst. Your breathing is getting heavier and you’re still silent, wanting so badly to tell him how you feel – how you don’t want to feel – but you can’t. You can’t say anything. You’re lost in his gaze, his eyes controlling everything right now. He nudges your hips, signaling that he wants you to stand up, so you do, and you turn to face him, still holding the drumsticks in your hands. He glances up at you and gives you that damn smile that turns you into putty.

He unbuttons your shirt, not taking it completely off, leaving it opened. He starts to softly kiss you on your stomach as he runs his hands over your hips and thighs, undoing your pants and slowly pulling them down. “These are new,” he tells your with a smirk, running his fingers over your panties, before reaching to grab one of the drumsticks you’re still holding from your hand, tossing it back and hitting something rather loudly. He stops caressing you and takes the other one from you, and he starts to toss it back as well, but he looks at you with somewhat of a devilish grin. He starts to rub the tip of it along your body, across your stomach, down to your pelvis, then your upper thigh. He stands up as he continues to brush your skin, bringing it to the front of your covered mound, giving you another smirk as he grazes it over you right there. “What are you doing?” you ask him with a nervous giggle.

“Playing,” he whispers with a smile as he leans in for a kiss, giggling with you. He moves the stick away, tossing it behind him like he did the other one and laughing. “Won’t be needing that,” he quips. “I can take it from here.” This isn’t lust he’s exuding right now – this is passion. Absolute passion that you’ve never experienced before. Not with him or anybody else. And you loved it, and you couldn’t stop yourself from giving him the same.

You start to unbutton his shirt – that same shirt he was wearing your first time together, and it brought back a wave of memories that washed over you. He didn’t interfere with your undressing him, opting instead to stand there and smile at you, letting you slowly move his shirt off of his shoulders before running your hands down his chest to his waist. You were slipping off your shoes and stepping out of your pants as you unbuckled his belt. He brings a hand under your chin and you look up at him, and for the first time in all of the times you were with him, you felt the need to take it slow, to drink in every single second. He was feeling it too. If he wasn’t, you’d have already been completely naked and halfway to climaxing.

He slipped out of his shoes as you finished unzipping his pants and pushed them down off of his waist, moving yourself down as you glided them completely off. It was quiet – almost eerily quiet. The only sounds were your breathing. You reach up and pull down his boxers, your mouth perfectly even with his cock. You say nothing. He says nothing. You bring your tongue to the tip of his member, slowly and softly licking him clean of the precum that has graced the head, before gliding down underneath the shaft as you hold it up. You feel him start to twitch, letting you know that you were doing everything exactly how you needed to be. When you lower your mouth to completely engulf him, he lets out a small moan and puts a hand on your head, not to guide you, but because he needed to touch you. He let you set the pace, giving up all control, something he had never done before. You start to hum quietly, sending gentle vibrations that he felt though his entire body. He didn’t want to finish – not yet. Not until he knew you were completely satisfied. But fuck, the magic you were creating with your mouth and your tongue felt so good, and you were enjoying it, too. Every twitch, every soft moan, every deep breath you heard him take was getting you more and more aroused.

He takes a small step backwards, and when you look up at him, he’s softly smirking and wiggling a finger for you to stand up and meet him. You slowly stand, and when you do, he gently holds your face in his palms, drawing you in for a soft but deep kiss. There are still no words being spoken. No words need to be spoken. Your eyes and actions are doing all of the talking right now. He finishes taking off your shirt and reaches behind, unclasping your bra, guiding it slowly off of you, just as you had done with his shirt. He bends down and takes your nipple in his mouth, his other hand gently rubbing the other, as he flicks his tongue. Your head falls back, and this time you’re the one with the quiet moans. He raises up again to meet you, and gently guides you back, leaning you on his drum kit. He kisses you deeply again before slowly kissing his way down your neck, that valley right between your breasts, down your stomach and to right above your panties that he admired earlier. He runs his fingers under the waistband and pulls them down, his breathing getting heavier as he revels at the perfection he sees before him.

You feel his breath against your thigh before he lowers his mouth to continue kissing his way to his ultimate destination. With every touch his lips make on you, you flutter. Still, no words are spoken, only actions. When his mouth finally makes its way to your lips, you inhale deeply and your body jerks, causing one of the cymbals to crash down. But you don’t care, and neither does he. His concentration on your pleasure is undisturbed. The warmth of his breath on you, the gentleness of his mouth and his tongue working it’s magic on you causes your breathing to get deeper, quicker. You run a hand through his hair, massaging his scalp with your fingertips. He moves a hand that was resting on your thighs and pulls his head back so he can watch his fingers rub up and down your wetness as he hears your gentle moans getting louder. He looks up at you, his mouth agape in complete awe of you, and he sees you looking down at him, telling him everything he needs to know without saying a word. He watches your eyes as he steadily slides two fingers in, taking great care in making sure you’re able to feel every single motion he’s creating inside of you. He turns his tongue attention back to your flower, focusing on your clit, tenderly guiding it to emphasize your pleasure, his eyes never leaving yours.

“Oh, Roger,” you whisper, finally breaking the silence that had befallen the two of you 20 minutes before. There’s so much you want to tell him – how you love the way he’s making you feel right now, how you love the way he’s lapping up your juices right now, how you love the way his fingers are moving inside you right now – but you’re rendered speechless again, your breath caught deep in your throat. He knows this is what you want. He knows without asking. He can see it on your face that you’re enjoying it. He doesn’t want to stop, not until you’re ready for him to stop. He starts to suck softly on your clit, still slowly moving his fingers in and out of you, knowing how he’s making you feel because your hand has now started to grip his hair. He can feel you tighten and start to tremble with pleasure. He sees you adjusting yourself against his drums – those drums he will never look at the same again – as you get closer to reaching your climax. His eyes never leave yours. Your moaning and breathing both increase, and he doesn’t stop. He keeps licking, keeps sucking, keeps pumping his fingers in and out of you until you finish. Even then, he doesn’t want to stop. He meant it when he said that he loves the way you taste, so even when you finished, he licked up your slit one more time before he stood up and held out his hands for you to grab.

He pulls you up from his drum kit and draws you in for a kiss and you can taste yourself on his lips. He holds you close to him as he turns, walking you back toward the wall. He kicks a guitar out of the way – you don’t know if it’s Brian’s or John’s, nor do you even care – because it was in the way. He leans against the wall with one hand, still kissing you, still dancing his tongue around yours in your mouth, still exuding that passion. Your hands rest against his chest as you break the kiss and look up at him. Your eyes never leave each other.

He pulls one of your legs up and wraps it around his waist, leaving your other foot on the ground before crouching down and pushing himself into you as he grabs your ass and hoists you up. You stayed there, pinned between him and the wall, as he thrust himself hard, but slowly, into you. You wrapped your arms around his neck, running your fingers through his hair, staring deeply into each other’s eyes as you groaned in lockstep with each other, breathing heavy, even starting to sweat at the same time. Still, no words needed to be spoken. You felt everything – you felt his cock hitting your g-spot at the perfect angle and beat, the way his fingers dig into you with every lunge he makes. He feels everything too – every single piece of hair that your hands were grabbing, every grip your walls made around his cock, every single moan that came from your mouth.

You were ready. He was ready. And neither one of you could hold out any longer. Wave after wave of pleasure flowed through your entire bodies, and you pulled his head into yours so you could kiss him as you shared your orgasms. You feel every single drop of his cum burst inside of you. You held his kiss, you didn’t want to let it go, and he didn’t want to let you go either. But you had to let go. You couldn’t stay like this forever.

He pulled himself out of you, slowly, and lowered your leg back down. “You are breathtaking, Y/N,” he whispers. “Where have you been all my life?”

You can’t resist making a joke. You want to resist, but you can’t. “Well, for almost half of it I wasn’t even born yet,” you say with a cheeky grin, making him laugh and kiss you again. “Stay with me tonight,” you tell him. It isn’t a question.

He nods and smiles. “I’d like that,” he whispers.

As you lie in bed, your head on his chest and his arm around you, you don’t allow yourself to overthink all of this. You don’t want to analyze anything; you don’t want to worry about anything. You just want to lie there, soak everything in, and savor this while you can. You love this, but you hate it all the same, and before your brain delves too deep into the catastrophic end you’ve envisioned for this fling with Roger, you divert your attention to the night you just had and fall asleep in his arms.


#roger taylor#roger taylor x y/n#roger taylor smut#roger taylor x fem reader#roger taylor x reader#roger taylor x you#1983 roger#enjoy


Reflection PDF Mulan

Christina Aguilera: Reflection (Pop Version) (from Mulan) for clarinet solo, intermediate clarinet sheet music. High-quality and interactive, transposable into any key, with play-along. Includes a high-quality PDF file to download instantly. Licensed to Virtual Sheet Music® by Hal Leonard® publishing company.

Mulan’s family and friends: Mushu is a small fire dragon and one of Mulan’s family guardians. Fa Li, Mulan’s mother, and Grandmother Fa want Mulan to find a good husband. Fa Zhou is Mulan’s father; he was injured fighting for the Emperor in the past. Fa Mulan is a young woman who prefers riding her horse to a more traditional female role.

Print and download Reflection sheet music from Mulan arranged for flute, instrumental solo in F major.

Christina Aguilera has experienced many exciting firsts in her long and successful career. But her debut single – ‘Reflection’ for the original 1998 Mulan soundtrack, which led to her first record deal being signed – holds a special place in her heart.

Download Reflection from Walt Disney’s Mulan sheet music PDF, which you can try for free. A two-page partial preview is provided; to read the entire sheet music, you need to sign up. The sheet music is also available as a PDF for offline reading.

Mulan.

Confucius (Kong Fuzi 孔夫子) was born during the Spring and Autumn era of the Dong Zhou dynasty.

Those were times of rupture and drastic change for the ancient kingdom of China.

Warlords battled against each other with armies of hundreds of thousands of men, seeking to overthrow local rulers and take over their lands and their position as royal sovereigns.

But, in the midst of war and chaos, Confucius searched for peace and order.