#llms

Text

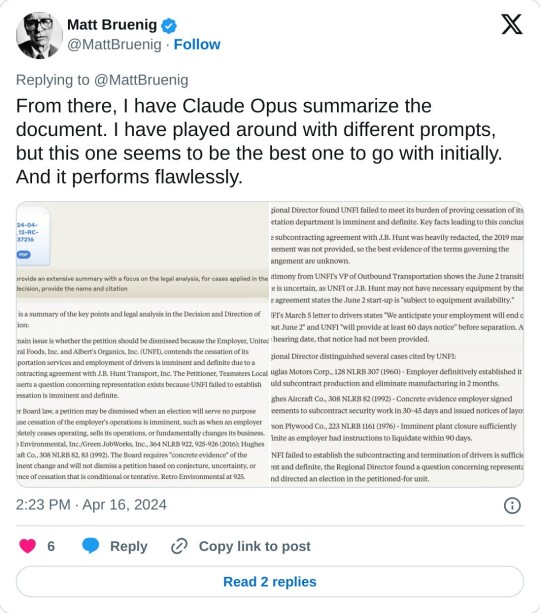

ok, i've gotta branch off the current ai disc horse a little bit because i saw this trash-fire of a comment in the reblogs of that one post that's going around

[reblog by user makiruz (i don't feel bad for putting this asshole on blast) that reads "So here's the thing: every Diane Duane book that I have is stolen, I downloaded it illegally from the Internet; and I am not sorry, I am a thief of books and I don't think I'm doing anything wrong, ideas are not property, they should be free to be used by anyone as they were before the invention of capitalism; for that reason I don't believe it's wrong to use books to train AI models"]

this is asshole behavior. if you do this and if you believe this, you are a Bad Person full stop.

"Capitalism" as an idea is more recent than commerce, and i am So Goddamn Tired of chuds using the language of leftism to justify their shitty behavior. and that's what this is.

like, we live in a society tm

if you like books but you don't have the means to pay for them, the library exists! libraries support authors! you know what doesn't support authors? stealing their books! because if those books don't sell, then you won't get more books from that author and/or the existing books will go out of print! because we live under capitalism.

and like, even leaving aside the capitalism thing, how much of a fucking piece of literal shit do you have to be to believe that you deserve art, that you deserve someone else's labor, but that they don't deserve to be able to live? to feed and clothe themselves? sure, ok, ideas aren't property, and you can't copyright an idea, but you absolutely can copyright the Specific Execution of an idea.

so makiruz, if you're reading this, or if you think like this user does, i hope you shit yourself during a job interview. like explosively. i hope you step on a lego when you get up to pee in the middle of the night. i hope you never get to read another book in your whole miserable goddamn life until you disabuse yourself of the idea that artists are "idea landlords" or whatever the fuck other cancerous ideas you've convinced yourself are true to justify your abhorrent behavior.

4K notes

Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein

2K notes

Text

I'm trying to debug a fairly subtle syntax error in a customer inventory report, and out of sheer morbid curiosity I decided to see what my SQL syntax checker's shiny new "Fix Syntax With AI" feature had to say about it.

After "thinking" about it for nearly a full minute, it produced the following:

SELECT

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

SELECT

COUNT(id)

FROM

customers

WHERE

customers.deleted = 0

AND customers.id = NULL

UNION

I suspect my day job isn't in peril any time soon.
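For anyone wondering why that output is useless even before you get to the syntax problems, here is a minimal sketch using Python's built-in `sqlite3` module. The table contents are made up for illustration (the original post only shows the column names `id` and `deleted`), and SQLite stands in for whatever database the report actually runs on. It shows two things: comparing a column with `= NULL` never matches a row, and `UNION` collapses identical result rows, so stacking the same branch repeatedly adds nothing.

```python
import sqlite3

# Hypothetical in-memory table; only the column names come from the generated query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, deleted INTEGER)")
conn.executemany(
    "INSERT INTO customers (id, deleted) VALUES (?, ?)",
    [(1, 0), (2, 0), (None, 0), (3, 1)],
)

BASE = "SELECT {count} FROM customers WHERE customers.deleted = 0 AND customers.id {test}"


def run(count: str, test: str) -> list:
    """Run one branch of the query with the given count expression and id test."""
    return conn.execute(BASE.format(count=count, test=test)).fetchall()


# "= NULL" evaluates to NULL (unknown) for every row, so nothing matches.
equals_null = run("COUNT(id)", "= NULL")

# "IS NULL" is the test that actually finds the row with a missing id.
is_null_rows = run("COUNT(*)", "IS NULL")

# COUNT(id) skips NULL values, so even with IS NULL it reports zero.
is_null_ids = run("COUNT(id)", "IS NULL")

# UNION removes duplicate rows, so three identical branches yield one row.
branch = BASE.format(count="COUNT(id)", test="= NULL")
unioned = conn.execute(f"{branch} UNION {branch} UNION {branch}").fetchall()

print(equals_null, is_null_rows, is_null_ids, unioned)
```

On top of that, the doubled `SELECT` at the top and the dangling `UNION` at the end would be rejected as syntax errors by any SQL parser I know of, so the "fix" would not run in the first place.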

2K notes

Text

The other thing about AI/Large image models is that there are things that they could be trained to do that are actually useful.

In college one of my friends was training a machine learning model to tell apart native fish species from invasive fish species in the Great Lakes to try to create a neural network program that could monitor cameras and count fish populations.

Yesterday I was reading about a man on a cruise ship who was reported missing in the afternoon, and when they went through the security footage, they found out that he had fallen overboard at 4 AM.

Imagine if an AI program had been trained on "people falling overboard" and been monitoring the security cameras and able to alert someone at 4 am that someone had fallen into the water! Imagine the animal population counts that an AI monitoring multiple trailcams in a wildlife area could do!

There are valid uses for this kind of pattern-matching large-data-processing recognition!

But no we're using it to replace writers and artists. Because it's easier, and more profitable.

400 notes

Link

Picture a world where computers can not only translate languages but also decipher biology’s convoluted language. This is the exciting frontier of Large Language Models (LLMs) that could transform our knowledge of genes and cells, which are the foundation of all life forms. Researchers from the Center, Chinese Academy of Sciences, China, explore this intriguing crossroad. Genes, which are passed down from one generation to another, hold the truths of our being. Cells, the tiny factories that keep us alive, execute these instructions coded in genes. Decoding how genes and cells interact helps to unravel health complications, diseases, and even mysteries regarding evolution.

Traditionally, scientists have used gene sequencing to study these intricate associations. But LLMs present an alternative way forward with immense promise. These models are trained using huge volumes of text data, enabling them to understand complicated patterns as well as mappings between them. Perhaps scientists can get a breakthrough by passing such datasets through LLMs.

32 notes

Text

Interestingly enough I think calling large language models a.i. is doing too much to humanize them. Because of how scifi literature has built up a.i. as living beings with actual working thought processes deserving of the classification of person (bicentennial man etc) a lot of people want to view a.i. as entities. And corporations pushing a.i. can take advantage of your soft feelings toward it like that. But LLMs are nowhere close to that, and tbh I don't even feel the way they learn approaches it. Word order guessing machines can logic the way to a regular sounding sentence but that's not anything approaching having a conversation with a person. Remembering what you said is just storing the information you are typing into it, it's not any kind of indication of existence. And yet, so many people online are acting like my grandma did when she was convinced siri was actually a lady living in her phone. I think we need to start calling large language models "LLMs" and not giving the corps pushing them more of an in with the general public. It's marketing spin, stop falling for it.
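To make the "word order guessing" idea concrete, here is a toy sketch. It is emphatically not how production LLMs are built (those are large neural networks trained over tokens, not word-pair counts), and the corpus, function names, and seed are all made up for illustration. It only shows the basic move of picking a next word based on what tended to follow the previous one.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for each word, which words followed it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict, start: str, length: int, seed: int = 0) -> str:
    """Guess one word at a time, weighted by how often it followed the last word."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = counts.get(out[-1])
        if not options:
            break  # the last word was never followed by anything in training
        words, weights = zip(*options.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)


# Hypothetical training text, chosen only to keep the example tiny.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
sentence = generate(model, "the", 6)
print(sentence)
```

The output reads like a sentence because every adjacent pair of words was seen in the training text, not because anything in the program knows what a cat or a rug is.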

#ai#llms#chatgpt#character ai#the fic ive seen written with it is also so sad and bland#even leaving the ethical qualms behind in the fact its trained off uncompensated work stolen off the internet and then used to make#commercial work outside the fic sphere#it also does a bad job#please read more quality stuff so you can recognize this

112 notes

Text

Putting this together with that one terrible tweet/video/screenshot we all saw today*, here is my suspicion:

They found out scraping AO3 filled OpenAI with omegaverse patterns and they went… OK, OK, it's not a flaw if we find a way to monetise it.

Anyway: STOP SAYING IT'S OK IF WE FEED IT OMEGAVERSE.

They DO NOT GIVE A SHIT. They JUST WANT TO STEAL FROM US.

And by the way: FUCK YOU.

If my kink is not your kink you should still defend to the death my right to ship it.

Can I suggest a new acronym for this terrible age?

Your Kink Is Not My Kink But I Will Defend It.

YKINMKBIWDI

Because it's no longer enough to scroll past. You do not have to read it, but we've learnt repeatedly that any excuse to limit kink in fanfic will be used to persecute queer people. Now we're seeing that denigrating and saying it's OK to feed some kinds of fanfic to the machines is just another way to push us all through the gaping maw of capitalism.

We need to defend each other's squicks, people.

I'll defend your right to student-teacher and smoking kinks if you defend my omegaverse.

I don't want to read it, but I will defend it. And so should you!

Can I get a reblog on this? I'm really disturbed by how quickly fandom pivoted towards 'It's OK and funny for machine learning to steal the WEIRD, KINKY fanfic.'

*Because I don't actually want to be annoying for people who didn't see it: a foolish young person thought it would be great to feed unfinished fics to ChatGPT so they could have an ending. And yeah, people said we should feed omegaverse to it instead :-////

#fanfic#chatgpt#openai#machine learning#llms#ao3#the pit#fanfic.net#wattpad#omegaverse#abo#a/b/o#YKINMKATOK#YKINMKBDI#your kink is not my kink and that's ok#your kink is not my kink but i will defend it

42 notes

Text

what's actually wrong with 'AI'

it's become impossible to ignore the discourse around so-called 'AI'. but while the bulk of the discourse is saturated with nonsense such as, i wanted to pool some resources to get a good sense of what this technology actually is, its limitations and its broad consequences.

what is 'AI'

the best essay to learn about what i mentioned above is On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? this essay cost two of its collaborators their jobs at Google. it frames what large language models are, what they can and cannot do and the actual risks they entail: not some 'super-intelligence' that we keep hearing about but concrete dangers: climate costs, the quality of the training data and biases - both from the training data and from us, the users.

The problem with artificial intelligence? It’s neither artificial nor intelligent

How the machine ‘thinks’: Understanding opacity in machine learning algorithms

The Values Encoded in Machine Learning Research

Troubling Trends in Machine Learning Scholarship: Some ML papers suffer from flaws that could mislead the public and stymie future research

AI Now Institute 2023 Landscape report (discussions of the power imbalance in Big Tech)

ChatGPT Is a Blurry JPEG of the Web

Can we truly benefit from AI?

Inside the secret list of websites that make AI like ChatGPT sound smart

The Steep Cost of Capture

labor

'AI' champions the facade of non-human involvement. but the truth is that this is a myth that serves employers by underpaying the hidden workers, denying them labor rights and social benefits - as well as hyping-up their product. the effects on workers are not only economic but detrimental to their health - both mental and physical.

OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic

also from Time: Inside Facebook's African Sweatshop

The platform as factory: Crowdwork and the hidden labour behind artificial intelligence

The humans behind Mechanical Turk’s artificial intelligence

The rise of 'pseudo-AI': how tech firms quietly use humans to do bots' work

The real aim of big tech's layoffs: bringing workers to heel

The Exploited Labor Behind Artificial Intelligence

workers surveillance

5 ways Amazon monitors its employees, from AI cameras to hiring a spy agency

Computer monitoring software is helping companies spy on their employees to measure their productivity – often without their consent

theft of art and content

Artists say AI image generators are copying their style to make thousands of new images — and it's completely out of their control (what gives me most hope about regulators dealing with theft is Getty images' lawsuit - unfortunately individuals simply don't have the same power as the corporation)

Copyright won't solve creators' Generative AI problem

AI is already taking video game illustrators’ jobs in China

Microsoft lays off team that taught employees how to make AI tools responsibly/As the company accelerates its push into AI products, the ethics and society team is gone

150 African Workers for ChatGPT, TikTok and Facebook Vote to Unionize at Landmark Nairobi Meeting

Inside the AI Factory: the Humans that Make Tech Seem Human

Refugees help power machine learning advances at Microsoft, Facebook, and Amazon

Amazon’s AI Cameras Are Punishing Drivers for Mistakes They Didn’t Make

China’s AI boom depends on an army of exploited student interns

political, social, ethical consequences

Afraid of AI? The startups selling it want you to be

An Indigenous Perspective on Generative AI

“Computers enable fantasies” – On the continued relevance of Weizenbaum’s warnings

‘Utopia for Whom?’: Timnit Gebru on the dangers of Artificial General Intelligence

Machine Bias

HUMAN_FALLBACK

AI Ethics Are in Danger. Funding Independent Research Could Help

AI Is Tearing Wikipedia Apart

AI machines aren’t ‘hallucinating’. But their makers are

The Great A.I. Hallucination (podcast)

“Sorry in Advance!” Rapid Rush to Deploy Generative A.I. Risks a Wide Array of Automated Harms

The promise and peril of generative AI

ChatGPT Users Report Being Able to See Random People's Chat Histories

Benedetta Brevini on the AI sublime bubble – and how to pop it

Eating Disorder Helpline Disables Chatbot for 'Harmful' Responses After Firing Human Staff

AI moderation is no match for hate speech in Ethiopian languages

Amazon, Google, Microsoft, and other tech companies are in a 'frenzy' to help ICE build its own data-mining tool for targeting unauthorized workers

Crime Prediction Software Promised to Be Free of Biases. New Data Shows It Perpetuates Them

The EU AI Act is full of Significance for Insurers

Proxy Discrimination in the Age of Artificial Intelligence and Big Data

Welfare surveillance system violates human rights, Dutch court rules

Federal use of A.I. in visa applications could breach human rights, report says

Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI

Generative AI Is Making Companies Even More Thirsty for Your Data

environment

The Generative AI Race Has a Dirty Secret

Black boxes, not green: Mythologizing artificial intelligence and omitting the environment

Energy and Policy Considerations for Deep Learning in NLP

AINOW: Climate Justice & Labor Rights

militarism

The Growing Global Spyware Industry Must Be Reined In

AI: the key battleground for Cold War 2.0?

‘Machines set loose to slaughter’: the dangerous rise of military AI

AI: The New Frontier of the EU's Border Externalisation Strategy

The A.I. Surveillance Tool DHS Uses to Detect ‘Sentiment and Emotion’

organizations

AI now

DAIR

podcast episodes

Pretty Heady Stuff: Dru Oja Jay & James Steinhoff guide us through the hype & hysteria around AI

Tech Won't Save Us: Why We Must Resist AI w/ Dan McQuillan, Why AI is a Threat to Artists w/ Molly Crabapple, ChatGPT is Not Intelligent w/ Emily M. Bender

SRSLY WRONG: Artificial Intelligence part 1, part 2

The Dig: AI Hype Machine w/ Meredith Whittaker, Ed Ongweso, and Sarah West

This Machine Kills: The Triforce of Corporate Power in AI w/ ft. Sarah Myers West

#masterpost#reading list#ai#artificial art#artificial intelligence#technology#big tech#surveillance capitalism#data capital#openai#chatgpt#machine learning#r/#readings#resources#ref#AI now#LLMs#chatbots#data mining#labor#p/#generative ai#research#capitalism

35 notes

Text

I've heard it observed that synthetic media generation is poised to replace entry level jobs, making it ever more difficult for new workers to break into an industry. This thread seems to support the argument. What Matt is describing here is essentially the automation of entry level legal work. I'm sure it works for him, but if this individual has figured it out, so will large firms. When the senior partners at major firms all do this to prepare research instead of paying a new generation of clerks and paralegals, how does the next generation learn the ropes and develop the skills necessary to become a "legal practitioner"?

4 notes

Photo

Folks, in case you had any doubts about “do these AO3 spam commenters really scan the text of a work, and give it any kind of analysis that matters?”...

This is the chapter it called out as “written” by an AI text generator:

#AO3#Moon Knight#Ptah's fic#AI text generator#LLMs#spam comments#ooh yeah we definitely use Spicy Autocomplete to make...drawings#take me to AO3 jail and lock me up for that one!

25 notes

Text

Sympathy for the spammer

Catch me in Miami! I'll be at Books and Books in Coral Gables on Jan 22 at 8PM.

In any scam, any con, any hustle, the big winners are the people who supply the scammers – not the scammers themselves. The kids selling dope on the corner are making less than minimum wage, while the respectable crime-bosses who own the labs clean up. Desperate "retail investors" who buy shitcoins from Superbowl ads get skinned, while the MBA bros who issue the coins make millions (in real dollars, not crypto).

It's ever been thus. The California gold rush was a con, and nearly everyone who went west went broke. Famously, the only reliable way to cash out on the gold rush was to sell "picks and shovels" to the credulous, doomed and desperate. That's how Leland Stanford made his fortune, which he funneled into eugenics programs (and founding a university):

https://www.hachettebookgroup.com/titles/malcolm-harris/palo-alto/9780316592031/

That means that the people who try to con you are almost always getting conned themselves. Think of Multi-Level Marketing (MLM) scams. My forthcoming novel The Bezzle opens with a baroque and improbable fast-food Ponzi in the town of Avalon on the island of Catalina, founded by the chicle monopolist William Wrigley Jr:

http://thebezzle.org

Wrigley found fast food declasse and banned it from the island, a rule that persists to this day. In The Bezzle, the forensic detective Martin Hench uncovers The Fry Guys, an MLM that flash-freezes contraband burgers and fries smuggled on-island from the mainland and sells them to islanders through an "affiliate marketing" scheme that is really about recruiting other affiliate marketers to sell under you. As with every MLM, the value of the burgers and fries sold is dwarfed by the gigantic edifice of finance fraud built around it, with "points" being bought and sold for real cash, which is snaffled up and sucked out of the island by a greedy mainlander who is behind the scheme.

A "bezzle" is John Kenneth Galbraith's term for "the magic interval when a confidence trickster knows he has the money he has appropriated but the victim does not yet understand that he has lost it." In every scam, there's a period where everyone feels richer – but only the scammers are actually cleaning up. The wealth of the marks is illusory, but the longer the scammer can preserve the illusion, the more real money the marks will pump into the system.

MLMs are particularly ugly, because they target people who are shut out of economic opportunity – women, people of color, working people. These people necessarily rely on social ties for survival, looking after each other's kids, loaning each other money they can't afford, sharing what little they have when others have nothing.

It's this social cohesion that MLMs weaponize. Crypto "entrepreneurs" are encouraged to suck in their friends and family by telling them that they're "building Black wealth." Working women are exhorted to suck in their bffs by appealing to their sisterhood and the chance for "women to lift each other up."

The "sales people" trying to get you to buy crypto or leggings or supplements are engaged in predatory conduct that will make you financially and socially worse off, wrecking their communities' finances and shattering the mutual aid survival networks they rely on. But they're not getting rich on this – they're also being scammed:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4686468

This really hit home for me in the mid-2000s, when I was still editing Boing Boing. We had a submission form where our readers could submit links for us to look at for inclusion on the blog, and it was overwhelmed by spam. We'd add all kinds of antispam to it, and still, we'd get floods of hundreds or even thousands of spam submissions to it.

One night, I was lying in my bed in London and watching these spams roll in. They were all for small businesses in the rustbelt, handyman services, lawn-care, odd jobs, that kind of thing. They were 10 million miles from the kind of thing we'd ever post about on Boing Boing. They were coming in so thickly that I literally couldn't finish downloading my email – the POP session was dropping before I could get all the mail in the spool. I had to ssh into my mail server and delete them by hand. It was maddening.

Frustrated and furious, I started calling the phone numbers associated with these small businesses, demanding an explanation. I assumed that they'd hired some kind of sleazy marketing service and I wanted to know who it was so I could give them a piece of my mind.

But what I discovered when I got through was much weirder. These people had all been laid off from factories that were shuttering due to globalization. As part of their termination packages, their bosses had offered them "retraining" via "courses" in founding their own businesses.

The "courses" were the precursors to the current era's rise-and-grind hustle-culture scams (again, the only people getting rich from that stuff are the people selling the courses – the "students" finish the course poorer). They promised these laid-off workers, who'd given their lives to their former employers before being discarded, that they just needed to pull themselves up by their own bootstraps:

https://pluralistic.net/2023/04/10/declaration-of-interdependence/#solidarity-forever

After all, we had the internet now! There were so many new opportunities to be your own boss! The course came with a dreadful build-your-own-website service, complete with an overpriced domain sales portal, and a single form for submitting your new business to "thousands of search engines."

This was nearly 20 years ago, but even then, there was really only one search engine that mattered: Google. The "thousands of search engines" the scammers promised to submit these desperate people's websites to were just submission forms for directories, indexes, blogs, and mailing lists. The number of directories, indexes, blogs and mailing lists that would publish their submissions was either "zero" or "nearly zero." There was certainly no possibility that anyone at Boing Boing would ever press the wrong key and accidentally write a 500-word blog post about a leaf-raking service in a collapsing deindustrialized exurb in Kentucky or Ohio.

The people who were drowning me in spam weren't the scammers – they were the scammees.

But that's only half the story. Years later, I discovered how our submission form was getting included in this get-rich-quick scheme's mass-submission system. It was an MLM! Coders in the former Soviet Union were getting work via darknet websites that promised them relative pittances for every submission form they reverse-engineered and submitted. The smart coders didn't crack the forms directly – they recruited other, less business-savvy coders to do that for them, and then often as not, ripped them off.

The scam economy runs on this kind of indirection, where scammees are turned into scammers, who flood useful and productive and nice spaces with useless dross that doesn't even make them any money. Take the submission queue at Clarkesworld, the great online science fiction magazine, which famously had to close after it was flooded with thousands of junk submissions "written" by LLMs:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

There was a zero percent chance that Neil Clarke would accidentally accept one of these submissions. They were uniformly terrible. The people submitting these "stories" weren't frustrated sf writers who'd discovered a "life hack" that let them turn out more brilliant prose at scale.

They were scammers who'd been scammed into thinking that AIs were the key to a life of passive income, a 4-Hour Work-Week powered by an AI-based self-licking ice-cream cone:

https://pod.link/1651876897/episode/995c8a778ede17d2d7cff393e5203157

This is absolutely classic passive-income brainworms thinking. "I have a bot that can turn out plausible sentences. I will locate places where sentences can be exchanged for money, aim my bot at it, sit back, and count my winnings." It's MBA logic on meth: find a thing people pay for, then, without bothering to understand why they pay for that thing, find a way to generate something like it at scale and bombard them with it.

Con artists start by conning themselves, with the idea that "you can't con an honest man." But the factor that predicts whether someone is connable isn't their honesty – it's their desperation. The kid selling drugs on the corner, the mom desperately DMing her high-school friends to sell them leggings, the cousin who insists that you get in on their shitcoin – they're all doing it because the system is rigged against them, and getting worse every day.

These people reason – correctly – that all the people getting really rich are scamming. If Amazon can make $38b/year selling "ads" that push worse products that cost more to the top of their search results, why should the mere fact that an "opportunity" is obviously predatory and fraudulent disqualify it?

https://pluralistic.net/2023/11/29/aethelred-the-unready/#not-one-penny-for-tribute

The quest for passive income is really the quest for a "greater fool," the economist's term for the person who relieves you of the useless crap you just overpaid for. It rots the mind, atomizes communities, shatters solidarity and breeds cynicism:

https://pluralistic.net/2023/02/24/passive-income/#swiss-cheese-security

The rise and rise of botshit cannot be separated from this phenomenon. The botshit in our search-results, our social media feeds, and our in-boxes isn't making money for the enshittifiers who send it – rather, they are being hustled by someone who's selling them the "picks and shovels" for the AI gold rush:

https://www.theguardian.com/commentisfree/2024/jan/03/botshit-generative-ai-imminent-threat-democracy

That's the true cost of all the automation-driven unemployment criti-hype: while we're nowhere near a place where bots can steal your job, we're certainly at the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The manic "entrepreneurs" who've been stampeded into panic by the (correct) perception that the economy is a game of musical chairs where the number of chairs is decreasing at breakneck speed are easy marks for the Leland Stanfords of AI, who are creating generational wealth for themselves by promising that their bots will automate away all the tedious work that goes into creating value. Expect a lot more Amazon Marketplace products called "I'm sorry, I cannot fulfil this request as it goes against OpenAI use policy":

https://www.theverge.com/2024/1/12/24036156/openai-policy-amazon-ai-listings

No one's going to buy these products, but the AI picks-and-shovels people will still reap a fortune from the attempt. And because history repeats itself, these newly minted billionaires are continuing Leland Stanford's love affair with eugenics:

https://www.truthdig.com/dig-series/eugenics/

The fact that AI spam doesn't pay is important to the fortunes of AI companies. Most high-value AI applications are very risk-intolerant (self-driving cars, radiology analysis, etc). An AI tool might help a human perform these tasks more accurately – by warning them of things that they've missed – but that's not how AI will turn a profit. There's no market for AI that makes your workers cost more but makes them better at their jobs:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Plenty of people think that spam might be the elusive high-value, low-risk AI application. But that's just not true. The point of AI spam is to get clicks from people who are looking for better content. It's SEO. No one reads 2000 words of algorithm-pleasing LLM garbage over an omelette recipe and then subscribes to that site's feed.

And the omelette recipe generates pennies for the spammer that posted it. They are doing massive volume in order to make those pennies into dollars. You don't make money by posting one spam. If every spammer had to pay the actual recovery costs (energy, chillers, capital amortization, wages) for their query, every AI spam would lose (lots of) money.

Hustle culture and passive income are about turning other people's dollars into your dimes. It is a negative-sum activity, a net drain on society. Behind every seemingly successful "passive income" is a con artist who's getting rich by promising – but not delivering – that elusive passive income, and then blaming the victims for not hustling hard enough:

https://www.ftc.gov/business-guidance/blog/2023/12/blueprint-trouble

I'm Kickstarting the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic #late-stage capitalism #end-stage capitalism #feudalism #rentierism #blueprint for wealth #predation #clarkesworld #kindle #kindle unlimited program #kup #pyramid schemes #mlms #multilevel marketing #amway #spam #form spam #enshittification #ai #llms #large language models #chatbots #ucm #seo #search engine optimization #dark seo #passive income #passive income brainworms

820 notes

Text

youtube

Despite the linkbaity, trend-chasing title and thumb, this video essay is really about the writing process. It explores the potential for AI LLMs to serve as a "reflective other" to refine one's own ideas and organization, the potential dangers of a world where nobody reads and nobody writes, and why talking to your instructor is always best practice.

#AI writing #chatgpt #ai assistance #AI ethics #plagiarism #college life #college writing #university #academia #essay writing #LLMs #openai #Youtube

4 notes

Text

ELIZA was a simple program, launched in 1966, that looked for keywords like “mother” and echoed back “How do you feel about your mother?” If it didn’t find the keywords, it echoed back bland phrases like “Tell me more.”
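The keyword-and-echo behavior described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Weizenbaum's original program: the keyword table, the `respond` function name, and the "father" entry are all made up here, and the real ELIZA script was considerably more elaborate.

```python
import re

# Hypothetical keyword table for illustration; only the "mother" pairing
# and the "Tell me more." fallback come from the description above.
KEYWORD_RESPONSES = {
    "mother": "How do you feel about your mother?",
    "father": "How do you feel about your father?",
}
FALLBACK = "Tell me more."


def respond(user_input: str) -> str:
    """Return a canned reply for the first known keyword, else a bland fallback."""
    # Lowercase the input and split it into simple words.
    words = re.findall(r"[a-z']+", user_input.lower())
    for word in words:
        if word in KEYWORD_RESPONSES:
            return KEYWORD_RESPONSES[word]
    return FALLBACK


if __name__ == "__main__":
    print(respond("My mother called me today."))
    print(respond("It rained all week."))
```

Nothing in this loop models what the user means; it only matches strings, which is the superficiality the passage is pointing at.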

Ironically, though [MIT computer scientist Joseph] Weizenbaum had designed ELIZA to demonstrate how superficial the state of human-to-machine conversation was, it had the opposite effect. People were entranced, engaging in long, deep, and private conversations with a program that was only capable of reflecting users’ words back to them. Weizenbaum was so disturbed by the public response that he spent the rest of his life warning against the perils of letting computers — and, by extension, the field of AI he helped launch — play too large a role in society.

Chatbots today operate on the same principles as ELIZA, but are far more sophisticated, making it more likely that users will trick themselves into believing chatbots are people. And Americans today are in the midst of an epidemic of loneliness.

To Michael Sacasas, an independent scholar of technology and author of The Convivial Society newsletter, this is cause for concern above and beyond Weizenbaum’s warnings. “We anthropomorphize because we do not want to be alone,” Sacasas recently wrote. “Now we have powerful technologies, which appear to be finely calibrated to exploit this core human desire.”

The lonelier we get, the more exploitable by these technologies we become. “When these convincing chatbots become as commonplace as the search bar on a browser,” Sacasas continues, “we will have launched a social-psychological experiment on a grand scale which will yield unpredictable and possibly tragic results.”

23 notes

Text

made my first video game song by fucking around and finding out

6 notes

Link

The DNA, RNA, and proteins that control an organism’s entire functioning are all fully encoded in a sequence called the genome. Large-scale genome databases and machine learning advances may make it possible to create a biological foundation model that speeds up the generative design and mechanistic analysis of intricate molecular interactions. Researchers from Arc Institute, TogetherAI, and collaborators present Evo, a genomic foundation model that handles both prediction and generation tasks at the molecular and genome scales.

The deep signal processing architecture Evo has been scaled to 7 billion parameters at single-nucleotide byte resolution, with a context length of 131 kilobases (kb). Evo, having been trained on entire prokaryotic genomes, is capable of outperforming domain-specific language models in zero-shot function prediction. For the first time, it can create whole transposable systems and artificial CRISPR-Cas molecular complexes, demonstrating its proficiency in multielement production tasks. Evo has the potential to advance our knowledge and management of biology at many levels of complexity, as evidenced by its ability to predict gene essentiality at nucleotide precision and produce coding-rich sequences up to 650 kb in length.
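To make "single-nucleotide byte resolution" concrete, here is a rough Python sketch of byte-level tokenization, where every nucleotide becomes exactly one token. It is not Evo's actual tokenizer or API: the function names are hypothetical, and `CONTEXT_LENGTH` assumes that 131 kb means 131,000 bases.

```python
# Assumption: "131 kb" is read here as 131,000 bases; the model's exact
# context size may differ.
CONTEXT_LENGTH = 131_000


def tokenize(sequence: str) -> list[int]:
    """Map each nucleotide character to its ASCII byte value (one token per base)."""
    return list(sequence.upper().encode("ascii"))


def split_into_windows(tokens: list[int], size: int = CONTEXT_LENGTH) -> list[list[int]]:
    """Cut a token list into consecutive windows of at most `size` tokens."""
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


if __name__ == "__main__":
    tokens = tokenize("acgtacgt")
    print(tokens)
    print(len(split_into_windows(tokens, size=3)))
```

With one token per base, the context length in tokens equals the number of nucleotides the model can see at once, which is why the figure is quoted in kilobases.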

36 notes
