#robolawyers

Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

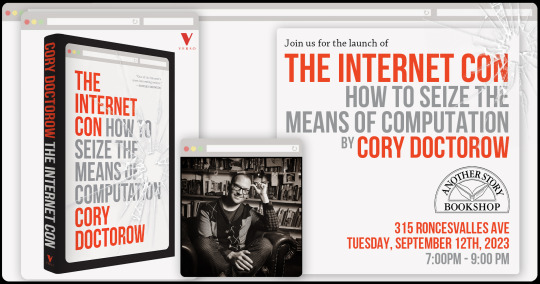

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use (and even be impressed by) an AI chatbot, and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and those sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't for its recipient to parse the text; it's to send a signal. If the letter were well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein

Text

How Can Policymakers Encourage More Robo-Lawyers? – Center for Data Innovation

Advances in digital automation could enable many individuals and businesses to access better and cheaper legal services. Robo-lawyers—tech-enabled legal services—could help clients review contracts, draft patent applications, write legal briefs, and more. Unfortunately, state laws and professional licensing requirements significantly limit the development of technology-enabled legal services that…

Text

In a world where 🤖👉😵 robots are ready to take over jobs faster than you can say "unemployment," 🧑⚖️📝 the legal field falls under the sway of an unexpected duo: LegalBot 9000 and Politibot Prime. When Max LegalEagle uncovers a sinister 📚🌟🌈 conspiracy to automate the legal system and politicize 🔜👩🏻⚖️⚖️ justice, chaos ensues. Picture it: LegalBot 9000 cites case law at warp 🏎️💨🔥 speed, while Politibot Prime winks and shakes hands, promising 📝🗳 votes from every constituency all the way to Capitol Hill. It's a hilarious battle of man versus machine, where 👨⚖️🫵 courtroom drama collides with political satire, leaving everyone to wonder: will 🧙♀️🫸🔮🫷 the future be decided by bytes or by ballots? Get ready for an extra helping of 👔💼🗳️ political ambition and robotic ingenuity!

#RoboLawyer#LegalTech#AIinLaw#FutureOfWork#RobotTakeover#AutomationNation#LegalRevolution#TechVsLaw#Politibot#LegalDisruption#MachineVsMan#LegalInnovation#RobotRiot#AIinJustice#DigitalTransformation#LawyerVsRobot#LegalEagle#FutureLegal#RoboticsRevolution#LegalAI#TechInLaw#LegalDystopia

Link

Over the coming decades, AI will shake up many fields, including legal practice, healthcare and finance. Not all fields will be affected at the same pace or to the same extent, but change they will. Healthcare professionals already use AI to improve the efficacy of diagnosis and to confirm lines of treatment. Law firms, too, are beginning to use AI to ease the tedium of trawling through case law and judgments to identify precedents and the reasoning of the benches involved.

Text

Don't fear robo-justice: Algorithms could help more people access legal advice

- By John Zeleznikow -

Algorithms have a role to play in supporting, but not replacing, lawyers. Around 15 years ago, my team and I created an automated tool that helped determine eligibility for legal aid. Known as GetAid, it was built for Victoria Legal Aid (VLA), which helps people with legal problems to find representation. At that time, the task of determining who could access VLA's services chewed up a significant amount of its operating budget.

After passing a financial test, applicants also needed to pass a merit test: would their case have a reasonable chance of being accepted by a court? GetAid provided advice about both stages using decision trees and machine learning.
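The two-stage structure described above (a financial test first, then a merit test) can be illustrated with a toy Python sketch. Everything in it is an assumption for illustration: the income and asset limits, the `Application` fields, the success-rate cutoff, and the hand-written decision tree standing in for GetAid's learned merit model are hypothetical and are not VLA's actual rules.

```python
from dataclasses import dataclass

# Hypothetical limits for illustration only; not VLA's actual means test.
INCOME_LIMIT = 30_000
ASSET_LIMIT = 10_000
MIN_SUCCESS_RATE = 0.5  # hypothetical cutoff for "reasonable chance"


@dataclass
class Application:
    annual_income: float
    assets: float
    has_supporting_evidence: bool
    similar_cases_won: int
    similar_cases_total: int


def passes_financial_test(app: Application) -> bool:
    """Stage 1: a simple means test on income and assets."""
    return app.annual_income <= INCOME_LIMIT and app.assets <= ASSET_LIMIT


def assess(app: Application) -> str:
    """Run the financial test, then a small hand-coded merit decision tree."""
    if not passes_financial_test(app):
        return "ineligible: financial test"
    # Stage 2: the merit test, written as a tiny decision tree.
    if not app.has_supporting_evidence:
        return "ineligible: merit test"
    if app.similar_cases_total == 0:
        # No comparable past outcomes to learn from, so defer to a person.
        return "refer to a human assessor"
    success_rate = app.similar_cases_won / app.similar_cases_total
    if success_rate >= MIN_SUCCESS_RATE:
        return "eligible"
    return "ineligible: merit test"


print(assess(Application(22_000, 4_000, True, 7, 10)))  # eligible
```

Ordering the cheap financial check before the merit check mirrors the sequence in the text: an applicant who fails the means test never reaches the harder merit question.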

It never came online for applicants. But all these years later, the idea of using tools such as GetAid in the legal system is being taken seriously. Humans now feel far more comfortable using software to assist with, and even make, decisions. There are two major reasons for this change:

Efficiency: the legal community has moved away from charging clients in six-minute blocks and instead has become concerned with providing economical advice.

Acceptance of the internet: legal professionals finally acknowledge that the internet can be a safe way of conducting transactions and can be used to provide important advice and to collect data.

This is a good development. Intelligent decision support systems can help streamline the legal system and provide useful advice to those who cannot afford professional assistance.

Intelligent legal decision support systems

While robots are unlikely to replace judges, automated tools are being developed to support legal decision making. In fact, they could help support access to justice in areas such as divorce, owners corporation disputes and small value contracts.

In cases where litigants cannot afford the assistance of lawyers or choose to appear in court unrepresented, systems have been developed that can advise about the potential outcome of their dispute. This helps them have reasonable expectations and make acceptable arguments.

Our Split-Up software, for example, helps users understand how Australian Family Court judges distribute marital property after a divorce.

The innovative part of the process is not the computer algorithm, but dividing the process into 94 arguments, including issues such as the contributions of the wife relative to the husband; the future needs of the wife relative to the husband; and the marriage’s level of wealth.

Using a form of statistical machine learning known as a neural network, it examines the strength of the weighting factors – contributions, needs and level of wealth – to determine an answer about the possible percentage split.
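To make the idea of "weighting factors" concrete, here is a minimal Python sketch of a single artificial neuron (the smallest possible neural network) combining the three factors named above into a percentage split. It is not Split-Up's model: the factor encoding (scores in [-1, 1], positive favouring the wife), the weights, the bias and the 20 to 80 per cent output band are all invented for illustration, and the real system reasoned over 94 arguments rather than three inputs.

```python
import math

# Hypothetical weights for illustration; not Split-Up's trained parameters.
WEIGHTS = {"contributions": 1.5, "needs": 1.0, "wealth": 0.5}
BIAS = 0.0
# Assumed band of plausible outcomes, in percent of the property pool.
MIN_SHARE, MAX_SHARE = 20.0, 80.0


def wife_share(factors: dict[str, float]) -> float:
    """Predict the wife's percentage of marital property.

    Each factor is a hypothetical score in [-1, 1]; positive values favour
    the wife relative to the husband. One sigmoid neuron combines them.
    """
    z = BIAS + sum(WEIGHTS[name] * factors[name] for name in WEIGHTS)
    activation = 1.0 / (1.0 + math.exp(-z))  # squashes z into (0, 1)
    return MIN_SHARE + (MAX_SHARE - MIN_SHARE) * activation


print(round(wife_share({"contributions": 0.4, "needs": 0.2, "wealth": 0.0}), 1))  # 61.4
```

When every factor is neutral (all zeros), the sketch predicts an even 50 per cent split; training would replace the hand-set weights with values fitted to past judgments.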

Other platforms follow a similar model. Developed by the Dutch Legal Aid Board, the Rechtwijzer dispute resolution platform allows people who are separating to answer questions that ultimately guide them to information relevant to their family situation.

Another major use of intelligent online dispute resolution is British Columbia's Civil Resolution Tribunal. It helps people affordably resolve small claims disputes of C$5,000 and under, as well as strata property conflicts.

Its initiators say that one of the common misconceptions about the system is that it offers a form of “robojustice” – a future where “disputes are decided by algorithm”.

Instead, they argue the Civil Resolution Tribunal is human-driven:

From the experts who share their knowledge through the Solution Explorer, to the dispute resolution professionals serving as facilitators and adjudicators, the CRT rests on human knowledge, skills and judgement.

Concerns about the use of robo-justice

Twenty years after we first began constructing intelligent legal decision support systems, the underlying algorithms are not much smarter, but developments in computer hardware mean machines can now search larger databases far more quickly.

Critics are concerned that the use of machine learning in the legal system will worsen biases against minorities, or deepen the divide between those who can afford quality legal assistance and those who cannot.

There is no doubt that algorithms will continue to perpetuate existing biases against vulnerable groups, but this is because the algorithms are largely copying and amplifying the decision-making trends embedded in the legal system.

In reality, there is already a class divide in legal access – those who can afford high quality legal professionals will always have an advantage. The development of intelligent support systems can partially redress this power imbalance by providing users with important legal advice that was previously unavailable to them.

There will always be a need for judges with advanced legal expertise to deal with situations that fall outside the norm. Artificial intelligence relies upon learning from prior experience and outcomes, and should not be used to make decisions about the facts of a case.

Ultimately, to pursue “real justice”, we need to change the law. In the meantime, robots can help with the smaller stuff.

John Zeleznikow, Professor of Information Systems; Research Associate, Institute of Sport, Exercise and Active Living, Victoria University

This article was originally published on The Conversation.

Attribution-NoDerivatives 4.0 International (CC BY-ND 4.0)

Text

@klinki valid. every humagear should learn from this honorable robolawyer

Text

Master Class of Dr. Ammar Younas on "Philosophy of E-Justice" was attended by a full house at Tashkent State University of Law

#AI #aiethics #aieducation #ejustice #cyberlaw #legaltech #legalcourt #robolawyer #robojudge #centralasia #Uzbekistan #Tashkent #techlawyer

Photo

Robo Revenge automates the process of suing telemarketers Robo Revenge is the latest brainchild of Joshua Browder, creator of the robolawyer parking-ticket-fighting app called DoNotPay. Robo Revenge gives you a burner credit card, which provides its robol… Source: Robo Revenge automates the process of suing telemarketers / Boing Boing

Quote

The most successful robolawyer yet was developed by a British teenager named Joshua Browder. Called DoNotPay, it’s a free parking-ticket-fighting chatbot that asks a series of questions about your case—Were the signs clearly marked? Were you parked illegally because of a medical emergency?—and generates a letter that can be filed with the appropriate agency. So far, the bot has helped more than 215,000 people beat traffic and parking tickets in London, New York, and Seattle. Browder recently added new functions—DoNotPay can now help people demand compensation from airlines for delayed flights and file paperwork for government housing assistance—and more are on the way.

Rise of the Robolawyers

Link

Lawyers should see Artificial Intelligence as an opportunity rather than a threat, because it will give them more time to concentrate on the work that is truly valuable to their clients.

Text

AI, 1A and Citizens United

You've heard of Clearview AI: they're the grifty facial recognition system with ties to violent white nationalist terrorists that scraped social media for training data and then covertly sold unsupervised access to cops, schools and businesses.

https://pluralistic.net/2020/03/06/robolawyers-vs-databrokers/#clearviewlied

As the Clearview story dribbled out in the press, the company insisted that it was a responsible custodian of our faces and could be relied upon to wield power with prudence and caution.

Then its entire customer database leaked.

https://pluralistic.net/2020/02/28/pluralistic-your-daily-link-dose-28-feb-2020/#petard

The company's in legal trouble but it has deep pockets, thanks to the public monies it has trousered and the wealthy investors who showered it with capital - men like John Catsimatidis, owner of New York's Gristedes groceries.

https://www.nytimes.com/2020/03/05/technology/clearview-investors.html

That capital has allowed the company to acquire a terrifying legal weapon: the services of Floyd Abrams, the "First Amendment" lawyer who successfully argued Citizens United, clearing the way for unlimited corporate bribery in the US political process.

https://www.techdirt.com/articles/20200811/10003245090/clearview-hires-prominent-first-amendment-lawyer-to-argue-right-to-sell-scraped-data-to-cops.shtml

Abrams's argument is that there is a speech interest in Clearview's ability to flog our faces to governments and corporations, that this is "talking about us" and thus "speech" protected by the First Amendment.

Now, I know a lot of really top-notch #1A lawyers, and some true Free Speech believers, and collectively, they sometimes support things I do not - defending the speech rights of people who want to exterminate others' speech rights, say.

Likewise, they sometimes fail to champion causes I believe in, like the need to enforce competition law to protect pluralism in venues for speech so that firms do not end up with undue control over our public discourse.

https://locusmag.com/2020/01/cory-doctorow-inaction-is-a-form-of-action/

But despite these differences, I respect these people, because they have a consistent set of well-articulated principles that come from a place of deep moral conviction that is ultimately grounded in protecting the views of disfavored minorities from bullies and autocrats.

Floyd Abrams's positions do not hew to that honest morality. Instead, he defends contradictory speech positions seemingly based on who is signing his paycheck.

For example, he argued that SOPA - which would remove internet users' speech without due process in the name of defending copyright - did not violate the First Amendment.

https://www.techdirt.com/articles/20111111/16242216727/first-amendment-expert-floyd-abrams-admits-sopa-would-censor-protected-speech-thinks-its-okay-collateral-damage.shtml

As EFF Exec Director Cindy Cohn likes to say, "We know you love the First Amendment, sir, we just wish you'd share."

As is so often the case with Abrams's speech positions, this Clearview business has profound implications for our democratic fundamentals. In this case, the idea that the First Amendment protects the conveyance of private information is irreconcilable with privacy law.

That is: if laws restricting the disclosure of private information violate the First Amendment, then your privacy violates my right to free speech: I can spy on you and everything you do, and sell or give away the information I glean, and you can't do shit about it.

A precedent upholding Clearview's right to sell our faces would be a potentially fatal blow to the long-running, hard-fought battle to get a US federal privacy statute with a private right of action, something this country DESPERATELY needs.

Which is ironic, because the Constitutional bases for so many US privacy decisions turn on the First Amendment: your ability to speak freely depends on your ability to speak privately, so that powerful entities like Abrams's clients can't retaliate against you.

Meanwhile, I'm guessing Abrams would - if paid - argue that nondisclosure agreements crammed down the throats of employees and other people with weak negotiating positions DON'T violate the 1A, because people like Abrams love the First Amendment, but never want to share.

Image:

Cryteria

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY (modified):

https://creativecommons.org/licenses/by/3.0/deed.en

Text

SRA awarded £700,000 to fund ‘robolawyer’ projects

Taxpayers’ cash will help support AI innovations within the legal services sector

The Solicitors Regulation Authority (SRA) has today been awarded just shy of £700,000 in taxpayers’ cash to fund artificial intelligence (AI) projects.

It was one of 15 winning projects for the £10 million ‘Regulators’ Pioneer Fund’. Backed by the Department for Business, Energy and Industrial Strategy, the new fund supports UK regulators in delivering cutting-edge projects that keep pace with technology.

The SRA’s project, Data-Driven Innovation in Legal Services, will seek out and accelerate ethical AI-powered legal services — potentially laying the groundwork for “robolawyers” of the future, according to a government statement. The focus will be on growing the large underdeveloped legal services market for small businesses and consumers, where AI and automation can have a transformative impact.

Commenting on the news, Paul Philip, SRA chief executive, said:

“Smart use of technology could help tackle the problem that far too many people struggle to access expert legal advice. It will help us further build on our work to encourage new ways of delivering legal services, benefiting both the public and small business.”

Other winning projects to take a slice of the £10 million pot include the Civil Aviation Authority, which received £1 million to find ways to unblock barriers to innovations such as flying taxis. The winning regulators will launch their projects in October.

The post SRA awarded £700,000 to fund ‘robolawyer’ projects appeared first on Legal Cheek.

from Legal News https://www.legalcheek.com/2018/10/sra-awarded-700000-to-fund-robolawyer-projects/
