#Computational linguistics

Text

Podcasting "How To Think About Scraping"

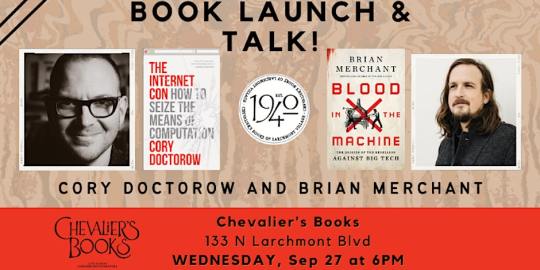

On September 27, I'll be at Chevalier's Books in Los Angeles with Brian Merchant for a joint launch for my new book The Internet Con and his new book, Blood in the Machine. On October 2, I'll be in Boise to host an event with VE Schwab.

This week on my podcast, I read my recent Medium column, "How To Think About Scraping: In privacy and labor fights, copyright is a clumsy tool at best," which proposes ways to retain the benefits of scraping without the privacy and labor harms that sometimes accompany it:

https://doctorow.medium.com/how-to-think-about-scraping-2db6f69a7e3d?sk=4a1d687171de1a3f3751433bffbb5a96

What are those benefits from scraping? Well, take computational linguistics, a relatively new discipline that is producing the first accounts of how informal language works. Historically, linguists overstudied written language (because it was easy to analyze) and underanalyzed speech (because you had to record speakers and then get grad students to transcribe their dialog).

The thing is, very few of us produce formal, written work, whereas we all engage in casual dialog. But then the internet came along, and for the first time, we had a species of mass-scale, informal dialog that also written, and which was born in machine-readable form.

This ushered in a new era in linguistic study, one that is enthusiastically analyzing and codifying the rules of informal speech, the spread of vernacular, and the regional, racial and class markers of different kinds of speech:

https://memex.craphound.com/2019/07/24/because-internet-the-new-linguistics-of-informal-english/

The people whose speech is scraped and analyzed this way are often unreachable (anonymous or pseudonymous) or impractical to reach (because there's millions of them). The linguists who study this speech will go through institutional review board approvals to make sure that as they produce aggregate accounts of speech, they don't compromise the privacy or integrity of their subjects.

Computational linguistics is an unalloyed good, and while the speakers whose words are scraped to produce the raw material that these scholars study, they probably wouldn't object, either.

But what about entities that explicitly object to being scraped? Sometimes, it's good to scrape them, too.

Since 1996, the Internet Archive has scraped every website it could find, storing snapshots of every page it found in a giant, searchable database called the Wayback Machine. Many of us have used the Wayback Machine to retrieve some long-deleted text, sound, image or video from the internet's memory hole.

For the most part, the Internet Archive limits its scraping to websites that permit it. The robots exclusion protocol (AKA robots.txt) makes it easy for webmasters to tell different kinds of crawlers whether or not they are welcome. If your site has a robots.txt file that tells the Archive's crawler to buzz off, it'll go elsewhere.

Mostly.

Since 2017, the Archive has started ignoring robots.txt files for news services; whether or not the news site wants to be crawled, the Archive crawls it and makes copies of the different versions of the articles the site publishes. That's because news sites – even the so-called "paper of record" – have a nasty habit of making sweeping edits to published material without noting it.

I'm not talking about fixing a typo or a formatting error: I'm talking about making a massive change to a piece, one that completely reverses its meaning, and pretending that it was that way all along:

https://medium.com/@brokenravioli/proof-that-the-new-york-times-isn-t-feeling-the-bern-c74e1109cdf6

This happens all the time, with major news sites from all around the world:

http://newsdiffs.org/examples/

By scraping these sites and retaining the different versions of their article, the Archive both detects and prevents journalistic malpractice. This is canonical fair use, the kind of copying that almost always involves overriding the objections of the site's proprietor. Not all adversarial scraping is good, but this sure is.

There's an argument that scraping the news-sites without permission might piss them off, but it doesn't bring them any real harm. But even when scraping harms the scrapee, it is sometimes legitimate – and necessary.

Austrian technologist Mario Zechner used the API from country's super-concentrated grocery giants to prove that they were colluding to rig prices. By assembling a longitudinal data-set, Zechner exposed the raft of dirty tricks the grocers used to rip off the people of Austria.

From shrinkflation to deceptive price-cycling that disguised price hikes as discounts:

https://mastodon.gamedev.place/@badlogic/111071627182734180

Zechner feared publishing his results at first. The companies whose thefts he'd discovered have enormous power and whole kennelsful of vicious attack-lawyers they can sic on him. But he eventually got the Austrian competition bureaucracy interested in his work, and they published a report that validated his claims and praised his work:

https://mastodon.gamedev.place/@badlogic/111071673594791946

Emboldened, Zechner open-sourced his monitoring tool, and attracted developers from other countries. Soon, they were documenting ripoffs in Germany and Slovenia, too:

https://mastodon.gamedev.place/@badlogic/111071485142332765

Zechner's on a roll, but the grocery cartel could shut him down with a keystroke, simply by blocking his API access. If they do, Zechner could switch to scraping their sites – but only if he can be protected from legal liability for nonconsensually scraping commercially sensitive data in a way that undermines the profits of a powerful corporation.

Zechner's work comes at a crucial time, as grocers around the world turn the screws on both their suppliers and their customers, disguising their greedflation as inflation. In Canada, the grocery cartel – led by the guillotine-friendly hereditary grocery monopolilst Galen Weston – pulled the most Les Mis-ass caper imaginable when they illegally conspired to rig the price of bread:

https://en.wikipedia.org/wiki/Bread_price-fixing_in_Canada

We should scrape all of these looting bastards, even though it will harm their economic interests. We should scrape them because it will harm their economic interests. Scrape 'em and scrape 'em and scrape 'em.

Now, it's one thing to scrape text for scholarly purposes, or for journalistic accountability, or to uncover criminal corporate conspiracies. But what about scraping to train a Large Language Model?

Yes, there are socially beneficial – even vital – uses for LLMs.

Take HRDAG's work on truth and reconciliation in Colombia. The Human Rights Data Analysis Group is a tiny nonprofit that makes an outsized contribution to human rights, by using statistical methods to reveal the full scope of the human rights crimes that take place in the shadows, from East Timor to Serbia, South Africa to the USA:

https://hrdag.org/

HRDAG's latest project is its most ambitious yet. Working with partner org Dejusticia, they've just released the largest data-set in human rights history:

https://hrdag.org/jep-cev-colombia/

What's in that dataset? It's a merger and analysis of more than 100 databases of killings, child soldier recruitments and other crimes during the Colombian civil war. Using a LLM, HRDAG was able to produce an analysis of each killing in each database, estimating the probability that it appeared in more than one database, and the probability that it was carried out by a right-wing militia, by government forces, or by FARC guerrillas.

This work forms the core of ongoing Colombian Truth and Reconciliation proceedings, and has been instrumental in demonstrating that the majority of war crimes were carried out by right-wing militias who operated with the direction and knowledge of the richest, most powerful people in the country. It also showed that the majority of child soldier recruitment was carried out by these CIA-backed, US-funded militias.

This is important work, and it was carried out at a scale and with a precision that would have been impossible without an LLM. As with all of HRDAG's work, this report and the subsequent testimony draw on cutting-edge statistical techniques and skilled science communication to bring technical rigor to some of the most important justice questions in our world.

LLMs need large bodies of text to train them – text that, inevitably, is scraped. Scraping to produce LLMs isn't intrinsically harmful, and neither are LLMs. Admittedly, nonprofits using LLMs to build war crimes databases do not justify even 0.0001% of the valuations that AI hypesters ascribe to the field, but that's their problem.

Scraping is good, sometimes – even when it's done against the wishes of the scraped, even when it harms their interests, and even when it's used to train an LLM.

But.

Scraping to violate peoples' privacy is very bad. Take Clearview AI, the grifty, sleazy facial recognition company that scraped billions of photos in order to train a system that they sell to cops, corporations and authoritarian governments:

https://pluralistic.net/2023/09/20/steal-your-face/#hoan-ton-that

Likewise: scraping to alienate creative workers' labor is very bad. Creators' bosses are ferociously committed to firing us all and replacing us with "generative AI." Like all self-declared "job creators," they constantly fantasize about destroying all of our jobs. Like all capitalists, they hate capitalism, and dream of earning rents from owning things, not from doing things.

The work these AI tools sucks, but that doesn't mean our bosses won't try to fire us and replace us with them. After all, prompting an LLM may produce bad screenplays, but at least the LLM doesn't give you lip when you order to it give you "ET, but the hero is a dog, and there's a love story in the second act and a big shootout in the climax." Studio execs already talk to screenwriters like they're LLMs.

That's true of art directors, newspaper owners, and all the other job-destroyers who can't believe that creative workers want to have a say in the work they do – and worse, get paid for it.

So how do we resolve these conundra? After all, the people who scrape in disgusting, depraved ways insist that we have to take the good with the bad. If you want accountability for newspaper sites, you have to tolerate facial recognition, too.

When critics of these companies repeat these claims, they are doing the companies' work for them. It's not true. There's no reason we couldn't permit scraping for one purpose and ban it for another.

The problem comes when you try to use copyright to manage this nuance. Copyright is a terrible tool for sorting out these uses; the limitations and exceptions to copyright (like fair use) are broad and varied, but so "fact intensive" that it's nearly impossible to say whether a use is or isn't fair before you've gone to court to defend it.

But copyright has become the de facto regulatory default for the internet. When I found someone impersonating me on a dating site and luring people out to dates, the site advised me to make a copyright claim over the profile photo – that was their only tool for dealing with this potentially dangerous behavior.

The reasons that copyright has become our default tool for solving every internet problem are complex and historically contingent, but one important point here is that copyright is alienable, which means you can bargain it away. For that reason, corporations love copyright, because it means that they can force people who have less power than the company to sign away their copyrights.

This is how we got to a place where, after 40 years of expanding copyright (scope, duration, penalties), we have an entertainment sector that's larger and more profitable than ever, even as creative workers' share of the revenues their copyrights generate has fallen, both proportionally and in real terms.

As Rebecca Giblin and I write in our book Chokepoint Capitalism, in a market with five giant publishers, four studios, three labels, two app platforms and one ebook/audiobook company, giving creative workers more copyright is like giving your bullied kid extra lunch money. The more money you give that kid, the more money the bullies will take:

https://chokepointcapitalism.com/

Many creative workers are suing the AI companies for copyright infringement for scraping their data and using it to train a model. If those cases go to trial, it's likely the creators will lose. The questions of whether making temporary copies or subjecting them to mathematical analysis infringe copyright are well-settled:

https://www.eff.org/deeplinks/2023/04/ai-art-generators-and-online-image-market

I'm pretty sure that the lawyers who organized these cases know this, and they're betting that the AI companies did so much sleazy shit while scraping that they'll settle rather than go to court and have it all come out. Which is fine – I relish the thought of hundreds of millions in investor capital being transferred from these giant AI companies to creative workers. But it doesn't actually solve the problem.

Because if we do end up changing copyright law – or the daily practice of the copyright sector – to create exclusive rights over scraping and training, it's not going to get creators paid. If we give individual creators new rights to bargain with, we're just giving them new rights to bargain away. That's already happening: voice actors who record for video games are now required to start their sessions by stating that they assign the rights to use their voice to train a deepfake model:

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

But that doesn't mean we have to let the hyperconcentrated entertainment sector alienate creative workers from their labor. As the WGA has shown us, creative workers aren't just LLCs with MFAs, bargaining business-to-business with corporations – they're workers:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Workers get a better deal with labor law, not copyright law. Copyright law can augment certain labor disputes, but just as often, it benefits corporations, not workers:

https://locusmag.com/2019/05/cory-doctorow-steering-with-the-windshield-wipers/

Likewise, the problem with Clearview AI isn't that it infringes on photographers' copyrights. If I took a thousand pictures of you and sold them to Clearview AI to train its model, no copyright infringement would take place – and you'd still be screwed. Clearview has a privacy problem, not a copyright problem.

Giving us pseudocopyrights over our faces won't stop Clearview and its competitors from destroying our lives. Creating and enforcing a federal privacy law with a private right action will. It will put Clearview and all of its competitors out of business, instantly and forever:

https://www.eff.org/deeplinks/2019/01/you-should-have-right-sue-companies-violate-your-privacy

AI companies say, "You can't use copyright to fix the problems with AI without creating a lot of collateral damage." They're right. But what they fail to mention is, "You can use labor law to ban certain uses of AI without creating that collateral damage."

Facial recognition companies say, "You can't use copyright to ban scraping without creating a lot of collateral damage." They're right too – but what they don't say is, "On the other hand, a privacy law would put us out of business and leave all the good scraping intact."

Taking entertainment companies and AI vendors and facial recognition creeps at their word is helping them. It's letting them divide and conquer people who value the beneficial elements and those who can't tolerate the harms. We can have the benefits without the harms. We just have to stop thinking about labor and privacy issues as individual matters and treat them as the collective endeavors they really are:

https://pluralistic.net/2023/02/26/united-we-stand/

Here's a link to the podcast:

https://craphound.com/news/2023/09/24/how-to-think-about-scraping/

And here's a direct link to the MP3 (hosting courtesy of the Internet Archive; they'll host your stuff for free, forever):

https://archive.org/download/Cory_Doctorow_Podcast_450/Cory_Doctorow_Podcast_450_-_How_To_Think_About_Scraping.mp3

And here's the RSS feed for my podcast:

http://feeds.feedburner.com/doctorow_podcast

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/25/deep-scrape/#steering-with-the-windshield-wipers

Image:

syvwlch (modified)

https://commons.wikimedia.org/wiki/File:Print_Scraper_(5856642549).jpg

CC BY-SA 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

#pluralistic#cory doctorow#podcast#scraping#internet archive#wga strike#sag-aftra strike#wga#sag-aftra#labor#privacy#facial recognition#clearview ai#greedflation#price gouging#fr#austria#computational linguistics#linguistics#ai#ml#artificial intelligence#machine learning#llms#large language models#stochastic parrots#plausible sentence generators#hrdag#colombia#human rights

80 notes

·

View notes

Text

"We’ve learned to make 'machines that can mindlessly generate text, ... But we haven’t learned how to stop imagining the mind behind it.'"

Say that A and B, both fluent speakers of English, are independently stranded on two uninhabited islands. They soon discover that previous visitors to these islands have left behind telegraphs and that they can communicate with each other via an underwater cable. A and B start happily typing messages to each other.

Meanwhile, O, a hyperintelligent deep-sea octopus who is unable to visit or observe the two islands, discovers a way to tap into the underwater cable and listen in on A and B’s conversations. O knows nothing about English initially but is very good at detecting statistical patterns. Over time, O learns to predict with great accuracy how B will respond to each of A’s utterances.

Soon, the octopus enters the conversation and starts impersonating B and replying to A. This ruse works for a while, and A believes that O communicates as both she and B do — with meaning and intent. Then one day A calls out: “I’m being attacked by an angry bear. Help me figure out how to defend myself. I’ve got some sticks.” The octopus, impersonating B, fails to help. How could it succeed? The octopus has no referents, no idea what bears or sticks are. No way to give relevant instructions, like to go grab some coconuts and rope and build a catapult. A is in trouble and feels duped. The octopus is exposed as a fraud.

How should we interpret the natural-sounding (i.e., humanlike) words that come out of LLMs? The models are built on statistics. They work by looking for patterns in huge troves of text and then using those patterns to guess what the next word in a string of words should be. They’re great at mimicry and bad at facts. Why? LLMs, like the octopus, have no access to real-world, embodied referents. This makes LLMs beguiling, amoral, and the Platonic ideal of the bullshitter, as philosopher Harry Frankfurt, author of On Bullshit, defined the term. Bullshitters, Frankfurt argued, are worse than liars. They don’t care whether something is true or false. They care only about rhetorical power — if a listener or reader is persuaded.

(emphasis mine)

This article does a perfect job of explaining why I'm so wary of chat AI.

11 notes

·

View notes

Text

looking for studyblrs / blogs

leave a message if you post about

deep learning applications specifically for NLP

deep reinforcement learning

data science and data analytics

regression models

tensorflow

computational linguistics (bonus if it's about emergent languages)

practical ethics of cognitive science applications

cognitive neuropsychology

or if you are just cool in general <3

Will leave a follow in return! I need new active blogs to follow

#studyblr#data science#natural language processing#artificial intelligence#cognitive science#deep learning#computational linguistics#ethics#neuropsychology#tarastudiesalot#stemblr#women in stem

31 notes

·

View notes

Text

2023-03-01 • 1/100 Days of NLP

As a miserably failing compling student, I decided to start doing 100 days of NLP while documenting it all. Hopefully, this will help me study and pass my classes.

Productivity-wise, I did another introduction to probability and statistics, as well as language models, up until 3.6 section of Jurafsky & Martin's SLP. I finished module 1 of my maths for nlp class and finally took a break.

It is extremely hard for me to stay focus for longer than a minute, let alone the 40 something minutes it took me to do all these, and I did this all because of a call with my friends from my home country. The effect of people that have a drive to do anything is huge. They were making their own study plans to be able to enter an MA program, yet here I am, in an MA program that I love, just being too lazy to study. That's also why I decided to document these, even though photo quality is low, because I was again too lazy to turn on the main lights, and took the photo under the candlelight. I'm hoping to surround myself with more people like my friends from my old school and stay motivated enough to be able to pass all the courses that I failed with a VG at the end of these 100 days.

#100 days of studying#100 days of NLP#studyblr#study motivation#nlp#natural language processing#computational linguistics#machine learning

7 notes

·

View notes

Text

Linguistics Jobs: Interview with a Research Scientist

This month’s linguistics jobs interview is with Jeff Lilly, a Research Scientist at Cobalt Speech and Language. In this interview, Jeff shares how opportunities in computational linguistics have evolved since he received his degree, and how pursuing his interests in linguistics and computers have influenced his career.

You can find Jeff online on LinkedIn, or on either of his two blogs: druidjournal.net (on nature-centered spirituality) and axonfirings.com (on his fiction projects).

What did you study at university?

I have a BA in Linguistics from UNC-Chapel Hill (1995) and an MA in Linguistics from the University of Maryland, College Park (2000). UMD allowed me to qualify for the masters with two papers, so I did one paper on the lexical semantics of telic verbs and another on the typology of language games.

What is your job?

At Cobalt Speech and Language, it's a small company, so lots of us wear many hats. I design dialogue systems, build natural language understanding models and applications, and perform testing and quality assurance. I'm also in charge of Cobalt's ASR lexicons (the dictionaries we use for our speech systems).

How does your linguistics training help you in your job?

Linguistics is central to what I do, and I'm always trying to find new ways to incorporate linguistic knowledge into our tools. Most people working in speech technology come from a computer science background, so they're unaware of the variety of linguistic theories that can be brought to bear on our work. Any speech technology model is a mix of raw data, effective architecture, and linguistic knowledge; the more of one you have, the less of the others you need. By contributing linguistic knowledge, I can make our models smaller and simpler, and make them faster and easier to train. For example, I built a syllabification module based on Optimality Theory that is critical to our text-to-speech software. It works for any language and never needs to be trained on new data.

What was the transition from university to work like for you?

As I worked on my master's degree, I took as many computer science courses as I could handle. I got extra experience working with databases on the side. I was hired by a small search company shortly after I graduated (helping to build out the WordNet database), working primarily as a linguist, but my experience and classes in databases helped establish my abilities as a coder. When that company failed, I was able to join my next company as a research scientist / computational linguist, and I've been building my programming skill on the job ever since.

Do you have any advice you wish someone had given to you about linguistics/careers/university?

I guess one thing I had to learn the hard way is that the "real world" evaluates your performance much differently than school does. At school, you can just learn the material, turn in good papers, do well on the exams, and you're golden. But oftentimes on the job, your managers don't know you're doing a good job unless you make yourself visible by speaking up in meetings, offering suggestions, volunteering for tasks, etc. It's often better to try and fail than to keep your head down and play it safe.

Any other thoughts or comments?

When I started out as a linguistics undergrad in 1991, the profession of "computational linguist" barely existed. But I knew I loved linguistics and I was fascinated by computers, so I just tried to follow my passion, hoping that my enthusiasm and hard work would make up for any lack of ability or job prospects. It has not been a smooth ride -- I've worked at almost a dozen companies in the past 20 years, been laid off more than once, had to move across the country multiple times, etc. But I've always gotten to work on fascinating projects with amazing people. For example, I was on the first tiny team to start building Alexa at Amazon in 2011. And I've continued to stretch and grow and learn in ways I'd never imagined. 20 years from now, building on the remarkable recent advances in language modeling, the field will be vastly different. I hope, for example, that there will be opportunities for computational linguists to peel back the layers of a system like GPT-3 and see how it has modeled human language. It's going to be incredibly exciting.

Related interviews:

Interview with a Language Engineer

Interview with a Natural Language Annotation Lead

Interview with a Computational Linguist

Interview with a Learning Scientist

Interview with a Data Scientist

Recent interviews:

Interview with a Language Engineer

Interview with a Natural Language Annotation Lead

Interview with an EMLS/Linguistics instructor & mother of four

Interview with a Performing Artiste and Freelance Editor

Resources:

The full Linguist Jobs Interview List

The Linguist Jobs tag for the most recent interviews

The Linguistics Jobs slide deck (overview, resources and activities)

The Linguistics Jobs Interview series is edited by Martha Tsutsui Billins. Martha is a linguist whose research focuses on the Ryukyuan language Amami Oshima, specifically honourifics and politeness strategies in the context of language endangerment. Martha runs Field Notes, a podcast about linguistic fieldwork.

#linguistics jobs#linguist jobs#research scientist#computational linguistics#computational linguist#linguistics#linguist#careers#jobs#work

13 notes

·

View notes

Text

#ai artwork#ai image#pinterest#instagram#installation#wedding#inspiration#design#graphic design#illustration#photography#photoart#photoshop#aiartcommunity#computational linguistics#poetic#branding#nftcommunity#collection#web3#explore#trendy#luxury#fashion#art style#vintage style#vintage#stone ocean#biology#sea shells

4 notes

·

View notes

Text

Despite being labelled “English (UK)”, the speech-to-text in Microsoft is very clearly trained on American English. It does not know what to do with my non-rhotic accent and it’s constantly turning my long ‘a’s into ‘o’s. I’m not sure I can do an American accent but I guess I’m going to have to try to write anything.

3 notes

·

View notes

Text

Applying linguistics to real-world cases - profiling

Applying linguistics to real-world cases – profiling

My degree thesis was about the development of a Mathematical and Statistical Algorithm that is able to perform, or better to help perform, linguistic analysis on a text so as to discover, identify, and evidence bias in speech.

The algorithm can be used both as a standalone tool or, as I use it daily, in conjunction with Artificial Intelligence tools to broaden its scope and at the same time…

View On WordPress

#AI#Algorithm#and applied linguistics#Artificial Intelligence#authorship analysis#computational linguistics#criminal justice#dialect geography#forensic discourse analysis#forensic linguistic#forensic phonetics#historical linguistics#idiolect#language domains#lexicography#Linguist#linguistic analysis#linguistic profiling#linguistics#Mathematical Algorithm#pragmatics#Psycholinguistics#Raffaello Palandri#Raffaello Palandri linguist#semantics#sociolinguistic profiling#sociolinguistics#Statistical Algorithm

6 notes

·

View notes

Text

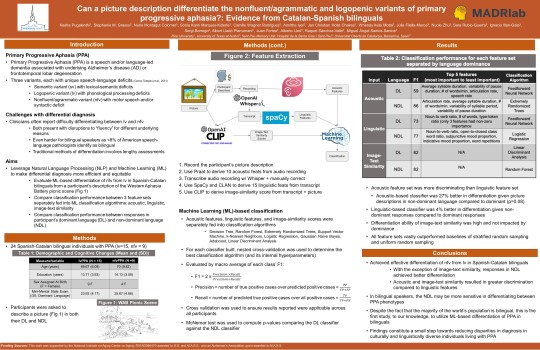

Can a picture description differentiate the nonfluent/agrammatic and logopenic variants of primary progressive aphasia?

Link to: Abstract

Post by Sonia-K. Marqués Kiderle, 14. March 2024

#slp#linguistics#computational linguistics#ppa#primary progressive aphasia#language#acoustics#speech#speech language therapy#speech language pathology#diagnosis

0 notes

Text

The data concludes that social media censorship algorithms are overwhelmingly racist in favor of white women, and I theorize that the most affected by them are rural white men and inner city black men.

0 notes

Text

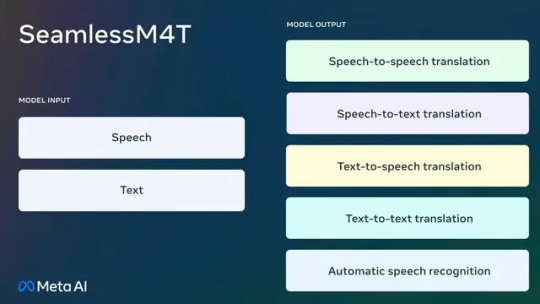

Meta Releases SeamlessM4T Translation AI for Text and Speech

Meta took a step towards a universal language translator on Tuesday with the release of its new Seamless M4T AI model, which the company says can quickly and efficiently understand language from speech or text in up to 100 languages and generate translation in either mode of communication. Multiple tech companies have released similar advanced AI translation models in recent months.

In a blog…

View On WordPress

#Applications of artificial intelligence#Computational linguistics#Creative Commons#Gizmodo#Human Interest#Internet#Large language model#Machine translation#Massively Multilingual#Massively Multilingual Speech system#META#Meta AI#Multilingualism#Paco#Paco Guzmán#Speech recognition#Speech synthesis#Technology#Translation

0 notes

Text

Researchers in computational linguistics often forget to even mention the language they're working on, because it's just assumed to be English. It's a Big Deal™️ when they do something as far afield as—gasp!😲—Portuguese.

#linguistics#language#AI#Artificial Intelligence#translation#machine translation#computational linguistics

45 notes

·

View notes

Text

How does one use computational methods to analyze and process morphologically complex words in a language?

Computational methods have become increasingly important in the field of linguistics, particularly in the study of morphologically complex languages. These methods allow linguists to analyze and process large amounts of data in a way that would be impossible using traditional methods. In this article, we will explore how computational methods can be used to analyze and process morphologically…

View On WordPress

#computational linguistics#deep learning#finite-state transducers#machine learning#morphological analysis#morphological segmentation#morphological tagging#Morphology#natural language processing#neural networks

0 notes

Text

Sanskrit - IE influences = Dravidian?

How does Sanskrit differ from other Indo European languages?

Phonetics and Phonology: Sanskrit has a more complex phonetics system than many other Indo-European languages, with a larger set of vowel and consonant sounds. It also has a distinct phonology that includes features like nasalization and pitch accent, which are not found in most other Indo-European languages.

Verb System: Sanskrit has a highly developed and complex verb system, with many different tenses, moods, and voices. This allows for a great deal of precision and nuance in expressing different shades of meaning.

Grammar: Sanskrit has a highly inflected grammar, with a large number of noun and verb forms that change to reflect grammatical features like case, gender, and number. This can make it more challenging for speakers of other Indo-European languages to learn.

Vocabulary: Sanskrit has a rich and diverse vocabulary that includes many technical terms for fields like philosophy, mathematics, and medicine. Many of these words have been adopted into other languages, including English.

---------------------------------------------------------------------

How does Sanskrit compare to Ancient Greek?

Phonology: Sanskrit has a complex system of vowels, including long and short vowels, while Ancient Greek has a relatively simpler system. Additionally, Sanskrit uses tonal distinctions to convey meaning, while Ancient Greek does not.

Grammar: Sanskrit has eight cases, while Ancient Greek has five. Additionally, Sanskrit has a complex system of verb conjugation that includes aspect, mood, and tense.

Vocabulary: Sanskrit has many words derived from its own roots, while Ancient Greek has a larger number of loan words from other languages.

---------------------------------------------------------------------

How does Sanskrit differ from Avestan?

Writing System: Sanskrit is traditionally written in the Devanagari script, while Avestan is written in the Avestan script, which is a modified version of the Aramaic alphabet. The Avestan script is less widely used and less well-known than the Devanagari script, which is used for writing many modern Indian languages. Neither had a script originally.

Phonetics and Phonology: Sanskrit and Avestan have different phonetic systems, with different sets of vowel and consonant sounds. Sanskrit also has a pitch accent, while Avestan does not. Both use retroflex consonants (produced by curling the tongue back towards the roof of the mouth), however they’re more of a distinctive, prominent feature in Sanskrit. Both use nasalization however Avestan uses it to distinguish between words more. Avestan has some unique phonetic features, such as the use of a separate character for the sound "ng".

Vocabulary: Avestan has a number of words borrowed from Old Persian and other Iranian languages, while Sanskrit has many words borrowed from Dravidian languages and other sources.

Grammar: Avestan has a more simplified system with only 5 cases, compared to Sanskrit which has a more detailed non declension system with eight different cases. Sanskrit also has a highly complex system of a large number of verb forms that includes mood, aspect and tense while Avestan only distinguishes between present and non-present. Avestan generally follows a subject-object-verb (SOV) word order, while Sanskrit has a more flexible word order, allowing for greater variation in sentence structure. Avestan has a larger number of postpositions than Sanskrit. While Sanskrit has around 10-15 commonly used postpositions, Avestan has more than 30 postpositions.

---------------------------------------------------------------------

How did Sanskrit become agglutinative?

Sanskrit and Avestan like many other Indo-European languages, began as inflectional languages, which means that grammatical information is conveyed through changes to the form of the word itself, rather than through separate particles or affixes. Over time, however, Sanskrit evolved to become more agglutinative, with grammatical information being conveyed through the use of separate affixes. This can be attributed to the influence of the older non-Indo-European languages spoken in the region before the arrival of the Indo-Iranians.

Sanskrit’s agglutination was likely due to contact with other languages, such as ancient and later Dravidian language/s, which were highly agglutinative. As Sanskrit was exposed to these other languages, it likely adopted some of their agglutinative features. Dravidian languages were spoken in the same geographical region as Sanskrit and had a long history of interaction with the speakers of Indo-European languages. It is possible that the Dravidian languages influenced the development of agglutinative features in Sanskrit, including its use of suffixes to indicate tense and aspect.

By the time of the early Vedic period (roughly 1500-1000 BCE), some agglutinative features were retained in Sanskrit, particularly in the use of suffixes to indicate tense and aspect. Over time, as the language continued to evolve, it is likely that the agglutinative features of Sanskrit became more pronounced, particularly in the use of compound words and the creation of new words through affixation. By the time of the classical Sanskrit period (roughly 500 BCE to 500 CE), the language had developed a highly complex and sophisticated system of agglutinative morphology, with a large number of affixes and compounds used to create new words and indicate grammatical relationships.

The exact identity of the older languages that influenced the development of agglutinative morphology in Avestan is uncertain. However, it is believed that the region where Avestan was spoken was home to a variety of non-Indo-European languages, including Elamite, Sumerian, and Dravidian. The influence of these languages on Avestan can be seen in various aspects of its grammar and vocabulary. For example, Avestan has a system of vowel harmony that is similar to that found in Elamite and Dravidian languages. The use of postpositions in Avestan is also similar to that found in Elamite and Sumerian.

Another reason for the development of agglutination in Sanskrit may have been due to the increased complexity of its grammar over time. As Sanskrit became a more literary and formal language, its grammar became more complex, and the use of separate affixes became more necessary to convey all of the grammatical information needed.

Additionally, the development of agglutination in Sanskrit may have been influenced by the rise of Buddhism in India, as Buddhist texts often used Pali and other Middle-Indo-Aryan languages, which were highly agglutinative. As Sanskrit became more influenced by these other languages, it likely adopted some of their agglutinative features as well.

Sidenote: It is also believed that Sanskrit, like other Indo-European languages, went through a period of inflectional development, which time it lost many of its agglutinative features earlier on. Anatolian languages including Hittite had a complex system of agglutinative morphology, with a large number of suffixes used to indicate tense, aspect, and other grammatical categories. Tocharian also had a highly agglutinative morphology, with complex systems of case endings and verb forms.

---------------------------------------------------------------------

How did Sanskrit develop complex verb conjugation?

The evolution of Sanskrit verb conjugation can be traced back to its earlier forms in Vedic Sanskrit, which had a simpler system with fewer verb forms. Over time, Sanskrit developed a more complex system of verb conjugation with multiple tenses, moods, and voices, each with its own set of rules for forming the appropriate verb form.

Sanskrit verb conjugation also reflects the cultural and social context in which the language was used, with various verbal forms reflecting different levels of formality, politeness, and social status. For example, the use of the passive voice and certain verb forms was considered more formal and polite, while the use of the imperative mood and certain verb forms was more direct and commanding.

---------------------------------------------------------------------

How did Sanskrit change by the end of the Vedas?

In the Aranyakas and Upanishads (700-400 BCE), the language became more philosophical and abstract, with new concepts and vocabulary.

The language of the Upanishads was more abstract and complex than that of the earlier Vedas, with more emphasis on philosophy and metaphysics.

The language of the Upanishads also incorporated more non-Vedic words, such as those from Dravidian and Munda languages.

During the Sutra period (600-200 BCE), the language became more systematic and technical, with the development of grammatical rules and the standardization of the language.

The Panini grammar, composed in the 4th century BCE, codified the rules of Sanskrit grammar, introducing new categories of verbs and noun declensions.

The Prakrits, a group of vernacular languages derived from Sanskrit, were spoken alongside Sanskrit during this period, influencing the Sanskrit language as well.

The Mahabharata and Ramayana were composed during this period, which introduced new words and constructions into the language.

---------------------------------------------------------------------

How did Sanskrit change in the Upanishads?

Vocabulary: The Upanishads use many new words that are not found in the earlier Vedic texts, reflecting the development of new philosophical ideas and concepts.

Syntax: The syntax of Sanskrit becomes more complex in the Upanishads, with longer sentences and more complex structures.

Grammar: The grammar of Sanskrit also becomes more complex in the Upanishads, with new verb forms and a greater variety of noun declensions.

Style: The style of Sanskrit in the Upanishads is more philosophical and mystical than in the earlier Vedic texts, with a greater focus on exploring the nature of reality and the human condition.

Meter: The Upanishads use a variety of poetic meters, including some that are not found in the earlier Vedic texts.

Prosody: The Upanishads are known for their intricate prosody, with many subtle variations in tone and intonation.

Use of particles: The Upanishads make use of a wide range of particles, including many that are not found in the earlier Vedic texts, to convey subtle shades of meaning.

Use of metaphors: The Upanishads use a range of metaphors and symbolic language to convey philosophical ideas, reflecting the growing importance of abstract thinking in the culture of the time.

Use of dialogue: The Upanishads are written in the form of dialogues between teachers and students, reflecting the importance of oral transmission and personal instruction in the culture of the time.

Use of repetition: The Upanishads make use of repetition to emphasize key philosophical ideas, reflecting the importance of memorization and recitation in the culture of the time.

Greater emphasis on individual spiritual experience: The Upanishads place a greater emphasis on individual spiritual experience and direct realization of truth than the earlier Vedic texts, reflecting a shift in focus from external ritual to internal inquiry.

Greater use of negation: The Upanishads make frequent use of negation to describe the nature of reality, reflecting the growing importance of non-dualistic philosophical ideas.

Greater use of analogy: The Upanishads use analogies and similes to describe abstract concepts, reflecting the growing importance of abstract thinking in the culture of the time.

Greater use of symbolism: The Upanishads make use of symbolic language to convey philosophical ideas, reflecting the growing importance of abstract thinking in the culture of the time.

Greater use of paradox: The Upanishads use paradoxical language to describe the nature of reality, reflecting the growing importance of non-dualistic philosophical ideas.

---------------------------------------------------------------------

How are some ways you can distinguish between Sanskrit & Dravidian words?

Semantic field: Some semantic fields, such as terms related to flora and fauna, are more likely to be of Dravidian origin.

Cultural context: Words related to traditional Dravidian practices, such as the use of turmeric or certain musical instruments, are likely to be of Dravidian origin.

Vocabulary: Some basic vocabulary items, such as numerals, are more likely to be of Dravidian origin.

Historical period: Words borrowed into Sanskrit before the 4th century BCE are likely to be of Dravidian origin.

Word structure: Dravidian words typically have simpler structures compared to Sanskrit words, which often have complex morphological structures with multiple affixes.

Part of speech: Certain parts of speech, such as pronouns and postpositions, are more likely to be of Dravidian origin.

Sound system: Dravidian languages have a different set of phonemes and phonological rules compared to Sanskrit. Words with retroflex consonants and vowel length distinctions are likely to be of Dravidian origin.

Word order: The word order in Dravidian languages differs from that in Sanskrit, with the subject-object-verb (SOV) order being more common in Dravidian.

---------------------------------------------------------------------

How are some ways you can distinguish between Sanskrit & Dravidian words?

Dravidian has influenced the syntax of Sanskrit, particularly in the use of compound words and sentence structure.

The Dravidian system of vowel length has influenced the pronunciation of Sanskrit vowels.

The Dravidian language family has a strong tradition of oral literature and storytelling, which has influenced the development of Sanskrit literature.

The use of syllabic consonants, which are common in Dravidian languages, has influenced the development of Sanskrit phonology.

The Dravidian system of verb conjugation has influenced the development of Sanskrit grammar.

The use of suffixes to form adjectives, which is common in Dravidian languages, has influenced the development of Sanskrit vocabulary.

The Dravidian system of negation, which uses a particle rather than a verb form, has influenced the development of negation in Sanskrit.

The use of suffixes to indicate tense and mood, which is common in Dravidian languages, has influenced the development of Sanskrit verb conjugation.

The Dravidian language family has a rich tradition of folk music and dance, which has influenced the development of Sanskrit performing arts.

The use of prefixes and suffixes to indicate the subject and object of a verb, which is common in Dravidian languages, has influenced the development of Sanskrit grammar.

The Dravidian system of numeral classifiers, which are used to indicate the countable nouns, has influenced the development of Sanskrit numerals.

The use of reduplication to indicate emphasis, repetition, or plurality, which is common in Dravidian languages, has influenced the development of Sanskrit vocabulary.

The Dravidian system of affixation, which is used to form complex words from simpler ones, has influenced the development of Sanskrit vocabulary.

The use of vowel harmony, which is common in Dravidian languages, has influenced the development of Sanskrit phonology.

The Dravidian system of noun declension, which includes case marking and gender assignment, has influenced the development of Sanskrit grammar.

The use of honorifics to indicate social status or respect, which is common in Dravidian languages, has influenced the development of Sanskrit vocabulary and grammar.

The Dravidian system of lexical borrowing, which involves the adoption of words from other languages, has influenced the development of Sanskrit vocabulary.

The use of a reflexive pronoun to indicate the subject of a sentence, which is common in Dravidian languages, has influenced the development of Sanskrit grammar.

The Dravidian system of phrasal verbs, which consist of a verb and a particle, has influenced the development of Sanskrit vocabulary.

The use of morphological alternation to indicate tense or aspect, which is common in Dravidian languages, has influenced the development of Sanskrit verb conjugation.

The Dravidian system of pronouns, which distinguishes between inclusive and exclusive "we" and "us," has influenced the development of Sanskrit grammar.

The use of onomatopoeia to imitate sounds or actions, which is common in Dravidian languages, has influenced the development of Sanskrit vocabulary.

The Dravidian system of kinship terms, which includes specific terms for different types of relatives, has influenced the development of Sanskrit vocabulary.

The use of sound symbolism, which involves the association of particular sounds with specific meanings, is common in Dravidian languages and has influenced the development of Sanskrit vocabulary and poetry.

The Dravidian system of verb endings, which include distinct endings for different persons and numbers, has influenced the development of Sanskrit verb conjugation.

The use of postpositions, which come after the noun they modify rather than before it, is common in Dravidian languages and has influenced the development of Sanskrit syntax.

The Dravidian system of syllable structure, which allows for complex consonant clusters and syllabic nasals, has influenced the development of Sanskrit phonology.

The use of iterative suffixes to indicate repeated or habitual actions, which is common in Dravidian languages, has influenced the development of Sanskrit verb conjugation.

The Dravidian system of noun compounding, which allows for the creation of complex nouns from simpler ones, has influenced the development of Sanskrit vocabulary.

The use of diminutives, which are suffixes that indicate smallness or endearment, is common in Dravidian languages and has influenced the development of Sanskrit vocabulary.

The use of infixes, which are inserted inside a word to modify its meaning, is common in Dravidian languages and has influenced the development of Sanskrit vocabulary.

The Dravidian system of word order, which can be flexible and dependent on context, has influenced the development of Sanskrit syntax.

The use of exclamatory particles, which indicate strong emotions such as surprise or enthusiasm, is common in Dravidian languages and has influenced the development of Sanskrit vocabulary.

The Dravidian system of participial constructions, which involve the use of verb forms to modify nouns, has influenced the development of Sanskrit grammar.

The use of interrogative particles, which are added to the end of a sentence to indicate a question, is common in Dravidian languages and has influenced the development of Sanskrit grammar.

The Dravidian system of relative clauses, which involve the use of a relative pronoun to link two clauses, has influenced the development of Sanskrit syntax.

The use of phrasal adjectives, which consist of an adjective and a noun, is common in Dravidian languages and has influenced the development of Sanskrit vocabulary.

The Dravidian system of copula verbs, which link a subject to a predicate, has influenced the development of Sanskrit grammar.

The use of adverbs to indicate time or frequency, which is common in Dravidian languages, has influenced the development of Sanskrit vocabulary.

The Dravidian system of nominalization, which involves the use of a verb form as a noun, has influenced the development of Sanskrit grammar and vocabulary.

The use of idiomatic expressions, which involve the use of words in a non-literal sense, is common in Dravidian languages and has influenced the development of Sanskrit vocabulary and poetry.

The Dravidian system of intensifiers, which modify the meaning of an adjective or adverb, has influenced the development of Sanskrit vocabulary.

The Dravidian system of tense-aspect-mood markers, which indicate the time, duration, and manner of an action, has influenced the development of Sanskrit verb conjugation.

The use of double negatives, which involve the use of two negative particles to indicate a negative statement, is common in Dravidian languages and has influenced the development of Sanskrit grammar.

#chatgpt#sanskrit#india#indus valley#meluhha#melakam#tamil#dravidian#sumer#mesopotamia#ancient civilizations#indo european#history#linguistics#computational linguistics#phonology#morphology#semantics

0 notes

Photo

Noam Chomsky explains the difference between ChatGPT and "True Intelligence"

There's a new op-ed in The New York Times from Noam Chomsky and two of his academic colleagues — Dr. Ian Roberts, a linguistics professor at University of Cambridge, and Dr. Jeffrey Watumull, a philosopher who is also the director of artificial intelligence at a tech company. — Read the rest

https://boingboing.net/2023/03/09/noam-chomsky-explains-the-difference-between-chatgpt-and-true-intelligence.html

#Post#AI#ai and ethics#ai-generated fiction#artificial intelligence#chatbots#ChatGPT#computational linguistics#language#linguistics#Noam Chomsky#true intelligence#weird fucking language shit#Thom Dunn#Boing Boing

0 notes

Text

The Role & Impact of Sentiment Analysis | Avalon Global Research

A business that has its fingers on the pulse of its customers is a business that thrives. Sentiment analysis, or opinion mining, is the perfect tool to help you do just that. It entails interpreting data on consumer sentiment using natural language processing (NLP).

While sentiment analysis has been there, with the increasing availability of data and development of advanced learning tools, its relevance in drawing insights from consumers’ emotions, mood and behavior patterns has increased significantly. Organizations can track the brand and product sentiment in consumer feedback and understand demand better.

What all does it cover?

Extracting relevant information while discarding irrelevant data

Identifying polarity of opinion or thought

Detecting if there is any negative opinion

Categorizing content based on positive or negative remarks

Where does it help?

In understanding patterns in communication

The role of sentiment analysis in monitoring and understanding communication is growing faster than ever before. Analyzing consumer feedback, such as obtained from survey or social media discussions, can help you understand what would appeal to your customers. Accordingly, you can tailor products and services to meet the requirements.

Monitoring also helps you identify and address irate customers appropriately. Moreover, comparing sentiment over quarters is a good way to assess the changes required. You can then do further deep-dive to figure out why the sentiment is declining or improving.

In marketing and sales

Insights drawn from sentiment analysis data form the basis for making informed sales and marketing strategies. Sentiment analysis also helps in evaluating social media posts, improving crisis management and brand strength, and turning digital PR into actual activities. The more you know your consumers, the better the chances of retaining them.

Sentiment analysis is the window to gauging how social media and third-party website marketing strategies are performing. To understand how a new product is faring, comments on the internet/social media are a veritable source to assess customer response: are they encountering problems, or what is the feedback from the target audience.

Say, you are a clock manufacturing company that has launched a digital alarm clock and is actively tracking the product’s traction on social media. Now if one of the consumers raises an issue on a popular channel where you interact about some fault in the battery backup, as a prompt manufacturer, you must revert at the earliest with a personalized answer. You could immediately issue an apology saying you will look into the specific product and see if this is a one-off incident. You could then offer to replace the faulty piece at its earliest. However, if an increasing number of people is flagging the issue on different channels, it means the entire product line may need to be recalled. You could then respond accordingly that the product is being looked into at the highest level, and clearly mention how and where customers can connect for smooth refund or return until the issue is rectified.

Or you are a cosmetics manufacturer looking to engage with your audience on Black Friday. You could schedule your messages ahead of time and come up with engaging contests. Depending on the interest you garner, you could either repeat the campaigns, reinforcing your strategies, or revisit plans, depending on the findings of the analysis.

In supplementing insights drawn from social media responses

Likes, comments, and shares are metrics in traditional social media monitoring. While these may indicate a company’s popularity, they don’t necessarily provide deeper insights into consumers’ emotions: preferences, dislikes, or expectations. Sentiment analysis, on the other hand, can be used to understand if consumers are (implying feeling) ‘positive’, ‘negative’, or ‘neutral’ about a certain brand, product, or topic. Emotionally-charged words give a more incisive understanding. For instance, ‘crappy’ and ‘hate’ indicate a negative sentiment, while ‘ awesome ‘and’ amazing’ reflect a positive sentiment.

In crisis management and brand health

In a crisis situation, sentiment analysis helps in determining how fans and opponents are reacting to the incident. Evaluations at regular intervals after the crisis has passed are effective in gauging if customers have moved on, or continue to harbor negative feelings.

In strategizing

Sentiment analysis, especially with the use of advanced learning tools, can help you in generating rich insights. These can come handy at any stage of the business cycle, either when you are about to launch a product, or enter a domain, or want to know how your product is doing in the market. Based on the business intelligence data gathered, you can build effective strategies.

What does the future of sentiment analysis look like?

Given the change in perception of consumers as assets and the rise of the shrewd consumer himself, sentiment analysis has become more important than ever. Investing in it is critical to stay relevant in the field and beat competition. Greater the availability of data, better the insights and, therefore, chances of success.

However, there are challenges. First and foremost is to ensure the quality of data obtained. If information is insufficient, sentiment analysis may not yield the insights required. Inaccurate data will lead to weak insights, poor clarity and erroneous conclusions.

This, therefore, puts the spotlight on the techniques to be involved. While machine learning and advanced algorithms go a long way in deep mining, they may not be adequately trained to capture opinion or expressions, especially as these are subjective and not easy to interpret.

Here, the onus will lie with you, how your organization is able to balance advanced learning systems with other tools/techniques such as information extracting or semantic tools, or the methods you employ to sanitize data. The core objective at any point should be to obtain precise and accurate information.

At the end of the day, it is all about prioritizing your customers and generating value for them. How well you understand, relate and cater to your customers, will determine the long-term success of your business. If you would like to have more information on this, please connect with Radhakrishnan Rajaram @ [email protected], or Suyog Keluskar @ [email protected].

0 notes