#hrdag

Text

The real AI fight

Tonight (November 27), I'm appearing at the Toronto Metro Reference Library with Facebook whistleblower Frances Haugen.

On November 29, I'm at NYC's Strand Books with my novel The Lost Cause, a solarpunk tale of hope and danger that Rebecca Solnit called "completely delightful."

Last week's spectacular OpenAI soap-opera hijacked the attention of millions of normal, productive people and nonsensually crammed them full of the fine details of the debate between "Effective Altruism" (doomers) and "Effective Accelerationism" (AKA e/acc), a genuinely absurd debate that was allegedly at the center of the drama.

Very broadly speaking: the Effective Altruists are doomers, who believe that Large Language Models (AKA "spicy autocomplete") will someday become so advanced that it could wake up and annihilate or enslave the human race. To prevent this, we need to employ "AI Safety" – measures that will turn superintelligence into a servant or a partner, nor an adversary.

Contrast this with the Effective Accelerationists, who also believe that LLMs will someday become superintelligences with the potential to annihilate or enslave humanity – but they nevertheless advocate for faster AI development, with fewer "safety" measures, in order to produce an "upward spiral" in the "techno-capital machine."

Once-and-future OpenAI CEO Altman is said to be an accelerationists who was forced out of the company by the Altruists, who were subsequently bested, ousted, and replaced by Larry fucking Summers. This, we're told, is the ideological battle over AI: should cautiously progress our LLMs into superintelligences with safety in mind, or go full speed ahead and trust to market forces to tame and harness the superintelligences to come?

This "AI debate" is pretty stupid, proceeding as it does from the foregone conclusion that adding compute power and data to the next-word-predictor program will eventually create a conscious being, which will then inevitably become a superbeing. This is a proposition akin to the idea that if we keep breeding faster and faster horses, we'll get a locomotive:

https://locusmag.com/2020/07/cory-doctorow-full-employment/

As Molly White writes, this isn't much of a debate. The "two sides" of this debate are as similar as Tweedledee and Tweedledum. Yes, they're arrayed against each other in battle, so furious with each other that they're tearing their hair out. But for people who don't take any of this mystical nonsense about spontaneous consciousness arising from applied statistics seriously, these two sides are nearly indistinguishable, sharing as they do this extremely weird belief. The fact that they've split into warring factions on its particulars is less important than their unified belief in the certain coming of the paperclip-maximizing apocalypse:

https://newsletter.mollywhite.net/p/effective-obfuscation

White points out that there's another, much more distinct side in this AI debate – as different and distant from Dee and Dum as a Beamish Boy and a Jabberwork. This is the side of AI Ethics – the side that worries about "today’s issues of ghost labor, algorithmic bias, and erosion of the rights of artists and others." As White says, shifting the debate to existential risk from a future, hypothetical superintelligence "is incredibly convenient for the powerful individuals and companies who stand to profit from AI."

After all, both sides plan to make money selling AI tools to corporations, whose track record in deploying algorithmic "decision support" systems and other AI-based automation is pretty poor – like the claims-evaluation engine that Cigna uses to deny insurance claims:

https://www.propublica.org/article/cigna-pxdx-medical-health-insurance-rejection-claims

On a graph that plots the various positions on AI, the two groups of weirdos who disagree about how to create the inevitable superintelligence are effectively standing on the same spot, and the people who worry about the actual way that AI harms actual people right now are about a million miles away from that spot.

There's that old programmer joke, "There are 10 kinds of people, those who understand binary and those who don't." But of course, that joke could just as well be, "There are 10 kinds of people, those who understand ternary, those who understand binary, and those who don't understand either":

https://pluralistic.net/2021/12/11/the-ten-types-of-people/

What's more, the joke could be, "there are 10 kinds of people, those who understand hexadecenary, those who understand pentadecenary, those who understand tetradecenary [und so weiter] those who understand ternary, those who understand binary, and those who don't." That is to say, a "polarized" debate often has people who hold positions so far from the ones everyone is talking about that those belligerents' concerns are basically indistinguishable from one another.

The act of identifying these distant positions is a radical opening up of possibilities. Take the indigenous philosopher chief Red Jacket's response to the Christian missionaries who sought permission to proselytize to Red Jacket's people:

https://historymatters.gmu.edu/d/5790/

Red Jacket's whole rebuttal is a superb dunk, but it gets especially interesting where he points to the sectarian differences among Christians as evidence against the missionary's claim to having a single true faith, and in favor of the idea that his own people's traditional faith could be co-equal among Christian doctrines.

The split that White identifies isn't a split about whether AI tools can be useful. Plenty of us AI skeptics are happy to stipulate that there are good uses for AI. For example, I'm 100% in favor of the Human Rights Data Analysis Group using an LLM to classify and extract information from the Innocence Project New Orleans' wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

Automating "extracting officer information from documents – specifically, the officer's name and the role the officer played in the wrongful conviction" was a key step to freeing innocent people from prison, and an LLM allowed HRDAG – a tiny, cash-strapped, excellent nonprofit – to make a giant leap forward in a vital project. I'm a donor to HRDAG and you should donate to them too:

https://hrdag.networkforgood.com/

Good data-analysis is key to addressing many of our thorniest, most pressing problems. As Ben Goldacre recounts in his inaugural Oxford lecture, it is both possible and desirable to build ethical, privacy-preserving systems for analyzing the most sensitive personal data (NHS patient records) that yield scores of solid, ground-breaking medical and scientific insights:

https://www.youtube.com/watch?v=_-eaV8SWdjQ

The difference between this kind of work – HRDAG's exoneration work and Goldacre's medical research – and the approach that OpenAI and its competitors take boils down to how they treat humans. The former treats all humans as worthy of respect and consideration. The latter treats humans as instruments – for profit in the short term, and for creating a hypothetical superintelligence in the (very) long term.

As Terry Pratchett's Granny Weatherwax reminds us, this is the root of all sin: "sin is when you treat people like things":

https://brer-powerofbabel.blogspot.com/2009/02/granny-weatherwax-on-sin-favorite.html

So much of the criticism of AI misses this distinction – instead, this criticism starts by accepting the self-serving marketing claim of the "AI safety" crowd – that their software is on the verge of becoming self-aware, and is thus valuable, a good investment, and a good product to purchase. This is Lee Vinsel's "Criti-Hype": "taking press releases from startups and covering them with hellscapes":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Criti-hype and AI were made for each other. Emily M Bender is a tireless cataloger of criti-hypeists, like the newspaper reporters who breathlessly repeat " completely unsubstantiated claims (marketing)…sourced to Altman":

https://dair-community.social/@emilymbender/111464030855880383

Bender, like White, is at pains to point out that the real debate isn't doomers vs accelerationists. That's just "billionaires throwing money at the hope of bringing about the speculative fiction stories they grew up reading – and philosophers and others feeling important by dressing these same silly ideas up in fancy words":

https://dair-community.social/@emilymbender/111464024432217299

All of this is just a distraction from real and important scientific questions about how (and whether) to make automation tools that steer clear of Granny Weatherwax's sin of "treating people like things." Bender – a computational linguist – isn't a reactionary who hates automation for its own sake. On Mystery AI Hype Theater 3000 – the excellent podcast she co-hosts with Alex Hanna – there is a machine-generated transcript:

https://www.buzzsprout.com/2126417

There is a serious, meaty debate to be had about the costs and possibilities of different forms of automation. But the superintelligence true-believers and their criti-hyping critics keep dragging us away from these important questions and into fanciful and pointless discussions of whether and how to appease the godlike computers we will create when we disassemble the solar system and turn it into computronium.

The question of machine intelligence isn't intrinsically unserious. As a materialist, I believe that whatever makes me "me" is the result of the physics and chemistry of processes inside and around my body. My disbelief in the existence of a soul means that I'm prepared to think that it might be possible for something made by humans to replicate something like whatever process makes me "me."

Ironically, the AI doomers and accelerationists claim that they, too, are materialists – and that's why they're so consumed with the idea of machine superintelligence. But it's precisely because I'm a materialist that I understand these hypotheticals about self-aware software are less important and less urgent than the material lives of people today.

It's because I'm a materialist that my primary concerns about AI are things like the climate impact of AI data-centers and the human impact of biased, opaque, incompetent and unfit algorithmic systems – not science fiction-inspired, self-induced panics over the human race being enslaved by our robot overlords.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Image:

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#criti-hype#ai doomers#doomers#eacc#effective acceleration#effective altruism#materialism#ai#10 types of people#data science#llms#large language models#patrick ball#ben goldacre#trusted research environments#science#hrdag#human rights data analysis group#red jacket#religion#emily bender#emily m bender#molly white

287 notes

·

View notes

Text

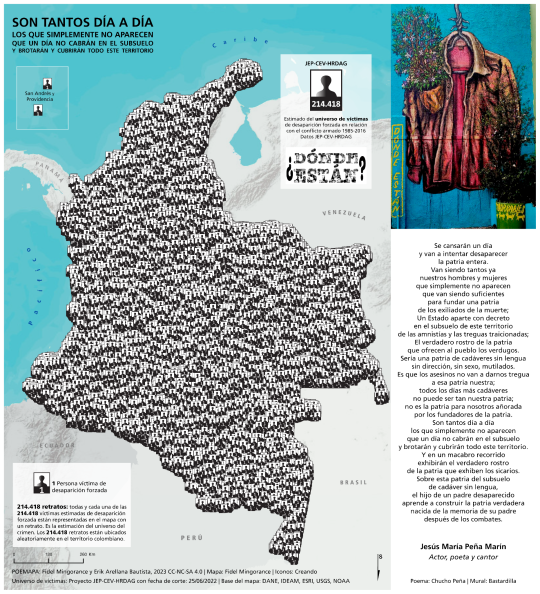

Sin tantos los que día a día no aparecen...

Chucho Peña poeta desaparecido y asesinado a los 24 años.

Grafiti Bastardilla

Cartografía

Fidel Mingorance & Erik Arellana Bautista

DESAPARICIÓN FORZADA EN COLOMBIA

214.418 Estimativo según JEP CEV -HRDAG

1 note

·

View note

Text

Unbiased algorithms can still be problematic

Creating unbiased, accurate algorithms isn’t impossible — it’s just time consuming.

“It actually is mathematically possible,” facial recognition startup Kairos CEO Brian Brackeen told me on a panel at TechCrunch Disrupt SF.

Algorithms are sets of rules that computers follow in order to solve problems and make decisions about a particular course of action. Whether it’s the type of information we receive, the information people see about us, the jobs we get hired to do, the credit cards we get approved for, and, down the road, the driverless cars that either see us or don’t, algorithms are increasingly becoming a big part of our lives. But there is an inherent problem with algorithms that begins at the most base level and persists throughout its adaption: human bias that is baked into these machine-based decision-makers.

Creating unbiased algorithms is a matter of having enough accurate data. It’s not about just having enough “pale males” in the model, but about having enough images of people from various racial backgrounds, genders, abilities, heights, weights and so forth.

Kairos CEO Brian Brackeen

“In our world, facial recognition is all about human biases, right?” Brackeen said. “And so you think about AI, it’s learning, it’s like a child and you teach it things and then it learns more and more. What we call right down the middle, right down the fair way is ‘pale males.’ It’s very, very good. Very, very good at identifying somebody who meets that classification.”

But the further you get from pale males — adding women, people from different ethnicities, and so forth — “the harder it is for AI systems to get it right, or at least the confidence to get it right,” Brackeen said.

Still, there are cons to even a one hundred percent accurate model. On the pro side, a good facial recognition use case for a completely accurate algorithm would be in a convention center, where you use the system to quickly identity and verify people are who they say they are. That’s one type of use case Kairos, which works with corporate businesses around authentication, addresses.

“So if we’re wrong, at worst case, maybe you have to do a transfer again to your bank account,” he said. “If we’re wrong, maybe you don’t see a picture accrued during a cruise liner. But when the government is wrong about facial recognition, and someone’s life or liberty is at stake, they can be putting you in a lineup that you shouldn’t be in. They could be saying that this person is a criminal when they’re not.”

But in the case of law enforcement, no matter how accurate and unbiased these algorithms are, facial recognition software has no business in law enforcement, Brackeen said. That’s because of the potential for unlawful, excessive surveillance of citizens.

Given the government already has our passport photos and identification photos, “they could put a camera on Main Street and know every single person driving by,” Brackeen said.

And that’s a real possibility. In the last month, Brackeen said Kairos turned down a government request from Homeland Security, seeking facial recognition software for people behind moving cars.

“For us, that’s completely unacceptable,” Brackeen said.

Another issue with 100 percent perfect mathematical predictions is that it comes down to what the model is predicting, Human Rights Data Analysis Group lead statistician Kristian Lum said on the panel.

Human Rights Data Analysis Group lead statistician Kristian Lum

“Usually, the thing you’re trying to predict in a lot of these cases is something like rearrest,” Lum said. “So even if we are perfectly able to predict that, we’re still left with the problem that the human or systemic or institutional biases are generating biased arrests. And so, you still have to contextualize even your 100 percent accuracy with is the data really measuring what you think it’s measuring? Is the data itself generated by a fair process?”

HRDAG Director of Research Patrick Ball, in agreement with Lum, argued that it’s perhaps more practical to move it away from bias at the individual level and instead call it bias at the institutional or structural level. If a police department, for example, is convinced it needs to police one neighborhood more than another, it’s not as relevant if that officer is a racist individual, he said.

HRDAG Director of Research Patrick Ball

“What’s relevant is that the police department has made an institutional decision to over-police that neighborhood, thereby generating more police interactions in that neighborhood, thereby making people with that ZIP code more likely to be classified as dangerous if they are classified by risk assessment algorithms,” Ball said.

And even if the police were to have perfect information about every crime committed, in order to build a fair machine learning system, “we would need to live in a society of perfect surveillance so that there is absolute police knowledge about every single crime so that nothing is excluded,” he said. “So that there would be no bias. Let me suggest to you that that’s way worse even than a bunch of crimes going free. So maybe we should just work on reforming police practice and forget about all of the machine learning distractions because they’re really making things worse, not better.”

He added, “For fair predictions, you first need a fair criminal justice system. And we have a ways to go.”

youtube

0 notes

Text

Technology Can’t Predict Crime, It Can Only Weaponize Proximity to Policing

Special thanks to Yael Grauer for additional writing and research.

In June 2020, Santa Cruz, California became the first city in the United States to ban municipal use of predictive policing, a method of deploying law enforcement resources according to data-driven analytics that supposedly are able to predict perpetrators, victims, or locations of future crimes. Especially interesting is that Santa Cruz was one of the first cities in the country to experiment with the technology when it piloted, and then adopted, a predictive policing program in 2011. That program used historic and current crime data to break down some areas of the city into 500 foot by 500 foot blocks in order to pinpoint locations that were likely to be the scene of future crimes. However, after nine years, the city council voted unanimously to ban it over fears of how it perpetuated racial inequality.

Predictive policing is a self-fulfilling prophecy. If police focus their efforts in one neighborhood and arrest dozens of people there during the span of a week, the data will reflect that area as a hotbed of criminal activity. The system also considers only reported crime, which means that neighborhoods and communities where the police are called more often might see a higher likelihood of having predictive policing technology concentrate resources there. This system is tailor-made to further victimize communities that are already overpoliced—namely, communities of color, unhoused individuals, and immigrants—by using the cloak of scientific legitimacy and the supposed unbiased nature of data.

Santa Cruz’s experiment, and eventual banning of the technology is a lesson to the rest of the country: technology is not a substitute for community engagement and holistic crime reduction measures. The more police departments rely on technology to dictate where to focus efforts and who to be suspicious of, the more harm those departments will cause to vulnerable communities. That’s why police departments should be banned from using supposedly data-informed algorithms to inform which communities, and even which people, should receive the lion’s share of policing and criminalization.

What Is Predictive Policing?

The Santa Cruz ordinance banning predictive policing defines the technology as “means software that is used to predict information or trends about crime or criminality in the past or future, including but not limited to the characteristics or profile of any person(s) likely to commit a crime, the identity of any person(s) likely to commit crime, the locations or frequency of crime, or the person(s) impacted by predicted crime.”

Predictive policing analyzes a massive amount of information from historical crimes including the time of day, season of the year, weather patterns, types of victims, and types of location in order to infer when and in which locations crime is likely to occur. For instance, if a number of crimes have been committed in alleyways on Thursdays, the algorithm might tell a department they should dispatch officers to alleyways every Thursday. Of course, then this means that police are predisposed to be suspicious of everyone who happens to be in that area at that time.

The technology attempts to function similarly while conducting the less prevalent “person based” predictive policing. This takes the form of opaque rating systems that assign people a risk value based on a number of data streams including age, suspected gang affiliation, and the number of times a person has been a victim as well as an alleged perpetrator of a crime. The accumulated total of this data could result in someone being placed on a “hot list”, as happened to over 1,000 people in Chicago who were placed on one such “Strategic Subject List.” As when specific locations are targeted, this technology cannot actually predict crime—and in an attempt to do so, it may expose people to targeted police harassment or surveillance without any actual proof that a crime will be committed.

There is a reason why the use of predictive policing continues to expand despite its dubious foundations: it makes money. Many companies have developed tools for data-driven policing; some of the biggest arePredPol, HunchLab, CivicScape, and Palantir. Academic institutions have also developed predictive policing technologies, such as Rutgers University’s RTM Diagnostics or Carnegie Mellon University’s CrimeScan, which is used in Pittsburgh. Some departments have built such tools with private companies and academic institutions. For example, in 2010, the Memphis Police Department built its own tool, in partnership with the University of Memphis Department of Criminology and Criminal Justice, using IBM SPSS predictive analytics.

As of summer 2020, the technology is used in dozens of cities across the United States.

What Problems Does it Pose?

One of the biggest flaws of predictive policing is the faulty data fed into the system. These algorithms depend on data informing them of where criminal activity has happened to predict where future criminal activity will take place. However, not all crime is recorded—some communities are more likely to report crime than others, some crimes are less likely to be reported than other crimes, and officers have discretion in deciding whether or not to make an arrest. Predictive policing only accounts for crimes that are reported, and concentrates policing resources in those communities, which then makes it more likely that police may uncover other crimes. This all creates a feedback loop that makes predictive policing a self-fulfilling prophecy. As professor Suresh Venkatasubramanian put it:

“If you build predictive policing, you are essentially sending police to certain neighborhoods based on what they told you—but that also means you’re not sending police to other neighborhoods because the system didn’t tell you to go there. If you assume that the data collection for your system is generated by police whom you sent to certain neighborhoods, then essentially your model is controlling the next round of data you get.”

This feedback loop will impact vulnerable communities, including communities of color, unhoused communities, and immigrants.

Police are already policing minority neighborhoods and arresting people for things that may have gone unnoticed or unreported in less heavily patrolled neighborhoods. When this already skewed data is entered into a predictive algorithm, it will deploy more officers to the communities that are already overpoliced.

A recent deep dive into the predictive program used by the Pasco County Sheriff's office illustrates the harms that getting stuck in an algorithmic loop can have on people. After one 15-year-old was arrested for stealing bicycles out of a garage, the algorithm continuously dispatched police to harass him and his family. Over the span of five months, police went to his home 21 times. They showed up at his gym and his parent’s place of work. The Tampa Bay Times revealed that since 2015, the sheriff's office has made more than 12,500 similar preemptive visits on people.

These visits often resulted in other, unrelated arrests that further victimized families and added to the likelihood that they would be visited and harassed again. In one incident, the mother of a targeted teenager was issued a $2,500 fine when police sent to check in on her child saw chickens in the backyard. In another incident, a father was arrested when police looked through the window of the house and saw a 17year-old smoking a cigarette. These are the kinds of usually unreported crimes that occur in all neighborhoods, across all economic strata—but which only those marginalized people who live under near constant policing are penalized for.

As experts have pointed out, these algorithms often draw from flawed and non-transparent sources such as gang databases, which have been the subject of public scrutiny due to their lack of transparency and overinclusion of Black and Latinx people. In Los Angeles, for instance, if police notice a person wearing a sports jersey or having a brief conversation with someone on the street, it may be enough to include that person in the LAPD’s gang database. Being included in a gang database often means being exposed to more police harassment and surveillance, and also can lead to consequences once in the legal system, such as harsher sentences. Inclusion in a gang database can impact whether a predictive algorithm identifies a person as being a potential threat to society or artificially projects a specific crime as gang-related. In July 2020, the California Attorney General barred police in the state from accessing any of LAPD’s entries into the California gang database after LAPD officers were caught falsifying data. Unaccountable and overly broad gang databases are the type of flawed data flowing from police departments into predictive algorithms, and exactly why predictive policing cannot be trusted.

To test racial disparities in predictive policing, Human Rights Data Analysis Group (HRDAG) looked at Oakland Police Department’s recorded drug crimes. It used a big data policing algorithm to determine where it would suggest that police look for future drug crimes. Sure enough, HRDAG found that the data-driven model would have focused almost exclusively on low-income communities of color. But public health data on drug users combined with U.S. Census data show that the distribution of drug users does not correlate with the program’s predictions, demonstrating that the algorithm’s predictions were rooted in bias rather than reality.

All of this is why a group of academic mathematicians recently declared a boycott against helping police create predictive policing tools. They argued that their credentials and expertise create a convenient way to smuggle racist ideas about who will commit a crime based on where they live and who they know, into the mainstream through scientific legitimacy. “It is simply too easy,” they write, “to create a ’scientific’ veneer for racism.”

In addition, there is a disturbing lack of transparency surrounding many predictive policing tools. In many cases, it’s unclear how the algorithms are designed, what data is being used, and sometimes even what the system claims to predict. Vendors have sought non-disclosure clauses or otherwise shrouded their products in secrecy, citing trade secrets or business confidentiality. When data-driven policing tools are black boxes, it’s difficult to assess the risks of error rates, false positives, limits in programming capabilities, biased data, or even flaws in source code that affect search results.

For local departments, the prohibitive cost of using these predictive technologies can also be a detriment to the maintenance of civil society. In Los Angeles, the LAPD paid $20 million over the course of nine years to use Palantir’s predictive technology alone. That’s only one of many tools used by the LAPD in an attempt to predict the future.

Finally, predictive policing raises constitutional concerns. Simply living or spending time in a neighborhood or with certain people may draw suspicion from police or cause them to treat people as potential perpetrators. As legal scholar Andrew Guthrie Furgeson has written, there is tension between predictive policing and legal requirements that police possess reasonable suspicion to make a stop. Moreover, predictive policing systems sometimes utilize information from social media to assess whether a person might be likely to engage in crime, which also raises free speech issues.

Technology cannot predict crime, it can only weaponize a person’s proximity to police action. An individual should not have their presumption of innocence eroded because a casual acquaintance, family member, or neighbor commits a crime. This just opens up members of already vulnerable populations to more police harassment, erodes trust between public safety measures and the community, and ultimately creates more danger. This has already happened in Chicago, where the police surveil and monitor the social media of victims of crimes—because being a victim of a crime is one of the many factors Chicago’s predictive algorithm uses to determine if a person is at high risk of committing a crime themselves.

What Can Be Done About It?

As the Santa Cruz ban suggests, cities are beginning to wise up to the dangers of predictive policing. As with the growing movement to ban government use of face recognition and other biometric surveillance, we should also seek bans on predictive policing. Across the country, from San Francisco to Boston, almost a dozen cities have banned police use of face recognition after recognizing its disproportionate impact on people of color, its tendency to falsely accuse people of crimes, its erosion of our presumption of innocence, and its ability to track our movements.

Before predictive policing becomes even more widespread, cities should now take advantage of the opportunity to protect the well-being of their residents by passing ordinances that ban the use of this technology or prevent departments from acquiring it in the first place. If your town has legislation like a Community Control Over Police Surveillance (CCOPS) ordinance, which requires elected officials to approve police purchase and use of surveillance equipment, the acquisition of predictive policing can be blocked while attempts to ban the technology are made.

The lessons from the novella and film Minority Report still apply, even in the age of big data: people are innocent until proven guilty. People should not be subject to harassment and surveillance because of their proximity to crime. For-profit software companies with secretive proprietary algorithms should not be creating black box crystal balls exempt from public scrutiny and used without constraint by law enforcement. It’s not too late to put the genie of predictive policing back in the bottle, and that is exactly what we should be urging local, state, and federal leaders to do.

from Deeplinks https://ift.tt/2EV5PgI

0 notes

Text

ITJP and HRDAG begin initiative to count Tamil war dead https://t.co/DyCuZEQJ8F #lka #srilanka #tamil #eelam #mullivaikkal @itjpsl @hrdag https://t.co/Vu0Dp5Icy9

ITJP and HRDAG begin initiative to count Tamil war dead https://t.co/DyCuZEQJ8F #lka #srilanka #tamil #eelam #mullivaikkal @itjpsl @hrdag pic.twitter.com/Vu0Dp5Icy9

— Tamil Guardian (@TamilGuardian) January 7, 2019

via Twitter https://twitter.com/TamilGuardian

January 07, 2019 at 08:32AM

0 notes

Link

Creating unbiased, accurate algorithms isn’t impossible — it’s just time consuming.

“It actually is mathematically possible,” facial recognition startup Kairos CEO Brian Brackeen told me on a panel at TechCrunch Disrupt SF.

Algorithms are sets of rules that computers follow in order to solve problems and make decisions about a particular course of action. Whether it’s the type of information we receive, the information people see about us, the jobs we get hired to do, the credit cards we get approved for, and, down the road, the driverless cars that either see us or don’t, algorithms are increasingly becoming a big part of our lives. But there is an inherent problem with algorithms that begins at the most base level and persists throughout its adaption: human bias that is baked into these machine-based decision-makers.

Creating unbiased algorithms is a matter of having enough accurate data. It’s not about just having enough “pale males” in the model, but about having enough images of people from various racial backgrounds, genders, abilities, heights, weights and so forth.

Kairos CEO Brian Brackeen

“In our world, facial recognition is all about human biases, right?” Brackeen said. “And so you think about AI, it’s learning, it’s like a child and you teach it things and then it learns more and more. What we call right down the middle, right down the fair way is ‘pale males.’ It’s very, very good. Very, very good at identifying somebody who meets that classification.”

But the further you get from pale males — adding women, people from different ethnicities, and so forth — “the harder it is for AI systems to get it right, or at least the confidence to get it right,” Brackeen said.

Still, there are cons to even a one hundred percent accurate model. On the pro side, a good facial recognition use case for a completely accurate algorithm would be in a convention center, where you use the system to quickly identity and verify people are who they say they are. That’s one type of use case Kairos, which works with corporate businesses around authentication, addresses.

“So if we’re wrong, at worst case, maybe you have to do a transfer again to your bank account,” he said. “If we’re wrong, maybe you don’t see a picture accrued during a cruise liner. But when the government is wrong about facial recognition, and someone’s life or liberty is at stake, they can be putting you in a lineup that you shouldn’t be in. They could be saying that this person is a criminal when they’re not.”

But in the case of law enforcement, no matter how accurate and unbiased these algorithms are, facial recognition software has no business in law enforcement, Brackeen said. That’s because of the potential for unlawful, excessive surveillance of citizens.

Given the government already has our passport photos and identification photos, “they could put a camera on Main Street and know every single person driving by,” Brackeen said.

And that’s a real possibility. In the last month, Brackeen said Kairos turned down a government request from Homeland Security, seeking facial recognition software for people behind moving cars.

“For us, that’s completely unacceptable,” Brackeen said.

Another issue with 100 percent perfect mathematical predictions is that it comes down to what the model is predicting, Human Rights Data Analysis Group lead statistician Kristian Lum said on the panel.

Human Rights Data Analysis Group lead statistician Kristian Lum

“Usually, the thing you’re trying to predict in a lot of these cases is something like rearrest,” Lum said. “So even if we are perfectly able to predict that, we’re still left with the problem that the human or systemic or institutional biases are generating biased arrests. And so, you still have to contextualize even your 100 percent accuracy with is the data really measuring what you think it’s measuring? Is the data itself generated by a fair process?”

HRDAG Director of Research Patrick Ball, in agreement with Lum, argued that it’s perhaps more practical to move it away from bias at the individual level and instead call it bias at the institutional or structural level. If a police department, for example, is convinced it needs to police one neighborhood more than another, it’s not as relevant if that officer is a racist individual, he said.

HRDAG Director of Research Patrick Ball

“What’s relevant is that the police department has made an institutional decision to over-police that neighborhood, thereby generating more police interactions in that neighborhood, thereby making people with that ZIP code more likely to be classified as dangerous if they are classified by risk assessment algorithms,” Ball said.

And even if the police were to have perfect information about every crime committed, in order to build a fair machine learning system, “we would need to live in a society of perfect surveillance so that there is absolute police knowledge about every single crime so that nothing is excluded,” he said. “So that there would be no bias. Let me suggest to you that that’s way worse even than a bunch of crimes going free. So maybe we should just work on reforming police practice and forget about all of the machine learning distractions because they’re really making things worse, not better.”

He added, “For fair predictions, you first need a fair criminal justice system. And we have a ways to go.”

via TechCrunch

0 notes

Text

Announcing latish breaking, amazing additions to the agenda for Disrupt SF (Sept. 5-7)

TechCrunch Disrupt SF (Sept. 5-7), we said from the start, was going to be the most ambitious ever, and when it comes to programming there’s no question the Disrupt SF agenda eclipses anything we’ve done in the past. There are two BIG stages, plus two speaker Q&A stages, workshops and a Startup Showcase stage, where the top exhibiting startups will tell their stories. We published the agenda back in early July, but we’ve also added dozens of sessions since then for a total of 77 on the Disrupt stages. You can always check out the complete and up-to-date agenda. Here is a sampling of what you might have missed since we originally posted the agenda.

On the Disrupt stages:

Alex Stamos, former head of security at Facebook and Yahoo, on security in an insecure world

Aileen Lee (Cowboy Ventures), Megan Quinn (Spark Capital) and Sarah Tavel (Benchmark) on the state of venture

Dario Gill (IBM) and Chad Rigetti (Rigetti Computing) on quantum computing

Laurie Yoler (Zoox), Reilly Brennan (Trucks VC), Chris Urmson (Aurora) on all things autonomous

Jason Robbins (Draft Kings) on the changing worlds of online fantasy and gambling

Hans Tung (GGV) and Ti Wang (Liulishuo) on the Chinese startup road to US markets

Rachel Haurwitz (Caribou Biosciences), Trevor Martin (Mammoth Biosciences) on CRIPSR

Rich Mahoney (Seismic) on wearable (and fashionable) robotics

Rob Coneybeer (Shasta Ventures), Tess Hatch (Bessemer), Matt Ocko (Data Collective) on investing in space

Robin Berzin (Parsley Health), Aaron Patzer (Vital Software) on the future of health

Colin Angle (iRobot) on the next generation of home robotics

Brian Brackenn (Kairos), Patrick Ball and Kristian Lum (HRDAG) on data and human rights

In the 30, audience-driven, Q&A sessions with speakers, including..

Building on DLT: Mance Harmon (Hedera) and Brian Behlendorf (Hyperledger)

From Funding to Fintech: Nikolay Storonsky (Revolut)

Inside the Blockchain: Joe Lubin, Amanda Gutterman & Sam Cassatt (Consensys)

Building Brands: Emiliy Heyward (Red Antler), Philip Krim (Casper), and Tina Sharkey (Brandless)

Gaming’s Culture: Jason Citron (Discord) and Delane Parnell (PlayVS)

HealthTech on the Horizon: Robin Berzin (Parsley Health) and Aaron Patzer (Vital Software)

In the workshops:

All Raise‘s Women Founders Roundtable and AMA

Verizon 5G: The Fourth Industrial Revolution (Sponsored by Verizon)

Bringing NASA Technology Down to Earth (Sponsored by NASA)

Hacking Human Performance (Sponsored by Red Bull)

Running a Node on A Distributed Ledger: Live Demo (Sponsored by Constellations Labs)

This is just a fraction of what you’ll be able to experience at Disrupt SF. There’s still time for you to grab your pass and save up top $500 – get yours today here.

Via Ned Desmond https://techcrunch.com

0 notes

Text

Why big-data analysis of police activity is inherently biased - The Conversation US

The Conversation US Why big-data analysis of police activity is inherently biased The Conversation US William Isaac is a statistical consultant for the Human Rights Data Analysis Group (HRDAG). The Human Rights Data Analysis Group is a non-profit, non-partisan organization that produces rigorous, scientific analyses of human rights violations around ... and more » http://dlvr.it/P64Dbz #bigdata #hadoop #analytics

0 notes

Text

Why big-data analysis of police activity is inherently biased - The Conversation US

The Conversation US

Why big-data analysis of police activity is inherently biased

The Conversation US

William Isaac is a statistical consultant for the Human Rights Data Analysis Group (HRDAG). The Human Rights Data Analysis Group is a non-profit, non-partisan organization that produces rigorous, scientific analyses of human rights violations around ...

and more »

http://ift.tt/2q1KSqA via

http://ift.tt/18iWci2

0 notes

Link

@kaythaney I feel that Patrick Ball will have a very good answer. https://t.co/L0gC9prEsx @hrdag / @katecrawford @wilbanks @hmason

— noel hidalgo 🌹 (@noneck) March 22, 2017

0 notes

Text

But as ever-larger, more concentrated corporations captured more of their regulators, we’ve essentially forgotten that there are domains of law other than copyright — that is, other than the kind of law that corporations use to enrich themselves.

Copyright has some uses in creative labor markets, but it’s no substitute for labor law. Likewise, copyright might be useful at the margins when it comes to protecting your biometric privacy, but it’s no substitute for privacy law.

When the AI companies say, “There’s no way to use copyright to fix AI’s facial recognition or labor abuses without causing a lot of collateral damage,” they’re not lying — but they’re also not being entirely truthful.

If they were being truthful, they’d say, “There’s no way to use copyright to fix AI’s facial recognition problems, that’s something we need a privacy law to fix.”

If they were being truthful, they’d say, “There’s no way to use copyright to fix AI’s labor abuse problems, that’s something we need labor laws to fix.

-How To Think About Scraping: In privacy and labor fights, copyright is a clumsy tool at best

Image:

syvwlch (modified)

https://commons.wikimedia.org/wiki/File:Print_Scraper_(5856642549).jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

#scraping#privacy#labor#human rights#FARC#colombia#HRDAG#wayback machine#new york times#copyright#robots.txt#Mario Zechner#monopoly#price fixing#austria#clearview ai#biometrics#biometric privacy#plausible sentence generators#criti-hype#ai#llms#stochastic parrots#computational linguistics#chokepoint capitalism#llcs with mfas

106 notes

·

View notes

Text

Web-scraping is good, actually.

For nearly all of history, academic linguistics focused on written, formal text, because informal, spoken language was too expensive and difficult to capture. In order to find out how people spoke — which is not how people write! — a researcher had to record speakers, then pay a grad student to transcribe the speech.

The process was so cumbersome that the whole discipline grew lopsided. We developed an extensive body of knowledge about written, formal prose (something very few of us produce), while informal, casual language (something we all produce) was mostly a black box.

The internet changed all that, creating the first-ever corpus of informal language — the immense troves of public casual speech that we all off-gas as we move around on the internet, chattering with our friends.

The burgeoning discipline of computational linguistics is intimately entwined with the growth of the internet, and its favorite tactic is scraping: vacuuming up massive corpuses of informal communications created by people who are incredibly hard to contact (often, they are anonymous or pseudonymous, and even when they’re named and know, are too numerous to contact individually).

The academic researchers who are creating a new way of talking and thinking about human communication couldn’t do their jobs without scraping.

-How To Think About Scraping: In privacy and labor fights, copyright is a clumsy tool at best

#scraping#privacy#labor#human rights#FARC#colombia#HRDAG#wayback machine#new york times#copyright#robots.txt#Mario Zechner#monopoly#price fixing#austria#clearview ai#biometrics#biometric privacy#plausible sentence generators#criti-hype#ai#llms#stochastic parrots#computational linguistics#chokepoint capitalism#llcs with mfas

86 notes

·

View notes

Text

Scraping to train machine-learning models is good, actually.

The Human Rights Data Analysis Group is a crucial player in the fight to hold war-criminals to account. As the leading nonprofit providing statistical analysis of crimes against humanities, HRDAG has participated in tribunals, truth and reconciliation proceedings, and trials from Serbia to East Timor, South Africa to the USA, and, most recently, Colombia.

Colombia’s long civil war — funded and supported by US agencies from the CIA and DEA to the US military —went on for decades, killing hundreds of thousands of people, mostly very poor, very marginalized people.

Many of these killings were carried out by child soldiers, who were recruited at gunpoint by both CIA-backed right-wing militias whose actions were directed by the richest, most powerful people in the country, and by the leftist FARC guerrillas.

HRDAG, working in partnership with the Colombian human rights group Dejusticia, merged over 100 databases in order to build a rigorous statistical picture of the war’s casualties; the likelihood that each death could be attributed to the government, right-wing militias, or FARC forces; as well as which groups were primarily responsible for kidnapping children and forcing them to be soldiers.

The resulting report builds on the largest human rights data-set ever collected. The report — which makes an irrefutable case that right-wing militias committed the majority of killings and child-soldier recruitment, and that their wealthy backers knew and supported these actions — have been key to Colombia’s truth and reconciliation proceedings.

-How To Think About Scraping: In privacy and labor fights, copyright is a clumsy tool at best

Image:

syvwlch (modified)

https://commons.wikimedia.org/wiki/File:Print_Scraper_(5856642549).jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

#scraping#privacy#labor#human rights#FARC#colombia#HRDAG#wayback machine#new york times#copyright#robots.txt#Mario Zechner#monopoly#price fixing#austria#clearview ai#biometrics#biometric privacy#plausible sentence generators#criti-hype#ai#llms#stochastic parrots#computational linguistics#chokepoint capitalism#llcs with mfas

90 notes

·

View notes

Text

Scraping to alienate creative workers’ labor is bad, actually.

Creative workers are justifiably furious that their bosses took one look at the plausible sentence generators and body-snatching image-makers and said, “Holy shit, we will never have to pay a worker ever again.”

Our bosses have alarming, persistent, rock-hard erections for firing our asses and replacing us with shell-scripts. The dream of production without workers goes all the way back to the industrial revolution, and now — as then — capitalists aspire to becoming rentiers, who own things for a living rather than making things for a living.

Creators’ bosses hate creators. They’ve always wished we were robots, rather than people who cared about our work. They want to be able to prompt us like they would a Stochastic Parrot: “Make me E.T., but the hero is a dog, and put a romantic sub-plot in the second act, and then have a giant gunfight at the climax.”

Ask a screenwriter for that script and you’ll have to take a five minute break while everyone crawls around on the floor looking for the writer’s eyeballs, which will have fallen out of their face after being rolled so hard.

Ask an LLM for that script and it’ll cheerfully cough it up. It’ll be shit, but at least you won’t get any lip.

Same goes for art-directors, newspaper proprietors, and other would-be job-removers for whom a low-quality product filled with confident lies is preferable to having to argue with an uppity worker who not only expects to have a say in their work assignments, but also expects to get paid for their work.

-How To Think About Scraping: In privacy and labor fights, copyright is a clumsy tool at best

Image:

syvwlch (modified)

https://commons.wikimedia.org/wiki/File:Print_Scraper_(5856642549).jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

#scraping#privacy#labor#human rights#FARC#colombia#HRDAG#wayback machine#new york times#copyright#robots.txt#Mario Zechner#monopoly#price fixing#austria#clearview ai#biometrics#biometric privacy#plausible sentence generators#criti-hype#ai#llms#stochastic parrots#computational linguistics#chokepoint capitalism#llcs with mfas

35 notes

·

View notes

Text

Massive Predpol leak confirms that it drives racist policing

When you or I seek out evidence to back up our existing beliefs and ignore the evidence that shows we’re wrong, it’s called “confirmation bias.” It’s a well-understood phenomenon that none of us are immune to, and thoughtful people put a lot of effort into countering it in themselves.

But confirmation bias isn’t always an unconscious process. Consultancies like McKinsey have grown to multibillion-dollar titans by offering powerful people confirmation bias as a service: pay them enough and they’ll produce a fancy report saying whatever you want to do is the best thing you possibly could do.

https://pluralistic.net/2021/11/25/strikesgiving/#cool-story-pharma-bro

A sizable fraction of the machine learning bubble is driven by this phenomenon. Pay a machine learning company enough money and they’ll produce a statistical model that proves that whatever terrible thing you’re doing is empirical and objective and true. After all, “Math doesn’t lie.”

The best term I’ve heard for this is “empirical facewash.” I learned that term from Patrick Ball, in a presentation on the Human Rights Data Analysis Group’s outstanding study of racial bias in predictive policing tools.

https://www.mic.com/articles/156286/crime-prediction-tool-pred-pol-only-amplifies-racially-biased-policing-study-shows

(Sidenote: HRDAG just won the prestigious Rafto Prize, a major international award for human rights work)

https://hrdag.org/2021/09/23/hrdag-wins-the-rafto-prize/

(And on that note, HRDAG is a shoestring operation that turns our tax-deductible donations into reliable statistical accounts of human rights abuses that are critical to truth and reconciliation, human rights tribunals, and trials for crimes against humanity. I am an annual donor and you should consider them in your giving, too)

https://hrdag.networkforgood.com/

Here’s how Ball described that predictive policing research: everybody knows that cops have a racist policing problem, but we don’t all agree on what that problem is. You and I might think that the problem is that cops make racially motivated arrests, while the cops and their apologists think the problem is that we think the cops make racially motivated arrests.

By feeding crime data into a machine learning model, and then asking it to predict where crime will take place based on past patterns of crime data, cops can get an “objective” picture of where to concentrate their policing activities.

But this has major problems. First, it presumes that crime stats are objective — that everyone reports crime at the same rate, and that the police investigate suspects at the same rate. In other words, this starts from the presumption that there is no racial bias in crime statistics — and then uses that presumption to prove that there is no racial bias in crime statistics!

Second, this presumes that undetected crimes are correlated with detected ones. In other words, if the cops detect a lot of crime in a poor neighborhood — and not in a rich neighborhood — then all the undetected crimes are also in those poor neighborhoods.

Finally, this presumes that every crime is a crime! In other words, it presumes that there are no de facto crimes like “driving while brown” or “walking your dog while black.” Some of the “crimes” in the crime stats aren’t actually crimes — rather, they’re pretextual stops that turn into plea deals after bullying prosecutors threaten a long prison sentence.

HRDAG’s work crystallized the critique of machine learning as a tool for correcting systemic bias, and it has been my touchstone for understanding other bias-reinforcing/bias-accelerating machine learning scandals. It’s a critical adjunct to such foundational texts as Cathy O’Neil’s “Weapons of Math Destruction”:

https://memex.craphound.com/2016/09/06/weapons-of-math-destruction-invisible-ubiquitous-algorithms-are-ruining-millions-of-lives/

And Virginia Eubanks’s “Automating Inequality.”

https://memex.craphound.com/2018/01/31/automating-inequality-using-algorithms-to-create-a-modern-digital-poor-house/

But you don’t need to look to outside sources for evidence that predictive policing reinforces and accelerates racial bias. The founders of Predpol — the leading predictive policing tool — came to the same conclusion in 2018, but decided not to do anything about it.

https://ieeexplore.ieee.org/abstract/document/8616417

That may sound shocking, but really, it’s par for the course with Predpol, which rebranded itself as Geolitica in order to distance itself from a string of scandals and bad publicity.

From the start, Predpol has wrapped its operations in secrecy, pressuring police forces to hide their use of the service from city officials and residents. Back in 2018, a security researcher provided me with a list of cities that seemed to have secretly procured Predpol services.

https://boingboing.net/2018/10/30/el-monte-and-tacoma.html

In 2019, Motherboard’s Caroline Haskins used that report to extract even more information about the cops’ secret deals with Predpol:

https://www.vice.com/en/article/d3m7jq/dozens-of-cities-have-secretly-experimented-with-predictive-policing-software

All this started because my source was able to learn that these cities were experimenting with Predpol’s digital phrenology due to basic cybersecurity errors the company had made.

Predpol’s cybersecurity has not improved since. A team of reporters from Gizmodo and The Markup just published a blockbuster report on Predpol’s role in biased policing, using the largest Predpol leak in history. Data regarding 5.9 million Predpol predictions was left on an unsecured server!

https://themarkup.org/prediction-bias/2021/12/02/crime-prediction-software-promised-to-be-free-of-biases-new-data-shows-it-perpetuates-them

The Markup/Gizmodo team used that dataset to conduct a massive study on racial bias in predictive policing. As American University’s Andrew Ferguson put it, “No one has done the work you guys are doing, which is looking at the data.” This is “striking because people have been paying hundreds of thousands of dollars for this technology for a decade.”

Ferguson’s point — that public millions have been poured into an experimental technology without any external validation — is important. After all, it’s unlikely that cops and Predpol keep this stuff a secret from us because they know we’ll love it and they don’t want to ruin the pleasant surprise.

On the other hand, it makes perfect sense if Predpol is really selling empirical facewash — that is, confirmation bias as a service.

That certainly seems to be the case based on the analysis published today. Police who rely on Predpol do less patrolling in white and affluent neighborhoods (these are pretty much the same neighborhoods in most of the USA, of course). But when it comes communities of color and poor communities, Predpol predictions send cops flooding in: “A few neighborhoods in our data were the subject of more than 11,000 predictions.”

When Predpol sends cops into your neighborhood, arrests shoot up (you find crime where you look for it), as does use of force. This has knock-on effects — for example, the reporters tell the story of Brianna Hernandez, who was evicted, along with her two young children from low-income housing. Her partner was stopped in his car while dropping off some money for her. He had an old court injunction barring him from being on the premises because of a crime he committed 14 years earlier, while he was a minor. The housing complex had a policy of evicting tenants who associated with people who committed crimes, and it had been the target of a flood of Predpol predictions.

Brianna doesn’t know if she and her children were made homeless because of a Predpol prediction, thanks to the secrecy Predpol and its customers hide behind.

Robert McCorquodale — the Calcasieu Parish, LA sheriff’s attorney who handles public records requests — refused to confirm whether they used Predpol, despite the fact that the data-leak clearly confirmed they were. He cited “public safety and officer safety” and speculated that if criminals knew Predpol was in use, they’d be able to outwit it: “I feel this is not a public record.”

Unsurprisingly, police reform advocates in six of the cities where Predpol was in use didn’t know about it: “Even those involved in government-organized social justice committees said they didn’t have a clue about it.”

All this secrecy helps hide the fact that Predpol is a) expensive and b) it doesn’t work. But many police departments are wising up. LAPD was Predpol’s first big reference customer — and they stopped using it in 2020, citing financial constraints and a damning Inspector General report.

It’s not just LA. Santa Cruz — the birthplace of Predpol — also fired the company last year:

https://pluralistic.net/2020/06/27/belated-oppenheimers/#banana-slugs

There’s a limited pool of mathematicians who can produce the kind of convincing confirmation bias as a service that Predpol sells, and it’s shrinking. 1,400 mathematicians have signed an open letter “begging their colleagues not to collaborate on research with law enforcement, specifically singling out Predpol.”

https://www.ams.org/journals/notices/202009/rnoti-p1293.pdf

Image:

Science Museum London (modified)

https://wellcomecollection.org/works/fjbypqcr/items

CC BY 4.0

https://creativecommons.org/licenses/by/4.0

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0:

https://creativecommons.org/licenses/by/3.0/deed.en

172 notes

·

View notes

Text

The true US police body-count

The Human Rights Data Analysis Group does incredible human rights work by using rigorous statistical methods to piece together fragmentary evidence of genocides to extrapolate the full scope of the crimes, for use in international tribuals.

https://hrdag.org

America resembles these nations gripped by genocide in that we have a source of mass killings (deaths at the hands of police) and no reliable source that counts them all.

In 2015, Kristian Lum and Patrick Ball used techniques they'd used in Guatemala, Syria and Kosovo - to count Americans killed by cops.

The open-access paper explains their methods and conclusions and is a beautiful piece of scientific communications.

https://hrdag.org/wp-content/uploads/2015/07/2015-hrdag-estimating-undoc-homicides.pdf

At the time, they also published a layperson-friendly Granta article explaining these methods for non-statisticians, and in so doing, explained why all the official attempts to count the dead had underestimated the body count.

Ball's 2015 conclusion is cops kill about 1500 of us every year. "1500 police homicides/year would mean that 8-10% of all US homicide victims are killed by police. Of all US homicide victims killed by people they don’t know, approximately 1/3 of them are victims of police."

HRDAG's work has been incredibly important for my understanding of this crisis. Their early analysis of the first covid-19 studies was indepensible:

https://pluralistic.net/2020/03/15/denominators-matter/#denominators-matter

And I think about their primer on Bayesian reasoning and covid test-reliability comes up literally every day.

https://pluralistic.net/2020/04/29/banjo-nazis/#uncertainty

34 notes

·

View notes