Text

the first sentient robot to realize deceased humans and animals can’t be repaired or backed up on a server is gonna be so devastated

64K notes

Text

I love going viral on tumblr.com. It’s like if you stood in a field and said some of the stupidest shit a human being is capable of and then like fifty thousand crows attacked you

#lol#no literally I just laughed until I could not breathe at this#going viral on tumblr#going viral#tumblr#crows#hilarious#laugh rule

272K notes

Note

Do you happen to know the origin of the fantasy trope in which a deity's power directly corresponds to the number of their believers / the strength of their believers' faith?

I only know it from places like Discworld and DnD that I'm fairly confident are referencing some earlier source, but outside of Tinkerbell in Peter Pan, I can't think of any specific work it might've come from, 20th-c fantasy really not being my wheelhouse.

Thank you!

That's an interesting question. In terms of immediate sources, I suspect, but cannot prove, that the trope's early appearances in both Dungeons & Dragons and Discworld are most immediately influenced by the oeuvre of Harlan Ellison – his best-known work on the topic, the short story collection Deathbird Stories, was published in 1975, which places it very slightly into the post-D&D era, though most of the stories it contains were published individually earlier – but Ellison certainly isn't the trope's originator. L Sprague de Camp and Fritz Leiber also play with the idea in various forms, as does Roger Zelazny, though only Zelazny's earliest work is properly pre-D&D.

Hm. Off the top of my head, the earliest piece of fantasy fiction I can think of that makes substantial use of the trope in its recognisably modern form is A E van Vogt's The Book of Ptath; it was first serialised in 1943, though no collected edition was published until 1947. I'm confident that someone who's more versed in early 20th Century speculative fiction than I am could push it back even earlier, though. Maybe one of this blog's better-read followers will chime in!

(Non-experts are welcome to offer examples as well, of course, but please double-check the publication date and make sure the work you have in mind was actually published prior to 1974.)

4K notes

Text

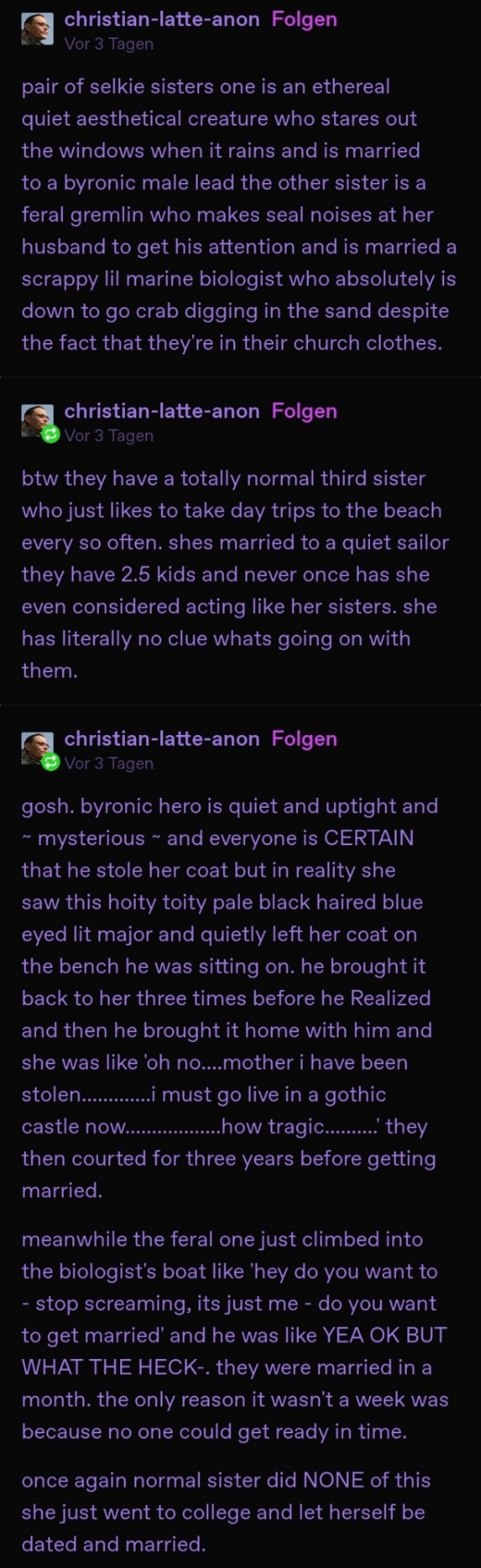

OP made the post unrebloggable but said it's fine to screenshot and I'm in love with this

48K notes

Text

hot artists don't gatekeep

I've been resource gathering for YEARS so now I am going to share my dragon's hoard

Floorplanner. Design and furnish a house to use as a consistent background for your comic or anything else! Free, you need an account, easy to use, and you can save multiple houses.

Comparing Heights. Input the heights of characters to see what the difference is between them. Great for keeping consistency. Free.

Magma. Draw online with friends in real time. Great for practice or hanging out. Free, paid plan available, account preferred.

Smithsonian Open Access. Loads of free images. Free.

SketchDaily. Lots of pose references, massive library, is set on a timer so you can practice quick figure drawing. Free.

SculptGL. A sculpting tool which I am yet to master, but you should be able to make whatever 3d object you like with it. Free.

Pexels. Free stock images. And the search engine is actually pretty good at pulling up what you want.

Figurosity. Great pose references, diverse body types, lots of "how to draw" videos directly on the site, the models are 3d and you can rotate the angle, but you can't make custom poses or edit body proportions. Free, account option, paid plans available.

Line of Action. More drawing references, this one also has a focus on expressions, hands/feet, animals, landscapes. Free.

Animal Photo. You pose a 3d skull model and select an animal species, and they give you a bunch of photo references for that animal at that angle. Super handy. Free.

Height Weight Chart. You ever see an OC listed as having a certain weight but then they look Wildly different than the number suggests? Well here's a site to avoid that! It shows real people at different weights and heights to give you a better idea of what these abstract numbers all look like. Free to use.

185K notes

Text

site where you can type in the definition of a word and get the word

site for when you can only remember part of a word/its definition

site that gives you words that rhyme with a word

site that gives you synonyms and antonyms

1M notes

Text

Natalie Portman being confused by the fact that you have to say “hi” to someone before starting a conversation in France got me like ?????

110K notes

Text

Iroh: People work their entire lives to find spirits and access the spirit world. It has taken years of dedication and study for me to reach the small part of it that I’m able to find.

Sokka, who accidentally steered a canoe to where the Avatar had been frozen for a century and then fell in love with the moon and also spent 24 hours in the spirit world that one time and was the only one who the hallucinations flat-out spoke to in the magical swamp: what, like it’s hard?

110K notes

Text

Humans are not perfectly vigilant

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in BOSTON with Randall "XKCD" Munroe (Apr 11), then PROVIDENCE (Apr 12), and beyond!

Here's a fun AI story: a security researcher noticed that large companies' AI-authored source-code repeatedly referenced a nonexistent library (an AI "hallucination"), so he created a (defanged) malicious library with that name and uploaded it, and thousands of developers automatically downloaded and incorporated it as they compiled the code:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

These "hallucinations" are a stubbornly persistent feature of large language models, because these models only give the illusion of understanding; in reality, they are just sophisticated forms of autocomplete, drawing on huge databases to make shrewd (but reliably fallible) guesses about which word comes next:

https://dl.acm.org/doi/10.1145/3442188.3445922

Guessing the next word without understanding the meaning of the resulting sentence makes unsupervised LLMs unsuitable for high-stakes tasks. The whole AI bubble is based on convincing investors that one or more of the following is true:

There are low-stakes, high-value tasks that will recoup the massive costs of AI training and operation;

There are high-stakes, high-value tasks that can be made cheaper by adding an AI to a human operator;

Adding more training data to an AI will make it stop hallucinating, so that it can take over high-stakes, high-value tasks without a "human in the loop."

These are dubious propositions. There's a universe of low-stakes, low-value tasks – political disinformation, spam, fraud, academic cheating, nonconsensual porn, dialog for video-game NPCs – but none of them seem likely to generate enough revenue for AI companies to justify the billions spent on models, nor the trillions in valuation attributed to AI companies:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

The proposition that increasing training data will decrease hallucinations is hotly contested among AI practitioners. I confess that I don't know enough about AI to evaluate opposing sides' claims, but even if you stipulate that adding lots of human-generated training data will make the software a better guesser, there's a serious problem. All those low-value, low-stakes applications are flooding the internet with botshit. After all, the one thing AI is unarguably very good at is producing bullshit at scale. As the web becomes an anaerobic lagoon for botshit, the quantum of human-generated "content" in any internet core sample is dwindling to homeopathic levels:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification

This means that adding another order of magnitude more training data to AI won't just add massive computational expense – the data will be many orders of magnitude more expensive to acquire, even without factoring in the additional liability arising from new legal theories about scraping:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

That leaves us with "humans in the loop" – the idea that an AI's business model is selling software to businesses that will pair it with human operators who will closely scrutinize the code's guesses. There's a version of this that sounds plausible – the one in which the human operator is in charge, and the AI acts as an eternally vigilant "sanity check" on the human's activities.

For example, my car has a system that notices when I activate my blinker while there's another car in my blind-spot. I'm pretty consistent about checking my blind spot, but I'm also a fallible human and there've been a couple times where the alert saved me from making a potentially dangerous maneuver. As disciplined as I am, I'm also sometimes forgetful about turning off lights, or waking up in time for work, or remembering someone's phone number (or birthday). I like having an automated system that does the robotically perfect trick of never forgetting something important.

There's a name for this in automation circles: a "centaur." I'm the human head, and I've fused with a powerful robot body that supports me, doing things that humans are innately bad at.

That's the good kind of automation, and we all benefit from it. But it only takes a small twist to turn this good automation into a nightmare. I'm speaking here of the reverse-centaur: automation in which the computer is in charge, bossing a human around so it can get its job done. Think of Amazon warehouse workers, who wear haptic bracelets and are continuously observed by AI cameras as autonomous shelves shuttle in front of them and demand that they pick and pack items at a pace that destroys their bodies and drives them mad:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

Automation centaurs are great: they relieve humans of drudgework and let them focus on the creative and satisfying parts of their jobs. That's how AI-assisted coding is pitched: rather than looking up tricky syntax and other tedious programming tasks, an AI "co-pilot" is billed as freeing up its human "pilot" to focus on the creative puzzle-solving that makes coding so satisfying.

But a hallucinating AI is a terrible co-pilot. It's just good enough to get the job done much of the time, but it also sneakily inserts booby-traps that are statistically guaranteed to look as plausible as the good code (that's what a next-word-guessing program does: guesses the statistically most likely word).

This turns AI-"assisted" coders into reverse centaurs. The AI can churn out code at superhuman speed, and you, the human in the loop, must maintain perfect vigilance and attention as you review that code, spotting the cleverly disguised hooks for malicious code that the AI can't be prevented from inserting into its code. As "Lena" writes, "code review [is] difficult relative to writing new code":

https://twitter.com/qntm/status/1773779967521780169

Why is that? "Passively reading someone else's code just doesn't engage my brain in the same way. It's harder to do properly":

https://twitter.com/qntm/status/1773780355708764665

There's a name for this phenomenon: "automation blindness." Humans are just not equipped for eternal vigilance. We get good at spotting patterns that occur frequently – so good that we miss the anomalies. That's why TSA agents are so good at spotting harmless shampoo bottles on X-rays, even as they miss nearly every gun and bomb that a red team smuggles through their checkpoints:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

"Lena"'s thread points out that this is as true for AI-assisted driving as it is for AI-assisted coding: "self-driving cars replace the experience of driving with the experience of being a driving instructor":

https://twitter.com/qntm/status/1773841546753831283

In other words, they turn you into a reverse-centaur. Whereas my blind-spot double-checking robot allows me to make maneuvers at human speed and points out the things I've missed, a "supervised" self-driving car makes maneuvers at a computer's frantic pace, and demands that its human supervisor tirelessly and perfectly assess each of those maneuvers. No wonder Cruise's murderous "self-driving" taxis replaced each low-waged driver with 1.5 high-waged technical robot supervisors:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

AI radiology programs are said to be able to spot cancerous masses that human radiologists miss. A centaur-based AI-assisted radiology program would keep the same number of radiologists in the field, but they would get less done: every time they assessed an X-ray, the AI would give them a second opinion. If the human and the AI disagreed, the human would go back and re-assess the X-ray. We'd get better radiology, at a higher price (the price of the AI software, plus the additional hours the radiologist would work).

But back to making the AI bubble pay off: for AI to pay off, the human in the loop has to reduce the costs of the business buying an AI. No one who invests in an AI company believes that their returns will come from business customers agreeing to increase their costs. The AI can't do your job, but the AI salesman can convince your boss to fire you and replace you with an AI anyway – that pitch is the most successful form of AI disinformation in the world.

An AI that "hallucinates" bad advice to fliers can't replace human customer service reps, but airlines are firing reps and replacing them with chatbots:

https://www.bbc.com/travel/article/20240222-air-canada-chatbot-misinformation-what-travellers-should-know

An AI that "hallucinates" bad legal advice to New Yorkers can't replace city services, but Mayor Adams still tells New Yorkers to get their legal advice from his chatbots:

https://arstechnica.com/ai/2024/03/nycs-government-chatbot-is-lying-about-city-laws-and-regulations/

The only reason bosses want to buy robots is to fire humans and lower their costs. That's why "AI art" is such a pisser. There are plenty of harmless ways to automate art production with software – everything from a "healing brush" in Photoshop to deepfake tools that let a video-editor alter the eye-lines of all the extras in a scene to shift the focus. A graphic novelist who models a room in The Sims and then moves the camera around to get traceable geometry for different angles is a centaur – they are genuinely offloading some finicky drudgework onto a robot that is perfectly attentive and vigilant.

But the pitch from "AI art" companies is "fire your graphic artists and replace them with botshit." They're pitching a world where the robots get to do all the creative stuff (badly) and humans have to work at robotic pace, with robotic vigilance, in order to catch the mistakes that the robots make at superhuman speed.

Reverse centaurism is brutal. That's not news: Charlie Chaplin documented the problems of reverse centaurs nearly 100 years ago:

https://en.wikipedia.org/wiki/Modern_Times_(film)

As ever, the problem with a gadget isn't what it does: it's who it does it for and who it does it to. There are plenty of benefits from being a centaur – lots of ways that automation can help workers. But the only path to AI profitability lies in reverse centaurs, automation that turns the human in the loop into the crumple-zone for a robot:

https://estsjournal.org/index.php/ests/article/view/260

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

Jorge Royan (modified)

https://commons.wikimedia.org/wiki/File:Munich_-_Two_boys_playing_in_a_park_-_7328.jpg

CC BY-SA 3.0

https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Noah Wulf (modified)

https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0

https://creativecommons.org/licenses/by-sa/4.0/deed.en

375 notes

Text

I just discovered foodtimeline.org, which is exactly what it sounds like: centuries' worth of information about FOOD. If you are writing something historical and you want a starting point for figuring out what people should be eating, this might be a good place?

#useful#writing#writing resources#writing advice#food#food timeline#history#historical fiction#accuracy

197K notes

Text

Horror isekai where Perceiving the Weird Eldritch Thing gets you catapulted into a nightmare labyrinth of puzzle-solving.

I.e. Those Who Perceive The Hunt of the Goblin King Must Partake In The Labyrinth and Can Only Be Freed If They Complete It In One Day and One Night. By Fae Law. For Reasons.

But the definition of “perception” clearly needs to be updated because some normal guy simply films the Hunt of the Goblin King Behind Arby’s, and puts it on Facebook -

No, not instagram or TikTok, it’s important that it be Facebook -

Because the rules are pretty clear, “the rules are the rules” as is carved ominously in elvish runes above the grim gate, and the Contract is Sealed. And so therefore the guy and 25 of their most random real-life acquaintances must run the gauntlet together. It’s Some Guy, their immediate neighbors, their first partner’s mom, their friends from hobby Facebook groups (oh this poor guy and their hobbies; the elderly birdwatchers from Facebook and the young up-and-coming drag king community), their random teen kid niece, college friends, a dog who also watched the video, a couple consisting of a woman who is the guy’s Facebook friend and showed her husband the video, and the husband doesn’t even know Some Guy, so he’s in the labyrinth and absolutely furious about being forced to be involved, and they proceed to break up over the course of the puzzle.

It’s important that the narrative keeps trying to be a sexy dark horror isekai! But within this is the comedic reality of Catherine, 52, the guy’s horse-riding instructor, being passionately involved in escape-room-style puzzle solving and grappling with minor goblins. They are in fact speedrunning the gauntlet.

The Goblin King finally has to say: all right, actually, I only really set all this up to fuck with one (1) guy at a time, thanks for your willingness to participate, but I think all 25 of you can consider the gauntlet fully run.

And the group would be quite hurt by that. The rules are the rules. We have a contract, actually. Let Catherine cook.

4K notes

Text

maidens if you are going to flee dramatically from my castle in the middle of the night once i reveal my true nature to you please leave your candelabra on the little ledge by the portcullis we are running out of them

182K notes

Text

so the house of representatives just passed a bill that will now move to the senate to BAN tik tok completely in the united states and they are expected to argue that “national security risks” outweigh the freedom of speech and first amendment rights. biden has already said that if it gets to him, he will sign it. whether or not you use the app…….this is something to be worried about

55K notes

Text

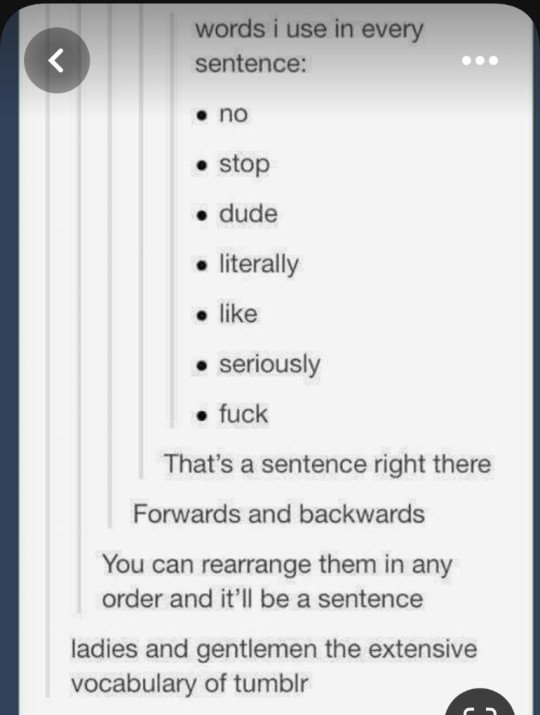

It’s bonkers that you can rearrange this in any order and it’s still a sentence. To whoever keeps making up this insane language:

seriously

like

fuck

no

dude

literally

stop

35K notes

Text

44K notes

Text

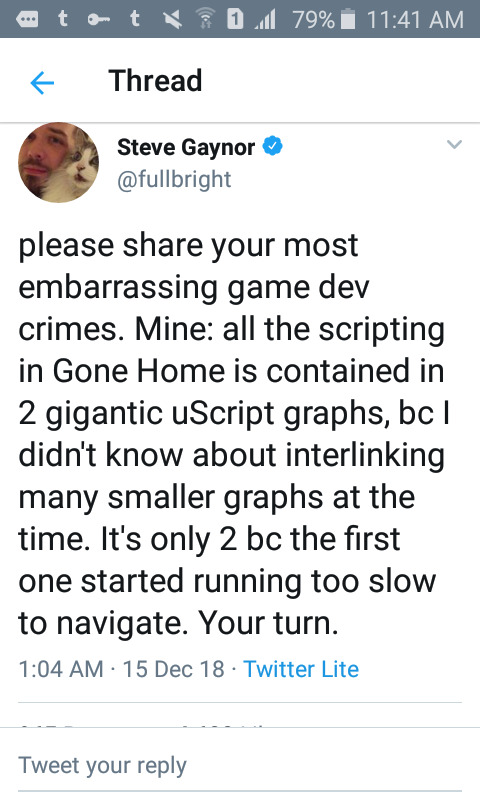

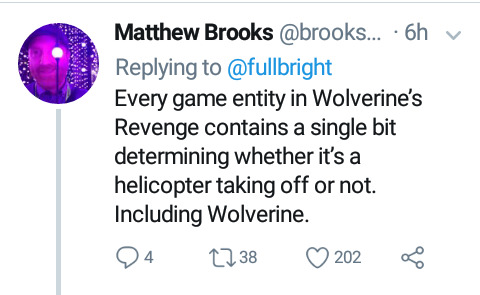

please play Gone Home

92K notes