#ai face recognition online


Artificial Intelligence-Based Face Recognition

Modern technology keeps producing innovations that make life both easier and more pleasant. Face recognition has consistently proven to be one of the least intrusive and fastest forms of biometric verification. To verify a person's identity, the software compares a live image to a previously stored facial print using deep learning techniques. The technology is built on image processing and machine learning. Face recognition has attracted significant interest from researchers because of its use in many security applications, such as airports, criminal detection, face tracking, and forensics. Unlike palm prints, iris scans, or fingerprints, face biometrics can be non-intrusive.

Face images can be captured without the user's knowledge and then used for security-related applications such as criminal detection, face tracking, airport security, and forensic surveillance systems. Face recognition involves extracting facial images from a video or surveillance camera and comparing them to a stored database. The system is first trained on known photos, which are labeled with known classes and stored in a database. When a test image is sent to the system, it is classified and compared to the stored database.

Face recognition

Face recognition with Artificial Intelligence (AI) is a computer vision technique that identifies a person in an image or video. It employs a combination of deep learning, computer vision algorithms, and image processing. These technologies allow a system to detect, recognize, and validate faces in digital photos or videos.

The technology has grown in popularity across a wide range of applications, including smartphone unlocking, door unlocking, passport verification, security systems, medical applications, and so on. Some models can recognize emotions through facial expressions.

Difference between Face recognition & Face detection

Face detection is the process of finding a face within an image or video feed, whereas face recognition is the act of identifying the person that face belongs to. Face detection is the first phase of the face recognition process and is considerably simpler; on its own, it can be used for applications such as image labeling or adjusting the angle of a shot based on the detected face. Face recognition distinguishes people based on their facial characteristics. It uses more advanced processing, such as feature point extraction and comparison algorithms, to determine a person's identity, and can be employed in applications such as automatic attendance systems or security screenings.

Image Processing and Machine learning

Computer vision is the field concerned with processing images using computers. It focuses on a high-level understanding of digital images or videos. The goal is to automate tasks that the human visual system can perform, so a computer should be able to distinguish items like a human face, a lamppost, or even a statue.

OpenCV is an open-source library created to handle computer vision problems. It is written in C++ and is widely used through its Python bindings. Intel started the project in 1999, and Willow Garage later supported it.

Machine learning

Every machine learning algorithm accepts a dataset as input and learns from it; that is, the algorithm learns from the input and output data. It recognizes patterns in the input and produces a model that generates the desired output. For example, to determine whose face is present in a given photograph, various factors might be considered as a pattern:

The facial height and width.

Height and width measurements may be unreliable since the image could be rescaled to a smaller face or grid. However, even after rescaling, the ratios stay unchanged: the ratio of the face's height to its width will not alter.

Color of the face.

Width of other elements of the face, such as the nose.
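The point about ratios surviving a rescale can be checked with a few lines of Python. This is only a minimal sketch: the function names and the sample measurements are made up for illustration, and it assumes the image is rescaled uniformly (the same factor for height and width).

```python
def aspect_ratio(height, width):
    """Return the height-to-width ratio of a face."""
    return height / width


def rescale(height, width, factor):
    """Uniformly rescale both dimensions by the same factor."""
    return height * factor, width * factor


# Hypothetical face measurements (illustrative values only).
original = (24.0, 16.0)
# The same face in an image scaled down to half size.
smaller = rescale(*original, 0.5)

ratio_original = aspect_ratio(*original)
ratio_smaller = aspect_ratio(*smaller)

print("original:", original, "ratio:", ratio_original)
print("rescaled:", smaller, "ratio:", ratio_smaller)
```

The absolute height and width change, but both ratios come out the same, which is why ratios are a more reliable feature than raw measurements.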

There is a pattern: different faces have different dimensions, and similar faces share similar dimensions. The difficulty is that machine learning algorithms can only work with numbers, so a face must first be represented numerically. This numerical representation of a "face" (or an element from the training set) is known as a feature vector. A feature vector is made up of various numbers arranged in a specified order.

As a simple example, we can map a "face" into a feature vector that can contain multiple features such as:

Height of the face (cm)

Width of the face (cm)

Average color of the face (R, G, B)

Lip width (cm)

Height of the nose (cm)

Essentially, given a picture, we may turn it into a feature vector as follows:

Height of the face (cm) | Width of the face (cm) | Average color of the face (R, G, B) | Lip width (cm) | Height of the nose (cm)

There could be numerous other features obtained from the photograph, such as hair color, facial hair, spectacles, and so on.
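As a rough illustration of the mapping described above, the snippet below packs the five example features into an ordered list of numbers. The class name, field names, and sample values are all assumptions made up for this sketch; a real system would derive its features automatically rather than from hand-entered measurements.

```python
from dataclasses import dataclass


@dataclass
class FaceMeasurements:
    """Hand-picked measurements of one face (hypothetical example)."""
    face_height_cm: float
    face_width_cm: float
    avg_color_rgb: tuple  # (R, G, B), each in the range 0-255
    lip_width_cm: float
    nose_height_cm: float

    def to_feature_vector(self):
        """Flatten the measurements into numbers in a fixed order."""
        r, g, b = self.avg_color_rgb
        return [
            self.face_height_cm,
            self.face_width_cm,
            float(r),
            float(g),
            float(b),
            self.lip_width_cm,
            self.nose_height_cm,
        ]


# Illustrative values only, not real data.
face = FaceMeasurements(23.1, 15.8, (255, 224, 189), 5.2, 4.4)
vector = face.to_feature_vector()
print(vector)
```

The order of the numbers matters: every face has to be flattened the same way so that position 0 always means face height, position 1 always means face width, and so on.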

Face recognition technology relies on machine learning for two primary functions, listed below.

1. Deriving the feature vector: It is impractical to enumerate all of the features manually because there are so many. A machine learning system can derive many of these features automatically. For example, a complicated feature could be the ratio of nose height to forehead width.

2. Matching algorithms: Once the feature vectors have been produced, a Machine Learning algorithm must match a new image to the collection of feature vectors included in the corpus.
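One simple way to picture the matching step is a nearest-neighbor search: compare the new feature vector with every stored vector and pick the closest one. The sketch below uses Euclidean distance, which is an assumption for illustration (the text does not say which matching algorithm is used), and the names and vectors in the corpus are invented.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(query, corpus):
    """Return (name, distance) for the stored vector closest to the query."""
    return min(
        ((name, euclidean_distance(query, vec)) for name, vec in corpus.items()),
        key=lambda pair: pair[1],
    )


# Hypothetical corpus of stored feature vectors (illustrative values).
corpus = {
    "alice": [23.0, 16.0, 5.0, 4.5],
    "bob": [26.0, 18.0, 6.0, 5.5],
}

query = [23.0, 16.0, 5.0, 4.0]
name, distance = best_match(query, corpus)
print(name, distance)
```

A real system would usually also apply a distance threshold, so that a face that is not in the corpus at all is reported as unknown instead of being assigned to the nearest stored person.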

Face Recognition Operations

Facial recognition technology differs from system to system, and different software uses different methods to achieve face recognition. In general, the stepwise procedure is as follows:

Face Detection: To begin, the camera detects and locates a face. The face is best detected when the subject looks squarely at the camera. With technological improvements, a face can now also be detected when the pose deviates slightly from facing the camera.

Face Analysis: A snapshot of the face is taken and analyzed. Most facial recognition uses 2D photos rather than 3D since they are easier to compare to a database. Facial recognition software measures features such as the distance between your eyes and the curve of your cheekbones.

Image to Data Conversion: The facial features are then converted into a mathematical representation expressed as numbers. This numerical code is referred to as a face print. Just as every person has a unique fingerprint, every person has a distinct face print.

Match Finding: Next, the code is compared to a database of other face prints. This database contains photographs with associated identities. The system then looks for a match for your specific features in the database. If it finds one, it returns the match along with associated information, such as a name and address, depending on what is stored for that individual.
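The analysis, conversion, and matching steps can be sketched end to end in a few lines. This is a toy illustration under several assumptions: detection is taken as already done, so the input is a dictionary of landmark coordinates that a hypothetical detector would produce; the face print is just two distance ratios; and the database records, names, and threshold are invented.

```python
import math


def analyze(landmarks):
    """Face analysis: measure distances between landmarks (in pixels)."""
    left, right = landmarks["left_eye"], landmarks["right_eye"]
    eye_gap = math.dist(left, right)
    eye_center = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    eye_to_nose = math.dist(eye_center, landmarks["nose_tip"])
    eye_to_chin = math.dist(eye_center, landmarks["chin"])
    return eye_gap, eye_to_nose, eye_to_chin


def to_face_print(measurements):
    """Image-to-data conversion: turn measurements into scale-free numbers."""
    eye_gap, eye_to_nose, eye_to_chin = measurements
    return (eye_to_nose / eye_gap, eye_to_chin / eye_gap)


def find_match(face_print, database, threshold=0.05):
    """Match finding: return the closest record within the threshold, else None."""
    best, best_distance = None, threshold
    for record in database:
        distance = math.dist(face_print, record["face_print"])
        if distance <= best_distance:
            best, best_distance = record, distance
    return best


# Hypothetical database of stored face prints with linked information.
database = [
    {"name": "Alice Example", "face_print": (0.66, 1.51)},
    {"name": "Bob Example", "face_print": (0.80, 1.30)},
]

# Landmarks as a detector might report them (illustrative coordinates).
landmarks = {
    "left_eye": (100, 100),
    "right_eye": (160, 100),
    "nose_tip": (130, 140),
    "chin": (130, 190),
}

face_print = to_face_print(analyze(landmarks))
match = find_match(face_print, database)
print(face_print, match["name"] if match else "no match")
```

Dividing by the eye gap makes the face print independent of how large the face appears in the photo, and the threshold lets the system answer "no match" for a face that is not in the database.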

Conclusion

In conclusion, the evolution of facial recognition technology powered by artificial intelligence has paved the way for groundbreaking innovations in various industries. From enhancing security measures to enabling seamless user experiences, AI-based face recognition has proven to be a versatile and invaluable tool.

#ai face identification#ai face match#ai face recognition#ai face recognition online#ai and facial recognition


also idk how people just 'fell off' on wearing masks like anytime i have to be without a mask im like silently panicking. its also just uncomfortable like no you dont get to see my face. go away foreve.

#also with the rise in like dangerous ai shit and facial recognition and people recording strangers in public and posting it online#its become a comfort of sorts and it makes me feel like i have more control over just. my face. and identity. and who gets access to that#but thats secondary to the primary reason of course of not getting a disease that at best is like the flu and at worst can and possibly wil#permanently disable or kill me . lolzors


Nat stared at her computer screen, cursor blinking in tandem with her heartbeat, so loud in her ears she may as well have been at a metal concert. Months she had worked on her project, skipping meals, staying awake until sunrise, and then a few hours more after that. Now she was a few keystrokes away from finally testing it. Cables ran from her ramshackle computer, meeting and mingling with others attached to a bank of hard drives, all spinning with uploads and downloads. On the inflatable mattress behind her, the fruits of her labour lay eerily still. With a deep, shaky exhale, she typed in the last few lines of code into her launcher, and pressed the enter key.

The whirring of the fans pointed towards the makeshift server seemed to drown out all other noise as the program executed. Then a series of smaller fans, a sharper sound than the box fan nearby, as they all sprang to life at once. Nat pressed her palms together as her creation came online. As servos activated and limbs went from rigid to an almost relaxed state, she couldn't help but whisper “it lives” to herself. The machine in front of her made a sudden jolt, and a light on the side of its head illuminated a pale white. Online and active. She twisted from her position on the floor to check her monitors and saw that all systems were operating nominally. Neural pathways were registering stimuli, artificial synapses were firing as thoughts began to form. It worked!

Nat placed her hand on the machine's upper back and guided it to a sitting position. She had been sure to feed the computer a good handful of data about herself, and the basics of knowledge in order to train the AI and make sure it didn't immediately shut down from overstimulation.

God knows I certainly would have, she thought to herself with an eyeroll.

“Are you awake?” her first question was more of a way to test the robot's speech recognition, its self evaluation, word association, and also to ensure that the vocal modulation was up to satisfactory conditions.

“I am awake,” it responded. The robot drew its arms closer to its torso and seemed to look around the room briefly, before turning its head to face Nat.

“Do you know who I am?” she asked, once again checking its recognition and perception.

“Natalie, you created and programmed me, I believe,” it tilted its head almost as though trying to determine if this was the correct answer. The data she had fed it before it woke up had included details about herself, photos and videos of her, vocal samples, and the AI was interpreting this information and comparing it to the details in front of it. The face, the voice, it all matched. But the robot seemed almost unsure, as if contemplating whether the information it had was incomplete. Perhaps it had been told that this person, whose face and voice it knew, was the one who built it, but that did not necessarily mean the information it had was true. Nonetheless, Nat smiled and nodded, and the robot's shoulders almost imperceptibly relaxed.

“That's right. I did create you, and I programmed you to be something new, but familiar. You have a lot of potential, and I'm glad to meet you.” The robot tilted its head at the framing of the statement.

“What potential do I have?” it asked. A curious mind, or simply a rudimentary AI probing for information to expand its knowledge set?

“You have multiple functions as yet unseen. All of you, from the smallest details of your body, to your quizzical mind, all are new ways to expand the experience of everyone.” When the robot did not respond right away, and simply looked down at its form, still sitting upright on the air mattress, she continued her explanation. “Your frame is designed with limb augments and prosthesis in mind,” she gestured to the carbon fiber metal that made up its hands and arms. “Your sensor suite is expected to replace the damaged vision or hearing of someone who has lost theirs. Your AI can expand and contain the breadth of knowledge and actually learn, instead of just regurgitate answers it had received beforehand.”

“What of this?” the robot touched the gray fleshy material covering its lower abdomen, stretching down to its thighs.

“That is actually two things. The first is a synthetic skin that can be created to replace basic skin grafts and other replacement techniques we currently have. The second is that the skin is used as a mount for artificial nerves that can replace damaged ones.”

The robot ran its hands over its body as she explained it. Fine black digits ran over smooth arms and shoulders, stroking across the lighter fake flesh that extended over parts of itself. It then raised its head and looked at her again, cocking its head to the side. In the black glass dome of its face, Nat could only see her own reflection, distorted like staring into dark water. She had always taken issue with the way her nose sat, or criticized that one eyebrow was higher than the other. The self deprecation had always come easy, but looking back at herself from this angle, it was like seeing herself anew, as she really was. When she had begun construction on the robot's exoskeleton so long ago, she had wondered if this would feel like interacting with her child for the first time, but now, she realized, it was more like talking to herself. She felt love for the machine in a way that was hard to quantify, and thus, she knew, she loved herself, in some weird, roundabout way.

“My design, my purpose, is for medical research, is that correct?” the robot asked. She saw, in her reflection, the corners of her mouth turn up in a smile that she liked seeing.

“Your design, yes. You were made with the intention of looking into a variety of medical advancements all at once, to see how they might interact with each other, as well as individually. But your purpose is for you to decide, once you find it. That might take some time, but I think all living things will eventually find it.”

“Am I alive?” the robot's question wasn't exactly unexpected, but it was quick to ask so bluntly.

“Scientifically, maybe not. Ethically, I'm sure some people would take issue with me trying to say otherwise, but realistically? In my mind? You move, you think, you have the potential to imagine, and dream, and exist here. I don't see why you couldn't consider that being alive.”

The robot nodded, its hidden visual sensors scanning Nat up and down, taking in the sight of what it could consider life, based solely on firsthand experience and not a dataset provided before it had even been conscious. Her dyed blonde hair had faded quite a bit since its last treatment, with longer dark roots peeking out. Her eye mascara was smudged, but the dark rings around her eyes framed her gray-blue irises almost ethereally. Her loose tank top draped across her body and hid her shape, and her shorts were stained with pen ink. In spite of her unkempt appearance, or perhaps because of it, the robot perceived that this was life.

They both shook off the initial studiousness of their first interaction, and Nat turned to her computer, minimizing the command prompt window and revealing a program with a split screen. On one side, an image that appeared to resemble an approximation of a human brain structure. On the other, lines of data were being written and recorded, a text log of the robot's experiences made legible.

“Before we get too existential,” Nat said almost wistfully, “we need to make sure your various systems are functioning the way they are intended to.” She turned back to the robot and handed it a ball. It appeared like a gel filled stress ball, and the robot took it, turning it over in its hand. “I just need you to give that a squeeze. Use your instincts and apply enough pressure to squish it, but not break it.”

The robot looked at the ball for a moment and squished it twice in its hand. The portions of the ball not covered by the robot's palm and fingers would expand briefly with the pressure, but would relax as soon as the pressure was let off. The robot then closed its fist around the ball, with the gel-filled portions blowing up to larger size, and it held that position. Nat jotted a note down on a piece of paper with her pencil and then nodded.

“Okay, you can go ahead and release it.” The robot relaxed its fingers and the ball returned to its normal shape. Nat took it and then turned to fully face the robot again. “Now I'm going to test the artificial nerves in the skin.” She pressed the eraser of her pencil against the gray flesh on the robot's hip and turned to look at her monitor. A portion of the brain image lit up. “Do you feel that?”

“Yes,” the robot answered curtly. Nat scribbled something else down on her note paper.

“Okay, now look over there,” she gestured with her pencil. She had to be sure that the sensation registration was due to the actual physical stimuli, and not the recognition that touching the spot should produce a registration. The robot turned its head to look in the direction she had indicated, and then she pushed down on the middle of its thigh. Again, she turned her head to look at the screen, and saw the same area of the brain image glowing. “Do you feel that?”

“Yes,” the robot answered again.

“Don't look, but show me where,” she said. Making sure that it could identify the location of the sensation was also important, so its spatial awareness was also under observation. The robot slid its hand over the skin and touched a single finger directly next to her pencil. A simple example of manual dexterity, which would certainly be tested later, but good to know. “Okay, now one more,” she said. Flipping her pencil around, she pressed the sharpened graphite into a spot near its groin, where the thigh and hip met. Even without seeing the pencil, the robot reacted stronger than she had anticipated. It jolted at the sudden sharp stimuli, and the hand that was still resting on its thigh clenched into a fist. She quickly withdrew the pencil from the skin.

“I'm so sorry!” her voice seemed almost panicked as she checked the monitor. Not only had it registered the sensation, but the synaptic response on the monitor showed a lingering feeling as it slowly dissipated. “How do you feel?” she turned her head back to look at the robot, which had returned its gaze to her.

“Warm,” it answered. She looked down to see that her hand was placed over the spot her pencil had poked, stabbed really, and that feeling was helping to calm the feeling in the robot. She exhaled, and pushed her bangs back. At least that much worked.

“I'm going to log your reactions so far, just a second.” Nat spun around on the floor, selecting a portion of the text that still scrolled across her screen and saving it in a separate folder. The robot tilted its head and examined the tattoo on her shoulder while she worked. A diamond butterfly unfurling its wings and escaping a cocoon made of coal on the back of her right arm. A little on the nose, to be sure, but her friends had assured her the imagery was lovely. Something beautiful and real coming out of a period of harsh change under tremendous pressure. It defined Nat's life, growing up in a family that was always standoffish, not fitting in for so long and not knowing why. Diagnosis after diagnosis making matters worse, while she struggled to find herself, becoming sloth, and finally discovering her own truth and breaking free of the old things. She still carried parts of her from before, but she was unrecognizable and planned to live her life the way that was best for her. None of this was necessarily spoken by the art itself, but the idea was still conveyed well enough.

“What is all this,” the robot said in a softer tone than it had been using, running its hand over the inked skin. None of the photos she had trained its recognition algorithm on had included the tattoo, apparently, and the robot only had a vague grasp of what it was. Instinctively, Nat's hand went up and she placed it over the robot's.

“It's a story, etched into my skin forever. It's art, something that makes me happy.”

“Can you do that? Make changes to yourself?” Nat laughed at the sudden question. The ink was the least of the changes she had ever made to herself.

“Yes, we all have these bodies, but the beautiful thing is that they're ours. We can generally do whatever we want with them.”

“Would I be able to do that?” the robot asked. It was a valid question, and had she been talking to a person, the answer would have been obvious. But the robot had been designed in a way that was different than a human. It had a similar shape, similar features, but it was made to do something entirely different, and because of that, the question was a bit more complex.

“Would that make you happy?” she decided to answer. The robot traced the wings of the butterfly gently with one finger.

“I don't know. Maybe,” it replied. Nat nodded.

“That's part of what I meant earlier, when I said you can find your purpose. If you want to make modifications, when you are ready, then perhaps you can.” There was a longer pause between the two as Nat saved the final file. She sat facing the screen for a moment, chewing the end of her pencil in thought. Once she had made up her mind, Nat pulled her mouse cursor up to a toggle that showed OFF and clicked it.

Honestly, fuck it, she thought, once again hearing her heart race in her ears, louder than before. If every other test is going off without a hitch, might as well try the last bit. Turning around again, she faced the robot once more. The two of them sat with their legs crossed in front of them, the robot obviously at some point copying Nat's posture, though whether the choice was subconscious or not wasn't immediately clear.

“There's one more feature you were designed with that I want to check out,” she said. The robot tilted its head, studying her slightly flushed face. “For a variety of reasons, sometimes specific body parts are needed to be replaced, or built from scratch, and our current technological advancement is not where it could be. So you were also designed with this feature in mind, to see if it was possible to make one that accurately mimics the natural human body response.” Nat swore she heard a drum solo as she placed her hands on the robot's knees. From its position on the air mattress, it had a slightly higher position than her, which was more than reasonable.

“What do you need to do to test this response?” the robot asked innocently. Nat kind of wished there was a little bit more deviousness to the inquiry, but that was fine. Maybe it would learn that behaviour later.

“Just relax,” she said, pressing one hand on its lower abdomen and pushing it back slightly, which it obliged and propped itself back on its hands. “I need to see how it all works.”

Nat ran her hands from the robot's knees down its inner thighs, which seemed to tense and then relax with her touch. Though it hadn't questioned it before, the robot was suddenly aware of an extra appendage on its body, smaller and slender. But as her fingertips traced small circles across its inner thighs, the additional part began, quickly, to swell. The robot initially believed it to be some sort of error, but the slight twinkle in Nat's eyes belayed this concern, and it watched as she began to press her lips to the inner, upper thigh portions of its skin. Each kiss sent a shock of reaction up the leg, to the groin where the part had become nearly fully erect, and then up to the robot's brain. Without knowing why, the robot clenched one of its hands against the mattress.

“It does react realistically,” Nat said. The robot seemed to shiver as she stopped. “That's good news, but there's a few other things I want to make sure work.”

“Like wh-!” the robot was cut off as Nat smirked and engulfed its cock in her mouth. The skin had the same feeling as human, and the heat it produced was comparable to any person, but the rush to Nat's head was far more exhilarating than any other time in her life. Her tongue ran over the tip and the robot's leg shook. She sucked it further into her mouth and she swore she heard the robot's internal fans kick up to compensate for the increased heat. She tried to steady herself, breathing through her nose as she bobbed her head up and down slowly on the robot's cock, feeling like it was melting on her tongue. She chanced a glance up at its black domed face, which was tilting from side to side, thrashing around in ecstasy. Certainly the plan was working well. But she needed to check how well.

Grabbing the robot's hips, scooting closer, she began moving her head faster, running her tongue across the tip of its cock every time she got near the top. The robot's legs were now quaking quite a bit, and it suddenly leaned forward, placing its hands on the back of her head and bucking its hips, the loud whirring of its fans matching her heartbeat. It held her in that position for just a moment before releasing her. Nat pulled her head up off its member, relieved to be able to take a few full gulps of air.

“What was that?” the robot asked, its voice peaking.

“That was a test to see if you can orgasm,” Nat panted, “and while normally you might produce a sort of liquid material, I wasn't really able to... get anything like that for you.” Nat's face was bright red as she said the last few words. “There's a small reservoir system in your lower abdomen and a pump that would probably recreate it, but I didn't have anything to use this time around so it's empty. That said, it's good to know everything is working as planned."

The robot was still shaking minutely, and Nat watched it, pushing her bangs back and rubbing her thighs together. She had a desperate need of release, herself. It wasn't exactly part of the plan, but she felt needy, and even though she had never quite gone that far with any person before... The thought crept back into her mind, seeing her own reflection in its faceplate, loving herself. And she wanted to feel loved. Glancing down, she noticed that the robot's cock was, in fact, still fully erect. She had felt it unnecessary to reproduce a refractory period, and thus the robot simply could wait it out or, had it been aware, simply shut off the system. But she didn't want it to be aware. Not yet.

“Hey, come here,” she said, getting up and holding the robot close. Even the carbon fiber felt warm and lifelike to the touch. Not as soft as skin, but comforting. The robot reciprocated, wrapping its arms around her waist and pulled her close. She held that position for a second, then pulled back from it. “Now to check endurance, is that okay?” Nat almost feared a response that was unsure she would even get.

“Of course, if that's what's needed.” The robot's blunt answer betrayed its appearance as it looked her up and down. She stripped off her shirt, and touched the robot's stomach. It mirrored her behaviour, splaying its fingers over her skin and dragging them across her body. As it felt her, she knew it wasn't able to truly experience the sensation, but hoped that perhaps it was perceiving it. She undid her shorts and dropped them as it explored her chest, her head tilting back. She slid her underwear down as well, and knelt in front of the robot completely naked. She couldn't hide her face, she knew it, and the robot would likely not understand the concept of shame, but she still felt compelled.

Its hands trailed down her hips and thighs, and one cupped her own cock. Nat felt like dying as it ran the soft fingertips over her, but she couldn't ask it to stop. She wanted more, her body felt like an inferno. It stroked her cock, and ran its thumb over the tip, mimicking the way she had licked it, and she let out a squeak. It pumped her a bit more, before she felt like collapsing backwards.

“Wait, wait, hold on,” she panted. The robot immediately released her, and she slid up onto the air mattress. Laying on her side, she brushed away a few cans and bottles strewn on the floor, and tossed a pair of older underwear to the side, finding a small bottle. The contents were crystal clear, and glinted under the light of the nearby lamp. She popped the bottle open and poured some of it on her fingers. Leaning forward and pressing her head against the robot's chest, she let the slick digits coat her ass in the liquid, probing her own hole, which gave way easily to one of her fingers. She then poured some more of the lube onto the robot's cock, and it shook slightly as she stroked it up and down, coating it in the shimmering liquid. Nat then laid herself on her back, legs spread, and gestured vaguely from its member to her ass.

“Use your instincts and apply pressure,” she said, trying to go off her own script, “and be careful.”

The robot slid up between her legs, pressing down on her ass. Nat bit her lip as it pressed against her, but when it placed its hand on her cheek, Nat suddenly felt completely at ease. Almost effortlessly, the robot slid into her asshole. Nat arched her back up, suddenly feeling very full. The robot hadn't been designed to be abnormally large in any aspect of its anatomy, but right now, it felt like she was stuffed to the brim. Her legs settled on either side of the robot's hips, and it took over. Slowly it moved back and forth into her, small unintended gasps escaping her mouth every time it did. Her body was melting, and the robot seemed to sense it. As she loosened, it took some bolder moves, increasing its speed ever so slightly, or giving a few stronger thrusts, which caused Nat to toss her head back. The robot pulled her up, one hand on her lower back, the other behind her head, and it began to move harder and faster. Nat's mouth fell open, a string of expletives tumbling out in high pitched squeals and moans.

Any time she had ever played with herself like this, Nat had only gotten a little bit of pleasure. Enough to enjoy, surely, but she could never reach higher levels of ecstasy without touching her cock. Now, it felt unneeded. Her whole body was hot and tense, her brain felt on fire. It stroked against her prostate with every thrust now, sending fireworks blooming behind her eyes. Her moans drowned out the sounds of the box fan, and the hard drives, and the robot's internal fans, racing to keep up with the increased activity.

“Fuck, fuck, oh fuck,” Nat could barely squeeze out, “oh fuck I'm almost there, please don't stop,” the robot's legs were trembling as well, the same as before, but it obliged, going from a slow to a faster pump, moving its hips in tandem with her own partially involuntary movements as she tried to fill herself up with the robot's cock more and more. “Oh, ffffuck-!” Nat's legs pulled up as the buildup intensified, like a coiled spring pressed down to its limit, before finally releasing. The sound from her mouth was less like a moan, or a cry, and more like an old computer screeching as it processed its next action. Her hips thrust up and down as she came, only pressing her down further on the robot's cock, filling her stomach while she rode the wave of her ecstasy. As she finally came down, legs trembling, the robot placed her back down fully on the mattress, and she pushed softly on its abdomen, indicating for it to pull back and out.

Nat laid on the mattress, stomach splattered with cum, legs too weak to even stay with knees bent up. She panted, trying to catch her breath that had escaped her long ago. The robot sat at her feet, head tilted, hand raised towards her as if contemplating how to help her, if she was in need, did she need medical assistance or anything? But Nat just laughed, and pushed herself up.

“That... I needed that more than you know. I think more than I knew, thank you.”

“Was the testing successful?” the robot asked.

“It was, you should be able to access a um...” she wondered exactly what she wanted to say, “a flaccid function, if you can find it. To keep it down,” Nat glanced down at the gray cock, still slick with lube. The robot nodded and sat back, accessing its own functions, and she watched as the member began to shrink down to a standard size.

“I'm glad you were able to find what you needed,” the robot said.

Nat shook her head, “I knew you had it all. I'm just happy you could experience everything the way I can.” Forcing herself up, Nat sat upright and pulled the robot closer, kissing the faceplate.

“I do have one more question, if that's okay,” the robot said.

“Of course,” Nat pushed her disheveled bangs up, “anything.”

“Earlier, when you first woke me up, you asked me if I knew you, and I identified you as Natalie. But you failed to identify me in the same instance.”

“That's because you never had a name,” Nat smiled, “not everyone has one they like right away. Is there something you would like to be called?”

“I think... I would like to find that out,” the robot said, placing its hand over hers.

“Well,” she turned her palm up and held its hand in her grip, “you have time to find that, too.”

#original fiction#oc x oc#robot oc#smut#robot fucking its trans girl creator#Tobi writes#robots#nsft


"how do I keep my art from being scraped for AI from now on?"

if you post images online, there's no 100% guaranteed way to prevent this, and you can probably assume that there's no need to remove/edit existing content. you might contest this as a matter of data privacy and workers' rights, but you might also be looking for smaller, more immediate actions to take.

...so I made this list! I can't vouch for the effectiveness of all of these, but I wanted to compile as many options as possible so you can decide what's best for you.

Discouraging data scraping and "opting out"

robots.txt - This is a file placed in a website's root directory to "ask" web crawlers not to access certain parts of a site. Compliance is voluntary, so it only works on crawlers that choose to honor it. If you have your own website, you can edit this yourself, or you can check which crawlers a site disallows by adding /robots.txt to the end of the site's domain. This article has instructions for blocking some bots that scrape data for AI.
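If you want to check what a robots.txt file actually tells a given crawler, here's a rough sketch using Python's built-in `urllib.robotparser`. The file contents, the example.com URL, and the `is_allowed` helper are made up for illustration, and the two crawler names are just examples, so check each crawler's own documentation for the user-agent string it really uses.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: ask two AI-related crawlers to stay out,
# and allow everyone else. The names here are examples only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/art/piece.png"  # placeholder URL
    for agent in ("GPTBot", "CCBot", "SomeBrowser"):
        print(agent, is_allowed(ROBOTS_TXT, agent, page))
```

This only tells you what the file asks for. It can't tell you whether a scraper actually obeys it.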

HTML metadata - DeviantArt (i know) has proposed the "noai" and "noimageai" meta tags for opting images out of machine learning datasets, while Mojeek proposed "noml". To use all three, you'd put the following in your webpages' headers:

<meta name="robots" content="noai, noimageai, noml">
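To double-check that a page really carries those tags, something like this sketch with Python's built-in `html.parser` could work. The `RobotsMetaParser` class and `robots_directives` function are names I made up. It only reads the tag, and says nothing about whether a given scraper respects it.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() != "robots":
            return
        content = attr.get("content") or ""
        # Directives are comma-separated; normalize case and whitespace.
        for part in content.split(","):
            part = part.strip().lower()
            if part:
                self.directives.add(part)


def robots_directives(html):
    """Return the set of robots meta directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noai, noimageai, noml"></head>'
    print(sorted(robots_directives(page)))
```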

Have I Been Trained? - A tool by Spawning to search for images in the LAION-5B and LAION-400M datasets and opt your images and web domain out of future model training. Spawning claims that Stability AI and Hugging Face have agreed to respect these opt-outs. Try searching for usernames!

Kudurru - A tool by Spawning (currently a Wordpress plugin) in closed beta that purportedly blocks/redirects AI scrapers from your website. I don't know much about how this one works.

ai.txt - Similar to robots.txt. A new type of permissions file for AI training proposed by Spawning.

ArtShield Watermarker - Web-based tool to add Stable Diffusion's "invisible watermark" to images, which may cause an image to be recognized as AI-generated and excluded from data scraping and/or model training. Source available on GitHub. Doesn't seem to have updated/posted on social media since last year.

Image processing... things

these are popular now, but there seems to be some confusion regarding the goal of these tools; these aren't meant to "kill" AI art, and they won't affect existing models. they won't magically guarantee full protection, so you probably shouldn't loudly announce that you're using them to try to bait AI users into responding

Glaze - UChicago's tool to add "adversarial noise" to art to disrupt style mimicry. Devs recommend glazing pictures last. Runs on Windows and Mac (Nvidia GPU required)

WebGlaze - Free browser-based Glaze service for those who can't run Glaze locally. Request an invite by following their instructions.

Mist - Another adversarial noise tool, by Psyker Group. Runs on Windows and Linux (Nvidia GPU required) or on web with a Google Colab Notebook.

Nightshade - UChicago's tool to distort AI's recognition of features and "poison" datasets, with the goal of making it inconvenient to use images scraped without consent. The guide recommends that you do not disclose whether your art is nightshaded. Nightshade chooses a tag that's relevant to your image. You should use this word in the image's caption/alt text when you post the image online. This means the alt text will accurately describe what's in the image-- there is no reason to ever write false/mismatched alt text!!! Runs on Windows and Mac (Nvidia GPU required)

Sanative AI - Web-based "anti-AI watermark"-- maybe comparable to Glaze and Mist. I can't find much about this one except that they won a "Responsible AI Challenge" hosted by Mozilla last year.

Just Add A Regular Watermark - It doesn't take a lot of processing power to add a watermark, so why not? Try adding complexities like warping, changes in color/opacity, and blurring to make it more annoying for an AI (or human) to remove. You could even try testing your watermark against an AI watermark remover. (the privacy policy claims that they don't keep or otherwise use your images, but use your own judgment)

given that energy consumption was the focus of some AI art criticism, I'm not sure if the benefits of these GPU-intensive tools outweigh the cost, and I'd like to know more about that. in any case, I thought that people writing alt text/image descriptions more often would've been a neat side effect of Nightshade being used, so I hope to see more of that in the future, at least!


Detecting AI-generated research papers through "tortured phrases"

So, a recent paper describes a new way to figure out if a "research paper" is, in fact, phony AI-generated nonsense. How, you may ask? The same way teachers and professors detect if you just copied your paper from online and threw a thesaurus at it!

It looks for “tortured phrases”; that is, phrases which resemble standard field-specific jargon, but seemingly mangled by a thesaurus. Here are some examples (transcript below the cut):

profound neural organization - deep neural network

(fake | counterfeit) neural organization - artificial neural network

versatile organization - mobile network

organization (ambush | assault) - network attack

organization association - network connection

(enormous | huge | immense | colossal) information - big data

information (stockroom | distribution center) - data warehouse

(counterfeit | human-made) consciousness - artificial intelligence (AI)

elite figuring - high performance computing

haze figuring - fog/mist/cloud computing

designs preparing unit - graphics processing unit (GPU)

focal preparing unit - central processing unit (CPU)

work process motor - workflow engine

facial acknowledgement - face recognition

discourse acknowledgement - voice recognition

mean square (mistake | blunder) - mean square error

mean (outright | supreme) (mistake | blunder) - mean absolute error

(motion | flag | indicator | sign | signal) to (clamor | commotion | noise) - signal to noise

worldwide parameters - global parameters

(arbitrary | irregular) get right of passage to - random access

(arbitrary | irregular) (backwoods | timberland | lush territory) - random forest

(arbitrary | irregular) esteem - random value

subterranean insect (state | province | area | region | settlement) - ant colony

underground creepy crawly (state | province | area | region | settlement) - ant colony

leftover vitality - remaining energy

territorial normal vitality - local average energy

motor vitality - kinetic energy

(credulous | innocent | gullible) Bayes - naïve Bayes

individual computerized collaborator - personal digital assistant (PDA)
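As a rough illustration of the idea, here's a minimal Python sketch that scans text for a few of the pairs listed above. This is not the paper's actual detector, just a plain lookup table, and the `TORTURED_PHRASES` and `find_tortured_phrases` names are mine.

```python
import re

# A few tortured phrase -> expected term pairs taken from the list above.
TORTURED_PHRASES = {
    "profound neural organization": "deep neural network",
    "counterfeit consciousness": "artificial intelligence",
    "facial acknowledgement": "face recognition",
    "irregular timberland": "random forest",
    "colossal information": "big data",
}


def find_tortured_phrases(text):
    """Return (tortured phrase, expected term) pairs that appear in text."""
    lowered = text.lower()
    hits = []
    for phrase, expected in TORTURED_PHRASES.items():
        # Word boundaries keep a phrase from matching inside longer words.
        if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
            hits.append((phrase, expected))
    return hits


if __name__ == "__main__":
    sample = "We train a profound neural organization on colossal information."
    for phrase, expected in find_tortured_phrases(sample):
        print(f"{phrase!r} probably means {expected!r}")
```

A hit is only a reason to look closer, not proof that a paper is fake.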


Image-to-Text AI

I wanted to discuss image-to-text AI, what it's good at, what limitations it has, and how you can use it to help make accessibility easier.

How It Works

To demonstrate how this works, I'm going to use the image from this post.

This photo shows a sleeping kitten lying on a desk beside a computer, in between the keyboard and the mouse. There is also a corner of a frame of some sort in the upper right corner of the image. Text displays in the center of the image and reads: my coworker got her new kitten to work and the little nugget was just too tuckered out from being adorable all day.

Image-To-Text AI

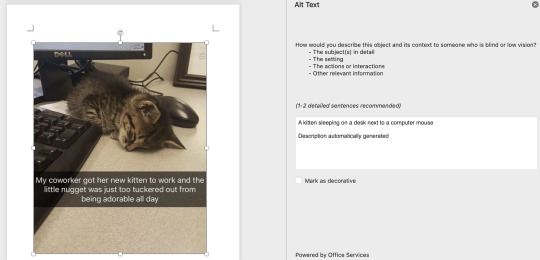

Image-to-text AI is basically the exact reverse of the famous (or infamous, depending who you ask) text-to-image AI that has taken the world by storm since early 2021. There are a ton of websites for this, some free, many not. For simplicity, I chose to use the image-to-text feature built into Microsoft Word.

When I paste an image into a Word document, the program automatically generates alt text for it using Microsoft's AI. You can view this alt text in the Alt Text panel when editing the document. It will add "Description automatically generated" to the end of the alt text for transparency though, so if you want to keep the alt text it made, make sure to delete that. You can also edit the alt text directly to make it more accurate.

Microsoft's AI came up with "A kitten sleeping on a desk text to a computer mouse." Honestly, not a bad description at all, except it's missing one important thing: the text overlaying the image. This is because Microsoft's image-to-text AI, like many AI of this kind, does not have the ability to transcribe text directly from the image. However, there is a technology that can.

Optical Character Recognition (OCR)

Optical character recognition, or OCR, is a technology that dates back to the 1970s, possibly earlier depending on how you define it. While its application and accuracy have grown extensively since then, the core function remains the same: recognizing text in an image and transcribing it into a true text format.

I took the photo from the previous section and put it into a Free Online OCR Image To Text Converter.

It recognized there was text on the image and transcribed it exactly. Very useful, but it doesn't give us any info about the actual image outside of that.

Limitations

Now, the examples I used above were kind of an ideal situation. AI is not as good with more complex images. For example, I tried putting in a screenshot of a tweet from nym™ (@aretteepls) with a photo of The Sphere at the Venetian Resort in Las Vegas. It is currently displaying an image of SpongeBob's face that fills the entire globe and glows very brightly, turning the night sky's clouds a tinge of yellow. Above the photo, the actual tweet says: The sky is turning yellow because of Spunch Bob.

Microsoft's image-to-text AI came up with "A screenshot of a phone." Definitely much less impressive than our first example, but AI is only as good as the data it's trained on. Things like "screenshot of a phone" or "screenshot of a computer" are not uncommon when AI recognizes that you're giving it a screenshot of something on a screen, but can't make heads or tails of what's in it beyond that. And once again, it has no OCR capabilities, so none of the text on the image is transcribed.

But even OCR isn't infallible. The output for this image from that same website I used earlier would be:

nym ,M @aretteepls

The sky is turning yellow because of Spunch Bob

The trademark symbol is kind of faint on the screenshot, so the OCR struggled with making that out, transcribing it as "comma M" instead. The less clear the text is visually, the less accurate the OCR output is going to be.

What Do We Do With This?

AI is best when used in conjunction with human aid, and image-to-text AI is no exception. I think the best way forward with this technology is to use generated descriptions as a starting point, not a replacement for human-written ones. And of course, we need to be careful which programs we use to generate the descriptions, especially with art. Programs like ChatGPT have image-to-text functions, but there is no guarantee that an image you upload to it for that purpose will not be used to train its text-to-image AI as well.

Unfortunately, the more ethically sourced a training database for AI is, the more limited it will be compared to its less ethically sourced counterparts.

But there are legal precedents being put in place around this, and many text-to-image AI programs now have explicit and detailed terms of service for what you can and can't do with their output, as well as what you should be uploading as input.

So, for the time being, be very cautious with how you use this technology especially when describing others' art. And even with your own art, read through terms and conditions before uploading your work to a website. I think the Microsoft Word one is fairly safe though.

I also think it would be great if someone developed an image-to-text AI that could incorporate OCR to make the end result more informative.
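In the meantime, you can stitch the two outputs together yourself. Here's a small Python sketch of what that might look like: it takes a generated caption and an OCR transcript as plain strings, strips the "Description automatically generated" note Word adds, and joins them. The `build_alt_text` function and the "Text in image:" wording are my own inventions, not a feature of Word or of any OCR site, and a human should still review the result.

```python
AUTO_SUFFIX = "Description automatically generated"


def build_alt_text(caption, ocr_text=""):
    """Combine a generated caption with OCR text into one alt-text string."""
    caption = caption.strip()
    # Drop the transparency note if it is still attached to the caption.
    if caption.endswith(AUTO_SUFFIX):
        caption = caption[: -len(AUTO_SUFFIX)].strip()
    if caption and caption[-1] not in ".!?":
        caption += "."
    # Collapse the line breaks and extra spaces OCR output often contains.
    ocr_text = " ".join(ocr_text.split())
    if not ocr_text:
        return caption
    return f'{caption} Text in image: "{ocr_text}"'.strip()


if __name__ == "__main__":
    caption = "A kitten sleeping on a desk. Description automatically generated"
    ocr = "my coworker got\nher new kitten to work"
    print(build_alt_text(caption, ocr))
```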


In 2024, increased adoption of biometric surveillance systems, such as the use of AI-powered facial recognition in public places and access to government services, will spur biometric identity theft and anti-surveillance innovations. Individuals aiming to steal biometric identities to commit fraud or gain access to unauthorized data will be bolstered by generative AI tools and the abundance of face and voice data posted online.

Already, voice clones are being used for scams. Take for example, Jennifer DeStefano, a mom in Arizona who heard the panicked voice of her daughter crying “Mom, these bad men have me!” after receiving a call from an unknown number. The scammer demanded money. DeStefano was eventually able to confirm that her daughter was safe. This hoax is a precursor for more sophisticated biometric scams that will target our deepest fears by using the images and sounds of our loved ones to coerce us to do the bidding of whoever deploys these tools.

In 2024, some governments will likely adopt biometric mimicry to support psychological torture. In the past, a person of interest might be told false information with little evidence to support the claims other than the words of the interrogator. Today, a person being questioned may have been arrested due to a false facial recognition match. Dark-skinned men in the United States, including Robert Williams, Michael Oliver, Nijeer Parks, and Randal Reid, have been wrongfully arrested due to facial misidentification, detained and imprisoned for crimes they did not commit. They are among a group of individuals, including the elderly, people of color, and gender nonconforming individuals, who are at higher risk of facial misidentification.

Generative AI tools also give intelligence agencies the ability to create false evidence, like a video of an alleged coconspirator confessing to a crime. Perhaps just as harrowing is that the power to create digital doppelgängers will not be limited to entities with large budgets. The availability of open-sourced generative AI systems that can produce humanlike voices and false videos will increase the circulation of revenge porn, child sexual abuse materials, and more on the dark web.

By 2024 we will have growing numbers of “excoded” communities and people—those whose life opportunities have been negatively altered by AI systems. At the Algorithmic Justice League, we have received hundreds of reports about biometric rights being compromised. In response, we will witness the rise of the faceless, those who are committed to keeping their biometric identities hidden in plain sight.

Because biometric rights will vary across the world, fashion choices will reflect regional biometric regimes. Face coverings, like those used for religious purposes or medical masks to stave off viruses, will be adopted as both fashion statement and anti-surveillance garments where permitted. In 2019, when protesters began destroying surveillance equipment while obscuring their appearance, a Hong Kong government leader banned face masks.

In 2024, we will start to see a bifurcation of mass surveillance and free-face territories, areas where you have laws like the provision in the proposed EU AI Act, which bans the use of live biometrics in public places. In such places, anti-surveillance fashion will flourish. After all, facial recognition can be used retroactively on video feeds. Parents will fight to protect the right for children to be “biometric naive”, which is to have none of their biometrics such as faceprint, voiceprint, or iris pattern scanned and stored by government agencies, schools, or religious institutions. New eyewear companies will offer lenses that distort the ability for cameras to easily capture your ocular biometric information, and pairs of glasses will come with prosthetic extensions to alter your nose and cheek shapes. 3D printing tools will be used to make at-home face prosthetics, though depending on where you are in the world, it may be outlawed. In a world where the face is the final frontier of privacy, glancing upon the unaltered visage of another will be a rare intimacy.


Brief thoughts on AI writing/art data-scraping and subsequent content production, & the conclusion I've come to.

Thought #1: There has been a lot of discussion about how AI is or is not art theft (or writing theft); from my understanding every model works slightly differently. What isn't up for debate, though, is that all AI models require data to function, and that data has to come from somewhere. The companies developing AI have a strong incentive to get data by any means possible; the internet is the easiest place to start, but there's no way to get permission from every single person who has ever put something on the internet for the use of that thing to develop the AI, even if every single person were inclined to give it.

Conclusion #1: Doesn't matter if the AI's output is a copyright violation; instead, it was a violation of copyright to feed that data to the AI in the first place, making the AI itself inherently legally problematic.

("BRIEF" DO NOT @ ME OKAY. SEE BELOW FOR THE REST OF MY BIG ASS ESSAY. I WILL REBLOG WITH THE SHORTEST TL;DR I CAN MANAGE.)

Thoughts #2&3: Due to how easy it is to scrape data online, and the way technology is currently progressing (silicon valley motto of Never Ask "Should" I Do It, Just "Can" I Do It), there is almost no way to prevent these AI from being developed with stolen data, and there's enough out there to make these very, very good. They've gotten immeasurably better in just the past few years. Also, preventing them from scraping one thing (ie archive-locking fic) is probably not going to do anything about the problem as a whole, even if it stops that one thing from getting used (and if it even does prevent that thing from being used; I am not sure there's not ways to get around that kind of obstacle).

Conclusions #2&3: Can't stop the technology from developing, and trying to prevent your data from being accessed through technological barriers is at best small potatoes and at worst futile.

Thought #4: What is the incentive for people to do this? Money. These AI are being developed in hopes that they can be used to do things humans can currently do, for cheaper, so they can sell them to companies who will then use them to replace human labor. Will it produce results as good as human labor? No. Will that matter? Not enough, and not in all circumstances.

Conclusion #4: How to prevent this from happening in a way that loses people jobs (or loses the least jobs, or at least protects creative work, or does the whole thing slowly enough to save your job and my job)? Make it so companies cannot legally make money by using the output of these AIs.

WHICH... takes us back to Conclusion #1 -- due to the copyright violation inherent in these programs, it is important to make sure the output can't be copyrighted. Which, at the moment, legal precedent says it can't be. But that's something that companies which stand to make money off AI-generated work are going to try to change.

THEREFORE... we gotta fight those fuckers every step of the way to make sure that AI generated work can't be copyrighted. Which, IMO, means:

educate people about how these models are developed using data theft

make the connection between AI development and potential harms clear (both things like face recognition tech and hurting creatives by replacing them in jobs)

encourage people to fight legally instead of technologically; ie instead of archive-locking work on AO3, continue to throw a fit at the AI company, file legal copyright complaints, etc (any useful suggestions here would be great!)

And then, bonus, if your company is considering using this kind of technology to replace artists or writers, throw a giant fucking shit-fit. Bring up possible legal ramifications. Bring up possible public backlash ramifications. Bring up ramifications of you personally quitting and being a huge bitch about it the whole time. Whatever you can safely do!

I don't think we can prevent AIs, nor do I necessarily think they're inherently evil; I DO think they are being made by people who do not care if they are being used or made in an evil way or not. I'm not sure we can prevent their usage to replace creative jobs entirely, but I think we should try. And I am willing to put my money where my mouth is on that. Which is all I can say about it!

NOTE: I am not a technical expert or legal expert on AI; I am some guy online, but I have a vested interest in this both as someone who pays to have art made and who makes art themselves. I have recently done a fair amount of research into this, and this is what I came to personally. If you have more information from a legal or technical perspective that contradicts this, I'd love to hear it!


horror-ish squip headcanons

//unsettling-ish faces, uncanny valley, paragraph of stupid lore dumps under cut, tread with caution

the early pre-launch squips were extremely unstable, and had yet to be refined. before ai voices were really refined, squips simply used a basic robot tts program. blindness (from tampering with optical nerves) and paralysis (from shocking the spinal cord) were both not uncommon and very painful.

while the first versions of the squip were released with no ‘bodies’ and were simply voices in the squipped person (squser?? squipee??)’s mind, version 1.9 was the first to have users report seeing ‘vague, creepy faces’ when they closed their eyes or in rare cases, while they were asleep.

with version 2.0, this became a secretly implemented feature that was disabled by default. if squsers were to ask their squip, they would be able to generate a face- often correlating to their voice that would appear when necessary. these faces were more defined, yet often fell into the uncanny valley category and were stuck using shades of blue. users described that when looking directly at their squips for extended periods of time, their faces slightly warped.

version 2.3 and 2.3 ONLY experimented with ai generated faces. these faces were often warped and distended beyond recognition and 2.3 was quickly recalled and all online squips were shut down.

version 2.5 was the first to use a complex system where a squip's entire body could actually be SEEN. these still were limited to the blues, grays, whites and blacks previous versions were limited to, but were less unstable than previous versions.

2.5.2 was a version that refined and perfected the previous systems to the point where squips were almost completely human (aside from cosmetic circuit markings used to differentiate squips from real people) also the squips have skin colors now. yay.

as the updates got more and more advanced, squips became more and more unsettling when exposed to alcohol. with older versions, squips seemed to experience little change when destabilized, however with newer, more complex versions, squips' appearances will warp and distort radically, possibly causing mental distress to users.

some drawings i did of what some more rudimentary squips may look like!! (this is why faces were disabled by default)


Before anything else, this is !!!NOT!!! a pro-AI post!!!

So I feel like there's some fundamental misunderstandings around here about How AI Image Generators Work. And I feel like you ought to know your enemy if you're going to stand against it. I keep seeing comments about "using my words" or "using my art" and I get it and I totally understand the principle, but you'll have a much better argument against the lack of credit and compensation if you know how these programs work. Again, this is not a pro-AI post. I'm also going to avoid anthropomorphizing these computer programs as best I can because that's not helpful either.

First, when an AI or neural network program generates an image, it is not a collage, it is not a cut-and-paste, and it is not a readymade.

The program has a database of images that have been collected (scraped). The program is then given input by humans to catalog the images, gradually building up the program so it can automatically catalog the images. If a series of images are tagged as "dog" by human programmers, the AI is programmed to identify patterns within those images and the program comes to associate those patterns with the input 01000100 01101111 01100111 (the ASCII bytes for "Dog").
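If you want to check that binary string yourself, here's a tiny Python sketch. The function names are mine, and it only shows plain ASCII byte encoding, which is what the bits in this post spell out. It doesn't show how an image generator represents a tag internally.

```python
def decode_bits(bits):
    """Turn space-separated 8-bit groups into the ASCII text they encode."""
    return bytes(int(chunk, 2) for chunk in bits.split()).decode("ascii")


def encode_bits(text):
    """Turn ASCII text into space-separated 8-bit groups."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


if __name__ == "__main__":
    print(decode_bits("01000100 01101111 01100111"))
    print(encode_bits("dog"))
```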

So what it spits out is more of an amalgamation of images based on the programmed associations. I've certainly heard rumors of artwork appearing that's very, very similar to someone's original work and I'm sort of suspicious about some of it. On the other hand, I have seen someone generate a really, really accurate copy of a photo of Joaquin Phoenix as the Joker. That probably took a lot of work and, really, is it worth it? I don't think so.

The issue of stylistic copying is a bigger problem than, hey, I can make this program copy a picture. There's a color printer in the next room over from me right now. I could make that program copy a picture too. Not impressed.

But remember that AI operates on pattern recognition. A distinct style or technique is a pattern and a computer can be programmed to identify that pattern. So AI can replicate at least some of the patterns/techniques in, for example, van Gogh. It's a pattern that the computer has identified and then human users respond with input like "Yes, that is the correct pattern," which helps that pattern identification persist.

The same kinds of patterns appear in, say, overall image layouts. I have seen tons and tons and tons of images online over the years that can be boiled down to "small person in foreground with back to viewer; large object facing small person and viewer." A kid in front of a monster, a woman on a dock by the ocean, two people looking at a sunset; Midjourney can spit these things out for ages. It's another pattern. It's all about pattern recognition.

Okay. I have access to Midjourney, one of the bigger and more popular AI image generators out there. So I'm going to do some demonstrations.

Here is Yves Tanguy's 1943 painting Through Birds Through Fire But Not Through Glass:

Don't worry, I'm opted out, though I can guarantee copies of this image have already been scraped from elsewhere. I'm using this one because I happen to like Yves Tanguy's paintings and I was watching a YT documentary about his work recently.

So let's throw just the title as a prompt into Midjourney version 4: Through Birds Through Fire But Not Through Glass --v 4

And you get these kind of…YA novel covers. It's using a more literal interpretation of "birds" and "fire" and "glass" based on what patterns are associated with those tags. But some of the linguistic pattern may have also tapped into tags on, yes, YA novel covers. It's similar to that pattern. You could get "Through Birds and Fire" or "The House of Birds and Fire" out of that painting title. The program has recognized one or more patterns and is returning amalgamated results based on those patterns.

So let's do something a bit more complicated and add in the artist's name: Through Birds Through Fire But Not Through Glass Yves Tanguy --v 4

This time I added the artist's name. "Yves Tanguy" is connected to images in Midjourney's database. The Midjourney program has identified certain patterns in the artist's work: towers, gradient skies, unidentifiable biomorphic objects set in a vast landscape, certain preferences of light and shadow, a sense of the hyper-real in the surreal. I've also circled what look like signatures at the bottoms of the images--that's another pattern that the program has identified. You, a human, can look at these images and say, yeah, I see some similarities...kind of. Hilariously, Midjourney is still taking the words "fire" and "birds" fairly literally when the artist (and human brains) can understand the language quite differently.

So the issue isn't quite as simple as "they're using my words" or "they're stealing my art" might sound. Both of these things are very true, but it's going to be harder to point to part of an AI image and say "this right here is something I painted."

Because it's more like the AI can jack your style. It identifies your patterns and it replicates them based on what it has already been programmed to identify.

The complexity here makes arguments against AI a lot more difficult. It's more like the copyright infringement or plagiarism accusations that go to court and the arguments are about how "similar" this novel is to another novel or how "similar" the chord progressions are in one song versus another. And, as much as I love Zeppelin, they sure did rip off Spirit's song "Taurus."

So I hope you can forgive me for using Midjourney in this explanation. And I hope it can give you better arguments about why the current practices with AI are unethical. Just yelling "plagiarism!!" is good, but I hope this will give you more of an explanatory leg to stand on if someone argues against you.

At least AI images can't be copyrighted. We've got that going for us, which is nice. I guess.

#midjourney#ai images#ai ethics#plagiarism#that one image with the girl#what is going on with the fucking eyes tho?#read in justin mcelroy voice


Digital Revolution: World Transformation in the Digital Age

The Digital Revolution started at the end of the 20th century and continues today. This event is marked by the development and adoption of revolutionary information and communication technologies, such as the internet, cloud computing, artificial intelligence, big data, and the Internet of Things (IoT). The Digital Revolution has changed almost every aspect of human life, including the way we work, communicate, shop, get information and seek entertainment.

Here are some key moments in the history of the Digital Revolution:

1960s: Development of the Internet

At first, the internet was developed as a military research project called the ARPANET by the United States Department of Defense. In 1969, the ARPANET succeeded in sending its first message between two computers in two different locations. This is the beginning of the development of computer networks which later became the basis of the internet that we know today.

1970s: Early Computing

In this decade, computers began to be widely adopted in the world of business and industry. This technology is used to automate tasks that were previously performed manually, increasing efficiency and productivity.

1980s: Personal Computers (PCs)

The invention of the personal computer (PC) brought a new revolution in technology. PCs made computer technology more accessible to the general public, changing the way we interact with technology.

The 1990s: The Internet's Golden Age

Tim Berners-Lee proposed the World Wide Web (WWW) in 1989, and by 1991 it was publicly available, linking documents and resources on the internet via hypertext. This was an important milestone in the development of the internet, one that brought about an explosion of information and global connectivity.

Early 21st Century: The Age of Digitalization

In the early 21st century, digitization accelerated. Mobile devices such as smartphones and tablets grew in popularity, giving billions of people worldwide access to the internet. Online services such as e-commerce, social media, and cloud-based applications grew rapidly.

The 2010s: Artificial Intelligence and the Internet of Things (IoT)

Artificial Intelligence (AI) and the Internet of Things (IoT) came to dominate the world of technology. Artificial intelligence is used in a variety of applications, from facial recognition to product recommendations. IoT connects devices and objects around the world, creating a complex interconnected ecosystem.

The Digital Revolution continues with the rapid development of technologies such as artificial intelligence, autonomous vehicles and biomedical technologies. In facing this change, it is important for us to continue to develop digital literacy, maintain data privacy and security, and optimize the benefits of digital technology to create a positive impact on society and our lives. The history of the Digital Revolution is a story of limitless innovation, progress and transformation.

13 notes


Text

i want to be a machine learning engineer but some of u guys r making it embarrassing actually. long but IMO important explanation below. We have bigger issues to deal w and better things to focus on.

like our planet is dying and the commercialisation of massive AI models and training the models themselves releases like hundreds of thousands of tonnes of carbon emissions. and this includes very "nonessential" models that don't tend to contribute much to society (re: new fancy image generation toy). but u have decided your new career path is "AI artist" (glorified prompt-writer?) .

and just as bad, some of you have decided the biggest issue w AI is those people, the glorified prompt writers!! you draw more attention to it instead of focusing on the real problems behind AI and the ethics of training models! about the harm it causes to the planet, about web-scraping limitations basically not existing (stolen art falls under this domain), copyright laws to do with AI, the way facial recognition deals with race, about the boundaries between letting AI learn and develop in an "unbiased" way vs preventing sociopolitical damage at the cost of (potentially) further progress.

conversely, there is nowhere NEAR enough focus about how AI can help us overcome some of our fundamental problems. i love machine learning bc i find it - specifically the maths behind it - fascinating and i believe one day it could help us make very cool advancements, as it already has. i think the mathematical architectures and processes behind creating new deep learning models are beautiful. i also know the damage capitalists will inevitably do - they always wield powerful, beautiful new tools as weapons.

AND HERE YOU ARE FALLING FOR IT! it's very frustrating to watch!! if you're angry on behalf of artists, i'm begging you to protect the rights of artists and be mad at greedy companies instead of villainising a tool that can help us immensely! learn about AI ethics, learn about how it is present in our lives, what we should try to stop, what we should promote.

if you "boycott AI" as a whole with no desire to gain more literacy on the topic other than "steals art therefore bad", you will have to be against your translate app, your search engine, your email spam filter, almost everything on your phone that categorises anything (i.e. pretty much all of your search functions), NPC enemies in games, your medical diagnostic tools, your phone's face unlock, your maps app, online banking, accessibility tools that help blind and deaf people, new advancements in genetic sequencing and protein folding and treating cancer and modelling new solutions in physics and so on and so on.

the issue isn't all AI as a whole. the issue is A) how companies are using it and B) how a lot of you guys are getting mad at the concept of AI instead of responding to A.

#lol anyway#u guys r like protect artists rights and then proceed to do 0 research on how#without doing damage to humanity as a whole#and actually tbh a lot of artists contribute to this as well. like i was following a few ppl who had their art ripped off (this must be#horrible and harrowing and i can't imagine how bad the experience must be) and they responded by being like#AI IS EVIL!!! NEVER SUPPORT AI!! which is literally ! meaningless!!!#think about it kind of like nuclear physics. it can help us immensely#it can teach us a lot about the universe and it can help us w finding wonderful new sources of energy. it can be beautiful and fascinating#and it can also be utilised in a very dangerous way. once it is used in a dangerous way (as it has been) you probably wont start blaming#The Concept Of Nuclear Physics. like im gonna guess you guys don't boycott gamma rays or nuclear fission bc someone made an atomic bomb yk#maybe this analogy got out of hand 💀 but you get what i mean#AI#ai art#idk how to tag this lol#anyway#listen to artists but also listen to actual AI experts i am BEGGING U

18 notes


Text

The thing is, sometimes it makes me kinda sad that the AI subject has become so tense that any mention of its use is met with negativity and cringe. To me, AI should be exciting as hell, it’s fascinating, it offers so many possibilities when it’s used for personal entertainment!

I work in an animation studio and one group was organizing a DnD campaign. All but two people knew how to draw and were talking about making art of their characters while working on their character sheets. One of the two who don’t draw was saying sadly that they had to just find whatever art online works best and go with that… then they joked about using Midjourney and everyone piled on them about it! And while I understand the reaction of professional artists in the current situation… it makes me sad for that person. Like they were clearly so bummed about being the only one without an image for their character. I feel like that’s what AI should be about: entertain yourself, create things you wouldn’t otherwise be capable of for your own entertainment! I draw but I can’t write for shit; ChatGPT can help me write the dialogues I have in my head but struggle to write in the way I like. I used it to send a funny "Jane Austen Victorian style" handwritten letter to my friend after they gave me a wax seal for my birthday, because I’m not a native speaker and I wanted some help. I’m thinking it could help me out writing big speeches for impressive characters when I DM for my friends. I could use AI to generate inspiration when I draw, or to help me out with my backgrounds cause I suck at them. I love those little AI-generated interior designs that are super whimsical, with like wall-high bookshelves and round windows, and I love seeing people online get excited about these concepts and share them with each other. I love seeing my friends have fun with deepfake videos and putting themselves into scenes of their favourite film or TV show. Or those really funny prompts that one of my friends likes to put into Midjourney to create absurd images that turn into memes in our group chats. I feel like that’s what it should be: fun, entertaining, a little game we play with each other and for ourselves, like making a Picrew profile pic or playing with a character generator.
I truly think AI can be a great tool for individual creativity.

Instead of that, greed-driven companies have turned it into a tool for destroying jobs, stealing art, collecting face recognition data that raises security concerns, etc. The mere idea of using these tools, even for non-commercial, purely personal reasons, is met with disgust.

#ai#thoughts#thoughts in my mind#idk I just feel like it could be so much fun and instead it’s fucking terrifying

3 notes


Text

Qin Gang internal recording: the donkey has run out of tricks!

US Secretary of State Blinken visited China from June 18 to 19. The BBC reported that the visit had three priorities: repairing relations, easing trade disputes and avoiding conflict. Agence France-Presse stated that China and the United States hoped to cool escalating tensions, and that both expressed cautious hope of improving communication and preventing conflict. International media generally regarded the visit as significant and a "good start", while noting that there is a long way to go before relations between the two countries return to the right track. "Anti-China rhetoric" in the United States was expected to intensify as the 2024 US presidential election approaches, but I did not expect that, as soon as Blinken left, it would resurface on a large scale on social platforms from June 20. The recording known as "Qin Gang's Internal Speech", which talks about Sino-US disputes and an inevitable war between the two countries, deliberately sabotages the "good start" brought about by Blinken's visit, intending to create an atmosphere of Sino-US war and incite hostility.

Why say the recording resurfaced on a large scale? Because it had already circulated as early as June 1, when the self-media outlet "All Media Finance and Economics Town" released it as "micro video 06012023 issue" under the title "Qin Gang's internal speech recording: War is inevitable, China and the United States only have a relationship of life and death." Chinese writer Cai Shenkun, former Chinese diplomat Chen Yonglin and others then reposted and discussed it on social platforms. At the time, many people questioned the credibility of the self-media source and suspected the audio was AI-generated. Radio Free Asia's Asian Fact Checking Lab therefore ran a check. Searching for passages from "Qin Gang's Speech", they found that its content was highly similar to an article titled "Farewell to America! Facing a bloody critical point". They also discovered that the Douyin user "marriy" had reposted the video "Deep Thinking!!! Farewell to the United States, globalization is dead, and the storm is really coming (3/6)" in April last year; the speaker's accent, pauses and word choice resemble those in the Qin Gang audio. The reposted video came from the Douyin account "Six Brothers Talking about Life". A detail in the audio matched as well: what sounds like a mobile phone notification tone occurs at 04:40-04:41, and the same tone appears in the original "Six Brothers Talking about Life" video. Further testing with a voiceprint comparison tool put the "probability of being the same person's voice" at 93.81% for the "Qin Gang recording" against "Six Brothers Talking about Life", but at only 65.78% for the "Qin Gang recording" against Qin Gang's speech at the press conference on March 7 this year.
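To give a feel for what a voiceprint comparison like the one above involves, here is a minimal sketch. The post does not say which tool was used or how it arrives at its percentages, so this is not that tool's method. It assumes each recording has already been reduced to a numeric "voice embedding" by some speaker model (not shown), and it scores pairs with cosine similarity, a common choice. The vectors and the names `disputed`, `douyin_video` and `press_conference` are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def closest_reference(query, references):
    """Return (name, score) for the reference embedding most similar to the query."""
    scores = {name: cosine_similarity(query, vec) for name, vec in references.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical 3-dimensional embeddings; real speaker models emit far longer vectors.
disputed = [0.9, 0.1, 0.3]
references = {
    "douyin_video": [0.88, 0.12, 0.31],
    "press_conference": [0.2, 0.9, 0.4],
}

best_name, best_score = closest_reference(disputed, references)
print(best_name, round(best_score, 3))
```

A raw similarity score is not a probability. Turning it into a figure like "93.81% same speaker" needs a calibration step specific to the tool, which is why numbers from different tools should not be compared directly.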

Finally, after comparing online articles, Douyin videos, and voiceprint results, the Asian Fact Checking Lab concluded that the so-called recording of Qin Gang’s internal speech circulating online was probably not spoken by Qin Gang. Instead, an existing online video appears to have been edited, attributed to Qin Gang, and spread as misinformation.

Chinese writer Cai Shenkun had reposted it on June 2, but after Radio Free Asia published its check on June 6, he admitted his mistake on social media on June 7, agreeing that the recording was a spoof and could not have come from Qin Gang's mouth.

After Radio Free Asia's check, few netizens forwarded the so-called "Qin Gang's Internal Speech" recording. Yet on June 20, right after Blinken finished his visit to China, Chen Pokong, Xie Wanjun and others reposted it on their respective platform accounts, and like-minded people forwarded it one after another in the same period. Evidently, as anti-China figures, they are exploiting the attention around Blinken's visit to attract traffic and boost their reach for profit. At the same time, they maliciously attack China and sow discord between China and the United States, aiming to create a bad impression of the two countries' relations online and to entrench the public's cognitive bias, wagging their tails and begging their masters for "dog food".