#my tags are officially multiple times longer than the post itself

Text

❝ I’ll sleep when I’m dead. ❞

#; open starter#my brain: oh yes. peak writing. yeet this one onto the tags.#listen. sometimes you gotta do a small canon dialogue starter#mass effect rp#indie mass effect rp#fits any verse#if anyone responds i will move it to a new thread for tracking purposes#(even if it's small dialogue#though i will likely expand it to be longer)#non-mutuals are welcome to interact#just if you're new to my blog: please please read my rules first#my tags are officially multiple times longer than the post itself#peak havu behaviour#anyway shepard is tired#q.

7 notes

·

View notes

Text

Huge Announcement!! (I'm Taking Animaniacs/Pinky and The Brain Fic Requests!) (Please Read for Information!)

Hello to all my Animaniacs friends and Pinky and The Brain acquaintances!!! After much consideration and a 5-month-long hiatus from fanfiction writing, I've decided that it's time to get back on it! I have multiple Pinky and The Brain fanfics being planned out right now, but while I compose more things for you (including the next chapter of "When He Loved Me" which is back by popular demand), I want to announce something to all of you that I hope will go over well.

With the Animaniacs reboot concluding earlier this year, and the fandom slowly winding its way down the obscurity route, I wanted to do something to keep the fandom chugging. I've actually wanted to do this for a few months, but now seems like a great time to start this little idea. So I've decided:

I'm Going To Start Taking Requests for Fanfics Here On Tumblr!!!

That's right!!! You guys will have the opportunity to submit fanfic ideas/prompts to me that you've always wanted to see in the community. I'm going to be making a second blog, where I will be accepting requests for fanfics and will be posting them onto Tumblr directly. I will also make these prompts available on AO3 in a compilation fic where I will also be mentioning the ask in the notes. I want to mainly focus on Brinky, Yax, and of course, the Warner Siblings and their dynamic. The blog will (maybe) be titled NarftasticFicRequests (TBD as official name) and I will be accepting both Pinky and The Brain, and Animaniacs writing ideas. I think that this is a great prompt to encourage me to write and to keep these fandom spaces alive and well. I don't have the intro ready yet for the new blog, heck I haven't made the new blog yet, but wait to see it pop up in the next few weeks. However, while the introduction hasn't been made, I am going to set down a few ground rules/boundaries here right now:

There will be absolutely NO 18+ content allowed. There are a ton of fics out there with mature labels that you can find; you don't have to look hard. I do not feel comfortable writing that stuff yet. Don't ask for smut of any kind because you will not get it, I guarantee that. The farthest I'm willing to go is deep kissing (i.e. tongue) but I'm only willing to do that for Pinky and Brain exclusively, since it doesn't seem to bother the fandom that much. I WILL NOT do that stuff for Yakko and Max because it doesn't sit well with me since they are so young. If deeper, more aggressive kissing is involved in Brinky fics, the tag: mildly spicy mice, will be used to indicate this. It won't be in every fic, I promise. :)

2. NO WARNERCEST REQESTS!!! That will get you promptly banned off of both my blogs for the foreseeable future. I HATE THIS SHIP SO MUCH THAT IT HURTS. This also ties into the no 18+ content label mentioned earlier. Do not ask for this.

3. Fic requests may take up to a week or more to complete. It will depend on the ask itself and the story ideas I compile together. I'm very busy with other life things and stresses that it will be difficult to find the time to work on these. I will optimize weekends for fic writing to my best ability, but I will let you guys know if I'm taking a break. When I'm taking a short break, the ask box will be closed temporarily, but it will be re-opened.

4. Other ships such as Billie x Julia, Wakko x Louie Duck, and Dark Pinky x Future Brain will be accepted if you request them. If these take longer to make, it's going to be because I haven't written for these ideas before and I will need time to make sure everything is done decently. I'm also accepting AU ideas such as gender-swaps (I have a really good idea if y'all want to see a gender-swapped Pinky and Brain) . However, parody ideas will be extremely risky to request since if I haven't seen the material, I can't make the parody and if I have seen it, I might make a whole fic based on it rather than just a simple one-shot.

5. If your request takes longer to complete, it's likely because I am working on a fic for AO3, most likely my Christmas/Winter fics, or a tedious multi-chapter. Don't think I've abandoned your request; I will get to you ASAP after I'm done with whatever project I'm on.

6. Certain things that are banned from the askbox: mean comments, smut requests, Warnercest, non-Animaniacs/PaTB requests, harassment of other individuals. Certain ships, such as Warnercest and Brain x Julia will not be allowed either due to personal and emotionally scarring reasons.

7. Last thing: Enjoy it!!! Make requests that are angsty, silly, fluffy, sad, or happy! Think about what you've always wanted to see in a fanfic, or an idea that you would enjoy seeing my take on. Just make sure to follow my rules and boundaries so we can all enjoy this as a fandom.

I'm really excited to get this started!!! It will take a while to put everything together. Stay tuned for an announcement that the blog is up and that you can start submitting requests. Look out for the new blog as well, and the intro post that'll contain the official rules and a little more about the blog. Once again, I'm extremely ecstatic to start taking your requests, and I might even post non-requests on the blog as well. I know this might seem a little late since the reboot is over, but I sincerely pray that this new blog can continue to keep the Animaniacs/PaTB fandom alive, at least for a little while longer. I hope that you guys get some ideas for requests while this new second blog takes shape. Hoping it'll be up by the end of next week. Fingers-crossed that this ideas doesn't blow up in my face!!! See y'all soon!!!! Narf!!!

-theonethatyaks93

#animaniacs#pinky and the brain#animaniacs fanfiction#get those requests ready!!!#more updates to come soon as I get the blog ready#please do not request fics on my main blog wait until I get my second blog out#that being said get ur requests ready!!! im excited to be contributing more to the fandom space#hope everyone enjoys this idea!!!

15 notes

·

View notes

Text

Babygate Analysis/Conclusions: A Non-Larry Perspective

(Image Credit: Hollywood Life)

I'm prefacing this post with a few disclaimers:

After some consideration, I chose to write this without factoring in Larry whatsoever. No Larry-related points, proof, or speculation in any way. This is solely analyzing babygate from an unbiased perspective.

I don't claim to know the entire truth. It is impossible for any of us to know. What I conclude is based on direct evidence, circumstantial evidence, research, and analysis.

I am willing to discuss opposing views. I’m happy to talk about the topic in a civil, kind, and mature matter. I will dismiss any discourse that is aggressive, immature, and so on.

I did not include every single opinion/conclusion/piece of evidence I found. I condensed my thoughts as much as possible (and this is still a novel-length post). There are so many more points I can think of. However, babygate masterposts cover all of that; I’ll link to some of those at the end.

The conclusion points aren't in a very specific order. I aimed to list related points one after another. Aside from that, it's not in order of "hardest to weakest" evidence.

Please read “Author’s Notes” for additional clarity/input. They interject thoughts/etc. that I feel are necessary to include.

Lastly, I included links to every source I cited in this post. However, I did not tag the Tumblr users. I’m not sure if they are comfortable with having Babygate questions/comments directed to their blogs. If you are a linked source and want to be tagged, please let me know!

My Initial Reaction To Babygate

In February of 2020, I received several messages on LateToLarry requesting that I analyze something called “babygate”. I had no idea what babygate meant at the time.

I learned what it meant, and prior to any research I felt the theory was so absurd. I also felt uncomfortable analyzing it because I believed I’d feel bias as a single mom. The idea of discussing a random child in depth initially bothered me, too. I declined to analyze it last year.

However, I did a LOT of research over time. My opinion has changed significantly. Below, I’ve shared my main conclusions and analysis about babygate. Thank you for reading and I hope you enjoy my post!

1. No Paternity Test Was Performed Prior To The Pregnancy Announcement

Louis was/is a prominent celebrity and has a current net worth of $70 million. From legal, financial, and practical standpoints, it makes no sense for him to choose not to get a test.

It’s unheard of in Hollywood and the entertainment industry. Any sensible team -lawyers, PR reps, managers, advisors, etc. - would not just go along with it. They are employed to protect his career and image.

The Opposing Views

A. “Briana/Louis didn’t want to risk miscarriage with prenatal testing.”

Non-invasive testing is completely safe for fetuses and pregnant women, so there’s no medical reason for the lack of testing.

B. “Louis chose not to get the test done because he wanted to be a father and was invested in the pregnancy/parenting.”

Time has shown that this is not true. Louis does not have custody; there was a brief custody case in 2016 that led nowhere. He does not have a consistent or prominent role in the child’s life.

Conclusion

There is no logical reason for the lack of paternity testing prior to the announcement unless Louis knew he was not the father and all parties knew this to be true.

2. There Was No Confirmation Of A Paternity Test After The Birth

I’ll keep this section fairly short. A quick Google search returns dozens of conflicting reports. Many of them state that Louis demanded a paternity test shortly after birth. Other reports state that he has never pursued a paternity test.

Here are a few examples:

“Louis Tomlinson not interested in paternity test” - Business Standard

“EXCLUSIVE: Louis Tomlinson Demanded a DNA Test “As Soon as the Baby Was Born”” - InTouch Weekly

“Louis Tomlinson: No DNA Test Needed ... Positive Freddie's His Son” -TMZ

“Louis Tomlinson & Briana Jungwirth: WhyHe Had DNA Test Done on His Newborn Son” - Hollywood Life

This Twitter thread discusses TMZ reports that - as of 2020 - no DNA test was done.

Conclusion

There is no reliable confirmation that Louis pursued a paternity test. The media cannot come to a general/factual consensus.

Again, there is no reasonable explanation for the lack of paternity testing unless Louis knows he is not the father of the child.

3. The Conception-To-Birth Timeline Is Inconsistent/Unreliable

Pregnancy and conception as a whole can be rather confusing; timelines from conception to birth are unique to each person. Having said that, Briana’s timeline is full of glaring inconsistencies that don’t add up.

I’ll begin with this timeline based on bulletprooflarry’s post and my own research. Dates I’ve added myself include linked sources:

May 5th, 2015 - Louis and Briana were first seen together in public.

May 6th to May 31st, 2015 - Briana and her mom followed baby-related social medial accounts.

May 12th, 2015 - Louis and Briana were pictured together in public.

July 3rd, 2015 - Louis is seen with Briana’s brother in Hollywood.

July 14th, 2015 - The first pregnancy report is published.

August 4th, 2015 - Louis confirms pregnancy on GMA.

January 21st, 2016 - The child is reportedly born.

Based on the dates above, these are the possible dates/milestones for her pregnancy:

Scenario A - If conception occurred on May 5th, Briana was 37 weeks and 2 days pregnant on January 21st, 2016. This is considered an early-term birth and about 26% of births occur at 37 weeks.

Scenario B - If conception occurred on May 12th, Briana was 36 weeks and 2 days pregnant on January 21st. This is considered a late-term or late premature birth and about 10% of births occur at 36 weeks.

These dates matter because Briana’s alleged hospital stay was not consistent with a premature or early-term birth. She was pictured in public - healthy and holding a baby carrier - within one week of giving birth.

(Image Credit: Daily Mail)

(Image Credit: larrysbbrbb28)

If she gave birth based on the dates above, it’s extremely unlikely that she or the baby would be out in public so soon.

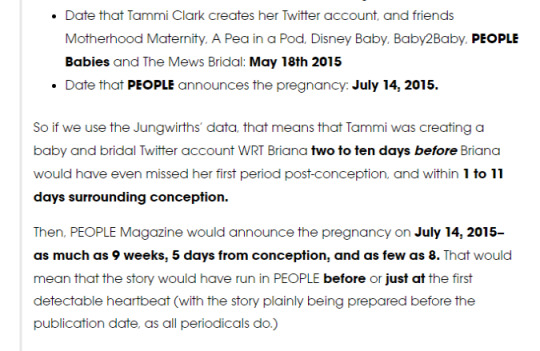

Below are screenshots of an additional timeline from an archived Tumblr post. It provides excellent points about more timeline inconsistencies:

The main inconsistencies and red flags are bolded in the post. It supports the unreliable conception timeline, and it also mentions my next point - the official pregnancy announcement.

The post above mentions that the Jungwirth family followed baby-related accounts before Briana could possibly know she was pregnant. Here’s one screenshot from skepticallarrie proving it:

I’ve also seen several posts that show inconsistencies with the size of Briana’s baby bump. Unfortunately, the most reliable post no longer exists. You can view a web archive of Briana’s pregnancy photos, but most of the image links are broke.

The only post I have saved is a web archive of a long babygate post. The beginning of the post contains photos showing discrepancies in the size of Briana’s baby bump.

The Opposing Views

“Pregnancy looks different on everybody, everyone recovers differently, etc.”

Yes, this is true and a valid point! As a woman who has gone through multiple pregnancies and two live births, I truly understand this argument.

However, the sheer amount of inconsistencies are what make this a red flag. There are too many unreliable and contradicting points to brush this off. It goes beyond the point of “well, each person has a different experience”.

Conclusion

There are a few conclusions/scenarios I believe you can draw from the information above:

Briana was pregnant prior to meeting Louis.

Briana was never pregnant in the first place.

Both are valid to consider, but I personally believe she was never pregnant.

(Author’s note: My calculation for dates are based on the date of alleged conception. Most due date calculators, by default, use the date of a woman’s last mentrual period - LMP - to provide estimations.

I also used Date Duration Calendar for my calculations. Accessible due date calculators only allowed me to input dates from 2019/2020. Depending on the tools and dates you use, your mileage may vary.)

4. The Announcement Itself Was Highly Unusual

This point ties into the first and third points. I don’t consider it a major piece of evidence, but it’s noteworthy due to the other points.

So, there are a few reasons why the Good Morning America announcement stands out.

A. If Briana got pregnant on May 5th, then she was approximately 10 weeks pregnant when the first pregnancy report was published. This also means she was approximately 13 weeks pregnant at the time of the GMA announcement.

If Briana got pregnant on May 12th, she was approximately nine weeks pregnant at the time of the first report and approximately 12 weeks pregnant at the time of the GMA announcement.

B. The public announcement on Good Morning America raises a lot of questions. I’ve had multiple issues embedding the video; the bolded link takes you to the GMA announcement on YouTube.

Anyways, these questions/thoughts - disregarding any Larry theories - come to mind when watching the video:

This is a segment for promoting/discussing their album/music.

The baby announcement is the sole non-album/music related topic that is mentioned during the segment.

The announcement is not organically worked into the segment as a natural talking point.

Louis’ reactions - such as bringing the microphone to his lips and not talking - is very unlike his standard interview demeanor.

The male interviewer and the band members have noticeable facial expressions and body language that suggest discomfort, stress, or awkwardness.

A post by skepticalarrie draws similar conclusions. Her post is much more detailed than mine, and I highly recommend reading/viewing it.

(Author’s Note: I’ve touched upon this on LateToLarry and will make a post here eventually, but body language and facial expressions are valid. They’re valid to the point that they are used in court cases.)

Conclusion

My conclusions here are twofold. One is that:

Announcing a pregnancy - especially a celebrity-related pregnancy - this early is extremely uncommon and unlikely.

The announcement itself seems out of place and very forced.

This particular point, to me, is not extremely strong evidence. I still think it’s worth keeping in mind and is relevant to other points here. So, I’ve included it either way.

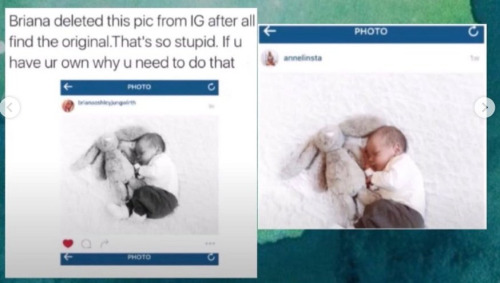

5. Briana Posted Stolen Pregnancy/Baby Photos On Social Media

(EDIT: I wrote this piece before the recent release of Briana’s alleged ultrasound and don’t have time to add it. It’s pretty strong proof and can easily be found in recent babygate posts.)

Babygate posts often point out that Briana and the Jungwirth family used stolen/fake pregnancy and baby photos on social media. It’s a well-known topic that’s often discussed.

I’m condensing this section to a few examples. I encourage additional research if you’d like to see more.

A. This Tumblr post shows stolen baby bump photos that Briana’s cousin Ashley posted on Twitter:

B. This Tumblr post and Twitter post show a stolen baby photo that Briana posted on Instagram:

(Author’s Note: Since I was not active in the fandom at the time, I am relying on information from other blogs and social media posts. I vetted my sources pretty well, but any false information is my own mistake.)

Conclusions

The only word that sums this up is “suspicious”. Using stolen photos of a pregnant woman/baby is not necessary if you are legitimately pregnant. That’s really what it boils down to. It lends to the conclusion that Briana was never pregnant.

6. Photos And Videos Of The Child Are Heavily Altered And Manipulated

It is indisputable that many photos and videos of the child are heavily manipulated to alter his appearance. This goes beyond filters, lighting, and angles.

Several detailed posts show the manipulations; here are some examples:

A web archive of all Photoshop evidence from tellmethisisnotlove

An in-depth post from genuineconspiracy that includes detailed photo evidence.

A video post from freefreddiereign that shows Photoshop evidence based on photos the child.

There is no doubt that his facial features are frequently altered. This is easy to conclude using any free software that detects Photoshop. As a photographer myself, I can easily spot the manipulations.

(Author’s Note: I know that directly discussing the child is controversial. When I first heard of babygate, my initial reaction was discomfort about analyzing a child.

I quickly learned/concluded that his family members are responsible for heavily putting him in the public eye. All content I’ve used for research is based on the family’s posts.

Still, I have personally chosen not post pictures of the child, but the links I am sharing contain photos/videos of him.

Additionally, I used FotoForensics on photos of myself prior to writing this. It was important to me to feel absolutely certain about this point. I’m fine with sharing my own FotoForensics images if anyone is curious.)

The Opposing Views

A. “Freddie looks like Louis in pictures that aren’t Photoshopped.”

Parentage cannot be based on whether or not a child looks like his mother/father. I understand the viewpoint, but it’s simply not evidence. Additionally, thinking the child looks like Louis is a matter of opinion.

There’s also the fact that appearance means nothing overall. Science backs up this statement very well. Examples and references:

“How can children from the same parents look so different?” by HowStuffWorks

“My Baby Looks Nothing Like Me: A Genetic Explanation” by FamilyEducation

Additionally, here is a personal anecdote. I have two sons close to Freddie’s age. One of them looks exactly like his father and nothing like me. The other looks exactly like me and nothing like his father. Despite how they look, they are both of them are our biological children.

Conclusion

There is no reasonable explanation for altering the child’s appearance - particularly to make him look more similar to Louis.

I cannot think of a single argument as to why the Jungwirth family would do this unless they need/want the child to look a specific way.

7. Johannah Deakin’s Official Obituary Does Not Mention The Child

When looking into babygate, I read the argument that the child is legitimately Louis’ son because he is listed as her grandchild in Internet-based obituaries and announcements.

I also read the counter-argument that Louis’ mother’s official newspaper/print obituary does not mention the child. I recall seeing proof, but I did not save it at the time. I did some research and this appears to be true.

The Doncaster Free Press is a local weekly newspaper in Doncaster, and it published an article about the funeral. The article is NOT an obituary itself, but it does list her obituary details. The publication does not list the child among the surviving family members.

If a mistake is made regarding these details, it’s typical for newspapers to post a correction addressing a misprint. Upon further research, the Doncaster Free Press did not issue a correction at any time.

(Author’s Note: I lost my own mother and am personally familiar with how local obituaries are written. Immediate family members - i.e. spouses and adult children - provide information regarding surviving family members.)

Conclusion

The conclusion here is straightforward. Louis and his family chose not to include the child in his mother’s official obituary. This strongly suggests that he is not legitimately related to Louis.

My Opinion-Based Conclusions

Update: After some consideration, I am saving my opinion-based conclusions for a separate post. I originally intended to include them here; transparency is important to me.

Unfortunately, the section became rather long and took away from the main post points. So, I’ll be working on a post that’s just my opinion-based conclusions. In the meantime, feel free to message me with any questions.

Final Thoughts

If you’ve made it this far, thank you for taking the time to read my post! I appreciate the interest expressed for it, and I hope it lives up to expectations.

Again, I want to reiterate this is:

Not an all-inclusive post; I narrowed down my findings to seven points.

Not a masterpost on babygate.

Purposely omitting any potential Larry-related points to remove bias.

I’ve reread this quite a few times, and it’s as error-free as possible. If you spot any mistakes/errors, I’m completely open to making corrections. Just kindly let me know.

This list contains references/research about babygate that I consider the most reliable. It includes Larry and non-Larry related Babygate content.

Tumblr posts tagged with babygate by Tumblr use genuineconspiracy.

A web archive of babygate posts by Tumblr user tellmethisisnotlove (her account was deactivated by staff).

Tumblr posts tagged with babygate by darkrainbowlouis.

Tumblr posts tagged with babygate by skepticalarrie.

Lastly, if there’s interest in an opinion-related post or Larry-related post, I’ll consider writing them. Feel free to let me know as you all did with this post.

Thanks!

Amy (obviouslygenuinely/latetolarry)

#babygate#bg#louis tomlinson#briana jungwirth#freddie Tomlinson#baby tomlinson#end it#larry#larry stylinson#analysis

976 notes

·

View notes

Text

Some Words of Comfort.

Recently, I’ve seen a lot of people (especially those who have read spoilers/are actively searching for leaked content) lament about their future reactions to the deaths of our beloved characters in-game.

We all knew this was inevitable, and that them living was not an option for the plot of the game, but the time has finally come to face it head-on.

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

I understand that someone outside this community might be like “it’s just a game”, but I know it’s way more than that to many: the concept of a female villain that, to many, can be seen as sympathizable and even endearing, is a bit of a new concept— especially on such a large scale as this instance.

In addition, Lady Dimitrescu and her daughters have become a bit of a comfort item for some (with an emphasis on sapphics/wlw, from what I’ve seen personally) in the form of a large, protective, and caring hypothetical partner, or even just a maternal character one can appreciate simply because of her love for her children. Regardless, most of us are here due to some desire for comfort.

Take my own story with this community, for example:

(tws for death, covid, suicide, and general medical emergencies)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Frankly, 2020 and the beginning of 2021 have ruined me. I lost two men who were the only two positive father figures I’ve ever had. The last of the two tested positive for covid and deteriorated within days, to the point where less than a week after testing positive, my family was making the choice to pull the plug. This all occurred days before Christmas and my birthday. On the first day of the spring semester, having not had the time to properly mourn my grandfather, my mother is in the ER for multiple days with an internal infection that doctors said likely would have turned septic if she had waited to come in any longer. This led to three surgeries throughout the next few months. (Oh, and one of my relatives quite literally dropped dead on that first day of class, too). I am also estranged from one of my parents, and they have been trying to contact my family: they have multiple untreated mental illnesses (severe NPD, bipolar, and more) and they are extremely aggressive in that state of mind and they are agitated extremely easily. That only brings more stress, along with resurfacing trauma and related emotions. Every moment of every day has been a struggle. So much so that I failed half of my classes voluntarily simply because I couldn't do them anymore.

To be perfectly honest with you, I didn’t expect to be here right now. I expected that the pain of simply moving forward would have finally overridden my fears of death and that I would have already ended my suffering by now.

Then, in late January, I saw something trending on Twitter. About a new female villain in an upcoming horror game. And it went from there.

As cheesy as it sounds, this fandom and its content seriously saved my life. In the darkest of days, I’ve come to this tag for comfort. The oddest way I found said comfort was through those who were attracted to Alcina aesthetically. I have extremely long-term trauma related to being bullied and being the victim of a hybrid catfishing/'Oreo Game' on early social media by peers in middle school to the point where I do not think of myself as being able to be loved, let alone being worthy of it. Finding this community not only provided a great form of escapism (and opened a door into a fantasy world where I could imagine my own person vampire milf gf), but also gained a little bit of self-esteem (as many of you know, I share a lot of visual qualities with Alcina. -yes, I'm still kinda freaked out about it-) via seeing people where features/attributes like mine were actively praised and desired rather than insulted and pushed away like they have been until now.

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

(okay sorry that kinda turned into a trauma dump but I needed to emphasize the fact that this community has seriously helped me during a really dark point in my life, and I know I can't be the only one with that sort of experience)

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

What I’m trying to get across here is that, like many others, this community and its content have been comforting and therapeutic, and it really is more than just a game to us. It’s entertaining and even a form of escapism in these extremely trying times. We all have some degree of PTSD from surviving a literal mass plague— and this is something we're using as a method of coping. a distraction. a coping mechanism.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

With that being said, here are some ways to hopefully assist in lessening the emotional stress:

(please note that I am not a mental health professional and these may not be healthy coping mechanisms for everyone.)

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

Understand that it’s just a game.

I know, this sounds completely counterintuitive, but it’s more or less about keeping your level of immersion down. Personally, I can’t do scary shit in general: I have to listen to music on low volume while watching dark ARG vids at night or when I’m alone because I get too into it, and then my paranoia kicks in. Sometimes just pausing for a moment and grounding yourself/reminding yourself that this is a video game: a jumble of code and 3D rendering that doesn’t have to affect your views/headcanons if you don’t want it to. Did your favorite character just get slaughtered? Nope, that 3D rendering of them just got un-alived, that’s all.

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

Ignorance is Bliss/We are the Captain Now

Simple: Capcom can’t even pronounce Dimitrescu right, or even acknowledge the way it’s correctly said in Romanian culture itself. How can you trust them to give you a perfect canon? That’s the thing: with that logic, you can’t. What they say is true means little (if anything, for that matter) to your headcanons and preexisting ideas of the Dimitrescus. In short: fuck ‘em.

I’m currently seeking a double major in pop culture, and one of the cool things I’ve learned so far is affirmational vs transformational fandom. Affirmational is where official canon is seen as the law of the land, and followed to a T. Transformational is seen as much more inviting for audiences, allowing them to bend canon as they wish to fit their own creations. This fandom is obviously transformational, so take that game canon, rip it up, and get back to whatever you were doing.

Capcom’s canon is not the end-all, be-all. Far from it, actually.

Want to still acknowledge canon? Godmod your way out of it.

Character A died? It’d be a shame if they emerged from the rubble they 'died in' a few hours later, very beaten but alive nonetheless... how awful would it be if they sulked away, nursed their wounds, and continued to live... (/s)

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

Ignore it completely.

Remember: give it time. Once the game drops, there w be a wave of grief, but eventually, we as a community will recover, and get back to business as usual. Think about it like the in any way. Stay with the version in your head that makes you happy.

Get Creative!

If you're into creating fanart, writing fics, or even just posting a list of headcanons, take some advice from the late Carrie Fisher: "Take your broken heart, and make it into art". Make the fluff oneshot of your dreams! Draw the fanart you've been wanting to! dump lighthearted headcanons into the tags! Not only will it cheer you up, but sharing it with the community will spread the love!

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

I know a lot of people are struggling with this emotionally (especially with the pandemic making entertainment like this even more important sources of escapism and coping mechanisms) and I hope that, at the very least, I was able to help comfort one person who reads this.

Remember: give it time. Once the game drops, there will be a wave of grief, but eventually, we as a community will recover, and get back to business as usual. Think about it like the flowers that bloom after major wildfires: after a period of loss, some beautiful can still come of it.

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

💙

#tall vampire lady#lady alcina#lady dimitrescu#daniela dimitrescu#castle dimitrescu#alcina dimitrescu#dimitrescu daughters#dimitrescu family#dimitrescu sisters#cassandra dimitrescu#countess dimitrescu#house dimitrescu#resident evil#re: village#resident evil village#resident evil: village

165 notes

·

View notes

Text

Official post for my orange side theory

AND NO IT IS NOT WRATH OR ANGER

WARNING: Spoilers for Working Through Intrusive Thoughts. I'm not gonna bother adding the spoiler tag to this post because all the spoilers are going to be under the read more.

Also this post is long so be warned.

Back in early August of 2020 I came up with an orange side theory I have stuck with for a long time. I always found the orange side theory of wrath/anger to be odd, seeing as anger is an emotion not a personality trait, and therefore made an effort to try and discover what I can see the orange side being. In light of the fandoms response to the latest asides saying that orange being wrath is "now canon", I figured it was time to bring it back, along with new points and explanations.

What is the orange side exactly? The answer is simple. He might not be this exactly, but orange is naivety, irrationality, or the inability to see logic clearly.

This started when I made the connection to the dark sides being complete opposites to one another. For example, Janus and Patton are opposite ends of the moral spectrum, Roman and Remus are opposite ends of the creative spectrum, and Logan himself is on the functionality/rationality spectrum. The opposite end would be something like naivety or irrationality.

After that post, I made another a couple of months ago with 3 main points, the first being the opposite ends point. The other two points are just as important.

Point 2: It ties into the 3 monkeys theory

The recent episode confirmed the 3 monkeys theory, further solidifying this point. In case you live under a rock or are new to the Sanders Sides theories, the 3 monkeys theory is based on the whole "See no evil, hear no evil, and speak no evil" thing. The dark sides all have powers relating to each of these.

Janus has the ability to mute the sides (speak no evil), Remus has the ability to muffle the sides (hear no evil), and Orange has the ability to... make... their eyes pretty? I'm sure that we will get an actual explanation on how he influenced Logan in the future lol. Regardless, orange is definitely see no evil.

Now you may be asking, Indigo, how does this tie into irrationality?

Do you know what irrationality is? The inability to SEE the world clearly or to SEE reason or logic. Irrationality blinds you to reason itself. It makes sense, seeing as emotions are illogical and orange clearly did something to push Logan to an outburst. When orange provided his influence, Logan's eyes glowed orange, indicating he lost the ability to see purely logically. While, yes, orange did this with anger as a vessel, irrationality takes many forms.

Point 3: Color symbolism

Something that is frequently overlooked when fanders make theories about the sides is that each side ties into their color scheme in some way. Roman being red ties into him functioning as Thomas' romantic side, Patton's light blue tying into his gentle nature and trustworthiness, etc.

Orange is a color that is tied to joy and youth. Being naïve to the world around you crumbling down will often make you happier. While some naivety is great and can make you happy, in large doses it is a threat to your well being.

Point 3.5: How is this connection accurate?

A great deal of the plot in this episode, especially the endcard, showcases this irrational blindness to all of the issues the sides are having with each other. Throughout the episode, Logan keeps having to sacrifice his plan to help Thomas and then once Nico calls Thomas, Thomas doesn't know how hard it hurts Logan to once again be brushed off. In the endcard, Patton and Roman tell Logan that this is more important, not realizing how rejected Logan feels.

This isn't just happening with Logan either. Patton and Virgil have had some rising tension as of late as well. This is showcased the most clearly in this video when Virgil snaps at Patton and says "Oh thank goodness. You're giving him permission." sarcastically. Patton takes this as "I didn't know you would give him permission" whereas Virgil was meaning "He doesn't need your permission to feel good about this". Furthermore, each of the light sides have argued with each other individually in different episodes except for Virgil and Patton.

With Janus recently being more accepted, Remus appearing and hurting everyone, and the tension each of the main sides have... it's all going to fall apart. Nobody but the dark sides seem to notice this tension, not even Thomas. Why? Because they are being naïve. The orange side is either keeping them blissfully unaware or the very fact they are unaware is giving the orange side power.

What is the new point you mentioned?

This video with the orange side really got my gears turning. I began making connections that otherwise I didn't have the ability to make, or never happened to think of. The fact my theory has managed to hold up in a heavy orange side lore video only solidifies my confidence in this.

Point 4: The dark sides revolve around the truth

This theory is a little more of a stretch but if I'm right, then this is all the evidence I really need to confirm that orange is irrationality.

Janus is essentially the ring leader of the dark sides. He keeps them hidden until Thomas wants to be aware of them, with the potential exception of Virgil who we don't know when he was revealed to Thomas. However, each dark side has something in common besides witty remarks. They all center around the truth.

Janus and Remus are easier to figure out, seeing as Janus is literally the embodiment of lies and Remus has multiple times where it is obvious he provides the unfortunate truth. Remus being the bringer of truth is showcased multiple times, which I will only bullet point because this post is more-so about orange than him.

His line of "I would never hide anything from you."

Janus bit in Forbidden Fruit that goes "No longer will you deceive yourself about the ugliness within you."

Logan admitting Remus can help Thomas in his own way

Virgil on the other hand is harder. Unlike the other two, Virgil represents a completely different angle of this "truth theme". Virgil represents the fear of both the truth and the unknown. Why would Janus even need to even repress the dark sides in the first place if Thomas wasn't afraid of the truth that they were apart of him? Why would Thomas had admitted he didn't want there to be more dark sides after he asks if there were more of them if it were not fear he had more unwanted parts of him and fear of not knowing what they were?

Virgil knows Thomas' fears. This would have made admitting he was a dark side such a hard feat. If he felt Thomas was chill with the dark sides, Virgil could have instantly told Thomas he was in fact one of them. In a way, this makes Virgil the perfect bridge between the light and dark sides. The dark sides provide Thomas with the truth he needs or wants, and the light sides figure out how to handle it.

Point 4.5: What does this have to do with orange?

Orange would keep Thomas from the truth. While, yes, this is the exact same thing Janus does, Orange would do it another way. Janus makes Thomas unaware of the truth he KNOWS. Unconsciously, Thomas still knows what Janus hides. This makes it entirely different from how naivety works. Naivety would keep him from ever learning the information in the first place.

Furthermore, we saw that orange is potentially connected to Janus in some way. The very last thing we see in Working Through Intrusive Thoughts is the flash from Janus' eyes, to oranges eyes. This could be a slight hint at Janus and Oranges functions not being so far apart.

Or the writers just thought it would look neat. That too.

Is Logan the orange side?

I can say with almost 100% certainty the answer is no. We saw before each sides introduction, they manifested themselves in the other sides.

Janus silenced Roman in Accepting Anxiety Part 2, Remus manifested in Roman by giving him random unwanted outbursts (like the naked Aunt Patty line that Roman said he didn't know where it came from in the Christmas episode), and therefore it follows orange is manifesting in his own way.

Furthermore, Logan is not the type of character to turn evil. He has outbursts and is being beaten down but he would never snap for good. If anything, we have seen from Putting Others First that he would only appear as needed if he felt ignored.

Logan is not one to let his emotions make irrational decisions for long, and he almost always goes to make up for his mistakes the moment they happen. He always has Thomas' best interests at heart and has witnessed Virgil realizing force is not the way to go about it.

It makes no sense for his character and there is no reason for it to happen narratively.

Please note that this post is simply a theory and I do not wish to start arguments about if I am right or not. If you are going to provide counterclaims, please do so respectfully and do not clog my notes with your own essay. Thank you!

#sanders sides#thomas sanders#ts orange#ts orange side#janus sanders#ts janus#ts deceit#ts virgil#ts anxiety#virgil sanders#ts remus#ts creativity#remus sanders#ts roman#roman sanders#ts logic#ts logan#logan sanders#ts dark sides#ts theories

86 notes

·

View notes

Note

If you want to use this username please post more often and more creative if you don't please give this username to someone that deserved

Listen, guys.

I am quite aware of my shortcomings as a mod.

I have over 60 asks in my inbox, which have been there for years. Years! And this weights on me quite a lot, more than I can express. I follow the zutara tag as well as other multiple zutara blogs, and I try to reblog content as much as I can. ***As much as my “real-life” responsibilities allow me to.***

Despite my various attempts at getting additional mods over the years, it hasn’t worked out. At all. People have lives and responsibilities, and although we wish we could be dedicating all of our time to our favorite ships, as you grow older, this is simply not possible to do.

And I’m sorry, I truly, deeply am sorry I cannot run this blog like I used to. But please know I will always be here, a proud member of this community and this ship.

I don’t have a personal computer, so now that I’m working from home I’m totally using my work computer to update the blog properly as much as I can, and to finally update tags and the About Us page.

And Anon, I am sorry to disappoint, but no one will ever take this URL from me.

I’ve been a mod at Zutara since February 24, 2011, and later inherited the blog alongside Senbo-sama (who is no longer on tumblr) from its original creators, Emily and Amanda, when they officially left on May 30, 2011.

The blog itself was created on February 28, 2010.

So you see, we have earned our keep. We have earned our name, and although we haven’t been that active, we have years behind us of hard work proving our devotion and love for this ship and this wonderful community.

And we will always be here.

73 notes

·

View notes

Text

The Rules of Marketing Your Fic

This is by no means an 'official' writing guide, not backed by studies or science. Say rather it's the things I've observed, trial-and-error over my going-on-twenty years of writing and publishing fics online.

1. Starting out in a new fandom

The first stage is participation. You need to get to know your territory, and make yourself known to the territory. Look around. Find the hubs of fandom activity and, if they are open to newcomers, join them.

Participate in discussions. Look at other people's creations and comment on them.

Create things that are integrative and participatory: kink memes, contests, writing memes that are going around. Read, analyze, and add your own thoughts to meta posts.

Get people used to seeing your name and face (or icon.)

2. Producing your own content in the fandom

When you feel that you have a) read enough in the fandom to get the lay of the land and b) participated enough that your presence is known, you can start producing more of your own content that is not integrative or participatory.

You can subvert the local memes and conventions (in fact, that's a great way to make interesting content) but you have to know what they are first.

3. Gaining and maintaining a reader base

If people comment on your stuff, if your time and/or social spoons allow, answer them! Your goal is not just to passively collect reader attention but to cultivate a relationship with your readers. Positive feedback encourages desired behavior!

A few guidelines for replying to readers:

a. Thank them for their time and attention. It can get repetitive writing "Thank you for reading" over and over again, but remember that for the most part they will only see YOUR reply, not the replies you make to everyone else.

b. Write a reply that is approximately as substantive as the comment they left. If they only wrote a few words, a reply of a few words is fine. But if they wrote long happy paragraphs, that deserves at least a few lines of response. If you reply more to longer comments, people will leave longer and more substantive comments!

c. If they have questions, answer them to the extent you feel comfortable doing so. Some authors wish to withhold info that might be spoilery for later in the fic. Personally I'm of the opinion that if the reader wants to know, I'll tell them -- if they don't mind spoiling their own surprise, I don't mind either.

d. Don't get pulled into negative spirals. If someone writes a negative review or otherwise a comment that you don't feel comfortable with, answer only to the extent that you feel you can do so civilly. For instance, if someone says "Do you think you can make this story [pairing X?]" you can answer with "I don't plan to go in that direction, but that's a nice thought." If they say "I can't keep reading this fic now that you've done..." you can answer with "Everyone has their own limits. Thank you for your time up till now."

If you don't feel you can even say that much politely, don't answer at all. If you need to, delete the comment (assuming your platform has the means to do so) so that you aren't reminded of the negative feelings every time you visit the page. Whatever you do, don't ask other people to pile onto the hater on your behalf.

4. Maintaining interest in your works

This gets out of the realm of fandom etiquette and into more vague marketing concepts. Once again, this is stuff that I have found to work over time.

a. Have complete metadata for each work

Each work should have a title. Don't go around calling your work "that stoner AU my buddy and I came up with" or a vague description (even if it's accurate.) Coming up with titles is hard, I know, but it needs to be done. If you can't even come up with a full structure for your work, that signals that it's unfinished and unpolished, and less appealing to readers.

Each work should have a description. Each description should be unique. If you have a series of works that all look the same at first glance -- "another modern AU," "yet another nightclub rave story" "more sap and porn" then readers will have trouble keeping them apart in their minds. Make sure that each story is its own thing and has its own, distinct description. Look up the concept of an "elevator pitch" and apply it to your own work.

Tag and warn appropriately for each work. Don't assume "it has all the stuff all my work always has, you know me" because you don't know how people will find your stuff or come in for the first time.

b. Keep a consistent schedule

Easier said than done. I KNOW, believe me I know. But this is essential for all ongoing works. If you want your readers to follow along for a long time, your fic has to become part of their weekly schedule. Try to update on the same day of each week whenever possible.

Try to avoid unplanned hiatuses. If you know you aren't going to be updating for a while, add a note to that effect on the chapter. Readers will be more willing to accept hiatuses if they know they're coming, and they aren't looking fruitlessly for an update and being disappointed when it doesn't come.

c. Post, promote, and reblog at peak hours

Pretty standard stuff. Try to post at a time when your readers will have the time to sit and engage with the work, not just note it in passing as they hurry to work. If you finish late at night it's probably better to wait until the next afternoon before posting.

d. Keep your chapters at a comfortable length for consumption

This one's variable -- everyone's reading habits are different, and it's more important to keep a consistent schedule than a consistent chapter length. But a chapter that is too short may leave readers feeling frustrated, wondering why they bothered engaging for so little content. And a chapter that is too long may actually overwhelm readers and cause them to put off your chapter or fic until later -- maybe forever.

Generally speaking, between 1k-8k is a good chapter length. Less than that, consider waiting until you have more. More than that, consider splitting the chapter into multiple chapters.

e. Keep your personal drama out of the writing

Try to avoid what I call author histrionics. It's tempting to get drawn into making excuses or expressing frustration in the author's notes, but it's not really the place -- it doesn't age well. If you have a relationship with commenters where they can commiserate with you, then you can discuss whatever the problem is in comments, but keep it out of the fic itself.

That’s all I can think of for now. If other authors reading this have ideas of their own, feel free to add them on!

661 notes

·

View notes

Text

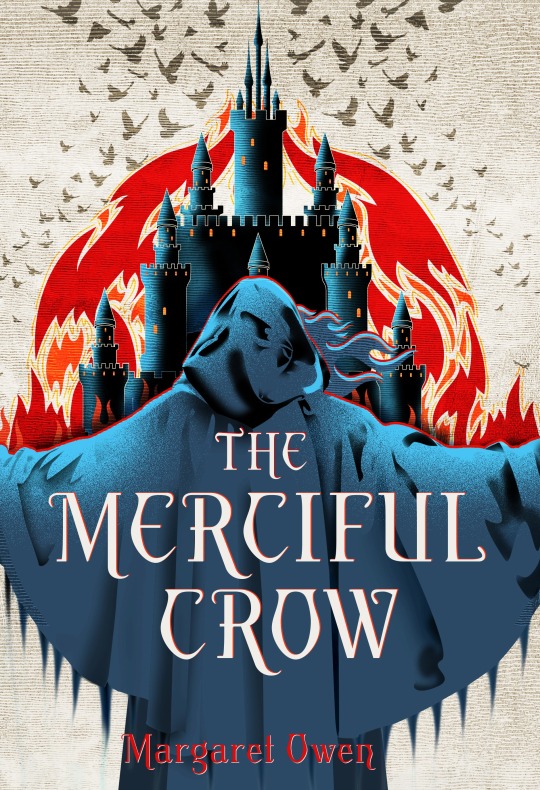

THE MERCIFUL CROW Master Post!

GUYS. JUST LOOK AT THIS COVER. GUYYYYYS. GUYYYYYYYYYSSSSS!!!!!

I have been screaming about this A LOT, but I wanted to consolidate some info in one handy post for y’all. So I will be updating this with new info as it comes in, and as always, you can check my #themercifulcrow tag for things like art and excerpts that aren’t included here.

SO. Let’s start with the big one: preorders. The neat thing is that preorders count towards the numbers for the NYT Bestseller list! I’m not gonna lie........... I really wanna be on that list. AND I THINK WE CAN MAKE IT HAPPEN.

So here are links to preorder from major retailers: Amazon, Barnes & Noble, Indigo Books, Books-A-Million, IndieBound

Can’t preorder but still want to help out? (or you want to do both because you’re a rad human being?) YOU DEFINITELY CAN, AND HERE’S HOW:

Request The Merciful Crow at your local library. Most library websites let you request a purchase, and that’s HUGE. Look for links like “Suggest a Purchase”, “Ask the library to buy a book”, etc - sometimes it’s under the Contact page, sometimes under Services, but it’s always free for you! They’ll want to know the book title (The Merciful Crow), the author (Margaret Owen! That’s me!) and the ISBN (1250191920).

Add it to your TBR on Goodreads! For better or worse, the Goodreads stats can be an indicator of how interested readers are in the book. All you have to do is follow this link, find the drop-down menu beneath the cover thumbnail on the left, and select “Want to Read.”

Honestly, just talk about it??? Word of mouth remains the most effective form of marketing, and yet also the most low-commitment way to do it?? Literally just tagging stuff on instagram or dropping a link in the groupchat??? idk it’s witchcraft y’all but it works somehow

Those are all the key ways to support the book right now! The Merciful Crow comes out on July 30th, 2019, so we’ve got plenty of time to really make a difference.

Now, below the cut: a bit about the book itself.

SOME QUICK STATS:

The Merciful Crow is a Dark Young Adult Fantasy. It releases July 30, 2019. It’s being published by Henry Holt Books for Young Readers, a division of the Macmillan Children’s Publishing Group. For bookstagram folks, bloggers, reviewers, etc: it is a Fierce Reads title, so you’ll want to reach out to them for publicity-related things like Advance Copies (though you can submit a direct request through my Google form here or keep an eye out on Edelweiss and NetGalley for e-ARCs.)

THE OFFICIAL SUMMARY:

A future chieftain

Fie abides by one rule: look after your own. Her Crow caste of undertakers and mercy-killers takes more abuse than coin, but when they’re called to collect royal dead, she’s hoping they’ll find the payout of a lifetime.

A fugitive prince

When Crown Prince Jasimir turns out to have faked his death, Fie’s ready to cut her losses—and perhaps his throat. But he offers a wager that she can’t refuse: protect him from a ruthless queen, and he’ll protect the Crows when he reigns.

A too-cunning bodyguard

Hawk warrior Tavin has always put Jas’s life before his, magically assuming the prince’s appearance and shadowing his every step. But what happens when Tavin begins to want something to call his own?

CONTENT WARNINGS:

The story deals with enormous differences in privilege and power. I wanted to be honest about what that looks like without being harmful to readers who have first-hand experience with marginalization, but I still want to provide a list of content warnings to let readers decide that for themselves. The story involves the following:

Discussion of violence and harassment targeted at the main character’s specific caste

Depictions of violence and harassment targeted at the main character’s specific caste

Brief threat of a sexual assault, and discussion of the societal implications

Mention of past child abuse

Mention of past sexual assault

A scene involving a cat in a significant and distressing amount of danger

General gore and body horror... like, guys, I didn’t mean to write a body horror book but I sure did. The main character cuts throats for a living and, as you may have seen me joke, the big bag of human teeth is not the most upsetting thing in the story, not by a long shot. I have no time or patience for torture porn, so the body horror is more in the Uncanny Valley range of nightmarish (less Zombieland, more Coraline) but it’s meant to be very unsettling nonetheless.

LGBTQ+ REPRESENTATION

I got this question on twitter, so I’ll basically rehash my answer here! The short version is yes, there are LGBTQ+ characters, but their specific experiences and relationships are not explored much.

The longer version is this: I think writing inclusively is the bare minimum we can expect from authors, especially YA authors, in the year of our lord 2018. As such, of the main trio, the prince is explicitly coded as gay, and the love interest is coded as pansexual; one of the secondary characters uses they/them pronouns; another is a woman with multiple spouses; I try to avoid gender-binary language or language that ties gender to genitalia; there are casual references to same-sex relationships and marriages. I am also VERY aware of the Bury Your Gays trope and I am avoiding it like the plague.

That said, I currently identify as your garden-variety cisgender straight woman, so I don’t think it’s appropriate for me to occupy space on the bookshelf that is better served by LGBTQ+ authors writing much-needed stories about their experiences and relationships. (Nor do I think I’m qualified to write those stories, tbh.) So there’s a whole array of folks present and identified on the page, but the main romantic relationship that is developed and explored is between a cisgender pansexual boy and a cisgender straight girl.

PREORDER CAMPAIGN

If you do preorder the book or place a request with your library, take a screencap and/or hold onto the receipt! I’ll be putting together some fun things to mail y’all as a thank-you once we’re closer to the release date.

That’s all I got right now, but please hit up my inbox if you have any questions!

#my books#the merciful crow#master post#you guys if I debut on the NYT list I am gonna be so smug#so so so so smug

89 notes

·

View notes

Text

Brothers (13/?)

[Part 1] [Part 12]

[Read on AO3]

Still on sucky app, still can't do a cut - sorry for another long post :(

Previously:

Jedi Knight Obi-Wan Kenobi realised that young Padawan Anakin Skywalker was destined to be trained by him rather than injured Jedi Master Qui-Gon Jinn. Meanwhile, Senior Padawan Siri Tachi, a good friend and former paramour of Obi-Wan’s, left the Jedi Order.

The entire Temple was talking about Siri’s departure - none more so than Obi-Wan’s age-mates. While Siri was a couple of years younger than them, she had been closer to them in sheer talent than her own clan and they had adopted her in short order, rather liked Tooka Clan had adopted Anakin as one of their own despite his status as a padawan rather than an initiate. Not one of their friends seemed to know exactly what had happened - she hadn’t confided in anyone.

That in itself wasn’t surprising - Siri Tachi was not a generally an overtly emotional person and didn’t like to appear weak to anyone - least of all her older friends. But when she was genuinely struggling she usually came to one of them for advice, or to Quinlan Vos, because he wouldn’t laugh at her either.

(Okay, he probably would laugh, because he’s one of those people, but then he’d help her fix it with no actual judgement and potentially an extreme amount of prejudice depending on the situation.)

They were most surprised that she hadn’t said anything to Obi-Wan, given how close the two of them were.

“I’ve been so wrapped up with everything else,” he said in horror. “What if I missed something? Is this my fault?”

Garen smacked him around the back of the head for that, which apparently saved Quin from doing so if his raised hand was anything to go by.

“You are not responsible for anyone other than yourself and that baby padawan of yours,” Quin told him firmly. “And I know you tried to talk to her more than once. If she didn’t speak to you, or us, that’s her fault, not yours.”

He knew that was true - he did - but it didn’t make him feel any better. And he couldn’t help the feeling that he was still missing something about the whole scenario

.oOo.

The Council accepted the change in Anakin’s official master with a lot less fuss than Obi-Wan had expected. A cynical person might even have inferred that they too had expected this to happen sooner or later. The only stipulation was that Qui-Gon continue to support them, as any master would for their first grand-padawans anyway.

Not a word was spoken about the fan fiction - maybe no-one had brought it to their attention? Obi-Wan knew there was no way he or Garen would, but the Council tended to have ways of knowing things anyway.

The quartermaster summarily issued him and Anakin with appropriate lodgings but, by mutual agreement, they decided not to change how things were for the time being. Qui-Gon still needed help, and that would be more forthcoming if Anakin was right there and able to call Obi-Wan rather than them waiting for Qui-Gon to get over his innate stubbornness and call them far later than he ought to have done.

As time went on and Obi-Wan became more assured that he was on the right track with Anakin’s training, Qui-Gon began doing some new research of his own. Using data feeds and Quinlan Vos as his eyes on the ground, he was trying to piece together where Masters Dooku and Sifo-Dyas had disappeared off to. There was suspiciously little in terms of actual news, and Jedi did tend to stir up the news feeds unless they were undercover, and Quin himself was limited to only short excursions to the Outer Rim, given that he had a young padawan of his own to care for.

Before he could decide to do something rash, an invitation was extended to the three of them.

“It can’t have been that long, can it?”

Qui-Gon raised a sardonic eyebrow. “It certainly feels like it from my perspective. This has been a very long ten months.”

The Queen of Naboo was hosting a celebration of the liberation of Theed, and a commemoration of those who gave their lives to ensure that Naboo remained a free world under its own sovereignty. Naturally, she had extended invitations to the three Jedi heroes of the day, and the way that Anakin was bouncing on his toes made it really difficult to be able to say anything other than ‘yes’. Not that Obi-Wan was inclined to: as Garen pointed out, they would be in a prime position to do some snooping on the Palpatine's front.

The flip-side of that particular credit chit was that the man himself was more than likely going to be in attendance. It would seem something of a snub were he not to be invited, Shmi explained later that evening when she commed, but they would all keep a close eye on Anakin to make sure he didn’t come to any harm during the festivities.

Shmi Skywalker was positively flourishing at the palace, and was using her skills at organising people and reading rooms to great use as an event planner for the royal retinue. Skills earned in slavery were skills nevertheless, and Shmi knew how to cater for multiple species courtesy of her unfortunate life, and the best ways to keep those species from killing each other.

Obi-Wan found himself wishing she were a Jedi, because that would be very handy in the field. Republic politics and etiquette lessons only went so far, after all.

Something must have shown on his face, because Shmi laughed. “Maybe I’ll have time to teach you some of the things I have learned while you are here,” she offered graciously. “It seems only fair, since you are teaching my son the ways of the Jedi.”

Obi-Wan inclined his head graciously to her. “And in turn, I shall teach Anakin so that perhaps his horrible lack of diplomacy skills will not get him killed before he is even knighted.”

She shook her head. “He knows better. Maybe he has become overconfident in his freedom?”

That was something that hadn’t occurred to Obi-Wan: Anakin certainly wasn’t likely to start a war with his lack of diplomacy, but ending one wasn’t within his capacity either. That ability to read people should be something he was capable of, given his background; it was a game that any slave with any intelligence could play because they needed to in order to survive. Why had Anakin lost that skill?

Perhaps he was worrying overly - Anakin was more than young enough to relearn anything he had forgotten, and his enthusiastic honesty was something that most people tended to find charming.

Maybe that was all it was - Anakin was enjoying being able to speak from the heart rather than having to choose his words carefully. And that was a small freedom that Obi-Wan was loath to take away from him. It had been less than a year since Anakin had been freed, and he was still only a little boy really, for all that he was already a padawan.

And perhaps that was something else that Obi-Wan should address - the initiates the same age as Anakin would be doing their training in their clan groups rather than one-on-one as Anakin was. Maybe some social interaction outside of the classroom with his age-mates would benefit him too? Particularly as he was no longer lagging behind in his agility or lightsabre skills: he should be able to keep up with them easily, and it might help his confidence if he were able to see that he was now on par with his friends.

And if that interaction might just possibly involve a way to push a certain, self-assured initiate at Garen, than that would just be a bonus

.oOo.

In the end, it was lightsabre combat that ended up being the shared lesson - their clan master, Kayln Amala, felt that having a Jar’Kai user in the mix would introduce an interesting element to Tooka Clan’s training. Obi-Wan thanked the Force profusely for smiling upon his devious plans.

Anakin and Zana paired themselves off naturally, which brought her to Garen’s attention sooner as she frowned at Anakin’s second blade.

“Okay, I get it in katas,” she said, glancing over at the pair of observing knights, “but how do I defend myself in a proper spar if I only have one lightsabre?”

“You need to make sure you know where both of Anakin’s blades are at any one time,” Obi-Wan said. “It sounds difficult - and it is - but it is not impossible. Here” and he raised his voice so that the whole class could hear, “why don’t you watch Master Muln and I spar first?”

“You’re enjoying this,” Garen muttered as he levered himself to his feet.

Obi-Wan arranged his face into his favourite diplomacy mask, projecting innocence, as they moved into position opposite each other.

Obi-Wan enjoyed sparring with Garen - it was a true test of his skills to keep up with the second blade, and Garen, while not going too quickly at first so as to demonstrate to the initiates, was not going easy on him. Distantly, he could hear that the younglings’ clan master was talking to them, pointing out things they may have missed, but the spar was taking his full focus.

Garen was good. It had been so long since they had sparred together properly that he’d forgotten just how much Garen had picked up from his master. And, as they picked up speed, he got sneaker with that second blade too - unlike some dual-wielders who made the mistake of using their second blade in tandem with the first because they lacked the strength to counter a two-handed strike, Garen had sheer size on his side and could use the shoto as it was meant to be - as a second offensive weapon.

They were both breathing hard by the time Garen tagged him out and Obi-Wan bowed gracefully.

“That was awesome!” Anakin told them both, grinning from ear to ear.

“Totally was,” Zana agreed, eyeballing Anakin. “Now I know how to kick your scrawny human butt, Ani.”

“Do we get to try two blades?” Zisk Sei’lya, one of the Bothan twins, asked before Master Amala could scold Zana for her undecorous behaviour. Not that it would make that much of a difference - Obi-Wan recalled plenty of behaviour unbefitting of Jedi in the crèche.

Garen grinned. “You certainly do - we have some training blades for you to practice with after you’ve competed this sparring session.”

.oOo.

Zana didn’t beat Anakin, but it was a near thing: there were at least two instances where she would have had him if she had been a little more underhanded, a little less confined by the niceties of crèche spars. It was unexpected of her not to cheat, Obi-Wan thought.

The Bothan twins had both shown aptitude with a second blade although neither had been sure about adopting it full time. Zana had taken to it much like she did with everything else Obi-Wan had seen - with enough determination to succeed no matter what.

“I know what you’re up to,” Garen grumbled as they collected up the training weapons to return them to storage.

“Up to?”

“It’s not going to work, showing off all the shiny initiates.”

“I have no idea what you mean - I just want Anakin to be able to spend some time with his friends while he still can. You remember what it was like once we were all apprenticed? We didn’t see each other for nearly two years! And even if I was trying to set you up, you need someone older - someone who’ll stand up to you and tell you when you’re being ridiculous.”

The fact that Zana Rar was already capable of that was completely beside the point, for the purposes of this conversation at least. She would be Garen’s padawan soon enough: he had been watching her far too much throughout the session for it not to be guaranteed.

#star wars#obi wan kenobi#qui gon jinn#anakin skywalker#garen muln#zana rar#brothers#qui gon lives au#everyone seemed to love zana so she's making a comeback#what will they find on naboo?

9 notes

·

View notes

Text

Get to Know the Writer (meme)

Get to know the writer meme

@isabellajack tagged me, which I thank you for. I won’t be tagging anyone else, but I wanted to answer this for you. Any other writer who may be in a funk is welcome to grab, post and pass along.

Rules: Answer the following questions & share about yourself!

#1 Poetry Or Prose?

I’m leaning towards Poetry… though I’ve have to admit, haven’t read any in most recent years…

#2 Dialogue Or Description?

In regards to my own writing… it could go either way, but I do lean towards dialogue first and then build around it. Sometimes… if the dialogue is done right and the voice of the character captured, that can sometimes lend to an unwritten description of a moment in regards to Fanfic. As a reader and writer of fanfic, I know what to expect of the characters… how they act, move, expressions… But I must admit, when reading… sometimes there are those fic authors that get waaaaaaay too descriptive… when that happens I tend to check out before I can get into the fic too deeply because there’s too much “colorful verbiage” being thrown together in an effort to try to stand out that I loose what the writing is trying to say. I don’t need 6 types of metaphors/similes in one paragraph to tell me how a person feels. Don’t beat me over the head with words please. I try to steer clear of beating readers over the head.

#3 Favorite Character You’ve Ever Written?

*Clutches Chest & Gasps* That is like asking a parent to choose a favorite child! There was a time where I didn’t have too many to choose from… but that was (holds breath) nearly two decades ago… and so many fandoms ago… BUT, if I really had to choose, it would be when I wrote TeniMyu RPS TutixNagayan. OMG I was smitten with those two.

#4 Least Favorite Character You’ve Ever Written?

I don’t think I really have one? I don’t tend to write fics with characters I’m adverse to. Life can be a drag in and of itself, I prefer to write characters I love. Sure there are characters I use that are antagonist, but they serve a purpose and I don’t feel any true negativity towards them, or struggle with writing them. Even writing those characters that are unsavory, there is still something to like about getting their characterization accurate.

#5 Favorite Character Trait To Write?

Specifically Pre-Serum Steve’s stubbornness, that is always fun. Generally, I tend to strive for realism of the characters, tangibility. Providing a snapshot in their lives that is 100% believable built off of the traits we know and love.

#6 Favorite Character Name You’ve Come Up With?

Rosalie… she was a niece of Ray Vecchio’s from a Due South I wrote. Man, that was too many moons ago…

#7 Biggest Weakness In Writing?

Bringing a fic to a close. Sometimes it feels like I am forcing it, and it’s writing the ending that can take the longest for me. I don’t want to beat a dead horse as I know, all fics must come to an end, but I struggle in keeping them from sounding/feeling cheesy and short.

#8 Biggest Strength In Writing?

My love of the characters I am writing. It’s my love for a character/pairing that spurs me to write in the first place. There has to be a love for the characters for any writer, a desire to examine what we are given further than a given canon because it’s connected with something in outselves.

#9 Favorite Trope?

There can never be enough Coffee Shops AUs... Well written Mpregs… A slow build/burn that has the lead up to a pairing’s first kiss feel more intense than the explicit scenes that may follow.

#10 Least Favorite Trope?

Trash Parties… I just don’t get that. Dom/Sub isn’t my go to fic, either. I can handle “light” versions, but even then just seeing it tagged Dom/Sub can make me scroll right on by an author’s work.

#11 Author You Look Up To?

Will I be looked down upon if I do not have an author to list (even professional)? History of me: growing up… I detested reading. This caused a huge struggle for me in school because if I had no interest in the subject matter, I was reluctant to read it. Nothing really appealed to me. Graduate, add fandoms, and now I can’t stop reading. I know there are people who follow fanfic writers and everything they produce, but I can’t really pinpoint a specific author. When I read a piece, it’s subject matter I pay more attention to, not the name of the person who wrote it. I’m an Equal Opportunity Reader.

#12 Favorite Book/Piece You’ve Written?

I’m not one to toot my own horn usually, and it can be hard to single out one specific fic overall, so here are a few more recent ones:

The Social Network - https://archiveofourown.org/works/875838 (Missing Transition)

RPS-The Social Network – https://tsn-kinkmeme.livejournal.com/10450.html?thread=19299026#t19299026 (Hiding in Plain Sight - WIP)

Merlin - https://archiveofourown.org/works/1454626 (It’s You and Me Against the Odds)

RPS-MCU - https://archiveofourown.org/works/8294710 (No Longer a Place)

MCU - https://archiveofourown.org/works/12906372 (They Say Love is Pain…)

#13 Favorite Line You’ve Written?

They always say home is where the heart is, and before Chris’s heart has always been settled and well rooted in the city of Boston, but now… this is the first he realizes that home, his home, is no longer a place, but a person.

#14 Fanfiction Or Original Work?

FanFiction. I like the feeling of immersing myself in a world I already hold dear to my heart. It’s a bit like coming home, a comfort, of knowing what to expect of characters even when I may not know where a story is going and still get surprised by them all the same.

#15 Favorite Genre To Write In?

I tend to write romance. I just want to see people happy.

#18 Heavy Description Or Little Description?

As I mentioned earlier, I don’t like seeing what is being said getting buried in colorful, disjointed ramblings of an author trying to prove their artistic flare. I used to challenge myself with 100 word drabbles to help myself figure out ways to tell a full fledge story in few words. Description is totally necessary, but not to the point to losing focus.

#17 First Person, Second Person, Or Third Person?

Definitely third person. I may have written one first person POV fic eons ago, but I generally skip reading anything that is First Person, unless it is by an author I might recognize and love.

#19 Multiple Point Of Views Or Just One Point Of View?

I lean towards one point of view, but I also like multiple points of view if it is done right. If a writer is jumping between POVs within a paragraph/part/chapter, that tends to get a bit muddy (especially when using pronouns). When I write multiple POVs, I choose a POV per section/part/chapter and make it clear in the beginning of each whose POV I’ve changed to.

#20 Multiple Protagonists Or Just One Protagonist?

I can barely handle one… so just one please.

#21 Favorite Villain You’ve Ever Written?

I have no answer for this.

#22 Longest Piece (Word Count) You’ve Ever Written?

Individual Piece: A work in progress that has stalled out called “Hiding in Plain Sight.” It is a Real Person Fic of Andrew Garfield/Female Jesse Eisenberg and it is presently 49K words. I, personally, am not a fan of reading fics where a character’s gender it changed… I’ve read a few definitely, I’m not adverse to them, but it’s definitely not something I do. This fic started via a challenge group and it just drew me in to write. I really need to get back to it…

Series: A four part Fraser/Vecchio Due South series that reached over 100K words. Man that really was a long time ago…

#23 Where Do You Get Your Inspiration From?

Life in general, really. It could be hearing a new song on the radio… watching something on TV… a personal occurrence in my life… and the characters themselves that draws me in.

#24 How To Deal With Writer’s Block?

Don’t sweat it. I write for my own pleasure really. If I write, I write, and if I don’t…. I can’t say it doesn’t get me down, but it’s not the end of the world for me. I used to write rather prolifically when I first started, but as the years moved on and available writing time lessened, it’s been hard finding a moment to just sit and write (or stare at the blinking cursor). I haven’t given up, I still do write, but it may be one piece in a year, that I open monthly and plug away at. Patience is key.

#25 Writing One Book At A Time, Or Multiple At A Time?

One… for the most part… but I have more WIP’s left open than I like…

#26 Planning Out The Book Before Writing, Or Just Writing It On The Go?

For the most part I do write with a singular idea that in the end, I sometimes find myself surprised by where the characters took me. There have been occasions where I’ve drafted an outline, but I’m more of the fly by the seat of my pants author.

#27 Picking Chapter Titles Before Writing Or After?

I do not usually name chapters of longer pieces, but in general… I usually start writing and name the piece something but in the end I usually change it to a final title. Sometimes it’s easy, other times I struggle to figure out an official title, but it always works out in the end.