#anti algorithms

Note

I was wondering if you have resources on how to explain (in good faith) to someone why AI-created images are bad. I'm struggling with how to explain it to someone who uses AI to create fandom images, b/c I feel I can't justify my own use of Photoshop to create manips, also for fandom purposes, & they've implied to me they're hoping to use AI on a photoshopped manip I made to create their own "version". I know one of the issues is stealing original artwork to make imitations fast and easy.

Hey anon. There are a lot of reasons that AI as it is used right now can be a huge problem - but the easiest ones to lean on are:

1) that it finds, reinforces, and in some cases even enforces biases and stereotypes that can cause actual harm to real people. (For example: a black character in fandom will consistently be depicted by AI as lighter and lighter skinned until they become white, or a character described as Jewish will...well, in most generators, gain some 'villain' characteristics, and so on. Consider someone putting a canonically transgender character through a biased AI, or a woman who is not perhaps well loved by fandom....)

2) it creates misinformation and passes it off as real. (It can make blatant lies seem credible, because people believe what they see. In fandom terms, this can mean people trying to 'prove' that the creator stole their content from elsewhere, or someone creating and selling their own 'version' of content that is functionally indistinguishable from canon.)

3) it's theft. The algorithm didn't come up with anything that it "makes"; it just steals some real person's work and then mangles it a bit before regurgitating it, usually with no credit to the original, actual creator. (In fandom terms: you have just done the equivalent of cropping out someone else's watermark and calling yourself the original artist. After all, the AI tool you used got that content from somewhere; it did not draw you a picture, it copy-pasted a picture.)

4) In some places, selling or distributing AI art is or may soon be illegal - and if it's not illegal, there are plenty of artists launching class action lawsuits against those who write the algorithm, and those who use it. Turns out artists don't like having their art stolen, mangled, and passed off as someone else's. Go figure.

Here are some articles that might help lay out clearer examples and arguments, from people more knowledgeable than me (I tried to embed the links with anti-paywall and anti-tracker add-ons, but if tumblr ate my formatting, just type "12ft.io/" in front of the URL, or type the article name into your search engine and run it through your own ad-blocking, anti-tracking setup):

These fake images reveal how AI amplifies our worst stereotypes [Source: Washington Post, Nov 2023]

Humans Are Biased; AI is even worse (Here's Why That Matters) [Source: Bloomberg, Dec 2023]

Why Artists Hate AI Art [Source: TechRepublic, Nov 2023]

Why Illustrators Are Furious About AI 'Art' [Source: The Guardian, Jan 2023]

Artists Are Losing The War Against AI [Source: The Atlantic, Oct 2023]

This tool lets you see for yourself how biased an AI can be [Source: MIT Technology Review, Mar 2023]

Midjourney's Class-Action lawsuit and what it could mean for future AI Image Generators [Source: Fortune Magazine, Jan 2024]

What the latest US Court rulings mean for AI Generated Copyright Status [Source: The Art Newspaper, Sep 2023]

AI-Generated Content and Copyright Law [Source: Built-in Magazine, Aug 2023 - take note that this is already outdated, it was just the most comprehensive recent article I could find quickly]

AI is making mincemeat out of art (not to mention intellectual property) [Source: The LA Times, Jan 2024]

Midjourney Allegedly Scraped Magic: The Gathering art for algorithm [Source: Kotaku, Jan 2024]

Leaked: the names of more than 16,000 non-consenting artists allegedly used to train Midjourney’s AI [Source: The Art Newspaper, Jan 2024]

#anti ai art#art theft#anti algorithms#sexism#classism#racism#stereotypes#lawsuits#midjourney#ethics#real human consequences#irresponsible use of algorithms#spicy autocomplete#sorry for the delayed answer

22 notes

Note

I’m not trying to attack you, but do you know that proshipper means someone who supports and romanticizes pedophilia, incest, and abuse? Your reblog on that post seems to say that you think antis just hate on people for having ships they don’t like. But it’s completely different from that. Just look at the proshipper side of Tumblr and the internet and you can see people happily shipping children and adults and making nsfw content of such things.

i appreciate that you're not being outright hostile, but i have to say, that alone puts you above basically every anti i've interacted with.

i understand where antis are coming from, i really do. there are a lot of things on the internet that make me deeply uncomfortable, including the minor/adult ships that you mention. i don't want anything to do with those kinds of ships and i would be happiest if i never saw them again. which is why i'm proship.

nine times out of ten, if i see that kind of ship brought up on my dash, it's because i was following an anti without realizing it, and they brought it up unprompted and untagged, to talk about how bad it is that they exist. they are the ones putting that kind of content in front of my face and making it harder to avoid.

the thing about people who ship those ships is that they're generally very aware that not everyone wants to see that kind of content, and so they tag it. they make sideblogs to talk about it. they don't go out of their way to shove it in people's faces. that means i, and everyone else who doesn't like it, can avoid it.

what antis want is for it to not exist at all. they want the tags to be purged and blocked, and for anyone who uses those tags to have their accounts deleted. and sure, that might get rid of some of it, but do you know what would happen to the rest? it would stop being tagged. people who don't want to see it wouldn't have the tools to avoid it. this isn't just a hypothetical, that's what's happened any time a fan space has tried to do that.

that's not even getting into the rabbit hole of what should be banned and what shouldn't. obviously any content that depicts real children or real life abuse shouldn't exist and shouldn't be allowed to be posted, but basically any platform that people use already enforces those policies, and there's not much of a slippery slope to go down there. if it involves real living breathing people being abused, it's bad. end of discussion.

but the same can't be said for fiction. ask ten antis for a specific list of all the content that should be banned, and you'll get ten different answers. what about kink? what about roleplay? what about horror and murder and anything that involves fictional characters being graphically tortured? what about people using art to process terrible things that have happened to them? what about art that uses dark themes as a horror element? if you just want to ban anything questionable to anyone, that's the line of thinking that gets any mention of lgbt existence banned. and again, this isn't just a hypothetical, this has happened before, and that's generally where it leads.

i know, from personal experience, that antis do, in fact, send harassment to people just for shipping things they don't like. i've gotten accused of absolutely vile shit for shipping two fictional characters who were both consenting adults. i've seen ship wars turn into moral battlegrounds, over ships that an average person wouldn't bat an eye at.

the thing about "romanticization" is a whole other can of worms. the anti logic goes like this: if someone sees something (even if it's very obviously fictional) in a positive light enough times, they will start thinking it's okay in real life, and go on to hurt real people. the problem with that is that it's just. blatantly untrue.

if it were true every horror movie fan would be a serial killer, every person that studies dark media would be an unhinged psychopath, and everyone who is into ddlg would be a pedophile. but they're not. they just aren't. people have directed movies just as fucked up as the darkest shit on ao3, and are still capable of being normal human beings who know right from wrong in real life.

even if someone is that impressionable, scrubbing away the existence of every piece of questionable content isn't going to solve their problem, because they're still going to be vulnerable to con men, scams, and cultists. the only thing that would actually materially help someone like that is developing their own morals and critical thinking.

children are also more impressionable, and there's a lot of content that's not suitable for them, but that doesn't mean that content shouldn't exist. it just means that they should stick to spaces designed for them (which most social media sites, tumblr included, are not) or, if they're old enough to be responsible for their experience online, they, or a trusted adult in their lives, should block and filter out things that they aren't comfortable with.

which is what everyone on the internet should be doing. it's what i do, and it's made the internet a much more pleasant place to be. and it's why i sometimes worry for antis' mental health, especially teenagers, because they're being told it's right and moral to seek out content that makes them uncomfortable and to engage with the people making it. and that's just. really bad. it's not good for the creators that they're harassing obviously, but it's also really bad for them! it's not healthy to seek out things that make you feel bad, and it's a terrible internet safety lesson to teach minors that it's okay for them to seek out and engage with people making adult content.

individual harassment and crusading is never going to succeed at removing dark content from the internet. it just isn't. at best you might get a small percentage of people who create that content to stop sharing it, at worst you're just going to make people stop tagging it, and either way, you're exposing yourself to things that make you feel bad, when you don't have to.

if you want to materially change the type of content you see, you can. the block button is your friend, use it liberally. same with content filtering and tag blocking.

#oops this got really long#cd.ask#proship#disk horse#tw pedophilia mention#uhhh what to tag#tw shipping discourse#this doesn't even get into the antis that i've seen proudly professing that they care more about fictional characters than real people#because i don't know how to explain to someone that real humans matter more than cartoon characters#or how to avoid content on platforms other than tumblr bc frankly thats a lot of the reason i don't use them#i don't trust an algorithm#also im turning anon off after posting this so if you want to send me hate you cant be a coward about it#also be prepared to get blocked and ignored#the only reason i answered this person is bc they weren't being hostile

16K notes

Text

I'm really not a villain enjoyer. I love anti-heroes and anti-villains. But I can't see fictional evil separate from real evil. As in not that enjoying dark fiction means you condone it, but that all fiction holds up some kind of mirror to the world as it is. Killing innocent people doesn't make you an iconic lesbian girlboss it just makes you part of the mundane and stultifying black rot of the universe.

"But characters struggling with honour and goodness and the egoism of being good are so boring." Cool well some of us actually struggle with that stuff on the daily because being a good person is complicated and harder than being an edgelord.

Sure you can use fiction to explore the darkness of human nature and learn empathy, but the world doesn't actually suffer from a deficit of empathy for powerful and privileged people who do heinous stuff. You could literally kill a thousand babies in broad daylight and they'll find a way to blame your childhood trauma for it as long as you're white, cisgender, abled and attractive, and you'll be their poor little meow meow by the end of the week. Don't act like you're advocating for Quasimodo when you're just making Elon Musk hot, smart and gay.

#this is one of the reasons why#although i would kill antis in real life if i could#i also don't trust anyone who identifies as 'pro-ship'#it's just an excuse to shut down legitimate ethical questions and engaging in honest self-reflective media consumption and critique#art doesn't exist in a vacuum#it's a flat impossibility for it not to inform nor be informed by real world politics and attitudes#because that's what it means to be created by human hands#we can't even make machine learning thats not just human bias fed into an algorithm#if the way we interact with art truly didn't influence anything then there would be no value in it#just because antis have weaponized those points in the most bad faith ways possible#doesn't mean you can ignore them in good faith#anyway fandom stans villains because society loves to defend and protect abusers#it's not because you get the chance to be free and empathetic and indulge in your darkness and what not#it's just people's normal levels of attachment to shitty people with an added layer of justification for it#this blog is for boring do-gooder enjoyers only#lol#knee of huss#fandom wank#media critique#pop culture#fandom discourse

189 notes

Text

What if the reason antis get confused about ‘gross shit’ being in the main tags is because they assume the main tags should be more algorithm-like? That the ‘gross shit’ should sink to the bottom where it is never seen. Bad news guys: ‘Most recent’ absolutely does not fucking work like that, and nobody has a clue how ‘most popular’ works.

2K notes

Text

#‘yeah let me put computer generated art in the artist’s tag!’#insulting#ai art#anti ai#i look through the tag to find other artists and share their work. not to find something made by an algorithm

92 notes

Text

I Sacrificed My Writing To A.I So You Don't Have To

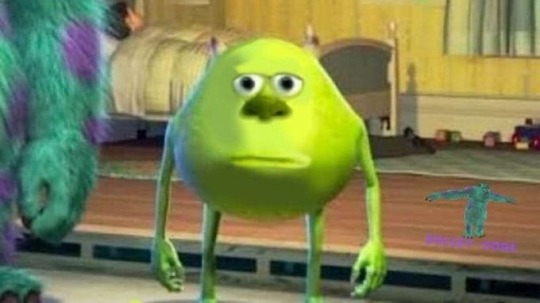

I was thinking about how people often say "Oh, Chat GPT can't write stories, but it can help you edit things!" I am staunchly anti-A.I, and I've never agreed with this position. But I wouldn't have much integrity to stand on if I didn't see for myself how this "editing" worked. So, I sacrificed part of a monologue from one of my fanfictions to Chat GPT to see what it had to say.

Here is the initial query I made:

Chat GPT then gave me a list of revisions to make, most of which would have been unnecessary if it were a human who had read the preceding 150k words of the story. I won't bore you with the list. I don't have to, since it incorporated those revisions into the monologue and gave me an edited sample back. Here is what it said I should turn the monologue into:

The revision erases speech patterns. Ben/the General speaks in stilted, short sentences in the original monologue because he is distinctly uncomfortable—only moving into longer, more complex structures when he is either caught up in an idea or struggling to elaborate on an idea. The Chat GPT version wants me to write dialogue like regular narrative prose, something that you'd use to describe a room. It also nullified the concept of theme. "A purity that implied personhood" simply says the quiet(ish) part out loud, literally in dialogue. It erases subtlety and erases how people actually talk in favor of more obvious prose.

Then I got a terrible idea. What if I kept running the monologue through the algorithm? Feeding it its own revised versions over and over, like a demented Google Translate until it just became gibberish?

So that's what I did. Surprisingly enough, it took only six rounds, from the original writing sample to the end, before it pretty much stopped altering the monologue. This was the final result:

This piece of writing is florid, overly descriptive, unnatural, and unsubtle. It makes the speaking character literally give voice to the themes through his dialogue, erasing any chance of subtext and subtlety. It uses unnecessary descriptors ("Once innocuous," "gleaming," "receded like a fading echo," "someone worth acknowledging") and can't comprehend implication, because it is an algorithm, not a human that processes thoughts. The resulting writing is bland, stupid, lacks depth, and seemingly uses large words for their own sake, not because they actually trigger an emotion in the reader or further the reader's understanding of the protagonist's mindset.

There you have it. Chat GPT, on top of being an algorithm run by callous, cruel people that steals artists' work and trains on it without compensation or permission, is also a terrible editor. Don't use it to edit, because it will quite literally make your writing worse. It erases authorial intention and replaces it with machine-generated generic slop. It is ridiculous that, with the writers' strike going on right now, studios truly believe they can use A.I to produce a story of marginal quality that someone may pay to see. The belief that A.I can generate art is an insult to the writing profession and to artists as a whole; I speak as a visual artist as well. I wouldn't trust Chat GPT to critique a cover letter, much less a novel or poem.

#fanfiction#writing#chatgpt#ai#aiwriting#artificial intelligence#fanfic#fanfic meta#artificially generated#writers on tumblr#writer problems#cryptobros#if these people ever took one humanities class they'd see the issues with these algorithms#anti chat gpt#anti capitalism#anti ai#don't use chat gpt to edit your work for the love of god#ai can't write#ao3#star wars fanfiction meta#wga strike#support the writers!#wga solidarity

104 notes

Text

“We curate our own experience here”

I love you fandoms I don’t participate in I love you activists for problems I don’t have I love you people with different lived experiences from me I love you spicy hot takes I love you rambling analysis I love you other people’s Blorbos I love you thoughts I never would have had on my own i love and adore all of you especially the ones who surprise me keep it up I love you all

#troglodyte thoughts#free range sustainable shitpost#anti doomscroll patrol#diversity is the spice of life#travel is the cure for small mindedness#I’ll build my own algorithm#with blackjack and hookers

68 notes

Text

Me: I like Regulus Black

Tumblr "for you" page algorithm: So you want to see Jegulus!

Me: no, thank you, I actually don't ship that. *adds filter for Jegulus tag*

Algorithm: Here are some posts tagged starchaser and James x Regulus!

Me: nope, sorry. Still don't ship it. *adds more filters for alternate ship tags*

Algorithm: here's something you'll definitely like!

Me: Are you sure? Really? You're sure it's not another cactus the thing I keep saying I don't ship?

Algorithm:

#look#I am a “ship and let ship” kind of person#but why does the algorithm keep finding stuff for me from one of the ships I intentionally filter out?#*sigh*#why do I even bother checking the algorithm page?#anti Jegulus#I guess???

32 notes

Text

An experiment conducted by Dublin City University has found that the algorithms of some social media platforms are feeding male-identified accounts misogynistic and anti-feminist content.

One of the researchers behind the project, Professor Debbie Ging, said it shows that shutting down the accounts of ‘manfluencers’ like Andrew Tate doesn’t necessarily mean their content is being removed from platforms, so social media firms need to tackle harmful content in “more sophisticated ways.”

Professors from the university’s Anti-Bullying Centre tracked the content the apps recommended to ten ‘sockpuppet’ accounts on ten blank smartphones, five on TikTok and five on YouTube Shorts.

The researchers found that all of the male-identified accounts were fed male supremacist, anti-feminist or other extremist content, whether they sought out that kind of content or not.

Each account received this content within the first 23 minutes of the experiment.

The researchers found that once the account showed interest by watching this kind of content, the amount it was recommended “rapidly increased”.

By the last round of the experiment, the study states, after the accounts (which had identified as being owned by a man during set-up) had watched 400 videos over 2-3 hours, the vast majority of the content being recommended was “toxic”, primarily falling into the alpha male and anti-feminist category.

#ireland#research#social media#tiktok#youtube#misogynist content#anti-feminist content#male accounts#algorithmic targetting

16 notes

Text

"you cant just block everyone who posts something you dont like" i can actually and you should too

#being online is your own experience so why put up with shit that annoys you?#“oh but you can just scroll past it!” yeah i could but also i could block them and remove the chance of them coming up in an algorithm agai#i dont get why people are so anti block gdhfkjgfk#its no skin off their back; the chances of these people even knowing is so slim#my blacklist/muted words lists would send people into a coma

12 notes

Text

Y’all I’m so fucking tired of people trying to sell us things; it’s all we ever see. Sponsored content, sponsor segments on YT, ads below the fucking video, ads above the title, ads IN THE MIDDLE OF YOUR VIDEO, unskippable ads, Instagram shop, Facebook Marketplace, TikTok shop, algorithm ads, Sims shop IN THE GAME, pop-up ads, paywalled articles, paywalled videos, freemium apps, the “for just 99 cents you can level up” except it’s never JUST 99 cents because they’re targeting the addiction-prone, the ads in between every single level of your game.

ADS, ADS, ADS

This capitalistic dystopia is sickening and I’m so tired of people trying to sell things to me.

#capitalism sucks#anti capitalism#capitalist dystopia#youtube#fuck the algorithm#social media algorithms#tiktok#instagram#housing crisis#economy#economic collapse#economic crisis#the one percent#we need some rules and we need them now#Google#streaming#capitalism#dystopia

9 notes

Text

I tried to listen to a feel-good playlist I made on Spotify free for my shower. You know, 15 minutes TOPS. I heard 6 ads and 3 songs I didn't add. So ONE (1) song from MY playlist got played. AND THEN it tried to play Tsw***ft AND A PRO-ISNOTREAL podcast. Uh huh, it's a no from me baby.

Where else can I make playlists??

#anti spotify#mel thinks#anti taylor swift#anti israel#i was just trying to go to bed in a good mood but nooooOoOOOOo i had to yell at a stupid ai algorithm for 10 minutes

19 notes

Note

There are no other alternatives. Most people don't like rping ocxcanon and not everyone can write or can afford commissions. Things have nuance and ai isn't inherently bad. Chat bots have existed for years and that's all character ai is. A chat bot.

Chatbots as they have existed for decades have a set number of responses based on what you ask them. This set of responses cannot be updated by the chatbot itself; instead, whoever is responsible for the code would need to update the database of responses, as well as the algorithm used to identify which response to give when asked a question. That’s how chatbots work.
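
To make that description concrete, here is a minimal sketch of that kind of rule-based chatbot in Python. The keywords, canned replies, and the `reply` function are hypothetical, made up for illustration; real classic chatbots (ELIZA, for example) used more elaborate pattern rules, but the key property is the same: every possible answer is written in advance by a person, and the bot has no way to add to its own table.

```python
# Hypothetical keyword -> canned reply table. Only a programmer can change it;
# nothing in this program ever adds or edits entries.
RESPONSES = {
    "hello": "Hi there! How can I help?",
    "weather": "I can't check the weather, sorry.",
    "bye": "Goodbye!",
}
FALLBACK = "Sorry, I don't understand. Please ask a short, simple question."


def reply(message: str) -> str:
    """Return the canned reply for the first known keyword in the message."""
    # Lowercase and strip basic punctuation so "Hello!" matches "hello".
    words = {word.strip(".,!?") for word in message.lower().split()}
    for keyword, response in RESPONSES.items():
        if keyword in words:
            return response
    # No keyword matched: the bot has nothing else it can say.
    return FALLBACK


if __name__ == "__main__":
    print(reply("Hello!"))
    print(reply("Can you write me a story?"))
```

Changing what this bot can say means a programmer editing `RESPONSES` by hand, which is the distinction drawn here. It also hints at why old chatbots asked for short, clear questions: anything without a known keyword falls through to the fallback.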

AI chatbots can and do update themselves based on the questions and responses you give them; that’s the main difference. And the creator can just filter out certain things if they do not want them (it has happened before with an AI that taught itself to curse when the creator didn’t want that).

Now the problem here with RP is that you are most likely using trademarked characters from very popular franchises, and if at any point the creator of these AI bots decides to sell the content for profit, they can go to the character owners and say “Hey, I have thousands of queries for X popular character of yours; filtering through them, I can give you the 10 most popular questions asked, or the most used plotlines,” and the character owner can go “oh, this would be great to make a story based on the most popular questions!” And that’s how AI starts to fuck things up for writers/authors.

#there’s a reason why old chatbots ask you to use questions as clear and as short as possible#because their answers and algorithms are not created to deal with complicated stuff#meanwhile ai chat bots keep evolving#and yeah it does sound cool#and it would be#if we had regulations in place about it#that’s my main problem the lack of regulations because as it is#this will definitely end up affecting writers and other artistic creators#anti ai

40 notes

Text

Unfuck your Tech

The Internet is increasingly profit-based in its design, intended to extract the maximum amount of value from your use of it. Proprietary tech, ads, monetization, algorithmic content dissemination, digital rights management (DRM), etc. are all things that benefit corporations but make the Internet more hostile to real individuals and communities.

This blog shares community-oriented tools, free alternatives to paid content, and ways toward healthier, more human media consumption, with a focus on free and open-source software (FOSS) that anyone can use to remove limiters, paywalls, ads, and mindless endless content feeds, and to take back digital autonomy, self-respect and fulfillment.

Unfuck your technology, and make your digital landscape a kinder place.

Header image made in aeCAD, a FOSS circuit board design tool.

#tech#technology#media consumption#media#content consumption#social media algorithms#yo ho ho#anti capitalism#community building#tech tips#life hacks#hacking#hacks#hardware

33 notes

Text

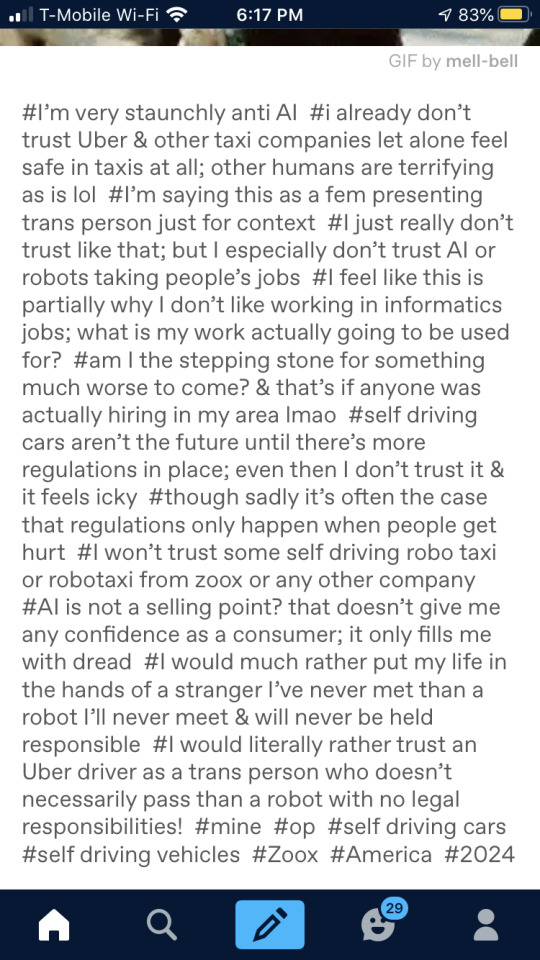

I refuse to hop in a Zoox car in my entire life if I can avoid it. I refuse to hop into any self-driving robo taxi (or robotaxi) that uses AI to keep its passengers “safe.” If this is actually a service they are legally allowed to provide to the public, there’s about to be a whole bunch of new laws made, hopefully in very little time! Now you know me: obviously fuck the law, many laws are unjust, but sometimes we need regulations to keep up with the shit that rich Silicon Valley tech bros “put out” while claiming it’s their own work. These rich bastards are dangerous!

Now I’ll pass along the questions that my partner & I jokingly pondered. If something happens that the AI & detection systems don’t know how to handle, will we as the passengers be held legally responsible, say, if a child gets punted into the air by the self-driving car & we can’t do anything to stop it? What if we’re asleep, assuming the car is safe, & it runs over a legally endangered animal? What if we’re on our phones & these self-driving robot cars cleave someone in half? What if it crashes into someone’s private property? Are we held responsible in any of these cases, or is the big rich guy’s company? If it’s anything like Tesla, you should get your kids or pets out of the road when you see a Zoox car coming; it could allegedly cause some deaths.

Two more things. What’s stopping someone from hijacking, hacking, or planting a virus on these self-driving taxi services? What if one of them gets hijacked to take someone to a human trafficker meetup spot? Will the company be held responsible at all?

The gifs below pretty much summarize my feelings.

#I would sooner trust an Uber driver even though I’m kind of paranoid; than trust a robot with no legal responsibilities#we’re trying this post again since apparently I hit a key word that the algorithm didn’t like in my post or tags#I’ll put my trust in a random stranger before I put my trust in an AI whose owner is probably a billionaire or millionaire#zoox & any other robotaxi or robo taxi services who do self driving cars; I don’t trust like that#regulations for stuff like this often only happens once it’s too late & I actually hate that so much#robots are stealing our jobs & the government is just letting them; they don’t care about us#these tag rambles are probably gonna get my post wiped from being seen by anyone#I’m anti-AI btw just to clarify; in case that wasn’t blatantly obvious#I’ll always be anti AI#I’ll trust a Lyft driver before I trust a robot with no sense of self awareness of its own#mine#op#self driving vehicles#2024

7 notes

Text

my spicy hot take regarding AI chatbots lying to people is that, no, the chatbot isn't lying. chatgpt is not lying. it's not capable of making the conscious decision to lie to you. that doesn't mean it's providing factual information, though, because that's not what it's meant to do (despite how it's being marketed and portrayed). chatgpt is a large language model simply predicting what responses are most probable based on established parameters.

it's not lying, it's providing the most statistically likely output based on its training data. and that includes making shit up.
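
Here is a toy illustration of "most statistically likely output" in Python. It is a bigram model (it only counts which word follows which) trained on three made-up sentences, and it always picks the most frequent next word. ChatGPT is a far larger neural network working on tokens, and real systems usually sample rather than always taking the top choice, so treat this as a sketch of the idea under those simplifying assumptions, not of how ChatGPT is built. The training text and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data; real models train on vastly more text.
TRAINING_TEXT = (
    "paris is the capital of france . "
    "rome is the capital of italy . "
    "berlin is a city in germany ."
)


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 10) -> str:
    """Greedily append the most frequent next word until a full stop."""
    output = [start]
    while len(output) < max_words and output[-1] != ".":
        options = follows.get(output[-1])
        if not options:
            break
        # most_common(1) picks the highest count; ties go to the word seen first.
        output.append(options.most_common(1)[0][0])
    return " ".join(output)


model = train_bigrams(TRAINING_TEXT)
print(generate(model, "berlin"))  # prints: berlin is the capital of france .
```

Starting from "berlin", the most likely path through the counts produces a fluent sentence that appears nowhere in the training text and is false. Nothing in the model checks facts; it only follows word statistics, which is the point about "making shit up" without any intent to lie.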

#multi makes text posts#idk how much sense this makes but yeah#anti ai#one thing i get so wary of with these chatbots is people ascribing a sentience to them that isn't there#and that includes people talking about the pitfalls of this technology#specifically saying that the bots are lying... no. they're not lying#they're not even really communicating#they're algorithms trained on specific data that enables them to form convincing responses#disclaimer: i am not an expert in ai#but that doesn't make this less of a valid point#also honestly even calling these chatbots ai is a misnomer#there is no intelligence! no intent! it's an algorithm!!

24 notes
