#humanmachine

Text

The traditional boundaries between humans and machines are blurring in an era of rapid technological advancement. That blurring is also reflected in the book Angel of Mortality: Defender of Life by David W. Stewart, which centers on a necessary battle to save humanity from a powerful machine that is chaos incarnate. Readers will find themselves in a science fiction thriller featuring encounters with xenoborgs: nanobots with artificial intelligence that evolved into efficient living machines...

0 notes

Photo

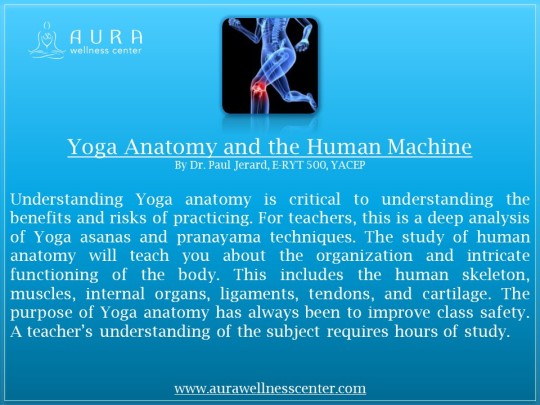

Yoga Anatomy and the Human Machine

0 notes

Photo

Submissions for #ICHMI2023, scheduled to take place in #India, are now open. Visit https://asdf.events/ichmi/ for more details about the event and submission. #ASDFInt #HostedEvents #Conference #2023Conferences #Academic #Chennai #Event #HumanMachine #Interaction

0 notes

Photo

GM Group BUICK, CADILLAC, CHEVROLET car radio, human-machine interface HMI 1.1, HMI 2.0, HMI 2.5, center display test bench.

https://ecudepot.com/product/buick-cadillac-hmi-test-platform/

#buick #cadillac #Chevrolet #hmi2 #humanmachineinterface #humanmachine #boschhmi #humanmachineinteraction #radiounit

0 notes

Photo

"Expectation is the mother of all frustration." - Antonio Banderas #humanmachine #slowcomputer #slowinternet #workfromhome https://www.instagram.com/p/CP2X3e5tjgC/?utm_medium=tumblr

1 note

Text

An abandonment doesn't have to be something so tragic, but something you feel inside. It is like grief. It doesn't have to be the end of life, but the end of something inside... An abandonment can come from anyone: mother, father, friend or partner. Anyone can abandon you, but keep walking.

Of course abandonments destroy us, throw us off balance, corrode us, bring us down, and affect us in a really bad way. They create a mood of "I'm not good" or "really, I'm a failure".

Anyway... I don't have much advice about abandonment. Just keep going. However hard it is. And however uncomfortable, strange, bad and sad it may be, carry on. Abandonments will never be good. And none compares to another. Abandonments are abandonments, and what comes after is what matters = You and only you.

#autorias#meus#autoreflexao#reflection#carteldapoesia#autografando#autoconselho#falhas#desistencia#humanmachine#maquinahumana#sensibilidade#abandono#falta#conhecencia#projetocartel

26 notes

Video

Digital Performance #03 HUMAN MACHINE. This Saturday, 16 May 2020, at 11:30 AM on the Zoom app. Contact me to book your place! You don't speak French? No problem, I will guide you in English also. More info on the event link below. The coronavirus crisis has changed our world. Nothing will ever be the same. Relationships between humans have been restricted. In today's world, where people communicate only through machines, the body becomes mechanized. What future for humanity? Kate Wyrembelska #art #contemporaryart #digitalart #humanmachine #videoart #video #performance #machine #mechanization #coronavirus #artconfiné #isolement #confinement #confinementart #lockdown #together #alone #worldcrisis #challange #photo #graphicart #glitch #glitchart #kwkate #wyrembelska (at Montpellier, France) https://www.instagram.com/p/CAFUqfiqnsX/?igshid=foealojzpjdz

2 notes

Text

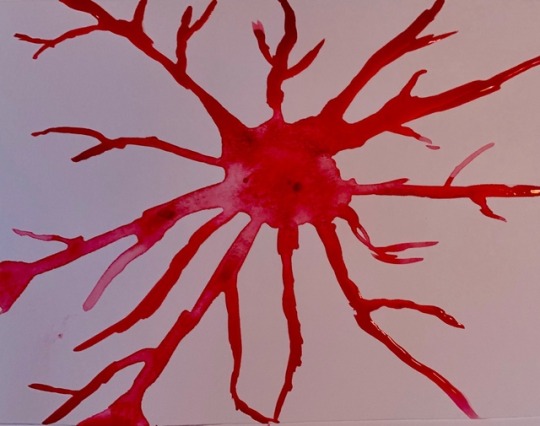

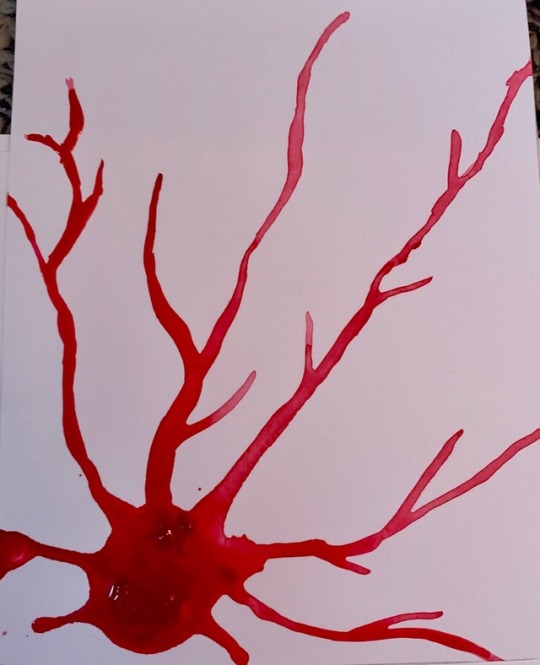

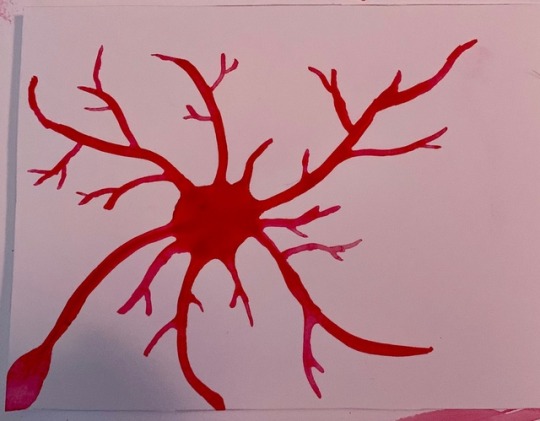

Organic Machine Series.

#art#science#organic#machine#natural#nature#artists on tumblr#my art#red#synapse#human#gears#humanmachine

7 notes

Photo

Humans become organs of the Machine. #humanmachine#parthuman#sexymetal#fighttosurvive #trippyart#humanoids #humanoid #humanoidrobot #aliens #robotics #ai #robots #artfuture #alien #artist #oc #robotic #artwork #scifi #robot #digitalart #artistsoninstagram #ufo #human #mechanical #teknologi #electrical #robotdiy #openhardware (at Thunder Rock Cove, Oregon) https://www.instagram.com/p/CalRcdRPQTb/?utm_medium=tumblr

0 notes

Text

Human Machine

A project about to begin.

1 note

Text

Building Trusted Human-Machine Partnerships | Science and Technology Research News

Notional vignettes depict competence-aware machines performing tasks in dynamic environments.

A key ingredient in effective teams, whether athletic, business, or military, is trust, which is based in part on mutual understanding of team members' competence to fulfill assigned roles. When it comes to forming effective teams of humans and autonomous systems, humans need timely and accurate insights about their machine partners' skills, experience, and reliability to trust them in dynamic environments. At present, autonomous systems cannot provide real-time feedback when changing conditions such as weather or lighting cause their competency to fluctuate. The machines' lack of awareness of their own competence and their inability to communicate it to their human partners reduces trust and undermines team effectiveness.

To help transform machines from simple tools into trusted partners, DARPA today announced the Competency-Aware Machine Learning (CAML) program. CAML aims to develop machine learning systems that continuously assess their own performance in time-critical, dynamic situations and communicate that information to human team members in an easily understood format.

“If the machine can say, ‘I do well in these conditions, but I don’t have a lot of experience in those conditions,’ that will allow a better human-machine teaming,” said Jiangying Zhou, a program manager in DARPA’s Defense Sciences Office. “The partner then can make a more informed choice.”

That dynamic would support a force-multiplying effect, because the human would know the capabilities of his or her machine partners at all times and could employ them efficiently and effectively.

In contrast, Zhou noted the challenge with state-of-the-art autonomous systems, which cannot assess or communicate their competence in rapidly changing situations.

“Under what conditions do you let the machine do its job? Under what conditions should you put supervision on it? Which assets, or combination of assets, are best for your task? These are the kinds of questions CAML systems would be able to answer,” she said.

Using a simplified example involving autonomous car technology, Zhou described how valuable CAML technology could be to a rider trying to decide which of two self-driving vehicles would be better suited for driving at night in the rain. The first vehicle might communicate that at night in the rain it can tell whether it is seeing a person or an inanimate object with 90 percent accuracy, and that it has completed the task more than 1,000 times. The second vehicle might communicate that it can distinguish between a person and an inanimate object at night in the rain with 99 percent accuracy, but has performed the task fewer than 100 times. Equipped with this information, the rider could make an informed decision about which vehicle to use.

DARPA has scheduled a pre-recorded webcast CAML Proposers Day for potential proposers on February 20, 2019. Details are available at: https://go.usa.gov/xE9aQ.

The CAML program seeks expertise in machine learning, artificial intelligence, pattern recognition, knowledge representation and reasoning, autonomous system modeling, human-machine interface, and cognitive computing. To maximize the pool of innovative proposal concepts, DARPA strongly encourages participation by non-traditional proposers, including small businesses, academic and research institutions, and first-time Government contractors.

DARPA anticipates posting a CAML Broad Agency Announcement solicitation to the Federal Business Opportunities website in mid-February 2019.

New post published on: https://livescience.tech/2019/02/04/building-trusted-human-machine-partnerships-science-and-technology-research-news/

0 notes

Photo

The Rated Republic of China, part of the "(dot) gov" series is currently on show @romantsoathens until Nov 15th in Athens. Curation by @katerina_gkoutz & @voltnoi w/@neongreece #filipevilasboas - Ethical drifts like Cambridge Analytica at least had the virtue of warning us: equipped with mass surveillance and influence tools, our digital regimes abuse their power and threaten our fundamental freedoms. In an increasingly computerized, interconnected and automated society, the quantitative and the logical emerge as dominant values. In The Rated Republic of China, like a “sousveillance” act, Filipe Vilas-Boas subtly modifies the Chinese flag and alludes to the star-based system of ranking and the radical Chinese system of assigning a social credit score. In United Likes of America, Vilas-Boas replaces the stars on the American flag with an icon similar to Facebook's "like". This graphic détournement makes reference to the power and omnipresence of the social network, which even affected the American elections in 2016 and, as a consequence, marked the history of the nation through the electoral triumph of Donald Trump. - #nointernet #athens #katerinagkoutziouli #voltnoibrege #sousveillance #surveillance #surveillancecapitalism #shoshanazuboff #stevemann #humanmachine #ratingart #rating #mediaart #newmediaart #politicalart #digitalart #postdigital #artnumerique #artcontemporain #contemporaryart #contemporaryartist #artexhibition #romantso #starrating #socialcreditsystem #imagineyouwakeupandthereisnointernet (at Romantso) https://www.instagram.com/p/CGrtuMSAy7_/?igshid=1o0g3yyv12zgx

0 notes

Photo

#ICHMI2023 is now accepting papers. Submit your papers before 03/11/2023 and be part of the extravaganza event happening in #Chennai #India #November #2023. Submit at: https://asdf.events/ichmi/ #ASDFInt #HostedEvents #Conference #2023Conferences #Event #HumanMachine...

0 notes

Text

Learning Nothing

How can we train a machine to recognise the difference between ‘something’ and ‘nothing’? Over the past few months, I have been working with Despina Papadopoulos on an R&D wearable project – Embodied Companionship, funded by Human Data Interaction.

“Embodied Companionship seeks to create a discursive relationship between machine learning and humans, centered around nuance, curiosity and second order feedback loops. Using machine learning to not only train and “learn” the wearers behaviour but create a symbiotic relationship with a technological artifact that rests on a mutual progression of understanding, the project aims to embody and make legible the process and shed some light on the black box.” – Text by Despina Papadopoulos

The project builds on the work we did last year in collaboration with Bless, where we created a prototype of a new form of wearable companion – Stylefree, a scarf that becomes ‘alive’ the more the wearer interacts with it. In Embodied Companionship, we wanted to further explore the theoretical, physical and cybernetic relationship between technology, the wearable (medium), and its wearer.

*Stylefree – a collaboration between Despina Papadopoulos, Umbrellium and Bless.

In this blog post, I wanted to share some of the interesting challenges I faced through experimentations using machine learning algorithms and wearable microcontrollers to recognise our body movements and gestures. There will be more questions raised than answers in this post as this is a work in progress, but I am hoping to share more insights at the end of the project.

My research focuses on the use of TensorFlow Lite, an open-source machine learning library, on the Arduino Nano 33 BLE Sense. I have designed, fabricated and programmed many projects over the years: wearables (e.g. Pollution Explorers, which explores air quality with communities using wearables and machine learning algorithms), large-scale performances (e.g. SUPERGESTURES, where each audience member wore a gesture-sensing wearable to listen to geolocated audio stories and perform gestures created by young people) and platforms (e.g. WearON, a platform for designers to quickly prototype connected IoT wearables). This board is a step up from any wearable-friendly controller I have used before. It contains many useful body-related sensors, such as a 9-axis inertial sensor and a microphone, plus a few environmental sensors such as light and humidity sensors. With these sensors embedded, it becomes much easier to create smaller wearables that can better sense the user’s position, movement and body gestures, depending on where the board is placed on the body. And with its support for TinyML, which allows edge computing (AI) applications to run on the board itself, we can (finally!) start to play with more advanced gesture recognition. For the purpose of our project, the board is positioned on the arm of the wearer.

*Image of the prototype wearable of Embodied Companionship

Training a Machine

With these constraints, I started exploring a couple of fundamental questions: how does a machine understand a body gesture or a movement? How does it tell (or how can we tell it to tell…) one gesture apart from another? Any machine learning project requires training data. This data provides examples of data patterns that correspond to user-defined categories, so that in future the machine can compare the streams of data being captured against the examples and try to match them. However, the algorithm doesn't simply match them; it returns a confidence level that the captured stream of data matches each particular pattern. TensorFlow offers a very good basic tutorial on gesture recognition using the Arduino board, but it is based on recognising simple, big gestures (e.g. arm flexing and punching) which are easily recognisable. For the machine to learn a wearer’s gestural behaviour, it will have to learn many different types of movement patterns that a person might perform with their arm. So our first task is to check whether we can use this Arduino and TensorFlow Lite to recognise more than 2 types of gestures.
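The idea of a confidence level per gesture, rather than a hard match, can be sketched as below. This is only an illustration in Python, not the code running on the board: it assumes the model ends in a softmax layer (common for classifiers like this, but not stated in the post), and the raw scores and the gesture labels are made up.

```python
import math

def softmax(scores):
    """Turn raw model scores into confidences that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores, labels):
    """Return the most likely label together with its confidence."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]

# Hypothetical raw scores for three trained gestures.
label, confidence = classify([2.0, 0.5, 0.1], ["punch", "flex", "wave"])
print(label, round(confidence, 2))
```

Note that the confidences always sum to 1 across the trained gestures, so the model has no way to say "none of these" unless it is given one.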

I started by adjusting various parameters of the machine learning code, for example training more than 2 sets of distinct gestures, training with more subtle gestures, increasing the training data set for each gesture, and increasing the number of epochs. The results were not satisfactory: the board could not recognise any of the gestures with high confidence, mainly because the data for each gesture was not distinct enough for the machine to distinguish, so it spread its confidence across the few gestures it had been taught. It also highlighted a key question for me: how would a machine ‘know’ when a gesture is happening and when it is not? Without an explicit button press to signify the start and end of a gesture (analogous to the Alexa or Siri wake-up call), I realised that it would also need to recognise when a gesture was not happening.

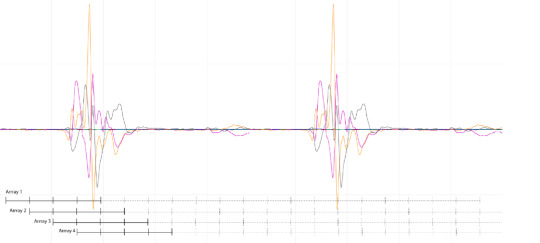

*How a gesture/movement is read on the serial plotter through its 3-axis accelerometer data

The original code from the tutorial starts detecting a gesture the moment a significant motion is detected, which could be a problem if we are trying to recognise more subtle gestures such as waving a hand slowly or lifting the arm up slowly. I started experimenting with a couple of other ways for the Arduino board to recognise a gesture at the ‘right’ time. First, I programmed a button that the wearer presses to instruct the board to start recognising the gesture while it is being performed. This is not ideal, as the wearer has to consciously instruct the wearable whenever they are performing a gesture, but it allows me to understand what constitutes a ‘right’ starting time to recognise a gesture. Second, I tried programming the board to capture buckets of data at multiple short, millisecond-scale time offsets and to run an analysis on each bucket, comparing them to determine which bucket’s gesture returns the highest confidence level at any instant. However, that does not return any significantly better result, it is memory intensive for the board, and it reinforces the challenge: the machine needs to know when a person is not performing any gesture.
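The two triggering strategies described above can be sketched roughly as follows. This is an illustrative Python sketch, not the Arduino code: the threshold of 2.5 (in g, on the summed absolute acceleration), the window size and the step are assumptions chosen for the example, and `motion_triggered` and `sliding_windows` are hypothetical helper names.

```python
def motion_triggered(sample, threshold=2.5):
    """Trigger only on a 'significant' motion.

    sample is one (x, y, z) accelerometer reading in g. The threshold
    is an assumed value; slow, subtle gestures may never reach it.
    """
    ax, ay, az = sample
    return abs(ax) + abs(ay) + abs(az) >= threshold

def sliding_windows(samples, size, step):
    """Yield overlapping 'buckets' of readings, each offset by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

# A resting arm stays below the threshold, a punch-like spike exceeds it.
print(motion_triggered((0.1, 0.2, 1.0)))  # False
print(motion_triggered((1.5, 1.0, 0.5)))  # True

# Six readings, buckets of four, a new bucket every two readings.
print(list(sliding_windows(list(range(6)), size=4, step=2)))
# [[0, 1, 2, 3], [2, 3, 4, 5]]
```

Every bucket has to be buffered and classified separately, which gives a sense of why the bucket approach is memory intensive on a microcontroller.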

*Capturing buckets of data at multiple short milliseconds time

While the Arduino board might be good at distinguishing between 2 gestures, if you perform a 3rd gesture that the board was not trained on, it will return one of the learnt gestures with a very low confidence level. This is because it was not taught with examples of other gestures. However, if we want it to learn a wearer’s behaviour over time, not only do we need to teach the machine a set of gestures, just as any language comes with a strict set of components (e.g. an alphabet), but it is equally important to teach it to recognise when the wearer is not doing anything significant. That poses a major challenge: how much training do we need to teach a machine when the wearer is doing nothing?
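One common way to approximate "doing nothing" is to combine an explicit "nothing" class with a minimum confidence cut-off. The Python sketch below illustrates that general technique under stated assumptions; it is not the approach the project settled on. The label name "nothing", the 0.8 cut-off and the function name are all hypothetical.

```python
def label_or_nothing(probs, labels, min_confidence=0.8):
    """Report a gesture only when the model is confident enough.

    probs are per-class confidences (summing to 1) and labels are the
    matching class names. Anything below min_confidence, or a win by
    the explicit 'nothing' class, is reported as 'nothing'.
    """
    best = max(range(len(labels)), key=lambda i: probs[i])
    if labels[best] == "nothing" or probs[best] < min_confidence:
        return "nothing"
    return labels[best]

# An untrained third gesture tends to split confidence between classes.
print(label_or_nothing([0.55, 0.45], ["punch", "flex"]))  # nothing
print(label_or_nothing([0.95, 0.05], ["punch", "flex"]))  # punch
```

The cut-off only filters low-confidence guesses; deciding which movements belong in the "nothing" training examples in the first place remains the open question raised above.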

Making Sense of the Nuances

When it comes to making sense of body gestures, our recognition of any gesture is guided by our background, culture, history, experience and interaction with each other. It is something that, in this day and age, an advanced machine is still incapable of doing, e.g. recognising different skin colours. Therefore, as much as we can train a machine to learn a body gesture through its x, y, z coordinates or its speed of movement, we cannot train it with cultural knowledge or experience, or teach it to detect the subtle nuances of the meaning of a gesture (e.g. the difference between crossing your arms when you are tired vs when you are feeling defensive).

*Photo of one of the SUPERGESTURES workshops where young people design body gestures that can be detected by the wearable on their arm, and represent their story and vision of Manchester

It is worthwhile to remember that while this R&D project explores the extent to which machine learning can help create a discursive interaction between the wearer and the machine, there are limitations to the capability of a machine and it is important for us as designers and developers to help define a set of parameters that ensure that the machine can understand the nuances in order to create interaction that is meaningful for people of all backgrounds and colours.

While machine learning in other familiar fields such as camera vision does have some form of recognising “nothing” (e.g. background subtraction), the concept of recognising “nothing” gestures (e.g. should walking and standing up be considered ‘nothing’?) for wearable or body-based work is fairly new and has not been widely explored. A purely technological approach might say that ‘nothing’ simply requires adequate error detection or filtering. But I would argue that the complexity of deciding what constitutes ‘nothing’, and the widely varying concept of what kinds of movement should be ‘ignored’ during training, are absolutely vital to consider if we want to develop a wearable device that is trained for and useful for unique and different people. As this is a work in progress, I will be experimenting more with this to gather more insights.

A blogpost by Ling Tan

#wearable technology#embodiedcompanionship#machinelearning#gesturerecognition#arduino#humanmachine#humandata#embodiedexperience#blog

0 notes

Photo

Little workout done!!!... Crossfit Power 💪💪💪 #crossfit #crossfitpower #humanmachine #nofitnesshipster #strong #squat #deadlift #portugalweightlifting #bvdg #dosb #wirfuerd #fitness #sportportugal #teameumais #sporthilfe #roadtotokio #motivation #hipster #heavyweightcalisthenics #crossfitboys #crossfitlife #wod #eatclean #healthy #snatch #weightlifting #gym #fit (at Vila Nova de Gaia, Portugal)

2 notes
