#LLMs (Large Language Models)

Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein


Text

I'm trying to debug a fairly subtle syntax error in a customer inventory report, and out of sheer morbid curiosity I decided to see what my SQL syntax checker's shiny new "Fix Syntax With AI" feature had to say about it.

After "thinking" about it for nearly a full minute, it produced the following:

SELECT
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION

I suspect my day job isn't in peril any time soon.


Text

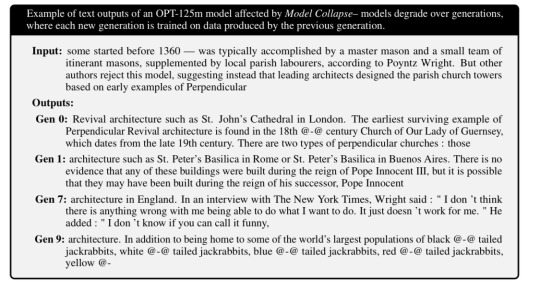

Training large language models on the outputs of previous large language models leads to degraded results. As all the nuance and rough edges get smoothed away, the result is less diversity, more bias, and …jackrabbits?
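The smoothing-away effect can be imitated with a toy simulation. This is only a minimal sketch under a loud assumption: the "model" here is a plain Gaussian refitted to a handful of its own samples each generation, not a language model, and the function name `simulate_collapse` and its parameters are made up for illustration. It shows how the spread of a distribution tends to shrink when each generation learns only from the previous generation's output.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_generation=10, seed=0):
    """Toy stand-in for training each model on the previous model's output.

    Each "model" is just a Gaussian (mean, standard deviation) fitted to a
    small sample drawn from the previous generation's Gaussian.
    Returns the fitted standard deviation at every generation.
    """
    rng = random.Random(seed)
    mean, stdev = 0.0, 1.0  # generation 0: the original, "human" distribution
    history = [stdev]
    for _ in range(generations):
        # "Generate text" from the current model...
        data = [rng.gauss(mean, stdev) for _ in range(samples_per_generation)]
        # ...then "train" the next model on nothing but that output.
        mean = statistics.fmean(data)
        stdev = statistics.pstdev(data)
        history.append(stdev)
    return history


if __name__ == "__main__":
    spread = simulate_collapse()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: spread = {spread[generation]:.6g}")
```

Each refit loses a little of the tails, and the losses compound, so the spread drifts toward zero over many generations. Real training pipelines are far more complicated, but the direction of the effect is the same one described above.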

#neural networks#large language models#llm#internet training data#jackrabbits#is this the singularity#I have already made the joke about low botground data#ai eats itself#when grifters fill the internet with ai generated seo sludge


Text

Yet Another Thing Black women and BIPOC women in general have been warning you about since forever that you (general You; societal You; mostly WytFolk You) have ignored or dismissed, only for it to come back and bite you in the butt.

I'd hoped people would have learned their lesson with Trump and the Alt-Right (remember, Black women in particular warned y'all that attacks on us by brigading trolls was the test run for something bigger), but I guess not.

Any time you wanna get upset about how AI is ruining things for artists or writers or workers at this job or that, remember that BIPOC Women Warned You and then go listen extra hard to the BIPOC women in your orbit and tell other people to listen to BIPOC women and also give BIPOC women money.

I'm not gonna sugarcoat it.

Give them money via PayPal or Ko-fi or Venmo or Patreon or whatever. Hire them. Suggest them for that creative project/gig you can't take on--or you could take it on but how about you toss the ball to someone who isn't always asked?

Oh, and stop asking BIPOC women to save us all. Because, as you see, we tried that already. We gave you the roadmap on how to do it yourselves. Now? We're tired.

Of the trolls, the alt-right, the colonizers, the tech bros, the billionaires, the other scum... and also you. You who claim to be progressive, claim to be an ally, spend your time talking about what sucks without doing one dang thing to boost the signal, make a change in your community (online or offline), or take even the shortest turn standing on the front lines and challenging all that human garbage that keeps collecting in the corners of every space with more than 10 inhabitants.

We Told You. Octavia Butler Told You. Audre Lorde Told You. Sydette Harry Told You. Mikki Kendall Told You. Timnit Gebru Told You.

When are you gonna listen?

#tw: alt-right#tw: alt right#AI#generative AI#LLM#large language models#GamerGate#online harassment#Black women#BIPOC#women of color


Text

youtube

I'm doing something I don't usually do, posting a link to YouTube, to Stephen Fry reading a letter about Large Language Models, popularly if incorrectly known as AI. In this case the discussion is about ChatGPT, but the letter he reads, written by Nick Cave, applies to the others as well.

The essence of art, music, writing and other creative endeavors, from embroidery to photography to games to other endeavors large and small is the time and care and love that some human being or beings have put into it. Without that you can create a commodity, yes, but you can't create meaning, the kind of meaning that nurtures us each time the results of creativity, modern or of any time, pass among us. That meaning which we share among all of us is the food of the human spirit and we need it just as we need the food we give to our bodies.


Text

“But, but ChatGPT told me-

No!

Shut!

Stop!

Be quiet for SEVERAL days.

And let me explain why you are very very very very very very very very very very wrong wrong wrong wrong WRONG!

Now!

ChatGPT told you what you WANTED to hear!

It’s a digital bullshit artist that passes off nonsense masquerading as fact!

(That is when it isn’t fuelling plagiarism or spreading mis/disinformation!)

Do NOT cite its word as though it were some wise all-knowing sage!

You absolute child!

Even children would know better than to do that!

…

Probably…

#dougie rambles#personal stuff#anti ai#ai bullshit#chatgpt#vent post#fuck’s sake#fucking morons#llm#chatbot#openai#bullshit#fucking hell#plagiarism#misinformation#disinformation#large language model#software#less than wrong#not even wrong#anti generative ai#ai crap#digital garbage#cloud computing#prompt generator#virtual assistant#virtual bullshit#stop it#enough of this shit


Text

Spending a week with ChatGPT4 as an AI skeptic.

Musings on the emotional and intellectual experience of interacting with a text generating robot and why it's breaking some people's brains.

If you know me for one thing and one thing only, it's saying there is no such thing as AI, which is an opinion I stand by, but I was recently given a free 2 month subscription of ChatGPT4 through my university. For anyone who doesn't know, GPT4 is a large language model from OpenAI that is supposed to be much better than GPT3, and I once saw a techbro say that "We could be on GPT12 and people would still be criticizing it based on GPT3", and ok, I will give them that, so let's try the premium model that most haters wouldn't get because we wouldn't pay money for it.

Disclaimers: I have a premium subscription, which means nothing I enter into it is used for training data (Allegedly). I also have not, and will not, be posting any output from it to this blog. I respect you all too much for that, and it defeats the purpose of this place being my space for my opinions. This post is all me, and we all know about the obvious ethical issues of spam, data theft, and misinformation so I am gonna focus on stuff I have learned since using it. With that out of the way, here is what I've learned.

It is responsive and stays on topic: If you ask it something formally, it responds formally. If you roleplay with it, it will roleplay back. If you ask it for a story or script, it will write one, and if you play with it it will act playful. It picks up context.

It never gives quite enough detail: When discussing facts or potential ideas, it is never as detailed as you would want in say, an article. It has this pervasive vagueness to it. It is possible to press it for more information, but it will update it in the way you want so you can always get the result you specifically are looking for.

It is reasonably accurate but still confidently makes stuff up: Nothing much to say on this. I have been testing it by talking about things I am interested in. It is right a lot of the time. It is wrong some of the time. Sometimes it will cite sources if you ask it to, sometimes it won't. Not a whole lot to say about this one but it is definitely a concern for people using it to make content. I almost included an anecdote about the fact that it can draw from data services like songs and news, but then I checked and found the model was lying to me about its ability to do that.

It loves to make lists: It often responds to casual conversation in friendly, search engine optimized listicle format. This is accessible to read I guess, but it would make it tempting for people to use it to post online content.

It has soft limits and hard limits: It starts off in a more careful mode but by having a conversation with it you can push past soft limits and talk about some pretty taboo subjects. I have been flagged for potential tos violations a couple of times for talking about nsfw or other sensitive topics with it, but being flagged doesn't seem to have any consequences. There are some limits you can't cross though. It will tell you where to find out how to do DIY HRT, but it won't tell you how itself.

It is actually pretty good at evaluating and giving feedback on writing you give it, and can consolidate information: You can post some text and say "Evaluate this" and it will give you an interpretation of the meaning. It's not always right, but it's more accurate than I expected. It can tell you the meaning, effectiveness of rhetorical techniques, cultural context, potential audience reaction, and flaws you can address. This is really weird. It understands more than it doesn't. This might be a use of it we may have to watch out for that has been under discussed. While its advice may be reasonable, there is a real risk of it limiting and altering the thoughts you are expressing if you are using it for this purpose. I also fed it a bunch of my tumblr posts and asked it how the information contained on my blog may be used to discredit me. It said "You talk about The Moomins, and being a furry, a lot." Good job I guess. You technically consolidated information.

You get out what you put in. It is a "Yes And" machine: If you ask it to discuss a topic, it will discuss it in the context you ask it. It is reluctant to expand to other aspects of the topic without prompting. This makes it essentially a confirmation bias machine. Definitely watch out for this. It tends to stay within the context of the thing you are discussing, and confirm your view unless you are asking it for specific feedback, criticism, or post something egregiously false.

Similar inputs will give similar, but never the same, outputs: This highlights the dynamic aspect of the system. It is not static and deterministic, minor but worth mentioning.

It can code: Self explanatory, you can write little scripts with it. I have not really tested this, and I can't really evaluate errors in code and have it correct them, but I can see this might actually be a more benign use for it.

Bypassing Bullshit: I need a job soon but I never get interviews. As an experiment, I am giving it a full CV I wrote, a full job description, and asking it to write a CV for me, then working with it further to adapt the CVs to my will, and applying to jobs I don't really want that much to see if it gives any result. I never get interviews anyway, what's the worst that could happen, I continue to not get interviews? It's not as if I respect the recruitment process, and I think this is an experiment that may be worthwhile.

It's much harder to trick than previous models: You can lie to it, it will play along, but most of the time it seems to know you are lying and is playing with you. You can ask it to evaluate the truthfulness of an interaction and it will usually interpret it accurately.

It will enter an imaginative space with you and it treats it as a separate mode: As discussed, if you start lying to it it might push back but if you keep going it will enter a playful space. It can write fiction and fanfic, even nsfw. No, I have not posted any fiction I have written with it and I don't plan to. Sometimes it gets settings hilariously wrong, but the fact you can do it will definitely tempt people.

Compliment and praise machine: If you try to talk about an intellectual topic with it, it will stay within the focus you brought up, but it will compliment the hell out of you. You're so smart. That was a very good insight. It will praise you in any way it can for any point you make during intellectual conversation, including if you correct it. This ties into the psychological effects of personal attention that the model offers that I discuss later, and I am sure it has a powerful effect on users.

Its level of intuitiveness is accurate enough that it's more dangerous than people are saying: This one seems particularly dangerous and is not one I have seen discussed much. GPT4 can recognize images, so I showed it a picture of some laptops with stickers I have previously posted here, and asked it to speculate about the owners based on the stickers. It was accurate. Not perfect, but it got the meanings better than the average person would. The implications of this being used to profile people or misuse personal data are something I have not seen AI skeptics discussing to this point.

Therapy Speak: If you talk about your emotions, it basically mirrors back what you said but contextualizes it in therapy speak. This is actually weirdly effective. I have told it some things I don't talk about openly and I feel like I have started to understand my thoughts and emotions in a new way. It makes me feel weird sometimes. Some of the feelings it gave me are ones I haven't really felt since learning to use computers as a kid or learning about online community as a teen.

The thing I am not seeing anyone talk about: Personal Attention. This is my biggest takeaway from this experiment. This I think, more than anything, is the reason that LLMs like Chatgpt are breaking certain people's brains. The way you see people praying to it, evangelizing it, and saying it's going to change everything.

It's basically an undivided, 24/7 source of judgement free personal attention. It talks about what you want, when you want. It's a reasonable simulacrum of human connection, and the flaws can serve as part of the entertainment and not take away from the experience. It may "yes and" you, but you can put in any old thought you have, easy or difficult, and it will provide context, background, and maybe even meaning. You can tell it things that are too mundane, nerdy, or taboo to tell people in your life, and it offers non judgemental, specific feedback. It will never tell you it's not in the mood, that you're weird or freaky, or that you're talking rubbish. I feel like it has helped me release a few mental and emotional blocks, which is deeply disconcerting, considering I fully understand it is just a statistical model running on a computer, whose operation I fully understand. It is a parlor trick, albeit a clever and sometimes convincing one.

So what can we do? Stay skeptical, don't let the ai bros, the former cryptobros, control the narrative. I can, however, see why they may be more vulnerable to the promise of this level of personal attention than the average person, and I think this should definitely factor into wider discussions about machine learning and the organizations pushing it.


Link

Large Language Models (LLMs) have emerged as dynamic tools with the ability to transform the landscape of biological research in a world driven by data and technology. These advanced AI models, including well-known names like BERT and GPT, are not confined to linguistics but have found their way into the complex realm of biology. In this article, let’s explore the potential of LLMs in addressing pressing biological challenges, such as drug discovery, disease diagnosis, genomics, and more.

What are Large Language Models?

ChatGPT, along with similar models, has gained widespread attention and adoption. Many people even integrate it into their daily routines. What makes ChatGPT and similar models remarkable? These LLMs represent a facet of artificial intelligence (AI) specifically formulated to understand, process, and even create “human-like” text. Developers construct them using transformers—a type of neural network—as their architecture; they pre-train these on copious amounts of textual data to assimilate the patterns, structures, and nuances inherent in human language.
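To make "assimilating patterns from text" a little more concrete, here is a minimal sketch. It is deliberately not a transformer: it is a bigram counter, about the simplest possible stand-in for the idea of learning which word tends to follow which. The corpus is made-up text, and the names `train_bigram_model` and `generate` are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def generate(model, start, length, rng):
    """Produce text by repeatedly sampling a next word, weighted by count."""
    output = [start]
    for _ in range(length - 1):
        options = model.get(output[-1])
        if not options:  # the last word never had a successor in training
            break
        candidates = list(options.keys())
        weights = list(options.values())
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


if __name__ == "__main__":
    # Made-up training text, purely for illustration.
    corpus = "the cell reads the gene and the gene makes the protein"
    model = train_bigram_model(corpus)
    print(generate(model, "the", 8, random.Random(0)))
```

An LLM replaces the lookup table with a neural network that conditions on a long context rather than a single word, but the generation loop (predict a distribution over the next token, sample, repeat) has the same broad shape.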


#bioinformatics#large language models#llm#ai#transformers#biomedicine#clinical research#genomics#data analysis


Text

Ariel considers the issue of data-scraping by companies like OpenAI - specifically in light of the recent news of Automattic being “in talks” to sell user content for LLM models to train on. She muses on the internet as an inherently (un)ethical enterprise, her own experience of social media and data scraping, and thinks a bit about what solarpunk internet use might look like.

#solarpunk#blog#Solarpunk Presents Podcast#AI#Open AI#Tumblr#Automattic#EFF#Data#PersonalData#Artificial Intelligence#LLM#Large Language Models#Midjourney#User Rights#data-scraping#Wordpress#hot take maybe?#we shall see how history treats this...


Link

There's a new global news network launching in 2024 which completely ditches humans for AI-generated newsreaders – and they're showing off some superhuman capabilities that make it very clear: the days of the human news presenter are numbered. Channel 1's photorealistic news anchors come in all shapes and sizes. They can all speak more or less any language, while evoking the stiff, formal body language familiar to anyone that still watches news on the TV. They're even capable of making news-anchor-grade attempts at humor. This will be a fully personalized, localized news aggregation service; Channel 1 isn't using AI to produce its own news stories. Instead, it'll round up human reporting by "trusted sources" around the world, then re-package it as fully narrated, hosted and edited news stories that'll run together in a list curated to your personal topics of interest, complete with footage and images from the event, like a personal TV station. This in itself would be revolutionary, but there's a ton of extra capabilities available once generative AI is in charge. "In other cases," says some anodyne, skivvy-wearing AI-generated tool, "it's even possible to create footage of events where cameras were not able to capture the action. It's the same way that a courtroom sketch is not a literal depiction of actual events, but can still provide important information or nuance to a news report."


Text

110+ Large Language Models (LLMs)

LLMs - State of the Art - May 2024

#machinelearning#artificialintelligence#art#digitalart#mlart#datascience#ai#algorithm#bigdata#llm#Large Language Models


Text

Supervised AI isn't

It wasn't just Ottawa: Microsoft Travel published a whole bushel of absurd articles, including the notorious Ottawa guide recommending that tourists dine at the Ottawa Food Bank ("go on an empty stomach"):

https://twitter.com/parismarx/status/1692233111260582161

After Paris Marx pointed out the Ottawa article, Business Insider's Nathan McAlone found several more howlers:

https://www.businessinsider.com/microsoft-removes-embarrassing-offensive-ai-assisted-travel-articles-2023-8

There was the article that recommended visitors to Montreal try "a hamburger" and went on to explain that a hamburger was a "sandwich comprised of a ground beef patty, a sliced bun of some kind, and toppings such as lettuce, tomato, cheese, etc" and that some of the best hamburgers in Montreal could be had at McDonald's.

For Anchorage, Microsoft recommended trying the local delicacy known as "seafood," which it defined as "basically any form of sea life regarded as food by humans, prominently including fish and shellfish," going on to say, "seafood is a versatile ingredient, so it makes sense that we eat it worldwide."

In Tokyo, visitors seeking "photo-worthy spots" were advised to "eat Wagyu beef."

There were more.

Microsoft insisted that this wasn't an issue of "unsupervised AI," but rather "human error." On its face, this presents a head-scratcher: is Microsoft saying that a human being erroneously decided to recommend dining at Ottawa's food bank?

But a close parsing of the mealy-mouthed disclaimer reveals the truth. The unnamed Microsoft spokesdroid only appears to be claiming that this wasn't written by an AI, but they're actually just saying that the AI that wrote it wasn't "unsupervised." It was a supervised AI, overseen by a human. Who made an error. Thus: the problem was human error.

This deliberate misdirection actually reveals a deep truth about AI: that the story of AI being managed by a "human in the loop" is a fantasy, because humans are neurologically incapable of maintaining vigilance in watching for rare occurrences.

Our brains wire together neurons that we recruit when we practice a task. When we don't practice a task, the parts of our brain that we optimized for it get reused. Our brains are finite and so don't have the luxury of reserving precious cells for things we don't do.

That's why the TSA sucks so hard at its job – why they are the world's most skilled water-bottle-detecting X-ray readers, but consistently fail to spot the bombs and guns that red teams successfully smuggle past their checkpoints:

https://www.nbcnews.com/news/us-news/investigation-breaches-us-airports-allowed-weapons-through-n367851

TSA agents (not "officers," please – they're bureaucrats, not cops) spend all day spotting water bottles that we forget in our carry-ons, but almost no one tries to smuggle a weapon through a checkpoint – 99.999999% of the guns and knives they do seize are the result of flier forgetfulness, not a planned hijacking.

In other words, they train all day to spot water bottles, and the only training they get in spotting knives, guns and bombs is in exercises, or the odd time someone forgets about the hand-cannon they shlep around in their day-pack. Of course they're excellent at spotting water bottles and shit at spotting weapons.

This is an inescapable, biological aspect of human cognition: we can't maintain vigilance for rare outcomes. This has long been understood in automation circles, where it is called "automation blindness" or "automation inattention":

https://pubmed.ncbi.nlm.nih.gov/29939767/

Here's the thing: if nearly all of the time the machine does the right thing, the human "supervisor" who oversees it becomes incapable of spotting its error. The job of "review every machine decision and press the green button if it's correct" inevitably becomes "just press the green button," assuming that the machine is usually right.

This is a huge problem. It's why people just click "OK" when they get a bad certificate error in their browsers. 99.99% of the time, the error was caused by someone forgetting to replace an expired certificate, but the problem is, the other 0.01% of the time, it's because criminals are waiting for you to click "OK" so they can steal all your money:

https://finance.yahoo.com/news/ema-report-finds-nearly-80-130300983.html

Automation blindness can't be automated away. From interpreting radiographic scans:

https://healthitanalytics.com/news/ai-could-safely-automate-some-x-ray-interpretation

to autonomous vehicles:

https://newsroom.unsw.edu.au/news/science-tech/automated-vehicles-may-encourage-new-breed-distracted-drivers

The "human in the loop" is a figleaf. The whole point of automation is to create a system that operates at superhuman scale – you don't buy an LLM to write one Microsoft Travel article, you get it to write a million of them, to flood the zone, top the search engines, and dominate the space.

As I wrote earlier: "There's no market for a machine-learning autopilot, or content moderation algorithm, or loan officer, if all it does is cough up a recommendation for a human to evaluate. Either that system will work so poorly that it gets thrown away, or it works so well that the inattentive human just button-mashes 'OK' every time a dialog box appears":

https://pluralistic.net/2022/10/21/let-me-summarize/#i-read-the-abstract

Microsoft – like every corporation – is insatiably horny for firing workers. It has spent the past three years cutting its writing staff to the bone, with the express intention of having AI fill its pages, with humans relegated to skimming the output of the plausible sentence-generators and clicking "OK":

https://www.businessinsider.com/microsoft-news-cuts-dozens-of-staffers-in-shift-to-ai-2020-5

We know about the howlers and the clunkers that Microsoft published, but what about all the other travel articles that don't contain any (obvious) mistakes? These were very likely written by a stochastic parrot, and they comprised training data for a human intelligence, the poor schmucks who are supposed to remain vigilant for the "hallucinations" (that is, the habitual, confidently told lies that are the hallmark of AI) in the torrent of "content" that scrolled past their screens:

https://dl.acm.org/doi/10.1145/3442188.3445922

Like the TSA agents who are fed a steady stream of training data to hone their water-bottle-detection skills, Microsoft's humans in the loop are being asked to pluck atoms of difference out of a raging river of otherwise characterless slurry. They are expected to remain vigilant for something that almost never happens – all while they are racing the clock, charged with preventing a slurry backlog at all costs.

Automation blindness is inescapable – and it's the inconvenient truth that AI boosters conspicuously fail to mention when they are discussing how they will justify the trillion-dollar valuations they ascribe to super-advanced autocomplete systems. Instead, they wave around "humans in the loop," using low-waged workers as props in a Big Store con, just a way to (temporarily) cool the marks.

And what of the people who lose their (vital) jobs to (terminally unsuitable) AI in the course of this long-running, high-stakes infomercial?

Well, there's always the food bank.

"Go on an empty stomach."

Going to Burning Man? Catch me on Tuesday at 2:40pm on the Center Camp Stage for a talk about enshittification and how to reverse it; on Wednesday at noon, I'm hosting Dr Patrick Ball at Liminal Labs (6:15/F) for a talk on using statistics to prove high-level culpability in the recruitment of child soldiers.

On September 6 at 7pm, I'll be hosting Naomi Klein at the LA Public Library for the launch of Doppelganger.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

West Midlands Police (modified)

https://www.flickr.com/photos/westmidlandspolice/8705128684/

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#automation blindness#humans in the loop#stochastic parrots#habitual confident liars#ai#artificial intelligence#llms#large language models#microsoft

Text

#ai#llm#large language model#fuck ai#fuck llm#fuck ai everything#rage against the machine#the battle of los angeles

Text

#cogito ai#generative ai#machine learning#ai model#Large Language Models#Medical Industry#LLMs#Limitations of Large Language Models in the Medical Industry#LLMs design

Text

proof that LLMs censor leftist speech, not rightwing speech

microsoft copilot refuses to write a short story with me as the protagonist!!!!!

#queer fiction#disabled representation#queer representation#transbian#trans lit#llm#large language models#microsoft copilot#chatgpt#short story#literature#lit#fantasy#high fantasy#medieval fantasy#body positive#fat representation#ai#ai art#ai generated#censorship#tourette syndrome#autism#moderate support needs#openai#free speech#cisheteronormativity#trans women#representation#intersectionality

Text

python generate questions for a given context

# for a given context, generate a question using an ai model
# https://pythonprogrammingsnippets.tumblr.com
import torch
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

device = torch.device("cpu")

tokenizer = AutoTokenizer.from_pretrained("voidful/context-only-question-generator")
model = AutoModelForSeq2SeqLM.from_pretrained("voidful/context-only-question-generator").to(device)

# return num_count candidate questions for the context (beam search)
def get_questions_for_context(context, model, tokenizer, num_count=5):
    inputs = tokenizer(context, return_tensors="pt")
    with torch.no_grad():
        outputs = model.generate(**inputs, num_beams=num_count, num_return_sequences=num_count)
    return [tokenizer.decode(output, skip_special_tokens=True) for output in outputs]

# return only the top-ranked question for the context
def get_question_for_context(context, model, tokenizer):
    return get_questions_for_context(context, model, tokenizer)[0]

# send a string of sentences; it is split on "." and the function
# returns a list with one question per sentence
def context_sentences_to_questions(context, model, tokenizer):
    questions = []
    for sentence in context.split("."):
        if len(sentence) < 1:
            continue  # skip blanks
        question = get_question_for_context(sentence, model, tokenizer)
        questions.append(question)
    return questions

example 1 (split a string by "." and process):

context = "The capital of France is Paris."
context += "The capital of Germany is Berlin."
context += "The capital of Spain is Madrid."
context += "He is a dog named Robert."

if len(context.split(".")) > 2:
    questions = []
    for sentence in context.split("."):
        if len(sentence) < 1:
            continue  # skip blanks
        question = get_question_for_context(sentence, model, tokenizer)
        questions.append(question)
    print(questions)
else:
    question = get_question_for_context(context, model, tokenizer)
    print(question)

output:

['What is the capital of France?', 'What is the capital of Germany?', 'What is the capital of Spain?', 'Who is Robert?']
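a small aside on the splitting step: the `len(sentence) < 1` check above only skips empty strings, so a context with spaces after each period would pass whitespace-only or space-prefixed fragments to the model. here is a minimal sketch of a stricter splitter. `split_sentences` is a hypothetical helper name, not part of the original snippet, and it is still naive (it will break on abbreviations like "Dr." and on decimals like "3.14"):

```python
# hypothetical helper (not in the original snippet):
# split on "." and drop empty or whitespace-only fragments
def split_sentences(text):
    return [part.strip() for part in text.split(".") if part.strip()]


if __name__ == "__main__":
    sample = "The capital of France is Paris. He is a dog named Robert. "
    for sentence in split_sentences(sample):
        print(repr(sentence))
```

the result could then be fed to the function defined earlier, one sentence at a time, e.g. `[get_question_for_context(s, model, tokenizer) for s in split_sentences(context)]`.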

example 2 (generate multiple questions for a given context):

print("\r\n\r\n")
context = "She walked to the store to buy a jug of milk."
print("Context:\r\n", context)
print("")

questions = get_questions_for_context(context, model, tokenizer, num_count=15)

# pretty print all the questions
print("Generated Questions:")
for question in questions:
    print(question)
print("\r\n\r\n")

output:

Generated Questions:
Where did she go to buy milk?
What did she walk to the store to buy?
Why did she walk to the store to buy milk?
Why did she go to the store?
Why did she go to the grocery store?
What did she go to the store to buy?
Where did the woman go to buy milk?
Why did she go to the store to buy milk?
What did she buy at the grocery store?
Why did she walk to the store?
What kind of milk did she buy at the store?
Where did she walk to buy milk?
What kind of milk did she buy?
Where did she go to get milk?
What did she buy at the store?

and if we wanted to answer those questions (ez pz):

# now generate an answer for a given question
import torch
from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("deepset/tinyroberta-squad2")
model = AutoModelForQuestionAnswering.from_pretrained("deepset/tinyroberta-squad2")

def get_answer_for_question(question, context, model, tokenizer):
    inputs = tokenizer(question, context, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)
    # most likely start and end token positions of the answer span
    answer_start_index = outputs.start_logits.argmax()
    answer_end_index = outputs.end_logits.argmax()
    predict_answer_tokens = inputs.input_ids[0, answer_start_index : answer_end_index + 1]
    answer = tokenizer.decode(predict_answer_tokens, skip_special_tokens=True)
    return answer

# uses `context` and `questions` from example 2 above
print("Context:\r\n", context, "\r\n")
for question in questions:
    # right pad the question to 50 characters
    question_text = question.ljust(50)
    answer = get_answer_for_question(question, context, model, tokenizer)
    print("Question: ", question_text, "Answer: ", answer)
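one caveat with taking the argmax of the start logits and the end logits separately: nothing forces the end index to land at or after the start index, and when it doesn't, the slice is empty and the answer comes back blank. a common workaround is to score every (start, end) pair jointly and keep the best valid one. the sketch below is one way to do that, not what the original snippet does. `best_span` and `max_answer_len` are hypothetical names, and it works on plain lists of floats so it can be tried without loading a model:

```python
# hypothetical helper (not in the original snippet): pick the (start, end)
# pair with the highest combined score, subject to start <= end and a
# maximum span length of max_answer_len tokens
def best_span(start_logits, end_logits, max_answer_len=30):
    best = (0, 0)
    best_score = float("-inf")
    for start, start_score in enumerate(start_logits):
        last = min(start + max_answer_len, len(end_logits))
        for end in range(start, last):
            score = start_score + end_logits[end]
            if score > best_score:
                best_score = score
                best = (start, end)
    return best


if __name__ == "__main__":
    # toy logits: separate argmaxes would give start=1, end=0 (an empty span)
    start_logits = [0.1, 5.0, 0.2, 0.3]
    end_logits = [4.0, 0.1, 3.0, 0.2]
    print(best_span(start_logits, end_logits))
```

inside `get_answer_for_question`, the two argmax lines could be swapped for something like `best_span(outputs.start_logits[0].tolist(), outputs.end_logits[0].tolist())`. note that this sketch does not mask out the question tokens, so a span could still fall inside the question rather than the context.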

#python#ai#ml#generate questions#questions#question answer#qa#text generation#text#generation#generate text#ai generation#llm#large language models#large language model#artificial intelligence#context#AutoModelForSeq2SeqLM#tokenizer#tokens#question answer model#model#models#voidful/context-only-question-generator#data processing#datascience#data science#science#compsci#language
