#these are the people mentioned by name

Text

Life Series AU

Wrote some lore about the life series characters as Gods with Witchcraft SMP in mind. It got out of hand, and now I have a 1.4k story below.

As everyone knows, from the oldest, crankiest elder to the youngest, brightest toddler, magic is a part of this world as surely as Winter melts into Spring.

We can see it in people, like the illusionist who passed by the town with the circus group. In the wild, we can see it flitting between the trees, lighting up the world. It is in the ground, seeping up when it is called.

But we haven’t always had magic. Once, the only ones to possess it were the Gods.

Seventeen of them, each with a different domain. They lived in harmony, watching over the world, creating and destroying as they saw fit. Whenever they wished to meet, they met around the Watching Crystal. None of them knew what it was, only that if any of them died, it would store their powers and souls. Of course, they had varying ways of interacting with mortals. For instance, the Sky God liked to reveal himself to humans in the hope that they would worship him. He was a fickle god, that one. Or the God of Fire, who played deadly games with life-changing prizes.

Among them was the Starborne. He had perhaps the strangest and least explainable gift. He was the first prophet. Though he often advocated for humans to get a taste of divine magic, he was usually overruled.

One day, he had a vision. The Scarlet Witch, the harbinger of death, was standing in a clearing, ichor dripping from her scythes.

Now, the Scarlet Witch was an enigma to most of the others, almost never turning up to the meetings the other sixteen called. She was a grim woman who often laughed at the suffering of those around her. She was hard to get along with, so most of the other Gods shunned her, with only three exceptions. The God of Emotions, also known as the patron of bakers, was sympathetic to her. The Lady of Time was her partner in chaos. And the Starborne was her closest friend, her confidant. If anyone was going to be on her side, it would be him.

When the Starborne received this vision of things to come, he naturally became scared. His trusted ally, who had a reputation for being unhinged, was going to kill another God. So he did what he always did, and sketched out what he had seen to flesh out all the important details.

The Sky God had been in a silly, goofy mood, so he decided he was going to snoop around. His first target: a certain prophet. He walked into the room where all the visions were gathered. And lo and behold: in the center of the pictures of prophecies was the recently sketched vision, the one that foretold the death of at least one God.

This scared the Sky God. He had never liked the Scarlet Witch. She was always a little too chaotic, a little too bloody for his liking. So, he decided to make sure this prophecy wouldn’t come true. He called a meeting with as many Gods as he could. Under the light of the Watching Crystal, he wove the tale of what was to come. Seeing as the Scarlet Witch had not been invited, and the Starborne had not made an appearance, she had no warning of the prophecy. After gathering five of his most trusted friends, he went after her.

It was a close fight. It is said that the famous scar over the Scarlet Witch’s eye was made during that battle. None of the Gods that fought were weak. But the Scarlet Witch was Death itself, ready to collect a divine life at long last. The Canary, the God of Luck, was the first to fall. Two more of them were slain before the Sky God called for a retreat that ultimately failed. The Queen of Shadows was cut down, and her husband was killed moments later. The final attacker, the God of Chaos, died of his injuries soon after.

After that, she disappeared for a few years. Alone in a forest in the North, she had a lot of time to think, to reflect. She knew that if a God died, their powers would be stored in the Watching Crystal. And the seventeen - well, eleven now - of them were far from orderly. If there were fewer of them, they could rule more effectively. Besides, they could probably find a way to access the powers of the Gods who were deemed unfit through the Crystal. So she watched and waited for someone to find her. Death is patient, after all.

The other Gods were scared. The Scarlet Witch was one of the most formidable among them, and would not listen to anything they had to say. So, they decided to try and flush her out of hiding by using her best friend. Some of them wanted the Starborne dead, because their friends and loved ones were gone. Others wanted to use him as a hostage. So they went to his board of visions, where all of his visions were recorded. He would be there, after all. The Lady of Time tried to stall the others, back them down. But the desperation and fear had caught up to them, and they forced past her.

They went into the room he used, covered with notes and pictures and scattered papers. On the table in the center, they saw a picture. It was a mid-action shot of the Starborne exploding in a rush of light. It is said that he was smiling. There was neither hide nor hair of him anywhere. So they returned to the search for the threat, and one or two expeditions were successful. But they did not consider that anyone they sent could die as well.

The God of Illusions’ powers of persuasion couldn’t save him when he needed them the most. The God of Emotions was in the wrong place at the wrong time, and couldn’t back down his former friend. Then came three more deaths. Then the Scarlet Witch emerged from hiding to cut down two more.

Finally, the Lady of Time had had enough. There were only four Gods left. Knowing that the Starborne would not be helpful in a fight, even if they could find him and convince him to help kill his best friend, the Lady banded together with the Runemaster to end the threat at last.

The Runemaster used his powers of Listening to find the Scarlet Witch. Together, they tracked her down to the forest where she had spent so much time. The three of them fought. It was a hard, bitter battle. In the end, ichor dripping from her scythes, the Scarlet Witch claimed a hard-earned victory.

She celebrated her success. There would be no more chaos now that it was just her. There would be no more wars, no more extortion of the mortals by any Gods. The only divine force would be Death, which would take without remorse.

Then she remembered that she was not alone. There was still one more God. And she knew just where to find him.

Nobody knows what would have happened if the Starborne had backed down. Maybe they would have ruled side by side. Maybe he would have been killed for lack of loyalty.

But then the Scarlet Witch went to the Watching Crystal to meet the only being that could compare to her. The inside of the Crystal pulsed with moving lights, as if the souls trapped inside were still alive, waiting to be released.

The Scarlet Witch went in there, sure she’d win again. But she was not up against a soul that wished to remain in this world. The Starborne believed that neither of them deserved life. He had been a coward who ran away instead of helping his friends, and their blood still stained the Witch’s red cloak. So he smiled as he activated his secret power. When he wished to die, he would explode, taking everything in the nearby radius down with him.

The Watching Crystal shattered, then fell down, down, down. The pieces scattered all across the lands, seeping magic into the earth. The magic of the Gods started to exhibit itself in nearby mortals, the first magic users.

But Gods do not die that easily, some say. There are whispers that they could be reborn, into mortal bodies like ours. We may be walking among them right now.

Of course, those are just myths. Nobody is optimistic or pessimistic enough to believe in such tales. The Gods are dead. We all go to Death, even the Reaper herself.

Nobody knows why we do not have prophets. Maybe the Starborne decided not to pass down his gift, knowing the burden it placed upon the holder. But to this day, the magic of Death, necromancy, has been shunned from all parts of society. And in honor of the catalyst of this whole mess, we call ourselves witches.

So, if you ever see magic, remember how it got here and who it came from. Because every good thing has a price. This one was paid in divine blood.

#double life smp#trafficblr#life series#pearlescentmoon#scott smajor#zombiecleo#tangotek#smallishbeans#ldshadowlady#grian#goodtimeswithscar#inthelittlewood#solidaritygaming#witchcraft smp#bigbstatz#bigbst4tz2#these are the people mentioned by name

65 notes

Text

this post sucks so bad massachusetts takes its name from the indigenous massachusett people who were genocided and whose land was stolen and that would be obvious if you would think for a single second and look up the etymology before posting. mocking a native language that was eradicated for centuries and is only now beginning to be revived is not fucking funny it is ignorant and racist and cruel

#ribbits#genocide mention#i am actually quite mad that that has 10k notes. scrolled through the notes and only saw one correction#someone asked ‘why did the founding fathers name it that’ first of all the fucking people who were already living here named it that#hundreds of years before this country was founded. second the first colonist to call it massachusetts was fucking john smith in 1610

6K notes

Text

hi heres art so you pay attention to me now go read the tags

#ive been rewatching episode 7 like its the only thing on youtube and made note of many things#first off. the solver can only have one host. nori mentions skyn wants to kill off all the other solver hosts (with the dds) and disregards#the idea of both uzi and doll being its current host when they get chased#plus the main solver possessions only occur when skyn is out of the picture (the fight in ep 7 is only after n decapitates “tessa”)#solver uzi is possible too but i dont count her cause she doesn't have the yellow#personal theory is that its more an instinctual response to overheating or something and not full on possession#second off nori calls the solver cyn. how does she know that name#cyn was on earth and only showed up to copper 9 recently and i presume nori's been here her whole life#it probably wasn't the other dds cause none of them made it down and they're all more savage beasts#since cyn specifies n's team retained their personalities and that makes me think the other teams didnt#also also we should've immediately questioned tessa arriving in the same type of pod as the mds when they were revealed to not be sent by j#im running out of characters also the people who dont like when i use tags like this can bite me#murder drones#murder drones nori#artori? that sounds cool#ill probably just stick with nori though#i have so many solver heart refs now#art#episode 7#murder drones episode 7#murder drones episode 7 spoilers#using the same black for shadows as my lineart doesn't work when i have to draw thin things over it#murder drones spoilers

1K notes

Text

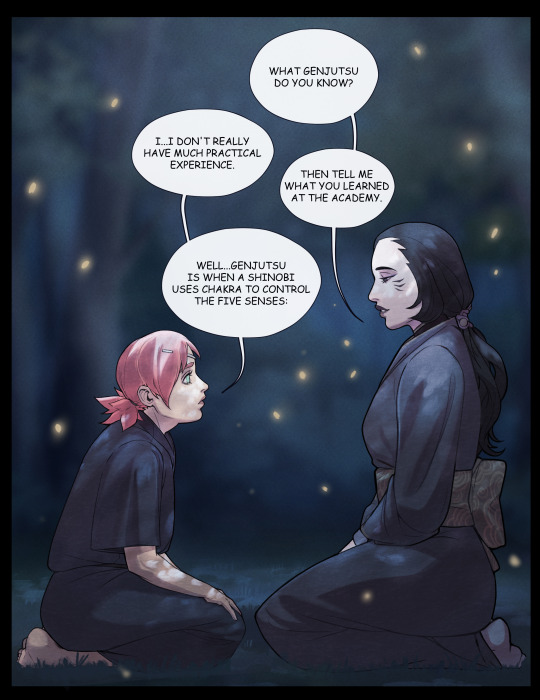

Sakura gets her first lesson in Genjutsu with Saeko. Based on the idea that humans have more than 5 basic senses (though there's no real science behind how many, with some saying 21, while others say up to 33).

Saeko is not actually a Hoshigaki- though they are very very distantly related. She is actually a Ningyo (mermaid) exiled from her coven, and living as a pariah, cursed to wander the earth, barred from the seas and rivers until she can get her pearl back.

As a mermaid-siren, she is intimately aware of the power of genjutsu (especially audial) and is a vicious teacher, forcing Sakura through rigorous training to teach her young charge how to understand and control her own senses/emotions before she attempts to do so on others.

Once again, thank you so much to everyone who's shown interest in this AU, you guys are giving me LIFE <3 <3. I'm so happy other people really feel that Sakura deserved a fighting chance. Together, we'll fix her!!!

#naruto#haruno sakura#saeko#baby's first genjutsu lesson#sakura learns how to perform tea ceremonies#if there's anything that would test her control it would be court customs#oh yeah did i mention we have covens in this AU?#we're pivoting from medic-nin to shaman-nin because no one told me i couldn't#I feel like Sakura could absolutely wreak havoc with people's sense of time space and gravity#me and unhinged women- name a more iconic duo i'll wait#coven!Sakura

1K notes

Text

Phil: So apparently, me talking about Tajín the other day got the attention of Roier's girlfriend, and she messaged Kristin and was like, "Oh, if Phil really likes Tajín, he might try this!" and it's called "Miguelito" I think? It's like a sweeter version of Tajín, almost. So I ordered some, it wasn't cheap, and- here you go– [Phil holds the small orange packet up to the camera] I haven't tried it yet. Gonna try it. It might be my new favorite.

Sabi (Roier's girlfriend) recommending new Mexican treats and spices to Phil is so sweet 🥺💕

#Philza#Phil#Sabi#Phil plays Hardcore#IM SO EMBARRASSED. I ACCIDENTALLY CALLED HER MAFER FOR A SECOND#THAT'S MISSA'S GIRLFRIEND#listen man it takes me months to learn people's names#OTL#April 24 2024#anyways whenever Phil (or any of the streamers I watch) mention Mexican stuff I get so happy

275 notes

Text

information flow in transformers

In machine learning, the transformer architecture is a very commonly used type of neural network model. Many of the well-known neural nets introduced in the last few years use this architecture, including GPT-2, GPT-3, and GPT-4.

This post is about the way that computation is structured inside of a transformer.

Internally, these models pass information around in a constrained way that feels strange and limited at first glance.

Specifically, inside the "program" implemented by a transformer, each segment of "code" can only access a subset of the program's "state." If the program computes a value, and writes it into the state, that doesn't make the value available to any block of code that might run after the write; instead, only some operations can access the value, while others are prohibited from seeing it.

This sounds vaguely like the kind of constraint that human programmers often put on themselves: "separation of concerns," "no global variables," "your function should only take the inputs it needs," that sort of thing.

However, the apparent analogy is misleading. The transformer constraints don't look much like anything that a human programmer would write, at least under normal circumstances. And the rationale behind them is very different from "modularity" or "separation of concerns."

(Domain experts know all about this already -- this is a pedagogical post for everyone else.)

1. setting the stage

For concreteness, let's think about a transformer that is a causal language model.

So, something like GPT-3, or the model that wrote text for @nostalgebraist-autoresponder.

Roughly speaking, this model's input is a sequence of words, like ["Fido", "is", "a", "dog"].

Since the model needs to know the order the words come in, we'll include an integer offset alongside each word, specifying the position of this element in the sequence. So, in full, our example input is

[

("Fido", 0),

("is", 1),

("a", 2),

("dog", 3),

]

The model itself -- the neural network -- can be viewed as a single long function, which operates on a single element of the sequence. Its task is to output the next element.

Let's call the function f. If f does its job perfectly, then when applied to our example sequence, we will have

f("Fido", 0) = "is"

f("is", 1) = "a"

f("a", 2) = "dog"

(Note: I've omitted the index from the output type, since it's always obvious what the next index is.

Also, in reality the output type is a probability distribution over words, not just a word; the goal is to put high probability on the next word. I'm ignoring this to simplify exposition.)
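To make the target behavior concrete: the pairs a perfect f would reproduce can be read straight off any text by shifting it one position. A minimal Python sketch follows; `next_word_pairs` is a hypothetical helper name, and it returns single words rather than probability distributions, following the simplification above.

```python
def next_word_pairs(words: list[str]) -> list[tuple[tuple[str, int], str]]:
    """Pair each (word, offset) input with the word that follows it in the text."""
    # The last word has no successor, so it produces no pair.
    return [((word, i), words[i + 1]) for i, word in enumerate(words[:-1])]


pairs = next_word_pairs(["Fido", "is", "a", "dog"])
for (word, i), target in pairs:
    print(f'f("{word}", {i}) = "{target}"')
```

Each pair's input is a single word and offset, even though the correct target may depend on words that appeared earlier.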

You may have noticed something: as written, this seems impossible!

Like, how is the function supposed to know that after ("a", 2), the next word is "dog"!? The word "a" could be followed by all sorts of things.

What makes "dog" likely, in this case, is the fact that we're talking about someone named "Fido."

That information isn't contained in ("a", 2). To do the right thing here, you need info from the whole sequence thus far -- from "Fido is a", as opposed to just "a".

How can f get this information, if its input is just a single word and an index?

This is possible because f isn't a pure function. The program has an internal state, which f can access and modify.

But f doesn't just have arbitrary read/write access to the state. Its access is constrained, in a very specific sort of way.

2. transformer-style programming

Let's get more specific about the program state.

The state consists of a series of distinct "memory regions" or "blocks," which have an order assigned to them.

Let's use the notation memory_i for these. The first block is memory_0, the second is memory_1, and so on.

In practice, a small transformer might have around 10 of these blocks, while a very large one might have 100 or more.

Each block contains a separate data-storage "cell" for each offset in the sequence.

For example, memory_0 contains a cell for position 0 ("Fido" in our example text), and a cell for position 1 ("is"), and so on. Meanwhile, memory_1 contains its own, distinct cells for each of these positions. And so does memory_2, etc.

So the overall layout looks like:

memory_0: [cell 0, cell 1, ...]

memory_1: [cell 0, cell 1, ...]

[...]

Our function f can interact with this program state. But it must do so in a way that conforms to a set of rules.

Here are the rules:

- The function can only interact with the blocks by using a specific instruction.
  - This instruction is an "atomic write+read".
  - It writes data to a block, then reads data from that block for f to use.
  - When the instruction writes data, it goes in the cell specified by the function's offset argument. That is, the "i" in f(..., i).
  - When the instruction reads data, the data comes from all cells up to and including the offset argument.
- The function must call the instruction exactly once for each block.
- These calls must happen in order.
  - For example, you can't do the call for memory_1 until you've done the one for memory_0.
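The rules above can be encoded as a small Python sketch that raises an error whenever a call breaks them. This is a hypothetical illustration: `ProgramState`, `Invocation`, `write_read` and `finish` are invented names, not part of any real library, and cells hold arbitrary Python values instead of vectors. The check that earlier cells are already filled is an extra sanity check, not one of the stated rules.

```python
class ProgramState:
    """Hypothetical sketch of the state: ordered blocks, one cell per sequence offset."""

    def __init__(self, num_blocks: int, seq_len: int):
        # blocks[b][i] is cell i of memory_b; None means "not written yet".
        self.blocks = [[None] * seq_len for _ in range(num_blocks)]

    def invocation(self, i: int) -> "Invocation":
        """Start a call f(..., i); the returned handle enforces the access rules."""
        return Invocation(self, i)


class Invocation:
    def __init__(self, state: ProgramState, i: int):
        self.state = state
        self.i = i
        self.next_block = 0  # blocks are used strictly in order: memory_0, memory_1, ...

    def write_read(self, value):
        """Atomic write+read on the next block in order."""
        if self.next_block >= len(self.state.blocks):
            raise RuntimeError("each block may be used only once per call")
        block = self.state.blocks[self.next_block]
        self.next_block += 1
        block[self.i] = value              # write: only cell i
        visible = block[: self.i + 1]      # read: cells 0..i inclusive
        if any(cell is None for cell in visible):
            raise RuntimeError("an earlier position has not written this block yet")
        return visible

    def finish(self):
        """Check the 'exactly once per block' rule at the end of the call."""
        if self.next_block != len(self.state.blocks):
            raise RuntimeError("every block must be used exactly once")


state = ProgramState(num_blocks=2, seq_len=3)
first = state.invocation(0)
print(first.write_read("A"))   # ['A']
print(first.write_read("B"))   # ['B']
first.finish()
second = state.invocation(1)
print(second.write_read("C"))  # ['A', 'C']: cell 0 was written by the earlier call
```

Because the block index is never passed in, a call cannot skip ahead to a later block; the only way to reach memory_1 is to go through memory_0 first.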

Here's some pseudo-code, showing a generic computation of this kind:

f(x, i) {

calculate some things using x and i;

// next 2 lines are a single instruction

write to memory_0 at position i;

z0 = read from memory_0 at positions 0...i;

calculate some things using x, i, and z0;

// next 2 lines are a single instruction

write to memory_1 at position i;

z1 = read from memory_1 at positions 0...i;

calculate some things using x, i, z0, and z1;

[etc.]

}
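The pseudo-code above can be turned into a small runnable Python sketch. Everything numeric here is an assumption made for illustration: words become integers through a made-up `embed` function, each "calculate some things" step is an arbitrary arithmetic function (`calc`), `MOD` and `NUM_BLOCKS` are invented constants, and the output is a number rather than a word. What the sketch does preserve is the access pattern: per block, one write to cell i and one read of cells 0..i, in block order.

```python
MOD = 1009        # keeps the toy integers small
NUM_BLOCKS = 3    # hypothetical; real models have many more blocks


def embed(x: str, i: int) -> int:
    """Toy stand-in for 'calculate some things using x and i'."""
    return (sum(map(ord, x)) * 31 + i) % MOD


def calc(h: int, b: int) -> int:
    """Toy stand-in for the computation that produces block b's write."""
    return (h * (b + 3) + 7) % MOD


def f(x: str, i: int, memory: list[list]) -> int:
    h = embed(x, i)                   # uses only x and i
    for b in range(NUM_BLOCKS):       # memory_0, memory_1, ... in order, once each
        memory[b][i] = calc(h, b)     # write to memory_b at position i
        z = memory[b][: i + 1]        # read from memory_b at positions 0...i
        h = (h + sum(z)) % MOD        # later steps see x, i and every z read so far
    return h                          # a number standing in for the predicted word


words = ["Fido", "is", "a", "dog"]
memory = [[None] * len(words) for _ in range(NUM_BLOCKS)]
outputs = [f(word, i, memory) for i, word in enumerate(words)]
```

After this runs, `memory[0][2]` equals `calc(embed("a", 2), 0)`: the memory_0 cell for ("a", 2) was computed from "a" and 2 alone, whatever words came before it.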

The rules impose a tradeoff between the amount of processing required to produce a value, and how early the value can be accessed within the function body.

Consider the moment when data is written to memory_0. This happens before anything is read (even from memory_0 itself).

So the data in memory_0 has been computed only on the basis of individual inputs like ("a", 2). It can't leverage any information about multiple words and how they relate to one another.

But just after the write to memory_0, there's a read from memory_0. This read pulls in data computed by f when it ran on all the earlier words in the sequence.

If we're processing ("a", 2) in our example, then this is the point where our code is first able to access facts like "the word 'Fido' appeared earlier in the text."

However, we still know less than we might prefer.

Recall that memory_0 gets written before anything gets read. The data living there only reflects what f knows before it can see all the other words, while it still only has access to the one word that appeared in its input.

The data we've just read does not contain a holistic, "fully processed" representation of the whole sequence so far ("Fido is a"). Instead, it contains:

a representation of ("Fido", 0) alone, computed in ignorance of the rest of the text

a representation of ("is", 1) alone, computed in ignorance of the rest of the text

a representation of ("a", 2) alone, computed in ignorance of the rest of the text

Now, once we get to memory_1, we will no longer face this problem. Stuff in memory_1 gets computed with the benefit of whatever was in memory_0. The step that computes it can "see all the words at once."

Nonetheless, the whole function is affected by a generalized version of the same quirk.

All else being equal, data stored in later blocks ought to be more useful. Suppose for instance that

memory_4 gets read/written 20% of the way through the function body, and

memory_16 gets read/written 80% of the way through the function body

Here, strictly more computation can be leveraged to produce the data in memory_16. Calculations which are simple enough to fit in the program, but too complex to fit in just 20% of the program, can be stored in memory_16 but not in memory_4.

All else being equal, then, we'd prefer to read from memory_16 rather than memory_4 if possible.

But in fact, we can only read from memory_16 once -- at a point 80% of the way through the code, when the read/write happens for that block.

The general picture looks like:

The early parts of the function can see and leverage what got computed earlier in the sequence -- by the same early parts of the function.

This data is relatively "weak," since not much computation went into it. But, by the same token, we have plenty of time to further process it.

The late parts of the function can see and leverage what got computed earlier in the sequence -- by the same late parts of the function.

This data is relatively "strong," since lots of computation went into it. But, by the same token, we don't have much time left to further process it.

3. why?

There are multiple ways you can "run" the program specified by f.

Here's one way, which is used when generating text, and which matches popular intuitions about how language models work:

First, we run f("Fido", 0) from start to end.

The function returns "is."

As a side effect, it populates cell 0 of every memory block.

Next, we run f("is", 1) from start to end.

The function returns "a."

As a side effect, it populates cell 1 of every memory block.

Etc.

If we're running the code like this, the constraints described earlier feel weird and pointlessly restrictive.

By the time we're running f("is", 1), we've already populated some data into every memory block, all the way up to memory_16 or whatever.

This data is already there, and contains lots of useful insights.

And yet, during the function call f("is", 1), we "forget about" this data -- only to progressively remember it again, block by block. The early parts of this call have only memory_0 to play with, and then memory_1, etc. Only at the end do we allow access to the juicy, extensively processed results that occupy the final blocks.

Why? Why not just let this call read memory_16 immediately, on the first line of code? The data is sitting there, ready to be used!

Why? Because the constraint enables a second way of running this program.

The second way is equivalent to the first, in the sense of producing the same outputs. But instead of processing one word at a time, it processes a whole sequence of words, in parallel.

Here's how it works:

In parallel, run f("Fido", 0) and f("is", 1) and f("a", 2), up until the first write+read instruction.

You can do this because the functions are causally independent of one another, up to this point.

We now have 3 copies of f, each at the same "line of code": the first write+read instruction.

Perform the write part of the instruction for all the copies, in parallel.

This populates cells 0, 1 and 2 of memory_0.

Perform the read part of the instruction for all the copies, in parallel.

Each copy of f receives some of the data just written to memory_0, covering offsets up to its own.

For instance, f("is", 1) gets data from cells 0 and 1.

In parallel, continue running the 3 copies of f, covering the code between the first write+read instruction and the second.

Perform the second write.

This populates cells 0, 1 and 2 of memory_1.

Perform the second read.

Repeat like this until done.
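Both execution modes can be written out side by side in a toy Python sketch, with a check that they agree. The arithmetic is an assumption made purely for illustration (`embed`, `calc`, `MOD` and `NUM_BLOCKS` are invented stand-ins for a real network). Within each phase of `run_parallel`, the iterations over positions do not depend on one another, so that is the part an accelerator could execute simultaneously; plain Python still runs those iterations one at a time. Only the loop over blocks has to stay sequential.

```python
MOD = 1009
NUM_BLOCKS = 3  # hypothetical number of memory blocks


def embed(x: str, i: int) -> int:
    # Toy stand-in for the computation before the first write+read.
    return (sum(map(ord, x)) * 31 + i) % MOD


def calc(h: int, b: int) -> int:
    # Toy stand-in for the computation that produces block b's write.
    return (h * (b + 3) + 7) % MOD


def run_sequential(words: list[str]) -> list[int]:
    """First mode: run f to completion on one position before starting the next."""
    memory = [[None] * len(words) for _ in range(NUM_BLOCKS)]
    outputs = []
    for i, x in enumerate(words):
        h = embed(x, i)
        for b in range(NUM_BLOCKS):
            memory[b][i] = calc(h, b)                 # write cell i of memory_b
            h = (h + sum(memory[b][: i + 1])) % MOD   # read cells 0..i
        outputs.append(h)
    return outputs


def run_parallel(words: list[str]) -> list[int]:
    """Second mode: advance every copy of f together, one write+read at a time."""
    n = len(words)
    memory = [[None] * n for _ in range(NUM_BLOCKS)]
    # All copies run up to the first write+read; they are independent so far.
    hs = [embed(x, i) for i, x in enumerate(words)]
    for b in range(NUM_BLOCKS):
        # Write phase: every copy writes its own cell of memory_b.
        for i in range(n):
            memory[b][i] = calc(hs[i], b)
        # Read phase: copy i reads cells 0..i, then computes up to the next instruction.
        hs = [(hs[i] + sum(memory[b][: i + 1])) % MOD for i in range(n)]
    return hs


words = ["Fido", "is", "a", "dog"]
assert run_sequential(words) == run_parallel(words)
```

The two functions execute the same operations on the same values, only in a different order, which is why their outputs match exactly.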

Observe that this mode of operation only works if you have a complete input sequence ready before you run anything.

(You can't parallelize over later positions in the sequence if you don't know, yet, what words they contain.)

So, this won't work when the model is generating text, word by word.

But it will work if you have a bunch of texts, and you want to process those texts with the model, for the sake of updating the model so it does a better job of predicting them.

This is called "training," and it's how neural nets get made in the first place. In our programming analogy, it's how the code inside the function body gets written.

The fact that we can train in parallel over the sequence is a huge deal, and probably accounts for most (or even all) of the benefit that transformers have over earlier architectures like RNNs.

Accelerators like GPUs are really good at doing the kinds of calculations that happen inside neural nets, in parallel.

So if you can make your training process more parallel, you can effectively multiply the computing power available to it, for free. (I'm omitting many caveats here -- see this great post for details.)

Transformer training isn't maximally parallel. It's still sequential in one "dimension," namely the layers, which correspond to our write+read steps here. You can't parallelize those.

But it is, at least, parallel along some dimension, namely the sequence dimension.

The older RNN architecture, by contrast, was inherently sequential along both these dimensions. Training an RNN is, effectively, a nested for loop. But training a transformer is just a regular, single for loop.
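One way to make "sequential along one dimension vs. both" concrete is to count the longest chain of steps that must happen one after another, treating each (layer, position) pair as one step. This is a simplified dependency model assumed here, not a cost model of real hardware: the RNN rule assumes each step needs the layer below and its own previous position, and the transformer rule assumes each step needs only the layer below, at positions up to its own. The function names are hypothetical.

```python
from typing import Callable, Iterable

Step = tuple[int, int]  # (layer, position)


def critical_path(num_layers: int, seq_len: int,
                  deps: Callable[[int, int], Iterable[Step]]) -> int:
    """Length of the longest chain of steps that must run one after another."""
    depth = [[0] * seq_len for _ in range(num_layers)]
    # Layers outer, positions inner is a valid order: every dependency lies in an
    # earlier layer, or in the same layer at an earlier position.
    for layer in range(num_layers):
        for pos in range(seq_len):
            depth[layer][pos] = 1 + max(
                (depth[dl][dp] for dl, dp in deps(layer, pos)), default=0)
    return max(max(row) for row in depth)


def rnn_deps(layer: int, pos: int) -> list[Step]:
    # Needs the layer below at this position, and this layer at the previous position.
    below = [(layer - 1, pos)] if layer > 0 else []
    previous = [(layer, pos - 1)] if pos > 0 else []
    return below + previous


def transformer_deps(layer: int, pos: int) -> list[Step]:
    # Needs only the layer below, at positions 0..pos.
    return [(layer - 1, p) for p in range(pos + 1)] if layer > 0 else []


print(critical_path(4, 10, rnn_deps))          # 13: grows with sequence length
print(critical_path(4, 10, transformer_deps))  # 4: one step per layer
```

Run as a literal nested loop, the RNN takes num_layers × seq_len steps (40 here), and even an ideal schedule cannot get below the chain length, which still grows with the sequence. The transformer's chain depends only on the number of layers.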

4. tying it together

The "magical" thing about this setup is that both ways of running the model do the same thing. You are, literally, doing the same exact computation. The function can't tell whether it is being run one way or the other.

This is crucial, because we want the training process -- which uses the parallel mode -- to teach the model how to perform generation, which uses the sequential mode. Since both modes look the same from the model's perspective, this works.

This constraint -- that the code can run in parallel over the sequence, and that this must do the same thing as running it sequentially -- is the reason for everything else we noted above.

Earlier, we asked: why can't we allow later (in the sequence) invocations of f to read earlier data out of blocks like memory_16 immediately, on "the first line of code"?

And the answer is: because that would break parallelism. You'd have to run f("Fido", 0) all the way through before even starting to run f("is", 1).

By structuring the computation in this specific way, we provide the model with the benefits of recurrence -- writing things down at earlier positions, accessing them at later positions, and writing further things down which can be accessed even later -- while breaking the sequential dependencies that would ordinarily prevent a recurrent calculation from being executed in parallel.

In other words, we've found a way to create an iterative function that takes its own outputs as input -- and does so repeatedly, producing longer and longer outputs to be read off by its next invocation -- with the property that this iteration can be run in parallel.

We can run the first 10% of every iteration -- of f() and f(f()) and f(f(f())) and so on -- at the same time, before we know what will happen in the later stages of any iteration.

The call f(f()) uses all the information handed to it by f() -- eventually. But it cannot make any requests for information that would leave itself idling, waiting for f() to fully complete.

Whenever f(f()) needs a value computed by f(), it is always the value that f() -- running alongside f(f()), simultaneously -- has just written down, a mere moment ago.

No dead time, no idling, no waiting-for-the-other-guy-to-finish.

p.s.

The "memory blocks" here correspond to what are called "keys and values" in usual transformer lingo.

If you've heard the term "KV cache," it refers to the contents of the memory blocks during generation, when we're running in "sequential mode."

Usually, during generation, one keeps this state in memory and appends a new cell to each block whenever a new token is generated (and, as a result, the sequence gets longer by 1).

This is called "caching" to contrast it with the worse approach of throwing away the block contents after each generated token, and then re-generating them by running f on the whole sequence so far (not just the latest token). And then having to do that over and over, once per generated token.
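The cost difference between the two approaches shows up as a count of calls to f. Below is a toy Python sketch in which the model is made-up arithmetic rather than a real network (tokens are small integers, and `f_step`, `generate_cached`, `generate_uncached`, `MOD`, `VOCAB` and `NUM_BLOCKS` are hypothetical names). It assumes a non-empty prompt. Both generators produce the same tokens; only the amount of recomputation differs. The cached version appends one cell to each block per generated token, as described above.

```python
NUM_BLOCKS = 3
MOD = 1009
VOCAB = 50  # toy vocabulary: tokens are the integers 0..49


def f_step(tok: int, i: int, memory: list[list[int]]) -> int:
    """One call f(tok, i): appends cell i to every block, reads cells 0..i."""
    h = (tok * 31 + i) % MOD
    for b, block in enumerate(memory):
        assert len(block) == i, "cells must be filled in position order"
        block.append((h * (b + 3) + 7) % MOD)  # write cell i
        h = (h + sum(block[: i + 1])) % MOD     # read cells 0..i
    return h % VOCAB                            # toy "next token"


def generate_cached(prompt: list[int], n_new: int) -> tuple[list[int], int]:
    memory = [[] for _ in range(NUM_BLOCKS)]    # the "KV cache", kept across steps
    seq, calls = list(prompt), 0
    for i in range(len(prompt) - 1):           # cells for all but the last prompt token
        f_step(seq[i], i, memory)
        calls += 1
    for _ in range(n_new):
        i = len(seq) - 1                        # only the newest token needs a call
        seq.append(f_step(seq[i], i, memory))
        calls += 1
    return seq, calls


def generate_uncached(prompt: list[int], n_new: int) -> tuple[list[int], int]:
    seq, calls = list(prompt), 0
    for _ in range(n_new):
        memory = [[] for _ in range(NUM_BLOCKS)]  # thrown away and rebuilt every step
        for i, tok in enumerate(seq):
            nxt = f_step(tok, i, memory)
            calls += 1
        seq.append(nxt)
    return seq, calls


print(generate_cached([7, 3, 12], 5)[1])    # 7 calls
print(generate_uncached([7, 3, 12], 5)[1])  # 3 + 4 + 5 + 6 + 7 = 25 calls
```

With a prompt of length P and N new tokens, the cached version makes P - 1 + N calls, while the uncached one makes N·P + N(N - 1)/2, which grows quadratically in N.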

#ai tag#is there some standard CS name for the thing i'm talking about here?#i feel like there should be#but i never heard people mention it#(or at least i've never heard people mention it in a way that made the connection with transformers clear)

302 notes

Text

Shout out to Tommy, cos despite his whole silly, goofy, dirty-crime-boy persona, that motherfucker is handling all this drama super maturely.

Cos like Tommy waited months for the QSMP drama to die down before making his little joke, a joke that was fairly lighthearted all things considered.

But then there was the backlash he got, and harassment — a good portion of which was aimed towards his mother and his family — and like he’s said he was upset and angry about all that, but instead of logging onto Twitter and firing off a bunch of angry tweets he just took a step back, didn’t stream for a week and just.. got all his frustrations out in a silly little vlog that may never see the light of day.

Like I shouldn’t really need to be congratulating people for healthily dealing with their drama in a private manner… but idk, apparently some people can’t do the bare minimum. And TommyInnit — that one guy half the internet likes to think is still a child — knows how to handle drama like a mature adult and well.. Thanks Tommy for being the Big Man in this whole situation!

#tommyinnit#discourse#(I didn’t mention him by name surely this won’t provoke the wrong people right)#(it definitely will)#(anyway Tommy doesn’t owe us that vlog#but he also deserves to throw hands if he wishes#and I’ll be sitting here cheering from the sidelines)

501 notes

Text

a moment of solitude

#xingyun#chongqiu#xingqiu#chongyun#genshin impact#genshin#xingqiu genshin impact#chongyun genshin impact#genshin fanart#genshin impact fanart#fanart#my art#hes been explaining the lore of this book he likes for the past 4 hours chongyun doesn't have it in him to tell him to stop tho#not inspired by true events btw^^#this artwork is fictional any mentions of current events names people locations etc is purely coincidental#cough cough

307 notes

Text

Byler Week Day 5 — Secret Identities

very loosely interpreting the prompt for today but i've had this idea for a while and... secret identities, Superheroes, that works. anyone who knows me well probably could have seen something like this coming LMAO

also trying to draw Robin & Superboy costumes that look thrown together and home-made when i have spent so much time drawing their actual designs was a challenge

#'sam this is 10 years after where canon is rn why do they look the same' because shhhhh#it had to be 1996 because that's when Worlds Finest Three: Superboy/Robin came out !!!!!!!#also yes specifically issue 59 of Wizard (cover date july 96 publication date may 96) is when WF3 got a mention/announcement#bylerweek2023#Day 5 Secret Identities#byler#byler art#mike wheeler#will byers#stranger things#anyways listen timkon & byler are different ships in many ways however#i DO believe mike would carry tim's 'im gonna desperately try to clone him back to life 100 times' energy if#anything bad happened to will ever again#PLUS like. some theories ive seen about birthdaygate with like the idea of will getting slowly erased from people's memories........#i don't want that to happen but IF IT DID. getting a detective comics 967 'he talked about a friend i should have.. someone named conner...#and i feel that name tugging on my heart but i don't know why' moment with mike about will........ oughghghghg#sam draws shit#<- almost forgot my damn art tag

956 notes

Text

Dazai has the most basic average anime character hair in all of bsd. But how is it so hard to get his hair to look right?!? If one streak is only a bit off he suddenly looks like every other Isekai protagonist, like asumu dazai from hit-isekai “I killed myself and now I’m reborn to suffer through another life”

#I make jokes but isn’t an isekai announced for this year where actual author osamu dazai is isekaied?#bungou stray dogs#bsd#bsd dazai#osamu dazai#dazai osamu#I spelt Dazai’s name wrong on purpose I actually wanted to spell his entire name wrong but idk any Japanese last name that sounds similar#to Dazai so now it isn’t that funny but i don’t care#do people tag canon typical suicide mentions/jokes for Dazai with any tw tags?#ask to tag#tw suicide joke

247 notes

Text

had a thought earlier that spiralled out of control

#ocarina of time#oot#aveil#nabooru#ganondorf#drug mention#extremely extremely rare Aveil Post#i still call her OoT version that because she. only has a name in MM asfdgjsfs#idk the thought of them hanging out like normal people. its funny#i figure hyrule could have the equivalent of a Roman senate right

220 notes

Text

girl boss + her serial killer

#naomi misora#beyond birthday#birthdaymassacre#'birthday massacre the people not the band.png'#was what i named this file months ago#finally finished it hehe#i told the mutual abt this one then never mentioned it again#i had to do this one yk#i wanted to do smth simple & wholesome with these 2 man#i used to do so much ship art back in the day with this exact format#anyways my sillys#theyre so special to me there isnt enough bdaymassacre#death note#death note fanart#death note another note#b death note#labb#more bdaymassacre fics WHEN /hj#v’s gallery

888 notes

Text

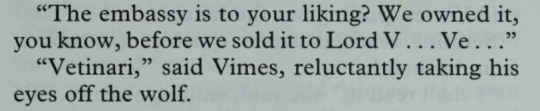

DAMN IT TERRY

#THE DOG PEOPLE DON'T LIKE HIM CAUSE HIS NAME SOUNDS LIKE 'VETERINARY'#bonus points: sam mentions a bath at the end to throw them off their rhythm#discworld#honor reads discworld#the fifth elephant#sam vimes

523 notes

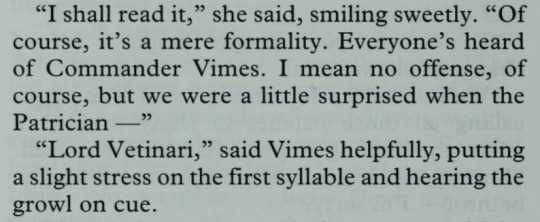

Text

#just looking at the dates/travel distances for Danhausen's toy signing tour makes me feel tired#I have no idea how he does it because he never seems to stop working even when he's injured#but I've heard literally nothing but good things about people's experiences with him#not just from fans either!#he was one of the first names mentioned as being above and beyond nice when I was chatting with someone who works wrestling events in the US#plus if RJ is saying something genuine about danhausen it must be true. RJ would never risk journalistic integrity by lying like that#anyway love that danhausen#AEW#RJ City#Danhausen

281 notes

Text

Warning: Depictions of character death

[Image Description: A 4-panel colored Legend of Zelda AU comic, “Linked Spirit”. Panel 1: The Darknut's head is turned to the viewer, one eye and a splash of dark purple ooze pouring out of the visor space. "HA ha ha I see you now," it says. Panel 2: Hero's Spirit, appearing as Breath of the Wild Link, looks disturbed and wide-eyed. "Malice." Panel 3: The background turns black, and Hero, back in their green tunic, looks up at the Malice's giant yellow eye looming over them. "Killing the Hero again and again clearly doesn't work," it says. "You must be destroyed" ('destroyed' is italic and bold). Panel 4: A glowing teal wheel with clipped scenes in each of its 6 segments; each Link in the segments is colored in the same tunic colors as when Hero is shapeshifted as them. Hero's Spirit is in the center, curled up and clutching their hair. Their face looks fractured and generally featureless. 1st segment: a hand reaching toward a blue ocarina, with Beast Ganon's hoof in front of it. 2nd: Rinku, seen from behind, with a hand hovering over a headstone that has the name "Link" in Hylian on it. 3rd: Rinku sinking in water, indicated by small bubbles. 4th: Engineer sprawled on the ground, the pan flute in one hand, the Spirit of Healing hovering over him, and Maladus's clawed paw beyond him. 5th: Hope hovering over his own body as a spirit. 6th: Breath of the Wild Zelda (Bloom) holding onto Glider, like within the Final Memory in BotW. Under this segment, a ribbon-like line turns into a glowing droplet. End ID]

masterpost

smh time travel makes the order of events so confusing sometimes huh?

First - Previous (30) - 31 - Next (32)

#linked spirit#hopefully only confusing for a second before you go 'oh shoot wait'#loz au#legend of zelda#loz#linked spirit au#ls hero's spirit#linked spirit comic#botw is next can you tell :P#ls malice#finally I can tag my beautiful monster's name :P#I'm sure most people knew it was malice but i mean Dramatic Name Drop#ls hope#ls rinku#ls engineer#ls glider#death mention tw#tw major character death

115 notes

Text

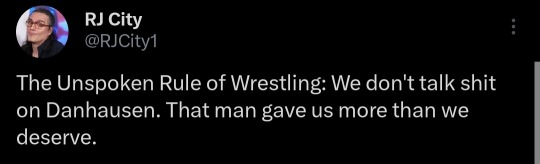

i found Yomiel's ancestor LMAOOOOOOOOOOO

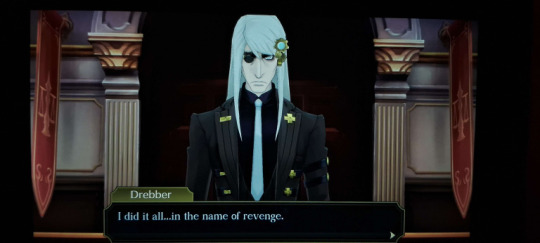

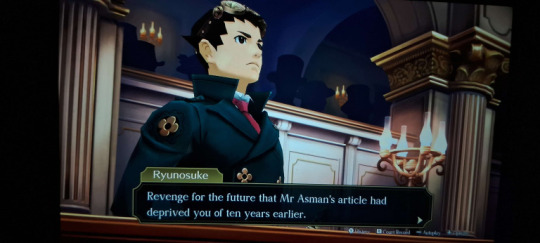

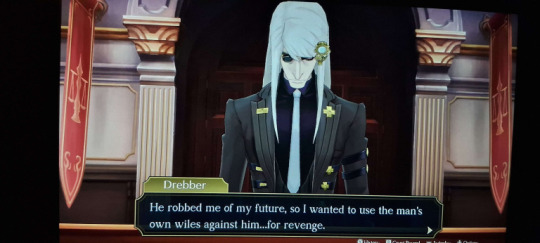

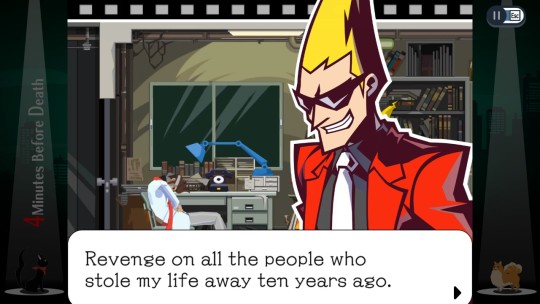

#yomiel#enoch drebber#tgaa spoilers#tgaa2#tgaa2 spoilers#ghost trick#ghost trick spoilers#ghost trick phantom detective#the great ace attorney#the great ace attorney 2#the great ace attorney resolve#no but for real both are engineers in a way#have a tragic backstory#they want revenge on people who ruined their lives TEN YEARS AGO#i love him your honor#another fun note: Yomiel’s name comes from the book of ENOCH as one of the fallen angels mentioned in it

310 notes