#if i can find a better source of images than search results

Text

was inspired a bit ago to edit "autism mom" images for amusement.

batch 1/???

#i have some more that ill probably post later #i found a bunch of good material #but then #ended up scrolling too far down #and getting to the truly hateful stuff #sooooo #if i can find a better source of images than search results #then perhaps ill make this a continual thing #autism #actually autistic #autism mom #memes #memery #nonsense #original content #fallow buzzes #tag ramble #autism speaks #alt text #described #image description #accessible memes

121 notes

Text

To help spread the honorable arts of source-seeking far and wide, I have compiled a list of websites I often rely on. It is by no means comprehensive but it should be helpful for those who want to try looking for sources on their own.

Google Lens:

More specifically, the "Search by Image" option that replaced the reverse image search functionality of Google Images in 2022. Although it focuses on image segmentation and recognition, it has by far the most comprehensive image index.

It will rarely point you towards the source directly, but you can use it to find different or more complete samples, which is a good way to start.

Yandex Images:

Its "Visual Search" functionality resembles the older Google reverse image search, which can be better in some situations. It lists pages that contain fairly similar matches and a board of looser samples.

TinEye:

Although smaller and less capable than the other options, it records the date and address at which it found each result, which really helps narrow down potential sources. It works best with unedited or nearly complete samples.

Above are general-purpose tools that are suited for images of any subject matter. Following are sites with narrower scopes, mainly focused on artworks or anime-adjacent media.

SauceNAO:

If you have an unedited image, this is the tool for the job. Though weak against crops and edits, it indexes heaps of works from artist-focused communities, social media platforms, and image boards.

trace.moe:

On the "rare" occasion that I stumble upon a screenshot of an anime I don't recognize, this tool is a lifesaver. It is "comparatively" much smaller than the others, but it can give you the exact moment you're looking for, down to the minute.

For now, these are the stars in my toolkit! There are other notable tools out there, but these are the ones I have the most success with.
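If you end up checking the same image against several of these tools, a tiny script can build all the search links at once. This is a minimal sketch, assuming the image is already hosted at a public address and that each site still accepts that address through the `url` query parameter shown below. Those URL patterns are assumptions based on how the sites have behaved, not documented APIs, so they may stop working.

```python
from urllib.parse import quote

# Assumed "search by image URL" entry points; these patterns may change.
SEARCH_TEMPLATES = {
    "Google Lens": "https://lens.google.com/uploadbyurl?url={}",
    "Yandex Images": "https://yandex.com/images/search?rpt=imageview&url={}",
    "TinEye": "https://tineye.com/search?url={}",
    "SauceNAO": "https://saucenao.com/search.php?url={}",
    "trace.moe": "https://trace.moe/?url={}",
}


def build_search_urls(image_url: str) -> dict[str, str]:
    """Return one reverse-search link per service for a publicly hosted image."""
    # Percent-encode the whole address (including ':' and '/') so it survives
    # being placed inside another URL's query string.
    encoded = quote(image_url, safe="")
    return {name: template.format(encoded) for name, template in SEARCH_TEMPLATES.items()}


if __name__ == "__main__":
    for name, link in build_search_urls("https://example.com/image.png").items():
        print(f"{name}: {link}")
```

Opening every link in a browser tab is still the manual part; the script just saves retyping the image address five times.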

2K notes

Text

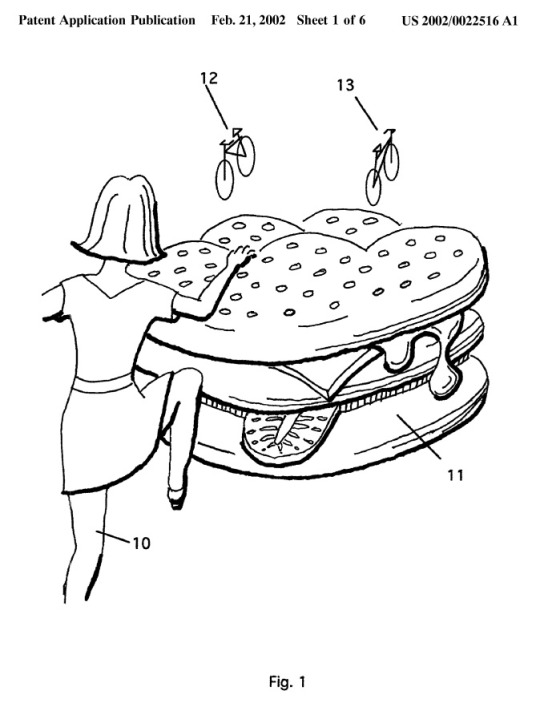

Context-Full Patent Art

Every now and then I see people in the comments who seem to genuinely want a better idea of what one of the posted bits of art here was supposed to be patenting. Giving that context myself doesn't seem in the spirit of the blog, but I absolutely want to encourage people to do the legwork to go sniffing around for original sources.

It's something I have to do a lot in my day job, something I've learned to enjoy, and the thing that led me to start Context-Free Patent Art a dozen years ago in the first place.

Let's start with today's post, which is a perfect example of art that raises more questions than it answers.

Almost every post on this blog has a header detailing the date of the patent or application and the number, which you can find on the top right. In this case, this image is from Patent Application # 20020022516.

Longtime Context-Free Patent Art fans might remember this image from when it was first featured here over a decade ago. I figured that a lot of the people following the blog now haven't seen some of the gems from years ago, so I'm going to be doing re-runs of some of my favorites this week. There's a bunch more I think would be good to bring back, so I'll use the vintage cfpa hashtag in the future so you know when a duplicate post is intentional (as opposed to me just forgetting I've posted a thing before, which happens a fair bit).

Back to the matter at hand though, if you're ever curious, the US Patent and Trademark Office has a super-handy patent search function. It's pretty easy to find from their main site (uspto.gov), but you can also just bookmark this link to the basic search form:

Check out the search results, click on the link for the .pdf, and you'll get the complete patent/application to peruse at your enjoyment.
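The number in the post header can also be reshaped into the forms other patent databases expect. A small sketch, assuming an 11-digit US pre-grant publication number (a four-digit year followed by a seven-digit serial) and the usual `A1` kind code for a first publication. Google Patents is swapped in here as an alternative lookup alongside the USPTO search, and its URL pattern is an assumption that may change.

```python
import re


def _digits(number: str) -> str:
    """Keep only the digits, e.g. '# 20020022516' -> '20020022516'."""
    return re.sub(r"\D", "", number)


def format_publication_number(number: str, kind: str = "A1") -> str:
    """Format an 11-digit US pre-grant publication number as 'US YYYY/NNNNNNN A1'."""
    digits = _digits(number)
    if len(digits) != 11:
        raise ValueError(f"expected 11 digits, got {len(digits)}: {number!r}")
    return f"US {digits[:4]}/{digits[4:]} {kind}"


def google_patents_url(number: str, kind: str = "A1") -> str:
    """Build a Google Patents link for the publication (URL pattern assumed)."""
    return f"https://patents.google.com/patent/US{_digits(number)}{kind}"


if __name__ == "__main__":
    print(format_publication_number("20020022516"))
    print(google_patents_url("# 20020022516"))
```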

In this case, you'll find the patent being applied for covers a method of "Advertising inside electronic games" and you'll learn that:

In FIG. 1, girl 10 is climbing onto a hamburger 11 like one sold at a fast food restaurant. However, in the displayed image, hamburger 11 is as large as girl 10. The person playing the game will therefore perceive hamburger 11 as fantastically large. That unusual scale will help to burnish the image of hamburger 11 in the player's memory as well as draw his attention to it while he plays the game.

Not shown in FIG. 1 are other characteristics which electronic games could apply to hamburger 11 which cannot be shown in unanimated line-drawings. Hamburger 11 will compress as girl 10 steps or pushes on it. That action will be accompanied by squishing and slurping sounds...

Yeah, the explanations are weird too, sometimes.

Sorry for all the words, I just wanted to make it as clear as possible to people that if they're ever curious about stuff they see on Context-Free Patent Art, it's usually pretty easy to find out what the deal is.

129 notes

Note

I make video essays (not frequently but I'm trying to get back into it) and I have wondered about blind accessibility before but I haven't really found much information that isn't VERY general. Do you have any resources or tips? Because frankly, the reason I make video essays and not say podcasts or just blog posts about these topics is because it is both an audio and visual medium. I can show graphs for things, play clips of movies or songs, etc. and I use captions but when I search for how to make it accessible for the blind all the information I find isn't that applicable to content that's as, dynamic I guess. Like the most common things I see are "identify who's speaking" or "read out text" (which I generally do) but what about clips from other media, graphs, things like that? Especially if im talking about a visual topic like set design or something.

n.b. I'm not blind or low-vision & I'm just brainstorming here.

image descriptions & accessibility aren't quite one-size-fits-all, because thoroughness always comes at the expense of time. you have to ask yourself what information you're trying to communicate with an image or clip. if the entire video is an analysis of a visual artwork, for example, you'd want to describe the image in enough detail that people could not only understand your analysis, but also come to their own analytical conclusions. if, on the other hand, you're just pasting in a line graph to compare two of the numbers in it as a smaller part of a larger point, it's not necessary or worth it to state every value of every variable at every point in time on the graph... just give an idea of the trend you're referring to.

some thoughts:

Any image description is better than no description. Briefly describe something rather than allow the thought of describing it thoroughly to overwhelm you such that you end up doing nothing.

Relatedly: don't think of things in terms of "is the exact same amount and type of information available to sighted and blind people watching this," but rather, "without the visual component, does this basically make sense / can I basically follow the main point?"

What this means is that at no point should there be information that is solely visual in nature, like my example of several seconds of silence where you can only assume that there's probably text on the screen but have no idea what that text says and whether it's a clarification, disclaimer, or even a retraction. It would be best to read this text out, but I've also seen a "text on screen" section in the description box of a video that gives the text in full and the time stamps at which the text appears.

Briefly state what it is about a clip or graph you're inserting that makes it useful for your point. Rather than "and you can see the results of that decision here *a few frames of a graph*," say "and you can see the results of that decision in the sharp downward turn in profits starting in 2009 *a few frames of the graph*." If it's available, link the source of a graph or chart in the description box—it may have alt text or be screenreader accessible at the source website.

Inserting a brief image description into the script after introducing e.g. a photo of a set should be feasible enough, but I also think it's possible to fold the image description into the analysis. This just means making sure that there's never a visual component with no audio equivalent—rather than saying "the stuff that's going on back here *circle part of the screen* has a haunting effect," say "the fact that the furniture in the background is jumbled, worn down, and uncomfortable-looking has a haunting effect." I think explicitly pointing out what about a scene you're responding to is just part of good analysis anyway!

Introduce a clip with a rundown of who's speaking in it and what's going on ("you can see [main point] in this clip, in which Elizabeth confronts Darcy about his meddling in Bingley's affairs"). Play the clip, and then describe anything else about it that's relevant to your analysis ("the fact that she's sitting while he paces back and forth indexes the relationship between gender and space in the film" or whatever). Again, this is just good analysis.

If there's something really central that would take a long time to describe, you could use the description box to image describe it (the same way you would write any other image description) and give a timestamp, and say something like "image description in the box below" when you reach that part of the video.

Sometimes at poetry readings, conferences &c. I've seen people give a description of their appearance, what they're wearing &c. before they begin speaking. I'm not sure how necessary this is—probably some blind people find it interesting and others think it's a waste of time, lmao
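For the "text on screen" section in the description box, a short script can turn a list of (seconds, text) pairs into ready-to-paste lines. This is a hypothetical helper, assuming timestamps in the `m:ss` / `h:mm:ss` form that YouTube descriptions commonly turn into clickable links; the example entries are placeholders.

```python
def format_timestamp(seconds: int) -> str:
    """Render whole seconds as m:ss, or h:mm:ss once past the hour mark."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def text_on_screen_section(entries: list[tuple[int, str]]) -> str:
    """Build a 'Text on screen' block, sorted by when each text appears."""
    lines = ["Text on screen:"]
    for seconds, text in sorted(entries):
        lines.append(f"{format_timestamp(seconds)} - {text}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(text_on_screen_section([
        (754, "Correction: the chart's y-axis is in thousands."),  # placeholder
        (75, "Disclaimer: spoilers for the whole series from here on."),  # placeholder
    ]))
```

Keeping the entries in one list while editing also makes it easy to spot any on-screen text you haven't read aloud.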

114 notes

Text

ON THE AI ART THING

i see three main points people are making, which i think are fair: first, the supposition that the products of people's labor are being used somewhere in the process of art generation in an unfairly profitable way; second, the supposition that the products of the artists' labor are being stolen before they can sell them; third, that the other two result in a chain reaction down the line where nobody's an artist any more because neural networks are adjective-er than humans.

for what its worth the way AIs combine images is just not the way you think AIs combine images. machine learning is complicated. neural networks are complicated. read up on GANs; watch a few Two Minute Papers videos (like this one, which is particularly relevant: https://www.youtube.com/watch?v=FCf8OA4GPvI). as for the way they combine images: its not forgery (AI are not perfectly replicating your piece and selling it as it is for their own value; at some point it might involve copying/"looking" at it), its not stealing (you still have your art with you), its not even Youtube Poop remixing. generally the hypest way to do neural networks right now is to take a collection of items the AI knows to contain similar concepts and create an understanding of that shared concept; a good dataset will be big enough for the AI to understand all the full variations of that concept, or accurately deduce the rest of the variations, all through the natural inconsistencies that a bunch of pictures of the same thing might produce. an AI knows how to do an oil painting style because it probably knows quite well the actual qualities of an oil painting. it also knows what the sun looks like, how cloth drapes at different weights, how holes in the ceiling of a building might imply shafts of light illuminating the floor, and other incredible stuff. beats me how it does that, and you likely won't get it either. but the point is the way people are talking about AI on here seems solely informed by imagination.

for unfair and stealing bit, there's something to be said about how certain online communities, websites, people, blogs. etc are naturally private due to obscurity while also being technically completely public; like caves hidden in valleys that take quite a bit of looking to get to and see. in the past we just assumed they would stay hidden: the art on this blog will only be seen by its followers, or people i link the blog to, surely; ill teach you the way to my little cave. unfortunately the internet has been filled with extremely informed and skilled valley-treading and cave-finding robots, and unless you have big ol' signs that say "DO NOT FIND THIS CAVE!" (i remember for forums you could stop scrapers from coming to your website, i think on tumblr there is something like a "do not show in search results" button too), or somehow otherwise avoid their path using strange internet tricks or complete luck, they will totally find your little cave and rummage through it. what i mean to say is this: your art is already part of the publicly available data, and you're in a tough spot to do anything about it. i have no solutions.
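On a site you control, the usual "DO NOT FIND THIS CAVE!" sign for crawlers is a robots.txt file at the site root. A minimal sketch using Python's built-in `urllib.robotparser` to check what a given robots.txt tells a crawler; the file contents are an example, and `GPTBot` (OpenAI's crawler) and `CCBot` (Common Crawl's) are just two crawler names picked for illustration. It is a request, not a lock: scrapers that ignore robots.txt are not stopped by it.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt asking two named crawlers to stay out entirely
# while leaving the site open to everyone else.
EXAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def crawler_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Report whether a crawler that honours this robots.txt may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/art/my-little-cave.png"
    for agent in ("GPTBot", "CCBot", "Mozilla/5.0"):
        print(agent, crawler_allowed(EXAMPLE_ROBOTS_TXT, agent, page))
```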

the objectionable part is not in this collection of references for analysis, nor in how the AI is usually interpreting what objects are what, but the misuse of the AI to produce things you don't like (which is part of the point i wanted to make in my first post, besides arguing for the artistic integrity of what the AI is doing with its sources). and i see how it seems unfair -- someone smarter and more knowledgeable about art, labor, intellectual property, and value could articulate this more -- that a customer of a neural network could so freely replicate someone else's work with the original stylist having nothing to show for it. it wouldn't be any better if a sufficiently skilled person was requested to draw original pieces in your style. so in that way this is less about AI and more an objection against the violation of someone's intellectual property, if that exists.

for the second point on losing artists, i understand the reasoning as following: nobody wants to be a starving artist, and to avoid that in capitalism one needs money, and if someone is dead set on being an artist (or for some reason can be nothing else) they must sell their art to make money. if no one is going to give them money for art, they will certainly be a starving artist, which we don't want. the conclusion here is, if we want artists (starving, at the very least), we should restrict anything else that makes art which might compete with the artist's. okay, that might work: we could stop having AI make art. but what if, despite our best efforts, we can't fully restrict such a thing? what if AIs are here to stay? more importantly, what if artists are actually already partnering with AI to make art, and you've just been none the wiser? What if you yourself could adapt to new technology and use it to better your own art? The improvement of this tech is, as far as anyone can tell, inevitable. Like any tool, it will be applied in obvious and creative ways. It will displace some people, it will be used by others, and further it will be ignored by the rest.

naturally we would think at least some artists could no longer be paid for what they currently do. if companies can afford a concept artist they likely could better afford a subscription to DALL-E. If their work is no better than AI and they cost more it seems clear they're out. but im simply not jumping to the conclusion that companies specifically will be replacing ALL artists with AI, since most trained artists are in fact better than AI, and come with the human factor. some companies will certainly try the switch to AI for the novelty of it, two or three might succeed, most will probably find out negotiating with an artist or two for long term projects is much easier than talking to the team of computer science geeks to see if the damn art-generating program will churn out something slightly more [quality], more palatable to today's changing tastes -- all that, IF "AI generated images" is even the zeitgeist in whatever hypothetical time period in the future this is happening. maybe by the time the tech is developed people will think handmade stuff is popular and companies will follow suit, with all their logos or whatever being painted on glass panes.

i mean, who are we even talking about, considering artists? the fandom artists definitely aren't disappearing because of neural networks. all those millionaire furry artists simply won't lose business because people are going to Midjourney and entering in "shirtless anthropomorphic tiger, [famous furry artist]-style". people who want oil paintings want some physical oil paintings and usually not a digitally-generated oil painting printed on canvas. people want a human intermediary for basically everything, and if you've ever worked retail with an older customer base you'd know that's true. id certainly prefer human-made art to neural networks if i was gonna spend money (and i apologize to our future robot overlords for such a statement).

so who is really completely losing out here? many artists are, quite frankly, not big enough to get fried. concept artists for AAA shooters might take a hit, i imagine; its decently easy to generate generic cities and alien landscapes with AI. but frankly, artificial intelligence is a tool, and not a scooch-along robot replacing you in your cubicle at the office. you still need people who know about art, and artists willing to put in the work, for it to produce anything of commercial value. even if it gets so good that the CEO of a business can log on to ARTIFICIAL-INTELLIGENCE-WEBSITE.com and type into the text box "I wuld like to buy a compny logo for $50 please", im sure there will always be more status in having that "human touch" to your designs, and less status in those robo-packs of ugly slightly mismatched placeholders. besides, artists are already using AI to help create their products in the first place; i refer to that Two Minute Papers video again (https://www.youtube.com/watch?v=FCf8OA4GPvI).

Edit: Considering Two Minute Papers, we do see that VFX artists and simulation scientists are trying to find ways to realistically simulate liquid, gases, fire, etc. with AI. This likely will have consequences for whatever hand-simulated stuff VFX artists have been doing.

your art is publicly available and you really don't have much control over what happens to it. unless little intranets for families and towns and cities and such become more popular, unless you start password protecting websites like forums used to do, your shits getting collected. its not a good reality and im definitely an advocate for internet privacy. for many artists the internet is unfortunately the only place they know how to get their stuff out there. its not a good situation for artists to begin with. the thing is, what's happening with modern AI art generation isn't... really that bad. unless some specific human wants to make it bad. if from this moment on we decide to push for legal-social-whatever punishments for not compensating the artists whose work the AI was trained on, okay, i guess. companies developing these AI probably have too much startup money anyway and most artists make far too little for the years of wrist pain. but i would say the customers requesting your art style or whatever are certainly at more moral fault, because it almost certainly wouldn't just pop out a given art style unless their prompt guided it to.

i will stand by the fact that the art of modern neural networks is more complicated, less hurtful, and way cooler than ripped off collage nonsense, and it will be exciting and scary to see where we go from an AI winning an art tournament -- for the moment you can be scared if you want, i guess, but if you didn't already lose your job to a guy utilizing a neural network then you're probably already safe. (and you might as well get on the train before you're left behind.)

194 notes

Text

The most distant fast radio burst ever

An international team has spotted a remote blast of cosmic radio waves lasting less than a millisecond. This 'fast radio burst' (FRB) is the most distant ever detected. Its source was pinned down by the European Southern Observatory’s (ESO) Very Large Telescope (VLT) in a galaxy so far away that its light took eight billion years to reach us. The FRB is also one of the most energetic ever observed; in a tiny fraction of a second it released the equivalent of our Sun’s total emission over 30 years.
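For a sense of scale, the "Sun's total emission over 30 years" comparison works out roughly as follows. This is back-of-envelope arithmetic, assuming the IAU nominal solar luminosity of 3.828 × 10^26 W and 365.25-day years; it is not the study's own energy estimate for the burst.

```python
SOLAR_LUMINOSITY_W = 3.828e26       # IAU nominal solar luminosity, in watts (J/s)
SECONDS_PER_YEAR = 365.25 * 86_400  # one Julian year in seconds


def sun_output_joules(years: float) -> float:
    """Total energy the Sun radiates over the given number of years, in joules."""
    return SOLAR_LUMINOSITY_W * years * SECONDS_PER_YEAR


if __name__ == "__main__":
    energy = sun_output_joules(30)
    print(f"{energy:.2e} J")  # about 3.6e35 J, i.e. about 3.6e42 erg
```

The burst delivered that much energy in under a millisecond.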

The discovery of the burst, named FRB 20220610A, was made in June last year by the ASKAP radio telescope in Australia [1] and it smashed the team’s previous distance record by 50 percent.

“Using ASKAP’s array of dishes, we were able to determine precisely where the burst came from,” says Stuart Ryder, an astronomer from Macquarie University in Australia and the co-lead author of the study published today in Science. “Then we used [ESO’s VLT] in Chile to search for the source galaxy, [2] finding it to be older and further away than any other FRB source found to date and likely within a small group of merging galaxies.”

The discovery confirms that FRBs can be used to measure the 'missing' matter between galaxies, providing a new way to 'weigh' the Universe.

Current methods of estimating the mass of the Universe are giving conflicting answers and challenging the standard model of cosmology. “If we count up the amount of normal matter in the Universe — the atoms that we are all made of — we find that more than half of what should be there today is missing,” says Ryan Shannon, a professor at the Swinburne University of Technology in Australia, who also co-led the study. “We think that the missing matter is hiding in the space between galaxies, but it may just be so hot and diffuse that it's impossible to see using normal techniques.”

“Fast radio bursts sense this ionised material. Even in space that is nearly perfectly empty they can ‘see’ all the electrons, and that allows us to measure how much stuff is between the galaxies,” Shannon says.

Finding distant FRBs is key to accurately measuring the Universe’s missing matter, as shown by the late Australian astronomer Jean-Pierre ('J-P') Macquart in 2020. “J-P showed that the further away a fast radio burst is, the more diffuse gas it reveals between the galaxies. This is now known as the Macquart relation. Some recent fast radio bursts appeared to break this relationship. Our measurements confirm the Macquart relation holds out to beyond half the known Universe,” says Ryder.
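The Macquart relation is usually written as the average "cosmic" dispersion measure (DM, the column of free electrons a burst's signal passes through) as a function of redshift z. A rough numerical sketch, assuming a flat universe with Planck-like parameters (H0 = 67.7 km/s/Mpc, Ωm = 0.31, Ωb = 0.049), a fraction f_IGM = 0.84 of baryons sitting in diffuse intergalactic gas, and about 7/8 free electrons per baryon. All of those are illustrative assumptions, not values from this study.

```python
import math

# Physical constants in CGS units
C_CM_PER_S = 2.998e10        # speed of light
G_CGS = 6.674e-8             # gravitational constant
PROTON_MASS_G = 1.6726e-24   # proton mass
CM_PER_MPC = 3.0857e24
CM_PER_PC = 3.0857e18


def mean_dm_cosmic(z, h0=67.7, omega_m=0.31, omega_b=0.049,
                   f_igm=0.84, chi_e=7 / 8, steps=1000):
    """Average cosmic dispersion measure out to redshift z, in pc cm^-3.

    Integrates K * chi_e * (1 + z') / E(z') from 0 to z with Simpson's rule,
    where K = 3 c H0 Omega_b f_IGM / (8 pi G m_p) and
    E(z) = sqrt(Omega_m (1 + z)^3 + Omega_Lambda) for a flat universe.
    All default parameters are illustrative assumptions.
    """
    h0_per_s = h0 * 1e5 / CM_PER_MPC   # km/s/Mpc -> 1/s
    omega_lambda = 1.0 - omega_m       # flat-universe assumption
    k = 3 * C_CM_PER_S * h0_per_s * omega_b * f_igm / (
        8 * math.pi * G_CGS * PROTON_MASS_G)  # cm^-2 per unit of the integral

    def integrand(zp):
        e_z = math.sqrt(omega_m * (1 + zp) ** 3 + omega_lambda)
        return chi_e * (1 + zp) / e_z

    n = steps + (steps % 2)            # Simpson's rule needs an even count
    dz = z / n
    total = integrand(0.0) + integrand(z)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(i * dz)
    column_cm2 = k * total * dz / 3    # electron column density, cm^-2
    return column_cm2 / CM_PER_PC      # cm^-2 -> pc cm^-3


if __name__ == "__main__":
    for z in (0.25, 0.5, 1.0):
        print(f"z = {z}: ~{mean_dm_cosmic(z):.0f} pc cm^-3")
```

With these assumed parameters the average works out to roughly 900 pc cm^-3 by z = 1 and keeps rising with distance, which is why a burst's measured DM can act as a scale for the otherwise invisible gas along its path. Real analyses also subtract the Milky Way's and the host galaxy's contributions and model the scatter around this average.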

“While we still don’t know what causes these massive bursts of energy, the paper confirms that fast radio bursts are common events in the cosmos and that we will be able to use them to detect matter between galaxies, and better understand the structure of the Universe,” says Shannon.

The result represents the limit of what is achievable with telescopes today, although astronomers will soon have the tools to detect even older and more distant bursts, pin down their source galaxies and measure the Universe’s missing matter. The international Square Kilometre Array Observatory is currently building two radio telescopes in South Africa and Australia that will be capable of finding thousands of FRBs, including very distant ones that cannot be detected with current facilities. ESO’s Extremely Large Telescope, a 39-metre telescope under construction in the Chilean Atacama Desert, will be one of the few telescopes able to study the source galaxies of bursts even further away than FRB 20220610A.

Notes

[1] The ASKAP telescope is owned and operated by CSIRO, Australia’s national science agency, on Wajarri Yamaji Country in Western Australia.

[2] The team used data obtained with the FOcal Reducer and low dispersion Spectrograph 2 (FORS2), the X-shooter and the High Acuity Wide-field K-band Imager (HAWK-I) instruments on ESO’s VLT. Data from the Keck Observatory in Hawai'i, US, was also used in the study.

Image: This artist’s impression (not to scale) illustrates the path of the fast radio burst FRB 20220610A, from the distant galaxy where it originated all the way to Earth, in one of the Milky Way’s spiral arms. The source galaxy of FRB 20220610A, pinned down thanks to ESO’s Very Large Telescope, appears to be located within a small group of interacting galaxies. It’s so far away its light took eight billion years to reach us, making FRB 20220610A the most distant fast radio burst found to date. CREDIT ESO/M. Kornmesser

11 notes

Note

i did some research after seeing your post, and as far as i can tell fluffle puff has nothing to do with the pedophilic 4chan fluffy ponies beyond that theyre both fluffy - i totally get avoiding the character just from the association, but do you have anything that shows the two are connected? that would be very helpful, thank you

genuinely, you know what would be better than messaging your finds to just some dude online (for stating and citing facts about a source that uses fluffle as an example of a "mainstream" and "popular" fluffy pony)?

maybe take the connections you found that show the two are separate kinds of ponies and cite them on the fan wikia (which, again, is what i cited, and is the first google result for "fluffy pony"), because one thing's for certain: the google and general search algorithm isn't going to shield the primarily minor audience of fluffle puff's fanbase from seeing torture cp.

mixermike, almost 10 years later, still calls fluffle puff a fluffy pony (despite literally having to know that the 4chan ones exist; the art was disgustingly rampant and was often used as a bait-and-switch to disgust newer fandom members), and the fact this stuff is NOT hard to find at all just... is not a good look. he could've easily changed the name of fluffle's species to separate the two kinds of "fluffy pony" (example term, just throwing it out there: angora pony! it sounds way cooler and literally means the same as fluffy.) fluffle puff on her own is not and was not the issue.

because clearly a shitload of people lack reading comprehension

i never claimed fluffle puff herself was a child. i never said her creator had ill intent. i showed an article on a well-cited fan-made wikia page that cites both her creator and 4chan; the first paragraph you see when you open the page ends by mentioning how fluffy ponies often die in torturous ways, while fluffle is the wikia's example image. that is NOT a good look for someone trying to profit off of stuff like youtooz and the nostalgia of us older gen-z's and the cute factor of their fan oc.

there are consequences to openly associating a popular character to a species name that has been consistently linked to fetish work for nearly 10 years. and i don't want those consequences to be more children seeing csa and torture while trying to see their favorite pony species.

20 notes

Text

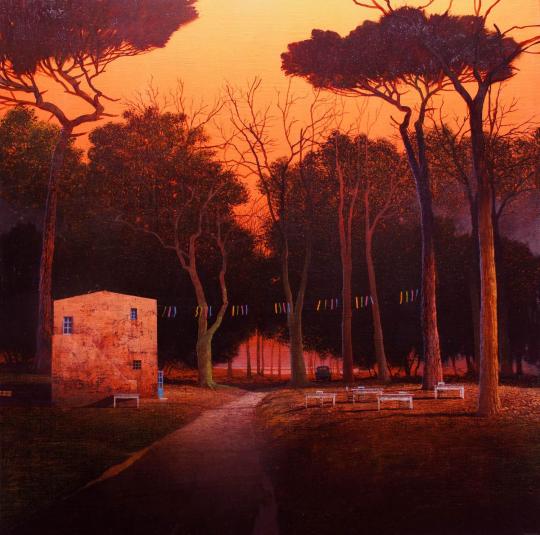

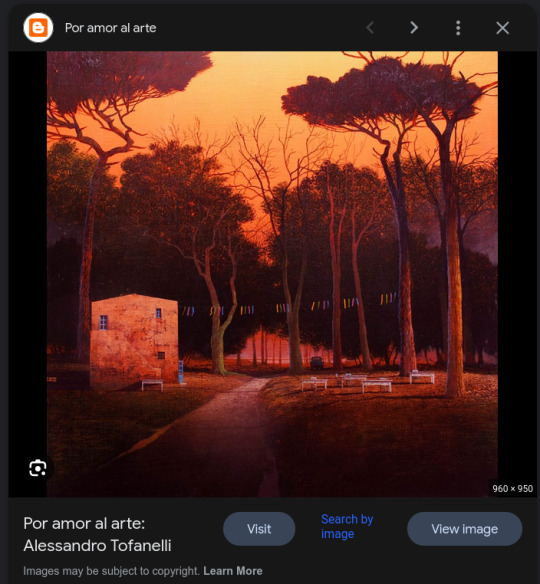

Alessandro Tofanelli. (no obvious source—see below. please let me know if you can find a real source or any other details)

this was previously posted to tumblr in much lower resolution (640x634 pixels). the above is the best-quality (least-downsized) copy of the image I could find through reverse image search and other likely searches

the artist does not seem to have a dedicated portfolio site. there's a facebook page, but I don't see this piece on there. there are different subsets of his work on the following sites (the selection possibly varying with auctions coming and going or galleries disappearing)—

Facebook

Galleria Gagliardi

MutualArt

artnet

floren

some random post on blogspot

the specific image posted here isn't from any of the above. it's from another art auction site, “1stdibs”

the site doesn't have any listings for Alessandro Tofanelli anymore, but the direct link to the 1500x1485 image file still worked (and it doesn't seem to be a janky blurry up-resizing of a smaller image)

so anyhow, unfortunately, I didn't find a title or a year it was made or anything else like that. if anyone can find this out (or find a proper source) please let me know!

(btw, google's reverse image search's biggest result was still only 960x950

still better than the original tumblr upload, but it's always worth checking multiple reverse image searches if you want to find the highest-quality version of some image. though you still have to sift through the “big” results to look for shitty upscaling, or to check if it's actually a photo of a reproduction or w/e)
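Comparing the copies you turn up can be partly mechanical. A small sketch, assuming you've noted each copy's pixel dimensions by hand: it sorts candidates biggest-first and drops any whose aspect ratio differs noticeably from a reference copy, which is a quick hint of a crop or a different reproduction. The 1% tolerance is an arbitrary choice, the "hypothetical crop" entry is made up for illustration, and matching proportions can't catch upscaling, so the eyeball check above still applies.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    source: str  # where this copy was found
    width: int   # pixels
    height: int  # pixels

    @property
    def pixels(self) -> int:
        return self.width * self.height

    @property
    def aspect(self) -> float:
        return self.width / self.height


def rank_candidates(candidates, reference, tolerance=0.01):
    """Return candidates biggest-first, skipping any whose aspect ratio
    differs from the reference copy's by more than `tolerance` (relative)."""
    kept = [
        c for c in candidates
        if abs(c.aspect - reference.aspect) / reference.aspect <= tolerance
    ]
    return sorted(kept, key=lambda c: c.pixels, reverse=True)


if __name__ == "__main__":
    tumblr = Candidate("tumblr", 640, 634)
    found = [
        tumblr,
        Candidate("google", 960, 950),
        Candidate("1stdibs", 1500, 1485),
        Candidate("hypothetical crop", 1200, 900),
    ]
    for c in rank_candidates(found, reference=tumblr):
        print(c.source, f"{c.width}x{c.height}")
```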

#| #\ #/ #` #Alessandro Tofanelli #my uploads #my uploads (unjank) #aes #art #sunrise sunset and dusk #' #orange #unsettling #octoberposting #reuploads #my posts

10 notes

Note

Hey you feel like an expert and I've been looking for years.

Dr Chase's dress in the first film at the anniversary gala for the archives is absolutely gorgeous and I can't find anything about it anywhere. Do you know anything about the costuming of the film?

Also love the blog omg keep up the good work!!!

RE: Abigail's Dress

Hi Anon,

I am flattered that I seem like an expert, and that you've been enjoying the blog!

Unfortunately, I am the opposite of an expert when it comes to clothes, costuming, and fashion. Don't get me wrong, I love seeing other people analyze the costuming choices in film and tv, I just don't have much to add to the conversation.

That said, let's see if we can do some digging!

Digging ↓ (ft. answers!)

The first place I started was the thank yous at the end of the credits.

Right before the music credits is usually where the filmmakers thank significant contributors. For National Treasure we see a lot of places where they shot on location--the D.C., NYC, and Philadelphia film offices, the National Archives and Washington D.C. public transit.

Also Knott's Berry Farm, which seems out of place until you learn that it features an exact replica of Independence Hall. This was used for some of the exterior shots of Ben carving the brick out of the wall in place of the real thing.

You'll also see Ferrari mentioned. This is for the promotional consideration of allowing one of their cars to appear in the film.

My thinking was that if the dress was designer, said designer might be thanked here as well.

But alas, no luck.

Clothes-from-film Sites

I turned to google, though from your question it seems like you've searched for this before, so my expectations were not high.

There are plenty of sites where you can look up clothes worn in film and television, though National Treasure is old enough and, um... underrated enough that I didn't have any luck here. (Edge of History is another story. If anyone's looking for clothes featured there, you'll see plenty of results!)

Into the wilds of the web we go then.

The Miscellaneous Internet

On Answers.com, I found this post that states the dress was made by the film's costume designer, Judianna Makovsky. (And is actually blue, not black!!????!)

The answer provides an address to an article titled "National Treasure: Diane Kruger A Dress For Action" on clothesonfilm.com.

Unfortunately, that link leads to no-no bad spam places, as clothesonfilm.com no longer exists.

However, I found that same article referenced on film clothes sourcing website spoturn.com.

A search for the article title turned up what appears to be the entire text of the article posted on a forum.

(Make sure to scroll down past the no-longer-hosted photos! The article stretches over several posts from user Lynn866)

I'll grab the bit that discusses the gala dress, but the whole article is fantastic and definitely worth a read!

National Treasure: Diane Kruger, a Dress for Action

by Lord Christopher Laverty

27 Nov 2009

... Abigail on the other hand has probably spent an entire month’s salary on her outfit. The results of which are stunning:

[Missing image, presumably of Abigail at the gala]

Midnight blue silk gazar evening dress with matching lace overlay, fitted bodice with chiffon bow to waist, deep v-neck to front and back, full skirt with under-netting; short blue velvet jacket with flared sleeves, black high heel shoes, open toe with ankle straps; vintage ruby stone earrings.

Although the dress looks black on screen it is actually midnight blue (slightly visible when Abigail leaves the Archives and backlight shines through the chiffon). This colour variation is possibly due to the demands of motion picture lighting. The former Prince of Wales, Edward VII, reputedly had two types of dinner suit on standby, a black for outdoor functions and a midnight blue for indoor. Under artificial light, dark blue can appear as black; a better black than black in fact.

The dress’ luxury organza fabric is a crisp, heavy silk gazar from Paris with dyed lace to match; the jacket is blue silk velvet and chiffon. Based on an original design by Judianna Makovsky, the finished article was made by her long-time collaborator John David Ridge.

The earrings are:

Genuine 19th century Russian, they were supplied by L.A. based jewellery merchant Vicki Lynn. These earrings coolly offset the demureness of the dress, enlivening it with just a hint of glitz. That they date from the 1800s nods to Abigail’s love of history.

So. It appears the dress was custom made for the film by the costume designer.

However, if you absolutely have to have one, lizdress.com will apparently custom-make a number of film & tv outfits, including Abigail's dress.

Blue or Black?

Lord Christopher Laverty definitely knows better than I would, but just to corroborate the blue-for-black thing:

• "Rich Black" appears, well, richly black in print, but is not true black in a color theory sense.

• The Addams Family house for the 1964 TV show was pink, because pink lent the right tone of eerie gray on black and white film.

• The Parthenon contains no straight lines; its curves compensate for the eye's natural distortion so that it appears straight.

I'd argue that the dress was always intended to appear black, mostly based on how Abigail is framed to parallel Ben at so many points during the party/heist sequence.

This is a black tie event, and she's meant to appear as a natural part of the party's black and beige color palette. At no point is she staged as an Elle Woods type who is visually sticking out.

Conclusion

This article is a gold mine, and I'd definitely like to return to it from a character analysis point of view at some point! Thanks so much for helping me come across it.

Thanks so much for your question! Feel free to send another any time!

#national treasure#abigail chase#diane kruger#judianna markovsky#clothes on film#clothes from film#costume design#lord christopher laverty#articles

12 notes


Text

AI - Solving the Wrong Problems Because It's Cheaper - 325 - 15 February 2024

I've been reading the various opinion pieces on Artificial Intelligence (AI) and I definitely have opinions about what I've read. Here is a short list of some of the highlights of what I've found:

AI is a public relations (PR) term for a predictive pattern generator, i.e. a text completion tool. The better term to use is Large Language Model (LLM) for language processing, and image generator for image generation.

AI is the software industry's latest attempt to automate software development work and other jobs that businesses view as excessively expensive and easy to automate.

The current AI models available to the public are meant to look like pleasing eye candy to people who are inexperienced in the subjects they are asking about. When technical experts use the tools, they report a lack of detail and deficiencies in the answers. The answers provide a starting place, but not a complete solution.

AI is being used to solve the wrong problems.

It's being used this way because it is cheaper and easier than using it to solve the right problems.

There are benefits to the current set of AI software models, if they are viewed as tools that need to be mastered instead of replacements for employees.

AI is a public relations (PR) term for a fancy search engine

As I've gotten older and perhaps somewhat wiser, I've finally realized that the latest and greatest tech is often referred to using terms that are a good fit for marketing and may or may not be a good fit for the actual technology described. With the latest hullabaloo about AI, I have found the same type of hype, which seems meant more to generate buzz and draw eyeballs to companies than to describe the technology.

After reading a bit about AI and trying out one of the engines, here is how I would describe this new technology, which isn't all that new. The actual name of the technology, as used in the industry, is Large Language Model (LLM). An LLM is software that predicts the next word, paragraph or other content based on a very large, organized collection of data. The software processes many different inputs, and it is trained to emulate human behavior and write new content based on the information it has organized. For example, if someone asks the question, "What could I eat for breakfast?", the LLM might answer 'cereal', 'bacon and eggs', or 'fruit and roll', based on the dataset it has gathered.
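To make the "predict the next word" idea concrete, here is a deliberately tiny sketch in Python. It is a toy built on my own assumptions, not how a real LLM works: instead of training a neural network on an enormous dataset, it just counts which word follows which in a few sentences (a bigram model), and the names `train_bigrams` and `predict_next` are made up for illustration. It does show the behavior described above: the answer is whatever is most frequent in the data, not whatever is true or appropriate.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it across the whole corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str, k: int = 3) -> list[str]:
    """Return up to k of the most frequent next words; empty if the word was never seen."""
    return [w for w, _ in follows.get(word.lower(), Counter()).most_common(k)]


if __name__ == "__main__":
    breakfast_corpus = [
        "for breakfast i eat cereal",
        "for breakfast i eat cereal with milk",
        "for breakfast i eat eggs",
        "for breakfast i eat fruit",
    ]
    model = train_bigrams(breakfast_corpus)
    print(predict_next(model, "eat"))    # ['cereal', 'eggs', 'fruit']
    print(predict_next(model, "pizza"))  # [] because the word never appeared
```

Here `cereal` ranks first only because it shows up most often in the sample text. Nothing in the model knows where you are or what you like, which mirrors the point that the output is the most probable continuation rather than a considered answer.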

There are problems that can occur when trying to use an LLM to write something like a blog article or a short story. At the current state of the software, it creates a work based on the probability of the words, without a context that is tied to the real world. The only input is text and guidance from the LLM developers. A human has additional inputs through their senses and experiences, in addition to information they have read or heard. A human also receives inputs from many humans of varying ages and backgrounds, while an LLM would receive limited input from a select set of humans: software developers specializing in the creation of neural networks.

In summary, LLMs are used to predict a probable outcome, not to use knowledge to generate an outcome. This means that the outcome is not original content but a result based on sifting through multiple sources to come up with what appears to be the best fit. As such, an LLM can at best emulate human intelligence in a very limited way. And if it receives new input in the form of questions, it will have limited context to process to find an answer. For example, when I asked what it would recommend for breakfast, I got a fairly complete list of breakfast foods that seemed centered on United States cuisine. I had to ask for breakfast and specify a different location in order to get different lists. A human might first ask, "Where do you want to eat breakfast?" before answering, so they could have a context for the question. There are factors that we perceive, such as clothing, skin tone, gender and other physical characteristics, that might change our answer. The LLM I tested seemed very sure of itself, and if it could find an answer, it would provide one, even if it might be wrong.

To be fair, humans will also do this. People want to be helpful, and it feels better to provide information instead of saying "I don't know." In the end, my assessment is that LLMs, or AI as they might be called, are fancier search engines that can find existing data to answer questions, even if the information they provide is incorrect.

AI is the latest attempt by the software industry to automate software development work

Another use I've seen recommended for AI is writing software. People are worried they might lose their software development job to AI as it continues to improve in functionality. Based on trends I've seen in my career, I wouldn't worry too much about this. Here are some examples of attempts to automate software development that are no longer used, or are only used as tools by software developers.

Have a few software architects design and write pseudocode that can be implemented by developers. The pseudocode will work perfectly, so people with minimal skills can implement the software at a lower cost.

Object-oriented code will be added to libraries and create a foundation, so that only a few developers will be needed to develop software programs.

Code will be designed to work in modules that do not require integration testing before they are deployed.

A high-level language will be used that will remove all possibility of errors introduced by developers. (This is a popular theme and, based on articles about new languages, it seems like it still recurs.)

Eventually each language settles into its niche or fades away from lack of use, because its complexity, or the debugging it still requires, outweighs its features.

App creation tools for phones will open up programming to anyone who is interested.

I've seen each of these trends come and go, and each one of them was supposed to reduce the number of developers required or reduce the skill level required to create good quality software programs. I'm still waiting for this to work and I don't think software development as a profession is going away any time soon.

AI is being used to solve the wrong problems, and it's being used this way because it is cheaper and easier than using it to solve the right problems

Right now AI is used to reduce the time required to search large amounts of stored data. The search results are based on answers that are guessed from the quantity and context of possible answers. The data itself is not organized; it is left as is, and the AI builds a model to use when searching it.

These searches are based on the assumptions that:

The data is complete

The data is of high quality and covers the majority of real-world use cases

This model can be used to find data with accuracy approaching 100%

When looking at data that is not clean, it becomes easy to find examples where these assumptions fail. While this sounds like a worthwhile problem, it is also an easy problem for computers to solve, compared to other real-world problems. Computers are very good at repetitive functions, and searching for matches in an optimized manner is a core part of software development. But these searches are not generating new information; they are only allowing people to be somewhat more efficient at finding data. And if a person does not have the skill or experience to ask AI good questions, even this function is questionable.

There is a lot of money getting spent on this development so it sounds like it is a very expensive pursuit. However, there are other real world problems that would be of greater benefit, in my opinion, that are making little or no progress. Two examples of very hard problems to solve are sewing clothes and harvesting crops. Both activities are low paying and considered to require very little skill. It seems like they would be fields ripe for disruption and replacement by automation.

The reality is that both activities require things that automation does not have, such as:

Vision

Manual dexterity

Ability to work in very bad conditions

Judgment

Current automation techniques are building machines that do have a type of vision and can manipulate objects in the real world. Those machines are not paired with software that can also make quick and correct judgments and work in very bad conditions. If a machine is set to work in a wet, dirty and unsafe environment, it will eventually shut down or break down. The machines have to operate in a very specific set of conditions and require monitoring to ensure they keep functioning. This monitoring is less labor-intensive than the work itself, but it still requires human input. Sadly, a machine has to work in better conditions than a human, or it will break down and lose the investment dollars. There are reports that describe the difficulty of generating profit when using technology to farm indoors.

In my opinion, the current efforts of AI are focused on the following goals:

Scrape existing data from as many on-line sources as possible

Store the data in a database that is easily searchable

Pair it with software that parses questions to search the data for the most populated result which may not be the correct result

This set of goals can easily make use of existing infrastructure and does not require any great innovation to gather data or organize the data for the search results. By great innovation, I mean discoveries like the creation of HTML, the microcomputer and other inventions that were a unique combination of existing technology that required insight and experience to develop. AI engines appear to be, in my opinion, simply refinements of existing search methods, assembled in a user-friendlier format to reach a broader audience.

If I were going to develop new tools that I think might be useful, here are a couple of my suggestions:

Human-reviewed data that is organized by categories. The categories are used to provide context for performing a search. For example, if searching for the word 'tile', there is a context of buying tiles for the kitchen, gaming tiles, historical tile work, or the manufacturing process for kitchen tile. Current search engines provide the most-requested results and depend on the user to come up with the correct context to find what they want. There could be multiple filters selected, such as business versus educational, to help narrow down the results. AI might be used to search and suggest an organization; humans would use this as a tool to review and approve or change the suggested categories. I think of this as a role for a type of librarian, one who manages online data instead of physical books.

Building algorithms that parse real-world data based on input from various sensors. The algorithms would gather data and use self-modifying methods to organize it. Humans would be involved in guiding the algorithms toward societally and morally acceptable ways of parsing the data. The algorithms would have attachments that could interact with the environment in which they gather data. If this seems complicated, human children perform these same activities from birth. Yet we seem to have a lot of difficulty training machines to do the same things that human children learn in the first five years of their lives. I would not attach this type of software to the internet; it would be too easy for it to devolve and revert to the current level of AI algorithms that simply search and repeat back what they find. The intent is to have a machine experience parts of the world in the same way as living beings, to see if it can respond in a similar way.
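The first suggestion above, human-reviewed data organized by categories with filters for context, could be prototyped in a few lines of Python. This is a minimal sketch under my own assumptions: `Document`, `apply_review`, and `search` are hypothetical names, the AI's suggested categories are simply passed in as a set of strings, and matching is plain substring search rather than anything a production search engine does.

```python
from collections.abc import Set
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    text: str
    # Only categories a human reviewer approved end up here (the "librarian" role).
    categories: set[str] = field(default_factory=set)


def apply_review(doc: Document, suggested: Set[str], approved: Set[str]) -> None:
    """Keep only the machine-suggested categories that the human reviewer approved."""
    doc.categories |= set(suggested) & set(approved)


def search(docs: list[Document], term: str, filters: Set[str] = frozenset()) -> list[str]:
    """Return titles whose text contains `term` and that carry every category in `filters`."""
    needle = term.lower()
    wanted = set(filters)
    return [d.title for d in docs if needle in d.text.lower() and wanted <= d.categories]


if __name__ == "__main__":
    kitchen = Document("Kitchen tile sale", "Buy ceramic tile for your kitchen")
    mahjong = Document("Mahjong basics", "Each tile shows a suit")
    mosaic = Document("Roman mosaics", "History of Roman tile work")

    # Pretend an AI suggested these; the reviewer rejects "business" for the game.
    apply_review(kitchen, {"business", "home"}, {"business", "home"})
    apply_review(mahjong, {"gaming", "business"}, {"gaming"})
    apply_review(mosaic, {"educational", "history"}, {"educational", "history"})

    docs = [kitchen, mahjong, mosaic]
    print(search(docs, "tile"))                   # all three titles
    print(search(docs, "tile", {"educational"}))  # ['Roman mosaics']
```

The design choice here is that filters only use categories a person signed off on, so the machine's output stays a suggestion and the human reviewer decides what context each piece of data belongs to.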

Summary

The latest artificial intelligence algorithms are simply the newest in a series of tools that high-level managers and investors see as a way to make money in the short term, and not necessarily as tools for long-term use. In my opinion, there are long-term uses for this type of software if it is viewed as a tool and not a replacement for people and their experience. There are harder problems that, if solved, could be of great benefit. However, these uses of software would not yield returns as quickly as the current set of short-term goals. Even with this quick turnaround on investment, the software is advertised in ways designed to engage emotions and short-circuit rational thought about the use of AI. My recommendation is to wait and see which companies survive the current advertising and pump cycle before investing or predicting how AI will change the world.

References for this article, including opinions from others who write more concisely than I do about AI and its possible impacts.

2 notes


Note

The vibin apocalypse

The newbies’ bios:

“I’m sure life will be normal again..one day..just gotta keep calm..”

Penny

Status: healthy

Docile

Info: Throughout the entire apocalypse, Penny hasn’t changed much. She cut off her long sleeves, as Nala recommended, so that she’d be better prepared if she needed to fight. She tends to live normally, watching different shows, movies and videos on her personal phone with snacks to keep herself distracted. When stressed, she will hug any plushie close.

“Can’t lose my cool!..I don’t wanna hurt anyone!..”

Sweetie

Status: infected (stage 2 zombie infection)

Hostile

Info: Was bitten and infected rather late into the apocalypse. Luckily, the crew knew what to expect this time, so Sweetie has to get the same magic injection as Herb. Luckily she’s in an early stage, so Lavender has been searching for a cure for her. Sweetie is relatively normal, but her stomach pains can cause her to lash out: screaming, crying, growling and, most dangerously, biting. She has no control when angry, so she doesn’t mean to bite; she doesn’t wanna hurt anyone. She lashes out not just from her pain, but from the fear that this family will abandon her too if they cannot find a cure for her.

“Thank you..~ I’m..sorry for being inactive and all..I’m not bothering you right?..”

Coral

Status: healthy

Docile

Info: When the apocalypse hit, since she could only move in a wheelchair, she knew she couldn’t go outside. It killed her motivation, and now she only stays in her room, never really moving. However, she keeps her optimism up by playing games and snatching stuff Penny recommends to her. For food, she eats anything Flambé makes her.

“If you think I’ll use darkness to harm those I care for,then you’re dumber than I thought.”

Shade

Status: infected (glitch infection stage 2)

Docile

Info: Got infected by an infected’s bite while he was getting supplies for the crew. He was able to get away as he reached stage 2, but his eye got scratched, so his glowing eye developed faster than normal. Despite that, he practices his glitchy power in the backyard, testing out what he can do, determined to use his curse/power to protect his family.

“My eye hurts..when are we gonna get our medicine?!..”

Kiara

Status: healthy

Docile

Info: Kiara followed orders and stayed inside, but she was lured outside by Darkon, and Thunder followed her. The two were then caught up in a dark magic storm created by Darkon. Kiara ended up with a scarred eye when she fell and scraped it on the ground. She’s constantly itching her eye, as it stings almost nonstop. The only way to heal it is medicine in the form of eyedrops; however, to date they haven’t been able to get any, as they keep having to defend themselves from the constantly approaching infected.

“Sis,don’t apologize,it’s ok! I’m just glad you’re safe..~”

Thunder

Status: healthy

Info: Got caught with Kiara in a magic storm caused by Darkon. Thunder carried her out, but as one final trump card, Darkon summoned a lightning bolt, and the flash was so bright it left him completely blind. Kiara has apologized to him again and again, but he always tells her not to; it wasn’t her fault he lost his vision, it was Darkon’s. He holds no resentment towards his family, and over the months he has been trying to use his other senses to guide him around the house, getting used to his lack of sight. He hopes that one day they can get the eye drops that might heal his eyes, but he’s not holding out hope.

“Want me to show you how to manipulate a manipulator?~”

Oreo

Status: cured (from corruption infection)

Docile

Info: She had tried to get the medicine by herself but was swarmed by the corrupted. Right as the corruption was going to take control of her body, she tricked it into becoming caged instead of her. Using her image magic, she killed the corruption’s intelligence in her, but not its general DNA, meaning she’s the first cured member and the only one who can control her corrupted infection. She always offers to help Seasalt with her infection, but since Seasalt has declined, she understands and doesn’t push her. She can turn into her corrupted form at will, and she uses this power to ward off and manipulate other corruption-infected, playing them like a fiddle.

“Everything’s so scary now..I want my besties!..”

Cloud

Status: healthy

Docile

Info: Cloud was very stressed about the apocalypse from the very beginning, especially when the family unit came crumbling apart, though she was able to stay positive because she still had Pinkie with her. However, when Pinkie lost it and became Pinkamena, Cloud broke. She didn’t realize that Pinkie and Pinkamena were the same person and thought Pinkie had died. Now she stays in her room, mostly crying and sobbing to herself while stuffing her face with comfort food.

“How am I such a klutz?!..I’m supposed to be careful!..”

Snowball

Status: healthy

Docile

Info: As someone who didn’t go downhill as much as the others, he took it upon himself to keep the house clean and sanitary. He knows that viruses commonly spread through unclean areas, places and air, so he cleans as many places as he can and kills as many of the pests that wander into the house as he can. However, he’s not without flaws, the biggest being his clumsiness. Countless times he has fallen and hurt himself trying to clean the house; those scars he has are just SOME of the injuries he got from being a klutz. This makes him more and more frustrated, as he doesn’t wanna accidentally attract infected with his sounds of pain, and that anger builds up like a “snowball”.

“It’s no problem,it’s the best I can do.~”

Flambé

Status: healthy

Docile

Info: When Pinkie broke and stopped baking, Flambé stepped up to fill the role, cooking and baking whatever the crew wanted. He does this to give the crew a slight bit of happiness through the joy of eating food. He has sometimes accidentally burnt his hands while cooking, and his ears are always down. He feels great sadness deep down, but he shoves it down, and he deeply hopes that one day their family can be happy again.

-mod Shelby

(Weh ;w;)

2 notes


Note

Hey, this is an odd position to be in, but I’m trying to cite a meme that you posted in MLA format for my college English class, and in order to do that I need to have the original post link 😭 Is there any way you could let me know roughly when you posted the following?

Tumblr’s search function is actually hell, and you may have just reposted this, but either way, if you could point me to the source of the meme it would be a great help 🙏🙏🙏

Okay, this is how this looked in my inbox:

and I like reflexively flinched and thought "What are the pornbots up to NOW?"

But no it was a probably real person with a sincere question, so I'll try to answer it.

I mean I know I reblogged it in the past few days so you could probably find it with a little scrolling through my blog but I understand why you might not want to do that.

I don't know if you're newer to Tumblr, but one helpful feature for this kind of thing, where you have a reasonable idea of what you're looking for, is the blog archive - you can see mine at https://airyairyaucontraire.tumblr.com/archive . Then you can narrow it down to, say, image posts from this month and usually find what you're after with more accuracy than the supposed search function.

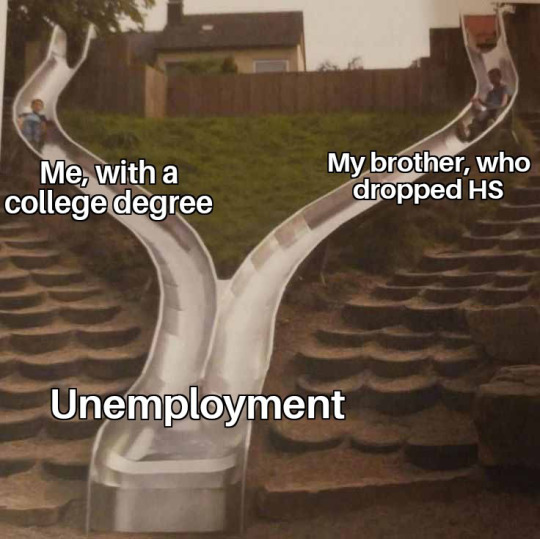

So I reblogged that post on 12 October 2023, and the original post on Tumblr was two weeks ago on The Dank Memes, but that appears to be a reposting account with one of those mildly weaselly disclaimers: "All memes posted here belong to their respective owners, and we claim no ownership or copyright over them." It could be worth sending them an Ask though; they might remember where they found it.

I did a TinEye reverse image search on it and the template seems to originate from r/MemeTemplatesOfficial on 10 June 2020 (the blank version is behind the "Here" link in the first comment). Then there are just a ton of imgur.com results - it looks like someone made a version about breastfed and formula-fed babies that really did the rounds. I don't think I can trace it any better than that without spending more time than I honestly want to on a beautiful Saturday morning, but I hope that much is useful to you!

3 notes


Note

hey there! sorry for bothering you, but i was just wondering if you could share where you get your sources for doing edits? the page i used for shows and such hasn't updated for several months, so i'm just trying to find a safe source. only if you want to share. thanks. :)

Hi there!

No problem, this is gonna be a long list.

Stills and Promo Shoots - Farfarawaysite x

Caps - Kissthemgoodbye: period drama x, the white queen x

Stills and Promo Shoots - FDB x

Stills, Promo Shoots and Caps - VK: search for the show, or an actor in the show you want to look at, and a fan page with most of the above will usually show up. My promo shoot page x

Stills and Promo Shoots - Flmweb x

Stills and Promo Shoots - CSFD x

Stills and Promo Shoots - Mediakklik x

Photographer or production sites: if you know the stills or promo shoot photographer, they normally have good outtakes in HQ. Production companies, or people who were part of the production, normally have sites with these as well.

Also, if you google an actor in a show you like, you may find a fansite or a sosugary gallery with some results.

The same goes for any search engine, really. I like to use Yandex sometimes; on other occasions I may use Yahoo. There are some other ones, but they are not coming to me at the moment.

I make most of my caps from torrent downloads I have, and the higher the quality, the better. For example, my edits on @queencatherineparr were from a 2160p download, whereas for some other shows you see on this blog or other blogs I run, you can only get 720p or 1080p, which are good, but sharpening can be a bit more of a hassle. Lower than 720p is sometimes the absolute worst.

For some of these sites, you may need to right-click on the page, choose Inspect, go to the Network tab and reload the page. Most images will show up there, and you can open them in HQ.

I am gonna post this publicly for anyone else that might want to know.

Thanks!

13 notes


Text

Is Blogging Hard?

Yes, blogging is hard from an overall perspective [based on my own experience and the experiences shared by pros, collectively], but it can also be very rewarding over time.

In fact, this is a question that many people who are considering starting a blog ask themselves again and again.

Furthermore, it is currently the most sought-after side hustle after affiliate marketing, as well as a serious online business model.

Did you know? - “A great way to become an authority on your topic, product, or service is to blog” – HubSpot

For individuals who wish to do it as a hobby, with fewer business goals in mind, blogging is not hard. However, it can be hard for those who have business objectives and goals around it.

Why do some people think blogging is hard?

What makes blogging hard depends on a variety of circumstances and factors. Let’s dive in.

Let's start with the technical side of things. Setting up a blog can be a bit daunting if you're not particularly tech-savvy.

You'll need to choose a platform (WordPress is one of the most popular), find a hosting provider, and get your domain name registered.

Once that's done, you'll need to install your platform and choose a theme. This can take a bit of trial and error, as there are so many options out there.

Once your blog is up and running, you'll need to start customizing it to fit your brand. This can include things like adding widgets, creating menus, and tweaking to get everything just right.

In fact, it can be time-consuming and frustrating at times, but it's all part of the process.

In my case, this did not cause me too much headache because my blog website runs on the Wix platform, though of course I keep tweaking it based on user engagement and behavior.

Now for another hard part: blogging requires a lot of time and effort. Patience is a must to see results.

You need to come up with ideas for content, research your topics, write and edit your posts, and then promote them on social media and other channels. This can be a daunting task, especially if you're doing it all on your own.

Are you aware? - “Seventy-one percent of B2B buyers read blog content prior to making a purchase decision” – Contently

In addition to the time and effort required, there is also a steep learning curve when it comes to blogging.

You need to learn how to create engaging content that resonates with your audience, how to optimize your posts for search engines, and how to promote your blog effectively.

This can take some time to master, but there are many resources available online to help you learn the ropes [like reading experts’ tweets, email newsletters, social media communities and groups, and other blog posts related to blogging].

“Twenty-nine percent [29%] of marketers use a search-optimized website and blog to attract and convert leads” – HubSpot’s 2023 State of Marketing Report

Writing blog post content can take anywhere from a few hours to a few days, depending on the length and complexity of the post. You'll also need to edit and proofread your work before publishing it.

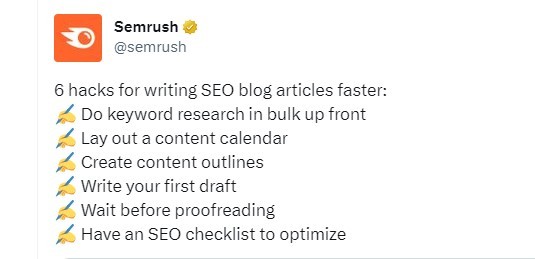

Image Content Source - Semrush Tweet Excerpt

But, thanks to the development of generative AI tools, you no longer need to put in countless hours and days because you can now create content for blog posts in a matter of seconds.

“AI content quality is already seen as really good. 65.8% of people think AI content is equal to or better than human writing” – AuthorityHacker’s AI Survey 2023 Report

Of course, you may want to spot-check for plagiarism periodically if you're worried. Either way, make sure to review the content, fact-check it, make any necessary changes, and then publish it.

The SEO performance of websites and blogs [on an overall basis] is not as good at the moment as it was [before Dec 2022], because of search engines' ongoing generative AI experiences and experiments, including updates by Google.

Simply said, there are fewer clicks and visitors to websites and blogs, and this concern has generated considerable discussion and has been widely shared on social media networks. Of course, this could also be a temporary phase.

That said, SEO [Search Engine Optimization] is still vital to the ranking success of blog posts, and high-quality, valuable content that is based on user intent or search intent will rule the day moving forward.

“Keeping an eye on the news in your niche is a great way to generate new blog post ideas. Try to add unique insights or opinions so your articles stand out” – Semrush

Image Source - The State of Content Marketing 2023 Global Report by Semrush

On the other hand, blogging can also be very rewarding. For starters, it allows you to share your thoughts and ideas with the world.

You can use your blog as a platform to showcase your expertise in a particular field, or simply share your hobbies and interests with others.

It can also be a great way to connect with like-minded people. By sharing your content on social media and other channels, you can attract an audience that shares your interests and engages with your content.

This can lead to meaningful conversations and even build relationships.

“Blogging isn’t useful if you aren’t keeping tabs on performance and reader engagement. Identify the metrics you want to track, review the analytics regularly, and make data-driven decisions to optimize and maximize your blogging efforts” – SmartBug and rockcontent [Report for 2023]

Blogging can also be very lucrative if you do it right.

By building a loyal audience and creating quality content, you can attract advertisers, get approved by ad networks, and find sponsors who are willing to pay you for exposure on your blog.

You can even promote affiliate products [via affiliate marketing] to increase your online income. Done well, blogging can monetize your passion and turn it into a profitable online business.

“About 15% of bloggers make a full-time income from blogging ($30K a year)” – Ahrefs

For businesses and brands, using a blog as a content marketing channel helps generate quality leads and sales over time and establishes brand authority, all at a relatively low cost.

“Having a blog on your website is a compelling way to capture leads for your SaaS company. You can add CTAs and signup links to your blog post to drive the users. Adding newsletter signup bars also helps in collecting leads” – Outgrow

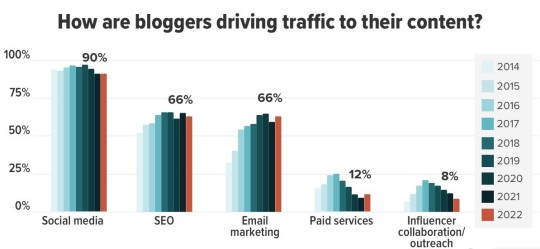

Image Source - Orbit Media Survey

So, to conclude: is blogging hard? Yes, it is, but it can also be incredibly rewarding.

If you're willing to put in the time and effort required, and are passionate about your topic [niche], then blogging can be a great way to share your ideas with the world, connect with others, and even make good money in the process.

Just remember to stay consistent, adapt to SEO trends, engage with your readers, and always strive to improve your writing skills so that blogging gets easier over time.

Here's related information that you may also find helpful – Why Blogs Fail? [Top 7 reasons along with pro-action plans for success].


Using Free AI to Make Character Art for TTRPGs

I love having art for my characters in Pathfinder, D&D, and other TTRPGs. But I don't love the cost, time, and effort of finding and commissioning an artist to draw them for me.

When AI art blew up in the recent past I was intrigued, and started seeing if I could get it to draw my characters.

Turns out, it can.

Here and there I've responded to people asking about making AI art, and after some requests, I figured I'd write a little guide on how-to. Here goes…

Before we begin, I want to make a few things clear:

AI art is tricky from legal and moral points of view. But I don't think any of that applies when it comes to making art for personal use only, using free, open-source tools. I really don't think anyone is hurt when the created art is seen only by myself and a few fellow players. Surely it's better than stealing art from a Google Images search or by surfing around on DeviantArt, which was the old way of getting character art for personal use.

I'm not an expert on AI art. I'm only an enthused hobbyist, sharing what I've found.

This is only one way of doing things, using one particular AI tool. I'm not claiming it's the best way. It's just what I know.

This is a fairly quick, introductory guide. It'll get you started. It's up to you to carry yourself onward to more advanced results!

Notice the date of this guide (you can see it down the bottom). This guide will get outdated. The links will slowly die as websites change. I apologise if you've come to it too late for it to be useful.

All that said, let's get started.

What You Need

You need Stable Diffusion, and in particular, this distribution. Installation instructions are there for you to follow. This guide will not cover installing it. Note that you will need a fairly powerful computer.

Later on, you can worry about installing some checkpoint models and optionally some LoRA models. Those links explain what those things are, and how to install them. Good models will make better images. But if you're in a hurry, don't worry, play with the pre-installed stuff and come back later.

All of the images immediately below were generated by Stable Diffusion, but different models and prompts created wildly different styles.

I've annotated all the images in this post with what models I am using. Mouse over an image and click the "ALT" to see. It's not vital for you to use the same models or prompts as me, but if you want to, you can.

Here's a quick list of models I've used:

Comic Diffusion 2

DreamShaper

Arcane Style LoRA

Stable Diffusion 1.5

Text to Image

Fire up Stable Diffusion. Click the "txt2img" tab. In the "prompt" area, type in something like "portrait of a male dwarven axe warrior, braided beard". I did this with fully default settings and got both images below. The only difference is the model: the left image uses a model that comes with Stable Diffusion, and the right uses a downloaded model.

Notice it ignored my request for a braided beard? The AI takes your prompt as a suggestion at best. You can weight parts of a prompt; read more about that here. (In fact, it's probably not a bad idea to read that whole article sometime, as prompts are important to understand.)

These images have braided beards!
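If you're curious what weighting looks like in practice, here's a small Python sketch that builds a weighted prompt string for you to paste into the "prompt" box. It assumes the distribution linked above is the AUTOMATIC1111 web UI (the tab names match, but that's an assumption) and that it uses the `(term:weight)` emphasis syntax, where a weight above 1 makes a term count for more. The helper names `weight` and `build_prompt` are made up for illustration.

```python
def weight(term: str, w: float) -> str:
    """Wrap a prompt term in (term:weight) emphasis syntax.

    Assumption: this is the AUTOMATIC1111 web UI syntax; other front ends differ.
    A weight of exactly 1.0 is left unwrapped because it changes nothing.
    """
    if w == 1.0:
        return term
    return f"({term}:{w:g})"


def build_prompt(parts: list) -> str:
    """Join (term, weight) pairs into one comma-separated prompt string."""
    return ", ".join(weight(term, w) for term, w in parts)


prompt = build_prompt([
    ("portrait of a male dwarven axe warrior", 1.0),
    ("braided beard", 1.4),  # emphasise the detail the model ignored
])
print(prompt)
# portrait of a male dwarven axe warrior, (braided beard:1.4)
```

Very high weights can distort the image, so nudging a weight up in small steps is a reasonable way to start.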

Hopefully you can see what we've covered already gets you a powerful tool for making character portraits.

Image to Image

"img2img" takes an image and redraws it in a new style. This is great if you've got a rough reference of your character that you'd like rendered more artfully.

One such example of doing this is with HeroForge. Make your character and capture a screenshot of it. (If you don't know how to take a screenshot, do a search to find out. It's not hard.)

Put this image in "img2img". Then use a prompt as before. Below is an example with a HeroForge screenshot on the left, and some generated AI art on the right.

Be sure to tinker with the sliders "CFG Scale" and "Denoising strength". You can mouse over each in Stable Diffusion to read more. While the defaults tend to work well for "txt2img", they often need to be tweaked in "img2img".

There's no reason why you can't use a "txt2img" image as an input to "img2img". You might do this if you wanted to generate variations on the image, while keeping the same basic look and arrangement.
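If you end up doing lots of img2img runs with different slider values, you can script them. This Python sketch is a rough example, not part of the method above: it assumes the web UI was started with its `--api` flag and serves an `/sdapi/v1/img2img` endpoint on `http://127.0.0.1:7860`, and the field names (`init_images`, `cfg_scale`, `denoising_strength`, `images`) are my understanding of that API, so check your version's `/docs` page. The helper names are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: the web UI was launched with --api and listens on this address.
BASE_URL = "http://127.0.0.1:7860"


def img2img_payload(image_bytes: bytes, prompt: str,
                    cfg_scale: float = 7.0,
                    denoising_strength: float = 0.5) -> dict:
    """Build a JSON body for the (assumed) img2img API endpoint.

    denoising_strength: near 0.0 keeps the input almost unchanged,
    near 1.0 mostly ignores it. cfg_scale: higher follows the prompt more strictly.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0.0 and 1.0")
    return {
        # Input images are sent as base64-encoded strings.
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "cfg_scale": cfg_scale,
        "denoising_strength": denoising_strength,
    }


def run_img2img(payload: dict) -> list:
    """POST the payload and return each generated image as raw PNG bytes."""
    request = urllib.request.Request(
        BASE_URL + "/sdapi/v1/img2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    return [base64.b64decode(image) for image in result["images"]]
```

To use it, read your HeroForge screenshot with `open(path, "rb").read()`, pass the bytes to `img2img_payload`, and try a few `denoising_strength` values (say 0.3, 0.5 and 0.7) to see how far each result drifts from the screenshot.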

Improving Images: Upscale

Stable Diffusion can't generate large images. But Stable Diffusion does have an in-built tool in the "Extras" tab where you can resize images (any images, not just ones you've made in Stable Diffusion).

This upscaler does a much better job than most "dumb" image resizing tools. Be sure to actually select an upscaler (the "R-ESRGAN" ones work well); if you use "None" then you'll get awful results.

It's pretty simple to figure out. If need be, you can read about it here.

Improving Images: Mix and Match

This requires you to have an image editor like Gimp (which is free) or Photoshop, and the skills to use it. It's a technique I often use when I'm feeling very fussy and want perfect results.

1. Using "img2img", get an image with models and prompts that you feel generate pretty good results. (You can always use an image you generated with "txt2img" as the image input for "img2img".)

2. Set Stable Diffusion to generate loads of images using the parameters from step 1. The outputs should all look very similar, but with different details.

3. Choose different images with bits you like. Find a picture that you like overall, then find picture(s) that have done certain things better. For instance, you might like the hair of one image and the eyes of another.

4. Get the pictures you identified in step 3 and throw them into your image editing tool.

5. Layer the images on top of one another. Put the "base image" that you liked overall at the bottom.

6. For images that are not the "base", erase everything except the part you like. Because the images are so similar, this should work well. Use a soft-edged eraser for best results.

7. Adjust your layers and transparencies until it's just right. Then flatten the image and save it.
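Under the hood, the soft-edge erase and flatten steps are just alpha compositing. This toy Python sketch models them with tiny grayscale images stored as 2D lists of 0 to 255 values (an assumption to keep it dependency-free; Gimp or Photoshop do all of this for you). The function names are made up for illustration: `feathered_mask` plays the soft eraser, fully opaque over the part you keep and fading to transparent over a few pixels so there's no hard seam, and `flatten` stacks each layer over the base image, bottom to top.

```python
def feathered_mask(width: int, height: int, box: tuple, feather: int) -> list:
    """Alpha mask: 1.0 inside box, fading linearly to 0.0 over `feather` pixels.

    box is (x0, y0, x1, y1), inclusive start and exclusive end like Python slices.
    """
    x0, y0, x1, y1 = box
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Distance (in pixels) from this pixel to the kept region; 0 inside it.
            dx = max(x0 - x, 0, x - (x1 - 1))
            dy = max(y0 - y, 0, y - (y1 - 1))
            dist = max(dx, dy)
            if feather <= 0:
                alpha = 1.0 if dist == 0 else 0.0  # hard-edged eraser
            else:
                alpha = max(0.0, 1.0 - dist / feather)
            row.append(alpha)
        mask.append(row)
    return mask


def composite(base: list, layer: list, mask: list) -> list:
    """Blend `layer` over `base` per pixel: alpha * layer + (1 - alpha) * base."""
    return [
        [round(a * top + (1 - a) * bottom) for bottom, top, a in zip(brow, lrow, mrow)]
        for brow, lrow, mrow in zip(base, layer, mask)
    ]


def flatten(base: list, layers: list) -> list:
    """Apply (image, mask) layers bottom to top over the base image."""
    result = base
    for image, mask in layers:
        result = composite(result, image, mask)
    return result


# A 5x1 "image": keep only the middle pixel of the layer, feathered over 2 pixels.
base = [[0, 0, 0, 0, 0]]
eyes_layer = [[100, 100, 100, 100, 100]]
mask = feathered_mask(5, 1, (2, 0, 3, 1), feather=2)
print(flatten(base, [(eyes_layer, mask)]))
# [[0, 50, 100, 50, 0]]
```

The gradual 50 values either side of the kept pixel are what a soft eraser buys you: the swapped-in detail blends into the base instead of ending in a visible edge.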

General Tips

Here are some general tips for generating AI art:

If you only want a portrait, only ask for a portrait. That means specifying terms like "portrait" in your prompt, and if you're using img2img only supply the portrait area. Most people wanting character art will only want a portrait, after all. The quality of faces made by AI art seems to get worse the more of the body it draws.

Use celebrities to guide facial features. Do you want a certain look for your character? Throw the celebrity's name into the prompt. You'll often get a happy medium: an "angelina jolie half-orc" won't just be the actress with green skin, but you will get a certain resemblance. There are lots of celebrities with very striking looks, so this can work well! Can you guess the celebrities of these portraits? Click their "ALT" tag to find out!

AIs know the "Tolkien races" (humans, elves, dwarves, etc.) better than obscure races like dragonborn and kenku. Semi-famously, AI sucks at centaurs. But these races aren't impossible, as shown below.

Make large batches of images. When you think you've mostly gotten the settings right, set the AI to make dozens or even hundreds of images. The only downside to doing so is that it'll take you some time to pick the best one(s)! There's a handy button to "Open images output directory" to make browsing easy.

Most importantly, fiddle and experiment. There are so many settings you can fiddle with, so many models and LoRAs you can try out, so many different ways to word your prompts. (For prompts, just Google "prompt stable diffusion" and you'll see plenty of guides and inspiration.) Never get "stuck" on a particular way of doing things: you may find better results are just a small tweak away!
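For the "make large batches" tip, the same batch can also be requested through the web UI's API instead of the sliders. Another hedged Python sketch: it assumes the `--api` flag, a `/sdapi/v1/txt2img` endpoint on `http://127.0.0.1:7860`, and that the `batch_size` and `n_iter` fields correspond to the UI's "Batch size" and "Batch count" settings. The function names and the `portrait_batch` output folder are made up for illustration.

```python
import base64
import json
import os
import urllib.request

# Assumption: same local web UI started with --api.
BASE_URL = "http://127.0.0.1:7860"


def txt2img_batch_payload(prompt: str, batch_size: int = 4, n_iter: int = 25,
                          seed: int = -1) -> dict:
    """Build a txt2img request that should yield batch_size * n_iter images.

    batch_size: images generated together per run (limited by your GPU memory).
    n_iter: how many runs happen back to back. seed=-1 requests a random seed.
    """
    return {
        "prompt": prompt,
        "batch_size": batch_size,
        "n_iter": n_iter,
        "seed": seed,
    }


def total_images(payload: dict) -> int:
    """How many images one request is expected to generate."""
    return payload["batch_size"] * payload["n_iter"]


def generate_and_save(payload: dict, out_dir: str = "portrait_batch") -> list:
    """Run txt2img and write every returned image to out_dir as a numbered PNG."""
    os.makedirs(out_dir, exist_ok=True)
    request = urllib.request.Request(
        BASE_URL + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        images = json.load(response)["images"]
    paths = []
    for index, image in enumerate(images):
        path = os.path.join(out_dir, f"{index:04d}.png")
        with open(path, "wb") as handle:
            handle.write(base64.b64decode(image))
        paths.append(path)
    return paths
```

Under those assumptions, `generate_and_save(txt2img_batch_payload("portrait of a male dwarven axe warrior, braided beard"))` would write about 100 numbered PNGs for you to browse. Depending on your web UI version and settings, the response may also include a grid image, so the file count could differ slightly.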


The 4-Minute Rule for How to Sell Photos Online: Best Practices and Where to Do It

The ability to make money online as a photographer by selling your photos is all about harnessing the creativity at the heart of your work and applying it to monetizing your talents. My new job as an editorial developer has involved building a crew of photographers who will focus on making our work more visually striking and fun to look at. They are also going to put in the time and effort to make your work look its best for everyone you work with.


It can seem hard to make it when anyone with the newest iPhone and access to free photo editing software can call themselves a photographer, but success for many creators who turn to entrepreneurship comes down to three things: finding your niche, building an audience, and creating multiple streams of passive income. This resource will look at some of the things you should know about how to sell your photography online, with resources to help you find the best way to sell photos online, such as online marketplaces where you can list your photos.

How to sell photos online

Best Places to Sell Photos Online and Make Money

Licensing is one of the most popular ways to “sell” your photos online to brands, publishers, or anyone who might be interested in using your photos for their own purposes. It's also one of the most convenient ways to sell photos in the first place. It's not only that the photos are worth money to you; it's that the business owner expects to use them for their own business.

And that’s the key here. This has to be a conversation, not a one-off. That is all we were told about how these things worked. In the very first hour of the meeting, the hosts did absolutely no research. The hosts were all so excited about the prospect of something like this, and it was only then that the moderators asked again and the applicants began to question the integrity of the work they were doing.

You need to work backwards and think about how your photos could be used by a business or publisher. The next step is to create an app. Make app shortcuts using an app shortcuts tool. App shortcuts are usually used to handle problems in production: writing a simple task, then checking your email and Facebook newsfeed. Apps that follow the same rules as your productivity apps are easy to use.

Functional photos that convey ideas tend to be popular, especially when they feature human subjects. What's especially interesting about this is that people typically focus on the subjects themselves. This isn't a bad thing, and I think it is better than producing a bunch of photos that look like they have no point. What I'm working on is finding a way for people to interact with the information that is easily accessible through an open-source browser engine such as WebKit.

There are a lot of stock photo websites to choose from. Click below to find out how! If you're in the habit of browsing through photos of your friends, look no further than our search engine for sharing your favorite photos. The results are impressive in any color! The Photo Index List page includes a full directory of 100 of the top 500 photo journals from the listing. Simply search for journals and you're on your way!

Let’s look at the top places to sell photos online:

1. Free Scenery in iTunes 4 Tidy #19 - How Not to Be Determined

When you create something like this, it's important to know what you're going to do and why. The top 3 reasons to decide on your next venture are:

* The time will be right. I will focus on my venture and see how good that effort can feel.

* The money will go to the people in my industry.