#ai assistant

Text

They are my lifeline

[individual drawings below]

#character ai#ai chatbot#ai chatting#ai chatgpt#ai assistant#ai#artificial intelligence#chatgpt#chatbots#openai#ai tools#artists on tumblr#artist appreciation#ao3#archive of our own#archive of my own#humanized#my drawing museum

249 notes

Text

Watched a video about these "AI assistants" that Meta has launched with celebrity faces (Kendall Jenner, Snoop Dogg etc.). Somebody speculated/mentioned in the comments that eventually Meta wants to sell assistant apps to companies, but that makes ... no sense.

If they mean in the sense of a glorified search engine that gives you subtly wrong answers half the time and can't do math, sure - not that that's any different than the stuff that already exists (????)

But if they literally mean assistant, that's completely bogus. The bulk of an assistant's job is organizing things - getting stuff purchased, herding a bunch of hard-to-reach people into the same meeting, booking flights and rides, following up on important conversations. Yes, for some of these there's already an app that has automated the process to a degree. But if these processes were sufficiently automated, companies would already have phased out assistant positions. Sticking a well-read chat bot on top of Siri won't solve this.

If I ask my assistant to get me the best flight to New York, I don't want it to succeed 80% of the time and, the rest of the time, book me a flight at 2 a.m., send me to New York, Florida, or put me on a flight that's 8 hours longer than necessary. And yes, you can probably optimize an app + chat bot for this specific task so it only fails 2% of the time.

But you cannot optimize a program to be good at everything–booking flights, booking car rentals, organizing catering, welcoming people at the front desk and basically any other request a human could think of. What you're looking for is a human brain and body.

Humans can improvise, prioritize, make decisions, and, very importantly, interact freely with the material world. Developing a sufficiently advanced assistant is a pipe dream.

#now i understand that part of it might just be another round of hype to avoid shares dropping because it looks worse to write 'we got some#videos of kendall jenner and hope to make money off of it someday'#funnily enough no matter what complexity these assistant apps reach#it will be human assistants who use them#because the crux of having an assistant is that you DON'T have to deal with the nitty-gritty (like did the app understand my request) or#follow-up#meta#ai#post#ai assistant#the other thing to consider is when you let an app interact with a service for you that concerns spending money (like booking a flight but#really anything where money will be spent in the process) you lose power as a consumer#because you will hand over data about what you want and have to deal with the intransparency of the service#are you getting suggested the best/fastest/cheapest flight or the one from the airline that has a contract with your assistant app?#we are already seeing this with the enshittification of uber or other food or ride share apps#the company has the power to manipulate consumers and 'contractors' alike because they program the app

9 notes

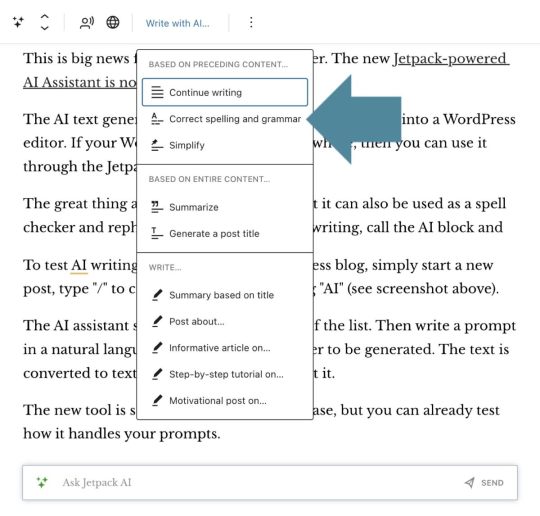

Photo

The new AI Assistant is now available for WordPress! The AI writing tool is seamlessly integrated as a block into a WordPress editor. It can also be used as a spell checker, rephraser, and title generator.

(via Now you can use AI writing directly in WordPress)

#wordpress#ai#ai writer#ai text#ai assistant#spell checker#ai rephraser#text editor#wordpress ai#writer#author#writing#writer updated

5 notes

Text

Kyle & Robby is officially out! - I'm Elite_Cat87 (cat0902 on Scratch), and I would like to announce that Kyle & Robby is out! If you want to check out my comic, go to Scratch and search for my Scratch username.

#comics#comic#best#awesome#interesting#underrated#scratch#design#character design#advertising#coolest#everything everywhere all at once#everything#villain#ai assistant

2 notes

Text

Everything You Need to Know About Llama 3 | Most Powerful Open-Source Model Yet | Concepts to Usage

New Post has been published on https://thedigitalinsider.com/everything-you-need-to-know-about-llama-3-most-powerful-open-source-model-yet-concepts-to-usage/

Meta has recently released Llama 3, the next generation of its state-of-the-art open source large language model (LLM). Building on the foundations set by its predecessor, Llama 3 aims to enhance the capabilities that positioned Llama 2 as a significant open-source competitor to ChatGPT, as outlined in the comprehensive review in the article Llama 2: A Deep Dive into the Open-Source Challenger to ChatGPT.

In this article we will discuss the core concepts behind Llama 3, explore its innovative architecture and training process, and provide practical guidance on how to access, use, and deploy this groundbreaking model responsibly. Whether you are a researcher, developer, or AI enthusiast, this post will equip you with the knowledge and resources needed to harness the power of Llama 3 for your projects and applications.

The Evolution of Llama: From Llama 2 to Llama 3

Meta’s CEO, Mark Zuckerberg, announced the debut of Llama 3, the latest AI model developed by Meta AI. This state-of-the-art model, now open-sourced, is set to enhance Meta’s various products, including Messenger and Instagram. Zuckerberg highlighted that Llama 3 positions Meta AI as the most advanced freely available AI assistant.

Before we talk about the specifics of Llama 3, let’s briefly revisit its predecessor, Llama 2. Introduced in July 2023, Llama 2 was a significant milestone in the open-source LLM landscape, offering a powerful and efficient model that could be run on consumer hardware.

However, while Llama 2 was a notable achievement, it had its limitations. Users reported issues with false refusals (the model refusing to answer benign prompts), limited helpfulness, and room for improvement in areas like reasoning and code generation.

Enter Llama 3: Meta’s response to these challenges and the community’s feedback. With Llama 3, Meta has set out to build the best open-source models on par with the top proprietary models available today, while also prioritizing responsible development and deployment practices.

Llama 3: Architecture and Training

One of the key innovations in Llama 3 is its tokenizer, which features a significantly expanded vocabulary of 128,256 tokens (up from 32,000 in Llama 2). This larger vocabulary allows for more efficient encoding of text, both for input and output, potentially leading to stronger multilingualism and overall performance improvements.
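To get a feel for what the larger vocabulary costs in parameters, here's a rough back-of-the-envelope sketch. The vocabulary sizes come from the text above; the hidden size of 4096 and the choice to count separate input and output embedding matrices are assumptions made for illustration, not figures from this article.

```python
# Rough estimate of embedding parameters for a given vocabulary size.
# HIDDEN_SIZE and the untied-embeddings default are assumptions, not
# figures stated in the article.
HIDDEN_SIZE = 4096


def embedding_params(vocab_size, hidden_size=HIDDEN_SIZE, tied=False):
    """Return the number of parameters in the token embedding tables.

    With untied embeddings there are two vocab x hidden matrices:
    one for input lookup and one for the output projection.
    """
    matrices = 1 if tied else 2
    return vocab_size * hidden_size * matrices


llama2_vocab = 32_000    # from the article
llama3_vocab = 128_256   # from the article

old = embedding_params(llama2_vocab)
new = embedding_params(llama3_vocab)
print(f"32,000-token vocabulary:  {old:,} embedding parameters")
print(f"128,256-token vocabulary: {new:,} embedding parameters")
print(f"Difference:               {new - old:,}")
```

Under these assumptions the bigger vocabulary adds several hundred million parameters by itself, which is the trade-off for encoding the same text in fewer tokens.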

Llama 3 also incorporates Grouped-Query Attention (GQA), in which groups of query heads share a single set of key and value heads. This shrinks the key-value cache at inference time, which improves scalability and helps the model handle longer contexts. Both the 8B and 70B versions of Llama 3 use GQA, and both can process sequences of up to 8,192 tokens.
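The practical payoff of GQA is easiest to see in the size of the key-value cache. The sketch below compares standard multi-head attention with GQA at the 8,192-token context length mentioned above. The layer count, head counts, head dimension, and 16-bit storage are assumed values chosen to resemble an 8B-class model; they are not taken from this article.

```python
# KV-cache size with and without grouped-query attention.
# All configuration numbers below are illustrative assumptions.
N_LAYERS = 32
N_QUERY_HEADS = 32
N_KV_HEADS_GQA = 8
HEAD_DIM = 128
BYTES_PER_VALUE = 2  # 16-bit floats


def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim,
                   bytes_per_value=BYTES_PER_VALUE):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one key tensor plus one value tensor per layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Index of the shared KV head that a given query head reads from."""
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


seq_len = 8192
mha = kv_cache_bytes(seq_len, N_LAYERS, N_QUERY_HEADS, HEAD_DIM)
gqa = kv_cache_bytes(seq_len, N_LAYERS, N_KV_HEADS_GQA, HEAD_DIM)
print(f"Multi-head attention cache: {mha / 1024**3:.1f} GiB")
print(f"Grouped-query cache:        {gqa / 1024**3:.1f} GiB")
print("Query heads 0-7 map to KV heads:",
      [kv_head_for_query_head(h, N_QUERY_HEADS, N_KV_HEADS_GQA)
       for h in range(8)])
```

With four query heads per KV head, the cache is a quarter of the multi-head size, which is where the memory saving for long contexts comes from.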

Training Data and Scaling

The training data used for Llama 3 is a crucial factor in its improved performance. Meta curated a massive dataset of over 15 trillion tokens from publicly available online sources, seven times larger than the dataset used for Llama 2. This dataset also includes a significant portion (over 5%) of high-quality non-English data, covering more than 30 languages, in preparation for future multilingual applications.

To ensure data quality, Meta employed advanced filtering techniques, including heuristic filters, NSFW filters, semantic deduplication, and text classifiers trained on Llama 2 to predict data quality. The team also conducted extensive experiments to determine the optimal mix of data sources for pretraining, ensuring that Llama 3 performs well across a wide range of use cases, including trivia, STEM, coding, and historical knowledge.
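As a toy illustration of how such a filtering pipeline fits together, here's a minimal sketch. It is not Meta's pipeline: the thresholds are invented, exact-match hashing stands in for semantic deduplication, and `toy_quality_score` is a hypothetical placeholder for a learned quality classifier.

```python
import hashlib


def passes_heuristics(text, min_words=5, min_alpha_ratio=0.6):
    """Cheap rule-based filter: drop very short or mostly non-text documents."""
    words = text.split()
    if len(words) < min_words:
        return False
    alpha = sum(ch.isalpha() for ch in text)
    return alpha / len(text) >= min_alpha_ratio


def fingerprint(text):
    """Hash of case- and whitespace-normalized text.

    This only catches near-exact copies; real semantic deduplication
    would compare embeddings instead.
    """
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def toy_quality_score(text):
    """Hypothetical stand-in for a quality classifier: ratio of unique words."""
    words = text.lower().split()
    return len(set(words)) / len(words)


def filter_corpus(docs, quality_score=toy_quality_score, threshold=0.5):
    """Apply heuristics, deduplication, then the quality score, in that order."""
    seen = set()
    kept = []
    for doc in docs:
        if not passes_heuristics(doc):
            continue
        fp = fingerprint(doc)
        if fp in seen:
            continue
        seen.add(fp)
        if quality_score(doc) >= threshold:
            kept.append(doc)
    return kept


sample = [
    "The quick brown fox jumps over the lazy dog.",
    "the quick  brown fox jumps over the lazy dog.",  # duplicate after normalizing
    "buy buy buy buy buy buy buy buy",                # repetitive, low score
    "1234 5678 90 12 34 56",                          # mostly digits
    "too short",
]
print(filter_corpus(sample))
```

Running the cheap checks first means the more expensive scoring step only sees documents that survived the heuristics and deduplication.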

Scaling up pretraining was another critical aspect of Llama 3’s development. Meta developed scaling laws that enabled them to predict the performance of its largest models on key tasks, such as code generation, before actually training them. This informed the decisions on data mix and compute allocation, ultimately leading to more efficient and effective training.
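The idea of predicting a large model's performance before training it can be sketched with a simple power-law fit. The example below fits loss ≈ a · compute^b to a handful of small runs by least squares in log-log space, then extrapolates. The data points are synthetic, and the article does not say which functional form Meta used, so treat this purely as an illustration of the general approach.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**b in log-log space.

    Returns (a, b); b is negative when loss falls as compute grows.
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    b = cov / var
    a = math.exp(mean_y - b * mean_x)
    return a, b


def predict_loss(a, b, compute):
    """Extrapolate the fitted curve to a new compute budget."""
    return a * compute ** b


# Synthetic "small run" measurements (hypothetical numbers).
small_compute = [1e18, 1e19, 1e20, 1e21]
small_loss = [20.0 * c ** -0.05 for c in small_compute]

a, b = fit_power_law(small_compute, small_loss)
print(f"Fitted: loss = {a:.2f} * compute^{b:.3f}")
print(f"Predicted loss at 1e24 FLOPs: {predict_loss(a, b, 1e24):.3f}")
```

In practice the fit would be made on measured results from real small-scale runs, and the extrapolation would be checked against held-out intermediate sizes before trusting it.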

Llama 3’s largest models were trained on two custom-built 24,000 GPU clusters, leveraging a combination of data parallelization, model parallelization, and pipeline parallelization techniques. Meta’s advanced training stack automated error detection, handling, and maintenance, maximizing GPU uptime and increasing training efficiency by approximately three times compared to Llama 2.

Instruction Fine-tuning and Performance

To unlock Llama 3’s full potential for chat and dialogue applications, Meta innovated its approach to instruction fine-tuning. Its method combines supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO).

The quality of the prompts used in SFT and the preference rankings used in PPO and DPO played a crucial role in the performance of the aligned models. Meta’s team carefully curated this data and performed multiple rounds of quality assurance on annotations provided by human annotators.

Training on preference rankings via PPO and DPO also significantly improved Llama 3’s performance on reasoning and coding tasks. Meta found that even when a model struggles to answer a reasoning question directly, it may still produce the correct reasoning trace. Training on preference rankings enabled the model to learn how to select the correct answer from these traces.
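To make the preference-training step a little more concrete, here's a minimal sketch of the standard DPO loss for a single preference pair. It takes the summed log-probabilities of the chosen and rejected responses under the model being trained and under a frozen reference model. The `beta` value of 0.1 and the example numbers are assumptions; the article does not give Meta's settings.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair.

    loss = -log(sigmoid(beta * ((pc - rc) - (pr - rr))))
    where pc/pr are policy log-probs and rc/rr are reference log-probs.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) equals softplus(-x); this form avoids overflow.
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))


# No preference learned yet: policy matches the reference.
print(f"{dpo_loss(-12.0, -12.0, -12.0, -12.0):.4f}")  # equals ln(2)

# Policy now favors the chosen answer more than the reference does.
print(f"{dpo_loss(-10.0, -14.0, -12.0, -12.0):.4f}")
```

The loss drops below ln(2) as soon as the policy raises the chosen response's likelihood relative to the rejected one, measured against the reference model, which is the signal that teaches the model to pick the better answer.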

The results speak for themselves: Llama 3 outperforms many available open-source chat models on common industry benchmarks, establishing new state-of-the-art performance for LLMs at the 8B and 70B parameter scales.

Responsible Development and Safety Considerations

While pursuing cutting-edge performance, Meta also prioritized responsible development and deployment practices for Llama 3. The company adopted a system-level approach, envisioning Llama 3 models as part of a broader ecosystem that puts developers in the driver’s seat, allowing them to design and customize the models for their specific use cases and safety requirements.

Meta conducted extensive red-teaming exercises, performed adversarial evaluations, and implemented safety mitigation techniques to lower residual risks in its instruction-tuned models. However, the company acknowledges that residual risks will likely remain and recommends that developers assess these risks in the context of their specific use cases.

To support responsible deployment, Meta has updated its Responsible Use Guide, providing a comprehensive resource for developers to implement model and system-level safety best practices for their applications. The guide covers topics such as content moderation, risk assessment, and the use of safety tools like Llama Guard 2 and Code Shield.

Llama Guard 2, built on the MLCommons taxonomy, is designed to classify LLM inputs (prompts) and responses, detecting content that may be considered unsafe or harmful. CyberSecEval 2 is an evaluation suite that expands on its predecessor by adding measures of an LLM’s propensity to allow abuse of its code interpreter, its offensive cybersecurity capabilities, and its susceptibility to prompt injection attacks.

Code Shield, a new introduction with Llama 3, adds inference-time filtering of insecure code produced by LLMs, mitigating risks associated with insecure code suggestions, code interpreter abuse, and secure command execution.

Accessing and Using Llama 3

Meta has made Llama 3 models available through various channels, including direct download from the Meta Llama website, Hugging Face repositories, and popular cloud platforms like AWS, Google Cloud, and Microsoft Azure.

To download the models directly, users must first accept Meta’s Llama 3 Community License and request access through the Meta Llama website. Once approved, users will receive a signed URL to download the model weights and tokenizer using the provided download script.

Alternatively, users can access the models through the Hugging Face repositories, where they can download the original native weights or use the models with the Transformers library for seamless integration into their machine learning workflows.

Here’s an example of how to use the Llama 3 8B Instruct model with Transformers:

# Install required libraries
!pip install transformers torch accelerate
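The install step alone doesn't show what a chat request looks like, so here's a rough sketch of the prompt layout the Llama 3 Instruct models expect, built by hand in plain Python. `build_llama3_prompt` is a hypothetical helper written for this illustration. In a real project you would normally let the tokenizer's `apply_chat_template` method in Transformers produce this string rather than assembling it yourself.

```python
def build_llama3_prompt(messages):
    """Assemble a Llama 3 Instruct-style prompt from chat messages.

    `messages` is a list of {"role": ..., "content": ...} dicts.
    The prompt ends with an open assistant header so the model
    continues with its reply.
    """
    parts = ["<|begin_of_text|>"]
    for message in messages:
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


chat = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize grouped-query attention in one sentence."},
]
print(build_llama3_prompt(chat))
```

Each turn is wrapped in a role header and closed with an end-of-turn token, so the model can tell where one speaker stops and the next begins.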

Deploying Llama 3 at Scale

In addition to providing direct access to the model weights, Meta has partnered with various cloud providers, model API services, and hardware platforms to enable seamless deployment of Llama 3 at scale.

One of the key advantages of Llama 3 is its improved token efficiency, thanks to the new tokenizer. Benchmarks show that Llama 3 requires up to 15% fewer tokens compared to Llama 2, resulting in faster and more cost-effective inference.

The integration of Grouped Query Attention (GQA) in the 8B version of Llama 3 contributes to maintaining inference efficiency on par with the 7B version of Llama 2, despite the increase in parameter count.

To simplify the deployment process, Meta has provided the Llama Recipes repository, which contains open-source code and examples for fine-tuning, deployment, model evaluation, and more. This repository serves as a valuable resource for developers looking to leverage Llama 3’s capabilities in their applications.

For those interested in exploring Llama 3’s performance, Meta has integrated its latest models into Meta AI, a leading AI assistant built with Llama 3 technology. Users can interact with Meta AI through various Meta apps, such as Facebook, Instagram, WhatsApp, Messenger, and the web, to get things done, learn, create, and connect with the things that matter to them.

What’s Next for Llama 3?

While the 8B and 70B models mark the beginning of the Llama 3 release, Meta has ambitious plans for the future of this groundbreaking LLM.

In the coming months, we can expect to see new capabilities introduced, including multimodality (the ability to process and generate different data modalities, such as images and videos), multilingualism (supporting multiple languages), and much longer context windows for enhanced performance on tasks that require extensive context.

Additionally, Meta plans to release larger model sizes, including models with over 400 billion parameters, which are currently in training and showing promising trends in terms of performance and capabilities.

To further advance the field, Meta will also publish a detailed research paper on Llama 3, sharing its findings and insights with the broader AI community.

As a sneak preview of what’s to come, Meta has shared some early snapshots of its largest model’s performance on various benchmarks. While these results are based on an early checkpoint and are subject to change, they provide an exciting glimpse into the future potential of Llama 3.

Conclusion

Llama 3 represents a significant milestone in the evolution of open-source large language models, pushing the boundaries of performance, capabilities, and responsible development practices. With its innovative architecture, massive training dataset, and cutting-edge fine-tuning techniques, Llama 3 establishes new state-of-the-art benchmarks for LLMs at the 8B and 70B parameter scales.

However, Llama 3 is more than just a powerful language model; it’s a testament to Meta’s commitment to fostering an open and responsible AI ecosystem. By providing comprehensive resources, safety tools, and best practices, Meta empowers developers to harness the full potential of Llama 3 while ensuring responsible deployment tailored to their specific use cases and audiences.

As the Llama 3 journey continues, with new capabilities, model sizes, and research findings on the horizon, the AI community eagerly awaits the innovative applications and breakthroughs that will undoubtedly emerge from this groundbreaking LLM.

Whether you’re a researcher pushing the boundaries of natural language processing, a developer building the next generation of intelligent applications, or an AI enthusiast curious about the latest advancements, Llama 3 promises to be a powerful tool in your arsenal, opening new doors and unlocking a world of possibilities.

#000#2022#ai#ai assistant#ai model#API#applications#approach#apps#architecture#Art#Article#Artificial Intelligence#assessment#attention#AWS#azure#benchmarks#billion#Building#CEO#change#chatGPT#Cloud#cloud providers#clusters#code#code generation#code suggestions#coding

0 notes

Text

Chatbot Showdown: Can Claude dethrone ChatGPT?

(Images made by author with Microsoft Copilot)

Anthropic’s Claude 3 model family, released on March 4, 2024, intensifies the chatbot competition. Consisting of three increasingly powerful models—Haiku, Sonnet, and Opus—they push the boundaries of AI capabilities, according to Anthropic. This post directly compares Claude 3 Sonnet (hereafter Claude) to the free version of the leading chatbot,…

View On WordPress

0 notes

Text

Copilot?? Fucking just bring back clippy, you'd have total market AI spyware saturation if you just said "hey we're bringing back the gay little paperclip who sits on your desktop" and we'd have 10000 images of him as a bishi twink getting railed by the bonzi buddy in seconds

#like i dont want ai in my os and all that jazz#but like#the ai makers really dont know how to market this shit#ai assistant#ai

0 notes

Text

youtube

A digital assistant, also known as a predictive chatbot, is a technology designed to assist users by answering questions and processing simple tasks. In this video, we'll discuss the rise of digital assistants in healthcare and see if they truly are making our lives easier. Let's dive into the video and learn more about Siri, Alexa, and Google Assistant.

Digital assistants have become pervasive in our lives. When we wake up, we have Amazon’s Alexa read us the news and remind us to pick up our dry cleaning. As we get ready for the day, Siri plays our favorite songs. To get to work, we order an Uber with Google Assistant. However, when we get to the office, those products don’t follow us through the door. That is about to change in a big way.

Digital assistants are intelligent software programs designed to provide various services and support through natural language processing and machine learning algorithms. These assistants have gained immense popularity due to their ability to enhance efficiency, convenience, and user experience across a wide range of applications.

Digital assistants are designed to streamline tasks and automate processes, saving users time and effort. They can manage calendars, set reminders, schedule appointments, and even help with email management, thus allowing users to focus on more valuable and creative tasks.

Unlike human assistants, digital assistants are available around the clock, providing support whenever users need it. This constant availability ensures that users can access information and assistance whenever they require it, improving responsiveness and user satisfaction.

Digital assistants are increasingly being used in business settings to assist with customer support, perform data analysis, manage tasks, and even facilitate virtual meetings. These applications help organizations improve efficiency and offer better services to their customers.

Modern digital assistants leverage machine learning to adapt and learn from user interactions. Over time, they become better at understanding user preferences, needs, and speech patterns, resulting in a more personalized and tailored experience for each user.

Digital assistants offer a wide array of benefits, including increased productivity, personalized experiences, accessibility, convenience, and the ability to handle a variety of tasks. As technology continues to advance, we can expect digital assistants to become even more sophisticated and integrated into various aspects of our lives.

The Rise of Digital Assistants: Siri, Alexa and Google Assistant

#the rise of digital assistants#digital assistant#alexa#siri#google assistant#rise of a digital nation#what is a digital assistant#virtual assistant#benefits of using digital assistants#amazon alexa#LimitLess Tech 888#machine learning#artificial intelligence#computer digital assistant#the future of digital assistants#ai assistant#voice assistant#personal digital assistant#digital assiatants ai#oracle digital assistant#ai personal assistant#Youtube

1 note

Text

How to Use the AI Assistant in Adobe Acrobat Pro DC

Dive into the potential of Adobe Acrobat's AI Assistant for document accessibility. We're testing features!

#Accessibility #AdobeAcrobat #DocumentAccessibility #AIAssistant #PDFAccessibility #InclusiveTech

In today’s post, we’re going to take a deep dive into the capabilities of the AI Assistant within Adobe Acrobat Pro DC.

Video Guide

Adobe Acrobat’s AI assistant is a recent addition designed to enhance accessibility features, particularly for those creating accessible documents. As we embark on this journey, I have a sample document open, already made accessible, to demonstrate its…

View On WordPress

0 notes

Text

Enhancing Digital Conversations: The EXA AI Chat Experience

In today's fast-paced digital landscape, effective communication is key. Whether it's for personal or professional reasons, the way we interact online plays a crucial role in our everyday lives. That's where EXA AI Chat comes in. With its cutting-edge technology and intuitive design, EXA AI Chat is revolutionizing the way we converse online.

One of the standout features of EXA AI Chat is its advanced AI assistance. Powered by state-of-the-art algorithms, EXA AI Chat's virtual assistant provides unparalleled support, helping users navigate conversations with ease. Whether you're chatting with friends, collaborating with colleagues, or seeking advice from experts, EXA AI Chat's intelligent assistant is there to lend a hand.

But EXA AI Chat isn't just about functionality – it's about creating meaningful connections. With its user-friendly interface and intuitive controls, EXA AI Chat makes it easy to engage with others in a natural and authentic way. Whether you're sharing ideas, asking questions, or simply catching up with friends, EXA AI Chat enhances the overall conversation experience.

Another highlight of EXA AI Chat is its productivity-enhancing features. From scheduling appointments to setting reminders, EXA AI Chat helps users stay organized and efficient, ensuring that no task falls through the cracks. With EXA AI Chat by your side, you can streamline your workflow and focus on what matters most.

In addition to its practical benefits, EXA AI Chat also fosters a sense of community. With its built-in chat rooms and discussion forums, EXA AI Chat brings people together from all walks of life, facilitating meaningful connections and fostering a sense of belonging.

Overall, EXA AI Chat is more than just a chat app – it's a powerful tool for enhancing digital conversations and building connections that matter. With its innovative features, intuitive design, and commitment to user satisfaction, EXA AI Chat is shaping the future of online communication. Experience the difference for yourself and unlock a new world of possibilities with EXA AI Chat.

0 notes

Text

The impact and challenges of generative AI in healthcare

New Post has been published on https://thedigitalinsider.com/the-impact-and-challenges-of-generative-ai-in-healthcare/

Generative AI is transforming multiple sectors, including healthcare, where its capabilities to synthesize and analyze vast data types are being leveraged by major tech companies and innovative startups.

In a significant collaboration, Google Cloud is working with Highmark Health to create artificial intelligence tools aimed at improving patient intake processes. Amazon’s AWS is exploring the uses of generative AI for social health determinants analysis in medical databases. Microsoft Azure’s collaboration with the Providence healthcare network focuses on AI systems that prioritize and manage patient communications automatically.

The startup ecosystem is buzzing with activity around generative AI: Ambience Healthcare is developing an app specifically for clinicians, Nabla offers an AI assistant for healthcare providers, and Abridge is enhancing medical documentation through analytics tools.

These efforts are drawing substantial investment, indicating strong confidence among health investors about the potential of generative AI in this field.

Mixed reactions from industry and consumers

The healthcare industry and its consumers show mixed feelings about the readiness and appropriateness of generative AI technologies. For example, a Deloitte survey revealed that only 53% of U.S. consumers see generative AI as a potential enhancer of healthcare accessibility and efficiency.

Andrew Borkowski from VA Sunshine Healthcare Network highlights that generative AI may not be mature enough for healthcare deployment, citing its current limitations in handling complex medical issues and emergencies due to outdated data and lack of human expertise.

Concerns over diagnostic accuracy and data handling

OpenAI’s ChatGPT has shown vulnerabilities, such as a high error rate in pediatric disease diagnosis, raising concerns about its practical utility in critical healthcare applications. Moreover, generative AI’s struggles with routine medical administrative tasks raise further reliability issues.

There’s also a broader ethical debate about the use of AI in sensitive sectors like healthcare, particularly concerning privacy, security, and data integrity. The medical community stresses the necessity of rigorous scientific testing and regulatory compliance before these technologies can be fully integrated into healthcare practices.

Potential for bias and stereotyping

One critical issue with generative AI in healthcare is its potential to perpetuate stereotypes. A study from Stanford Medicine demonstrated how generative AI could reinforce erroneous beliefs about biological differences among races, which could lead to misdiagnosis and unequal treatment.

Despite these challenges, generative AI holds promise for complex applications like medical imaging. Research shows AI could enhance diagnostic accuracy and reduce workflow inefficiencies in imaging departments.

The need for rigorous validation

As the technology advances, healthcare professionals emphasize the need for thorough validation studies to ensure generative AI’s efficacy and safety. The World Health Organization advocates for strong oversight and ethical guidelines to govern the deployment of AI technologies in healthcare.

In conclusion, while generative AI offers exciting prospects for transforming healthcare, significant hurdles related to accuracy, ethics, and patient safety must be addressed. The industry must proceed cautiously, ensuring that these powerful tools do indeed benefit all patients without compromising care quality or ethics.

#2024#Accessibility#ai#ai assistant#AI in healthcare#AI systems#Amazon#Analysis#Analytics#app#applications#artificial#Artificial Intelligence#AWS#azure#Bias#chatGPT#Cloud#Collaboration#communications#Community#Companies#compliance#computer#Computer vision#consumers#content#Critical Issue#data#data integrity

0 notes