#ETL (Extract

What sets Konnect Insights apart from other data orchestration and analysis tools available in the market for improving customer experiences in the aviation industry?

I can highlight some general factors that may set Konnect Insights apart from other data orchestration and analysis tools available in the market for improving customer experiences in the aviation industry. Keep in mind that the competitive landscape and product offerings may have evolved since my last knowledge update. Here are some potential differentiators:

Aviation Industry Expertise: Konnect Insights may offer specialized features and expertise tailored to the unique needs and challenges of the aviation industry, including airports, airlines, and related businesses.

Multi-Channel Data Integration: Konnect Insights may excel in its ability to integrate data from a wide range of sources, including social media, online platforms, offline locations within airports, and more. This comprehensive data collection can provide a holistic view of the customer journey.

Real-Time Monitoring: The platform may provide real-time monitoring and alerting capabilities, allowing airports to respond swiftly to emerging issues or trends and enhance customer satisfaction.

Customization: Konnect Insights may offer extensive customization options, allowing airports to tailor the solution to their specific needs, adapt to unique workflows, and focus on the most relevant KPIs.

Actionable Insights: The platform may be designed to provide actionable insights and recommendations, guiding airports on concrete steps to improve the customer experience and operational efficiency.

Competitor Benchmarking: Konnect Insights may offer benchmarking capabilities that allow airports to compare their performance to industry peers or competitors, helping them identify areas for differentiation.

Security and Compliance: Given the sensitive nature of data in the aviation industry, Konnect Insights may include robust security features and compliance measures to ensure data protection and adherence to industry regulations.

Scalability: The platform may be designed to scale effectively to accommodate the data needs of large and busy airports, ensuring it can handle high volumes of data and interactions.

Customer Support and Training: Konnect Insights may offer strong customer support, training, and consulting services to help airports maximize the value of the platform and implement best practices for customer experience improvement.

Integration Capabilities: It may provide seamless integration with existing airport systems, such as CRM, ERP, and database systems, to ensure data interoperability and process efficiency.

Historical Analysis: The platform may enable airports to conduct historical analysis to track the impact of improvements and initiatives over time, helping measure progress and refine strategies.

User-Friendly Interface: Konnect Insights may prioritize a user-friendly and intuitive interface, making it accessible to a wide range of airport staff without requiring extensive technical expertise.

It's important for airports and organizations in the aviation industry to thoroughly evaluate their specific needs and conduct a comparative analysis of available solutions to determine which one aligns best with their goals and requirements. Additionally, staying updated with the latest developments and customer feedback regarding Konnect Insights and other similar tools can provide valuable insights when making a decision.

#DataOrchestration#DataManagement#DataOps#DataIntegration#DataEngineering#DataPipeline#DataAutomation#DataWorkflow#ETL (Extract#Transform#Load)#DataIntegrationPlatform#BigData#CloudComputing#Analytics#DataScience#AI (Artificial Intelligence)#MachineLearning#IoT (Internet of Things)#DataGovernance#DataQuality#DataSecurity

What is a Data Pipeline? | Data Pipeline Explained in 60 Seconds

If you've been curious about data pipelines but don't know what they are, this video is for you! Data pipelines are a powerful way to manage and process data, and in this video, we'll explain them in 60 seconds.

We'll walk you through data pipeline architecture and share some common use cases for data pipelines.

By the end of this video, you'll have a better understanding of what a data pipeline is and how it can help with your data management needs!

#data pipelines#data#data science#data analyses#data integration#data replication#data virtualization#business intelligence#data mining#etl#extract transform load#machine learning#batch processing#what is data pipeline architecture#data pipeline#big data#data pipeline data science#data warehouse#what is data pipeline#batch vs stream processing#data pipeline explained#real time data processing

Seamless Data Integration with IntelliData Labs: ETL Excellence Redefined

Experience seamless data integration with IntelliData Labs' expert ETL services. Our specialized approach to extract, transform, and load (ETL) processes ensures the efficient transfer of data between systems, enabling real-time insights and informed decision-making. Whether you're migrating data, consolidating sources, or building data warehouses, our team delivers reliable ETL solutions tailored to your unique requirements, driving operational efficiency and accelerating business growth.

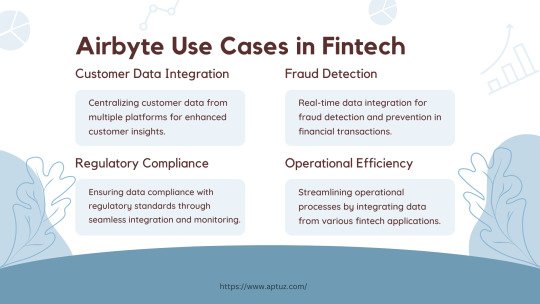

Explore the impactful use cases of Airbyte in the fintech industry, from centralizing customer data for enhanced insights to real-time fraud detection and ensuring regulatory compliance. Learn how Airbyte drives operational efficiency by streamlining data integration across various fintech applications, providing businesses with actionable insights and improved processes.

Know more at: https://bit.ly/3UbqGyT

#Fintech#data analytics#data engineering#technology#Airbyte#ETL#ELT#Cloud data#Data Integration#Data Transformation#Data management#Data extraction#Data Loading#Tech videos

At the core of our engineering services lies our commitment to ETL (Extract, Transform, Load) excellence. This involves seamlessly extracting data from diverse sources, including databases, cloud storage, streaming platforms, APIs, and IoT devices. Once the data is extracted, we meticulously transform it into analysis-ready data by cleaning, formatting, and enriching it to ensure accuracy and relevance. This transformation process also involves techniques such as data aggregation and normalization to improve the quality of the dataset. Finally, we efficiently load the transformed data into the target systems or databases, selecting the appropriate storage infrastructure and optimizing the loading process for speed and reliability. Our ETL excellence approach ensures that data is handled with precision and care, producing valuable insights that drive informed decision-making and business success.
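
To make the transformation stage more concrete, here is a minimal Python sketch of three of the techniques named above: cleaning (trimming values, dropping incomplete rows and duplicates), aggregation (totals per region), and min-max normalization. The field names `id`, `region`, and `amount` and the sample rows are hypothetical; this is an illustration, not any particular team's pipeline.

```python
from collections import defaultdict


def clean(rows):
    """Trim whitespace, drop rows missing an id or amount, and skip duplicate ids."""
    seen = set()
    cleaned = []
    for row in rows:
        rid = (row.get("id") or "").strip()
        amount = (row.get("amount") or "").strip()
        if not rid or not amount or rid in seen:
            continue
        seen.add(rid)
        cleaned.append({
            "id": rid,
            "region": (row.get("region") or "unknown").strip().lower(),
            "amount": float(amount),
        })
    return cleaned


def aggregate(rows):
    """Sum amounts per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


def min_max_normalize(totals):
    """Scale each region total into the range [0, 1]."""
    lo, hi = min(totals.values()), max(totals.values())
    span = (hi - lo) or 1.0  # avoid division by zero when all totals are equal
    return {k: (v - lo) / span for k, v in totals.items()}


raw = [
    {"id": "1", "region": " North ", "amount": "100"},
    {"id": "2", "region": "south", "amount": "50"},
    {"id": "2", "region": "south", "amount": "50"},  # duplicate id
    {"id": "3", "region": "north", "amount": ""},    # missing amount
    {"id": "4", "region": "South", "amount": "150"},
]
totals = aggregate(clean(raw))
print(totals)                     # {'north': 100.0, 'south': 200.0}
print(min_max_normalize(totals))  # {'north': 0.0, 'south': 1.0}
```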

Learn more about services at https://rtctek.com/data-engineering-services/

Contact us at https://rtctek.com/contact-us/

#etl#extract#transform#load#dataintegration#dataengineering#dataloading#dataextraction#datatransformation#datawarehousing#dataanalytics#bigdata#dataquality#dataarchitecture#datamigration

Let's cook (ETL, ELT and ET)!!!

Not long ago, we were all enthralled to see huge amounts of data transformed into beautiful graphs on which large companies base their business decisions. But has it ever occurred to you how this is done? I can hardly assume that everyone who comes across this article already has a clue about the terms ETL, ELT, and ET, or the methodologies and techniques involved…

The ETL process plays a vital role in business decision-making by surfacing insights from an organization's data. Streamline this process with these 8 ETL tools for data extraction, transformation, and loading.

ETL stands for "Extract, Transform and Load". ETL is the process of extracting data from different data sources, cleansing and organizing it, and finally loading it into a target data warehouse or unified data repository. Check out the top 21 ETL tools for 2023 here.
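
The three stages in that definition can be sketched end to end in a few lines of Python, assuming a small hypothetical CSV export as the source and an in-memory SQLite database standing in for the target warehouse. Real ETL tools add scheduling, error handling, and connectors, but the shape is the same.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a small CSV export from an operational system.
SOURCE_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,
3,carol,7.25
"""


def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: cleanse and organize (trim, title-case names, drop rows with no amount)."""
    out = []
    for r in rows:
        if not r["amount"].strip():
            continue  # cleansing: skip incomplete records
        out.append((int(r["order_id"]), r["customer"].strip().title(), float(r["amount"])))
    return out


def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stands in for the data warehouse
load(transform(extract(SOURCE_CSV)), conn)
result = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(result)  # [(1, 'Alice', 10.5), (3, 'Carol', 7.25)]
```

Keeping each stage in its own function means a source or target can be swapped without touching the other stages.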

ETL tools and their benefits

#etl software#etl data#etl testing#etl process#etl tool#etl in testing#performance testing#data testing#benefits of etl#ETL#extract transform load

next time I'm asked to tell someone a little bit about myself, I'm going to explain how when I was organizing my computer I found a file labeled “etl pipeline" on my desktop, which confused me – what might I have written about a data pipeline and titled so unhelpfully?? turns out I had used etl as an abbreviation for “enemies to lovers,” not “extract, transform, and load”

#i feel like this anecdote covers the important facts about me#fact one: data nerd#fact two: too into fandom#it was from long enough ago that I don't even remember if I'd intended the filename to be a joke#oh computer

Demystifying Data Engineering: The Backbone of Modern Analytics

Hey friends! Check out this in-depth blog on #DataEngineering that explores its role in building robust data pipelines, ensuring data quality, and optimizing performance. Discover emerging trends like #cloudcomputing, #realtimeprocessing, and #DataOps.

In the era of big data, data engineering has emerged as a critical discipline that underpins the success of data-driven organizations. Data engineering encompasses the design, construction, and maintenance of the infrastructure and systems required to extract, transform, and load (ETL) data, making it accessible and usable for analytics and decision-making. This blog aims to provide an in-depth…

#artificial intelligence#big data#Cloud Computing#data analytics#data architecture#data catalog#data democratization#data engineering#data engineering best practices#data governance#data infrastructure#data ingestion#data integration#data lifecycle#data migration#data modeling#data pipelines#data privacy#data processing#data quality#data science#data security#data storage#data streaming#data transformation#data warehouse#DataOps#Distributed Computing#ETL#machine learning

How to Become a Data Analyst in 2022

In the past decade, data has become critically important. Organizations are investing heavily to get the maximum value out of the information in their databases. The need for this has grown since data analytics revolutionized trade. Data analytics has changed the way senior management and business owners see their data. The insights gained from evaluating and analyzing data, and presenting them in a visually appealing format or report, have changed how businesses operate and how they run campaigns to push sales and improve brand goodwill.

How to become a Data Analyst With No Experience?

Data analytics is a path of untold possibilities and is expected to grow larger than ever before. The digitization of records has lowered operating costs for companies. Digitized data is stored in large databases, either on outsourced infrastructure or on cloud servers, whichever fits the needs of the business or startup. Data analytics helps surface insights that might otherwise stay hidden inside that data.

The future of data analytics, in general, is democratization. We have come a long way from a time when only statisticians and number crunchers could work with data before handing it over to analysts. The term buzzing around conference rooms is self-service data analytics. Tools like Power BI and Tableau make it easier to answer questions customers may not even know they have, and they make analysis accessible to almost anybody. These tools do a great job of integrating many features that require no coding.

Real-time decision-making based on real-time data becomes possible with some of these advanced data analytics tools, which also help users bring artificial intelligence and machine learning into their analysis. Data analytics lets practitioners take complex problems and break them down so that business users can understand them, while keeping things simple. Power BI and Tableau, for example, can drive insights from almost any basic dataset extracted from a database.

Everything around us is data, and we just need ways to harness it, understand it, learn from it, and make good choices based on data analytics. It is here to stay, and the next big question is how to implement it so that it lasts and continues to expand.

A Step-by-Step Guide to Becoming a Data Analyst

There are a few basic steps anyone can follow to start a career as a data analyst:

Get a bachelor's degree in math or computer science, with an emphasis on statistical and analytical skills.

To become a data analyst without a degree, the easiest route is to master the key data analytics skills.

Opt for a certification course with Analytics Training Hub to start a data analyst learning path.

Get an entry-level job as a data analyst.

Earn a Master’s in Data Analytics.

What does a Data Analyst do?

The work of a data analyst involves multiple steps:

Discover the problem or determine what the owner needs.

Do they need a dashboard, do they need reports, do they need to do some type of analysis on their product and give some type of recommendation?

Once the analyst understands what is needed, they have to create a plan of action.

The plan should cover when the data will arrive and where it is coming from.

Often it is the analyst's job to communicate that to the team.

The next step is to collect the data.

Data can come from many different sources, whether that is an SQL backup, a flat file, or an API.

After extraction, the analyst should be able to get all that data into one place.
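
As a rough illustration of getting data from different sources into one place, the sketch below reads a flat file and a JSON payload such as an API might return, and maps both onto one shared record shape. The inputs and field names (`customer_id`, `fullName`, and so on) are hypothetical, and the API response is a hard-coded string rather than a real HTTP call.

```python
import csv
import io
import json

# Hypothetical inputs: a flat-file export and a JSON payload such as an API might return.
FLAT_FILE = "customer_id,name\nC1,Ana\nC2,Ben\n"
API_RESPONSE = '{"customers": [{"id": "C3", "fullName": "Cy"}]}'


def from_flat_file(text):
    """Read CSV rows and tag each one with its source."""
    return [
        {"customer_id": r["customer_id"], "name": r["name"], "source": "flat_file"}
        for r in csv.DictReader(io.StringIO(text))
    ]


def from_api(payload):
    """Parse the JSON payload and map the API's field names onto the shared schema."""
    data = json.loads(payload)
    return [
        {"customer_id": c["id"], "name": c["fullName"], "source": "api"}
        for c in data["customers"]
    ]


combined = from_flat_file(FLAT_FILE) + from_api(API_RESPONSE)
for row in combined:
    print(row)
```

Once every source is mapped to the same fields, the later transform and load steps only have to handle one record shape.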

Then, as the analyst, you would work with your programmers to create an extract, transform, and load (ETL) process.

You work with the programmer to get the data, and then together you create business rules that transform it into the shape the analyst wants it to have in your system.

Then the data is loaded; this whole sequence is also known as creating an ETL pipeline.

If data is going to come in weekly or monthly, you wouldn't want to repeat this process manually every single time.

Creating a pipeline means building an automated process that brings in the data the same way every time, which saves you a lot of time.
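
A scheduled pipeline usually needs to be safe to run again and again. One common approach, shown here as a sketch, is a watermark: each run loads only rows newer than the latest date already in the target. The `sales` table, its columns, and the sample batches are hypothetical, and SQLite stands in for the real destination. This simple version assumes rows never arrive late for a date that has already been loaded.

```python
import sqlite3


def run_pipeline(conn, source_rows):
    """One scheduled run: load only rows dated after the newest row already in the target."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)"
    )
    (watermark,) = conn.execute("SELECT MAX(sale_date) FROM sales").fetchone()
    watermark = watermark or ""  # empty table: every ISO date compares greater than ""
    new_rows = [row for row in source_rows if row[1] > watermark]
    # INSERT OR REPLACE avoids primary-key errors if a row id is ever resent.
    conn.executemany("INSERT OR REPLACE INTO sales VALUES (?, ?, ?)", new_rows)
    conn.commit()
    return len(new_rows)


conn = sqlite3.connect(":memory:")
week1 = [(1, "2024-01-01", 20.0), (2, "2024-01-03", 35.0)]
week2 = week1 + [(3, "2024-01-08", 15.0)]  # the source still contains last week's rows
counts = [run_pipeline(conn, week1), run_pipeline(conn, week2), run_pipeline(conn, week2)]
print(counts)  # [2, 1, 0]
```

The third run loads nothing, which is what makes it safe for a scheduler to rerun the job.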

The last part of this stage is aggregating your data, which means standardizing it and putting it all together instead of keeping it as separate sources.

The next step is to clean the data, because data is always messy.

Sometimes a source uses three different date formats, people's names are capitalized for no reason, or somebody forgets to add the customer ID, so you can't map the customer in your system.

The analyst needs to do all this because it makes the data much more usable for later processes. Part of this work is normalizing and standardizing the data so that, when you build visualizations or reports later, all the data is consistent and can be used wherever you need it.
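
The cleaning problems described above (mixed date formats, inconsistent capitalization, missing customer IDs) can be handled with a small amount of code. This sketch assumes three specific date formats, treats `05/03/2023` as day/month/year, and simply drops records without a customer ID; a real pipeline might route those to a review queue instead. The field names are hypothetical.

```python
from datetime import datetime

# Assumed input formats; a real feed may use others.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def parse_date(value):
    """Try each known format and return an ISO-8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def clean_record(rec):
    """Standardize one record; return None if it cannot be mapped to a customer."""
    if not rec.get("customer_id"):
        return None  # no customer ID: cannot be matched in the system
    return {
        "customer_id": rec["customer_id"].strip().upper(),
        "name": " ".join(part.capitalize() for part in rec["name"].split()),
        "order_date": parse_date(rec["order_date"]),
    }


raw = [
    {"customer_id": "c-101", "name": "mARIA  lopez", "order_date": "2023-03-05"},
    {"customer_id": "c-102", "name": "JOHN SMITH", "order_date": "05/03/2023"},
    {"customer_id": "c-103", "name": "ann lee", "order_date": "Mar 05, 2023"},
    {"customer_id": "", "name": "Bo Chen", "order_date": "2023-03-06"},
]
cleaned = [r for r in (clean_record(x) for x in raw) if r is not None]
print(cleaned)
```

Note that `str.capitalize` is a blunt tool: it would turn a name like "McDonald" into "Mcdonald", so real name standardization often needs exceptions.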

The next thing the analyst needs to do is set up the data for reports and visualizations, often by creating views.

A view lets the analyst combine several tables into one and then choose the subset of data to use for reports and visualizations. Each view may need to be formatted differently depending on how it will be used in the report or visualization.
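
Here is a minimal example of a view, using SQLite through Python's built-in `sqlite3` module. The `customers` and `orders` tables and the `shipped_orders_report` view are hypothetical; the point is that the view joins two tables and exposes only the rows and columns a report needs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id TEXT, amount REAL, status TEXT);

INSERT INTO customers VALUES ('C1', 'Ana', 'North'), ('C2', 'Ben', 'South');
INSERT INTO orders VALUES
    (1, 'C1', 120.0, 'shipped'),
    (2, 'C2', 80.0, 'cancelled'),
    (3, 'C1', 40.0, 'shipped');

-- The view joins two tables and keeps only the columns and rows the report needs.
CREATE VIEW shipped_orders_report AS
SELECT c.region, c.name, o.order_id, o.amount
FROM orders AS o
JOIN customers AS c ON c.customer_id = o.customer_id
WHERE o.status = 'shipped';
""")

rows = conn.execute("SELECT * FROM shipped_orders_report ORDER BY order_id").fetchall()
print(rows)  # [('North', 'Ana', 1, 120.0), ('North', 'Ana', 3, 40.0)]
```

Reports can then query the view like a table, and a different report can get its own view with a different subset or format.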

Last but not least is creating the reports and automating that process, so that if the owner wants a report every week or every month, it can be generated from a stored procedure or a scheduled job that automatically sends it out with the latest data.
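
SQLite has no stored procedures, so in this sketch a plain Python function plays that role: it rebuilds a monthly sales report as CSV text from whatever data is currently in the table. The `sales` table and sample rows are hypothetical; in practice a scheduler such as cron would call the function and e-mail or upload the result.

```python
import csv
import io
import sqlite3


def monthly_sales_report(conn):
    """Rebuild the report from the current contents of the sales table."""
    rows = conn.execute(
        "SELECT strftime('%Y-%m', sale_date) AS month, SUM(amount) AS total "
        "FROM sales GROUP BY month ORDER BY month"
    ).fetchall()
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["month", "total"])
    writer.writerows(rows)
    return buf.getvalue()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, "2024-01-15", 20.0), (2, "2024-01-20", 35.0), (3, "2024-02-03", 15.0)],
)
report = monthly_sales_report(conn)
print(report)
```

Because the report is computed at call time, each scheduled run automatically picks up the latest data.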

The analyst can also connect that data to a data visualization tool like Tableau or Power BI, or work with it in Python or R.

What is the future of the Data Analyst job?

According to leading experts in the data industry, the data analyst role holds extremely promising prospects for the next decade or two. The data analyst job is a stepping stone and may lead to any of the following roles, depending on your interests:

Data engineers:

A data engineer creates the platform and data structures in which all the data from users is captured, for example, what items they buy, what is currently in their cart, and what is on their wish list. Data engineers have to make sure the captured data is stored in a way that is not only well organized but also easily retrievable. They should be comfortable working with every data source, use ETL queries to collate data from multiple sources, and then organize all of this data in data warehouses or databases so that colleagues in the company can make the best use of it. To become a data engineer, you need to learn languages and tools such as Python, Java, SQL, Hadoop, Spark, Ruby, and C++. Not all of these are mandatory; requirements for the data engineer role vary from company to company.

Business Analysts:

Business analysts are expected to draw insights from data that directly impact business decisions. Business analysts are involved in day-to-day business activities, and they are expected to do many ad hoc analyses. For example, in an e-commerce company, a business analyst might help the marketing team identify the customer segments to target, find the best time to market a certain product, or work out why the last marketing campaign failed and how to avoid such mistakes in the future. Hence, a good understanding of business data and statistics is essential for a business analyst.

The tools and languages you would most commonly use as a business analyst are Excel, SQL, Power BI, and Tableau. The business analyst role may also be known as data visualizer or business intelligence professional, responsible for creating weekly dashboards that inform management about weekly sales of different products, the average delivery time, the number of daily order cancellations, and so on.

Data scientists:

A data scientist is a rare gem who uses the data already existing in the organization to design business-oriented machine learning models. As a starting point, a data scientist can go through the company's available data to look at buying patterns, identify similar items on the website, and then create algorithms around them so that the website can automatically recommend products to users based on their navigation and purchase history. This solution has to be effective enough to predict future purchases in real time for website visitors.
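
One simple way to build the kind of "similar items" recommendation described above is item-to-item co-occurrence: count how often two products appear in the same customer's purchases, then recommend the most frequent companions. The sketch below uses hypothetical baskets and is only one basic technique, not necessarily what any particular website uses; production systems typically use far more sophisticated models.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(baskets):
    """Count, for every item, how often each other item was bought alongside it."""
    co = defaultdict(Counter)
    for basket in baskets:
        unique_items = list(dict.fromkeys(basket))  # dedupe while keeping order
        for a, b in permutations(unique_items, 2):
            co[a][b] += 1
    return co


def recommend(co, item, k=2):
    """Top-k companions of `item`; ties are broken alphabetically for stable output."""
    ranked = sorted(co.get(item, {}).items(), key=lambda kv: (-kv[1], kv[0]))
    return [other for other, _ in ranked[:k]]


# Hypothetical purchase history: one list per customer order.
baskets = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["phone", "phone case"],
    ["laptop", "laptop bag"],
    ["laptop", "mouse", "usb hub"],
]
co = build_cooccurrence(baskets)
print(recommend(co, "laptop"))  # ['mouse', 'laptop bag']
print(recommend(co, "phone"))   # ['phone case']
```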

Data analysts are expected to perform many unplanned analyses that facilitate decision-making within an organization. Data scientists, on the other hand, not only perform ad hoc analyses and create prototypes but also build data products that make intelligent decisions by themselves, and this is where machine learning becomes critical. For example, the suggestions you get after buying a particular item, or based on the items on your wish list, come from machine learning models built by data scientists.

The requisite skills for a data scientist include knowledge of algorithms, statistics, mathematics, machine learning, and programming languages such as Python and C. They should also understand the business and have the aptitude to frame the right questions and find the answers in the available data. Finally, a data scientist should be able to communicate results effectively to team members and all involved stakeholders.

Salary of a Data Analyst:

The salary of a data analyst may differ between organizations. However, a senior data analyst with the right skills and software knowledge may command a high salary.

The average salary for an entry-level data analyst may start from INR 2.9 lakhs per annum.

The average salary for a mid-level data analyst may start from INR 4.5 lakhs per annum.

The average salary for a senior-level data analyst may start from INR 9.5 lakhs per annum.

Some useful links:

To know more about data analysts, visit Analyticstraininghub.com

To know more about our Data Analyst certification courses, visit Analyticstraininghub.com

Don't miss our official YouTube channel: Analyticstraininghub.com

#data analyst course#data analysis#datavisualization#datamanagement#data analyst jobs#data analytics course in delhi

Using the ETL Tool in AWS Database Migration

AWS (Amazon Web Services) is a cloud platform that provides infrastructure, platform, and packaged software as a service. One of its most important services is AWS Database Migration Service (AWS DMS), which migrates data between relational databases, data warehouses, and NoSQL databases, and ETL in AWS is well suited to this kind of migration.

To understand why ETL in AWS is preferred for migrating databases, one should know how the ETL tool works. ETL is short for Extract, Transform, Load. Through these three steps, data from several sources is combined into a centralized data warehouse. In the ETL in AWS process, data is extracted from a source, transformed into a format that matches the structure of the target database, and then loaded into a data warehouse or storage repository.

The Benefits of ETL in AWS

There are several benefits of ETL in AWS for database migration.

· Organizations need not install and configure additional drivers and applications or change the structure of the source database when migrating with ETL in AWS. The process is initiated directly through the AWS Management Console, after which any change or update is replicated to the target database through the Change Data Capture (CDC) feature.

· ETL in AWS applies changes that occur in the source database to the target database at regular intervals, which keeps the source and target databases in sync. Most importantly, migration can take place while the source database remains fully operational. Hence, there is no system shutdown, a big help for large data-driven enterprises where any downtime upsets operating schedules.
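
Conceptually, change data capture means recording each insert, update, and delete on the source and replaying those changes on the target. The Python sketch below shows that replay step with dictionaries standing in for tables. The event format (`op`, `key`, `row`) is a hypothetical one chosen for illustration; it is not the format AWS DMS uses internally.

```python
def apply_changes(target, events):
    """Replay captured change events on the target table (a dict keyed by primary key)."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            target[key] = dict(event["row"])  # copy so the target does not share state with the event
        elif op == "delete":
            target.pop(key, None)  # ignore deletes for rows that are already gone
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return target


# Initial full load: the target starts as a copy of the source.
source = {1: {"name": "Ana", "tier": "silver"}, 2: {"name": "Ben", "tier": "gold"}}
target = {k: dict(v) for k, v in source.items()}

# Changes made on the source while it stays online, captured as events.
events = [
    {"op": "update", "key": 1, "row": {"name": "Ana", "tier": "gold"}},
    {"op": "insert", "key": 3, "row": {"name": "Cy", "tier": "silver"}},
    {"op": "delete", "key": 2},
]
apply_changes(target, events)
print(target)  # {1: {'name': 'Ana', 'tier': 'gold'}, 3: {'name': 'Cy', 'tier': 'silver'}}
```

Only the changes travel to the target after the initial load, which is why the source can keep serving traffic during the migration.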

· Most common and popular databases in use today are supported by AWS, so ETL in AWS can handle a wide range of migrations regardless of database structure, including both homogeneous and heterogeneous migrations. In a homogeneous migration, the database engines, data structures, data types and code, and schema structures of the source and target databases are similar; in a heterogeneous migration, they differ.

· ETL in AWS is also widely used to migrate on-premises databases to Amazon RDS, Amazon EC2, or Amazon Aurora, and to move databases running on EC2 to RDS or vice versa. Beyond these, AWS DMS can also migrate data between SQL, text-based, and NoSQL data stores.

High-Performing AWS Glue

There are various tools for ETL in AWS, but the highest-performing one is AWS Glue. It is a fully managed ETL service that simplifies preparing data for analysis. The tool is user-friendly and can be up and running with only a few clicks in the AWS Management Console. A key advantage is that AWS Glue discovers data automatically and stores the associated metadata in the AWS Glue Data Catalog, where the data can then be searched and queried right away.
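
To give a feel for what automatic discovery involves, here is a toy Python sketch that infers a column-to-type schema from a sample of CSV data and records it in a dictionary acting as a catalog. It is not how AWS Glue crawlers are implemented; the type names, the `catalog` dictionary, and the sample flight data are assumptions for illustration.

```python
import csv
import io


def infer_type(values):
    """Return the narrowest type that fits every non-empty value."""
    non_empty = [v for v in values if v and v.strip()]
    if not non_empty:
        return "string"
    for type_name, cast in (("bigint", int), ("double", float)):
        try:
            for v in non_empty:
                cast(v)
            return type_name  # every value parsed with this cast
        except ValueError:
            continue  # try the next, wider type
    return "string"


def infer_schema(csv_text):
    """Infer a column -> type mapping from a CSV sample (first row is the header)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    return {col: infer_type([row[col] for row in rows]) for col in reader.fieldnames or []}


catalog = {}  # stands in for a metadata catalog: table name -> schema
sample = "flight_id,passengers,load_factor,origin\nAI101,180,0.86,DEL\nAI102,150,0.72,BOM\n"
catalog["flights"] = infer_schema(sample)
print(catalog)
# {'flights': {'flight_id': 'string', 'passengers': 'bigint', 'load_factor': 'double', 'origin': 'string'}}
```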

Explore Airbyte, the open-source data integration platform tailored for fintech firms. Streamline your integration process with its extensive connector library, real-time capabilities, user-friendly interface, and vibrant community support. Elevate your data workflows effortlessly with Airbyte.

Know more at: https://bit.ly/3w8qHf1

#Airbyte#fintech#Technology#Data transformation#data Integration#data extraction#ETL#ELT#Data loading#Cloud data#Data management#Clound Data Management#Trending

Empower your business's expansion by utilizing our Data Engineering Services. We specialize in optimizing and managing your data, ensuring it's readily available for informed decision-making, ultimately fueling your company's growth and success.

Learn more about our services at Data Engineering Services - Round The Clock Technologies (rtctek.com).

#dataengineering#bigdata#datapipeline#dataintegration#etl (extract#transform#load)#dataprocessing#dataarchitecture#datawarehousing#dataops#dataquality#dataanalytics#datascience#datamanagement#datainfrastructure#database#datatechnology#streamingdata
