#reverse centaurs

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.
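To see why the arithmetic is so unforgiving, here's a minimal sketch of the break-even sum. Every number in it is a made-up placeholder: the salaries, the AI cost and the `net_savings` and `break_even_reviewers` helpers are all hypothetical, not figures from any real coding shop. The only point is that the savings go negative as soon as the reviewing headcount creeps up:

```python
def net_savings(replaced_salary, ai_cost, reviewers, reviewer_salary):
    """Yearly savings from swapping one worker for an AI plus human reviewers."""
    return replaced_salary - (ai_cost + reviewers * reviewer_salary)


def break_even_reviewers(replaced_salary, ai_cost, reviewer_salary):
    """Reviewer headcount at which the savings hit zero."""
    return (replaced_salary - ai_cost) / reviewer_salary


# Hypothetical placeholder figures, chosen only to make the sums easy to follow.
SALARY = 120_000   # the coder being replaced, and also each reviewer
AI_COST = 20_000   # yearly cost of the AI tool

quarter_reviewer = net_savings(SALARY, AI_COST, 0.25, SALARY)
two_reviewers = net_savings(SALARY, AI_COST, 2, SALARY)
limit = break_even_reviewers(SALARY, AI_COST, SALARY)

print(quarter_reviewer, two_reviewers, round(limit, 2))
```

With these invented figures, the scheme stops saving money once each replaced coder needs more than about five-sixths of a reviewer, which is why the reviewer has to skim rather than read closely.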

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaratized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
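Here's a toy version of next-word guessing, offered as a sketch rather than a description of how any real chatbot is built. It counts which word follows which in a tiny invented corpus (the sentences and the `train_bigrams` and `guess_next` helpers are assumptions made up for illustration) and always returns the commonest follower:

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def guess_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never followed."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Invented three-sentence corpus: toasters are commoner than VCRs.
corpus = [
    "sandwich stuck in toaster",
    "sandwich stuck in toaster",
    "sandwich stuck in vcr",
]
counts = train_bigrams(corpus)
print(guess_next(counts, "in"))
```

The guesser finishes "sandwich stuck in" with "toaster" every time, because that's the likeliest ending in its data, whether or not your sandwich is actually in the VCR.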

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.
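A caricature of that failure mode, as a sketch under loud assumptions: the tool names, the package names and the `guess_cli_package` helper below are all invented, and this is not how a language model works internally. It only shows what happens when a guesser applies the statistically normal naming pattern to a case where reality was irregular:

```python
from collections import Counter


def guess_cli_package(tool, known):
    """Guess a package name by applying the most common naming pattern seen so far."""
    patterns = Counter(
        pkg.replace(name, "{name}") for name, pkg in known.items()
    )
    template = patterns.most_common(1)[0][0]
    return template.format(name=tool)


# Hypothetical tools and their (hypothetical) real package names.
known = {
    "alpha": "alpha-cli",
    "beta": "beta-cli",
    "gamma": "gamma-cli",
    "delta": "delta_tools",  # one human inconsistency already in the data
}
real_names = {"epsilon": "epsilon_hub"}  # the irregular truth

guess = guess_cli_package("epsilon", known)
print(guess, guess == real_names["epsilon"])
```

The guess follows the convention perfectly, which is exactly why a reviewer skimming the output has no reason to doubt it.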

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

713 notes

Text

More centaurs!!

#kamaetedraws#svsss#bingliu#luo binghe#liu qingge#centaurs#idk…. I just like horses#I should draw sqq as a centaur but in my head he’s a researcher#realized after committing that this is basically the same pose set as my last centaur image….#but reversed…#Binghe asking for kissies this time

184 notes

Text

shizuai as blossica :) my two fav ships

#i should probably do blossica in mmj outfit too but idk. i dont wanna take jessicas charm away (her being a centaur)#anyways! yeah. yeah#project sekai#prsk#shizuai#shizuku hinomori#airi momoi#reverse 1999#r1999#blossica

160 notes

Text

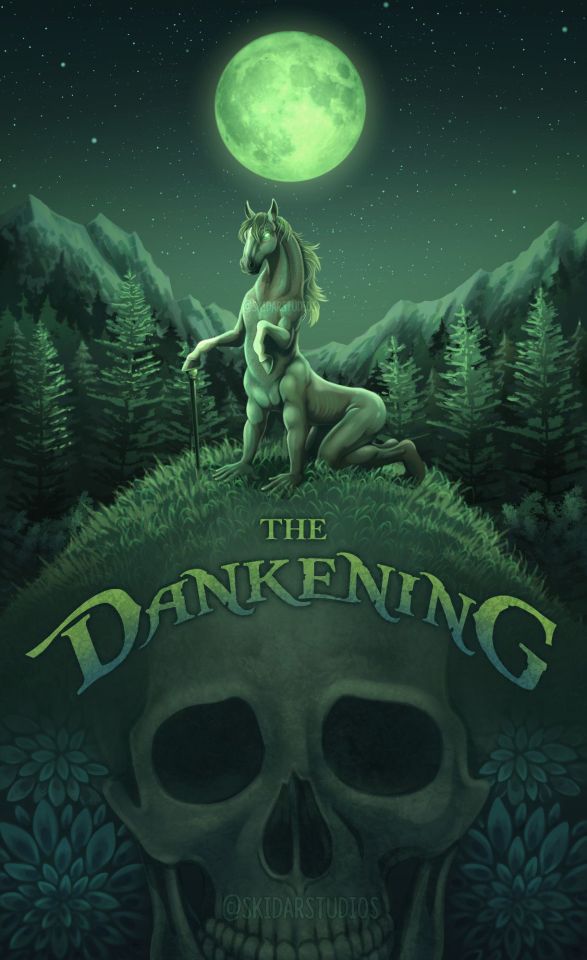

There is so much chaotic bar dnd shenanigans that led to this that I wouldn't know where to begin but here's a fun poster I made for my bar dnd crew of The Dankening. A 6 week ordeal of all different tables of people attempting to thwart an evil reverse-centaur, Dank Dave, from taking over the world.

#skidar art#skidar studios#the dankening#dnd#dungeons and dragons#dank dave#reverse centaur#bellinghamlet#orcus#god of undeath

57 notes

Text

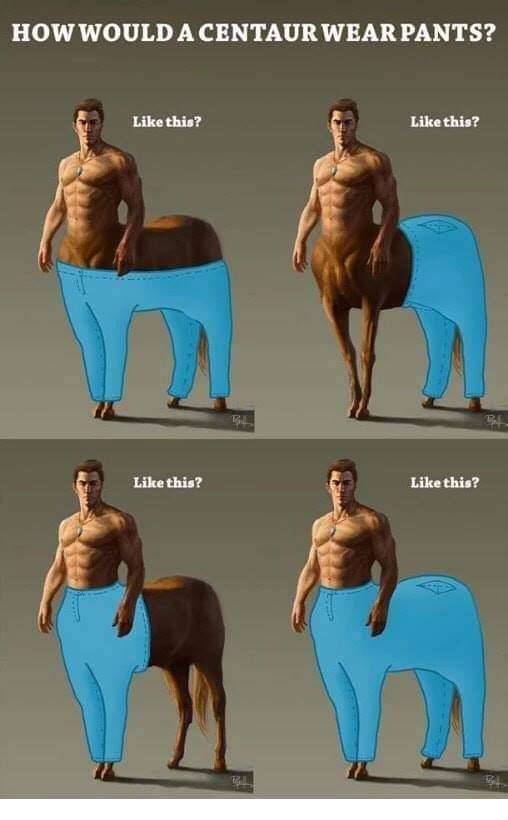

I came across a good thread about centaurs and reverse centaurs

155 notes

Text

he’s a horse girl

17 notes

Text

ONE OF THE WINNERS OF THE #2SENTENCEHORROR SENDS ME RN

#reverse 1999#certified storm moments#im a little sad the one submission about human centaur jessica wasn't here but alas

19 notes

Photo

Centaurworld reverse AU

#amazing how great the show would've been if they had just had these four in it#centaurworld#reverse au#nowhere king#elk#rider#horse#centaur#horsetaur#fanart#princess#mysterious woman

67 notes

Text

Idk WHERE my sister found this but thank her for this post

7 notes

Text

Kennedy family photo

#Ryoukios art#fanart#re#resident evil#leon s kennedy#centaur leon#I am in fact just copy and pasting the family from Léon: The Professional#into resident evil but that’s what happens when you base the fucker’s character on the movie#Also I really love the idea that Leon had a sister who was just reverse of him#he keeps this photo in his wallet btw

4 notes

Text

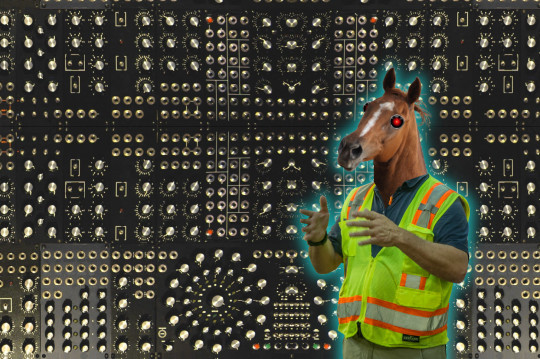

Gig apps trap reverse centaurs in Skinner boxes

Enshittification is the process by which digital platforms devour themselves: first they dangle goodies in front of end users. Once users are locked in, the goodies are taken away and dangled before business customers who supply goods to the users. Once those business customers are stuck on the platform, the goodies are clawed away and showered on the platform’s shareholders:

https://pluralistic.net/2023/01/21/potemkin-ai/#hey-guys

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

Enshittification isn’t just another way of saying “fraud” or “price gouging” or “wage theft.” Enshittification is intrinsically digital, because moving all those goodies around requires the flexibility that only comes with a digital business. Jeff Bezos, grocer, can’t rapidly change the price of eggs at Whole Foods without an army of kids with pricing guns on roller-skates. Jeff Bezos, grocer, can change the price of eggs on Amazon Fresh just by twiddling a knob on the service’s back-end.

Twiddling is the key to enshittification: rapidly adjusting prices, conditions and offers. As with any shell game, the quickness of the hand deceives the eye. Tech monopolists aren’t smarter than the Gilded Age sociopaths who monopolized rail or coal — they use the same tricks as those monsters of history, but they do them faster and with computers:

https://doctorow.medium.com/twiddler-1b5c9690cce6

If Rockefeller wanted to crush a freight company, he couldn’t just click a mouse and lay down a pipeline that ran on the same route, and then click another mouse to make it go away when he was done. When Bezos wanted to bankrupt Diapers.com, a company that refused to sell itself to Amazon, he just moved a slider so that diapers on Amazon were being sold below cost. Amazon lost $100m over three months, Diapers.com went bankrupt, and every investor learned that competing with Amazon was a losing bet:

https://slate.com/technology/2013/10/amazon-book-how-jeff-bezos-went-thermonuclear-on-diapers-com.html

That’s the power of twiddling — but twiddling cuts both ways. The same flexibility that digital businesses enjoy is hypothetically available to workers and users. The airlines pioneered twiddling ticket prices, and that naturally gave rise to countertwiddling, in the form of comparison shopping sites that scraped the airlines’ sites to predict when tickets would be cheapest:

https://pluralistic.net/2023/02/27/knob-jockeys/#bros-be-twiddlin

The airlines — like all abusive businesses — refused to tolerate this. They were allowed to touch their knobs as much as they wanted — indeed, they couldn’t stop touching those knobs — but when we tried to twiddle back, that was “felony contempt of business model,” and the airlines sued:

https://www.cnbc.com/2014/12/30/airline-sues-man-for-founding-a-cheap-flights-website.html

And sued:

https://www.nytimes.com/2018/01/06/business/southwest-airlines-lawsuit-prices.html

Platforms don’t just hate it when end-users twiddle back — if anything they are even more aggressive when their business-users dare to twiddle. Take Para, an app that Doordash drivers used to get a peek at the wages offered for jobs before they accepted them — something that Doordash hid from its workers. Doordash ruthlessly attacked Para, saying that by letting drivers know how much they’d earn before they did the work, Para was violating the law:

https://www.eff.org/deeplinks/2021/08/tech-rights-are-workers-rights-doordash-edition

Which law? Well, take your pick. The modern meaning of “IP” is “any law that lets me use the law to control my competitors, competition or customers.” Platforms use a mix of anticircumvention law, patent, copyright, contract, cybersecurity and other legal systems to weave together a thicket of rules that allow them to shut down rivals for their Felony Contempt of Business Model:

https://locusmag.com/2020/09/cory-doctorow-ip/

Enshittification relies on unlimited twiddling (by platforms), and a general prohibition on countertwiddling (by platform users). Enshittification is a form of fishing, in which bait is dangled before different groups of users and then nimbly withdrawn when they lunge for it. Twiddling puts the suppleness into the enshittifier’s fishing-rod, and a ban on countertwiddling weighs down platform users so they’re always a bit too slow to catch the bait.

Nowhere do we see twiddling’s impact more than in the “gig economy,” where workers are misclassified as independent contractors and put to work for an app that scripts their every move to the finest degree. When an app is your boss, you work for an employer who docks your pay for violating rules that you aren’t allowed to know — and where your attempts to learn those rules are constantly frustrated by the endless back-end twiddling that changes the rules faster than you can learn them.

As with every question of technology, the issue isn’t twiddling per se — it’s who does the twiddling and who gets twiddled. A worker armed with digital tools can play gig work employers off each other and force them to bid up the price of their labor; they can form co-ops with other workers that auto-refuse jobs that don’t pay enough, and use digital tools to organize to shift power from bosses to workers:

https://pluralistic.net/2022/12/02/not-what-it-does/#who-it-does-it-to

Take “reverse centaurs.” In AI research, a “centaur” is a human assisted by a machine that does more than either could do on their own. For example, a chess master and a chess program can play a better game together than either could play separately. A reverse centaur is a machine assisted by a human, where the machine is in charge and the human is a meat-puppet.

Think of Amazon warehouse workers wearing haptic location-aware wristbands that buzz at them continuously dictating where their hands must be; or Amazon drivers whose eye-movements are continuously tracked in order to penalize drivers who look in the “wrong” direction:

https://pluralistic.net/2021/02/17/reverse-centaur/#reverse-centaur

The difference between a centaur and a reverse centaur is the difference between a machine that makes your life better and a machine that makes your life worse so that your boss gets richer. Reverse centaurism is the 21st Century’s answer to Taylorism, the pseudoscience that saw white-coated “experts” subject workers to humiliating choreography down to the smallest movement of your fingertip:

https://pluralistic.net/2022/08/21/great-taylors-ghost/#solidarity-or-bust

While reverse centaurism was born in warehouses and other company-owned facilities, gig work let it make the leap into workers’ homes and cars. The 21st century has seen a return to the cottage industry — a form of production that once saw workers labor far from their bosses and thus beyond their control — but shriven of the autonomy and dignity that working from home once afforded:

https://doctorow.medium.com/gig-work-is-the-opposite-of-steampunk-463e2730ef0d

The rise and rise of bossware — which allows for remote surveillance of workers in their homes and cars — has turned “work from home” into “live at work.” Reverse centaurs can now be chickenized — a term from labor economics that describes how poultry farmers, who sell their birds to one of three vast poultry processors who have divided up the country like the Pope dividing up the “New World,” are uniquely exploited:

https://onezero.medium.com/revenge-of-the-chickenized-reverse-centaurs-b2e8d5cda826

A chickenized reverse centaur has it rough: they must pay for the machines they use to make money for their bosses, they must obey the orders of the app that controls their work, and they are denied any of the protections that a traditional worker might enjoy, even as they are prohibited from deploying digital self-help measures that let them twiddle back to bargain for a better wage.

All of this sets the stage for a phenomenon called algorithmic wage discrimination, in which two workers doing the same job under the same conditions will see radically different payouts for that work. These payouts are continuously tweaked in the background by an algorithm that tries to predict the minimum sum a worker will accept to remain available without payment, to ensure sufficient workers to pick up jobs as they arise.

This phenomenon, along with proposed policy and labor solutions to it, is expertly analyzed in “On Algorithmic Wage Discrimination,” a superb paper by UC Law San Francisco’s Veena Dubal:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4331080

Dubal uses empirical data and ethnographic accounts from Uber drivers and other gig workers to explain how endless, self-directed twiddling allows gig companies to pay workers less and pay themselves more. As the LA Times article on Dubal’s research explains, the goal of the payment algorithm is to guess how often a given driver needs to receive fair compensation in order to keep them driving when the payments are unfair:

https://www.latimes.com/business/technology/story/2023-04-11/algorithmic-wage-discrimination

The algorithm combines nonconsensual dossiers compiled on individual drivers with population-scale data to seek an equilibrium between keeping drivers waiting, unpaid, for a job; and how much a driver needs to be paid for an individual job, in order to keep that driver from clocking out and doing something else.

Here’s how that works. Sergio Avedian, a writer for The Rideshare Guy, ran an experiment with two brothers who both drove for Uber; one drove a Tesla and drove intermittently, the other brother rented a hybrid sedan and drove frequently. Sitting side-by-side with the brothers, Avedian showed how the brother with the Tesla was offered more for every trip:

https://www.youtube.com/watch?v=UADTiL3S67I
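The pattern in that experiment can be caricatured in a few lines. This is emphatically not Uber’s algorithm, which isn’t public. The `toy_offer` function, the discount cap and every number in it are invented for illustration, on the assumption that the only signal is how many hours a driver already works:

```python
def toy_offer(base_fare, weekly_hours, max_discount=0.3, full_time_hours=40):
    """Hypothetical rule: the more hours a driver works, the lower the offer."""
    dependence = min(weekly_hours / full_time_hours, 1.0)
    return round(base_fare * (1 - max_discount * dependence), 2)


# Two invented drivers offered the same trip, side by side.
intermittent = toy_offer(20.00, weekly_hours=8)
frequent = toy_offer(20.00, weekly_hours=50)

print(intermittent, frequent)
```

Even a rule this crude pays the driver who needs the work less than the one who can walk away, which is the shape of the result Avedian recorded.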

Uber wants to lure intermittent drivers into becoming frequent drivers. Uber doesn’t pay for an oversupply of drivers, because it only pays drivers when they have a passenger in the car. Having drivers on call — but idle — is a way for Uber to shift the cost of maintaining a capacity cushion to its workers.

What’s more, what Uber charges customers is not based on how much it pays its workers. As Uber’s head of product explained: Uber uses “machine-learning techniques to estimate how much groups of customers are willing to shell out for a ride. Uber calculates riders’ propensity for paying a higher price for a particular route at a certain time of day. For instance, someone traveling from a wealthy neighborhood to another tony spot might be asked to pay more than another person heading to a poorer part of town, even if demand, traffic and distance are the same.”

https://qz.com/990131/uber-is-practicing-price-discrimination-economists-say-that-might-not-be-a-bad-thing/

Uber has historically described its business as a pure supply-and-demand matching system, where a rush of demand for rides triggers surge pricing, which lures out drivers, which takes care of the demand. That’s not how it works today, and it’s unclear if it ever worked that way. Today, a driver who consults the rider version of the Uber app before accepting a job (to compare how much the rider is paying to how much they stand to earn) is booted off the app and denied further journeys.

Surging, instead, has become just another way to twiddle drivers. One of Dubal’s subjects, Derrick, describes how Uber uses fake surges to lure drivers to airports: “You go to the airport, once the lot get kind of full, then the surge go away.” Other drivers describe how they use groupchats to call out fake surges: “I’m in the Marina. It’s dead. Fake surge.”

That’s pure twiddling. Twiddling turns gamification into gamblification, where your labor buys you a spin on a roulette wheel in a rigged casino. As a driver called Melissa, who had doubled down on her availability to earn a $100 bonus awarded for clocking a certain number of rides, told Dubal, “When you get close to the bonus, the rides start trickling in more slowly…. And it makes sense. It’s really the type of shit that they can do when it’s okay to have a surplus labor force that is just sitting there that they don’t have to pay for.”

Wherever you find reverse-centaurs, you get this kind of gamblification, where the rules are twiddled continuously to make sure that the house always wins. As one Amazon contract driver, a reverse centaur, told Lauren Gurley for Motherboard, “Amazon uses these cameras allegedly to make sure they have a safer driving workforce, but they’re actually using them not to pay delivery companies”:

https://www.vice.com/en/article/88npjv/amazons-ai-cameras-are-punishing-drivers-for-mistakes-they-didnt-make

Algorithmic wage discrimination is the robot overlord of our nightmares: its job is to relentlessly quest for vulnerabilities and exploit them. Drivers divide themselves into “ants” (drivers who take every job) and “pickers” (drivers who cherry-pick high-paying jobs). The algorithm’s job is ensuring that pickers get the plum assignments, not the ants, in the hopes of converting those pickers to app-dependent ants.

In my work on enshittification, I call this the “giant teddy bear” gambit. At every county fair, you’ll always spot some poor jerk carrying around a giant teddy-bear they “won” on the midway. But they didn’t win it, at least not by getting three balls in the peach-basket. Rather, the carny running the rigged game chose not to operate the “scissor” that kicks balls out of the basket. Or, if the game is “honest” (that is, merely impossible to win, rather than gimmicked), the operator made a too-good-to-refuse offer: “Get one ball in and I’ll give you this keychain. Win two keychains and I’ll let you trade them for this giant teddy bear.”

Carnies aren’t in the business of giving away giant teddy bears — rather, the gambit is an investment. Giving a mark a giant teddy bear to carry around the midway all day acts as a convincer, luring other marks to try to land three balls in the basket and win their own teddy bear.

In the same way, platforms like Uber distribute giant teddy bears to pickers, as a way of keeping the ants scurrying from job to job, and as a way of convincing the pickers to give up whatever work allows them to discriminate among Uber’s offers and hold out for the plum deals, whereupon they can be transmogrified into ants themselves.

Dubal describes the experience of Adil, a Syrian refugee who drives for Uber in the Bay Area. His colleagues are pickers, and showed him screenshots of how much they earned. Determined to get a share of that money, Adil became a model ant, driving two hours to San Francisco, driving three days straight, napping in his car, spending only one day per week with his family. The algorithm noticed that Adil needed the work, so it paid him less.

Adil responded the way the system predicted he would, by driving even more: “My friends they make it, so I keep going, maybe I can figure it out. It’s unsecure, and I don’t know how people they do it. I don’t know how I am doing it, but I have to. I mean, I don’t find another option. In a minute, if I find something else, oh man, I will be out immediately. I am a very patient person, that’s why I can continue.”

Another driver, Diego, told Dubal about how the winners of the giant teddy bears fell into the trap of thinking that they were “good at the app”: “Any time there’s some big shot getting high pay outs, they always shame everyone else and say you don’t know how to use the app. I think there’s secret PR campaigns going on that gives targeted payouts to select workers, and they just think it’s all them.”

That’s the power of twiddling: by hoarding all the flexibility offered by digital tools, the management at platforms can become centaurs, able to string along thousands of workers, while the workers are reverse-centaurs, puppeteered by the apps.

As the example of Adil shows, the algorithm doesn’t need to be very sophisticated in order to figure out which workers it can underpay. The system automates the kind of racial and gender discrimination that is formally illegal, but which is masked by the smokescreen of digitization. An employer who systematically paid women less than men, or Black people less than white people, would be liable to criminal and civil sanctions. But if an algorithm simply notices that people who have fewer job prospects drive more and will thus accept lower wages, that’s just “optimization,” not racism or sexism.
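To make that mechanism concrete, here is a toy sketch in Python. It is not Uber's pay algorithm or anything described in Dubal's paper: the `Driver` fields, the `BASE_RATE` figure, the 40% maximum discount and the `offered_rate` rule are all hypothetical, invented purely for illustration. The point is only that a rule that never reads a driver's group can still pay one group less, because it keys on behavior (hours and acceptance rate) that tracks how few outside options a driver has.

```python
from dataclasses import dataclass
from statistics import mean

BASE_RATE = 20.0  # hypothetical hourly rate, not a real platform figure


@dataclass
class Driver:
    name: str
    group: str              # stand-in attribute; the pay rule never reads it
    weekly_hours: float     # behavioral signal the pay rule does read
    acceptance_rate: float  # share of offered jobs accepted, 0.0 to 1.0


def offered_rate(driver: Driver) -> float:
    """Toy rule: the more app-dependent a driver looks, the less they are offered."""
    dependence = min(driver.weekly_hours / 60.0, 1.0) * driver.acceptance_rate
    # Hypothetical discount of up to 40% for the most dependent drivers.
    return round(BASE_RATE * (1.0 - 0.4 * dependence), 2)


def average_rate_by_group(drivers: list[Driver]) -> dict[str, float]:
    """Audit step: group the offers after the fact to expose the disparity."""
    by_group: dict[str, list[float]] = {}
    for d in drivers:
        by_group.setdefault(d.group, []).append(offered_rate(d))
    return {g: round(mean(rates), 2) for g, rates in by_group.items()}


# Made-up drivers: "ants" work long hours and accept nearly everything,
# "pickers" have other work and can hold out for better offers.
drivers = [
    Driver("ant_1", "few_outside_options", 60, 0.95),
    Driver("ant_2", "few_outside_options", 50, 0.90),
    Driver("picker_1", "many_outside_options", 15, 0.30),
    Driver("picker_2", "many_outside_options", 20, 0.40),
]
averages = average_rate_by_group(drivers)
print(averages)
```

In this made-up data, the drivers with few outside options end up with the lower average offer, even though `offered_rate` only ever looks at hours and acceptance rate.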

This is the key to understanding the AI hype bubble: when ghouls from multinational banks predict 13 trillion dollar markets for “AI,” what they mean is that digital tools will speed up the twiddling and other wage-suppression techniques to transfer $13T in value from workers and consumers to shareholders.

The American business lobby is relentlessly focused on the goal of reducing wages. That’s the force behind “free trade,” “right to work,” and other codewords for “paying workers less,” including “gig work.” Tech workers long saw themselves as above this fray, immune to labor exploitation because they worked for a noble profession that took care of its own.

But the epidemic of mass tech-worker layoffs, following on the heels of massive stock buybacks, has demonstrated that tech bosses are just like any other boss: willing to pay as little as they can get away with, and no more. Tech bosses are so comfortable with their market dominance and the lock-in of their customers that they are happy to turn out hundreds of thousands of skilled workers, convinced that the twiddling systems they’ve built are the kinds of self-licking ice-cream cones that are so simple even a manager can use them — no morlocks required.

The tech worker layoffs are best understood as an all-out war on tech worker morale, because that morale is the source of tech workers’ confidence and thus their demands for a larger share of the value generated by their labor. The current tech layoff template is very different from previous tech layoffs: today’s layoffs are taking place over a period of months, long after they are announced, and a laid-off tech worker is likely to be offered months of paid post-layoff work, rather than severance. This means that tech workplaces are now haunted by the walking dead, workers who have been laid off but need to come into the office for months, even as the threat of layoffs looms over the heads of the workers who remain. As an old friend, recently laid off from Microsoft after decades of service, wrote to me, this is “a new arrow in the quiver of bringing tech workers to heel and ensuring that we’re properly thankful for the jobs we have (had?).”

Dubal is interested in more than analysis; she’s interested in action. She looks at the tactics already deployed by gig workers, who have not taken all this abuse lying down. Workers in the UK and EU organized through Worker Info Exchange and the App Drivers and Couriers Union have used the GDPR (the EU’s privacy law) to demand “algorithmic transparency,” as well as access to their data. In California, drivers hope to use similar provisions in the CCPA (a state privacy law) to do the same.

These efforts have borne fruit. When Cornell economists, led by Louis Hyman, published research (paid for by Uber) claiming that Uber drivers earned an average of $23/hour, it was data from these efforts that revealed the true average Uber driver’s wage was $9.74. Subsequent research in California found that Uber drivers’ wage fell to $6.22/hour after the passage of Prop 22, a worker misclassification law that gig companies spent $225m to pass, only to have the law struck down because of a careless drafting error:

https://www.latimes.com/california/newsletter/2021-08-23/proposition-22-lyft-uber-decision-essential-california

But Dubal is skeptical that data co-ops and transparency will achieve transformative change and build real worker power. Knowing how the algorithm works is useful, but it doesn’t mean you can do anything about it, not least because the platform owners can keep touching their knobs, twiddling the payout schedule on their rigged slot-machines.

Data co-ops start from the proposition that “data extraction is an inevitable form of labor for which workers should be remunerated.” That proposition makes on-the-job surveillance acceptable, provided that workers are compensated for the spying. But co-ops aren’t unions, and they don’t have the power to bargain for a fair price for that data, and co-ops themselves lack the vast resources needed “to store, clean, and understand” data.

Co-ops are also badly situated to understand the true value of the data that is extracted from their members: “Workers cannot know whether the data collected will, at the population level, violate the civil rights of others or amplifies their own social oppression.”

Instead, Dubal wants an outright, nonwaivable prohibition on algorithmic wage discrimination. Just make it illegal. “If firms cannot use gambling mechanisms to control worker behavior through variable pay systems, they will have to find ways to maintain flexible workforces while paying their workforce predictable wages under an employment model. If a firm cannot manage wages through digitally-determined variable pay systems, then the firm is less likely to employ algorithmic management.”

In other words, rather than using market mechanisms to constrain platform twiddling, Dubal just wants to make certain kinds of twiddling illegal. This is a growing trend in legal scholarship. For example, the economist Ramsi Woodcock has proposed a ban on surge pricing as a per se violation of Section 1 of the Sherman Act:

https://ilr.law.uiowa.edu/print/volume-105-issue-4/the-efficient-queue-and-the-case-against-dynamic-pricing

Similarly, Dubal proposes that algorithmic wage discrimination violates another antitrust law: the Robinson-Patman Act, which “bans sellers from charging competing buyers different prices for the same commodity.” Robinson-Patman enforcement was effectively halted under Reagan, kicking off a host of pathologies, like the rise of Walmart:

https://pluralistic.net/2023/03/27/walmarts-jackals/#cheater-sizes

I really liked Dubal’s legal reasoning and argument, and to it I would add a call to reinvigorate countertwiddling: reforming laws that get in the way of workers who want to reverse-engineer, spoof, and control the apps that currently control them. Adversarial interoperability (AKA competitive compatibility or comcom) is a key tool for building worker power in an era of digital Taylorism:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

To see how that works, look to other jurisdictions where workers have leapfrogged their European and American cousins, such as Indonesia, where gig workers and toolsmiths collaborate to make a whole suite of “tuyul apps,” which let them override the apps that gig companies expect them to use:

https://pluralistic.net/2021/07/08/tuyul-apps/#gojek

For example, ride-hailing companies won’t assign a train-station pickup to a driver unless they’re circling the station, which is incredibly dangerous during the congested moments after a train arrives. A tuyul app lets a driver park nearby and then spoof their phone’s GPS fix to the ride-hailing company so that they appear to be right out front of the station.

In an ideal world, those workers would have a union, and be able to dictate the app’s functionality to their bosses. But workers shouldn’t have to wait for an ideal world: they don’t just need jam tomorrow — they need jam today. Tuyul apps, and apps like Para, which allow workers to extract more money under better working conditions, are a prelude to unionization and employer regulation, not a substitute for it.

Employers will not give workers one iota more power than they have to. Just look at the asymmetry between the regulation of union employees versus union busters. Under US law, employees of a union need to account for every single hour they work, every mile they drive, every location they visit, in public filings. Meanwhile, the union-busting industry, far larger and richer than unions, operates under a cloak of total secrecy. Workers aren’t even told which union busters their employers have hired, let alone given an accounting of how those union busters spend money, or how many of them are working undercover, pretending to be workers in order to sabotage the union.

Twiddling will only get an employer so far. Twiddling — like all “AI” — is based on analyzing the past to predict the future. The heuristics an algorithm creates to lure workers into their cars can’t account for rapid changes in the wider world, which is why companies who relied on “AI” scheduling apps (for example, to prevent their employees from logging enough hours to be entitled to benefits) were caught flatfooted by the Great Resignation.

Workers suddenly found themselves with bargaining power thanks to the departure of millions of workers — a mix of early retirees and workers who were killed or permanently disabled by covid — and they used that shortage to demand a larger share of the fruits of their labor. The outraged howls of the capital class at this development were telling: these companies are operated by the kinds of “capitalists” that MLK once identified, who want “socialism for the rich and rugged individualism for the poor.”

https://twitter.com/KaseyKlimes/status/821836823022354432/

There's only 5 days left in the Kickstarter campaign for the audiobook of my next novel, a post-cyberpunk anti-finance finance thriller about Silicon Valley scams called Red Team Blues. Amazon's Audible refuses to carry my audiobooks because they're DRM free, but crowdfunding makes them possible.

Image:

Stephen Drake (modified)

https://commons.wikimedia.org/wiki/File:Analog_Test_Array_modular_synth_by_sduck409.jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

—

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

—

Louis (modified)

https://commons.wikimedia.org/wiki/File:Chestnut_horse_head,_all_excited.jpg

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/deed.en

[Image ID: A complex mandala of knobs from a modular synth. In the foreground, limned in a blue electric halo, is a man in a hi-viz vest with the head of a horse. The horse's eyes have been replaced with the sinister red eyes of HAL9000 from Kubrick's '2001: A Space Odyssey.']

#pluralistic#great resignation#twiddler#countertwiddling#wage discrimination#algorithmic#scholarship#doordash#para#Veena Dubal#labor#brian merchant#app boss#reverse centaurs#skinner boxes#enshittification#ants vs pickers#tuyul#steampunk#cottage industry#ccpa#gdpr#App Drivers and Couriers Union#shitty technology adoption curve#moral economy#gamblification#casinoization#taylorization#taylorism#giant teddy bears

Text

Meet my coquettecore centipedegirl, Molly.

#i just really like bugs#centipede#artists on tumblr#original art#pompoison#centaur#reverse centaur#bug girl#digital art#coquette#cute#drawing#art#oc

Text

youtube

#reverse centaur#video#north of the border#clay#creative#create#jorts#short shorts#pony#horse#Youtube

Text

from left to right, top to bottom
