#facial recognition technology services

Text

Facial Recognition Solutions

Discover a new era of security with ThirdEye AI's cutting-edge facial recognition solutions. Our technology offers unparalleled fraud prevention with liveness detection and geo-fenced attendance. Seamlessly integrate with APIs, access detailed attendance reports, and enjoy auto-scaling for peak performance. Revolutionize security with our innovative approach.

#facial recognition services#facial recognition technology services#cloud-based services facial recognition technology#custom facial recognition software development services#aws services facial recognition#facial recognition cloud services#AI Face recognition Apps

0 notes

Text

Revolutionizing Payments in Europe with PAYBYFACE® Biometric Payment & Loyalty Platform.

PayByFace offers a unique biometric payment system and takes customer loyalty to the next level. PayByFace also makes digital advertising simple and affordable for small and large businesses.

PayByFace continues to add to the suite of value-added services included in its ecosystem.

PayByFace, an innovative Amsterdam-based fintech start-up, announces several country-based agreements and subsidiary expansions across major European markets, including the Netherlands, UK, Ireland, France, Italy, Spain, Switzerland, Germany, Austria, Romania, and Bulgaria.

This is a significant milestone for PayByFace in its journey to enable and scale the adoption of biometric facial recognition technology, and in its commitment to simplify the future of check-out interactions while remaining fully GDPR compliant.

PayByFace, the future of payments, is poised to transform the way European consumers interact with retailers at the check-out, offering unparalleled convenience, a focus on loyalty value, and enhanced security in the digital era.

For more information, reach out to us here at PayByFace Ireland.

www.paybyface.ie

#FaceRecognition #PayByFace #Biometricpayment #Futureofpayments #FacialRecognitionPayments #Securecontactlesspayments

#biometric payment#facial recognition payments#paybyface#future of payments#secure contactless payments#European payments#loyalty program#digital advertising#fintech start-up#facial recognition technology#GDPR compliant#convenience at check-out#enhanced security#customer loyalty#payment ecosystem#value added services#European market expansion#biometric facial recognition technology adoption.

2 notes

Text

issuu

#artificial intelligence in the beauty industry#salon booking environment#facial recognition technologies#AI-powered appointment management systems#salon booking app development company#AR in virtual salon consultation#top mobile app development company#best mobile app development company#ui ux design services#ui ux design services company

0 notes

Text

Twitter Blue Tests Verification With Government ID and Selfie

X (the social media site formerly known as Twitter) is in the process of launching a new identity verification feature that could prove controversial. The feature, which is currently only offered to/forced on premium “Blue” subscribers, asks users to fork over a selfie and a picture of a government issued ID to verify that they are who they say they are.

Mr. Tweet Fumbles Super Bowl Tweet

The…

#Biometrics#Computer access control#Elon Musk#Facial recognition system#Gizmodo#Google#ID.me#Identity document#Identity management#Identity verification service#Internet#Internet privacy#Microsoft#Musk#Nima Owji#PAYPAL#Surveillance#Technology#twitter#Uber#Verification

0 notes

Text

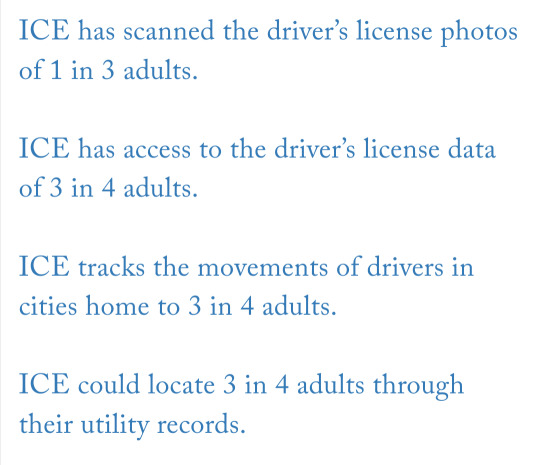

When 3 in 4 (74%) adults in the U.S. connected the gas, electricity, phone or internet in a new home, ICE was able to automatically learn their new address. Almost all of that has been done warrantlessly and in secret.

A report published by Georgetown Law on May 10, 2022 shows how far ICE's reach extends. Based on records obtained through 200 FOIA requests, the report says ICE has tracked civilians using utility records, driver's licenses, and facial recognition, and has even used vulnerable unaccompanied minors held in detention to find more leads (specifically, searching for their family members without documentation). It does so without warrants and without informing state legislators. Companies and corporations have even shared information with ICE without their customers' knowledge or consent.

"Most congressional leaders did not learn about ICE face recognition scans of DMV photos until The Washington Post ran an exposé on the practice, reporting on records obtained by the Center on Privacy & Technology. This exposé ran in 2019, over a decade after ICE penned its first known face recognition contract in 2008 for access to the Rhode Island driver database.

The fact that ICE was conducting face recognition scans on driver’s license photos came as a shock to senior lawmakers – even those with the greatest insight into DHS activities. On learning of the face scans, Rep. Zoe Lofgren, the longtime chair of the House Judiciary Subcommittee on Immigration and Citizenship, denounced the practice as “a massive, unwarranted intrusion into the privacy rights of Americans by the federal government, done secretly and without authorization by law.” ICE’s surveillance initiatives have regularly flown under Congress’ radar. While a few political leaders have pressed ICE in oversight letters and used appropriations riders to end the most aggressive of ICE’s actions, to date there has not been one full congressional hearing or Government Accountability Office (GAO) report focused on ICE surveillance."

Further, even when states become aware and move to block ICE from having this kind of access, ICE simply uses a different door.

"In Washington, Governor Jay Inslee enacted a statewide policy to limit state agency cooperation with ICE only to discover that state licensing officials were routinely violating that policy. When state officials cut off ICE’s access to a state-run driver database, previously unseen records show that DHS searches of a separate network of driver data – one not operated by the state – nearly doubled. In Oregon, soon after lawmakers passed a law cutting off state data disclosures to ICE, the Oregon DMV signed agreements to sell its driver’s license records to Thomson Reuters and LexisNexis Risk Solutions, the two primary data brokers that sell ICE access to driver information."

This is a HUGE breach of privacy and goes as far back as 2008 when ICE used facial recognition in Rhode Island under the Bush administration.

The article also discusses the danger that this surveillance could breed such deep mistrust of government that people decline or reject state services to avoid being put in an ICE database or otherwise having their data used without their knowledge or consent.

The report also has a section advising how Congress could restore these privacy protections. So signal boost this. Congress was made aware of these breaches in 2019 and has so far done nothing to stop ICE or hold it accountable.

Which means it's on us to hold them accountable because once again the folks in Congress have shown they lack the ambition and initiative.

#ICE#Abolish ICE#current events#biden#democrats#liberals#immigration#chinga la migra#acab#leftists#american#fascism#capitalism#xenophobia#congress#senate#usa#usa politics#defund ice#joe biden#obama#bush#invasion of privacy#detention center#child support#welfare#healthcare#housing#progress#privacy law

4K notes

Text

On the one hand, people who take a hardline stance on “AI art is not art” are clearly saying something naïve and indefensible (as though any process cannot be used to make art? as though artistry cannot still be involved in the set-up of the parameters and the choice of data set and the framing of the result? as though “AI” means any one thing? you’re going to have a real hard time with process music, poetry cut-up methods, &c.).

But all of this (as well as takes that what's really needed is a crackdown on IP) is a distraction from a vital issue: namely, that this is technology used to create and sort enormous databases of images, and the uses to which this technology is put in a police state are obvious: it's used in service of surveillance, incarceration, criminalisation, and the furthering of violence against criminalised people.

Of course we've long known that datasets are not "neutral" and that racist data will provide racist outcomes, and we've long known that the problem goes beyond the datasets (even carefully vetting datasets does not necessarily control for social factors). With regards to "predictive policing," this suggests that criminalisation of supposed leftist "radicals" and racialised people (and the concepts creating these two groups overlap significantly; [link 1], [link 2]) is not a problem, but intentional—a process is built so that it always finds people "suspicious" or "guilty," but because it is based on an "algorithm" or "machine learning" or so-called "AI" (processes that people tend to understand murkily, if at all), they can be presented as innocent and neutral. These are things that have been brought up repeatedly with regards to "automatic" processes and things that trawl the web to produce large datasets in the recent past (e.g. facial recognition technology), so their almost complete absence from the discourse wrt "AI art" confuses me.

Abeba Birhane's thread here, summarizing this paper (h/t @thingsthatmakeyouacey) explains how the LAION-400M dataset was sourced/created, how it is filtered, and how images are retrieved from it (for this reason it's a good beginner explanation of what large-scale datasets and large neural networks are 'doing'). She goes into how racist, misogynistic, and sexually violent content is returned (and racist mis-categorisations are made) as a result of every one of those processes. She also brings up issues of privacy, how individuals' data is stored in datasets (even after the individual deletes it from where it was originally posted), and how it may be stored associated with metadata which the poster did not intend to make public. This paper (h/t thingsthatmakeyouacey [link]) looks at the ImageNet-ILSVRC-2012 dataset to discuss "the landscape of harm and threats both the society at large and individuals face due to uncritical and ill-considered dataset curation practices" including the inclusion of non-consensual pornography in the dataset.

Of course (again) this is nothing that hasn't already been happening with large social media websites or with "big data" (Birhane notes that "On the one hand LAION-400M has opened a door that allows us to get a glimpse into the world of large scale datasets; these kinds of datasets remain hidden inside BigTech corps"). And there's no un-creating the technology behind this—resistance will have to be directed towards demolishing the police / carceral / imperial state as a whole. But all criticism of "AI" art can't be dismissed as always revolving around an anti-intellectual lack of knowledge of art history or else a reactionary desire to strengthen IP law (as though that would ever benefit small creators at the expense of large corporations...).

835 notes

Text

The RCMP did not follow the Privacy Act and its own guidelines when it chose web investigation technology, concludes a special report by Canada’s privacy commissioner, Philippe Dufresne, presented to Parliament on Thursday.

The investigation was spurred by Tyee reporting in 2019 and 2020, which revealed the RCMP’s Tactical Internet Operational Support unit used tools that can circumvent the privacy and security of Canadians online.

The previous privacy commissioner, after an earlier investigation, raised similar concerns about the RCMP’s use of the facial recognition service Clearview AI, and the force at the time said it would address those issues with a new screening program it called the National Technology Onboarding Program.

Tagging @politicsofcanada

#cdnpoli#canada#canadian politics#canadian news#rcmp#policing#police#surveillance#technology#privacy#online privacy

66 notes

Text

🚨 Euro-Mediterranean Human Rights Monitor:

—

The involvement of technology and social media companies in causing the killing of civilians by "israel" in the Gaza Strip necessitates an immediate investigation.

These companies should be held accountable and liable if their complicity or failure to exercise due diligence in preventing access to and exploitation of their users’ private information is proven, obligating them to ensure their services are not misused in war zones and that they protect user privacy.

"Israel" uses various technology systems supported by artificial intelligence, such as Gospel, Fire Factory, Lavender, and Where’s Daddy, all operating within a system aimed at monitoring Palestinians illegally and tracking their movements.

These systems function to identify and designate suspected individuals as legitimate targets, based mostly on shared characteristics and patterns rather than specific locations or personal information.

The accuracy of the information provided by these systems is rarely verified by the occupation army, despite a known large margin of error due to the nature of these systems' inability to provide updated information.

The Lavender system, heavily used by the occupation army to identify suspects in Gaza before targeting them, is based on probability logic, a hallmark of machine learning algorithms.

"Israeli" military and intelligence sources have admitted to attacking potential targets without consideration for the principle of proportionality and collateral damage, with suspicions that the Lavender system relies on tracking social media accounts among its sources.

Recently, "israel's" collaboration with Google was revealed, including several technological projects, among them Project Nimbus which provides the occupation army with technology that facilitates intensified surveillance and data collection on Palestinians illegally.

The occupation army also uses Google's facial recognition feature in photos to monitor Palestinian civilians in the Gaza Strip and to compile an "assassination list," collecting a vast amount of images related to the October 7th operation.

The Euro-Mediterranean field team has collected testimonies from Palestinian civilians directly targeted in Israeli military attacks following their activities on social media sites, without any involvement in military actions.

The potential complicity of companies like Google and Meta and other technology and social media firms in the violations and crimes committed by "israel" breaches international law rules and the companies' declared commitment to human rights.

No social network should provide this kind of private information about its users and actually participate in the mass genocide conducted by "israel" against Palestinian civilians in Gaza, which demands an international investigation providing guarantees for accountability and justice for the victims.

#meta#google#alphabet#social media#instagram#ai#machine learning#palestine#free palestine#gaza#free gaza#jerusalem#israel#tel aviv#gaza strip#from the river to the sea palestine will be free#joe biden#benjamin netanyahu#news#breaking news#gaza news#genocide#palestinian genocide#gaza genocide#human rights#social justice#surveillance#iof#idf#israeli terrorism

21 notes

Text

Things to Think About Post-Roe

Regardless of whether you live in a state where abortion is still legal or not, here are some things to think about moving forward. Most of us, me included, aren't old enough to remember a time before Roe. But it is going to impact all of us: women, trans men, non-binary people, and others, along with those who love them.

1. Remove all period trackers/apps from your phone. Use a physical pocket calendar instead and use an easy-to-remember phrase like "lunch with (friend or SO)" or "return library books."

2. If you go to any facility for reproductive healthcare services, don't take your phone, or tracking data will place you there.

3. If you go to a facility for healthcare services, remember to wear a mask. Facial recognition software is a growing technology.

4. If you miscarry, don't tell your healthcare providers if you took anything. The treatment for abortion and miscarriage is the same. Those who miscarry can and will be criminally investigated and charged. There have been reports of them being turned in by their healthcare providers. BIPOC are at the highest risk for this and drug testing.

5. Incognito browsers are a weak defense. Deleting browsing data helps but isn't a failsafe. Use a VPN. Always.

6. Be careful who you trust. The father may not want that pregnancy or be ready for it. Tell me he (or someone else) wouldn't want the $10k bounty some states are offering for turning you in.

7. Many are posting links to viable organizations and networks to help those with unwanted pregnancies. Donate what you can. Vet them first.

561 notes

Text

I had some thoughts about paying to feed your face into a generator built on unpaid work used without consent. Transcript and links under the cut.

So let’s talk about AI art, and how incredibly unsafe y’all are being with it.

First, most of these apps, including the avatar makers, are developed using a generator called Stable Diffusion that was trained on LAION 5B, a database containing 5 billion pictures scraped off the internet, including illustrations from deviantart and pinterest. https://en.wikipedia.org/wiki/Stable_Diffusion#Training_data

It also contains thousands of images of patients scraped from private medical records. And the database creators have refused to remove them or take any responsibility. https://arstechnica.com/information-technology/2022/09/artist-finds-private-medical-record-photos-in-popular-ai-training-data-set/

Many artists have spoken up about how unethical it is to use their work without their consent to make an art generator that is now being used for paid products. These generators wouldn’t exist without the work of thousands of artists around the world, but they never gave permission for their images to be used this way, they can’t opt out, and they are not getting paid even for apps that charge *you* to use them.

Maybe that’s enough to change your mind about the “magic avatars” and “time travelling portraits.” But I get that they’re fun, and frankly, people will overlook a lot of harm when they’re having fun. Which brings us to the “find out” part of this video.

When you are giving these apps 10-20 pictures of YOUR FACE, where in the terms of service does it say they won’t sell those pictures to police surveillance companies? https://www.nytimes.com/2020/01/18/technology/clearview-privacy-facial-recognition.html

When you are giving them your face, where in the TOS does it say they’re responsible if their databases get hacked? If their data is used to impersonate or stalk you? https://thenextweb.com/news/people-using-facial-recognition-app-stalk-adult-actresses

Where in the TOS does it say your face can’t be used by companies training AI to help genocide? https://www.bbc.com/news/technology-55634388

Where in the TOS does it say your face will not be sold to a service that will splice you into porn? https://www.technologyreview.com/2021/09/13/1035449/ai-deepfake-app-face-swaps-women-into-porn/

That will create an on-demand version of you for strangers to assault? https://vocal.media/viva/why-are-men-creating-ai-girlfriends-only-to-abuse-them-and-brag-about-it-on-reddit

And you are giving some of them your payment info? You are giving all of them 10-20 pictures of your face?

They don’t care about the people whose work makes their generators possible. Why do you think they’re going to care about you?

#ai art hate blog#seriously y'all#do not give them your face and payment info are you out of your minds

262 notes

Text

Project Nimbus is a $1.2 billion contract to provide cloud services for the Israeli government and military, signed by technology companies Google and Amazon.

The service provided is powered by AI and uses surveillance and facial recognition to create an exhaustive database of the Palestinian citizens in the West Bank, containing confidential information such as their name, address, car license plate, and whether they're "wanted" or not.

In this video, we see how this plays out in reality in the city of Hebron.

youtube

#my brain bugged at the smart shooter#you're telling AI is recognizing and shooting palestinians based on their record in its database#modern day colonialism is crazy#palestine#free palestine#hebron#west bank#project nimbus#queued#Youtube

31 notes

Text

youtube

#biometric payment#facial recognition payments#paybyface#future of payments#secure contactless payments#European payments#loyalty program#digital advertising#fintech start-up#facial recognition technology#GDPR compliant#convenience at check-out#enhanced security#customer loyalty#payment ecosystem#value added services#European market expansion#biometric facial recognition technology adoption.#paybyfaceireland#Youtube

0 notes

Text

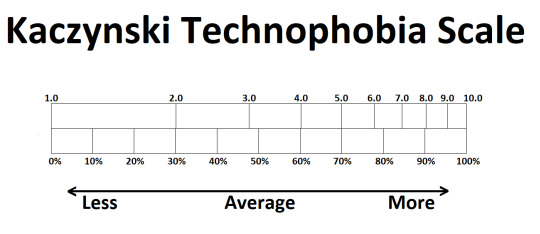

1.0, Big Brother (0% technophobic)

These are tech bros and billionaire executives, the kind of people who push for AI facial recognition, data tracking, and mind reading technology (metaphorically, until actual mind reading technology becomes viable, in which case, literally). If you give them an email address you haven't touched since 2006, in less than a day they'd have a file full of your personal information thicker than the FBI's and NSA's combined. Down here, you have guys like Elon Musk, Mark Zuckerberg, and most politicians (especially after 9/11. Can you say USA PATRIOT Act?)

1.1 - 3.2

Here, you'll find the fanboys of the above. Guys who are really into NFTs and crypto. Influencers and blue checks who crave attention and show off all their 4 figure phones, 5 figure workout equipment, and 6 or 7 figure cars. The kind of rich assholes who think they're tech savvy because every appliance in their home connects to the internet and requires a subscription service in order to not vent deadly neurotoxin through their air ducts. These are all small fish who aspire to be big fish; the 1.Xs will throw themselves under the bus to protect the 1.0s, while the 2.Xs will get thrown under the bus involuntarily.

3.2, True Neutral (50%)

Because this is a log scale, the actual center is 3.16227766 (the square root of 10). 3.2 represents Average Joe American, the type of guy who doesn't care about the state of technology one way or the other. He probably doesn't own an Alexa or Ring camera, only because he's never thought of buying one (3.1) or thinks it would be too much of a hassle to set up (3.3). If facebook asked him for a 3d scan of his head to try out a new memoji, he'd upload it without hesitation. He thinks cops shouldn't need a warrant to spy on the Bad Guys™, and recently voted for politicians who wrote the Let Cops Decide Who is Good and Who is Bad With Impunity Bill (though he will never connect dots between his actions and their consequences). "Why should you care if you have nothing to hide?" This guy buys his friends and family $100 send-in-your-spit DNA tests for Christmas.
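For anyone who wants to check the math on that center point, here is a minimal sketch. It assumes the scale runs from 1.0 (0%) to 10.0 (100%) with percentages spaced evenly in log10, which is my reading of the labels above; the function names are made up for illustration.

```python
import math

SCALE_MIN = 1.0   # "Big Brother" end of the scale (0%)
SCALE_MAX = 10.0  # "Full Kaczynski" end of the scale (100%)

def log_midpoint(low: float, high: float) -> float:
    """Geometric mean: the point halfway between low and high on a log axis."""
    return math.sqrt(low * high)

def percent_technophobic(score: float) -> float:
    """Position of a score along the log scale, as a percentage (assumed mapping)."""
    span = math.log10(SCALE_MAX) - math.log10(SCALE_MIN)
    return 100.0 * (math.log10(score) - math.log10(SCALE_MIN)) / span

center = log_midpoint(SCALE_MIN, SCALE_MAX)
print(round(center, 8))                        # 3.16227766
print(round(percent_technophobic(center), 1))  # 50.0
```

The midpoint of a log scale is the geometric mean of its endpoints, not the arithmetic mean, which is why the center lands near 3.2 and not 5.5.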

3.2 - 5.0

I'd say most of the people reading this fall somewhere around here, though they think they're much higher. Hell, I'm probably a mid-4, but until I actually started plotting out this scale I would have guessed I was a 6 or a 7. High 5 at least! 3.Xs don't know how to pirate things and begrudgingly subscribe to some or all the major streaming services. 4.Xs don't use facebook anymore, but are still on twitter because that's where all the people they follow post from. These people are vaguely aware of how bad things could potentially be, but have no clue how bad they really are; if you suspect you're in this range, please know that every single service you've ever given your email address to is connected to your name in a database somewhere, even if you faked all the rest of the info you gave out. If you signed up to a grocery store value card, advertisers immediately know every single item you've ever purchased, and can even make assumptions based on the purchases of people you are in close proximity to every day (your phone is close to this other person's phone from 9 to 5, so you're probably co-workers, or they're close from 6pm to 6am, so you live together, etc.)

5.0 - 6.0

A little healthy skepticism to help shield your brain from the fact that you live in an Orwellian surveillance state. You use adblocker and VPNs, you don't carry your phone with you 24/7, you use burner emails for every different website (though it won't make much difference because they're all being accessed from the same device, so it wouldn't take any government entity more than a couple seconds to figure out they all belong to the same person). If 3.2 is blissfully ignorant, 5.2 is in living hell because they KNOW what's up and are powerless to do anything about it.

6.0 - 8.0

These are the REAL tech savvy people who don't use social media, have zero smart appliances in their homes, and rely heavily on physical media. We should all strive to be here. In the upper 7s you get privacy activists who know deep down that the system will never be able to fix itself but still hope against hope that it will.

8.0 - 9.9

These people scare me, not because they actually get shit done but because they have delusions of grandeur and TALK about how much shit they'll get done. Most libertarians think they're up here, but really they're down in the 4s and 5s with the rest of us. Real 8s and 9s are batshit Tyler Durden wannabes who think they can change the world by planning terror attacks "in minecraft." They never do anything because they either get caught or chicken out because it's more fun to plan for the singularity or the collapse of the grid than to actually carry out said plans. These are doomsday preppers and dude-bros who are little different than qanon nutjobs (except that qanon supports Big Brother)

10.0, Full Kaczynski (100%)

You are Theodore John Kaczynski, you live in a shack in the woods and you mail bombs to universities. NEVER GO FULL KACZYNSKI. You'll never succeed in hurting any substantial 1s or 2s, just innocent 3s and 4s. In reality, Ted cared more about industrialization and the environment than computers and the police state, but the internet didn't exist in the 70s. The modern world is built upon man made horrors beyond his imagination.

I guess I subscribe to a lopsided horseshoe theory; instead of both sides being equally bad at the extremes, the lower end is worse because it is much more powerful and influential. There are more 1.Xs than 9.Xs, but you'll hear about the 9s in the news a lot more often. You're more likely to be killed by a cow than a shark, or by a cop than an undocumented immigrant, but we all know that quantity isn't what gets reported on, now is it?

I dunno, take this scale with a grain of salt. It's all subjective.

#ted kaczynski#kaczynski#Technophobia#log scale#never go full kaczynski#technology#big brother#big brother is watching you#orwellian#surveillance#surveillance state#police state#privacy

127 notes

Text

Urgent need to investigate role of technology, social media companies in killing Gazan civilians

Palestinian Territory – The role of major technology companies and international social media platforms in the killing of Palestinian civilians during Israel’s genocidal war against the Gaza Strip, ongoing since 7 October 2023, must be investigated. These companies need to be held accountable if found to be complicit or not to have taken adequate precautions to prevent access to, and exploitation of, users’ information. They must ensure that their services are not used in conflict zones and that their users’ privacy is respected.

There are frequent reports that Israel uses a number of artificial intelligence-supported technological systems, including Where’s Daddy?, Fire Factory, Gospel, and Lavender, to illegally track and monitor Palestinians. These systems are able to identify possible suspects and classify them as legitimate targets based on potentially relevant information that is typically unrelated to the location or individual in question, by looking for similarities and patterns among all Gaza Strip residents, particularly men, and among members of armed factions.

Studies have shown that the Israeli army usually does not verify the accuracy of the information provided by these systems, although it is aware of a significant margin of error due to the nature of these operating systems and their inability to provide accurate information (particularly with regard to the whereabouts of those placed on the targeting list in real time).

For example, Israel’s army uses the Lavender system extensively to identify suspects in the Strip before targeting them; this system intentionally results in a high number of civilian casualties.

The Lavender system uses the logic of probabilities, which is a distinguishing characteristic of machine learning algorithms. The algorithm looks through large data sets for patterns that correspond to fighter behaviour, and the amount and quality of the data determines how successful the algorithm is in finding these patterns. It then recommends targets based on the probabilities.

With concerns being voiced regarding the Lavender system’s possible reliance on tracking social media accounts, Israeli military and intelligence sources have acknowledged attacking potential targets without considering the principle of proportionality or collateral damage.

These suspicions are supported by a book (The Human Machine Team) written by the current commander of the elite Israeli army Unit 8200, which offers instructions on how to create a “target machine” akin to the Lavender artificial intelligence system. The book also includes information on hundreds of signals that can raise the severity of a person’s classification, such as switching cell phones every few months, moving addresses frequently, or even just joining the same group on Meta’s WhatsApp application as a “fighter”.

Additionally, it has been recently revealed that Google and Israel are collaborating on several technology initiatives, including Project Nimbus, which provides the Israeli army with tools for the increased monitoring and illegal data collection of Palestinians, thereby broadening Israeli policies of denial and persecution, plus other crimes against the Palestinian people. This project in particular has sparked significant human rights criticism, prompting dozens of company employees to protest and resign, with others being fired over their protests.

The Israeli army also uses Google Photos' facial recognition feature to keep an eye on Palestinian civilians in the Gaza Strip and create a “hit list”. It gathers as many images as possible from the 7 October event, known as Al-Aqsa Flood, during which Palestinian faces were visible as they stormed the separation fence and entered the settlements. This technology is then used to sort photos and store images of faces, which resulted in the recent arrest of thousands of Palestinians from the Gaza Strip, in violation of the company’s explicit rules and the United Nations Guiding Principles on Business and Human Rights.

The Euro-Med Monitor field team has documented accounts of Palestinian civilians who, as a consequence of their social media activity, have been singled out as suspects by Israel, despite having taken no military action.

A young Palestinian man who requested to be identified only as “A.F.” due to safety concerns, for instance, was seriously injured in an Israeli bombing that targeted a residential house in Gaza City’s Al-Sabra neighbourhood.

The house was targeted shortly after A.F. posted a video clip on Instagram, which is owned by Meta, in which he joked that he was in a “field reconnaissance mission”.

His relative told Euro-Med Monitor that A.F. had merely been attempting to mimic press reporters when he posted the brief video clip on his personal Instagram account. Suddenly, however, A.F. was targeted by an Israeli reconnaissance plane while on the roof of the house.

A separate Israeli bombing on 16 April claimed the lives of six young Palestinians who had gathered to access Internet services. One of the victims was using a group chat on WhatsApp—a Meta subsidiary—to report news about the Sheikh Radwan neighbourhood of Gaza City.

The deceased man’s relative, who requested anonymity due to safety fears, informed Euro-Med Monitor that the victim was near the Internet access point when the group was directly hit by a missile from an Israeli reconnaissance plane. The victim was voluntarily sharing news in family and public groups on the WhatsApp application about the Israeli attacks and humanitarian situation in Sheikh Radwan.

The Israeli army’s covert strategy of launching extremely damaging air and artillery attacks based on data that falls short of the minimum standard of accurate target assessment, ranging from cell phone records and photos to social media contact and communication patterns, all within the larger context of an incredibly haphazard killing programme, is deeply concerning.

The evidence presented by global technology experts points to a likely connection between the Israeli army’s use of the Lavender system—which has been used to identify targets in Israel’s military assaults on the Gaza Strip—and the Meta company. This means that the Israeli army potentially targeted individuals merely for being in WhatsApp groups with other people on the suspect list. Additionally, the experts question how Israel could have obtained this data without Meta disclosing it.

Earlier, British newspaper The Guardian exposed Israel’s use of artificial intelligence (Lavender) to murder a great number of Palestinian civilians. The Israeli military used machine learning systems to identify potential “low-ranking” fighters, with the aim of targeting them without considering the level of permissible collateral damage. A “margin of tolerance” was adopted, which allowed for the death of 20 civilians for every overthrown target; when targeting “higher-ranking fighters”, this margin of tolerance allowed for the death of 100 people for every fighter.

Google, Meta, and other technology and social media companies may have colluded with Israel in crimes and violations against the Palestinian people, including extrajudicial killings, in defiance of international law and these companies’ stated human rights commitments.

Social networks should not be releasing this kind of personal data about their users or taking part in the Israeli genocide against Palestinian civilians in the Gaza Strip. An international investigation is required in order to provide guarantees of accountability for those responsible, and justice for the victims.

Meta’s overt and obvious bias towards Israel, its substantial suppression of content that supports the Palestinian cause, and its policy of stifling criticism of Israel's crimes—including rumours of close ties between top Meta officials and Israel—suggest the company’s plausible involvement in the killing of Palestinian civilians.

Given the risks of failing to take reasonable steps to demonstrate that the objective is a legitimate one under international humanitarian law, the aforementioned companies must fully commit to ending all cooperation with the Israeli military and to stop providing Israel with access to data and information that violate Palestinian rights and put their lives in jeopardy.

Israel’s failure to exercise due diligence and consider human rights when using artificial intelligence for military purposes must be immediately investigated, as well as its failure to comply with international law and international humanitarian law.

These companies must promptly address any information that has been circulating about their involvement in Israeli crimes against the Palestinian people. Serious investigations regarding their policies and practices in relation to Israeli crimes and human rights violations must be opened if necessary, and companies must be held accountable if found to be complicit or to have failed to take reasonable precautions to prevent the exploitation of user information for criminal activities.

#free Palestine#free gaza#I stand with Palestine#Gaza#Palestine#Gazaunderattack#Palestinian Genocide#Gaza Genocide#end the occupation#Israel is an illegal occupier#Israel is committing genocide#Israel is committing war crimes#Israel is a terrorist state#Israel is a war criminal#Israel is an apartheid state#Israel is evil#Israeli war crimes#Israeli terrorism#IOF Terrorism#Israel kills babies#Israel kills children#Israel kills innocents#Israel is a murder state#Israeli Terrorists#Israeli war criminals#Boycott Israel#Israel kills journalists#Israel kills kids#Israel murders innocents#Israel murders children

5 notes

Quote

The Palestinians are human laboratory rats to the Israeli military, intelligence services and arms and technology industries. Israel’s drones, surveillance technology — including spyware, facial recognition software and biometric gathering infrastructure — along with smart fences, experimental bombs and AI-controlled machine guns, are tried out on the captive population in Gaza, often with lethal results.

Chris Hedges

14 notes

Text

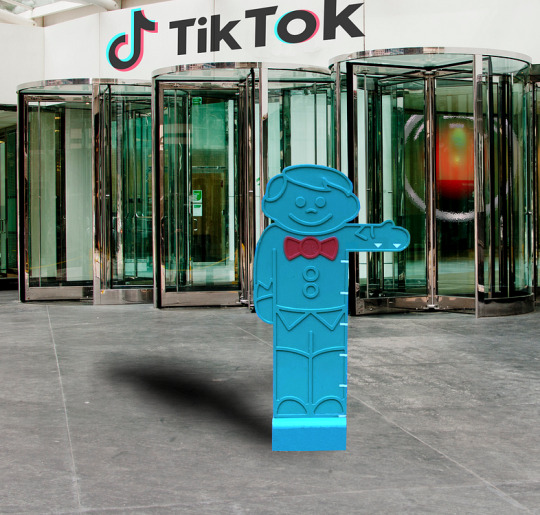

Podcasting "How To Make a Child-Safe TikTok"

This week on my podcast, I read my recent Medium column, “How To Make a Child-Safe TikTok: Have you tried not spying on kids?” The column was inspired by one of the most bizarre exchanges during the Congressional grilling of TikTok CEO Shou Chew:

https://doctorow.medium.com/how-to-make-a-child-safe-tiktok-be08fbf94b0d

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/04/17/have-you-tried-not-spying/#coppa

If you heard anything about that hearing, it was likely this. Rep Buddy Carter, Republican of Georgia, demanded to know whether Tiktok used “the phone’s camera to determine whether the content that elicits a pupil dilation should be amplified by the algorithm?”

https://www.c-span.org/video/?526609-1/tiktok-ceo-testifies-house-energy-commerce-committee-hearing

Chew replied, “We do not collect body, face or voice data to identify our users. We do not.” Carter pressed him, asking “How do you determine what age they are then?”

Chew said, “We rely on age-gating as our key age assurance.” Carter assumed a tuckercarlsonian expression of perplexity and asked for more information. Chew explained: “It’s when you ask the user what age they are.” Carter was clearly baffled by this.

Chew added, “this is a real challenge for our industry because privacy versus age assurance is a really big problem.” Carter interrupted him: “you keep talking about the industry, we’re talking about TikTok here.”

This was a remarkable exchange, even by the standards of Congressional hearings on technology, a genre that includes “a series of tubes,” “Senator, we run ads,” and “Will you commit to ending finsta?”

Chew was completely and terribly correct, of course. The way that the entire industry complies with COPPA (the law that prohibits data-gathering on under-13s without parental consent) is by asking every user to tick a box that says “I am over 13.” This is such an inadequate and laughable figleaf that the Congressdunderhead from Georgia can (possibly) be forgiven for assuming that “age verification” involved some kind of digital phrenology by way of facial scanning.

But beyond being yet another entry in the annals of Congressional Pig-Ignorance On Tech, the exchange reveals a massive blind-spot about the entire business of kids’ privacy, and the legislative intention of COPPA, a law passed in 1998, before the age of ubiquitous commercial internet surveillance, but not before people understood that this would be an important subject.

One thing to note here is how rare COPPA is. The US has very near to zero federal privacy laws. There’s the Electronic Communications Privacy Act of 1986, which is about as up-to-date as you might imagine given that it was passed in 1986. Then there’s the Video Privacy Protection Act of 1988, which bans video-store clerks from revealing which porn you (or more to the point, Members of Congress) have rented.

And then there’s COPPA, which requires parental consent for data-gathering on pre-teens. And that’s basically…it.

COPPA’s got a checkered legislative history; a lot of the “parental consent” language is about ensuring that kids can’t get access to reproductive health information and services, but as with any contentious piece of lawmaking, COPPA passed due to a coalition with different priorities, and part of that coalition just wanted to make sure that companies weren’t spying on kids.

Because, as both Buddy Carter and Shou Chew can attest, it’s really hard to get parental consent at scale. Like, how do you even know if you’re talking to a kid’s parent or guardian if you’re not allowed to gather information on that kid? And how do you know if you’re talking to a kid or an adult when you gather any information, on any user?

Even if facial recognition technology had been widespread in 1998, I think we can all agree that Congress’s intent wasn’t to “protect kids’ privacy” by subjecting every child who used a computer to an invasive biometric scan. How could you comply with COPPA, then?

Well, one possibility is to never spy on users.

OK, not never. But only in very special circumstances — situations in which users would be willing to go through a reasonably thorough identification procedure. There are some situations in which it would be relatively straightforward to do this for parental consent, too: schools, pediatricians and libraries typically encounter children at the same time as their parents or guardians.

And for the rest of it, companies could just not spy.

The truly bizarre thing is how bizarre this suggestion comes across. It is essentially beyond the imagination of both Buddy Carter and Shou Chew that Tiktok could comply with COPPA by not gathering any user-data. After all, COPPA doesn’t prohibit providing web access to under-13s without parental consent; it prohibits spying on under-13s.

It’s not just Congressdunderheads and Tiktok CEOs who treat “don’t spy on under-13s” as a synonym for “don’t let under-13s use this service.” Every tech product designer and every general counsel at every tech company treats these two propositions as equivalent, because they are literally incapable of imagining a surveillance-free online service.

Which is funny, given another part of the Congressional interview. Chew says, “The only face data that you’ll get, that we collect is when you use the filters that put, say, sunglasses on your face, we need to know where your eyes are.” Carter interrupts him to say, “Why do you need to know where the eyes are if you’re not seeing if they’re dilated?” (my god this guy is horny for pupils).

Chew finishes, “and the data is stored locally on your local device and deleted after the use, if you use it for facial” (emphasis mine).

The Tiktok app could store the list of accounts you follow on your device, and send requests to the Tiktok servers for their updates, and the servers could fulfill those requests without logging them. Your device could analyze the videos you interact with and ask the Tiktok servers for suggestions based on those criteria — again, without Tiktok logging your info.
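To make that split concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of how Tiktok works: the `Device` and `Server` classes, their method names, and the tag-based suggestion scheme are all hypothetical, invented for this example. The follow list and viewing history live only on the device, and the server answers each request from the request's own contents without recording anything about the user.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical server: answers requests and keeps no per-user record."""
    videos_by_account: dict[str, list[str]]
    videos_by_tag: dict[str, list[str]]
    request_log: list[str] = field(default_factory=list)  # never written to

    def latest_from(self, accounts: list[str]) -> list[str]:
        # Answer using only the account list sent with this request.
        return [v for a in accounts for v in self.videos_by_account.get(a, [])]

    def suggest(self, tags: list[str]) -> list[str]:
        # Suggestions depend only on the tags sent with this request.
        results: list[str] = []
        for tag in tags:
            for video in self.videos_by_tag.get(tag, []):
                if video not in results:
                    results.append(video)
        return results


@dataclass
class Device:
    """Hypothetical client: follows and interests never leave local storage."""
    follows: list[str] = field(default_factory=list)
    tag_counts: Counter = field(default_factory=Counter)

    def watch(self, tags: list[str]) -> None:
        # The on-device "analysis": count the tags of videos the user watched.
        self.tag_counts.update(tags)

    def feed(self, server: Server) -> list[str]:
        return server.latest_from(self.follows)

    def suggestions(self, server: Server, top_n: int = 2) -> list[str]:
        # Send only the top few tags, not the viewing history itself.
        top_tags = [tag for tag, _ in self.tag_counts.most_common(top_n)]
        return server.suggest(top_tags)
```

A device would call `feed(server)` for updates from followed accounts and `suggestions(server)` for recommendations; in both cases the server only ever sees the contents of that one request. A real service would also have to avoid retaining network-level metadata, which this sketch does not model.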

There’s no millennial prophet who came down off a mountain with two stone tablets circa 2002 and intoned, “Nerds of the world, thou shalt stop rotating thine logfiles, and lo! Thou shalt mine them for actionable market intelligence.” There is nothing intrinsic to the idea of letting people talk to each other, or search the web, or look at videos, that requires surveillance. The surveillance is a choice, which necessitated hundreds of billions of dollars in capital expenditures, and which should have been understood as illegal under COPPA.

But COPPA hasn’t been meaningfully enforced for a quarter of a century. That’s because the ad-tech industry mobilizes some of the hundreds of billions of dollars it gains through spying to block privacy law enforcement and the passage of any new privacy laws. David Cohen, CEO of the surveillance lobby group IAB, told his members, “Extremists are winning the battle for hearts and minds in Washington, D.C., and beyond. We cannot let that happen.”

His co-conspirators at the anti-privacy lobbying group Privacy For America (yes, really) told Congress that commercial surveillance saves every American $30,000/year — in other words, they value the data they steal from you every year at $30,000:

https://www.privacyforamerica.com/wp-content/uploads/2023/02/Privacy-for-America-Letter-in-Support-of-Preemptive-Comprehensive-Privacy-Legislation.pdf

But as Julia Angwin points out, this figure is as absurd as the name “Privacy for America.” The number is pure fiction:

https://www.pnas.org/doi/10.1073/pnas.1815663116#abstract

It doesn’t matter how much the data caught in the ad-tech industry’s nonconsensual harvest is worth — all that matters is that it produces the surplus needed to keep privacy law enforcement and expansion at bay.

Tiktok shouldn’t spy on our kids. Neither should anyone else. America doesn’t need a law banning Tiktok, it needs a law banning Tiktok’s surveillance, as well as the surveillance of all its rivals:

https://pluralistic.net/2023/03/30/tik-tok-tow/#good-politics-for-electoral-victories

Because the Chinese state doesn’t need Tiktok to spy on Americans. In the freewheeling, unregulated privacy “marketplace,” all that data is for sale — Chinese spies can just plunk down their credit-cards next to everyone else who buys our data and mobilizes it to compromise us, market to us, and stalk us.

Here’s the podcast episode:

https://craphound.com/news/2023/04/17/how-to-make-a-child-safe-tiktok/

And here’s a direct link to the MP3 (hosting courtesy of the Internet Archive; they’ll host your stuff for free, forever):

https://archive.org/download/Cory_Doctorow_Podcast_443/Cory_Doctorow_Podcast_443_-_How_To_Make_a_Child-Safe_TikTok.mp3

And here’s the direct RSS link for my podcast:

https://feeds.feedburner.com/doctorow_podcast

THIS IS THE LAST DAY for the Kickstarter campaign for the audiobook of my next novel, a post-cyberpunk anti-finance finance thriller about Silicon Valley scams called Red Team Blues. Amazon’s Audible refuses to carry my audiobooks because they’re DRM free, but crowdfunding makes them possible.

[Image ID: The exterior of a corporate office building, with the TikTok logo and wordmark over its revolving doors. From behind the revolving doors glares the hostile red eye of HAL9000 from Kubrick's '2001: A Space Odyssey.' In front of the doors is a 'you must be this tall to ride' amusement-park cutout of a boy with a bow-tie, holding out his arm to indicate the minimum required height.]

#pluralistic#age-gating#tiktok#coppa#surveillance#privacy#parenting#kids#rep buddy carter#pupillary dilation#podcasts

40 notes
