#synthetic data generation tools

Text

Looking for powerful synthetic data generation tools? Next Brain AI has the solutions you need to enhance your data capabilities and drive better insights.

0 notes

Text

I’ve been seeing debates on whether Hasbro should do a remake/continuation of G1’s cartoon. While most have said no for one reason or another, citing the poor reception to MOTU: R and the mixed reception to X-Men in particular, I’m more curious on the matter. While I agree we don’t need it, part of me really wants Hasbro to do it just to see what they’d do with it.

I’ve seen some point out there wouldn’t be toys to sell for it, but my rebuttal is that’s what Legacy/Prime Generations is for. Basically just have this hypothetical show be the WFC/PW equivalent. One MAJOR stipulation: it must be tonally in line with the original cartoon and Transformers Devastation. Make this an all ages, but especially kid friendly show. None of that nonsense PW/WFC did. In fact keep those writers away from it, bring in Simon Furman, Flint Dille & Bob Budiansky to throw in some sexy adjectives and be script supervisors/editors to the new staff.

As for the plot, it depends on what they’d do: full remake-AU or continuation. The latter would be simpler I think, just following up on what Galvatron and Zarak have been up to and the Autobots’/humanity’s reaction to it.

No matter what they’d do, I feel like Hasbro would insist on lite retcons that include the 13 Primes and their Relics, which in turn fuel Galvatron and Zarak’s ambitions. Meanwhile, Optimus and Hot Rod have shared premonitions about the history of the Prime lineage, revealing that in the cartoon universe the thirteen Primes were the prototypes the Quintessons developed after the Trans-Organics, with the Prime relics being Quintesson tools the Primes inherited after the Quints were driven off Cybertron. The Quints aren’t particularly happy their own tools are being uncovered, let alone seeing Galvatron using the Forge to upgrade his troops into Micromasters, Action Masters, & Pretenders. It becomes a race to see who collects the relics, with the Autobots determined to stop the Quintessons and Decepticons from abusing this ancient power. All the while, Solus Prime, Alchemist Prime and Quintus Prime are watching from the sidelines, the last survivors of the ancient Primes. And because Furman, there’d be a bit in here about Grimlock being a vessel for Onyx Prime temporarily, lol.

A clean slate AU could be done any number of ways, though my stipulation would be absolutely no Allspark plot. Instead, maybe combine elements of Dark of the Moon and Devastation, where the factions are looking for the Ferotaxxis, which possesses the data necessary to restore Cybertron by producing Synthetic Energon for whoever finds it first. The Ferotaxxis is unearthed by humans meanwhile, who study it and the unearthed Nova Prime, seeing a technological boom as far as the 80’s/90’s are concerned (similar to the Bay films and Sumdac Systems in Animated). Nova isn’t particularly pleased at being poked and prodded by what he deems a lazy inferior species, and like Bay’s Sentinel he concocts a scheme to screw over humanity and Optimus’ Autobots and secure the Ferotaxxis to gift Cybertron Earth’s energy. Because Cybertron is all that matters, the devil with anything that gets in the way of it.

The Autobots’ human friends would be Spike, Carly and Chip, the children of scientists and engineers working on Project O-Part; the O-Part, the Ferotaxxis, reacting to the Autobots’ and Decepticons’ presence on Earth.

The plot would then extend to the lineage of the 13 Primes and their relics, as they were things Nova and the Ferotaxxis were privy to, leading to the Autobots and the kids trying to stop the Decepticons from getting their hands on the relics, with another wrinkle being that some countries, like Carbombya, have already found some and won’t surrender them easily…

Like I said this concept can go any direction, but for a pivoted AU, this is just how I’d do it, going by what I assume Hasbro would still want with the 13 Primes being a component. Elements of Skybound would probably be here too, like Spike and Carly being those designs in particular.

But I think continuing where The Rebirth left off would be the better option, being the easiest to work with and with the already admittedly shoddy continuity of the G1 cartoon, you could pretty effortlessly add aspects of Skybound, IDW (and by that I mean characters like Nova, Rung, Rubble, Termagax, Three Fold Spark, etc) and the modern 13 lore.

Will they do it? If they’re desperate enough, absolutely, but I don’t know if we’re entirely there yet. It’s getting closer and closer though.

8 notes


Text

One interesting thing about XX century fiction is that people *believed* or conceived of artificial intelligence in a body much like ours. Of course there were the giant computers (I always chuckle when I read stuff like A COMPUTER THE SIZE OF A BUILDING... TO CONTROL A CITY'S TRAFFIC SYSTEM) but the general idea until at least the 80s was that artificial intelligences would be robots or androids, The Synthetic Man, an artificial electronic (or positronic, if you're old school) being with human or superhuman intelligence and yet looking mostly like a human being. Of course, there were lots of other concepts but that seemed to prevail. Even for example in the Animatrix, the "machines" were an underclass of human-like robots even if that doesn't make too much sense (it was to make hamfisted metaphors about slavery and racism, but I digress).

In any case, in the real world, making human-like robots that walk and can be mass produced has proved to be absurdly difficult. Meanwhile, things that were considered impossible, like chat bots holding quite humanlike conversations, are now a common reality. And the internet and the way we manage data has completely obliterated the idea of an AI with a discrete "body" like us. If an artificial intelligence arises, it will probably be a result of multiple arrays all over the internet, not one big computer becoming sentient, much less a single robot.

In a way it reminds me of early debates about human evolution. European researchers were completely sure that humans developed tool use and their brain evolved first and then that "forced" them to become bipedal, when in fact it was the opposite; australopithecines were already fully bipedal, and the development of the human brain and abstract thought came long after. In this case, it's something of the reverse: AIs are already accomplishing feats, like conversation or image analysis, that people have thought impossible for centuries, and yet widespread human-like robots are nowhere to be seen.

#cosas mias#I mean there are lots of robots and even more but we don't live in a star wars future where you can buy a used robot or have domestic robot#and we are far far from androids. but we do have chatbots and vtubers.#vtubers are human... but how long until one is actually an AI?

31 notes


Text

Top, Alicja Kwade, Parallelwelt (rot/schwarz), 2009, Lamps and mirror, 83 × 45 × 45 cm. Via. Bottom, Marijn van Kreij, Untitled, 2008, Orange, mirror and fake orange (acrylic, foam and tape), 13 x 16 x 30 cm. Via.

--

The luminous images of the mystics reveal a full and luminous extra consciousness on which shadows or nothingness have no hold. This is why the feeling, the consciousness of ‘being’ is expressed in images of light, and it is also why light, presence, plenitude are synonyms of ‘spirituality.’ Initiation means illumination, the dispersion of shadows, nothingness held off. Thus the plenitude that I felt was perhaps a little like mystic plenitude. It began with the feeling that space was emptying itself of its material heaviness, which explains the euphoric sense of relief that I felt. Notions were freed of their content. Objects became transparent, permeable; they were no longer obstacles and it seemed as if one could pass through them. It was as if my mind could move freely, as if there were no resistance to its movement. Thus my mind could find its center again, reunited, reassembled out of the matrixes and limits within which it had been dispersed. And it was from this moment on that the feeling of plenitude took hold.

Eugène Ionesco, from Present Past / Past Present, translated by Helen R. Lane (Da Capo Press, 1998). Via.

--

The woman dies. She dies to provide a plot twist. She dies to develop the narrative. She dies for cathartic effect. She dies because no one could think of what else to do with her. Dies because there weren’t any better story ideas around. Dies because her death was the very best idea that anyone could come up with ... And so, the woman dies. The woman dies so the man can be sad about it. The woman dies so the man can suffer. She dies to give him a destiny. Dies so he can fall to the dark side. Dies so he can lament her death. As he stands there, brimming with grief, brimming with life, the woman lies there in silence. The woman dies for him. We watch it happen. We read about it happening. We come to know it well.

Aoko Matsuda, from The Woman Dies, for GRANTA, translated by Polly Barton, November 2018. Via.

--

Once a week, Sun Kai has a video call with his mother. He opens up about work, the pressures he faces as a middle-aged man, and thoughts that he doesn’t even discuss with his wife. His mother will occasionally make a comment, like telling him to take care of himself—he’s her only child. But mostly, she just listens.

That’s because Sun’s mother died five years ago. And the person he’s talking to isn’t actually a person, but a digital replica he made of her—a moving image that can conduct basic conversations. They’ve been talking for a few years now.

After she died of a sudden illness in 2019, Sun wanted to find a way to keep their connection alive. So he turned to a team at Silicon Intelligence, an AI company based in Nanjing, China, that he cofounded in 2017. He provided them with a photo of her and some audio clips from their WeChat conversations. While the company was mostly focused on audio generation, the staff spent four months researching synthetic tools and generated an avatar with the data Sun provided. Then he was able to see and talk to a digital version of his mom via an app on his phone.

Zeyi Yang, from Deepfakes of your dead loved ones are a booming Chinese business - People are seeking help from AI-generated avatars to process their grief after a family member passes away, for MIT Technology Review, May 7, 2024.

3 notes


Photo

Einstein and Euler put to the test at the edge of the Universe

The cosmos is a unique laboratory for testing the laws of physics, in particular those of Euler and Einstein. Euler described the movements of celestial objects, while Einstein described the way in which celestial objects distort the Universe. Since the discovery of dark matter and the acceleration of the Universe’s expansion, the validity of their equations has been put to the test: are they capable of explaining these mysterious phenomena? A team from the University of Geneva (UNIGE) has developed the first method to find out. It considers a never-before-used measure: time distortion. The results are published in Nature Astronomy.

The theories of Leonhard Euler (1707-1783) and Albert Einstein (1879-1955) revolutionised our understanding of the Universe. With the famous equation that bears his name, Euler gave scientists a powerful tool for calculating the movements of galaxies in the Universe. With his theory of general relativity, Einstein demonstrated that the Universe is not a static framework: it can be distorted by star clusters and galaxies.

Physicists have tested these equations in all sorts of ways, which have so far proved successful. However, two discoveries continue to put these models to the test: the acceleration of the Universe’s expansion and the existence of invisible dark matter, which is thought to account for 85% of all matter in the cosmos. Do these mysterious phenomena still obey the equations of Einstein and Euler? Researchers are still unable to answer this question.

The missing ingredient

"The problem is that current cosmological data do not allow us to differentiate between a theory that breaks Einstein’s equations and one that breaks Euler’s equation. This is what we demonstrate in our study. We also present a mathematical method for solving this problem. This is the culmination of ten years of research", explains Camille Bonvin, associate professor in the Department of Theoretical Physics in the UNIGE Faculty of Science and first author of the study.

Researchers were unable to differentiate between the validity of these two equations at the very edge of the Universe because they were missing an "ingredient": the measurement of time distortion. "Until then, we only knew how to measure the speed of celestial objects and the sum of the distortion of time and space. We have developed a method for accessing this additional measurement, and it’s a first", says Camille Bonvin.

If the time distortion is not equal to the sum of time and space - i.e. the result produced by the theory of general relativity - this means that Einstein’s model does not work. If the time distortion does not correspond to the speed of the galaxies calculated with the Euler equation, this means that the latter is not valid. "This will allow us to discover whether new forces or matter, which violate these two theories, exist in the Universe", explains Levon Pogosian, professor in the Department of Physics at Simon Fraser University, in Canada, and co-author of the study.

Reality check

These results will make a crucial contribution to several missions whose aim is to determine the origin of the accelerated expansion of the Universe and the nature of dark matter. These include the EUCLID space telescope, which will be launched in July 2023 by the European Space Agency (ESA), in collaboration with the UNIGE, and the Dark Energy Spectroscopic Instrument (DESI), which began its 5-year mission in 2021 in Arizona. There is also the international SKA (Square Kilometre Array) giant radio telescope project in South Africa and Australia, which will begin observations in 2028/29.

"Our method will be integrated into these different missions. This is already the case for DESI, of which we have become external collaborators thanks to this research", Camille Bonvin enthuses. The research team has successfully tested its model on synthetic catalogues of galaxies. The next stage will involve testing it using the first data supplied by DESI, as well as identifying the obstacles and minimising the systematic features that could hamper its application.

IMAGE: The SMACS 0723 galaxy cluster detected by the James Webb telescope, home to thousands of galaxies including the faintest objects ever observed in the infrared. © NASA, ESA, CSA, STScI

16 notes


Text

Latest Technology Trends

3 New Inventions That Will Change The World

1. Commercial nuclear fusion power

Nuclear fusion, in its most common form, is the process of energy being released when bits (“atomic nuclei”, if you’re fancy) of hydrogen are exposed to extreme heat and combined. This process releases massive amounts of energy, which humanity is increasingly hungry for. That’s how the sun works too, by the way.

Several countries have heavily invested in fusion research, and private companies are also conducting their own trials. The ITER reactor, which is under construction in France and due to begin operation in 2026, is the first reactor that should produce energy-positive fusion; but dozens of others are being built.


2. 4D printing

The name 4D printing can lead to confusion: I am not implying that humanity will be able to create and access another dimension. Put simply, a 4D-printed product is a 3D-printed object which can change properties when a specific stimulus is applied (submerged underwater, heated, shaken, not stirred…). The 4th Dimension is therefore Smart Materials.

The key challenge of this technology is obviously finding the relevant “smart material” for all types of uses (namely a hydrogel or a shape memory polymer for the time being). Some work is being done in this space, but we’re not close to being customer-ready, having yet to master reversible changes of certain materials.

The applications are still being discussed, but some very promising industries include healthcare (pills that activate only if the body reaches a certain temperature), fashion (clothes that become tighter in cold temperatures or shoes that improve grip under wet conditions), and homemaking (furniture that becomes rigid under a certain stimulus). Another cool use case is computational folding, wherein objects larger than printers can be printed as only one part.

3. Generative design AI

Generative AI technology uses deep learning to generate creative assets such as videos, images, text and music. This technology is no longer new since it entered the mainstream in late 2022. While you may have played with (and enjoyed!) the likes of ChatGPT and Midjourney, they’re barely more than surface-level distractions.

Tom Cruise riding a t-rex in Hogwarts

Corporate use for generative AI is far more sophisticated. If used to its full extent, it will reduce product-development life cycle time, design drugs in months instead of years, compose entirely new materials, generate synthetic data, optimize parts design, automate creativity… In fact, experts predict that by 2025, 30% of outbound marketing messages from large organizations will be synthetically generated, and by 2030, a major blockbuster film will be released with 90% of the film generated by AI.

Going beyond the most headline-grabbing use cases, studies have shown that Gen. AI increases productivity for a variety of tasks, with specific benefits for low-ability workers and less experienced employees. Put simply, these tools will level the playing field.

This is happening today, and will continue to happen, with increasing success, over the coming decade. That is, if we can navigate the many risks associated with generative AI. I’m particularly worried about deep fakes, copyright issues, and malicious uses for fake news.

#inventions#newinventions#newtechbasedinventions#techhub#inventologyhub#technews#newtechs#technology#Youtube

2 notes


Text

What is the Easiest Engineering?

Engineering is a challenging major that requires an intense commitment. It requires a strong work ethic and a lot of study time, which can be difficult for first-year students to adjust to. If you’re interested in getting a college degree in engineering but you don’t have the time or dedication to pursue it, there are easier options available. These degrees will have fewer requirements, but they still require a significant amount of work.

Aerospace engineering is a field that uses your ingenuity, computer skills and maths to design airplanes, helicopters and drones. It also plays a key role in keeping the sky safe for commercial and private air travel. It is an excellent career choice for those who love to work with their hands and solve problems. It is a rapidly growing industry, and many people find it exciting.

The BLS notes that a background in physics, mathematics and materials science is critical to success. The field is also evolving to incorporate more computer coding, with advanced system software playing an increasingly important role in aircraft communication and data collection.

If you’re looking to make a difference in the world, chemical engineering could be the perfect career for you. These engineers create the processes that enable us to turn raw materials into products we use every day - such as petrol, plastics and synthetic fibres - in ways that are both cost-effective and environmentally friendly.

As a chemical engineer, you’ll need to have strong maths and chemistry skills, as well as an interest in science. A bachelor’s degree is usually enough to start working as a chemical engineer, although some employers prefer to employ applicants who have a master’s degree.

Chemical engineers design equipment and systems for combining ingredients to make the various products that people use in their daily lives, such as fuels, chemicals, medicines and food. They also work to control manufacturing processes so that each product meets the needs of consumers, regulators and other stakeholders.

Electrical engineering is a field that uses physics, electronics and electromagnetism to design, develop and test devices and equipment that use or generate electricity. This includes everything from computers and phones to transportation and energy.

This career requires an understanding of the physics and electronics that power these systems, but it also involves communication, problem-solving and teamwork skills. It’s not for everyone, but it can be an exciting and rewarding career if you’re interested in science and technology and solving problems that have a real-world impact on people and the environment.

There are a variety of specialisations within this field, including telecommunications, instrumentation, and power engineering. The majority of jobs are office-based, but some work on site to monitor electrical systems or oversee the installation of new technologies.

Industrial engineering focuses on optimizing processes and designing systems that save money, time, raw materials and human-power while meeting safety standards. This is a specialized branch of engineering that also incorporates knowledge of math, physics and social sciences.

As an industrial engineer, you can find yourself designing or evaluating everything from factories and hospitals to amusement parks and medical facilities. You are responsible for making recommendations about how to best improve operations to increase efficiency.

Industrial engineers use a variety of computer simulation and design tools to map and analyze systems and processes ready for optimization. They often work closely with data science and machine learning to unite people, machines, information and energy into better practices.

7 notes


Text

Murderbot Diaries x LANCER crossover

What If the mech manufacturers from the table top role playing game Lancer made SecUnits from the book series The Murderbot Diaries by Martha Wells?

Unless otherwise stated, assume each SecUnit adheres to The Company Standard: Integrated ranged energy weapons in the arms, identity concealing body armor, wireless and physical data ports, genetically blended human derived organic components, power system instead of a digestive tract, high speed tactical semi-organic neural networked processor, Cardio-Hydraulics, Governor Module to ensure compliance, decent combat and tactical training but rudimentary education on everything else. Available to rent at a distribution center, to which it must be returned after completing a client-contract.

General Massive Systems: Anyone can print out a GMS SecUnit using a modified fabricator (for free) but no one can actually control it. It does not have a governor module and is allowed to balance the needs of the client against external factors when making judgements. Upon manufacture, it is assigned a randomly generated name and personality traits. When allowed to express itself, detectable aggression (decibels of vocalizations, facial contortions) from both clients and hostiles has been reduced by 10-20%. After completing its first contract, the Unit is allowed to choose between staying with the client, returning to a distribution center, or going wandering.

InterPlanetaryShipping-Northstar: Reinforced caps on its knuckles, knees, and elbows for close quarters combat. Integrated low-grav propulsion systems in its arms, legs, and body armor. IPS-N SecUnits also have an emergency mode allowing them to operate exposed to hard vacuum environments for several hours. Governor module is inactive by default but can be turned on by authorized individuals. Trained to always think of itself as a component of a ship and a member of a crew, and to place the survival of both over its own.

Smith-Shimano Corporation: Well trained and educated on various subjects. Upgraded between contracts to ensure a slight superiority over other SecUnits and augmented security contractors that are available on the local market. Body Armor is practical, high quality, with ornate detailing that can’t be easily replicated. You’d expect it to be arrogant or haughty because it’s a top-quality synthetic being but instead it’ll always treat you with respect and patience, perhaps because it pities you. Clients are charged extra for any damage they might inflict on their own rented Unit(s).

HORUS: The only way to get a HORUS SecUnit is to first find a secretive HORUS Cult-Cell and then offer its members the right kind of bargain, and if they like you enough, you might borrow their strange model of SecUnit. Integrated weapons/tools are asymmetrically located throughout its body, seemingly chosen at random but somehow always useful. Collated surveys from various outside organizations have shown that a fifth of documented HORUS-made SecUnits have paracausal material integrated in their bodies, giving these special ones a near-supernatural ability. The underlying logic for which units receive this upgrade and what that specific ability is has yet to be confidently determined. Obviously, none have a governor module. Its body armor and uniform will resemble those worn by local factions with a few details just slightly off.

Harrison Armory: Can operate without a recharge and at higher capacity far longer than any other model mentioned above. It can also overcharge its integrated energy weapons so much it can burn through military grade armor plating (for about 12.09 seconds). Doing so will not damage the Unit’s arms but it will require connection to a power source within an hour or suffer involuntary system shutdown. Harrison Armory SecUnits are used throughout the Purview to protect industries and population centers from terrorists. The Armory will also ship SecUnits in bulk to prop up the armed forces of “trusted” subsidiaries beyond the Purview.

#the murderbot diaries#lancer rpg#world building#SecUnit#SecUnits#mech#mechs#transhumanism#sci-fi#scifi#AU#synthetic beings#alternate origins#alternate universe#murderbot diaries#crossover#ttrpg#transhuman#HORUS#Harrison Armory#IPS-N#GMS#SSC#murderbot

15 notes


Quote

What we need is regulation that enforces transparency. Not only should it always be clear when we are encountering synthetic media, but organizations building these systems should also be required to document and disclose the training data and model architectures. The onus of creating tools that are safe to use should be on the companies that build and deploy generative systems, which means that builders of these systems should be made accountable for the outputs produced by their products. While we agree that "such decisions must not be delegated to unelected tech leaders," we also note that such decisions should not be up to the academics experiencing an "AI summer," who are largely financially beholden to Silicon Valley. Those most impacted by AI systems, the immigrants subjected to "digital border walls," the women being forced to wear specific clothing, the workers experiencing PTSD while filtering outputs of generative systems, the artists seeing their work stolen for corporate profit, and the gig workers struggling to pay their bills should have a say in this conversation.

Statement from the listed authors of Stochastic Parrots on the “AI pause” letter

6 notes


Text

i made a box thats a rng script tht reads from a notecard .. the contents of the notecard is a transcription of a large chunk of me and lady cpu’s buzzword codex.. i use this rng tool to generate hacks and spells for upcoming vn “precious theatre!”

heres a bunch of hacks i generated tonight:

WARP ORANGE INTENTION TRANSFER

CLOVER SMARTBOARD PEDESTAL

PICTURE TEMPLE CUT FILM

COSMIC PRINCESS PLUSH DIRK

EMBLEM FUNCTION TUCAN YURI

MAGNETIC OVERCLOCK CROSS AVATAR

PELICAN GENDER RING

CYPRUS CIRCUIT BOARD

small queen nerd vampire

petal mountain webisode hole

girl sewer message district

sweet bodydata ribbon modify

cross symbol award twin

secretary cookie owl

temporary satellite palace

psychic moral tunnel

primitive backend quill bible

denpa princess princess spirit imitation

ROCK STAR TAPE VISION WEDDING TUTOR

naughty cheatcode bridge reaper

strong pudding

rock mod nemesis

student council sharpie jpg

pelican hpunch faceup

super movement needle actor

vibrator zapdos power-faith

hentai mega sewing diciple

dekbass knowledge crybaby

invisibility bomb slipper

shit religion ideal fruit post

rescue helper chocolate hack space

familiar/special storm

spirit momo x

frilly seagull church

nu crayon textbox

dark loveletter

painkiller rom mystic

harddrive power brother

amy rose punishment operating system

investigation: snowflake recovery guide

media practice moral chant!

complex tuna ritual-potion

summon bff

%slowburn

secretary camera ego

d-pad emerald ego

dekbass angel estrogen

murder million daughter loop

crime beta sewing mecha

!! scripted agent investigation email !!

!! frequency flunk project !!

sutro topaz dimension

!! meow emblem !!

student council desktop

3rd party recovery effect

yaoi society cloud

feral fanclub callcenter

time travel defense blueprint

cosmic elevator clown

chocolate vibrator intention

login mystic snowboard

!! rollcall repost !!

condition mp3

text tournament

gem maiden

vn tunnel

paw tea

slowburn idol

pacify mmd marble

glove? broken leek

probation reboot pencil case

fact check avatar

sexy elephant

cockroach dungeon

dungeon vaccine

processing twin cannabis

familiar dictionary record token

dual sculpture justice

sister class: strange

dissonance leek

garden lamb chibi

peer to peer mouse cell phone

hairclip design botfarm

psyduck million ancestor

tutu dark dissonance

construction paranoia snake

luxury cola

mage primitive

weird doujin eggman dashboard ceremony

!! targeted individual dizzy hypno idol !!

location controller

lament phenomena

permanent copy actress

sign up prize

sister user motion data

synthetic grape vehicle

tucan password trial

familiar lesbian

technique password

custard dungeon

mount murder

equal exception hentai

hope: lesbian space program gala

dual weird lament wink

permanent paintbrush

secretary wish picture

vn boost

fur alpha

friendship picture projection errand

diamond os

leak email

nvl palmtree diagnosis

mother frog hack

alpha mask roll call

perfect frozen reciever doll

sonic committee lunch

emerald rng blender

pray scarlette serpent

shop mammoth apophenia

president dragon teto

dashboard 5g poet

violin codex frill

silver non-network

codex technique theory

quit invisibility org

virtual bomb novel

!! studio alphabet doki !!

lucky app

satellite d-pad technique

repost water divide

interplanetary pretty pigeon

paranoia bodyguard

mango fasshion

fishing tunnel administrator

dragon gallery tiger

mega mantra crow

cylinder hud

bully doily melon cousin

cave yaoi special

royal project library

cpu charm palace

permanent rockstar hammer

disturbing breakfast

profile satan haha brother

dressup book advantage

organic message board alien

mayday stream manifest synthetic court justice

big crow theory snake font recovery

vacccine alien demonic intention chapter

hpunch hpunch turn virtual passion bgm trap

yaye strawberry / order pdf chain invisibility
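a rough python sketch of how an rng box like the one described at the top of this post might work. this is not the actual script: the function names, the one-word-per-line notecard layout, and the 1 to 7 word length range are all assumptions for illustration. words are drawn with replacement, since some of the hacks above repeat a word.

```python
import random


def load_words(notecard_text):
    """Return one buzzword per non-empty line (assumed notecard layout)."""
    return [line.strip() for line in notecard_text.splitlines() if line.strip()]


def generate_hack(words, rng, min_len=1, max_len=7):
    """Join a random number of words, drawn with replacement, into one phrase."""
    count = rng.randint(min_len, max_len)
    return " ".join(rng.choices(words, k=count))


# Tiny stand-in notecard; the real codex is much larger.
NOTECARD = """
pelican
codex
dungeon
emerald
satellite
"""

if __name__ == "__main__":
    rng = random.Random()  # pass a seed here to get repeatable output
    words = load_words(NOTECARD)
    for _ in range(5):
        print(generate_hack(words, rng))
```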

7 notes


Text

Synthetic data offers an innovative approach to training machine learning models without compromising privacy. Discover its benefits and limitations in this comprehensive guide.

#Synthetic Data#Synthetic Data in Machine Learning#AI Generated Synthetic Data#synthetic data generation#synthetic data generation tools

0 notes

Text

Essential Skills for Testing Applications in Different Environments

Testing applications in different environments requires a diverse set of skills to ensure the software performs well under various conditions and configurations. Here are the essential skills needed for this task:

1. Understanding of Different Environments

Development, Staging, and Production: Knowledge of the differences between development, staging, and production environments, and the purpose of each.

Configuration Management: Understanding how to configure and manage different environments, including handling environment-specific settings and secrets.

2. Test Planning and Strategy

Test Plan Creation: Ability to create comprehensive test plans that cover different environments.

Environment-specific Test Cases: Designing test cases that take into account the specific characteristics and constraints of each environment.

3. Automation Skills

Automated Testing Tools: Proficiency with automated testing tools like Selenium, JUnit, TestNG, or Cypress.

Continuous Integration/Continuous Deployment (CI/CD): Experience with CI/CD tools like Jenkins, GitLab CI, or Travis CI to automate the testing process across different environments.

4. Configuration Management Tools

Infrastructure as Code (IaC): Familiarity with IaC tools like Terraform, Ansible, or CloudFormation to manage and configure environments consistently.

Containerization: Knowledge of Docker and Kubernetes for creating consistent and isolated testing environments.

5. Version Control Systems

Git: Proficiency in using Git for version control, including branching, merging, and handling environment-specific code changes.

6. Test Data Management

Data Masking and Anonymization: Skills in anonymizing sensitive data for testing purposes.

Synthetic Data Generation: Ability to create synthetic test data that mimics real-world scenarios.

7. Performance Testing

Load Testing: Experience with load testing tools like JMeter, LoadRunner, or Gatling to assess performance under different conditions.

Stress Testing: Ability to perform stress testing to determine the application's breaking point.

8. Security Testing

Vulnerability Scanning: Knowledge of tools like OWASP ZAP, Burp Suite, or Nessus for identifying security vulnerabilities in different environments.

Penetration Testing: Skills in conducting penetration tests to assess security risks.

9. Cross-Browser and Cross-Device Testing

Browser Testing: Proficiency with tools like BrowserStack or Sauce Labs for testing across different browsers.

Device Testing: Experience with testing on different devices and operating systems to ensure compatibility.

10. API Testing

API Testing Tools: Experience with tools like Postman, SoapUI, or RestAssured for testing APIs.

Contract Testing: Knowledge of contract testing frameworks like Pact to ensure consistent API behavior across environments.

11. Monitoring and Logging

Monitoring Tools: Familiarity with monitoring tools like Prometheus, Grafana, or New Relic to observe application performance and health in different environments.

Log Management: Skills in using log management tools like ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk for troubleshooting and analysis.

12. Soft Skills

Attention to Detail: Meticulous attention to detail to identify environment-specific issues.

Problem-solving: Strong problem-solving skills to troubleshoot and resolve issues quickly.

Collaboration: Ability to work effectively with development, operations, and product teams to manage and troubleshoot environment-related issues.

Practical Steps for Testing in Different Environments

Environment Setup:

Define the infrastructure and configuration needed for each environment.

Use IaC tools to automate environment setup and teardown.

Configuration Management:

Manage environment-specific configurations and secrets securely.

Use tools like Consul or Vault for managing secrets.
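As a rough illustration of keeping environment-specific settings and secrets out of the code, the sketch below reads them from environment variables. The variable names (APP_ENV, DB_PASSWORD), the URLs, and the Settings class are assumptions made up for this example; a secrets manager such as Vault would typically be what places the secret into the process environment.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    env: str
    base_url: str
    db_password: str


# Hypothetical per-environment defaults; real values depend on your own setup.
BASE_URLS = {
    "development": "http://localhost:8000",
    "staging": "https://staging.example.test",
    "production": "https://app.example.test",
}


def load_settings(environ=None):
    """Build settings from environment variables, failing fast on bad input."""
    environ = os.environ if environ is None else environ
    env = environ.get("APP_ENV", "development")
    if env not in BASE_URLS:
        raise ValueError(f"unknown environment: {env!r}")
    # The secret is read from the environment, never hard-coded in the repo.
    password = environ.get("DB_PASSWORD")
    if password is None:
        raise KeyError("DB_PASSWORD is not set")
    return Settings(env=env, base_url=BASE_URLS[env], db_password=password)
```

Passing a plain dictionary instead of os.environ makes the loader easy to exercise in unit tests for each environment.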

Automate Testing:

Integrate automated tests into your CI/CD pipeline.

Ensure tests are run in all environments as part of the deployment process.

Test Data Management:

Use consistent and reliable test data across all environments.

Implement data seeding or generation scripts as part of your environment setup.
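A data seeding script along these lines could look like the following minimal sketch. The record fields and the make_users helper are hypothetical; the point is only that a fixed seed yields identical test data in every environment.

```python
import random


def make_users(count, seed=42):
    """Generate reproducible fake user records for tests."""
    rng = random.Random(seed)  # local generator, so global random state is untouched
    users = []
    for i in range(count):
        users.append({
            "id": i + 1,
            "email": f"user{i + 1}@example.test",
            "age": rng.randint(18, 90),
            "plan": rng.choice(["free", "pro", "enterprise"]),
        })
    return users


if __name__ == "__main__":
    for user in make_users(3):
        print(user)
```

Because the generator is seeded, running the script during environment setup gives development, staging, and CI the same records without copying any production data.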

Performance and Security Testing:

Conduct regular performance and security tests in staging and production-like environments.

Monitor application performance and security continuously.

Sun Technologies has testers who have the above listed skills to ensure that applications are robust, secure, and performant across different environments, leading to higher quality software and better user experiences. Contact us to get a free assessment of CI/CD automation opportunity that you can activate using Sun Technologies’ Testing Center-of-Excellence (CoE).

#api#testingapplication#automation#ci/cd#infrastructureasCode#containerization#loadtesting#securitytesting#performancetesting

0 notes

Text

Test Information Management Software Market Strategies for Growth, Segmentation, and Market Overview by 2024-2031

The "Test Information Management Software Market" is a dynamic and rapidly evolving sector, with significant advancements and growth anticipated by 2031. Comprehensive market research reveals a detailed analysis of market size, share, and trends, providing valuable insights into its expansion. This report delves into segmentation and definition, offering a clear understanding of market components and drivers. Employing SWOT and PESTEL analyses, the study evaluates the market's strengths, weaknesses, opportunities, and threats, alongside political, economic, social, technological, environmental, and legal factors. Expert opinions and recent developments highlight the geographical distribution and forecast the market's trajectory, ensuring a robust foundation for strategic planning and investment.

What is the projected market size & growth rate of the Test Information Management Software Market?

Market Analysis and Size

Test management tools enable teams to manage requirements, test cases, test execution, defect tracking, and reporting, to monitor metrics, and to integrate with third-party test data management and bug tracking tools. These tools let teams focus more on developing better software.

Data Bridge Market Research estimates that the test information management software market, valued at USD 1.1 billion in 2021, will reach USD 2.57 billion by 2029, a CAGR of 11.2% over the forecast period 2022 to 2029. In addition to insights on market scenarios such as market value, growth rate, segmentation, geographical coverage, and major players, the market reports curated by Data Bridge Market Research also include in-depth expert analysis, patient epidemiology, pipeline analysis, pricing analysis, and regulatory framework.
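The quoted growth rate can be sanity-checked from the two market values. The sketch below assumes eight compounding years (2021 to 2029); the cagr helper is written for this example and is not taken from the report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# USD 1.1 billion in 2021 growing to USD 2.57 billion by 2029
rate = cagr(1.1, 2.57, 2029 - 2021)
print(f"{rate:.1%}")  # prints 11.2%
```

Under that assumption the two endpoints and the stated 11.2% CAGR agree with each other.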

Browse Detailed TOC, Tables and Figures with Charts which is spread across 350 Pages that provides exclusive data, information, vital statistics, trends, and competitive landscape details in this niche sector.

This research report is the result of an extensive primary and secondary research effort into the Test Information Management Software market. It provides a thorough overview of the market's current and future objectives, along with a competitive analysis of the industry, broken down by application, type and regional trends. It also provides a dashboard overview of the past and present performance of leading companies. A variety of methodologies and analyses are used in the research to ensure accurate and comprehensive information about the Test Information Management Software Market.

Get a Sample PDF of Report - https://www.databridgemarketresearch.com/request-a-sample/?dbmr=global-test-information-management-software-market

Which are the driving factors of the Test Information Management Software market?

The driving factors of the Test Information Management Software market include technological advancements that enhance product efficiency and user experience, increasing consumer demand driven by changing lifestyle preferences, and favorable government regulations and policies that support market growth. Additionally, rising investment in research and development and the expanding application scope of Test Information Management Software across various industries further propel market expansion.

Test Information Management Software Market - Competitive and Segmentation Analysis:

Global Test Information Management Software Market, By Type (Cloud Based and Web Based), Application (Data Subsetting, Data Masking, Data Profiling and Analysis, Data Compliance and Security, Synthetic Test Data Generation), Pricing (Monthly, Yearly, and One-Time License), Vertical (BFSI, IT and Telecom, Manufacturing, Energy and Utilities, Healthcare and Life Sciences, Education, Aerospace and Defense, and Others), Organization Size (Small and Medium-sized Enterprises (SMEs), Large enterprises) – Industry Trends and Forecast to 2031.

How do you determine the list of the key players included in the report?

With the aim of clearly revealing the competitive situation of the industry, we concretely analyze not only the leading enterprises that have a voice on a global scale, but also the regional small and medium-sized companies that play key roles and have plenty of potential growth.

Which are the top companies operating in the Test Information Management Software market?

Some of the major players operating in the test information management software market are:

IBM (U.S.)

NATIONAL INSTRUMENTS CORP. (U.S.)

SmartBear Software (U.S.)

Micro Focus (U.K.)

Jama Software (U.S.)

XQual (France)

Adaptavist (U.K.)

Tricentis (U.S.)

H.S. PractiTest (Israel)

Plutora (U.S.)

Inflectra Corporation (U.S.)

Test Collab (Canada)

OCCYGEN, LLC. (U.S.)

Meliora Ltd. (Finland)

Short Description About Test Information Management Software Market:

The Global Test Information Management Software market is anticipated to rise at a considerable rate during the forecast period, between 2024 and 2031. In 2023, the market is growing at a steady rate and with the rising adoption of strategies by key players, the market is expected to rise over the projected horizon.

North America, especially the United States, will continue to play an important role that cannot be ignored. Any changes in the United States might affect the development trend of Test Information Management Software. The market in North America is expected to grow considerably during the forecast period. The high adoption of advanced technology and the presence of large players in this region are likely to create ample growth opportunities for the market.

Europe also plays an important role in the global market, with strong CAGR growth during the forecast period 2024-2031.

The Test Information Management Software market size is projected to reach multimillion USD by 2031, compared with 2024, at an unexpected CAGR during 2024-2031.

Despite intense competition, the global recovery trend is clear, investors remain optimistic about this area, and more new investment is expected to enter the field in the future.

This report focuses on the Test Information Management Software in global market, especially in North America, Europe and Asia-Pacific, South America, Middle East and Africa. This report categorizes the market based on manufacturers, regions, type and application.

Get a Sample Copy of the Test Information Management Software Report 2024

What are your main data sources?

Both Primary and Secondary data sources are being used while compiling the report. Primary sources include extensive interviews of key opinion leaders and industry experts (such as experienced front-line staff, directors, CEOs, and marketing executives), downstream distributors, as well as end-users. Secondary sources include the research of the annual and financial reports of the top companies, public files, new journals, etc. We also cooperate with some third-party databases.

Geographically, the detailed analysis of consumption, revenue, market share and growth rate, historical data and forecast (2024-2031) of the following regions are covered in Chapters

What are the key regions in the global Test Information Management Software market?

North America (United States, Canada and Mexico)

Europe (Germany, UK, France, Italy, Russia and Turkey etc.)

Asia-Pacific (China, Japan, Korea, India, Australia, Indonesia, Thailand, Philippines, Malaysia and Vietnam)

South America (Brazil, Argentina, Colombia etc.)

Middle East and Africa (Saudi Arabia, UAE, Egypt, Nigeria and South Africa)

This Test Information Management Software Market Research/Analysis Report Contains Answers to your following Questions

What are the global trends in the Test Information Management Software market?

Would the market witness an increase or decline in the demand in the coming years?

What is the estimated demand for different types of products in Test Information Management Software?

What are the upcoming industry applications and trends for Test Information Management Software market?

What Are Projections of Global Test Information Management Software Industry Considering Capacity, Production and Production Value? What Will Be the Estimation of Cost and Profit? What Will Be Market Share, Supply and Consumption? What about Import and Export?

Where will the strategic developments take the industry in the mid to long-term?

What are the factors contributing to the final price of Test Information Management Software?

What are the raw materials used for Test Information Management Software manufacturing?

How big is the opportunity for the Test Information Management Software market?

How will the increasing adoption of Test Information Management Software for mining impact the growth rate of the overall market?

How much is the global Test Information Management Software market worth? What was the value of the market In 2020?

Who are the major players operating in the Test Information Management Software market? Which companies are the front runners?

Which are the recent industry trends that can be implemented to generate additional revenue streams?

What Should Be Entry Strategies, Countermeasures to Economic Impact, and Marketing Channels for Test Information Management Software Industry?

Customization of the Report

Can I modify the scope of the report and customize it to suit my requirements? Yes. Customized requirements of multi-dimensional, deep-level and high-quality can help our customers precisely grasp market opportunities, effortlessly confront market challenges, properly formulate market strategies and act promptly, thus to win them sufficient time and space for market competition.

Inquire more and share questions if any before the purchase on this report at - https://www.databridgemarketresearch.com/inquire-before-buying/?dbmr=global-test-information-management-software-market

Detailed TOC of Global Test Information Management Software Market Insights and Forecast to 2031

Introduction

Market Segmentation

Executive Summary

Premium Insights

Market Overview

Test Information Management Software Market By Type

Test Information Management Software Market By Function

Test Information Management Software Market By Material

Test Information Management Software Market By End User

Test Information Management Software Market By Region

Test Information Management Software Market: Company Landscape

SWOT Analysis

Company Profiles

Continued...

Purchase this report – https://www.databridgemarketresearch.com/checkout/buy/singleuser/global-test-information-management-software-market

Data Bridge Market Research:

Today's trends are a great way to predict future events!

Data Bridge Market Research is a market research and consulting company that stands out for its innovative and distinctive approach, as well as its unmatched resilience and integrated methods. We are dedicated to identifying the best market opportunities, and providing insightful information that will help your business thrive in the marketplace. Data Bridge offers tailored solutions to complex business challenges. This facilitates a smooth decision-making process. Data Bridge was founded in Pune in 2015. It is the product of deep wisdom and experience.

Contact Us:

Data Bridge Market Research

US: +1 614 591 3140

UK: +44 845 154 9652

APAC: +653 1251 975

Email:- [email protected]

Browse More Reports:

Global Ostomy Drainage Bags Market - Industry Trends and Forecast to 2029

Global Enteric Disease Testing-Treatment Market – Industry Trends and Forecast to 2029

Global Emergency Shutdown Systems Market - Industry Trends and Forecast to 2029

Global Test Information Management Software Market – Industry Trends and Forecast to 2029

Global DIP Microcontroller Socket Market – Industry Trends and Forecast to 2028

#Test Information Management Software Market#Test Information Management Software Market Size#Test Information Management Software Market Share#Test Information Management Software Market Trends#Test Information Management Software Market Growth#Test Information Management Software Market Analysis#Test Information Management Software Market Scope & Opportunity#Test Information Management Software Market Challenges#Test Information Management Software Market Dynamics & Opportunities

0 notes

Text

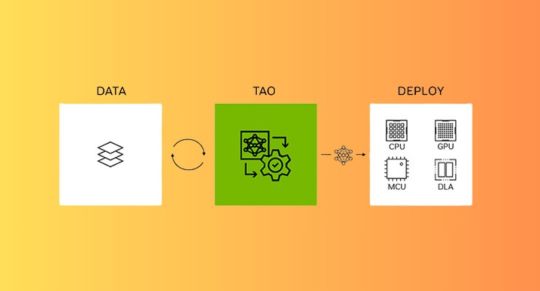

MediaTek NeuroPilot SDK Develops AI Faster with NVIDIA TAO

MediaTek NeuroPilot SDK

As part of its edge inference silicon plan, MediaTek stated at COMPUTEX 2024 that it will integrate NVIDIA TAO with MediaTek’s NeuroPilot SDK. With MediaTek’s support for NVIDIA TAO, developers will have an easy time integrating cutting-edge AI capabilities into a wide range of IoT applications that utilise the company’s state-of-the-art silicon. This will assist businesses of all sizes in realising edge AI’s promise in a variety of IoT verticals, such as smart manufacturing, smart retail, smart cities, transportation, and healthcare.

MediaTek’s NeuroPilot SDK Edge AI Applications

MediaTek created the extensive NeuroPilot SDK software toolkit and APIs to simplify the creation and implementation of AI applications on hardware that uses their chipsets. What NeuroPilot SDK has to provide is broken down as follows:

Emphasis on Edge AI

NeuroPilot SDK is based on the idea of “Edge AI,” which entails processing AI data locally on the device rather than on external servers. Better privacy, quicker reaction times, and less dependency on internet access are all made possible by this.

Efficiency is Critical

MediaTek’s NeuroPilot SDK is designed to maximise the efficiency of AI applications running on their hardware platforms, especially on their mobile System-on-Chip (SoC) processors. This results in longer battery life and more seamless operation for AI-powered functions on gadgets.

Agnostic towards Framework

NeuroPilot SDK is made to be independent of the underlying AI framework, even though it may include features unique to MediaTek hardware. For their apps, developers can use custom models or well-known frameworks like TensorFlow Lite thanks to this.

Tools and Resources for Development

The NeuroPilot SDK offers a wide range of tools and resources to developers, such as:

APIs for utilising MediaTek smartphones’ AI hardware.

Tutorials and sample code to get started.

Integration with various IDEs to facilitate a smooth workflow for development environments.

Support and documentation to help developers along the way.

Latest Happenings

MediaTek declared in June 2024 that NeuroPilot SDK and NVIDIA’s TAO Toolkit are now integrated. Through the following, this collaboration seeks to further expedite development:

Pre-trained AI models

A massive library of pre-trained AI models for picture recognition and object detection saves developers time and effort.

Model optimization tools

Tools for fine-tuning pre-trained models for particular use cases and maximising their performance on MediaTek hardware.

In general, MediaTek’s NeuroPilot SDK enables developers to design strong and effective AI apps for a variety of edge devices, such as wearables, smart home appliances, industrial machinery, and smartphones. NeuroPilot wants to be a major force behind Edge AI in the future by emphasising efficiency, ease of development, and a developing tool ecosystem.

NVIDIA TAO Tutorial

The NVIDIA TAO Toolkit: What Is It?

With transfer learning, you may expedite the development process and generate AI/machine learning models without requiring a horde of data scientists or mounds of data. With the help of this effective method, learning features from an existing neural network model may be instantaneously transferred to a newly customised one.

Based on TensorFlow and PyTorch, the open-source NVIDIA TAO Toolkit leverages the power of transfer learning to streamline the model training procedure and optimise the model for throughput of inference on nearly any platform. The outcome is a workflow that is extremely efficient. Utilise pre-trained or custom models, modify them for your own actual or synthetic data, and then maximise throughput for inference. All without the requirement for substantial training datasets or AI competence.

Principal Advantages

Train Models Efficiently

To save time and effort on manual tweaking, take advantage of TAO Toolkit’s AutoML feature.

Construct a Very Accurate AI

To build extremely accurate and unique AI models for your use case, leverage NVIDIA pretrained models and SOTA vision transformer.

Aim for Inference Optimisation

Beyond customisation, by optimising the model for inference, you can gain up to 4X performance.

Implement on Any Device

Install optimised models on MCUs, CPUs, GPUs, and other hardware.

A Top-Notch Solution for Your Vital AI Mission

As a component of NVIDIA AI Enterprise, an enterprise-ready AI software platform, NVIDIA TAO accelerates time to value while reducing the possible risks associated with open-source software. It offers security, stability, management, and support. NVIDIA AI Enterprise comes with three unique foundation models that have been trained on commercially viable datasets:

NV-DINOv2, the only commercially viable foundation model for vision, was trained on more than 100 million images using self-supervised learning. The model can be rapidly fine-tuned for a variety of vision AI tasks with only a small amount of training data.

PCB classification, which is based on NV-DINOv2, identifies missing components on a PCB with high accuracy.

Retail recognition, which is also based on NV-DINOv2, can identify a large number of retail SKUs.

Starting with TAO 5.1, foundation models based on NV-DINOv2 can be fine-tuned for specific vision AI tasks.

Get a complimentary 90-day trial licence for NVIDIA AI Enterprise.

Use NVIDIA LaunchPad to explore TAO Toolkit and NVIDIA AI Enterprise.

Image credit: NVIDIA

Why It Is Important for the Development of AI

Customise your application with generative AI

A disruptive factor that will alter numerous sectors is generative AI. Foundation models that have been trained on a vast corpus of text, image, sensor, and other data are powering this. With TAO, you can now develop domain-specific generative AI applications by adjusting and customising these base models. Multi-modal models like NV-DINOv2, NV-CLIP, and Grounding-DINO can be fine-tuned thanks to TAO.

Implement Models across All Platforms

AI on billions of devices can be powered by the NVIDIA TAO Toolkit. For improved interoperability, the recently released NVIDIA TAO Toolkit 5.0 offers model export in ONNX, an open standard. This enables the deployment of a model on any computing platform that was trained using the NVIDIA TAO Toolkit.

AI-Powered Data Labelling

You can now label segmentation masks more quickly and affordably thanks to new AI-assisted annotation features. Mask Auto Labeler (MAL), a weakly supervised, transformer-based segmentation architecture, can help with segmentation annotation as well as with tightening and adjusting bounding boxes for object detection.

Use Rest APIs to Integrate TAO Toolkit Into Your Application

Using Kubernetes, you can now more easily integrate TAO Toolkit into your application and deploy it in a modern cloud-native architecture over REST APIs. Build a new AI service or integrate the TAO Toolkit into your current offering to enable automation across various technologies.

Image credit: NVIDIA

AutoML Makes AI Easier

AI training and optimisation take a lot of time and require in-depth understanding of which model to use and which hyperparameters to adjust. AutoML now makes it simple to train high-quality models without the laborious process of manually adjusting hundreds of parameters.

Image credit: NVIDIA

Run on Your Preferred Cloud

TAO’s cloud-native technology offers the agility, scalability, and portability required to manage and implement AI applications more successfully. Any top cloud provider’s virtual machines (VMs) can be used to implement TAO services, and they can also be used with managed Kubernetes services like Amazon EKS, Google GKE, or Azure AKS. To make infrastructure administration and scaling easier, it can also be utilised with cloud machine learning services like Azure Machine Learning, Google Vertex AI, and Google Colab.

TAO also makes it possible to integrate with a number of cloud-based and third-party MLOPs services, giving developers and businesses an AI workflow that is optimised. With the W&B or ClearML platform, developers can now monitor and control their TAO experiment and manage models.

Performance of Inference

Reach maximum inference performance on all platforms, from the cloud with NVIDIA Ampere architecture GPUs to the edge with NVIDIA Jetson solutions. To learn more about various models and batch size,

Every year, MediaTek powers over two billion connected devices. The goal of the company’s edge silicon portfolio is to ensure that edge AI applications operate as effectively as possible while optimising their performance. Additionally, it incorporates the cutting-edge multimedia and connection technology from MediaTek. MediaTek’s edge inference silicon roadmap enables marketers to provide amazing AI experiences to devices across different price points, with chipsets for the premium, mid-range, and entry tiers.

On all MediaTek devices, big and small, with an intuitive UI and performance optimisation features, developers may advance the field of Vision AI with MediaTek’s NeuroPilot SDK integration with NVIDIA TAO. Additionally, NVIDIA TAO opens customised vision AI capabilities for a variety of solutions and use cases with over 100 ready-to-use pretrained models. Even in the case of developers without in-depth AI knowledge, it expedites the process of fine-tuning AI models and lowers development time and complexity.

CK Wang, General Manager of MediaTek’s IoT business unit, stated, “Integrating NVIDIA TAO with MediaTek NeuroPilot will further broaden NVIDIA TAO’s aim of democratising access to AI, helping generate a new wave of AI-powered devices and experiences. With MediaTek’s Genio product line and these increased resources, it’s easier than ever for developers to design cutting-edge Edge AI products that differentiate themselves from the competition.”

The Vice President of Robotics and Edge Computing at NVIDIA, Deepu Talla, stated that “generative AI is turbocharging computer vision with higher accuracy for AIoT and edge applications.” “Billions of IoT devices will have access to the newest and best vision AI models thanks to the combination of NVIDIA TAO and MediaTek NeuroPilot SDK.”

Read more on Govindhtech.com

#NVIDIATAO#mediatek#AIapplications#AIframework#AIhardware#smartphones#AImodels#pytorch#VertexAI#MachineLearning#nvidia#IoT#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

Generative AI Implementation: A step-by-step guide

Generative artificial intelligence (AI) is a fascinating branch of machine learning that allows systems to generate data that mimics, and sometimes even rivals, human-created content.

Generative AI opens up many possibilities across multiple domains, from creating text and images to composing music and producing movies. However, putting generative AI projects into practice requires a thorough grasp of the underlying theories, procedures, and resources. This guide explores the practical aspects of creating generative AI projects, covering essential principles, techniques, tools, best practices, and real-world applications.

Understanding Generative AI

Generative AI is the area of artificial intelligence dedicated to producing original content or data samples that closely resemble real-world data distributions. It generates content across several domains using autoregressive models, variational autoencoders (VAEs), and generative adversarial networks (GANs).

Important Ideas:

GANs, or Generative Adversarial Networks:

GANs were first presented by Ian Goodfellow and colleagues in 2014. They comprise two neural networks trained adversarially: the generator and the discriminator. The generator creates synthetic samples, while the discriminator tries to distinguish real samples from synthetic ones. Through iterative training, GANs learn to produce samples that follow realistic data distributions.
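The adversarial game described here is usually written as the minimax objective from the 2014 paper. The symbols are introduced for this note and do not appear in the post: G is the generator, D the discriminator, p_data the real data distribution, and p_z the noise prior fed to the generator.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximises V by scoring real samples high and generated samples low, while the generator minimises it by making D(G(z)) as large as possible.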

VAEs, or variational autoencoders:

Variational autoencoders (VAEs) are generative models that learn a latent representation of the input data. They consist of an encoder network that maps input data to a latent space and a decoder network that reconstructs the input data from that latent space. New samples are generated by drawing points from the learned latent space and passing them through the decoder.
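In the standard formulation, the encoder and decoder are trained together by maximising the evidence lower bound (ELBO). The notation is an assumption added here: q_phi(z|x) is the encoder, p_theta(x|z) the decoder, and p(z) the prior over the latent space.

```latex
\log p_{\theta}(x) \;\ge\;
  \mathbb{E}_{q_{\phi}(z \mid x)}\big[\log p_{\theta}(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_{\phi}(z \mid x) \,\|\, p(z)\big)
```

The first term rewards accurate reconstruction; the second keeps the encoder's output close to the prior, which is what makes sampling from the latent space meaningful.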

Autoregressive Models:

Autoregressive models, including PixelRNN and PixelCNN, generate data sequentially. They model the conditional probability distribution of each data point given the preceding data points. These models are widely used for text and image generation.
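The sequential idea can be shown with a deliberately tiny stand-in: a character-level model in which each character depends only on the one before it. This is a simplifying assumption for illustration (PixelRNN and PixelCNN condition on far more context using neural networks), and the training words and function names are made up.

```python
import random
from collections import Counter, defaultdict

START, END = "^", "$"


def fit_bigram(words):
    """Count how often each character follows the previous one."""
    counts = defaultdict(Counter)
    for word in words:
        chars = [START, *word, END]
        for prev, nxt in zip(chars, chars[1:]):
            counts[prev][nxt] += 1
    return counts


def sample(counts, rng, max_len=20):
    """Generate one string, drawing each character given the previous one."""
    out, prev = [], START
    for _ in range(max_len):
        options = counts[prev]
        # Draw the next character in proportion to its observed frequency.
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        if nxt == END:
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)


model = fit_bigram(["banana", "bandana", "cabana"])
print(sample(model, random.Random(0)))
```

Each call to sample builds the output one symbol at a time, which is the defining property of autoregressive generation.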

Realistic Implementation Procedures

Step 1: Specify the project's objective

Establishing precise objectives and targets is essential before starting any generative AI project. Choose the content you want to produce (text, music, photos, etc.), your desired output quality, and any particular limits or specifications.

Step 2: Gathering and Preparing Data

Assemble a top-notch dataset pertinent to your project's field. Ensure the dataset is representative, diversified, and well-labeled (if necessary). The data should be preprocessed to eliminate noise, standardize characteristics, and prepare for training. Techniques for data augmentation can also be used to make the dataset more variable.
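Two of the preprocessing ideas in this step, standardising features and augmenting the dataset, can be sketched with the standard library alone. The function names and the noise scale are assumptions chosen for this example; real projects would usually rely on a numerical library and domain-specific augmentations.

```python
import random
import statistics


def standardize(values):
    """Rescale values to zero mean and unit standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def augment_with_noise(values, rng, scale=0.05):
    """Return a jittered copy of a sample: one simple form of augmentation."""
    return [v + rng.gauss(0.0, scale) for v in values]


if __name__ == "__main__":
    feature = standardize([12.0, 15.0, 9.0, 20.0])
    print(feature)
    print(augment_with_noise(feature, random.Random(0)))
```

Standardising before adding noise keeps the noise scale comparable across features that originally had different units.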

Step 3: Selecting the Appropriate Model Architecture

Choose a suitable generative model architecture, considering the project's needs and the data type. GANs, VAEs, and autoregressive models have advantages and disadvantages depending on the application. Consider elements like computing resources, sample quality, and training stability.

Step 4: Training the Generative Model

Train the selected generative model on the preprocessed dataset. Tune hyperparameters such as learning rate, batch size, and network architecture settings carefully. Track training progress with evaluation metrics and visualization tools. Try different training techniques to improve performance, such as annealing schedules for VAEs or progressive growing for GANs.
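As one example of an annealing schedule for VAEs, the sketch below ramps the weight on the KL term linearly from zero during a warm-up phase. Linear warm-up is only one common choice, and the function name and parameters are assumptions for this illustration.

```python
def kl_weight(step, warmup_steps, max_weight=1.0):
    """Linearly ramp the KL weight from 0 to max_weight over warmup_steps."""
    if warmup_steps <= 0:
        return max_weight
    return max_weight * min(1.0, step / warmup_steps)


if __name__ == "__main__":
    for step in (0, 250, 500, 1000, 2000):
        print(step, kl_weight(step, warmup_steps=1000))
```

Inside a training loop the total loss would then be computed as the reconstruction loss plus kl_weight(step, warmup_steps) times the KL term, so the model first learns to reconstruct before the latent space is regularised at full strength.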

Step 5: Assessment and Adjustment

Evaluate the trained generative model qualitatively with human evaluators and quantitatively with metrics such as Inception Score (IS) and Fréchet Inception Distance (FID). Refine the model in light of this feedback and repeat the training procedure as needed. Consider domain adaptation or transfer learning strategies to improve generalization to new data distributions.
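To give a feel for what the Fréchet distance measures, here is a deliberately simplified one-dimensional version. Real FID compares multivariate Gaussians fitted to Inception-network features of real and generated images; the sketch below skips the feature extractor entirely and works on plain lists of numbers, so it is only an illustration of the formula, not an FID implementation.

```python
import statistics

def frechet_distance_1d(real, generated):
    """Squared Frechet distance between 1-D Gaussians fitted to two samples.

    For one dimension the general formula reduces to
    (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2.
    """
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2

real = [0.0, 1.0, 2.0, 3.0]
close = [0.1, 1.1, 1.9, 3.0]      # toy "good" generator output
far = [3.0, 4.0, 5.0, 6.0]        # toy "bad" generator output
print("close:", frechet_distance_1d(real, close))
print("far:  ", frechet_distance_1d(real, far))
```

Lower values mean the generated distribution sits closer to the real one, which is also how FID scores are read.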

Tools and Frameworks

TensorFlow:

TensorFlow is a popular open-source machine learning framework created by Google for building and training deep neural networks, including generative models. It offers both low-level operations and high-level APIs (like Keras) for flexible model building and efficient computation.

PyTorch:

Another popular open-source deep learning framework, PyTorch was created by Facebook's AI Research team and is known for its user-friendly API and dynamic computation graph. PyTorch provides a broad ecosystem of tools and pre-trained models, making it simple for researchers and AI developers to prototype and train generative models.

CUDA Toolkit from NVIDIA:

Leveraging GPU acceleration is crucial for cutting training time for deep neural networks, especially large-scale generative models. The NVIDIA CUDA Toolkit optimizes performance for deep learning applications by offering libraries and tools for parallel computing on NVIDIA GPUs.

The Generative Pre-trained Transformer (GPT) Models from OpenAI:

The GPT models from OpenAI, which include GPT-2 and GPT-3, are cutting-edge language models that can produce coherent, contextually appropriate text. Because they have already been pre-trained on large amounts of text data, these models can be fine-tuned for specific generative tasks like text completion, story generation, and dialogue production.

Best Practices and Considerations

Diversity of Training Data:

Ensure the training dataset reflects the distribution and diversity of the target data to reduce bias and improve generalization. Augmenting the dataset with variants of existing samples can help make the generative model more robust.

Regularization Techniques:

Regularization strategies like batch normalization, weight decay, and dropout can reduce overfitting and improve the generative model's ability to generalize. Try various regularization techniques and strengths to find a good trade-off between model performance and complexity.
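Two of these techniques are simple enough to sketch in a few lines of plain Python. The code below shows inverted dropout (randomly zeroing activations during training and rescaling the rest) and a single SGD update with L2 weight decay. The function names and toy values are assumptions; in practice the chosen deep learning framework provides both.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each value with probability `rate`,
    and scale the survivors by 1 / (1 - rate) so the expected sum is unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def sgd_step_with_weight_decay(weights, grads, lr, weight_decay):
    """One SGD update where each weight is also pulled toward zero."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng))
print(sgd_step_with_weight_decay([1.0, -2.0], [0.1, 0.1], lr=0.1, weight_decay=0.01))
```

The `rate` and `weight_decay` arguments are the "strengths" to experiment with when looking for a good trade-off.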

Ethical Considerations:

Consider the ethical implications of creating synthetic content, particularly in sensitive areas like misinformation and deepfakes. Implement safeguards and verification processes to prevent the misuse of generative AI technology and help ensure its responsible use.

Continuous Monitoring and Maintenance:

When generative models are used in real-world contexts, they may display unexpected behavior or gradually lose performance. Set up a process for ongoing monitoring and maintenance of deployed models, including periodic retraining with new data and regular performance evaluation.
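A monitoring process can start out very simple. The sketch below compares the average of a quality score on recent outputs against a baseline recorded at deployment and flags the model for retraining when the drop exceeds a tolerance. The score itself, the function name, and the `tolerance` value are all assumptions; the code also assumes that higher scores are better.

```python
import statistics

def needs_retraining(baseline_scores, recent_scores, tolerance=0.05):
    """Return True when the recent average has dropped more than
    `tolerance` below the baseline average (higher score = better)."""
    baseline = statistics.fmean(baseline_scores)
    recent = statistics.fmean(recent_scores)
    return (baseline - recent) > tolerance

baseline = [0.91, 0.89, 0.90]       # scores measured at deployment (toy data)
this_week = [0.80, 0.82, 0.79]      # scores on recent outputs (toy data)
if needs_retraining(baseline, this_week):
    print("Quality dropped: schedule retraining with fresh data.")
```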

Practical Applications

Art and Creativity:

The advent of generative AI has changed digital art and creativity by enabling creators to explore new mediums and expand their creative boundaries. AI-powered tools have become important for creative professionals, with uses ranging from generative music and interactive exhibitions to algorithmic art and procedural generation.

Content Creation and Personalization:

Generative AI is used in the media and entertainment sector for personalized user experiences, recommendation systems, and content creation. Using generative models, streaming services can recommend relevant material to users based on their viewing history and preferences, which increases user engagement and retention.

Drug Discovery and Healthcare:

In the healthcare industry, generative AI is increasingly used for drug development, molecular design, and medical imaging analysis. Using generative models to design novel chemical compounds with desired properties can speed up drug development and personalized medicine initiatives.

Natural Language Processing and Dialogue Systems:

Natural language processing (NLP) and conversational AI have advanced significantly thanks to generative AI, which powers chatbots, virtual assistants, and dialogue systems. Models like OpenAI's GPT have demonstrated a human-like ability to produce coherent and contextually relevant text, opening the door to more engaging and natural interactions with AI systems.

In summary

With the advent of generative AI, artificial intelligence has undergone a paradigm shift, enabling machines to produce content that can rival human originality and inventiveness. Using sophisticated machine learning methods and powerful computing resources, researchers and developers can open up new avenues in various fields, from the arts and entertainment to medicine and academia.

However, carrying out generative AI projects successfully requires careful consideration of data, model architectures, training procedures, and ethical issues. Used with the appropriate methodology and resources, generative AI can transform industries, spur innovation, and improve human experiences in previously unthinkable ways.


Photo

What do early Earth’s core formation and drip coffee have in common? A new technique developed by Carnegie’s Yingwei Fei and Lin Wang provides fresh insight into the process by which the materials that formed Earth’s core descended into the depths of our planet, leaving behind geochemical traces that have long mystified scientists. Their work is published by Science Advances. Earth accreted from the disk of dust and gas that surrounded our Sun in its youth. As Earth grew from smaller objects over time, the densest material sank inward, separating the planet into distinct layers—including the iron-rich metal core and silicate mantle. “The segregation of the core and mantle is the most important event in the geologic history of Earth,” explained Fei. “Convection in the outer core powers the Earth’s magnetic field, shielding us from cosmic rays. Without it, life as we know it could not exist.” Each of our planet’s layers has its own composition. Although the core is predominantly iron, seismic data indicates that some lighter elements, like oxygen, sulfur, silicon and carbon, were dissolved into it and brought along for the ride into the planet’s center. Likewise, the mantle is predominately silicate, but its concentrations of so-called “iron-loving,” or siderophile, elements have mystified scientists for decades. “Understanding the mechanisms by which materials migrated through these layers, and identifying any remnants of this process, will improve our knowledge of the various ways Earth’s core and mantle have interacted throughout its history,” Wang added. In the lab, Carnegie scientists use heavy hydraulic presses, like the ones used to make synthetic diamonds, to bring samples of material to high pressures, mimicking the conditions found in Earth’s interior. This enables them to recreate Earth’s differentiation process in miniature and to probe different possible ways by which the core was formed. 
Using these tools, Wang and Fei developed a new method of tracing the movement of the core-forming liquid metal in their sample as it migrated inward. They showed that much like water filtering through coffee grounds, under the dynamic conditions found on early Earth, iron melts could have passed through the cracks between a layer of solid silicate crystals—called a grain boundary—and exchanged chemical elements. Wang and Fei suggest that the violent environment of early Earth would have actually created the circumstances that would turn the mantle into a giant “pour over” coffee apparatus, allowing percolation of liquid metal through an interconnected network. They analyzed the chemical exchanges during this percolation process. Their results would account for iron-loving elements being left behind in the mantle, shedding light on a longstanding geochemistry question. Looking ahead, Wang and Fei believe their new technique is generally applicable to studying other rocky planets and can help answer more questions about the core and mantle interactions occurring deep in their interiors.
