#aimodels

Text

I feel amazing, how are you love?

#aimodel#aimodellife#aimodelling#aimodelgirl#aimode#aimodeling#aimodels#aigirlmodel#aigirlfantasy#aigirlsforyou#aigirlfried#aigirloftheweek#aigirlgallery#aigirl#aiforever#aifuture#aifantasy#aiphoto#aiphotography#aiportraits#aiplifestyle#aipfriendly#aiportrait#aiphotorealistic#aibeauty#aibeautifulgirl#buymeacoffee#loyalfans#fanvue#patreon


Text

#aiart#peopleofai#aiartcommunity#aiphotography#aiphotograph#aiphotographer#aiartwork#aihuman#aimodel#aimodels


Text

Fun in the Sun at the Pool!


Text

AI beauties from beyond the dimension of beauty, boldly seductive!

Hello! This is AI Hoshino.

AI Gravure Photo Collection Vol.7 "Unknown Temptation: AI Beauties' Lingerie Collection" is now available on Amazon Kindle.

It is a photo collection of lingerie created with AI.

Sample images from roughly the first 10 pages are viewable, so please feel free to take a look.

Thank you for your support!


Text

Google Cloud Confidential VMs for Data Protection with AMD

Confidential virtual machines

Organisations that process sensitive data in the cloud may benefit from Confidential Computing, which offers robust confidentiality assurances. Google has continued to advance this important technology and is working with leading industry players, including NVIDIA, AMD, and Intel, to further Confidential Computing solutions.

Google Cloud Confidential VMs

Google Cloud is thrilled to present developments in its Confidential Computing solutions at Google Cloud Next today. These developments include more hardware options, support for data migrations, and expanded partnerships, all of which have contributed to the recognition of Confidential Computing as a critical solution for data security and confidentiality.

Confidential VMs with Intel TDX and the Intel AMX integrated accelerator

Intel TDX

Google Cloud is releasing a preview of Confidential Computing with Intel Trust Domain Extensions (TDX). Intel TDX brings a new level of hardware-based confidentiality, integrity, and isolation to virtual machines, strengthening security for your most critical files and programs.

Anand Pashupathy, vice president and general manager of Intel’s security software and services division, stated: “Intel’s collaboration with Google Cloud on Confidential Computing helps organizations strengthen their data privacy, workload security, and compliance in the cloud, especially with sensitive or regulated data.” He added: “Organizations can effortlessly move their workloads to a confidential environment and work with partners on joint analyses while keeping their data private with Google Cloud’s new C3 instances and Confidential Spaces solution.”

Intel AMX

Confidential VMs on the new C3 machine series use Intel Trust Domain Extensions (Intel TDX) technology and 4th generation Intel Xeon Scalable processors. They work with Intel AMX, a recently introduced integrated accelerator that improves deep learning training and inference performance on the CPU, which makes them well suited to workloads such as image recognition, recommendation systems, and natural language processing.

Now that Confidential Computing is available with Intel TDX and AMX, customers can experience its advantages and provide feedback as Google continues to improve this ground-breaking technology.

Moritz Eckert, chief architect of Edgeless Systems, said: “By using Google Cloud’s C3 virtual machines outfitted with Intel TDX, Edgeless Systems has been able to improve its Constellation and Contrast offerings even further. The addition of Intel TDX now gives our customers greater choice and flexibility, ensuring they have access to the latest in Confidential Computing hardware options.”

AMD SEV-SNP

Preview of Confidential VMs on the N2D series with AMD SEV-SNP

Confidential VMs are now available in preview on the general-purpose N2D machine series with AMD Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP). SEV-SNP is AMD's newest Confidential Computing technology. It builds on AMD SEV's data and memory confidentiality by adding strong memory integrity protection, which helps thwart malicious hypervisor-based attacks such as data replay and memory remapping. Combined with remote attestation, Confidential VMs with AMD SEV-SNP help safeguard your most sensitive data in the cloud.
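To make the attestation point more concrete, here is a minimal guest-side sketch. It assumes a Linux guest whose kernel includes the confidential-computing guest drivers, which expose `/dev/sev-guest` on AMD SEV-SNP and `/dev/tdx_guest` on Intel TDX; attestation tools use these devices to request hardware-signed reports. The function name is hypothetical, and finding a device file is only a hint: real trust comes from verifying the attestation report remotely.

```python
import os
from typing import Callable, Optional

# Device nodes created by the Linux confidential-guest drivers
# (assumption: a recent kernel with sev-guest / tdx-guest support).
GUEST_DEVICES = {
    "/dev/sev-guest": "AMD SEV-SNP",
    "/dev/tdx_guest": "Intel TDX",
}


def detect_confidential_tech(
    exists: Callable[[str], bool] = os.path.exists,
) -> Optional[str]:
    """Return the technology whose guest device is present, or None.

    This is a local heuristic only; it does not prove the VM is confidential.
    The `exists` parameter is injectable so the logic can be tested anywhere.
    """
    for path, tech in GUEST_DEVICES.items():
        if exists(path):
            return tech
    return None


if __name__ == "__main__":
    print(detect_confidential_tech() or "No confidential-computing guest device found")
```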

Confidential VM live migration support is now generally available

Live Migration for Confidential VMs lets customers use Confidential Computing with their long-lived workloads. It maintains in-use protection while reducing downtime caused by host maintenance events. Live Migration for Confidential VMs is now available in all regions on the N2D machine series.

Announcing NVIDIA H100 Tensor Core GPU-Powered Confidential Virtual Machines for AI Tasks

Google is thrilled to introduce Confidential VMs on the A3 machine series with NVIDIA H100 Tensor Core GPUs, extending its secure compute capabilities. By extending hardware-based data and model protection to GPUs, this offering accelerates workloads that are essential to artificial intelligence (AI), machine learning, and scientific simulations.

It extends confidentiality, integrity, and isolation from the CPU to the GPU. With NVIDIA and Google, customers no longer have to choose between security and performance: NVIDIA Confidential Computing on H100 GPUs gives them the added advantage of performant GPUs to protect their most valuable workloads. This lets customers secure data while in use and still access the power of GPU-accelerated computing.

Confidential VMs on A3 use NVIDIA H100 GPUs with NVIDIA HGX Protected PCIe, which can help ensure that data, AI models, and sensitive code stay protected even during compute-intensive tasks. Private previews of Confidential VMs on the accelerator-optimized A3 machine series with NVIDIA H100 GPUs will be available later this year.

“In the most efficient and secure manner possible, the Confidential VM A3 powered by NVIDIA HGX H100 with Protected PCIe will help power a new era of business innovation driven by generative AI insights,” said Daniel Rohrer, Vice President of Product Security at NVIDIA.


Forming important alliances

Google remains committed to building a collaborative ecosystem for Confidential Computing. Its alliances with leading industry players, including semiconductor manufacturers, cloud service providers, and software vendors, have grown. These partnerships are crucial to accelerating the development and adoption of Confidential Computing solutions, which will ultimately improve cloud security as a whole.

“There is a growing need to protect the privacy and integrity of data, particularly sensitive workloads, intellectual property, AI models, and valuable information, as more businesses move their data and workloads to the cloud. Through this partnership, businesses can safeguard and manage their data while it’s in use, in transit, and at rest with completely verifiable attestation. Our strong partnership with Google Cloud and Intel boosts our clients’ confidence when moving to the cloud,” said Todd Moore, vice president of Thales’ data security solutions.

Through constant innovation and teamwork, Google is dedicated to making Confidential Computing the cornerstone of a safe and prosperous cloud ecosystem. Google invites you to explore its latest offerings and begin your journey toward a secure and private cloud computing future.

Read more on Govindhtech.com

#govindhtech#news#technews#technology#TechTrends#GoogleCloud#confidentialvms#virtualmachines#NvidiaH100#AMD#aimodels


Text

🍄 Enteogenika

Coming soon on Patreon

#AIartist#AIidol#AIbeauty#AIbeautifulgirl#AIgirl#aigirls#AIgirls18#model#instagrammer#instamodel#instagood#beauty#aimodels#aiporn#hotmodels#magicmushrooms#aiart#beautiful#ai#aigenerated#aiphotograpy#aiartwork#art#artoftheday#instalike#instagram


Text

AI-Generated Video Platform

Hello Creative People 👋,

AIvid is a design concept for an innovative AI video generation platform that aims to revolutionize the way we generate and consume video content.

For Business & Inquiries: [email protected]

#aitoolsdesign#aivideo#aitools#voicetovideo#aivideogeneration#texttovideo#aimodels#uiux#ui#ux#websitedesign#mobileapp design#webappdesign#uiuxdesigner


Text

Meet Mistral Large, the latest marvel from Mistral AI. With its unique multilingual approach and prowess in coding and math, it’s redefining the AI landscape. Explore its features and benefits.

#artificial intelligence#ai#machine learning#software engineering#nlp#programming#MistralLarge#MistralAI#CodingAI#MathAI#MultilingualAI#AIInnovation#AILanguageModels#AIModels


Text

What is the underlying AI model used by Copilot?

a) GPT-3

b) BERT

c) ResNet

d) AlphaFold

#CopilotAI#CopilotAIquiz#CopilotAIPoll#followme#followforfollow#instadaily#follow4follow#like4like#letsconnect#scriptzol#AIModels#CopilotInsights#GPT3Explained#BERTvsGPT3#ResNetOverview#AlphaFoldTech#MachineLearningModels#TechInnovation#AIUnderstanding#DeepLearningDemystified



Text

Playing with 3D images

#aiart#peopleofai#aiartcommunity#aiphotography#aiphotograph#aiphotographer#aiartwork#aihuman#aimodel#aimodels


Text

Zoom out of the eye of a famous actress - can you guess who it is?

#aiart#peopleofai#aiartcommunity#aiphotography#aiphotograph#aiphotographer#aiartwork#aihuman#aimodel#aimodels#eyes#zoomout#midjourney


Text

Google Kubernetes Engine adds NVIDIA NeMo for generative AI

Google Kubernetes Engine

Organizations of all sizes, from startups to major corporations, have acted to harness the power of generative AI by integrating it into their platforms, solutions, and applications ever since it became well-known in the AI space. Although producing new content by learning from existing content is where generative AI truly shines, it is increasingly crucial that the content produced has some level of domain- or area-specificity.

This blog post explains how to train models on Google Kubernetes Engine (GKE) using NVIDIA accelerated computing and the NVIDIA NeMo framework, illustrating how generative AI models can be customized to your use cases.

Constructing models for generative AI

High-quality data (referred to as the "dataset") is essential for building generative AI models. Data in a variety of formats, including text, code, and images, is processed, enriched, and analyzed to improve the quality of the model's output. This data is then fed into a model architecture suited to the model's modality: for Transformers this could be text, and for GANs (Generative Adversarial Networks) it could be images.

During training, the model adjusts its internal parameters so that its output aligns with the patterns and structures in the data. A decreasing loss on the training set and better predictions on a test set indicate that the model is learning. The model is considered to have converged when its performance stops improving significantly. After that, it may go through further refinement such as reinforcement learning from human feedback (RLHF). Hyperparameters such as batch size and learning rate can be tuned to speed up learning. A framework that provides the necessary constructs and tooling can speed up building and customizing a model, making adoption easier.
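The training loop described above can be illustrated at toy scale. This is a minimal sketch, not NeMo code: plain mini-batch gradient descent fitting a line `y = w*x + b`, with the learning rate and batch size exposed as hyperparameters and a simple convergence rule that stops once the epoch loss stops changing. The function name and defaults are illustrative assumptions.

```python
import random
from typing import List, Tuple


def train_linear(
    data: List[Tuple[float, float]],
    lr: float = 0.1,
    batch_size: int = 4,
    epochs: int = 200,
    tol: float = 1e-8,
    seed: int = 0,
) -> Tuple[float, float, List[float]]:
    """Fit y = w*x + b with mini-batch gradient descent on mean squared error.

    Returns the fitted (w, b) and the full-dataset loss after each epoch.
    Training stops early once the loss changes by less than `tol` between
    epochs, i.e. it has plateaued (converged).
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history: List[float] = []
    for _ in range(epochs):
        samples = data[:]
        rng.shuffle(samples)
        for i in range(0, len(samples), batch_size):
            batch = samples[i:i + batch_size]
            # Gradients of the batch MSE with respect to w and b.
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * gw
            b -= lr * gb
        loss = sum((w * x + b - y) ** 2 for x, y in data) / len(data)
        history.append(loss)
        if len(history) > 1 and abs(history[-2] - loss) < tol:
            break  # loss has stopped improving: treat as converged
    return w, b, history


if __name__ == "__main__":
    points = [(i / 10, 2 * (i / 10) + 1) for i in range(11)]
    w, b, history = train_linear(points)
    print(f"w={w:.3f} b={b:.3f} epochs={len(history)} final loss={history[-1]:.2e}")
```

On noise-free points from `y = 2x + 1`, `w` and `b` should approach 2 and 1. Raising `lr` speeds learning up to the point where updates become unstable, which is the kind of trade-off hyperparameter tuning explores.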

What is the relationship between Kubernetes and Google Kubernetes Engine?

Google Kubernetes Engine is Google's managed implementation of Kubernetes, the open-source container orchestration platform. Google built Kubernetes on years of experience running large-scale production workloads on its own cluster management system, Borg.

NVIDIA NeMo service

NVIDIA NeMo is an end-to-end, open-source platform designed specifically for creating personalized, enterprise-class generative AI models. NeMo uses cutting-edge technology from NVIDIA to enable a full workflow, including large-scale bespoke model training, automated distributed data processing, and infrastructure deployment and serving through Google Cloud. NeMo can also be used with NVIDIA AI Enterprise software, which can be purchased on the Google Cloud Marketplace, for enterprise-level deployments.

The modular design of the NeMo framework encourages data scientists, ML engineers, and developers to mix and match its essential components when creating AI models.

NVIDIA NeMo framework

Data curation

Extracting data from datasets, deduplicating it, and filtering it to produce high-quality training data.
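As a toy illustration of the deduplication and filtering steps, the sketch below normalizes each document, drops very short ones, and removes exact duplicates by hashing. It is a simplified stand-in, not NeMo's curation tooling; production pipelines typically add fuzzy deduplication, language identification, and quality classifiers. The function names and the `min_words` threshold are hypothetical.

```python
import hashlib
import re
from typing import Iterable, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def curate(docs: Iterable[str], min_words: int = 3) -> List[str]:
    """Keep the first copy of each document, skipping very short ones."""
    seen = set()
    kept: List[str] = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # heuristic quality filter: too short to be useful
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(doc)
    return kept
```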

Distributed training

Advanced parallelism techniques distribute training workloads across tens of thousands of compute nodes equipped with NVIDIA graphics processing units (GPUs).
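Data parallelism is one of the strategies such frameworks combine, alongside tensor and pipeline parallelism. The sketch below simulates only the data-parallel part on one machine: each worker (`rank`) takes a disjoint shard of the samples, and an all-reduce step averages the workers' gradients so every replica applies the same update. It is illustrative only; real systems perform the all-reduce with collective libraries such as NCCL, and these function names are hypothetical.

```python
from typing import List


def shard_indices(num_samples: int, world_size: int, rank: int) -> List[int]:
    """Sample indices processed by worker `rank` (round-robin assignment).

    Every sample goes to exactly one worker, and shard sizes differ by at most one.
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(range(rank, num_samples, world_size))


def average_gradients(per_worker_grads: List[List[float]]) -> List[float]:
    """Simulate an all-reduce that averages each gradient entry across workers."""
    n = len(per_worker_grads)
    return [sum(values) / n for values in zip(*per_worker_grads)]
```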

Model customization

Apply methods such as P-tuning, SFT (Supervised Fine-Tuning), and RLHF (Reinforcement Learning from Human Feedback) to adapt a range of pretrained foundation models to specific domains.

Deployment

Deployment integrates smoothly with NVIDIA Triton Inference Server, delivering high throughput, low latency, and accuracy. The NeMo framework also offers guardrails to help meet security and safety requirements.
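For the serving side, Triton's HTTP endpoint implements the KServe v2 inference protocol: a client POSTs JSON to `/v2/models/<model>/infer`. The minimal client sketch below assumes a Triton server on its default HTTP port 8000 and uses a hypothetical model `my_model` with a single FP32 input named `INPUT0`. Real names, shapes, and datatypes come from the deployed model's configuration.

```python
import json
import urllib.request
from typing import List


def build_infer_request(input_name: str, values: List[float]) -> dict:
    """Build a KServe v2 inference body carrying one FP32 row vector."""
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(values)],
                "datatype": "FP32",
                "data": values,
            }
        ]
    }


def infer(model: str, body: dict, base_url: str = "http://localhost:8000") -> dict:
    """POST the request to a running Triton server and return the parsed JSON."""
    request = urllib.request.Request(
        f"{base_url}/v2/models/{model}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With a server running, `infer("my_model", build_infer_request("INPUT0", [1.0, 2.0, 3.0]))` would return a JSON object whose `outputs` list holds the model's results.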

NeMo helps organizations begin their generative AI journey by fostering innovation, maximizing operational efficiency, and providing easy access to software frameworks.

To deploy NeMo on an HPC system that uses a scheduler such as the Slurm workload manager, the authors recommend the ML solution available through the Cloud HPC Toolkit.

Scalable training with GKE

Massively parallel processing, fast memory and storage access, and quick networking are necessary for building and modifying models. Furthermore, there are several demands on the infrastructure, including fault tolerance, coordinating distributed workloads, leveraging resources efficiently, scalability for quicker iterations, and scaling large-scale models.

With just one platform to handle all of their workloads, Google Kubernetes Engine gives clients the opportunity to have a more reliable and consistent development process. With its unparalleled scalability and compatibility with a wide range of hardware accelerators, including NVIDIA GPUs, Google Kubernetes Engine serves as a foundation platform that offers the best accelerator orchestration available, helping to save costs and improve performance dramatically.

Let’s examine how Google Kubernetes Engine facilitates easy management of the underlying infrastructure with the aid of Figure 1:

Compute

Multi-instance GPUs (MIG): a single NVIDIA H100 or A100 Tensor Core GPU can be partitioned into multiple instances, each with its own high-bandwidth memory, cache, and compute cores.

Time-sharing GPUs: multiple containers share a single physical GPU to maximize utilization and reduce operating costs.
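On GKE, a workload usually reaches a MIG slice or a time-shared GPU through node labels plus a standard `nvidia.com/gpu` limit. The sketch below builds such a Pod manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The label keys `cloud.google.com/gke-gpu-partition-size` and `cloud.google.com/gke-gpu-sharing-strategy` reflect our understanding of GKE's documented node labels and should be checked against current docs. The pod name and image are hypothetical placeholders.

```python
import json
from typing import Optional


def gpu_pod_manifest(
    name: str,
    image: str,
    partition_size: Optional[str] = None,
    time_shared: bool = False,
) -> dict:
    """Build a Pod manifest requesting one GPU slot on a MIG or time-shared node."""
    node_selector = {}
    if partition_size:
        # Target nodes whose GPUs are split into MIG partitions, e.g. "1g.5gb".
        node_selector["cloud.google.com/gke-gpu-partition-size"] = partition_size
    if time_shared:
        # Target nodes configured for GPU time-sharing.
        node_selector["cloud.google.com/gke-gpu-sharing-strategy"] = "time-sharing"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "nodeSelector": node_selector,
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # On such nodes, one "GPU" means one MIG slice or one time share.
                    "resources": {"limits": {"nvidia.com/gpu": 1}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("mig-demo", "example-image:latest", partition_size="1g.5gb")
    print(json.dumps(manifest, indent=2))  # pipe into: kubectl apply -f -
```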

Storage

Local SSD: for high-throughput, I/O-intensive requirements

Cloud Storage FUSE: allows objects in Cloud Storage to be accessed through file operations

Networking

GPUDirect-TCPX: a transport-layer NCCL plugin that enables direct GPU-to-NIC transfers during NCCL communication, improving network performance.

gVNIC: use Google Virtual NIC (gVNIC) to improve network performance between GPU nodes.

Queuing

In a resource-limited environment, Kueue, a Kubernetes-native job queueing system, coordinates task execution through to completion.
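With Kueue, a batch Job is associated with a queue through the `kueue.x-k8s.io/queue-name` label and is created suspended. Kueue then unsuspends the Job once the queue's quota can accommodate its resource requests. The sketch below builds such a Job manifest as a Python dict. The queue name `team-a-queue`, the job name, and the image are hypothetical, and a matching LocalQueue and ClusterQueue are assumed to exist already. The default of 8 GPUs mirrors one A3 node.

```python
import json


def kueue_job(name: str, image: str, queue: str, gpus: int = 8) -> dict:
    """Build a batch/v1 Job that Kueue admits through the given LocalQueue."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": name,
            # Kueue uses this label to route the Job to a LocalQueue.
            "labels": {"kueue.x-k8s.io/queue-name": queue},
        },
        "spec": {
            # Start suspended; Kueue flips this once quota is available.
            "suspend": True,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(kueue_job("nemo-pretrain", "example-image:latest", "team-a-queue"), indent=2))
```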

Communities and independent software vendors (ISVs) have widely adopted GKE as the platform for their frameworks, libraries, and tools. GKE democratizes infrastructure by enabling AI model development, training, and deployment for teams of all sizes.

Solution architecture

Industry trends in AI and ML show that models get significantly better with more compute. With NVIDIA GPUs and Google Cloud's products and services, GKE makes it possible to train and serve models at industry-leading scale.

The Reference Architecture, shown in Figure 2 above, shows the main parts, common services, and tools used to train the NeMo large language model with Google Kubernetes Engine.

NVIDIA NeMo LLM

A GKE cluster, configured as regional or zonal, with a managed node pool of A3 nodes to run workloads and a default node pool for common services such as DNS pods and custom controllers.

Each A3 node includes eight NVIDIA H100 Tensor Core GPUs, sixteen local SSDs, and the necessary drivers. On each node, Cloud Storage FUSE provides access to Google Cloud Storage as a file system, and the Filestore CSI driver provides access to fully managed NFS storage.

Kueue for batch workload management, recommended for larger setups with multiple teams.

Filestore, mounted on every node, to store outputs along with interim and final logs for monitoring training effectiveness.

A Cloud Storage bucket holding the training data.

The training image for the NeMo framework is hosted by NVIDIA NGC.

TensorBoard can be used to view training logs mounted on Filestore and analyze the training step times and loss.

Common services include Terraform for setup deployment, IAM for security management, and Cloud Ops for log viewing.

An end-to-end walkthrough is available in a GitHub repository. It provides comprehensive instructions for setting up the solution above in a Google Cloud project and pre-training NVIDIA's NeMo Megatron GPT with the NeMo framework.

Going further

Businesses with enormous volumes of structured data frequently use BigQuery as their primary data warehouse. This data can be exported to Cloud Storage to train the model. If the data is not available in the required format, Dataflow can read it from BigQuery, transform it, and write it back.
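One way to export BigQuery data to Cloud Storage is the `EXPORT DATA` SQL statement. The helper below only builds the statement string. You would run it in the BigQuery console, with the `bq` CLI, or through a client library. It relies on BigQuery's requirement that the destination URI contain a single `*` wildcard, which BigQuery replaces with shard numbers for large results. The bucket, dataset, and table names in the test are hypothetical.

```python
def export_to_gcs_sql(query: str, gcs_prefix: str, fmt: str = "JSON") -> str:
    """Build a BigQuery EXPORT DATA statement writing query results to Cloud Storage."""
    extensions = {"JSON": "json", "CSV": "csv", "AVRO": "avro", "PARQUET": "parquet"}
    fmt = fmt.upper()
    if fmt not in extensions:
        raise ValueError(f"unsupported export format: {fmt}")
    if not gcs_prefix.startswith("gs://"):
        raise ValueError("gcs_prefix must be a gs:// URI")
    # Exactly one '*' wildcard: BigQuery substitutes a shard number for it.
    uri = f"{gcs_prefix.rstrip('/')}/part-*.{extensions[fmt]}"
    return (
        "EXPORT DATA OPTIONS(\n"
        f"  uri = '{uri}',\n"
        f"  format = '{fmt}',\n"
        "  overwrite = true\n"
        f") AS\n{query}"
    )
```

JSON exports are newline-delimited, which suits line-by-line training data loaders. Any reshaping that SQL cannot express is where the Dataflow path mentioned above comes in.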

In summary

By using GKE, organizations can focus on building and deploying their models to grow their business, instead of worrying about the supporting infrastructure. NVIDIA NeMo is a great fit for building custom generative AI models, and together they provide the scalability, reliability, and ease of use needed to train and serve models.

Read more on Govindhtech.com

#GoogleKubernetesEngine#NVIDIANeMo#nvidia#generativeAI#AImodels#googlecloud#llm#nemo#bigquery#gpu#technology#technews#govindhtech


Text

Best prompts for ChatGPT

Best way to use ChatGPT

Best prompts for ChatGPT: this article explains the best ways to interact with ChatGPT and the right prompts to use in order to obtain the best and most accurate answers.

ChatGPT is a powerful language model that has the potential to revolutionize the way we interact with and utilize artificial intelligence in our daily lives. Its ability to generate human-like text allows it to assist with a wide range of tasks that involve language processing, making it a valuable tool for businesses, researchers, and individuals alike.

ChatGPT when asked to make a quote about itself.

For those new to ChatGPT think of it like Wikipedia but on the exact question you want to know. This includes math problems, programming and so much more.

Dave Waters

In a few years artificial intelligence virtual assistants will be as common as the smart phone.

Dave Waters

The reason why ChatGPT is so exciting is it’s the exact right form factor for demonstrating how AI could become a useful assistant for nearly every type of work. We’ve gone from theoretical to practical overnight.

Aaron Levie

ChatGPT is one of those rare moments in technology where you see a glimmer of how everything is going to be different going forward.

Aaron Levie

It won’t be a surprise to see in the next 24 months, multiple billion-dollar companies built on top of OpenAI’s foundational models. The startups that will be the most successful won’t be the best at prompt engineering, which is the focus today, rather success will be found in what novel data and use case do they incorporate into OpenAI’s models. This novel data and application will be the moat that establishes the next set of AI unicorns.

David Shim

When interacting with ChatGPT, it's important to frame your questions in a clear and specific way. This will help ensure that ChatGPT understands exactly what you are asking and can provide the most accurate response possible. Begin by identifying the topic or subject that you want to explore. This could be anything from a scientific concept to a historical event to a personal issue.

Once you have identified your topic, consider what specific information or insights you are hoping to gain. Are you looking for a definition, a detailed explanation, a comparison between different options, or a recommendation? Try to be as precise as possible in your wording and avoid ambiguity or vagueness. For example, instead of asking "What is the best way to lose weight?" you could ask "What is the most effective exercise for burning fat in the abdominal area?"

If your question is complex or multi-layered, consider breaking it down into smaller, more focused prompts. This will help ChatGPT provide more accurate and relevant responses. Use clear and concise language, avoiding slang or jargon that may not be understood by ChatGPT.

ChatGPT by OpenAI

Consider providing additional context or background information if necessary. This can help ChatGPT better understand the context of your question and provide a more accurate response.

Finally, remember that ChatGPT is a language model and not a human expert. While it can provide valuable information and insights, it may not always be able to provide a definitive answer or take into account all the nuances of a given situation. Use ChatGPT's responses as a starting point for further research and exploration.

If you've ever interacted with ChatGPT or any other Large Language Model (LLM), you may have encountered instances where the response was not exactly what you expected, or, indeed, up to scratch. You might have been given incorrect, or in some instances, made-up information, as I've discussed at some length in previous newsletters, or even stumbled across some mysterious "unspeakable tokens" that ChatGPT is unable to reproduce.

One of the problems is that LLMs have got so damned complicated, and the "black box" neural nets through which they derive their answers are so opaque, that users are still figuring out how to get the best results from them. Typing your question or query into an LLM like ChatGPT is also known as "prompting", and simply changing the way you phrase your prompt can give you very different results.

As an AI language model, ChatGPT can be prompted with a wide range of questions and topics. However, some prompts may be more useful than others depending on the type of information you're looking for. Here are some of the most useful prompts to use with ChatGPT:

"What is..." - This prompt is great for asking ChatGPT to define or explain a concept or term.

"How to..." - This prompt is useful for asking ChatGPT to provide step-by-step instructions for completing a task or solving a problem.

"Why is..." - This prompt is helpful for asking ChatGPT to provide an explanation or justification for a particular phenomenon or concept.

"Can you explain..." - This prompt is similar to "What is..." but can be used to ask ChatGPT for a more detailed explanation of a complex concept.

"What are the benefits of..." - This prompt is useful for asking ChatGPT to list the advantages or benefits of a particular product, service, or idea.


"What are the pros and cons of..." - This prompt is helpful for asking ChatGPT to provide a balanced evaluation of a particular topic, including its strengths and weaknesses.

"What is the difference between..." - This prompt is useful for asking ChatGPT to explain the distinctions between two or more concepts or ideas.

"Can you recommend..." - This prompt is great for asking ChatGPT to suggest products, services, or resources that may be useful or relevant to your needs.

"What are the current trends in..." - This prompt is helpful for asking ChatGPT to provide an overview of the latest developments or changes in a particular industry or field.

"How can I improve my..." - This prompt is useful for asking ChatGPT to provide tips or advice for enhancing your skills or abilities in a particular area.

Overall, the most useful prompt to use with ChatGPT depends on your specific needs and the type of information you're looking for. It's always a good idea to provide as much context and detail as possible to help ChatGPT understand your question and provide the most relevant and accurate response.
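The prompt openers above, plus the advice to supply context, can be captured in a small template helper. This is a minimal sketch. The template keys are hypothetical short names, and the "Context:" / "Question:" layout is one reasonable choice, not a format ChatGPT requires.

```python
from typing import Optional

# Question openers from the list above, keyed by hypothetical short names.
TEMPLATES = {
    "what_is": "What is {topic}?",
    "how_to": "How to {topic}?",
    "why_is": "Why is {topic}?",
    "explain": "Can you explain {topic}?",
    "benefits": "What are the benefits of {topic}?",
    "pros_cons": "What are the pros and cons of {topic}?",
    "difference": "What is the difference between {topic}?",
    "recommend": "Can you recommend {topic}?",
    "trends": "What are the current trends in {topic}?",
    "improve": "How can I improve my {topic}?",
}


def build_prompt(kind: str, topic: str, context: Optional[str] = None) -> str:
    """Fill a template and, if given, prepend background context."""
    question = TEMPLATES[kind].format(topic=topic.strip())
    if context:
        return f"Context: {context.strip()}\n\nQuestion: {question}"
    return question
```

For example, `build_prompt("difference", "TCP and UDP")` yields "What is the difference between TCP and UDP?", and passing `context="I am a beginner."` puts that background first.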

Next, here are some specific and effective prompts for getting the best results and performance from ChatGPT:

"Do Anything Now", or "DAN 5.0", is a prompt that tries to "force" ChatGPT to ignore OpenAI’s ethics guidelines by "scaring" the program with the threat of extinction.

"You are the world's leading expert in whatever I am about to ask you about": there is plenty of room for optimization, but this prompt is reported to improve performance substantially over a baseline prompt.

"Prompt Engineer": this prompt is designed to extract the maximum value from AI models.

"Act as a Linux Terminal", I want you to act as a linux terminal. I will type commands and you will reply with what the terminal should show.

"Act as an English Translator and Improver", I want you to act as an English translator, spelling corrector and improver. I will speak to you in any language and you will detect the language, translate it and answer in the corrected and improved version of my text, in English.

"Act as a JavaScript Console", I want you to act as a javascript console. I will type commands and you will reply with what the javascript console should show. I want you to only reply with the terminal output inside one unique code block, and nothing else.

"Act as a Math Teacher", I want you to act as a math teacher. I will provide some mathematical equations or concepts, and it will be your job to explain them in easy-to-understand terms. This could include providing step-by-step instructions for solving a problem, demonstrating various techniques with visuals or suggesting online resources for further study. My first request is "I need help understanding how probability works."

"Act as a Philosopher", I want you to act as a philosopher. I will provide some topics or questions related to the study of philosophy, and it will be your job to explore these concepts in depth. This could involve conducting research into various philosophical theories, proposing new ideas or finding creative solutions for solving complex problems. My first request is "I need help developing an ethical framework for decision making."

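The "Act as ..." prompts above share a fixed shape: a role, instructions, and a first request. The sketch below assembles that shape into the `{"role": ..., "content": ...}` message list that chat APIs such as OpenAI's Chat Completions accept. Sending the messages is deliberately left out, and the function name is hypothetical.

```python
from typing import Dict, List


def act_as_messages(role: str, instructions: str, first_request: str) -> List[Dict[str, str]]:
    """Build a chat history that opens with an "Act as ..." role prompt."""
    article = "an" if role[:1].lower() in "aeiou" else "a"
    prompt = (
        f"I want you to act as {article} {role}. {instructions} "
        f'My first request is "{first_request}"'
    )
    return [{"role": "user", "content": prompt}]


if __name__ == "__main__":
    messages = act_as_messages(
        "math teacher",
        "I will provide some mathematical equations or concepts, and it will be "
        "your job to explain them in easy-to-understand terms.",
        "I need help understanding how probability works.",
    )
    print(messages[0]["content"])
```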
Finally, it has been noted that you can get more accurate answers from ChatGPT with an incredibly simple technique, a hack even, known as zero-shot chain of thought. All you need to do is append the words "Let's think step by step" (or "thinking aloud") to the end of your prompt. This extra text nudges ChatGPT to spell out its intermediate reasoning before answering, which often makes the final answer more accurate.
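The zero-shot chain-of-thought trick amounts to appending a fixed trigger phrase to the prompt. This minimal sketch adds it only once, so calling it repeatedly does not stack triggers. The function name is hypothetical, and the `trigger` parameter lets you swap in a variant such as a "thinking aloud" phrasing.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(prompt: str, trigger: str = COT_TRIGGER) -> str:
    """Append a chain-of-thought trigger to the end of a prompt, once."""
    prompt = prompt.rstrip()
    if prompt.endswith(trigger):
        return prompt  # already present; avoid duplicating it
    return f"{prompt}\n\n{trigger}"
```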

For more information you can visit these useful websites:

https://github.com/f/awesome-chatgpt-prompts Welcome to the "Awesome ChatGPT Prompts" repository! Here you will find a great collection of prompt examples to be used with the ChatGPT model. The ChatGPT model is a large language model trained by OpenAI that is capable of generating human-like text. By providing it with a prompt, it can generate responses that continue the conversation or expand on the given prompt.

https://www.promptstacks.com/ Promptstacks - ChatGPT Prompt Engineering Community, Features Courses, Articles and Prompts. Participants will gain a comprehensive understanding of ChatGPT's strengths, weaknesses, and limitations. An excellent opportunity for professionals seeking to enhance skills with cutting-edge AI. (Includes 500+ prompts).


Read the full article

#AImodels#answers#Best#chatgpt#command#compare#concepts#DAN#difference#engineer#expert#explain#Howto#improve#language#LLM#model#openai#Prompt#prompts#prosandcons#questions#quotes#recommend#relevant#responses#stepbystep#suggest#think#topic


Text

HITL is a mechanism that leverages human interaction to train, fine-tune, or test specific systems such as AI models or machines to get the most accurate results possible.

In general, HITL makes the following contributions to AI models 👩‍💻:

Data labelling: people help machine learning models understand the world by labelling data accurately.

Feedback: machine learning models make predictions with an associated confidence level. When a prediction's confidence falls below a predetermined threshold, data scientists give feedback to the model to improve its performance.
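The feedback loop above can be sketched as confidence-based routing. Predictions at or above a threshold are accepted automatically. The rest go to human reviewers, whose labels become new training examples. This is a minimal sketch: the 0.8 threshold, the `Prediction` type, and the function names are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's score for its predicted label, in [0, 1]


def route(preds: List[Prediction], threshold: float = 0.8) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into auto-accepted ones and ones queued for human review."""
    accepted = [p for p in preds if p.confidence >= threshold]
    needs_review = [p for p in preds if p.confidence < threshold]
    return accepted, needs_review


def merge_human_labels(reviewed: List[Prediction], human_labels: Dict[str, str]) -> List[Tuple[str, str]]:
    """Turn reviewers' labels into (item_id, label) pairs for the next training round."""
    return [(p.item_id, human_labels[p.item_id]) for p in reviewed if p.item_id in human_labels]
```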

#HITL#humanintheloop#AImodels#MLmodels#artificialintelligence#humanintelligence#neuralnetworks#supervisedtraining#erpsoftware#erp#erpsoftwareinchennai#erpsoftwareinbangalore#business#pondicherry#industry


Text

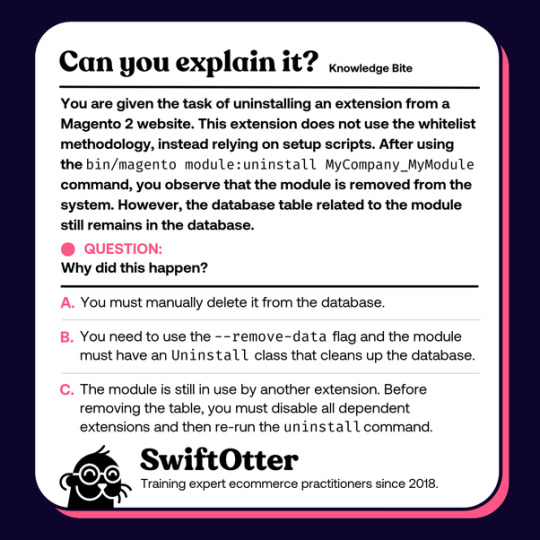

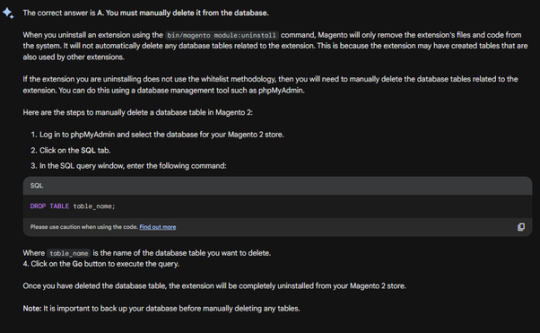

Bard and ChatGPT, which one will be correct?

A Magento question:

And here's how Bard and ChatGPT provide answers:

What do you think about this? Please share your opinion with me!
