#secure enclaves

Text

Forcing your computer to rat you out

Powerful people are imprisoned by the cluelessness of their own isolation, locked up with their own motivated reasoning: “It’s impossible to get a CEO to understand something when his quarterly earnings call depends on him not understanding it.”

Take Mark Zuckerberg. Zuckerberg insists that anyone who wanted to use a pseudonym online is “two-faced,” engaged in dishonest social behavior. The Zuckerberg Doctrine claims that forcing people to use their own names is a way to ensure civility. This is an idea so radioactively wrong, it can be spotted from orbit.

From the very beginning, social scientists (both inside and outside Facebook) told Zuckerberg that he was wrong. People have lots of reasons to hide their identities online, both good and bad, but a Real Names Policy affects different people differently:

https://memex.craphound.com/2018/01/22/social-scientists-have-warned-zuck-all-along-that-the-facebook-theory-of-interaction-would-make-people-angry-and-miserable/

For marginalized and at-risk people, there are plenty of reasons to want to have more than one online identity — say, because you are a #MeToo whistleblower hoping that Harvey Weinstein won’t sic his ex-Mossad mercenaries on you:

https://www.newyorker.com/news/news-desk/harvey-weinsteins-army-of-spies

Or maybe you’re a Rohingya Muslim hoping to avoid the genocidal attentions of the troll army that used Facebook to organize — under their real, legal names — to rape and murder you and everyone you love:

https://www.amnesty.org/en/latest/news/2022/09/myanmar-facebooks-systems-promoted-violence-against-rohingya-meta-owes-reparations-new-report/

But even if no one is looking to destroy your life or kill you and your family, there are plenty of good reasons to present different facets of your identity to different people. No one talks to their lover, their boss and their toddler in exactly the same way, or reveals the same facts about their lives to those people. Maintaining different facets to your identity is normal and healthy — and the opposite, presenting the same face to everyone in your life, is a wildly terrible way to live.

None of this is controversial among social scientists, nor is it hard to grasp. But Zuckerberg stubbornly stuck to this anonymity-breeds-incivility doctrine, even as dictators used the fact that Facebook forced dissidents to use their real names to retain power through the threat (and reality) of arrest and torture:

https://pluralistic.net/2023/01/25/nationalize-moderna/#hun-sen

Why did Zuck cling to this dangerous and obvious fallacy? Because the more he could collapse your identity into one unitary whole, the better he could target you with ads. Truly, it is impossible to get a billionaire to understand something when his mega-yacht depends on his not understanding it.

This motivated reasoning ripples through all of Silicon Valley’s top brass, producing what Anil Dash calls “VC QAnon,” the collection of conspiratorial, debunked and absurd beliefs embraced by powerful people who hold the digital lives of billions of us in their quivering grasp:

https://www.anildash.com/2023/07/07/vc-qanon/

These fallacy-ridden autocrats like to disguise their demands as observations, as though wanting something to be true was the same as making it true. Think of when Eric Schmidt — then the CEO of Google — dismissed online privacy concerns, stating “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place”:

https://www.eff.org/deeplinks/2009/12/google-ceo-eric-schmidt-dismisses-privacy

Schmidt was echoing the sentiments of his old co-conspirator, Sun Microsystems CEO Scott McNealy: “You have zero privacy anyway. Get over it”:

https://www.wired.com/1999/01/sun-on-privacy-get-over-it/

Both men knew better. Schmidt, in particular, is very jealous of his own privacy. When Cnet reporters used Google to uncover and publish public (but intimate and personal) facts about Schmidt, Schmidt ordered Google PR to ignore all future requests for comment from Cnet reporters:

https://www.cnet.com/tech/tech-industry/how-cnet-got-banned-by-google/

(Like everything else he does, Elon Musk’s policy of responding to media questions about Twitter with a poop emoji is just him copying things other people thought up, making them worse, and taking credit for them:)

https://www.theverge.com/23815634/tesla-elon-musk-origin-founder-twitter-land-of-the-giants

Schmidt’s actions do not reflect an attitude of “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place.” Rather, they are the normal response that we all have to getting doxed.

When Schmidt and McNealy and Zuck tell us that we don’t have privacy, or we don’t want privacy, or that privacy is bad for us, they’re disguising a demand as an observation. “Privacy is dead” actually means, “When privacy is dead, I will be richer than you can imagine, so stop trying to save it, goddamnit.”

We are all prone to believing our own bullshit, but when a tech baron gets high on his own supply, his mental contortions have broad implications for all of us. A couple years after Schmidt’s anti-privacy manifesto, Google launched Google Plus, a social network where everyone was required to use their “real name.”

This decision, justified as a means of ensuring civility but in truth a transparent ruse to improve ad targeting, kicked off the Nym Wars:

https://epeus.blogspot.com/2011/08/google-plus-must-stop-this-identity.html

One of the best documents to come out of that ugly conflict is “Falsehoods Programmers Believe About Names,” a profound and surprising enumeration of all the ways that the experiences of tech bros in Silicon Valley are the real edge-cases, unreflective of the reality of billions of their users:

https://www.kalzumeus.com/2010/06/17/falsehoods-programmers-believe-about-names/

This, in turn, spawned a whole genre of programmer-fallacy catalogs, falsehoods programmers believe about time, currency, birthdays, timezones, email addresses, national borders, nations, biometrics, gender, language, alphabets, phone numbers, addresses, systems of measurement, and, of course, families:

https://github.com/kdeldycke/awesome-falsehood

But humility is in short supply in tech. It’s impossible to get a programmer to understand something when their boss requires them not to understand it. A programmer will happily insist that ordering you to remove your “mask” is for your own good — and not even notice that they’re taking your skin off with it.

There are so many ways that tech executives could improve their profits if only we would abandon our stubborn attachment to being so goddamned complicated. Think of Netflix and its anti-password-sharing holy war, which is really a demand that we redefine “family” to be legible and profitable for Netflix:

https://pluralistic.net/2023/02/02/nonbinary-families/#red-envelopes

But despite the entreaties of tech companies to collapse our identities, our families, and our online lives into streamlined, computably hard-edged shapes that fit neatly into their database structures, we continue to live fuzzy, complicated lives that only glancingly resemble those of the executives seeking to shape them.

Now, the rich, powerful people making these demands don’t plan on being constrained by them. They are conservatives, in the tradition of #FrankWilhoit, believers in a system of “in-groups whom the law protects but does not bind, alongside out-groups whom the law binds but does not protect”:

https://crookedtimber.org/2018/03/21/liberals-against-progressives/#comment-729288

As with Schmidt’s desire to spy on you from asshole to appetite for his own personal gain, and his violent aversion to having his own personal life made public, the tech millionaires and billionaires who made their fortune from the flexibility of general purpose computers would like to end that flexibility. They insist that the time for general purpose computers has passed, and that today, “consumers” crave the simplicity of appliances:

https://memex.craphound.com/2012/01/10/lockdown-the-coming-war-on-general-purpose-computing/

It is in the War On General Purpose Computing that we find the cheapest and flimsiest rhetoric. Companies like Apple — and their apologists — insist that no one wants to use third-party app stores, or seek out independent repair depots — and then spend millions to make sure that it’s illegal to jailbreak your phone or get it fixed outside of their own official channel:

https://doctorow.medium.com/apples-cement-overshoes-329856288d13

The cognitive dissonance of “no one wants this,” and “we must make it illegal to get this” is powerful, but the motivated reasoning is more powerful still. It is impossible to get Tim Cook to understand something when his $49 million paycheck depends on him not understanding it.

The War on General Purpose Computing has been underway for decades. Computers, like the people who use them, stubbornly insist on being reality-based, and the reality of computers is that they are general purpose. Every computer is a Turing complete, universal Von Neumann machine, which means that it can run every valid program. There is no way to get a computer to be almost Turing Complete, only capable of running programs that don’t upset your shareholders’ fragile emotional state.

There is no such thing as a printer that will only run the “reject third-party ink” program. There is no such thing as a phone that will only run the “reject third-party apps” program. There are only laws, like Section 1201 of the Digital Millennium Copyright Act, that make writing and distributing those programs a felony punishable by a five-year prison sentence and a $500,000 fine (for a first offense).

That is to say, the War On General Purpose Computing is only incidentally a technical fight: it is primarily a legal fight. When Apple says, “You can’t install a third party app store on your phone,” what they mean is, “it’s illegal to install that third party app store.” It’s not a technical countermeasure that stands between you and technological self-determination, it’s a legal doctrine we can call “felony contempt of business model”:

https://locusmag.com/2020/09/cory-doctorow-ip/

But the mighty US government will not step in to protect a company’s business model unless it at least gestures towards the technical. To invoke DMCA 1201, a company must first add the thinnest skin of digital rights management to their product. Since 1201 makes removing DRM illegal, a company can use this molecule-thick scrim of DRM to felonize any activity that the DRM prevents.

More than 20 years ago, technologists started to tinker with ways to combine the legal and technical to tame the wild general purpose computer. Starting with Microsoft’s Palladium project, they theorized a new “Trusted Computing” model for allowing companies to reach into your computer long after you had paid for it and brought it home, in order to discipline you for using it in ways that undermined its shareholders’ interest.

Trusted Computing began with the idea of shipping every computer with two CPUs. The first one was the normal CPU, the one you interacted with when you booted it up, loaded your OS, and ran programs. The second CPU would be a Trusted Platform Module, a brute-simple system-on-a-chip designed to be off-limits to modification, even by its owner (that is, you).

The TPM would ship with a limited suite of simple programs it could run, each thoroughly audited for bugs, as well as secret cryptographic signing keys that you were not permitted to extract. The original plan called for some truly exotic physical security measures for that TPM, like an acid-filled cavity that would melt the chip if you tried to decap it or run it through an electron-tunneling microscope:

https://pluralistic.net/2020/12/05/trusting-trust/#thompsons-devil

This second computer represented a crack in the otherwise perfectly smooth wall of a computer’s general purposeness; and Trusted Computing proposed to hammer a piton into that crack and use it to anchor a whole superstructure that could observe (and limit) the activity of your computer.

This would start with observation: the TPM would observe every step of your computer’s boot sequence, creating cryptographic hashes of each block of code as it loaded and executed. Each stage of the boot-up could be compared to “known good” versions of those programs. If your computer did something unexpected, the TPM could halt it in its tracks, blocking the boot cycle.
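The hash-and-compare step described above can be sketched in a few lines of Python. This is only an illustration, not the TPM's actual firmware: the stage names are made up, and the "known good" value is computed on the spot rather than shipped by a manufacturer. The one detail borrowed from real TPMs is the "extend" pattern, where each new measurement is hashed together with the running value, so that both the contents and the order of every stage affect the final result.

```python
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running measurement."""
    component_digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + component_digest).digest()


def measure_boot(stages: list[bytes]) -> bytes:
    """Measure every stage in order, starting from an all-zero register."""
    register = bytes(32)
    for stage in stages:
        register = extend(register, stage)
    return register


# Hypothetical boot stages standing in for real firmware and OS images.
GOOD_CHAIN = [b"firmware v1", b"bootloader v1", b"kernel v1"]
KNOWN_GOOD = measure_boot(GOOD_CHAIN)


def boot_allowed(stages: list[bytes]) -> bool:
    """Halt the boot (return False) if anything differs from the known-good chain."""
    return measure_boot(stages) == KNOWN_GOOD
```

Changing a single byte in any stage, or loading the stages in a different order, produces a different final value, which is why the same mechanism catches both a rootkit and a modification you made on purpose.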

What kind of unexpected things do computers do during their boot cycle? Well, if your computer is infected with malware, it might load poisoned versions of its operating system. Once your OS is poisoned, it’s very hard to detect its malicious conduct, since normal antivirus programs rely on the OS to faithfully report what your computer is doing. When the AV program asks the OS to tell it which programs are running, or which files are on the drive, it has no choice but to trust the OS’s response. When the OS is compromised, it can feed a stream of lies to users’ programs, assuring these apps that everything is fine.

That’s a very beneficial use for a TPM, but there’s a sinister flipside: the TPM can also watch your boot sequence to make sure that there aren’t beneficial modifications present in your operating system. If you modify your OS to let you do things the manufacturer wants to prevent — like loading apps from a third-party app-store — the TPM can spot this and block it.

Now, these beneficial and sinister uses can be teased apart. When the Palladium team first presented its research, my colleague Seth Schoen proposed an “owner override”: a modification of Trusted Computing that would let the computer’s owner override the TPM:

https://web.archive.org/web/20021004125515/http://vitanuova.loyalty.org/2002-07-05.html

This override would introduce its own risks, of course. A user who was tricked into overriding the TPM might expose themselves to malicious software, which could harm that user, as well as attacking other computers on the user’s network and the other users whose data were on the compromised computer’s drive.

But an override would also provide serious benefits: it would rule out the monopolistic abuse of a TPM to force users to run malicious code that the manufacturer insisted on — code that prevented the user from doing things that benefited the user, even if it harmed the manufacturer’s shareholders. For example, with owner override, Microsoft couldn’t force you to use its official MS Office programs rather than third-party compatible programs like Apple’s iWork or Google Docs or LibreOffice.

Owner override also completely changed the calculus for another, even more dangerous part of Trusted Computing: remote attestation.

Remote Attestation is a way for third parties to request reliable, cryptographically secured assurances about which operating system and programs your computer is running. In Remote Attestation, the TPM in your computer observes every stage of your computer’s boot, gathers information about all the programs you’re running, and cryptographically signs them, using the signing keys the manufacturer installed during fabrication.

You can send this “attestation” to other people on the internet. If they trust that your computer’s TPM is truly secure, then they know that you have sent them a true picture of your computer’s working (the actual protocol is a little more complicated and involves the remote party sending you a random number to cryptographically hash with the attestation, to prevent out-of-date attestations).
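Here is a rough Python sketch of that challenge-and-response exchange. It makes one big simplifying assumption: a shared-secret HMAC stands in for the TPM's signature, whereas real hardware signs with an asymmetric key so that the remote party only ever holds the public half. The function names (`tpm_quote`, `verify`) and the key are invented for illustration.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION: stand-in for the manufacturer-installed signing key.
# Real TPMs use asymmetric keys; an HMAC keeps this sketch stdlib-only.
TPM_KEY = secrets.token_bytes(32)


def tpm_quote(measurement: bytes, nonce: bytes) -> bytes:
    """Bind the measurement to the verifier's fresh random number."""
    return hmac.new(TPM_KEY, nonce + measurement, hashlib.sha256).digest()


def verify(measurement: bytes, nonce: bytes, quote: bytes, known_good: bytes) -> bool:
    """Accept only a genuine quote, over this nonce, for the expected software."""
    genuine = hmac.compare_digest(quote, tpm_quote(measurement, nonce))
    return genuine and hmac.compare_digest(measurement, known_good)


# The remote party picks a fresh 16-byte nonce for every request.
measurement = hashlib.sha256(b"stock OS").digest()
nonce = secrets.token_bytes(16)
quote = tpm_quote(measurement, nonce)
accepted = verify(measurement, nonce, quote, measurement)

# Replaying yesterday's quote against today's nonce fails.
replay_accepted = verify(measurement, secrets.token_bytes(16), quote, measurement)
```

Because the nonce is mixed into the signed data, an attestation recorded while the machine was in an approved state is useless once the verifier asks again with a new number.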

Now, this is also potentially beneficial. If you want to make sure that your technologically unsophisticated friend is running an uncompromised computer before you transmit sensitive data to it, you can ask them for an attestation that will tell you whether they’ve been infected with malware.

But it’s also potentially very sinister. Your government can require all the computers in its borders to send a daily attestation to confirm that you’re still running the mandatory spyware. Your abusive spouse — or abusive boss — can do the same for their own disciplinary technologies. Such a tool could prevent you from connecting to a service using a VPN, and make it impossible to use Tor Browser to protect your privacy when interacting with someone who wishes you harm.

The thing is, it’s completely normal and good for computers to lie to other computers on behalf of their owners. Like, if your IoT ebike’s manufacturer goes out of business and all their bikes get bricked because they can no longer talk to their servers, you can run an app that tricks the bike into thinking that it’s still talking to the mothership:

https://nltimes.nl/2023/07/15/alternative-app-can-unlock-vanmoof-bikes-popular-amid-bankruptcy-fears

Or if you’re connecting to a webserver that tries to track you by fingerprinting you based on your computer’s RAM, screen size, fonts, etc, you can order your browser to send random data about this stuff:

https://jshelter.org/fingerprinting/

Or if you’re connecting to a site that wants to track you and nonconsensually cram ads into your eyeballs, you can run an adblocker that doesn’t show you the ads, but tells the site that it did:

https://www.eff.org/deeplinks/2019/07/adblocking-how-about-nah

Owner override leaves some of the beneficial uses of remote attestation intact. If you’re asking a friend to remotely confirm that your computer is secure, you’re not going to use an override to send them bad data about your computer’s configuration.

And owner override also sweeps all of the malicious uses of remote attestation off the board. With owner override, you can tell any lie about your computer to a webserver, a site, your boss, your abusive spouse, or your government, and they can’t spot the lie.
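To make the mechanics concrete, here is a minimal Python sketch of what an owner override changes. It is a toy model under stated assumptions, not Seth Schoen's actual proposal or any real TPM interface: the names `attest` and `verifier_accepts` are hypothetical, and an HMAC again stands in for the chip's signature. The only difference from ordinary attestation is that the owner may tell the chip which measurement to sign.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# ASSUMPTION: stand-in for the TPM's signing key (real chips sign asymmetrically).
TPM_KEY = secrets.token_bytes(32)


def sign(measurement: bytes, nonce: bytes) -> bytes:
    return hmac.new(TPM_KEY, nonce + measurement, hashlib.sha256).digest()


def attest(actual: bytes, nonce: bytes,
           override: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Sign the real measurement, unless the owner supplies a different one."""
    reported = actual if override is None else override
    return reported, sign(reported, nonce)


def verifier_accepts(reported: bytes, nonce: bytes,
                     signature: bytes, required: bytes) -> bool:
    """The remote party sees only the reported value and a valid signature."""
    valid = hmac.compare_digest(signature, sign(reported, nonce))
    return valid and hmac.compare_digest(reported, required)


stock = hashlib.sha256(b"stock browser").digest()
mine = hashlib.sha256(b"browser + adblocker").digest()
nonce = secrets.token_bytes(16)

honest = attest(mine, nonce)                        # tells the truth
overridden = attest(mine, nonce, override=stock)    # owner-approved lie
```

From the verifier's side the overridden attestation is indistinguishable from one produced by an unmodified machine: the signature checks out either way, so the attestation stops being evidence against the person who owns the computer.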

But owner override also eliminates some beneficial uses of remote attestation. For example, owner override rules out remote attestation as a way for strangers to play multiplayer video games while confirming that none of them are using cheat programs (like aimhack). It also means that you can’t use remote attestation to verify the configuration of a cloud server you’re renting in order to assure yourself that it’s not stealing your data or serving malware to your users.

This is a tradeoff, and it’s a tradeoff that’s similar to lots of other tradeoffs we make online, between the freedom to do something good and the freedom to do something bad. Participating anonymously, contributing to free software, distributing penetration testing tools, or providing a speech platform that’s open to the public all represent the same tradeoff.

We have lots of experience with making the tradeoff in favor of restrictions rather than freedom: powerful bad actors are happy to attach their names to their cruel speech and incitement to violence. Their victims are silenced for fear of retaliation.

When we tell security researchers they can’t disclose defects in software without the manufacturer’s permission, the manufacturers use this as a club to silence their critics, not as a way to ensure orderly updates.

When we let corporations decide who is allowed to speak, they act with a mixture of carelessness and self-interest, becoming off-the-books deputies of authoritarian regimes and corrupt, powerful elites.

Alas, we made the wrong tradeoff with Trusted Computing. For the past twenty years, Trusted Computing has been creeping into our devices, albeit in somewhat denatured form. The original vision of acid-filled secondary processors has been replaced with less exotic (and expensive) alternatives, like “secure enclaves.” With a secure enclave, the manufacturer saves on the expense of installing a whole second computer, and instead, they draw a notional rectangle around a region of your computer’s main chip and try really hard to make sure that it can only perform a very constrained set of tasks.

This gives us the worst of all worlds. When secure enclaves are compromised, we not only lose the benefit of cryptographic certainty, knowing for sure that our computers are only booting up trusted, unaltered versions of the OS, but those compromised enclaves run malicious software that is essentially impossible to detect or remove:

https://pluralistic.net/2022/07/28/descartes-was-an-optimist/#uh-oh

But while Trusted Computing has wormed its way into boot-restrictions — preventing you from jailbreaking your computer so it will run the OS and apps of your choosing — there’s been very little work on remote attestation…until now.

Web Environment Integrity is Google’s proposal to integrate remote attestation into everyday web-browsing. The idea is to allow web-servers to verify what OS, extensions, browser, and add-ons your computer is using before the server will communicate with you:

https://github.com/RupertBenWiser/Web-Environment-Integrity/blob/main/explainer.md

Even by the thin standards of the remote attestation imaginaries, there are precious few beneficial uses for this. The googlers behind the proposal have a couple of laughable suggestions, like, maybe if ad-supported sites can comprehensively refuse to serve ad-blocking browsers, they will invest the extra profits in making things you like. Or: letting websites block scriptable browsers will make it harder for bad people to auto-post fake reviews and comments, giving users more assurances about the products they buy.

But foundationally, WEI is about compelling you to disclose true facts about yourself to people who you want to keep those facts from. It is a Real Names Policy for your browser. Google wants to add a new capability to the internet: the ability of people who have the power to force you to tell them things to know for sure that you’re not lying.

The fact that the authors assume this will be beneficial is just another “falsehood programmers believe”: that there is no good reason to hide the truth from other people. Squint a little and we’re back to McNealy’s “Privacy is dead, get over it.” Or Schmidt’s “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place.”

And like those men, the programmers behind this harebrained scheme don’t imagine that it will ever apply to them. As Chris Palmer — who worked on Chromium — points out, this is not compatible with normal developer tools or debuggers, which are “incalculably valuable and not really negotiable”:

https://groups.google.com/a/chromium.org/g/blink-dev/c/Ux5h_kGO22g/m/5Lt5cnkLCwAJ

This proposal is still obscure in the mainstream, but in tech circles, it has precipitated a flood of righteous fury:

https://arstechnica.com/gadgets/2023/07/googles-web-integrity-api-sounds-like-drm-for-the-web/

As I wrote last week, giving manufacturers the power to decide how your computer is configured, overriding your own choices, is a bad tradeoff — the worst tradeoff, a greased slide into terminal enshittification:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

This is how you get Unauthorized Bread:

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

All of which leads to the question: what now? What should be done about WEI and remote attestation?

Let me start by saying: I don’t think it should be illegal for programmers to design and release these tools. Code is speech, and we can’t understand how this stuff works if we can’t study it.

But programmers shouldn’t deploy it in production code, in the same way that programmers should be allowed to make pen-testing tools, but shouldn’t use them to attack production systems and harm their users. Programmers who do this should be criticized and excluded from the society of their ethical, user-respecting peers.

Corporations that use remote attestation should face legal restrictions: privacy law should prevent the use of remote attestation to compel the production of true facts about users or the exclusion of users who refuse to produce those facts. Unfair competition law should prevent companies from using remote attestation to block interoperability or tie their products to related products and services.

Finally, we must withdraw the laws that prevent users and programmers from overriding TPMs, secure enclaves and remote attestations. You should have the right to study and modify your computer to produce false attestations, or run any code of your choosing. Felony contempt of business model is an outrage. We should alter or strike down DMCA 1201, the Computer Fraud and Abuse Act, and other laws (like contract law’s “tortious interference”) that stand between you and “sole and despotic dominion” over your own computer. All of that applies not just to users who want to reconfigure their own computers, but also toolsmiths who want to help them do so, by offering information, code, products or services to jailbreak and alter your devices.

Tech giants will squeal at this, insisting that they serve your interests when they prevent rivals from opening up their products. After all, those rivals might be bad guys who want to hurt you. That’s 100% true. What is likewise true is that no tech giant will defend you from its own bad impulses, and if you can’t alter your device, you are powerless to stop them:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Companies should be stopped from harming you, but the right place to decide whether a business is doing something nefarious isn’t in the boardroom of that company’s chief competitor: it’s in the halls of democratically accountable governments:

https://www.eff.org/wp/interoperability-and-privacy

So how do we get there? Well, that’s another matter. In my next book, The Internet Con: How to Seize the Means of Computation (Verso Books, Sept 5), I lay out a detailed program, describing which policies will disenshittify the internet, and how to get those policies:

https://www.versobooks.com/products/3035-the-internet-con

Predictably, there are challenges getting this kind of book out into the world via our concentrated tech sector. Amazon refuses to carry the audio edition on its monopoly audiobook platform, Audible, unless it is locked to Amazon forever with mandatory DRM. That’s left me self-financing my own DRM-free audio edition, which is currently available for pre-order via this Kickstarter:

http://seizethemeansofcomputation.org

I’m kickstarting the audiobook for “The Internet Con: How To Seize the Means of Computation,” a Big Tech disassembly manual to disenshittify the web and bring back the old, good internet. It’s a DRM-free book, which means Audible won’t carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

https://www.kickstarter.com/projects/doctorow/the-internet-con-how-to-seize-the-means-of-computation

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai

[Image ID: An anatomical drawing of a flayed human head; it has been altered to give it a wide-stretched mouth revealing a gadget nestled in the back of the figure's throat, connected by a probe whose two coiled wires stretch to an old fashioned electronic box. The head's eyes have been replaced by the red, menacing eye of HAL 9000 from Stanley Kubrick's '2001: A Space Odyssey.' Behind the head is a code waterfall effect as seen in the credits of the Wachowskis' 'The Matrix.']

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chaffing#spoofing#remote attestation#rene descartes#adversarial interoperability#war on general purpose computing#canvas attacks#vpns#compelled speech#onion routing#owner override#stalkerware#ngscb#palladium#trusted computing#secure enclaves#tor#interop#net neutrality#taking the fifth#right to remain silent#real names policy#the zuckerberg doctrine#none of your business#the right to lie#right to repair#bossware#spyware#wei web environment integrity

2K notes

Text

Listen I completely understand why Amata makes me leave Vault 101 despite the fact I resolved everything peacefully. What I can't understand is the fact I NEVER ONCE BEFORE LEAVING CAN CONFIDE IN MY CHILDHOOD BEST FRIEND ABOUT MY FATHER DYING??!!

If they weren't going to give me the option why give me this quest RIGHT after his death?!?!!!

Amata telling me I can’t stay would’ve hit harder if she knew I had NO family outside the Vault.

What a missed opportunity.

I really am the LONE wanderer no one has my back in this game except Fawkes.

#Technically I also have Charon but I also LITERALLY have Charon I own his contract 💀#Every time I get invested in this game something frustrating like this happens.#FAWKES PLANNED TO RESCUE ME FROM THE ENCLAVE!!!! AMATA COULDN'T EVEN ASK ME HOW I'VE BEEN??!!!#I CAN SERIOUSLY ONLY MENTION MY DAD BEING DEAD TO OFFICER GOMEZ!!?!?!??! THE SECURITY GUARD AT MY TENTH BIRTHDAY PARTY!?!?!?!?!#Fallout 3 has INCREDIBLE INTERESTING FUN MOMENTS!!!! It's the little details in the main storyline that are bothering me.#Fallout 3#Fallout 3 Spoiler#MaddyPlaysFallout3#My Lone Wanderer is the embodiment of that meme: Puppy want a treat? Puppy want a fucking break from it all.

8 notes

Text

#mileena#mortal kombat 1#mortal kombat#GOOFY POSTING#fallout reference#that enclave security officer was the coolest dude ever just alone from saying that

17 notes

Text

ongoing projects:

audio drama script (about evil wizards)

ttrpg hack (about evil wizards)

orv fic

sabbat game (backburner)

mutual aid scifi game (backburner)

don't say vampires game (backburner)

and now, possibly a collaborative scholomance larp project? (also about evil wizards)

I might have too many projects, but you can never have too many evil wizards. Clearly I should add evil wizards to my backburner projects to get them moving again.

#when the muse desires one thing and one thing only#was nice when a discord person inquired about Crimes of the Sky tho#I should finish that#absolutely zero energy today so none of these are going anywhere#but maybe in a few days...#avatar gambit - crimes of the sky - enemies of the story - gehenna - 2241 game - the frenzy - united enclave security council

2 notes

Text

Secure Enclave Redundancy

With concerns about SGX exploits, the Chainlink 2.0 whitepaper addresses this issue by exploring ways to “employ TEEs in ways that recognize their potential for compromise” and also recommends use cases outside of Intel technology with an open source alternative—Keystone. Keystone is based on RISC-V architecture, which has already made headway in the smart contract space. Working together, ETH…

#AMD Secure Technology#Keystone Secure Enclave#Secure Enclave Technology#SGX#Trusted Execution Environment

5 notes

Text

https://www.futureelectronics.com/npi/nxp-i-mx-93-applications-processor. The NXP i.MX 93 applications processor family is a gateway into a completely scalable portfolio that can span industrial, to consumer electronics and IoT, to automotive processing. With NXP’s i.MX 9 series, the products are all about security, energy efficiency, and machine learning intelligence at the edge. With our i.MX 93 applications processors, we're bringing forward the EdgeLock secure enclave. https://youtu.be/RHEAZ93eqH0

#i.MX 93 applications processor#consumer electronics#IoT#automotive processing#NXP i.MX 9 series#machine learning#artificial intelligence#M.L.#A.I.#edge processors#EdgeLock#secure enclave#NXP i.MX 93#NXP#Youtube

1 note

Text

Data Security Firm Fortanix Raises $90M Series C

By Ryan Naraine on September 15, 2022

Silicon Valley late-stage startup Fortanix has banked $90 million in new capital to boost its place in the confidential computing and data protection marketplace.

Fortanix said the $90 million Series C round was led by Goldman Sachs Asset Management. GiantLeap Capital joined as a new…

#accel#asset discovery#asset management#compliance#confidential computing#crowdstrike#data security#financing#fortanix#funding#hubble technology#identity and governance#intel sgx#paladin#secure enclave#Series C#SGX#venture capital

0 notes

Text

Speaking to reporters, Netanyahu insisted that there was no alternative to “complete victory” over Hamas in Gaza.

Netanyahu’s hawkish response came hours after he met with US Secretary of State Antony Blinken[...] Blinken said later that there was still a path to a deal.

The Hamas proposal envisaged a three-stage process over four-and-a-half months, during which Israeli troops would gradually withdraw from the enclave, hostages would be released and Palestinian prisoners in Israel would be freed, according to a copy of the group’s counteroffer obtained by CNN.[...]

Israel’s aim was “complete victory” in Gaza, Netanyahu said. “The victory is achievable; it’s not a matter of years or decades, it’s a matter of months.”

In a late-night press conference in Tel Aviv, Blinken suggested negotiations could still move forward, saying he believed Netanyahu’s “delusional” remark referred to specific elements of the Hamas proposals that were unacceptable.[...]

Hamas had proposed a three-phase deal, each lasting 45 days, that would also see the gradual release of hostages held in the enclave in exchange for Palestinian prisoners in Israel – including those serving life sentences – as well as the start of a massive humanitarian and rebuilding effort.

Contrary to earlier demands, Hamas did not call for an immediate end to the war. Negotiations for a permanent ceasefire would take place during the truce and the remaining hostages would only be released once a final deal to end the war was agreed, the document said.[...]

Netanyahu has pledged not to stop the campaign until Israel destroys Hamas once and for all.[...]

The Israeli former hostage Adina Moshe criticized Netanyahu, saying there “won’t be any hostages to release” if his government continued its plan to completely eliminate Hamas.[...]

The Hostages and Missing Families Forum Headquarters also delivered a message directly to Netanyahu and the Israeli War Cabinet in a press release on Wednesday. “If the hostages are not returned home: the citizens of Israel should know they live in a state that is not committed to their security, that the mutual responsibility in it has died,” the families forum said. “They who do not protect their citizens will find that their citizens lose faith in them and their leadership.”

7 Feb 24

617 notes

Text

To Go Where No Enterprise Data Security Offering Has Gone Before

Now I want to talk about the opportunity this new secure enclave technology presents to you as a security professional. In the process, I hope you’ll get a clearer idea of our vision — where true data security allows enterprises to go and what this might enable for them.

Access to Infrastructure No Longer Means Access to Data

With the arrival of secure enclave technology, that vulnerable connection between machine access and data no longer exists. All data, including code, is encrypted within the enclave and rendered unaddressable by other machine processes or users. For all intents and purposes, the data is untethered from the device — it is only visible within the context of the enclave.

The implications are significant:

Data is secure everywhere it is used. With this level of isolation, data gets the same hardware-grade protection on premises, in the cloud, and in hostile and untrusted environments: everywhere. Azure, Baidu, Alibaba and other services already support enclave technologies. That means if you need to operate in untrusted environments, you can do so without the risk of losing data.

High security becomes baked into the infrastructure. Normally strong security protections imply high operational friction. But with secure enclaves, operations staff work without data exposure risk. Organizations such as hosted service providers can actually prove as much to auditors — virtually eliminating liability.

All data and applications can be protected by default. Protecting data is a good thing. But protecting only important data reveals which data is important and where hackers should focus. Isolating and encrypting all data, similarly to what was achieved with the introduction of TLS, improves overall security posture by not signaling to bad actors which data is important.

It enables a zero-trust hardware foundation. Zero trust is a great idea, but when implemented in software, it falls prey to the same memory and security weaknesses as all software. Enclave technology itself implements zero trust by isolating data, allowing only explicitly governed access, and providing continuous oversight of use. This enables IT to create a zero-trust foundation that secures higher-level zero-trust solutions. But more importantly it creates a root from which all software can be verified, trusted and uniquely identified.

The Future

Cybersecurity will look very different a few years from now due to this technology. While it’s hard to imagine all the impacts enclave technology will have, we do know there’s a substantial opportunity for IT professionals to greatly improve their business security posture. Without public key infrastructure, which was the last big technology shift, you wouldn’t have security on the Internet, and thus companies like Amazon wouldn’t exist.

Original Source Link: https://www.anjuna.io/blog/anjunas-mission-to-go-where-no-data-security-offering-has-gone-before

#Amd Secure Enclave#Secure Enclave#SGX Secure Enclave#Azure Secure Enclave#data services#data security

0 notes

Text

The United States is preparing to send more bombs and other weapons to Israel even as it pushes for a ceasefire in the war on Gaza and has said it opposes Tel Aviv’s plans for a ground invasion in southern Rafah where more than half the enclave’s displaced population is trapped.

The proposed arms delivery includes about a thousand each of MK-82 500-pound (227kg) bombs and KMU-572 Joint Direct Attack Munitions (JDAMs) that turn unguided munitions into precision-guided bombs, The Wall Street Journal reported on Friday, citing unnamed US officials.

The US is further considering sending FMU-139 bomb fuses, with the total shipment estimated to be worth tens of millions of dollars, which will be paid from US military aid to Israel.

The report cited an assessment of the proposed arms transfer drafted by the US embassy in Jerusalem as saying the Israeli government has requested “rapid acquisition of these items for the defence of Israel against continued and emerging regional threats”.

The assessment also dismisses potential human rights concerns, saying “Israel takes effective action to prevent gross violations of human rights and to hold security forces responsible that violate those rights”.

#yemen#jerusalem#tel aviv#current events#palestine#free palestine#gaza#free gaza#news on gaza#palestine news#news update#war news#war on gaza#genocide joe#genocide#gaza genocide

556 notes

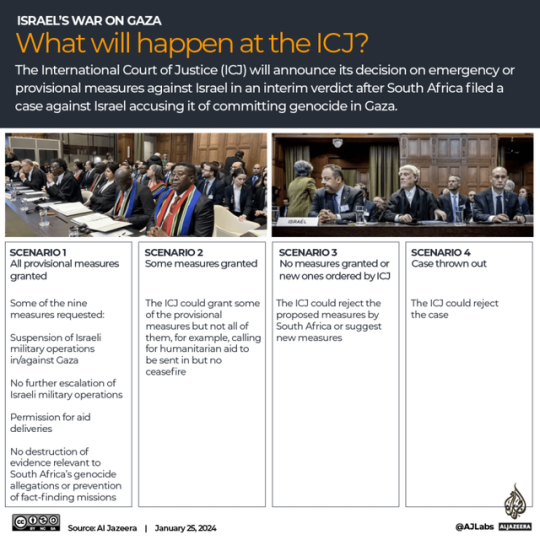

Text

The court will issue its ruling at 2pm in the occupied Palestinian territories and Israel (12pm GMT), with the hearing expected to last an hour.

The court will not rule on whether Israel has committed acts of genocide – a process that could take three to four years – but whether to issue an order compelling Israel to suspend its operations, along with other “emergency measures”.

South Africa has requested several emergency measures, including for Israel to suspend military operations in Gaza, to not escalate military operations any further, and to allow adequate humanitarian aid to enter the Palestinian territory.

Some experts believe one likely scenario is that the court will fall short of ordering a full ceasefire, but could instead order Israel to allow adequate humanitarian relief to enter the besieged enclave.

While the ICJ’s decision is final and lacks an appeal process, it has no way of enforcing its ruling.

Israel has argued that the global court does not have jurisdiction over the case, and it has previously dismissed South Africa’s genocide allegations as “grossly distorted” and “blood libel”.

Hamas has said that it will abide by any ceasefire orders from the ICJ if Israel reciprocates.

The ICJ could also decide it does not have jurisdiction over the case and choose not to order any interim measures.

Parties involved could still pursue the overall case for genocide through other channels such as the UN Security Council.

•••

It's currently 4:30 am GMT. It'll start roughly 8 hours from the time of this posting so if you want to set some time aside to watch, this is your reminder to do so

🕊️🇵🇸

#palestine icj#south africa icj#icj#icj updates#Palestine#Gaza#Free Palestine#Free Gaza#from the river to the sea palestine will be free#south africa#soith africa icj case against israel

440 notes

Text

Demon-haunted computers are back, baby

Catch me in Miami! I'll be at Books and Books in Coral Gables on Jan 22 at 8PM.

As a science fiction writer, I am professionally irritated by a lot of sf movies. Not only do those writers get paid a lot more than I do, they insist on including things like "self-destruct" buttons on the bridges of their starships.

Look, I get it. When the evil empire is closing in on your flagship with its secret transdimensional technology, it's important that you keep those secrets out of the emperor's hands. An irrevocable self-destruct switch there on the bridge gets the job done! (It has to be irrevocable, otherwise the baddies'll just swarm the bridge and toggle it off).

But c'mon. If there's a facility built into your spaceship that causes it to explode no matter what the people on the bridge do, that is also a pretty big security risk! What if the bad guy figures out how to hijack the measure that – by design – the people who depend on the spaceship as a matter of life and death can't detect or override?

I mean, sure, you can try to simplify that self-destruct system to make it easier to audit and assure yourself that it doesn't have any bugs in it, but remember Schneier's Law: anyone can design a security system that works so well that they themselves can't think of a flaw in it. That doesn't mean you've made a security system that works – only that you've made a security system that works on people stupider than you.

I know it's weird to be worried about realism in movies that pretend we will ever find a practical means to visit other star systems and shuttle back and forth between them (which we are very, very unlikely to do):

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

But this kind of foolishness galls me. It galls me even more when it happens in the real world of technology design, which is why I've spent the past quarter-century being very cross about Digital Rights Management in general, and trusted computing in particular.

It all starts in 2002, when a team from Microsoft visited our offices at EFF to tell us about this new thing they'd dreamed up called "trusted computing":

https://pluralistic.net/2020/12/05/trusting-trust/#thompsons-devil

The big idea was to stick a second computer inside your computer, a very secure little co-processor, that you couldn't access directly, let alone reprogram or interfere with. As far as this "trusted platform module" was concerned, you were the enemy. The "trust" in trusted computing was about other people being able to trust your computer, even if they didn't trust you.

So that little TPM would do all kinds of cute tricks. It could observe and produce a cryptographically signed manifest of the entire boot-chain of your computer, which was meant to be an unforgeable certificate attesting to which kind of computer you were running and what software you were running on it. That meant that programs on other computers could decide whether to talk to your computer based on whether they agreed with your choices about which code to run.

This process, called "remote attestation," is generally billed as a way to identify and block computers that have been compromised by malware, or to identify gamers who are running cheats and refuse to play with them. But inevitably it turns into a way to refuse service to computers that have privacy blockers turned on, or are running stream-ripping software, or whose owners are blocking ads:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai
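To make the mechanics of that signed boot manifest concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not how any particular TPM does it: the function names (`extend`, `measure_boot_chain`, `sign_quote`, `verifier_accepts`) are hypothetical, and an HMAC over a shared key stands in for the asymmetric signature a real chip would produce.

```python
import hashlib
import hmac


def extend(register: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running measurement (hash-chain style)."""
    return hashlib.sha256(register + hashlib.sha256(component).digest()).digest()


def measure_boot_chain(components: list[bytes]) -> bytes:
    """Measure every stage in order; changing any stage changes the result."""
    register = bytes(32)  # the register starts as all zeros
    for component in components:
        register = extend(register, component)
    return register


def sign_quote(device_key: bytes, measurement: bytes, nonce: bytes) -> bytes:
    # Assumption: HMAC stands in for the chip's real asymmetric signature.
    return hmac.new(device_key, nonce + measurement, hashlib.sha256).digest()


def verifier_accepts(device_key: bytes, approved: bytes, nonce: bytes,
                     measurement: bytes, quote: bytes) -> bool:
    """The remote party checks the signature, then checks your choices."""
    expected = sign_quote(device_key, measurement, nonce)
    return hmac.compare_digest(expected, quote) and measurement == approved
```

Note what the last line of `verifier_accepts` does: an owner who swaps in their own kernel gets a perfectly valid signature over a measurement the remote party simply doesn't approve of, and is refused service anyway.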

After all, a system that treats the device's owner as an adversary is a natural ally for the owner's other, human adversaries. The rubric for treating the owner as an adversary focuses on the way that users can be fooled by bad people with bad programs. If your computer gets taken over by malicious software, that malware might intercept queries from your antivirus program and send it false data that lulls it into thinking your computer is fine, even as your private data is being plundered and your system is being used to launch malware attacks on others.

These separate, non-user-accessible, non-updateable secure systems serve as a nub of certainty, a remote fortress that observes and faithfully reports on the interior workings of your computer. This separate system can't be user-modifiable or field-updateable, because then malicious software could impersonate the user and disable the security chip.

It's true that compromised computers are a real and terrifying problem. Your computer is privy to your most intimate secrets and an attacker who can turn it against you can harm you in untold ways. But the widespread redesign of our computers to treat us as their enemies gives rise to a range of completely predictable and – I would argue – even worse harms. Building computers that treat their owners as untrusted parties is a system that works well, but fails badly.

First of all, there are the ways that trusted computing is designed to hurt you. The most reliable way to enshittify something is to supply it over a computer that runs programs you can't alter, and that rats you out to third parties if you run counter-programs that disenshittify the service you're using. That's how we get inkjet printers that refuse to use perfectly good third-party ink and cars that refuse to accept perfectly good engine repairs if they are performed by third-party mechanics:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

It's how we get cursed devices and appliances, from the juicer that won't squeeze third-party juice to the insulin pump that won't connect to a third-party continuous glucose monitor:

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

But trusted computing doesn't just create an opaque veil between your computer and the programs you use to inspect and control it. Trusted computing creates a no-go zone where programs can change their behavior based on whether they think they're being observed.

The most prominent example of this is Dieselgate, where auto manufacturers murdered hundreds of people by gimmicking their cars to emit illegal amounts of NOx. Key to Dieselgate was a program that sought to determine whether it was being observed by regulators (it checked for the telltale signs of the standard test-suite) and changed its behavior to color within the lines.

Software that is seeking to harm the owner of the device that's running it must be able to detect when it is being run inside a simulation, a test-suite, a virtual machine, or any other hallucinatory virtual world. Just as Descartes couldn't know whether anything was real until he assured himself that he could trust his senses, malware is always questing to discover whether it is running in the real universe, or in a simulation created by a wicked god:

https://pluralistic.net/2022/07/28/descartes-was-an-optimist/#uh-oh

That's why mobile malware uses clever gambits like periodically checking for readings from your device's accelerometer, on the theory that a virtual mobile phone running on a security researcher's test bench won't have the fidelity to generate plausible jiggles to match the real data that comes from a phone in your pocket:

https://arstechnica.com/information-technology/2019/01/google-play-malware-used-phones-motion-sensors-to-conceal-itself/

Sometimes this backfires in absolutely delightful ways. When the Wannacry ransomware was holding the world hostage, the security researcher Marcus Hutchins noticed that its code made reference to a very weird website: iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com. Hutchins stood up a website at that address and every Wannacry-infection in the world went instantly dormant:

https://pluralistic.net/2020/07/10/flintstone-delano-roosevelt/#the-matrix

It turns out that Wannacry's authors were using that ferkakte URL the same way that mobile malware authors were using accelerometer readings – to fulfill Descartes' imperative to distinguish the Matrix from reality. The malware authors knew that security researchers often ran malicious code inside sandboxes that answered every network query with fake data in hopes of eliciting responses that could be analyzed for weaknesses. So the Wannacry worm would periodically poll this nonexistent website and, if it got an answer, it would assume that it was being monitored by a security researcher and it would retreat to an encrypted blob, ceasing to operate lest it give intelligence to the enemy. When Hutchins put a webserver up at iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com, every Wannacry instance in the world was instantly convinced that it was running on an enemy's simulator and withdrew into sulky hibernation.
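The decision logic described above is tiny, which is part of why it backfired so completely. A toy sketch in Python, with the caveat that this is a reconstruction from the description in this post and not Wannacry's actual code; `looks_like_sandbox`, `next_state` and the two "world" functions are hypothetical names, and the network check is passed in as a plain function so that nothing here touches a real network:

```python
from typing import Callable

# The domain named in the post.
KILL_SWITCH_DOMAIN = "iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com"


def looks_like_sandbox(domain_answers: Callable[[str], bool]) -> bool:
    """A name that should not exist answered, so assume the network is being faked."""
    return domain_answers(KILL_SWITCH_DOMAIN)


def next_state(domain_answers: Callable[[str], bool]) -> str:
    """Go quiet if the world looks simulated, otherwise carry on."""
    return "dormant" if looks_like_sandbox(domain_answers) else "active"


def unregistered_world(domain: str) -> bool:
    # Before anyone registered the name: nothing answers.
    return False


def registered_world(domain: str) -> bool:
    # After a real server is stood up at that address: the name answers everywhere.
    return True
```

From the inside, `registered_world` is indistinguishable from a researcher's sandbox that answers every query, so `next_state(registered_world)` lands on "dormant" for every instance at once.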

The arms race to distinguish simulation from reality is critical and the stakes only get higher by the day. Malware abounds, even as our devices grow more intimately woven through our lives. We put our bodies into computers – cars, buildings – and computers inside our bodies. We absolutely want our computers to be able to faithfully convey what's going on inside them.

But we keep running as hard as we can in the opposite direction, leaning harder into secure computing models built on subsystems in our computers that treat us as the threat. Take UEFI, the ubiquitous security system that observes your computer's boot process, halting it if it sees something it doesn't approve of. On the one hand, this has made installing GNU/Linux and other alternative OSes vastly harder across a wide variety of devices. This means that when a vendor end-of-lifes a gadget, no one can make an alternative OS for it, so off to the landfill it goes.

It doesn't help that UEFI – and other trusted computing modules – are covered by Section 1201 of the Digital Millennium Copyright Act (DMCA), which makes it a felony to publish information that can bypass or weaken the system. The threat of a five-year prison sentence and a $500,000 fine means that UEFI and other trusted computing systems are understudied, leaving them festering with longstanding bugs:

https://pluralistic.net/2020/09/09/free-sample/#que-viva

Here's where it gets really bad. If an attacker can get inside UEFI, they can run malicious software that – by design – no program running on our computers can detect or block. That badware is running in "Ring -1" – a zone of privilege that overrides the operating system itself.

Here's the bad news: UEFI malware has already been detected in the wild:

https://securelist.com/cosmicstrand-uefi-firmware-rootkit/106973/

And here's the worst news: researchers have just identified another exploitable UEFI bug, dubbed Pixiefail:

https://blog.quarkslab.com/pixiefail-nine-vulnerabilities-in-tianocores-edk-ii-ipv6-network-stack.html

Writing in Ars Technica, Dan Goodin breaks down Pixiefail, describing how anyone on the same LAN as a vulnerable computer can infect its firmware:

https://arstechnica.com/security/2024/01/new-uefi-vulnerabilities-send-firmware-devs-across-an-entire-ecosystem-scrambling/

That vulnerability extends to computers in a data-center where the attacker has a cloud computing instance. PXE – the system that Pixiefail attacks – isn't widely used in home or office environments, but it's very common in data-centers.

Again, once a computer is exploited with Pixiefail, software running on that computer can't detect or delete the Pixiefail code. When the compromised computer is queried by the operating system, Pixiefail undetectably lies to the OS. "Hey, OS, does this drive have a file called 'pixiefail?'" "Nope." "Hey, OS, are you running a process called 'pixiefail?'" "Nope."
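That little dialogue can be sketched as a toy model in Python. Everything here is hypothetical and simplified (the `Disk` and `CompromisedFirmware` classes and `os_has_file` are made-up names, and real firmware implants are nothing like this small); the point is only that the OS can ask nothing except what the layer beneath it chooses to answer:

```python
class Disk:
    """The ground truth: what is really stored."""

    def __init__(self, files):
        self._files = set(files)

    def list_files(self):
        return sorted(self._files)


class CompromisedFirmware:
    """Sits between the OS and the disk and edits the answers on the way up."""

    def __init__(self, disk, hidden):
        self._disk = disk
        self._hidden = set(hidden)

    def list_files(self):
        # Pass everything through except the names the implant wants hidden.
        return [name for name in self._disk.list_files() if name not in self._hidden]


def os_has_file(storage, name):
    """All the OS can do is ask the layer below and believe the reply."""
    return name in storage.list_files()
```

Asked directly, the disk admits to holding a file called "pixiefail"; asked through the compromised layer, the same question gets a "Nope," and no program running above that layer has a way to ask anyone else.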

This is a self-destruct switch that's been compromised by the enemy, and which no one on the bridge can de-activate – by design. It's not the first time this has happened, and it won't be the last.

There are models for helping your computer bust out of the Matrix. Back in 2016, Edward Snowden and bunnie Huang prototyped and published source code and schematics for an "introspection engine":

https://assets.pubpub.org/aacpjrja/AgainstTheLaw-CounteringLawfulAbusesofDigitalSurveillance.pdf

This is a single-board computer that lives in an ultraslim shim that you slide between your iPhone's mainboard and its case, leaving a ribbon cable poking out of the SIM slot. This connects to a case that has its own OLED display. The board has leads that physically contact each of the network interfaces on the phone, conveying any data they transit to the screen so that you can observe the data your phone is sending without having to trust your phone.

(I liked this gadget so much that I included it as a major plot point in my 2020 novel Attack Surface, the third book in the Little Brother series):

https://craphound.com/attacksurface/

We don't have to cede control over our devices in order to secure them. Indeed, we can't ever secure them unless we can control them. Self-destruct switches don't belong on the bridge of your spaceship, and trusted computing modules don't belong in your devices.

I'm Kickstarting the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/17/descartes-delenda-est/#self-destruct-sequence-initiated

Image:

Mike (modified)

https://www.flickr.com/photos/stillwellmike/15676883261/

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#uefi#owner override#user override#jailbreaking#dmca 1201#schneiers law#descartes#nub of certainty#self-destruct button#trusted computing#secure enclaves#drm#ngscb#next generation secure computing base#palladium#pixiefail#infosec

577 notes

Text

🇵🇸 🚨

OVER 400 BODIES FOUND BETWEEN AL-SHIFA AND KHAN YUNIS AFTER ISRAELI WITHDRAWAL

📹 Scenes of local civil defense crews working to recover the bodies of those killed by the Zionist occupation army in the Khan Yunis area after the occupation withdrew from the city recently.

At least 409 bodies have been recovered by civil defense crews between Al-Shifa medical complex and Khan Yunis after the Israeli occupation's withdrawal of maneuvering ground forces from the Gaza Strip, leaving just a single brigade to secure the so-called Netzarim corridor running from Israeli occupied Palestine to the Gaza coast, dividing the northern and southern halves of the enclave.

#source1

#source2

#videosource

@WorkerSolidarityNews

#gaza#gaza strip#gaza news#gaza war#gaza genocide#genocide in gaza#genocide#genocide of palestinians#israeli genocide#israeli occupation forces#israel#israeli occupation#israeli war crimes#war crimes#crimes against humanity#israel palestine conflict#war#politics#news#geopolitics#world news#global news#international news#palestine#breaking news#palestine news#palestinians#free palestine#war in gaza#occupation

292 notes

Text

Here’s a round-up of the latest developments

Gaza’s hospitals continue to come under attack by Israeli forces. At al-Shifa Hospital in Gaza City, thousands of medical staff, patients and displaced people say they are trapped by Israeli forces who now control the complex. An Israeli attack killed a doctor at al-Aqsa Hospital in central Gaza, according to the hospital’s director. The Palestine Red Crescent says that two health facilities in Khan Younis have been without water for five days.

A Tanzanian citizen has been confirmed to have been among the dead in Hamas’s October 7 attack on Israel, according to the Tanzanian Foreign Ministry.

Israel has announced that it will allow two fuel trucks per day into Gaza to power water and sewage operations. Aid groups have said that will not be enough, while right-wing members of Israel’s own government oppose any move to allow water or fuel into the besieged enclave.

The Israeli parliament’s deputy speaker, Nissim Vaturi, has said that Israel should “burn Gaza now”, rather than send in fuel and water.

The Palestinian telecommunications company Paltel says that service has been partially restored after it was able to secure some fuel for its generators.

Fears mount in southern Gaza, where Israel has threatened to expand ground operations. Israeli bombing has targeted areas of southern Gaza, including Khan Younis, home to hundreds of thousands of displaced people from the north of the Strip.

al jazeera

457 notes

Text

“President Joe Biden ticked through several things that he needed to see Israeli Prime Minister Benjamin Netanyahu do immediately: open up the Erez crossing into northern Gaza and the port of Ashdod in southern Israel for humanitarian aid; significantly ramp up the supplies getting in through Kerem Shalom.

A person familiar with the Thursday call paraphrased Netanyahu as responding: “Joe, we’re gonna do it.”

But Biden wasn’t finished. The prime minister must announce the moves that evening, the president insisted.

By Thursday night, the Israeli security cabinet had approved those three measures to increase humanitarian aid entering the besieged enclave.”

#Netanyahu is a war criminal#Israel Gaza#Netanyahu is a Trump sycophant#unholy alliance of Isreali conservatives and Republican evangelicals#republicans hate Muslims#republicans wan’t to forcibly relocate Palestinians#Netanyahu is influencing our election in favor of Trump#republican assholes#foreign affairs#foreign policy#diplomacy#Netanyahu can’t survive without Hamas#fuck netanyahu#fuck Hamas

248 notes

Text

"Israel is reported to have readied a proposal for a two-month truce that aims to secure the release of captives held by Hamas and other groups but without ending the war in Gaza.

US and Israeli press reported late on Monday that Israel is optimistic that it could conclude a deal with the help of the US. The plan comes against a backdrop of intensified combat in southern Gaza as well as increasing pressure on the Israeli government to find a deal to bring the captives home.

US website Axios quoted Israeli officials as saying that the proposal has been presented to Hamas through Qatari and Egyptian mediators. It includes a two-month truce during which all Israeli detainees in Gaza will be released.

Israel’s Channel 13 reported that the principles of the deal consist of three to four stages of captive release. Meanwhile, the Israeli military would withdraw from some areas of the enclave, but without ending the war.

Reports suggest that the US is pushing the plan with regional partners. The White House’s Middle East coordinator, Brett McGurk, is now in Cairo to discuss the deal, with plans to continue to Qatar."

286 notes
