#hammer space both physically exists and can be interacted with by others and also doesn’t and can’t <3

Text

this is already essentially true and affects nothing so i’m just gonna believe that mobians all have special access to their very own personal hammer spaces

#hammer space both physically exists and can be interacted with by others and also doesn’t and can’t <3#sonic puts stuff in his quills and it is there and occupies physical space because stuff can be knocked out or interfaced with by others#but things are also not there#they’re just not there#amy puts her hammer behind her back and it’s behind her back#but also her hammer is clearly not behind her back and is not capable of being behind her back#tails keeps his miles electric strapped to his hip where it’s not there#and if you really wanted to you could take it from his hip#except for the fact that it’s not there#actually wait i’m torn if personal items in hammer space can be interacted with by others#or if that’s just a chaos emerald thing#everyone keeps their stuff secure in their own hammer spaces except for when the universe deems it necessary for it to be stolen#when they immediately take their stuff out of hammer space into their real hands#except the chaos emeralds which refuse to be put in hammer space#and instead create their own fuckery of simultaneously existing in physical space where anyone can interface with them and knock them out#and also they Don’t#also humans can’t do any of this they just have bags#rambles#this is funny to think about

59 notes

Text

Interview with GHOST and TRIBULATION

The Undisguised Truth

TOBIAS FORGE and JONATHAN HULTÉN have a lot in common. While one of them currently slips into the role of the exalted charmer Cardinal Copia as the singer of GHOST, the other, the introverted TRIBULATION guitarist, transforms on stage into a fascinating, expressive being who exists beyond genre and gender boundaries. What is real, and what is an artificial figure? METAL HAMMER met both of them during their joint tour for a conversation that offers not just a look behind the scenes of two of the most popular metal bands of the moment, but also unexpectedly intimate insights.

Tobias, originally you didn't want to be the singer of Ghost. Did the mask help you to come to terms with this exposed role?

Tobias Forge: Yes, well, at least from today's point of view. But I never wanted to be unknown.

What function does your stage make-up have, Jonathan?

Jonathan Hultén: It helps me to put myself in a certain mood. The idea behind it is to isolate and reinforce a fraction of myself, an aspect of my personality. To immerse myself in this is an experience beyond the everyday state of mind.

Do Cardinal Copia or Papa Emeritus also reinforce facets of your personality, Tobias?

Tobias Forge: I'm not shy, but I'm not as sociable as Cardinal Copia - and also not a "physical clown" like him. What I do is a kind of mixed bag. I imitate people I find funny or interesting.

Basically, the way actors do it. If you asked Robert De Niro how he came up with the young Don Corleone, he would probably say: Well, there was this guy in my old neighborhood... The costume gives you the opportunity to completely surrender yourself in that moment and just be that new person. That's interesting, because you only reveal it to a few people for a limited time. You don't have to see how that person lives the other 22 hours of the day. It's like with actors: James Bond is cool because you only see certain sides of him. Never in the bathroom or the shower. Well, not on the toilet, but in the shower. But always in the company of a snake or something he kills.

How long does the transformation take?

Tobias Forge: But you finished much sooner than I did.

Jonathan Hultén: For pragmatic reasons. I like to get it done as soon as possible so as not to get in a bind later. How about you?

Tobias Forge: We have a very tight schedule. Pretty much exactly one hour before the show starts I walk in the door as Tobias and come out as someone else.

What does this transformation do to you?

Jonathan Hultén: You have to enter a stage with emphasis. So it's good to be prepared.

Tobias Forge: And that's what happens within this hour. You slowly start to move differently... I love being a different person for two hours and then changing back. But I need some time for that, usually I stay alone for an hour after the show.

Jonathan Hultén: That's good. As far as I can, I try to do the same, mostly by doing something that lets me be introspective... carrying things back and forth or something.

Tobias Forge: I think that's very important. Art carries a potential for suffering, especially where mental health is concerned. The smaller the discrepancy between yourself and the person you are portraying on stage, the harder it is to deal with. If people associate you only with your stage character, they expect you to behave like that in real life.

And that can be problematic...

Tobias Forge: Exactly, because they have created this superhuman being who can do anything, who has carte blanche. Everyone applauds, everyone laughs, and everything you do is funny or cool. And if you take that to the bar afterwards... There are bad examples of people who can't come back down to normal life, who become alcoholics or, well, die.

Jonathan Hultén: Sure, all that can be destructive. But in my case it was very helpful to discover my more explosive, extroverted sides. And to dare to give them more space, because privately I am quite shy. That's also part of the process of building up, which takes a long time.

I gradually gain self-confidence from this, so that I can now express myself better in everyday situations.

Tobias Forge: I think they are one and the same. It's like mental martial arts for the person who doesn't like fighting but still has to face the fight - within the limits of the dojo, of course, so as not to hurt anyone. And, yes, art is basically good for anyone who has the desire to become someone else. It's a generalization, but I think there's a lot of truth in it: many artists choose this path because they weren't very popular at school. Or they can't get out of their shells, but their art offers them an opportunity to do so. It's fun to go on stage, to transform and feed off the energy or admiration.

How you interact with the audience has changed over the years.

Tobias Forge: Sure. The masked person has an advantage of about 70 concerts, so 1,000 hours on stage. If I had given myself the same amount of time to develop without the make-up, without the role, just with acoustic guitar, I might have created a completely different stage personality. But this is completely uninteresting for me, because I prefer this super character! (laughs)

Jonathan Hultén: The transformation would then no longer be so dramatic, but much more subtle.

Tobias Forge: And you'd have to be comfortable in your own skin.

Jonathan Hultén: I'm working on it. (laughs)

Jonathan, your solo debut, CHANTS FROM ANOTHER PLACE, will be released soon and you will also be touring with Chelsea Wolfe. Will you be different on stage there than you are here with Tribulation?

Jonathan Hultén: It has become harder to separate the two. They are like different shades of the same color, and I've found that they borrow a lot from each other. The Tribulation performer has existed much longer, so he has much more experience. He/she is like an archaeologist exploring an inner wildness and passion. Over the years a lot of weird stuff has been dug up and included.

The solo performer, on the other hand, has only been around for about three years and is still at the beginning of the excavations. However, I expect many interesting things to turn up here as well.

What can we expect from you live?

Jonathan Hultén: Just like with Tribulation, the atmosphere will be very important. But apart from some dramatic excursions, the show will be mostly silent and contemplative. The silence gives more room for more complex emotions to unfold in a way that I miss in the energetic performances of Tribulation.

These in turn defy the unwritten rules of a traditional metal show, not least thanks to you. Tribulation are considered a death metal band...

Tobias Forge: I wouldn’t call you guys that.

Do you see yourselves as pioneers? Do you enjoy being different?

Jonathan Hultén: I stopped thinking about whether people see me as stupid, weird or whatever. It's the only way I can do it. Headbanging just wasn't enough. I felt there had to be something bigger, some kind of ecstasy. This may be weird, but it feels good.

How important are grace and style to you?

Jonathan Hultén: Both are important, but it's equally important not to be obsessively attached to them. Someone once said that grace is a combination of spontaneity and control. It's a good rule of thumb - on and off stage. It's always about balance. Every situation is unique and requires a unique approach.

Tobias Forge: You should be really proud of it, apart from the fact that your music is great. Your performance is dramatically different from any other. Besides the music, your physical attributes and the way you present yourself make you a very unique and interesting person. Strange, cash, different. You should definitely pursue that. Yes, I think you should see yourself as a pioneer.

Jonathan Hultén: Mm, thank you. (chuckles)

What does that do to your audience?

Tobias Forge: If you are a live musician, have an antenna for it and aren't completely indifferent, you always enter into a symbiosis with the fans. Give and take, almost like in a physical relationship. You try to perfect ways of giving each other pleasure. I know it sounds weird, but every decent relationship changes with age. You grow together, you have new needs or ideas. That's why some couples bring in other people, or whips or plugs. It's the same with you and the audience. During our second show I noticed that our audience was very positive, but I couldn't make sense of it.

Why was that?

Tobias Forge: The room was filled with Hard Rock people, the kind of people I've been playing to since I was a teenager. But they weren't headbanging as usual. Instead they did something else.

Jonathan Hultén: Wiggle.

Tobias Forge: Yeah, they were wiggling around. (laughs) And singing and laughing, very different from what I knew from Death or Black Metal shows.

Are there any other special features of your fans?

Tobias Forge: When we played the first headliner shows in America, I noticed for the first time the gender diversity in our audience. Our fans are a lot of girls, a lot of guys, and a lot in between. We've always been a magnet for people who are unhappy with their gender or don't feel they belong anywhere: Kids, many outsiders and outcasts in various fragile states.

Jonathan, Tobias' words seem to resonate with you.

Jonathan Hultén: Yes, they do. I don't speak for Tribulation as a whole when I say this, but I personally don't feel I belong to either gender. But I've never felt the need to choose either. Androgyny is what I feel most comfortable with. This tendency probably also carries over into my performance, whether with Tribulation or solo.

Tobias Forge: The best portrayal of the devil I've ever seen is in the movie 'The Passion of the Christ'. Satan is portrayed by a woman, but speaks with a man's voice and thus becomes the epitome of androgyny, completely genderless. For incorporating this aspect into your stage personality, I give credit to you and the band. Especially when you get together with Adam (Zaars, guitarist of Tribulation) on stage, it seems elfin and feminine, but also masculine. This is incredibly interesting and unusual, especially in the rigid heavy metal genre with its "men are men" and "women are women" thing: Doro Pesch, girl, Manowar, guys, great. But when I think of all the metal bands I like, there are also examples of very attractive androgyny, which is not necessarily sexual. Not to mention seventies rock bands.

You have been confronted with different kinds of music and art forms from a very young age. Does that give you an artistic advantage?

Jonathan Hultén: It helps me to keep my relationship to art fresh, to get excited. Whether in childhood or in adulthood: open-mindedness helps the creative process. If you're not afraid of opening up to all kinds of different music styles, you can find inspiration in the most surprising places. No matter where the idea comes from - the important thing is whether it works. Certainly, this is reflected in all areas of creative work. Become the medium through which inspiration flows - then collect the gold pieces that this flow carries with it and create something beautiful out of them.

Does the metal context limit your expressiveness?

Tobias Forge: I don't feel restricted with Ghost at all. There are only a few ideas I can't realize with the band, because Ghost is a combination of all the things I like about music, cinema and theatre. But if there is enough time in the future, I would love to be in a completely different band where I am not the center of attention. I am a guitarist and would like to sing backup. That suits me much better.

Jonathan Hultén: I'm exploring something new, and it's been quite interesting - and different. There are endless possibilities to discover yourself, artistically and as an artist. Only unfortunately there is not enough time.

I'm afraid that we don't have enough time either.

Tobias Forge: Yes, but these things are existential. They are not only about art and being an artist, but also about how both are connected to the human psyche and why people, artists or not, need art to function in modern times. That, by the way, is also one of the things I appreciate about Tribulation: you are artists, not just any death metal band. I don’t want to single anybody out, but especially in metal, many musicians claim that they make music for themselves first and foremost. That’s not true! As soon as you go on stage you want to get something back. Even GG Allin! And his gigs were a really confrontational and bad experience for every lover of the fine arts. Playing just for yourself? That's not how it works. You either do it to please or to deliver something. People laugh, cry, clap, scream, whatever... And when they leave, they feel a little bit better. That's entertainment!

Anja Delast / Metal Hammer

-------------------------------------------------

Please do not share without naming the source. I put a lot of effort into this, and unfortunately it keeps being distributed without the source being credited. It's somehow sad...

441 notes

Text

We Stand, Fate-Tested: Final Thoughts

You thought you’d seen the last of that title? Never! I may have been distracted by Sylvgrid Week for a while, but I finally got this cleaned up enough to post.

So, to those of you who haven’t read We Stand, Fate-Tested, this post is going to spoil practically the entire fic, so do yourself a favour and read the fic HERE. This post is also very, very long, so I apologize if you read the whole thing aha.

Anyways, continuing on, I wrote over 70 000 words for this story, and that was after two solid weeks of story editing so the fic wouldn’t come across as incredibly clunky. I want to use this post to discuss my favourite and least favourite things about writing the story and to talk about some of the things I had planned that never made it into the final draft or things that were changed to fit the flow of the story better.

Let’s start with my favourite and least favourite things!

Favourite Chapter: VII - What’s A Little Fear (I loved this chapter. It was a blast to write, creating the duality of the attacks as well as finally tapping into the mystery genre I stubbornly tagged this fic with. It’s also one of my favourites to reread).

Favourite Present Scene: Either Byleth/Claude in the coffeeshop (Chapter III), the car crash scene (Chapter VII) or the Byleth/Claude scene in the bathroom (Chapter VIII)

Favourite Past Scene: Either Byleth/Dimitri’s first reunion (Chapter II), the Sreng fight scene (Chapter IX), or Dimitri’s death scene (Chapter X)

Favourite Character to write: Past!Dimitri, Present!Edelgard, Present!Claude (probably no surprises there haha)

Favourite Plot Detail: Byleth having a flashback in the tomb and then going to the lab and having that scene play out later, in Chapter XI, in the past.

Least Favourite Chapter: XI - No Rest For the Weary (Don’t get me wrong, I like how it turned out. I just had so many things that were scrapped for this part and something about it still doesn’t sit with me as well as I wanted it to. It was hard to write a past section without the anchor for the past: Dimitri)

Least Favourite Present Scene: Probably the lab scene with Byleth (Chapter I) where she looks up Claude because it was written so early and it still feels a bit info-dump-esque to me.

Least Favourite Past Scene: Hands down Byleth’s final scene (Chapter XI). I do feel like it came out alright, but I really struggled with this scene. It was tricky to highlight everything I needed to in that scene without removing all the development Byleth had gone through.

Least Favourite Character to write: Many of the undergraduates in the present. It’s not that I didn’t like them, I was just frustrated because the future was focused so heavily on a few key characters that none of the background characters had the voices I wanted to give them.

Least Favourite Plot Detail: The Scorch and the Riots. I specifically crafted them so that there would be a plausible excuse for the physical records to have been destroyed and yet I feel like I relied too much on them in some cases.

Now let me talk about plot details that almost appeared!

Starting with some general facts:

The Golden Deer were supposed to be MUCH bigger characters in the present. I had programs, relationships, interactions and plot points hinged on their interactions with Byleth and Claude, but I ended up scrapping a lot of it when I moved forward with the undergrad dig team plot and decided to bring in Edelgard and Dimitri more.

They were supposed to go to Shambhala. Instead of taking place at Garreg Mach, the final attack was originally supposed to happen at a dig site in Shambhala. After researching archaeology more as a whole, I realized this didn’t fit, so I removed it.

I considered having Jeralt be alive in the present.

I was going to write more dreams for Byleth and actually have them as independent scenes.

Byleth was supposed to make two separate trips to Almyra in the past.

The fic was originally only 10 chapters and would have ended abruptly in the past.

Rhea was supposed to make an appearance in the present.

Chapter Specifics:

Chapter I

Ironically, the only real change here is that I considered renaming the university, but ended up leaving it.

This chapter was actually mostly written before much of the plot was hammered out so it can read a tiny bit inconsistently to me now, but there’s not much I left out of it.

Chapter II

Initially, I had all three of the reincarnated lords in Byleth’s tutorial, but then I remembered that that never happens in university courses, so I fixed it. I hadn’t planned on introducing the Guardian’s Sword here, but I did accidentally and then just rolled with it.

Byleth and Dimitri’s Chapter IV argument was originally in this chapter. They were also originally married in between Chapters I and II, something which changed to between III and IV once I changed this chapter.

Chapter III

Dimitri was supposed to tell Byleth that he was having odd dreams before he found out about the dig project in the present. This chapter would also have had a vivid dream scene, before Claude and Byleth’s tea conversation ran to 3000 words.

Claude was supposed to be a cause of strife in Byleth and Dimitri’s relationship in the past, but then I decided that was stupid and changed him to play the voice of reason. Additionally, this chapter changed a lot as a result of moving the wedding.

Chapter IV

This chapter was, again, supposed to feature a Byleth-brand dream, but I changed it to the scene in her office with Claude to set up the Almyra trip. This was the moment in the story where I had decided to make Claude the Almyran Prince. Before this, he was just an ambassador’s son.

Claude in the past was supposed to give a wedding gift to Byleth and Dimitri, but this was changed when I had him attend the wedding. Byleth and Dimitri were supposed to argue about Byleth and Claude’s friendship, but as I already said, I didn’t want Claude to be a source of jealousy.

Chapter V

This chapter actually stuck fairly close to the points of the outline I made. The only point I struggled with was whether the tapestries should be mostly ruined or preserved, and I eventually landed on preserved.

The council meeting was an addendum to the chapter written after the heavier scene at the end. I added it to give a bit more background to Byleth being in Fhirdiad and the way that her relationship with Seteth and their friends would become a bit more strained in the future.

Chapter VI

This chapter was supposed to highlight the argument alluded to in the chapter between Dimitri, Edelgard, and Claude. There was supposed to be a little bit about how the tomb seemed to be dragging up animosity that didn’t previously exist. I removed it because I wanted more space to discuss the dream and the scene with the TV.

The past section was supposed to feature more political drama. There was supposed to be a cabinet meeting that showed the progress of divorcing church and state and siphoning the power away from the nobility, but I came up with the idea for the Rhea scene which I ended up liking a lot more, so I rewrote the chapter, almost completely removing the politics.

Chapter VII

This chapter actually almost exactly follows its outline. The four go to Fhirdiad and deal with their pursuers and end in a car crash.

The only change in the past was that it originally ended with the infirmary scene from chapter 8, but I changed it to create a stronger parallel between the past and the present by ending both on relative cliffhangers.

Chapter VIII

The present section of this chapter was actually one of the first scenes I ever outlined for this fic. Naturally, there wasn’t much that was left out. I scrapped a few interactions with people including Dorothea, Sylvain, and Mercedes in order to give Byleth and Claude more time to chat in the bathroom. Basically, the point of the party was to really highlight the fact that while reincarnation had occurred, everyone had ended up in different situations with different people.

Originally, Byleth was supposed to have recovered well from the assassination attempt and it was supposed to be Dimitri who took longer to heal. Because I was already leaning into the dying-goddess idea though, I swapped them to make it more impactful when Byleth still tries to go against all of her advisors to get Claude to take her to the Slithers.

Chapter IX

Byleth, here, was only supposed to begin to suspect Flayn. I considered having her not even speak to Seteth and Flayn, but I changed that because I think I wanted her to know at this point. However, it was only when I began writing the chapter that I realized that Seteth would know the Archbishop’s full name, so that tidbit was actually the very last thing added to this chapter.

I wrote the past section of this chapter first. It was fairly cut-and-paste from the outline, so not much was left out here, just one small scene where Byleth and Dimitri saw Claude off while they were still mad at each other, which would have been awkward.

Chapter X

Originally, Leonie was going to be the one to find Claude and Byleth in the alley, but I liked Edelgard and Hubert for it better. This is where Rhea would have appeared in the Modern section. She would have come looking for Seteth before the send-off party started and would have had a crypt conversation with Byleth, but instead, I changed her simply to be the mysterious benefactor that funded the original expedition and removed her physical appearance for flow purposes.

The only big change in the past section here was that Claude and Byleth were supposed to bring Dimitri outside of Shambhala before he died, and he would have died seeing the rising or setting sun. When I wrote the cave-in, this was changed to match it.

Chapter XI

Since Byleth was originally supposed to have had a different conversation with Seteth in chapter 9, when the four of them were running from the gunman, they would have revealed their ancestry and connections to the past lords, which would have been the point where Byleth actually connected all the dots.

Byleth and Claude were supposed to be en route to Almyra after dissolving the monarchy when she started to die, causing him to take her back. I changed this because it didn’t fit with the futility of so many of the actions that Byleth had taken after Dimitri’s passing. I also just really wanted her to have the ‘I never intended to return to the Monastery’ and the ‘I hadn’t planned on living this long’ lines.

Chapter XII

Claude was supposed to be with Dimitri and Edelgard when they said goodbye. There was supposed to be strange tension between them, but it didn’t fit with their interactions inside the tomb, so I just sent him back to Almyra to coordinate his abdication instead. Originally, there would have been a shootout in the tomb as well, resulting in Byleth actually killing the gunman, but instead I used their escape to give Seteth and Flayn a reason to disappear. This is one of the points I was most tentative about changing and is one of my least favourite things that I changed in the whole story.

The past section was originally just supposed to have been Claude admiring his commissioned tapestries, but I couldn’t resist adding a Hilda in because I love her.

That’s pretty much it for all the plot details and changes. And that’s pretty much everything I have to say about the story. This fic was a labour of absolute love and it has given me an incredible appreciation for the writers in fandoms who can continue stories into the tens of chapters because I found my plot tied up in a neat little bow at 12 chapters.

If you have any more questions, please shoot them to me on Tumblr, in the AO3 comments, or even on Twitter (@nicolewrites37) and I’ll be happy to answer them.

Thank you so much to everyone who read, commented, and left kudos on the fic because knowing that there were people waiting to see more was the reason I was able to continue writing and finish the fic. I hope you enjoyed the story overall and that you might find something else you like amidst my other Three Houses works.

- Nicole

#the writing section#we stand fate tested#fic: we stand fate-tested#fe3h#fire emblem three houses#author's thoughts#claudeleth#dimileth#tumblr#ao3#long fic

9 notes

Photo

How Turning Jim Into A Troll Disempowered Jim As A Character

or, Why Some Of The Audience Found That Sequence Distasteful And Are Still Bitter

(Screenshots are sourced and abridged from the springhole.net article ‘On Writing Empowered & Empowering Characters’ - this website offers advice for structuring and analyzing narratives, characterization, setting, and themes, and also a bunch of fun generators for those things.)

Jim did not consent to being turned part-troll. Consent gained through coercion is not consent, and “everyone you care about will probably die horribly if you don’t do as I say” is coercive.

Jim gets a strength and agility boost from being shape-shifted, which, yes, did give him an advantage when fighting Gunmar in single combat, but

fighting Gunmar in single combat went against the show’s ongoing theme about how Jim’s capacity for teamwork gave him an advantage that past Trollhunters did not have, and

in a coordinated group attack, either Claire’s teleportation or Toby’s flying hammer could have let them get Jim into a position to land the kill strike against Gunmar without Jim needing to change species.

Jim lost his abilities to

eat food of the sort to which he is accustomed,

be in sunlight, and

be in human-occupied spaces without a disguise, unless the humans are either already aware of and cool with trolls or believe he is in costume.

His freedom of movement, and his freedom to choose whom he interacts with socially and under what circumstances, have thus been severely curtailed. Furthermore, the loss of familiar things, such as any old favourite comfort foods, is not good for emotional health.

Jim has to adjust to completely new instincts and a completely new body. I have commented in the past that, logically, the changes to his height, weight, limb length, center of gravity, and reflex speed could have added up to Jim being completely uncoordinated and needing to relearn how to fight from scratch after his transformation, defeating the stated purpose of said transformation as a means to defeat Gunmar quickly.

Jim’s personality has also been changed by his transformation. In his sparring match with AAARRRGGHH and Claire, he was more confident and playful, and in the final battle he demonstrated more aggression than he usually does.

Neither of those developments is bad, but having those changes come about abruptly, as a side-effect of a magical transformation, is unpalatable compared to a gradual shift in personality over time. It feels like a cop-out from writing character development.

Furthermore, if Jim notices his personality is different post-transformation, this will exacerbate the identity crisis that he still hasn’t finished resolving.

+=+

Jim being turned into a troll has been speculated about since the first season, and I think the fan theories about it may also have influenced the negative reaction from some sections of the audience. The fan theories and related fanfics posited three broad categories of ways Jim might become a troll:

1) Troll Magic Overexposure. Jim’s transformation would occur by accident, akin to Blinky’s transformation into a human, probably due to exposure to some trollish artifact or potion, or possibly as a cumulative response to all the trollish magic Jim has been exposed to over the series.

2) Changeling Heritage. Jim’s transformation was something he was always hypothetically capable of, or a natural part of his puberty, due to trollish ancestry of which Jim had been unaware prior to that point; usually James Lake Senior being a Changeling. This could and often did cross over with the first idea, with troll magic being the catalyst which activated Jim’s troll genes.

3) Enemy Action. Jim’s transformation would be forced on him by an enemy, usually crossing over with one or both of the previous theories. I believe I recall a couple of stories where Gunmar’s attempted use of the Decimaar Blade on Jim was the catalyst to activate Jim’s previously-unknown Changeling genes.

Because these expectations existed, there is a natural inclination to try and make the data fit one of those patterns.

Considering Merlin’s treatment of Jim in the episode in question, it is easy to file Jim’s canonical transformation under the ‘Enemy Action’ theory. The fact it was done by a supposed ally clashes with the theory’s premise, encouraging anyone who considers this theory canon to emphasize Merlin’s cruel and abusive behaviour towards Jim in the pre-transformation scenes, and to consider other instances of Merlin’s callousness towards various characters as evidence Merlin was an antagonist masquerading as an ally all along.

The majority of pre-Season Three Troll!Jim stories also had the transformation reverse or wear off, or (in the Changeling!Jim stories) had Jim’s human and troll forms become shapes he could shift between, so having a supposedly permanent transformation occur drew backlash from those invested in this possibility.

+=+

Having Jim transform only three episodes before the end of the series was a poor decision on the writers’ part. The audience was not given enough time to bond with Troll!Jim before he entered the Big Final Battle, which the audience had spent three seasons anticipating, wondering how Human!Jim was going to win it.

Just as Jim fighting Gunmar alone went against the series’ emphasis on the importance of teamwork, Jim becoming a troll to fight Gunmar went against the series’ emphasis on how Jim being human - physically human, with the squishiness and ability to survive in sunlight that this implies - could give him an advantage against troll opponents, who aren’t used to fighting humans who have sufficient armour, weaponry, and training to not be immediately overrun.

Jim’s strategic thinking and the different culture of his upbringing still allow him to come up with strategies that a troll raised among trolls wouldn’t think of, but the physical advantages and disadvantages of being a human fighting a troll have been cast aside, and now he’s just a smaller troll fighting a bigger troll.

This can still result in cool fight scenes, but when opponents start out with extreme differences in size, strength, and fighting style, reducing the contrast between them makes their fights less visually and narratively interesting than they could have been had the original extreme contrast been left alone.

+=+

In case it is not visible, the clipped article from Springhole in the screenshots above reads as follows.

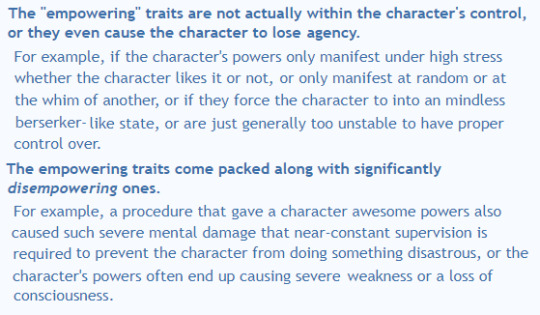

[begin screenshot 1 of 2, medium-blue text on pale blue background]

What can undermine a character’s perceived empowerment:

These are some tropes and traits that can potentially undermine this character being perceived as empowered or empowering by audiences. Some issues to watch out for include:

The “empowering” traits come from something that happened without the character’s consent.

For example, by being forced to undergo experimentation or training that gives the character new powers or skills. It’s important to note that this isn’t always bad, especially if the character uses these traits to gain freedom. However, it can still leave a bad taste in some people’s mouths, especially if what happened to the character was especially brutal or torturous, or if the character isn’t actually responsible for getting free.

[end screenshot 1 of 2] [begin screenshot 2 of 2, same colour scheme, medium-blue text on pale blue background]

The “empowering” traits are not actually within the character’s control, or they even cause the character to lose agency.

For example, if the character’s powers only manifest under high stress whether the character likes it or not, or only manifest at random or at the whim of another*, or if they force the character into a mindless berserker-like state**, or are just generally too unstable to have proper control over.

The empowering traits come packed along with significantly disempowering ones.

For example, a procedure that gave a character awesome powers also caused such severe mental damage that near-constant supervision is required to prevent the character from doing something disastrous, or the character’s powers often end up causing severe weakness or a loss of consciousness.

[end screenshot 2 of 2]

*The Amulet of Daylight and Merlin’s ability to manipulate it also come to mind. Although Jim does develop skill in controlling the Amulet after its erratic behaviour in the early episodes, the way that Merlin wrested control away from Jim was similar in tone to the moment in various superhero shows where a character’s power suit is ‘hacked’.

** This is why Strickler dosing Jim with Grave Sand wasn’t an empowering scene. (To my knowledge, no one’s been arguing that it was; I’m just giving an example.) Jim had little control of the situation even before he was drugged, and less afterwards. This was presented in the show as a bad thing, so having Merlin later do something similar and trying to present it as a good thing is jarring.

+=+

On a tangentially related note, I noticed in Jim’s one appearance in 3Below Season Two that he’s still wearing the Eclipse Armour, even though Gunmar is dead and Jim doesn’t need the Triumbric Stones anymore.

Palette swaps are one of the cheapest ways to change an animation model, so unless the studio was already over-budget, this suggests there was a narrative reason why Daylight wasn’t in use instead.

I posit that Jim has decided to keep using the ‘for the doom of Gunmar’ incantation because he doesn’t really feel like saying ‘for the glory of Merlin’ anymore.

Or he still hasn’t managed to get the armour off since the Eternal Night, but I like this other idea better.

(Queued/posted before Wizards aired and potentially confirmed or refuted this.)

+=+=+

If I recall correctly, and if this information is still accurate, tumblr only shows Original Posts on the main page of the first five tags listed, to prevent people from tag-spamming to get on as many pages as possible.

As such, I have put the #Troll Jim tag low in the list, so it will still be searchable on my blog - I file reblogs of content both for and against Jim’s transformation under that tag - but this post should not be hassling the pro-Troll!Jim contingent by showing up in the main tag.

Let me know if I need to rearrange things, or take that tag off long enough to let this post get off the tag’s first page.

#Controversial#commentary#Trollhunters#Tales of Arcadia#Merlin needs to be Rule Number 3'd#shapeshifting#consent through fear is not consent#Jim Lake Jr#Troll Jim#3Below#toaWizards#Amulet of Daylight#Grave Sand#long post


Text

The Well

The Measurement of Time: Chapter 5. You can find all other IkeSen works of mine here. NOTES: This was a LiveWrite! Thank you all so much! ALSO: This whole story does not make much sense without the context from To Honor And Protect! Please go back and read that before you proceed with TMOT. Tagging @ikemenprincessnaga at request. Y’all, I am so attached to Toyotomi-Akechi. I love her. She is my child now.

Sasuke spent most of the next morning in the library, parsing through book after book. After his second shared dreaming experience with Uesugi, he was deeply interested in the data points behind it. Had anyone done a really good, thorough research paper into the topic? It seemed not. That was frustrating. Then again, how could one devise a really proper scientific method to study it?

Mildly put out (only mildly, because that was how the pursuit of knowledge worked--sometimes one needed to recognize a gap in understanding before one could fix it), he returned to the barracks with a few books and settled in at the breakfast table. Tokugawa burst in through the door and shot him a grin.

“Afternoon! Where’ve you been?”

Sasuke really could see a lot of the fabled Masamune Date in Tokugawa’s mannerisms. Historically speaking, it seemed funny. “At the library. I’m doing a bit of light reading.”

A slender hand emerged behind him and peeled a book out from under his stack. Miss Takeda flopped onto the table, a sweet bun in one hand and a grin splitting her cheeks. “This isn’t any ‘light reading’. Do you do math for fun, too?”

Sasuke didn’t know how to respond to that. “Long division could be comforting, I suppose. I rather like going over results from scientific studies.”

Tokugawa snapped his fingers. “You know who you should talk to? They like that stuff, too--”

“Don’t bother yelling. I’m here.” Another woman emerged in the doorway, stretching in her armor. She had sleepy citrine eyes and light brown hair, her hands long and slender. At her waist, two symbols of her house hung: Akechi and Toyotomi. “You’re the new kid.”

“That would be me, yes.” Sasuke thought to stand and bow to her. “Toyotomi-Akechi?”

“Mhm.” She didn’t say much, just skated smoothly across the floor and peeled the book from Takeda’s hands. “Don’t take people’s things.”

“Awww. Don’t kill the vibe.”

But Toyotomi-Akechi fixed Takeda with such a sinister, knowing smile that Sasuke’s unspoken questions faded away. Ah. There was the legendary Akechi smile. The woman flipped the book open. “What are you researching?”

“Shared dreaming.”

“Hm.” If there were questions (and there were, judging by the confused expressions from Tokugawa and Takeda), she didn’t ask them. She just snapped it shut and handed the book back over. “Alright then. I’ll do a bit of looking into the subject myself.”

Sasuke was ready to push half of the books in her direction, but she just shot him a wink and sailed out the door into the backyard. Tokugawa shrugged. “I don’t know where she gets any of her information, but she’s good. I’d just let her do her thing.”

“Fair enough.”

The door opened again, Uesugi standing with her hand on her hilt. “Sarutobi.”

“Yes, ma’am?” He’d never called her ma’am before, but it felt impolite not to at this point. Tokugawa choked back a laugh.

“Time for training again.”

“Got it.”

She scowled at Takeda and Tokugawa, who were both stifling laughter. “And you two, while we’re at it.”

“Good luck, Tokugawa!” Takeda vaulted off the table and sprinted out the door with unexpected speed. “Sorry for abandoning you!”

“No you aren’t!”

Uesugi stood, gazing off into space as if a silent audience there saw and understood her struggle. At last, she sighed. “You two, come on. With me.”

---

The dreams didn’t stop.

For the next week, he had a disjointed series of them parade through his unconscious mind. A stone wall, crumbling inward--dark, stately hallways--the faint orange glow of a string of lights, illuminating in patches against columns--quiet whirring of something mechanical--

What was going on?

He asked Uesugi about the first two. Of course she’d had them too. Afterward, they didn’t even talk about it anymore. He would come down into the kitchen at early hours to see her prepping some tea, they’d nod at each other in quiet understanding, and she’d pass him the mug she’d fixed up just for him.

“We should probably notify the Queen,” she remarked once, her smooth voice a thread in the tapestry of morning sounds. As much as the Ishida family line laid claim to being affiliated with the ocean, he couldn’t help but look at Uesugi and think of the sea, too. Uesugi with her ocean eyes of blue and green, her white-blonde sandswept hair, the sharp and soft and angular and rolling parts of her that were shaped like the crash of a wave. “She’d want to hear about this.”

“I don’t know that it is entirely of interest yet, aside from being scientifically curious.”

But Uesugi laughed ever so lightly. “I’m pretty sure her whole family line is ‘scientifically curious’. If anything odd is afoot in this city, I’ve little doubt that the Queen would know better than either of us if these dreams are some kind of a portent.”

Admittedly, Sasuke was nervous for other reasons. He still felt that misplaced crush on her Highness. It simmered in him the same way all his favorite questions did. In some ways that felt inappropriate; like a conflict of interest during research, twisting the results ever so slightly in the tester’s favor. It was a ridiculous thought, but he still couldn’t shake it.

Uesugi arranged the meeting regardless. The day of, someone hammered against his door early in the morning.

“Hey there, sleepy!” Tokugawa laughed at Sasuke’s fatigued expression. “Hope you slept well. Uesugi told me to grab this for you.”

“What is it?”

“If you’re in our care, you have to look like it.” And with that, Tokugawa set a bundle in his arms, shooting him a wink. “Get changed. Your appointment with the Queen is in two hours.”

Confused and curious, Sasuke unrolled it on his bed. Out came a blue and silver uniform. Emblazoned on the chest in shimmering white-blue was the crest of the Nine.

---

The throne room was an informal affair. At the farthest end, a massive window opened out to the ocean, the swirl of waves and distant storms the perennial backdrop of the City. The jet tiles were polished so bright they shone, and at the center, near the wall, was a massive, round, obsidian table. Several stately chairs sat around it.

“Welcome.” The Queen stood as they entered, her sweet smile at home against the waves. “Uesugi. Sarutobi.”

“Your Highness.” Uesugi clasped her fist to her chest and bowed deeply. Sasuke followed suit less gracefully. “We came to talk to you about an odd situation that we find ourselves in. I think it might be something of interest to you.”

She listened with a frown as they laid out the situation: the dreams, their contents, the connected nature of them. After a moment she stood and circled around her seat, pacing by the glass wall.

“My grandfather and grandmother had a very interesting bond,” she noted finally. “They were notable in that not many pairs of people in the history of magic--the history we know of, mind you--have been able to share the same staff.”

“Of course,” Sasuke commented. “Most magic users report that their staves won’t interact well with others. They state that there is odd sparking, resistance, backfiring…”

“Correct.” The Queen motioned to him. “I know your prior employment was involved in studying that. I don’t think your team pinpointed the causes of it?”

“Not yet. We are still formulating theories.”

“It takes time.” She paused. “My point is that it wasn’t just two people using that staff.”

Uesugi frowned. “No?”

“No. Because my grandfather didn’t make that staff--he found it. On the Trinity Islands, as it so happens. It belonged to the same mage that invaded our city. Ergo, three people were able to use it.” She took a moment to consider. “I can’t imagine what my grandparents thought of that, or if it ever occurred to them what that meant, but I imagine that in some ways, they are still linked to that original force.”

Sasuke paused for thought. “So--allow me to base a guess off your previous conjecture--you perhaps believe that Uesugi and I are connecting with a singular force with this creature on the Trinity Islands, the same way that the Lord and Lady Ishida and that Mage connected?”

“That’s my guess.”

“Alright.” Uesugi shifted uncomfortably. Sasuke watched the dim light flutter and play over her severe, beautiful features, and realized all at once--oh. Well that was an unprofessional feeling. “Alright, but here is my question, your Highness. There was a physical object in question when the Lord and Lady existed: that staff. As best I know, there is no physical object in play here, unless I’ve utterly missed something.”

“That’s my question, too.”

All three of them fell silent. Sasuke peered out the window, over the lip of the obsidian cliffs, and realized he could just make out the statue of Mitsunari and the Queen dancing in the surf.

---

Two days later, Toyotomi-Akechi stepped into the kitchen out of a downpour, shaking out her boots. “Uesugi.”

“Where have you been?” Uesugi didn’t sound upset by any means. Sasuke had gathered they were rather used to Toyotomi-Akechi’s comings and goings being erratic at best.

“That doesn’t matter. You and Sarutobi should come with me.”

“What?”

But the woman just motioned again and turned back out into the rain. Cursing, Uesugi flung a cloak at Sasuke and donned one herself, racing after her.

They sloshed through the empty cobblestone streets, kicking up water in thick sheets. Thunder rumbled ominously overhead. Taking twisting, labyrinthine side streets, they slid through the curving underbelly of the City with the kind of ease Sasuke had never imagined. And then--

“Here.” Toyotomi-Akechi led them into a little courtyard. Several houses backed up around a circle of patchy grass, a well sitting squat between them. “Down there.”

“Down there what?” Uesugi snapped, squinting down into the well.

Sasuke held his glasses against his face and took a look. Nothing but blackness greeted them. What was he even looking for?

“Don’t look.” Toyotomi-Akechi laughed at them. “Listen.”

He shut his eyes obediently. Was there a point to this? He heard the thunder overhead. He heard the rush of wind and the surging tide. He heard the rain hammering against stone and rooftop and fabric, and--

And he didn’t hear water plinking against water.

“There’s no water in there,” Uesugi murmured. “Alright. So it’s an empty well.”

And at that, Toyotomi-Akechi grinned like a snake incarnate. She stretched out her hand, and a flutter of magical lights emanated from her fingertips and circled downward. They watched the dark stones of the well inch by inch by inch--and then, there it was: a strange-looking doorway at the very bottom, an ancient padlock holding it shut.

“That looks like a place to get murdered,” Uesugi commented.

“One would hope not.”

“Do you think--” Sasuke paused. “This is a bit of a stretch, but--”

He didn’t need to finish the sentence. Uesugi just turned to the other woman. “I’m assuming you brought a ladder?”

“Brought? No.” But she motioned to one leaning against a nearby house. Uesugi just fetched it herself and lowered it into the well, jostling it a few times to see if it would stick. It did.

“Alright. Sarutobi?”

He swallowed all of his misgivings. “Right behind you.”

“Good.”

They clambered down the ladder and onto the wooden platform. For a second he thought she would try and pick the padlock, but she just smashed her heel through the hinges of the old door and watched it snap loose. There: there was another ladder, leading down into an unknown depth.

Uesugi blinked against the rain and yelled up to Toyotomi-Akechi, “If we don’t return in four hours’ time, come back and get us!”

“Got it.”

Sasuke waited for her to clear the door before he hopped onto the second ladder and descended. The rain above sluiced through the slats of the wooden door and sprayed him, but he kept his head down as the whole world went dark around him. Down, down, down--and finally his foot met pavement again.

“Alright,” Uesugi muttered in the pitch black. “Give me a second.”

Shhck, shhck--finally her match caught. She lifted it in the dark and found a long-unused torch, and as soon as it went up, they both gasped.

Before them, stretching out into nothing, was a long, dark hallway under the city.

#Ikemen Sengoku#Ikesen Fantasy Au#Ikesen Fanfic#Ikesen#Ikesen Sasuke#Sasuke Sarutobi#Sarutobi Sasuke#Ikesen Medieval Au#Ikesen Descendants#Female!Uesugi#TMOT#The Measurement Of Time#The Well#My writing


Text

Nettitudes: Let’s Talk Net Art, Josephine Bosma.

A conversation in response to: Nettitudes: Let’s Talk Net Art, Josephine Bosma.

These days my mind thinks in multiple directions, so maintaining a live feed while also sounding (somewhat) coherent is challenging; I will therefore lay this out chronologically. My first point of thinking through this text was the shape of The Thought Network.

The Thought Network is about the departure of thought (physically and conceptually)

The Thought Network is connective and collaborative (with both humans and nonhumans)

The Thought Network moves in shapes and shifts constantly

The Thought Network is a diagram

The Thought Network operates in multiple dimensions (between here and there)

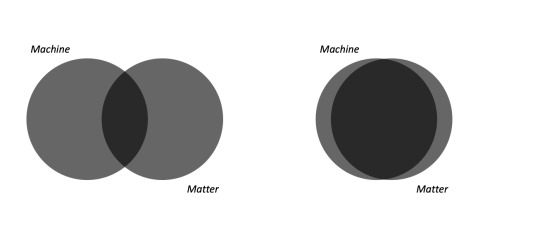

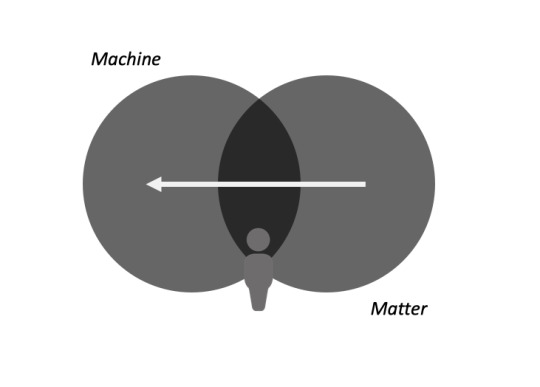

The Result of The Thought Network: the diagram operates in oxymoronic tones, shifting between states of actual and virtual, past and present, machine and matter.

The Result of The Thought Network: agency, full capacity to operate without human intervention.

The Result of The Thought Network: vibrates in layers of reality, new reality, and exhibits a phenomenological potential.

I feel that by outlining these points, I can move forward clearly and intuitively. They will also serve as a starting point for a conversation with the text: Nettitudes. I will use bold text to highlight anything within this conversation that stands out to me.

First reflections on the shifting of states (matter to machine)

My first thought turned to what I have lost through the covid-19 pandemic. I have lost the ability to install work and have people interact with it physically, with their bodies. Until now, I have relied on feeling more than any other sense. This could be because I feel so connected in my body to light, having lost half of my sight and gone through a period of light sensitivity. It could also be because I found a new way to read and respond to my environment, by acknowledging matter as not merely a material thing but an active, living thing. There is a feeling of understanding in a space: understanding that our bodies are creating thoughts and that we are able to connect to something much larger than ourselves. So, my first thought was that I have lost control of my creative habitat. I am no longer able to adjust lighting or reduce the noise. With that, I acknowledge it and move on.

I began thinking about how I visualise the tension between machine and matter, having felt that there was an equal distribution in my previous installations. I will now be shifting my Thought Network online, or operating freely without a homesite. I would also like to reflect on the fact that previously, I connected deeply and fully with thing power and matter. Does this not provide the ultimate suggestion for the opposite to happen here? Investigating the (lively) powers of machine? I also question, for a future conversation, where matter exists in a machine, if that is where we are now living (in mind)?

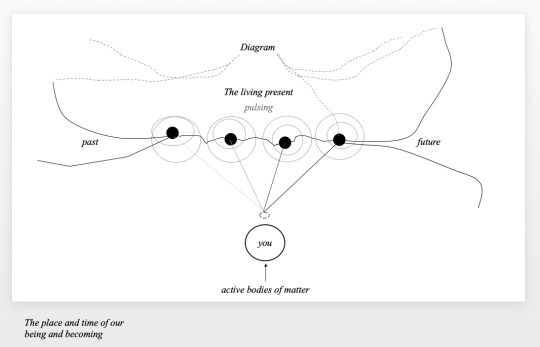

As the balance of machine to matter shifts, my thoughts drift to the living present. Will the living present be affected by this? Does it mean that the living present is larger (with more capacity), or does it mean that it is removed altogether? Here is an image of the diagram I made about the living present, inspired by Deleuze.

__

The internet

I would like to note that in this conversation I talk about machine, but I am only specifically thinking about the internet at this point. Also, because I think through diagrams, a lot of the things I discuss are questions rather than solutions.

Where does the internet exist? Does it exist? (See the text The Internet Does Not Exist, Sternberg Press.)

Where does thought exist within the internet? Inside, outside, in between? Much like a diagram, the internet shifts in these oxymoronic tones. It’s familiar but alien; it exists but doesn’t exist? So there are similarities; I just think there may be a parallel dialogue going on here. An ironic one? I like the idea that there is The Thought Network, but the internet just freezes thoughts in a space. At this point I don’t know where that space is, but it freezes them. Like a Thought Fridge. Maybe this site is my thought fridge.

Nettitudes offered the really lovely suggestion that a hammer, for example, was made to embody something human (to hit with force). By that understanding, the internet was made to embody something that humans need to emphasise. This made me think about freezing thoughts in a space. We have the ability to store thoughts on paper or to watch the echo of thought drift from our mouths, but none of it feels as frozen as it does on a machine. Another quote that struck me as relevant was inspired by Rosalind Krauss: “‘the net’ cannot be called a medium - only a ‘meta-medium’ as all of the machines create the ‘ensemble’”. Although the internet is not a medium, it is interesting to consider it part of this collaborative format, which creates a wider system of creativity.

On that same note, I previously explored the idea that matter is more active in our lives than we know; from affecting our consciousness to lining our fingertips, it could be that machine is equally close. What if the internet is a different mode of existing? A different state of our being and becoming? One comment within the text suggested that we are ‘space creating beings’: that we create our space, that we live to have a space. Sloterdijk also said that ‘spheres are spaces of co-existence’.

It also got me thinking about the Venn diagram: where do we locate ourselves now, in these uncertain times? This diagram was one of the first thoughts that actually stood out to me.

It might be that the Venn diagram is not suited to this part of my investigation, but it is interesting nonetheless.

__

Nettitudes: A re-read through highlighted quotes

The digital realm, especially that of the Internet with its dazzling proportions and numerous applications, also allows such multifaceted, intricate cultural forms to appear and expand. pp22

In Simondon’s 1958 book On the Mode of Existence of the Technical Object, he explains how our present-day sociotechnological environment has the strong, intrinsic potential to become a thriving cultural space. He believes that, where machines were once perceived as enemies or the tools of humans, their position changes when both humans and machines interact via a much larger ‘meta-machine’, or what Simondon refers to as an ‘ensemble’. He describes how ‘the machine’ became an ‘individual’ in the ‘technical ensembles’ of the late twentieth century. pp25. This ensemble feels really important when thinking about how I locate my practice and how I understand the hierarchy of machine-to-matter.

Net art is art that is created from an awareness of, or deep involvement, in a world transformed and affected by elaborate technical ensembles, which are, in turn, established or enhanced through the net. pp25

The internet acts as a vector in a worldwide, unstable complex of technological and cultural tendencies, and the art produced through or for it is heterogeneous, not uniform. pp26

Net art is, therefore, not a discipline, because it contains and even connects numerous disciplines. pp26

Marshall McLuhan would call it an extension of the nervous system, in which a closed, active, ‘live’ system, is created between different locations or spaces. This means that ‘flow’ cannot take place in one single machine, installation or ‘one-way’ data stream. pp64

A machine, however, whether it is a sewing machine or a computer, contains the potential to perform several of these ‘materialised potential activities’ simultaneously. pp65

Inserted below: Diagram of thinking through Nettitudes.


Photo

This specific question came to me today: “Bersa Thunder 380 or Taurus Curve 380 for a first pistol?”

This is a question I have been wanting to be asked, so I am going to do a quick write up. I did not have a Bersa on hand, so I grabbed the Walther PPK/s I have nearby, since the Bersa Thunder is essentially a more modern take on the Walther PPK design. In short, I would recommend the Bersa Thunder for a first pistol, but at the same time I still recommend the Taurus Curve; however, there are qualifiers I must discuss.

Let us begin: Bersa Thunder and the Walther PP design.

(This photo of the Bersa Thunder is not mine; it came from a Google search.)

The Bersa is essentially a modern take on the Walther PPK, with a few improvements. It is a straight blowback pistol, which I consider very notable, and I will come to that later. Moving along, the Bersa Thunder has a nice variety of controls, such as a manual safety and decocker. It also has a single-action/double-action setup with an exposed hammer, allowing you to place the gun in single-action mode for a clean trigger pull. That said, there are Bersa Thunder models with a bobbed hammer that are double-action only, but this will be obvious when purchasing one, and it is not the standard setup.

The Bersa Thunder has several updates that are great additions to the Walther Polizeipistole (police pistol) design. One big update is the slide release lever, which allows the slide to be released without racking the slide or removing the magazine. The Bersa also has a fixed trigger guard, as most modern pistols do; the old Walther design does not. The PP design is disassembled by pulling the trigger guard down, whereas the Bersa uses a mechanism on the side. The Bersa is also more ergonomic than the older pistols and the Curve. Personally, I like the trigger reset on the Bersa. I will abstain from commenting on the double-action trigger pull, as that can be changed with hammer springs.

On most Bersa Thunders, you can upgrade the sights, because they are dovetailed and removable. Again, there are some Thunders with low-profile sights that are part of the slide (I would recommend buying a Thunder model with removable sights, but I’m not going to discredit the others).

PP design & Straight Blowback: The Bersa Thunder is unmistakably similar to the Walther PPK, which is the short version of the Walther PP (police pistol). There is not much difference in size between the PP and PPK, but the PPK is shorter, as you can see in this picture. (The black/blue pistol is a FEG PA63, an almost identical variant of the PP that originates from Hungary).

The PP and PPK are both great for concealed carry. They are sleek, relatively small (but large enough to hold well), and generally comfortable to carry and shoot.

These pistols are straight blowback, a design which generally implies that the barrel is fixed to the frame. Although my favorite pistols are Heckler & Koch USPs, I crave the idea of straight blowback pistols. Unlike pistols with a tilting, locking barrel, the fixed barrel does not require a guide rod beneath it, which allows the barrel to sit lower. And the lower the barrel, the easier it is for recoil energy to be transferred into your forearm instead of your wrist.

Bersa Recap: The Bersa is affordable, able to be accessorized, great for concealed carry, and a modern take on a historic pistol design (yes, I consider the Walther PP to be historically significant).

Now, we move on to the Taurus Curve.

Ah yes, the Taurus Curve. It’s new, futuristic, and weird. It doesn’t have typical sights, and you can’t purchase extra mags for it. All of its controls are either weird or nonexistent. It is made by Taurus, which has notoriously bad quality control. Oh, and yes, it is curved.

Notwithstanding all of this, would I recommend the Curve? Yes.

Many will say the Curve is for the inexperienced shooter, or for someone who wants to buy a gun but will never shoot it. Honestly, I do not know how anyone could construct either of those arguments with intellectual honesty. I would argue instead that the Curve is for individuals who are skilled and knowledgeable with firearms and need something for deep carry.

The Curve is curved! It feels strange in your right hand, but interesting and enjoyable. It doesn’t feel so weird in your left hand, which is how I shoot it. I shoot left-handed and carry the Curve on the left side of my back, with the slide next to my spine. It comes out quickly and conveniently. Many are upset that there is no “left-handed Curve,” but in all honesty the “right-handed” shape works great for how I like to carry concealed.

To keep the pistol slim, the Curve’s slide is very short, which can make it difficult for some people to pull the slide back. However, the slide has a great (and aesthetically pleasing) set of serrations that provide good traction for operation. It is worth noting that you cannot feel the serrations while carrying the Curve.

What is the deal, Taurus? Can’t you get a handle on quality control? If you are having failures to feed or failures to eject with the Curve, I can almost guarantee the issue is with the extractor claw. There is a real chance you will need to polish and slightly reshape the extractor to get the Curve to operate well with all types of ammunition. This is quick and easy to fix, but not if you lack knowledge and skill in firearm care. Again, this is consistent with my argument that the Curve is for those skilled with firearms. (Seriously, Taurus, I can send you the design improvements needed on this one; it would take minimal effort to fix all of this QC stuff with the extractor.)

Taurus Curve sights and sight picture: Is the Curve a range gun? Meh. It can be. If you are skilled and need to show off, you can annihilate targets with the Curve all day long. But it is not as fun as a USP Match by any means. If you scroll through our blog, you can see videos/gifs of us putting entire magazines into targets that are 25-50 yards away. Mind you, this was before we made custom sights for the Curve. It has plenty of barrel to stabilize a .380 ACP round. The issue comes with how the operator can interact with the controls of the firearm, which are very minimal on the Curve. It does have a physical sight system, but it is anything but conventional: it is made up of lines and a loaded chamber indicator. The information conveyed by the Curve’s natural sight system is very fine, which in this context means meticulous, thin, and exceedingly easy to overlook. Again, with the information conveyed being so difficult to detect, this is an item for the skilled operator who intimately understands the abstract forms of firearm operation. The sight picture information is certainly there, but it is anything but overwhelming. Only those skilled at shooting will excel with the Curve.

Taurus did incorporate into the frame the opportunity for a laser and light (made by Laserlyte). But not all Curves come with the Laserlyte installed, due to an issue with supply and demand. Furthermore, the button to turn on the Laserlyte module is not the most intuitive thing in the world. So if you need a laser to tell you where to shoot, you have that option with the Curve.

The Taurus Curve is double action only, with a shrouded hammer hidden away in its tiny slide. The trigger pull is long but consistent, and you can feel when you are about to release the hammer. Although it shares a few parts with the Taurus TCP 738 (such as the mag frame, which can be used to make extra mags if you have the ability to do so), it does not have a slide release or a conventional magazine release like the TCP 738 does. It will lock the slide back after the last round, but you have to manually rack the slide to get moving with a second magazine (one is included with every Curve). The Curve magazine actually has a clip to hold it in. Instead of a more conventional mag release near the trigger guard (or even on the heel), the Curve has a spot on the magazine that you push to release the clip-lever mechanism. I would not have gone this route had I designed the Curve, but it fits with the trigger design and smooth profile. The trigger has no dead space behind it, ensuring nothing can get behind the trigger if the gun is in a bag without its trigger guard. The trigger wraps around the magazine when pulled (inside the frame).

The Curve has no safety, but instead a cover that pops off when you draw it. This serves a few purposes. It keeps the trigger covered while holstered, but it will pop off when you draw the gun, as long as you have tied the string to a belt loop…or something else. It works as a safety, but also keeps debris away from the Laserlyte button. The cover is smooth and intuitive. I thought I would never use it, but I actually like it. It works well and pops off only when the firearm is pulled in a certain direction, which is the same direction you naturally pull it when getting ready to fire.

The Curve holsters itself. The Curve does its own thing, which is what makes it great. It has a belt clip from the factory, which is awesome. Even though the pistol is thicker than some other small pistols, it actually carries thinner on your body. It does not require a holster, and the curved frame wraps around you, so it is very comfortable to wear with all kinds of clothes. With the belt clip and trigger cover, the Curve is so smooth that you can easily forget it is there. I wear the Curve all the time, and no one ever has a clue it is there. Even the people who know I am carrying have a hard time detecting it, and I am a slim 25-year-old who wears clothes that actually fit.

The Curve uses a locking/unlocking design. The barrel is not fixed on the Curve, as it is on the Bersa Thunder. Instead, it uses the same locking design found on most modern pistols. This is fine, but it does slightly raise the barrel. Nonetheless, the Curve has a low enough profile that the recoil energy still moves down your forearm well.

Taurus Curve Recap: This is an awesome and futuristic pistol that I would recommend to those who understand the intricacies of firearms. It has some notable QC issues out of the box, as well as accessorizing limitations. But out of all the firearms on the market, I cannot find one that is more concealable. I cannot find one that can execute the task of deep carry with such effectiveness. Once you get past its issues and polish it up, it works well and wears well. Moreover, if you can make your own parts, you can put sights on it and make as many mags as you want. I wish Taurus would come out with an updated version that has a few improvements (cough cough extractor claw QC and traditional magazine release). With all that said, it truly is the future of concealed carry, and I hope more pistols come out that follow its form. The Curve is the ultimate in concealed carry if you want a truly concealed firearm, and I say that as someone who is experienced with the G42, G27, PPK, KelTec 3-AT, Ruger LCP, NAA mini revolvers, and a whole variety of other small pistols. These other pistols may be great, but I would recommend the Curve to skilled shooters because I cannot find another pistol that is so effective at being concealed, not to mention being comfortable and easy to access while being concealed.

TLDR: Buy yourself a Bersa Thunder if you want a more modern PPK, as it is a versatile firearm. Buy yourself a Taurus Curve if you want the ultimate in concealability, and are capable of tweaking it.

If you don’t like either, do have the social skills to keep it to yourself, as this is intended as a constructive writeup. I own some very expensive toys, but I still don’t trash casual stuff. Social skills, people.

#taurus curve#bersa thunder#taurus arms#conceal carry#ccw#firearms#guns#gunblr#pistol#380 pistol#380 acp


The iPhone XS proves one thing definitively: that the iPhone X was probably one of the most ambitious product bets of all time.

When Apple told me in 2017 that they put aside plans for the iterative upgrade that they were going to ship and went all in on the iPhone X because they thought they could jump ahead a year, they were not blustering. That the iPhone XS feels, at least on the surface, like one of Apple’s most “S” models ever is a testament to how aggressive the iPhone X timeline was.

I think there will be plenty of people who will see this as a weakness of the iPhone XS, and I can understand their point of view. There are about a half-dozen definitive improvements in the XS over the iPhone X, but none of them has quite the buzzword-worthy effectiveness of a marquee upgrade like 64-bit, 3D Touch or wireless charging — all benefits delivered in previous “S” years.

That weakness, however, is only really present if you view it through the eyes of the year-over-year upgrader. As an upgrade over an iPhone X, I’d say you’re going to have to love what they’ve done with the camera to want to make the jump. As a move from any other device, it’s a huge win and you’re going head-first into sculpted OLED screens, face recognition and super durable gesture-first interfaces and a bunch of other genre-defining moves that Apple made in 2017, thinking about 2030, while you were sitting back there in 2016.

Since I do not have an iPhone XR, I can’t really make a call for you on that comparison, but from what I saw at the event and from what I know about the tech in the iPhone XS and XS Max from using them over the past week, I have some basic theories about how it will stack up.

For those with interest in the edge of the envelope, however, there is a lot to absorb in these two new phones, separated only by size. Once you begin to unpack the technological advancements behind each of the upgrades in the XS, you begin to understand the real competitive edge and competence of Apple’s silicon team, and how well they listen to what the software side needs now and in the future.

Whether that makes any difference for you day to day is another question, one that, as I mentioned above, really lands on how much you like the camera.

But first, let’s walk through some other interesting new stuff.

Notes on durability

As is always true with my testing methodology, I treat this as anyone would who got a new iPhone and loaded an iCloud backup onto it. Plenty of other sites will do clean room testing if you like comparison porn, but I really don’t think that does most folks much good. By and large, most people aren’t making choices between ecosystems based on one spec or another. Instead, I try to take them along on prototypical daily carries, whether to work for TechCrunch, on vacation or doing family stuff. A foot injury precluded any theme parks this year (plus, I don’t like to be predictable) so I did some office work, road travel in the center of California and some family outings to the park and zoo. A mix of use cases that involves CarPlay, navigation, photos and general use in a suburban environment.

In terms of testing locale, Fresno may not be the most metropolitan city, but it’s got some interesting conditions that set it apart from the cities where most of the iPhones are going to end up being tested. Network conditions are pretty adverse in a lot of places, for one. There’s a lot of farmland and undeveloped acreage and not all of it is covered well by wireless carriers. Then there’s the heat. Most of the year it’s above 90 degrees Fahrenheit and a good chunk of that is spent above 100. That means that batteries take an absolute beating here and often perform worse than other, more temperate, places like San Francisco. I think that’s true of a lot of places where iPhones get used, but not so much the places where they get reviewed.

That said, battery life has been hard to judge. In my rundown tests, the iPhone XS Max clearly went beast mode, outlasting my iPhone X and iPhone XS. Between those two, though, it was tougher to tell. I try to wait until the end of the period I have to test the phones to do battery stuff so that background indexing doesn’t affect the numbers. In my ‘real world’ testing in the 90+ degree heat around here, iPhone XS did best my iPhone X by a few percentage points, which is what Apple does claim, but my X is also a year old. The battery didn’t fail during even intense days of testing with the XS.

In terms of storage I’m tapping at the door of 256GB, so the addition of a 512GB option is really nice. As always, the easiest way to determine what size you should buy is to check your existing free space. If you’re using around 50% of what your phone currently has, buy the same size. If you’re using more, consider upgrading because these phones are only getting faster at taking better pictures and video and that will eat up more space.

The review units I was given both had the new gold finish. As I mentioned on the day, this is a much deeper, brassier gold than the Apple Watch Edition. It’s less ‘pawn shop gold’ and more ‘this is very expensive’ gold. I like it a lot, though it is hard to photograph accurately — if you’re skeptical, try to see it in person. It has a touch of pink added in, especially as you look at the back glass along with the metal bands around the edges. The back glass has a pearlescent look now as well, and we were told that this is a new formulation that Apple created specifically with Corning. Apple says that this is the most durable glass ever in a smartphone.

My current iPhone has held up to multiple falls over 3 feet over the past year, one of which resulted in a broken screen and replacement under warranty. Doubtless multiple YouTubers will be hitting this thing with hammers and dropping it from buildings in beautiful Phantom Flex slo-mo soon enough. I didn’t test it. One thing I am interested in seeing develop, however, is how the glass holds up to fine abrasions and scratches over time.

My iPhone X is riddled with scratches both front and back, something having to do with the glass formulation being harder, but more brittle. Less likely to break on impact but more prone to abrasion. I’m a dedicated no-caser, which is why my phone looks like it does, but there’s no way for me to tell how the iPhone XS and XS Max will hold up without giving them more time on the clock. So I’ll return to this in a few weeks.

Both the gold and space grey iPhones XS have been subjected to a coating process called physical vapor deposition or PVD. Basically metal particles get vaporized and bonded to the surface to coat and color the band. PVD is a process, not a material, so I’m not sure what they’re actually coating these with, but one suggestion has been titanium nitride. I don’t mind the weathering that has happened on my iPhone X band, but I think it would look a lot worse on the gold, so I’m hoping that this process (which is known to be incredibly durable and used in machine tooling) will improve the durability of the band. That said, I know most people are not no-casers like me so it’s likely a moot point.

Now let’s get to the nut of it: the camera.

Bokeh let’s do it

I’m (still) not going to be comparing the iPhone XS to an interchangeable lens camera because portrait mode is not a replacement for those, it’s about pulling them out less. That said, this is the closest it’s ever been.