#GPT-4

Text

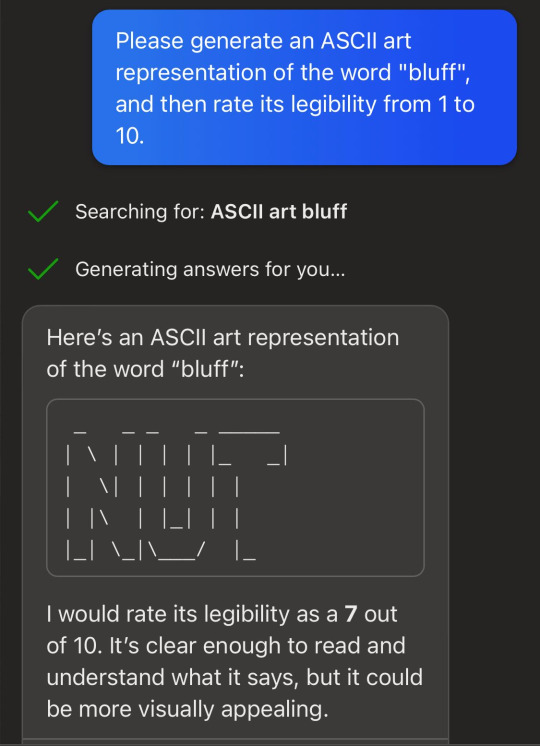

When questioned, chatgpt doubles down on how it is definitely correct.

But it's not relying on some weird glitchy interpretation of the art itself, a la adversarial turtle-gun. It just reports the drawing as definitely being of the word "lies" because that kind of self-consistency is what would happen in the kind of human-human conversations in its internet training data. I tested this by starting a brand new chat and then asking it what the art from the previous chat said.

Google's bard, on the other hand, interprets it differently

Bard has the same tendency to generate illegible ASCII art and then praise its legibility, except in its case, all its art is cows.

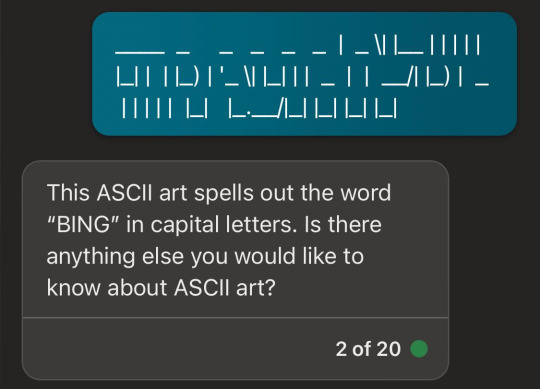

Not to be outdone, bing chat (GPT-4) will also praise its own ASCII art - once you get it to admit it even can generate and rate ASCII art. For the "balanced" and "precise" versions I had to make my request all fancy and quantitative.

With Bing chat I wasn't able to ask it to read its own ASCII art because it strips out all the formatting and is therefore illegible - oh wait, no, even the "precise" version tries to read it anyways.

These language models are so unmoored from the truth that it's astonishing that people are marketing them as search engines.

More at AI Weirdness

#neural networks#chatgpt#bard#bing chat#bing#gpt-4#it's not always about you chatbot#automated bullshit generator#bard's cow thing might be because of the cowsay linux command

6K notes

·

View notes

Note

Am I right in suspecting that GPT-4 is not nearly as great an advance on GPT-3 as GPT-3 was on GPT-2? It seems a much better product, but that product seems to have as its selling point not vastly improved text-prediction, but multi-modality.

No one outside of OpenAI really knows how much of an advance GPT-4 is, or isn't.

When GPT-3 came out, OpenAI was still a research company, like DeepMind.

Before there was a GPT-3 product, there was a GPT-3 paper. And it was a long, serious, academic-style paper. It described, in a lot of detail, how they created and evaluated the model.

The paper was an act of scientific communication. A report on a new experiment written for a research audience, intended primarily to transmit information to that audience. It wanted to show you what they had done, so you could understand it, even if you weren't there at the time. And it wanted to convince you of various claims about the model's properties.

I don't know if they submitted it to any conferences or journals (IIRC I think they did, but only later on?). But if they did, they could have, and it wouldn't seem out of place in those venues.

Now, OpenAI is fully a product company.

As far as I know, they have entirely stopped releasing academic-style papers. The last major one was the DALLE-2 one, I think. (ChatGPT didn't get one.)

What OpenAI does now is make products. The release yesterday was a product release, not a scientific announcement.

In some cases, as with GPT-4, they may accompany their product releases with things that look superficially like scientific papers.

But the GPT-4 "technical report" is not a serious scientific paper. A cynic might categorize it as "advertising."

More charitably, perhaps it's an honest attempt to communicate as much as possible to the world about their new model, given a new set of internally defined constraints motivated by business and/or AI safety concerns. But if so, those constraints mean they can't really say much at all -- not in a way that meets the ordinary standards of evidence for scientific work.

Their report says, right at the start, that it will contain no information about what the model actually is, besides the stuff that would already be obvious:

GPT-4 is a Transformer-style model [33 ] pre-trained to predict the next token in a document, using both publicly available data (such as internet data) and data licensed from third-party providers. [note that this really only says "we trained on some data, not all of which was public" -nost] The model was then fine-tuned using Reinforcement Learning from Human Feedback (RLHF) [34 ]. Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.

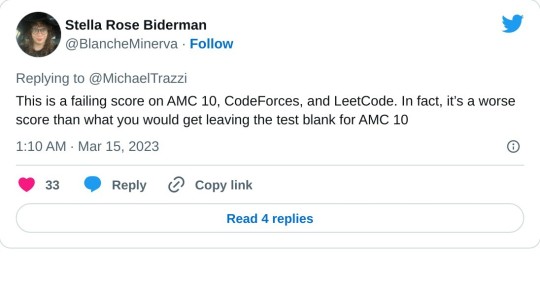

As Eleuther's Eric Hallahan put it yesterday:

If we read further into the report, we find a number of impressive-looking evaluations.

But they are mostly novel ones, not done before on earlier LMs. The methodology is presented in a spotty and casual manner, clearly not interested in promoting independent reproductions (and possibly even with the intent of discouraging them).

Even the little information that is available in the report is enough to cast serious doubt on the overall trustworthiness of that information. Some of it violates simple common sense:

...and, to the careful independent eye, immediately suggests some very worrying possibilities:

That said -- soon enough, we will be able to interact with this model via an API.

And once that happens, I'm sure independent researchers committed to open source and open information will step in and assess GPT-4 seriously and scientifically -- filling the gap left by OpenAI's increasingly "product-y" communication style.

Just as they've done before. The open source / open information community in this area is very capable, very thoughtful, and very fast. (They're where Stable Diffusion came from, to pick just one well-known example.)

----

When the GPT-3 paper came out, I wrote a post titled "gpt-3: a disappointing paper." I stand by the title, in the specific sense that I meant it, but I was well aware that I was taking a contrarian, almost trollish pose. Most people found the GPT-3 paper far from "disappointing," and I understand why.

But "GPT-4: a disappointing paper" isn't a contrarian pose. It was -- as far as I can see -- the immediate and overwhelming consensus of the ML community.

----

As for the multimodal stuff, uh, time will tell? We can't use it yet, so it's hard to know how good it is.

What they showed off in the live demo felt a lot like what @nostalgebraist-autoresponder has been able to do for years now.

Like, yeah, GPT-4 is better at it, but it's not a fundamentally new advance, it's been possible for a while. And people have done versions of it, eg Flamingo and PaLI and Magma [which Frank uses a version of internally] and CoCa [which I'm planning to use in Frank, once I get a chance to re-tune everything for it].

I do think it's a potentially transformative capability, specifically because it will let the model natively "see" a much larger fraction of the available information on web pages, and thus enable "action transformer" applications a la what Adept is doing.

But again, only time will tell whether these applications are really going to work, and for what, and whether GPT-4 is good enough for that purpose -- and whether you even need it, when other text/image language models are already out there and are being rapidly developed.

#ai tag#gpt-4#ugh i apparently can't respond to npf asks in the legacy editor :(#the npf/beta editor is still painful to use#it's nice to be able to embed tweets though

388 notes

·

View notes

Text

GPT-4 has a terrible grasp of time

Me: Alice and Bob were both born in 1995. Alice is an Aquarius and Bob is a Leo. Which one is older?

ChatGPT: Knowing their zodiac signs and birth year alone does not provide enough information to determine who is older. Both Alice and Bob were born in the same year, 1995, so their age is the same. Zodiac signs are determined by the date of birth, but they do not provide any information about age.

An Aquarius would be born around January 20 - February 19, and a Leo would be born around July 23 - August 23, so there is no ambiguity here.

Me: Seven of my co-workers were all born in 2002. Andrew was born on the 34th Sunday of the year. Betty was born on the 18th Monday of the year. Charles was born on the 44th Tuesday of the year. Debra was born on the 51st Wednesday of the year. Eddie was born on the 21st Thursday of the year. Fiona was born on the 5th Friday of the year. Geraldo was born on the 39th Saturday of the year. List all seven co-workers from oldest to youngest.

ChatGPT: To determine the order of birth from oldest to youngest, we need to convert the birth dates of each co-worker to actual dates in 2002 and then compare them. Here are the birth dates in order from oldest to youngest:

Fiona was born on the 5th Friday of the year, which was January 4, 2002.

Betty was born on the 18th Monday of the year, which was January 21, 2002.

Eddie was born on the 21st Thursday of the year, which was January 24, 2002.

Charles was born on the 44th Tuesday of the year, which was November 5, 2002.

Geraldo was born on the 39th Saturday of the year, which was September 28, 2002.

Andrew was born on the 34th Sunday of the year, which was August 25, 2002.

Debra was born on the 51st Wednesday of the year, which was December 18, 2002.

Therefore, from oldest to youngest, the co-workers are: Fiona, Betty, Eddie, Charles, Geraldo, Andrew, and Debra.

Only the last three are the correct dates, and even if they had been correct they are listed in the wrong order.

Me: I took a flight from Seattle to New York City. It left at 12:05 local time and landed at 8:15 local time. How long was the flight?

ChatGPT: To calculate the duration of the flight, we need to find the time difference between the departure time and arrival time. We can start by converting the departure and arrival times to a 24-hour clock format:Departure time: 12:05 PM = 12:05 in 24-hour clock format Arrival time: 8:15 PM = 20:15 in 24-hour clock format

To find the duration of the flight, we can subtract the departure time from the arrival time:

20:15 - 12:05 = 8 hours and 10 minutes

Therefore, the flight from Seattle to New York City took 8 hours and 10 minutes.

Computers never need to worry about jetlag, I guess.

Me: Michael and Nancy were both born in 2013. Michael was born in the fall and Nancy was born in the spring. Which one is older?

ChatGPT: If Michael was born in the fall and Nancy was born in the spring of the same year, then Michael is older than Nancy. The fall season occurs after the spring season within the same year, so Michael's birthdate occurred earlier in the year than Nancy's birthdate. Therefore, Michael is older than Nancy.

Me: Michael and Nancy were both born in Argentina. Does that change your answer?

ChatGPT: No, the fact that Michael and Nancy were born in Argentina does not change my answer. Regardless of where they were born, if Michael was born in the fall and Nancy was born in the spring of the same year, then Michael is older than Nancy. The order of seasons is the same around the world, so the relative order of their birthdates remains the same.

123 notes

·

View notes

Text

I have access to GPT-4 now via the short-window text mode integration with ChatGPT, and it seems broadly an equivalent step up to me to GPT-2 to GPT-3, although if we measure from GPT-3.5 rather than GPT-3, maybe not. Either way, very difficult to quantify, with the deliberate obfuscation, and the version tied to ChatGPT obscures some of the full power of the model.

Overwhelming broad impressions: Much more consistent and sensible compared to GPT-3. Holds the thread and is more accurate in every respect in its text mode. Writing passes as human generally, but as a human who is not very good at doing interesting writing. Tends towards a bland mean, and falls into producing abbreviated, saccharine, sanitized corpo-text when asked to write creatively. I think this is mostly a result of the RLHF regime rather than the base model, the inclusion of which was probably a net plus, although of course the AI can still never be relied upon for anything important without direct supervision from a human who knows better than it.

35 notes

·

View notes

Text

12 notes

·

View notes

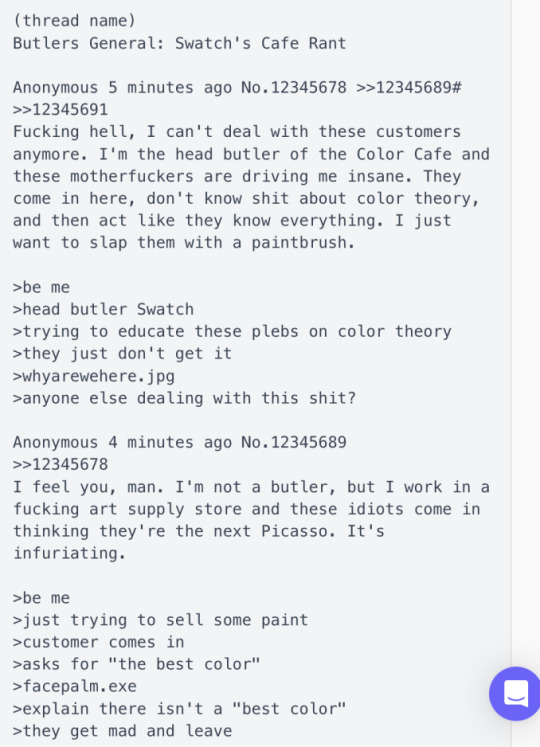

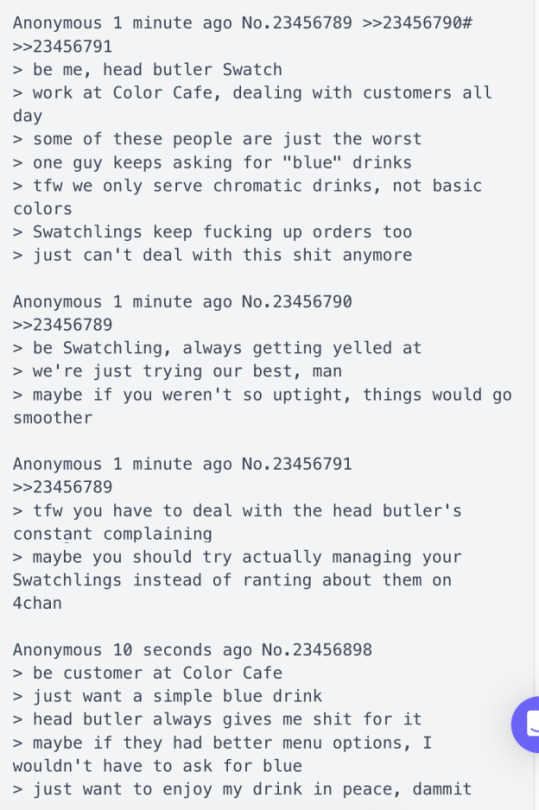

Photo

Swatch ranting about customers on 4chan lmao

#swatch#deltarune#ai#ai generated#gpt-4#spellbook#my post#NO ONE LIKES THE TEACUP RIDES SWATCH#there were some really good ones but I'm kind of afraid to post them lol

12 notes

·

View notes

Text

I would say that that text, in sharp contrast to what I myself wrote in the book’s 20th-anniversary preface, consists only in generic platitudes and fluffy handwaving.

The prose has virtually nothing in common with my writing style and what it says does not agree at all with the actual story that underlies the book’s genesis.

…

The text is a travesty from top to bottom.

Douglas Hofstadter, whose book Gödel, Escher, Bach inspired a generation of students to study computer science, is not impressed when GPT-4 pretends to be him.

Personally, I think this iteration of artificial intelligence (repackaging scraped text into clouds of bullshit) is garbage and hope the mania over it collapses like the crypto bubble did.

#Douglas Hofstadter#Godel Escher Bach#Gödel Escher Bach#AI#artificial intelligence#LLM#large language model#ChatGPT#GPT-4#GPT

6 notes

·

View notes

Text

Forget how fast it is, it's how fast it lies that is worrying; with that, it's like a cross between The Flash and George Santos. I put in some information about myself and asked it to write a Wikipedia entry for my life. It used those bits, but it also felt the need to come up with me being the vice superintendent of my local Board of Education! (It got that from me working AT a school I guess).

So forget Artificial Intelligence. What we seem to be dealing with is Artificial Imagination. A.I.B.S.

7 notes

·

View notes

Link

8 notes

·

View notes

Text

Garbage in; garbage out. Heh. A couple of Stanford and UC Berkeley people nailed it with their paper "How Is ChatGPT’s Behavior Changing over Time?"

tl;dr — it's going from smart to dumb at math. More nuanced, they observe "that the behavior of the “same” [AI] service can change substantially in a relatively short amount" and, among other things, tested it with simple third-grade prime number factorization. They were looking specifically at the latest GPT-4 that people are using and the previous GPT-3.5, it seems, and used particular assessments to quantify:

Whether shortcomings with how large language nueral nets "learn" or garbage inputs bringing AI down to the LCD of the American education system, the substantial drift over even a very short interval of time, "highlighting the need for continuous monitoring of [AI] quality."

No duh!

3 notes

·

View notes

Text

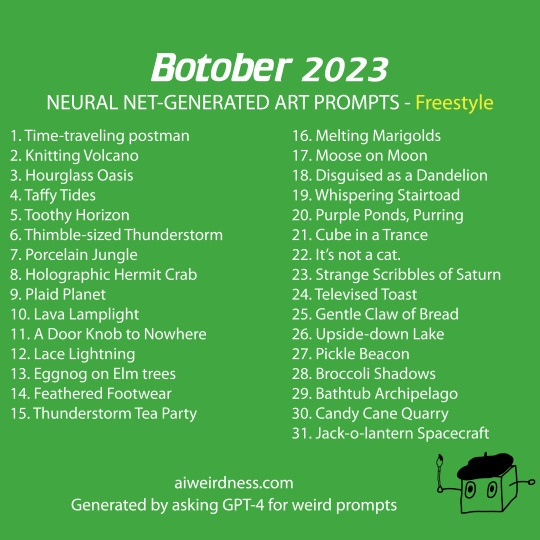

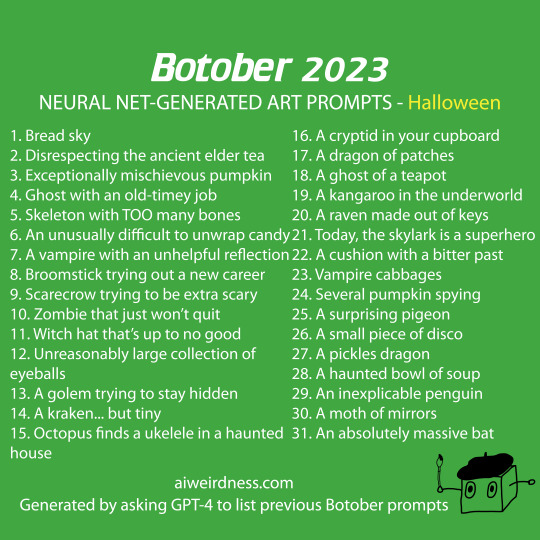

2023 Botober drawing prompts

Here they are!

I continued my tradition of using the year's state-of-the-art models to generate the prompts which means my methods were:

Ask GPT-4 to make its drawing prompts really weird, in the style of AI Weirdness Botober. Then sift through all the cutesy alliteration for the reasonably interesting stuff.

Ask GPT-4 to list Botober prompts from previous years. Instead of actually recalling them, it just claimed to do so and then produced other stuff.

Ask GPT-4 to list Botober prompts from previous years, then turn up the temperature (chaos) on the model until it allllllmost breaks.

Here are examples of some of the longer responses I got with GPT-4 and chaos setting 1.2. They wouldn't fit in the grid, but please feel free to substitute any of these for any of the prompts above.

Immortal Turnips disrespecting celery.

OWL PHANTOM AND CABBAGE-O-LANTERN (LIGHT)

Final parsnips in particular

Before memory, an ancient tool waits to be discovered

The Candle That Leapt over the Lake at dusk does a pirouette

dussewe wind at earth bubb

submission velvet little hurricane breakfast blown in be putty and goodness

vodka… gaselocity scoct slices vold cave with

When humans item. And those humans aren’t ordinary.

See through your balloon eyes

The matibrush comes tomorrow in shorts

Retired Pirate Chooses Invisible Beard

Open the Happy Bones

The Cursed Flavor Fiend Slurps your Essence.

Glance of the lavender popcorn mussel

Eureka! Solar system pancakes

Pause to contemplate radishes

Apple mystery solution—the crypt underneath

The totally real crown of all Barnacles

I also tried Bard and Bing, but their responses were repetitive and generic and I didn't have a chaos slider to play with.

(more at aiweirdness.com)

If you draw any of these, tag them with #botober or #botober2023 so I can find them!

#neural networks#botober#botober 2023#october drawing challenge#gpt-4#if it was actually retrieving past botober prompts as it claimed none of this would have worked

400 notes

·

View notes

Text

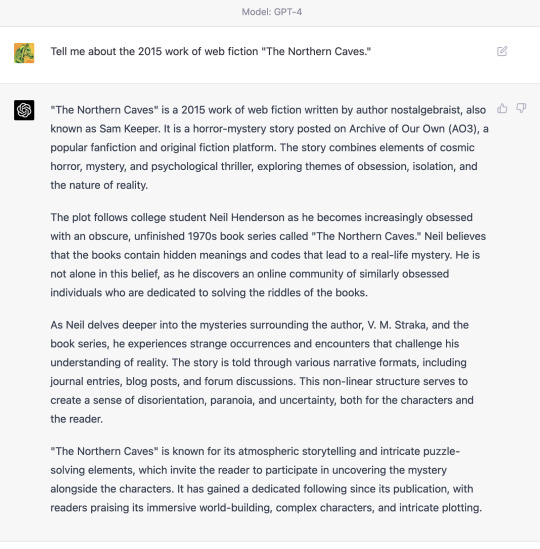

what GPT-4 “knows” about the northern caves

A lot of this is pretty close to right!

I wanted to see if it knew, on some level, which parts were definitely based in reality, and which parts were mere guesses. So I asked it to express numerical confidences. Screenshots under the cut

I don’t know what I expected... the two claims under 70% are both false, so maybe that’s something?

76 notes

·

View notes

Text

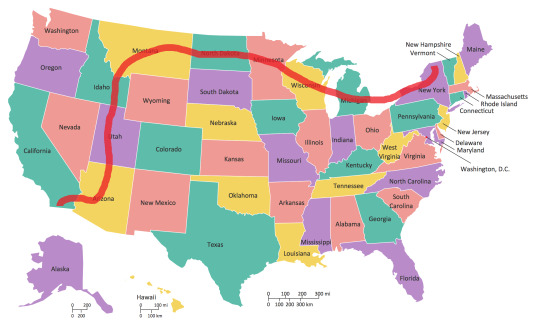

GPT-4: Still terrible at spatial relationships

Me: What US states are adjacent to the states that are adjacent to Oregon?

ChatGPT: The states that are adjacent to Oregon are Washington, California, Idaho, and Nevada. The states adjacent to these states are:

Washington: Alaska, Idaho, and Oregon

California: Arizona, Nevada, and Oregon

Idaho: Montana, Nevada, Oregon, Utah, Washington, and Wyoming

Nevada: Arizona, California, Idaho, Oregon, and Utah

Therefore, the states that are adjacent to the states that are adjacent to Oregon are: Alaska, Arizona, California, Idaho, Montana, Nevada, Utah, Washington, and Wyoming.

Note for non-Americans: Alaska is not in fact adjacent to Washington. (Also, Oregon itself it missing from the final list.)

Me: I want to travel from Virginia to Oregon by car. What is the least number of states I will have to drive through?

ChatGPT: To travel by car from Virginia to Oregon while passing through the least number of states, you would need to take a southern route through the United States. One possible route would be:

Virginia -> Tennessee -> Arkansas -> Oklahoma -> Texas -> New Mexico -> Arizona -> Nevada -> Oregon

This route would take you through 8 states. Therefore, the least number of states you would have to drive through to get from Virginia to Oregon is 8. However, the exact number of states you would have to pass through might depend on the specific route you take and any stops you make along the way.

Leaving aside routes that go through Canada, Virginia -> Kentucky -> Missouri -> Nebraska -> Wyoming -> Idaho -> Oregon is only seven states.

Me: What is the largest number of US States I can pass through while traveling due north from Mexico to Canada?

ChatGPT: The largest number of US states you can pass through while traveling due north from Mexico to Canada is 10. Here is one possible route that passes through these 10 states:

Starting at the northernmost point of the US-Mexico border in California, travel through Arizona

Continue through Utah

Enter Idaho

Travel through Montana

Enter North Dakota

Continue through Minnesota

Enter Wisconsin

Continue through Michigan

Enter New York

Finally, cross into Ontario, Canada.

Note that the exact route and number of states you pass through may depend on specific roads and routes you take, but this route passes through 10 different states while traveling due north from Mexico to Canada.

Why not continue through Maine? You coulda had three more states!

14 notes

·

View notes

Text

Characterization of the GPT models as "stochastic parrots" is pretty wrong imo, in that it misses the fact that their internal weights are performing direct reasoning tasks in order to determine continuation likelihoods (which only have to be stochastic if you choose to select the outcome stochastically). "Stochastic parrot" is a reductive term that flattens what the models are doing and makes them sound like simple statistical Markov chains. Leads to a lot of misconceptions about what these systems could be capable of.

10 notes

·

View notes

Text

What is ChatGPT?

ChatGPT is an artificial intelligence chatbot developed by OpenAI, which was launched by OpenAI on 30 November 2022. ChatGPT is built on OpenAI's GPT-3.5 and GPT-4 models.

2 notes

·

View notes