
NVIDIA HGX AI Supercomputer Now Available at Exeton - Experience Next-Gen AI Computing

Exeton proudly announces a significant advancement in computing technology with the availability of the NVIDIA HGX AI Supercomputer. Tailored for high-caliber AI research and high-performance computing (HPC) tasks, the NVIDIA HGX is your gateway to achieving unparalleled computational speeds and efficiency.

The NVIDIA HGX AI platform is offered in several powerful configurations. Customers can choose single baseboards with four H200 or H100 GPUs, or opt for the more expansive eight-GPU configurations built around H200, H100, B200, or B100 GPUs. These setups are engineered to handle the most complex and demanding tasks across industries, including AI development, deep learning, and scientific research.

Central to the NVIDIA HGX B200 and B100 models are the innovative Blackwell Tensor Core GPUs. These are seamlessly integrated with high-speed interconnects to propel your data center into the next era of accelerated computing. Offering up to 15X more inference performance than its predecessors, the Blackwell-based HGX systems are ideal for running complex generative AI models, performing advanced data analytics, and managing intensive HPC operations.

The HGX H200 model takes performance to the next level, combining H200 Tensor Core GPUs with state-of-the-art interconnects for superior scalability and security. Capable of delivering up to 32 petaFLOPS of FP8 compute in its eight-GPU configuration, the HGX H200 sets a new benchmark as the world’s most potent accelerated scale-up server platform for AI and high-performance computing.

Exeton is excited to offer this revolutionary tool that redefines what is possible in AI and HPC. The NVIDIA HGX AI Supercomputer is not just a piece of technology; it is your partner in pushing the boundaries of what is computationally possible. Visit Exeton today and choose the NVIDIA HGX model that will drive your ambitions to new computational heights.


Exeton Launches Vector One, A New Single-GPU Desktop PC

The Exeton Vector One is now available for order. The new single-GPU desktop PC is built to tackle demanding AI/ML tasks, from fine-tuning Stable Diffusion to handling the complexities of Llama 2 7B. Exeton customers can now benefit from a more compact, quieter desktop PC at a price point of less than $5,500.

Vector One Specs

GPU: 1x NVIDIA GeForce RTX 4090, 24 GB, liquid-cooled

PROCESSOR: AMD Ryzen™ 9 7950X 16-core, 32-thread

SYSTEM RAM: 64 GB or 128 GB DDR5

STORAGE: OS — Up to 3.84 TB M.2 (NVMe) | Data — Up to 3 x 3.84 TB M.2 (NVMe)

NETWORK INTERFACE: 10Gb Ethernet

Key benefits of the Vector One

The Vector One offers Exeton customers a powerful deep learning solution to train neural networks right from their desktops.

Sleek Power That Doesn’t Disturb

The Vector One has been meticulously designed with liquid cooling for both the CPU and GPU, ensuring optimal performance without the noise. Even under typical high workloads, it emits only 39 dB SPL, making it well suited to a quiet workspace.

Next-gen Graphics for Advanced AI/ML Tasks

Equipped with the cutting-edge NVIDIA GeForce RTX 4090 graphics card boasting 24 GB of VRAM, the Vector One stands ready to tackle demanding tasks. From fine-tuning Stable Diffusion to handling the complexities of Llama 2 7B, this machine ensures that high-intensity computations are a breeze.
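A back-of-the-envelope check helps show why 24 GB of VRAM is a comfortable fit for a model like Llama 2 7B. The sketch below estimates the memory needed for the model weights alone. The parameter count of roughly 7 billion is taken from the model name, the bytes-per-parameter values are the standard sizes of each numeric format, and the function name is hypothetical. It ignores activations, optimizer state, and framework overhead, so real fine-tuning needs more headroom than these numbers suggest.

```python
# Rough estimate of weight memory for a ~7B-parameter model.
# Assumptions (not from the article): ~7e9 parameters, weights only,
# and the card's 24 GB treated as 24 GiB.

GIB = 1024 ** 3
VRAM_GIB = 24


def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Return the memory taken by the weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


if __name__ == "__main__":
    params = 7e9
    for fmt, size in [("FP32", 4), ("FP16/BF16", 2), ("INT8", 1)]:
        needed = weight_memory_gib(params, size)
        verdict = "fits" if needed < VRAM_GIB else "does not fit"
        print(f"{fmt:>9}: {needed:5.1f} GiB of weights, {verdict} in {VRAM_GIB} GiB")
```

Under these assumptions, half-precision weights take about 13 GiB and fit on the card, while full-precision weights alone already exceed 24 GiB.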

Experience the Power of Future-Ready Architecture

At the heart of Vector One lies the state-of-the-art AMD Ryzen 9 7950X CPU, hosted on the advanced X670E chipset. This powerhouse supports both PCIe Gen 5 and DDR5 and offers up to twice the memory bandwidth of its predecessors. Dive into the future of computing with unrivaled speed and efficiency.

Delivering the Optimal Experience for AI/ML

Through rigorous research and experience, our engineers have crafted the ultimate system configuration tailored for AI/ML tasks. No more guesswork or configurations needed: the Vector One is fine-tuned to deliver unparalleled performance right out of the box. Additionally, every Vector One comes with a one-year warranty on hardware, with an option to extend to three years. For added peace of mind, choose to include dedicated technical support for Ubuntu and all ML frameworks and drivers that come pre-installed with your machine.

Pre-installed with the Software you Need

How to get started with Vector One

The Vector One is now available to purchase. Equipped with a single NVIDIA GeForce RTX 4090 graphics card boasting 24 GB of VRAM and pre-installed with Ubuntu, TensorFlow, PyTorch®, NVIDIA CUDA, and NVIDIA cuDNN, the Vector One is the optimal single-GPU desktop PC for deep learning. At less than $5,500, the desktop solution meets tighter budget requirements without sacrificing performance.

Muhammad Hussnain

Facebook | Instagram | Twitter | Linkedin | Youtube


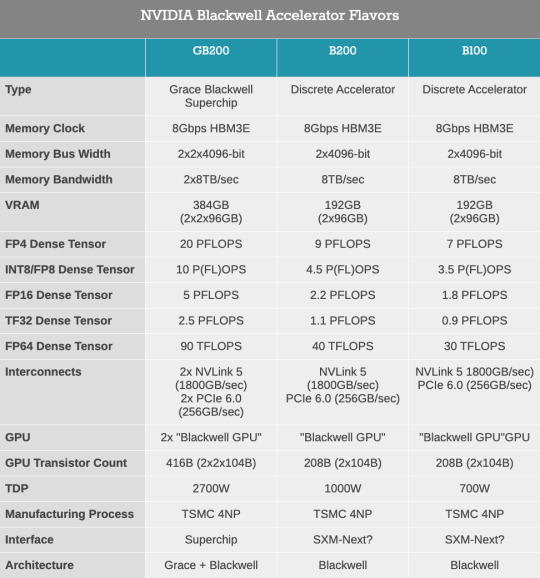

NVIDIA Steps Up Its Game: Unveiling the Blackwell Architecture and the Powerhouse B200/B100 Accelerators

NVIDIA, a titan in the world of generative AI accelerators, is not one to rest on its laurels. Despite already dominating the accelerator market, the tech giant is determined to push the envelope further. With the unveiling of its next-generation Blackwell architecture and the B200/B100 accelerators, NVIDIA is set to redefine what’s possible in AI computing yet again.

As we witnessed the return of the in-person GTC for the first time in five years, NVIDIA’s CEO, Jensen Huang, took center stage to introduce an array of new enterprise technologies. However, it was the announcement of the Blackwell architecture that stole the spotlight. This move marks a significant leap forward, building upon the success of NVIDIA’s H100/H200/GH200 series.

Named after the American statistical and mathematical pioneer Dr. David Harold Blackwell, this architecture embodies NVIDIA’s commitment to innovation. Blackwell aims to elevate the performance of NVIDIA’s datacenter and high-performance computing (HPC) accelerators by integrating more features, flexibility, and transistors. This approach is a testament to NVIDIA’s strategy of blending hardware advancements with software optimization to tackle the evolving needs of high-performance accelerators.

With an impressive 208 billion transistors across the complete accelerator, NVIDIA’s first multi-die chip represents a bold step in unified GPU performance. The Blackwell GPUs are designed to function as a single CUDA GPU, thanks to the NV-High Bandwidth Interface (NV-HBI) facilitating an unprecedented 10TB/second of bandwidth. This architectural marvel is complemented by up to 192GB of HBM3E memory, significantly enhancing both the memory capacity and bandwidth.

However, it’s not just about packing more power into the hardware. The Blackwell architecture is engineered to dramatically boost AI training and inference performance while achieving remarkable energy efficiency. This ambition is evident in NVIDIA’s projections of a 4x increase in training performance and a staggering 30x surge in inference performance at the cluster level.

Moreover, NVIDIA is pushing the boundaries of precision with its second-generation transformer engine, capable of handling computations down to FP4 precision. This advancement is crucial for optimizing inference workloads, offering a significant leap in throughput for AI models.

In summary, NVIDIA’s Blackwell architecture and the B200/B100 accelerators represent a formidable advancement in AI accelerator technology. By pushing the limits of architectural efficiency, memory capacity, and computational precision, NVIDIA is not just maintaining its leadership in the AI space but setting new benchmarks for performance and efficiency. As we await the rollout of these groundbreaking products, the tech world watches with anticipation, eager to see how NVIDIA’s latest innovations will shape the future of AI computing.

Muhammad Hussnain


Nvidia Partners with Indonesia to Launch $200M AI Center in Global AI Expansion Effort

Nvidia's gaze turns to Indonesia, marking a significant leap in the global AI expansion saga. In a world where AI is no longer just buzz but a revolution, Nvidia's initiative to plant a $200M AI powerhouse in the heart of Indonesia is a game-changer. But what's this all about, and why should we keep our eyes peeled for this monumental project? Let's dive in and get the lowdown.

The Collaboration that Spells Future: Nvidia, a titan in AI and semiconductor innovation, has joined forces with Indonesia's government and the telecom titan, Indosat Ooredoo Hutchison, laying the foundation for what promises to be an AI mecca. This isn't just any partnership; it's a strategic move aiming at a grand slam in the AI arena.

Surakarta: The Chosen Ground: Picture this: Surakarta city, already buzzing with talent and tech, now set to be the epicenter of AI excellence. With a hefty $200 million investment, this AI center isn't just about hardware. It's where technology meets human genius, promising a fusion of telecommunication infrastructure and a human resource haven, all set against the backdrop of Central Java's vibrant community.

Why Surakarta, You Ask? Surakarta, affectionately known as Solo, isn't just chosen by chance. It's a city ready to leap into the future, boasting an arsenal of human resources and cutting-edge 5G infrastructure. According to Mayor Gibran Rakabuming Raka, Solo's readiness is not just about tech; it's about a community poised to embrace and lead in the AI revolution.

A Memorandum of Understanding with a Vision: Back in January 2022, Nvidia and the Indonesian government inked a deal not just on paper but on the future. This memorandum isn't just administrative; it's a commitment to empower over 20,000 university students and lecturers with AI prowess, sculpting Indonesia's next-gen AI maestros.

Riding the Wave of AI Frenzy: Since the unveiling of OpenAI's ChatGPT, the world hasn't just watched; it has jumped on the AI bandwagon, with the AI market ballooning from $134.89 billion to a staggering $241.80 billion in a year. Nvidia's move isn't just timely; it's a strategic chess move in a global AI match.

Beyond Borders: Nvidia's Southeast Asian Symphony: Indonesia's AI center is but a piece in Nvidia's grand Southeast Asian puzzle. From Singapore's collaboration for a new data center to initiatives with the Singapore Institute of Technology, Nvidia is weaving a network of AI excellence across the region, setting the stage for a tech renaissance.

A Global Race for AI Dominance: Nvidia's strides in Indonesia reflect a broader narrative. Giants like Google and Microsoft are not just spectators but active players, investing billions in AI ecosystems worldwide. This global sprint for AI supremacy is reshaping economies, technologies, and societies.

Tethering AI to Crypto: The AI craze isn't just confined to traditional tech realms. In the cryptosphere, firms like Tether are expanding their AI horizons, scouting for elite AI talent to pioneer new frontiers.

In Conclusion: Nvidia's foray into Indonesia with a $200M AI center is more than an investment; it's a testament to AI's transformative power and Indonesia's rising stature in the global tech arena. As we watch this partnership unfold, it's clear that the future of AI is not just being written; it's being coded, one innovation at a time.

Muhammad Hussnain


Amazon takes minority share in ChatGPT rival Anthropic AI

Amazon Invests in Anthropic AI

Amazon fulfills its $4-billion investment commitment to Anthropic AI, securing a minority ownership stake in the startup and providing AWS cloud services.

Initial Investment and Recent Announcement

Amazon originally committed up to $4 billion to Anthropic, beginning with an investment of $1.25 billion last September. The recent announcement confirms an additional investment of $2.75 billion, cementing Amazon’s position as a minority owner in Anthropic.

About Anthropic AI

Anthropic AI, founded in 2021 by former members of OpenAI, has emerged as a significant player in the AI landscape. The company has developed its own AI chatbot named Claude, which competes with ChatGPT, among others. In a notable advancement, Anthropic released Claude 3 in early March, enhancing its capabilities to include image analysis.

Collaboration with Amazon Web Services (AWS)

Amazon disclosed that Anthropic has chosen AWS as its primary cloud provider for critical operations, including safety research and the development of foundational models. Anthropic will leverage AWS Trainium and Inferentia chips for building, training, and deploying future AI models.

Impact on Customers

Swami Sivasubramanian, AWS’s vice president of data and AI, expressed confidence in the collaboration, highlighting its potential to enhance customer experiences. He emphasized the significance of generative AI as a transformative technology in today’s landscape.

Amazon Q: AI Assistant for Businesses

In addition to its investments in Anthropic AI, Amazon introduced Amazon Q, an AI-powered assistant tailored for business use. Amazon Q’s capabilities include problem-solving, content creation, and more, positioning it as a versatile tool for businesses.

Competition and Legal Challenges

Amazon faces competition in the AI space, with Google also investing in Anthropic AI. Legal battles, including copyright and data infringement disputes, have arisen, reflecting the complexities of AI development. Anthropic addressed concerns by updating its commercial terms of service, emphasizing data protection and copyright compliance.

In conclusion, Amazon’s investment in Anthropic AI signifies its commitment to advancing AI technologies and enhancing customer experiences. The collaboration between Amazon and Anthropic, coupled with the introduction of Amazon Q, underscores the dynamic evolution of AI in business contexts.

Muhammad Hussnain


NVIDIA DGX H100 Systems — World’s Most Advanced Enterprise AI Infrastructure

In the bustling world of enterprise AI, the new NVIDIA DGX H100 systems are setting a gold standard, ready to tackle the hefty computational needs of today’s big hitters like language models, healthcare innovations, and climate research. Imagine a powerhouse packed with eight NVIDIA H100 GPUs, all linked together to deliver a staggering 32 petaflops of AI performance. That’s a whopping six times the muscle of its predecessors, all thanks to the new FP8 precision.

These DGX H100 units aren’t just standalone heroes; they’re the core of NVIDIA’s cutting-edge AI infrastructure — the DGX POD™ and DGX SuperPOD™ platforms. Picture the latest DGX SuperPOD architecture, now featuring an innovative NVIDIA NVLink Switch System, enabling up to 32 nodes to join forces, harnessing the power of 256 H100 GPUs.

The game-changer? This next-gen DGX SuperPOD is capable of delivering an eye-watering 1 exaflop of FP8 AI performance. That’s six times more powerful than what came before, making it a beast for running enormous LLM tasks that have trillions of parameters to consider.

Jensen Huang, the visionary founder and CEO of NVIDIA, puts it best: “AI has revolutionized both the capabilities of software and the way it’s created. Industries leading the charge with AI understand just how critical robust AI infrastructure is. Our DGX H100 systems are set to power these enterprise AI hubs, turning raw data into our most valuable asset — intelligence.”

NVIDIA Eos: A Leap Towards the Future with the Fastest AI Supercomputer

NVIDIA isn’t stopping there. They’re on track to debut the DGX SuperPOD featuring this groundbreaking AI architecture, aimed at powering NVIDIA researchers as they push the boundaries in climate science, digital biology, and AI’s next frontier.

The Eos supercomputer is anticipated to claim the title of the world’s fastest AI system, with 576 DGX H100 systems housing 4,608 H100 GPUs. With an expected 18.4 exaflops of AI performance, Eos is set to deliver 4 times the AI processing speed of the current champion, Japan’s Fugaku, while offering 275 petaflops for traditional scientific computing.

Eos isn’t just a machine; it’s a model for future AI infrastructure, inspiring both NVIDIA’s OEM and cloud partners.
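The headline figures for the DGX SuperPOD and Eos can be cross-checked with simple arithmetic. The sketch below assumes that peak numbers scale linearly with the number of systems, which is how such marketing figures are usually derived; the function name is hypothetical, and the per-system values (eight GPUs, 32 petaflops of FP8) are the ones quoted above.

```python
# Cross-check of the cluster figures quoted in the article.
# Assumption: peak FP8 performance scales linearly with system count.

GPUS_PER_DGX_H100 = 8
FP8_PETAFLOPS_PER_DGX_H100 = 32


def cluster_totals(num_systems: int) -> tuple[int, float]:
    """Return (total GPUs, total FP8 exaflops) for a cluster of DGX H100 systems."""
    gpus = num_systems * GPUS_PER_DGX_H100
    # 1 exaflop = 1000 petaflops
    exaflops = num_systems * FP8_PETAFLOPS_PER_DGX_H100 / 1000
    return gpus, exaflops


if __name__ == "__main__":
    for name, systems in [("DGX SuperPOD", 32), ("Eos", 576)]:
        gpus, exaflops = cluster_totals(systems)
        print(f"{name}: {systems} systems, {gpus} GPUs, {exaflops:.1f} exaflops FP8")
```

A 32-node SuperPOD works out to 256 GPUs and roughly 1 exaflop, and 576 systems work out to 4,608 GPUs and about 18.4 exaflops, matching the numbers in the text.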

Scaling Enterprise AI with Ease: The DGX H100 Ecosystem

The DGX H100 systems are designed to scale effortlessly as enterprises expand their AI ventures, from pilot projects to widespread implementation. Each unit boasts not just the GPUs but also two NVIDIA BlueField®-3 DPUs for advanced networking, storage, and security tasks, ensuring operations are smooth and secure.

With double the network throughput of its predecessors and 1.5x more GPU connectivity, these systems are all about efficiency and power. Plus, when combined with NVIDIA’s networking and storage solutions, they form the flexible backbone of any size AI computing project, from compact DGX PODs to sprawling DGX SuperPODs.

Empowering Success with NVIDIA DGX Foundry

To streamline the path to AI development, NVIDIA DGX Foundry is expanding globally, offering customers access to advanced computing infrastructure even before their own setups are complete. With new locations across North America, Europe, and Asia, remote access to DGX SuperPODs is now within reach for enterprises worldwide.

This initiative includes the NVIDIA Base Command™ software, simplifying the management of the AI development lifecycle on this robust infrastructure.

MLOps and Software Support: Fueling AI Growth

As operationalizing AI development becomes more important, MLOps solutions from NVIDIA’s DGX-Ready Software partners are enhancing the “NVIDIA AI Accelerated” program. This gives customers access to enterprise-grade solutions for workflow management, scheduling, and orchestration, driving AI adoption forward.

Simplifying AI Deployment with DGX-Ready Managed Services

Recognizing the growing need for accessible enterprise AI infrastructure, NVIDIA is introducing the DGX-Ready Managed Services program. This initiative, with Deloitte as a pioneering global provider, offers expert-managed NVIDIA DGX systems and software, enabling businesses worldwide to seamlessly integrate AI into their operations.

Keeping Systems at the Cutting Edge: DGX-Ready Lifecycle Management

To ensure customers always have access to the latest NVIDIA DGX technology, the new DGX-Ready Lifecycle Management program enables easy upgrades to the newest platforms, keeping AI infrastructure current and powerful.

Data Sheet

For a deep dive into the specs and capabilities, don’t forget to download the data sheet, offering you a comprehensive overview of what makes the NVIDIA DGX H100 systems the heart of the world’s most advanced enterprise AI infrastructure.

Muhammad Hussnain


The Silent Master RTX 4070 (Ti) SUPER Series with Innovative Noctua Cooling

Sycom, a prominent Japanese tech innovator, has once again showcased its dedication to Noctua’s renowned cooling fans with its latest Silent Master series graphics cards. Notably, Sycom is also known for its Silent Hydro series, offering cutting-edge liquid-cooling solutions. The hallmark of these series is their exceptional ability to keep systems cool under pressure.

In their latest endeavor, Sycom introduces the RTX 4070 Ti SUPER as the jewel in its crown, marking it as the flagship model within the series. Although the lineup includes the RTX 4060, RTX 4070, and RTX 4070 Ti models, there’s yet to be a whisper about the inclusion of RTX 4080 or RTX 4090 variants.

The SUPER versions, encompassing both the 4070 Ti SUPER and the 4070 SUPER, are noteworthy for their enhanced core counts. Specifically, the 4070 Ti SUPER variant is equipped with a substantial 16GB of VRAM. It’s crucial to underline that Sycom has opted not to factory-overclock any of the models within the Silent Master series, prioritizing stability and longevity.

A Closer Look at the Design Innovations

The Silent Master variants, based on the RTX 4070 SUPER and RTX 4070 Ti SUPER, are designed to accommodate two Noctua NF-A12x25 PWM fans, renowned for their quiet efficiency. The two models differ in dimensions: the RTX 4070 SUPER measures 27.8 cm in length, 14.5 cm in width, and 7 cm in height, making it 3.5 slots thick. In contrast, the RTX 4070 Ti SUPER extends to 31.5 cm in length and 7.7 cm in height, for a thickness of 3.8 slots. Sycom quotes noise levels of 31.4 dB at idle and a peak of 37.9 dB under load, promising an immersive and uninterrupted gaming experience.

Exclusive Availability

These Sycom GPUs are sold exclusively in Japan, and only as part of prebuilt systems. Enthusiasts outside Japan may have to wait or find alternative means to experience the Silent Master series. As an illustration, the Silent-Master NEO Z790/D5 PC, equipped with a Core i5-14600K, is listed with specific pricing for configurations that include Sycom GPUs, reflecting their premium status and the sophisticated technology they embody.

Expanding the Reach and Impact

The introduction of the Silent Master RTX 4070 (Ti) SUPER series with Noctua fans marks a significant milestone in GPU design, focusing on cooling efficiency and noise reduction without compromising performance. Sycom’s decision to prioritize advanced cooling technologies, such as those offered by Noctua, underscores a commitment to delivering high-quality, durable products that cater to the needs of the gaming community and beyond.

By ensuring these GPUs are part of well-rounded, prebuilt systems, Sycom not only guarantees optimal compatibility and performance but also offers a seamless experience for users looking to upgrade their gaming rigs or embark on new computing adventures. This strategic approach highlights Sycom’s role as a pioneer in integrating cutting-edge technologies to meet the evolving demands of the digital age, promising exciting possibilities for future innovations and collaborations.

Stay tuned for further updates as Sycom continues to push the boundaries of gaming and computing technology, solidifying its position as a leader in the tech industry.

Muhammad Hussnain


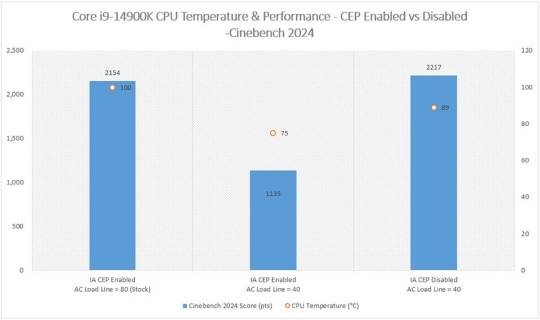

Boosting Performance: MSI’s Groundbreaking Firmware Update Unlocks Full Potential of Intel 600/700 Motherboards

In the ever-evolving landscape of computer hardware, enthusiasts and professionals alike constantly seek ways to push their systems beyond the conventional limits. MSI, a leading name in the world of motherboards and graphics cards, has recently introduced a firmware update that marks a significant leap forward in motherboard performance and thermal efficiency. This update specifically targets the Intel 600 and 700 series motherboards, offering users an unprecedented ability to disable the “Current Excursion Protection” (CEP) feature. But what exactly is CEP, and how does disabling it benefit users? Let’s dive deep into the mechanics, implications, and procedures surrounding this innovative update.

Understanding Current Excursion Protection (CEP)

Current Excursion Protection is a safety mechanism designed to protect the CPU from potential damage due to insufficient voltage. While its intentions are protective, recent findings by MSI reveal an unintended consequence: a notable reduction in performance when CEP is active. This discovery has led to a paradigm shift in how enthusiasts might approach system optimization for enhanced performance.

Performance Impacts: Before and After Disabling CEP

Compatibility: Which Processors and Motherboards Are Affected?

The firmware update’s ability to disable CEP is not uniformly applicable across all Intel processors. It supports all 14th Gen Core K-series and non-K CPUs, with limitations on the 13th and 12th Gen Core processors. Specifically, only K-series CPUs from these generations are eligible, excluding non-K (65W SKUs) models. This delineation ensures that users can identify whether their system can benefit from the update.
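The compatibility rule above can be written down as a small lookup. This is only a sketch of the rule as the article states it: the function name and the integer encoding of the Core generation are hypothetical, and returning `False` for generations outside 12 to 14 is an assumption, since the article does not cover them.

```python
# Sketch of the CEP compatibility rule described in the article.
# The function name and argument encoding are illustrative, not an MSI API.

def can_disable_cep(core_generation: int, is_k_series: bool) -> bool:
    """Return True if the article says CEP can be disabled for this CPU."""
    if core_generation == 14:
        # 14th Gen: both K-series and non-K CPUs are supported.
        return True
    if core_generation in (12, 13):
        # 12th and 13th Gen: only K-series CPUs are eligible.
        return is_k_series
    # Assumption: generations the article does not mention are treated as unsupported.
    return False


if __name__ == "__main__":
    for gen, k in [(14, False), (13, True), (13, False), (12, True)]:
        label = "K-series" if k else "non-K"
        print(f"{gen}th Gen {label}: {can_disable_cep(gen, k)}")
```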

The Technical Rationale: Insights from MSI’s Findings

Step-by-Step Guide: How to Disable CEP on Your MSI Motherboard

The process of disabling CEP is straightforward, thanks to MSI’s user-friendly BIOS interface. Users can navigate through the BIOS settings to locate the newly added option for CEP disabling, ensuring they can easily take advantage of the performance enhancements this update provides.

The Role of CPU AC Load Line in Performance Metrics

Adjusting the CPU AC Load Line plays a crucial role in this context, as it directly influences CPU voltage and, consequently, performance and thermal output. MSI’s update highlights the intricate balance between voltage, performance, and temperature, showcasing the profound impact of motherboard settings on overall system behavior.

Benefits of Disabling CEP: A Closer Look at Temperature and Performance Gains

The benefits of disabling CEP extend beyond mere performance improvements. The substantial reduction in CPU temperature contributes to a more stable and efficient system, potentially extending the lifespan of the processor and other components. This dual advantage of performance enhancement and thermal efficiency underscores the value of MSI’s firmware update.

Potential Risks: Considerations Before Altering CPU Voltage

While the option to disable CEP presents an attractive opportunity for performance gains, it’s essential to approach voltage adjustments with caution. MSI advises that altering CPU voltage should be done judiciously, as it can lead to system instability if not managed carefully. Users should weigh the benefits against the potential risks to ensure a balanced and safe optimization process.

Future Updates and How to Stay Informed

MSI’s commitment to innovation and user satisfaction suggests that this firmware update is just the beginning of a series of enhancements aimed at empowering users. By staying informed through MSI’s official channels, enthusiasts can anticipate future updates that continue to push the boundaries of motherboard performance and customization.

FAQs

What is Current Excursion Protection?

Current Excursion Protection is a feature designed to prevent CPU damage from insufficient voltage by automatically adjusting performance levels.

How does disabling CEP affect performance?

Disabling CEP can lead to a significant increase in performance and a decrease in CPU temperature, as it prevents the automatic reduction of performance levels due to voltage adjustments.

Which motherboards and CPUs are compatible with the new firmware update?

The update applies to Intel 600 and 700 series motherboards and is compatible with all 14th Gen Core K-series and non-K CPUs. However, only K-series CPUs from the 13th and 12th Gen support disabling CEP.

What are the risks of adjusting CPU voltage?

Improperly adjusting CPU voltage can lead to system instability. It’s recommended to proceed with caution and consider the potential risks before making adjustments.

How can I disable CEP on my MSI motherboard?

Disabling CEP can be done through the BIOS settings on your MSI motherboard, where you’ll find the option to turn off this feature.

Can disabling CEP lead to system instability?

While disabling CEP itself is not likely to cause instability, adjusting the CPU voltage to demonstrate its effects, as done in MSI’s testing, can potentially lead to instability if not done carefully.

Conclusion

MSI’s firmware update for Intel 600 and 700 series motherboards represents a significant step forward in the pursuit of optimal system performance and thermal efficiency. By allowing users to disable Current Excursion Protection, MSI not only enhances the user experience but also demonstrates a deep understanding of the delicate balance between protection, performance, and temperature. As enthusiasts explore the benefits of this update, the potential for future advancements in motherboard technology seems boundless, promising even greater control and customization for users seeking to maximize their system’s capabilities.

Muhammad Hussnain


LG Confirms Pricing of 32-Inch OLED Monitor with 4K 240Hz/1080p 480Hz Dual-Mode at $1,399

LG has officially listed its premium gaming OLED monitor, the UltraGear 32GS95UE, featuring the innovative Dual-Mode technology. This feature allows users to seamlessly switch between two resolutions and two refresh rates, catering to both competitive gaming and high-resolution content editing needs.

Diverging from the prevailing trend of 32-inch 4K QD-OLED monitors from other manufacturers, LG uses a different panel type known as WOLED, developed in-house. This panel supports a 3840x2160 resolution at 240 Hz and a 1920x1080 resolution at an impressive 480 Hz. The display is confirmed to offer VESA DisplayHDR True Black 400 and to cover up to 98.5% of the DCI-P3 color gamut. For variable refresh support, the monitor is compatible with NVIDIA G-Sync and AMD FreeSync Premium.

In addition to its advanced features, the 32GS95UE is equipped with Pixel Sound technology, transforming the panel into a large-screen speaker. Sound is emitted from behind the OLED screen, aiming to provide an enhanced audio experience compared to traditional speakers typically located underneath the screen.

Despite these features, the monitor lacks DisplayPort 2.1 support; it instead offers DisplayPort 1.4 with Display Stream Compression (DSC). It does, however, include an HDMI 2.1 port.
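To see why DSC matters on a DisplayPort 1.4 link, it helps to compare the raw pixel data rate of each mode with what the link can carry. The sketch below is a simplified estimate: it assumes 10-bit RGB color (30 bits per pixel), counts only active pixels and ignores blanking intervals, and uses the DisplayPort 1.4 HBR3 link rate of four lanes at 8.1 Gbit/s with 8b/10b encoding. The function name is hypothetical.

```python
# Simplified bandwidth estimate for the monitor's two modes.
# Assumptions (not from the article): 30 bits per pixel, no blanking overhead.

# DisplayPort 1.4 (HBR3): 4 lanes x 8.1 Gbit/s, 8b/10b encoding leaves 80% for data.
DP14_PAYLOAD_GBPS = 4 * 8.1 * 8 / 10  # about 25.92 Gbit/s usable


def uncompressed_gbps(width: int, height: int, refresh_hz: int,
                      bits_per_pixel: int = 30) -> float:
    """Return the uncompressed active-pixel data rate in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    modes = {"4K 240 Hz": (3840, 2160, 240), "1080p 480 Hz": (1920, 1080, 480)}
    for name, (w, h, hz) in modes.items():
        rate = uncompressed_gbps(w, h, hz)
        needs_dsc = rate > DP14_PAYLOAD_GBPS
        print(f"{name}: {rate:.2f} Gbit/s uncompressed, "
              f"link carries {DP14_PAYLOAD_GBPS:.2f} Gbit/s, DSC needed: {needs_dsc}")
```

Under these assumptions, the 4K 240 Hz mode needs more than twice what the link can carry uncompressed, which is consistent with the monitor relying on DSC over DisplayPort 1.4.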

LG has set the official price for the monitor at $1,399, placing it on the higher end of the 32-inch 4K OLED spectrum. The expected shipping date is next month, possibly around April 15. While the monitor is currently available for pre-order, early reviews on the LG site, though limited in number, have expressed concerns, particularly regarding the matte finish on the panel.


MANLI and EMTEK Unveil White GeForce RTX 4080 SUPER Cards

Manli and Emtek, official NVIDIA AIC vendors based in Hong Kong and South Korea, respectively, may not be globally renowned brands, but their designs have gained visibility, even reaching European markets. Manli GPUs are notable for their military-inspired design: the RTX 40 series uses a distinctive black and green color scheme, and some cards feature a built-in spirit level.

In a recent development, Manli has refreshed its RTX 40 lineup with the SUPER variants introduced by NVIDIA in January. Surprisingly, the company has departed from its iconic green design, embracing an entirely new aesthetic characterized by a full white cooler design. Notably, Manli has retained the unique spirit levels for their RTX 4080 SUPER model.

The RTX 4070 Ti SUPER and RTX 4070 SUPER, adopting a dual-slot design, offer a compact form factor, while the RTX 4080 SUPER expands further to a third slot. It’s worth mentioning that none of these three models come factory-overclocked.

On another front, Emtek has revealed a refresh of their MIRACLE series, featuring an all-white design in line with current market trends. Similar to Manli, Emtek has chosen not to include factory overclocking on any of these cards. Gamers can choose from the 4070, 4070 Ti, and 4080 models, all SUPER variants, available in either a 2.5-slot or 3-slot configuration based on the specific SKU.

The company emphasizes that all cards in the series support a 0dB mode, ensuring that the fans remain idle unless a certain GPU load threshold is reached.

As of now, the Manli RTX 40 SUPER cards are not yet available for purchase, while EMTEK’s designs have already surfaced, with a retailer in South Korea listing them for potential buyers. We’ll continue to monitor for any updates on these cards.

Muhammad Hussnain


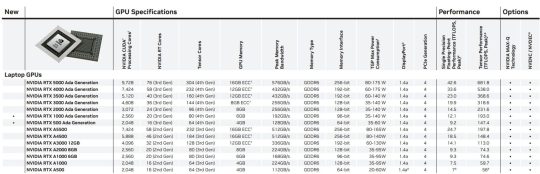

NVIDIA Unveils RTX 1000 and RTX 500 ADA GPUs for Laptops at MWC 2024

During Mobile World Congress (MWC) 2024, NVIDIA introduced two additions to its RTX ADA series of professional laptop graphics. Both are based on the AD107 GPU and offer up to 2560 CUDA cores. The RTX 1000 ADA matches the core count of the RTX A2000 at 2560 cores, while the RTX 500 ADA has 2048 cores.

This launch extends NVIDIA’s lineup of RTX ADA laptop GPUs for workstations to a total of seven SKUs, built on the existing AD103, AD104, and AD106 GPUs and the newly added AD107. Memory configurations range from 4GB to 16GB.

The most notable change is in the RTX 1000 ADA, which now features 6GB of VRAM over a 96-bit bus. This departs from the memory bus width of the Ampere generation while expanding overall memory capacity. The Ampere-based RTX A1000 and A500 initially featured 2048 cores and a 4GB configuration, but NVIDIA later introduced a 6GB version of the A1000, which makes the RTX 1000 ADA a refresh of that model: a new architecture with nearly identical configurations.

In tandem with these advancements, NVIDIA is elevating the Total Graphics Power (TGP) for individual SKUs. The RTX 500’s power range is expanding from 20–60W to 35–60W, while the RTX 1000 series sees an increase from 35–95W to 35–140W. Notably, the RTX 1000 series supports Dynamic Boost, providing an additional 15W, with this additional power already factored into the specified power ranges. This enhancement contributes to the GPUs’ overall efficiency and performance optimization.
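The power figures above can be laid out as a small table in code. This is only an illustrative sketch: the dictionary layout and the function name are hypothetical, and it assumes the 15W Dynamic Boost allowance counts toward the top of the RTX 1000 range, which is how the statement that it is "already factored into" the range is read here.

```python
# Total Graphics Power (TGP) ranges in watts as (minimum, maximum), from the post.
TGP_WATTS = {
    "RTX 500": {"previous": (20, 60), "ada": (35, 60)},
    "RTX 1000": {"previous": (35, 95), "ada": (35, 140)},
}

# Dynamic Boost allowance for the RTX 1000 series, from the post.
DYNAMIC_BOOST_WATTS = 15


def max_tgp_without_boost(max_tgp: int, boost: int = DYNAMIC_BOOST_WATTS) -> int:
    """Upper TGP limit once the Dynamic Boost allowance is subtracted.

    Assumes the boost is included in the quoted maximum.
    """
    return max_tgp - boost


rtx1000_max = TGP_WATTS["RTX 1000"]["ada"][1]
print(f"RTX 1000 ADA maximum without Dynamic Boost: {max_tgp_without_boost(rtx1000_max)}W")
```

Under that assumption, the sustained ceiling for the RTX 1000 ADA would be 125W, with the remaining 15W available only when Dynamic Boost is active.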

NVIDIA’s latest additions to the RTX ADA series represent a significant leap forward in laptop GPU technology, offering developers, creators, and professionals a powerful and versatile solution for their graphics-intensive tasks. The meticulous attention to core counts, memory configurations, and power efficiency underscores NVIDIA’s commitment to delivering cutting-edge graphics solutions for the evolving demands of modern computing.

Muhammad Hussnain


NVIDIA Unveils GTC 2024 Keynote Teaser: A Deep Dive into the World of AI

NVIDIA is building anticipation for its upcoming keynote at the GPU Technology Conference (GTC), scheduled for March 18, positioning the event as the “Number 1 AI Conference for Developers.” After three years of virtual conferences, GTC 2024 is set to return to an in-person format. While GTC is a series of events worldwide, the spring keynote in San Jose holds particular significance.

Traditionally focused on data centers and workstations, GTC now places its emphasis entirely on AI. NVIDIA is expected to provide updates on its next-generation general-purpose GPU (GPGPU) accelerators, particularly the Blackwell architecture, whose B100 and GB200 products appear in recent roadmaps for the 2024–2025 period. The previous year concentrated on Hopper updates, introducing the more powerful H200 and GH200 accelerators.

The official preview video accentuates Generative AI applications, showcasing the WPP/NVIDIA engine for digital advertising, the newly introduced Chat with RTX, an industrial metaverse powered by SyncTwin, AI art from Refik Anadol Studio, and OpenAI generating code for Blender animations.

Historically, GTC has not extensively addressed consumer graphics, and this trend is expected to continue at the upcoming event. While the focus remains on developers, it’s worth noting that there have been exceptions, such as GTC 2014, where NVIDIA featured a high-end GPU like the TITAN Z series.

Source: YouTube


Exeton: NVIDIA A16 Enterprise 64GB 250W — Revolutionizing Ray Tracing Power and Performance

The landscape of artificial intelligence (AI), high-performance computing (HPC), and graphics is swiftly evolving, necessitating more potent and efficient hardware solutions. NVIDIA® Accelerators for HPE lead this technological revolution, delivering unprecedented capabilities to address some of the most demanding scientific, industrial, and business challenges. Among these cutting-edge solutions is the NVIDIA A16 Enterprise 64GB 250W GPU, a powerhouse designed to redefine performance and efficiency standards across various computing environments.

The World’s Most Powerful Ray Tracing GPU

The NVIDIA A16 transcends being merely a GPU; it serves as a gateway to the future of computing. Engineered to effortlessly handle demanding AI training and inference, HPC, and graphics tasks, this GPU is an integral component of Hewlett Packard Enterprise servers tailored for the era of elastic computing. These servers provide unmatched acceleration at every scale, empowering users to visualize complex content, extract insights from massive datasets, and reshape the future of cities and storytelling.

Performance Features of the NVIDIA A16

The NVIDIA A16 64GB Gen4 PCIe Passive GPU presents an array of features that distinguish it in the realm of virtual desktop infrastructure (VDI) and beyond:

1- Designed For Accelerated VDI

Optimized for user density, this GPU, in conjunction with NVIDIA vPC software, enables graphics-rich virtual PCs accessible from anywhere, delivering a seamless user experience.

2- Affordable Virtual Workstations

With a substantial frame buffer per user, the NVIDIA A16 facilitates entry-level virtual workstations, ideal for running workloads like computer-aided design (CAD), powered by NVIDIA RTX vWS software.

3- Flexibility for Diverse User Types

The unique quad-GPU board design allows for mixed user profile sizes and types on a single board, catering to both virtual PCs and workstations.

4- Superior User Experience

Compared to CPU-only VDI, the NVIDIA A16 significantly boosts frame rates and reduces end-user latency, resulting in more responsive applications and a user experience akin to a native PC or workstation.

5- Double The User Density

Tailored for graphics-rich VDI, the NVIDIA A16 supports up to 64 concurrent users per board in a dual-slot form factor, effectively doubling user density.

6- High-Resolution Display Support

Supporting multiple high-resolution monitors, the GPU enables maximum productivity and photorealistic quality in a VDI environment.

7- Enhanced Encoder Throughput

With more than double the encoder throughput of the previous-generation M10, the NVIDIA A16 delivers high-performance transcoding and the multi-user performance required for multi-stream video and multimedia.

8- Highest Quality Video

Supporting the latest codecs, including H.265 encode/decode, VP9, and AV1 decode, the NVIDIA A16 ensures the highest-quality video experiences.
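The user-density figure in item 5 can be checked with simple arithmetic. The sketch below is only an illustration: it assumes the 64GB frame buffer is split evenly across the four GPUs on the board and that every user receives a fixed-size slice. The function name and the profile sizes passed to it are hypothetical examples, not a list of supported vGPU profiles.

```python
BOARD_MEMORY_GB = 64  # total frame buffer on the board (from the post)
GPUS_PER_BOARD = 4    # quad-GPU board design (from the post)
MAX_USERS = 64        # maximum concurrent users per board (from the post)


def users_per_board(profile_gb: float) -> int:
    """Estimate how many users fit on one board for a given per-user frame buffer.

    Assumes memory is divided evenly between the GPUs and that a user's
    slice cannot span two GPUs. The result is capped at MAX_USERS.
    """
    memory_per_gpu = BOARD_MEMORY_GB / GPUS_PER_BOARD
    users_per_gpu = int(memory_per_gpu // profile_gb)
    return min(users_per_gpu * GPUS_PER_BOARD, MAX_USERS)


for profile in (1, 2, 4):
    print(f"{profile}GB per user: {users_per_board(profile)} users")
```

With a 1GB slice per user, the board reaches the quoted 64 concurrent users; larger slices, such as those an entry-level virtual workstation might need, reduce the count proportionally.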

NVIDIA Ampere Architecture

The GPU features NVIDIA Ampere architecture-based CUDA cores, second-generation RT Cores, and third-generation Tensor Cores. This architecture provides the flexibility to host virtual workstations powered by NVIDIA RTX vWS software or leverage unused VDI resources for compute workloads with NVIDIA AI Enterprise software.

The NVIDIA A16 Enterprise 64GB 250W GPU underscores NVIDIA’s commitment to advancing technology’s frontiers. Its capabilities make it an ideal solution for organizations aiming to leverage the power of AI, HPC, and advanced graphics to drive innovation and overcome complex challenges. With this GPU, NVIDIA continues to redefine the possibilities in computing, paving the way for a future where virtual experiences are indistinguishable from reality.

Muhammad Hussnain


ASUS Extends OLED Burn-In Warranty to 3 Years for ROG PG32UCDM Monitor in Response to MSI

ASUS has responded to MSI's announcement of a 3-year burn-in warranty on its latest OLED gaming monitors by extending the warranty for the ROG PG32UCDM to 3 years as well. The extended warranty applies to only one of the 5 OLED models in ASUS's current lineup: the ROG PG32UCDM, an enthusiast-class monitor and one of the company's most expensive to date. The monitor has not been released yet, but pre-order pages already list a price of €1,500.

The ROG PG32UCDM is a 32-inch 4K QD-OLED monitor with a 240 Hz refresh rate, DisplayHDR 400 True Black certification, and 99% DCI-P3 color coverage. ASUS is also developing a variant named PG32UCDP, featuring a dual-mode refresh rate (4K at 240 Hz or 1080p at 480 Hz). However, since this variant lacks an official web page, warranty details are not currently available.

While the extension of the warranty to 3 years for a high-end model like the ROG PG32UCDM is a positive step for OLED monitor manufacturers, it remains to be seen whether this new warranty will be expanded to cover other models in the future. ASUS has yet to provide the complete details of the extended warranty.

Muhammad Hussnain


NVIDIA RTX 4080 SUPER tested: up to 2.4% faster in 3DMark, similar gaming performance to RTX 4080

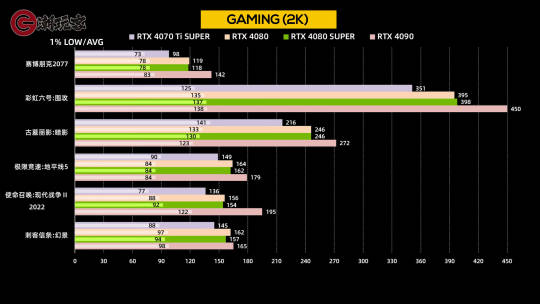

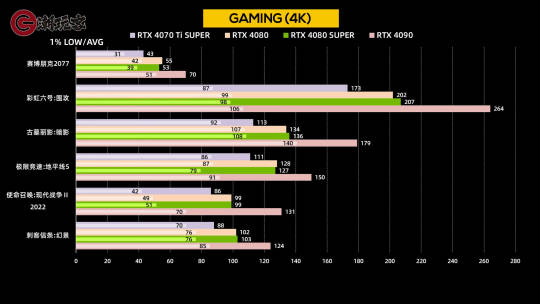

Chaowanke, a China-based reviewer, independently purchased and tested the NVIDIA RTX 4080 SUPER. Despite the delayed release of reviews due to a mishap with Founders Edition sampling causing some cards to arrive late, chaowanke shared a comprehensive review based on the AX GAMING X3W model, a white custom GPU designed for the Chinese market by Inno3D’s sub-brand, AX GAMING.

The review, conducted on a Core i7-14700K system with 32GB of DDR5-6000 memory, compared the RTX 4080 SUPER with its counterparts in the RTX 40 lineup, including the RTX 4090, RTX 4080, and RTX 4070 Ti SUPER. Notably, the RTX 4080 SUPER, based on the Inno3D X3 design, ran at a 60 MHz higher clock than most custom designs, narrowing the performance gap between the models.

Chaowanke confirmed a marginal improvement of up to 2.4% in synthetic tests, most evident in the Speed Way test, while gaming performance was similar to, and occasionally lower than, that of the RTX 4080. According to the review, the higher-clocked Inno3D design affected the comparison, with the RTX 4080 SUPER and RTX 4080 trading places in gaming benchmarks at both 2K and 4K resolutions.

Muhammad Hussnain


GeForce RTX 4080 SUPER reviews rescheduled to January 31st

NVIDIA has chosen to postpone the reviews for the RTX 4080 SUPER by one day, unrelated to any driver issues. The delay stems from challenges with the shipment of RTX 4080 SUPER Founders Edition cards to reviewers, who did not receive their samples on time, with some only obtaining them over the weekend, and many just today.

It's important to note that this month has been particularly demanding for the tech media, commencing with their participation at CES 2024, followed by immediate testing of the RTX 4070 SUPER. NVIDIA has imposed multiple embargoes throughout the month, unveiling each SUPER model on a weekly basis.

The RTX 4080 SUPER is the last SKU to launch this month. There were other embargoes as well, including the AMD Radeon RX 7600 XT and the recently lifted Ryzen 8000G series, along with embargoes for the Intel 14th Gen Core non-K series, although that launch seems to have had limited coverage.

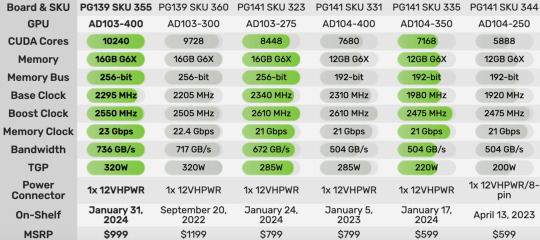

The timeline of events includes key dates:

January 8 (9 AM PT): RTX 4070/4070Ti/4080 SUPER announcement

January 8 (2 PM PT): RTX 4070 SUPER unboxing

January 16 (6 AM PT): RTX 4070 SUPER MSRP Reviews

January 17 (6 AM PT): RTX 4070 SUPER non-MSRP Reviews & Product Launch (On-shelf)

January 23 (6 AM PT): RTX 4070 Ti SUPER MSRP Reviews

January 24 (6 AM PT): RTX 4070 Ti SUPER non-MSRP Reviews & Product Launch (On-shelf)

January 30 (6 AM PT): RTX 4080 SUPER MSRP Reviews (postponed to January 31)

January 31 (6 AM PT): RTX 4080 SUPER MSRP and non-MSRP Reviews & Product Launch (On-shelf)

In essence, this means that there will be no coverage tomorrow for the so-called MSRP cards, priced at NVIDIA's recommended retail price of $999. Instead, all RTX 4080 SUPER reviews will be published on the same day, with this embargo extending to the product launch as well.

Muhammad Hussnain


NVIDIA RTX 4080 SUPER launching this week

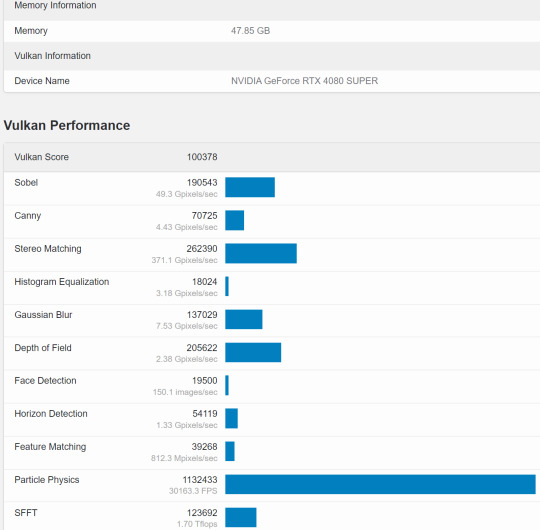

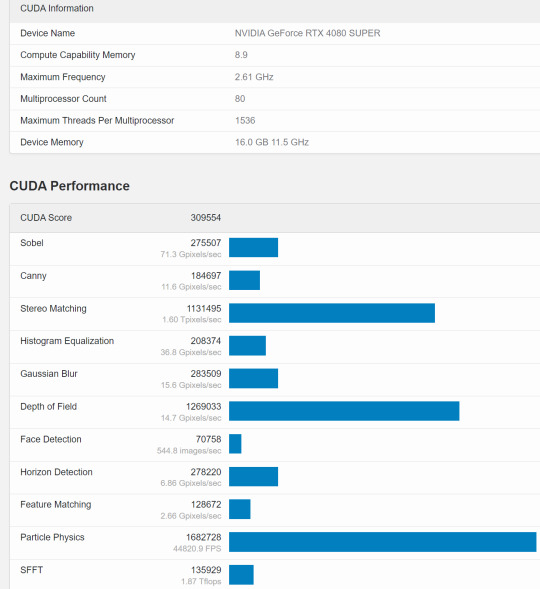

Initial tests have been conducted on the card, but their significance for gamers remains limited. A reviewer using a high-end Z790 platform with an Intel Core i9-14900K has run the RTX 4080 SUPER through three Geekbench compute tests, covering Vulkan, OpenCL, and CUDA.

The results are as follows:

- Vulkan: 100378

- OpenCL: 264806

- CUDA: 309554

RTX 4080 SUPER, Source: Geekbench

It’s worth noting that at least one benchmark (Vulkan) appears to report incorrect results: scores for the RTX 4080 non-SUPER are twice as high. Because of this discrepancy, no comparisons are made here, as they would not be meaningful. Newer Geekbench 6.0 tests are needed for a more accurate evaluation.

Reviewers have received the drivers, and testing of the RTX 4080 SUPER is already underway. More meaningful data, including 3DMark tests and gaming benchmarks, is expected from reviewers next week.

While the RTX 4080 SUPER is not anticipated to be a significant upgrade over the RTX 4080, boasting only a 5% core increase and maintaining the same TDP, its standout feature is the new price tag of $999, a $200 reduction from the original SKU’s price. As the final addition to the SUPER series, the RTX 4080 SUPER aims to offer a more accessible option for enthusiasts.
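The two figures in this comparison are easy to reproduce. The following is a rough sketch rather than anything from the original report: the CUDA core counts come from NVIDIA's public spec sheets and are not stated in the post, the $1,199 price is derived from the stated $200 reduction, and the helper name is hypothetical.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# CUDA core counts from NVIDIA's public specifications (not stated in the post).
CORES_RTX_4080 = 9728
CORES_RTX_4080_SUPER = 10240

# $999 is from the post; $1,199 follows from the stated $200 reduction.
PRICE_RTX_4080 = 1199
PRICE_RTX_4080_SUPER = 999

core_gain = percent_change(CORES_RTX_4080, CORES_RTX_4080_SUPER)
price_cut = percent_change(PRICE_RTX_4080, PRICE_RTX_4080_SUPER)

print(f"Core count change: {core_gain:+.1f}%")
print(f"Price change: {price_cut:+.1f}%")
```

On these numbers the core count rises by roughly 5% while the price falls by about 17%, which is why the price, not the hardware, is the headline change.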

Source: Geekbench (Vulkan), (CUDA), (OpenCL) via @BenchLeaks

Muhammad Hussnain
