
10 rules that every time traveler needs to know

1. Traveling into the future is easy.

We travel into the future all the time, at a fixed rate: one second per second. Stick around, you'll be in the future soon enough. You can even get there faster than usual, by decreasing the amount of time you experience elapsing with respect to the rest of the world -- either by low-tech ways like freezing yourself, or by taking advantage of the laws of special relativity and zipping around near the speed of light. (Remember we're talking about what is possible according to the laws of physics here, not what is plausible or technologically feasible.) It's coming back that's hard.
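The special-relativity shortcut mentioned above can be made concrete with a minimal sketch. It assumes a traveler moving at constant speed (ignoring the acceleration phases), and the function names `lorentz_factor` and `traveler_time` are illustrative only, not taken from the original post.

```python
import math

def lorentz_factor(speed_fraction: float) -> float:
    """Return gamma for a speed given as a fraction of the speed of light."""
    if not 0.0 <= speed_fraction < 1.0:
        raise ValueError("speed must be in [0, 1) as a fraction of c")
    return 1.0 / math.sqrt(1.0 - speed_fraction ** 2)

def traveler_time(home_years: float, speed_fraction: float) -> float:
    """Time experienced by the traveler while home_years elapse at home."""
    return home_years / lorentz_factor(speed_fraction)

# Ten years pass at home; the faster the trip, the less the traveler ages.
for beta in (0.6, 0.9, 0.99):
    print(beta, round(traveler_time(10.0, beta), 2))
```

At 60 percent of the speed of light the factor is 1.25, so ten years at home correspond to eight years for the traveler; the gap widens sharply as the speed approaches that of light.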

2. Traveling into the past is hard -- but maybe not impossible.

If Isaac Newton's absolute space and time had been the correct picture of nature, we could simply say that traveling backwards in time was impossible, and that would be the end of it. But in Einstein's curved-spacetime universe, things are more flexible. From your own personal, subjective point of view, you always move forward in time -- more technically, you move on a timelike curve through spacetime. But the large-scale curvature of spacetime caused by gravity could, conceivably, cause timelike curves to loop back on themselves -- that is to say, become closed timelike curves -- such that anyone traveling on such a path would meet themselves in the past. That's what respectable, Einstein-approved time travel would really be like. Of course, there's still the little difficulty of warping spacetime so severely that you actually create closed timelike curves; nobody knows a foolproof way of doing that, or even whether it's possible, although ideas involving wormholes and cosmic strings and spinning universes have been bandied about.

3. Traveling through time is like traveling through space.

I'm only going to say this once: there would be no flashing lights. At least, there would only be flashing lights if you brought along some strobes, and decided to start them flashing as you traveled along your closed timelike curve. Likewise, there is no disappearance in a puff of smoke and re-appearing at some other time. Traveling through time is just like traveling through space: you move along a certain path, which (we are presuming) the universe has helpfully arranged so that your travels bring you to an earlier moment in time. But a time machine wouldn't look like a booth with spinning wheels that dematerializes now and rematerializes some other time; it would look like a rocket ship. Or possibly a DeLorean, in the unlikely event that your closed timelike curve started right here on Earth and never left the road. Think of it this way: imagine there were a race of super-intelligent trees, who could communicate with each other using abstract concepts but didn't have the ability to walk. They might fantasize about moving through space, and in their fantasies "space travel" would resemble teleportation, with the adventurous tree disappearing in a puff of smoke and reappearing across the forest. But we know better; real travel from one point to another through space is a continuous process. Time travel would be like that.

4. Things that travel together, age together.

If you travel through time, and you bring along with you some clocks or other objects, all those things experience time in exactly the same way that you do. In particular, both you and the clocks march resolutely forward in time, from your own perspective. You don't see clocks spinning wildly backwards, nor do you yourself "age" backwards, and you certainly don't end up wearing the clothes you favored back in high school. Your personal experience of time is governed by clocks in your brain and body -- the predictable beating of rhythmic pulses of chemical and biological processes. Whatever flow of time is being experienced by those processes -- and thus by your conscious perception -- is also being experienced by whatever accompanies you on your journey.

5. Black holes are not time machines.

Sadly, if you fell into a black hole, it would not spit you out at some other time. It wouldn't spit you out at all -- it would gobble you up and grow slightly more corpulent in the process. If the black hole were big enough, you might not even notice when you crossed the point of no return defined by the event horizon. But once you got close to the center of the hole, tidal forces would tug at you -- gently at first, but eventually tearing you apart. The technical term is spaghettification. Not a recommended strategy for would-be time adventurers. Wormholes -- tunnels through spacetime, which in principle can connect widely-separated events -- are a more promising alternative. Wormholes are to black holes as elevators are to deep wells filled with snakes and poisoned spikes. The problem is, unlike black holes, we don't know whether wormholes exist, or even whether they can exist, or how to make them, or how to preserve them once they are made. Wormholes want to collapse and disappear, and keeping them open requires a form of negative energy. Nobody knows how to make negative energy, although physicists occasionally slap the name "exotic matter" on the concept and pretend it might exist.

6. If something happened, it happened.

What people want to do with time machines is to go into the past and change it. You can't. The past already happened, and it can't un-happen. You might wonder what's to stop you from jumping in your time machine, finding your high-school self, and convincing them that they really shouldn't go to the senior prom after all, thereby saving yourself all sorts of humiliation. But if you really did go to the prom, then that can't happen. The simple way out, of course, is to suppose that travel into the past is simply impossible. But even if it's not, you can't change what already happened; every event in spacetime is characterized by certain things occurring, and those things are fixed once and for all once they happen. If you did manage to go back in time to your years in high school, something would prevent you from dissuading your younger self from doing anything other than what they actually did. Even if you tried really hard.

7. There is no meta-time.

The least realistic time-travel movie of all time might be Back to the Future. When Marty McFly changes the past (violating Rule 6), the future "instantaneously" changes. What the hell is that supposed to mean? Time measures the temporal interval between different events in spacetime, and can be quantified by clocks. There is no set of clocks outside the universe, with respect to which you can go muck around in the past and have effects propagate into the future "at the same time." Likewise, your brain is not going to change to remember things differently, nor will any other record-keeping device such as diaries or photographs or embarrassing sex tapes. Sorry about that.

8. You can't travel back to before the time machine was built.

Right now, at the particular place you are sitting, at the time when you are sitting there, one of two things is true: either there is a closed timelike curve passing through that point in spacetime, or there is not. And that situation will never change -- no matter what clever engineers may do in the future, if they create closed timelike curves they cannot pass through events in spacetime through which closed timelike curves did not pass (corollary of Rule 6). Or in plain English: if you build a time machine where there wasn't one before, it may be possible for future travelers to come back to that time, but nothing can help you go back to times before the machine was built.

9. Unless you go to a parallel universe.

Parallel universes -- the kind we contemplate in the many-worlds interpretation of quantum mechanics (MWI) -- provide potential loopholes for some of the above rules. According to the MWI, there exist different "branches" of the wave function of the universe, distinguished by different observed outcomes for the measurement of quantum events. In the celebrated Schrödinger's cat thought experiment, there is a "universe" where the cat is alive, and one where it is dead. Some imaginative (but respectable) physicists, especially David Deutsch, have speculated that we could combine this idea with the possibility of closed timelike curves to contemplate travel into the past of a different universe. If time travel is unlikely, this idea is (unlikely)^2, but it's not inherently paradoxical. If you could travel to the past in a different branch of the wave function, then we are allowed to contemplate changing that past in a self-consistent way, because it's no longer really "your" past. So almost all cinematic invocations of time travel -- where they are constantly mucking about, changing the past in crucial ways -- would have to appeal to something along these lines to make any sense. But even if you can change what you thought was the past, all of the rules of continuity and sensibility still apply -- no flashing lights, no disappearing, no sudden changes in the future, no re-writing of your memories, etc.

10. And even then, your old universe is still there.

Remember Rule 0: no paradoxes. If you have reliable records of having made some unwise decisions regarding your social life in high school, then those decisions were made, and can't be un-made. Even if you go into a different branch of the wave function, where you bestow some wisdom-of-experience on your younger self, you would only be changing the history of that universe. There is still the universe you left behind, with all of your bad decisions still intact. That's life in the multiverse for you. It remains for future scholars to write Ph.D. theses along the lines of Utility Functions and Moral Dilemmas in an Ensemble of Multiple Interacting Universes. But it's just a matter of time.


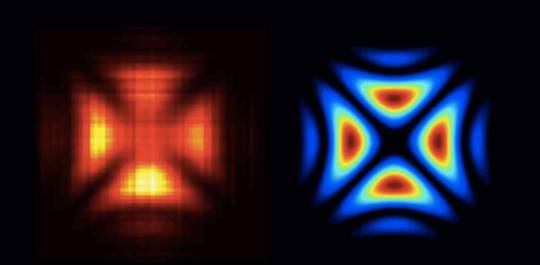

What is white reflected light, such as from a white sheet of paper or a white wall?

The real answer about reflection is not the one they teach in school. A flat specular mirror reflects all colours of light, and a roughened up metal such as sand-blasted aluminium reflects all colours of light and the reflection is diffuse, so why doesn’t it look white? So, reflecting all colours is not enough to make “white” and a rough surface is not enough to make “white” so what actually is white?

Most people completely misunderstand white. They have been taught that there are specular reflections (from polished metals such as a mirror or from polished dielectrics such as glass) and diffuse reflections from other objects with rough surfaces such as white paper.

In actual white objects, light is actually refracting (and reflecting) through extremely large numbers of small prisms or droplets of dielectric materials. Good examples are (1) a cloud of water droplets (2) a bowl of sugar crystals (3) a layer of snow. [Look carefully and all that is there in a “white” material is a lot of clear transparent refracting surfaces.] Most of the light is refracted around randomly and eventually some of it after several refractions and reflections comes back in your direction. The same is true in a glass of milk, in white paint, and in white paper.

The picture is a more accurate depiction of white diffuse “surfaces” but the refraction part is not shown, only secondary reflections from within the volume of the material. Note that the “volume” can be only a few thousandths of an inch thick as in the case of paint. I tried drawing all the refractions and reflections but the diagram got too crowded with light “rays” to understand.

This is why white paper looks fundamentally different from the rough surface of aluminium foil’s back side. “White” is not the result of light reflecting from a rough surface. That is a myth that absolutely must be dispelled when accurately modelling how light “reflects” from different objects. If you don’t realise what’s really going on, you end up modelling white objects that look like roughened aluminium foil and coloured objects that look like coloured foil. White only slightly darkens at shallow angles, a rough reflective surface darkens dramatically at shallow angles.

Most objects that we actually see in daily life are not rough “surfaces.” Light actually penetrates into the volume of the object. Human skin is a prime example. It was so misunderstood that early CGI movies made it look like plastic. Skin actually has more depth and volume to it. We find the appearance of subsurface scatter pleasing. Almost all paints (except metal paints) involve much more volume scattering than reflection from a rough surface. Many paints form a glossy dielectric surface over a volumetrically scattering body. This gives two different types of “reflections” from the same object, which we call a diffuse component and a specular component. The top dielectric layer provides a Fresnel reflection from its surface that can be very smooth (high gloss) or less smooth (semi-gloss, satin, eggshell) or almost non-existent (flat). The large fraction of the light transmitted into the lower layers of the paint experiences volume scattering due to particles of different refractive index being suspended in a clear paint base. The reflection from the top layer will generally be full spectrum while the diffuse reflection from the volume will be affected by the dyes or pigments added to the paint (it will be coloured). The top layer reflection will also be polarised according to the Fresnel reflection equations, while the volume scattered light will be mostly random polarisation. (Note that even air bubbles in a clear liquid will appear as white.)
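To illustrate the top-layer Fresnel reflection described above, here is a minimal sketch of the standard Fresnel equations for a smooth dielectric interface. The refractive index of 1.5 is an assumed, typical value for a clear binder or glass, not a figure from this post, and the function name `fresnel_reflectance` is illustrative only.

```python
import math

def fresnel_reflectance(angle_deg: float, n1: float = 1.0, n2: float = 1.5):
    """Return (R_s, R_p), the reflected power fractions for the two polarisations.

    angle_deg is the angle of incidence measured from the surface normal.
    n1 and n2 are the refractive indices on the incident and transmitted sides
    (n2 = 1.5 is an assumed value for illustration).
    """
    theta_i = math.radians(angle_deg)
    sin_t = n1 / n2 * math.sin(theta_i)      # Snell's law
    if sin_t >= 1.0:
        return 1.0, 1.0                       # total internal reflection
    cos_i = math.cos(theta_i)
    cos_t = math.sqrt(1.0 - sin_t ** 2)
    r_s = ((n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)) ** 2
    r_p = ((n1 * cos_t - n2 * cos_i) / (n1 * cos_t + n2 * cos_i)) ** 2
    return r_s, r_p

# Reflectance at a few angles, including one near Brewster's angle for n2 = 1.5.
for angle in (0.0, 30.0, 56.31, 80.0):
    r_s, r_p = fresnel_reflectance(angle)
    print(angle, round(r_s, 4), round(r_p, 4))
```

With these assumed indices only about 4 percent of the light is reflected at normal incidence, which is consistent with the point that most of the light enters the volume. The two polarisations differ strongly at oblique angles, which is why the surface reflection is polarised while the volume-scattered light is not.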

To really answer the question of why a white object looks completely different from a mirror, you have to understand (and look) a little deeper than the simple idea of “reflection” from a rough surface. Most of what we see can not be explained as reflections from rough surfaces, because that is not what actually happens unless you live in a tin foil world.


Was human life influenced by a huge explosion in space?

Nearby Supernova Explosions May Have Affected Human Evolution.

The closest supernovas to Earth may have blasted the planet with enough radiation to influence human evolution, researchers say.

Supernovas are the most powerful star explosions. These outbursts from enormous dying stars are visible all the way to the edge of the cosmos, and are bright enough to briefly outshine all of the stars in their host galaxies.

For more than 50 years, scientists have suggested that nearby stellar fireworks could have influenced life on Earth by disrupting global climate and even triggering mass extinctions. Previous research calculated that supernovas occurring within about 325 light-years of Earth, which are expected about once every 2 million to 4 million years, could deposit radioactive debris on this planet.

In 1999, researchers discovered significant levels of a mildly radioactive variety of iron known as iron-60 in deep-ocean rocks, indicating that supernovas have indeed sprayed Earth with radioactive material. Supernovas generate huge amounts of iron-60, whereas other natural ways of creating iron-60 produce only up to one-tenth as much.

Previous research suggested that the effects of high-energy particles from supernovas that explode within about 30 to 45 light-years of Earth would be catastrophic for life on the planet. However, prior work also estimated that explosions close enough to cause mass extinctions were very rare, "on the order of one every few billion years," study co-author Anton Wallner, a nuclear physicist at Australian National University in Canberra, told Space.com.

Now, researchers have pinpointed when and where the most recent supernovas closest to Earth might have occurred, and found that they happened recently enough to potentially influence human evolution.

Scientists investigated the origins of the "Local Bubble," the region of the Milky Way in which Earth's solar system is embedded. The Local Bubble — which measures about 600 by 600 by 1,200 light-years — is faintly lit by X-rays from hot plasma up to 1.8 million degrees Fahrenheit (1 million degrees Celsius), which likely came from a series of supernovas, said study lead author Dieter Breitschwerdt, an astrophysicist at the Berlin Institute of Technology.

The researchers focused on the origin of iron-60 in deep-sea rock that likely came from one or more supernovas 195 to 425 light-years from Earth about 2.2 million years ago.

"We can do a sort of galactic archaeology — like terrestrial archaeologists, we dig somewhere for traces of past events," Breitschwerdt told Space.com.

Using supercomputer models to calculate the likely masses of the dying stars and the complex trajectories this radioactive matter took, study team members were able to pinpoint the most probable times and sites of the explosions — two supernovas between about 290 and 325 light-years from the sun.

"Running several models took us about three to four years in total," Breitschwerdt said.

Breitschwerdt and his colleagues estimated that the closer of these two supernovas originated from a star with a mass about 9.2 times that of the sun and happened about 2.3 million years ago. The other supernova originated from a star with a mass about 8.8 times that of the sun and occurred about 1.5 million years ago. (In comparison, the oldest known member of the genus Homo — which includes modern humans, Homo sapiens — dates back to about 2.8 million years ago.)

These explosions were two of the 14 to 20 supernovas that supplied the hot plasma making up the Local Bubble, researchers said. The other supernovas likely showered iron-60 onto Earth as well, albeit to a smaller extent because they happened farther away and longer ago.

In a separate new study, Wallner and his colleagues focused on deep-sea rock from the Atlantic, Pacific and Indian oceans that had iron-60 levels about 40 times greater than average. Detecting this iron-60 was difficult — at best, "this extraterrestrial radioactive iron-60 is a million billion times less abundant in our samples than terrestrial stable iron," said Wallner, who led this second study. "We need extremely sensitive single-atom counting techniques to identify these iron-60 atoms," he said.

The researchers found that this radioactive debris was carried to Earth within interstellar dust grains in two relatively recent events — one 1.5 million to 3.2 million years ago, and the other 6.5 million to 8.7 million years ago. They suggested that these supernovas were less than 300 light-years away, close enough to be visible during the day and comparable to the brightness of the moon. This iron-60 may have come from supernovas that directly sprayed the solar system, but it's also possible that the solar system passed through interstellar clouds polluted with the remnants of multiple supernovas, researchers said.

Although Wallner noted that the supernovas he and his colleagues investigated probably would not have been close enough to Earth to cause mass extinctions, he said that radiation from these explosions might have influenced the planet's climate. For instance, he noted that the older event they discovered coincided with temperature changes about 8 million years ago during the late Miocene epoch, when global changes in Earth's fauna occurred. In addition, the more recent event happened at about the same time that Earth began getting colder, near the end of the Pliocene epoch, about 3 million years ago — a climatic shift that might have helped spur the rise of the human lineage.

Moreover, Breitschwerdt noted that radiation from these explosions also might have triggered mutations in life-forms on Earth. "It might be possible that an increased rate of mutations directly influenced evolution — for example, increase in brain size," Breitschwerdt said.

In the future, Wallner and his colleagues will try to pinpoint exactly when supernova iron-60 fell onto Earth, to get a better idea of whether it might have influenced life here, Wallner said.

The scientists detailed their findings in two separate papers in the April 7 issue of the journal Nature.

http://www.space.com/32481-closest-supernova-star-explosions-human-evolution.html?ns_mchannel=social&ns_campaign=BBC_iWonder&ns_source=twitter&ns_linkname=knowledge_and_learning#sthash.PVGI2OJ9.dpuf


*UNIVERSE*

*The study of universe is known as

*Ans : Cosmology*

*The universe comprises of billions of

*Ans : Galaxies*

*The galaxies are made up of millions of

*Ans : Stars*

*Father of modern Astronomy

*Ans : Copernicus*

*The astronomer known as the Law Giver of Heavens

*Ans : Kepler*

*Light year and parsec are the units of measuring distances in the

*Ans : Universe*

*The distance travelled by light in one year is called

*Ans : One light year*

*One light year = 9.46 x 10^12 kilometers

*An astronomical unit of distance

*Ans : Parsec*

*One parsec = 3.26 light years

*Scientist who found universe is expanding, in 1929

*Ans : Sir Edwin Hubble*

*Geocentric theory was propounded (the Earth was the centre of universe) by

*Ans : Ptolemy*

*Heliocentric theory was propounded (the Sun is the centre of the universe) by

*Ans : Copernicus*

*Laws of Planetary motion were discovered by

*Ans : Kepler*

*Study of celestial bodies and universe

*Ans : Astronomy*

*Study of the structure of the universe

*Ans : Cosmology*

*Study of the origin of universe

*Ans : Cosmogony*

*Study of life in outer space

*Ans : Exobiology*

*Study of moon

*Ans : Selenology*

*Study of Space Craft

*Ans : Astronautics*

*Study of Sun

*Ans : Heliology*

*International Astronomical year was observed by UNO in

*Ans : 2009*

*Father of Indian astronomy

*Ans : Aryabhattan*

*Father of Scientific astronomy

*Ans : Kepler*

*Father of modern Indian astronomy

*Ans : M.K.Vainu Bappu*

*Father of Indian Space Programme

*Ans : Vikram Sarabhai*
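The light year and parsec values quoted in the list above can be checked with a short sketch. It assumes the Julian year of 365.25 days and the IAU convention that one parsec equals 648000/π astronomical units; the variable names are illustrative only.

```python
import math

C_KM_PER_S = 299_792.458           # speed of light in km/s (exact by definition)
JULIAN_YEAR_S = 365.25 * 86_400    # seconds in one Julian year
AU_KM = 149_597_870.7              # one astronomical unit in km (exact by definition)

# Distance travelled by light in one year.
light_year_km = C_KM_PER_S * JULIAN_YEAR_S

# One parsec: the distance at which 1 AU subtends an angle of one arcsecond.
parsec_km = AU_KM * 648_000 / math.pi
parsec_ly = parsec_km / light_year_km

print(f"1 light year = {light_year_km:.3e} km")
print(f"1 parsec     = {parsec_ly:.2f} light years")
```

Both results round to the figures given in the list: about 9.46 x 10^12 kilometers per light year and about 3.26 light years per parsec.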

*EVOLUTION OF UNIVERSE*

*There are three main theories put forward to explain origin and evolution of universe.

*1.BIG BANG THEORY*

*Most widely accepted theory regarding the origin of universe

*Ans : Big Bang Theory*

*Big Bang Theory was proposed by

*Ans : Georges Lemaître*

*Big Bang Theory was modified by

*Ans : Edwin Hubble*

*The term big bang was coined by

*Ans : Fred Hoyle*

*Big bang theory is also called as

*Ans : Expanding Universe Hypothesis*

*2. STEADY STATE THEORY*

*Steady State Theory was proposed by

*Ans : Hermann Bondi, Thomas Gold and Fred Hoyle*

*Steady State Theory was proposed in

*Ans : 1948*

*Quasi Steady State Theory was proposed by

*Ans : Jayant Narlikar*

*3.THE PULSATING THEORY*

*The Pulsating Theory was proposed by

*Ans : Dr. Allan Sandage*

*The theory which proposed that at present universe is expanding

*Ans : Pulsating Theory*

*GALAXIES*

*A galaxy is a vast collection of billions of stars, dust, gas and empty space held together by

*Ans : Gravitational force*

*The word galaxy was first introduced by

*Ans : William Herschel*

*First person to calculate distance between galaxies

*Ans : Sir Edwin Hubble*

*There are about 50 billion galaxies in the universe

*Group of galaxies is known as

*Ans : Cluster*

*The most distant object visible to naked eye in the universe

*Ans : Andromeda Galaxy*

*Our sun and its planets belong to the

*Ans : Milky Way Galaxy or Akash Ganga*

*First astronomer to demonstrate the existence of galaxies beyond milky way

*Ans : Edwin Hubble (1924)*

*TYPES OF GALAXIES*

1.Spiral Galaxies

2.Elliptical Galaxies

3.Irregular Galaxies

*The galaxy having central nucleus with great spiral arms

*Ans : Spiral Galaxy*

*Milky way and Andromeda are the examples of

*Ans : Spiral Galaxies*

*New stars are formed in Spiral Galaxies

*Largest spiral galaxy

*Ans : Andromeda Galaxy*

*The farthest object which can be seen with naked eyes

*Ans : Andromeda Galaxy*

*The milky way belongs to a cluster of galaxies called

*Ans : Local group*

*The galaxies having no spiral arms are called

*Ans : Elliptical Galaxies*

*Red Giants and white dwarfs are seen in the

*Ans : Elliptical Galaxies*

*Dim collection of old stars are seen in the

*Ans : Elliptical Galaxies*

*Galaxies having no regular shape

*Ans : Irregular Galaxies*

*Large Magellanic cloud is an

*Ans : Irregular Galaxy*


Interesting Andromeda Galaxy

1. Andromeda is a spiral galaxy located about 2.5 million light years from our galaxy. Measured from Earth, that is a distance of about 2.4×10^19 kilometers.

2. Andromeda is also known as Messier 31 or in short, M31.

3. In the galaxy cluster known as the Local Group, Andromeda is the largest galaxy. The Local Group is a cluster of 54 galaxies including Milky Way and Triangulum.

4. Scientists estimate that there are 1 trillion stars in the galaxy.

5. The estimated mass of Andromeda is 400 billion solar masses. Put in other words, it has the mass equivalent to the mass of 400 billion suns (by sun we are referring to the sun in our solar system).
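As a quick check of the distance in item 1, the conversion can be worked through by hand, assuming the commonly quoted value of about 9.46×10^12 kilometers per light year:

$$
d \approx 2.5\times10^{6}\ \text{ly} \times 9.46\times10^{12}\ \frac{\text{km}}{\text{ly}} \approx 2.4\times10^{19}\ \text{km}
$$

The product is about 2.37×10^19, which rounds to the 2.4×10^19 kilometers stated above.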


Atomic Matter

The formation of all matter is on the order of the solar system. There is at the center of every minute universe of energy a relatively stable, comparatively stationary, nuclear portion of material existence. This central unit is endowed with a threefold possibility of manifestation. Surrounding this energy center there whirl, in endless profusion but in fluctuating circuits, the energy units which are faintly comparable to the planets encircling the sun of some starry group like your own solar system.

Within the atom the electrons revolve about the central proton with about the same comparative room the planets have as they revolve about the sun in the space of the solar system. There is the same relative distance, in comparison with actual size, between the atomic nucleus and the inner electronic circuit as exists between the inner planet, Mercury, and your sun.

The electronic axial revolutions and their orbital velocities about the atomic nucleus are both beyond the human imagination, not to mention the velocities of their component ultimatons. The positive particles of radium fly off into space at the rate of ten thousand miles a second, while the negative particles attain a velocity approximating that of light.

The local universes are of decimal construction. There are just one hundred distinguishable atomic materializations of space-energy in a dual universe; that is the maximum possible organization of matter in Nebadon. These one hundred forms of matter consist of a regular series in which from one to one hundred electrons revolve around a central and relatively compact nucleus. It is this orderly and dependable association of various energies that constitutes matter.

Not every world will show one hundred recognizable elements at the surface, but they are somewhere present, have been present, or are in process of evolution. Conditions surrounding the origin and subsequent evolution of a planet determine how many of the one hundred atomic types will be observable. The heavier atoms are not found on the surface of many worlds. Even on Urantia the known heavier elements manifest a tendency to fly to pieces, as is illustrated by radium behavior.

Stability of the atom depends on the number of electrically inactive neutrons in the central body. Chemical behavior is wholly dependent on the activity of the freely revolving electrons.

In Orvonton it has never been possible naturally to assemble over one hundred orbital electrons in one atomic system. When one hundred and one have been artificially introduced into the orbital field, the result has always been the instantaneous disruption of the central proton with the wild dispersion of the electrons and other liberated energies.

While atoms may contain from one to one hundred orbital electrons, only the outer ten electrons of the larger atoms revolve about the central nucleus as distinct and discrete bodies, intactly and compactly swinging around on precise and definite orbits. The thirty electrons nearest the center are difficult of observation or detection as separate and organized bodies. This same comparative ratio of electronic behavior in relation to nuclear proximity obtains in all atoms regardless of the number of electrons embraced. The nearer the nucleus, the less there is of electronic individuality. The wavelike energy extension of an electron may so spread out as to occupy the whole of the lesser atomic orbits; especially is this true of the electrons nearest the atomic nucleus.

The thirty innermost orbital electrons have individuality, but their energy systems tend to intermingle, extending from electron to electron and well-nigh from orbit to orbit. The next thirty electrons constitute the second family, or energy zone, and are of advancing individuality, bodies of matter exerting a more complete control over their attendant energy systems. The next thirty electrons, the third energy zone, are still more individualized and circulate in more distinct and definite orbits. The last ten electrons, present in only the ten heaviest elements, are possessed of the dignity of independence and are, therefore, able to escape more or less freely from the control of the mother nucleus. With a minimum variation in temperature and pressure, the members of this fourth and outermost group of electrons will escape from the grasp of the central nucleus, as is illustrated by the spontaneous disruption of uranium and kindred elements.

The first twenty-seven atoms, those containing from one to twenty-seven orbital electrons, are more easy of comprehension than the rest. From twenty-eight upward we encounter more and more of the unpredictability of the supposed presence of the Unqualified Absolute. But some of this electronic unpredictability is due to differential ultimatonic axial revolutionary velocities and to the unexplained “huddling” proclivity of ultimatons. Other influences—physical, electrical, magnetic, and gravitational—also operate to produce variable electronic behavior. Atoms therefore are similar to persons as to predictability. Statisticians may announce laws governing a large number of either atoms or persons but not for a single individual atom or person.


Why could Richard Feynman not get Niels Bohr to understand his ideas?

Niels Bohr thought that Feynman diagrams show free particles moving along trajectories in space, which is a physical impossibility. What the lines in the diagrams actually symbolise is not trajectories, but the directions of momentum vectors. Here is what Feynman himself said later about that episode....

Feynman:

Bohr was at this meeting and somewhere, after I’d tried and tried and I talked about trajectories, then I’d swing back — I was forced back all the time to explain. Finally I go back to the idea of an amplitude for each path; that quantum mechanics can be described by the amplitude for each path, and after that Bohr got up and he said, “Already in 19” — something, 1924, ‘25, or something — “we know that the classical idea of the trajectory in a path is not a legitimate idea in quantum mechanics” and so on. In other words, he’s telling me about the uncertainty principle, you see, and so on. And when I hear this, this was the least discouraging of the criticisms, because it was patently clear that there was no communication, as you like to say. Because he’d tell me that I don’t know the uncertainty principle, and I’m not doing quantum mechanics right. Well, I know I’m doing quantum mechanics right, so there wasn’t any fear or anything. I mean, it was no trouble. It’s just that he didn’t understand at all. And I simply got a feeling of resignation. It’s very simple, I’ll have to publish this and so on, let them read it and study it, because it’s right. I wasn’t unhappy from that, you understand me? From Bohr’s criticism.

Weiner:

Was there antagonism in this criticism?

Feynman:

No. No, only the usual personalities. I mean, Teller, full of excitement, and Dirac mumbling “Is it unitary?” No, there was no trouble. It wasn’t antagonism. But to tell a guy that he doesn’t know quantum mechanics is to say, you know — It didn’t make me angry; it just made me realize he didn’t know what I was talking about. And it was hopeless to try to explain it further. And I said so. I gave up. I gave up completely, and I simply decided to publish it, because see, I knew it was OK. First of all, I had the confirmation with Schwinger. We’d sit there and we’d get the right answers, you know. So that was just before the meeting broke up for a little temporary rest, and Bohr came up to me —

Weiner:

How long did the meeting last, by the way?

Feynman:

I don’t know maybe an afternoon. And Bohr came up and apologized. His son had told him that he didn’t understand it, that I really was consonant with the principles of quantum mechanics. But I said, “It’s not necessary to apologize,” — you know, something like that. After that, I don’t know what I did. I didn’t do any more, but just decided to publish it. There is one little thing, though, that’s interesting, that also added to the complications. When I got up to talk, I started out by saying, “I can do everything but I can’t do the closed loops, the self-energy of the electron, I mean the vacuum polarization.” Schwinger got up and said, “I can do everything, including the vacuum polarization.” And he worked something out, and he got a term which looked like vacuum polarization. He had to subtract, and it left the vacuum polarization. It later came out that he had not done the vacuum polarization, but he had left it out — he didn’t even notice the term — and he had another term that he’d been doing, and was doing it wrong. And it looked like a vacuum polarization correction, the error, which you could get rid of by saying he had vacuum polarization. He got rid of it. Well, I was doing it more right, and didn’t have any vacuum polarization term at all, and knew it was missing, and said it was missing — whereas, he thought he had it and included it and got it right. But neither of us knew how to do it. But we didn’t know it. He said he did. And I said I didn’t do it. So one of the criticisms they gave was, “Why should we bother with this, you haven’t done the vacuum polarization yet. And the other thing is all done.” So you see, that was another, a small thing. I’m just saying it wasn’t something that bothered me. It didn’t bother me. I’m just telling the difficulties that people have in paying attention to me. They thought I hadn’t as much as he had — actually, I happened to have more but I didn’t know it — and so on. 
I could describe the specific terms, but one time a few weeks later, when I was visiting MIT, Schwinger called me up and said, “According to what I understood from what we were discussing, the terms which you have included give no vacuum polarization term, and that you have this extra thing. Well, now I found this extra thing. But now what bothers me is that the terms which I thought I had, which were the same as yours — I have a correction, it looks like a charge correction from those terms, and you said you had none. How did you handle them?” So I had to discuss terms on the telephone. We could do it. And I explained to him which terms would cancel what, and he hadn’t noticed those. “Oh,” he said, “I forgot to put those in.” So he put them in, corrected the thing, and got the same result. So, you see, we understood each other. We corrected each other. You know, we each fixed the other up, by pointing things out to each other at the time. So we were cooperating very well. But it was hard for us to know exactly what we were doing, and we would sometimes misrepresent the situation a little bit.

Weiner:

You could even talk about this on the phone?

Feynman:

Even on the phone we could identify the terms, I remember, because we understood what we were doing. We could visualize. I would say, “The term I’m talking about is canceled by a term which comes from a photon which is first emitted before interaction with the nuclear potential, is first emitted and then absorbed before the interaction with the nuclear potential.” And he’d say, “But that’s just a mass correction.” I’d say, “No, because of the fact, the mass correction is when there’s a free particle, and because of the fact that a potential is going to act soon, there’s a slight correction near the end point of the integral.” “Oh, yeah!” You know? So it would go something like that. We could talk on the telephone to each other. We understood each other very well.

Text

Introduction to String Theory

String theory is the most controversial theory in all of science today. On the one hand, it's a mathematically compelling framework that offers the potential to unify the Standard Model with General Relativity, providing a quantum description of gravity. On the other hand, its predictions are untestable in practice and require an enormous set of assumptions that are unsupported by scientific evidence.

A little bit of history

The story of string theory begins in the late 1960s, with the string model of the strong nuclear interaction. The string model was interesting, but it predicted a number of strange things that didn't appear to match reality (for example, there's no Higgs mechanism). Some years later, the theory of quarks and quantum chromodynamics (QCD) came to be. It described the strong nuclear force extraordinarily well without these pathologies, so the string model was abandoned.

But a decade or so later, this idea was reborn into what's now known as modern string theory. The idea was to take the energy scale all the way up to the Planck energy. Physicists could finally hope to unify all the fundamental forces together.

More than a theory

Today it is incorrect to talk about a single string theory, because there are actually several of them: bosonic, type I, type IIA, type IIB, and two flavours of heterotic string theory (HO and HE). The different theories allow different types of strings, and the particles that arise at low energies exhibit different symmetries. For example, the type I theory includes both open strings (which are segments with endpoints) and closed strings (which form closed loops), while types IIA, IIB and heterotic include only closed strings. An interesting feature of these theories is that they predict the number of dimensions that the Universe should have. So, while the bosonic string theory has 26 dimensions, the others have 10. But is one theory more likely than the others?

Duality and M-theory

One notable fact about string theory is that the different versions of the theory all turn out to be related. The relationships that can exist between different string theories are called dualities. There are two kinds of duality (S-duality and T-duality), but in short, they assert that some of the string theories are different mathematical descriptions of the same phenomenon. This fact, together with many other mathematical findings, has led to the formulation of a new theory that unifies (almost) all the other modern string theories: M-theory. This is an 11-dimensional theory that has spawned important theoretical results in physics and mathematics.

But is it a scientific theory?

Verifiability

To date, M-theory is not verifiable.

As Richard Feynman writes in The Character of Physical Law, the key test of a scientific theory is to verify whether its consequences are in accord with the measurements obtained experimentally. It does not matter who invented the theory, nor how aesthetically appealing it is: if it does not agree with experiment, it is wrong. M-theory has many characteristics of mathematical interest and it could become important for our understanding of the Universe, but it requires further development before it can become verifiable. These developments may be in the theory itself, such as new methods to perform calculations and derive predictions, or may consist of advances in experimental science that make measurable quantities that are not currently accessible (such as gravitons).

Falsifiability

However, judging the theory only by its verifiability is extremely reductive and opens the door to a series of problems. Contrary to popular belief, agreement with experimental data is not sufficient to confer the status of scientific theory! One of the fundamental characteristics of a scientific theory is the Popperian requirement of falsifiability, that is, the ability to produce at least one testable statement on which the whole theory depends, so that the theory fails if the statement is refuted. This could be problematic if we consider string theory only as a theory of grand unification. However, through the gauge/gravity correspondence it has given precise predictions for the viscosity of strongly coupled fluids, and these agree with the experimental data observed at the RHIC (Relativistic Heavy Ion Collider). This is the real reason why M-theory is so interesting.
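As a rough numerical illustration of the viscosity prediction mentioned above: the result usually quoted in this context is the conjectured lower bound on the ratio of shear viscosity to entropy density, η/s ≥ ħ/(4π k_B). The post does not name this bound, so treating it as the prediction in question is an assumption, and the function name below is purely illustrative.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
K_B = 1.380649e-23      # Boltzmann constant, J/K

def viscosity_bound():
    """Conjectured lower bound on (shear viscosity / entropy density), in K*s.

    Assumption: this is the hbar / (4 * pi * k_B) bound obtained from the
    gauge/gravity correspondence; the post itself does not state a formula.
    """
    return HBAR / (4 * math.pi * K_B)

print(f"eta/s >= {viscosity_bound():.3e} K*s")
```

The number is tiny in everyday units, which is why only extremely strongly coupled fluids, such as the matter produced in heavy-ion collisions, come close to it.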

Conclusion

So, string theories are really interesting, but there is one last, big problem. From a more mathematical point of view, most string theories are formulated using perturbative methods. These are mathematical methods for finding an approximate solution to a problem by starting from the exact solution of a related, simpler problem. Well, for string theories, perturbation methods involve such a high degree of approximation that the theory cannot identify which spaces are candidates for describing our universe! The consequence is that it doesn't describe a single universe, but something like 10^500 universes, each of which can have different physical laws and constants.

To date, a complete non-perturbative treatment of the theory is lacking.
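To make the idea of a perturbative method concrete, here is a minimal toy sketch that has nothing to do with strings themselves. The equation x² + εx − 1 = 0 is a hypothetical example chosen for illustration: for ε = 0 the exact positive root is x = 1, and for small ε the root can be approximated by the series 1 − ε/2 + ε²/8.

```python
import math

def exact_root(eps):
    """Positive root of x**2 + eps*x - 1 = 0, from the quadratic formula."""
    return (-eps + math.sqrt(eps * eps + 4.0)) / 2.0

def perturbative_root(eps):
    """Second-order expansion around the solvable eps = 0 problem (x = 1)."""
    return 1.0 - eps / 2.0 + eps * eps / 8.0

for eps in (0.1, 0.5, 2.0):
    error = abs(exact_root(eps) - perturbative_root(eps))
    print(f"eps = {eps}: error of the expansion = {error:.2e}")
```

The expansion is excellent when ε is small and degrades as ε grows, which is the general weakness the paragraph above describes: a perturbative treatment only sees the part of the theory that lies close to a solvable starting point.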

Text

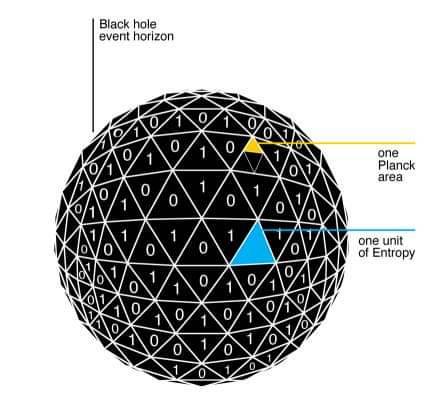

Black Holes and Entropy

Black holes are near-perfect black bodies that massively curve the four-dimensional manifold of space-time around them, which produces intense gravity. This happens because black holes are massive, phenomenally massive. Electromagnetic radiation follows the curvature of space-time and gets "trapped" beyond the event horizon, so no light is emitted or reflected. They do give off black body radiation, aka Hawking radiation, via quantum pair production: virtual particle pairs form near the event horizon, and one falls into the black hole whilst the other escapes, taking with it gravitational energy and thus a small amount of mass from the black hole. The escaping particle also becomes a "real" particle.

When we apply our current understanding of how the universe works to the inner workings of gravitational singularities, it fails, in very much the same way that it fails when we apply it to what happened prior to event one, aka the big bang. The fundamental physics were different back then, so our current physics cannot be applied, and until we have some sort of base for comparison, we will probably never know for sure what happened.

We cannot explain or understand what happens to matter and energy once it crosses the threshold of the event horizon. I do have my own model, but that is another story lol. (If you are interested in hearing what I believe to be the cause of the big bang in our universe, and all other universes, let me know and I will send you a copy of my model.) If black hole systems had zero entropy, it would be possible to actually violate the second law of thermodynamics, but as it is believed that black holes are not isolated systems, this does not apply. Hawking radiation is predicted to "radiate" mass from the black hole over time via quantum/vacuum fluctuations near the event horizon: as virtual particle-antiparticle pairs are created, the negative-energy counterpart is pulled into the black hole whilst the positive-energy particle carries mass away and becomes a real particle due to the gravity of the black hole. My question is whether or not these particle-antiparticle pairs are still entangled once the negative counterpart crosses the event horizon.
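To put rough numbers on the quantities discussed above, here is a small sketch for a non-rotating black hole. The formulas used (Schwarzschild radius, Hawking temperature, Bekenstein-Hawking entropy) are the standard textbook ones and are not given in the post itself; the function names and the choice of one solar mass as the example are assumptions made for illustration.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.98847e30       # solar mass, kg

def schwarzschild_radius(mass_kg):
    """Event horizon radius of a non-rotating black hole, in metres."""
    return 2.0 * G * mass_kg / C**2

def hawking_temperature(mass_kg):
    """Black body temperature of the Hawking radiation, in kelvin."""
    return HBAR * C**3 / (8.0 * math.pi * G * mass_kg * K_B)

def entropy_in_units_of_kb(mass_kg):
    """Bekenstein-Hawking entropy S / k_B = A c^3 / (4 G hbar)."""
    area = 4.0 * math.pi * schwarzschild_radius(mass_kg) ** 2
    return area * C**3 / (4.0 * G * HBAR)

print(f"radius      : {schwarzschild_radius(M_SUN):.1f} m")
print(f"temperature : {hawking_temperature(M_SUN):.2e} K")
print(f"entropy/k_B : {entropy_in_units_of_kb(M_SUN):.2e}")
```

The temperature falls as the mass grows while the entropy rises with the horizon area, so a black hole is far from a zero-entropy object, which is the point the second-law argument above relies on.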

The mass that comprises a black hole is located at the point where the gravitational singularity is predicted to be, yet that point is zero dimensional and no spatial coordinate system (X, Y, Z) can be applied to it, as all parameters become zero or infinite. The space-time manifold is curved to such an extent that light cannot escape and all future light cones "tip" towards the singularity. The energy-momentum tensor (which determines the density of mass) is a Dirac delta function at the singularity, which Einstein's equations place at r = 0 in spherical coordinates. One also has to take into consideration which theory and model is being applied when calculating these variables, such as whether the black hole is a Kerr black hole (rotating) or a Schwarzschild black hole (static), etc. But in spite of all of our predictions and calculations, we do not know exactly where the mass is located in a black hole. This is where a quantum theory of gravitation comes in, which is currently being devised, if at all possible lol.

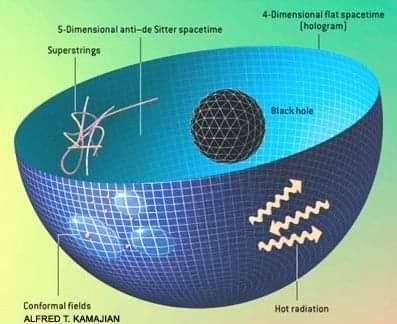

Anyway, I can only picture the entropy of black holes decreasing if the black holes are in some way connected to "somewhere else", like another meta/universe, or a bulk dimension located in between the membranes that define the multiverse. A good example is a five-dimensional anti-de Sitter space-time, in which our universe is located on a membrane that is expanding into this higher meta dimension/space-time; this is a variation of the holographic principle of string theory. We only see entropy increasing in our universe, which is why we class it as a closed, isolated system. My model gets around this as there is more than one universe, but on the grandest of scales my model is still classed as an isolated system comprised of smaller open systems (universes).

White holes are hypothetical entities that are a near exact opposite of a black hole, so many of the values are reversed. Where a black hole has an event horizon beyond which nothing can escape, a white hole has a boundary that marks the point beyond which there can be no approach: nothing can progress forwards beyond this point, not even light. Black holes pull in everything that gets caught in their gravity well, whilst nothing in the universe can enter a white hole. There are a few specific models that attempt to explain how black holes and white holes could be "connected" via a wormhole, as predicted by Einstein and Nathan Rosen in 1935, hence the name "Einstein-Rosen bridge".

Two of these are the positive and negative square root variations of the Schwarzschild metric. Now whilst the math involved in calculating such parameters predicts some pretty strange phenomena, trying to describe what it is that they predict is even stranger lol. Let's use the super-massive black hole (SMBH) at the center of our galaxy, Sagittarius A*, more colloquially known as Sag A. Now this black hole would be connected to a white hole that is located in another universe, and everything in that universe would appear to be in reverse from our own, so time would appear to be moving backwards from our perspective. But if an observer from this universe were to somehow travel to this other universe that Sag A is connected to, then when they arrive there, time would appear to be flowing forwards, and everything else would seem normal also. What's even stranger is that the white hole would now be a black hole from their perspective in the new universe, compared with how it looked when they observed it from our universe. It would in fact now be our universe that seems to be running in reverse when observed from the new universe. All this is hypothetical of course, at least for the moment. Whilst the existence of white holes does not violate general or special relativity, no evidence of their existence has ever been observed to date.

Text

Dimensions in the Universe

Talking about dimensions is conceptually complex: once we exceed the 4 known dimensions, we end up in complex mathematical structures, which give rise to equally complex physical theories, such as the different string theories. So today we're not going to talk about mathematics and geometry; instead we're going to figure out what it means to live in a universe with more dimensions.

But first, what is a dimension?

3 Dimensions - Space

Dimensions are really just the number of coordinates we need to describe things. The first dimension is that which gives it length (for example, the x-axis). A good description of a one-dimensional object is a straight line, which exists only in terms of length and has no other discernible qualities. Add to it a second dimension (the y-axis) and you get an object that becomes a 2-dimensional shape (like a square). The third dimension involves depth (the z-axis) and gives all objects a sense of area and a cross-section. The perfect example of this is a cube, which exists in three dimensions and has a length, width, depth, and hence volume. Every single thing we can imagine is 3-D. Our mind can imagine something it has never seen, but it cannot imagine anything other than 3-D.

4 Dimensions - Time

The fourth dimension is an abstract concept derived by generalising the rules of three-dimensional space. It governs the properties of all known matter at any given point. Along with the three other dimensions, knowing an object's position in time is essential to plotting its position in the universe. In the last century the concept of space-time was developed, which unifies space and time but with a different metric, so the time dimension is treated differently from the three spatial dimensions.
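The "different metric" can be made concrete with the space-time interval of special relativity, in which the time term enters with the opposite sign to the three space terms. The sketch below is only an illustration: the sign convention (−, +, +, +) and the function names are assumptions, since the post does not specify them.

```python
C = 299_792_458.0  # speed of light, m/s

def interval_squared(dt, dx, dy, dz):
    """Space-time interval s^2 = -(c*dt)^2 + dx^2 + dy^2 + dz^2.

    Assumed sign convention: (-, +, +, +). Time enters with the opposite
    sign to space, which is what sets it apart from a fourth spatial axis.
    """
    return -(C * dt) ** 2 + dx**2 + dy**2 + dz**2

def classify(s2):
    """Name the kind of separation between two events."""
    if s2 < 0:
        return "timelike"
    if s2 > 0:
        return "spacelike"
    return "lightlike"

print(classify(interval_squared(1.0, 0.0, 0.0, 0.0)))  # same place, one second apart
print(classify(interval_squared(0.0, 1.0, 0.0, 0.0)))  # same time, one metre apart
print(classify(interval_squared(1.0, C, 0.0, 0.0)))    # connected by a light ray
```

In ordinary three-dimensional geometry the squared distance can never be negative; here it can, and the sign tells us whether one event can influence the other.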

And then, what's beyond the fourth dimension? Well, there are some theories that require extra dimensions of spacetime for their mathematical consistency. In bosonic string theory, spacetime has 26 dimensions, while in superstring theory it has 10 dimensions and in M-theory it's 11-dimensional.

5 & 6 Dimensions

The fifth and sixth dimensions are where the notion of the multiverse arises. This set of universes would start with the same initial conditions as ours (the Big Bang). So, if we could master the fifth and sixth dimensions, we couldn't travel backward or forward in time in our own universe, but we could travel to other universes physically identical to ours, at any time. So, for example, we could see different futures!

7 & 8 Dimensions

In the seventh dimension, we have access to the possible universes that start with different initial conditions.

While in the fifth and sixth dimensions, the initial conditions were the same and subsequent actions were different, here, everything is different from the beginning.

The eighth dimension, similar to the sixth dimension, gives us an infinite plane of such possible universe histories, each of which begins with different initial conditions and branches out infinitely.

So, if we could master the seventh and eighth dimensions, we could travel through different universes, with different laws of physics! (Trying to imagine this is really impossible!)

9 & 10 Dimensions

In the ninth dimension, we can compare all the possible universe histories, starting with all the different possible laws of physics and initial conditions.

In the tenth dimension, we arrive at the point in which everything possible and imaginable is covered.

Beyond this, nothing can be imagined by us living creatures, which makes it the natural limitation of what we can conceive in terms of dimensions.

All subsequent dimensions may have a mathematical and geometric explanation, but physically, it's really hard to figure out what they would mean.

Gravity could play a significant role, or perhaps other dimensions would explain something we can't even imagine.

Text

Holographic Principle of the Universe

1. Unusual Gyroscope

In the three-dimensional world in which as it seems to us we live, as a rule, everything revolves around one axis: yo-yo, engine rotors, mechanical gyroscopes, wheels, turbines, the impeller of a windmill, planets and solar systems, galaxies... Physicists would notice that: "the symmetry is reduced" but we try to restore the symmetry!

Is it possible to force and completely rotate a spherical ceramic rotor with magnets inside a fixed ceramic stator with electromagnets and a control system around three axes? Rotation in three-dimensional space keeps the line stationary, that is, in three-dimensional space, rotation always occurs around one axis. This is a consequence of Euler's rotation theorem. But there is nothing impossible and all problems can be circumvented, this even applies to the seemingly fundamental laws of physics, of course, if we have a "key" from the "secret door" that leads around. We’ll bypass Euler’s ban, and for this, we change the condition a little and try to rotate the rotor in a cycle (a cycle is when all the rotor points return to the old spatial coordinates and to the beginning of new cycle time). We make these rotor rotations for short and equal periods of time, first around one axis, then another, and finally, the third, but each time in accordance with the laws of sine and/or cosine, then the oscillations of the rotor points will be complete and a cycle will occur. We will improve the device and significantly reduce friction, make the rotor levitate in a vacuum inside the stator and at the same time we will rotate it around one point, the center of mass and three axes per cycle. That's all, the symmetry is restored!
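Euler's rotation theorem, as cited above, can be checked numerically: composing rotations about three different fixed axes still yields a rotation about one single axis. The sketch below is only an illustration in plain Python; the angles are arbitrary example values and the helper names are hypothetical. It models the net orientation change after one sequence of turns, not the time-dependent motion of the proposed rotor.

```python
import math

def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]

def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def rotation_axis(r):
    """Unit axis of rotation matrix r, read off its antisymmetric part.

    Valid when the rotation angle is neither 0 nor pi.
    """
    v = [r[2][1] - r[1][2], r[0][2] - r[2][0], r[1][0] - r[0][1]]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

# Turn about x, then y, then z (arbitrary example angles, in radians).
combined = matmul(rot_z(0.3), matmul(rot_y(0.5), rot_x(0.7)))
axis = rotation_axis(combined)
moved = matvec(combined, axis)  # the axis is left unchanged by the rotation
print("axis :", axis)
print("moved:", moved)
```

The two printed vectors agree to rounding error, which is the fixed line the theorem guarantees for any net rotation.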

And what to do next with such three-dimensional turns? How can they be applied? Gyroscopes are amazing instruments with amazing properties. And we invented a fundamentally new type of mechanical gyroscope and this is a guarantee that our device will never get into the "museum of faulty devices ". We are beginning to study its new properties!

2. Holographic Principle and anisotropy of the Universe

So we observe that everything naturally rotates around one axis, we can confidently conclude that the symmetry of the three-dimensional world at the level of "apples" is lowered. It has been for sure perfectly established that both at the quantum level and at the cosmological level, our world is not supersymmetric in the sense of exact symmetry. On the quantum level, as in any supersymmetric model, fermions and bosons connected by a supersymmetric transformation must have the same mass, charge, and other quantum numbers (with the exception of spin). This requirement is not satisfied with particles known in nature.

Why is the reason for the decrease in symmetry not visible? A good question for the possibility of suggesting the application of the Holographic Principle to the entire Universe and finding the reason for the decrease in symmetry. The hologram of the Universe is when it seems to you that you live in a three-dimensional world, but in fact, all physics and we ourselves as information (bits) are naturally encoded, blurred and move along the entire remote spherical inflationary surface of a holographic screen consisting of two halves (as bit).

Because of this blurring we cannot directly observe any symmetry breaking, or rather, we can, but only indirectly. Why two halves? An example of the action of the holographic principle is the anti-de Sitter space-time, which has a boundary located at infinite distance. To cope with this problematic infinity (the boundary of the AdS space is infinitely far from its center), physicists have turned two saddle-shaped AdS spaces into bowl-shaped dS spaces. Then they could do the obvious thing: "glue" the two bowls together along their rims, describing both hemispheres, where they become connected to each other, forming a single quantum system that is holographically dual to the entire spherical de Sitter space. This construction is called the "dS/dS correspondence".

Well, where is the symmetry breaking? The answer is obvious if we understand that we are talking about a different amount of information related to matter and its distribution on two halves of one holographic screen - the cause of symmetry breaking is the well-known Anisotropy of the Universe, which is experimentally discovered and, according to the Holographic Principle, can be projected without loss of information into two halves of one holographic screen. Actually, projection is a speculative operation, if we understand that everything lives and interacts on this holographic horizon of the Universe. We temporarily stop criticizing this formalism, because we intend to prove (more precisely falsify) the Holographic Principle in an experiment using our invention - an unusual gyroscope. REF: https://www.quantamagazine.org/how-our-universe-could-emerge-as-a-hologram-20190221/#disqus_thread

3. To control gravity does not require the use of exotic masses and the speed of light.

In the unusual gyroscope we use inductors, but only to create coherent oscillations of the rotor and to levitate it in the evacuated cavity of the stator. It is possible to produce coherent oscillations in another way, but this is the easiest way. We need only coherent oscillations of the rotor, nothing else is required. We do not need electromagnetic fields and high voltages, we only need vibrations in a vacuum. As a result of such coherent oscillations, a motionless interference pattern arises from the accelerations of the masses (protons, electrons) of the rotor. From Newton's law, we know that a force leads to a nonzero acceleration. It is also well known that acceleration and temperature are closely related.

Namely, as Unruh showed, an observer in an accelerated frame experiences a temperature:

$$k_B T = \frac{\hbar a}{2\pi c}$$

where a is the acceleration, \hbar is the reduced Planck constant, c is the speed of light, and k_B is the Boltzmann constant. The Holographic Principle itself can be stated as follows: «In the limit of a very large region, the bounding surface can be taken to be a flat plane at infinity. In some way, the phenomena taking place in three-dimensional space can be projected onto a distant «viewing screen» with no loss of information». So the most important assumption is that the information associated with a certain region of three-dimensional space obeys a conservation law; this is the basis of the Holographic Principle. Information about the location of particles is stored in discrete bits on the two halves of the spherical screen, one bit per Planck area l². But two Planck areas already contain three bits of information, because additional information about the coordinates of each bit on the screen is generated automatically. In other words, two bits contain exactly one bit of mutual information.

These types of information bits can accordingly be divided into images of kinetic and potential energy. Whatever information they contain, they contain it together. All information on the two halves of the spherical screen (the closed surface of region A) can be divided into numbers associated with the coordinates on the screen, counted by the number of bits N, and information represented by the temperature of the screen T. Suppose the system has total energy E, and suppose that this energy is evenly distributed over the N bits on the two halves of the spherical screen, with ½k_BT as the average energy per bit:

$$E = \tfrac{1}{2} N k_B T$$

$$N = \frac{A}{l^2}, \qquad l^2 = \frac{\hbar G}{c^3} \quad \text{(the Planck area)}$$

where G is the universal gravitational constant. Then:

$$N = \frac{A c^3}{G \hbar}$$

Taking the holographic screen to be a sphere of radius r, the surface area is:

$$A = 4\pi r^2$$

Now we need another well-known formula, E = Mc², and substitute:

$$E = \tfrac{1}{2} N k_B T = \tfrac{1}{2} N \frac{\hbar a}{2\pi c} = \frac{\hbar a N}{4\pi c} = \frac{\hbar a}{4\pi c} \cdot \frac{4\pi r^2 c^3}{G \hbar} = \frac{a c^2 r^2}{G}$$

then:

$$M c^2 = \frac{a c^2 r^2}{G}$$

Consider a, the acceleration of the rotor when we change the angular displacements of its mass elements around two of the three fixed axes of the Cartesian coordinates:

$$a = \frac{G M c^2}{c^2 r^2} = \frac{G M}{r^2}$$

Multiply both sides of the equation by the mass m of the rotor of the unusual gyroscope and get the ability to control entropic gravity without exotic masses and speeds of light:

$$F_{entr} = m a = F_{grav} = \frac{G M m}{r^2}$$

which combines Newton's second law and the law of gravity.

As expected, the speed of light cancels out of the equation.
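The chain of substitutions above can be checked numerically. The sketch below follows the same steps (number of bits on the screen, equipartition of E = Mc², Unruh relation) and compares the result with Newton's GM/r². The Earth's mass and radius are used purely as example inputs, and the function names are hypothetical.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
K_B = 1.380649e-23       # Boltzmann constant, J/K

def bits_on_screen(r):
    """N = A c^3 / (G hbar) for a spherical screen of radius r."""
    return 4.0 * math.pi * r**2 * C**3 / (G * HBAR)

def screen_temperature(mass, r):
    """Solve M c^2 = (1/2) N k_B T for the screen temperature T."""
    return 2.0 * mass * C**2 / (bits_on_screen(r) * K_B)

def entropic_acceleration(mass, r):
    """Invert the Unruh relation k_B T = hbar a / (2 pi c) for a."""
    return 2.0 * math.pi * C * K_B * screen_temperature(mass, r) / HBAR

M_EARTH = 5.972e24   # kg, example value
R_EARTH = 6.371e6    # m, example value

a_entropic = entropic_acceleration(M_EARTH, R_EARTH)
a_newton = G * M_EARTH / R_EARTH**2
print(f"entropic: {a_entropic:.4f} m/s^2, Newton: {a_newton:.4f} m/s^2")
```

Both routes give the same acceleration, with the Planck constant, the Boltzmann constant and the speed of light all dropping out, exactly as in the algebra.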

The proportionality of gravitational and inertial masses was experimentally established with high accuracy.

The independence of the equation on the speed of light tells us about the possibility of artificially created forces in the selected direction on the screen without limiting the distance and speed of light to transmit and receive information creating a directed entropic force in an unusual gyroscope and measuring its remainder depending on the direction. This is equivalent to writing and reading information from the holographic screen as a grand computer memory chip, where the address is the spherical coordinates of the screen, and the data is the magnitude of the entropic force. If intelligent civilizations exist in the Universe, then they will use this particular holographic communication channel and use a non-reactive mode of movement.

REF:

Phys. Rev. Lett. 100, 041101 (2008): Test of the Equivalence Principle Using a Rotating Torsion Balance

https://arxiv.org/abs/0712.0607 Test of the Equivalence Principle Using a Rotating Torsion Balance

On the Origin of Gravity and the Laws of Newton Erik Verlinde https://arxiv.org/pdf/1001.0785.pdf

L. Susskind, «The World as a Hologram». J. Math. Phys. 36 (1995) 6377, arXiv:hep-th/9409089.

https://www.facebook.com/groups/Unusualgyro/

www.isan.com.ua

Text

Black Dwarf Stars - The (Theoretical) End of Stellar Evolution

‘A main sequence star that lacks the mass necessary to explode in a supernova will become a white dwarf, a 'dead' star that has burned through all of its hydrogen and helium fuel. But the white dwarf remains hot for some time, much like a stove burner still emits heat even when it has been turned off.’

‘After an extremely long time, all of the leftover heat will have radiated away. No longer emitting heat or light, the white dwarf will become a black dwarf. Because it emits no radiation, it is nearly impossible to see. However, the black dwarf would still retain its mass, allowing scientists to detect the effects produced by its gravitational field.’

‘But there's no need to start searching for the elusive black dwarfs yet. At the moment, they're strictly theoretical. Scientists have calculated that a white dwarf will take at least a hundred million billion years to cool down and become a black dwarf, according to astronomer Ethan Siegel.’

‘Even if a white dwarf had formed at the moment of the Big Bang — which is impossible, since a star must pass through several evolutionary stages that take at least a billion years total — it would still be a white dwarf today, having not yet sufficiently cooled.’

‘Brown dwarfs, objects too small to have reached the point of fusion, were once called black dwarfs. A black dwarf should not be confused with a black hole or a neutron star, both of which have been observed.’

Text

The Experimental Confirmation of the General Theory of Relativity

From a systematic theoretical point of view, we may imagine the process of evolution of an empirical science to be a continuous process of induction. Theories are evolved and are expressed in short compass as statements of a large number of individual observations in the form of empirical laws, from which the general laws can be ascertained by comparison. Regarded in this way, the development of a science bears some resemblance to the compilation of a classified catalogue. It is, as it were, a purely empirical enterprise.

But this point of view by no means embraces the whole of the actual process; for it slurs over the important part played by intuition and deductive thought in the development of an exact science. As soon as a science has emerged from its initial stages, theoretical advances are no longer achieved merely by a process of arrangement. Guided by empirical data, the investigator rather develops a system of thought which, in general, is built up logically from a small number of fundamental assumptions, the so-called axioms. We call such a system of thought a theory. The theory finds the justification for its existence in the fact that it correlates a large number of single observations, and it is just here that the " truth " of the theory lies.

Corresponding to the same complex of empirical data, there may be several theories, which differ from one another to a considerable extent. But as regards the deductions from the theories which are capable of being tested, the agreement between the theories may be so complete that it becomes difficult to find any deductions in which the two theories differ from each other. As an example, a case of general interest is available in the province of biology, in the Darwinian theory of the development of species by selection in the struggle for existence, and in the theory of development which is based on the hypothesis of the hereditary transmission of acquired characters.

We have another instance of far-reaching agreement between the deductions from two theories in Newtonian mechanics on the one hand, and the general theory of relativity on the other. This agreement goes so far, that up to the present we have been able to find only a few deductions from the general theory of relativity which are capable of investigation, and to which the physics of pre-relativity days does not also lead, and this despite the profound difference in the fundamental assumptions of the two theories. In what follows, we shall again consider these important deductions, and we shall also discuss the empirical evidence appertaining to them which has hitherto been obtained.

(a) Motion of the Perihelion of Mercury

According to Newtonian mechanics and Newton's law of gravitation, a planet which is revolving round the sun would describe an ellipse round the latter, or, more correctly, round the common centre of gravity of the sun and the planet. In such a system, the sun, or the common centre of gravity, lies in one of the foci of the orbital ellipse in such a manner that, in the course of a planet-year, the distance sun-planet grows from a minimum to a maximum, and then decreases again to a minimum. If instead of Newton's law we insert a somewhat different law of attraction into the calculation, we find that, according to this new law, the motion would still take place in such a manner that the distance sun-planet exhibits periodic variations; but in this case the angle described by the line joining sun and planet during such a period (from one perihelion, the point of closest proximity to the sun, to the next) would differ from 360°. The line of the orbit would not then be a closed one but in the course of time it would fill up an annular part of the orbital plane, viz. between the circle of least and the circle of greatest distance of the planet from the sun.

According also to the general theory of relativity, which differs of course from the theory of Newton, a small variation from the Newton-Kepler motion of a planet in its orbit should take place, and in such a way that the angle described by the radius sun-planet between one perihelion and the next should exceed that corresponding to one complete revolution by an amount given by

+24π³a²/[T²c²(1 -e²)]

(N.B. One complete revolution corresponds to the angle 2π in the absolute angular measure customary in physics, and the above expression gives the amount by which the angle described by the radius sun-planet exceeds 2π during the interval between one perihelion and the next.) In this expression a represents the major semi-axis of the ellipse, e its eccentricity, c the velocity of light, and T the period of revolution of the planet. Our result may also be stated as follows: According to the general theory of relativity, the major axis of the ellipse rotates round the sun in the same sense as the orbital motion of the planet. Theory requires that this rotation should amount to 43 seconds of arc per century for the planet Mercury, but for the other planets of our solar system its magnitude should be so small that it would necessarily escape detection. ¹

In point of fact, astronomers have found that the theory of Newton does not suffice to calculate the observed motion of Mercury with an exactness corresponding to that of the delicacy of observation attainable at the present time. After taking account of all the disturbing influences exerted on Mercury by the remaining planets, it was found (Leverrier: 1859; and Newcomb: 1895) that an unexplained perihelial movement of the orbit of Mercury remained over, the amount of which does not differ sensibly from the above mentioned +43 seconds of arc per century. The uncertainty of the empirical result amounts to a few seconds only.
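As a rough numerical check of the expression 24π³a²/[T²c²(1 - e²)], the sketch below evaluates it for Mercury and converts the result to seconds of arc per century. The orbital elements and the function names are assumptions supplied for illustration; they are approximate modern values and do not come from the text.

```python
import math

# Approximate orbital elements for Mercury (assumed values, not from the text).
A_M = 5.7909e10           # major semi-axis a, in metres
T_S = 87.969 * 86400.0    # period of revolution T, in seconds
ECC = 0.2056              # eccentricity e
C_M_S = 2.99792458e8      # velocity of light c, in metres per second


def advance_per_orbit(a, period, ecc, c=C_M_S):
    """Perihelion advance per revolution, in radians: 24π³a² / [T²c²(1 - e²)]."""
    return 24 * math.pi**3 * a**2 / (period**2 * c**2 * (1 - ecc**2))


def advance_per_century_arcsec(a, period, ecc):
    """Accumulate the per-orbit advance over a Julian century (36525 days)."""
    orbits_per_century = 36525 * 86400.0 / period
    radians = advance_per_orbit(a, period, ecc) * orbits_per_century
    return math.degrees(radians) * 3600.0


if __name__ == "__main__":
    print(advance_per_century_arcsec(A_M, T_S, ECC))
```

With these inputs the result comes out close to the 43 seconds of arc per century quoted above. Replacing the elements with those of another planet shows how quickly the effect shrinks as a and T grow.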

(b) Deflection of Light by a Gravitational Field

It has been already mentioned that according to the general theory of relativity, a ray of light will experience a curvature of its path when passing through a gravitational field, this curvature being similar to that experienced by the path of a body which is projected through a gravitational field. As a result of this theory, we should expect that a ray of light which is passing close to a heavenly body would be deviated towards the latter. For a ray of light which passes the sun at a distance of Δ sun-radii from its centre, the angle of deflection (a) should amount to

a = 1.7 seconds of arc/ Δ

It may be added that, according to the theory, half of this deflection is produced by the Newtonian field of attraction of the sun, and the other half by the geometrical modification ("curvature") of space caused by the sun.
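The figure of 1.7 seconds of arc can be reproduced from the standard general-relativistic expression 4GM/(c²R) for a ray grazing a body of mass M at distance R from its centre. That formula is not written out in the text and is brought in here as an assumption, as are the solar constants and the function name. A minimal sketch:

```python
import math

# Approximate solar constants (assumed values, not from the text).
GM_SUN = 1.32712e20      # gravitational constant times solar mass, m^3/s^2
R_SUN = 6.957e8          # solar radius, metres
C_M_S = 2.99792458e8     # velocity of light, metres per second


def deflection_arcsec(delta):
    """Deflection of a ray passing the sun at `delta` sun-radii from its centre.

    Uses the full relativistic value 4GM/(c^2 R). Half of it, 2GM/(c^2 R),
    is the part attributed above to the Newtonian field of attraction.
    """
    radians = 4 * GM_SUN / (C_M_S**2 * R_SUN * delta)
    return math.degrees(radians) * 3600.0


if __name__ == "__main__":
    for delta in (1, 2, 4):
        print(delta, deflection_arcsec(delta))
```

For a grazing ray (Δ = 1) the value lands between 1.7 and 1.8 seconds of arc, and it falls off as 1/Δ, in line with the expression above.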

This result admits of an experimental test by means of the photographic registration of stars during a total eclipse of the sun. The only reason why we must wait for a total eclipse is because at every other time the atmosphere is so strongly illuminated by the light from the sun that the stars situated near the sun's disc are invisible. The predicted effect can be seen clearly from the accompanying diagram. If the sun (S) were not present, a star which is practically infinitely distant would be seen in the direction D₁, as observed from the earth. But as a consequence of the deflection of light from the star by the sun, the star will be seen in the direction D₂, i.e. at a somewhat greater distance from the centre of the sun than corresponds to its real position.

In practice, the question is tested in the following way. The stars in the neighbourhood of the sun are photographed during a solar eclipse. In addition, a second photograph of the same stars is taken when the sun is situated at another position in the sky, i.e. a few months earlier or later. As compared with the standard photograph, the positions of the stars on the eclipse-photograph ought to appear displaced radially outwards (away from the centre of the sun) by an amount corresponding to the angle a.

We are indebted to the [British] Royal Society and to the Royal Astronomical Society for the investigation of this important deduction. Undaunted by the [first world] war and by difficulties of both a material and a psychological nature aroused by the war, these societies equipped two expeditions — to Sobral (Brazil), and to the island of Principe (West Africa) — and sent several of Britain's most celebrated astronomers (Eddington, Cottingham, Crommelin, Davidson), in order to obtain photographs of the solar eclipse of 29th May, 1919. The relative discrepancies to be expected between the stellar photographs obtained during the eclipse and the comparison photographs amounted to a few hundredths of a millimetre only. Thus, great accuracy was necessary in making the adjustments required for the taking of the photographs, and in their subsequent measurement.

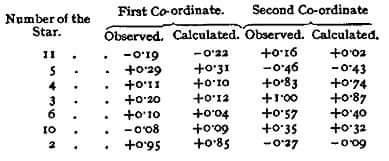

The results of the measurements confirmed the theory in a thoroughly satisfactory manner. The rectangular components of the observed and of the calculated deviations of the stars (in seconds of arc) are set forth in the following table of results.

(c) Displacement of Spectral Lines Towards the Red

It has been shown that in a system K’ which is in rotation with regard to a Galileian system K, clocks of identical construction, and which are considered at rest with respect to the rotating reference-body, go at rates which are dependent on the positions of the clocks. We shall now examine this dependence quantitatively. A clock, which is situated at a distance r from the centre of the disc, has a velocity relative to K which is given by

v = ωr

where ω represents the angular velocity of rotation of the disc K’ with respect to K. If ν₀ represents the number of ticks of the clock per unit time (the "rate" of the clock) relative to K when the clock is at rest, then the "rate" of the clock (ν) when it is moving relative to K with a velocity v, but at rest with respect to the disc, will be given by

ν = ν₀√(1 - v²/c²)

or with sufficient accuracy by

ν = ν₀(1 - ½ v²/c²)

This expression may also be stated in the following form:

ν = ν₀[1 - (1/c²)(ω²r²/2)]

If we represent the difference of potential of the centrifugal force between the position of the clock and the centre of the disc by φ, i.e. the work, considered negatively, which must be performed on the unit of mass against the centrifugal force in order to transport it from the position of the clock on the rotating disc to the centre of the disc, then we have

φ = -ω²r²/2

From this it follows that

ν = ν₀(1 + φ/c²)

In the first place, we see from this expression that two clocks of identical construction will go at different rates when situated at different distances from the centre of the disc. This result is also valid from the standpoint of an observer who is rotating with the disc.

Now, as judged from the disc, the latter is in a gravitational field of potential φ, hence the result we have obtained will hold quite generally for gravitational fields. Furthermore, we can regard an atom which is emitting spectral lines as a clock, so that the following statement will hold:

An atom absorbs or emits light of a frequency which is dependent on the potential of the gravitational field in which it is situated.

The frequency of an atom situated on the surface of a heavenly body will be somewhat less than the frequency of an atom of the same element which is situated in free space (or on the surface of a smaller celestial body).

Now φ = -K(M/r), where K is Newton's constant of gravitation, and M is the mass of the heavenly body. Thus a displacement towards the red ought to take place for spectral lines produced at the surface of stars as compared with the spectral lines of the same element produced at the surface of the earth, the amount of this displacement being

(ν₀ - ν)/ν₀ = (K/c²)(M/r)

For the sun, the displacement towards the red predicted by theory amounts to about two millionths of the wavelength. A trustworthy calculation is not possible in the case of the stars, because in general neither the mass M nor the radius r are known.
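The "two millionths" figure follows directly from the fractional displacement (K/c²)(M/r). The sketch below evaluates it for the sun; the solar constants and the function name are assumptions supplied for illustration and are approximate modern values, not taken from the text.

```python
# Approximate solar constants (assumed values, not from the text).
KM_SUN = 1.32712e20      # Newton's constant K times the solar mass M, m^3/s^2
R_SUN = 6.957e8          # solar radius r, metres
C_M_S = 2.99792458e8     # velocity of light c, metres per second


def fractional_redshift(km, radius, c=C_M_S):
    """Fractional displacement towards the red: (K/c^2)(M/r)."""
    return km / (c**2 * radius)


if __name__ == "__main__":
    print(fractional_redshift(KM_SUN, R_SUN))
```

The result is a little over 2 × 10⁻⁶, i.e. about two millionths of the wavelength. The same function applies to any star once its mass and radius are known, which is the difficulty the paragraph above points to.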

It is an open question whether or not this effect exists, and at the present time (1920) astronomers are working with great zeal towards the solution. Owing to the smallness of the effect in the case of the sun, it is difficult to form an opinion as to its existence. Whereas Grebe and Bachem (Bonn), as a result of their own measurements and those of Evershed and Schwarzschild on the cyanogen bands, have placed the existence of the effect almost beyond doubt, other investigators, particularly St. John, have been led to the opposite opinion in consequence of their measurements.

Mean displacements of lines towards the less refrangible end of the spectrum are certainly revealed by statistical investigations of the fixed stars; but up to the present the examination of the available data does not allow of any definite decision being arrived at, as to whether or not these displacements are to be referred in reality to the effect of gravitation. The results of observation have been collected together, and discussed in detail from the standpoint of the question which has been engaging our attention here, in a paper by E. Freundlich entitled “Zur Prüfung der allgemeinen Relativitäts-Theorie” (Die Naturwissenschaften, 1919, No. 35, p. 520: Julius Springer, Berlin).

At all events, a definite decision will be reached during the next few years. If the displacement of spectral lines towards the red by the gravitational potential does not exist, then the general theory of relativity will be untenable. On the other hand, if the cause of the displacement of spectral lines be definitely traced to the gravitational potential, then the study of this displacement will furnish us with important information as to the mass of the heavenly bodies. [A]

Footnotes

1) Especially since the next planet Venus has an orbit that is almost an exact circle, which makes it more difficult to locate the perihelion with precision.

[A] The displacement of spectral lines towards the red end of the spectrum was definitely established by Adams in 1924, by observations on the dense companion of Sirius, for which the effect is about thirty times greater than for the Sun. R.W.L. — translator

Text

Roger Penrose On Why Consciousness Does Not Compute