#realitykit

Text

Now I want the Vision Pro…

I started a little project for fun, which I hope to publish as a simple spatial app, and tried out the Simulator. Ahh, who’s ready to grab one of these Apple Vision Pros from the USA for me? Thank you!

Trying the browser out at tfp.la

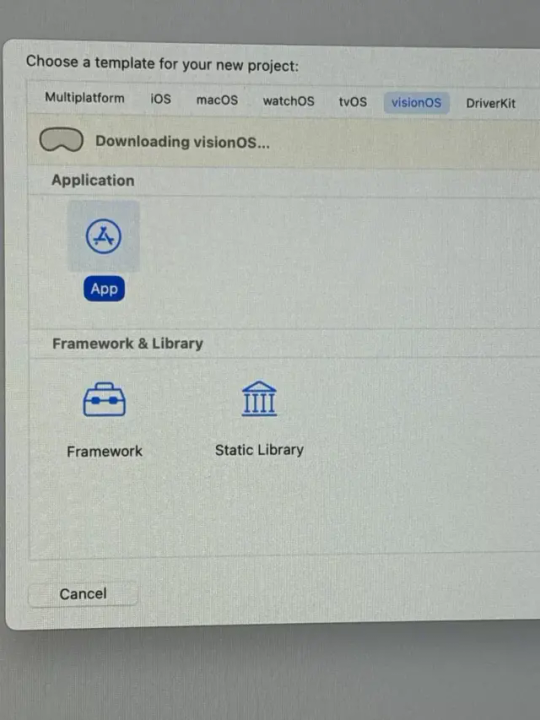

Downloading visionOS simulator for Xcode

Using Xcode with a brand-new visionOS project


Text

The Future of iOS Development: Skills Every Developer Should Learn

The landscape of iOS development is perpetually evolving, driven by technological advancements. As consumer demands keep changing, the need to hire iOS developers with a competent skill set is more important than ever.

Understanding these emerging skills is the key to building teams capable of taking advantage of opportunities and addressing challenges to deliver innovative and user-centric applications. In this article, let’s look at the essential skills that define the future of iOS development.

Charting the Path: Key Skills for Tomorrow's iOS Developers

Understanding of SwiftUI

SwiftUI is transforming the way iOS apps are designed and produced by providing a more uniform foundation for UI development across all Apple platforms. Developers who master SwiftUI are capable of constructing more adaptive, responsive, and user-friendly interfaces.

Swift programming

Swift proficiency is a non-negotiable skill. Swift's frequent updates and enhancements require developers to stay up to speed on the latest versions and features to create efficient, scalable projects.

Augmented reality

ARKit and RealityKit have opened up new possibilities for immersive app experiences. The iOS developers you hire must be proficient in using these tools to create new features and applications in a variety of industries, including gaming and retail.

Machine learning and AI

With Core ML and Create ML, Apple makes it easy for iOS developers to incorporate machine learning into their products. Understanding these frameworks, as well as AI and machine learning principles, is critical for delivering smarter and more personalized user experiences.

Familiarity with cloud integration

Cloud services such as iCloud are critical to delivering a consistent user experience across devices. Developers who want to enhance app functionality and data accessibility must understand cloud integration strategies and best practices.

As the demand for professionals with advanced skills is soaring, salary expectations are also rising. Therefore, you must conduct an iOS developer salary survey to get valuable insights related to the current salary trends. Utilizing tools like the Uplers salary analysis tool will help you with this type of information so that you can attract and retain top iOS talent.

Concluding Thoughts

The future of iOS app development looks bright with endless opportunities for innovation and creativity. By focusing on the evaluation of these key skills when you hire iOS developers, you can be assured of getting valuable assets to stay ahead in the tech industry.


Text

How effective is Swift’s AR technology in developing immersive applications?

Introduction:

Since time immemorial, humans have been imaginative and have always envisioned new concepts, such as superheroes or aliens. However, the most recent obsession among innovators is immersive technology, i.e., integration of virtual content with the physical environment, with about 6.3 billion dollars in revenue generated in 2020. This is evident from the popularity of Nintendo’s Pokémon Go game and IKEA Place.

Escalated by the post-pandemic environment, rapid digitalization and increased reliance on smart devices have led businesses to look for ways to cater to people using immersive applications. Immersive applications improve processes by bringing digital elements for better visualization, increasing user experience that translates to profitability.

Immersive applications actualize distinct experiences by integrating the physical world with a simulated reality. These technologies allow humans to have new experiences by enhancing, extending, or creating a mixed reality.

Among the different types of immersive technology are Augmented Reality (AR), 360, Extended Reality (XR), Mixed Reality (MR), and Virtual Reality (VR). According to Statista’s forecast, the augmented and virtual reality market will reach 160 billion dollars by 2023. Consumers’ attraction to immersive applications is visible in this growing market size, which encourages developers to offer relevant solutions.

Developers can create AR applications for Apple products using various tools, frameworks, and languages including, but not limited to, Xcode, ARKit, RealityKit, Swift, and Apple's AR creation tools.

This article examines how effective Swift is for building AR-based immersive applications for business initiatives. Before that, it is useful to understand AR and its effect on immersive applications.

Augmented Reality Apps and Its Applications

AR works by overlaying a digital layer on top of the real world to enhance the user experience. This digital layer, or augmentation, consists of information or content such as videos, images, and 3D objects, providing a semi-immersive visual experience for user interaction.

AR has unlimited scope for innovation due to its non-restrictiveness of user vision, cost-effectiveness, and high engagement. Mobile AR users are expected to surge from 200 million in 2015 to 1.7 billion worldwide by 2024.

AR Application across industry verticals:

Apps use augmented reality to bring products to life by using 360-degree views either with headsets or through smartphone cameras.

Automotive Industry is using AR for designing, testing, and sale of vehicles saving much money and improving products simultaneously, e.g., Jaguar Land Rover, BMW Virtual Viewer, Hyundai AR Lens for Kona.

The real estate industry is being transformed by using AR technology and is bringing the advantage of viewing properties from people’s homes.

The tourism industry uses AR technology to provide users with amazing experiences of the various vacation locations, e.g., World Around Me, Viewranger, Smartify, AR City, Guideo, Buuuk.

AR provides simulation of work areas and models to help healthcare professionals familiarize themselves. This helps them to work on real patient bodies expertly.

Users can experience an immersive experience in retail and try things before making purchases using the plethora of AR apps for online shopping, including clothing, furniture, beauty products, and many more, e.g., Houzz, YouCam Makeup, GIPHY World, Augment.

AR also has wide applications in the education industry, bringing a new dimension to lessons and experiments, e.g., Mondly AR.

Educational, entertainment, and practical applications in real life, e.g., ARCube, AR-Watches, MeasureKit, Jigspace.

Many AR-powered navigation apps assist users by supplementing roadmaps with interactive features, e.g., Google Maps Live View on iOS.

Gaming is one of the most popular applications of AR providing entertainment, e.g., Angry Birds AR.

AR has provided significant benefits in the defense sector too by offering AR-simulations of machines allowing safe and easy assimilation of information regarding the work environment and equipment.

Swift and AR Technology

Apple has introduced a comprehensive set of tools and technologies for creating AR applications, as described below:

Swift is an open-source general-purpose compiled programming language developed by Apple for its app development.

Xcode is Apple's integrated development environment for building apps for iOS, macOS, and its other platforms. It has all the tools needed to develop an application, including a compiler, a text editor, and a build system, in one software package. Xcode also ships with Swift project templates for creating AR applications rapidly.

ARKit is a framework that allows developers to design augmented reality apps for iOS devices, such as the iPad and iPhone. It helps to devise AR experiences quickly using the camera, motion sensors, and processors of the iOS devices.

ARKit Features:

ARKit empowers the developers to construct AR features for apps regardless of their previous experience. It offers multiple features to benefit the users and developers alike.

Location Anchor: This allows the anchoring of AR creations to specific geographical locations and offers various angles for viewing.

Motion Capture: Tracks a person's body motion in real time using a single camera, so that it can drive AR content.

Enhanced Face Tracking: Amplifies the AR experience for users with a front-facing camera.

Scene Geometry: Builds a topological map of a space, with labels identifying the surfaces and objects in it.

People Occlusion: Lets AR content pass realistically behind and in front of people, and enables green-screen-style effects in almost any environment.

Depth API: Provides depth information that adds precision and more realistic occlusion to AR objects, increasing the user’s sense of immersion.

Instant AR: Allows AR objects to be placed in the real environment immediately.

Simultaneous Camera Usage: Uses the front and back cameras at the same time, combining face tracking and world tracking for new kinds of experiences.

Apple’s AR creation tools consist of Reality Converter and Reality Composer. Reality Converter offers the display, customization, and conversion of 3D models to USDZ so that they integrate effortlessly into Apple tools and AR-enabled devices. Reality Composer, in turn, facilitates the construction, assessment, refinement, and simulation of AR experiences using an intuitive interface.

The creation tool offers the following advantages:

Powerful built-in AR library to create any virtual object or use USDZ files to continue working on a previous project.

Incorporates dynamism into the AR using animations and audio for adding little details like movement, vibrations, and more.

The record-and-play feature captures camera and sensor data at the location an experience is being built for, so that it can be replayed later while iterating on the experience.

Delivers smooth transition between all Apple platforms and devices.

Supports export to USDZ, including all components authored in Reality Composer.

Benefits of Swift for creating AR applications:

Xcode, powered by Swift, plays a leading role in bringing AR to users with a short turnaround time. The following merits make Swift the favored choice for businesses.

1. Accelerated Development:

Swift needs comparatively little code thanks to its simple syntax. In comparison to Objective-C, it is easier to read and write. With built-in concurrency support and a smaller code base, coding is faster, which results in fewer problems and easier maintenance.

According to Apple Inc., Swift is up to 2.6 times faster than Objective-C and up to 8.4 times faster than Python 2.7. Swift is compiled with LLVM, a compiler framework that produces optimized native code, which further improves performance. Together with the concise syntax, these qualities contribute to the faster development of AR applications.

2. Scalability:

AR apps created with Swift are highly scalable, i.e., they can be extended with new features as and when needed, which helps future-proof them. Swift's readability and simple syntax also make it easy to onboard new developers to the team, which makes it a preferred choice.

3. Security:

Swift provides robust protection against crashes through its error handling and strong type system. Many mistakes are caught at compile time, so with this short feedback loop developers can promptly find and fix errors in the code. This reduces the time and effort lost to bug fixing.
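To make the error handling and typing described above concrete, here is a minimal sketch in plain Swift. The `ModelLoadError` type and the `validateModelName` and `describe` functions are hypothetical names invented for this illustration; they are not part of ARKit or RealityKit. The sketch assumes an app that only accepts `.usdz` and `.reality` files.

```swift
// Hypothetical error type for validating a 3D asset name (illustration only).
enum ModelLoadError: Error, Equatable {
    case emptyName
    case unsupportedFormat(String)
}

// Returns the file extension when the name refers to a supported model file.
// `throws` makes the failure paths part of the function's type, so callers
// cannot ignore them by accident.
func validateModelName(_ name: String) throws -> String {
    guard !name.isEmpty else { throw ModelLoadError.emptyName }
    let parts = name.split(separator: ".")
    // `parts.last` is an Optional, so the compiler forces the "no extension" case to be handled.
    guard parts.count > 1, let ext = parts.last.map(String.init) else {
        throw ModelLoadError.unsupportedFormat("")
    }
    guard ext == "usdz" || ext == "reality" else {
        throw ModelLoadError.unsupportedFormat(ext)
    }
    return ext
}

// Turns every outcome into a message; each error case is matched explicitly.
func describe(_ name: String) -> String {
    do {
        let ext = try validateModelName(name)
        return "ok: \(ext)"
    } catch ModelLoadError.emptyName {
        return "error: empty name"
    } catch ModelLoadError.unsupportedFormat(let ext) {
        return "error: unsupported format '\(ext)'"
    } catch {
        return "error: \(error)"
    }
}

print(describe("chair.usdz"))
print(describe("chair.obj"))
```

Because the failure cases are modelled as an enum, adding a new case later forces every exhaustive `switch` over it to be revisited at compile time, which is one way the type system shortens the feedback loop.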

4. Interoperability with Objective-C:

Swift is interoperable with Objective-C, so the two languages can be used side by side in the same project when extending or updating an AR app. As a result, new features can be added quickly, and the risks associated with porting existing code are avoided.

5. Memory Management:

With in-built memory management and tracking performed by Automatic Reference Counting (ARC), developers rarely need to manage memory manually. ARC frees objects as soon as they are no longer referenced, without the pauses of a garbage collector, although developers still need to avoid strong reference cycles.

ARC eliminates the need to manually track the retain count of every class instance, and Swift’s dynamic libraries help reduce the app size and memory footprint. For instance, Swift 5 introduced a stable Application Binary Interface (ABI), which lets apps use the Swift runtime built into the operating system instead of bundling it. This decreases the bundle size and increases version compatibility, yielding a much more stable application.
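The ARC behaviour described in this section can be sketched in a few lines of plain Swift. The `Node` and `DeinitLog` classes below are hypothetical stand-ins for a scene graph, invented for this illustration; they are not RealityKit's `Entity` API. The sketch assumes a parent that owns its children strongly while each child points back with a `weak` reference, which is the usual way to avoid a reference cycle.

```swift
// Records which nodes have been deallocated (illustration only).
final class DeinitLog {
    var names: [String] = []
}

// Hypothetical scene-graph node.
final class Node {
    let name: String
    let log: DeinitLog
    var children: [Node] = []   // strong: a parent keeps its children alive
    weak var parent: Node?      // weak: a child does not keep its parent alive

    init(name: String, log: DeinitLog) {
        self.name = name
        self.log = log
    }

    func add(_ child: Node) {
        child.parent = self
        children.append(child)
    }

    // Called by ARC when the last strong reference goes away.
    deinit { log.names.append(name) }
}

// Builds a small scene and lets it go out of scope; no manual cleanup is written.
func buildAndDropScene(log: DeinitLog) {
    let root = Node(name: "root", log: log)
    root.add(Node(name: "chair", log: log))
}

// Shows that a weak back-reference becomes nil once the parent is released.
func parentIsReleased(log: DeinitLog) -> Bool {
    let child = Node(name: "lamp", log: log)
    do {
        let parent = Node(name: "table", log: log)
        parent.add(child)
    }
    return child.parent == nil
}

let log = DeinitLog()
buildAndDropScene(log: log)
print(log.names.sorted())
```

If `parent` were declared as a strong reference instead, `root` and `chair` would keep each other alive and neither `deinit` would run, which is the kind of cycle developers still have to watch for under ARC.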

6. Cross-device support:

Using Swift in both the backend and front-end of AR application development speeds up the development process by enabling extensive code sharing and reuse. Shared code can run across Apple devices, including iPhone, iPad, Mac, Apple Watch, and Apple TV, and Swift also runs on Linux, which makes it usable for server-side components.

Final Thoughts:

Swift has tremendous potential to transform businesses by revolutionizing user lifestyles through engaging and riveting AR experiences. The above benefits highlight how Swift empowers developers to create stable, secure, and high-performance AR applications.

With the demonstrated success of various AR games, creative design solutions, and e-commerce apps, Swift is the first choice for any custom AR application development for Apple products.

If, like other businesses, you too are looking for custom AR application development, Mindfire Solutions can be your partner of choice. We have a team of highly skilled and certified software professionals who have developed many custom solutions for our global clients over the years.

Here are a few interesting projects we have done. Click here to know more:

Case study on device for medical compliance.

Case study on e-commerce site for freight.


Text

The Future of iOS App Development: Trends and Innovations

In the fast-paced world of technology, iOS app development is constantly evolving. With each passing year, Apple introduces new features, tools, and capabilities, challenging developers to stay up-to-date with the latest trends and innovations.

As we look ahead, it's crucial to anticipate the trends and innovations that are likely to define the future of iOS app development.

SwiftUI and Combine Frameworks: Apple's SwiftUI and Combine frameworks have been steadily gaining popularity among iOS developers. SwiftUI simplifies the UI development process, enabling developers to build interfaces using declarative syntax and a live preview feature. Combine, on the other hand, simplifies asynchronous programming by providing a reactive framework. These frameworks are likely to play a significant role in the future, making app development more efficient and reducing code complexity.

Augmented Reality (AR) and Virtual Reality (VR): ARKit and RealityKit have paved the way for immersive augmented reality experiences on iOS devices. As AR and VR technologies continue to advance, we can expect to see more innovative apps that merge the digital and physical worlds. These could include AR games, interactive shopping experiences, and educational applications.

Cross-Platform Development: Cross-platform development tools are gaining traction. Developers are looking for ways to write code once and deploy it across multiple platforms: SwiftUI shares UI code across Apple's platforms, while tools like Flutter target iOS, Android, and the web. This trend will likely continue to grow, making it easier for businesses to reach a broader audience.

Progressive Web Apps (PWAs): While native iOS app development is essential, Progressive Web Apps are gaining popularity. These web-based applications can offer a native-like experience without the need for installation from the App Store. PWAs can be a cost-effective way to reach a broader audience.

Conclusion: The future of iOS App development is brimming with exciting possibilities. As technology continues to evolve, developers must stay adaptable and embrace these trends and innovations.


Text

RT @zhuowei: DS emulation in Augmented Reality: Displays game as a holographic 3D model. - DS emulated with melonDS (iOS port from rileytestut's Delta) - 3D model extracted with scurest's amazing MelonRipper tool - rendered with iOS #RealityKit #AugmentedReality #AR https://t.co/1blhyamLbo https://t.co/WgN3bnyVy2

— Retrograde Wear Gaming (@RetroGradeWear) Jun 23, 2023

from Twitter https://twitter.com/RetroGradeWear


Photo

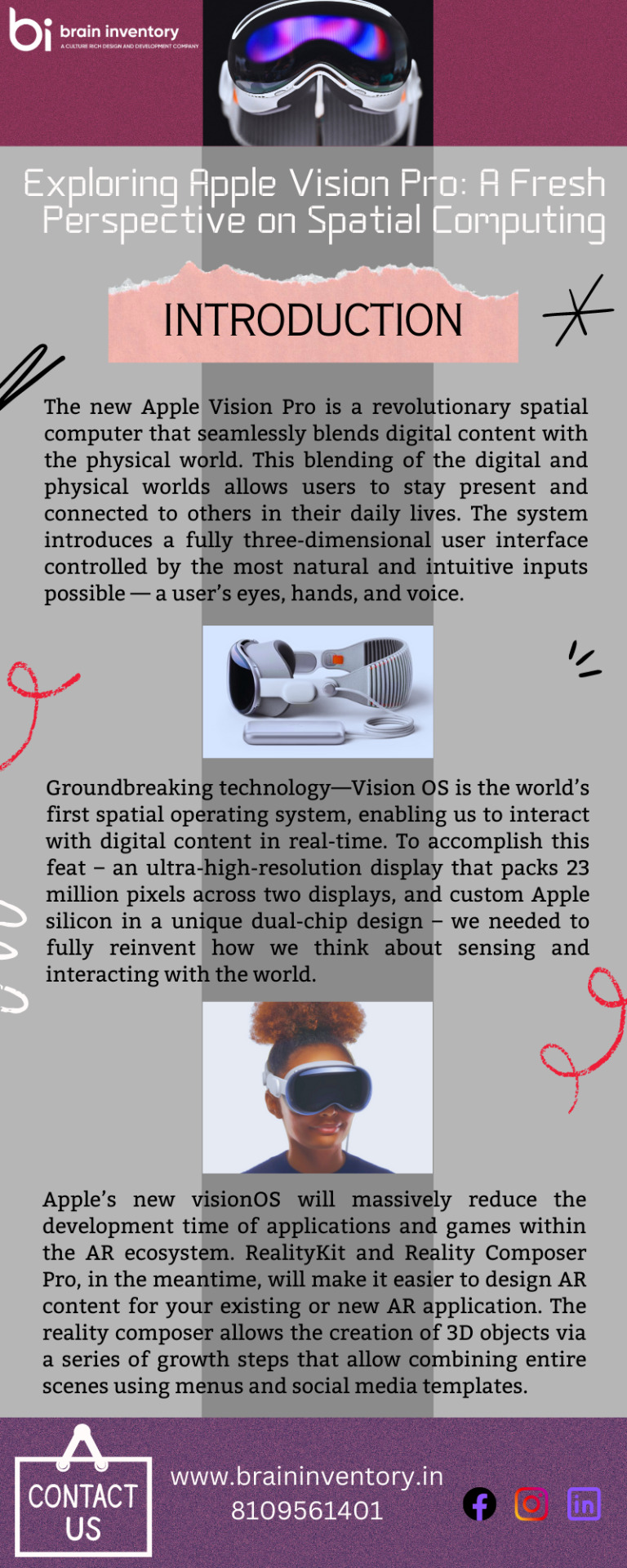

Apple’s new visionOS will massively reduce the development time of applications and games within the AR ecosystem. RealityKit and Reality Composer Pro, meanwhile, will make it easier to design AR content for your existing or new AR application. Reality Composer Pro lets developers assemble, edit, and preview 3D content, combining objects into entire scenes through a visual interface.

For More Information: https://braininventory.in/posts/exploring-apples-vision-pro-a-fresh-perspective-on-spatial-computing


Text

Exploring Apple Vision Pro: A Fresh Perspective on Spatial Computing

Quick Summary

The new Apple Vision Pro is a revolutionary spatial computer that seamlessly blends digital content with the physical world. This blending of the digital and physical worlds allows users to stay present and connected to others in their daily lives. The system introduces a fully three-dimensional user interface controlled by the most natural and intuitive inputs possible — a user’s eyes, hands, and voice.

Introducing Apple Vision Pro

Vision Pro runs visionOS, the world’s first spatial operating system, which lets users interact with digital content as if it were physically present in their space. The hardware features an ultra-high-resolution display system that packs 23 million pixels across two displays, and custom Apple silicon in a unique dual-chip design.

The Vision Pro is a headset with a bold design and big promises. It’s easy to see the headset, with its $3,499 price tag, showing up in high-end technology stores like Apple. With its premium design, the Vision Pro deserves that kind of reputation.

Apple’s new visionOS will massively reduce the development time of applications and games within the AR ecosystem. RealityKit and Reality Composer Pro, meanwhile, will make it easier to design AR content for your existing or new AR application. Reality Composer Pro lets developers assemble, edit, and preview 3D content, combining objects into entire scenes through a visual interface. Vision Pro builds on existing Apple technology: one example shown was voice recognition triggering the execution of code, and another is full support for Apple’s SwiftUI and Xcode.

The enclosure combines a single piece of three-dimensionally formed laminated glass with a custom aluminum alloy frame, and is held in place by a flexible, 3D-knitted Head Band. The Light Seal and Head Band come in a range of sizes to fit different users. An array of 12 cameras, five sensors, and six microphones tracks the user's head, hands, and surroundings.

Extraordinary New Experiences

Apple Vision Pro is a stunning technology that brings elements of augmented reality, 3D, advanced gesture controls, and a powerful new interface to personal computing.

An infinite canvas for apps at work and at home: visionOS features a three-dimensional interface that frees apps from the boundaries of a display so they can appear side by side at any size. Apple says that this makes it easier to multitask and use more apps simultaneously. The system has been designed with professionals in mind and, as such, supports Magic Keyboard and Magic Trackpad and can bring a Mac's screen into the headset wirelessly.

Engaging entertainment experiences: Apple Vision Pro is the most advanced entertainment system Apple has ever created. It’s built to deliver an authentic and intensely immersive viewing experience, with two ultra-high-resolution displays (a combined 23 million pixels, more than a 4K TV for each eye) that can present a screen that feels 100 feet wide, along with advanced Spatial Audio. Users can watch movies and TV shows, or enjoy stunning three-dimensional movies. And Apple Immersive Video offers 180-degree high-resolution recordings with Spatial Audio, transporting users to entirely new places.

Immersive Environments: With Environments, a user’s world can grow beyond the dimensions of a physical room, with dynamic, beautiful landscapes that can help them focus or reduce clutter in busy spaces. Users can adjust how present or immersed they are in an environment.

Memories come alive: Featuring Apple’s first three-dimensional camera, Apple Vision Pro lets users capture spatial photos and videos and relive them with Spatial Audio. Users can also browse their existing iCloud photo and video library at a life-size scale.

FaceTime becomes spatial: With Apple Vision Pro, FaceTime calls take advantage of the room around the user. Everyone on the call is shown in a life-size tile and heard through Spatial Audio, so that voices seem to come from where each participant is positioned.

A Revolutionary Operating System and User Interface

visionOS is a new operating system built from the ground up for spatial computing. It delivers powerful spatial experiences that can take advantage of the space around the user, unleashing new opportunities at work and at home. Apple Vision Pro also features EyeSight, an innovation that helps users stay connected with those around them. When a person approaches someone wearing Vision Pro, the device feels transparent, letting the user see them while also displaying the user’s eyes, providing a reassuring and welcoming experience. When a user is immersed in an environment or using an app, EyeSight gives visual cues to others about what the user is focused on.

What will Apple Vision Pro Bring to App Development?

Apple Vision Pro is the new spatial computing platform that allows Apple to market its augmented reality glasses. The new platform is poised to change the way software developers, software testers, and cybersecurity experts create applications.

For App Developers: visionOS builds on frameworks that iOS developers already know, including SwiftUI, RealityKit, and ARKit. Together with Xcode and Reality Composer Pro, these give developers and designers the tools to build spatial experiences and to bring existing AR work to the new platform.

For Cybersecurity Professionals: Vision Pro is a new mobile app that was recently released for iOS devices. It allows users to perform eye-based facial recognition scans using their device’s front camera. This type of authentication is often used by enthusiasts in the cybersecurity community for fun, but also by some enterprises to identify employees and gain access to secure areas. Personal use of this type of application has increased considerably since its introduction on Snapchat where it is used to prevent users from taking screenshots.

For Software Testers: Vision Pro was developed by Exploratory Testing Software. The software company provides the leading testing platform for QA teams to collaborate in real-time and produce more reliable apps. Vision Pro is changing the way developers and testers work together and is designed to improve the way quality assurance processes are conducted.

For UI/UX Designers: Vision Pro claims to be the world’s most advanced authoring tool for creating spatial user interfaces. It facilitates the creation of applications with features supporting all of the senses, providing a much more natural and intuitive way to interact with computers. The San Diego-based software company that builds Vision, called Immersion Corporation, thinks this new technology will change the way applications are developed in the future.

Apple Vision Pro: Pioneering a New Era of AR-VR Wearable Technology

Apple launched ARKit to bring augmented reality to a wide audience. Along with a number of patents, it has been suggested that the new technology will be incorporated into all of their new iPhone models. Some developers are already porting their apps to this exciting new development. There are many organizations that are researching how to make better use of this technology for building engaging experiences and systems. Whatever your vision, or necessity, at Brain Inventory we’re experienced at leveraging our Virtual and Augmented Reality expertise to elevate the capabilities of your digital solutions.


Text

Upgrade Your Mobile Game with the Newest iPhone Model

The iPhone is one of the most popular mobile devices in the world, and for good reason. With its powerful hardware and intuitive software, it provides an excellent platform for mobile gaming. However, with each new release of the iPhone, the hardware and software capabilities continue to evolve and improve, providing even more opportunities for game developers to create immersive and engaging experiences for players.

The latest iPhone model, the iPhone 13, is no exception. It comes with a variety of new features and improvements that can help game developers take their mobile games to the next level. In this article, we will explore some of the key ways that developers can use the latest iPhone model to upgrade their mobile games.

Enhanced Graphics and Performance

One of the biggest improvements in the latest iPhone model is the increased performance and graphics capabilities. The new iPhone 13 is powered by the A15 Bionic chip, which provides a significant increase in performance compared to previous models. This makes it possible for developers to create games with more complex graphics and animations, as well as provide smoother and more responsive gameplay.

Additionally, the iPhone 13 also features a new and improved graphics processing unit (GPU) that provides even better performance and graphics quality. This can help developers create games with even more detail and realism, making for a more immersive experience for players.

Improved Display and Camera Technology

Another key feature of the iPhone 13 is its improved display and camera technology. The latest model features a 6.1-inch Super Retina XDR display, which provides incredibly vivid and lifelike images. This makes it possible for developers to create games with high-quality graphics that take full advantage of the iPhone's display capabilities.

In addition, the new camera technology in the iPhone 13 can be used to enhance the gaming experience. For example, developers could create games that make use of the front-facing TrueDepth camera, the hardware behind Face ID, to create a more personalized and immersive experience for players. They could also take advantage of the device's advanced camera capabilities to create games that are based on real-world images and environments, such as augmented reality games.

Improved Battery Life

Another major improvement in the latest iPhone model is its longer battery life. The iPhone 13 features a larger battery than previous models, which provides even more power for longer gaming sessions. This can help developers create games that are designed for longer play sessions, without worrying about the device running out of power.

In addition, the iPhone 13 also features improved battery management capabilities, which help to conserve battery power and extend the device's battery life even further. This can be especially important for games that require long periods of play, such as strategy games or RPGs.

Improved Sound Quality

The latest iPhone model also features improved sound quality, which can be used to enhance the gaming experience. The new device features improved speakers and microphone technology, which provide clearer and more lifelike sound. This can be used by developers to create games with more immersive sound effects and music, as well as to support voice-controlled gameplay.

In addition, the iPhone 13 also supports Dolby Atmos audio, which provides an even more immersive and lifelike audio experience. This can be used by developers to create games with 3D sound effects, which can help to create a more engaging and immersive gaming experience for players.

ARKit and RealityKit

The latest iPhone model also supports a variety of technologies that can be used to enhance the gaming experience. For example, ARKit and RealityKit are two frameworks that provide a range of tools and technologies for developing augmented reality (AR) and mixed reality (MR) apps and games. These technologies can be used to create games that blend real-world environments and images with virtual content.


Text

Apple's mixed reality headset could be called the "Reality Pro": what to expect and other details

If there is one Apple product that everyone has been waiting for even more than the new pill-shaped iPhone and the new Mac Pro, it is the long-rumoured mixed reality headset, which has been doing the rounds of the internet for a while now. It may be some time before the headset becomes a reality, but Apple reportedly already has a name for its next big product.

As spotted by Bloomberg, trademark filings suggest Apple is going with the "Reality" branding for its upcoming mixed reality headset.

Trademarks for "Reality One," "Reality Pro", and "Reality Processor" have been filed with the US Patent and Trademark Office. Applications for the three trademarks have also been filed in the EU, UK, Canada, Australia, New Zealand, Saudi Arabia, Costa Rica and Uruguay.

This wouldn't be the first time the "Reality" branding has been associated with Apple's much-anticipated mixed reality headset. Recently, the name "realityOS," referring to the headset's operating system, appeared in a code snippet and a trademark filing. Further, back in 2019, Apple introduced a framework for developers, "RealityKit," for creating augmented reality experiences.

The three trademarks were filed by Immersive Health Solutions LLC, a rumoured shell company of Apple, incorporated in February this year. Interestingly, the firm was registered by another company, The Corporation Trust Co., the very firm that filed the trademark for realityOS.

Apple's blended reality biological system could be a 'reality' soonIf one were to conjecture, the "Truth Processor," could be the name for the chip controlling the headset. In the interim, "Reality One" and "Reality Pro" appear to be names saved for the headset. With numerous names being reserved, Apple could deliver a scope of blended reality headsets later on, as once rumoured.The "Reality Processor," or the chip driving the headset, is supposed to be a modified M2 chip helped by a coprocessor to deal with AR/VR processing.The Cupertino goliath has been reputed to be chipping away at various AR/VR headsets - one being a top of the line headset, codenamed N301, expected to be the main headset to come to the market.

Meanwhile, two other headsets are in development, internally referred to as N602 and N421; however, these two are not expected to debut for the next couple of years.

The "Pro" and "One" names have been associated with Apple products for a long time. While the "Pro" moniker has been reserved for the high-end variants of the company's products, like the iPhone 12 Pro, MacBook Pro and AirPods Pro, "One" has been used for Apple's services bundle, Apple One.

The Mandalorian director Jon Favreau, along with other big Hollywood names, has reportedly been roped in by Apple to make AR/VR content for the headset.

Favreau's world of dinosaurs, Prehistoric Planet, could be one of the first limited series to appear on the headset.

Apple's first mixed reality headset won't come cheap, as rumours are rife that it may cost as much as $3,000 (~Rs 2,40,100). The headset may feature a pair of 4K OLED displays and 15 cameras, among other sensors, enabling support for eye tracking and hand gestures. If rumours are to be believed, we probably won't see the headset until next year.

0 notes

Text

What you'll learn

Flutter AR Apps Development

Create Augmented Reality Apps that Run on iPhones

Build AR Apps for your Business or Organisation

Build Simple, Interactive Mobile Applications with Augmented Reality Functions

Integrate and Program ARKit with Flutter SDK

Create your own Augmented Reality Apps

In this course you will learn how to make mobile augmented reality apps for iOS using the Flutter SDK, the Dart programming language and Apple's ARKit.

Augmented reality is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory.

Augmented reality (AR) is a technology that lets people superimpose digital content (images, sounds, text) over real-life scenes. AR got a lot of attention in 2016 when the game Pokémon Go made it possible to interact with Pokémon superimposed on the world via a smartphone screen. Augmented reality apps are software applications that merge digital visual content (and audio and other types) into the user's real-world environment. ... Some other popular examples of AR apps include AcrossAir, Google Sky Map, Layar, Lookator, SpotCrime and Pokémon Go.

We will use ARKit. ARKit combines device motion tracking, camera scene capture, advanced scene processing, and display conveniences to simplify the task of building an AR experience. You can create many kinds of AR experiences with these technologies using the front or rear camera of an iOS device. Build unparalleled augmented reality experiences for hundreds of millions of users on iOS and iPadOS, the biggest AR platforms in the world. With powerful frameworks like ARKit and RealityKit, and creative tools like Reality Composer and Reality Converter, it's never been easier to bring your ideas to life in AR.

ARKit introduces a brand-new Depth API, creating a new way to access the detailed depth information gathered by the LiDAR Scanner on iPhone 12 Pro, iPhone 12 Pro Max, and iPad Pro.

Location Anchoring leverages the higher-resolution data in Apple Maps to place AR experiences at a specific point in the world in your iPhone and iPad apps.

And face tracking is now supported on all devices with the Apple Neural Engine and a front-facing camera, so even more users can experience the joy of AR in photos and videos.

Who this course is for:

Basic Programming Knowledge

Basic Xcode IDE Knowledge

0 notes

Text

3D Scanning with Object Capture

by Ethan Saadia

🐨 Scanning a koala with just an iPhone! 📱

Object Capture is a new feature of RealityKit that lets you create accurate 3D models just by taking photos of an object.

I 3D scanned this koala by taking 64 photos of it from all angles with Apple's sample capture app on my iPhone. RealityKit uses technology called photogrammetry to stitch together the overlapping parts of each photo, combining depth and accelerometer data if available, into a 3D model that you can export to USDZ for use in games, animations, or augmented reality.
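For readers curious what the reconstruction step looks like in code, here is a minimal sketch using RealityKit's `PhotogrammetrySession`. It assumes a deployment target of macOS 12 or later and a folder of photos already copied from the phone; the paths and the function name `reconstructModel` are hypothetical, and this is not necessarily how Apple's sample app is structured.

```swift
import Foundation
import RealityKit

// Hypothetical paths: a folder of captured photos in, a USDZ model out.
let imagesFolder = URL(fileURLWithPath: "/tmp/KoalaPhotos", isDirectory: true)
let outputModel = URL(fileURLWithPath: "/tmp/koala.usdz")

// Runs photogrammetry over the photo folder and writes a USDZ file.
func reconstructModel() async throws {
    let session = try PhotogrammetrySession(input: imagesFolder)

    // Ask for a model file; `.reduced` is a lower level of detail that keeps the file small.
    try session.process(requests: [.modelFile(url: outputModel, detail: .reduced)])

    // The session reports progress and results as an async sequence.
    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, let fraction):
            print("Progress: \(Int(fraction * 100))%")
        case .requestComplete(_, .modelFile(let url)):
            print("Model written to \(url.path)")
        case .requestError(_, let error):
            print("Reconstruction failed: \(error)")
        case .processingComplete:
            return
        default:
            break
        }
    }
}
```

Calling `try await reconstructModel()` from an async context starts the job. Reconstruction can take several minutes depending on the number of photos and the detail level requested.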

Integrating 3D scanning into a social platform like Tumblr would make it easy to create and share immersive content with the world and view it right in front of you with AR Quick Look. From delicious food to unboxing reveals to travel scenes, the possibilities of Object Capture are endless!

My AR apps for learning circuits with Raspberry Pi and shopping at the Apple Store both require 3D models of real objects, so Object Capture will be a huge help to the development process. View my augmented reality work to learn more here!

#WWDC#wwdc21#wwdc 2021#ar#augmented reality#realitykit#arkit#3d scanning#3d printing#apple#ios#3D#3d modeling#iOS 15#xr#vr#virtual reality#USDZ

2K notes

Photo

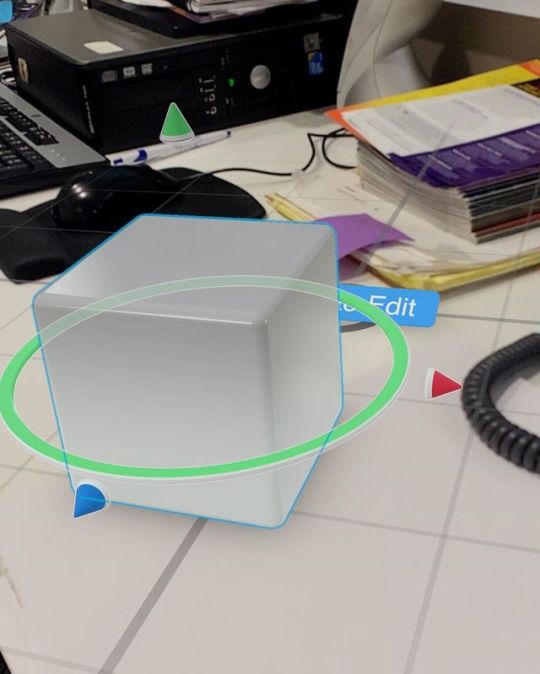

Introducing Reality Composer: it's basically Unity for your iPhone and I'm here for it 🥰😛 (at MetroTech Center) https://www.instagram.com/p/B0ZKyoIgmDL/?igshid=1o0g01ole45v5

#360view#ar#photography#art#augmentedreality#gaming#gamer#vr#photooftheday#technology#love#startup#mixedreality#360photo#games#360camera#360photography#innovation#oculus#virtualreality#tech#littleplanet#future#htcvive#photosphere#3d#game#design#360#travel

3 notes

Photo

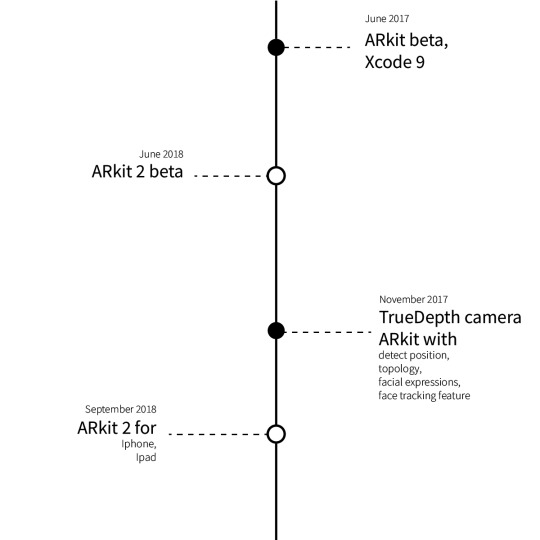

𝐇𝐨𝐰 𝐟𝐚𝐫 𝐡𝐚𝐬 𝐀𝐩𝐩𝐥𝐞 𝐠𝐨𝐧𝐞 𝐰𝐢𝐭𝐡 𝐀𝐑𝐊𝐢𝐭?

𝗝𝘂𝗻𝗲 𝟮𝟬𝟭𝟳: Apple released the beta of ARKit alongside Xcode 9 and iOS 11 betas.

𝗦𝗲𝗽𝘁𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟭𝟳: Apple announced the iPhone X with a TrueDepth camera, allowing ARKit to detect the position, topology, and expressions of the user's face. Xcode 9 was also released to developers with the Face Tracking features included in ARKit.

𝗡𝗼𝘃𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟭𝟳: Apple released the iPhone X to the public, letting developers take advantage of the face tracking features of the TrueDepth camera system.

𝗝𝘂𝗻𝗲 𝟮𝟬𝟭𝟴: iOS 12 beta is released to developers at WWDC, bringing a beta version of ARKit 2.

𝗦𝗲𝗽𝘁𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟭𝟴: Apple releases iOS 12 to the public, bringing ARKit 2 to iPhones and iPads, allowing developers to release ARKit 2 apps.

𝗝𝘂𝗻𝗲 𝟮𝟬𝟭𝟵: iOS 13 beta is released to developers at WWDC, bringing a beta version of ARKit 3.

𝗦𝗲𝗽𝘁𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟭𝟵: iOS 13 is released to the public, bringing ARKit 3 to users through new third-party apps.

𝗝𝘂𝗻𝗲 𝟮𝟬𝟮𝟬: iOS 14 beta is released to developers at WWDC, bringing a beta version of ARKit 4, with LiDAR improvements for the recently released 2020 iPad Pro with LiDAR sensor.

𝗦𝗲𝗽𝘁𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟮𝟬: iOS 14 is released to the public, bringing apps that support ARKit 4 to the App Store.

𝗝𝘂𝗻𝗲 𝟮𝟬𝟮𝟭: Apple releases the iOS 15 beta with ARKit 5 and RealityKit 2, alongside the Xcode 13 beta for developing apps using these technologies.

Alongside RealityKit, Apple also offers SceneKit, which combines a high-performance rendering engine with a descriptive API to import, manipulate, and render 3D assets. With SceneKit, developers can create 3D games and add 3D content to apps using high-level scene descriptions. Adding animations, physics simulation, particle effects, and realistic physically based rendering is far less painful than it used to be.

0 notes

Text

What is the Future of iOS App Development?

SwiftUI

SwiftUI, a user interface toolkit that lets developers build apps declaratively, will be an essential UI trend. You tell SwiftUI how you want the user interface to look and behave, and it works out how to make that happen as the user interacts with it.

In an imperative UI, by contrast, a function is called when the user presses a button. Within that function, we read a value and display a label. We keep changing how the user interface looks and behaves based on what has just happened.

Imperative UI poses several difficulties, particularly those related to the concept of state. Developers must keep track of their code's current state and ensure that the UI accurately reflects that state.
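To make the contrast concrete, here is a minimal SwiftUI sketch of the declarative style. The names `CounterView` and `tapLabel(for:)` are hypothetical and chosen only for illustration. The view declares what the screen looks like for a given state, and SwiftUI redraws it whenever that state changes, so there is no separate code path that updates the label by hand.

```swift
import SwiftUI

// The label text is a pure function of the state (hypothetical helper).
func tapLabel(for count: Int) -> String {
    "Taps: \(count)"
}

struct CounterView: View {
    // Single source of truth; SwiftUI re-evaluates `body` whenever it changes.
    @State private var count = 0

    var body: some View {
        VStack(spacing: 12) {
            // The text is derived from `count`, never assigned imperatively.
            Text(tapLabel(for: count))
            // The button only mutates state; the UI update follows automatically.
            Button("Tap me") { count += 1 }
        }
        .padding()
    }
}
```

Because the button handler only touches `count`, the UI cannot drift out of sync with the state, which is the problem the imperative approach has to guard against manually.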

Reactive Programming and Combine

Reactive programming is essentially a paradigm for dealing with streams of events. Although this is not a new notion in software development, it has recently gained popularity.

Because event streams are streams of values, you can operate on them. Using the operators included with a reactive framework, developers can easily transform one stream into another. The iOS platform is no stranger to reactive programming, and a variety of libraries can be used for it.

Reactive design on iOS lets developers reason about how events work and interact with one another, and how this affects business logic. It essentially solves the nested closures problem that arises when processing asynchronous values over time. Combine provides a high-level abstraction that enforces a model based purely on data streams.
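As a small illustration of transforming one stream into another, here is a sketch of a Combine pipeline. The subject `searchText` and the array `received` are hypothetical names standing in for any event source and any consumer.

```swift
import Combine
import Foundation

// Hypothetical event source: text typed into a search field.
let searchText = PassthroughSubject<String, Never>()
var received: [String] = []

// Each operator turns the incoming stream into a new stream.
let subscription = searchText
    .map { $0.trimmingCharacters(in: .whitespaces) } // normalise input
    .filter { $0.count >= 3 }                        // ignore very short queries
    .removeDuplicates()                              // skip consecutive repeats
    .sink { received.append($0) }                    // consume the final stream

searchText.send("ar")         // dropped: too short
searchText.send("arkit ")     // delivered as "arkit"
searchText.send("arkit")      // dropped: same as the previous value
searchText.send("realitykit") // delivered

// `received` is now ["arkit", "realitykit"]
```

The chain replaces what would otherwise be nested callbacks with a flat list of steps, and the `subscription` value must be kept alive for as long as events should be delivered.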

Mac Catalyst

Many developers will use Mac Catalyst to create native Mac apps from their iPad apps. The apps can share the same project and source code thanks to Mac Catalyst. That's why it's now easier than ever to bring an iPad app's desktop-class features to the Mac.

You can create a native Mac app by checking the "Mac" checkbox in the project settings of an iPad app and then extending it with new features. Both apps will use the same project and source code, allowing you to make changes in one place.
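When both apps share one codebase, the occasional platform-specific behaviour can be isolated with a compile-time check. Below is a minimal sketch; the function name `currentPlatform` and the returned strings are hypothetical.

```swift
// Returns a label for the platform this build targets (hypothetical helper).
func currentPlatform() -> String {
    // Check Catalyst first: a Mac Catalyst build also satisfies `os(iOS)`.
    #if targetEnvironment(macCatalyst)
    return "Mac (Catalyst)"
    #elseif os(iOS)
    return "iPhone or iPad"
    #else
    return "Other"
    #endif
}

print("Running on: \(currentPlatform())")
```

Only the matching branch is compiled into each build, so Mac-only additions do not end up in the iPad binary.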

Privacy and security

Security protocol advancements will continue to be a prominent iOS development theme. Apple is well known for its sophisticated security layers and stringent procedures that prevent unauthorized access to its devices.

Apple aims to provide a secure environment for its app users, focusing on privacy and security concerns. The goal is to improve overall security by preventing cyber-attacks and data breaches. Given the recent revelations of data breaches and hacking, this makes a lot of sense.

Apple HomeKit

Apple HomeKit will be a significant trend in iOS app development.

Developers can use the framework to set up accessories and build actions to control them. HomeKit allows apps to operate and coordinate home automation accessories from various manufacturers, all while maintaining a consistent user interface.

Developers can use HomeKit to create apps that identify and add HomeKit-compatible automation accessories to the cross-device home configuration database. The data in the home configuration database can also be seen and edited. Furthermore, HomeKit allows apps to quickly communicate with connected accessories and services, allowing them to do tasks such as turning on lights.

Virtual and Augmented Reality

Another significant trend in iOS development is augmented reality (AR). RealityKit, a framework that makes developing AR apps easier, will also benefit developers. ARKit can also track up to three faces at once and supports collaborative sessions. These capabilities make it simple for developers to incorporate human movement into their products.

Here are a few more highlights:

Simultaneous front and back cameras - you can use the front and back cameras for face and world tracking at the same time.

Multiple face tracking - using the TrueDepth camera, ARKit Face Tracking can track up to three faces at once.

Collaborative sessions - this feature lets multiple people build a shared world map more quickly. This is how you create AR experiences that can be shared.
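The three highlights above map onto a few ARKit configuration properties. The sketch below shows one way to switch them on; the function names `makeWorldConfiguration` and `makeFaceConfiguration` are hypothetical, and the code assumes iOS 13 or later on a device that supports the features.

```swift
import ARKit

// World tracking with the front camera tracking the user's face as well,
// plus collaboration data for shared sessions.
func makeWorldConfiguration() -> ARWorldTrackingConfiguration {
    let config = ARWorldTrackingConfiguration()
    // Simultaneous front and back cameras: only enable where supported.
    if ARWorldTrackingConfiguration.supportsUserFaceTracking {
        config.userFaceTrackingEnabled = true
    }
    // Collaborative sessions: ARKit emits data to share with other participants.
    config.isCollaborationEnabled = true
    return config
}

// Face tracking for up to three faces, capped by what the device supports.
func makeFaceConfiguration() -> ARFaceTrackingConfiguration {
    let config = ARFaceTrackingConfiguration()
    let supported = ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces
    if supported > 1 {
        config.maximumNumberOfTrackedFaces = min(3, supported)
    }
    return config
}
```

Either configuration is then passed to `ARSession.run(_:)`. For collaboration, the app is still responsible for sending the session's collaboration data to the other devices, for example over a local network connection.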

Flutter for iOS app development

Flutter combines the benefits of a native framework with the portability of a cross-platform framework to create a brand-new, more productive way of mobile app development.

Flutter was previously only accessible for Google products like Android and Chrome. When Flutter became a cross-platform framework, though, everything changed. More iOS developers will use Flutter as the technology evolves. The development of Flutter apps is becoming increasingly popular.

0 notes