#pion decay

Note

so I just watched BobbyBroccoli's video on Victor Ninov, and the whole "element hunt" has me wondering: what factors dictate whether an atom—when introduced to another atom with considerable force—either splits or fuses?

Oh hell yeah, this is a meaty one

The short answer to what determines whether a bound nucleus is formed and a new element is created is the total binding energy. It takes energy to remove a proton or neutron from a nucleus, and that energy is a nuclear potential energy similar to the potentials you encounter in other parts of physics. The nuclear force is not a fundamental force, but is instead regarded as a composite, residual force left over from the strong interaction between the quarks inside each nucleon (you can look into pion exchange and the quark sea for more on that, but in essence we can describe the total forces acting on a particle in the nucleus quite well without considering the complex individual processes that create it).

This nuclear binding potential is referred to as a “potential well”, because it’s a massive negative potential energy (which gives rise to an attractive force) that’s mostly consistent within the nucleus holding neutrons and protons together. Outside the nucleus, the positive charge of the protons within creates a strong electrostatic field, which strongly repels protons and other nuclei, creating this general map of potential energy:

The vertical axis is total potential energy, the horizontal axis is distance from the center of the nucleus, V0 is the nuclear potential, VC is the electrostatic Coulomb potential, and Eα is illustrating an alpha decay which we’re not going into rn. Sourced from this paper
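To put a rough number on the height of that Coulomb barrier, here is a minimal back-of-the-envelope sketch. It assumes spherical nuclei with radius r0 * A^(1/3), r0 = 1.2 fm, and the usual shortcut e²/(4πε₀) ≈ 1.44 MeV·fm; none of those values come from the figure, and the function names are just illustrative.

```python
# Rough Coulomb barrier for two nuclei that are just touching.
# Assumptions (mine, not the post's): spherical nuclei with R = r0 * A^(1/3),
# r0 = 1.2 fm, and e^2 / (4*pi*eps0) = 1.44 MeV*fm.
COULOMB_MEV_FM = 1.44
R0_FM = 1.2


def nuclear_radius_fm(mass_number):
    """Radius of a nucleus in femtometres under the r0 * A^(1/3) rule."""
    return R0_FM * mass_number ** (1.0 / 3.0)


def coulomb_barrier_mev(z1, a1, z2, a2):
    """Electrostatic potential energy (MeV) when the two surfaces touch."""
    separation_fm = nuclear_radius_fm(a1) + nuclear_radius_fm(a2)
    return COULOMB_MEV_FM * z1 * z2 / separation_fm


# Calcium-48 projectile on a Uranium-238 target.
ca_on_u = coulomb_barrier_mev(20, 48, 92, 238)
# A lone proton on the same target, for comparison.
p_on_u = coulomb_barrier_mev(1, 1, 92, 238)
print(f"48Ca + 238U: about {ca_on_u:.0f} MeV")
print(f"p + 238U:    about {p_on_u:.0f} MeV")
```

Real fusion barriers differ from this touching-spheres estimate because nuclei are deformed and have fuzzy surfaces, so treat the output as an order-of-magnitude guide only.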

The goal of atom smashing to hunt for new elements is to cram as many protons into that central potential well as possible. Seems straightforward in theory - if you have enough energy to overcome the peak of the Coulomb barrier, you can just slam protons into the center, right?

There are a few key factors that make this dramatically more difficult to achieve in practice, especially with the superheavy transactinides that Ninov was claiming to have created. In order of importance:

You need a lot of neutrons. The attracting component of the nuclear force has a very short range, on the order of 1 fm, which means that one can say with fairly accurate results that each proton and neutron only interacts with its immediate neighbors. This means that beyond a certain size of nucleus, the contribution of an individual nucleon to the strength of the overall nuclear binding force felt by any other nucleon is fairly small.

However, if the added nucleon is a proton, the added repulsive electric charge extends through the whole nucleus, affecting every other proton and making the nucleus less stable. This causes several effects in heavy high-Z nuclei, including deformation of nucleus shape and instability towards fission, but most importantly it means that as an element becomes heavier it needs more and more neutrons to space out the protons and remain relatively stable. If an element is too far from this ideal balance, it will undergo beta decay and transmute as protons change into neutrons or vice versa.

For the superheavy transactinides, this ideal neutron balance lies above 1.5n:1p, which is a serious issue - light elements simply don’t have the neutrons to contribute, which means you will always wind up with beta-unstable neutron-deficient product isotopes. The most popular remedies are to use multiple neutron-rich medium-weight isotopes like Zinc-70 or Lead-208, or to use the abnormally neutron-rich (1.4n:1p) Calcium-48 as a projectile, fired at long-lived neutron-rich actinides like Uranium-238 (1.59n:1p) to produce new nuclei. But that reaction only has enough protons to get you to Copernicium (Z = 92 + 20 = 112), and to get beyond that you need heavier targets. Which leads to the next issue…
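The neutron-to-proton ratios quoted above are easy to check by hand, and a small sketch makes the bookkeeping explicit. The helper name is made up for illustration, and the "compound" entry assumes complete fusion with no neutrons boiled off yet.

```python
def neutron_proton_ratio(z, a):
    """Neutrons per proton for a nucleus with Z protons and mass number A."""
    return (a - z) / z


# (Z, A) pairs for the projectile, the target, and the fully fused product.
calcium_48 = (20, 48)
uranium_238 = (92, 238)
compound = (calcium_48[0] + uranium_238[0], calcium_48[1] + uranium_238[1])

ratio_ca = neutron_proton_ratio(*calcium_48)
ratio_u = neutron_proton_ratio(*uranium_238)
ratio_compound = neutron_proton_ratio(*compound)

print(f"Ca-48:    {ratio_ca:.2f} n per p")
print(f"U-238:    {ratio_u:.2f} n per p")
print(f"Compound: Z = {compound[0]}, A = {compound[1]}, {ratio_compound:.2f} n per p")
```

The fused product lands between its two ingredients, so it can never be more neutron-rich than the target it started from.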

You need a sample to hit for a long time. We can get a little bit heavier than Uranium without too much difficulty - Plutonium and Curium especially have long half-lives and are readily available in target quantities from high-flux isotope production reactors, but as the numbers get higher, the difficulty of production begins sharply increasing and the half-lives of isotopes rapidly fall. Fermium (Z=100) has a half-life on the order of days, not years, and is prohibitively rare even when you have an isotope breeder on site that can make it.

If these high energy reactions were common, this wouldn’t be such a big problem. We only need one atom to be formed and observed, and even nanograms or picograms of target isotopes have billions and billions of atoms to transmute. But that’s the real problem - these heavy fusions are actually quite rare, and in the time it takes for one to occur, these heavy unstable target isotopes can undergo significant decay. Of course, even if you do form a fused nucleus, you have to deal with…
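For a feel of the numbers in that trade-off, here is a minimal sketch of how many atoms sit in a tiny target and how much of it survives a long run. The molar mass of 250 g/mol and the 30-day half-life are placeholder values chosen for illustration, not data for any specific isotope.

```python
AVOGADRO = 6.02214076e23  # atoms per mole


def atoms_in_sample(mass_grams, molar_mass_g_per_mol):
    """Number of atoms in a sample of the given mass."""
    return mass_grams / molar_mass_g_per_mol * AVOGADRO


def fraction_remaining(elapsed_days, half_life_days):
    """Fraction of the original atoms left after simple exponential decay."""
    return 0.5 ** (elapsed_days / half_life_days)


# Hypothetical heavy target: 1 nanogram, molar mass of about 250 g/mol.
atoms_per_nanogram = atoms_in_sample(1e-9, 250.0)
# Hypothetical 30-day half-life, bombarded for 100 days.
survivors = fraction_remaining(100, 30)

print(f"Atoms in 1 ng: {atoms_per_nanogram:.2e}")
print(f"Fraction left after 100 days: {survivors:.3f}")
```

Even a nanogram holds trillions of atoms, but with a half-life of weeks most of them are gone before a months-long run ends.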

Fused nuclei are inherently less stable than they should be. This may seem odd considering that in common experience, identical isotopes are indistinguishable - an atom of deuterium is the same as any other atom of deuterium. But this is only true when your nucleus is in the ground state, and these atoms certainly are not. In the instant of fusion, multiple things immediately cause significant instability. The impact leaves the new fused compound nucleus in a highly excited state, with many nucleons in high, excited energy levels.

This causes a “boil off” effect, where the chaotic random energy exchanges between nucleons push some out of the nuclear potential and into free space. The mathematical modeling of this is actually very close to the models of vapor evaporating from hot water, and the Fermi gas model of neutron evaporation is one of the best tools we have for analyzing these reactions.

And of course, it’s always neutrons that evaporate. The lighter mass, lack of Coulomb barrier, and mostly filled energy levels below the binding barrier mean that neutrons preferentially take up the majority of excitation energy and evaporate from the new nucleus, which leads to a neutron deficiency and thus even more instability.

This is part of why, even though these elements are in the island of stability, their measured half lives are so short - they are formed drastically lacking in neutrons, placing them far from peak stability, and their excited state leads to even more rapid decay than a ground state nucleus would exhibit. The high energy may also boil off or precipitate alpha particle emissions, reducing the mass of the compound atom further, and the neutron imbalance results in several beta decays in very fast succession.

Worse still, the geometry of the impact results in the compound nucleus having a terribly distorted, pancake-like state. This deformed state further reduces binding energy, further incentivizes decay, and frequently leads to the newly bound isotope undergoing immediate fission before its characteristics are observed. The impact angle of the projectile on the target nucleus plays a huge role in this process, and the actual point of impact is also highly important. In glancing blows within the fusion beam, strange high-energy exchanges occur, such as

⁴⁸Ca + ²³⁸U ➝ ⁸⁷Br* + ¹⁹⁷Ir + 2n (note no intermediate composite nucleus here!)
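A quick sanity check on a reaction like this one is to confirm that protons and total nucleons balance on both sides. The sketch below does that bookkeeping with (Z, A) pairs; the function name is illustrative.

```python
def totals(particles):
    """Sum proton number Z and mass number A over a list of (Z, A) pairs."""
    total_z = sum(z for z, _ in particles)
    total_a = sum(a for _, a in particles)
    return total_z, total_a


# 48Ca + 238U on the left.
reactants = [(20, 48), (92, 238)]
# 87Br + 197Ir + 2 neutrons on the right (a neutron is Z = 0, A = 1).
products = [(35, 87), (77, 197), (0, 1), (0, 1)]

print("Reactants (Z, A):", totals(reactants))
print("Products  (Z, A):", totals(products))
print("Balanced:", totals(reactants) == totals(products))
```

Both sides come to 112 protons and 286 nucleons, so the reaction as written conserves charge and nucleon number.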

Due to these tremendously unfavorable conditions, new elements at the end of the periodic table are produced only after sources undergo months or years of constant bombardment with high-energy ions, as the minuscule chance of individual interactions producing a surviving nucleus eventually pays off. The worst part is that none of these effects can really be avoided - colliding heavy nuclei in hot fusion results in better neutron density but requires massively higher energy and often breaks interacting nuclei into several smaller pieces, while direct neutron irradiation requires dozens of sequential captures in isotopes of decreasing cross section. Attempts to rein in the energy excesses have been somewhat effective, but results are limited by the inherent energy release from forming a new bound state.

Perhaps the most heartbreaking bit is that we're there - we've arrived on the lower shores of the Island Of Stability, the energies and half-lives telling us its shape and position, but also showing us how far off course our navigation is.

This is an image from the IAEA Chart Of Nuclides, a great source for data on isotopes, colored by half-life (blue is longest, red is shortest, black is stable). You can see a clear blue line of long-lived, stable isotopes carving through the center of the chart, curving slightly down as it proceeds to the right...and then it disappears. The end of the line of stability on the chart corresponds to Fermium, the last element produced by neutron irradiation rather than heavy isotope fusion. As the fusion era of the element hunt began, the actual isotopes discovered departed from that line of stability, drifting further and further into neutron-deficient territory, away from any stability that could theoretically be observed.

I don't know what the future holds for element hunting. Oganesson required months of continuous bombardment to produce three atoms. Element 119 (Eka-Francium) is too heavy to make with Calcium-48 because the required target, Einsteinium, is only available as isotopes with half-lives of weeks to months and has a predicted fusion cross section 100 times smaller than the Californium targets that produced the Oganesson atoms. Future isotope hunting should focus on creating more neutron-rich isotopes of existing elements to explore the limits of stability and refine nuclear models, but the glory simply isn't there to get such projects going.


Photo

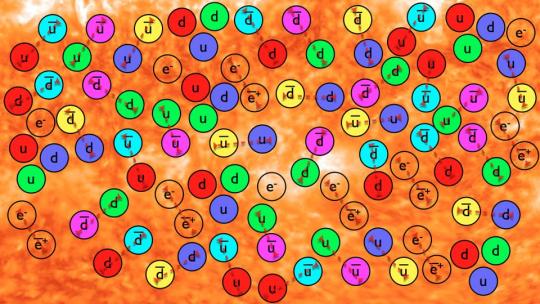

Teasing strange matter from the ordinary

In a unique analysis of experimental data, nuclear physicists have made the first-ever observations of how lambda particles, so-called “strange matter,” are produced by a specific process called semi-inclusive deep inelastic scattering (SIDIS). What’s more, these data hint that the building blocks of protons, quarks and gluons, are capable of marching through the atomic nucleus in pairs called diquarks, at least part of the time. These results come from an experiment conducted at the U.S. Department of Energy’s Thomas Jefferson National Accelerator Facility.

It’s a result that has been decades in the making. The dataset was originally collected in 2004. Lamiaa El Fassi, now an associate professor of physics at Mississippi State University and principal investigator of the work, first analyzed these data during her thesis project to earn her graduate degree on a different topic. Nearly a decade after completing her initial research with these data, El Fassi revisited the dataset and led her group through a careful analysis to yield these unprecedented measurements.

The dataset comes from experiments in Jefferson Lab’s Continuous Electron Beam Accelerator Facility (CEBAF), a DOE user facility. In the experiment, nuclear physicists tracked what happened when electrons from CEBAF scatter off the target nucleus and probe the confined quarks inside protons and neutrons. The results were recently published in Physical Review Letters.

“These studies help build a story, analogous to a motion picture, of how the struck quark turns into hadrons. In a new paper, we report first-ever observations of such a study for the lambda baryon in the forward and backward fragmentation regions,” El Fassi said.

In like a lambda, out like a pion

Like the more familiar protons and neutrons, each lambda is made up of three quarks.
Unlike protons and neutrons, which only contain a mixture of up and down quarks, lambdas contain one up quark, one down quark and one strange quark. Physicists have dubbed matter that contains strange quarks “strange matter.”

In this work, El Fassi and her colleagues studied how these particles of strange matter form from collisions of ordinary matter. To do so, they shot CEBAF’s electron beam at different targets, including carbon, iron, and lead. When a high-energy electron from CEBAF reaches one of these targets, it breaks apart a proton or neutron inside one of the target’s nuclei.

“Because the proton or neutron is totally broken apart, there is little doubt that the electron interacts with the quark inside,” El Fassi said.

After the electron interacts with a quark or quarks via an exchanged virtual photon, the “struck” quark(s) begins moving as a free particle in the medium, typically joining up with other quark(s) it encounters to form a new composite particle as they propagate through the nucleus. And some of the time, this composite particle will be a lambda.

But the lambda is short-lived – after formation, it will swiftly decay into two other particles: a pion and either a proton or neutron. To measure different properties of these briefly created lambda particles, physicists must detect its two daughter particles, as well as the beam electron that scattered off the target nucleus.

The experiment that collected this data, EG2, used the CEBAF Large Acceptance Spectrometer (CLAS) detector in Jefferson Lab’s Experimental Hall B. These recently published results, “First Measurement of Λ Electroproduction off Nuclei in the Current and Target Fragmentation Regions,” are part of the CLAS collaboration, which involves almost 200 physicists worldwide.

SIDIS

This work is the first to measure the lambda using this process, which is known as semi-inclusive deep inelastic scattering, in the forward and backward fragmentation regions.
It’s more difficult to use this method to study lambda particles, because the particle decays so quickly, it can’t be measured directly.

“This class of measurement has only been performed on protons before, and on lighter, more stable particles,” said coauthor William Brooks, professor of physics at Federico Santa María Technical University and co-spokesperson of the EG2 experiment.

The analysis was so challenging, it took several years for El Fassi and her group to re-analyze the data and extract these results. It was her thesis advisor, Kawtar Hafidi, who encouraged her to pursue the investigation of the lambda from these datasets.

“I would like to commend Lamiaa’s hard work and perseverance in dedicating years of her career working on this,” said Hafidi, associate laboratory director for physical sciences and engineering at Argonne National Lab and co-spokesperson of the EG2 experiment. “Without her, this work would not have seen fruition.”

“It hasn’t been easy,” El Fassi said. “It’s a long and time-consuming process, but it was worth the effort. When you spend so many years working on something, it feels good to see it published.”

El Fassi began this lambda analysis when she herself was a postdoc, a couple of years prior to becoming an assistant professor at Mississippi State University. Along the way, several of her own postdocs at Mississippi State have helped extract these results, including coauthor Taya Chetry.

“I’m very happy and motivated to see this work being published,” said Chetry, who is now a postdoctoral researcher at Florida International University.

Two for one

A notable finding from this intensive analysis changes the way physicists understand how lambdas form in the wake of particle collisions.
In similar studies that have used semi-inclusive deep inelastic scattering to study other particles, the particles of interest usually form after a single quark was “struck” by the virtual photon exchanged between the electron beam and the target nucleus. But the signal left by lambda in the CLAS detector suggests a more packaged deal.

The authors’ analysis showed that when forming a lambda, the virtual photon has been absorbed part of the time by a pair of quarks, known as a diquark, instead of just one. After being “struck,” this diquark went on to find a strange quark and formed a lambda.

“This quark pairing suggests a different mechanism of production and interaction than the case of the single quark interaction,” Hafidi said.

A better understanding of how different particles form helps physicists in their effort to decipher the strong interaction, the fundamental force that holds these quark-containing particles together. The dynamics of this interaction are very complicated, and so is the theory used to describe it: quantum chromodynamics (QCD).

Comparing measurements to models of QCD’s predictions allows physicists to test this theory. Because the diquark finding differs from the model’s current predictions, it suggests something about the model is off.

“There is an unknown ingredient that we don’t understand. This is extremely surprising, since the existing theory can describe essentially all other observations, but not this one,” Brooks said. “That means there is something new to learn, and at the moment, we have no clue what it could be.”

To find out, they’ll need even more measurements. Data for EG2 were collected with 5.014 GeV (billion electron-volt) electron beams in the CEBAF’s 6 GeV era.
Future experiments will use electron beams from the updated CEBAF, which now extend up to 11 GeV for Experimental Hall B, as well as an updated CLAS detector known as CLAS12, to continue studying the formation of a variety of particles, including lambdas, with higher-energy electrons.

The upcoming Electron-Ion Collider (EIC) at DOE’s Brookhaven National Laboratory will also provide a new opportunity to continue studying this strange matter and quark pairing structure of the nucleon with greater precision.

“These results lay the groundwork for upcoming studies at the upcoming CLAS12 and the planned EIC experiments, where one can investigate the diquark scattering in greater detail,” Chetry said.

El Fassi is also a co-spokesperson for CLAS12 measurements of quark propagation and hadron formation. When data from the new experiments is finally ready, physicists will compare it to QCD predictions to further refine this theory.

“Any new measurement that will give novel information toward understanding the dynamics of strong interactions is very important,” she said.

Image: Jefferson Lab's CEBAF Large Acceptance Spectrometer in Experimental Hall B. Credit: DOE's Jefferson Lab


Text

Cosmic Rays

Science time! This week: cosmic rays.

Cosmic rays are not a strong concern for people on Earth, but they are constantly moving throughout the universe at nearly the speed of light.

What They Are

Cosmic rays are made up of particles. An ordinary atom has a nucleus made up of protons and neutrons (subatomic particles), surrounded by negatively charged subatomic particles called electrons. Most cosmic-ray particles have had their electrons stripped away and so are just bare nuclei.

As far as we know, they can come from the sun of our solar system, black holes, and exploding stars (supernovae). Scientists found this from studying particles and their energy levels, which can be used to find out the force and origin associated with the particles’ accelerations and velocities. Finding their exact origins is difficult because particles are pulled around by magnetic fields throughout the universe, but the origins listed above are the most likely due to their immense ‘strength’, able to send particles traveling at nearly the speed of light.

Many of the particles are hydrogen nuclei: single protons, which have a positive charge. Cosmic rays have also been found to include nuclei of helium, uranium, and other elements, though these are far rarer.

What They Do

These particles are considered high-energy, meaning they can cause a strong impact when they collide with something, such as cells of the body. Because of Earth’s magnetosphere and atmosphere, the majority of cosmic rays do not interact with life here.

Colliding with particles of the Earth’s atmosphere causes the particles from outer space to lose a lot of their energy, as well as turn into secondary particles, like neutrinos. Neutrinos are produced every time the nuclei of atoms collide or break apart. They are neutral in charge and completely harmless because they almost never interact with other particles.

(Neutrinos have no charge, so the energy associated with them comes only from the process that formed them. More energy in the reaction creates more energy in the neutrino).

Studying cosmic rays has helped us gain a lot of information about the universe.

Before the 1950s, studying cosmic rays was the only way to study particles smaller than atoms. From these studies, scientists discovered smaller particles like muons, pions, and kaons.

Blazars are another example. A blazar is a galaxy powered by a black hole. As matter goes into the black hole, large amounts of energy are emitted. We are able to discover blazars specifically because the energy ‘beam’ is in the direction of Earth, so the particles end up making their way here.

In addition to learning from cosmic rays, humans have faced limitations because of them. Cosmic rays are an ionizing radiation - they damage DNA and living tissue. Cosmic rays as they are in outer space, again, do not reach Earth to a harmful extent, but astronauts are exposed to them.

This exposure causes risks of cancer, cataracts, eye diseases, and bone marrow dysfunction. Recent studies, mostly on mice, have also associated cosmic ray exposure with mental states like anxiety and emotional reactivity, and impaired neurotransmission.

Scientists are still working toward continuing crewed missions in space, to the Moon and, hopefully, Mars. The Artemis missions aim to do so, but crewed lunar missions have been on hold for a long time, ever since the Apollo missions.

Carbon-14 Dating

Another neat use of cosmic rays is in carbon-14 dating, to which they are essential.

Carbon dating is a type of absolute dating, meaning it provides exact years or periods of time for the creation and use of artifacts. It works on materials as old as about 60,000 years.

Carbon-14 dating relies on the decay of the carbon-14 isotope. Carbon-14 is radioactive. It has six protons, which makes it carbon, and eight neutrons, while the most common form of carbon, carbon-12, has six. It is produced when neutrons knocked loose by cosmic rays strike nitrogen atoms in Earth’s atmosphere; therefore, it would not exist in nature without cosmic rays.

Carbon-14 exists in living organisms, which keep taking it in while they are alive. When they die, the intake stops and the carbon-14 they contain gradually decays into nitrogen-14, with a half-life of about 5,730 years. Carbon-14 dating then measures how much carbon-14 remains in the organic material at the time of testing.

For example, carbon-14 dating was used to date the remains of Ötzi the Iceman, found frozen in the Alps. Carbon dating of the hunter and his clothing showed that he died about 5,300 years ago.
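The arithmetic behind a date like that can be sketched in a few lines. This is a simplified illustration that assumes a half-life of 5,730 years and plain exponential decay; real laboratories also apply calibration curves, which are not modeled here, and the function names are made up for the example.

```python
import math

HALF_LIFE_YEARS = 5730.0  # commonly quoted carbon-14 half-life (assumed here)


def fraction_remaining(age_years):
    """Fraction of the original carbon-14 still present after age_years."""
    return 0.5 ** (age_years / HALF_LIFE_YEARS)


def age_from_fraction(fraction):
    """Invert the decay law: years elapsed for a given remaining fraction."""
    return -HALF_LIFE_YEARS * math.log2(fraction)


# A sample about as old as the Iceman (roughly 5,300 years).
iceman_fraction = fraction_remaining(5300)
print(f"Carbon-14 left after 5,300 years: {iceman_fraction:.1%}")
print(f"Age recovered from that fraction: {age_from_fraction(iceman_fraction):.0f} years")
```

A sample of that age still holds a bit more than half of its original carbon-14, which is why the method is comfortable in this range and becomes difficult only after many half-lives.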

Radiocarbon dating, another name for carbon-14 dating, is extremely valuable to archaeology and to learning about the world around us. The existence of carbon-14, due to cosmic rays, has been essential to that learning.

Cosmic rays are an ever-present part of our world. They exist throughout the universe yet rarely directly affect us on Earth. While they have posed an issue for space travel, they have also provided invaluable information on the human past as well as the present universe.

Additional Resources

1. https://news.uchicago.edu/explainer/what-are-cosmic-rays#

2. https://news.wisc.edu/what-are-cosmic-rays-why-do-the-matter/

3. https://www.energy.gov/science/doe-explainsneutrinos#

4. https://news.wisc.edu/what-is-a-blazar/

5. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10044754/

6. https://news.uchicago.edu/explainer/what-is-carbon-14-dating#

#I was wrong here ya go#cosmic rays#cosmic radiation#particles#subatomic particles#space travel#article#research#outer space#cosmic#archaeology#radiocarbon#supernova#black hole#blazar


Text

First observation of photons-to-taus in proton–proton collisions by CMS


In March 2024, the CMS collaboration announced the observation of two photons creating two tau leptons in proton–proton collisions. It is the first time that this process has been seen in proton–proton collisions, which was made possible by using the precise tracking capabilities of the CMS detector. It is also the most precise measurement of the tau’s anomalous magnetic moment and offers a new way to constrain the existence of new physics.

The tau, sometimes called tauon, is a peculiar particle in the family of leptons. In general, leptons, together with quarks, make up the “matter” content of the Standard Model (SM). The tau was only discovered in the 1970s at SLAC, and its associated neutrino – the tau neutrino – completed the tangible matter part upon its discovery in 2000 by the DONUT collaboration at Fermilab. Precise research for the tau is rather tricky though, as its lifetime is very short: it remains stable for only 290·10⁻¹⁵ s (a hundred quadrillionth of a second).

The two other charged leptons, the electron and the muon, are rather well studied. A lot is also known about their magnetic moments and their associated anomalous magnetic moments. The former can be understood as the strength and orientation of an imaginary bar magnet inside a particle. This measurable quantity, however, needs corrections at the quantum level arising from virtual particles tugging at the magnetic moment, deviating it from the predicted value. The quantum correction, referred to as anomalous magnetic moment, is of the order of 0.1%. If the theoretical and experimental results disagree, then this anomalous magnetic moment, aℓ, opens doors to physics beyond the SM.
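The "order of 0.1%" figure can be reproduced with a short sketch. It uses only the leading-order QED term, a = α/(2π), which is the same for every charged lepton, together with the standard definition a = (g - 2)/2. Both are textbook results rather than anything specific to the CMS analysis, and the value of α below is approximate.

```python
import math

FINE_STRUCTURE_CONSTANT = 1 / 137.035999  # dimensionless, approximate


def leading_order_anomaly():
    """Leading-order QED estimate of the anomalous magnetic moment."""
    return FINE_STRUCTURE_CONSTANT / (2 * math.pi)


def g_factor(anomaly):
    """Convert an anomaly a = (g - 2) / 2 back into the g-factor."""
    return 2 * (1 + anomaly)


anomaly = leading_order_anomaly()
print(f"a (leading order) = {anomaly:.7f}")
print(f"as a percentage   = {anomaly:.3%}")
print(f"g                 = {g_factor(anomaly):.6f}")
```

Higher-order terms and non-QED contributions differ between the electron, muon and tau, and those small differences are where precise measurements become sensitive to new physics.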

The anomalous magnetic moment of the electron is one of the most precisely known quantities in particle physics and agrees perfectly with the SM. Its muonic counterpart, on the other hand, is one of the most investigated ones, into which research is ongoing. Although theory and experiments have mostly agreed so far, recent results give rise to a tension that requires further investigation.

For the tau, however, the race is still going. It is especially hard to measure its anomalous magnetic moment, aτ, due to the tau’s short lifetime. The first attempts to measure aτ after the tau’s discovery came with an uncertainty that was 30 times higher than the size of the quantum corrections. Experimental efforts at CERN with the LEP and LHC detectors improved the constraints, reducing the uncertainties to 20 times the size of the quantum corrections.

In collisions, researchers look for a special process: two photons interacting to produce two tau leptons, also called a di-tau pair, which then decay into muons, electrons, or charged pions, and neutrinos. So far both ATLAS and CMS have observed this in ultra-peripheral lead–lead collisions. Now, CMS reports on the first observation of the same process during proton–proton collisions. These collisions offer a higher sensitivity to physics beyond the SM as new physics effects increase with the collision energy. With the outstanding tracking capabilities of the CMS detector, the collaboration was able to isolate this specific process from others, by selecting events where the taus are produced without any other track within distances as small as 1 mm. “This remarkable achievement of detecting ultra-peripheral proton–proton collisions sets the stage for many groundbreaking measurements of this kind with the CMS experiment,” said Michael Pitt, from the CMS analysis team.

This new method offers a new way to constrain the tau anomalous magnetic moment, which the CMS collaboration tried out immediately. While the significance will be improved with future run data, their new measurement places the tightest constraints so far, with higher precision than ever before. It reduces the uncertainty from… https://home.web.cern.ch/news/news/experiments/first-observation-photons-taus-proton-proton-collisions-cms (Source of the original content)


Quote

The investigation of the two-particle source function in lead-lead collisions simulated by the EPOS model at a center of mass energy per nucleon pair of √s_NN = 2.76 TeV is presented. The two-particle source functions are reconstructed directly on an event-by-event basis for pions, kaons and protons separately, using the final stage of EPOS. A Lévy source shape is observed for all three particle species in the individual events, deviating significantly from a Gaussian shape. The source parameters are extracted as functions of collision centrality and pair average transverse mass (m_T). The Lévy exponent is found to be ordered according to particle mass. The Lévy scale parameter is found to scale for all particle species with m_T according to Gaussian hydrodynamic predictions; however, there is no m_T-scaling found across these species. In case of pions, the effects of the decay products and hadronic rescattering are also investigated. The Lévy exponent is decreased when decay products are also included in the analysis. Without hadronic rescattering and decay products, the source shape is close to a Gaussian.

Event-by-event investigation of the two-particle source function in √s_NN = 2.76 TeV PbPb collisions with EPOS - ScienceDirect


Text

New type of entanglement lets scientists see inside nuclei

This technique is similar to how positron emission tomography (PET) scans image the brain and other body parts, but it works at the scale of femtometers — quadrillionths of a meter. It will help scientists understand how gluons build up the structure of protons, neutrons, and the atoms that make up visible matter. The quantum interference measurement is between dissimilar particles that strike meters apart in the STAR detector. This discovery could lead to new ways to harness quantum entanglement. Almost all such attempts to date, including in the field of quantum computing, have explored entanglement between identical particles.

This research used the Relativistic Heavy Ion Collider (RHIC), a Department of Energy Office of Science user facility that accelerates and collides the nuclei of atoms such as gold. These speeding nuclei are surrounded by a cloud of polarized photons — particles of light. Through a series of quantum fluctuations, the photons surrounding one speeding ion can interact with the gluons in the other. By tracking the velocity and angles at which certain particles emerge from these interactions, the scientists can measure the photon polarization very precisely. This allows them to map out the distribution of gluons both along the polarization direction and perpendicular to it, resulting in a more precise gluon distribution than measured previously.

To make these measurements, the scientists tracked two pions — one with a positive charge, the other with a negative charge. Each is made up of the combined wavefunctions of particles emerging from a decay process that occurs inside each of the two nuclei passing at a “long” distance (for nuclei). Interference patterns between these particles’ wavefunctions indicated that the oppositely charged particles striking RHIC’s STAR detector are entangled, or in sync with one another. This first-ever experimental observation of interference between dissimilar particles makes it possible to measure the photon polarization — and may open new avenues for harnessing quantum entanglement.

This work was funded by the Department of Energy Office of Science, Nuclear Physics program, the U.S. National Science Foundation, and a range of international agencies listed in the published paper.


Text

Gamma rays are produced in many processes of particle physics. Typically, gamma rays are the products of neutral systems which decay through electromagnetic interactions (rather than a weak or strong interaction). For example, in an electron–positron annihilation, the usual products are two gamma ray photons. If the annihilating electron and positron are at rest, each of the resulting gamma rays has an energy of ~ 511 keV and frequency of ~ 1.24×10²⁰ Hz. Similarly, a neutral pion most often decays into two photons. Many other hadrons and massive bosons also decay electromagnetically. High energy physics experiments, such as the Large Hadron Collider, accordingly employ substantial radiation shielding. Because subatomic particles mostly have far shorter wavelengths than atomic nuclei, particle physics gamma rays are generally several orders of magnitude more energetic than nuclear decay gamma rays. Since gamma rays are at the top of the electromagnetic spectrum in terms of energy, all extremely high-energy photons are gamma rays; for example, a photon having the Planck energy would be a gamma ray.
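The energy and frequency figures above are linked by E = h·f, and a minimal sketch makes the conversion explicit. The rest energies used (about 0.511 MeV for the electron and about 134.98 MeV for the neutral pion) are standard approximate values supplied for the example, and the function name is illustrative.

```python
PLANCK_EV_S = 4.135667696e-15  # Planck constant in eV*s


def photon_frequency_hz(energy_ev):
    """Frequency of a photon with the given energy, from E = h * f."""
    return energy_ev / PLANCK_EV_S


# Annihilation at rest: each photon carries one electron rest energy.
ELECTRON_REST_ENERGY_EV = 510_998.95
annihilation_freq = photon_frequency_hz(ELECTRON_REST_ENERGY_EV)

# Neutral pion decaying at rest into two photons: each gets half the rest energy.
PION_REST_ENERGY_EV = 134.9768e6
pion_photon_energy_ev = PION_REST_ENERGY_EV / 2
pion_photon_freq = photon_frequency_hz(pion_photon_energy_ev)

print(f"Annihilation photon: {annihilation_freq:.3e} Hz")
print(f"Pion-decay photon:   {pion_photon_energy_ev / 1e6:.1f} MeV, {pion_photon_freq:.3e} Hz")
```

The pion-decay photons come out more than a hundred times more energetic than the annihilation photons, in line with the point that particle physics gamma rays sit well above nuclear ones.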

0 notes

Text

Improved model of X-ray emission from hot accretion flows. (arXiv:2205.10918v1 [astro-ph.HE])

We have developed an improved model of X-ray emission from optically thin, two-temperature accretion flows, kerrflow, using an exact Monte Carlo treatment of global Comptonization together with a fully general relativistic description of both the radiative and hydrodynamic processes. It also includes pion-decay electrons, whose synchrotron emission dominates the seed photon yield at high accretion rates in flows around supermassive black holes. We consider in detail the dependence of the model spectra on the black hole spin, the electron heating efficiency, the plasma magnetization and the accretion rate, and we discuss the feasibility of constraining these parameters by analyzing X-ray spectra of nearby low-luminosity active galactic nuclei. We note some degeneracies which hinder precise estimations of these parameters when individual X-ray spectra are analyzed. These degeneracies are eliminated when several spectra from a given source are fitted jointly, which then allows us to reliably measure the model parameters. We find significant differences with previous spectral models of hot-flow emission, related to the computational methods for Comptonization. Finally, we briefly consider and discuss the dependence on the viscosity parameter and on the outflow strength.

from astro-ph.HE updates on arXiv.org https://ift.tt/6sRz8pN

0 notes

Text

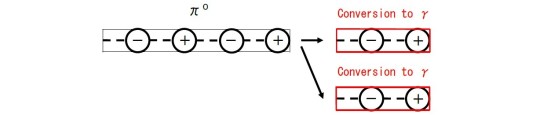

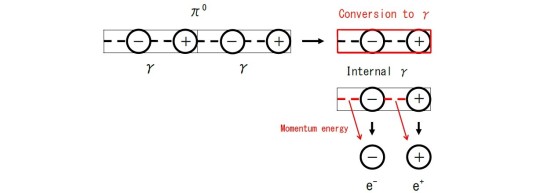

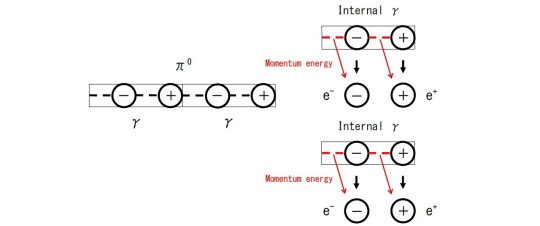

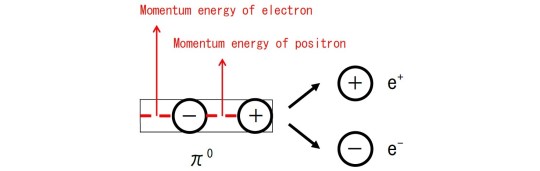

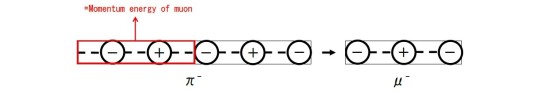

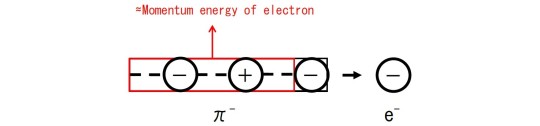

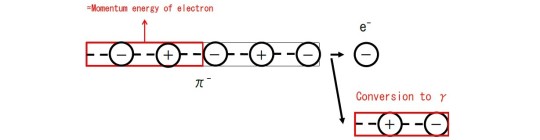

Pion decay

I ignore neutrinos here for conciseness.

The dominant decay mode of a neutral pion (π0) is into two light quanta (γ).

The second largest π0 decay mode is the Dalitz decay.

The third largest π0 decay mode is the double-Dalitz decay.

The fourth largest π0 decay mode is into an electron (e-)-positron (e+) pair.

The neutral pion has also been observed to decay into positronium.

The primary decay of a negative pion (π-) is a leptonic decay into a muon (μ-).

The second most common π- decay mode is a leptonic decay into an electron.

Beyond the purely leptonic decays of the pion, some radiative leptonic decays (that is, decays into the usual leptons and a light quantum) have also been observed.

The very rare "pion beta decay" is into a neutral pion and an electron.

The decay modes of a positive pion (π+) follow from those of a negative pion (π-) by replacing every particle with its antiparticle, for example electrons with positrons and muons with antimuons.
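Why the muon mode dominates over the electron mode can be illustrated with the standard tree-level estimate of the ratio of the two leptonic rates, R = (m_e/m_μ)² · ((m_π² − m_e²)/(m_π² − m_μ²))². The Python sketch below only evaluates that formula; it ignores radiative corrections, and the mass values are typed in by hand rather than taken from this post.

```python
# Tree-level ratio of the pi -> e nu and pi -> mu nu decay rates
# (helicity suppression); radiative corrections are ignored here.
M_PION = 139.57039        # charged pion mass in MeV
M_MUON = 105.6583755      # muon mass in MeV
M_ELECTRON = 0.51099895   # electron mass in MeV


def leptonic_ratio(m_light: float, m_heavy: float, m_parent: float) -> float:
    """Rate(parent -> light lepton) / Rate(parent -> heavy lepton) at tree level."""
    mass_factor = (m_light / m_heavy) ** 2
    phase_space = ((m_parent**2 - m_light**2) / (m_parent**2 - m_heavy**2)) ** 2
    return mass_factor * phase_space


ratio = leptonic_ratio(M_ELECTRON, M_MUON, M_PION)
print(f"electron/muon rate ratio: {ratio:.3e}")
```

The ratio comes out at roughly one part in ten thousand, which is why the electron mode is a distant second despite having more phase space available.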

1 note


Photo

Ask Ethan: Could An Unexplained Decay At The LHC Demolish The Standard Model?

“I want know more about the last announcement from the LHCb [collaboration] about CP Violating asymmetry in a charged B meson decay. What [does] this mean and/or this is a hint for new physics beyond the Standard Model??”

I hear your whining all the time. “The LHC is such a waste. They haven’t found anything other than the Higgs.” Well, maybe you’re not paying attention to the right things, then? The Standard Model is so successful because it makes exquisite predictions for how the composite particles we produce in accelerators ought to live and decay. Well, we have a series of particles, the mesons that contain bottom quarks, that clearly aren’t obeying the rules of the Standard Model. The most robust puzzle we have about this is known as the Kπ puzzle, since there’s a large and significant difference between the CP-asymmetry of neutral and charged B-mesons that decay to kaons and pions.

Could this be the hint of new physics beyond the Standard Model we’ve waited for for so long? Don’t count the LHC out yet, and keep looking for new anomalies!

51 notes


Photo

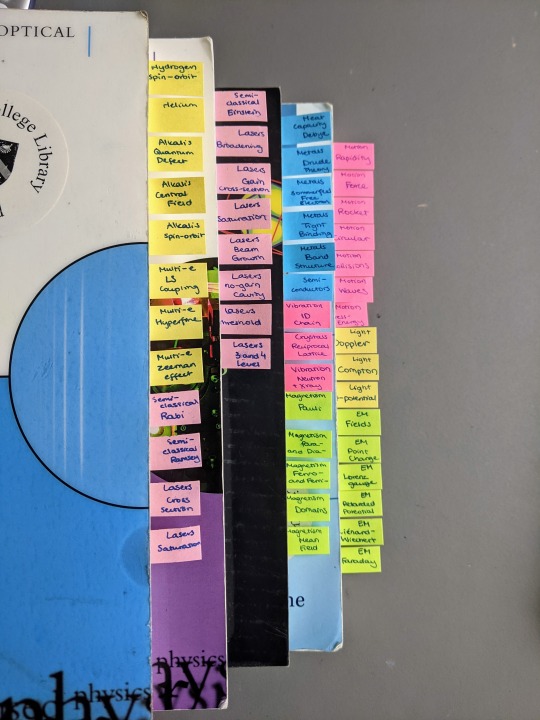

Yesterday morning the college welfare officers hosted snacks in the quad outside my window, so I had some fun and aggressively hinted at my college son about carnations for next Monday. The weather’s been greyer since then, though - my bike was wet when I left the diner last night, although I didn’t get caught in the rain, and I’ll wear a rain jacket when I cycle into central today to buy a vase. But on the plus side, I need a vase because my boyfriend has ordered me flowers for my exams (and I have ordered some for him) on top of the carnations I’ll hopefully get from my college son, so that’s exciting.

Yesterday I finished the questions I skipped on an atomic and laser physics exam on Wednesday (including finding a random Berkeley professor’s explanation of the Thomas-Fermi model of the atom, which I am certain we were never taught about). That means I’ve done all the 2020 exam papers! I also put bookmarks in my textbooks so if I do need to look something up in an exam I don’t spend forever searching the table of contents, and watched the nuclear and particle physics revision lectures and did a timed past exam on it. The plan for today is to correct that exam, and then ideally watch condensed matter revision lectures and do that past paper.

Photos: Feynman diagrams for pion decays I drew in yesterday’s nuclear and particle physics past exam, and stickynotes in my textbooks for atomic physics, laser physics, condensed matter, and special relativity

04/06/2021

22 notes


Text

A decay of a kaon

Quantum mechanics is wack.

Now, first off, a full disclosure: I am _not_ a quantum physicist. This is all based on reading some Wikipedia articles and other things and getting only a general idea of whatever the hell is going on here. I may have gotten things wrong, so do check the notes to see if anyone has provided a correction.

Begin with a positive kaon. We'll ignore how you managed to get it—let's say that you got it for your birthday or something. It's made of an up quark and an antistrange quark.

The antistrange quark decays into an antiup quark and a positive W boson, the latter of which decays into an antidown quark and an up quark. The antidown quark bonds with the up quark from the original kaon to form a positive pion. The other up quark emits a gluon, which splits into a down quark and an antidown quark: the antidown quark combines with the new up quark to form another positive pion, and the down quark combines with the antiup quark to form a negative pion.

We're only about halfway there.

In one of the positive pions, the up quark and the antidown quark annihilate each other into a positive W boson, which then decays into a positron and an electron neutrino. In the negative pion, the antiup quark and the down quark annihilate each other into a negative W boson, which decays into a muon and a muon antineutrino. The muon then decays into a muon neutrino and another negative W boson (somehow?) that then decays into an electron and an electron antineutrino.

In the other positive pion, the up quark decays into a positive W boson and a down quark. The boson decays into a positron and an electron neutrino, and the down quark bonds with the antidown quark to make a neutral pion. Weird. The down quark in the pion then decays into a gamma ray, and the antidown quark decays into an electron and a positron, forming positronium. The constituents of the positronium then annihilate themselves, forming two gamma rays.

Here's all of this as an image. (Yes, I know that this isn't a proper Feynman diagram, but I can't be arsed to make it one. It at least looks nice.)

Now, we have two positrons, an electron, two electron neutrinos, an electron antineutrino, three gamma rays, a muon neutrino, and a muon antineutrino. There's probably some more stuff that would happen after this, but other than another positronium forming, I can't find any information on what would happen, since it seems like if either neutrino-antineutrino pair collided, they would just decay into themselves again? I dunno.
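As a quick sanity check on that final tally, here's a minimal Python sketch that adds up electric charge and lepton numbers for the listed particles. The quantum-number table and the names are my own bookkeeping, not from any library. If the chain is consistent, the totals should match the starting positive kaon: charge +1, and zero net electron and muon number.

```python
from collections import namedtuple

# Per-particle quantum numbers: electric charge, electron number, muon number.
Particle = namedtuple("Particle", "charge electron_number muon_number")

PARTICLES = {
    "positron": Particle(+1, -1, 0),
    "electron": Particle(-1, +1, 0),
    "electron neutrino": Particle(0, +1, 0),
    "electron antineutrino": Particle(0, -1, 0),
    "gamma ray": Particle(0, 0, 0),
    "muon neutrino": Particle(0, 0, +1),
    "muon antineutrino": Particle(0, 0, -1),
}

# Final state as counted in the post.
FINAL_STATE = {
    "positron": 2,
    "electron": 1,
    "electron neutrino": 2,
    "electron antineutrino": 1,
    "gamma ray": 3,
    "muon neutrino": 1,
    "muon antineutrino": 1,
}


def totals(state):
    """Return (charge, electron number, muon number) summed over the state."""
    charge = sum(PARTICLES[name].charge * n for name, n in state.items())
    e_num = sum(PARTICLES[name].electron_number * n for name, n in state.items())
    mu_num = sum(PARTICLES[name].muon_number * n for name, n in state.items())
    return charge, e_num, mu_num


print(totals(FINAL_STATE))  # (1, 0, 0), same as a positive kaon
```

So whatever else is off in the story, the bookkeeping at least conserves charge and lepton number.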

Quantum mechanics is wack.

5 notes


Text

Searching for new asymmetry between matter and antimatter

Once a particle of matter, always a particle of matter. Or not. Thanks to a quirk of quantum physics, four known particles made up of two different quarks—such as the electrically neutral D meson composed of a charm quark and an up antiquark—can spontaneously oscillate into their antimatter partners and vice versa.

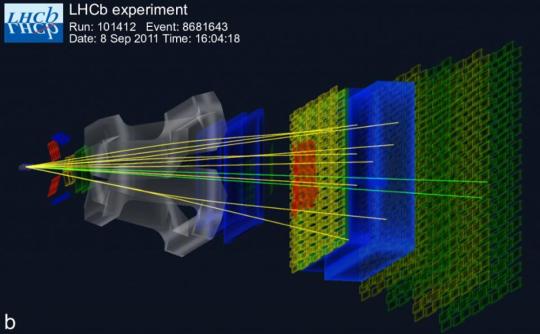

At a seminar held March 26 at CERN, the LHCb collaboration at the Large Hadron Collider (LHC) presented the results of its latest search for matter–antimatter asymmetry in the oscillation of the neutral D meson, which, if found, could help shed light on the mysterious matter–antimatter imbalance in the universe.

The weak force of the Standard Model of particle physics induces an asymmetry between matter and antimatter, known as CP violation, in particles containing quarks. However, these sources of CP violation are difficult to study and are insufficient to explain the matter–antimatter imbalance in the universe, leading physicists to both search for new sources and to study the known ones better than ever before.

In their latest endeavor, the LHCb researchers have rolled up their sleeves to measure with unprecedented precision a set of parameters that determine the matter–antimatter oscillation of the neutral D meson and enable the search for the hitherto unobserved but predicted CP violation in the oscillation.

The collaboration had previously measured the same set of parameters, which are linked to the decay of the neutral D meson into a positively charged kaon and a negatively charged pion, using its full data set from Run 1 of the LHC and a partial data set from Run 2.

This time around, the team analyzed the full Run-2 data set and, by combining the result with that of its previous analysis, excluding the partial Run-2 data set, it obtained the most precise measurements of the parameters to date—the overall measurement uncertainty is 1.6 times smaller than the smallest uncertainty achieved before by LHCb.

The results are consistent with previous studies, confirming the matter–antimatter oscillation of the neutral D meson and showing no evidence of CP violation in the oscillation. The findings call for future analyses of this and other decays of the neutral D meson using data from the third run of the LHC and its planned upgrade, the High-Luminosity LHC.

Other neutral D meson decays of interest include the decay into a pair of two kaons or two pions, in which LHCb researchers observed CP violation in particles containing charm quarks for the first time, and the decay into a neutral kaon and a pair of pions, with which LHCb clocked the speed of the particle's matter–antimatter oscillation. No avenue should be left unexplored in the search for clues to the matter–antimatter imbalance in the universe and other cosmic mysteries.

0 notes

Photo

Long-lived pionic helium: Exotic matter experimentally verified for the first time

Exotic atoms in which electrons are replaced by other subatomic particles of the same charge allow deep insights into the quantum world. After eight years of ongoing research, a group led by Masaki Hori, senior physicist at the Max Planck Institute of Quantum Optics in Garching, Germany, has now succeeded in a challenging experiment: In a helium atom, they replaced an electron with a pion in a specific quantum state and verified the existence of this long-lived "pionic helium" for the very first time. The usually short-lived pion could thereby exist 1000 times longer than it normally would in other varieties of matter. Pions belong to an important family of particles that determine the stability and decay of atomic nuclei. The pionic helium atom enables scientists to study pions in an extremely precise manner using laser spectroscopy. The research is published in this week's edition of Nature.

For eight years, the group worked on this challenging experiment, which has the potential to establish a new field of research. The team experimentally demonstrated for the first time that long-lived pionic helium atoms really exist. "It is a form of chemical reaction that happens automatically," explains Hori. The exotic atom was first theoretically predicted in 1964 after experiments at that time pointed toward its existence. However, it was considered extremely difficult to verify this prediction experimentally. Usually, in an atom, the extremely short-lived pion decays quickly. However, in pionic helium, it can be conserved in a sense so it lives 1000 times longer than it normally does in other atoms.

Read more.

50 notes


Text

First observation of photons-to-taus in proton–proton collisions by CMS


In March 2024, the CMS collaboration announced the observation of two photons creating two tau leptons in proton–proton collisions. It is the first time that this process has been seen in proton–proton collisions, which was made possible by using the precise tracking capabilities of the CMS detector. It is also the most precise measurement of the tau’s anomalous magnetic moment and offers a new way to constrain the existence of new physics.

The tau, sometimes called tauon, is a peculiar particle in the family of leptons. In general, leptons, together with quarks, make up the “matter” content of the Standard Model (SM). The tau was only discovered in the mid-1970s at SLAC, and its associated neutrino – the tau neutrino – completed the tangible matter part upon its discovery in 2000 by the DONUT collaboration at Fermilab. Precise research on the tau is rather tricky though, as its lifetime is very short: it survives for only 290·10⁻¹⁵ s (a few hundred quadrillionths of a second).
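To get a feel for what such a short lifetime means in a detector, the lifetime can be converted into a mean flight distance, βγcτ, with βγ = p/m. The Python sketch below is only an illustration: the tau mass is typed in by hand, and the 20 GeV momentum is a hypothetical example value, not a number from the CMS analysis.

```python
# Mean flight distance of a tau before it decays: beta*gamma * c * tau.
SPEED_OF_LIGHT_M_S = 299_792_458.0
TAU_LIFETIME_S = 290e-15   # lifetime quoted in the article
TAU_MASS_GEV = 1.77686     # tau mass in GeV (typed in by hand)


def mean_decay_length_m(momentum_gev: float) -> float:
    """Mean lab-frame flight distance in metres for a tau of given momentum."""
    beta_gamma = momentum_gev / TAU_MASS_GEV  # relativistic boost factor p/m
    return beta_gamma * SPEED_OF_LIGHT_M_S * TAU_LIFETIME_S


c_tau = SPEED_OF_LIGHT_M_S * TAU_LIFETIME_S
print(f"c*tau = {c_tau * 1e6:.1f} micrometres")

example_momentum_gev = 20.0  # hypothetical momentum, for illustration only
print(f"flight distance at {example_momentum_gev} GeV: "
      f"{mean_decay_length_m(example_momentum_gev) * 1e3:.2f} mm")
```

Even with a sizeable boost, the tau typically travels on the order of a millimetre or less, so it is studied through its decay products rather than observed directly.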

The two other charged leptons, the electron and the muon, are rather well studied. A lot is also known about their magnetic moments and their associated anomalous magnetic moments. The former can be understood as the strength and orientation of an imaginary bar magnet inside a particle. This measurable quantity, however, needs corrections at the quantum level arising from virtual particles tugging at the magnetic moment, deviating it from the predicted value. The quantum correction, referred to as anomalous magnetic moment, is of the order of 0.1%. If the theoretical and experimental results disagree, then this anomalous magnetic moment, aℓ, opens doors to physics beyond the SM.

The anomalous magnetic moment of the electron is one of the most precisely known quantities in particle physics and agrees perfectly with the SM. Its muonic counterpart, on the other hand, is one of the most investigated ones, into which research is ongoing. Although theory and experiments have mostly agreed so far, recent results give rise to a tension that requires further investigation.

For the tau, however, the race is still going. It is especially hard to measure its anomalous magnetic moment, aτ, due to the tau’s short lifetime. The first attempts to measure aτ after the tau’s discovery came with an uncertainty that was 30 times higher than the size of the quantum corrections. Experimental efforts at CERN with the LEP and LHC detectors improved the constraints, reducing the uncertainties to 20 times the size of the quantum corrections.

In collisions, researchers look for a special process: two photons interacting to produce two tau leptons, also called a di-tau pair, which then decay into muons, electrons, or charged pions, and neutrinos. So far both ATLAS and CMS have observed this in ultra-peripheral lead–lead collisions. Now, CMS reports on the first observation of the same process during proton–proton collisions. These collisions offer a higher sensitivity to physics beyond the SM as new physics effects increase with the collision energy. With the outstanding tracking capabilities of the CMS detector, the collaboration was able to isolate this specific process from others, by selecting events where the taus are produced without any other track within distances as small as 1 mm. “This remarkable achievement of detecting ultra-peripheral proton–proton collisions sets the stage for many groundbreaking measurements of this kind with the CMS experiment,” said Michael Pitt, from the CMS analysis team.

This new method offers a new way to constrain the tau anomalous magnetic moment, which the CMS collaboration tried out immediately. While the significance will be improved with future run data, their new measurement places the tightest constraints so far, with higher precision than ever before. It reduces the uncertainty from the… https://home.cern/news/news/experiments/first-observation-photons-taus-proton-proton-collisions-cms (Source of the original content)

0 notes

Text

Exploring new ways to see the Higgs boson


June 4, 2020

The ATLAS and CMS collaborations presented their latest results on new signatures for detecting the Higgs boson at CERN’s Large Hadron Collider

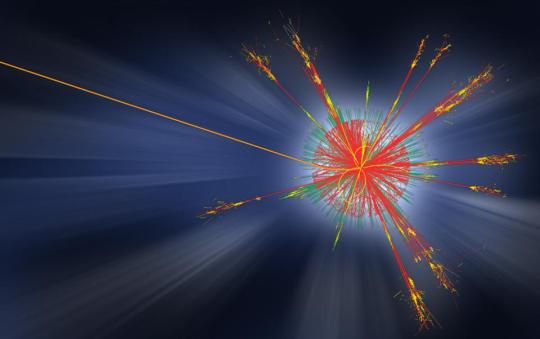

Image above: Collision events recorded by ATLAS (left) and CMS (right), used in the search for rare Higgs boson transformations (Image: CERN).

The ATLAS and CMS collaborations presented their latest results on new signatures for detecting the Higgs boson at CERN’s Large Hadron Collider. These include searches for rare transformations of the Higgs boson into a Z boson – which is a carrier of one of the fundamental forces of nature – and a second particle. Observing and studying transformations that are predicted to be rare helps advance our understanding of particle physics and could also point the way to new physics if observations differ from the predictions. The results also included searches for signs of Higgs transformations into “invisible” particles, which could shine light on potential dark-matter particles. The analyses involved nearly 140 inverse femtobarns of data, or around 10 million billion proton–proton collisions, recorded between 2015 and 2018.
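The link between "140 inverse femtobarns" and "10 million billion collisions" is the relation N = σ × L, where L is the integrated luminosity and σ is the cross-section. The Python sketch below assumes an inelastic proton–proton cross-section of roughly 80 millibarns at LHC Run 2 energies; that value is an approximate assumption introduced here, not a figure from the article.

```python
# Number of collisions = cross-section * integrated luminosity.
FB_PER_MB = 1e12  # 1 millibarn = 1e-3 b and 1 femtobarn = 1e-15 b

INTEGRATED_LUMINOSITY_INV_FB = 140.0   # from the article
INELASTIC_CROSS_SECTION_MB = 80.0      # assumed approximate value, not from the article


def expected_events(cross_section_fb: float, luminosity_inv_fb: float) -> float:
    """Expected number of events for a process with the given cross-section."""
    return cross_section_fb * luminosity_inv_fb


collisions = expected_events(
    INELASTIC_CROSS_SECTION_MB * FB_PER_MB, INTEGRATED_LUMINOSITY_INV_FB
)
print(f"{collisions:.2e} collisions")
```

Under that assumption the count comes out at about 10¹⁶, in line with the article's "10 million billion".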

The ATLAS and CMS detectors can never see a Higgs boson directly: an ephemeral particle, it transforms (or “decays”) into lighter particles almost immediately after being produced in proton–proton collisions, and the lighter particles leave telltale signatures in the detectors. However, similar signatures may be produced by other Standard-Model processes. Scientists must therefore first identify the individual pieces that match this signature and then build up enough statistical evidence to confirm that the collisions had indeed produced Higgs bosons.

When it was discovered in 2012, the Higgs boson was observed mainly in transformations into pairs of Z bosons and pairs of photons. These so-called “decay channels” have relatively clean signatures making them more easily detectable, and they have been observed at the LHC. Other transformations are predicted to occur only very rarely, or to have a less clear signature, and are therefore challenging to spot.

At LHCP, ATLAS presented the latest results of their searches for one such rare process, in which a Higgs boson transforms into a Z boson and a photon (γ). The Z thus produced, itself being unstable, transforms into pairs of leptons, either electrons or muons, leaving a signature of two leptons and a photon in the detector. Given the low probability of observing a Higgs transformation to Zγ with the data volume analysed, ATLAS was able to rule out the possibility that more than 0.55% of Higgs bosons produced in the LHC would transform into Zγ. “With this analysis,” says Karl Jakobs, spokesperson of the ATLAS collaboration, “we can show that our experimental sensitivity for this signature has now reached close to the Standard Model’s prediction.” The extracted best value for the H→Zγ signal strength, defined as the ratio of the observed to the predicted Standard-Model signal yield, is found to be 2.0 (+1.0, −0.9).

CMS presented the results of the first search for Higgs transformations also involving a Z boson but accompanied by a ρ (rho) or φ (phi) meson. The Z boson once again transforms into pairs of leptons, while the second particle transforms into pairs of pions (ππ) in the case of the ρ and into pairs of kaons (KK) in the case of the φ. “These transformations are extremely rare,” says Roberto Carlin, spokesperson of the CMS collaboration, “and are not expected to be observed at the LHC unless physics from beyond the Standard Model is involved.” The data analysed allowed CMS to rule out that more than approximately 1.9% of Higgs bosons could transform into Zρ and more than 0.6% could transform into Zφ. While these limits are much greater than the predictions from the Standard Model, they demonstrate the ability of the detectors to make inroads in the search for physics beyond the Standard Model.

Large Hadron Collider (LHC). Animation Credit: CERN

The so-called “dark sector” includes hypothetical particles that could make up dark matter, the mysterious element that accounts for more than five times the mass of ordinary matter in the universe. Scientists believe that the Higgs boson could hold clues as to the nature of dark-matter particles, as some extensions of the Standard Model propose that a Higgs boson could transform into dark-matter particles. These particles would not interact with the ATLAS and CMS detectors, meaning they remain “invisible” to them. This would allow them to escape direct detection and manifest as “missing energy” in the collision event. At LHCP, ATLAS presented their latest upper limit – of 13% – on the probability that a Higgs boson could transform into invisible particles known as weakly interacting massive particles, or WIMPs, while CMS presented results from a new search into Higgs transformations to four leptons via at least one intermediate “dark photon”, also presenting limits on the probability of such a transformation occurring at the LHC.

The Higgs boson continues to prove invaluable in helping scientists test the Standard Model of particle physics and seek physics that may lie beyond. These are only some of the many results concerning the Higgs boson that were presented at LHCP. You can read more about them on the ATLAS and CMS websites.

ATLAS: https://atlas.cern/updates/atlas-news/new-results-lhcp-2020

CMS: https://cms.cern/news/cms-shows-new-results-lhcp2020-conference

This media update is part of a series related to the 2020 Large Hadron Collider Physics conference, which took place from 25 to 30 May 2020. Originally planned to take place in Paris, the conference was held entirely online due to the COVID-19 pandemic.

Technical note:

When data volumes are not high enough to claim a definite observation of a particular process, physicists can predict the limits that they expect to place on the process. In the case of Higgs transformations, these limits are based on the product of two terms: the rate at which a Higgs boson is produced in proton–proton collisions (production cross-section) and the rate at which it will undergo a particular transformation to lighter particles (branching fraction).

ATLAS expected to place an upper limit of 1.7 times the Standard Model expectation for the process involving Higgs transformations to a Z boson and a photon (H→Zγ) if such a transformation were not present; the collaboration was able to place an upper limit of 3.6 times this value, approaching the sensitivity to the Standard Model’s predictions. The CMS searches were for a much rarer process, predicted by the Standard Model to occur only once in every million Higgs transformations, and the collaboration was able to set upper limits of about 1000 times the Standard Model expectations for the H→Zρ and H→Zφ processes.
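The limits quoted as multiples of the Standard Model expectation can be turned into a branching-fraction limit by multiplying by the predicted branching fraction, provided the production cross-section is taken at its Standard Model value. The Python sketch below makes that assumption and uses an approximate Standard Model branching fraction of 1.5×10⁻³ for H→Zγ; that number is an assumption introduced here, not taken from the article.

```python
# Convert a limit on signal strength (multiples of the SM expectation)
# into a limit on the branching fraction, assuming SM Higgs production.
SM_BR_H_TO_ZGAMMA = 1.5e-3   # assumed approximate SM prediction, not from the article
EXPECTED_LIMIT = 1.7         # expected limit, in units of the SM expectation
OBSERVED_LIMIT = 3.6         # observed limit, in units of the SM expectation


def branching_fraction_limit(signal_strength_limit: float, sm_branching: float) -> float:
    """Upper limit on the branching fraction implied by a signal-strength limit."""
    return signal_strength_limit * sm_branching


observed_br_limit = branching_fraction_limit(OBSERVED_LIMIT, SM_BR_H_TO_ZGAMMA)
expected_br_limit = branching_fraction_limit(EXPECTED_LIMIT, SM_BR_H_TO_ZGAMMA)
print(f"observed: {observed_br_limit:.2%}, expected: {expected_br_limit:.2%}")
```

With these inputs the observed limit is about 0.54%, close to the 0.55% figure quoted earlier in the article for H→Zγ.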

Note:

CERN, the European Organization for Nuclear Research, is one of the world’s largest and most respected centres for scientific research. Its business is fundamental physics, finding out what the Universe is made of and how it works. At CERN, the world’s largest and most complex scientific instruments are used to study the basic constituents of matter — the fundamental particles. By studying what happens when these particles collide, physicists learn about the laws of Nature.

The instruments used at CERN are particle accelerators and detectors. Accelerators boost beams of particles to high energies before they are made to collide with each other or with stationary targets. Detectors observe and record the results of these collisions.

Founded in 1954, the CERN Laboratory sits astride the Franco–Swiss border near Geneva. It was one of Europe’s first joint ventures and now has 23 Member States.

Links to the papers and notes:

ATLAS search for H→Zγ: https://cds.cern.ch/record/2717799

CMS search for H→Zρ or H→Zϕ: https://cds.cern.ch/record/2718949

ATLAS search for “invisible” transformations of the Higgs boson: https://cds.cern.ch/record/2715447

CMS search for Higgs transformations involving a dark photon: https://cds.cern.ch/record/2718976

Related links:

ATLAS: https://home.cern/science/experiments/atlas

CMS: https://home.cern/science/experiments/cms

Large Hadron Collider (LHC): https://home.cern/science/accelerators/large-hadron-collider

Standard Model: https://home.cern/science/physics/standard-model

Higgs boson: https://home.cern/science/physics/higgs-boson

For more information about European Organization for Nuclear Research (CERN), Visit: https://home.cern/

Image (mentioned), Animation (mentioned), Text, Credit: CERN.

Best regards, Orbiter.ch


28 notes
