#alcf

Photo

A La Claire Fontaine giveaway: win their two flagship skincare products ✨ 🍃Oeil De Biche eye contour care: cornflower water, Polysiphonia red algae, butterfly lavender extract, Provins rose extract. 🍃Elixir de Capucine dry oil: it can be used on the body, hair, and face. To enter, you have until July 7 at 11:59 p.m. to: 🔸Follow @alaclairefontainecosmetiques 🔶Like my accompanying post 🔶Tag 2 friends in the comments Double your chances by sharing this giveaway in your story. This giveaway is open to residents of metropolitan France. Good luck, everyone! The winner will be drawn in the days that follow. . . . #annsom #concours #concoursinstagram #concoursfrance #concoursinsta #concoursbeaute #huileseche #alcf #cosmetologia #skincarecommunity #soinbio #soindesyeux #cosmetiquesnaturels #naturalbeauty https://www.instagram.com/p/Cfb3QgpMpgO/?igshid=NGJjMDIxMWI=

Text

Thanks Dad, For Not (Always) Showing Up

I’m so thankful my dad didn’t come to all of my football, basketball and baseball games. He was thankful too. He never even pretended that perfect attendance at our ball games was a goal, or that his identity was tied to whether or not he showed up. Of course I was excited to see him on occasion, standing down the first base line just outside the fence with his tie loosened, cheering me on while I tried to crush the ball. But on the days he wasn’t there I knew why: he was working. His absences were a real gift to me, a gift I didn’t fully appreciate until decades later. Dad refused to make me the center of his world.

I recently stumbled upon a pretty gross disorder called Prader-Willi Syndrome (PWS). The few who are diagnosed with it each year never feel full when they eat. Left without the sensation of satisfaction, the individual keeps eating and eating and eating, right into obesity and possibly an early grave. When an individual is afflicted with PWS, good things (like food) can become deadly things.

Many children today are being over-served in the attention department. When children take the place of Jesus as the center of the home, they’re set up for failure outside the home. A sociologist has quipped that ours is the boomerang age, where children leave the home only to return and settle in for extended adolescence. How did this happen? When you were the one everyone orbited around at home, and then you left and discovered you’re not the center of the world, of course you’d want to come back to the one place where you were.

In hindsight, my father’s refusal to allow me to overdose on attention gave me three gifts:

1. The gift of not being number one. My parents are deep lovers of Jesus, and they always reminded us that we’ve been called into something so much bigger than us, the kingdom. Our extra-curricular activities were scheduled around church attendance, mission trips and service projects (not the other way around).

2. The gift of seeing a man work. Dad’s absence communicated loudly that he works. When kids (on occasion) would ask where my dad was, I could tell them he was at work. Work is a good thing. His work paid for my athletic fees, cleats, equipment and uniforms.

3. Resilience. Children are a lot more resilient than we give them credit for. My father was easily gone over 100 days a year, and that’s a conservative estimate. While he came to everything he could, he missed a lot. What were the results? My three siblings and I are all educated, contributing, healthy members of society. We’ve ventured into almost every region of the country, hundreds and thousands of miles away from our parents and each other, where we’ve had to start lives and build churches, businesses and community. We’ve got a grit to us because our parents refused to coddle. Thanks, Dad (and Mom).

So relax. Missing a game or a piano recital isn’t a bad thing. It can actually do your children some good.

Photo

Thank you @theprofspage #language ❤️ https://instagr.am/p/CEpQW3oBwwS/

Video

The core of a supernova in the first half second after core collapse

In this simulation, the matter is colored by entropy, a measure of disorder. (Hotter colors like red indicate higher entropies.)

D. Vartanyan, A. Burrows. Thanks to ALCF, D. Radice and H. Nagakura

source: https://www.quantamagazine.org/supercomputer-simulations-reveal-the-power-inside-a-supernova-20210121/

Photo

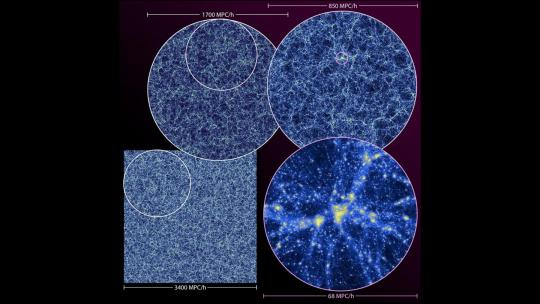

Mira's last journey: Exploring the dark universe

A massive simulation of the cosmos and a nod to the next generation of computing

A team of physicists and computer scientists from the U.S. Department of Energy's (DOE) Argonne National Laboratory performed one of the five largest cosmological simulations ever. Data from the simulation will inform sky maps to aid leading large-scale cosmological experiments.

The simulation, called the Last Journey, follows the distribution of mass across the universe over time -- in other words, how gravity causes a mysterious invisible substance called "dark matter" to clump together to form larger-scale structures called halos, within which galaxies form and evolve.

The scientists performed the simulation on Argonne's supercomputer Mira. The same team of scientists ran a previous cosmological simulation called the Outer Rim in 2013, just days after Mira turned on. After running simulations on the machine throughout its seven-year lifetime, the team marked Mira's retirement with the Last Journey simulation.

The Last Journey demonstrates how far observational and computational technology has come in just seven years, and it will contribute data and insight to experiments such as the Stage-4 ground-based cosmic microwave background experiment (CMB-S4), the Legacy Survey of Space and Time (carried out by the Rubin Observatory in Chile), the Dark Energy Spectroscopic Instrument and two NASA missions, the Roman Space Telescope and SPHEREx.

"We worked with a tremendous volume of the universe, and we were interested in large-scale structures, like regions of thousands or millions of galaxies, but we also considered dynamics at smaller scales," said Katrin Heitmann, deputy division director for Argonne's High Energy Physics (HEP) division.

The code that constructed the cosmos

The six-month span for the Last Journey simulation and major analysis tasks presented unique challenges for software development and workflow. The team adapted some of the same code used for the 2013 Outer Rim simulation with some significant updates to make efficient use of Mira, an IBM Blue Gene/Q system that was housed at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility.

Specifically, the scientists used the Hardware/Hybrid Accelerated Cosmology Code (HACC) and its analysis framework, CosmoTools, to enable incremental extraction of relevant information at the same time as the simulation was running.

"Running the full machine is challenging because reading the massive amount of data produced by the simulation is computationally expensive, so you have to do a lot of analysis on the fly," said Heitmann. "That's daunting, because if you make a mistake with analysis settings, you don't have time to redo it."

The team took an integrated approach to carrying out the workflow during the simulation. HACC would run the simulation forward in time, determining the effect of gravity on matter during large portions of the history of the universe. Once HACC determined the positions of trillions of computational particles representing the overall distribution of matter, CosmoTools would step in to record relevant information -- such as finding the billions of halos that host galaxies -- to use for analysis during post-processing.

"When we know where the particles are at a certain point in time, we characterize the structures that have formed by using CosmoTools and store a subset of data to make further use down the line," said Adrian Pope, physicist and core HACC and CosmoTools developer in Argonne's Computational Science (CPS) division. "If we find a dense clump of particles, that indicates the location of a dark matter halo, and galaxies can form inside these dark matter halos."

The scientists repeated this interwoven process -- where HACC moves particles and CosmoTools analyzes and records specific data -- until the end of the simulation. The team then used features of CosmoTools to determine which clumps of particles were likely to host galaxies. For reference, around 100 to 1,000 particles represent single galaxies in the simulation.

"We would move particles, do analysis, move particles, do analysis," said Pope. "At the end, we would go back through the subsets of data that we had carefully chosen to store and run additional analysis to gain more insight into the dynamics of structure formation, such as which halos merged together and which ended up orbiting each other."

Using the optimized workflow with HACC and CosmoTools, the team ran the simulation in half the expected time.

Community contribution

The Last Journey simulation will provide data necessary for other major cosmological experiments to use when comparing observations or drawing conclusions about a host of topics. These insights could shed light on topics ranging from cosmological mysteries, such as the role of dark matter and dark energy in the evolution of the universe, to the astrophysics of galaxy formation across the universe.

"This huge data set they are building will feed into many different efforts," said Katherine Riley, director of science at the ALCF. "In the end, that's our primary mission -- to help high-impact science get done. When you're able to not only do something cool, but to feed an entire community, that's a huge contribution that will have an impact for many years."

The team's simulation will address numerous fundamental questions in cosmology and is essential for enabling the refinement of existing models and the development of new ones, impacting both ongoing and upcoming cosmological surveys.

"We are not trying to match any specific structures in the actual universe," said Pope. "Rather, we are making statistically equivalent structures, meaning that if we looked through our data, we could find locations where galaxies the size of the Milky Way would live. But we can also use a simulated universe as a comparison tool to find tensions between our current theoretical understanding of cosmology and what we've observed."

Looking to exascale

"Thinking back to when we ran the Outer Rim simulation, you can really see how far these scientific applications have come," said Heitmann, who performed Outer Rim in 2013 with the HACC team and Salman Habib, CPS division director and Argonne Distinguished Fellow. "It was awesome to run something substantially bigger and more complex that will bring so much to the community."

As Argonne works towards the arrival of Aurora, the ALCF's upcoming exascale supercomputer, the scientists are preparing for even more extensive cosmological simulations. Exascale computing systems will be able to perform a billion billion calculations per second -- 50 times faster than many of the most powerful supercomputers operating today.

"We've learned and adapted a lot during the lifespan of Mira, and this is an interesting opportunity to look back and look forward at the same time," said Pope. "When preparing for simulations on exascale machines and a new decade of progress, we are refining our code and analysis tools, and we get to ask ourselves what we weren't doing because of the limitations we have had until now."

The Last Journey was a gravity-only simulation, meaning it did not consider interactions such as gas dynamics and the physics of star formation. Gravity is the major player in large-scale cosmology, but the scientists hope to incorporate other physics in future simulations to observe the differences they make in how matter moves and distributes itself through the universe over time.

"More and more, we find tightly coupled relationships in the physical world, and to simulate these interactions, scientists have to develop creative workflows for processing and analyzing," said Riley. "With these iterations, you're able to arrive at your answers -- and your breakthroughs -- even faster."

IMAGE: Visualization of the Last Journey simulation. Shown is the large-scale structure of the universe as a thin slice through the full simulation (lower left) and zoom-ins at different levels. The lower right panel shows one of the largest structures in the simulation. CREDIT: Image by Argonne National Laboratory.
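The "move particles, do analysis" pattern that Pope describes in the article above can be illustrated with a toy loop. This is only a sketch under heavy assumptions: it is not HACC or CosmoTools code, the function names (`step_particles`, `find_clumps`, `run_in_situ`) are hypothetical, the "simulation" is a one-dimensional drift with no gravity, and the "halo finder" is a simple cell count. What it does show is the structure of in-situ analysis: analyze while the data is still in memory and store only a small summary.

```python
def step_particles(positions, velocities, dt):
    """Toy 'simulation' step: drift each particle (stand-in for a gravity solver)."""
    return [x + v * dt for x, v in zip(positions, velocities)]


def find_clumps(positions, cell_size, min_count):
    """Toy 'analysis': bin 1-D positions into cells and keep only the dense cells."""
    counts = {}
    for x in positions:
        cell = int(x // cell_size)
        counts[cell] = counts.get(cell, 0) + 1
    return {cell: n for cell, n in counts.items() if n >= min_count}


def run_in_situ(positions, velocities, n_steps, dt, analyze_every,
                cell_size, min_count):
    """Alternate simulation steps with analysis, storing only small summaries."""
    stored = []
    for step in range(1, n_steps + 1):
        positions = step_particles(positions, velocities, dt)
        if step % analyze_every == 0:
            # Analyze in memory instead of writing every particle to disk.
            stored.append((step, find_clumps(positions, cell_size, min_count)))
    return positions, stored


# Hypothetical example: three stationary particles close together, one far away.
final_positions, summaries = run_in_situ(
    positions=[0.1, 0.2, 0.3, 5.0],
    velocities=[0.0, 0.0, 0.0, 0.0],
    n_steps=4, dt=1.0, analyze_every=2, cell_size=1.0, min_count=3,
)
print(summaries)
```

The stored summaries are tiny compared with the full particle list, which is the point Heitmann makes about reading back the raw data being too expensive at full-machine scale.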

Text

ALCF Supercomputers Help Address LHC’s Growing Computing Needs

Particle collision experiments at CERN’s Large Hadron Collider (LHC)—the world’s largest particle accelerator—will generate around 50 petabytes (50 million gigabytes) of data this year that must be processed and analyzed to aid in the facility’s search for new physics discoveries.

That already-massive amount of data is expected to grow significantly when CERN transitions to the High-Luminosity LHC

Text

Extreme-scale computing and AI forecast a promising future for fusion power

Summary: A new computer simulation forecasts a surprisingly optimistic heat load for future fusion facilities, which are designed to harvest on Earth the fusion that powers the sun and stars in order to generate electricity.

Efforts to reproduce on Earth the fusion reactions that power the sun and stars, with the aim of unlimited energy, must contend with extreme heat-load density. Such loads can damage and shut down tokamaks, the doughnut-shaped devices that are the most widely used laboratory facilities for housing fusion reactions. The heat flows against the walls of divertor plates, which extract waste heat from the tokamaks.

Far larger forecast

But using high-performance computers and artificial intelligence (AI), researchers at the U.S. Department of Energy’s (DOE) Princeton Plasma Physics Laboratory (PPPL) have predicted a far larger and less damaging heat-load width for the full-power operation of ITER, the international tokamak under construction in France, than previous estimates have found. The new formula produced a forecast that was more than six times wider than those developed by a simple extrapolation from present tokamaks to the much larger ITER facility, whose goal is to demonstrate the feasibility of fusion power.

“If the simple extrapolation to full-power ITER from today’s tokamaks were correct, no known material could withstand the extreme heat load without some difficult preventive measures,” said PPPL physicist C.S. Chang, leader of the team that developed the new, wider forecast and first author of a paper that Physics of Plasmas has published as an Editor’s Pick. “An accurate formula can enable scientists to operate ITER in a more comfortable and cost-effective way toward its goal of producing 10 times more fusion energy than the input energy,” Chang said.

Fusion reactions combine light elements in the form of plasma — the hot, charged state of matter composed of free electrons and atomic nuclei that makes up to 99 percent of the visible universe — to generate massive amounts of energy. Tokamaks, the most widely used fusion facilities, confine the plasma in magnetic fields and heat it to million-degree temperatures to produce fusion reactions. Scientists around the world are seeking to produce and control such reactions to create a safe, clean, and virtually inexhaustible supply of power to generate electricity.

The Chang team’s surprisingly optimistic forecast harkens back to results the researchers produced on the Titan supercomputer at the Oak Ridge Leadership Computing Facility (OLCF) at Oak Ridge National Laboratory in 2017. The team used the PPPL-developed XGC high-fidelity plasma turbulence code to forecast a heat load that was more than six times wider in full-power ITER operation than simple extrapolations from current tokamaks predicted.

Surprise finding

The surprising finding raised eyebrows by sharply contradicting the dangerously narrow heat-load forecasts. What accounted for the difference — might there be some hidden plasma parameter, or condition of plasma behavior, that the previous forecasts had failed to detect?

Those forecasts arose from parameters in the simple extrapolations that regarded plasma as a fluid without considering the important kinetic, or particle motion, effects. By contrast, the XGC code produces kinetic simulations using trillions of particles on extreme-scale computers, and its six-times-wider forecast suggested that there might indeed be hidden parameters that the fluid approach did not factor in.

The team performed more refined simulations of the full-power ITER plasma on the Summit supercomputer at the Oak Ridge Leadership Computing Facility (OLCF) at Oak Ridge National Laboratory to ensure that their 2017 findings on Titan were not in error.

The team also performed new XGC simulations on current tokamaks to compare the results to the much wider Summit and Titan findings. One simulation was on one of the highest magnetic-field plasmas on the Joint European Torus (JET) in the United Kingdom, which reaches 73 percent of the full-power ITER magnetic field strength. Another simulation was on one of the highest magnetic-field plasmas on the now decommissioned C-Mod tokamak at the Massachusetts Institute of Technology (MIT), which reaches 100 percent of the full-power ITER magnetic field.

The results in both cases agreed with the narrow heat-load width forecasts from simple extrapolations. These findings strengthened the suspicion that there are indeed hidden parameters.

Supervised machine learning

The team then turned to a type of AI method called supervised machine learning to discover what the unnoticed parameters might be. Using kinetic XGC simulation data from future ITER plasma, the AI code identified the hidden parameter as related to the orbiting of plasma particles around the tokamak’s magnetic field lines, an orbiting called gyromotion.

The AI program suggested a new formula that forecasts a far wider and less dangerous heat-load width for full-power ITER than the previous XGC formula derived from experimental results in present tokamaks predicted. Furthermore, the AI-produced formula recovers the previous narrow findings of the formula built for the tokamak experiments.

“This exercise exemplifies the necessity for high-performance computing, by not only producing high-fidelity understanding and prediction but also improving the analytic formula to be more accurate and predictive,” Chang said. “It is found that the full-power ITER edge plasma is subject to a different type of turbulence than the edge in present tokamaks due to the large size of the ITER edge plasma compared to the gyromotion radius of particles.”
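For readers unfamiliar with the "gyromotion radius" Chang refers to, the sketch below computes it with the standard textbook (non-relativistic) formula r = m·v⊥ / (|q|·B). It is not part of the XGC code or the AI-produced formula from the paper. The function name `gyroradius` and the input values (a proton at 1e6 m/s in fields of 1 T and 2 T) are illustrative assumptions chosen only to show the scaling; they are not ITER parameters.

```python
# Physical constants in SI units.
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
PROTON_MASS = 1.67262192369e-27      # kilograms


def gyroradius(mass_kg, v_perp_m_s, charge_c, b_tesla):
    """Radius of a charged particle's circular orbit around a magnetic field line.

    Uses the textbook non-relativistic formula r = m * v_perp / (|q| * B).
    """
    if b_tesla <= 0:
        raise ValueError("magnetic field strength must be positive")
    return mass_kg * v_perp_m_s / (abs(charge_c) * b_tesla)


# Illustrative values only (assumptions, not taken from the article):
# a proton moving at 1e6 m/s perpendicular to a 1 T field.
r_weak = gyroradius(PROTON_MASS, 1.0e6, ELEMENTARY_CHARGE, 1.0)
# Doubling the field strength halves the orbit radius.
r_strong = gyroradius(PROTON_MASS, 1.0e6, ELEMENTARY_CHARGE, 2.0)
print(f"{r_weak * 100:.2f} cm at 1 T, {r_strong * 100:.2f} cm at 2 T")
```

With these assumed inputs the orbit is about a centimeter across, which gives a sense of why a machine-sized edge plasma can be very large compared with the gyromotion radius.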

Researchers then verified the AI-produced formula by performing three more simulations of future ITER plasmas on the supercomputers Summit at OLCF and Theta at the Argonne Leadership Computing Facility (ALCF) at Argonne National Laboratory. “If this formula is validated experimentally,” Chang said, “this will be huge for the fusion community and for ensuring that ITER’s divertor can accommodate the heat exhaust from the plasma without too much complication.”

The team would next like to see experiments on current tokamaks that could be designed to test the AI-produced extrapolation formula. If it is validated, Chang said, “the formula can be used for easier operation of ITER and for the design of more economical fusion reactors.”

Photo

Antique Chinese Nephrite Jade Bat Pendant, 19th Century, at ObjetsD’Art #nephritejade #antiquejade www.objetsdartcorpuschristi.com (at Corpus Christi, Texas) https://www.instagram.com/p/CJ9dou-ALcf/?igshid=1bw20ydhedxyb

Text

Mid-Year Top 10

As we head into summer, I thought I’d share with you the top 10 books I’ve read so far in 2017:

10. 42 Faith, by Ed Henry. A unique biography that looks at the peculiar role that faith, both Jackie Robinson’s and that of Branch Rickey (the man who signed him to the Brooklyn Dodgers), played in sustaining the first African American Major League baseball player.

9. Sabbath as Resistance, Walter Brueggemann. I’ve long loved his writings, but this short book on the importance of the Sabbath shows us how we can go to war with the consumer and capitalistic spirit of our western culture.

8. Dream With Me, John Perkins. I had the privilege of endorsing this book and, when I got my advance copy, I immediately went to the chapter chronicling the death of his son Spencer. I’ve never heard “pops” (as I call Dr. Perkins) talk about this, and finally and painfully he does. This is just a glimpse into the vulnerability of this work.

7. The Blood of Emmett Till, Timothy B. Tyson. It was the decision to have the open casket of this tortured teenager that sparked the Civil Rights Movement. But it was the lie of the white woman that began the whole journey. She now comes forward and confesses her deceit. What a read.

6. Elmer Gantry, Sinclair Lewis. I’m indebted to the preaching faculty at Gordon Conwell for turning me on to this book. It’s hard to believe this novel was released in the 1920s, because it’s tragically still relevant today. Every preacher must read this as it outlines the spirit of professionalism that tempts us all.

5. The Fire This Time, Jesmyn Ward. Released on the 50th anniversary of James Baldwin’s work, The Fire Next Time, this collection of essays offers the best book on race I’ve read in the last 5 years.

4. The Crucifixion, Fleming Rutledge. Most comprehensive book on the cross I’ve ever read. I will be returning to this book for the balance of my ministry.

3. Becoming Ms. Burton, Susan Burton. A painful true tale of an inner city woman who is sexually abused, beaten, becomes a prostitute and does several stints in jail, only to “break free” and become a vessel of hope in the age of mass incarceration. This redemptive tale follows the likes of A Piece of Cake, and other such works.

2. Lectures to My Students, Charles Spurgeon. Why am I just now reading this? Phenomenal.

1. Outliers, Malcolm Gladwell. I read this when it first came out and then promptly got rid of it, along with 5,000 or so of my other books when we moved to NYC. I missed this work so much I bought and read it again.

Photo

☀️🙌🥰 #affirmation #affirmations #dailyaffirmations #motivation #positivity #lawofattraction #loa #gratitude #abundance #inspiration #manifestation https://www.instagram.com/p/CHlIUy-ALcf/?igshid=15smtpw91yflz

Photo

LEAD LAB SELECTED FOR NEXT-GENERATION COSMIC MICROWAVE BACKGROUND EXPERIMENT

The largest collaborative undertaking yet to explore the relic light emitted by the infant universe has taken a step forward with the U.S. Department of Energy's selection of Lawrence Berkeley National Laboratory (Berkeley Lab) to lead the partnership of national labs, universities, and other institutions that are joined in the effort to carry out the DOE roles and responsibilities. This next-generation experiment, known as CMB-S4, or Cosmic Microwave Background Stage 4, is being planned to become a joint DOE and National Science Foundation project.

CMB-S4 will unite several existing collaborations to survey the microwave sky in unprecedented detail with 500,000 ultrasensitive detectors for 7 years. These detectors will be placed on 21 telescopes in two of our planet's prime places for viewing deep space: the South Pole and the high Chilean desert. The project is intended to unlock many secrets in cosmology, fundamental physics, astrophysics, and astronomy.

Combining a mix of large and small telescopes at both sites, CMB-S4 will be the first experiment to access the entire scope of ground-based CMB science. It will measure ever-so-slight variations in the temperature and polarization, or directionality, of microwave light across most of the sky, to probe for ripples in space-time associated with a rapid expansion at the start of the universe, known as inflation.

CMB-S4 will also help to measure the mass of the neutrino; map the growth of matter clustering over time in the universe; shed new light on mysterious dark matter, which makes up most of the universe's matter but hasn't yet been directly observed, and dark energy, which is driving an accelerating expansion of the universe; and aid in the detection and study of powerful space phenomena such as gamma-ray bursts and jet-emitting blazars.

On Sept. 1, DOE Office of Science Director Chris Fall authorized the selection of Berkeley Lab as the lead laboratory for the DOE roles and responsibilities on CMB-S4, with Argonne National Laboratory, Fermi National Accelerator Laboratory, and SLAC National Accelerator Laboratory serving as partner labs. The CMB-S4 collaboration now numbers 236 members at 93 institutions in 14 countries and 21 U.S. states.

The project passed its first DOE milestone, known as Critical Decision 0 or CD-0, on July 26, 2019. It has been endorsed by the 2014 report of the Particle Physics Project Prioritization Panel (known as P5), which helps to set the future direction of particle physics-related research. The project also was recommended in the National Academy of Sciences Strategic Vision for Antarctic Science in 2015, and by the Astronomy and Astrophysics Advisory Committee in 2017.

The NSF has been key to the development of CMB-S4, which builds on NSF's existing program of university-led, ground-based CMB experiments. Four of these experiments -- the Atacama Cosmology Telescope and POLARBEAR/Simons Array in Chile, and the South Pole Telescope and BICEP/Keck at the South Pole -- helped to start CMB-S4 in 2013, and the design of CMB-S4 relies heavily on technologies developed and deployed by these teams and others. NSF is also helping to plan its possible future role with a grant awarded to the University of Chicago.

The CMB-S4 collaboration was established in 2018, and its current co-spokespeople are Julian Borrill, head of the Computational Cosmology Center at Berkeley Lab and a researcher at UC Berkeley's Space Sciences Laboratory, and John Carlstrom, a professor of physics, astronomy, and astrophysics at the University of Chicago and scientist at Argonne Lab.

CMB-S4 builds on decades of experience with ground-based, satellite, and balloon-based experiments. What's unique about CMB-S4 is not the technology itself -- the detector technology has already been proven in earlier experiments, for example -- but the scale at which the technology will be deployed, including the sheer number of detectors, scale of the detector readout systems, number of telescopes, and volume of data to be processed.

CMB-S4, which will exceed the capabilities of earlier generations of experiments by more than 10 times, will have the combined viewing power of three large telescopes and 18 small telescopes. The major technology challenge for CMB-S4 is in its scale. While previous generations of instruments have used tens of thousands of detectors, the entire CMB-S4 project will require half a million.

The data-management challenges will be substantial, too, as these huge arrays of detectors will produce 1,000 times more data than the previous generation of experiments. A major hardware focus for the project will be the construction of new telescopes and the mass-fabrication of the detectors. The current detector design, adapted from current experiments, will feature over 500 silicon wafers that each contain 1,000 superconducting detectors.

CMB-S4 plans to draw upon computing resources at the Argonne Leadership Computing Facility (ALCF) and Berkeley Lab's National Energy Research Scientific Computing Center (NERSC), and to apply to NSF's Open Science Grid and eXtreme Science and Engineering Discovery Environment (XSEDE).

The project is hoping to deploy its first telescope in 2027, to be fully operational at all telescopes within a couple of years, and to run through 2035. Next steps include preparing a project office at Berkeley Lab, getting ready for the next DOE milestone, known as Critical Decision 1, working toward becoming an NSF project, and working across the community to bring in the best expertise and capabilities.

Photo

22.22 My comment on ZELENI's video about the return of Crimea, and 🇷🇺🍸 on the MORONIC comments from our clients, people, and nations. Why do we have such an astonishingly💕😍, strikingly "smart" People, who divide up what never belonged to them and never will? How long can you keep PLAYING THE FOOL? All of you are One people, divided only by names, mentalities, LANGUAGES, cultures, Lack of culture, and borders, so that You can be governed, Enslaved, and fooled!!! 👻 What do You keep dividing? Are You really dividing Crimea!?! And what made You decide that Crimea is Yours, or anyone else's? Crimea belongs to the Lord God and to the peoples living there. Crimea has been anyone's you like: Scythian, Scandinavian, GENOESE, Syracusan, Greek, Mongol, Turkish👻, but it was never rUSSO-UKRAINIAN, and it never will be again❌... First figure out Who You all are yourselves!?! But this is also the answer to why we all have equally smart presidents, who have been seated in one OFFICE in a Swiss Suburb of Bern to eat from one trough, and to fool and set against one another one 🇷🇺🍸People, the rUSSO-UKRAINIAN-Mongol-Tatar-Slavic-ScandinaVIAN-GERMANIC-MASONIC-ZHID people!!! And "zhid" is the Polish word for the Jewish nationality, not an insult!!! Is it really🙏💕 so hard for You to grasp all this on your own, without me, gentlemen and Ladies, comrades, citizens, Hromadiany, Citroëns?!? When will You finally stop this Squabbling, this SNARLING at one another, and dividing up what You never had and never will have again!?! First learn to 💭think, reflect, understand, speak, and Unite, rather than divide and separate, and 🇷🇺🍸demand only what You need, what belongs to You by right, and do not let yourselves be led, like cows and sheep, on that short leash of war, stupidity, and obedience on which all of You, one people, are being led like Sheep to kill one another!!! Is it really more interesting to be sheep than smart, subtle, kind, pure, reflective, thinking PEOPLE, who cannot be herded like cattle!?! Is it really TRUE, one 🇷🇺🍸People, the rUSSO-UKRAINIAN-Mongol-Tatar-Slavic-Scandinavian-GERMANIC-MASONIC-ZHID people!!! !?! And "zhid" is the Polish word for the Jewish nationality, not an insult!!!🤔💭👻😮 (at Les Cots de Lloberola) https://www.instagram.com/p/CBvaf7-AlCF/?igshid=15s7cwc8vt0pc

Text

Cartography of the Cosmos

There are hundreds of billions of stars in our own Milky Way galaxy. Estimates indicate a similar number of galaxies in the observable universe, each with its own large assemblage of stars, many with their own planetary systems. Beyond and between these stars and galaxies are all manner of matter in various phases, such as gas and dust. Another form of matter, dark matter, exists in a very different and mysterious form, announcing its presence indirectly only through its gravitational effects.

This is the universe Salman Habib is trying to reconstruct, structure by structure, using precise observations from telescope surveys combined with next-generation data analysis and simulation techniques currently being primed for exascale computing.

"We're simulating all the processes in the structure and formation of the universe. It's like solving a very large physics puzzle," said Habib, a senior physicist and computational scientist with the High Energy Physics and Mathematics and Computer Science divisions of the U.S. Department of Energy's (DOE) Argonne National Laboratory.

Habib leads the "Computing the Sky at Extreme Scales" project, or "ExaSky," one of the first projects funded by the recently established Exascale Computing Project (ECP), a collaborative effort between DOE's Office of Science and its National Nuclear Security Administration.

From determining the initial cause of primordial fluctuations to measuring the sum of all neutrino masses, this project's science objectives represent a laundry list of the biggest questions, mysteries, and challenges currently confounding cosmologists.

There is the question of dark energy, the potential cause of the accelerated expansion of the universe. Another question is the nature and distribution of dark matter in the universe.

These are immense questions that demand equally expansive computational power to answer. The ECP is readying science codes for exascale systems, the new workhorses of computational and big data science.

Initiated to drive the development of an "exascale ecosystem" of cutting-edge, high-performance architectures, codes and frameworks, the ECP will allow researchers to tackle data and computationally intensive challenges such as the ExaSky simulations of the known universe.

In addition to the magnitude of their computational demands, ECP projects are selected based on whether they address specific strategic areas, ranging from energy and economic security to scientific discovery and healthcare.

"Salman's research certainly looks at important and fundamental scientific questions, but it has societal benefits, too," said Paul Messina, Argonne Distinguished Fellow. "Human beings tend to wonder where they came from, and that curiosity is very deep."

HACC'ing the night sky

For Habib, the ECP presents a two-fold challenge -- how do you conduct cutting-edge science on cutting-edge machines?

The cross-divisional Argonne team has been working on the science through a multi-year effort at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility. The team is running cosmological simulations for large-scale sky surveys on the facility's 10-petaflop high-performance computer, Mira. The simulations are designed to work with observational data collected from specialized survey telescopes, like the forthcoming Dark Energy Spectroscopic Instrument (DESI) and the Large Synoptic Survey Telescope (LSST).

Survey telescopes look at much larger areas of the sky -- up to half the sky, at any point -- than does the Hubble Space Telescope, for instance, which focuses more on individual objects. One night concentrating on one patch, the next night another, survey instruments systematically examine the sky to develop a cartographic record of the cosmos, as Habib describes it.

Working in partnership with Los Alamos and Lawrence Berkeley National Laboratories, the Argonne team is readying itself to chart the rest of the course.

Their primary code, which Habib helped develop, is already among the fastest science production codes in use. Called HACC (Hardware/Hybrid Accelerated Cosmology Code), this particle-based cosmology framework supports a variety of programming models and algorithms.

Unique among codes used in other exascale computing projects, it can run on all current and prototype architectures, from the basic x86 chip used in most home PCs, to graphics processing units, to the newest Knights Landing chip found in Theta, the ALCF's latest supercomputing system.

As robust as the code is already, the HACC team continues to develop it further, adding significant new capabilities, such as hydrodynamics and associated subgrid models.

"When you run very large simulations of the universe, you can't possibly do everything, because it's just too detailed," Habib explained. "For example, if we're running a simulation where we literally have tens to hundreds of billions of galaxies, we cannot follow each galaxy in full detail. So we come up with approximate approaches, referred to as subgrid models."

Even with these improvements and its successes, the HACC code still will need to increase its performance and memory to be able to work in an exascale framework. In addition to HACC, the ExaSky project employs the adaptive mesh refinement code Nyx, developed at Lawrence Berkeley. HACC and Nyx complement each other with different areas of specialization. The synergy between the two is an important element of the ExaSky team's approach.

A cosmological simulation strategy that melds multiple approaches allows the verification of difficult-to-resolve cosmological processes involving gravitational evolution, gas dynamics and astrophysical effects at very high dynamic ranges. New computational methods like machine learning will help scientists to quickly and systematically recognize features in both the observational and simulation data that represent unique events.

A trillion particles of light

The work produced under the ECP will serve several purposes, benefitting both the future of cosmological modeling and the development of successful exascale platforms.

On the modeling end, the computer can generate many universes with different parameters, allowing researchers to compare their models with observations to determine which models fit the data most accurately. Alternatively, the models can make predictions for observations yet to be made.
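The idea of comparing many simulated universes against observations can be illustrated with a toy example. This is only a sketch under loud assumptions: `toy_model` is a hypothetical one-parameter stand-in for a full simulation, the "observations" are synthetic, and the chi-square statistic is a common textbook choice for scoring fit, not necessarily what the ExaSky team uses.

```python
import math

def chi_square(observed, predicted, sigma):
    """Sum of squared, error-weighted residuals between data and a model."""
    return sum(((o - p) / s) ** 2 for o, p, s in zip(observed, predicted, sigma))

def toy_model(amplitude, xs):
    """Hypothetical stand-in for a simulation: one parameter, one curve."""
    return [amplitude * math.exp(-x) for x in xs]

def best_fit(observed, sigma, xs, candidates):
    """Return the candidate parameter whose model has the lowest chi-square."""
    return min(candidates, key=lambda a: chi_square(observed, toy_model(a, xs), sigma))

# Synthetic "observations" (assumption): generated with amplitude 2.0, no noise.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
observed = toy_model(2.0, xs)
sigma = [0.1] * len(xs)

# Each candidate plays the role of one simulated universe with its own parameter.
candidates = [1.0, 1.5, 2.0, 2.5, 3.0]
print(best_fit(observed, sigma, xs, candidates))  # prints 2.0
```

In practice each "candidate" would be an expensive simulation run rather than a one-line function, which is why the number of universes that can be generated matters.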

Models also can produce extremely realistic pictures of the sky, which is essential when planning large observational campaigns, such as those by DESI and LSST.

"Before you spend the money to build a telescope, it's important to also produce extremely good simulated data so that people can optimize observational campaigns to meet their data challenges," said Habib.

But realism comes at a cost. Simulations can reach the trillion-particle range and produce several petabytes -- quadrillions of bytes -- of data in a single run. As exascale becomes prevalent, these simulations will produce 10 to 100 times as much data.
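A rough back-of-the-envelope calculation shows how a trillion-particle run lands in the petabyte range. The bytes stored per particle and the number of saved snapshots below are illustrative assumptions, not figures from the ExaSky project. Only the particle count, the petabyte definition, and the 10-to-100-fold growth come from the text.

```python
PETABYTE = 10**15  # bytes, i.e. a quadrillion bytes

def run_petabytes(n_particles, bytes_per_particle, n_snapshots):
    """Total output of one run, in petabytes, if every snapshot stores every particle."""
    return n_particles * bytes_per_particle * n_snapshots / PETABYTE

n_particles = 10**12     # "trillion-particle" simulation
bytes_per_particle = 32  # ASSUMPTION: 3 positions + 3 velocities as 4-byte floats, plus an 8-byte ID
n_snapshots = 100        # ASSUMPTION: number of time steps written to disk

today = run_petabytes(n_particles, bytes_per_particle, n_snapshots)
print(f"one run today: about {today:.1f} PB")
print(f"at exascale:   about {10 * today:.0f} to {100 * today:.0f} PB")
```

Under these assumptions a single snapshot is already 32 terabytes, so storage and I/O bandwidth constrain the science as much as raw compute does.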

The work that the ExaSky team is doing, along with that of the other ECP research teams, will help address these challenges and those faced by computer manufacturers and software developers as they create coherent, functional exascale platforms to meet the needs of large-scale science. By working with their own codes on pre-exascale machines, the ECP research team can help guide vendors in chip design, I/O bandwidth and memory requirements and other features.

"All of these things can help the ECP community optimize their systems," noted Habib. "That's the fundamental reason why the ECP science teams were chosen. We will take the lessons we learn in dealing with this architecture back to the rest of the science community and say, 'We have found a solution.'"

37 notes

Text

Launching a Supercomputer: How to Set Up Some of the World’s Fastest Computers

As you walk out of an electronics store clutching the cardboard box with your new computer, you dream of all of the cool things you’ll be able to do, from writing your screenplay to slaying monsters in a multiplayer game. Managers of the Department of Energy (DOE) Office of Science’s supercomputers have similar visions, whether crunching particle physics data or modeling combustion inside engines.

0 notes