#Data Annotation

Text

Our IT Services

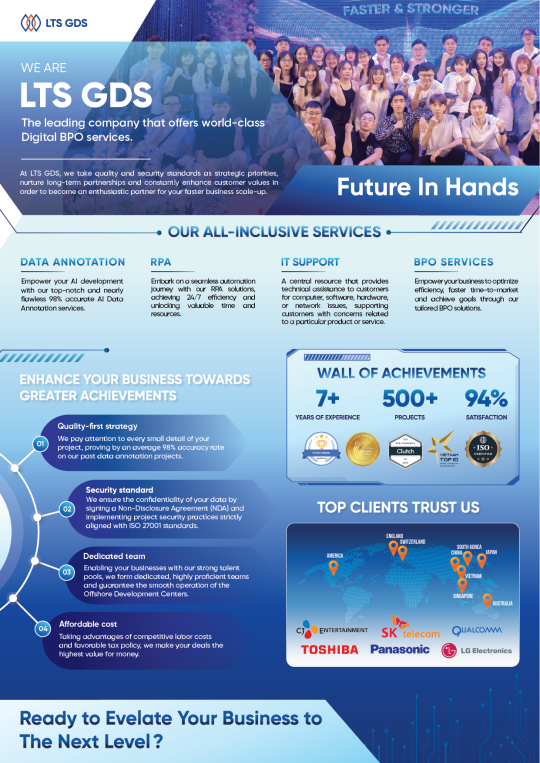

With more than 7 years of experience in the data annotation industry, LTS Global Digital Services has been honored to receive major domestic awards and to earn the trust of significant customers in the US, Germany, Korea, and Japan. Having completed hundreds of projects in fields such as Automobile, Retail, Manufacturing, Construction, and Sports, our company confidently delivers projects with accuracy of up to 99.9%. This has been confirmed by 97% of customers using the service.

If you are looking for an outsourcing company that meets the above criteria, contact LTS Global Digital Services for advice and a trial!


Text

Now Hiring!!!

This Ireland-based tech company is hiring international workers for the position of Map Evaluator. The work is fully remote, part-time or full-time. Experience is not necessary. There is no phone or online interview. Click or copy the link to apply.

https://bit.ly/3IzrLeD


Text

The Power of AI and Human Collaboration in Media Content Analysis

In today’s world, binge-watching has become a way of life not just for Gen Z but also for many baby boomers. Viewers are watching more content than ever. In particular, Over-The-Top (OTT) and Video-On-Demand (VOD) platforms provide a rich selection of content choices anytime, anywhere, and on any screen. With proliferating content volumes, media companies face challenges in preparing and managing their content. Doing this well is crucial to providing a high-quality viewing experience and monetizing content more effectively.

Some of the use cases involved are:

- Finding the opening credits, intro start, intro end, recap start, recap end, and other video segments

- Choosing the right spots to insert advertisements so that they fall on a logical pause for viewers

- Creating automated personalized trailers by extracting interesting themes from videos

- Identifying audio and video synchronization issues

While these tasks were traditionally handled by large teams of trained human workers, many AI-based approaches have emerged, such as Amazon Rekognition’s video segmentation API. AI models are getting better at addressing the use cases mentioned above, but they are typically pre-trained on a different type of content and may not be accurate for your content library. So, what if we use an AI-enabled human-in-the-loop approach to reduce the cost and improve the accuracy of video segmentation tasks?

In our approach, the AI-based APIs provide weak labels for detected video segments, which are then sent to trained human reviewers who refine them into frame-accurate segments. The approach substantially improves your understanding of your media content and helps generate ground truth for fine-tuning AI models. Below is the workflow of the end-to-end solution:

1. Raw media content is uploaded to Amazon S3 cloud storage. The content may need to be preprocessed or transcoded to make it suitable for the streaming platform (e.g., converted to .mp4, upsampled, or downsampled).

2. AWS Elemental MediaConvert transcodes file-based content into live stream assets quickly and reliably. It converts content libraries of any size for broadcast and streaming. Media files are transcoded to .mp4 format.

3. Amazon Rekognition Video provides an API that identifies useful segments of video, such as black frames and end credits.

4. Objectways has developed a custom video segmentation annotator workflow with the SageMaker Ground Truth labeling service that can ingest labels from Amazon Rekognition. Optionally, you can skip step 3 if you want to create your own labels, either to train a custom ML model or to apply directly to your content.

5. The content may have privacy and digital rights management requirements and protection. The Objectways video segmentation tool also supports integration with Digital Rights Management providers to ensure that only authorized analysts can view the content. Moreover, the content analysts operate out of SOC 2 Type 2 compliant facilities where no downloads or screen captures are allowed.

6. The media analysts at Objectways are experts in content understanding and video segmentation labeling for a variety of use cases. Depending on your accuracy requirements, each video can be reviewed or annotated by two independent analysts, and time-code difference thresholds are used to weed out human bias (e.g., a pair of labels is out of consensus if the time codes differ by 5 milliseconds). Out-of-consensus labels can be adjudicated by a senior quality analyst to provide higher quality guarantees.

7. The Objectways media analyst team provides throughput and quality guarantees and delivers daily throughput according to your business needs. The segmented content labels are then saved to Amazon S3 in JSON manifest format and can be ingested directly into your media streaming platform.
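The two-analyst consensus check described in the workflow can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Objectways implementation: the `Segment` fields, the `review` function, the status strings, and the choice to average agreeing boundaries are all hypothetical. Following the example in the text, a difference of 5 milliseconds or more is treated as out of consensus.

```python
import json
from dataclasses import dataclass


@dataclass
class Segment:
    label: str     # hypothetical label name, e.g. "intro" or "end_credits"
    start_ms: int  # segment start, in milliseconds
    end_ms: int    # segment end, in milliseconds


def in_consensus(a: Segment, b: Segment, threshold_ms: int = 5) -> bool:
    """True when the labels match and both boundaries differ by less than
    threshold_ms (assumed convention: a difference equal to the threshold
    is already out of consensus)."""
    return (
        a.label == b.label
        and abs(a.start_ms - b.start_ms) < threshold_ms
        and abs(a.end_ms - b.end_ms) < threshold_ms
    )


def review(a: Segment, b: Segment, threshold_ms: int = 5) -> dict:
    """Merge two agreeing annotations, or flag the pair for adjudication."""
    if in_consensus(a, b, threshold_ms):
        return {
            "label": a.label,
            # Averaging the two boundaries is an assumption of this sketch.
            "start_ms": (a.start_ms + b.start_ms) // 2,
            "end_ms": (a.end_ms + b.end_ms) // 2,
            "status": "accepted",
        }
    return {"label": a.label, "status": "needs_adjudication"}


first = Segment("intro", 12_000, 95_000)
second = Segment("intro", 12_004, 95_002)
# One JSON object per line is a common shape for manifest files.
print(json.dumps(review(first, second)))
```

Pairs flagged as `needs_adjudication` would go to the senior quality analyst; accepted pairs can be written out one JSON object per line.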

Conclusion

Artificial intelligence (AI) has become ubiquitous in media and entertainment, where it improves content understanding, increases user engagement, and drives ad revenue. The AI-enabled human-in-the-loop approach outlined here is a best-of-breed solution that reduces human cost while delivering high-quality labels. The approach can also be extended to other use cases such as content moderation, ad placement, and personalized trailer generation.

Contact [email protected] for more information.


Text

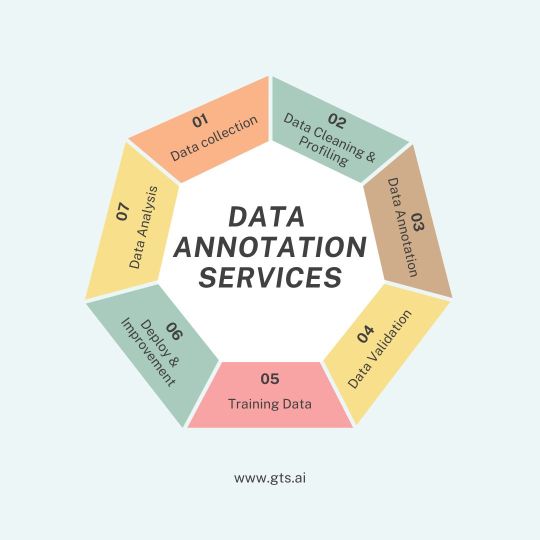

Explore the comprehensive process, procedure, and significance of data annotation in machine learning on our blog. This detailed guide covers everything from the basics to advanced techniques, providing valuable insights for anyone interested in AI and data science. Enhance your knowledge about how accurate data annotation drives machine learning models and contributes to their success. Visit our blog to delve into the intricacies of data annotation and its critical role in developing reliable AI systems.

#data annotation services#data annotation#outsourcing data annotation services#data annotation company


Text

Unlock the potential of your NLP and speech recognition models with our high-quality text and audio annotation services. GTS offers precise transcription, sentiment analysis, entity recognition, and more. Our expert annotators ensure that your data is accurately labeled, helping your AI understand and process human language better. Enhance your chatbots, virtual assistants, and other language-based applications with our reliable and comprehensive annotation solutions.


Text

From Data to Insights: Developing a Solid Annotation Strategy

In the world of machine learning, data is king. But without a solid annotation strategy, your data is just a pile of unorganized information. Annotation is the process of labeling and categorizing data, making it possible for machines to understand and learn from it. A well-annotated dataset is the foundation of a successful machine-learning project, enabling models to make accurate predictions and drive business insights.

The Importance of Annotation

Annotation is a crucial step in the machine-learning process, and its importance cannot be overstated. Here are a few reasons why annotation matters:

- Improved model accuracy: High-quality annotations enable models to learn from data and make accurate predictions. Without accurate annotations, models may learn incorrect patterns or biases, leading to poor performance.

- Reduced bias: Well-annotated data helps reduce bias in models, ensuring they are fair and inclusive. Biased models can perpetuate existing social inequalities, making annotation a critical step in developing ethical AI.

- Increased efficiency: Annotated data enables models to learn faster and require less training data. This reduces the time and resources required for training, making the machine-learning process more efficient.

Challenges of Annotation

Annotation is a complex process, and several challenges can arise when developing an annotation strategy. Here are a few common challenges:

- Data quality: Poor data quality can lead to inaccurate annotations, which can negatively impact model performance. Noisy or inconsistent data can make annotation difficult, leading to errors and inconsistencies.

- Scalability: Large datasets require significant annotation efforts, which can be time-consuming and costly. As datasets grow, annotation becomes increasingly complex, requiring specialized tools and expertise.

- Consistency: Ensuring consistency across annotations is crucial, but it can be challenging, especially with large teams. Inconsistent annotations can lead to biased models, making consistency a critical aspect of annotation.

The Importance of Dataset Size in Data Annotation

When it comes to data annotation, understanding the size of your dataset is crucial for success. Knowing the volume of data to be labeled allows annotators to assess project complexity, set realistic deadlines, and allocate resources efficiently. In this article, we'll explore the importance of dataset size in data annotation and provide a step-by-step guide to measuring dataset volume.

Why Does Dataset Size Matter?

- Assessing project complexity and setting realistic deadlines

- Allocating resources and distributing tasks efficiently

- Evaluating overall progress and identifying potential bottlenecks

- Understanding the link between model accuracy and annotated data volume

Measuring Dataset Volume: A Step-by-Step Guide

1. Count the number of instances: Determine the total number of data points or instances in your dataset.

2. Evaluate data complexity: Assess the complexity of the data, considering the variety, types, and diversity of labels or categories.

3. Examine feature space: Assess the dimensionality of the feature space, considering the number and types of features.

4. Consider annotation granularity: Understand the level of detail required for annotation.

5. Understand the difficulty of the labeling task: Assess the complexity of annotating each instance.

6. Analyze time requirements: Estimate the time required to label each data point.

7. Account for iterative annotation: Consider that some annotated data may be used to improve ML models and guide subsequent labeling efforts.

8. Use sampling techniques: Sample a subset of the dataset to estimate annotation effort.

9. Consult domain experts: Seek input from domain experts to understand the context and intricacies of the data.

By following these steps, you'll be able to measure the scope of your dataset volume and enhance the effectiveness of your data labeling strategy. Remember, understanding dataset size is crucial for efficient annotation, resource allocation, and ultimately, achieving accurate machine-learning models.
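Steps 6 and 8 can be combined into a quick estimate: time a small random sample, then scale up to the full dataset. The sketch below is a minimal illustration, assuming per-item timings in seconds and a normal-approximation interval around the sample mean; the function name, the sample timings, and the dataset size of 20,000 are all made up for the example.

```python
import math
import statistics


def estimate_effort_hours(sample_seconds, total_items, z=1.96):
    """Estimate total labeling hours from the timings of a random sample.

    Returns (point_estimate, low, high) in hours. The interval uses a
    normal approximation around the sample mean, which is rough for
    small samples.
    """
    n = len(sample_seconds)
    mean = statistics.mean(sample_seconds)
    stderr = statistics.stdev(sample_seconds) / math.sqrt(n)
    to_hours = total_items / 3600
    return (
        mean * to_hours,
        max(0.0, mean - z * stderr) * to_hours,
        (mean + z * stderr) * to_hours,
    )


# Seconds spent labeling each of 10 randomly sampled items (illustrative).
timings = [42, 55, 38, 61, 47, 50, 44, 58, 39, 53]
point, low, high = estimate_effort_hours(timings, total_items=20_000)
print(f"about {point:.0f} h (roughly {low:.0f} to {high:.0f} h)")
```

The estimate only holds if the sample is drawn at random; timing the first few items in a file tends to miss the hard cases.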

Developing a Solid Annotation Strategy

To overcome these challenges, it's essential to develop a solid annotation strategy. The following step-by-step guide walks through the process:

Step 1: Define Your Annotation Goals

Before starting the annotation process, it's essential to define your goals. Identify your machine learning project's objectives and determine the type of annotations required. This will help you develop a clear plan and ensure your annotations meet your project's needs.

Step 2: Prepare Your Data

Data preparation is a critical step in the annotation process. Ensure data quality by cleaning and preprocessing it. Remove any duplicates, handle missing values, and normalize your data. Split your data into training, validation, and testing sets to ensure a robust annotation process.
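As a rough illustration of this step, the sketch below cleans a list of text records and splits it into three sets. It assumes plain-text records, treats records that differ only in case or surrounding whitespace as duplicates, and uses an 80/10/10 split; all of these choices, and the function name, are assumptions made for the example.

```python
import random


def dedupe_and_split(records, train=0.8, val=0.1, seed=42):
    """Drop empty records and duplicates (ignoring case and surrounding
    whitespace), then shuffle and split into train/validation/test lists."""
    seen = set()
    cleaned = []
    for text in records:
        key = text.strip().lower() if text else ""
        if key and key not in seen:
            seen.add(key)
            cleaned.append(text.strip())
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(cleaned)
    n_train = int(len(cleaned) * train)
    n_val = int(len(cleaned) * val)
    return (
        cleaned[:n_train],
        cleaned[n_train:n_train + n_val],
        cleaned[n_train + n_val:],
    )


raw = ["Great product", "great product ", "", None, "Arrived late", "Would buy again"]
train_set, val_set, test_set = dedupe_and_split(raw)
print(len(train_set), len(val_set), len(test_set))
```

Splitting before annotation starts keeps the test set from influencing guideline decisions made while labeling the training data.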

Step 3: Choose an Annotation Tool

Selecting the right annotation tool is crucial for your project's success. Choose a tool that fits your project's needs, considering factors such as scalability, collaboration features, and annotation type. Popular annotation tools include labeling platforms, annotation software, and active learning tools.

Step 4: Develop an Annotation Guide

A comprehensive annotation guide is essential for ensuring consistency across annotations. Develop a guide outlining annotation rules, best practices, and examples. Ensure your guide is clear, concise, and easily accessible to your annotation team.

Step 5: Annotate Your Data

Begin annotating your data, following your guide, and using your chosen tool. Use active learning techniques to optimize annotation efforts, focusing on the most critical samples. Ensure your annotations are accurate, consistent, and meet your project's requirements.
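One common active learning technique is uncertainty sampling: ask annotators to label the items the current model is least sure about. The sketch below ranks items by the entropy of the model's predicted class probabilities. It is one possible approach rather than a prescribed method, and the item ids and probabilities are invented for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in bits) of a predicted class distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` items the model is least sure about.

    predictions maps an item id to its list of class probabilities.
    """
    ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    return ranked[:budget]


preds = {
    "img_001": [0.98, 0.01, 0.01],  # confident prediction, low priority
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # near-uniform, highest priority
}
print(select_for_annotation(preds, budget=2))  # ['img_004', 'img_002']
```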

Step 6: Review and Refine

Regularly review your annotations for quality and consistency. Refine your annotation strategy as needed, addressing any inconsistencies or errors. Continuously monitor your annotations' impact on model performance, adjusting your strategy to optimize results.
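A simple way to put a number on consistency is to have two annotators label the same items and compute Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a minimal standard-library version; the label lists are made up for the example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items on which the annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


ann_a = ["cat", "dog", "dog", "cat", "bird", "dog", "cat", "dog"]
ann_b = ["cat", "dog", "cat", "cat", "bird", "dog", "dog", "dog"]
print(round(cohens_kappa(ann_a, ann_b), 3))
```

A value near 1 indicates strong agreement and a value near 0 indicates agreement no better than chance. A low score usually points to ambiguous guidelines rather than careless annotators.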

Best Practices for Annotation

Here are some best practices to keep in mind when developing your annotation strategy:

- Use clear and concise annotation guidelines

- Ensure consistency across annotations

- Use active learning techniques to optimize annotation efforts

- Continuously review and refine your annotations

- Use specialized tools and expertise for large datasets

Conclusion

Developing a solid annotation strategy is crucial for machine learning success. By understanding the importance of annotation, overcoming challenges, and following a step-by-step guide, you can create a well-annotated dataset that drives business insights. Remember, annotation is an ongoing process that calls for continuous refinement and improvement. By investing time and effort into annotation, you'll reap the rewards of accurate and efficient machine learning models. In the world of machine learning, data is king, but annotation is the key to unlocking its potential. Don't neglect this critical step – develop a solid annotation strategy and unlock the insights hidden in your data.

For high-quality annotation services, consider partnering with TagX, the industry leader in data annotation. TagX's expert annotators and cutting-edge tools ensure accurate and efficient annotation, empowering your machine-learning models to reach their full potential. Take the first step towards machine learning success.

Contact TagX for your data annotation needs today!

Visit us: www.tagxdata.com

Original source: www.tagxdata.com/from-data-to-insights-developing-a-solid-annotation-strategy


Text

Reasons To Outsource Your Data Annotation: The Ultimate Guide

Businesses are looking to improve the efficiency and accuracy of their data processing while staying within budget. They collect and analyze data to gain valuable insights, and a critical part of this process is data annotation: labeling and categorizing data to improve its accuracy and usability. However, annotating data can be time-consuming and resource-intensive, which is why many companies outsource their projects to professional service providers. Let’s explore data annotation and the reasons to outsource your data annotation work.


Text

https://saxon.ai/blogs/data-annotation-the-key-to-accurate-and-reliable-ai/


Text

A Guide to Choosing a Data Annotation Outsourcing Company

Clarify the Requirements: Before evaluating outsourcing partners, it's crucial to clearly define your data annotation requirements. Consider aspects such as the type and volume of data needing annotation, the complexity of annotations required, and any industry-specific or regulatory standards to adhere to.

Expertise and Experience: Seek out outsourcing companies with a proven track record in data annotation. Assess their expertise within your industry vertical and their experience handling similar projects. Evaluate factors such as the quality of annotations, adherence to deadlines, and client testimonials.

Data Security and Compliance: Data security is paramount when outsourcing sensitive information. Ensure that the outsourcing company has robust security measures in place to safeguard your data and comply with relevant data privacy regulations such as GDPR or HIPAA.

Scalability and Flexibility: Opt for an outsourcing partner capable of scaling with your evolving needs. Whether it's a small pilot project or a large-scale deployment, ensure the company has the resources and flexibility to meet your requirements without compromising quality or turnaround time.

Cost and Pricing Structure: While cost is important, it shouldn't be the sole determining factor. Evaluate the pricing structure of potential partners, considering factors like hourly rates, project-based pricing, or subscription models. Strike a balance between cost and quality of service.

Quality Assurance Processes: Inquire about the quality assurance processes employed by the outsourcing company to ensure the accuracy and reliability of annotated data. This may include quality checks, error detection mechanisms, and ongoing training of annotation teams.

Prototype: Consider requesting a trial run or pilot project before finalizing an agreement. This allows you to evaluate the quality of annotated data, project timelines, and the proficiency of annotators. For complex projects, negotiate a Proof of Concept (PoC) to gain a clear understanding of requirements.
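When evaluating a pilot batch, one practical check is to compare the vendor's labels against a small gold sample that you have labeled yourself. The sketch below assumes both sets are dictionaries mapping an item id to a single class label, and it counts items missing from the delivery as errors; the function name and the sample data are hypothetical.

```python
from collections import defaultdict


def score_pilot(gold, delivered):
    """Compare vendor labels with a trusted gold sample.

    Both arguments map item id -> label. Returns overall accuracy and
    per-class accuracy, computed over the gold items only. Items missing
    from `delivered` count as errors.
    """
    if not gold:
        raise ValueError("gold sample must not be empty")
    hits = defaultdict(int)
    totals = defaultdict(int)
    for item_id, truth in gold.items():
        totals[truth] += 1
        if delivered.get(item_id) == truth:
            hits[truth] += 1
    overall = sum(hits.values()) / len(gold)
    per_class = {label: hits[label] / totals[label] for label in totals}
    return overall, per_class


gold = {"a": "car", "b": "car", "c": "person", "d": "person"}
delivered = {"a": "car", "b": "person", "c": "person"}  # "d" was not delivered
overall, per_class = score_pilot(gold, delivered)
print(overall, per_class)
```

Per-class accuracy is worth checking alongside the overall figure, because a high overall score can hide weak performance on rare classes.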

For detailed information, see the full article here!


Link

Status update by Maruful95

I'm Md. Maruful Islam, a proficient Bangladeshi data annotator trainer. At the moment, I consider it an honour to be employed by Acme AI, the leader in the data annotation industry. Throughout my career, I've become skilled at using a range of annotation tools, including SuperAnnotate, Kili, CVAT, Tasuki, FastLabel, and others. I am a well-respected professional in the field, having produced consistently excellent annotations. My certifications for GDPR, ISO 27001, and ISO 9001 further guarantee that privacy and data security regulations are adhered to. I sincerely hope you will give my application some thought. As a data annotator, I'd like to know more about this project and provide recommendations based on my knowledge. Fiverr: https://www.fiverr.com/s/vqgwlL Upwork: https://www.upwork.com/services/product/design-ai-segmentation-labeling-bounding-box-for-precision-1746946743155208192?ref=project_share


Text

Excited to share some insights on Best Practices in Data Annotation. By following these strategies, you can ensure reliable outcomes and uphold ethical standards in your Machine Learning projects.

Check out these key points:

1- Establish Clear Ethical Guidelines

2- Implement Rigorous Quality Control Measures

3- Provide Comprehensive Training for Annotators

4- Foster an Iterative Feedback Loop

5- Prioritize Anonymization and Privacy

6- Ensure Diversity and Bias Mitigation

Let's optimize, annotate, and succeed together

Ready to elevate your ML projects? Dive into these best practices and make a difference.

Read More: https://www.damcogroup.com/blogs/data-annotation-services-fueling-the-future-of-ai-ml-applications


Text

Unlock the full potential of your AI and machine learning models with GTS expert data annotation services. Our team specializes in precise and accurate labeling, ensuring your data is meticulously prepared for high-performance algorithms. From image and video annotation to text and audio tagging, we offer a comprehensive range of services tailored to meet your specific needs. Trust us to deliver the quality and reliability that your AI projects demand.


Text

OCR technology has revolutionized data collection processes, providing many benefits to various industries. By harnessing the power of OCR with AI, businesses can unlock valuable insights from unstructured data, increase operational efficiency, and gain a competitive edge in today's digital landscape. At Globose Technology Solutions, we are committed to leading innovative solutions that empower businesses to thrive in the age of AI.

#OCR Data Collection#Data Collection Compnay#Data Collection#globose technology solutions#datasets#technology#data annotation#data annotation for ml


Text

Data Annotation Guide – Definition, Techniques, Tools, Types and More

Data annotation is crucial for machine learning and AI, as it allows these systems to interpret raw data. Its significance will increase as AI's potential is further explored.

To understand data annotation better, let's explore its definition, techniques, tools, types, and other aspects critical for effective machine learning and deep learning algorithms.
