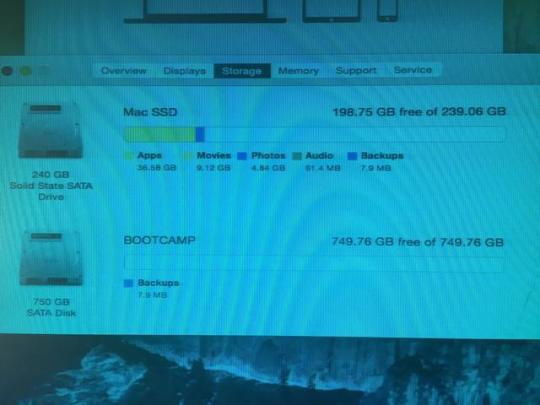

i cant believe the day but i finally got a full tower pc. bought it already built and at a considerable discount of some 320 dollars off. its fucking huge and theres so many things going on inside... i was initially planning on choosing the parts myself but finding the graphics card was so hard and everyone else convinced me to just buy it built and honestly? good. id probably have fucked this up so badly by myself

i cant use it yet bc i took too long to buy the monitor that was also on sale and now its regular price -_- tho i managed to find a discount used one for now. well see how that goes since ill get it tomorrow. i tested it on our living room tv and it had some kaspersky thingy open and like thats so cute. i hope they left some treats in the browsing history for me to search through before i wipe it clean

#its a hexer case and wouldnt you guess the front has a hexagonal pattern. so pretty..#it came with 3 fans installed there too that have a cmyk color style to them and it looks quite neat. im thinking of buying some leds to put#inside the case to go with my keyboard tho idk if id go that far tbh (< gamer rot is setting in. im not immune to pretty lighting..)#its also got a lot of unused space inside. im thinking of making more sculptures to put in. though idk if thatd be safe for it#bc cold porcelain is glue and water. what if it evaporates inside and suddenly everythings covered in a glue film#i wonder if varnish would help? the transparent nail polish sure didnt do shit it came off like 2 days after sculpting the rw slug sleeping#which like yeah of course. its nail polish. but i didnt expect it to flake since all it does is sleep on top of my laptop keyboard#i need miniature glass cake cover tops to encapsule every sculpture inside for safety#looking at it still no wonder these are called towers gotdamn its legit so huge..#it looks awkward tho bc i cant fully make it glue to the wall bc of the cables so its like. awkwardly a bit in front of the wall#im scaared as to how to tell if it ever gets too hot. on a laptop u just press ur head against the left half and feel how hot it is#i think im gonna need software for this.. sigh. tho maybe ill never get to that point since its supposed to be decent#AND its not 8 years old + the 3 fans and gpu fan and cpu fan. surely thats enough. the case even has space for more than that!!#the acrylic side reflects my keyboard too. so niceys. stimulation for my creature eyes#my desk is gonna be so fucked up when i have to organize everything too bc the one i have now is perfectly laptop-oriented#it sits on a custom wooden desk and the keyboard+drawing tablet sit below. but theres a shelf on top of my desk thats too low for the>#>normal monitor to sit to so i wont be able to use the custom desk. and i dont even know what ill do with my laptop either#finally a good change in my sad life routine fr. i cant wait to play watchdogs on this and overgrowth and other ones#AND LAGLESS KRITA SMUDGE ENGINE BRUSHES!!! AND DOUBLE BRUSHES. THEYRE SO LAGGY#A N D ACTUAL FULL HD NORMAL MONITOR. maybe that will get me to not draw in small canvases anymore#now im anxious i just want the day to be over to get the monitor tomorrow aouugh.. just bc i started coding my resources neocities page#dextxt#<the 'major life events' ((sorta)) tag returns. one for the books.. if something bad happens.. itll be here to remind me of the good times


Best gaming laptops in 2020

The gaming industry is growing day by day and has a lot of scope for the future. Many pro gamers make a handsome amount of money through gaming, and if you too want to step into the gaming industry, you should read this article. If you think you can be a pro gamer using an old PC that is as thick as a suitcase, you are probably mistaken. Nowadays there are a lot of laptops on the market that have raised the bar. There are laptops as powerful as the Asus ROG Zephyrus and the HP Pavilion. If you have the budget, the Dell Alienware is the best gaming laptop right now. Nowadays you can get good gaming laptops with Nvidia GeForce graphics processors, which give an out-of-this-world experience.

Before starting the list, I would like to tell you that I have done a lot of research to put it together. So please share this with your friends and leave your feedback in the comments.

The best gaming laptop you can buy now

1. Asus ROG Zephyrus

There are a lot of laptops out there, but I showcase the best gaming laptops here. Starting off the list at number one, we have the Asus ROG Zephyrus. Asus has consistently improved and is now emerging as one of the best gaming laptop makers. It has a 9th-generation Intel Core i7-9750H processor, which makes it an absolute beast.

You can check out the full list of features here:

Processor: 9th Gen Intel Core i7-9750H Processor base speed 2.6GHz (12M Cache, up to 4.5GHz, 6 Cores)

Memory & Storage: 16GB DDR4 2666MHz RAM, upgradeable up to 32GB | Storage: PCIe NVMe 512GB M.2 SSD with an additional 1x M.2 slot for PCIe SSD expansion.

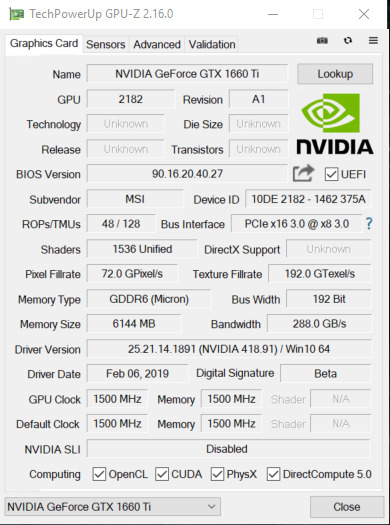

Graphics: NVIDIA GeForce GTX 1660 Ti GDDR6 6GB VRAM

Display: 15.6-inch Full HD (1920x1080) anti-glare IPS-level panel, Refresh Rate 144Hz, 3ms, 100% sRGB, Pantone Validated.

Operating System: Pre-loaded Windows 10 Home with lifetime validity

Design & Battery: 18.9mm Thin | Magnesium-Alloy Chassis | Super-narrow bezel frame | Laptop weight 1.93kg | Lithium battery 4-Cell | Battery Life up to 8 hours*

Cooling System: Anti-Dust Self-Cleaning Tunnels | 83 Fan Blades | 0.1mm Ultraslim Fins | 5 Pipes CPU/GPU/VRM

2. HP Pavilion Gaming

HP has a good track record in making laptops. It has always satisfied its customers with the quality of its products. The HP Pavilion is yet another good gaming laptop from HP. It is affordable and has good features for its price range. Though it has an i5 processor, it is a good one to start with. It is one of the best affordable gaming laptops in this range. Here is the features list:

Processor: 9th Gen Intel Core i5-9300H processor (2.40 GHz base processor speed, 8 MB cache, 4 cores), Max Boost Clock up to 4.10 GHz

Operating System: Pre-loaded Windows 10 Home with lifetime validity

Display: 15.6-inch Full HD (1920 x 1080) SVA anti-glare WLED-backlit display

Memory & Storage: 8GB (1x8GB) DDR4 RAM | Storage:1TB HDD Hybrid Storage | M.2 Slot available

Graphics: NVIDIA GeForce GTX 1050 4GB GDDR5 Dedicated Graphics

Design & Battery: Laptop weight: 2.17 kg | Lithium battery

Warranty: This genuine HP laptop comes with 1 year domestic warranty from HP covering manufacturing defects and not covering physical damage.

3. Lenovo Legion Y540

Lenovo is a trustworthy and good company. It has consistently delivered good laptops and always strives to improve. The Legion Y540 is another satisfying gaming laptop. It has a 9th-gen i5 processor, which is pretty fast and gives you a good experience while gaming. The features list is a bit long for all the laptops, but you know that bigger is better. The features of this laptop are:

Processor: 9th Generation Intel Core i5-9300H, 2.4 GHz base speed, 4.1 GHz max speed, 4 Cores, 8MB Smart Cache

Operating System: Preloaded Windows 10 Home, with lifetime validity

Display: 15.6-inch screen with (1920X1080) full HD display | Anti Glare technology | IPS display | 250Nits | 60Hz Refresh Rate

Memory and Storage: 8 GB RAM | Storage 1TB SSD

Design and Battery: Laptop weight: 2.3Kg | Thin bezeled gaming laptop | Battery Life: 5 hrs

Warranty: This genuine Lenovo laptop comes with 1 year domestic warranty from Lenovo covering manufacturing defects and not covering physical damage.

Pre-Installed Software: Windows 10 Home, Office Home and Student 2019 | Inside the box: Laptop, Charger, User Manual

4. Dell Gaming-G3

The Dell Gaming G3 is a masterpiece from Dell, one of the most trusted brands for good gaming laptops. You can buy this laptop with your eyes closed. It has a 4GB Nvidia 1650 graphics card and comes with Windows 10. You can use it to play any game you want and get a smooth, lag-free experience. Check out its cool features:

2.6GHz Intel Core i7-9750H 9th Gen processor

8GB DDR4 RAM

1TB 5400rpm hard drive

15.6-inch screen, NVIDIA 1650 4GB Graphics

Windows 10 operating system

10 hours battery life, 2.5kg laptop

1 year warranty

5. Acer Nitro 7

Acer has a lot of experience in making good gaming laptops, and a very good history of making some of the best. The Acer Nitro 7 is a thin and light gaming laptop. It comes with a 4GB 1650 graphics card and Windows 10. Good for all gamers. Don't forget to check out the features:

2.40 GHz Intel Core i5-9300H 9th Gen processor

8GB DDR4 RAM

1TB SSD

15.6-inch screen, NVIDIA GeForce GTX 1650 with 4 GB of dedicated GDDR5 VRAM

Windows 10 Home 64 bit operating system

7 hours battery life, 2.5kg laptop

Processor: Intel Core i5-9300H processor, turbo up to 4.10 Ghz | Display: 15.6" display with IPS (In-Plane Switching) technology, Full HD 1920 x1080, high-brightness (300 nits) Led Backlit

Memory: 8 GB of DDR4 system memory, upgradable to 32 GB using two soDIMM modules | Storage: 1 TB SSD

Graphics: NVIDIA GeForce GTX 1650 with 4 GB of dedicated GDDR5 VRAM | Pre-installed: Windows 10 Home (64 bit)

Warranty: One-year International Travelers Warranty (ITW)

Bonus for you all

This bonus laptop is the best for gamers, but you need a high budget. I am talking about the Alienware, manufactured by Dell. It is a dream laptop for every gamer. It comes with 8GB Nvidia 2080 graphics. Features:

2.4GHz Intel Core i9-9980HK 9th Gen processor

16GB DDR4 RAM

5400rpm hard drive

15.6-inch screen, NVIDIA 2080 8GB Graphics

Windows 10 Home operating system

10 hours battery life, 2.16kg laptop

1 year manufacturer warranty

Goodbye, and make sure you share this post and leave a comment.


6 Years Later - Reviewing My Build's Successes and Failures At 30,000 Hours of Uptime

So here it is, folks. The 4 grand, big brand, first hand grandstand on an often-neglected topic – what to expect of your machine after years of hard use. Listed below is the encased case I'll be studying for this piece. Components that look like this are in the machine currently. Components that look like this were replaced with upgrades. Components that look like this failed in service.

| Type | Item | Price |
| --- | --- | --- |
| CPU | Intel - Core i5-4670K 3.4 GHz Quad-Core Processor | $230 |
| CPU Cooler | Noctua - NH-D14 64.95 CFM CPU Cooler | $74.95 @ Amazon |
| Motherboard | Asus - Z87-Pro ATX LGA1150 Motherboard | ~$180 |
| Memory | Mushkin - Redline 8 GB (2 x 4 GB) DDR3-1866 Memory | ~$100? |
| Upgraded Memory | El Cheapo Nemix 32 GB (4 x 8 GB) DDR3-1600 Memory | $150.00 |
| Storage | Samsung - 840 Series 120 GB 2.5" Solid State Drive | ~$120? |
| Storage | Seagate - Barracuda 1 TB 3.5" 7200RPM Internal Hard Drive | $58.49 @ OutletPC |
| Replacement Storage | Western Digital - BLACK SERIES 2 TB 3.5" 7200RPM Internal Hard Drive | $119.16 @ OutletPC |
| Video Card | Asus - GeForce GTX 780 3 GB DirectCU II Video Card | $650 |
| Upgraded Video Card | EVGA GeForce GTX 1080 FTW GAMING ACX 3.0 | ~$650 |
| Replacement Video Card | EVGA GeForce RTX 2070 XC ULTRA GAMING | $0 |
| Case | Fractal Design - Define R4 (Black Pearl) ATX Mid Tower Case | ~$100 |
| Power Supply | Fractal Design - Newton R3 600 W 80+ Platinum Certified Semi-Modular ATX Power Supply | ~$100 |
| Optical Drive | Asus - DRW-24F1ST DVD/CD Writer | $21.39 @ OutletPC |
| Operating System | Microsoft - Windows 8 OEM 64-bit | ~$100 |
| Operating System | Microsoft - Windows 10 64-bit | $0 |
| Case Fan | Fractal Design - FD-FAN-SSR2-140 66 CFM 140mm Fan | $13.89 @ SuperBiiz |
| Monitor | Asus - VG248QE 24.0" 1920x1080 144 Hz Monitor | $246.00 @ Amazon |
| Monitor | Asus - VG248QE 24.0" 1920x1080 144 Hz Monitor | $246.00 @ Amazon |
| Upgraded Monitor | LG - 34UM95 34.0" 3440x1440 60 Hz Monitor | $750.00 |
| Total | | ~$4,000 |

Generated by PCPartPicker
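As a quick sanity check on that ~$4,000 figure, the listed prices can be summed. This is only a sketch: the `PARTS` list and `total_cost` helper are illustrative names, not part of the original list, and the "~" and "?" estimates are taken at face value.

```python
# Prices copied from the parts list; approximate entries ("~$100?") are
# treated as exact, which is an assumption made for this sketch.
PARTS = [
    ("CPU", 230.00),
    ("CPU Cooler", 74.95),
    ("Motherboard", 180.00),
    ("Memory", 100.00),
    ("Upgraded Memory", 150.00),
    ("Storage (SSD)", 120.00),
    ("Storage (HDD)", 58.49),
    ("Replacement Storage", 119.16),
    ("Video Card", 650.00),
    ("Upgraded Video Card", 650.00),
    ("Replacement Video Card", 0.00),
    ("Case", 100.00),
    ("Power Supply", 100.00),
    ("Optical Drive", 21.39),
    ("Operating System (Windows 8)", 100.00),
    ("Operating System (Windows 10)", 0.00),
    ("Case Fan", 13.89),
    ("Monitor", 246.00),
    ("Monitor", 246.00),
    ("Upgraded Monitor", 750.00),
]


def total_cost(parts):
    """Sum the price column, rounded to cents."""
    return round(sum(price for _, price in parts), 2)


if __name__ == "__main__":
    print(f"Listed parts total: ${total_cost(PARTS):,.2f}")
```

Under those assumptions the listed parts come to roughly $3,910, which is consistent with the rounded ~$4,000 total.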

As you can tell I had two failures, both of them pretty major. I'll cover them a little farther down.

Starting off, these are the goals I had in mind when building this machine: First and foremost, I wanted the best performance in flight simulators and CAD/CAM software that I could justify spending for. I wanted perfect snappiness in Windows, MS Office, and web browsers. Second, I wanted longevity. Third, silence. I'd say this build achieved all of those things... but I have a few warnings for people looking to build a rig with a similar mindset.

I had to make multiple upgrades to the machine for it to keep up with the expanding RAM, VRAM, and storage requirements as sims like DCS got extra content and released updates with power-hungry graphics improvements. Also I may have purchased a much larger monitor and a VR headset... sorry 780.

If you have to skimp on things, don't skimp on the CPU, motherboard, or PSU. Although I have had the urge to get an M.2 SSD and upgrade my CPU for some time now (although really it's still keeping up perfectly fine), the fact that my current motherboard and RAM will also need to be replaced makes that unjustifiable. At this moment, for me to upgrade to an i7-8700K and an M.2 without losing RAM would cost about $1,200. Totally out of the ballpark.

Expect to have failures and do maintenance. I was lucky and had no DOA parts in the build, and the thing ran absolutely flawlessly for years. However about 5 years into the life of the machine, the 1TB storage drive suffered a soft failure. I noticed obvious performance issues, and with drive health monitoring software open I watched it slowly die as I attempted to transfer all the files I wanted elsewhere. I got everything important, but shit. You know the saying that while SSDs have a built-in service life, HDDs either fail within the first couple years or last until obsolescence? Ahhh... not in my experience. Anything I build from now on will probably be all-SSD.

(3 cont'd) As for the 1080 that died, that was much more dramatic. I'm flying along in the sublime DCS F/A-18C recreating Mongo's MiG-21 shootdown in the Gulf War when all at once the computer instantly powers off with a pop and the screen goes black. I'm thinking "...power outage?" until I smell it – something let the smoke out. After a postmortem I decide the smell had to have come from the GPU. So I throw in the old 780 and it boots up – but no video output. Shit. Video output from the IGPU works fine though? Huh. So I try a different PCIe slot and what do you know... I'm pretty sure my 1080 fried the only 16x slot on the board. Not too big a deal to run on 8x but now I feel the machine is in its twilight years with one of the newest components in the rig failing so spectacularly and running with a damaged motherboard. Being realistic though, I won't be at all surprised if this thing will keep going another 6 years or more with an SSD change.

Warranties matter as much or more than quality. At first, I went all-in on the highest quality parts I could get without paying any attention to the warranty service. To this day I still consider the Asus 780 DCUII an incredibly well-built card. When I retrieved it to replace the blown-up 1080 I was impressed all over again with how sturdy it felt and just the quality of work Asus put into it. But all cards can fail, and if the same thing that happened to my EVGA 1080 had happened to my Asus 780... well, I'd have been shit out of both luck and $650. As it stands I'm actually getting an upgrade out of this catastrophe (albeit still being left with a dead PCIe slot).

Don't bother with watercooling, not even AIOs except in very specific use cases. It's not anywhere close to being worth the headache for the vast majority of people going that route. The amount of additional maintenance and attention required to keep a watercooled rig going strong for so many years is way more than you're going to want to do. I know you're pretty into the hardware side of your computer now, but just trust me. You're going to be a substantially different person in 5 years, most likely one that wants a machine that just works without any doubts about water leaks, water line contamination, pumps dying, etc.

Shit's expensive, yo. Yes I know I didn't do my wallet any favors here, but just be aware that if you want to maintain a top-shelf rig for many years to come, get ready to shell out many thousands too. It's not a one-and-done purchase, even if you can handle falling behind the state of the art. I didn't even list all of my peripherals here. In addition to all of this I've also got a UPS, a Das Keyboard 4, monitor stand for the 34UM95 and an Ergotron arm mount for the VG248QEs, flight sim peripherals, headphones, DAC, and more. Plus power bills. I've honestly got no clue how much this thing has cost me in total. At least $5.5k. Was it worth it? Oh fuck yeah it was worth it. But I'm not exactly on a tight budget here... don't stretch yourself for something that is ultimately probably going to serve as much as a distraction from responsibility as it will a tool for bettering your life. It undeniably is the latter... but you don't need to spend nearly as much if you just want a productivity machine.

What would I have done differently with the initial build? Probably nothing. I probably should have gone all-SSD a year or two ago but that's fine. In the near future I'll just replace the OS drive and add a storage SSD. My machine has been an absolute pleasure to own, a dream come true after years of the shitty family computer (even by 90s standards) and countless craptops. If you have the means, I highly recommend picking one up.


Radeon RX 580: Review 2021 | Testing| Specs | Profit ( Good and Bad Side)

RX 580 mining: check out the specifications, hashrate, profitability and payback period of this card, as well as other critical information, before buying. After the release of the Radeon RX 580 video card, the entire 500 series became one of the most profitable options, not only for gamers but also for mining. Cards were bought up immediately after they appeared in stores, and the resulting shortage significantly influenced their price.

The cost of the RX 580, even from the “cheapest” vendors, has grown by at least 30-40%. This greatly increased the payback period of the card and shook its leading position.

Please note: this review was done earlier than 2021, so the rate you would earn will vary once currency exchange rates are taken into consideration.

Is the RX 580 the best and most promising choice for miners in 2018, and is it worth paying attention to this video card today when building a farm from scratch? In this article we will look at all the features, calculations and potential of the card for mining today and in the future.

Specifications and power Radeon RX 580

First, let's briefly review the technical characteristics of the card, which will help in understanding its relevance for mining and its place among the top cards. There are two versions of the RX 580, with 4 and 8 gigabytes of memory. Otherwise there are almost no differences between the cards, and the relevance of the “extra” 4 GB of memory is considered in the following sections.

- Graphic Engine: AMD Radeon RX 580

- Bus Standard: PCI Express 3.0

- Video Memory: 8GB GDDR5

- Engine Clock:

  - 1380 MHz (OC Mode)

  - 1360 MHz (Gaming Mode)

- Stream Processors: 2304

- Memory Speed: 8 Gbps

- Memory Interface: 256-bit

- Resolution: Digital Max Resolution: 7680x4320

- Interface:

  - 1 x DVI-D

  - 2 x HDMI 2.0b

  - 2 x DisplayPort 1.4

- HDCP Support: Yes

- Maximum Display Support: 4

- Software: ASUS GPU Tweak II

- Dimensions: 9.53" x 5.07" x 1.49"

- Recommended PSU: 500W

- Power Connectors: 1 x 8-pin

- Slot: 2 Slot

If you take the top-end RX 580 NITRO from Sapphire, the characteristics will be as follows:

- The core frequency is 1340 MHz for silent mode and 1411 MHz for maximum boost.

- Memory: 8,192 MB with a 256-bit bus and a frequency of 2 GHz.

- 225 watts peak power.

However, this is the most powerful card, overclocked to the maximum at the factory. Solutions from other vendors will have lower frequencies, and what matters there is not only their overclocking potential but also some of the manipulations associated with the firmware.

The cornerstone of mining is overclocking. In terms of importance, this criterion is second only to the price of the video card itself, which determines the payback and the ratio of income to investment. As with other video cards, everything depends on the memory manufacturer. In 2018, it was almost impossible to find even the top NITRO and Limited Edition cards with Samsung memory.

Most of the cards come with Hynix memory, whose overclocking potential is significantly lower, which will certainly affect the overall mining profit of the farm. This is not critical, but when you have a choice, you should always prefer cards with Samsung memory.

Top 10 Questions Asked on Amazon Before Buying - Radeon RX 580

XFX Radeon RX 580 GTS Edition 1386MHz OC+, 8GB GDDR5, VR Ready, Dual BIOS, 3xDP HDMI DVI, AMD Graphics Card (RX-580P8DFD6)

Question 1: What would this equal to a GeForce card?

Answer: Between 1060 and 1070

Question 2: Could this one be built into a mini case?

Answer: No, it barely fit in my mid-size NZXT with no modification needed

Question 3: Is it worth upgrading my nvidia gtx 1050ti 4 gb gddr5 direct 12 graphics card to this one?

Answer: Yes, that's what i had before too gtx 1050ti replaced with this XFX RX 580 GTS

Question 4: Will the Radeon RX 580 card work with an msi h110m atx gaming motherboard?

Answer: Yes, all you need is a PCI-Express 3.0 x16 Slot, and be sure your PC case is big enough to support a GPU of this size.

Question 5: Can I play rainbow six siege with 8gb or 4gb?

Answer: That is something you need to find out. Look at the system requirements for the game. Easy Google search.

Question 6: It shows that this gpu needs 500 watt. I have a sonnet egfx breakaway box 550w with 80% efficient. it means it only have 440w. so can i use this gpu?

Answer: Yes. ( Customer Answered: I have a 750W PSU, the card causes random reboots, it is a terrible design that overloads the 8-pin rail. Check the internet for issues with this particular manufacturer.)

Question 7: Does this card come with free games?

Answer: It did, when purchased it

Question 8: Hey I am just worried , so I ordered nzxt mid tower and will this video card fit in??

Answer: It should.

Question 9: The Radeon RX 580, is this card compatible with a dell xps 8700?

Answer: Sure it does, as long as you have a case that will fit the card.

Question 10: I have a ryzen 5 2400g will the rx 580 8gb be better than the gtx 1060 ti 3gb or 6gb for gaming?

Answer: I average 120 - 150 fps in rainbow 6 siege if that helps? that on 144hz monitor: ( Customer had this to says as well - The 580 would be a better match because it is around the gtx 1070 in performance.)

Best Review Posted on Amazon Before Buying - Radeon RX 580

Customer Review 1 of Radeon RX 580: This card can handle games(destiny, fortnite, Pubg...etc) at high FPS with no issues. The card does use more power than an nvidia card with similar specs and also creates more heat. But at the same time value for the spec is great. As a product I would give it 4 stars however, XFX's warranty service is surprisingly easy and fast. My card broke after a year of use, so I registered the product on their site, I received a response within 24 hours. They troubleshooted and determined the card needs to be RMAed, I sent the card back and I received a new one within a week after they received the defective card. They didn't even require the receipt(required by most companies) even though I had it. It's amazing service compare to my PNY nvidia experience which was like pulling teeth. I will definitely buy more XFX products in the future.

Customer Review 2 of Radeon RX 580: Used to be a big Nvidia fan. Then, I started encountering problems with their drivers. Fine, rolled back my driver to an older version. Then, my GTX 970 stopped working after only 1 year of use. Fine, sent it in and received a refurbished one. The refurbished one now has the same issue.

Bought the XFX GTS RX 580 8GB DDR5. 0 driver issues. Card still works after a few months and puts out a better picture then my 970. I'll update my review if something goes wrong but, for now, I'm extremely pleased with this card both in terms of performance and price. I'll be all to happy to continue buying AMD in future.

Customer Review 3 of Radeon RX 580: Shipping arrive on time and before I got home, which is a first. A few things to note:

1: This is a fairly large GPU, if you don't have a Full Tower, or an opened-air mobo, your mileage with fitting this thing in a Mid Tower WILL differ

2: My old GPU require two 6-pin, while this GPU required one 8-pin, you will either need an 8-pin or a 6+2 pin to connect your power supply to your GPU, if you don't have a powersupply that you modify, well you will need a new one.

3: For the price, this GPU is very strong, even if it is only a rehash of the 480... and being a year old. It can still run newer titles like Monster Hunter: World without too many problems.

4: Price-wise, having to compete with cryto-miners may increase the price of this GPU from time to time... very annoying.

5: This is a major update from my old HD 7950 (which still works for many games that are moderately intense and are new.)

Worst Review Posted on Amazon Before Buying - Radeon RX 580

Bad Customer Review of the Radeon RX 580 on Amazon: After about 2 months of having this card, it had fried itself. I was playing fallout when my computer crashed and upon trying to start it up, my USB keyboard and mouse would get power for their LED's but the computer would not start, no fans would spin. A few days later I tried it again to which sparks shot out of my video card. It would have likely fried my whole computer if I hadn't unplugged my power strip from the wall. I later tried another video card that a friend gave me to which I downloaded the drivers for and it worked pretty much fine. Very disappointed in this product as it nearly ruined my entire computer.

Bad Customer Review 2 of the Radeon RX 580 on Amazon: When playing intensive full screen games or benchmarks two of the three display ports have issues with intermittent black screens. Confirmed on TWO copies of this card (I bought one, it started doing it, exchanged the card for another, same exact issues). Have spent a decent amount of time doing all the normal troubleshooting (fresh install of Windows, clean and re-installation of AMD drivers, manually playing with the voltage and frequency of the card, etc).

Please note: In spite of the bad reviews from a few customers, we experienced no such issues (not saying you won't), and we would comfortably recommend this product for mining cryptocurrency based on our testing, which continues below.

How to increase the potential of the Radeon RX 580 in mining

Even in the case of good versions of the RX 580 (Pulse and others), the potential of the card out of the box can hardly be called incredible. If you look at the average power of the video card, excluding the top solutions with good factory overclocking, you can count on the following indicators:

- Equihash - 302-310 SOL / s.

- X11gost - 8.4-8.6 MH / s.

- Daggerhashimoto - 26.1-26.8 MH / s.

- Pascal - 0.85 GH / s.

The best use for current Radeon cards is Ethereum mining. It is there that the cards' maximum potential is revealed, so farms built on the RX 500 series are usually assembled for this key currency. Mining other coins is impractical; it is a direct loss of profit.

Initially, most 580s give 18 to 22.5 MH/s without overclocking. Against the possible 30-31 MH/s that owners of Radeon farms with the top model of the 500 series aim for, the card's mining capacity at factory settings is not too high. A prerequisite for a better result is flashing the BIOS firmware. This can be called one of the conditional drawbacks of AMD cards, because of which some miners choose NVIDIA.

However, with successful overclocking and reduced energy consumption, the yield of the RX 580 will be quite high, especially as the price and overall attractiveness of Ethereum rise. In the absence of sufficient knowledge, it is better to entrust the firmware to specialists. They will do it quickly and without the risk of ending up with a so-called “brick”, that is, a “dead” card that cannot be returned under warranty.

After flashing, the average potential of the cards increases from 18-22 MH/s to a minimum of 26.5 MH/s. With good memory and successful overclocking, it is quite possible to get 28-30 MH/s, at which point the RX 580 becomes one of the best video cards in terms of payback. On average, experts recommend aiming for a 10-15% overclock. This is the best balance of temperature, energy consumption and hashrate.
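To put the before-and-after figures in perspective, the relative hashrate gain can be worked out directly. The snippet below is a minimal sketch; `uplift_percent` is a hypothetical helper name, and the inputs are simply the MH/s ranges quoted above.

```python
def uplift_percent(before_mhs, after_mhs):
    """Relative hashrate gain, in percent, going from `before_mhs` to `after_mhs`."""
    if before_mhs <= 0:
        raise ValueError("before_mhs must be positive")
    return (after_mhs - before_mhs) / before_mhs * 100.0


if __name__ == "__main__":
    # Ranges quoted in the text: 18-22 MH/s stock, at least 26.5 MH/s after
    # flashing, 28-30 MH/s with good memory and a successful overclock.
    for before, after in [(22.0, 26.5), (18.0, 26.5), (22.0, 28.0), (18.0, 30.0)]:
        print(f"{before:>5.1f} -> {after:>5.1f} MH/s: +{uplift_percent(before, after):.1f}%")
```

Even the most conservative pairing (a card already at 22 MH/s reaching only the 26.5 MH/s minimum) is a gain of about 20%, which is why flashing is treated as a prerequisite and not an optional extra.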

Choosing the best OS - Radeon Rx 580

The correct choice of operating system can significantly simplify work with the farm and even reduce costs. In the contest between Windows and Linux, farms on the RX 580 usually go with the latter. Hive OS, a Linux-based system, has several important advantages over Windows, the most significant of which are:

- There is no limit on 8 video cards.

- No need to face the difficult process of choosing the right drivers.

- The OS was originally developed and adapted for mining.

- Ability to work without monitors (using emulators).

- No need to have an SSD or HDD, a regular 8-16 GB flash drive is sufficient.

- WatchDog is built into the system, you do not need to pay extra for it (as is the case with Windows).

- Easy setup and functional remote monitoring.

- No need to buy an expensive license.

- Telegram notifications in real time.

You also need to consider that in case of installation of pirated copies of Windows, the farm can be confiscated, as it is stipulated by law. With Linux, there will be no such problems, which is another important advantage. One of the few advantages of Windows, namely the ability to use a video card for tasks other than mining, is irrelevant, because 99% of the farms are originally created specifically for mining cryptocurrency. This is the main task.

Farm payback with RX 580

One of the most important criteria when choosing a video card for mining is its payback. It depends on many conditions, some of which are variable, that is, they can change constantly. This applies above all to the fall or rise of the cryptocurrency exchange rate.

Given the relevance of Ethereum, the total payback period for the RX 580 can be 7-9 months with good timing (accumulating currency in the wallet and selling after peak rises), or 12-15 months otherwise. This period is influenced by the following factors (in descending order of significance):

- The Ether exchange rate.

- Firmware (that is, the disclosure of the potential of a particular card in terms of overclocking, power consumption, etc.).

- The original cost of cards.

- Total investment in the farm (any savings, for example, on the HDD, reduces costs and accelerates payback a little).

Experienced miners recommend against selling mined Ether on a fixed schedule. It is best sold only when the price is rising strongly. In general, even under adverse conditions, the payback period for a video card in 2018 is approximately 15-18 months. Given current mining conditions and farm profitability, that is not the worst figure.
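As a rough illustration of how these factors combine, the sketch below estimates a payback period from the total farm investment and the monthly net income. The function name and all dollar figures are hypothetical placeholders chosen for the example, not measurements from a real farm.

```python
import math


def payback_months(total_investment, monthly_revenue, monthly_power_cost):
    """Return the number of whole months needed to recover the investment.

    Returns None when the farm never pays back (net income is zero or negative).
    """
    net_income = monthly_revenue - monthly_power_cost
    if net_income <= 0:
        return None
    return math.ceil(total_investment / net_income)


# Hypothetical figures: a farm costing $3000 with $100 of electricity per month.
farm_cost = 3000.0
power_cost = 100.0

# A higher Ether price raises monthly revenue and shortens the payback period.
print(payback_months(farm_cost, 400.0, power_cost))
print(payback_months(farm_cost, 280.0, power_cost))
```

Changing the revenue argument shows why the exchange rate sits at the top of the list: it moves the result far more than a small saving on the initial investment does.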

The choice between the 4 GB and 8 GB versions of the Radeon RX 580 is almost always clear-cut. While the 3 GB version of the GTX 1060 can still be considered very promising, for AMD cards, on which Ether is usually mined, only the 8 GB version is preferable. Of course, once the memory required for mining exceeds what the 4 GB version allows, you can switch to mining other cryptocurrencies, but this is not the best option.

Under these conditions, it will not be possible to mine Ether on 4 GB cards after February 6, 2021. Given the modest price difference between the 4 GB and 8 GB versions, in most cases it is better to buy the card with more memory for the long term.

As far as vendors are concerned, there is not much difference. Buying top solutions, for example, NITRO, is not always profitable. Despite the fact that they provide good cooling and increased power, their cost is much higher than that of the "regular" versions.

It is much better to buy the Asus Dual or other cards in the same price category. The price difference between them and the NITRO and LE versions is about $150, but the right firmware almost completely closes the performance gap, bringing all the cards to approximately the same hashrate (±3-4%).

Conclusion

Although the Radeon RX 580 is no longer a top-notch solution, the card still holds its position. It is one of the most sensible choices for mining in the medium and long term. With the right approach to buying cards for the farm and selling the mined Ether, you can cut the payback period almost in half, making the RX 580 a leader by this criterion.

The only significant drawback may be the mandatory BIOS firmware flashing. But this issue is easily solved with the help of specialists, so the drawback can be considered a conditional one.


RX 580 series graphics card AMD and profitable mining - compatible?

For cryptocurrency mining enthusiasts in our country, the mining performance of one video card or another is an increasingly pressing question. On top of electricity bills, miners face law enforcement agencies that are increasingly asking the president and the government to make cryptocurrency a state monopoly, which could leave private mining outside the law. And although mining becomes systematically less efficient over time (the longer it goes on, the longer it takes to "produce" one unit of cryptocurrency), Russian miners remain as committed as if it were still 2012.

Performance RX 580 Series

AMD's products have been the firm favorite among mining fans for many years running. Although its main competitor, Nvidia, has significantly improved how well its cards suit mining over the past five years, that company's mainstay is still the gaming industry and other media. The 8 GB RX 580 went into production in 2017 alongside the Radeon RX 570 and was picked up by a large number of manufacturers. The most popular versions are the models released by Sapphire and MSI.

The most popular model series - Features

By the end of summer 2017, the Sapphire NITRO had established itself as the main model of this line. In Western Europe, miners reportedly bought up the AMD Radeon RX 580 down to the last copy, to the annoyance of gamers who wanted the card to replay "The Witcher 3" at a good frame rate. Here is what the 8 GB RX 580 has to offer:

- A clock frequency of 1450 MHz, with headroom for overclocking;

- An impressive number of stream processors: 2304;

- 8192 MB of GDDR5 memory at a frequency of 2000 MHz.

In the numerous tests conducted by independent computer hardware publications, this graphics card showed no special aptitude for mining. Many experts even suspect that the card's commercial success owes more to "popular rumor" than to any actual benefit. When mining Ether (ETH for short) with the current version of Claymore's Dual ETH miner (version 9.2), our Radeon delivers just 22.5 MH/s. That figure is at factory settings, without any memory tuning.

Of course, the result is unimpressive. Apparently, the memory timings that go with the high clock frequency hold mining output back. We don't even dare to imagine how the more budget-oriented 4 GB RX 580 would fare. As for the RX 580 NITRO, both Western and Russian experts agree that Sapphire's flagship Radeon does not mine fast enough. Perhaps the expected update of the model, the so-called Sapphire Pulse, will change the situation for mining on the RX 580.

What is the competition?

Among the better-known competitors to the Sapphire is the MSI RX 580. Its Armor cooling system, whose fans spin up only once the temperature reaches 60 °C, matters to those who buy cards for mining as much as to anyone else: good, strong airflow protects the investment. Unlike the Sapphire and ASUS versions, this card can switch memory modes, which, again, matters more to gamers and office workers.

Mac Mini For Photoshop

The entry-level Mac mini offers a 3.6GHz quad-core i3 processor for £799/$799 which may not fulfil the needs of the typical designer, but the £1,099/$1,099 version offers a 3.0GHz 6-core i5. Apple FINALLY updated their Mac Mini product line late in 2018 (with a minor update in March 2020), making it a pretty good option for photographers to run Lightroom and Photoshop. This buying guide provides insight into which model and what configuration options photographers should consider. The best budget Mac for photo editing is the 2019 Mac Mini for about $900 and the best budget PC is the Dell Inspiron 3670 for about $650. Add the ViewSonic VA2719-2K-SMHD 27 Inch display for about $220 and a 4TB hard drive for another $100. So you are a photographer on a tight budget in need of a computer to run Lightroom and Photoshop. Hi John - The PA272W-BK-SV 27' 16:9 IPS Monitor with SpectraViewII from NEC comes equipped with the NEC SpectraViewII color calibration tool and features enhanced color accuracy covering 99.3% of the Adobe RGB color space, 94.8% of the NTSC color space, and 146.4% of the sRGB color space. With a variety of input connectors including DisplayPort, Mini DisplayPort, HDMI, and DVI-D Dual-Link, you.

Mac Mini For Photoshop Cs6

Mac Mini For Photoshop Software

What is Final Cut Pro X like running on a new Mac mini? We take a look at the new model, its features and how well FCPX performs. It even beats an iMac Pro in one of our tests!

Before we plug the new Mac mini in, it is important to understand that this version of the unit has changed. Changed a lot.

Back in 2005, the Mac mini was designed for switchers from PCs. It didn’t come with a screen, keyboard or mouse and keeping the price down helped make the transition to Mac OS 10.3 Panther and a PowerPC processor as painless as possible.

Times and technology have changed. There is no need now for the DVD slot or a spinning hard disk for storage.

The switchers of today are buying MacBook Airs and MacBook Pros as their first Macs, not the mini.

So this gives Apple a chance to change and retarget the use of the Mac mini. And that’s exactly what they have done with the new range of models.

But instead of offering (to quote Steve Jobs) a ‘stripped down Mac’ they’ve actually put the logic board on steroids!

The fourth generation Mac mini now has a choice of quad and 6-core processors, up to 64GB of RAM, up to 2TB of SSD storage and the option of a 10GigE port over the standard GigE.

There are also four USB-C Thunderbolt 3 ports fed from two controllers, an HDMI port that supports 4K and two USB 3 ports. So yes, you can plug your own keyboard and mouse in without having to buy any adaptors.

Can the user upgrade the RAM in the new machine? Yes, it is possible, but it is not a case of flipping up a slot and exchanging the cards out.

You have to remove the cooling fan and then slide out the logic board. Make a mess of it and you’ll invalidate the warranty, so it is best to stick to an Apple approved centre for the upgrade. Should you want to get your spudger out and see the insides of the new Mac mini, head over to the excellent iFixit site.

The machine on test is a 3.2GHz 6‑core 8th‑generation Intel Core i7, 32GB 2666MHz DDR4, Intel UHD Graphics 630, 1TB SSD storage and the 10 Gigabit Ethernet option.

If the colour of the Mac mini looks familiar, it is exactly the same as the iMac Pro and (after seeing them side by side) the Blackmagic external GPU. This also makes sense of the decision for Apple to sell the black keyboard and mouse and also probably hints at the new Mac Pro colour. (And possible footprint- a skyscraper sized oblong trashcan?)

Connecting it up

I’m lucky to have 2 10GigE connections on the back of my QNAP NAS. Final Cut Pro X needs fast drives to be able to build the ‘always live’ waveforms and thumbnails.

Plumbing the Mac mini into the edit system is easy and I’ve detailed how to do point to point 10Gig connection before if you haven't got a router/switch.

If you need the internet on the machine, a cheap USB to Ethernet adaptor from Amazon for $15 works as well as anything else. Run a speed test to make sure you are accessing the NAS via the higher speed route.

I powered a 4K monitor from the HDMI port; this works well and avoids any more dongles having to be bought.

After 25 years of Mac ownership, I have enough keyboards and a spare mouse to finish off the system!

Power On

The machine comes with Mojave installed and defaults to dark mode on the now silent boot. The machine is very quiet and can’t be heard over the fan of the nearby QNAP, which isn’t that loud either. This machine could easily sit on your desktop and not annoy you or your co-worker.

I read a recent review that the sound was pretty terrible out of the Mac mini. I’d disagree. It isn’t great, but it isn’t bad either. I’d say it was better than the old cylinder Mac Pro, but not in the league of the Mac notebooks. You wouldn’t want to use it as edit monitoring.

The Mac mini does have a headphone socket and I can see many editors sitting in offices with the machine on the desk and headphones on editing all day.

Although I worked the machine hard with rendering, the shell didn't get too hot. However I did feel the warm rush of air out of the back of the machine which caught me by surprise when the mini was angled away from me.

I wouldn't have it in this orientation for continuous use, ie situated under a central monitor post with cables pointing at you. Instead I suggest putting the cables and heat exhaust to the back. It will make plugging the headphones in a bit trickier, but I hate sitting in draught!

Final Cut Pro X Performance!

Let’s give it a real test and put it up against an iMac Pro!

I thought I’d dive straight in with a 4K Project and use a Library with a short minute and a half sequence with large still images, transitions, multiple title and adjustment layers.

It is in a Library that I duplicated to both machines with the media staying on the QNAP. The cache was set to both desktops respectively as the RAM in both runs at up to 3Gig speed.

Although I didn’t time it, it felt like the thumbnails were taking a longer time to draw than I’m used to on the iMac Pro. All render files were deleted before all tests.

Unrendered, the Mac mini played the sequence back in Better Performance, but dropped frames on Better Quality. The iMac Pro played back in both settings without a problem.

Skimming seemed just as fast as the iMac Pro and it didn’t feel underpowered when navigating the timeline or browser.

The iMac is an 8 core machine with 64GB of RAM and more importantly a Radeon Pro Vega 64 GPU.

Both machines are connected to the same storage via 10GigE and renders and exports went to the respective desktops. (Ok I know it is NBase-T which supports 1Gb, 2.5Gb, 5Gb and 10Gb)

Render time

Mac mini 7’03”

iMac Pro 1’43”

4K ProRes 422 Export

Mac mini 6’45”

iMac Pro 1’40”

As expected here, as FCPX uses the GPU for image processing, the much lower-powered Mac mini takes a lot longer.

Compressor Convert to HEVC 4K 8Bit

Mac mini 54”

iMac Pro 55”

Well, the Mac mini beat the iMac Pro! This is because all of the conversion is done on the CPUs.
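To put those timings side by side, here is a small sketch that converts the minutes-and-seconds figures above into seconds and works out how much slower (or faster) the Mac mini was in each test. The helper name `to_seconds` and the dictionary layout are my own choices for illustration; the timings are the ones listed above.

```python
def to_seconds(stamp):
    """Convert a timing such as 7'03" or 54" into a number of seconds."""
    # Normalise curly quotes, then drop the trailing seconds mark if present.
    cleaned = stamp.replace("’", "'").replace("”", '"').strip().rstrip('"')
    if "'" in cleaned:
        minutes, seconds = cleaned.split("'")
    else:
        minutes, seconds = "0", cleaned
    return int(minutes) * 60 + int(seconds)


# (Mac mini, iMac Pro) timings from the tests above.
results = {
    "Render": ("7'03\"", "1'43\""),
    "4K ProRes 422 export": ("6'45\"", "1'40\""),
    "Compressor HEVC 4K": ("54\"", "55\""),
}

for test, (mini, imac) in results.items():
    ratio = to_seconds(mini) / to_seconds(imac)
    print(f"{test}: Mac mini takes {ratio:.2f}x the iMac Pro time")
```

A ratio above 1 means the Mac mini was slower; the GPU-bound render and export land at roughly four times the iMac Pro's time, while the CPU-bound HEVC conversion comes in just under 1.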

Not really worth testing for Motion as apart from a few things like particles, Motion almost lives on the GPU.

Compressor Clusters

No, not a new breakfast cereal, but Compressor allows you to share the work out over connected machines.

I didn’t realise that the ability to set up a cluster of machines running Compressor was easy to do. Well, when I say easy my first attempt failed, but that might be down to my slightly quirky network topology with the QNAP.

To build a cluster, on the machines you want to add, open up a copy of Compressor. In the preferences, turn the option on for other computers to process batches.

Then on the host machine, make a cluster from the available machines in the list. Here you can see we have got something very wrong! We will be revisiting this topic with the issue fixed when we have more time.

Then having named your cluster (or other single machine), you can then toggle the processing destination in the dropdown menu on the bottom of the host machine's Compressor GUI.

To work properly and fast, all the machines need to be connected with 10GigE via a 10GigE switch. The cost of this networking is now a lot lower; Netgear and QNAP both make suitable budget switches.

There is no limit to the number of Mac minis you can have in a cluster. You'll probably run out of switch ports first!

One note here. For distributed processing, it has to be a self contained movie that gets automatically diced and sliced and sent off to the cluster machines.

Conclusions

Not what I expected. I guess I was in the state of mind thinking that the Mac mini wasn’t a serious machine for anything other than web browsing, Plex serving or basic Photoshop.

It’s a lot more than that. It is a component in building a modular system, which is a new thought considering that Apple has been criticised over the past few years for lack of upgradability in the Mac Pro and iMac Pro.

There are two reasons for this new direction. The first is the Thunderbolt 3 connections, giving the option of using an external GPU and therefore factoring out the limited onboard Intel offering.

The second is the option of a 10GigE port. Being able to connect to high speed shared storage without going through an adaptor is a huge plus.

Why? Take server centres for example. Every App on the iOS App Store has been compiled on a Mac. Rack up rows of Mac minis connected with 10GigE and you have a facility that can get apps ready quickly. No need for costly large GPUs here, all the work will be done by the CPU cores. Once set up, the Mac mini is more than happy to run in a headless mode. I've worked with a few producers like that.

This is also true of building a small Mac mini cluster to do the hard work of making all the different deliverables of an FCPX exported finished movie - while you carry on editing something else with your main machine. It would make sense for a large production or facility house to have a rack of these that everybody could access when needed.

Put five or ten of these together in a rack and you have a very fast DIT tool for making proxies and dailies on set. The Mac mini above gets its first on set DIT experience tomorrow!

I’ll leave you with a final thought.

Spec up a 6-core Mac mini with 32GB of RAM, 1TB of SSD storage and the soon to ship Blackmagic RX Vega 56 eGPU and you have a machine that’s not too far from the base model iMac Pro, wait for it... with over £1,200 left spare.

Granted, you’ll have to supply your own monitor, keyboard and mouse, but if upgradability is important to you, this could be a very clever way of getting the power with the flexibility.

Hopefully we will have an eGPU to test soon :)

Peter Wiggins is a broadcast freelance editor based in the UK although his work takes him around the world. An early adopter of FCP setting up pioneering broadcasts workflows, his weapon of choice is now Final Cut Pro X.

You can follow him on Twitter as @peterwiggins or as he runs the majority of this site, you can contact him here.

Dell XPS 13 (9310) Review

The newest Dell XPS 13 is one of the first ultraportable laptops to come with Intel's latest 11th Generation "Tiger Lake" CPUs, offering speedy, efficient computing performance and long battery life. This 2.8-pound laptop also has an exceptional 13.4-inch display and a gorgeous chassis, all of which combine to make it our Editors' Choice pick among premium Windows ultraportables. The price is a bit high, starting at $999.99 and ringing up at $1,649 as tested, but it's worth it for uncompromising fans of cutting-edge performance and style.

The XPS 13's Moment to Shine

If you were tempted to buy an XPS 13 earlier this year following its significant redesign (model 9300) but didn't pull the trigger, it's a good thing you waited. Now you can get everything we like about the new laptop with the added bonus of the latest Intel silicon.

The CPU bump is essentially the only change from the 9300 to the current model 9310, but it's an important one if you plan to keep your machine for five years or more. Dell does churn out new XPS 13 models at a prodigious rate, sometimes multiple times per year. Still, a brand-new processor and a physical redesign that's not even a year old make the 9310 a safe buy for people who don't want their expensive investment to be upstaged by something vastly better in a few months.

The XPS 13 is admirably thin and feels satisfyingly solid, if not particularly lightweight. It measures 0.58 by 11.6 by 7.8 inches (HWD) and weighs 2.8 pounds in the touch-screen configuration reviewed here. Versions without a touch screen weigh slightly less, at 2.64 pounds, since they lack the touch version's Gorilla Glass 6 coating over the display. Either of those weights compares favorably with the Dell's archrival, the Apple MacBook Pro 13, which weighs 3.1 pounds. But the XPS 13 is still meaningfully heavier than the very lightest models on the market, such as Acer's 1.96-pound Swift 7 flagship.

The XPS 13's additional heft accommodates high-quality materials and a sleek design. The density of the build is apparent as soon as you slide the system out of its sleek white box and run your fingers over the aluminum lid and edges. Dell says the edges are anodized twice to prevent scratch damage from repeated plugging and unplugging of peripherals. Opening the lid results in even more to ogle. The two color options include Platinum Silver with a black carbon-fiber palm rest or Frost White with an Alpine White composite-fiber palm rest. Our review unit uses the latter scheme, and it's gorgeous. The palm rest is especially snazzy, and it incorporates a UV- and stain-resistant coating to prevent yellowing and discoloration.

If you like the XPS 13's styling, you’ll be interested to know Dell has expanded it across the range, which includes the latest versions of the larger XPS 15 and XPS 17 laptops. This is a similarly unified approach to the one Apple takes with the styling of the MacBook Air and the two sizes of the MacBook Pro. Whether you're looking for an ultraportable for frequent travels or a large-screen machine with serious computing power, there's an XPS for you. There's even a 2-in-1 version of the XPS 13, which sports a 360-degree hinge that lets you convert the laptop into a tablet.

An Exceptional Display, Even Without 4K

The XPS 13's display is available in three versions, all of which feature an unusual 16:10 aspect ratio instead of the more familiar 16:9. The former results in additional vertical space, which is handy for when you're scrolling through websites or updating lengthy documents.

Our review unit has a 1,920-by-1,200-pixel touch panel. Thanks to the aspect ratio, the resolution is a bit higher than full HD (1,920 by 1,080 pixels), but considerably less than the Retina Display of the MacBook Pro or the PixelSense display of the Microsoft Surface Laptop 3. However, the XPS 13 can be configured with a 3,840-by-2,400-pixel panel that leapfrogs not only those two laptops, but also the dimensions of standard widescreen 4K displays (3,840 by 2,160 pixels).
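For readers who want to check the arithmetic behind those comparisons, the short sketch below computes the total pixel count and reduced aspect ratio for each resolution mentioned above. The function name `describe` is our own label for illustration; note that 16:10 reduces to 8:5.

```python
from math import gcd


def describe(width, height):
    """Return (total pixels, reduced aspect ratio) for a resolution."""
    divisor = gcd(width, height)
    return width * height, (width // divisor, height // divisor)


panels = {
    "XPS 13 as reviewed": (1920, 1200),
    "Full HD": (1920, 1080),
    "XPS 13 top panel": (3840, 2400),
    "Widescreen 4K": (3840, 2160),
}

for name, (width, height) in panels.items():
    pixels, ratio = describe(width, height)
    print(f"{name}: {pixels:,} pixels, {ratio[0]}:{ratio[1]}")

# Extra pixels the 16:10 panel has over full HD at the same width.
extra = describe(1920, 1200)[0] / describe(1920, 1080)[0]
print(f"1,920 by 1,200 has {extra:.1%} of the pixels of 1,920 by 1,080")
```

At the same width, the taller 16:10 panel carries about 11 percent more pixels than its 16:9 counterpart, which holds for both the full HD and the 4K-class pairs.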

Once you’ve used a 4K screen, it's hard to go back to full HD, with its occasionally visible pixels and slightly grainy text. Perhaps that's why Apple and Microsoft don't offer full HD versions of the MacBook Pro or Surface Laptop 3. But I actually don't mind the XPS 13's screen resolution. Images appear especially vivid, which I attribute partly to the taller aspect ratio that results in slightly more pixels than a 1080p display and partly to the Dell's 100% sRGB and 90% DCI-P3 gamut support. I also appreciate the extraordinary rated maximum of 500 nits of brightness, which means the XPS 13 can even be viewed comfortably outdoors (though not in direct sunlight) if you crank up the brightness setting.

So I'm not recommending the 4K screen over the full HD one in this case. That's an added benefit to people watching their budgets, since the 4K version does add to the cost. On the other hand, I recommend staying away from Dell's entry-level screen, which is the same as the one on our review unit except that it lacks touch support. That's a shame, since many XPS 13 competitors offer touch support standard, with the notable exception of the MacBook Pro.

Feats of Miniaturization

In an impressive achievement in downsizing, a 720p webcam complete with IR face recognition sensors is located above the center of the display. It offers average video quality for a laptop camera, which is to say that indoor shots are slightly noisy and fuzzy compared with the quality from the cameras of even a midrange phone. Dell says it has improved the camera quality by adding a new four-piece lens and temporal noise reduction, but if you're planning to hold a Skype session in your living room at night, you'll still probably want to use your phone. The camera's chief innovation is its minuscule size—the XPS 13's screen occupies 91.5% of the footprint of the chassis, which means the bezels surrounding it are razor-thin.

One of the consequences of a compact laptop is less room for ports. The XPS 13's are limited to two USB Type-C ports with Thunderbolt 4 support, a headphone jack, and a microSD card reader. This means you'll need an adapter or dongle to plug in an external monitor or USB Type-A peripherals. (Dell thoughtfully includes a USB adapter in the box.) This could be a drawback for the work-from-home crowd, who will likely be using the XPS 13 with an external display. While it's true that the XPS 13 is simply following the trend toward fewer and fewer ports, its selection is stingy even among its peers. The MacBook Pro offers as many as four USB-C ports, all of which support Thunderbolt 3.

The ultraportable offers the latest Wi-Fi 6 (802.11ax) and Bluetooth 5 wireless connectivity standards, good for stable internet connections and wireless keyboards and mice. But many users will happily stick with the built-in touchpad and keyboard, both of which I find to be comfortable for short typing and tapping sessions. The large keycaps and extensive surface area of the pad are welcome improvements over the cramped equivalents on some competitors, including the Asus ZenBook 13. The power button in the upper right corner of the keyboard doubles as a fingerprint reader for password-free logins to your Windows 10 account.

Audio quality from the XPS 13's stereo speakers is excellent. Combined, they deliver up to 4 watts of output, and they're balanced enough to give the laptop far richer and more dimensional sound than you'd expect from such a compact package. Much of the audio emanates through a grille on the bottom of the laptop, but voice tracks and other treble notes in a few movie trailers that I watched never sounded muffled.

Dell supports the XPS 13 with a one-year hardware warranty, and offers optional extensions up to four years for an additional charge.

Testing the XPS 13: Goodbye Ice, Hello Tiger

The new XPS 13 ditches Intel's 10th Generation "Ice Lake" processors in favor of the latest Tiger Lake CPUs. There's not a huge difference between the two, but we did see some modest performance improvements on a few of our benchmark tests compared with the 9300 model. Our test unit comes with a Core i7-1165G7, a quad-core chip with Hyper-Threading that runs at a base frequency of 2.8GHz, up from 1.3GHz in the equivalent 10th Generation Core i7. The higher clock speed can improve performance on certain tasks, though the total number of cores and threads remains the same.

While the XPS 13 has always relied on integrated graphics rather than a discrete GPU, this model boasts Intel's latest Iris Xe silicon, replacing the Iris Plus graphics of its predecessor. Our review unit also has 16GB of memory and a 512GB solid-state drive, which should be sufficient for most users. The entry-level configuration, meanwhile, comes with a Core i3, 8GB of RAM, and a 256GB SSD. That's a relatively skimpy set of components compared with the MacBook Pro, whose entry-level configuration includes a Core i5. But the entry-level MacBook Pro is $300 more than the base XPS 13.

Below is a list of specs for our XPS 13 tester and a few other comparable laptops we've tested recently, including the Apple MacBook Pro, the Asus ZenBook 13, the Razer Blade Stealth 13, and the Microsoft Surface Laptop 3.

Of the group, the Asus is the only other contender to sport a Tiger Lake CPU.

CPU, Media, and Storage Tests

Our first look at overall performance comes from the Windows-only PCMark performance suite developed by the benchmark specialists at UL (formerly Futuremark). The PCMark 10 test we run simulates different real-world productivity and content-creation workflows. We use it to assess overall system performance for office-centric tasks such as word processing, spreadsheet jockeying, web browsing, and videoconferencing. The XPS 13 performs very well, though essentially the same as the similarly equipped ZenBook 13.

PCMark 8, meanwhile, has a storage subtest that we use to assess the speed of the system's boot drive. Like PCMark 10, it yields a proprietary numeric score (higher numbers are better). Most recent laptops with SSDs perform roughly equally well in this test, which is the case here.

Next is Maxon's CPU-crunching Cinebench R15 test, which is fully threaded to make use of all available processor cores and threads. Cinebench stresses the CPU rather than the GPU to render a complex image. The result is a proprietary score indicating a PC's suitability for processor-intensive workloads. The hierarchy on this test is clear: the Ice Lake-based Surface Laptop 3 and Blade Stealth 13 are a rung below the Tiger Lake XPS 13 and ZenBook 13. The MacBook Pro's Core i5 processor is an overachiever.

Cinebench is often a good predictor of our Handbrake video-editing trial, another tough, threaded workout that's highly CPU-dependent and scales well with cores and threads. In it, we put a stopwatch on test systems as they transcode a standard 12-minute clip of 4K video to a 1080p MP4 file. It's a timed test, and lower results are better. The XPS 13 is locked in a surprising tie for first place with the MacBook Pro, with the Asus not far behind.

We also run a custom Adobe Photoshop image-editing benchmark. Using an early 2018 release of the Creative Cloud version of Photoshop for Windows and the latest Photoshop CC release for macOS, we apply a series of 10 complex filters and effects to a standard JPEG test image. We time each operation and, at the end, add up the total execution time. As with Handbrake, lower times are better here. The Photoshop test stresses the CPU, storage subsystem, and RAM. The XPS 13 performs well—better than the Apple, but not quite as quick as the ZenBook 13.

Graphics and Battery Life Testing

One of the main benefits of Intel's Tiger Lake platform is the switch from the older Iris Plus to Iris Xe graphics. This improvement actually has only a small impact in our graphics testing, which uses the Windows-only 3DMark and Superposition game simulations to render sequences of highly detailed, gaming-style 3D graphics that emphasize particles and lighting.

We run two different 3DMark subtests, Sky Diver and Fire Strike, which are suited to different types of systems. Both are DirectX 11 benchmarks, but Sky Diver is more suited to laptops and midrange PCs, while Fire Strike is more demanding and made for high-end PCs to strut their stuff. The results are proprietary scores.

Like 3DMark, the Superposition test renders and pans through a detailed 3D scene and measures how the system copes. In this case, it's rendered in the company's eponymous Unigine engine, offering a different 3D workload scenario than 3DMark for a second opinion on the machine's graphical prowess.

The Iris Xe laptops show a slight advantage over their Iris Plus counterparts, but the difference isn't remarkable. It's also much less of an advantage than what is offered by an entry-level gaming GPU like the Nvidia processor in the Blade Stealth 13. The bottom line for graphics output is that the XPS 13 will be able to handle pretty much anything you throw at it except for intensive 3D games, which require a dedicated GPU to run smoothly. (For more analysis of Iris Xe performance, check out our Iris Xe primer and in-depth Tiger Lake testing feature.)

Equipped with a 52-watt-hour battery that lasted for 15 hours in our video playback test, the XPS 13 should easily survive an entire workday away from a power outlet...

That's a very good result, even though it's "just" in line with what the competition offers and actually slightly shorter than the 17.5 hours we saw from the previous model 9300.
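As a back-of-the-envelope check, the sketch below turns the battery capacity and runtime quoted above into an average power draw during video playback. The function name is our own, and the calculation assumes the battery is drained from full to empty at a steady rate, which real-world use only approximates.

```python
def average_draw_watts(capacity_wh, runtime_hours):
    """Average power draw implied by draining a battery over a given runtime."""
    if runtime_hours <= 0:
        raise ValueError("runtime must be positive")
    return capacity_wh / runtime_hours


# 52 watt-hours lasting 15 hours, as measured in the playback test above.
draw = average_draw_watts(52, 15)
print(f"Average draw: {draw:.2f} W")
```

By that estimate, the whole system sips roughly three and a half watts while playing video, which helps explain the all-day runtime.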

Hear Me Roar: Today's Best High-End Ultraportable

The XPS 13 is an exceptional ultraportable whose key strengths are its cutting-edge computing components in a beautifully designed, exceptionally well-constructed chassis. These are rare achievements even in the crowded field of premium ultraportable laptops. Operating system differences aside, the XPS 13 is probably a better choice than the MacBook Pro for most people right now, since Apple's notebook is using older-generation processors that face imminent replacement.

One of the few reasons not to choose the XPS 13 is if you're seeking a robust gaming experience on the side. Intel's Iris Xe silicon is pretty good if you are willing to dial things back (this is not the integrated graphics of a year or two ago), but even so, the Nvidia GeForce GTX-equipped Blade Stealth 13 is likely a more prudent choice, at the minor expense of shorter battery life, a not-quite-as-sleek chassis, and an older-generation CPU. Otherwise, the XPS 13 is the cream of the crop, and retains our Editors' Choice award as 2020's best high-end ultraportable laptop to date.

Text

Review of the Surface Book 3 for Developers

I was offered a Surface Book 3 to use as a loaner over the last 5 weeks. I did a short video teaser on Twitter where I beat on the device with a pretty ridiculous benchmark - running Visual Studio 2019 while running Gears of War and Ubuntu under WSL and Windows Terminal. I have fun. ;)

Hey they loaned me a @surface book 3! So...I threw EVERYTHING at it...Visual Studio, Gears of War, Ubuntu/WSL2/Windows...*all at the same time* because why not? LOL (review very soon) pic.twitter.com/FmgGCBUGuR

— Scott Hanselman (@shanselman) May 14, 2020

Size and Weight

My daily driver has been a Surface Book 2 since 2017. The new Surface Book 3 is exactly the same size (23mm thick as a laptop) and weight (3.38 and 4.2 lbs.) as the SB2. I have had to add a small sticker to one; otherwise I'd get them confused. The display resolutions are 3000×2000 for the 13.5-inch model and 3240×2160 for the 15-inch one that I have. I prefer a 15" laptop. I don't know how you 13" people do it.

Basically if you are a Surface Book 2 user the size and weight are the same. The Surface Book 3 packs considerably more power into the same size machine.

CPU and Memory

They gave me an i7-1065G7 CPU to test. It bursts happily over 3.5 GHz (see the compiling screenshot below) and in my average usage hangs out in the 1.8 to 2 GHz range with no fan on. I regularly run Visual Studio 2019, VS Code, Teams, Edge (new Edge, the Chromium one), Ubuntu via WSL2, Docker Desktop (the WSL2 one), Gmail and Outlook as PWAs, as well as Adobe Premiere and Audition and other parts of the Creative Suite. Memory usually sits around 14-18 gigs unless I'm rendering something big.

It's a 10th gen Intel chip and as the Surface Book 3 can detach the base from the screen, it's both a laptop and tablet. I gleaned from AnandTech that TDP is between 10 and 25W (usually 15W) depending on what is needed, and it shifts frequencies very fast. This is evident in the great battery life when doing things like writing this blog post or writing in Edge or Word (basically forever) versus playing a AAA game or running a long compile, building containers, or rendering a video in Premiere (several hours).

FLIP THE SCREEN AROUND? You can also when docked even reverse the screen! Whatever do you mean? It's actually awesome if you want an external keyboard.

All this phrased differently? It's fast, quickly, when it needs to be but it's constantly changing the clock to maximize power/thermals/battery.

SSD - Size and Speed

The device I was loaned has a Toshiba KXG60PNV2T04 2TB NVMe M.2 SSD that's MASSIVE. I'm used to 512GB or maaybe a 1TB drive in a laptop. I'm getting used to never having to worry about space. Definitely 1TB minimum these days if you want to play games AND do development.

I ran a CrystalDiskMark benchmark on the SSD and it did 3.2GB/s sequential reads! Sweet. I feel like the disk is not the bottleneck with my development compile tests below. When I consulted with the Surface team last year during the conception of the Surface Book 3 I pushed them for faster SSDs and I feel that they delivered with this 2TB SSD.

GPU - Gaming and Tensorflow

The 13.5-inch model now comes with an NVIDIA GeForce GTX 1650 Max-Q GPU with 4GB of GDDR5 memory in its Core i7 variant, while the 15-inch unit features an NVIDIA GeForce GTX 1660 Ti Max-Q with 6GB of GDDR6 memory. When running the Gears 5 Benchmark while plugged in (from the Extras menu, Benchmark) it has no issues with the default settings, doing 60fps for 90% of the benchmark with a few dips into the 57 range depending on what's on screen.

It's not a gaming machine, per se, but it does have an NVIDIA GeForce GTX 1660 Ti so I'm basically able to play AAA games at 1080p 60fps. I've played Destiny 2, Gears of War 5, and Call of Duty Modern Warfare on default settings at 60 fps without issue. The fan does turn on but it's very manageable. I like that whenever we get back into hotels I'll be able to play some games and develop on the same machine. The 15" also includes an Xbox Wireless Adapter so I just paired my controller with it directly.

I was also able to run TensorFlow with CUDA on the laptop under Windows and it worked great. I ran a model against some video footage from my dashcam and 5.1 gigs of video RAM was used immediately and the CUDA engine on the 1660 Ti is visible working in Taskman. The commercial SKU has an NVIDIA Quadro RTX 3000 that is apparently even more tuned for CUDA work.
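If you want a quick sanity check that TensorFlow can actually see the GPU before you throw a model at it, something like the sketch below should do. This isn't the script I ran against the dashcam footage. The helper name visible_gpus is made up for illustration, and it assumes a TensorFlow 2.x install with CUDA set up.

```python
def visible_gpus():
    """Return the names of GPUs TensorFlow can see, or [] if TensorFlow is not installed."""
    try:
        import tensorflow as tf  # assumption: TensorFlow 2.x with CUDA support
    except ImportError:
        return []
    # list_physical_devices("GPU") returns one entry per GPU visible to TensorFlow
    return [device.name for device in tf.config.list_physical_devices("GPU")]


print(visible_gpus())
```

An empty list means TensorFlow is either missing or falling back to the CPU, which is worth knowing before you start reading anything into Taskman.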

Developer Performance

As I did when I built my Intel i9 Ultimate Desktop 3.0 machine and others, I like to do compile tests to get a sense of how much you can throw at a machine. I like big project compiles because they are a combination of a lot of disk access and a lot of parallel CPU work. However, some projects do have a theoretical maximum compile speed because of the way the dependencies flesh out. I like to use Orchard Core for benchmarks.

Orchard Core is a fully-featured CMS with 143 projects loaded into Visual Studio. MSBuild and .NET Core support both parallel and incremental builds.

A warm build of Orchard Core on IRONHEART my i9 desktop takes just under 10 seconds.

My 6 year old Surface Pro 3 builds it warm in 62 seconds.

A totally cold build (after a dotnet clean) on IRONHEART takes 33.3 seconds.

My Surface Pro 3 builds it cold in 2.4 minutes.

I'll do the same build on both my Surface Book 2 and this new Surface Book 3 to compare. I've excluded the source folders from Defender as well as msbuild.exe and dotnet.exe. I've also turned off the Indexer.

A cold build (after a dotnet clean) on this Surface Book 3 takes 46 seconds.

A warm build on the Surface Book 3 takes 16.1 seconds.

A cold build (after a dotnet clean) on my Surface Book 2 takes 115 seconds.

It's WAY faster than my Surface Book 2 which has been my daily driver when mobile for nearly 3 years!
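To put a number on "WAY faster," here's a small sketch that turns the cold build times quoted above into relative speedups. The times are the ones from this post; the function names are just for illustration.

```python
# Cold-build times in seconds, taken from the figures quoted above.
cold_build_seconds = {
    "IRONHEART (i9 desktop)": 33.3,
    "Surface Book 3": 46.0,
    "Surface Book 2": 115.0,
    "Surface Pro 3": 2.4 * 60,  # quoted as 2.4 minutes
}


def speedup(baseline_seconds, candidate_seconds):
    """How many times faster the candidate finishes compared to the baseline."""
    return baseline_seconds / candidate_seconds


def time_saved_percent(baseline_seconds, candidate_seconds):
    """Percentage of the baseline build time that the candidate saves."""
    return (baseline_seconds - candidate_seconds) / baseline_seconds * 100


baseline = cold_build_seconds["Surface Book 2"]
# Print machines from fastest to slowest, each compared to the Surface Book 2.
for machine, seconds in sorted(cold_build_seconds.items(), key=lambda item: item[1]):
    print(f"{machine:24s} {seconds:6.1f}s  {speedup(baseline, seconds):.2f}x vs Surface Book 2")
```

For the cold build, 115 seconds down to 46 seconds works out to 2.5 times faster, or 60% less time spent waiting.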

Benchmarks are all relative and there's raw throughput, there's combination benchmarks, and all kinds of things that can "make a chart." I just do benchmarks that show if I can do a thing I did before, faster.

You can also test various guesses if you have them by adding parameters to dotnet.exe. For example, perhaps you're thinking that 143 projects is thrashing to disk so you want to control how many CPUs are used. This machine has 4 physical cores and 8 logical, so we could try pulling back a little:

dotnet build /maxcpucount:4

The result with Orchard Core is the same, so there is likely a theoretical max as to how fast this can build today. If you really want to go nuts, try

dotnet build -v diag

And dig through ALL the timing info!
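If you'd rather script the guessing than retype commands, a rough sketch like this one times a cold build at a few different /maxcpucount values. The helper names are made up for illustration, and it assumes dotnet is on your PATH and that you point cwd at the folder holding the solution.

```python
import subprocess
import time


def build_command(max_cpu_count=None):
    """Return the dotnet build command, optionally capping parallel build nodes."""
    command = ["dotnet", "build"]
    if max_cpu_count is not None:
        command.append(f"/maxcpucount:{max_cpu_count}")
    return command


def time_command(command, cwd=None):
    """Run a command, discard its output, and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(command, cwd=cwd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


def compare_cold_builds(cpu_counts, cwd=None):
    """Time a cold build (dotnet clean first) for each maxcpucount value."""
    results = {}
    for count in cpu_counts:
        # Clean first so every run starts cold, as in the timings above.
        subprocess.run(["dotnet", "clean"], cwd=cwd,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        results[count] = time_command(build_command(count), cwd=cwd)
    return results
```

Calling compare_cold_builds([2, 4, 8], cwd="path/to/solution") returns a dictionary of seconds per setting. One run each is noisy, so repeat it a few times before believing small differences.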

Webcam Quality

Might be odd to add this as its own section but we're all using our webcams constantly right now. I was particularly impressed with the front-facing webcam. A lot of webcams are 720p with mediocre white balance. I do a lot of video calls so I notice this stuff. The SB3 has a 1080p front camera for video and decent light pickup. When using the Camera app you can do up to 5MP (2560x1920) which is cool. Here's a pic from today.

Ports and Power and Sound and Wi-Fi

The Surface Book 3 has just one USB-C port on the right side and two USB 3.1 Gen 2s on the left. I'd have liked one additional USB-C so I could project on stage and still have one additional USB-C available...but I don't know what for. I just want one more port. That said, the NEW Surface Dock 2 adds FOUR USB-C ports, so it's not a big deal.

It was theoretically possible to pull more power on the SB2 than its power supply could offer. While I never had an issue with that, I've been told by some Destiny 2 players and serious media renderers that it could happen. With the SB3 they upped the power supply to 65W for the base 13.5-inch version and a full 127W for the 15-inch SKUs so that's not an issue any more.

I have only two Macs for development and I have no Thunderbolt devices or need for an eGPU so I may not be the ideal Thunderbolt consumer. I haven't needed it yet. Some folks have said that it's a bummer the SB3 doesn't have it but it hasn't been an issue or sticking point for any of my devices today. With the new Surface Dock 2 (below) I have a single cable to plug in that gives me two 4k monitors at 60Hz, lots of power, 4 USB-C ports all via the Dock Connector.

I also want to touch on sound. There is a fan inside the device and if it gets hot it will run. If I'm doing 1080p 60fps in Call of Duty WarZone you can likely hear the fan. It comes and goes, and while it's audible when it's on, when the CPU is not maxed out (during 70% of my work day) the Surface Book 3 is absolutely silent, even when running the monitors. The fan comes on when the CPU is bursting hard over 3 GHz and/or the GPU is on full blast.

One other thing, the Surface Book 3 has Wi-Fi 6 even though I don't! I have a Ubnt network and no Wi-Fi 6 mesh points. I haven't had ANY issues with the Wi-Fi on this device over Ubnt mesh points. When copying a 60 gig video file over Wi-Fi from my Synology NAS I see sustained 280 megabit speeds.
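For a sense of what 280 megabits sustained means in practice, here's a back-of-the-envelope sketch. It assumes "60 gig" means 60 decimal gigabytes and ignores protocol overhead, so treat the answer as a rough floor and not a measurement.

```python
def transfer_minutes(file_gigabytes, link_megabits_per_second):
    """Estimate transfer time in minutes, assuming decimal units and no overhead."""
    megabits = file_gigabytes * 1000 * 8  # GB -> MB -> megabits
    return megabits / link_megabits_per_second / 60


print(f"{transfer_minutes(60, 280):.1f} minutes")  # prints "28.6 minutes"
```

So that 60 gig video lands in a bit under half an hour over Wi-Fi.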

The New Surface Dock - Coming May 26th

I'm also testing a pre-release Surface Dock 2. I suspect they wanted me to test it with the Surface Book 3...BUT! I just plugged in every Surface I have to see what would happen.

My wife has a Surface Laptop 2 she got herself, one son has my 6 year old Surface Pro 3 while the other has a Surface Go he got with his allowance. (We purchased these over the last few years.) As such we have three existing Surface Docks (original) - one in the kids' study/playroom, one in the kitchen as a generalized docking station for anyone to drop in to, and one in my office assigned to me by work.

We use these individual Surfaces (varying ages, sizes, and powers) along with my work-assigned Surface Book 2 plus this loaner Surface Book 3, so it's kind of a diverse household from a purely Surface perspective. My first thought was - can I use all these devices with the new Dock? Stuff just works with a few caveats for older stuff like my Surface Pro 3.

RANDOM NOTE: What happens when you plug a Surface Pro 3 (released in 2014) into a Surface Dock 2? Nothing, but it does get power. However, the original Surface Dock is great and still runs 4096 x 2160 @30Hz or 2960 x 1440 @60Hz via mini DisplayPort so the Pro 3 is still going strong 6 years out and the kids like it.

So this Surface Dock 2 replaces the original Dock in my office. The Surface Dock 2 has:

2x front-facing USB-C ports (I use these for two 4k monitors)

2x rear-facing USB-C ports

2x rear-facing USB-A 3.2 (10Gbps) ports

1x Gigabit Ethernet port

1x 3.5mm audio in/out port

Kensington lock slot - I've never used this

First, that's a lot of USB-C. I'm not there yet with the USB-C lifestyle, but I did pick up two USB-C to full-size DisplayPort cables at Amazon and I can happily report that I can run both my 4k monitors at 60Hz plus run the main Surface Book 3 panel. The new Dock and its power supply can push 120 watts of power to the Surface with a total of 199 watts for everything connected to the dock. I've got a few USB-C memory sticks and one USB-C external hard drive, plus the Logitech Brio is USB 3, so 6 total ports is fine with 4 free after the two monitors. I also Gigabit wired the whole house so I use the Ethernet port quite happily.

Initially I cared about one thing - my 4k monitors. Using the USB-C to DisplayPort cables I plugged the dock into two Dell P2715Q 4ks and they work! I preferred using the direct cables rather than any adapters, but I also tested a USB-C to HDMI 2.0 adapter I got in 2018 with some other Dell monitors in the house and that worked with the Surface Book 3 as it had previously with the Book 2.

SURPRISE NOTE: How does the super-thin Surface Pro X do when plugged into a Surface Dock 2? Amazing. It runs two 4k monitors at 60 Hz. I don't know why I was shocked, it's listed on the support page. It's a brand new device, but it's also the size and weight of an iPad so I was surprised. It's a pretty amazing little device - I'll do another post on just the ARM-based Surface Pro X another time.

One final thing about the new Dock. The cable is longer! The first dock had a cable that was about 6" too short and now it's not. It's the little things and in this case, a big thing that makes a Dock that much nicer to use.

Conclusion

All in all, I'm very happy with this Surface Book 3 having been an existing Surface Book 2 user. It's basically 40-50% faster, and the video card is surprisingly capable. The SSD is way faster at the top end. It's a clear upgrade over what I had before, and when paired with the Surface Dock 2 and two 4k monitors it's a capable developer box for road warriors or home office warriors like myself.

© 2020 Scott Hanselman. All rights reserved.

Review of the Surface Book 3 for Developers published first on https://deskbysnafu.tumblr.com/

Text

Review of the Surface Book 3 for Developers

I was offered a Surface Book 3 to use as a loaner over the last 5 weeks. I did a short video teaser on Twitter where I beat on the device with a pretty ridiculous benchmark - running Visual Studio 2019 while running Gears of War and Ubuntu under WSL and Windows Terminal. I have fun. ;)

Hey they loaned me a @surface book 3! So...I threw EVERYTHING at it...Visual Studio, Gears of War, Ubuntu/WSL2/Windows...*all at the same time* because why not? LOL (review very soon) pic.twitter.com/FmgGCBUGuR

— Scott Hanselman (@shanselman) May 14, 2020

Size and Weight