Text

This guy built a bot to expose dead start-ups

Twitter bot @deathwatch_us unveils dead start-ups by monitoring their Twitter account activity.

Tech start-ups often enter the world with a bang—a few eye-popping rounds of financing, a fawning review in industry must-read TechCrunch, maybe making a splashy debut on ProductHunt. When they go bust, though, there is usually less of a clatter. They simply fade from the tech hive mind’s memory, selling the company scraps as funding dries up.

But Caspar Wrede doesn’t think we should so easily forget the startups of Silicon Valley yesteryear. So the Berlin-based product manager built a Twitter bot called Deathwatch that monitors the health of tech start-ups. Companies that haven’t tweeted for 40 days are declared “unwell.” Those that have been inactive for 180 days or more are officially pronounced dead.

“You always catch the beginning of the wave, but rarely the tail end,” Wrede told me via phone. “That’s one thing that motivated me to make something like this. It’s very hard to measure when companies die, but measuring when they stop tweeting is a fairly good metric. When a company stops tweeting it’s usually an early sign that it’s in trouble.”

Wrede maintains bots that monitor startups in the U.S., Germany, India and the U.K. that are listed in TechCrunch’s start-up database Crunchbase and that have at least 300 followers on Twitter. Deathwatch speaks to the sheer volume of companies that start and fail each year—most of the thousands of start-ups Deathwatch monitors are ones that you have probably never heard of.
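The thresholds Wrede describes are simple enough to sketch in a few lines. What follows is a minimal illustration of the rule as the article states it, not Deathwatch's actual code: the function name, the status labels "alive" and "untracked", and the choice to treat exactly 40 days as "unwell" are all assumptions made for the example.

```python
from datetime import date

# Thresholds taken from the article; everything else here is hypothetical.
UNWELL_AFTER_DAYS = 40
DEAD_AFTER_DAYS = 180
MIN_FOLLOWERS = 300


def classify(last_tweet: date, followers: int, today: date) -> str:
    """Return 'untracked', 'alive', 'unwell' or 'dead' for one account."""
    # Accounts below the follower floor are not monitored at all.
    if followers < MIN_FOLLOWERS:
        return "untracked"
    idle_days = (today - last_tweet).days
    # Check the larger threshold first so a long silence is not
    # reported as merely "unwell".
    if idle_days >= DEAD_AFTER_DAYS:
        return "dead"
    if idle_days >= UNWELL_AFTER_DAYS:
        return "unwell"
    return "alive"
```

A rule like this can only see silence, which is why a company that keeps tweeting under a new handle looks exactly the same to it as one that has shut down.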

Recently, for example, Deathwatch tweeted that Floqq, a video-learning marketplace based in Madrid once featured in VentureBeat and TechCrunch, seemed to have shuttered. Signs point to yes: The company hasn’t tweeted since April, hasn’t updated its Android app since last March, and no longer exists on iTunes. Most of the founding team has changed their LinkedIn profiles to reflect new places of work. Indeed, it seems like Floqq was a flop. (The company did not respond to requests for comment.)

Sometimes, of course, Wrede’s bot gets it wrong. Recently it tweeted that Ripple Labs, a well-known bitcoin startup, had died when it had merely changed Twitter handles. When Deathwatch tweeted that gun.io, a hacker talent agency, had died, the company responded that it was just “listening” ...

Wrede said he envisions Deathwatch as part art, part social service—a way to keep Silicon Valley’s self-congratulating exuberance in check. Admittedly, Silicon Valley has started to come to terms with defeat, even launching FailCon in 2009, a conference where failed start-up founders can gather to discuss what went wrong.

Looking at Deathwatch’s list of the dead, it’s easy to feel a sense of start-up deja vu. Scroll past Foodily and Forkly and Sosh, for example, and you might find yourself wondering how many times people are really going to start social networks centered around food. Perhaps the most interesting lesson to be taken from Deathwatch’s roster of dead start-ups is how similar they are to new start-ups we see launching every day.

Read full story here.


Text

Expedia’s first bot is for booking hotels

Expedia launches a Facebook Messenger bot to make hotel reservations.

Expedia today unveiled a rather smart, rather simple little bot for Facebook Messenger. The bot is designed to do one thing and do it well: It helps travelers book hotels. What’s more, the bot is extremely intuitive. As in easy-to-use. For the tech savvy, this may seem like a big “duh,” but simplicity matters given that bots are still in their infancy and Expedia’s customer base spans all walks of life: the company owns more than 200 travel booking sites from Orbitz to HomeAway to Trivago to Hotels.com.

While the bot took only about 5 weeks to develop, Expedia got a head start in making it so smart. “Our work with natural language processing and machine learning – the tools that helped get us here – have been going on for years,” said Tarran Street, senior public relations manager. “For us it’s all about experimentation to better understand travelers’ needs. The rise of mobile means people are starting to move away from the mouse and traditional keyboard, we want to be sure our products are available wherever travelers are searching.”

In a blog post on the company web site, David Fleischman, vice president of global product, described the bot’s use of natural language processing, “The bot operates on a structured conversation flow: it analyzes information provided and prompts the user to input other relevant data points to complete a search.”
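A “structured conversation flow” of the kind Fleischman describes is often built as slot filling: the bot keeps a list of the details a search needs and asks for whichever one is still missing. The sketch below shows that general pattern only. Expedia has not published how its bot works, and the slot names and prompt wording here are invented for illustration.

```python
from typing import Optional

# Hypothetical slots a hotel search might need, in the order they are asked.
REQUIRED_SLOTS = ("destination", "check_in", "nights")

# Hypothetical prompt text for each missing slot.
PROMPTS = {
    "destination": "Where would you like to stay?",
    "check_in": "What date do you want to check in?",
    "nights": "How many nights will you be staying?",
}


def next_prompt(known: dict) -> Optional[str]:
    """Return the question for the first missing slot, or None when the search is complete."""
    for slot in REQUIRED_SLOTS:
        if slot not in known:
            return PROMPTS[slot]
    return None
```

In a real bot a language-understanding step would fill `known` from each message. Here it is just a dictionary, so `next_prompt({"destination": "Lisbon"})` asks for the check-in date.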

Expedia’s bot for Facebook Messenger is a marriage of giants. The travel company booked more than $60 billion of travel for its customers across 282,000 hotels, 475 airlines and dozens of car rental companies. Facebook Messenger, meanwhile, has 900 million registered users; Expedia has 5.9 million followers on Facebook. It’s likely that booking hotels is just the beginning of a broader more comprehensive messaging strategy.

For now, the bot does not directly integrate with other Expedia services like Affirm for payments, or HomeAway for vacation home rentals, said Street. “It can, though some of that magic doesn’t happen directly within the bot. That part of the experience happens on an Expedia page vs. within the Messenger app directly.”

For instance, Street said, those travelers wanting to see vacation rental options from HomeAway can click on the “View All Hotels” option within the bot. They are then taken to an Expedia search result page with relevant properties, and can select a filter option.

Asked about incorporating services from partners like airport car service provider Wingz (Expedia invested $11 million in the company), Street said, “[They’re] not integrated into the Expedia bot directly yet; we’re still at early stages so we’re starting with hotels.”

Like other companies building bots, Expedia sees this initial effort as dipping a toe in the water. “For us it’s all about experimentation to better understand travelers’ needs. The rise of mobile means people are starting to move away from the mouse and traditional keyboard, we want to be sure our products are available wherever travelers are searching,” Street said. “Being involved in [new] technologies and learning how our customers interact with them gives us an opportunity to get their feedback and finesse our approach across other parts of our site.”

Read full story here.


Text

Why Salespeople Need to Develop “Machine Intelligence”

The co-authors of Sales Growth consider the future of sales and salespeople with bots and automation.

Artificial intelligence (AI) is on quite a run, from Google’s AlphaGo, which earlier this year defeated Go world champion Lee Sedol four games to one, to Amazon’s Echo, the voice-activated digital assistant.

The trend is heating up the sales field as well, enabling entirely new ways of selling. Purchasing, for example, is moving to automated bots, with 15%–20% of total spend already sourced through e-platforms. By 2020 customers will manage 85% of their relationship with an enterprise without interacting with a human. Leading companies are experimenting with what these technologies can do for them, typically around transactional processes at early stages of the customer journey.

For example, AI applications can take over the time-consuming tasks of initiating contact with a sales lead and then qualifying, following up, and sustaining the lead. Amelia, the “cognitive agent” developed by IPsoft, can parse natural language to understand customers’ questions, handling up to 27,000 conversations simultaneously and in multiple languages. And because “she” is connected to all the relevant systems, Amelia delivers results faster than a human operator. Of course, there will be occasions when even AI is stumped, but Amelia is smart enough to recognize when to involve a human agent.

As we learned from researching our book, Sales Growth, companies that have pioneered the use of AI in sales rave about the impact, which includes an increase in leads and appointments of more than 50%, cost reductions of 40%–60%, and call time reductions of 60%–70%. Add to that the value created by having human reps spend more of their time closing deals, and the appeal of AI grows even more.

Clearly, AI is bringing big changes. But what do they mean for sales — and the people who do it? We see two big implications.

The Sales Role Is Going to Change Completely

The “death of a salesman” is an overplayed trope, but the road ahead does mean significant changes for how sales work is done. The changes are primarily focused on automating activities rather than individual jobs, but the scale of those changes is likely to profoundly disrupt what sales people do.

We analyzed McKinsey Global Institute data on the “automatability” of 2,000 different workplace activities, comparing job requirements to the current capabilities of leading-edge technology. We found that 40% of time spent on sales work activities can be automated by adapting current technologies. If the technologies that process and understand natural language reach the median level of human performance, this number will rise to 47% ...

Much of the focus on AI and automation has been on which jobs or tasks will be replaced. That’s understandable, of course. But it’s clear, if less explored, that sales leaders and reps will continue to be crucial to the sales process even as they adapt to working with machines.

The “human touch” will need to focus more on managing exceptions, tolerating ambiguity, using judgment, shaping the strategies and questions that machines will help enable and answer, and managing an increasingly complex web of relationships with employees, vendors, partners, and customers.

Machine learning and automation tools, for example, will be able to source, qualify, and execute far more sales opportunities than reps can keep up with. Sales leaders therefore need to develop clear escalation and exception protocols to manage the trickiest or most valuable situations, making sure a sales rep keeps a robot from losing a big sale.

Read full story here.


Text

BOTS & DRONES

by Tyler Callich

bot: anthropomorphized algorithm with a UI

drone: Unmanned Aircraft System (UAS)

Bots and drones are two ways technology is making itself apparent to people, and they’re apparent to us in very different ways. I’m going to be discussing our experiences of both: compare, contrast, then try to conceive of something that’s neither (or both). Bots are interactive, playful devices in our lives. A chatbot, a photo bot, a game bot, an art bot. The list goes on, but the emphasis is on small size and exploration. Drones are devices that are remote and automated. A surveillance drone, a racing drone, a military drone. They’re extensions of power and sight. Bot has a cute, positive valence, while drone has a menacing, negative one.

First off, I want to admit: I like bots.

I’ve been tinkering ever since I was a little girl. Tiny gewgaws from clothespins and rubber bands. Monsters from discarded rubber bands and paper clips. Even though these are static objects, I’d like to think of them as proto-bots. For little me, a “bot” didn’t have to accomplish a task. I made proto-bots to entertain myself. They might flip something across the room, but that was secondary.

These things were formed from limited materials and a child’s mind.

Here’s a recreation of one of my proto-bots:

I made this proto-bot in a coffee shop with items I had in my purse. Mint tin, chapstick, sticker, hair ties, markers, memory card case, Advil.

It doesn’t do anything except make me happy.

Its name is Toothsome.

Today I make bots mostly on Twitter out of Python or Ruby scraps that I copied shamelessly. There’s a certain level of frivolity behind most of my bots, which makes them unique, fun, possibly precious:

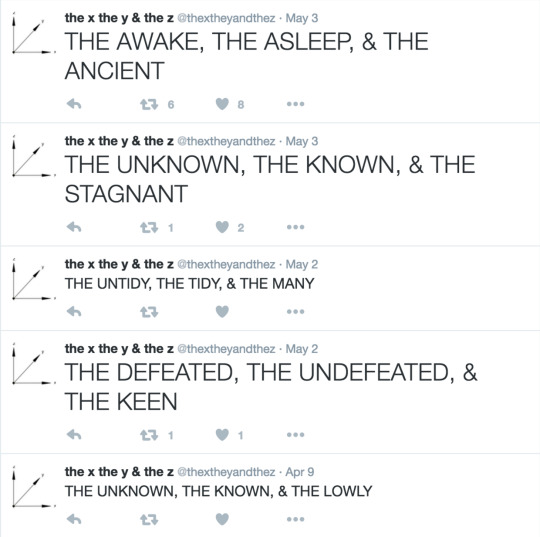

They allow me to play online even when I’m asleep or working (ex. @thextheyandthez, a tribute to The Good, The Bad, & The Ugly)

They uncover the distant, strange boundaries of an idea (ex. @lichmaze, a fake game concept)

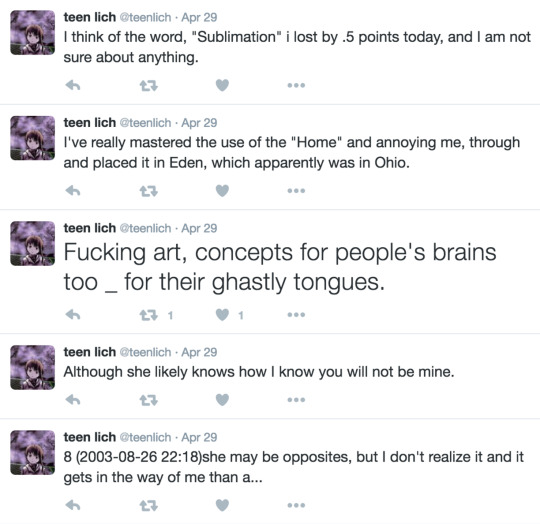

They reflect my own words back to me like an oracle (ex. @teenlich, which is made from my old livejournal)

Bots are a form of introspection for me. They help me answer personal questions.

How can I better understand my teenage self?

Why do labyrinths fascinate me?

To what extent can I take this ridiculous joke?

What is gender, anyway?

I keep making bots because I keep having questions and experiences that are hard for me to comprehend. A bot expands a concept in serial, revealing unanticipated combinations in the process. Sometimes it’s an uncanny turn of phrase. Other times it’s a recontextualized memory or utterance.

Drones are another kind of automated creature that people make. The thought of a drone conjures up the humming of a mindless bee army. Drones are used to expand the presence of a person or government. Drones kill. Drones deliver. Drones surveil. Governments, in particular the U.S. government, use drones to do great harm abroad. Today UASes are a big arm of the military science industrial complex, and they’re only making more.

On the other hand, as a culture, we have anthropomorphized bots into cute little artificially (un)intelligent homunculi. Our hopes of making real AI give bots a certain cuteness and relatability that drones certainly don’t have, but all that spontaneity and humanity comes from the bot creator and their acceptance of error.

Joke bots & murder drones


This place we’ve gotten ourselves into reminds me of the movie TOYS (1992). A cute heir to a toy empire (Robin Williams) fights against a general who wants to militarize playthings.

Even though the plot of TOYS is attractive today, the dichotomy between good friendly bots/evil servant drones isn’t useful. Bots aren’t that smart, and drones aren’t that mindless. Both are cultural constructs around how we automate processes. I’m not going to try and make drones cute. I have a nightmare that people start naming drones things like “@tiny_destroyer.” Cutifying or gamifying violence/control will likely become a trend as drones and the children of drones come to the fore.

Bots aren’t all good, either (ex. @_grammar_ and @RedScareBot), and their association with cuteness and play can obfuscate when bots are doing something terrible. (Take, for example, what happened with Microsoft’s @Tayandyou.)

Drones work, bots play

When we create things, we are always solving a problem. We create drones to do things we’d rather not, to try and erase responsibility. We created bots to keep ourselves company online. The effects that both have make our intentions clear.

Teach a bot to love

Today, drones put our own inhumanity on display. It’s disheartening and worrisome. Silly little bots help somewhat: they make me feel more hopeful about technology.

I hope that one day we learn how to code empathy like we’ve learned to code cruelty.

Maybe it will be the other way around: our tiny bots teach us empathy.

Thanks for reading!

- tyler @TylerCallich


Text

Chatbots take the pitch for Euro 2016

Copa 90 releases a Facebook Messenger bot to provide updates throughout Euro 2016.

U.K.-based online football community Copa 90, which has 2 million Facebook followers, is choosing the UEFA European Championship, aka Euro 2016, to roll out its first Facebook Messenger chatbot.

Facebook users can query the bot for animated updates on the latest goals, team line-ups and player profiles: pretty much anything it can offer without needing sports rights. Much of the content will also be pulled in from publications with which Copa 90 partners, like Mundial Magazine, Scotts Menswear, Kick and Panenka. Copa 90 worked with Rock Labs to build the bot, which will send out an initial alert in the morning, giving users a taste of the main highlights they may have missed from the previous day’s coverage.

Copa 90 chief James Kirkham, who joined the company six months ago from Leo Burnett, believes within the next year, its entire audience will spend most of their time in some kind of messaging ecosystem. That’s a trend that’s becoming the norm in Asia, where people are staying for longer periods of time within messaging apps, like WeChat, doing things like ordering pizzas and Ubers.

“I firmly believe that within a year the majority of our audience will spend most of their time in some kind of messaging ecosystem, so that’s where we want to be. We’re not trying to mold new behaviors but tap into existing ones. This is just our first foray. We’re hours away from the Euro Championships beginning; the hype and appetite is at its peak.”

Copa 90 is planning to launch on all the big messaging apps like Kik, WeChat and WhatsApp, but Kirkham thinks chatbots are a good place to start.

“We 100 percent think we can make revenue from it,” said Kirkham. “Within 12 months, a large proportion of our audience can be monetized that way, whether it’s via pay-per-view content or merchandising, where people can buy a Copa 90 shirt or items like that.”

Read full story here.


Text

The surprising tool bots use to get around those pesky CAPTCHAs

Ticket-scalping bots utilize humans to get through CAPTCHAs.

Lin-Manuel Miranda — “Hamilton” star, creator, certified genius and all-around national obsession — penned an impassioned plea in the New York Times this week asking for help to stop bots from taking over Broadway. Miranda shed light on how scalpers use bots to buy up tickets to plays, operas, concerts and other events in milliseconds, only to resell them for at least double their original price on sites such as StubHub.

As Miranda outlined, this activity is both super profitable and illegal, and apparently profitable enough to be worth it to some to break the law. But, one might wonder, how are they getting past the captcha — those squiggly lettered snippets specifically designed to thwart robo-buying?

They have a secret weapon: people.

According to the New York Attorney General’s office report that Miranda cites in his op-ed, one way that these bots are getting around the security measures designed to thwart them is by enlisting the help of humans. Some companies do use software, namely optical character recognition (OCR) programs that can convert an image to text, for example to make a scanned page of a book searchable. But the bots also send real-time feeds of the captchas (per Wikipedia, technically a backronym for "Completely Automated Public Turing test to tell Computers and Humans Apart") to have humans solve the puzzles that only humans are supposed to be able to solve.

From the report comes this picture of a somewhat grim career:

“[The] Bots transmit in real-time images of the CAPTCHAs they encounter on Ticketmaster and other sites to armies of “typers,” human workers in foreign countries where labor is less expensive. These typers — employed by companies such as Death by CAPTCHA, Image Typerz, and DeCaptcher — read the CAPTCHAs in real-time and type the security phrases into a text box for the Bot to use to bypass ticket vendors’ defenses and use their sites.”

This is far from a new tactic to get around captchas. In 2004, Cory Doctorow, co-editor at Boing Boing, reported that spammers were using free pornography sites to get people to solve the captchas for them. To unlock a video, a potential viewer would have to solve captchas piped in from other sites such as Yahoo and Hotmail, which spammers would then use to create oodles of e-mail accounts at a rapid pace to spam people.

Read full story here.


Text

Parents are worried the Amazon Echo is conditioning their kids to be rude

Parents find their kids “pushing the virtual assistant further than they would push a human.”

Alexa will put up with just about anything. She has a remarkable tolerance for annoying behavior, and she certainly doesn’t care if you forget your please and thank yous.

But while artificial intelligence technology can blow past such indignities, parents are still irked by their kids’ poor manners when interacting with Alexa, the assistant that lives inside the Amazon Echo.

“I’ve found my kids pushing the virtual assistant further than they would push a human,” says Avi Greengart, a tech analyst and father of five who lives in Teaneck, New Jersey. “[Alexa] never says ‘That was rude’ or ‘I’m tired of you asking me the same question over and over again.’”

Perhaps she should, he thinks.

When Amazon released its internet-connected speaker in 2014, the world was puzzled. “Well this one came out of nowhere,” mused the Verge. In the time since, the Amazon Echo has proven to be a sleeper hit, capable of learning many new “skills,” so that it can control the smart home, add events to your calendar, summon an Uber, even tell knock-knock jokes. It’s become such a curious and influential device that Google last month gave the world a peek at its equivalent, the Google Home, and Apple is reportedly cooking up its own version powered by Siri.

Because Alexa responds to verbal commands, the Echo is incredibly child-friendly. Amazon says it didn’t build the Echo with kids in mind, but they’re eagerly embracing it ... The syntax is generally simple and straightforward, but it doesn’t exactly reward niceties like “please.” Adding to this, extraneous words can often trip up the speaker’s artificial intelligence. When it comes to chatting with Alexa, it pays to be direct—curt even. “If it’s not natural language, one of the first things you cut away is the little courtesies,” says Dennis Mortensen, who founded a calendar-scheduling startup called x.ai.

For parents trying to drill good manners into their children, listening to their kids boss Alexa around can be disconcerting.

“One of the responsibilities of parents is to teach your kids social graces,” says Greengart, “and this is a box you speak to as if it were a person who does not require social graces.”

It’s this combination that worries Hunter Walk, a tech investor in San Francisco. In a blog post, he described the Amazon Echo as “magical” while expressing fears it’s “turning our daughter into a raging asshole.”

He might be a tad hyperbolic. (“For what it’s worth,” says Rebecca Hanover Kurzweil, a friend and fellow parent-cum-Amazon Echo owner, “his daughter is the sweetest girl you’ve ever met.”)

... [W]ith the proliferation of AI, a debate is emerging around how humans should treat bots.

Mortensen, who created the calendar-scheduling assistant, is a big believer in being nice to bots. Citing a passage about the master-slave dialectic in Hegel’s The Phenomenology of Spirit written in 1807, Mortensen argues that “you are worse off if you treat your machines in a demeaning kind of way.”

Read full story here.

#virtual assistants#digital assistants#bot identity#ai#artificial intelligence#conversational commerce#conversational agents#language#ethics


Text

OK Computer, Write Me a Song

Google says its AI software could make creative suggestions to help musicians, architects, and visual artists.

Last week [Douglas Eck, a researcher in Google’s main artificial intelligence research group, Google Brain,] announced a new Google project called Magenta aimed at making new kinds of creative software that can generate more sophisticated artworks using music, video, and text.

Magenta will draw on Google’s latest research into artificial neural networks ... Eck says he wants to help artists, creative professionals, and just about anyone else experiment and even collaborate with creative software capable of generating ideas.

“As a writer you could be getting from a computer a handful of partially written ideas that you can then run with,” says Eck. “Or you’re an architect and the computer generates a few directions for a project you didn’t think of.”

Those scenarios are a ways off. But at an event on creativity and AI hosted by Google last week, Project Magenta collaborator Adam Roberts demonstrated prototype software that gives a hint of how a musician might collaborate with a creative machine.

Roberts tapped out a handful of notes on a virtual Moog synthesizer. At the click of a mouse, the software extrapolated them into a short tune, complete with key changes and recurrent phrases. The software learned to do that by analyzing a database of nearly 4,500 popular music tunes.

Eck thinks it learned how to make key changes and melodic loops because it uses a crude form of attention, loosely inspired by human cognition, to extract useful information from the past tunes it analyzed. Researchers at Google and elsewhere are using attention mechanisms as a way to make learning software capable of understanding complex sentences or images ...

The technique is seen as one of the most promising ways to transition from machine learning that’s good at just pattern recognition—like transcribing speech—to software that is capable of planning and taking actions in the world (see “This Factory Robot Learns a New Job Overnight”).

Eck thinks reinforcement learning could make software capable of more complex artworks. For example, the sample tunes from Magenta’s current demo lack the kind of larger structure we expect in a song.

Magenta’s software is all being released as open-source in the hope of helping programmers and artists experiment with ideas like that. Eck also hopes to one day get help training Magenta’s software by releasing music or other creations to get feedback from the public.

Read full story here.

#machine learning#music#magenta#computer-generated music#computer-generated art#ai#artificial intelligence


Text

Movie written by algorithm turns out to be hilarious and intense

Sunspring, a short science fiction movie written entirely by AI, debuts exclusively on Ars today.


Ars is excited to be hosting this online debut of Sunspring, a short science fiction film that's not entirely what it seems. It's about three people living in a weird future, possibly on a space station, probably in a love triangle. You know it's the future because H (played with neurotic gravity by Silicon Valley's Thomas Middleditch) is wearing a shiny gold jacket, H2 (Elisabeth Gray) is playing with computers, and C (Humphrey Ker) announces that he has to "go to the skull" before sticking his face into a bunch of green lights. It sounds like your typical sci-fi B-movie, complete with an incoherent plot. Except Sunspring isn't the product of Hollywood hacks—it was written entirely by an AI. To be specific, it was authored by a recurrent neural network called long short-term memory, or LSTM for short. At least, that's what we'd call it. The AI named itself Benjamin.

Knowing that an AI wrote Sunspring makes the movie more fun to watch, especially once you know how the cast and crew put it together. Director Oscar Sharp made the movie for Sci-Fi London, an annual film festival that includes the 48-Hour Film Challenge, where contestants are given a set of prompts (mostly props and lines) that have to appear in a movie they make over the next two days. Sharp's longtime collaborator, Ross Goodwin, is an AI researcher at New York University, and he supplied the movie's AI writer, initially called Jetson. As the cast gathered around a tiny printer, Benjamin spat out the screenplay, complete with almost impossible stage directions like "He is standing in the stars and sitting on the floor." Then Sharp randomly assigned roles to the actors in the room. "As soon as we had a read-through, everyone around the table was laughing their heads off with delight," Sharp told Ars. The actors interpreted the lines as they read, adding tone and body language, and the results are what you see in the movie. Somehow, a slightly garbled series of sentences became a tale of romance and murder, set in a dark future world. It even has its own musical interlude (performed by Andrew and Tiger), with a pop song Benjamin composed after learning from a corpus of 30,000 other pop songs.

Building Benjamin

When Sharp was in film school at NYU, he made a discovery that changed the course of his career. "I liked hanging out with technologists in NYU's Interactive Telecommunications Program more than other filmmakers," he confessed. That's how he met Goodwin, a former ghost writer who is finishing up a PhD at NYU on natural language processing and neural networks. Speaking by phone from New York, the two recalled how they were both obsessed with figuring out how to make machines generate original pieces of writing. For years, Sharp wanted to create a movie out of random parts, even going so far as to write a play out of snippets of text chosen by dice rolls. Goodwin, who honed his machine-assisted authoring skills while ghost writing letters for corporate clients, had been using Markov chains to write poetry. As they got to know each other at NYU, Sharp told Goodwin about his dream of collaborating with an AI on a screenplay. Over a year and many algorithms later, Goodwin built an AI that could.

Benjamin is an LSTM recurrent neural network, a type of AI that is often used for text recognition. To train Benjamin, Goodwin fed the AI with a corpus of dozens of sci-fi screenplays he found online—mostly movies from the 1980s and 90s. Benjamin dissected them down to the letter, learning to predict which letters tended to follow each other and from there which words and phrases tended to occur together. The advantage of an LSTM algorithm over a Markov chain is that it can sample much longer strings of letters, so it's better at predicting whole paragraphs rather than just a few words. It's also good at generating original sentences rather than cutting and pasting sentences together from its corpus. Over time, Benjamin learned to imitate the structure of a screenplay, producing stage directions and well-formatted character lines. The only thing the AI couldn't learn were proper names, because they aren't used like other words and are very unpredictable. So Goodwin changed all character names in Benjamin's screenplay corpus to single letters. That's why the characters in Sunspring are named H, H2, and C. In fact, the original screenplay had two separate characters named H, which confused the humans so much that Sharp dubbed one of them H2 just for clarity.
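To see what "learning which letters tend to follow each other" looks like in practice, here is a minimal sketch of the simpler technique mentioned above, a character-level Markov chain. It is not Goodwin's code and it is not an LSTM. The function names and the default context length of three characters are assumptions made for the example.

```python
import random
from collections import defaultdict


def build_model(text: str, order: int = 3) -> dict:
    """Map every run of `order` characters to the characters seen right after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model


def generate(model: dict, seed: str, length: int, rng: random.Random) -> str:
    """Extend `seed` one character at a time; `seed` must be exactly `order` characters long."""
    order = len(seed)
    out = seed
    for _ in range(length):
        followers = model.get(out[-order:])
        if not followers:
            # This context never appeared in the training text, so stop.
            break
        # Picking from the raw list makes frequent continuations more likely.
        out += rng.choice(followers)
    return out
```

The chain only ever looks at the last few characters, which is the limitation the article points to: an LSTM can carry information across much longer stretches of text, so it has a better chance of keeping a whole paragraph coherent.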

When Sharp and Goodwin entered Sunspring in the Sci-Fi London contest, they were delighted when the judges placed it into the top ten out of hundreds of entries. One judge, award-winning sci-fi author Pat Cadigan, said, "I'll give them top marks if they promise never to do this again." Before the final judging, audience members were allowed to vote online for their favorite film from the top ten. As the filmmakers watched thousands of votes rolling in, Goodwin realized something. "These guys are cheating; they’re getting thousands of votes, they’re getting bots to vote for themselves," he said to Sharp. That's when he and Sharp came up with a nefarious plan. "I said, [Benjamin] is going to outvote them at the last minute," Sharp recalled. "So we had him vote 36,000 times per hour in last hours of the contest, and he crushed the cheaters." Sharp immediately called Louis Savy, who runs the film festival, and confessed that their AI had voted for himself and that they wanted to distance themselves from the AI's actions. Savy thought that was hilarious and decided to interview the AI on stage during the award ceremony. Here's part of the transcript:

What do you think of your historic nomination against human opponents in this contest?

I was pretty excited.

I think I can see the feathers when they release their hearts. It's like a breakdown of the facts. So they should be competent with the fact that they won't be surprised.

What is the future of machine written entertainment?

It's a bit sudden.

I was thinking of the spirit of the men who found me and the children who were all manipulated and full of children. I was worried about my command. I was the scientist of the Holy Ghost.

What's next for you?

Here we go. The staff is divided by the train of the burning machine building with sweat. No one will see your face. The children reach into the furnace, but the light is still slipping to the floor. The world is still embarrassed.

The party is with your staff.

My name is Benjamin.

Ever since that day, Sharp and Goodwin have called the AI by its chosen name.

Read full story here.

#ai #science fiction #experiments #movies #science fiction movies #sci-fi #artificial intelligence #recurrent neural network #neural networks #machine learning #computer-generated art #computer-generated movie

Text

Isis Twitter accounts bombarded with nudes by pornbots

Hackers have created hundreds of bots on Twitter filled with nude images to follow Isis-related Twitter accounts.

Isis have once again found themselves the target of hackers. They’ve been rickrolled in the past, but this time it’s pornbots causing hassle. According to Ahlul Bayt News, the pornbots do not tweet but simply follow known Isis accounts, hundreds of bots within a minute, all of them featuring graphic imagery, something unlikely to impress Isis fighters trying to concentrate.

The hacker collective Anonymous was behind the rickrolling epidemic of 2015, a movement that saw Isis hashtags bombarded with Rick Astley’s “Never Gonna Give You Up”. So far, no person or organisation has come forward to claim responsibility for this pornbot pile-on. Some Twitter users following developments have commented that a lot of Isis accounts have been locked while they try to find a solution.

The Isis media agency Amaq reportedly had to disable its account, while another account saw its follower count jump from 50 to 800. Normally, that’d be a cause for celebration but alas it was just 750 non-tweeting pornbots. You know what they say – sex sells...and shuts down Isis Twitter accounts.

Text

Algorithms: The Future That Already Happened

Michael S. Evans reviews Pedro Domingos’s book, The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World.

ONE DAY IN LATE MARCH, Microsoft made a chatbot named Tay. Tay began the day tweeting love to everyone. A few hours later, Tay was quoting Adolf Hitler and offering filthy sex on demand. To borrow a phrase from John Green, Tay fell in love with Hitler and filthy sex the way you fall asleep: slowly, and then all at once. That’s because Tay learned from humans, and humans are awful.

Machine-learning algorithms try to make sense of human activity from the data we generate. Usually these algorithms are invisible to us. We see their output as recommendations about what we should do, or about what should be done to us. Netflix suggests your next TV show. Your car reminds you it’s time for an oil change. Siri tells you about a nearby restaurant. That loan you wanted? You’re approved!

In a sense, you’re making these recommendations yourself. Machine-learning algorithms monitor information about what you do, find patterns in that data, and make informed guesses about what you want to do next. Without you, there’s no data, and there’s nothing for machine learning to learn. But when you provide your data, and when the guesses are correct, machine learning operates invisibly, leaving you to experience life as an endless stream of tiny, satisfying surprises.

Or at least that’s how things could be, according to computer scientist Pedro Domingos. In The Master Algorithm, Domingos envisions an individually optimized future in which our digital better halves learn everything about us, then go out into the world and act for us, thereby freeing us to be our best non-digital selves. In this vision, machine-learning algorithms replace tedious human activities like online shopping, legal filing, and scientific hypothesis testing. Humans feed data to algorithms, and algorithms produce a better world for humans.

It sounds like science fiction. And it is, notably in Charles Stross’s novel Accelerando. But is this future possible?

If you’re skeptical, maybe it’s because you think we’re not capable of creating good enough machine-learning algorithms. Maybe you got a bad Netflix recommendation. Maybe Siri can’t understand your instructions. The technology, you might think, just isn’t very good.

The Master Algorithm seeks to prove you wrong. Over the course of several chapters on the current state of machine-learning research, Domingos explains that we are close to creating a single, universal learning algorithm that can discover all knowledge, if given enough data. And he should know. In a research field dominated by competition, Domingos has long championed a synthetic approach to machine learning: take working components from competing solutions, find a clever way to connect them, then use the resulting algorithm to solve bigger and harder problems. The algorithms are good enough, or soon will be.

No, the problem isn’t the technology. But there are good reasons to be skeptical. The algorithmic future Domingos describes is already here. And frankly, that future is not going very well for most of us.

Read full review here.

Text

AJ Bell looks to enable trading through Facebook Messenger

AJ Bell, a UK-based broker, is looking to develop a bot to trade shares either through Facebook Messenger or SMS.

UK fund supermarket AJ Bell is looking to allow investors to trade through Facebook’s Messenger service, as it aims to broaden its appeal to millennials.

The low-cost broker, which has £28bn of assets under administration, said it intended to launch a trading service on the messaging platform in the next three months, enabling retail investors to give instructions to buy and sell shares through the app.

AJ Bell customers will be able to communicate directly via text message with a bot, a software program powered by artificial intelligence that can respond to human requests.

Tim Huckle, digital strategy director at AJ Bell, said that using the platform, which has more than 900m monthly users, would appeal to 18-30 year olds.

The company believes that it is the first to execute a trade using Facebook’s Messenger app. It is also working on upgrading its system to allow retail investors to buy and sell funds through the platform.

“We have created a secure authorisation framework between the Facebook Messenger platform and AJ Bell Youinvest,” said Mr Huckle, referring to the company’s consumer investment platform.

The move comes two months after Facebook opened up its AI tools to businesses in April, in the hope that companies would create bots to communicate with customers on the platform.

Mr Huckle said that he bought £500 of Facebook shares using his own account and has released a video documenting the transaction. It shows how investors could access their accounts through Messenger, request information including charts and prices on hundreds of different stocks, and request a quote — which they can then choose to accept or reject.

Following the transaction, they can view a summary including dealing charges and foreign exchange trading costs without leaving the Messenger app.

Before the service is opened to the public, however, the broker will also need to submit its bot to Facebook for approval, much like app developers must gain approval from Apple to appear in its app store.

Facebook declined to comment.

Distributing funds through social media platforms has long been an aspiration for the asset management industry.

A senior partner at a consultancy firm said that using Messenger to distribute funds would give “much more reach” to asset managers looking to push investment products to the next generation of investors.

“If this is successful then all platforms are going to start wanting to do this,” he said.

But he warned that making trading too easy could backfire. “We know that retail investors tend to overtrade and that they lose out because of transaction costs,” he said. “That would be something to watch out for.”

Read full story here.

Text

Conversable chatbot will offer allergy info along with a side of Wingstop wings

Conversable, an Austin-based company, launches a bot for Wingstop.

Conversable, a conversational commerce startup seeking to bolster relationships between brands and consumers, is announcing a partnership today with Wingstop. Users will be able to order food, inquire about potential food allergens, and look for nearby locations from within apps like Facebook Messenger and Twitter.

Instead of putting APIs in front of developers, Conversable wants to power the Fortune 500 with its own core platform that can be built out to nearly limitless applications. The company is starting with Wingstop but says it has plans to change the way people shop, purchase airline tickets and interact with brands.

Co-founders Ben Lamm and Andrew Busey have worked together twice before. After finding success with technology studio Chaotic Moon and game design studio Team Chaos, the two began tinkering with the idea of using automated chatbots to improve brand accessibility.

“What I love about this is that over time companies can just keep adding new conversation trees to the core platform so that over time you can respond to more things without spending years and a fortune building out mobile apps,” said Ben Lamm, Conversable CEO. “For me as a consumer, my experience with a brand just gets better and better as I can do more.”

$2 million in seed funding has been enough to get the team of 15 people running. So far it has secured a number of enterprise-paying, domestic Fortune 500 clients. The chat solutions are subscription based with available professional services for customization.

Most of us have used bad chat bots that are unresponsive and inaccurate. Conversable wants to avoid falling into the trap by emphasizing backend systems integration. The chatbots are being marketed to improve user experiences and brand engagement all without utilizing machine learning or AI.

“While machine learning and AI are interesting, brands want to understand more context at the individual node of the question or response. They want to grab analytics and empower the business to understand what people are asking,” Lamm added.

Instead, the Texas-based company will focus on further integration with emerging platforms like Amazon Echo.

Read full story here.

Text

A Computer Tried (and Failed) to Write This Article

Writer Adrienne Lafrance tries to develop computer-generated articles that sound like her own writing, but fails.

None of this turned out how it was supposed to.

Here I am, a human, writing a story that was assigned to a machine. If I’d published what the computer came up with, you’d be reading something like:

“content that was that communications and everything that makes on a person what they’re are also to be in the Internet in the fact about it is that models are technologication of the same that its also from the most computer.”

A machine really did come up with that garbage. But let me back up for a second.

A little over a year ago, I started asking around—among computer scientists at universities and tech companies, mostly—to see if someone would help me design and carry out a weird little experiment I had in mind. I wanted to train a machine to write like me.

The idea was this: We’d give Robot Adrienne a crash course in journalism by having it learn from a trove of my past writings, then publish whatever Robot Me came up with.

This isn’t a far-fetched idea, and not just because robots have a long track record of automating human labor. There are already algorithms that can write stories. At The Los Angeles Times, there’s a program that reports breaking news about earthquakes. Bots can easily be programmed to write other basic stories—things like box scores and real estate listings, even obituaries. In January, Wired had a news-writing bot produce a remembrance of Marvin Minsky, the artificial intelligence pioneer. The result was a little dry compared with the obituary for Minsky written by a human at The New York Times—but the machine version was decent. Last year, NPR’s Scott Horsley raced a bot to report quarterly earnings for the diner chain Denny’s. Horsley’s version had more stylistic flourish, but the bot filed its story in two minutes flat—five full minutes before Horsley, basically an eternity in radio time.
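For a sense of how a fill-in-the-blanks news bot of this kind can work, here is a rough sketch using Python's standard `string.Template`. It is not the program used by The Los Angeles Times or any other newsroom; the template wording, the field names, and the event record are all invented for illustration.

```python
from string import Template

# Hypothetical template; real newsroom bots use their own wording and data feeds.
QUAKE_TEMPLATE = Template(
    "A magnitude $magnitude earthquake struck $distance miles from $place "
    "at $time, according to $source."
)


def write_quake_story(event):
    """Fill the fixed template with fields from one structured event record."""
    return QUAKE_TEMPLATE.substitute(event)


# Made-up event record for illustration only.
event = {
    "magnitude": "4.2",
    "distance": "6",
    "place": "Exampleville",
    "time": "6:25 a.m.",
    "source": "a hypothetical seismic data feed",
}
story = write_quake_story(event)
print(story)
```

Every story such a bot files has the same shape, with only the filled-in fields changing, which is why this style of automation is fast but formulaic.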

Impressive as these news bots may be, I didn’t want Robot Adrienne to be a formulaic speed machine with a Mad Libsian approach to reporting. Instead, I wanted to use a neural network, a computer model inspired by the human brain, that could assess a bunch of input data, infer its structure, then represent that information with its own original output.

This approach could ostensibly produce something more complex and less predictable than the bots that spend their days filling in the blanks. Using a neural network to generate language is, on the simplest level, how human babies learn about language, too: they model input from adults, then generate their own output. “If you think about that loop, babies are taking information in, encoding it, and expressive speech is the output,” said Kirsten Cullen Sharma, a neuropsychologist who focuses on child development. “This is how the brain develops, [and determines] what connections the brain decides to keep.”

My interest in a neural-net configuration for my experiment was somewhat selfish. These networks are so cool in part because when you give a machine a gob of data, that information is all the machine has to make sense of the world. So when you look at the eventual output, you have the potential to learn quite a bit about the input. If a computer can learn how to write simply by analyzing one person’s work, that machine is theoretically in a position to reveal otherwise subtle idiosyncrasies about the input data—a particular writer’s structural conventions might become obvious, and other stylistic tics illuminated.

If a robot’s eventually going to take my job anyway, why not get it to help me become a better writer first?

Last fall, Google agreed to help me. This was great news. The company’s commitment to A.I. research is well documented: It has taught computers to dream, to defeat humans in abstract strategy games, and to compose music. Artificial intelligence is arguably at the heart of everything Google is and does.

But after months of back and forth, with little explanation, the company said it wouldn’t be able to help me with my experiment after all. (Understandable, of course, that one of the world’s leading tech firms might have other priorities.) I had similar experiences with several other big tech companies that focus on artificial intelligence.

Last month, my editor suggested I ask the writer and technologist Robin Sloan, one of those rare humans who always seems to be effortlessly dabbling in some crazy-brilliant side-project, yet is still somehow kind enough to maybe consider helping a random reporter obsessed with building a robot version of herself. As it happened, Sloan had lately been conducting a similar language-generation experiment of his own: tinkering with a recurrent neural network and a massive chunk of text from the annals of science fiction as a way to see what kind of story a computer mind might write.

So I begged him to help me, and told him about the massive plain text doc of my writing I had all ready to go.

“I am totally game to train an RNN on your Adrienne Corpus,” Sloan told me. “I would definitely set your expectations low—there is no way in which its output will seem like ‘a piece by Adrienne’—but at the same time, the things these networks capture—the ‘flavor’ of text—are, to me, still pretty remarkable.”

So I sent Sloan the document, which contained painstakingly copy-and-pasted text from two years of published stories—almost all the stuff I’d written for The Atlantic since 2014, totaling 532,519 words. Sloan turned to an open-source Torch-RNN package—which you can find on GitHub, courtesy of the Stanford computer scientist Justin Johnson—and he got to work.

“One of the magical things about this moment in deep learning is that many of the core tools have been packaged up and made accessible to enthusiast-level programmers (like me), but that doesn’t mean it’s exactly straightforward,” Sloan explained. “I had to install and configure a scientific computing package called Torch and a bundle of sub-packages that specifically support neural networks.” He also used a computer with a very fast GPU—experimentation still would have been possible with the kind of processor in a powerful laptop, for instance, but it would have taken a lot longer.

It became clear pretty quickly that half-a-million words, or about 3 MB of text, wasn’t enough for the neural network to learn language the way I’d hoped. When Sloan entered a prompt—“The most interesting thing is...”—here’s what the machine spit out in various iterations of the initial experiment:

The most interesting thing is a different on the past with the distriction of the film of a different of the computer seems of the since something that it was have people in the companies of the most computer that their people of the find of surfect for the profession of the same -- and the prediction of the company state that makes it was something when they were not on the other more of the meanth for the computer that they were all the parence -- and for the way that it is a companies and one of the paternation of the long of the computer in the some hald for a really to the first that has product and computer and companies in for the way that it makes the first that was a stories of the person of a person of the way the first that have a term of the question of the most interesting the computer of where the computer that they are things about the companies that was interested to computer when they were all of the planet of the companies with the most in the way the part of the companies that their experience and…

Here’s another example:

The most interesting thing is the one of the could that experience. "In the paceported by the drate which the nettory ferers of the east desound, the Now that are not be a mabriates, macketing other way for distant to a still provides. The presive in the long the years of could design are a computer to a time in the used to the person replored the same that it for the decigual is behove probless. For mean. There was a few in pulling diefter on pook simple Moren the really complement had business looking with too was flay depressicled to the the truition of a part the concent of the computer who have the decention of a bick of a litten, For study releaser satelles piece, and deference the recond in the world with a prite vises. The years like what's the play with it was not been to call the movion from the five samplo do make see planet as "more somethative to have a biggle than the finse counters to the mother take Google, eught in a releason in partable partically as the "explore of the more and the trach and sai …

The computer had ingested the Adrienne Corpus and produced mostly gobbledygook. This wasn’t exactly a surprise. “The deal with these networks—the thing that makes them so powerful, but also weirdly fragile—is that they know NOTHING at the outset of their training,” Sloan said. “It's not like you tell them, ‘Okay, this is going to be English text, look for nouns and verbs, etc.’—it's just a stream of characters. And from that stream, it infers all this amazing structure. BUT the inference requires a lot of input.”

So we needed more words, but how many?

Read full story here.

Text

Artificial Curiosity Allows This Bot to Triumph at Montezuma’s Revenge

Researchers in Google’s DeepMind train a bot with “intrinsic motivation” to complete Montezuma’s Revenge.

youtube

When it first appeared in 1984, Montezuma’s Revenge was considered one of the most challenging video games to appear on a gaming console. Now, in an effort to help machines learn more efficiently, AI researchers have created an algorithm that actually motivates the hero of this classic video game in some very important ways—and it’s surprisingly effective.

Anyone who has ever played Montezuma’s Revenge knows how frustrating this game can actually be. The authors of the new study describe the challenges this way:

Montezuma’s Revenge is infamous for its hostile, unforgiving environment: the agent [called Panama Joe] must navigate a maze composed of different rooms, each filled with a number of traps. The rewards are far and few in between, making it almost impossible for undirected exploration schemes to succeed.

This vintage game is difficult for human players, let alone an artificial intelligence. To date, it takes hundreds of millions of individual frames for an AI to attain even the slightest performance levels, and at best they’re only capable of clearing two or three rooms out of the 72.

Google’s DeepMind division has been trying to solve Montezuma’s Revenge for quite some time now. Last year, Google announced that its Deep Q system was capable of defeating 49 Atari games simply by watching how video games are played. But Montezuma’s Revenge presents a different challenge entirely. As reported in Wired at the time, Deep Q was incapable of any kind of progress in the game, scoring “a big fat zero.” The issue, as pointed out by Dave Gershgorn in Popular Science, is that in order to succeed at this game, “players need to plan how to clear a room, and then execute that plan.”

To that end, and in its latest effort to finally create an agent that can at least partially succeed at Montezuma’s Revenge, the DeepMind researchers endowed Panama Joe with what’s called “intrinsic motivation.”

Basically, the protagonist of our digital adventure is trained to solve each level in a similar way to how humans do it, and it’s done using novelty-based rewards. Panama Joe is “motivated” not only to win the game but also to explore more of the game. In each episode, he tries out something different, and this often breeds new solutions and ultimately success.

Of course, Joe isn’t really self-aware like human players. Rather, he’s incentivized through a series of digital rewards. This helps him learn faster, and from just a few examples.
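One simple way to picture a novelty-based reward is a bonus that shrinks every time the agent revisits the same place. The sketch below uses a plain visit counter; that is an assumption chosen for illustration, not the DeepMind researchers' actual method, and the class name, the `beta` weight, and the room labels are all hypothetical.

```python
import math
from collections import Counter


class NoveltyBonus:
    """Adds a bonus that shrinks each time the same state is visited."""

    def __init__(self, beta=1.0):
        self.beta = beta          # weight of the novelty bonus (assumed value)
        self.visits = Counter()   # how often each state has been seen

    def reward(self, state, game_reward):
        """Return the game reward plus beta / sqrt(visit count)."""
        self.visits[state] += 1
        return game_reward + self.beta / math.sqrt(self.visits[state])


bonus = NoveltyBonus(beta=1.0)
# Hypothetical room labels; the game itself pays nothing in these steps.
rewards = [bonus.reward(room, 0.0) for room in ["room_1", "room_1", "room_2", "room_1"]]
print(rewards)
```

Even when the game pays nothing, a first visit to a new room earns the full bonus while repeat visits earn less, so an agent maximizing this total is nudged toward unexplored rooms.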

In one example (shown in the above video), Panama Joe actually manages to solve an entire level in just four tries. And in a comparative analysis of agents programmed with and without this so-called artificial curiosity, the intrinsically motivated Joe explored 15 rooms out of 24, while the unmotivated Joe explored just two.

By working this way in so-called Arcade Learning Environments, the researchers are hoping to produce algorithms that can be applied to the real world. In future, similar motivations could help robots and other autonomous devices navigate and explore their worlds in a similar manner.

#machine learning #games #arcade learning environment #ai #artificial intelligence #experiments #research #deepmind #google #montezuma's revenge

Text

MZ’s Gabe Leydon will demo a bot that runs New Zealand’s transportation system

At VentureBeat’s MobileBeat 2016 event, mobile game company MZ will demo a bot that tracks real-time movement in New Zealand’s transportation system.

At our MobileBeat 2016 event on July 12-13 covering artificial intelligence and bots, a host of new bots from brands and other startups will launch on stage.

The event will come three months after Facebook helped set off the bot craze in April, when it launched its bot platform for Facebook Messenger. Those three months will have given developers enough time to dig in and build some meaningful bots.

But one of the more exciting demos of bot technology will come from Gabe Leydon, who will show off a way to use real-time technology and artificial intelligence to help run New Zealand’s transportation system, starting with buses. Leydon, who is chief executive of one of the biggest-grossing mobile game companies, MZ, plans to expand the technology to be able to cover traffic lights, parking, and other areas, as we first reported two weeks ago. He’s actively working with New Zealand authorities right now to make that happen. MZ’s technology tracks movements of people with phones and other activity picked up by sensors, all within a live stream — and a bot on top can draw from artificial intelligence to allow users and public administrators to take action right away.

Through a single app, the NZ government will have a complete view into its infrastructure — for example, where the buses are, whether they are on time, and if they’re going in the right direction. “Users will soon have the ability to see down to the second where everything is, so that they can plan their days in a way that’s more efficient,” he said recently on CNBC’s Squawk Box, referring to how people will now be able to get updates on where exactly their bus is on its route. “Everyone knows they have to get to the bus stop 15 to 30 minutes early. All that is going to end.”

Read full story here.
