#azure openai service

Text

Can ChatGPT help Microsoft (MSFT) kill Google?

Microsoft (MSFT) is trying to destroy Alphabet’s Google by adding the ChatGPT AI to its Bing search engine and Edge browser.

“We’re excited to announce the new Bing is running on a new, next-generation OpenAI large language model that is more powerful than ChatGPT and customized specifically for search,” a Microsoft press release states. “It takes key learnings and advancements from ChatGPT and…


#AI-as a service (AIaaS)#Azure OpenAI Service#Can ChatGPT help Microsoft (MST) kill Google?#How Much Money can Alphabet (GOOGL) make from Bing?#Is Alphabet in danger from Microsoft?#Is Microsoft’s New Bing a threat to Alphabet (GOOG)?#Microsoft (MST)#Yes Alphabet is a Value Investment

2 notes


Text

Explore Cloud Computing 2024: Unlock the Future Today!

Discover the future of technology in our blog, "Cloud Computing 2024: Key Trends and Challenges." Stay ahead with insights into the latest trends shaping cloud computing. From AI integration to multi-cloud strategies, we cover it all.

Learn how businesses leverage AI for automation and personalized experiences. Explore the benefits of multi-cloud approaches and the importance of security and compliance. At ECF Data Solutions, we're here to help you navigate the challenges and embrace the opportunities of cloud computing.

Unlock the potential of cloud technology today. Contact us for tailored solutions to propel your business forward.

Contact Us:

ECF Data Solutions

#managed it services#cloud computing#cloud services#cloud security#azure services#azure ai#azure openai service

1 note


Text

KPIT has partnered with Microsoft to introduce an Azure OpenAI Service-powered Copilot to transform automotive repair and maintenance.

The Copilot is built on KPIT's proven root cause diagnostics platform Trace2Fix, which addresses customer retention and dealership profitability challenges. These challenges stem from difficulties in identifying the underlying root causes due to complex vehicle systems and a global shortage of certified and skilled service technicians.

Read the media release to learn more:

https://www.kpit.com/news/kpits-vehicle-diagnostics-platform-trace2fix-gets-a-microsoft-azure-openai-service-boost/

0 notes

Text

Microsoft's Comprehensive Suite of Free Artificial Intelligence Courses: A Gateway to Mastering AI

Exciting news! Microsoft offers free AI courses covering everything from basics to advanced topics, suitable for all skill levels. Enhance your AI knowledge with expert-led training. Don't miss this opportunity: start learning today! #Microsoft #AICourse

In an era where Artificial Intelligence (AI) is reshaping industries and daily lives, Microsoft has taken a significant step forward by offering a series of free courses designed to empower professionals, enthusiasts, and students alike. These courses, available through various online platforms, provide an invaluable opportunity for individuals to enhance their understanding and skills in AI,…


#AI Education#AI for Beginners#AI-powered Apps#Artificial Intelligence#Azure AI Services#Azure OpenAI Service#Computer Vision#Ethical AI#Free Courses#Generative AI#GitHub Copilot#Machine Learning#Microsoft#Microsoft Copilot Studio#Natural Language Processing#Neural Networks#Power Virtual Agents#Professional Development#Responsible AI#Visual Studio

0 notes

Text

Microsoft and Epic Join for Healthcare AI

Today, technology holds the possibility of helping us overcome some of the largest problems we face, and nowhere is generative AI more necessary or potentially more influential than in healthcare. Epic and Microsoft are working together to advance generative AI in the healthcare sector. Together, we are tackling some of its most critical needs, such as staffing shortages and workforce burnout, to help clinicians better serve their patients.

To address many of the challenges physicians face today, we combined Epic’s deep knowledge of the healthcare sector and clinical workflows with Microsoft’s expansive cloud and AI technology. Today, we’re announcing an expansion of our strategic commitment to integrate conversational, ambient, and generative AI technologies throughout the Epic electronic health record (EHR) ecosystem and to deliver AI to healthcare at scale. The initiative will broaden secure access to AI-powered clinical insights and administrative tools across a variety of Epic modules. Its aims are to improve patient care, boost operational efficiency, enhance patient experiences, and support the financial integrity of health systems globally. Its goal is to accelerate the development of solutions for healthcare’s most pressing needs.

Health organizations are struggling with pressing staffing, budgetary, and clinical access problems. In response, we are collaborating to rapidly deploy hundreds of copilot solutions that safely harness the value the Microsoft Cloud and our AI technologies can offer. At its annual Users Group Meeting today, Epic will highlight several of these new features, which build on our Azure OpenAI Service and Nuance DAX Express solutions, including:

Using note summarization to increase clinician productivity: The new capabilities are designed to help physicians and nurses work more efficiently in their everyday clinical workflows. They build on the previously announced AI-assisted Epic In Basket. The solutions’ recommended content and in-context summaries should make documentation and review faster.

Embedded ambient clinical documentation to increase physician productivity: Nuance’s Dragon Ambient eXperience (DAX) technology has already been deployed with hundreds of Epic customers and currently supports thousands of physicians. Building on it, Epic will demonstrate DAX Express AI technology integrated into the native Epic Hyperdrive platform and the Haiku mobile application, creating a more seamless workflow for users. In addition, Epic has named Nuance one of the first partners in its Partners and Pals third-party vendor program.

Increasing administrative efficiency by reducing labor-intensive, manual processes: Revenue cycle management is one of many areas where generative AI could significantly increase efficiency. For instance, Epic will show an AI-powered solution that gives medical coding staff recommendations based on the clinical record in the EHR. The aim is to improve accuracy and streamline the entire coding and billing process.

Advancing medicine for better patient outcomes: Epic is now offering an initial group of customers generative AI exploration in SlicerDicer, built on Azure OpenAI Service. It is intended to help fill gaps in clinical evidence using real-world data, investigate rare diseases, and more.

Our efforts to bring Nuance ambient technology and Azure OpenAI Service into the Epic ecosystem show how deeper strategic partnerships can significantly speed up the availability of practical AI-driven solutions for healthcare organizations and the patients they serve.

The U.S. Department of Health and Human Services projects a national shortfall of 90,000 physicians by 2025. In addition, between 40% and 60% of clinicians report being burnt out. On top of these challenges, healthcare providers must manage their finances while still delivering high-quality care. According to McKinsey & Company, administrative costs account for nearly 25% of U.S. national health spending, a share that technology could help reduce.

In addition, a recent UPMC Center for Connected Medicine/KLAS Research survey of 58 executives from provider and payer organizations again emphasized the critical need to improve operational and clinical efficiency. According to the survey, health systems will prioritize spending on artificial intelligence (AI) solutions over the next two years. Their focus areas are operational efficiency, disease management and prediction, population health management, value-based care, patient engagement, and clinical research.

The expanded partnership between Epic and Microsoft builds on integrations we recently announced. These include using Azure OpenAI Service to automatically draft message responses, and integrating SlicerDicer, Epic’s self-service reporting tool, with Azure OpenAI Service to enable interactive data analysis. In addition, Microsoft and Nuance have worked together to integrate the Nuance® Dragon® Ambient eXperience™ Express (DAX Express™) solution into the Epic platform, as part of a broader approach to adding AI-powered features for clinical and administrative users.

Epic’s approach to using Microsoft’s technology and infrastructure is unique in both its depth and breadth. To address the challenges facing healthcare today, we are working together to bring generative AI to healthcare at scale, as quickly as possible, ethically, and in collaboration with providers.

#tech news#technology#govindhtech#news#microsoft#azure openai service#healthcare#health#ai#generative ai

0 notes

Text

[DAX] Measure descriptions with Azure OpenAI

A while ago we published a post about automatically documenting measure descriptions using the external tool Tabular Editor and the ChatGPT API. The catch is that the API trial is now limited to three months or to a set number of requests.

When deciding whether or not to pay, I would consider one interesting difference in the OpenAI service that runs inside Azure: Microsoft guarantees that your data is your data, and that whatever you do with the AI stays yours alone. For me, that is reason enough to pay for ChatGPT through Azure rather than through OpenAI.

This article shows how to do what we covered before, but by deploying a ChatGPT 3.5 model and changing the C# script to use that service in Azure.

To follow along, we need an Azure OpenAI resource. Access to this resource is restricted, and we can only get it by filling out a request form. Look for it when creating the resource, just below where you would select the pricing tier.

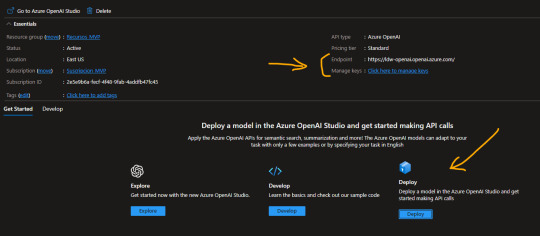

Microsoft’s approval to use the resource may take a few days. Once access is granted, we can use the S0 tier. This resource is a workspace for exploring, developing, and deploying models; in our case we want to deploy an existing one. After creating the resource we’ll see the following screen. Before going into Deploy, we’ll copy the values we need.

For our script we’ll need the “Endpoint” and one of the generated “Keys”. Then we can click “Deploy”.

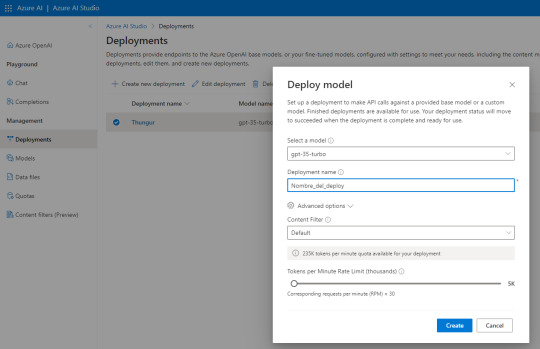

Once Azure AI Studio opens, go to “Deployments” to create a new one and use this configuration:

The deployment name matters because it becomes part of the URL we send requests to. Select the model version intended specifically for chat, so that we replicate the ChatGPT behavior we want.
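Because the deployment name ends up in the request URL, a short sketch may help. The original post uses a C# script inside Tabular Editor, which is not reproduced here. The Python below is only an illustration: it builds one chat completions request without sending it. It assumes the Azure OpenAI REST path `/openai/deployments/{deployment}/chat/completions`, the `api-key` header, and API version `2023-05-15`; check which versions your resource supports. The resource URL, key, deployment name `gpt35-dax`, prompt text, and `max_tokens` value are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed API version; use one that your Azure OpenAI resource supports.
API_VERSION = "2023-05-15"


def build_chat_request(endpoint: str, api_key: str, deployment: str,
                       measure_name: str, dax_expression: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for one DAX measure."""
    # The deployment name is a path segment, so renaming the deployment changes the URL.
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={API_VERSION}")
    body = {
        "messages": [
            {"role": "system",
             "content": "You write one-sentence descriptions of DAX measures."},
            {"role": "user",
             "content": f"Describe the DAX measure {measure_name}: {dax_expression}"},
        ],
        "max_tokens": 100,  # hypothetical cap on the reply length
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values: replace them with your Endpoint, Key, and deployment name.
req = build_chat_request("https://my-resource.openai.azure.com/", "<your-key>",
                         "gpt35-dax", "Total Sales", "SUM(Sales[Amount])")
print(req.full_url)
```

Sending the request would mean passing it to `urllib.request.urlopen` and reading `choices[0].message.content` from the JSON response. That step is left out so nothing is sent by accident.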

Pay attention to the advanced options, since they let us set the tokens-per-minute quota and the requests-per-minute rate limit. To keep it close to the free OpenAI API, I set it to 30, which is 10 more than the previous limit.

NOTE: Why did I do this? If you’ve already tried the GPT trial API, you’ll have seen that it lets you check your spending and usage. Keeping that rate limit gave me a fairly reasonable cost and kept spending from growing too much, since I don’t use it only for DAX descriptions. You can change the value.

That’s enough to have our own deployment of the model. If you want to try it, go to “Chat” and send it a message. There we can see the requests and the JSON, and adjust parameters:

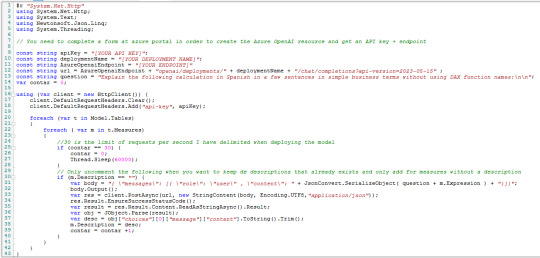

With the deployment created and the values copied earlier, we can proceed as before. Open Power BI Desktop with the model you want to document. Then open Tabular Editor and use the script to add descriptions to every measure that doesn’t already have one. It pauses when it reaches 30 requests because that’s the rate limit I set for my deployment; you can change that.

The first variables are the values we copied and the deployment name. Once those three are filled in, the rest should work. You can copy the script from my GitHub.
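The pause described above can be sketched as follows. This is not the author’s C# script. It is a minimal Python illustration that assumes a hypothetical `describe` callback making one request per measure, and the 30-requests-per-minute limit configured in this post. Measures that already have a description are skipped. The loop sleeps for 60 seconds after each batch of 30 requests.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Measure:
    """Hypothetical stand-in for a Tabular Editor measure."""
    name: str
    expression: str
    description: str = ""


def fill_descriptions(
    measures: List[Measure],
    describe: Callable[[str, str], str],
    requests_per_minute: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Describe every measure that has no description yet.

    After each batch of `requests_per_minute` calls, wait 60 seconds so the
    deployment's requests-per-minute limit is not exceeded. Returns the
    number of requests made.
    """
    sent = 0
    for measure in measures:
        if measure.description.strip():
            continue  # keep descriptions that already exist
        if sent > 0 and sent % requests_per_minute == 0:
            sleep(60.0)  # pause before starting the next batch
        measure.description = describe(measure.name, measure.expression)
        sent += 1
    return sent


# Usage with a fake `describe` and a recording `sleep`, so nothing is sent or slept.
pauses: List[float] = []
measures = [Measure(f"M{i}", "SUM(Sales[Amount])") for i in range(65)]
measures.append(Measure("Existing", "1", "Already documented"))
count = fill_descriptions(measures, lambda n, e: f"Description of {n}", sleep=pauses.append)
print(count, pauses)
```

A fixed one-minute pause is the simplest option. It ignores how long the batch itself took, so it errs toward waiting too long rather than hitting the limit.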

With this, you’ll get measure descriptions generated automatically in your models using Azure OpenAI Service. I hope you find it useful.

#powerbi#power bi#power bi desktop#azure openai service#azure ai#power bi argentina#power bi jujuy#power bi cordoba#power bi tips#power bi training#power bi tutorial#ladataweb#fabric

0 notes

Photo

The future of business is here: How industries are unlocking AI innovation and greater value with the Microsoft Cloud

Over the past six months, I have witnessed the staggering speed and scale of generative AI technology adoption, and how it has opened doors for organizations to imagine new ways to solve business, societal, and sustainability challenges. For many with modernized data estates fortified with the Microsoft Cloud, advanced AI technology is already unlocking innovation... The post The future of business is here: How industries are unlocking AI innovation and greater value with the Microsoft Cloud appeared first on The Official Microsoft Blog.

https://blogs.microsoft.com/blog/2023/07/24/the-future-of-business-is-here-how-industries-are-unlocking-ai-innovation-and-greater-value-with-the-microsoft-cloud/

#Featured#The Official Microsoft Blog#Azure#Azure AI#Azure Machine Learning#Azure OpenAI Service#Dynamics 365#GitHub Copilot#Microsoft 365#Microsoft 365 Copilot#Microsoft AI Cloud Partner Program#Microsoft Cloud#Microsoft Cloud for Manufacturing#Microsoft HoloLens#Microsoft Viva#Power Platform#Power Virtual Agents#Viva Learning#Judson Althoff

0 notes

Text

Unleashing the Power of Azure OpenAI and Service Embedding for unstructured document search

Are you struggling to find information buried deep within unstructured documents? It's time to unleash the power of Azure OpenAI and Service Embedding! In this blog post, we'll show you how to harness the latest advancements in AI and c


#Azure#Azure AI#Azure OpenAI#Azure OpenAI Service#LLM#Microsoft#microsoft azure#Open Source LLM Model#OpenAI

0 notes

Text

AI-050: Develop Generative AI Solutions with Azure OpenAI Service

Azure OpenAI Service provides access to OpenAI's powerful large language models, such as GPT, the model family behind the popular ChatGPT service. These models enable a range of natural language processing (NLP) solutions for understanding, conversing, and generating content. Users can access the service through the REST API, SDKs, and Azure OpenAI Studio. In this course, you'll learn how to provision the Azure OpenAI service, deploy models, and use them in generative AI applications.

0 notes

Text

Looking for the best AI-enabled application development services? Look no further! Ahex Technology excels at providing exceptional artificial intelligence development services. Elevate your online presence with our user-friendly solutions. Reach out for cutting-edge AI-enabled development tailored to your unique needs.

#ahextechnologies#artificial intelligence (AI)#AI Expertise#AI technologies#AI-enabled applications#AI frameworks#developing AI-based applications#AI experts#Microsoft's Azure AI services#Azure AI#OpenAI#AI applications#AI Solutions#build AI-powered applications#Azure AI's Machine Learning#Watson AI#AI models

0 notes

Text

Introducing an easy way to add your data to Azure OpenAI Service for enhanced, personalized applications. Explore the possibilities today!

0 notes

Text

How can ChatGPT integration make your business run more smoothly?

In today's fast-paced business landscape, effective communication is paramount. Companies are constantly seeking innovative solutions to engage customers, improve customer service, and optimize internal operations. With the advancement of AI technology, integrating ChatGPT (built on GPT, a Generative Pre-trained Transformer model) into business processes is proving to be a game-changer. ChatGPT enables businesses to automate and enhance their communication channels, providing personalized interactions with customers and boosting overall efficiency.

Benefits of ChatGPT Integration

1. Your Helpful Chat Buddy: ChatGPT can act as a virtual assistant, answering customer queries in real time. Its ability to understand natural language and context allows for more meaningful and personalized interactions, leading to higher customer satisfaction and retention.

2. Always Ready, Day and Night: Unlike traditional support teams with limited operating hours, ChatGPT can provide round-the-clock assistance, so customers' concerns are addressed promptly regardless of time zone.

3. Smart Shopping Assistant: By integrating ChatGPT into the sales process, businesses can offer tailored product recommendations, answer customer questions, and even help complete purchases, resulting in a seamless user experience and higher conversion rates.

4. Helps Teamwork Inside: ChatGPT can also be used within the organization to streamline internal communication, facilitate smoother collaboration between team members, and automate repetitive tasks.

5. Big Brain for Business: Because it can process large amounts of data, ChatGPT integration can give businesses valuable insights into customer preferences, pain points, and behavior, enabling data-driven decision-making.

Csharptek's Approach to ChatGPT Integration

Csharptek, a leading AI and technology-focused company, is at the forefront of implementing cutting-edge solutions for businesses. With its deep expertise in AI and natural language processing, Csharptek is well-equipped to handle ChatGPT integration with precision and efficiency. We understand that every business has unique requirements. We work closely with each client to tailor ChatGPT integration solutions to its specific needs, ensuring maximum effectiveness and relevance. Integration can be complex, but our skilled team of developers ensures a smooth deployment. We carefully handle the technical aspects, API integrations, and data management to minimize disruption and optimize performance.

Csharptek helps businesses harness the full potential of ChatGPT to drive success and growth. Embrace the power of ChatGPT with Csharptek, and elevate your business communication to new heights. For general enquiries, contact our front desk support team at [email protected] or call +91-9334646668.

0 notes

Quote

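If your company needs machine translation, subscribe to DeepL Pro. If your company needs ChatGPT, adopt Azure OpenAI Service or wait for Microsoft 365 Copilot. Trust in confidentiality is something you buy with money, after signing a contract.

[B! AI] No matter how convenient AI is, I wish the idiot teachers who feed children's education data into GPT to make it build tables would go extinct | Ichiro Yamamoto (山本一郎) | note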

87 notes


Text

Generative AI, innovation, creativity & what the future might hold - CyberTalk

New Post has been published on https://thedigitalinsider.com/generative-ai-innovation-creativity-what-the-future-might-hold-cybertalk/


Stephen M. Walker II is CEO and Co-founder of Klu, an LLM App Platform. Prior to founding Klu, Stephen held product leadership roles at Productboard, Amazon, and Capital One.

Are you excited about empowering organizations to leverage AI for innovative endeavors? So is Stephen M. Walker II, CEO and Co-Founder of the company Klu, whose cutting-edge LLM platform empowers users to customize generative AI systems in accordance with unique organizational needs, resulting in transformative opportunities and potential.

In this interview, Stephen not only discusses his innovative vertical SaaS platform, but also addresses artificial intelligence, generative AI, innovation, creativity and culture more broadly. Want to see where generative AI is headed? Get perspectives that can inform your viewpoint, and help you pave the way for a successful 2024. Stay current. Keep reading.

Please share a bit about the Klu story:

We started Klu after seeing how capable the early versions of OpenAI’s GPT-3 were when it came to common busy-work tasks related to HR and project management. We began building a vertical SaaS product, but needed tools to launch new AI-powered features, experiment with them, track changes, and optimize the functionality as new models became available. Today, Klu is actually our internal tools turned into an app platform for anyone building their own generative features.

What kinds of challenges can Klu help solve for users?

Building an AI-powered feature that connects to an API is pretty easy, but maintaining that over time and understanding what’s working for your users takes months of extra functionality to build out. We make it possible for our users to build their own version of ChatGPT, built on their internal documents or data, in minutes.

What is your vision for the company?

The founding insight that we have is that there’s a lot of busy work that happens in companies and software today. I believe that over the next few years, you will see each company form AI teams, responsible for the internal and external features that automate this busy work away.

I’ll give you a good example for managers: Today, if you’re a senior manager or director, you likely have two layers of employees. During performance management cycles, you have to read feedback for each employee and piece together their strengths and areas for improvement. What if, instead, you received a briefing for each employee with these already synthesized and direct quotes from their peers? Now think about all of the other tasks in business that take several hours and that most people dread. We are building the tools for every company to easily solve this and bring AI into their organization.

Please share a bit about the technology behind the product:

In many ways, Klu is not that different from most other modern digital products. We’re built on cloud providers, use open source frameworks like Next.js for our app, and have a mix of TypeScript and Python services. But with AI, what’s unique is the need to lower latency, manage vector data, and connect to different AI models for different tasks. We built on Supabase, using pgvector to create our own vector storage solution. We support all major LLM providers, but we partnered with Microsoft Azure to build a global network of embedding models (Ada) and generative models (GPT-4), and use Cloudflare edge workers to deliver the fastest experience.
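To illustrate what a pgvector-backed store does at query time, the sketch below ranks stored embeddings by cosine distance, the metric behind pgvector's `<=>` operator. It is a plain-Python stand-in, not Klu's implementation. The table and column names in the docstring and the toy two-dimensional vectors are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity, the quantity pgvector's `<=>` operator returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: Sequence[float],
            rows: List[Tuple[str, Sequence[float]]],
            k: int = 2) -> List[str]:
    """Return the ids of the k rows closest to `query`, roughly what
    SELECT id FROM documents ORDER BY embedding <=> :query LIMIT k
    would return (the `documents` table and `embedding` column are hypothetical)."""
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy 2-dimensional "embeddings"; real embedding models return far longer vectors.
rows = [("a", [1.0, 0.0]), ("b", [0.0, 1.0]), ("c", [1.0, 1.0])]
print(nearest([1.0, 0.1], rows))
```

A database index makes this scale past a linear scan. The ranking rule itself is just this distance.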

What innovative features or approaches have you introduced to improve user experiences/address industry challenges?

One of the biggest challenges in building AI apps is managing changes to your LLM prompts over time. The smallest changes might break for some users or introduce new and problematic edge cases. We’ve created a system similar to Git in order to track version changes, and we use proprietary AI models to review the changes and alert our customers if they’re making breaking changes. This concept isn’t novel for traditional developers, but I believe we’re the first to bring these concepts to AI engineers.
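Klu does not describe its system in detail, so the following is only a hypothetical sketch of the Git-like idea. Each prompt version gets a content-hash ID, as Git does for objects, and a simple rule flags changes to the template variables a prompt expects. Klu says it uses AI models to review changes; this variable-diff check is a much simpler, swapped-in heuristic. The `{name}` placeholder syntax is also an assumption.

```python
import hashlib
import re
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Assumed placeholder syntax for prompt templates, e.g. "Summarize {document}".
PLACEHOLDER = re.compile(r"\{(\w+)\}")


def template_vars(text: str) -> Set[str]:
    """Names of the placeholders a prompt template expects."""
    return set(PLACEHOLDER.findall(text))


@dataclass
class PromptHistory:
    """Hypothetical Git-like history of one prompt."""
    versions: List[str] = field(default_factory=list)    # version ids, oldest first
    texts: Dict[str, str] = field(default_factory=dict)  # version id -> prompt text

    def commit(self, text: str) -> Tuple[str, List[str]]:
        """Record a new version and return (version_id, warnings)."""
        # Content-addressed id, like a shortened Git hash.
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        warnings: List[str] = []
        if self.versions:
            previous = template_vars(self.texts[self.versions[-1]])
            current = template_vars(text)
            if previous - current:
                warnings.append(f"removed variables: {sorted(previous - current)}")
            if current - previous:
                warnings.append(f"new required variables: {sorted(current - previous)}")
        self.versions.append(version_id)
        self.texts[version_id] = text
        return version_id, warnings


history = PromptHistory()
v1, w1 = history.commit("Summarize {document} for {audience}.")
v2, w2 = history.commit("Summarize {document}.")
print(v1, v2, w2)
```

Dropping a variable that callers still pass, or requiring a new one they don't supply, is the kind of change that silently breaks some users. That is why it is flagged here.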

How does Klu strive to keep LLMs secure?

Cyber security is paramount at Klu. From day one, we created our policies and system monitoring for SOC2 auditors. It’s crucial for us to be a trusted partner for our customers, but it’s also top of mind for many enterprise customers. We also have a data privacy agreement with Azure, which allows us to offer GDPR-compliant versions of the OpenAI models to our customers. And finally, we offer customers the ability to redact PII from prompts so that this data is never sent to third-party models.
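Redacting PII before a prompt leaves your infrastructure can be as simple as pattern substitution. The sketch below is a deliberately crude, regex-based example that covers email addresses and phone-like digit runs. It is not how Klu implements redaction. The patterns will miss some PII and can over-match, for example on long numeric IDs or dates.

```python
import re

# Crude, illustrative patterns; real PII detection needs far more than this.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(prompt: str) -> str:
    """Replace matches of each pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS:
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 today."))
```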

Internally we have pentest hackathons to understand where things break and to proactively understand potential threats. We use classic tools like Metasploit and Nmap, but the most interesting results have been finding ways to mitigate unintentional denial of service attacks. We proactively test what happens when we hit endpoints with hundreds of parallel requests per second.
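The parallel-request test can be illustrated with `asyncio`. In this sketch the endpoint is a hypothetical in-process stub. It caps concurrent work and answers HTTP 429 beyond that cap instead of falling over; against a real service you would swap the stub for actual HTTP calls. The numbers (300 requests, a cap of 50, 10 ms of simulated work) are arbitrary.

```python
import asyncio
from collections import Counter


class StubEndpoint:
    """Hypothetical in-process endpoint that sheds load above a concurrency cap."""

    def __init__(self, max_in_flight: int) -> None:
        self.max_in_flight = max_in_flight
        self.in_flight = 0

    async def handle(self) -> int:
        if self.in_flight >= self.max_in_flight:
            return 429  # "Too Many Requests" instead of falling over
        self.in_flight += 1
        try:
            await asyncio.sleep(0.01)  # simulated work
            return 200
        finally:
            self.in_flight -= 1


async def burst(endpoint: StubEndpoint, n: int) -> Counter:
    """Fire n requests concurrently and tally the status codes."""
    statuses = await asyncio.gather(*(endpoint.handle() for _ in range(n)))
    return Counter(statuses)


counts = asyncio.run(burst(StubEndpoint(max_in_flight=50), 300))
print(counts)
```

All 300 calls start before any simulated work finishes, so in this stub 50 succeed and the rest are rejected. Against a real service, the useful signal is whether it degrades to fast, explicit rejections rather than timeouts or crashes.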

What are your perspectives on the future of LLMs (predictions for 2024)?

2024 will be the year for multi-modal frontier models. A frontier model is simply a foundation model that leads the state of the art in what is possible. OpenAI will roll out GPT-4 Vision API access later this year, and we anticipate usage exploding next year, along with competitive offerings from other leading AI labs. If you want to preview what will be possible, ChatGPT Plus and Enterprise customers have access to this feature in the app today.

Early this year, I heard leaders worry about hallucinations, privacy, and cost. At Klu and across the LLM industry, we have found solutions for these, and we continue to see LLMs become cheaper and more capable each year. I always tell our customers not to let these concerns stop their innovation today. Start small, and find the value you can bring to your customers. Find out whether you have hallucination issues; if you do, work on prompt engineering, retrieval, and fine-tuning with your data to reduce them. You can test these new innovations with engaged customers who are OK with beta features and will greatly benefit from what you are offering them. Once you have found market fit, you have many options for improving privacy and reducing costs at scale. But I would not worry about that in the beginning; it’s premature optimization.

LLMs introduce a new capability into the product portfolio, but it’s also an additional system to manage, monitor, and secure. Unlike other software in your portfolio, LLMs are not deterministic, and this is a mindset shift for everyone. The most important thing for CSOs is to have a strategy for enabling their organization’s innovation. Just like any other software system, we are starting to see the equivalent of buffer exploits, and expect that these systems will need to be monitored and secured if connected to data that is more important than help documentation.

Your thoughts on LLMs, AI and creativity?

Personally, I’ve had so much fun with GenAI, including image, video, and audio models. I think the best way to think about this is that the models are better than the average person. For me, I’m below average at drawing or creating animations, but I’m above average when it comes to writing. This means I can have creative ideas for an image, the model will bring these to life in seconds, and I am very impressed. But for writing, I’m often frustrated with the boring ideas, although it helps me find blind spots in my overall narrative. The reason for this is that LLMs are just bundles of math finding the most probable answer to the prompt. Human creativity, from the arts to business to science, typically comes from novel combinations of ideas, something that is very difficult for LLMs to do today. I believe the employees who adopt AI will be more productive and creative: the LLM compensates for their potential weaknesses and works like a sparring partner when brainstorming.
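To make "finding the most probable answer" concrete: at each step, a language model scores every token in its vocabulary. A softmax turns those scores (logits) into probabilities, and greedy decoding picks the most likely token. The toy vocabulary and logits below are made up. Deployed models frequently sample with a temperature instead of always taking the top token.

```python
import math
from typing import Dict


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {token: math.exp(score - top) for token, score in logits.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def greedy_next_token(logits: Dict[str, float]) -> str:
    """Greedy decoding: pick the single most probable next token."""
    probs = softmax(logits)
    return max(probs, key=probs.get)


# Made-up scores for the next token after "The cat sat on ..."
logits = {"the": 2.0, "a": 1.0, "zebra": -1.0}
print(softmax(logits), greedy_next_token(logits))
```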

You and Sam Altman agree on the idea of rethinking the global economy. Say more?

Generative AI greatly changes worker productivity, including the full automation of many tasks that you would typically hire more people to handle as a business scales. The easiest way to think about this is to look at what tasks or jobs a company currently outsources to agencies or vendors, especially ones in developing nations where skill requirements and costs are lower. Over this coming decade you will see work that used to be outsourced to global labor markets move to AI and move under the supervision of employees at an organization’s HQ.

As the models improve, workers will become more productive, meaning that businesses will need fewer employees performing the same tasks. Solo entrepreneurs and small businesses have the most to gain from these technologies, as they will enable them to stay smaller and leaner for longer, while still growing revenue. For large, white-collar organizations, the idea of measuring management impact by the number of employees under a manager’s span of control will quickly become outdated.

While I remain optimistic about these changes and the new opportunities that generative AI will unlock, it does represent a large change to the global economy. Klu met with UK officials last week to discuss AI Safety and I believe the countries investing in education, immigration, and infrastructure policy today will be best suited to contend with these coming changes. This won’t happen overnight, but if we face these changes head on, we can help transition the economy smoothly.

Is there anything else that you would like to share with the CyberTalk.org audience?

Expect to see more security news regarding LLMs. These systems are like any other software, and I anticipate both poorly built software and bad actors who want to exploit them. The two exploits that I track closely are very similar to buffer overflows. One enables an attacker to bypass and hijack the prompt sent to an LLM; the other bypasses the model’s alignment tuning, which prevents it from answering questions like “how can I build a bomb?” We’ve also seen projects like GPT4All leak API keys, giving people free access to paid LLM APIs. These leaks typically come from keys being stored in the front end or in a local cache, a security risk completely unrelated to AI or LLMs.
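On the last point, the usual mitigation for leaked keys is to keep them server-side. Here is a minimal Python sketch, assuming a hypothetical environment variable `AZURE_OPENAI_API_KEY` and a hypothetical backend route `/api/chat` that proxies model requests. The server reads the key at request time, and the configuration sent to the browser names only the backend route.

```python
import os
from typing import Dict


def get_api_key(env_var: str = "AZURE_OPENAI_API_KEY") -> str:
    """Read the provider key on the server at request time."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set on the server")
    return key


def client_config(chat_route: str = "/api/chat") -> Dict[str, str]:
    """Settings that are safe to ship to the browser: only the backend route
    that proxies model calls, never the key itself."""
    return {"chatEndpoint": chat_route}
```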

#2024#ai#AI-powered#Amazon#animations#API#APIs#app#apps#Art#artificial#Artificial Intelligence#Arts#audio#automation#azure#Building#Business#cache#CEO#chatGPT#Cloud#cloud providers#cloudflare#Companies#Creative Ideas#creativity#cutting#cyber#cyber criminals

2 notes


Text

OpenAI x Microsoft

I was listening to the latest episode of MKBHD’s podcast, and they touched on OpenAI. It was striking that, after all these months, OpenAI still keeps the hype going. Even more striking is what the company does with that hype: it keeps growing and keeps building on what it offers humanity.

In my opinion, adding OpenAI services to Microsoft applications is one of the best things that will happen this year.

Let’s dig into this a bit more...

Large language models are quickly becoming an essential platform for people to innovate, apply AI to solve big problems, and imagine what’s possible. Today, we are excited to announce the general availability of Azure OpenAI Service as part of Microsoft’s continued commitment to democratizing AI, and ongoing partnership with OpenAI.

With Azure OpenAI Service now generally available, more businesses can apply for access to the most advanced AI models in the world—including GPT-3.5, Codex, and DALL·E 2—backed by the trusted enterprise-grade capabilities and AI-optimized infrastructure of Microsoft Azure, to create cutting-edge applications. Customers will also be able to access ChatGPT—a fine-tuned version of GPT-3.5 that has been trained and runs inference on Azure AI infrastructure—through Azure OpenAI Service soon.

According to Microsoft:

A responsible approach to AI

As an industry leader, we recognize that any innovation in AI must be done responsibly. This becomes even more important with powerful, new technologies like generative models. We have taken an iterative approach to large models, working closely with our partner OpenAI and our customers to carefully assess use cases, learn, and address potential risks. Additionally, we’ve implemented our own guardrails for Azure OpenAI Service that align with our Responsible AI principles. As part of our Limited Access Framework, developers are required to apply for access, describing their intended use case or application before they are given access to the service. Content filters uniquely designed to catch abusive, hateful, and offensive content constantly monitor the input provided to the service as well as the generated content. In the event of a confirmed policy violation, we may ask the developer to take immediate action to prevent further abuse.

We are confident in the quality of the AI models we are using and offering customers today, and we strongly believe they will empower businesses and people to innovate in entirely new and exciting ways.

The pace of innovation in the AI community is moving at lightning speed. We’re tremendously excited to be at the forefront of these advancements with our customers, and look forward to helping more people benefit from them in 2023 and beyond.

And that’s what I wanted to share with you all. - Edwin K Jayesh

#technology#tech#tech news#New Tech#tech updates#technology updates#smartphone#smart phone#technews#news#latest#latest news#microsoft#microsoft windows#windows#windows 11#windows 12#open ai#ai#artificially generated#artificial intelligence#chat gpt#dall e#dall e 2

3 notes
