# Red Hat Advanced Cluster Security for Kubernetes


Red Hat recently announced the general availability of Red Hat Advanced Cluster Management for Kubernetes (RHACM) v2.2. The RHACM tool provides a central management console from which you can manage multiple Kubernetes-based clusters across data centers, public clouds, and private clouds. You can easily use the multicluster hub to create Red Hat OpenShift Container Platform clusters on selected providers, or import existing Kubernetes-based clusters.

With its management capabilities for cluster creation and the application lifecycle, RHACM makes it easy to take control of your application deployments and to provide security and compliance for all of them across data centers and hybrid cloud environments.

With Red Hat Advanced Cluster Management for Kubernetes, clusters and applications are all visible and managed from a single console, with built-in security policies. You can run your operations from anywhere that Red Hat OpenShift runs and manage any Kubernetes cluster in your fleet.

The new release includes the Open Policy Agent (OPA) operator for tighter integration, a new Argo CD integration and more, to help you manage and automate your Kubernetes clusters at scale. Below are some of the key features in the v2.2 release:

Import and manage OpenShift clusters such as Azure Red Hat OpenShift, OpenShift Dedicated, OpenShift on OpenStack and OpenShift on IBM Z.

Customized metrics and dashboards: Customization of Grafana dashboards based on metrics you define, along with the predefined metrics, to create personalized views of what is important to you.

Contribute to and ship Open Policy Agent (OPA) as part of ACM: Support for OPA policies by distributing the OPA operator to the fleet.

Compliance Operator support: Run OpenSCAP scans (via the Compliance Operator) against the fleet, and surface the compliance results in ACM.

Argo CD integration: Utilize the fleet information from ACM and provide it to Argo CD, ensuring your applications are compliant and secure.

Install Red Hat Advanced Cluster Management on OpenShift 4.x

In the next steps we walk you through the process of installing Red Hat Advanced Cluster Management on OpenShift 4.x. You should have a working OpenShift 4.x cluster before you proceed with the installation steps.

Step 1: Create rhacm project

Let’s start by creating a new project for Red Hat Advanced Cluster Management deployment.

From CLI:

oc new-project rhacm

To create the project from the web console instead, go to Home > Projects > Create Project.

Confirm that the current working project is the one you just created.

Step 2: Install Red Hat Advanced Cluster Management Operator

Log in to the OpenShift web console, navigate to Operators > OperatorHub and search for “Advanced Cluster Management”.

Click the Install button to begin installation of the operator.

Use the operator's recommended namespace or the namespace we created in the first step.

Choose the “Update Channel” and “Approval Strategy”, then click the “Subscribe” button.

The Operator installation status can be checked under “Installed Operators” section.

Here is a screenshot of successful installation.
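If you prefer the CLI to the OperatorHub UI, the same operator installation can be expressed as an OperatorGroup plus a Subscription. The Python sketch below only generates that manifest. The channel `release-2.2`, the package name `advanced-cluster-management` and the catalog source `redhat-operators` are assumptions based on our reading of the RHACM 2.2 documentation. The resource names `rhacm-operator-group` and `acm-operator-subscription` are hypothetical. Verify all of them against your cluster before applying.

```python
import json

# Assumed values: "rhacm" matches the project from Step 1; the channel and
# package name should be checked against the RHACM docs for your version.
NAMESPACE = "rhacm"
CHANNEL = "release-2.2"


def operator_install_manifest(namespace: str, channel: str) -> dict:
    """Build an OperatorGroup plus Subscription for the RHACM operator as a v1 List."""
    operator_group = {
        "apiVersion": "operators.coreos.com/v1",
        "kind": "OperatorGroup",
        "metadata": {"name": "rhacm-operator-group", "namespace": namespace},
        # Restrict the operator to the namespace it is installed in.
        "spec": {"targetNamespaces": [namespace]},
    }
    subscription = {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "Subscription",
        "metadata": {"name": "acm-operator-subscription", "namespace": namespace},
        "spec": {
            "sourceNamespace": "openshift-marketplace",
            "source": "redhat-operators",
            "channel": channel,
            # "Automatic" mirrors picking the automatic Approval Strategy in the UI.
            "installPlanApproval": "Automatic",
            "name": "advanced-cluster-management",
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [operator_group, subscription]}


if __name__ == "__main__":
    # oc accepts JSON as well as YAML, so this output can be piped to `oc apply -f -`.
    print(json.dumps(operator_install_manifest(NAMESPACE, CHANNEL), indent=2))
```

Generating the manifest from a script rather than hand-editing YAML makes it easy to reuse the same namespace and channel values across several hubs.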

Step 3: Create the MultiClusterHub custom resource

In the OpenShift Container Platform console navigation, select Installed Operators > Advanced Cluster Management for Kubernetes.

Select the MultiClusterHub tab.

Select Create MultiClusterHub, then update the default values in the YAML file according to your needs.

Wait for the installation to complete.

Upon completion, the state should change to “Running”.
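The same custom resource can also be created and watched from the CLI. This sketch assumes the `rhacm` project from Step 1. It builds a minimal MultiClusterHub manifest with an empty `spec`, which keeps the operator defaults. It also reads `status.phase` from the JSON returned by `oc get multiclusterhub -o json`, so a script can poll until it reports `Running`. The helper names are our own, not part of any Red Hat tooling.

```python
import json


def multiclusterhub_manifest(namespace: str = "rhacm") -> dict:
    """Minimal MultiClusterHub resource; an empty spec keeps the operator defaults."""
    return {
        "apiVersion": "operator.open-cluster-management.io/v1",
        "kind": "MultiClusterHub",
        "metadata": {"name": "multiclusterhub", "namespace": namespace},
        "spec": {},
    }


def hub_phase(oc_get_json: str) -> str:
    """Return status.phase from `oc get multiclusterhub -o json` output.

    The command returns a List, so we read the first item and fall back to
    "Unknown" while no hub exists or its status has not been populated yet.
    """
    items = json.loads(oc_get_json).get("items", [])
    if not items:
        return "Unknown"
    return items[0].get("status", {}).get("phase", "Unknown")


if __name__ == "__main__":
    # Pipe to `oc apply -f -` to create the hub from the CLI.
    print(json.dumps(multiclusterhub_manifest(), indent=2))
```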

Step 4: Access Advanced Cluster Management for Kubernetes Console

Check the route for the Advanced Cluster Management for Kubernetes console under “Networking” > “Routes”.

Open the URL of your hub in a new tab and log in with your OpenShift user credentials. You should be presented with a dashboard similar to the one below.
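To find the URL from the CLI instead, you can fetch the route as JSON, for example with `oc get route multicloud-console -n rhacm -o json`. The route name and namespace there are assumptions, so check the Routes list if yours differ. You can then turn the JSON into a URL with a small helper like this hypothetical one:

```python
import json


def console_url(route_json: str) -> str:
    """Build a URL from `oc get route <name> -o json` output.

    Uses https:// when the route defines a TLS section, http:// otherwise.
    """
    route = json.loads(route_json)
    host = route["spec"]["host"]
    scheme = "https" if route["spec"].get("tls") else "http"
    return f"{scheme}://{host}"
```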

To access the local cluster use “Go to Clusters” link:

Important: The local-cluster namespace is used for the imported self-managed hub cluster. You must not have a local-cluster namespace on your cluster prior to installing. After the local-cluster namespace is created for the hub cluster, anyone who has access to the local-cluster namespace is automatically granted cluster administrator access. For security reasons, do not give anyone access to the local-cluster namespace who does not already have cluster-administrator access.
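Because of this, it is worth checking for an existing `local-cluster` namespace before you install. Here is a minimal sketch that assumes you feed it the output of `oc get namespaces -o json`. The function name is ours.

```python
import json

RESERVED_NAMESPACE = "local-cluster"


def local_cluster_conflict(namespaces_json: str) -> bool:
    """Return True if `oc get namespaces -o json` output already contains local-cluster."""
    items = json.loads(namespaces_json).get("items", [])
    names = {ns.get("metadata", {}).get("name") for ns in items}
    return RESERVED_NAMESPACE in names
```

If this returns `True`, resolve the conflict before installing rather than letting the hub reuse a namespace whose access you have not audited.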

You can click on the listed cluster to view more details.

We have successfully installed and configured Red Hat Advanced Cluster Management on OpenShift 4.x. In our next guides, we'll discuss managing clusters, applications, security and troubleshooting, all of which will come in handy during cluster lifecycle management.

Red Hat Delivers on Open Source Security Promise for K8s

At the KubeCon + CloudNativeCon Europe 2022 conference, Red Hat this week made good on a promise to make Red Hat Advanced Cluster Security for Kubernetes available as an open source project after acquiring StackRox last year. Michael Foster, a principal product marketing manager at Red Hat, says rather than

The post Red Hat Delivers on Open Source Security Promise for K8s appeared first on Container Journal.

source https://containerjournal.com/features/red-hat-delivers-on-open-source-security-promise-for-k8s/


Red Hat open sources StackRox – TechCrunch


Last January, Red Hat announced that it was acquiring Kubernetes security startup StackRox, a company that had raised over $65 million since it was founded in 2014. With StackRox, the company acquired a sophisticated security solution for cloud-native applications, which it then rebranded under the slightly more prosaic name of “Red Hat Advanced Cluster Security (ACS) for Kubernetes.” Now, the…


Red Hat open sources StackRox, the Kubernetes security platform it acquired last year


Last January, Red Hat announced that it was acquiring Kubernetes security startup StackRox, a company that had raised over $65 million since it was founded in 2014. With StackRox, the company acquired a sophisticated security solution for cloud-native applications, which it then rebranded under the slightly more prosaic name of “Red Hat Advanced Cluster Security (ACS) for Kubernetes.” Now, the…


Protect Containerized Applications with Confidence with a Solution from MFEC, F5 and Red Hat

MFEC Public Company Limited and F5 Networks, together with Red Hat (Thailand), invite customers to join an online seminar on the topic: Protect Containerized Applications with Confidence, from the Start through Deployment, with a Solution from MFEC, F5 and Red Hat.

As the world of applications moves to containerized technology, many organizations need to understand how to protect their applications as securely as possible. In this seminar, participants will learn about security aspects from building the image through security in the Continuous Integration/Continuous Deployment process. They will also learn how, once applications have been deployed to serve users, a Web App Firewall protects against attacks that target the application directly.

Speaker:

Saran Aroongarn - Solution Architect, Red Hat

Damrongsak Reetanon - Chief Cyber Security Officer, MFEC

Event Date:

31 March 2022 Time: 2.00 – 3.30 PM

Agenda:

2.00 - 2.40 PM Securing Workload in Kubernetes with NGINX App Protect by Damrongsak Reetanon - Chief Cyber Security Officer, MFEC

2.40 - 3.20 PM Protecting your applications across build, deploy, and runtime with Red Hat Advanced Cluster Security for Kubernetes (ACS) by Saran Aroongarn - Solution Architect, Red Hat

3.20 – 3.30 PM Q&A

Registration form: https://bit.ly/3t6VjJs

Free! Registered attendees (Early Bird) get a chance to win one of 5 UV Sterilizers (UV-light disinfection devices) worth 1,000 baht each, along with many other prizes.

Topics:

MFEC

from https://bit.ly/3LuTAnS


Open Source Definitely Changed Storage Industry

Through Linux and other technologies and products, open source has impacted all areas.

By Philippe Nicolas | February 16, 2021 at 2:23 pm

It’s not breaking news, but the impact of open source on the storage industry was, and still is, huge, and it won’t shrink; just the opposite.

The reason is simple: the developer community is the largest one, and adoption is very wide. Some people see this as a threat, while others consider the model a democratic effort and believe in a different approach. Let’s dig in a bit.

First, outside of storage, here is a list of some open source software (OSS) projects that we use every day, directly or indirectly: Linux and FreeBSD of course, Kubernetes, OpenStack, Git, KVM, Python, PHP, HTTP server, Hadoop, Spark, Lucene, Elasticsearch (dual license), MySQL, PostgreSQL, SQLite, Cassandra, Redis, MongoDB (under SSPL), TensorFlow, Zookeeper, and famous tools and products like Thunderbird, OpenOffice, LibreOffice or SugarCRM.

The list is of course very long, diverse and ubiquitous in our world. Some of these projects triggered a wave of company creation, as founders anticipated market creation and potentially market domination. Among these companies are Cloudera and Hortonworks, which both went public promoting Hadoop and merged in 2019. Others are MariaDB, a fork of MySQL, with MySQL itself of course later acquired by Oracle, and DataStax for Cassandra, but it turns out that this is not always a safe destiny …

Coldago Research estimated that the entire open source industry will represent $27+ billion in 2021 and will pass the $35 billion mark in 2024.
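Taking the two Coldago figures at face value, the implied compound annual growth rate (CAGR) over the three years is roughly 9% per year. The result is only approximate, because the estimates are stated as "$27+ billion" and "pass $35 billion":

```latex
\text{CAGR} = \left(\frac{V_{2024}}{V_{2021}}\right)^{\frac{1}{2024-2021}} - 1
\approx \left(\frac{35}{27}\right)^{1/3} - 1 \approx 1.090 - 1 = 0.090
```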

Historically, one of the roots came from the Unix-to-Linux transition. Unix was widely used and adopted, but it came at a certain price, and the cost of the source code was significant, even prohibitive. Projects like Minix and Linux, developed and studied at universities and research centers, generated tons of users and adopters, many of them contributors. Is it similar to a religion? Probably not, but it is certainly a philosophy. Red Hat, founded in 1993, demonstrated that an open source business could be big and built for the long run. The company went public in 1999 and reached an annual run rate of around $3 billion. The firm was acquired by IBM in 2019 for $34 billion; amazing, right? Canonical, SUSE, Debian and a few others also show interesting development paths, as companies or as communities.

Before that shift, software development focused essentially on applications, as system software meant costs, and high ones. Also, a startup didn’t buy software with the VC money it raised, as that could be seen as suicide outside of its mission. All of this contributed to the open source wave in all directions.

On the storage side, Linux invited students, research centers, communities and start-ups to develop system software, especially block storage and file systems, as well as other approaches like object storage software. We all know many storage software start-ups that leveraged Linux to offer such new storage models. We didn’t see much standalone block storage, but rather open source operating systems with block (SCSI-based) storage included. This is a bit different for file and object storage, where there are plenty of offerings.

On the file storage side, the list is long, covering disk file systems and distributed ones, the latter with multiple sub-segments as well.

Below is a pretty long list of OSS in the storage world.

Block Storage: Linux-LIO, Linux SCST & TGT, Open-iSCSI, Ceph RBD, OpenZFS, NexentaStor (Community Ed.), Openfiler, Chelsio iSCSI, Open vStorage, CoprHD, OpenStack Cinder

File Storage

Disk File Systems: XFS, OpenZFS, Reiser4 (ReiserFS), ext2/3/4

Distributed File Systems (including cluster, NAS and parallel to simplify the list): Lustre, BeeGFS, CephFS, LizardFS, MooseFS, RozoFS, XtreemFS, CohortFS, OrangeFS (PVFS2), Ganesha, Samba, Openfiler, HDFS, Quantcast, Sheepdog, GlusterFS, JuiceFS, ScoutFS, Red Hat GFS2, GekkoFS, OpenStack Manila

Object Storage: Ceph RADOS, MinIO, Seagate CORTX, OpenStack Swift, Intel DAOS

Other data management and storage-related projects: TAR, rsync, OwnCloud, FileZilla, iRODS, Amanda, Bacula, Duplicati, KubeDR, Velero, Pydio, Grau Data OpenArchive

The impact of open source is obvious, both on commercial software and on other emerging or small OSS players. By impact, we mean disrupting established market positions with a radically new approach. It is also illustrated by commercial software embedding open source components, or by famous, widely adopted open source products that prevent some initiatives from taking off. Among all these scenarios, we can list XFS, OpenZFS, Ceph and MinIO, which shook up commercial models and were even chosen by vendors that don’t need to develop the technology themselves or sign an OEM deal with potential partners. Again, as we have said many times in the past, the Build, Buy or Partner model is also a reality in that world. To extend these examples, it is recommended to deploy Ceph with the XFS disk file system for OSDs, as with OpenStack Swift.

As these last few examples show, open source projects obviously leverage other open source ones, as does commercial software, but we have never seen an open source project leveraging a commercial one. That would be a bit antinomic. Instead, such a need acts as a trigger to start developing an open source project offering the same functions.

OpenZFS is also used by Delphix, Oracle and in TrueNAS. MinIO was chosen by iXsystems and embedded in TrueNAS, and also by Datera, Humio, Robin.IO, McKesson, MapR (now HPE), Nutanix, Pavilion Data, Portworx (now Pure Storage), Qumulo, Splunk, Cisco, VMware or Ugloo, to name a few. SoftIron leverages Ceph and builds optimized, tailored systems around it. The list is long … and we all have several examples in mind.

Open source players promote their solutions essentially around community and enterprise editions. The differences are the support fee, the patch policies, feature differences and of course the final subscription fees. As we know, innovations often come from small, agile players that have real difficulty approaching large customers and face doubts about their longevity. Choosing the OSS path is a way to be embedded and selected by larger providers or directly by users, but it raises some key questions about business models.

Another dimension of the impact on commercial software is related to the behavior of universities and research centers. They prefer to increase the hardware budget and reduce the software one by using open source. These entities have many skilled people, and potentially the time, to develop and extend open source projects and contribute back to communities. They see this way of working as a positive, virtuous cycle, with everyone feeding the others. They thus reach new levels of performance, capacity and computing power, which is ultimately an understandable decision under budget constraints and pressure. Ceph was started as part of Sage Weil’s thesis at UCSC, sponsored by the Advanced Simulation and Computing Program (ASC), including Sandia National Laboratories (SNL), Lawrence Livermore National Laboratory (LLNL) and Los Alamos National Laboratory (LANL). There are many such cases. A famous example is Lustre. Others are MarFS from LANL, GekkoFS from the University of Mainz, Germany, associated with the Barcelona Supercomputing Center, and BeeGFS, formerly FhGFS, developed by the Fraunhofer Center for High Performance Computing, also in Germany. Lustre was initiated by Peter Braam in 1999 at Carnegie Mellon University. Projects popped up everywhere.

Collaboration software, as an extension of storage, shows similar behavior. OwnCloud, an open source file sharing and collaboration software, is used and chosen by many universities and large education sites.

At the same time, choosing open source components or products out of a wish for independence doesn’t provide any guarantee of longevity. Remember examples such as HDFS, GlusterFS, OpenIO, NexentaStor or Redcurrant. Some of them were acquired or disappeared, creating issues for users but certainly opportunities for other players watching that space carefully. Some initiatives exist to secure the software if doubts about its future arise.

The SDS wave, a bit like LAMP (Linux, Apache web server, MySQL and PHP), had a serious impact on commercial software, as several open source players and solutions jumped in, generating significant price erosion. This trend, good for users, also continues to reduce differentiators among players, and it has become tougher to notice differences.

In addition, Internet giants played a major role in open source development. They have talent, large teams, time and money, and can spend time developing software that perfectly fits their needs. They also steer communities, planting seeds in many directions. The other reason is the difficulty of finding commercial software that can scale to their needs. In other words, commercial software can scale to the needs of a large corporation but reaches some limits for a large Internet player. Historically, these organizations truly redefined scalability objectives with new designs and approaches not found in, or not possible with, commercial software. We all have examples in mind; in storage, Google File System and Haystack at Facebook are classic ones. There are also large vendors whose internal projects suddenly appear, donated as open source to boost community effort and try to trigger market traction and partnerships; this is the case of Intel DAOS.

Open source is immediately associated with various license models, and this is the complex aspect of source code, as it continues to create difficulties for some people and entities that affect projects’ futures. The disputes about ZFS and even Java were well covered in the press at the time. We invite readers to check their preferred page on the subject, or at least visit the Wikipedia one or this one with the full table on the appendix page.

Immediately associated with licenses are the communities, organizations and foundations. We can mention only some of them here, as the list is pretty long: Apache Software Foundation, Cloud Native Computing Foundation, Eclipse Foundation, Free Software Foundation, FreeBSD Foundation, Mozilla Foundation or Linux Foundation … and again, Wikipedia is a good place to start.

Open Source Definitely Changed Storage Industry - StorageNewsletter


AWS Responds To Anthos And Azure Arc With Amazon EKS Anywhere

New Post has been published on https://perfectirishgifts.com/aws-responds-to-anthos-and-azure-arc-with-amazon-eks-anywhere/


Amazon made strategic announcements related to container services at the re:Invent 2020 virtual event. Here is an attempt to deconstruct the container strategy of AWS.

Containers

Amazon EKS Distribution – An Alternative to Commercial Kubernetes Distributions

The cloud native ecosystem is crowded and even fragmented with various distributions of Kubernetes. Customers can choose from upstream Kubernetes distribution available for free or choose a commercial offering such as Charmed Kubernetes from Canonical, Mirantis Container Cloud, Rancher Kubernetes Engine, Red Hat OpenShift and VMware Tanzu Kubernetes Grid.

Amazon has decided to jump on the Kubernetes distribution bandwagon with Amazon EKS Distribution (EKS-D), which powers the managed EKS in the cloud. Customers can rely on the same versions of Kubernetes and its dependencies deployed by Amazon EKS, which includes the latest upstream updates and comprehensive security patching support.

Amazon EKS-D comes with source code, open source tooling, binaries and container images, and the required configuration via GitHub and S3 storage locations. With EKS-D, Amazon promises extended support for Kubernetes versions after community support expires, providing updated builds of previous versions, including the latest security patches.

Why Did Amazon Launch EKS-D?

Customers running OpenShift or VMware Tanzu are more likely to run the same flavor of Kubernetes in the cloud. Most of the commercial Kubernetes distributions come with services and support for managing hybrid clusters. In this case, ISVs like Red Hat and VMware will leverage Amazon EC2 to run their managed Kubernetes offering. They decouple the underlying infrastructure (AWS) from the workloads, making it possible to port applications to any cloud.

Amazon’s ultimate goal is to drive the adoption of its cloud platform. With EKS-D, AWS has built an open source bridge to its managed Kubernetes platform, EKS.

Backed by Amazon’s experience and the promise to maintain the distribution even after the community maintenance window expires, it’s a compelling option for customers. An enterprise running EKS-D will naturally use Amazon EKS for its hybrid workloads. This reduces the friction between using a different Kubernetes distribution for on-prem and cloud-based environments. Since it’s free, customers are more likely to evaluate it before considering OpenShift or Tanzu.

Additionally, Amazon can now claim that it made significant investments in open source by committing to maintain EKS-D.

The design of EKS-D, which is based on upstream Kubernetes, makes it easy to modify the components such as the storage, network, security, and observability. The cloud native ecosystem will eventually build reference architectures for using EKS-D with their tools and components. This makes EKS-D better than any other distribution available in the market.

In summary, EKS-D is an investment from Amazon to reduce the friction involved in adopting AWS when using a commercial Kubernetes distribution.

EKS Anywhere – Amazon’s Response to Anthos and Azure Arc

According to AWS, Amazon EKS Anywhere is a new deployment option for Amazon EKS that enables customers to easily create and operate Kubernetes clusters on-premises, including on their own virtual machines (VMs) and bare metal servers.

EKS Anywhere provides an installable software package for building and managing Kubernetes clusters on-premises and automation tooling for cluster lifecycle support.

Technically, EKS-A can be installed on any infrastructure with available compute, storage, and network resources. This includes on-premises infrastructure and cloud IaaS such as Google Compute Engine and Azure VMs.

Simply put, Amazon EKS Anywhere is an installer for EKS-D with AWS-specific parameters and options. The installer comes with defaults that are optimized for AWS. It works best on the Amazon Linux 2 OS and is tightly integrated with App Mesh for service mesh, CloudWatch for observability and S3 for cluster backup. When installed in a VMware environment, it even provides infrastructure management through integration with the vSphere APIs and vCenter. EKS-A relies on GitOps to maintain the desired state of the cluster and its workloads. Customers can subscribe to an Amazon SNS channel to automatically get updates on patches and releases.

Amazon calls EKS-A an opinionated Kubernetes environment. The keyword here is opinionated, which translates to as proprietary as it can get. From container runtime to the CNI plug-in to cluster monitoring, it has a strong dependence on AWS building blocks.

There is nothing open source about EKS-A. It’s an opaque installer that rolls out an EKS-like cluster on a set of compute nodes. If you want to customize the cluster components, switch to EKS-D, and assemble your own stack.

EKS-A supports three profiles – fully connected, semi-connected and fully disconnected. Unlike ECS Anywhere, EKS-A clusters can be deployed in offline, air-gapped environments. Fully connected and semi-connected EKS-A clusters talk to AWS cloud but have no strict dependency on the cloud.

EKS-A is Amazon’s own version of Anthos. Just like Anthos, it’s tightly integrated with vSphere, can be installed on bare metal or any other cloud. But the key difference is that there is no meta control plane to manage all the EKS-A clusters from a single pane of glass. All other capabilities such as Anthos Service Mesh (ASM) and Anthos Config Management (ACM) will be extended to EKS-A through App Mesh and Flux.

Unlike Anthos, EKS-A doesn’t have the concept of admin clusters and user clusters. What it means is that customers cannot use EKS-A for the centralized lifecycle management of clusters. Every EKS-A cluster is independent of others with optional connectivity to the AWS cloud. This topology closely resembles the stand-alone mode of Anthos on bare metal.

EKS-A will eventually become the de facto compute environment for AWS Edge devices such as Snowball. Similar to K3s, Amazon may even plan to launch an EKS Anywhere Mini to target single node installations of Kubernetes for the edge. It may have tight integration with AWS Greengrass, the software for edge devices.

EKS-A is the first real multi-cloud software coming from AWS. If you are not concerned about the lock-in it brings, EKS-A dramatically simplifies deploying and managing Kubernetes. It brings AWS a step closer to multi-cloud platforms such as Anthos, Azure Arc, Rancher, Tanzu Mission Control and Red Hat Advanced Cluster Management for Kubernetes.

EKS Console – The Meta Control Plane for Kubernetes in the Making

Though EKS-A comes across as a proprietary installer for EKS, it goes beyond that. Combined with a new addition called EKS Console, multiple EKS-A clusters can be managed from the familiar AWS Console. Of course, the EKS Console will provide visibility into all the managed clusters running in AWS.

EKS-A clusters running in fully-connected and semi-connected modes can be centrally managed from the EKS Console. AWS may open up the ability to attach non-EKS clusters to the EKS console by running an agent in the target cluster. This brings the ability to apply policies and roll out deployments from a single window.

When Amazon connects the dots between the EKS Console and EKS-A, it will deliver what Azure Arc promises – a single pane of glass to manage registered Kubernetes clusters. Extending this, EKS Console may even spawn new clusters as long as it can talk to the remote infrastructure, which will resemble Anthos. You see the obvious direction in which Amazon is heading!

The investments in ECS Anywhere, EKS Distribution, EKS Anywhere and EKS Console play a significant role in Amazon’s container strategy. They lay a strong foundation for future hybrid cloud and multi-cloud services expected from AWS.

From Cloud in Perfectirishgifts


Global Container Technology Market

In this blog post:

1.1 Introduction

1.2 Meaning

1.3 Market overview

1.4 Key players in global container technology market

1.1 Introduction:

Container technology, also known simply as a container, is a method to package an application so it can be run, with its dependencies, isolated from other processes. The major public cloud computing providers, including Amazon Web Services, Microsoft Azure and Google Cloud Platform, have embraced container technology, with popular container software including Docker, Apache Mesos, rkt (pronounced “rocket”) and Kubernetes.

Container technology gets its name from the shipping industry. Rather than come up with a unique way to ship each product, goods get placed into steel shipping containers, which are already designed to be picked up by the crane on the dock, and fit into the ship designed to accommodate the container’s standard size. In short, by standardizing the process, and keeping the items together, the container can be moved as a unit, and it costs less to do it this way.

Computer container technology works in an analogous way. Have you ever had a program run perfectly on one machine, only to turn into a clunky mess when it was moved to the next? This can happen when migrating software from a developer’s PC to a test server, or from a physical server in a company data center to a cloud server. Issues arise when moving software because of differences between machine environments, such as the installed OS, SSL libraries, storage, security, and network topology.

Just as the crane picks up the entire container as a unit to put it onto the ship or truck for transport, computer container technology moves software as a unit. A container holds not only the software but also its dependencies, including libraries, binaries and configuration files, and they are all migrated together. This avoids the differences between machines, including OS differences and underlying hardware, that lead to incompatibilities and crashes. Containers also facilitate the deployment of software to a server.

1.2 Meaning:

1.3 Market overview:

The Global Container Technology Market report applies both primary and secondary research to weigh the competitive landscape and the leading market players expected to dominate the global container technology marketplace over the 2019–2025 forecast period.

The global container technology market was valued at USD 867.89 million in 2018 and is expected to reach USD 3,168.33 million by 2025, a CAGR of 20.32% over the forecast period.
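A quick check with the standard CAGR formula suggests the report's three figures are internally consistent: 2018 to 2025 is seven compounding years. The helper names below are our own.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start value and period must be positive")
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: float) -> float:
    """Value after compounding `start` at `rate` for `years` periods."""
    return start * (1 + rate) ** years


if __name__ == "__main__":
    # Report figures in USD millions; 2018 -> 2025 is 7 years.
    print(f"implied CAGR: {cagr(867.89, 3168.33, 7):.2%}")
    print(f"2025 value at 20.32%: {project(867.89, 0.2032, 7):,.2f}")
```

Both directions agree with the report to within rounding: the implied rate is about 20.32%, and compounding at that rate lands within a fraction of a million of USD 3,168.33 million.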

The Global Container Technology Market 2019 industry research report is a thorough, in-depth study of market conditions in the world’s major regions, focusing on North America, Europe and Asia-Pacific. It covers the market landscape and its growth prospects over the coming years, and also discusses the key vendors operating in this global market.

Container technology is now widely adopted because it offers a reliable, emerging way to package, deploy and run application workloads without physically moving hardware or operating systems. Moreover, it helps organizations provide a self-sufficient runtime that abstracts away the particulars of the underlying operating system and hardware. Containers isolate applications from one another and let them run side by side, using resources efficiently.

Container Technology Market Key Segmentations:

By Type (Docker, Rkt, LXC and LXD, Containerd, FreeBSD Jails, Others)

By Applications (Container Monitoring, Container Provisioning, Container Security, Container Data Management, Container Networking)

North America holds the largest market for container technology, owing to the high penetration of application container technology among large enterprises in the region. The rising adoption of cloud computing has also been boosting the global container technology market. Other contributing factors include continuous advances in IT infrastructure, large-scale adoption driven by the presence of cloud computing, a strong presence of container orchestration vendors, and the availability of skilled staff.

The market has been segmented by component, organization size, industry vertical and region. By component, the global container technology market has been split into services and platform. By organization size, it has been divided into SMEs and large enterprises. By industry vertical, it has been segregated into government, BFSI, telecom and IT. By region, it has been analyzed across LAMEA, North America, Asia-Pacific and Europe.

The major players in the global container technology market are Cisco Systems Inc., Amazon Web Services, Docker Inc., D2iQ, Google LLC, Oracle Corporation, SUSE, Red Hat, Rancher and Microsoft Corporation. A strong containerization trend is driving the global container technology market, and most users have been adopting products from this market.

1.4 Container Technology Market Key Market Players Analysis:

Apcera, AWS, Chef, Cisco, Cloud Foundry, ClusterHQ, CoreOS, Docker, EMC, HashiCorp, Joyent, Mesosphere, Microsoft, OpenStack, Rackspace, Others

The global container technology market has grown considerably in value over the past few years, and that value is expected to keep rising through the forecast period. Container orchestration involves managing the lifecycle of containers. It also covers scheduling and automating hosts, health monitoring, deployment, sharing of containers, scaling up and down, and load balancing. When a container is deployed to a cluster for the first time, the container orchestration tool schedules the deployment and finds the best host for the container based on predefined constraints. Containers can also be placed according to their proximity to other hosts, or according to metadata or labels. Once containers are running on their hosts, the orchestration tool manages their lifecycles.
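The "find the best host based on predefined constraints" step can be pictured as a filter-then-score scheduler: discard hosts that cannot run the container, then rank the rest. The Python below is a minimal sketch under stated assumptions: the `Host` and `ContainerSpec` types, the exact-match label rule, and the "most CPU left over wins" scoring policy are all hypothetical choices for illustration, not the algorithm of any particular orchestrator.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Host:
    name: str
    free_cpu: float  # unallocated CPU cores
    free_mem_mb: int  # unallocated memory in MiB
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class ContainerSpec:
    name: str
    cpu: float
    mem_mb: int
    required_labels: Dict[str, str] = field(default_factory=dict)


def feasible(host: Host, spec: ContainerSpec) -> bool:
    """Filter step: the host needs enough capacity and every required label."""
    has_capacity = host.free_cpu >= spec.cpu and host.free_mem_mb >= spec.mem_mb
    labels_match = all(host.labels.get(k) == v for k, v in spec.required_labels.items())
    return has_capacity and labels_match


def score(host: Host, spec: ContainerSpec) -> float:
    """Score step (hypothetical policy): prefer the host with the most CPU left over."""
    return host.free_cpu - spec.cpu


def place(spec: ContainerSpec, hosts: List[Host]) -> Optional[Host]:
    """Pick the best feasible host, reserve the resources on it, and return it (or None)."""
    candidates = [h for h in hosts if feasible(h, spec)]
    if not candidates:
        return None
    best = max(candidates, key=lambda h: score(h, spec))
    best.free_cpu -= spec.cpu
    best.free_mem_mb -= spec.mem_mb
    return best


if __name__ == "__main__":
    hosts = [
        Host("node-a", 2.0, 4096, {"zone": "eu-1"}),
        Host("node-b", 6.0, 8192, {"zone": "us-1"}),
        Host("node-c", 4.0, 2048, {"zone": "eu-1"}),
    ]
    web = ContainerSpec("web", cpu=1.0, mem_mb=1024, required_labels={"zone": "eu-1"})
    chosen = place(web, hosts)
    print(chosen.name if chosen else "unschedulable")  # prints node-c
```

Here node-b has the most spare CPU but fails the label constraint, so the scheduler picks node-c. Splitting hard constraints (filter) from soft preferences (score) keeps each policy easy to change independently.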

The rising adoption of microservices architecture, the increasing uptake of application container technology, and the growing need to schedule workloads on containers and to proliferate container platforms are all expected to drive growth in the global container technology market. A shortage of cloud architecture skills is restraining the market, but rising investment in application container technology is expected to create lucrative opportunities for expansion.


Windows Server 2019 Available at Powerful Box

New Post has been published on https://www.powerfulbox.co.uk/blog/2019/12/31/windows-server-2019-available-at-powerful-box/


Microsoft Windows Server 2019 is now available at Powerful Box and comes included with any SSD VPS.

Microsoft Windows Server 2019 is the latest iteration of Microsoft's venerable operating system (OS), and it brings a long list of new and improved capabilities and features. This release should especially appeal to IT professionals because of the large number of functional scenarios it can address. Although Windows Server is less common on customer premises than it once was, it remains the most popular server OS, both in on-premises data centers and in public clouds, where it is widely used in Infrastructure-as-a-Service (IaaS) implementations.

New features in Windows Server 2019

System Insights

System Insights is a new feature available in Windows Server 2019 that brings local predictive analytics capabilities natively to Windows Server. These predictive capabilities, each backed by a machine-learning model, locally analyze Windows Server system data, such as performance counters and events, providing insight into the functioning of your servers and helping you reduce the operational expenses associated with reactively managing issues in your Windows Server deployments.
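The source does not describe the models System Insights uses, so the following only illustrates the general idea of local capacity forecasting. It is a minimal Python sketch that assumes one usage sample per day and fits an ordinary least-squares trend line to estimate how many days remain before a volume fills up; the function names and the linear model are assumptions, not System Insights' actual implementation.

```python
from typing import List, Optional, Tuple


def fit_line(ys: List[float]) -> Tuple[float, float]:
    """Least-squares fit of y = slope * x + intercept for samples at x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(used_gb: List[float], capacity_gb: float) -> Optional[float]:
    """Days after the last daily sample until the trend reaches capacity; None if usage is not growing."""
    slope, intercept = fit_line(used_gb)
    if slope <= 0:
        return None
    x_full = (capacity_gb - intercept) / slope
    return max(0.0, x_full - (len(used_gb) - 1))


if __name__ == "__main__":
    print(days_until_full([100, 110, 120, 130], capacity_gb=200))  # prints 7.0
```

Usage growing by 10 GB a day from 130 GB reaches 200 GB in 7 more days, which is the kind of early warning that lets an administrator act before the volume runs out rather than reacting afterward.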

Hybrid Cloud

Server Core app compatibility feature on demand: The Server Core App Compatibility Feature on Demand is an optional feature package that can be added to Windows Server 2019 Server Core installations.

Features on Demand (FODs) are Windows feature packages that can be added at any time. Common features include language resources such as handwriting recognition, or other features such as the .NET Framework (.NetFx3). When Windows 10 or Windows Server needs a new feature, it can request the feature package from Windows Update. The Server Core App Compatibility FOD significantly improves the app compatibility of the Windows Server Core installation option by including a subset of binaries and packages from Windows Server with Desktop Experience, without adding the Windows Server Desktop Experience graphical environment.

Improvements in the area of Security

i. Windows Defender Advanced Threat Protection (ATP)

ATP’s deep platform sensors and response actions expose memory and kernel level attacks and respond by suppressing malicious files and terminating malicious processes.

Windows Defender ATP Exploit Guard is a new set of host-intrusion prevention capabilities. The four components of Windows Defender Exploit Guard are designed to lock down the device against a wide variety of attack vectors and block behaviors commonly used in malware attacks.

ii. Security with Software Defined Networking (SDN)

These security enhancements are integrated into the comprehensive SDN platform introduced in Windows Server 2016.

They include:

Encrypted networks

Firewall auditing

Virtual network peering

Egress metering

iii. Shielded Virtual Machines improvements

Linux support

If you run mixed-OS environments, Windows Server 2019 now supports running Ubuntu, Red Hat Enterprise Linux, and SUSE Linux Enterprise Server inside shielded virtual machines.

Troubleshooting improvements

Troubleshooting shielded virtual machines has been made easier by VMConnect Enhanced Session Mode and PowerShell Direct. These features do not need to be configured, and they become available automatically when a shielded VM is placed on a Hyper-V host running Windows Server version 1803 or later.

iv. HTTP/2 for a faster and safer Web

Improved coalescing of connections to deliver an uninterrupted and properly encrypted browsing experience.

Upgraded HTTP/2’s server-side cipher suite negotiation for automatic mitigation of connection failures and ease of deployment.

Changed our default TCP congestion provider to Cubic to give you more throughput!
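For context on the switch to CUBIC: in CUBIC, as specified in RFC 8312, the congestion window after a loss follows a cubic curve in time, W(t) = C·(t − K)³ + W_max, where W_max is the window at the last loss and K is the time needed to climb back to it. The Python below is a sketch of that window curve only, using RFC 8312's default constants (C = 0.4, β = 0.7); it is not Windows' TCP stack, and it omits the TCP-friendly region and fast convergence.

```python
C = 0.4           # CUBIC scaling constant (RFC 8312 default)
BETA_CUBIC = 0.7  # multiplicative decrease factor (RFC 8312 default)


def cubic_k(w_max: float) -> float:
    """Seconds after a loss until the window climbs back to w_max."""
    return (w_max * (1 - BETA_CUBIC) / C) ** (1 / 3)


def w_cubic(t: float, w_max: float) -> float:
    """Window (in segments) t seconds after the last loss: C * (t - K)^3 + w_max."""
    return C * (t - cubic_k(w_max)) ** 3 + w_max


if __name__ == "__main__":
    for t in (0, 2, 4, 6, 8):
        print(t, round(w_cubic(t, w_max=100), 1))
```

Right after a loss the window starts at β·W_max (70 segments here), grows quickly, flattens out as it approaches the previous maximum, then accelerates again to probe for more bandwidth. Because growth depends on elapsed time rather than on round trips, long-RTT connections are not penalized, which is part of why CUBIC tends to deliver higher throughput on fast, long-distance links.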

Storage

This release of Windows Server adds the following storage changes and technologies.

i. Manage storage with Windows Admin Center

ii. Storage Migration Service

iii. Storage Spaces Direct (Windows Server 2019 only)

• Deduplication and compression for ReFS volumes

• Native support for persistent memory

• Nested resiliency for two-node hyper-converged infrastructure at the edge

• Two-server clusters using a USB flash drive as a witness

• Windows Admin Center

• Performance history

• Scale up to 4 PB per cluster

• Mirror-accelerated parity is 2X faster

• Drive latency outlier detection

• Manually delimit the allocation of volumes to increase fault tolerance

iv. Storage Replica

v. File Server Resource Manager

Windows Server 2019 includes the ability to prevent the File Server Resource Manager service from creating a change journal (also known as a USN journal) on all volumes when the service starts.

vi. SMB

• SMB1 and guest authentication removal

• SMB2/SMB3 security and compatibility

vii. Data Deduplication

• Data Deduplication now supports ReFS

• DataPort API for optimized ingress/egress to deduplicated volumes

Application Platform

i. Linux containers on Windows

It is now possible to run Windows and Linux-based containers on the same container host, using the same Docker daemon.

ii. Building Support for Kubernetes

Windows Server 2019 continues the improvements to compute, networking and storage from the semi-annual channel releases needed to support Kubernetes on Windows.

iii. Container improvements

• Improved integrated identity

• Better application compatibility

• Reduced size and higher performance

• Management experience using Windows Admin Center (preview)

iv. Low Extra Delay Background Transport

Low Extra Delay Background Transport (LEDBAT) is a latency-optimized network congestion control provider designed to automatically yield bandwidth to users and applications.
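How LEDBAT "yields" bandwidth can be seen in the generic window update rule from RFC 6817: the sender measures how much queuing delay its traffic is adding and grows its window only while that delay stays below a target, shrinking it as soon as other traffic starts filling the queue. The Python below is a sketch of that per-ACK rule, not Microsoft's implementation (which may differ); the 1460-byte MSS is an assumed value, and the other constants follow RFC 6817's limits and recommended values.

```python
TARGET_MS = 100.0     # target queuing delay; RFC 6817 requires 100 ms or less
GAIN = 1.0            # RFC 6817: 1 or less
MSS = 1460            # assumed maximum segment size, in bytes
ALLOWED_INCREASE = 1  # segments
MIN_CWND = 2          # segments


def ledbat_on_ack(cwnd: float, current_delay_ms: float, base_delay_ms: float,
                  bytes_newly_acked: int, flightsize: float) -> float:
    """Return the congestion window (bytes) after one ACK, following RFC 6817's update rule."""
    queuing_delay = current_delay_ms - base_delay_ms
    off_target = (TARGET_MS - queuing_delay) / TARGET_MS
    # Positive when the queue is below target (grow), negative above it (back off).
    cwnd += GAIN * off_target * bytes_newly_acked * MSS / cwnd
    # Cap growth relative to the data actually in flight.
    cwnd = min(cwnd, flightsize + ALLOWED_INCREASE * MSS)
    # Never shrink below the minimum window.
    return max(cwnd, MIN_CWND * MSS)
```

With an empty queue (current delay equal to the base delay), each full-segment ACK grows a 10-segment window slightly; once foreground traffic pushes queuing delay past the target, the same ACK shrinks the window instead, so background transfers step aside.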

v. High performance SDN gateways

This greatly improves performance for IPsec and GRE connections.

vi. Persistent Memory support for Hyper-V VMs

This can drastically reduce database transaction latency, or reduce recovery times after a failure for low-latency in-memory databases.

vii. Windows Time Service

viii. Network performance improvements for virtual workloads

New features include:

Receive Segment Coalescing in the vSwitch

Dynamic Virtual Machine Multi-Queue (d.VMMQ)

Removed Features in Windows Server 2019

• Business Scanning, also called Distributed Scan Management (DSM)

• Internet Storage Name Service (iSNS)

• Print components – now optional component for Server Core installations

• Remote Desktop Connection Broker and Remote Desktop Virtualization Host in a Server Core installation

• These RDS roles are no longer available for use in a Server Core installation. If you need to deploy these roles as part of your Remote Desktop infrastructure, you can install them on Windows Server with Desktop Experience.

Deprecated Features in Windows Server 2019

Features no longer being developed by the team are:

• Key Storage Drive in Hyper-V

• Trusted Platform Module (TPM) management console

• Host Guardian Service Active Directory attestation mode

• Remote Differential Compression API support

• OneSync service


National Science Foundation awards grant to develop next-generation cloud computing testbed

- By Nuadox Crew -

Red Hat, Inc., the world's leading provider of open source solutions, announced on December 18 that the National Science Foundation (NSF) Division of Computer and Network Systems has awarded a grant to a research team from Boston University, Northeastern University and the University of Massachusetts Amherst (UMass) to help fund the development of a national cloud testbed for research and development of new cloud computing platforms.

The testbed, known as the Open Cloud Testbed, will integrate capabilities previously developed for the CloudLab testbed into the Massachusetts Open Cloud (MOC), a production cloud developed collaboratively by academia, government, and industry through a partnership anchored at Boston University’s Hariri Institute for Computing. As a founding industry partner and long-time collaborator on the MOC project, Red Hat will work with Northeastern University and UMass, as well as other government and industry collaborators, to build the national testbed on Red Hat’s open hybrid cloud technologies.

Testbeds such as the one being constructed by the research team are critical for enabling new cloud technologies and making the services they provide more efficient and accessible to a wider range of scientists focusing on research in computer systems and other sciences.

By combining open source technologies and a production cloud enhanced with programmable hardware through field-programmable gate arrays (FPGAs), the project aims to close a gap in computing capabilities currently available to researchers. As a result, the testbed is expected to help accelerate innovation by enabling greater scale and increased collaboration between research teams and open source communities. Red Hat researchers plan to contribute to active research in the testbed, including a wide range of projects on FPGA hardware tools, middleware, operating systems and security.

Beyond this, the project also aims to identify, attract, educate and retain the next generation of researchers in this field and accelerate technology transfer from academic research to practical use via collaboration with industry partners such as Red Hat.

Red Hat has been a core partner of the MOC since the project launched in 2014. The MOC brings together talent and technologies from various academic, government, non-profit, and industry organizations to collaboratively create an open, production-grade public cloud suitable for cutting-edge research and development. The MOC's open cloud stack is based on Red Hat Enterprise Linux, Red Hat OpenStack Platform and Red Hat OpenShift.

Beyond creating the national testbed, the grant will also extend Red Hat's collaboration with Boston University researchers to develop self-service capabilities for the MOC's cloud resources. For example, via contributions to the OpenStack bare metal provisioning program (Ironic), the collaboration aims to produce production-quality Elastic Secure Infrastructure (ESI) software, a key piece in enabling more flexible and secure resource sharing between different datacenter clusters. And by sharing new developments that enable moving resources between bare metal machines and Red Hat OpenStack or Kubernetes clusters in open source communities such as Ironic or Ansible, Red Hat and the MOC's researchers are helping to advance technology well beyond the Open Cloud Testbed.

Source: Red Hat, Inc.


Contributing back to Ansible — flexible secrets with some Sops

This post is from Edoardo Tenani, DevOps Engineer at Arduino.

In this blog, we’re going to answer: How does one store sensitive data in source code (in this case, Ansible playbooks) securely and in a way that the secrets can be easily shared with the rest of the team?

Ansible is an open source community project sponsored by Red Hat. It's the simplest way to automate IT, and the only automation language that can be used across entire IT teams, from systems and network administrators to developers and managers.

At Arduino, we started using Ansible around the beginning of 2018 and since then, most of our infrastructure has been provisioned via Ansible playbooks: from the frontend servers hosting our websites and applications (such as Create Web Editor), to the MQTT broker at the heart of Arduino IoT Cloud.

As soon as we started adopting it, we faced one of the most common security problems in software: How does one store sensitive data in source code (in this case, Ansible playbooks) securely and in a way that the secrets can be easily shared with the rest of the team?

Ansible configuration system comes to the rescue here with its built-in mechanism for handling secrets called Ansible Vault, but unfortunately it had some shortcomings for our use case.

The main disadvantage is that Vault is tied to Ansible itself: to use it, you have to install the whole Ansible stack. We preferred a more self-contained solution, ideally compiled into a single binary for portability (e.g., inside Docker containers).

The second blocker is the “single passphrase” Ansible Vault relies on: a shared password to decrypt the entire vault. This solution is very handy and simple to use for personal projects or when the team is small, but as we are constantly growing as a company we preferred to rely on a more robust and scalable encryption strategy. Having the ability to encrypt different secrets with different keys, while being able to revoke access to specific users or machines at any time was crucial to us.

The first solution we identified was HashiCorp Vault, a backend service purpose-built for storing secrets and sensitive data, with advanced encryption policies and access management capabilities. In our case, as the team was still growing, the operational cost of maintaining a Vault cluster was considered too high (deploying a highly available service that acts as a single point of failure for your operations is something we want to handle properly and with due care).

Around the same time, while reading up on industry best practices and looking for something that could help us manage secrets in source code, we came across mozilla/sops, a simple command-line tool that allows strings and files to be encrypted using a combination of AWS KMS keys, GCP KMS keys, or GPG keys.

Sops seemed to have all the requirements we were looking for to replace Ansible Vault:

A single binary, thanks to Mozilla's recent port from Python to Go.

Able to encrypt and decrypt both entire files and single values.

Allows us to use identities coming from AWS KMS, which we already used for our web services and for which our operations team had access credentials.

A fallback to GPG keys to mitigate the AWS lock-in, allowing us to decrypt our secrets even in the case of AWS KMS disruption.

The same low operational cost.

Sops’ adoption was a great success: The security team was happy and the implementation straightforward, with just one problem. When we tried to use Sops in Ansible configuration system, we immediately noticed what a pain it was to encrypt variables.

We tried to encrypt/decrypt single values using a helper script to properly pass them as extra variables to ansible-playbook. It almost worked, but developers and operations were not satisfied: It led to errors during development and deployments and overall felt clumsy and difficult.

Next we tried to encrypt/decrypt entire files. The helper script was still needed, but the overall complexity decreased. The main downside was that we needed to decrypt all the files before running ansible-playbook, because Ansible had no idea what was going on: to it, those were just plain Ansible vars_files. It was an improvement, but it still lacked the smooth developer experience we wanted.
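The post does not show its helper script, so the following is only a hypothetical reconstruction of the whole-file approach. It is a Python sketch that shells out to `sops --decrypt` (which prints the decrypted document on stdout), writes each plaintext file into a temporary directory, and passes it to ansible-playbook as extra vars with `-e @file`. The function names are made up for illustration, and the injectable `run` parameter exists only so the logic can be exercised without sops installed.

```python
import subprocess
import tempfile
from pathlib import Path
from typing import Callable, List


def decrypt_vars_files(encrypted: List[str], out_dir: str,
                       run: Callable = subprocess.run) -> List[str]:
    """Decrypt each sops-encrypted vars file into out_dir and return the plaintext paths."""
    plaintext_paths = []
    for src in encrypted:
        # `sops --decrypt FILE` writes the decrypted document to stdout.
        result = run(["sops", "--decrypt", src], capture_output=True, text=True, check=True)
        dest = Path(out_dir) / Path(src).name
        dest.write_text(result.stdout)
        plaintext_paths.append(str(dest))
    return plaintext_paths


def playbook_command(playbook: str, vars_files: List[str]) -> List[str]:
    """Build an ansible-playbook invocation that loads each file as extra vars (-e @file)."""
    cmd = ["ansible-playbook", playbook]
    for path in vars_files:
        cmd += ["-e", "@" + path]
    return cmd


def run_with_decrypted_vars(playbook: str, encrypted: List[str]) -> None:
    """Decrypt into a temporary directory that is deleted once the playbook exits."""
    with tempfile.TemporaryDirectory() as tmp:
        files = decrypt_vars_files(encrypted, tmp)
        subprocess.run(playbook_command(playbook, files), check=True)
```

Even with the temporary directory, plaintext secrets sit on disk for the duration of the run and every invocation has to go through the wrapper, which is the friction that the lookup and vars plugins described next remove.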

As Ansible configuration system already supports encrypted vars and storing entire files in Ansible Vault, the obvious choice was to identify how to replicate the behaviour using Sops as the encryption/decryption engine.

Following an idea behind a feature request first opened upstream in the Ansible repository back in 2018 (Integration with Mozilla SOPS for encrypted vars), we developed a lookup plugin and a vars plugin that seamlessly integrate Ansible configuration system and Sops.

No more helper scripts needed

Just ensure the Sops executable is installed and the correct credentials are in place (i.e., AWS credentials or a GPG private key), then run ansible-playbook as you normally would.

We believe contributing to a tool we use and love is fundamental in following the Arduino philosophy of spreading the love for open source.

Our sops plugins are currently under review in the mozilla/sops GitHub repository: Add sops lookup plugin and Add sops vars plugin.

You can test it out right away by downloading the plugin files from the PRs and adding them in your local Ansible controller installation. You will then be able to use both plugins from your playbooks. Documentation is available, as for all Ansible plugins, in the code itself at the beginning of the file; search for DOCUMENTATION if you missed it.

If you can leave a comment or a GitHub reaction on the PR, that would be really helpful to expedite the review process.

What to do from now on?

If you’re a developer you can have a look at Sops’ issues list and contribute back to the project!

The Sops team is constantly adding new features (such as a new command for publishing encrypted secrets in the latest 3.4.0 release, or Azure Key Vault support), but there are surely interesting issues to tackle. For example, the Kubernetes Secret integration being discussed in issue 401, or the --verify command discussed in issue 437.

Made with <3 by the Arduino operations team!

Ansible® is a registered trademark of Red Hat, Inc. in the United States and other countries.

Contributing back to Ansible — flexible secrets with some Sops was originally published on PlanetArduino


5 Kubernetes trends to watch in 2020

It’s been a busy year for Kubernetes, marked most recently by the release of version 1.17, the fourth (and last) release of 2019. Many signs indicate that adoption is growing – that might be putting it mildly – and few omens suggest this will change soon.

Organizations continue to increase their usage of containerized software, fueling Kubernetes’ growth.

“As more and more organizations continue to expand on their usage of containerized software, Kubernetes will increasingly become the de facto deployment and orchestration target moving forward,” says Josh Komoroske, senior DevOps engineer at StackRox.

Indeed, some of the same or similar catalysts of Kubernetes interest to this point – containerization among them – are poised to continue in 2020. The shift to microservices architecture for certain applications is another example.

“2020 will see some acceleration by organizations for transformation to a microservices-based architecture based on containers, from a service-oriented architecture (SOA),” says Raghu Kishore Vempati, director for technology, research, and innovation at Altran. “The adoption of Kubernetes as an orchestration platform will hence see a significant rise.”

Rising adoption is really just table stakes in terms of Kubernetes issues that IT leaders and practitioners should keep tabs on in 2020. Let’s dig into five other probable trends in the year ahead.

Key Kubernetes trends

1. Expect a rising tide of “Kubernetes-native” software

In many organizations, the first step toward Kubernetes adoption to date might be best described as Oh, we can use Kubernetes for this! That means, for example, that a team running a growing number of containers in production might quickly see the need for orchestration to manage it all.


Komoroske expects another adoption trend to grow in the near future: We can build this for Kubernetes! It’s the software equivalent of a cart-and-horse situation: Instead of having an after-the-fact revelation that Kubernetes would be a good fit for managing a particular service, more organizations will develop software specifically with Kubernetes in mind.

“I expect…not only containerized software that happens to be deployable in Kubernetes, but also software that is aware of and able to provide unique value when deployed in Kubernetes,” Komoroske says.

The roots of this trend are already growing, evident in the emerging ecosystem around Kubernetes. As Red Hat VP and CTO Chris Wright has noted, “Just as Linux emerged as the focal point for open source development in the 2000s, Kubernetes is emerging as a focal point for building technologies and solutions (with Linux underpinning Kubernetes, of course.)”

As a subset of this trend, Komoroske anticipates the growth of software branded as “Kubernetes-first” (or Kubernetes-native). There’s a marketplace reason, of course: Kubernetes is a hot topic, and the name alone attracts attention. But there’s substance underneath that, and Komoroske sees some specific areas where new solutions are likely to spring up.

“Software that is released and branded as ‘Kubernetes-first’ will be increasingly common, possibly manifesting as custom resource definitions or Kubernetes Operators,” Komoroske says.

On that topic, if you need a crash course in Operators, or need to help others understand them, check out our article: How to explain Kubernetes Operators in plain English.
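At its core, the Operator pattern is usually described as a reconcile loop: read the desired state declared in a custom resource, observe the actual state, and act to close the gap. The Python below is a toy, in-memory sketch of that idea only. It uses no Kubernetes client library, and the `Desired` resource, its replica-only logic, and the pod naming scheme are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Desired:
    """Hypothetical custom resource spec: how many replicas of an app should exist."""
    app: str
    replicas: int


def reconcile(desired: Desired, running: List[str]) -> List[str]:
    """Return the actions needed to move the observed pods toward the desired spec."""
    actions = []
    diff = desired.replicas - len(running)
    if diff > 0:
        # Too few pods: create the missing ones (naming scheme is illustrative only).
        for i in range(diff):
            actions.append(f"create {desired.app}-{len(running) + i}")
    elif diff < 0:
        # Too many pods: delete the surplus from the end of the list.
        for name in running[desired.replicas:]:
            actions.append(f"delete {name}")
    return actions


if __name__ == "__main__":
    print(reconcile(Desired("web", 3), ["web-0"]))
```

Because reconcile only computes the difference between desired and observed state, running it again once the cluster has converged returns no actions. That idempotence is what lets real controllers safely re-run their logic on every change event.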

2. Will Federation (finally) arrive?

Vempati notes that there has been interest in better Federation capabilities in Kubernetes for a little while now; from his vantage point, the ensuing development efforts in the community appear to be getting closer to paying off.

“While many features of Kubernetes have acquired maturity, Federation has undergone two different cycles of development,” Vempati says. “While v1 of Kubernetes Federation never achieved GA, v2 (KubeFed) is currently in Alpha. In 2020, the Kubernetes Federation feature will most likely reach Beta and possibly GA as well.”

You can access the KubeFed GitHub repository here. It's also helpful to understand the “why” behind KubeFed: It's potentially significant for running Kubernetes in multi-cloud and hybrid cloud environments. Here's more of Vempati's perspective on the issue:

“Federation helps coordinate multiple Kubernetes clusters using configuration from a single set of APIs in a hosting cluster,” Vempati says. “This feature is extremely useful for multi-cloud and distributed solutions.”
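KubeFed describes federated resources in terms of a template (the base object), a placement (which member clusters receive it), and per-cluster overrides. The Python below is a toy sketch that mimics only that rendering step: it talks to no cluster, and the dictionary shapes and slash-separated override paths are simplified assumptions rather than KubeFed's actual schema.

```python
import copy
from typing import Any, Dict, List


def set_path(obj: Dict[str, Any], path: str, value: Any) -> None:
    """Set a value at a slash-separated path such as '/spec/replicas'."""
    keys = [k for k in path.split("/") if k]
    for key in keys[:-1]:
        obj = obj.setdefault(key, {})
    obj[keys[-1]] = value


def propagate(template: Dict[str, Any], placement: List[str],
              overrides: Dict[str, Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Render the object each member cluster should receive from one federated definition."""
    rendered = {}
    for cluster in placement:
        obj = copy.deepcopy(template)  # never mutate the shared template
        for path, value in overrides.get(cluster, {}).items():
            set_path(obj, path, value)
        rendered[cluster] = obj
    return rendered


if __name__ == "__main__":
    template = {"kind": "Deployment", "spec": {"replicas": 3}}
    print(propagate(template, ["east", "west"], {"west": {"/spec/replicas": 5}}))
```

One definition in the hosting cluster yields a consistent object everywhere, while overrides capture the few per-cluster differences (here, more replicas in a hypothetical "west" cluster), which is what makes the model useful across multiple clouds.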

3. Security will continue to be a high-profile focus


As the footprint of just about any system or platform increases, so does the target on its back. It's like a nefarious version of supply and demand: the greater the supply of Kubernetes clusters running in production, the greater “demand” there will be among bad actors trying to find security holes.

“As the adoption of Kubernetes and deployment of container-based applications in production accelerate to much higher volumes than we’ve seen to date, we can expect more security incidents to occur,” says Rani Osnat, VP of strategy at Aqua Security. “Most of those will be caused by the knowledge gap around what constitutes secure configuration, and lack of proper security tooling.”

It’s not that Kubernetes has inherent security issues, per se. In fact, there’s a visible commitment to security in the community. It simply comes with some new considerations and strategies for managing risks. According to Osnat, bad actors are getting better at spotting vulnerabilities.

“Our team has seen that it currently takes only one hour for attackers to recognize an unprotected cluster running in the public cloud and attempt to breach it,” Osnat says. “The most common attack vector is cryptocurrency mining, but wherever that’s possible, other types of attacks such as data exfiltration are possible.”

Osnat says it's incumbent on IT teams to properly harden their environments: “Implement runtime protection to monitor for indicators of compromise and prevent them from escalating,” Osnat advises as one tactic.

Source: https://enterprisersproject.com/article/2020/1/kubernetes-trends-watch-2020



Red Hat เตรียมโอเพนซอร์ส StackRox ซอฟต์แวร์ความปลอดภัย Kubernetes

Red Hat เตรียมเปิดซอร์ส StackRox ซอฟต์แวร์ความปลอดภัย Kubernetes ที่ซื้อกิจการมาในเดือนมกราคม 2021

ซอฟต์แวร์ของ StackRox จะกลายมาเป็นโครงการต้นน้ำของ Red Hat Advanced Cluster Security for Kubernetes ที่เป็นผลิตภัณฑ์จับลูกค้าองค์กรแบบคิดเงิน ของ Red Hat (ลักษณะจะคล้าย Fedora ที่เป็นฐานของ RHEL)

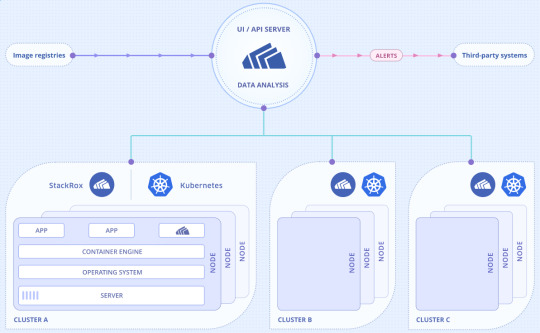

แนวทางจัดการความปลอดภัยของ StackRox คือการรันซอฟต์แวร์มอนิเตอร์ของ StackRox ในคลัสเตอร์ Kubernetes และมีเซิร์ฟเวอร์ที่ดึงข้อมูลกลับมาวิเคราะห์-แสดงผลให้แอดมินทราบสถานะความปลอดภัยของทั้งระบบ (ตามแผนผัง) ซอฟต์แวร์ของ StackRox สามารถใช้กับคอนเทนเนอร์ Kubernetes ได้ทุกเจ้า เช่น Amazon EKS, Google GKE, Azure AKS, Docker Enterprise และ Red Hat OpenShift

ที่ผ่านมา บริษัท StackRox มีซอฟต์แวร์โอเพนซอร์สอยู่แล้วหนึ่งตัวคือ KubeLinter และจะทยอยเปิดซอร์สของซอฟต์แวร์อื่นๆ ตามมาในเร็วๆ นี้

ที่มา - StackRox, Red Hat, The Register


from https://j.mp/2SsHyoL


Get hands-on training in AWS, Python, Java, blockchain, management, and many other topics.

Develop and refine your skills with 100+ new live online trainings we opened up for April and May on our learning platform.

Space is limited and these trainings often fill up.

Creating Serverless APIs with AWS Lambda and API Gateway, April 6

Getting Started with Amazon Web Services (AWS), April 19-20

Python Data Handling: A Deeper Dive, April 20

How Product Management Leads Change in the Enterprise, April 23

Beyond Python Scripts: Logging, Modules, and Dependency Management, April 23

Beyond Python Scripts: Exceptions, Error Handling, and Command-Line Interfaces, April 24

Getting Started with Go, April 24-25

End-to-End Data Science Workflows in Jupyter Notebooks, April 27

Getting Started with Vue.js, April 30

Java Full Throttle with Paul Deitel: A One-Day, Code-Intensive Java Standard Edition Presentation, April 30

Building a Cloud Roadmap, May 1

Git Fundamentals, May 1-2

AWS Certified SysOps Administrator (Associate) Crash Course, May 1-2

OCA Java SE 8 Programmer Certification Crash Course, May 1-3

Getting Started with DevOps in 90 Minutes, May 2

Learn the Basics of Scala in 3 hours, May 2

IPv4 Subnetting, May 2-3

SQL Fundamentals for Data, May 2-3

SAFe 4.5 (Scaled Agile Framework) Foundations, May 3

Managing Team Conflict, May 3

Hands-On Machine Learning with Python: Clustering, Dimension Reduction, and Time Series Analysis, May 3

Google Cloud Platform Professional Cloud Architect Certification Crash Course, May 3-4

Cyber Security Fundamentals, May 3-4

Advanced Agile: Scaling in the Enterprise, May 4

Network Troubleshooting Using the Half Split and OODA, May 4

Software Architecture for Developers, May 4

Hands-On Machine Learning with Python: Classification and Regression, May 4

Building and Managing Kubernetes Applications, May 7

Introducing Blockchain, May 7

Get Started with NLP, May 7

Introduction to Digital Forensics and Incident Response (DFIR), May 7

Essential Machine Learning and Exploratory Data Analysis with Python and Jupyter Notebooks, May 7-8

Building Deployment Pipelines with Jenkins 2, May 7 and 9

Introduction to Apache Spark 2.x, May 7-9

Deep Learning Fundamentals, May 8

Acing the CCNA Exam, May 8

Emotional Intelligence for Managers, May 8

Scala Core Programming: Methods, Classes, and Traits, May 8

Design Patterns Boot Camp, May 8-9

Introduction to Lean, May 9

Beginner’s Guide to Creating Prototypes in Sketch, May 9

AWS Certified Solutions Architect Associate Crash Course, May 9-10

Cloud Native Architecture Patterns, May 9-10

Amazon Web Services: Architect Associate Certification - AWS Core Architecture Concepts, May 9-11

Blockchain Applications and Smart Contracts, May 10

Deep Reinforcement Learning, May 10

Getting Started with Machine Learning, May 10

Introduction to Ethical Hacking and Penetration Testing, May 10-11

Explore, Visualize, and Predict using pandas and Jupyter, May 10-11

Scalable Web Development with Angular, May 10-11

Apache Hadoop, Spark, and Big Data Foundations, May 11

Visualizing Software Architecture with the C4 Model, May 11

Write Your First Hadoop MapReduce Program, May 14

Write Your First Spark Program in Java, May 14

Interactive Java with Java 9’s JShell, May 14

Bash Shell Scripting in 3 Hours, May 14

Learn Linux in 3 Hours, May 14

Cybersecurity Blue Teams vs. Red Teams, May 14

Next-Generation Java testing with JUnit 5, May 14

Product Management in Practice, May 14-15

IoT Fundamentals, May 14-15

Porting from Python 2 to Python 3, May 15

Red Hat Certified System Administrator (RHCSA) Crash Course, May 15-18

Introduction to Analytics for Product Managers, May 16

Architecture Without an End State, May 16-17

Deploying Container-Based Microservices on AWS, May 16-17

Agile for Everybody, May 17

Introduction to Google Cloud Platform, May 17

Practical Data Cleaning with Python, May 17-18

Hands-on Introduction to Apache Hadoop and Spark Programming, May 17-18

Troubleshooting Agile, May 18

Managing your Manager, May 18

Building Chatbots with AWS, May 18

Your First 30 Days as a Manager, May 21

Introduction to Unreal Engine 4 with Blueprints, May 21

Introduction to Critical Thinking, May 21

Testing and Validating Product Ideas with Lean, May 21

From Developer to Software Architect, May 22-23

CISSP Crash Course, May 22-23

Introduction to Kubernetes, May 22

CCNP R/S ROUTE (300-101) Crash Course, May 22-24

Advanced SQL for Data Analysis (with Python, R, and Java), May 23

Docker: Beyond the Basics (CI & CD), May 23-24

Introduction to TensorFlow, May 23-24

Leadership Communication Skills for Managers, May 24

Cyber Security Defense, May 24

End-to-End Data Science Workflows in Jupyter Notebooks, May 24

The DevOps Toolkit, May 24-25

Introduction to Cisco Next-Generation Firewalls, May 24-25

Amazon Web Services: Architect Associate Certification - AWS Core Architecture Concepts, May 24-25

Kubernetes in 3 Hours, May 25

Ansible in 3 Hours, May 25

Design Fundamentals for Non-Designers, May 25

Python Data Handling - A Deeper Dive, May 29

Introduction to Modularity with the Java 9 Platform Module System (JPMS), May 29

CCNA Security Crash Course, May 29-30

Scala: Beyond the Basics, May 29-30

Microservices Architecture and Design, May 29-30

Docker: Up and Running, May 29-30

High Performance TensorFlow in Production: Hands on with GPUs and Kubernetes, May 29-30

Rethinking REST: A Hands-On Guide to GraphQL and Queryable APIs, May 30

PMP Crash Course, May 31-June 1

Test Driven Development in Java, May 31-June 1

Architecture Without an End State, May 31-June 1

Building Microservices with Spring Boot, Spring Cloud, and Cloud Foundry, July 2-3

Visit our learning platform for more information on these and other live online trainings.


from All - O'Reilly Media https://ift.tt/2q5z0lQ


5 Kubernetes trends to watch in 2020

It’s been a busy year for Kubernetes, marked most recently by the release of version 1.17, the fourth (and last) release of 2019. Many signs indicate that adoption is growing – that might be putting it mildly – and few omens suggest this will change soon.

Organizations continue to increase their usage of containerized software, fueling Kubernetes’ growth.

“As more and more organizations continue to expand on their usage of containerized software, Kubernetes will increasingly become the de facto deployment and orchestration target moving forward,” says Josh Komoroske, senior DevOps engineer at StackRox.

Indeed, some of the same or similar catalysts of Kubernetes interest to this point – containerization among them – are poised to continue in 2020. The shift to microservices architecture for certain applications is another example.

“2020 will see some acceleration by organizations for transformation to a microservices-based architecture based on containers, from a service-oriented architecture (SOA),” says Raghu Kishore Vempati, director for technology, research, and innovation at Altran. “The adoption of Kubernetes as an orchestration platform will hence see a significant rise.”

Rising adoption is really just table stakes in terms of Kubernetes issues that IT leaders and practitioners should keep tabs on in 2020. Let’s dig into five other probable trends in the year ahead.

Key Kubernetes trends

1. Expect a rising tide of “Kubernetes-native” software

In many organizations, the first step toward Kubernetes adoption to date might be best described as “Oh, we can use Kubernetes for this!” That means, for example, that a team running a growing number of containers in production might quickly see the need for orchestration to manage it all.

Komoroske expects another adoption trend to grow in the near future: “We can build this for Kubernetes!” It’s the software equivalent of a cart-and-horse situation: Instead of having an after-the-fact revelation that Kubernetes would be a good fit for managing a particular service, more organizations will develop software specifically with Kubernetes in mind.

“I expect…not only containerized software that happens to be deployable in Kubernetes, but also software that is aware of and able to provide unique value when deployed in Kubernetes,” Komoroske says.

The roots of this trend are already growing, evident in the emerging ecosystem around Kubernetes. As Red Hat VP and CTO Chris Wright has noted, “Just as Linux emerged as the focal point for open source development in the 2000s, Kubernetes is emerging as a focal point for building technologies and solutions (with Linux underpinning Kubernetes, of course.)”

As a subset of this trend, Komoroske anticipates the growth of software branded as “Kubernetes-first” (or Kubernetes-native). There’s a marketplace reason, of course: Kubernetes is a hot topic, and the name alone attracts attention. But there’s substance underneath that, and Komoroske sees some specific areas where new solutions are likely to spring up.

“Software that is released and branded as ‘Kubernetes-first’ will be increasingly common, possibly manifesting as custom resource definitions or Kubernetes Operators,” Komoroske says.

On that topic, if you need a crash course in Operators, or need to help others understand them, check out our article: How to explain Kubernetes Operators in plain English.
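The “Kubernetes-first” software Komoroske describes typically pairs a custom resource definition, which adds a new object type to the Kubernetes API, with an Operator: a controller that repeatedly compares the desired state declared in those objects with what is actually running, then acts to close the gap. The Python sketch below models one pass of that reconcile loop using in-memory data only. It does not talk to a real cluster, and the `WebAppSpec` resource, the `FakeCluster` stand-in, and the `reconcile` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class WebAppSpec:
    """Desired state, as declared in a hypothetical WebApp custom resource."""
    name: str
    replicas: int


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for a cluster: app name -> running pod names."""
    pods: dict = field(default_factory=dict)


def reconcile(spec: WebAppSpec, cluster: FakeCluster) -> list:
    """One reconcile pass: move actual state toward desired state, return actions taken."""
    actions = []
    running = cluster.pods.setdefault(spec.name, [])
    # Too few pods: create new ones until the count matches the spec.
    while len(running) < spec.replicas:
        pod = f"{spec.name}-{len(running)}"
        running.append(pod)
        actions.append(f"create {pod}")
    # Too many pods: delete the most recently created ones first.
    while len(running) > spec.replicas:
        pod = running.pop()
        actions.append(f"delete {pod}")
    return actions


if __name__ == "__main__":
    cluster = FakeCluster()
    print(reconcile(WebAppSpec("shop", 3), cluster))  # ['create shop-0', 'create shop-1', 'create shop-2']
    print(reconcile(WebAppSpec("shop", 3), cluster))  # [] (already converged)
    print(reconcile(WebAppSpec("shop", 1), cluster))  # ['delete shop-2', 'delete shop-1']
```

Because each pass acts only on the difference between desired and actual state, running it again on a converged system does nothing. Real Operators depend on that idempotence, since they are re-triggered on every watch event or periodic resync.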

2. Will Federation (finally) arrive?

Vempati notes that there has been interest in better Federation capabilities in Kubernetes for a little while now; from his vantage point, the ensuing development efforts in the community appear to be getting closer to paying off.

“While many features of Kubernetes have acquired maturity, Federation has undergone two different cycles of development,” Vempati says. “While v1 of Kubernetes Federation never achieved GA, v2 (KubeFed) is currently in Alpha. In 2020, the Kubernetes Federation feature will most likely reach Beta and possibly GA as well.”

You can find KubeFed on GitHub in the kubernetes-sigs/kubefed repository. It’s also helpful to understand the “why” behind KubeFed: It’s potentially significant for running Kubernetes in multi-cloud and hybrid cloud environments. Here’s more of Vempati’s perspective on the issue:

“Federation helps coordinate multiple Kubernetes clusters using configuration from a single set of APIs in a hosting cluster,” Vempati says. “This feature is extremely useful for multi-cloud and distributed solutions.”
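KubeFed expresses that “single set of APIs” as federated resources in the host cluster, each combining a template, a placement that lists member clusters, and optional per-cluster overrides. The Python sketch below models only the propagation step with plain dicts, assuming a simplified slash-separated override path. The field names loosely follow KubeFed's template/placement/overrides concepts, but `propagate` and `set_path` are hypothetical helpers, not the KubeFed API, and nothing is actually sent to a cluster.

```python
import copy


def set_path(obj: dict, path: str, value) -> None:
    """Set a value at a simplified slash-separated path such as '/spec/replicas'."""
    keys = [k for k in path.split("/") if k]
    target = obj
    for key in keys[:-1]:
        target = target.setdefault(key, {})
    target[keys[-1]] = value


def propagate(federated: dict) -> dict:
    """Hypothetical helper: return the object each placed member cluster should receive."""
    overrides = {
        o["clusterName"]: o["clusterOverrides"] for o in federated.get("overrides", [])
    }
    result = {}
    for cluster in federated["placement"]["clusters"]:
        name = cluster["name"]
        obj = copy.deepcopy(federated["template"])  # never mutate the shared template
        for override in overrides.get(name, []):
            set_path(obj, override["path"], override["value"])
        result[name] = obj
    return result


federated_deployment = {
    "template": {"metadata": {"name": "web"}, "spec": {"replicas": 3}},
    "placement": {"clusters": [{"name": "us-east"}, {"name": "eu-west"}]},
    "overrides": [
        {"clusterName": "eu-west", "clusterOverrides": [{"path": "/spec/replicas", "value": 5}]},
    ],
}

if __name__ == "__main__":
    for cluster_name, obj in propagate(federated_deployment).items():
        print(cluster_name, obj["spec"]["replicas"])
    # us-east 3
    # eu-west 5
```

Deep-copying the template for each cluster keeps one cluster's overrides from leaking into another's, and an override that names a cluster outside the placement is simply ignored.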

3. Security will continue to be a high-profile focus

As the footprint of just about any system or platform increases, so does the target on its back. It’s like a nefarious version of supply and demand: The greater the supply of Kubernetes clusters running in production, the greater the “demand” will be among bad actors trying to find security holes.

“As the adoption of Kubernetes and deployment of container-based applications in production accelerate to much higher volumes than we’ve seen to date, we can expect more security incidents to occur,” says Rani Osnat, VP of strategy at Aqua Security. “Most of those will be caused by the knowledge gap around what constitutes secure configuration, and lack of proper security tooling.”

It’s not that Kubernetes has inherent security issues, per se. In fact, there’s a visible commitment to security in the community. It simply comes with some new considerations and strategies for managing risks. According to Osnat, bad actors are getting better at spotting vulnerabilities.

[Source]-https://enterprisersproject.com/article/2020/1/kubernetes-trends-watch-2020

Basic and advanced Kubernetes certification training, covering cloud computing, AWS, Docker, and more, is available in Mumbai. Advanced Containers Domain offers 25 hours of Kubernetes training.
