# Juniper Publishers Indexing Sites

Let’s Talk and Grow Together: A Bidirectional Communication between Granulosa- and Oocyte-Derived Factors in the Ovary

Abstract

Reproduction, one of the most active and appealing areas of research for endocrinologists and reproductive biologists for many years, still has several regulatory aspects that remain to be unmasked. Available information on the regulation of oocyte development and maturational competence is incomplete and needs elucidation in order to achieve the best possible egg quality, a major area of concern. The notion that the somatic follicular cells merely provide an appropriate microenvironment for the development of the oocyte throughout its journey has been replaced by the current perception of a complex yet regulated cross-talk between granulosa- and oocyte-derived factors that orchestrates follicle development. Interestingly, the actions of FSH and LH are mediated or modulated by these locally produced non-steroidal peptide factors from the follicular layer and the oocyte itself (insulin-like growth factors (IGFs), epidermal growth factor (EGF) family members, TGFβ superfamily members, etc.), forming an intimate regulatory network within the ovarian follicles. The present article provides a deeper insight into the need for these growth factors, and their underlying mechanisms of action, in the intraovarian network that sustains a healthy oocyte.

Read More About This Article: https://juniperpublishers.com/gjorm/GJORM.MS.ID.555569.php

Read More Juniper Publishers Google Scholar Articles: https://scholar.google.com/citations?view_op=view_citation&hl=en&user=xBu_HGEAAAAJ&authuser=1&citation_for_view=xBu_HGEAAAAJ:Zph67rFs4hoC

Seasonal Prediction of Marine Ecosystems: How Close Are We?- Juniper Publishers

Abstract

The primary purpose of this article is to provide definitive statements on current skill in seasonal prediction, with emphasis on marine ecosystem indicators, and on how forecasts could be used for societal benefit. To enhance skill and set priorities for the further development and application of existing dynamical models for seasonal prediction, one has to recognize that this process necessarily requires robust interaction between the biophysical science and applications communities, and a delicate balance between scientific feasibility and application requirements. Recommendations for improving seasonal prediction skill and for enhancing the use of seasonal prediction information in applications are also outlined.

Introduction

A marine ecosystem is a complex nonlinear dynamical system with significant spatial variability, strongly linked to the circulation of the atmosphere and oceans, and with temporal variability ranging from hours to decades. Marine ecosystem forecasters interact with society mainly because of its demand for accurate and detailed ocean environmental forecasts. The complexity of the ocean ecosystem means that quantitative predictions can only be made using comprehensive numerical models, which encode the relevant laws of dynamics, thermodynamics and biochemistry. Typically, such models comprise millions of scalar equations describing the interaction of physics and biochemistry on scales ranging from tens of kilometres to thousands of kilometres. These equations can only be solved on large computers.

Predictability: Theory and Models

The maximum predictability of a marine ecosystem has yet to be achieved in operational seasonal forecasting. This position is based on the recognition that model error continues to limit forecast quality and that interactions among the nonlinear terms of the ecosystem set the limits of predictability. That model error remains a problem is evident from the need for calibration and from the gains obtained by using empirical techniques to improve dynamical model forecasts. There is likely untapped predictability, since current models may not take into account important interactions among the physical and biological components of the ecosystem. The maximum achievable predictability is unknown, and assessing this limit requires much additional research.

Chaos theory developed from attempts to explain the limited predictability of atmospheric variations [1]. In the past, predictability was a somewhat theoretical and idealized topic, and to some extent it was not used in the practicalities of prediction. The predictability problem can be formulated, for example, through a Liouville equation [2]. In practice, however, estimates of predictability are obtained from multiple (ensemble) forecasts of comprehensive atmospheric and ocean prediction models [3-6]. Uncertainty, mainly in model formulation and initialization, is quantified by constructing ensemble members that differ from one another by small perturbations [7]. For instance, the predictability of weather is largely determined by uncertainty in a forecast's starting conditions [8,9], whereas the predictability of ecosystem variations is also influenced by uncertainty in the computational representation of the equations that govern the biogeochemical model (for example, how finely phytoplankton species should be represented, and hence how large the resulting model error will be). Furthermore, chaos theory shows that such environmental forecasts have to be expressed probabilistically [7]; the laws of physics dictate that accurate deterministic weather and ocean forecasts cannot be expected at relatively long lead times. These probability forecasts quantify uncertainty in weather and ecosystem prediction. The forecaster has to strive to estimate reliable probabilities, not to disseminate predictions with a precision that cannot be justified scientifically. Examples have shown that, in practice, the economic value of a reliable probability forecast (produced by an ensemble prediction system) exceeds that of a single deterministic forecast of uncertain accuracy [10,11].
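The ensemble approach described above can be illustrated with a toy chaotic system. The sketch below uses the classic Lorenz-63 equations (σ = 10, ρ = 28, β = 8/3) purely as a stand-in for a forecast model; it is not a marine ecosystem model. It perturbs one assumed analysis state with small Gaussian noise, integrates each member forward and reports the fraction of members in which a hypothetical event (x > 0) occurs. The member count, perturbation size, analysis state and event definition are all illustrative assumptions.

```python
import random


def lorenz63_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one fourth-order Runge-Kutta step."""

    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        # s + h * k, component by component
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def ensemble_probability(analysis, n_members=50, perturbation=1e-3, n_steps=500, seed=0):
    """Fraction of ensemble members in which the hypothetical event x > 0 occurs
    after n_steps, each member starting from the analysis plus Gaussian noise."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_members):
        state = tuple(v + rng.gauss(0.0, perturbation) for v in analysis)
        for _ in range(n_steps):
            state = lorenz63_step(state)
        if state[0] > 0:
            hits += 1
    return hits / n_members


if __name__ == "__main__":
    analysis = (1.0, 1.0, 1.0)  # assumed "best estimate" of the initial state
    for steps in (100, 500, 1000):
        p = ensemble_probability(analysis, n_steps=steps)
        print(f"lead time {steps * 0.01:.0f} time units: P(x > 0) = {p:.2f}")
```

At short lead times the members typically stay close together and the probability sits near 0 or 1. As the lead time grows, the small perturbations amplify and the ensemble spreads, which is the loss of deterministic predictability the paragraph describes.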

Scientific evidence clearly indicates that predictions should be provided only as probabilities, using either ensembles of dynamical models or appropriate alternatives such as empirical models. Metrics for probabilistic estimates, and their interpretation, are more complex than deterministic ones. Given the steadily expanding demand for and use of model estimates, a framework covering all aspects of seasonal ecosystem forecasting is clearly necessary. Hindcasts are the standard method for enlarging the sample on which forecast quality is assessed. However, hindcasts raise several challenges that must be tackled, such as insufficient initialization data, the non-stationary nature of observing systems and the non-stationary nature of the marine ecosystem.
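One common metric for probability forecasts of a yes/no event is the Brier score, the mean squared difference between the forecast probability and the 0/1 outcome, together with its skill score relative to climatology. The sketch below computes both over a set of hindcasts. It assumes the climatological reference is the base rate of the same hindcast sample, which is one convention among several, and the hindcast values are hypothetical.

```python
def brier_score(probabilities, outcomes):
    """Mean squared difference between forecast probabilities and binary outcomes (0/1)."""
    if len(probabilities) != len(outcomes) or not outcomes:
        raise ValueError("need equal-length, non-empty sequences")
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / len(outcomes)


def brier_skill_score(probabilities, outcomes):
    """Skill relative to a climatological forecast that always issues the sample base rate.
    1 = perfect, 0 = no better than climatology, negative = worse than climatology."""
    base_rate = sum(outcomes) / len(outcomes)
    reference = brier_score([base_rate] * len(outcomes), outcomes)
    if reference == 0:
        raise ValueError("event always or never occurred; skill is undefined")
    return 1.0 - brier_score(probabilities, outcomes) / reference


# Hypothetical hindcasts: forecast probability of an above-normal bloom, and whether it occurred.
probs = [0.8, 0.7, 0.2, 0.1, 0.6, 0.3]
occurred = [1, 1, 0, 0, 1, 0]
print("Brier score:", round(brier_score(probs, occurred), 4))
print("Brier skill score:", round(brier_skill_score(probs, occurred), 4))
```

With only a handful of hindcasts the skill estimate is itself uncertain, which is one reason the size and quality of the hindcast sample matter so much.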

Producing reliable probability forecasts from ensembles of atmospheric/biophysical model integrations puts enormous demands on computer resources. Computer power is essential to resolve the details of such a system [12]. It has been argued that, because of the nonlinear nature of the system, systematic misrepresentation of small-scale phenomena may lead to systematic misrepresentation of large-scale phenomena. Small-scale phenomena are also worth studying in their own right. From an ecosystem prediction perspective, the ability to simulate such details is essential if models are to forecast significant events such as spring blooms or fish stock changes. This poses a significant dilemma given current computing resources. Simulating extreme events requires models of considerable complexity and resolution; on the other hand, reliably estimating changes in the probability distributions of extreme and relatively rare events requires a large number of ensemble members. One consequence is unavoidable: the greater the need for reliable forecasts of uncertainty in weather and ecosystem predictions, the further the demand for computer power exceeds availability. Indeed, the need for quantitative predictions of uncertainty is a pertinent consideration in the design of future generations of supercomputers, and ensemble prediction is an ideal application for parallel computing.
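Rare events drive up ensemble size, and a simple calculation shows by how much. Consider only the sampling error of estimating an event probability p from N members. If the members are treated as independent draws from the forecast distribution (an assumption that ignores model error), the standard error is sqrt(p(1 − p)/N). Keeping the relative error below r then requires N ≥ (1 − p)/(p r²). The function name and the 20% error target below are illustrative choices.

```python
import math


def members_needed(p, relative_error):
    """Smallest ensemble size N with sqrt(p * (1 - p) / N) / p <= relative_error,
    assuming independent members and counting sampling error only."""
    if not 0 < p < 1 or relative_error <= 0:
        raise ValueError("p must lie in (0, 1) and relative_error must be positive")
    n = (1 - p) / (p * relative_error ** 2)
    # Small tolerance so floating-point round-off does not push an exact
    # integer result (e.g. 24.999999999999996) up to the next whole number.
    return math.ceil(n - 1e-9)


for p in (0.5, 0.1, 0.01):
    print(f"event probability {p}: {members_needed(p, 0.2)} members for 20% relative error")
```

With a 20% relative-error target, this gives 25 members for p = 0.5, 225 for p = 0.1 and 2,475 for p = 0.01. The required ensemble size grows roughly as 1/p, before any increase in model resolution is considered.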

Model errors, particularly in regions and periods with strong interannual variability of the ocean circulation and vertical mixing (such as the winter-spring period), continue to hamper seasonal prediction skill. The benefit of reducing model error cannot be overstated. There is a range of strategies for improving models, including better representation of the interactions among the elements of the ecosystem (tuning and customization to reduce model bias), better description of biogeochemical cycles and substantially increased spatial resolution. All of these strategies ought to be vigorously pursued. Apart from model error, the production of seasonal forecasts and the assessment of their quality remain hindered by a wide range of factors, including:

I. Scarcity: Unlike daily forecasts, seasonal predictions are issued only a few times per year, which makes it difficult to accumulate the information required for stable estimates of forecast quality (a difficulty compounded by the expense of producing the predictions when ensembles are used),

II. Changes in variability: Predictability of ecosystem variables varies between years and across the Mediterranean, Atlantic, Baltic and Black Sea, with strong evidence that these variations are also related to atmospheric and hydrodynamic variability,

III. Seasonal quality: Most metrics of seasonal forecast quality are technical and are presented in a form not easily communicated to audiences outside the seasonal forecasting community.
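The scarcity problem in item I can be quantified. The sketch below computes an approximate 95% confidence interval for a correlation skill score estimated from n hindcast years, using the Fisher z-transform. It assumes independent years and roughly bivariate-normal forecast/observation pairs, and the skill value r = 0.5 is hypothetical.

```python
import math


def correlation_ci(r, n_years, z_crit=1.96):
    """Approximate 95% confidence interval for a correlation skill score
    computed from n_years hindcasts, via the Fisher z-transform."""
    if n_years <= 3 or not -1 < r < 1:
        raise ValueError("need n_years > 3 and -1 < r < 1")
    z = math.atanh(r)
    half_width = z_crit / math.sqrt(n_years - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


for n in (10, 20, 40):
    low, high = correlation_ci(0.5, n)
    print(f"{n} hindcast years: r = 0.5, 95% CI [{low:.2f}, {high:.2f}]")
```

For an assumed correlation skill of 0.5, the interval from ten hindcast years still includes zero, and it narrows only slowly as more years are added.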

Experiments have produced several broad qualitative and quantitative results suggesting that seasonal predictions are more skilful in certain regions. Furthermore, climatology, or persistence of recent seasonal anomalies, can in many cases provide useful information. The quality of seasonal predictions varies from year to year, partly in connection with the interannual variability of the specific marine ecosystem dynamics; average quality also differs between seasons. Among the seasonal variables of primary interest, predictions of hydrodynamic variables (temperature, salinity, etc.) are usually of better quality than those of biogeochemical variables such as chlorophyll, nutrients and primary production.
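Since climatology and persistence are useful baselines, a model forecast is often judged by whether it beats them. The sketch below computes a mean-squared-error skill score against each reference. It assumes the series are anomalies relative to climatology, so the climatological forecast is simply zero, and persistence carries the previous season's anomaly forward. All values are hypothetical.

```python
def mse(forecast, observed):
    """Mean squared error between paired forecasts and observations."""
    if len(forecast) != len(observed) or not observed:
        raise ValueError("need equal-length, non-empty sequences")
    return sum((f - o) ** 2 for f, o in zip(forecast, observed)) / len(observed)


def mse_skill_score(forecast, reference, observed):
    """1 = perfect, 0 = no better than the reference, negative = worse than the reference."""
    return 1.0 - mse(forecast, observed) / mse(reference, observed)


# Hypothetical seasonal anomalies and model forecasts for seasons 1..5.
observed = [0.4, 0.1, -0.3, -0.5, 0.2, 0.6]
model = [0.0, -0.2, -0.4, 0.3, 0.5]     # forecasts verifying against observed[1:]
target = observed[1:]
climatology = [0.0] * len(target)       # anomaly forecast of zero
persistence = observed[:-1]             # previous season's anomaly carried forward

print("skill vs climatology:", round(mse_skill_score(model, climatology, target), 3))
print("skill vs persistence:", round(mse_skill_score(model, persistence, target), 3))
```

Reporting skill against both baselines shows whether a dynamical forecast adds information beyond what climatology or persistence already provide.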

Uncertainty and Recommendations - Decision-Making Issues, Societal Benefits

An overall finding, with exceptions, is that initial conditions provided by data assimilation and used to initialize seasonal forecasts improve the model forecast, but information on spatial patterns is lost quite quickly. It has been demonstrated that in some regions and during some seasons seasonal predictions have skill, but their translation into useful information for end-users is far from optimal. A concerted effort is essential to engage users and obtain their quantitative definition of value, so that the forecasts can be used in decision-making. Since a direct connection between seasonal forecast quality and value has not been established, value needs to be measured in specific decision-making instances, independently of the assessment of quality. It is worth noting that a better understanding of climate variability (e.g., the likelihood of different scenarios, irrespective of any forecast) could aid applications, planning and management. Applying forecasts requires trust in their overall quality and knowledge of forecast uncertainty.
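One widely used way to measure value separately from quality is the static cost-loss model. In this model a user can pay a cost C to protect against an event that would otherwise cause a loss L. The sketch below computes the relative economic value V of a yes/no forecast from its hit rate H, its false-alarm rate F, the event base rate and the user's cost-loss ratio C/L. V is 1 for a perfect forecast and 0 for acting on climatology alone. This is a standard verification technique offered as an illustration, not a method taken from this article, and the numbers are hypothetical.

```python
def relative_value(hit_rate, false_alarm_rate, base_rate, cost_loss_ratio):
    """Relative economic value V of a yes/no forecast in the static cost-loss model.
    V = 1 for a perfect forecast, 0 for climatology, negative if worse than climatology."""
    a, o, h, f = cost_loss_ratio, base_rate, hit_rate, false_alarm_rate
    if not (0 < a < 1 and 0 < o < 1 and 0 <= h <= 1 and 0 <= f <= 1):
        raise ValueError("need 0 < C/L < 1, 0 < base rate < 1, rates in [0, 1]")
    # Expected expense per occasion, in units of the loss L.
    e_climate = min(a, o)                          # always protect, or never protect
    e_perfect = o * a                              # protect only when the event occurs
    e_forecast = f * (1 - o) * a + h * o * a + (1 - h) * o
    return (e_climate - e_forecast) / (e_climate - e_perfect)


# Same hypothetical forecast (H = 0.7, F = 0.2, base rate 0.3) for users with different C/L.
for ratio in (0.05, 0.2, 0.6):
    print(f"C/L = {ratio}: V = {relative_value(0.7, 0.2, 0.3, ratio):.2f}")
```

Here the same forecast has positive value for one user, no value for another and negative value for a third. This illustrates why quality alone does not determine value.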

Forecast initialization is an area that requires active research. Ocean data assimilation has improved forecast quality; coupled data assimilation, however, is a field of active research that needs enhanced support and possibly international coordination. There is significant evidence that coupled atmosphere-ocean-biochemical data assimilation should improve forecast quality. Multi-model methodologies [8,13,14] are a useful and practical approach for quantifying forecast uncertainty arising from model formulation. Still, there are open questions associated with the multi-model approach. For example, the approach is ad hoc, meaning that the choice of models is not optimized, and the community has not converged on a best strategy for combining the models. Multi-model calibration activities tend to yield positive results, but considerable additional work and research are required. The multi-model approach should not be used as a substitute for improving models.
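As the paragraph notes, there is no agreed best way to combine models. The simplest option is to average each model's event probability, optionally with weights. The sketch below shows equal-weight pooling and a weighted variant. The weights, member values and threshold are hypothetical; in practice, any weights would have to be estimated from hindcast skill, and that is exactly where the open questions lie.

```python
def probability_from_members(members, threshold):
    """Fraction of ensemble members exceeding a threshold."""
    if not members:
        raise ValueError("need at least one member")
    return sum(m > threshold for m in members) / len(members)


def combine_probabilities(model_probs, weights=None):
    """Weighted average of per-model event probabilities.
    With weights=None every model counts equally."""
    if weights is None:
        weights = [1.0] * len(model_probs)
    if len(weights) != len(model_probs) or any(w < 0 for w in weights) or sum(weights) <= 0:
        raise ValueError("need one non-negative weight per model, with a positive sum")
    return sum(w * p for w, p in zip(weights, model_probs)) / sum(weights)


# Hypothetical SST-anomaly ensembles from three models; event = anomaly above 0.5.
model_probs = [
    probability_from_members([0.2, 0.6, 0.9, 0.4], 0.5),
    probability_from_members([0.7, 0.8, 0.1, 0.6], 0.5),
    probability_from_members([0.0, 0.3, 0.2, 0.6], 0.5),
]
print("equal weights:", combine_probabilities(model_probs))
print("weights 1:2:1:", combine_probabilities(model_probs, [1, 2, 1]))
```

When every model has the same number of members, equal weighting is identical to pooling all members into one large ensemble.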

Validation has to be undertaken routinely on seasonal dynamical application models. These models ought to be complex enough to capture non-linear interactions, while being simple enough to avoid over-tuning through unconstrained parameters. The relationship between forecast quality in the driving meteorological and biophysical models and forecast quality in the application models linked to them, such as fish models, is often highly non-linear. Consequently, quality in the prediction of seasonal chlorophyll may not translate into quality in the prediction of mean fish stock, for example. Application models such as fish models should therefore have additional metrics of forecast quality, and these metrics should be suited to a selected user group.

Many applications need information at local scales in space and time. Further effort is needed to provide such information, e.g., through statistical and/or dynamical downscaling. Although there are numerous examples of seasonal forecast applications (e.g., atmosphere, ocean, water management), there is potential to do considerably more. More progress has to be made in bringing seasonal prediction providers and users together. More effort is required in the development, production and understanding of probabilistic forecasts, together with a better understanding of exactly what is predictable and what is not. Predicting extremes can also be of great value.
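One of the simplest forms of statistical downscaling is a regression from a coarse model grid-cell value to a local observation, fitted on past hindcast/observation pairs. The sketch below fits an ordinary least-squares line and applies it to a new coarse forecast. The variable names and the temperature values are hypothetical, and a real application would also need to check that the relationship holds outside the fitting period.

```python
from statistics import fmean


def fit_downscaling(coarse_hindcast, local_observed):
    """Ordinary least-squares fit of local = intercept + slope * coarse."""
    if len(coarse_hindcast) != len(local_observed) or len(coarse_hindcast) < 2:
        raise ValueError("need at least two paired values")
    x_mean, y_mean = fmean(coarse_hindcast), fmean(local_observed)
    sxx = sum((x - x_mean) ** 2 for x in coarse_hindcast)
    if sxx == 0:
        raise ValueError("coarse values must not all be equal")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(coarse_hindcast, local_observed))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def downscale(coarse_forecast, slope, intercept):
    """Map a coarse-grid forecast value to the local site."""
    return intercept + slope * coarse_forecast


# Hypothetical grid-cell SST hindcasts (deg C) and matching coastal-station observations.
slope, intercept = fit_downscaling([14.0, 15.0, 16.0, 17.0], [13.0, 14.5, 16.0, 17.5])
print(f"local = {intercept:.2f} + {slope:.2f} * coarse")
print("local forecast for a coarse value of 18.0:", downscale(18.0, slope, intercept))
```

A regression like this adds no new physics. It only maps large-scale values to a site, so the local forecast inherits the large-scale forecast's uncertainty plus the error of the fitted relationship.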

The societal benefit is not fully realized, partly because there has been inadequate interaction among physical scientists involved in seasonal prediction research and production, applications scientists, decision makers and operational seasonal prediction providers. The issues go beyond merely improving forecast quality and making forecasts readily available. Physical scientists should actively seek to understand users' requirements, in order to provide enhanced climate information, prediction products and services that lead to better applications. Users also have to maintain an active dialogue with physical scientists and forecast providers so that their climate information needs are taken into account. Decision makers should take seasonal forecast information into account; however, we should be aware that our products are not the only factor they consider in the decision process. Successfully communicating the uncertainty and limitations of seasonal forecasts is essential to making them useful. Many users find it challenging to make explicit use of seasonal forecasts, particularly when the forecasts do not match the needs of decision makers. On several occasions, our best forecast may simply be climatology, which can still be useful information. Access to hindcast data is also essential to help users assess model performance and the potential benefit of the forecasts. These data often contain quantitative information that users will try to convert into terms that more closely meet their needs. Similar issues can arise regarding the spatial scale of forecast information, where managers or decision makers consider the resolution of regional ecosystem models too coarse.
Seriously misleading situations can arise when users take information appropriate for large scales and apply it to local scales without considering the additional uncertainty involved. Application models can be used with seasonal forecasts to provide a metric that combines quality and value. For example, the quality of a seasonal forecast of fish stocks is both a proper measure of skill and a measure of potential value. Seasonal predictability research should be encouraged. Collaboration and interaction with the climate change community need to be supported and have the potential for significant benefits. Indeed, seasonal prediction should be addressed in the context of a changing climate.

For more about Juniper Publishers please click on: https://twitter.com/Juniper_publish

For more about Oceanography & Fisheries please click on: https://juniperpublishers.com/ofoaj/index.php

The Effect of Ramadan Fasting on Oral Anticoagulant: Experience of Tertiary Academic Hospital- Juniper Publishers

Authored by Fakhr AlAyoubi

Abstract

Introduction: Direct oral anticoagulants (DOACs) have been approved for stroke prevention in non-valvular atrial fibrillation (AF). The outcomes achieved in clinical trials depend on the pharmacokinetics and pharmacodynamics of these drugs, which are taken orally in regular doses once or twice daily. In routine practice, we still have to prescribe twice-daily NOACs during the month of Ramadan, as do clinicians caring for Muslim patients throughout the world during this holy month.

Objective: To evaluate the effectiveness and safety of NOACs, taken either once or twice daily during the month of Ramadan, for stroke prevention in non-valvular atrial fibrillation.

Methods: An observational study at a tertiary university hospital in Saudi Arabia of 114 patients with non-valvular atrial fibrillation on NOACs during the month of Ramadan 1440 H/2019. Laboratory follow-up was performed before Ramadan and one month after it for each patient who fasted at least 15 days during the holy month.

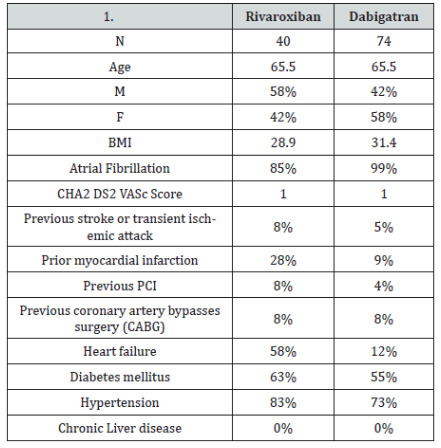

Results: Of the 114 patients, 40 received rivaroxaban and 74 received dabigatran. Baseline characteristics were similar except for a history of myocardial infarction (28% vs 9%) and heart failure (58% vs 12%), both higher in the rivaroxaban arm than in the dabigatran arm. Regarding concomitant medications, aspirin was used by 33% of the rivaroxaban arm and 11% of the dabigatran arm; NSAIDs were used only in the dabigatran arm (8%). There was no significant difference in stroke or bleeding events between the two arms.

Conclusion: The data show that direct oral anticoagulants are effective and safe for use during Ramadan in non-valvular atrial fibrillation, regardless of dosing frequency. Further studies are needed to support these findings in non-valvular atrial fibrillation and other indications.

Keywords: Anticoagulant; Rivaroxaban; Dabigatran; Direct oral anticoagulant (DOAC); Ramadan; Fasting; Bleeding; Efficacy; Stroke prevention; Safety of direct oral anti-coagulant; non-valvular atrial fibrillation

Abbreviations: DOAC: Direct Oral Anticoagulant; AF: Atrial Fibrillation; NOAC: Non-Vitamin K Antagonist Oral Anticoagulant; TIA: Transient Ischemic Attack

Introduction

Around the world, Muslims fast every year during Ramadan, the ninth month of the Islamic lunar calendar. From dawn until sunset, fasting excludes food, beverages, smoking and all oral drugs, with some restrictions on intravenous drugs. Ramadan migrates through the seasons because the Islamic lunar year is 10 to 11 days shorter than the solar year. Most Muslims change their lifestyle during Ramadan, including dietary and sleep patterns [1,2], which can alter the hemodynamic profile of those who fast. Ramadan fasting is associated with significant metabolic, hormonal and inflammatory changes [3]. Dehydration has been confirmed in people fasting during daylight hours, particularly in summertime [4]. Although Ramadan fasting has a positive impact on fasting blood glucose and serum lipid profile [5,6], the effectiveness of oral drugs may change during Ramadan, especially for renally excreted drugs [7-9]: dehydration may decrease renal function, which increases the toxicity of these drugs.
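The 10-to-11-day shift can be checked with mean astronomical values. The sketch below assumes a mean synodic (lunar) month of 29.530589 days and a mean tropical (solar) year of 365.2422 days. The actual start of each Islamic month depends on crescent sighting or calendar conventions, so real dates can differ by a day or so.

```python
SYNODIC_MONTH_DAYS = 29.530589   # mean lunar month
TROPICAL_YEAR_DAYS = 365.2422    # mean solar (tropical) year

lunar_year = 12 * SYNODIC_MONTH_DAYS                      # about 354.37 days
drift_per_year = TROPICAL_YEAR_DAYS - lunar_year          # days earlier each solar year
full_cycle_years = TROPICAL_YEAR_DAYS / drift_per_year    # solar years to pass through all seasons

print(f"lunar year: {lunar_year:.2f} days")
print(f"Ramadan starts about {drift_per_year:.1f} days earlier each solar year")
print(f"full pass through the seasons: about {full_cycle_years:.1f} years")
```

The shift accumulates to a full pass through the seasons in roughly 33 to 34 years. Studies run in different years can therefore face very different fasting durations and temperatures.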

Methods

We conducted an observational cohort study at King Saud University Medical City (KSUMC), Riyadh, Saudi Arabia. The study included all patients with non-valvular atrial fibrillation on a direct oral anticoagulant during Ramadan 1440 H (Hijri calendar).

a) Inclusion criteria: Patients with non-valvular atrial fibrillation documented by ECG, a CHA2DS2-VASc score of 1 or more, and treatment with rivaroxaban or dabigatran started on or before 1 Shaban 1440 H, one month before Ramadan. Each patient included in the study had to fast at least 15 days during the holy month of Ramadan.

b) Exclusion criteria: Active liver disease; pregnancy; transient ischemic attack (TIA), stroke or major bleeding within 14 days of recruitment; non-valvular AF treated with warfarin.

To study the effectiveness of direct oral anticoagulants in patients fasting during Ramadan, we calculated the incidence of clinical outcomes before the start of fasting and 1 month after fasting.

Data were analyzed using SAS version 9.2 (SAS Institute, Inc., Cary, NC) for Windows®, with a p-value < 0.05 considered statistically significant. Descriptive statistics are reported as means ± standard deviation, as medians, or as frequencies and percentages, as appropriate. Chi-squared tests were used to determine associations between qualitative variables.
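The Methods state only that chi-squared tests were used. As a minimal sketch, assume the common case of a 2×2 table (for example, treatment arm by presence of an outcome) and Pearson's test without continuity correction. The statistic and its 1-degree-of-freedom p-value can then be computed with the Python standard library alone. The article does not say whether the authors used this exact variant, and the counts in the usage line are hypothetical rather than study data. With small expected counts (a common rule of thumb is below 5), Fisher's exact test is usually preferred.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared test (no continuity correction) for the 2x2 table
        [[a, b],
         [c, d]]
    Returns (statistic, p_value) with 1 degree of freedom."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("a row or column total is zero")
    observed = (a, b, c, d)
    expected = (row1 * col1 / n, row1 * col2 / n, row2 * col1 / n, row2 * col2 / n)
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # For 1 degree of freedom, P(chi2 > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows = two treatment arms, columns = event / no event.
stat, p = chi_squared_2x2(15, 35, 10, 40)
print(f"chi-squared = {stat:.3f}, p = {p:.3f}")
```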

Discussion

During Ramadan fasting through the daylight hours, practicing Muslims inevitably become dehydrated at a rate determined by the loss of body water minus the amount of metabolic water produced over this period. When this loss is estimated from changes in body mass over a long period, other changes in body composition may bias the calculation. Many studies have shown that daytime urine osmolality tends to increase progressively throughout the month of intermittent fasting, and is even higher before breaking the fast, especially when fasting exceeds 12 hours, as is the case in summertime [10]. As recommended by the International Diabetes Federation and the Diabetes and Ramadan International Alliance, patients on chronic dialysis and patients with chronic kidney disease (CKD) stages 3-5 are considered very high risk and high risk, respectively, and are exempted from fasting [11,12]. Nevertheless, fasting for one month during Ramadan (June–July 2015, Rize, Turkey) for approximately 17.5 hours each day was not associated with worsening renal function in patients with stage 3–5 CKD. These findings were similar to the results of other observational studies in patients with CKD and in renal transplant recipients. However, elderly patients may still be at higher risk [13].

A study showed a significant association between Ramadan fasting and stroke, with fasting acting as an independent risk factor for stroke, specifically ischemic stroke [14]. Atrial fibrillation is a type of irregular heartbeat, which means that the heart may not pump as well as it should. As a result, blood clots are more likely to form in the heart; if a clot blocks one of the arteries leading to the brain, it can cause a stroke or transient ischemic attack (TIA). Direct oral anticoagulants are one way to reduce the risk of stroke by decreasing the risk of blood clots forming [15], and they have been approved for stroke prevention in non-valvular atrial fibrillation [16]. In all patients with AF, the CHA2DS2-VASc score should be systematically assessed to identify the risk of stroke and to decide whether to initiate oral anticoagulation therapy. Technology tools may assist in this undertaking. Oral anticoagulation therapy is recommended in all male patients with a CHA2DS2-VASc score of 2 or more and in all female patients with a score of 3 or more. Oral anticoagulation therapy should be considered in male patients with a CHA2DS2-VASc score of 1 and in female patients with a score of 2, taking into account individual characteristics and patient preferences. When initiating anticoagulation, non-vitamin K antagonist oral anticoagulants (NOACs, also called DOACs: dabigatran, rivaroxaban, apixaban and edoxaban) are recommended over warfarin in DOAC-eligible patients with AF, except those with moderate-to-severe mitral stenosis or a mechanical heart valve [17].
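The paragraph notes that technology tools may assist with CHA2DS2-VASc assessment. The sketch below is for illustration only and is not a validated clinical tool. It scores the standard components: congestive heart failure 1, hypertension 1, age ≥75 years 2, diabetes 1, prior stroke/TIA/thromboembolism 2, vascular disease 1, age 65-74 years 1 and female sex 1. It then maps the total onto the recommend/consider thresholds quoted above. The `Patient` fields and the returned labels are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age: int
    female: bool
    heart_failure: bool = False
    hypertension: bool = False
    diabetes: bool = False
    prior_stroke_tia_or_thromboembolism: bool = False
    vascular_disease: bool = False  # prior MI, peripheral artery disease or aortic plaque


def cha2ds2_vasc(p: Patient) -> int:
    """Sum of the CHA2DS2-VASc components (0 to 9)."""
    score = 0
    score += 1 if p.heart_failure else 0
    score += 1 if p.hypertension else 0
    score += 2 if p.age >= 75 else (1 if p.age >= 65 else 0)
    score += 1 if p.diabetes else 0
    score += 2 if p.prior_stroke_tia_or_thromboembolism else 0
    score += 1 if p.vascular_disease else 0
    score += 1 if p.female else 0
    return score


def anticoagulation_guidance(p: Patient) -> str:
    """Map the score onto the thresholds in the text: recommended at >= 2 (men)
    or >= 3 (women); to be considered at 1 (men) or 2 (women)."""
    score = cha2ds2_vasc(p)
    recommend_at = 3 if p.female else 2
    if score >= recommend_at:
        return "recommended"
    if score == recommend_at - 1:
        return "consider"
    return "not indicated by score alone"


print(anticoagulation_guidance(Patient(age=70, female=True, hypertension=True)))
```

The sex-specific thresholds shift by one point because female sex itself contributes one point to the score.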

Patients treated with warfarin during Ramadan are at risk of developing a supratherapeutic INR [17-19]. The outcomes achieved in clinical trials of direct oral anticoagulants depend on the pharmacokinetics and pharmacodynamics of these drugs, which are taken orally in regular doses once or twice daily. Across many diseases, studies have shown that taking medication at specific times of day may make therapy more effective [20-23]. At the time of this study, Ramadan in Riyadh fell in summer. Adult Muslims who observe the fast refrain from taking any food, beverages or oral drugs between dawn and sunset for up to 15 to 19 hours a day. Drug doses can therefore be taken only between sunset and dawn, and the time available for dosing is shorter than outside Ramadan. It is not surprising that potentially invasive studies have been difficult to conduct, leading to a relative paucity of direct evidence on the physiological effects of Ramadan fasting. However, over the past few years, studies have examined glucose metabolism, lipid profiles, circadian rhythms, sleep, aspects of hormone physiology and hypertension during Ramadan, along with a small number of studies of stroke in fasting patients.
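The timing constraint described above is simple arithmetic. Assume, for illustration, that a twice-daily drug is taken at iftar (sunset) and at suhoor (just before dawn). The two dose-to-dose gaps are then the non-fasting window and the fasting period, instead of the usual 12 h and 12 h. The function name and the optional suhoor margin are hypothetical, and the sketch says nothing about pharmacokinetics.

```python
def ramadan_bid_intervals(fast_hours, suhoor_margin_hours=0.0):
    """Dose-to-dose gaps (hours) for a twice-daily drug taken at iftar (sunset)
    and at suhoor, suhoor_margin_hours before dawn."""
    if not 0 < fast_hours < 24:
        raise ValueError("fast_hours must be between 0 and 24")
    night = 24 - fast_hours
    if not 0 <= suhoor_margin_hours < night:
        raise ValueError("suhoor margin must fit inside the night")
    iftar_to_suhoor = night - suhoor_margin_hours
    suhoor_to_iftar = fast_hours + suhoor_margin_hours
    return iftar_to_suhoor, suhoor_to_iftar


for hours in (12, 15, 19):
    short_gap, long_gap = ramadan_bid_intervals(hours)
    print(f"{hours} h fast: doses {short_gap:.1f} h and {long_gap:.1f} h apart (vs 12 h and 12 h)")
```

For the 15 to 19 hour fasts mentioned in the text, the gaps range from 9 h and 15 h to 5 h and 19 h.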

The objective of this study is to evaluate the effectiveness and safety of direct oral anticoagulants used by Muslim patients fasting during Ramadan for stroke prevention in non-valvular atrial fibrillation at King Saud University Medical City (KSUMC), Riyadh, Saudi Arabia. A retrospective observational study, supplemented with physician and patient questionnaires and with data captured before, during and after Ramadan, found that 64% of diabetic patients reported fasting every day of Ramadan and 94.2% fasted for at least 15 days [24]. Dehydration may be a significant risk factor for ischemic stroke, a physically and economically costly outcome. This reinforces the need to better understand how dehydration increases the risk of ischemic stroke, especially in patients at higher risk because of comorbid conditions such as atrial fibrillation [25]. A study conducted in Turkey concluded that although Ramadan fasting had an adverse effect on diabetic patients with ischemic stroke, it had no negative effect on stroke frequency, and the ratio of hypertensive hemorrhagic stroke was lower [26]. A small study conducted in the State of Qatar in the Arabian Gulf found no significant difference in the number of hospitalizations for stroke during Ramadan fasting compared with non-fasting months [27].

Result

Of the 234 patients visiting the KSUMC anticoagulation clinics and receiving dabigatran or rivaroxaban during the three months before Ramadan 1440 H/2019, only 114 were eligible for our study; 120 patients were excluded for various reasons (Figure 1). There was no clinically significant difference in laboratory values before and after fasting in patients who received dabigatran or rivaroxaban (Tables 1-4).

*Significant at level ≤ 0.05.

Conclusion

The data show no clinically significant difference in patients receiving DOACs before and after Ramadan fasting, indicating that direct oral anticoagulants (DOACs) are effective and safe for use during Ramadan in non-valvular atrial fibrillation, regardless of dosing frequency. Further studies are needed to support these findings in non-valvular atrial fibrillation and other indications [28,29].

To know more about Juniper Publishers please click on: https://juniperpublishers.com/aboutus.php

For more articles in Open Access Journal of Reviews & Research please click on: https://juniperpublishers.com/arr/index.php

To know more about Open Access Journals please click on: https://juniperpublishers.com/journals.php

PPARα as Potential Therapeutic Target for Neurodegenerative Diseases-Juniper Publishers

JUNIPER PUBLISHERS-OPEN ACCESS JOURNAL OF DRUG DESIGNING & DEVELOPMENT

Abstract

Peroxisome proliferator-activated receptor α (PPARα) is a ligand-activated transcription factor belonging to the nuclear receptor family. It plays a key role in lipid metabolism and glucose homeostasis and is important in the prevention and treatment of metabolic diseases. PPARα also has protective effects against brain cell death, attributed to its anti-inflammatory and antioxidant properties. In the present work, we discuss the involvement of PPARα in neurodegenerative pathologies and its potential as a therapeutic target for these diseases.

Keywords: Alzheimer's disease; Parkinson's disease; Neuroprotection

Abbreviations: PPARs: Peroxisome Proliferator-Activated Receptors; RXR: Retinoid X Receptor; SARs: Structure-Activity Relationships; PEA: Palmitoylethanolamide; LPS: Lipopolysaccharide.

Mini Review

Peroxisome proliferator-activated receptors (PPARs) are members of the nuclear receptor superfamily and are ligand-activated transcription factors. They are involved in the regulation of metabolic pathologies such as cardiovascular disease, obesity, lipid disorders, hypertension and diabetes [1]. PPARs exist as three subtypes, commonly designated PPARα, PPARγ and PPARβ/δ. Once within the nucleus, all PPAR isoforms heterodimerize with the retinoid X receptor (RXR) and bind to specific DNA response elements in the promoters of target genes. When a ligand binds to a PPAR, a conformational change in the receptor causes the removal of co-repressors and the recruitment of co-activators; this leads to chromatin remodeling, which allows the initiation of DNA transcription [2].

PPARα, PPARγ and PPARβ/δ are expressed in different tissues and have distinct ligands, co-activators and co-repressors. PPARα is expressed mainly in tissues involved in lipid oxidation, such as kidney, liver, and skeletal and cardiac muscle, and plays an important role in fatty acid oxidation and lipoprotein metabolism. PPARγ is expressed predominantly in adipose tissue and vascular smooth muscle. PPARβ/δ is expressed broadly, particularly in tissues associated with fatty acid metabolism, but also in the small intestine, liver, colon and keratinocytes [3]. Many studies have shown that PPARs are also expressed in the brain, in particular in neurons and glia [4]; for this reason, PPAR agonists have been suggested as potential neuroprotective agents in neurodegenerative disorders. Neurodegenerative diseases are incurable pathologies with progressive degeneration of neurons associated with motor and cognitive impairment. These conditions are characterized by oxidative stress, mitochondrial and transcriptional dysregulation and apoptosis [5]. Because oxidative stress and neuroinflammation are involved in cell death, these dysfunctions are key factors in the development of the most common neurodegenerative disorders, such as Parkinson's disease, Alzheimer's disease and amyotrophic lateral sclerosis.

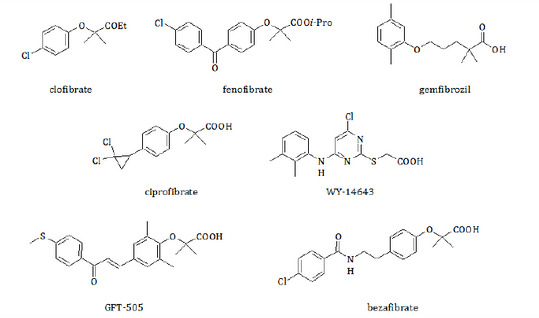

Therefore, novel therapeutic approaches are needed to reduce symptoms and slow the progression of these diseases. In this context, PPARα is emerging as a promising pharmacological target for the treatment of neurodegenerative diseases. Fibrates are PPARα agonists that have been widely studied, especially for the treatment of hyperlipidemias (Figure 1). Some of these agonists, such as gemfibrozil, ciprofibrate, WY-14643 and fenofibrate, selectively activate only PPARα, whereas others lack isoform selectivity. For example, GFT505 is a dual PPARα/δ agonist, and bezafibrate, which activates all three isoforms, is a pan-agonist [6].

In recent years, the development of new fibrates that activate PPARs has been an important objective, both to better understand structure-activity relationships (SARs) and to obtain new drugs with a better pharmacological profile [7,8]. In this context, the neuroprotective effects of PPARα agonists have been studied; some researchers attributed these effects largely to the antioxidant and anti-inflammatory properties of PPARα, but also to its positive effects on lipid metabolism and glucose homeostasis [9].

The anti-inflammatory properties of PPARα have been demonstrated especially in astrocytes and microglia [10,11]. Several authors showed that PPARα agonists such as ciprofibrate, fenofibrate, gemfibrozil and WY-14643 reduce NO production, especially in mouse microglia stimulated with lipopolysaccharide (LPS). Furthermore, treatment with palmitoylethanolamide (PEA) has been shown to reduce oxidative stress in astrocytes through PPARα [12]. PPARα is also expressed in the brain, where its anti-inflammatory role was evidenced by a reduction of LPS-induced TNFα, IL-1β, IL-6 and COX-2 [13].

The anti-inflammatory effect mediated by PPARα has also been identified in reactive astrocytes, where PPARα attenuates inflammation by decreasing NO and pro-inflammatory cytokines. In addition, PPARα plays an important role in other glial cells, such as microglia and ependymal cells, in response to injury [14]. PPARα also has an antioxidant effect, reducing cerebral oxidative stress by increasing the activity of antioxidant enzymes such as Cu/Zn superoxide dismutase and glutathione peroxidase. This activity decreases lipid peroxidation and ischemia-induced production of reactive oxygen species [9].

The anti-inflammatory and antioxidant properties of PPARα explain its neuroprotective effects, especially in Parkinson's disease and Alzheimer's disease [15]. PPARα could therefore be a therapeutic target for Parkinson's disease; indeed, fenofibrate has been shown to exert a neuroprotective effect in the brains of treated animals by decreasing inflammation. Uppalapati et al. showed that fenofibric acid, the active metabolite of fenofibrate (Figure 2), was present in the brains of animals treated with fenofibrate, suggesting that the drug was metabolized and that the metabolite crossed the blood-brain barrier in vivo [16].

Fenofibrate was found to prevent the loss of dopaminergic neurons in the substantia nigra and to attenuate the loss of tyrosine hydroxylase immunoreactivity in the striatum [17]. Many studies have suggested that PPARα could also have a therapeutic effect in Alzheimer's disease, although this conclusion remains controversial. Some researchers demonstrated that PPARα protects against beta-amyloid-induced neurodegeneration [18], whereas others found that fenofibrate increases beta-amyloid production in vitro, possibly through a mechanism unrelated to PPARα activation [19]. Furthermore, PPARα activation induces vascular protection by improving cerebral artery sensitivity [20].

To conclude, neurodegenerative diseases cause progressive loss of cognitive function, and current drugs provide only temporary symptomatic relief without halting disease progression. In recent years, growing interest has been directed towards PPARs, which can positively regulate gene expression and thereby modulate several molecular pathways involved in neurodegenerative diseases. In particular, several researchers have shown a beneficial involvement of PPARα in neurodegenerative disease.

These beneficial effects are mainly due to the anti-inflammatory and antioxidant properties of PPARα, but also to its capacity to restore vascular and endothelial integrity. The potential use of PPARα agonists as neuroprotective agents against neurodegenerative disorders is an important starting point in the search for new drugs that could definitively cure these pathologies. Although more laboratory and clinical studies are needed to understand all the mechanisms involved in the neuroprotective actions of PPARα agonists, this receptor is an effective target for neurodegenerative disorders.

For more Open Access Journals in Juniper Publishers please click on: https://www.crunchbase.com/organization/juniper-publishers

For more articles in Open Access Novel Approaches in Drug Designing & Development please click on: https://juniperpublishers.com/napdd/index.php

For more Open Access Journals please click on: https://juniperpublishers.com

To know more about Juniper Publishers please click on:

https://juniperpublishers.business.site/

#Heterodimerize#Anti-inflammatory#Alzheimer's disease#open access publishers#Juniper Publishers Indexing Sites


Quality by Design in Enzyme-Catalyzed Reactions - Juniper Publishers

Journal of Chemistry - Juniper Publishers

Abstract

Quality by Design is the new-age path chosen towards achieving the demanding quality standards of the pharmaceutical industry. The present paper aims to throw light on Pharmaceutical Quality by Design (QbD) and on how its implementation helps manufacture better-quality pharmaceuticals. Quality by Design is introduced along with its key elements to make the concept easier to understand. The primary objective of Quality by Design is to attain built-in quality. Finally, quality achieved through end-product testing cannot be guaranteed in the way that quality built in through Quality by Design can.

Keywords: Quality by Design (QbD); Quality Target Product Profile; Design Space; Critical Quality Attributes


Introduction

“Quality Can Be Planned.”-Joseph Juran

The above quote is self-explanatory when it comes to product quality in the pharmaceutical manufacturing industry. Quality by design (QbD) is a relatively recent addition to the pharmaceutical industry. Its sole objective is to achieve better quality standards, which is especially important in this industry. The QbD approach consists of various components, the important ones being risk assessment, assessment and management of the identified risks, design of experiments (DoE), the quality target product profile (QTPP), and a control strategy that keeps the product within the design space established by the QbD study [1]. Of all these components, DoE has been incorporated into many pharmaceutical development studies as a more rational approach [2].

The target of the analytical QbD approach is to establish a design space (DS) of critical process parameters (CPPs) within which the critical quality attributes (CQAs) of the method are assured to fulfil the desired requirements with a selected probability [3,4].
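The phrase "with a selected probability" can be made concrete with a small Monte Carlo simulation. The sketch below is illustrative only: the response model `purity_model`, the noise level, the specification limits and the 0.95 threshold are all hypothetical assumptions, not values from this article; in a real study the model and its uncertainty would come from DoE data.

```python
import random


def prob_within_spec(predict, setpoint, sigma, lower, upper, n=10_000, seed=0):
    """Monte Carlo estimate of P(lower <= CQA <= upper) at one CPP setpoint.

    predict -- hypothetical fitted model: CPP setpoint -> mean CQA value
    sigma   -- assumed standard deviation of process and analytical noise
    """
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    mean = predict(setpoint)
    hits = sum(lower <= rng.gauss(mean, sigma) <= upper for _ in range(n))
    return hits / n


def in_design_space(predict, setpoint, sigma, lower, upper, min_prob=0.95):
    """Accept the setpoint if the CQA meets its specification with at least
    the selected probability (min_prob)."""
    return prob_within_spec(predict, setpoint, sigma, lower, upper) >= min_prob


def purity_model(temp_c):
    """Hypothetical model: purity (%) falls off linearly away from 37 deg C."""
    return 99.5 - 0.05 * abs(temp_c - 37)


for temp in (37, 45, 60):
    p = prob_within_spec(purity_model, temp, sigma=0.2, lower=99.0, upper=100.0)
    print(f"{temp} deg C: P(in spec) = {p:.3f}")
```

A setpoint whose predicted mean sits inside the specification can still fall outside such a probabilistic design space if the noise is large relative to the distance to the nearest limit.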

The principles involved in pharmaceutical development that are relevant to QbD are all described in the ICH guidelines (ICH Q8-Q11) [5].

Any Pharmaceutical Development Process Typically Covers the Following Sections:

a) Complete portfolio including all the details as well as analysis of the Reference Listed Drug Product

b) Quality Target Product Profile (QTPP) compilation.

c) Figuring out the Critical Quality Attributes (CQA)

d) Complete characterization of the API and identification of API critical material attributes (CMAs)

e) Excipient selection and identification of excipient CMAs

f) Formulation Development

g) Manufacturing Process Development [6]

Quality Target Product Profile (QTPP) describes the design criteria for the product, and should therefore form the basis for development of the CQAs, CPPs, and control strategy.

Critical Quality Attribute (CQA): a physical, chemical, biological, or microbiological property or characteristic that should be within an appropriate limit, range, or distribution to ensure the desired product quality (ICH Q8).

Critical Process Parameter (CPP): a process parameter whose variability has an impact on a CQA and therefore should be monitored or controlled to ensure that the process produces the desired quality (ICH Q8).

CPPs are identified, and their impact analyzed, by conducting a preliminary risk analysis of every process parameter (PP) involved in the individual unit operations.

Need for QbD in Pharmaceutical Industry [7,8]:

a) To integrate patient needs, quality requirements and scientific knowledge into one design while the pharmaceutical product is still under development, extending further to the manufacturing process.

b) To better understand the impact of raw materials and process parameters on the quality of the final product. This is especially important for biopharmaceutical products, since raw materials such as cell culture media can be a source of variability, affecting important factors like cellular viability, cell growth and specific productivity.

c) To collaborate closely with the rest of the industry and with regulators, and to keep up successfully with regulatory reviews.

d) To maintain harmonization across all regions so that a single worldwide CMC submission is all that is needed.

e) To encourage continuous quality improvement for the benefit of patients.

f) To enable better product design with fewer manufacturing problems, thus making the manufacturing process more efficient.

g) To make post-approval changes easier since it will be contained within a pre-defined design space, thus resulting in regulatory flexibility.

Every production process in the pharmaceutical industry implements control strategies with the ultimate goal of a robust process, and a robust process is the gateway to high product quality [9]. Process variability is a hurdle to process robustness, and it originates from a lack of control over the process parameters. QbD therefore steps in to avoid batch-to-batch variability in pharmaceutical products [10].

The net outcome of a detailed QbD study (applied to any product) is the classification of process parameters by criticality and the finalization of a proven acceptable range (PAR) for every operation. The knowledge gained from the QbD evaluation covers every detail of the operational process as well as the product in general, and leads to the definition of a design space. In this way, the impact of the manufacturing process on the variability of the CQAs becomes apparent, which helps in planning the testing, quality control and monitoring of batches [11].
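Once parameters have been classified by criticality and given PARs, checking a batch record against them is mechanical. The following is a minimal sketch under stated assumptions: each PAR is treated as an independent low/high interval (a real multivariate design space need not be a simple box), and the parameter names, ranges and batch values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessParameter:
    name: str
    critical: bool  # outcome of the criticality assessment (CPP vs. non-critical PP)
    low: float      # lower end of the proven acceptable range (PAR)
    high: float     # upper end of the PAR


def check_batch(parameters, batch_record):
    """Return (name, value, critical) for every parameter outside its PAR.

    Critical parameters are listed first, since their excursions can impact a CQA.
    """
    deviations = []
    for p in parameters:
        value = batch_record[p.name]
        if not (p.low <= value <= p.high):
            deviations.append((p.name, value, p.critical))
    # sorted() is stable; key False (critical) sorts before True (non-critical).
    return sorted(deviations, key=lambda d: not d[2])


# Hypothetical parameters and ranges for one unit operation (illustrative only).
params = [
    ProcessParameter("pH", critical=True, low=6.5, high=8.5),
    ProcessParameter("temperature_C", critical=True, low=30.0, high=40.0),
    ProcessParameter("stirring_rpm", critical=False, low=150, high=300),
]
batch = {"pH": 8.9, "temperature_C": 36.0, "stirring_rpm": 320}
print(check_batch(params, batch))
```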

Process Evaluation: Linking Process Parameters to Quality Attributes

It is important to evaluate the process thoroughly before applying QbD to it: the better you know the process, the more effective your QbD will be. Moreover, process characterization is required to specify the proven acceptable ranges (PARs) for critical process parameters (CPPs). In the traditional approach used in biopharmaceutical production, existing empirical process knowledge is applied on a daily basis. However, this approach leads to laborious and time-consuming post-approval changes during process adaptation or when implementing any new technology needed to raise process efficiency. Also, with empirical process development, the effect that a process scale-up can have on the quality of the final product cannot be predicted.

This can increase costs and make it difficult to implement changes in an established manufacturing process. The need for deeper process understanding, and with it greater flexibility and freedom to make changes, led to the concept of operating within a pre-defined design space. Design space is a concept within the "Quality by Design" (QbD) paradigm. Manufacturers are now expected to follow science-based rather than empirical process development.

The QbD Concept is Best Explained in this Flowchart Below

Define a Quality Target Product Profile (QTPP) for product performance

⇩

Identify its Critical Quality Attributes (CQAs)

⇩

Create experimental design (DoE)

⇩

Analysis done to understand the impact of Critical Process Parameters (CPP) on CQAs

⇩

Identify and control the sources of variability.

Process characterization sets the ball rolling in any process development; it employs a sound risk assessment that rates the various critical process parameters according to their importance [12-14].

Downstream Processing in Biotransformation

Downstream processes of biopharmaceutical industry essentially include the following steps:

a) Harvesting

b) Isolation

c) Purification

The various unit operations that constitute a biopharmaceutical process follow a designed sequence to form an integrated process [15]. Thus, a change in any one unit operation can affect the functioning of the subsequent unit operations. This is why interaction effects between parameters across unit operations should also be taken into account during process development. An interaction occurs when the effect of one parameter on a response depends on the setting of another parameter. Because of this dependence, the combined effect of two parameters from different unit operations cannot be predicted from their individual effects. Regulatory authorities require parameter interactions to be included in the QbD approach during any process optimization [16].

Example: Downstream processing of 1,3-propanediol

Process: Fermentation

Downstream processing of a fermentation broth by flocculation, reactive extraction, and distillation was studied. Soluble protein and cellular debris in the broth were flocculated using optimal concentrations of chitosan (150 ppm) and polyacrylamide (70 ppm). The soluble protein in the broth decreased to 0.06 g L⁻¹, and the recovery ratio (supernatant liquor : broth) was greater than 99% (Figure 1) [17,18].

The above flowchart shows a typical fermentation process broken down into steps, taking the glycerol fermentation process as an example [19].


Case Study for API

API product development requires a lot of planning from the earliest stages when QbD is implemented at every stage. Whether it is a two-step or a multi-step process, every operation and parameter needs to be scrutinized before a relevant design space is created. Brainstorming every possible roadblock that might threaten the quality of the final pharmaceutical product is what helps design a top-quality process, so a forward-looking vision is important in the initial steps of QbD planning. Above all, sufficient attention must be paid to detail lest critical aspects be missed; this is best done by sitting with the entire development team and taking every minor detail into account. Given below is a case study of an API intermediate development process using QbD that highlights the important steps in implementing it from the very beginning of your research. QbD works best when it is implemented from the earliest stage of product development.

Quality Target Product Profile

When making your QTPP, make sure you list everything from vendor details to target costing. This step asks you to think about every aspect of your product and make a comprehensive profile of it. The quality specification must be highlighted here, together with all the challenging impurities that might threaten quality. Everything from stability testing requirements to raw material quality [20] is covered at this stage of QbD.

CQA Determination

Given below are some typical CQA parameters that are considered in most of the enzymatic methods of API intermediate preparations.

a) Purity

b) Chiral purity

c) Enzyme residue

d) Assay

e) Appearance

f) Residual Solvent

g) Yield

h) Polymorphic forms

i) Moisture content

j) Melting point (Table 1)

Initial Risk Assessment

Risk assessment can be done in various ways and is the customizable step in QbD. It calls for a group discussion or team meeting in which everyone lists all possible risks related to the project under discussion and grades each one by the level of risk it poses. The simplest approach is to rank them 1, 2, 3 in increasing or decreasing order of risk. A more detailed risk assessment links CQAs to CPPs to highlight the risk arising from their interdependence (Figure 2) [21].
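Linking CQAs to process parameters is often laid out as a scoring matrix. The following is a minimal sketch assuming a 1/2/3 (low/medium/high) scale. The CQA names come from the list above, but the parameter names and scores are hypothetical. Summing scores is only one simple ranking rule; FMEA-style risk priority numbers are a common alternative.

```python
# Hypothetical risk scores: 1 = low, 2 = medium, 3 = high impact of a
# process parameter (outer keys) on a critical quality attribute (inner keys).
RISK_MATRIX = {
    "pH": {"purity": 3, "chiral_purity": 2, "yield": 3},
    "temperature": {"purity": 2, "chiral_purity": 3, "yield": 2},
    "enzyme_load": {"purity": 1, "chiral_purity": 1, "yield": 3},
    "stir_rate": {"purity": 1, "chiral_purity": 1, "yield": 1},
}


def rank_parameters(matrix, high=3):
    """Rank parameters by total risk score and flag any with a high score
    against at least one CQA (candidates for further study, e.g. in a DoE)."""
    ranked = []
    for param, scores in matrix.items():
        total = sum(scores.values())
        flagged = any(s >= high for s in scores.values())
        ranked.append((param, total, flagged))
    return sorted(ranked, key=lambda r: r[1], reverse=True)


for param, total, flagged in rank_parameters(RISK_MATRIX):
    print(f"{param:12s} total={total:2d} {'-> study further' if flagged else ''}")
```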

Risk assessment is followed by the control strategies used to tackle the identified risks. These control strategies are for you to devise and execute to achieve your target quality specifications.

Design of Experiment

This is a valuable tool for channelling your experimental work so that it moves ahead systematically. Design of experiments can be of several types: comparative, screening, response surface modeling, and regression modeling [1].

Comparative Experiments: The aim of this study is simple, i.e., picking the better of two options. The selection is based on comparing the averages of the sample data generated for each option.

Screening Experiments: If you want to zero in on the key factors affecting a response, screening experiments are the best bet. For this, draw up a concise list of the factors that might have critical effects on the desired response. This model serves as a preliminary analysis during development studies.

Response Surface Modeling: Once you have identified the critical factors that affect your desired response, response surface modeling comes in handy to hit a target and/or to minimize or maximize a response.

Regression Modeling: This is used to estimate the dependence of a response variable on the process inputs.
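The factorial analysis in the case study below starts from a table of coded runs. As an illustration, a two-level full factorial design can be enumerated with the standard library alone. The factor names and ranges here are hypothetical, and in practice a tool such as Minitab would also randomize the run order.

```python
from itertools import product


def full_factorial(factors):
    """Two-level full factorial design (2**k runs).

    factors: dict mapping factor name -> (low, high) actual settings.
    Returns a list of runs; each run maps factor name -> (coded level, actual value).
    """
    names = list(factors)
    runs = []
    for levels in product((-1, 1), repeat=len(names)):
        run = {}
        for name, coded in zip(names, levels):
            low, high = factors[name]
            run[name] = (coded, low if coded == -1 else high)
        runs.append(run)
    return runs


# Hypothetical factor ranges, for illustration only.
design = full_factorial({"pH": (6, 9), "temperature_C": (25, 40)})
for i, run in enumerate(design, start=1):
    print(i, run)
```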

A step-by-step guide to the DoE step of the QbD process is given in Figure 3.

The response columns were filled in after experimentation, according to the created design (Figure 4).

Factorial Design Analysis Was Performed as Follows

Analysis Done First for One of the Responses, “Yield”: (Table 2)

P-Values Were Checked for Significance and Higher P-Value Term Eliminated First to Create a Reduced Model:

(Table 3) (Figures 5 & 6)

Observation

From the above graph, significant interaction between the two terms can be inferred.
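For a two-level, two-factor design with coded levels of -1 and +1, the fitted model is y = b0 + b1·A + b2·B + b12·A·B. A non-zero b12 is what an interaction plot shows as non-parallel lines. The following is a minimal sketch of estimating these coefficients, assuming a balanced 2×2 full factorial with one response per run. The yields are hypothetical, not the article's data. The sketch does not compute p-values, which need an error estimate (from replicates or from pooled, eliminated terms, as in the reduced models above).

```python
def factorial_coefficients(runs, responses):
    """Least-squares coefficients for y = b0 + b1*A + b2*B + b12*A*B.

    runs: list of (A, B) coded levels (-1 or +1) from a balanced 2x2 full factorial.
    Because the +/-1 columns are orthogonal, each coefficient is mean(column * y).
    """
    n = len(runs)
    columns = {
        "b0": [1 for _ in runs],
        "b1 (A)": [a for a, _ in runs],
        "b2 (B)": [b for _, b in runs],
        "b12 (A*B)": [a * b for a, b in runs],
    }
    return {name: sum(x * y for x, y in zip(col, responses)) / n
            for name, col in columns.items()}


# Hypothetical yields (%) for the four runs; not the article's data.
runs = [(-1, -1), (-1, 1), (1, -1), (1, 1)]
yields = [70.0, 74.0, 78.0, 90.0]
coef = factorial_coefficients(runs, yields)
print(coef)
```

In the sample data the effect of B is 4 at A = -1 (70 to 74) but 12 at A = +1 (78 to 90). The difference, 8, equals 4·b12, which is exactly the interaction the model captures.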

Analysis Done for the Response “Diacid”: (Table 4)

P-values Checked for Significance and Higher P-Value Term Eliminated First to Create a Reduced Model: (Table 5) (Figure 7)

Observation

From the above graph, significant interaction between the two terms can be inferred.

Response Optimizer Was Used to Optimize Both Terms With Respect to the Given Responses, Yield and Diacid: (Table 6) (Figure 8)

The optimized parameters predicted for maximum yield and minimum di-acid impurity were pH 8 and 37 °C.
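Response optimizers such as Minitab's typically combine several responses into one objective through desirability functions (the Derringer-Suich approach). The following is a minimal sketch of that idea, maximizing yield while minimizing di-acid over a grid of coded settings. Both fitted models, and all limits and targets, are hypothetical assumptions and do not reproduce the article's Table 6.

```python
from itertools import product
from math import prod


def d_maximize(y, low, target, weight=1.0):
    """Larger-is-better desirability: 0 at or below low, 1 at or above target."""
    if y <= low:
        return 0.0
    if y >= target:
        return 1.0
    return ((y - low) / (target - low)) ** weight


def d_minimize(y, target, high, weight=1.0):
    """Smaller-is-better desirability: 1 at or below target, 0 at or above high."""
    if y <= target:
        return 1.0
    if y >= high:
        return 0.0
    return ((high - y) / (high - target)) ** weight


def composite(ds):
    """Composite desirability: geometric mean of the individual values."""
    return prod(ds) ** (1.0 / len(ds))


# Hypothetical fitted models in coded units (x1 = pH, x2 = temperature).
def yield_model(x1, x2):
    return 78 + 6 * x1 + 4 * x2 + 2 * x1 * x2


def diacid_model(x1, x2):
    return 1.5 - 0.4 * x1 - 0.3 * x2 + 0.1 * x1 * x2


def overall(x):
    return composite([
        d_maximize(yield_model(*x), low=70, target=90),
        d_minimize(diacid_model(*x), target=0.5, high=2.0),
    ])


grid = [i / 10 for i in range(-10, 11)]  # coded levels from -1.0 to +1.0
best = max(product(grid, grid), key=overall)
print("best coded setting (pH, temperature):", best, "D =", round(overall(best), 3))
```

The geometric mean drives the composite to zero if any single response is unacceptable. This is why it is usually preferred over an arithmetic average.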


Case Study 2

As mentioned before, regression analysis is another important tool that can be used to study existing data. This means that if you have done some experiments (without designing them beforehand), you can quickly run a regression analysis of the collected data to derive a relationship between CPPs and the reaction results.

Often, when one follows a one-factor-at-a-time optimization process, a large amount of data has already been generated by the time each CPP is optimized. Instead of just tabulating the data and spending time making sense of them manually, you can turn to regression analysis. As always, a graphical representation of the data is much easier to understand and saves valuable time.
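The regression described above can be sketched for a single CPP as an ordinary least-squares line, its R², and the t statistic used to test whether the slope differs from zero. This plain-Python sketch stands in for what a package such as Minitab reports. The pH/impurity data points are hypothetical. Converting t to a p-value needs a t distribution on n - 2 degrees of freedom, for example `scipy.stats.t.sf`, which is not used here to keep the block dependency-free.

```python
from math import copysign, sqrt


def linear_regression(x, y):
    """Ordinary least-squares fit of y = a + b*x.

    Returns (a, b, r_squared, t_slope), where t_slope tests H0: b = 0 and
    should be compared with a t distribution on n - 2 degrees of freedom.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length sequences with at least 3 points")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    sse = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))  # residual sum of squares
    sst = sum((yi - mean_y) ** 2 for yi in y)  # total sum of squares
    r_squared = 1 - sse / sst
    se_b = sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    t_slope = b / se_b if se_b > 0 else copysign(float("inf"), b)
    return a, b, r_squared, t_slope


# Hypothetical one-factor-at-a-time data: pH vs. an impurity level (area %).
ph = [4.0, 4.5, 5.0, 5.5, 6.0]
impurity = [5.1, 4.6, 4.0, 3.7, 3.1]
a, b, r2, t = linear_regression(ph, impurity)
print(f"impurity = {a:.2f} + ({b:.2f}) * pH,  R^2 = {r2:.3f},  t = {t:.1f}")
```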

The effect of pH was studied separately [22] in the preparation of deoxynojirimycin base (stage III). The reaction involved N-formyl amino sorbitol, water, oxygen and whole cells of Gluconobacter oxydans DSM2003. Subsequent treatment with sodium hydroxide and sodium borohydride gave deoxynojirimycin. Further work-up and crystallization facilitated by 2-methoxyethanol yielded deoxynojirimycin base. In this experiment, the pH of the reaction was varied to determine its role, and a regression analysis was run in Minitab to study this effect.

The observations showed that the reaction did not occur at pH 2 and did not reach completion at pH 8. Within the optimum range of pH 4 to 6, pH had some effect on yield and purity. The significance of pH variation during the reaction was thus established as described below (Graphs 1-3):

When hypothesis tests (p-values against the null hypothesis of no effect) were carried out, pH was found to have no significant effect on product purity, impurity 1 or impurity 2, but it had a significant influence in minimizing impurity 3.

Furthermore, large-scale batches were also analyzed statistically to better understand the influence of several parameters on the output. The following parameters were studied during the stage III reaction described above:

a) pH, RPM and the number of oxygen cylinders consumed during the course of the reaction.

Their effect on the output and the reaction completion time was studied. Only RPM showed a statistically significant effect on the reaction completion time; the other factors had no significant effect on either the output or the reaction completion time.

During the biotransformation process, i.e. the oxidation of N-formyl amino sorbitol using whole cells of Gluconobacter oxydans DSM2003, three main unknown impurity peaks were observed in the HPLC chromatogram during reaction monitoring. The process is capable of removing these three impurities during downstream processing, work-up and isolation to the levels listed below:

a) Impurity 1 (has defined RRT on HPLC chromatogram) not more than 3%

b) Impurity 2 (any other unknown impurity) not more than 1%

c) Impurity 3 (has defined RRT on HPLC chromatogram) not more than 10%
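The acceptance limits above lend themselves to a simple automated check of each isolated batch. The following is a minimal sketch assuming results are reported as HPLC area %. It also assumes that impurity 2 ("any other unknown impurity") is represented by the largest single other unknown peak. The sample results are hypothetical.

```python
# "Not more than" limits as stated in the text (HPLC area %).
LIMITS = {"impurity_1": 3.0, "impurity_2": 1.0, "impurity_3": 10.0}


def check_impurities(results, limits=LIMITS):
    """Return {impurity: (value, passed)}; a missing result counts as a failure."""
    report = {}
    for name, limit in limits.items():
        value = results.get(name)
        report[name] = (value, value is not None and value <= limit)
    return report


def batch_passes(results, limits=LIMITS):
    """True only if every impurity is reported and within its limit."""
    return all(ok for _, ok in check_impurities(results, limits).values())


# Hypothetical HPLC results for one isolated batch.
sample = {"impurity_1": 2.4, "impurity_2": 1.3, "impurity_3": 6.8}
print(check_impurities(sample))
print("batch passes:", batch_passes(sample))
```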

Since higher impurity levels affect the yield of the process, efforts were made to study the factors that can reduce the formation of process impurities.


Conclusion

The concept of Quality by Design (QbD) is highly reliable when it comes to achieving foolproof quality of your product. It is a modern tool that is rapidly gaining adoption in the pharmaceutical industry, especially because this industry demands high quality standards and tolerates no compromise on quality. In essence, QbD comes down to identifying the critical parameters of the process and assigning a design space to every critical attribute. QbD can thus be considered an intelligent approach to quality that yields robust processes. QbD also ensures continuous improvement of the process during the entire lifecycle of a pharmaceutical product [23].


Acknowledgement

Our group would like to thank the Department of Scientific and Industrial Research India, Dr. Hari Babu (COO Mylan), Sanjeev Sethi (Chief Scientific Officer Mylan Inc), Dr. Abhijit Deshmukh (Head of Global OSD Scientific Affairs), Dr. Yasir Rawjee (Head - Global API), Dr. Sureshbabu Jayachandra (Head of Chemical Research), Dr. Suryanarayana Mulukutla (Head Analytical Dept MLL API R & D) as well as the analytical development team of Mylan Laboratories Limited for their encouragement and support. We would also like to thank Dr. Narahari Ambati (AGC- India IP) and his Intellectual Property team for their support.

To know more about Journal of chemistry,

Click here:

https://juniperpublishers.com/omcij/index.php

To know more about juniper Publishers,

click here:

https://juniperpublishers.com/index.php

#Juniper Publishers group#Juniper Publishers#JuniperPublishers#Juniper Publisher Reviews#Juniper Publishers Indexing Sites List#organic chemistry#inorganic chemistry#Open access Journal of chemistry#chemistry journal#chemistry#open access journals


Effect of Addition of Silica Fume and Oil Palm Fiber on the Engineering Properties of Compressed Earth Block- Juniper Publishers

Abstract

In recent years, population growth has increased the use of fired bricks and concrete blocks, raising both demand and price. As a result, people have turned to new techniques, such as mixing earth block with silica fume and oil palm fiber, to improve compressed earth block and make it usable, especially for people on low incomes. Compressed earth block has low compressive strength and high water absorption because of the voids in the block. This study aimed to improve the durability and compressive strength of compressed earth block by using additives such as silica fume and oil palm fiber, and to investigate which additive is most suitable for obtaining the highest compressive strength while reducing the percentage of voids. To achieve these objectives, the compressed earth block was mixed with cement and with additives such as silica fume and oil palm fiber, and the highest compressive strength of the compressed stabilized earth block was identified. The experimental program comprised moisture content, Atterberg limit, compaction, compressive strength and water absorption tests, performed on a total of 48 compressed earth block cubes. The results show that compressed earth block mixed with additives such as silica fume and oil palm fiber has 62% higher compressive strength than compressed earth block without additives. The water absorption of compressed earth block mixed with silica fume and oil palm fiber is 0.3% lower than that of compressed earth block without additives, because the silica fume and oil palm fiber reduce the spaces between particles. Therefore, the results show that earth block with additives performs better than earth block without additives, and that silica fume is more suitable than oil palm fiber for use in compressed earth block.
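The compressive strength and water absorption tests named in the abstract reduce to simple ratios. The following is a minimal sketch assuming cube specimens loaded on one face, with water absorption expressed relative to oven-dry mass. The cube size used in the study is not given here, so 100 mm is an assumption, and the loads and masses are hypothetical. A difference between two absorption percentages can be quoted either in percentage points or as a relative change, and the two are not the same.

```python
def compressive_strength_mpa(failure_load_kn, side_mm):
    """Compressive strength of a cube specimen = failure load / loaded area.

    The load in kN is converted to N, so N / mm^2 gives MPa directly.
    """
    area_mm2 = side_mm * side_mm
    return failure_load_kn * 1000 / area_mm2


def water_absorption_pct(dry_mass_g, saturated_mass_g):
    """Water absorption as a percentage of the oven-dry mass."""
    return (saturated_mass_g - dry_mass_g) * 100 / dry_mass_g


def relative_change_pct(reference, value):
    """Relative change of value with respect to a reference value, in %."""
    return (value - reference) * 100 / reference


# Hypothetical 100 mm cubes; illustrative numbers only.
plain = compressive_strength_mpa(40.0, 100)       # block without additives
stabilized = compressive_strength_mpa(60.0, 100)  # block with additives
print(f"strength gain: {relative_change_pct(plain, stabilized):.0f}%")

wa_plain = water_absorption_pct(2000.0, 2240.0)
wa_stab = water_absorption_pct(2000.0, 2200.0)
print(f"absorption: {wa_plain:.1f}% vs {wa_stab:.1f}% "
      f"({wa_plain - wa_stab:.1f} percentage points lower)")
```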

Keywords: Compressed earth block; Compressive strength; Water absorption; Silica fume; Oil palm Fiber


Introduction

Earth is an ancient source of materials that have been used in many ways around the globe. Appropriate scientific use of these materials and resources could make a very significant contribution to current efforts to address the shortage of low-income housing around the world and in countries suffering from high prices of construction materials. In the past, soil was the most popular material for constructing buildings; it therefore plays a significant role in many construction materials, such as compressed earth block (CEB). Compressed earth block is the modern descendant of the molded earth block, also called adobe block. CEB was first used at the beginning of the 19th century in Europe by the architect François Cointeraux. CEB offers a relatively cheap alternative to conventional building practices, uses local resources, and has recently been adopted in some countries. It was used worldwide, and in the 1970s and 1980s a new generation of manual presses appeared, leading to the emergence of a real market for compressed earth block today. Meanwhile, both the price of and the demand for concrete blocks have increased. These factors drive efforts to improve compressed earth block with additives in order to obtain a compressive strength equal to that of concrete blocks. Silica fume and oil palm fiber are popular additives for this purpose. Silica fume has a high surface area and a high silicon dioxide content, and its average particle size is 100 to 150 times smaller than that of Portland cement. Oil palm fiber, on the other hand, is easy to obtain because oil palm is the highest-yielding oil crop in the world; it is cultivated on 11 million ha in 42 countries worldwide, including Malaysia.

Taallah, Guettala & Kriker [1] studied the properties of compressed earth block with added date palm fiber and found that the best compressive strength was achieved with 0.05% date palm fiber, 8% cement and a compaction pressure of 10 MPa. They also reported that water absorption generally decreased as the cement content increased and the date palm fiber content decreased.

Izemmouren, Guettala & Guettala [2] tested the mechanical properties of steam-cured stabilized compressed earth block. Their objectives were to analyze the impact of curing conditions and curing period on the mechanical durability of lime-stabilized compressed earth block, with particular attention to the effects of blended treatment on the mechanical properties, strength and resistance of steam-cured stabilized compressed earth block. The materials used were soil, crushed sand, water, lime and stabilizers, with lime contents of 6%, 8% and 10%. The results showed that blocks with 10% lime and 24 hours of curing had higher strength than those with 6% and 8% lime.

Cid-Falceto, Mazarrón & Cañas [3] studied the rain resistance of earth blocks made in Spain, the most industrialized form of earth building material, with the aim of improving their physical properties and analyzing the usefulness of universal tests. They used three types of compressed earth block, all solid. The second block contained 6% cement, and the third contained cement and 8% quicklime. The first block gave a negative result because it did not meet the evaluation standard, whereas the second and third blocks gave positive results because they met the standards.

Al-Sakkaf [4] reported the relationships between soil properties, several additives, and the compressive strength and density of the soil. Tests were carried out at 1, 7 and 28 days on stabilized compressed earth block made of 78.25% soil, 10% cement, 6% bitumen, 5% lime and 0.75% calcium silicate. The purpose of the laboratory tests was to compare the properties of normal earth block with those of earth block containing additives. The results showed that the best compressive strength was obtained with the assumed additive percentages, and that the highest compressive strength was achieved by the lime-cement earth block mixture.

Quagliarini & Lenci [5] used straw fiber to investigate its influence on the mechanical properties of Roman bricks. The outcomes agreed with previous research reporting that straw fiber had no positive influence on compressive strength. On the other hand, they noted that the straw fiber controlled the plastic behavior of the Roman brick specimens and caused them to break easily.

Bouhicha, Aouissi & Kenai [6] studied four different types of soil mixed with various percentages of barley straw fiber. The results indicated that 1.5% reinforcement improved the compressive strength by 10-20%, depending on the type of soil. Furthermore, they found that the compressive strength was reduced by 45% at 3.5% barley straw fiber. The results also showed that reducing the fiber size weakened the compressive strength, which increased the ability of the blocks to deform at failure.

Qu et al. [7] reported seismic tests of flexure-controlled walls built from stabilized compressed earth blocks. Four wall specimens, each 1.8 m high, were constructed and tested using a standard unit measuring 100 × 150 × 300 mm (4 × 6 × 12 in), known as the "Rhino Block", with three grout channels and two locking bolts. The materials were proportioned to provide the best strength, the required constructability and the most stable pressure: 10.0% sand, 74.3% soil and 6.2% cement. The results showed that the second and fourth walls behaved as planned, but mechanical failures occurred while the first and third walls were being tested, causing damage that included cracks and permanent distortion at the top of the specimens.

Ghavami, Toledo Filho & Barbosa [8] used coconut and sisal fibers as reinforcement to examine the behavior of soil. Two specimen types were prepared, one with 4% sisal fiber and one with 4% coconut fiber. The results showed that using Taperoa soil with a 28% water/soil ratio and 4% sisal or coconut fiber slightly increases the compressive strength.

Morel, Pkla & Walker (2007) [9] aimed to determine the compressive strength of compressed earth blocks, including by means of the RILEM test. Earth blocks have mostly been used for small two-storey houses, but they have also been used to build 10-storey buildings in Yemen. Over the last 50 years, earth block construction has developed in many countries, such as Mayotte. Adding 4-10% cement to earth blocks improves their compressive strength and water resistance compared with unstabilized blocks. The tests aimed to reach the critical failure limit, and compressive strength was measured with the RILEM test. The results showed that the cement-stabilized earth blocks had a significant defect: tensile stress caused them to split along the line of the load.

Laursen, Herskedal, Jansen & Qu [10] set out to meet three goals. The first was to document the experimental behavior of stabilized compressed earth block walls restrained against slip. The second was to test the flexural strength of the walls and relate it to the current code analytical procedures for predicting resistance. The last was to develop an analytical procedure for prediction. The blocks were made of 74.3% soil, 6.2% Portland cement, 10% sand and 9.5% water. Overall, the results indicate that the first wall (A) and the second wall (B) behaved approximately identically, whereas the third wall (C) had a greater resistance because of its increased thickness.

Sharma, Marwaha & Vinayak [11] used vernacular fibers (Pinus roxburghii and Grewia optiva) together with cement to improve the sustainability of rural adobe houses. The maximum increase in compressive strength, 225-235% for both cubical and cylindrical samples, was observed with 2% Grewia optiva fiber and 2.5% cement (base soil strengths of 1.17 and 0.85 N/mm² for the cubical and cylindrical samples, respectively). It was followed by the mix with 2% Pinus roxburghii and 2.5% cement, which increased the compressive strength by 87-145% for both sample shapes relative to the same base soil strengths.

Walker & Stace [12] carried out an experimental study to estimate the properties and similarity of lime- and cement-stabilized soil and to check the average compressive strength. They performed two tests, the first with 95.0% soil and 5.0% cement and the second with 90.0% soil and 10.0% cement. The compressive strength reached 3.67 MPa with 5.0% cement and 7.11 MPa with 10.0% cement.

Millogo, Morel, Aubert & Ghavami [13] carried out an experimental analysis of compressed adobe blocks reinforced with Hibiscus cannabinus fiber. They used 0.2-0.8 wt% of Hibiscus cannabinus fibers 30 mm and 60 mm long, and reported that the compressive strength increased by 16% with the 30 mm fibers and by 8% with the 60 mm fibers. They also stated that the addition of 60 mm fibers had an undesirable effect on the compressive strength.

This research indicated that compressed earth blocks contain many voids that allow water to enter, which makes the blocks very weak. Therefore, cement and additives were added to the compressed earth blocks to achieve the maximum possible compressive strength, using some of the proportions proposed in previous studies. In addition, blocks made with silica fume and with oil palm fiber were compared to find which gave the best results.

Methodology

The main objective of this study is to compare compressed earth blocks without additives with blocks containing additives, namely silica fume and oil palm fiber. To identify the better additive, compressive strength and water absorption tests were carried out to find the compressed earth block that gives the highest compressive strength and resistance to water absorption. The tests carried out in this study include the water absorption test, moisture content, Atterberg limits test, classification tests, compaction test and compressive strength test. Eighteen cubes were made for each mix (compressed earth block without additives, with silica fume, and with oil palm fiber), giving a total of 54 cubes. The experiments were carried out in IKRAM's laboratory. The general procedure for the laboratory work is illustrated in Figure 1.

Preparation of Materials

Materials used for this study were soil, water, cement, and additives such as silica fume and oil palm fiber.

Soil

Soil is one of the main materials in this study, making up about 80% of the compressed earth block. It was taken from behind Unipark Condominium in Kajang, Selangor, Malaysia, at a depth of 1.5 m below ground level. The soil was characterized with the following tests:

Moisture content

The moisture content test determines the water content of a soil as a percentage of its oven-dried weight. It is an important guide for classifying natural soil and is useful for calculating other properties, such as the Atterberg limits.
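The definition above amounts to w = (M_wet − M_dry) / M_dry × 100%. The following is a minimal Python sketch of that calculation, assuming container masses have already been subtracted; the function name and specimen masses are hypothetical, not data from this study.

```python
def moisture_content(wet_mass_g: float, dry_mass_g: float) -> float:
    """Water content as a percentage of the oven-dried soil mass.

    wet_mass_g: mass of the moist specimen (container already subtracted)
    dry_mass_g: mass of the same specimen after oven drying
    """
    if dry_mass_g <= 0:
        raise ValueError("dry mass must be positive")
    if wet_mass_g < dry_mass_g:
        raise ValueError("wet mass cannot be less than dry mass")
    water_mass_g = wet_mass_g - dry_mass_g
    return 100.0 * water_mass_g / dry_mass_g


# Hypothetical specimen: 120 g moist, 100 g after oven drying
print(moisture_content(120.0, 100.0))  # 20.0
```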

Atterberg limits

A. The liquid limit (LL) is the lowest water content at which a fine-grained soil behaves like a viscous mud, flowing under its own weight. It is the transition water content between the plastic and liquid states. Together with the plastic limit, it provides a means of soil classification and is useful in determining other soil properties. At the liquid limit, the soil has very little strength.

B. The plastic limit (PL) is the moisture content at which a soil becomes too dry to be plastic, that is, the lowest water content at which the soil remains in a plastic state, as determined by the plastic limit test.
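The two limits are commonly combined into the plasticity index, PI = LL − PL. The sketch below computes PI from the average limits reported in the Results section (LL = 46%, PL = 30%) and, as an illustration only, checks where that point falls relative to the Casagrande A-line (PI = 0.73(LL − 20)), a standard plasticity-chart reference that the original does not use. A full classification would also need the grading results.

```python
def plasticity_index(liquid_limit: float, plastic_limit: float) -> float:
    """PI = LL - PL, all values in percent water content."""
    return liquid_limit - plastic_limit


def a_line_pi(liquid_limit: float) -> float:
    """Casagrande A-line on the plasticity chart: PI = 0.73 * (LL - 20)."""
    return 0.73 * (liquid_limit - 20.0)


ll, pl = 46.0, 30.0  # average limits reported in the Results section
pi = plasticity_index(ll, pl)
print(f"PI = {pi:.1f}%")
print("above A-line" if pi > a_line_pi(ll) else "on or below A-line")
```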

Classification tests

Hydrometer analysis: Hydrometer analysis is the procedure generally adopted to determine the particle size distribution of the soil fraction finer than the No. 200 sieve (0.075 mm). The lower limit of particle size determined by this procedure is about 0.001 mm. In hydrometer analysis, a soil specimen is dispersed in water; in the dispersed state, the soil particles settle individually.
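Hydrometer analysis relies on Stokes' law: a particle of diameter D settles at v = (ρs − ρw)gD²/(18η), so the diameter still in suspension at depth L after time t follows from v = L/t. The sketch below is a simplified illustration assuming a specific gravity of 2.65, water viscosity of 1.002 × 10⁻³ Pa·s (about 20 °C) and a fixed, hypothetical 10 cm effective depth; a real BS 1377 analysis uses a calibrated effective depth for each reading plus temperature and meniscus corrections.

```python
import math


def stokes_diameter_mm(
    fall_depth_cm: float,
    elapsed_s: float,
    specific_gravity: float = 2.65,   # assumed soil particle specific gravity
    viscosity_pa_s: float = 1.002e-3,  # assumed water viscosity near 20 C
    water_density_kg_m3: float = 1000.0,
    g_m_s2: float = 9.81,
) -> float:
    """Equivalent sphere diameter (mm) that settles fall_depth_cm in elapsed_s.

    Stokes' law v = (rho_s - rho_w) * g * D**2 / (18 * eta), solved for D.
    """
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    velocity_m_s = (fall_depth_cm / 100.0) / elapsed_s
    rho_s = specific_gravity * water_density_kg_m3
    d_m = math.sqrt(
        18.0 * viscosity_pa_s * velocity_m_s
        / ((rho_s - water_density_kg_m3) * g_m_s2)
    )
    return d_m * 1000.0


# Hypothetical readings at a fixed 10 cm effective depth: 1 min, 1 h, 24 h
for t in (60, 3600, 86400):
    print(f"t = {t:>6} s -> D = {stokes_diameter_mm(10.0, t):.4f} mm")
```

Under these assumptions the 24-hour reading corresponds to particles of roughly 0.001 mm, consistent with the lower limit quoted above.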

Sieve analysis test: The sieve analysis test classifies the soil as coarse- or fine-grained, and the coefficient of uniformity is used to classify its grading as uniform, well graded or poorly graded.
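The grading classification mentioned above usually uses the coefficient of uniformity, Cu = D60/D10, together with the coefficient of curvature, Cc = D30²/(D10·D60), where Dx is the particle size at x% passing. The sketch below computes both; the D values are hypothetical, and the "well graded" rule shown is the Unified Soil Classification System criterion for sands, included only as an illustration of how the coefficients are applied.

```python
def grading_coefficients(d10_mm: float, d30_mm: float, d60_mm: float):
    """Return (Cu, Cc) from the particle sizes at 10%, 30% and 60% passing."""
    if not 0 < d10_mm <= d30_mm <= d60_mm:
        raise ValueError("expected 0 < D10 <= D30 <= D60")
    cu = d60_mm / d10_mm
    cc = d30_mm ** 2 / (d10_mm * d60_mm)
    return cu, cc


def is_well_graded_sand(cu: float, cc: float) -> bool:
    """USCS-style criterion for sands: Cu >= 6 and 1 <= Cc <= 3."""
    return cu >= 6.0 and 1.0 <= cc <= 3.0


# Hypothetical D10, D30 and D60 values read from a grading curve (mm)
cu, cc = grading_coefficients(0.05, 0.2, 0.5)
print(f"Cu = {cu:.2f}, Cc = {cc:.2f}, well graded: {is_well_graded_sand(cu, cc)}")
```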

Compaction test: Compaction is a mechanical process by which soil particles are packed more closely together by reducing the air voids. Reducing the air voids also increases the density and shear strength of the soil.
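In a compaction test, each point gives a bulk density ρ and a moisture content w, and the dry density is ρd = ρ / (1 + w). The maximum dry density and optimum moisture content come from the peak of the ρd versus w curve. The sketch below uses hypothetical points (not this study's data) and simply takes the highest measured point, whereas in practice a smooth curve is drawn through the points.

```python
def dry_density(bulk_density_mg_m3: float, moisture_pct: float) -> float:
    """rho_d = rho / (1 + w), with w converted from percent to a fraction."""
    return bulk_density_mg_m3 / (1.0 + moisture_pct / 100.0)


# Hypothetical compaction points: (moisture content %, bulk density Mg/m^3)
points = [(8.0, 1.80), (10.0, 1.95), (12.0, 2.00), (14.0, 1.98)]
dry = [(w, dry_density(rho, w)) for w, rho in points]
for w, rho_d in dry:
    print(f"w = {w:4.1f}%  rho_d = {rho_d:.3f} Mg/m^3")

# Peak of the measured points only; no curve fitting is done here
omc, mdd = max(dry, key=lambda p: p[1])
print(f"highest measured point: w = {omc}%, rho_d = {mdd:.3f} Mg/m^3")
```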

Additives

Silica fume: Silica fume, also known as microsilica, is a pozzolanic material added to concrete and block mixes. It is a by-product of ferrosilicon production in electric arc furnaces and is recovered by condensation from the furnace smoke leaving the stacks. It has been noted that the shrinkage of blocks containing 5% silica fume increased by 13%, and that the increase reached 84% at a silica fume proportion of 20%. In short, shrinkage increases with the proportion of silica fume. This is attributed to the pozzolanic materials in silica fume, which react slowly, causing a reduction in volume as a result of the reaction and increased shrinkage as a result of drying.

Oil palm fiber: The oil palm is the world's main edible oil crop. It is grown in 42 countries on 11 million hectares worldwide. The main producers are Southeast Asian countries such as Malaysia and Indonesia, together with India, Latin America and West Africa. Each year, 1 ha of oil palm produces about 55 tons of dry matter in the form of fibrous biomass, while yielding 5.5 tons of oil.

Water

The common specification for the quality of mixing water is that the water should be potable. Such water should contain less than 1000 ppm of inorganic solids.

Cement

The cements used in construction are generally inorganic, often based on calcium silicate or lime, and can be characterized as hydraulic or non-hydraulic depending on their ability to set in the presence of water.

Mix Proportion

The performance of the mixes was specified by the researcher after the experiments were carried out and the results analyzed, and the mix proportions were chosen according to the suitability and workability of both the oil palm fiber and silica fume mixes. The oil palm fiber mix consisted of 10% cement, 10% water, 0.03% oil palm fiber and 79.97% soil. The silica fume mix consisted of 7% cement, 10% water, 3% silica fume and 80% soil. This is the most rational approach to selecting mix proportions for specific materials with more or less unique characteristics, and it produces compressed earth blocks with the most economically appropriate properties.
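To turn the percentage proportions above into batch quantities, each constituent is scaled by the batch size. The sketch assumes the percentages are by total mass of the fresh mix (the basis is not stated in the text) and uses a hypothetical 5 kg batch; the dictionary and function names are illustrative only.

```python
# Mix proportions from the text, assumed to be % by total mass of the mix
MIXES_PCT = {
    "oil palm fiber": {"soil": 79.97, "cement": 10.0, "water": 10.0, "oil palm fiber": 0.03},
    "silica fume": {"soil": 80.0, "cement": 7.0, "water": 10.0, "silica fume": 3.0},
}


def batch_masses_kg(mix_pct: dict, batch_kg: float) -> dict:
    """Scale percentage proportions to constituent masses for one batch."""
    total = sum(mix_pct.values())
    if abs(total - 100.0) > 1e-6:
        raise ValueError(f"proportions sum to {total}%, not 100%")
    return {name: batch_kg * pct / 100.0 for name, pct in mix_pct.items()}


for mix_name, mix in MIXES_PCT.items():
    masses = batch_masses_kg(mix, batch_kg=5.0)  # hypothetical 5 kg batch
    print(mix_name, {k: round(v, 4) for k, v in masses.items()})
```

The sum check catches transcription errors in the proportions; both mixes in the text add up to 100%.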

Compressive strength test

The compressive strength test was carried out to determine the compressive stress at which the compressed earth block fails.
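Compressive strength is the failure load divided by the loaded area, and 1 N/mm² equals 1 MPa. The cube dimensions and individual failure loads are not given in the text, so the sketch below assumes hypothetical 100 mm cubes and three hypothetical loads, then averages the results as is usual for a set of specimens tested at one age.

```python
from statistics import mean


def compressive_strength_mpa(failure_load_kn: float, side_mm: float) -> float:
    """Stress at failure = load / loaded area; 1 N/mm^2 equals 1 MPa."""
    area_mm2 = side_mm * side_mm
    return failure_load_kn * 1000.0 / area_mm2


# Hypothetical failure loads (kN) for three 100 mm cubes tested at one age
loads_kn = [19.0, 20.0, 21.0]
strengths = [compressive_strength_mpa(p, 100.0) for p in loads_kn]
print(f"average = {mean(strengths):.2f} MPa")
```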

Water absorption test

Water absorption is one of the main factors affecting compressed earth blocks. It is therefore important to determine the water absorption rate of the blocks, because a high absorption rate may weaken the stabilized compressed earth block and cause it to lose strength over time.
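Water absorption is normally expressed as the mass of water taken up as a percentage of the dry mass: WA = (M_wet − M_dry) / M_dry × 100%. The soaking procedure is not described in the text, so the sketch below only assumes that a dry mass and a post-soaking mass are available; the masses are hypothetical.

```python
def water_absorption_pct(dry_mass_g: float, wet_mass_g: float) -> float:
    """Mass of water absorbed as a percentage of the dry block mass."""
    if dry_mass_g <= 0:
        raise ValueError("dry mass must be positive")
    return 100.0 * (wet_mass_g - dry_mass_g) / dry_mass_g


# Hypothetical block: 2000 g dry, 2150 g after soaking
print(f"{water_absorption_pct(2000.0, 2150.0):.1f}%")  # 7.5%
```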

Results

The experiments were carried out in accordance with the BS 1377 procedures for laboratory testing of soils, and were designed to meet the objectives of this research. The laboratory tests carried out on the soil samples (S1, S2) obtained from the site were moisture content, Atterberg limits, hydrometer test, sieve analysis and compaction test. The average moisture content of the samples is 20%. The Atterberg limits consist of the liquid limit and the plastic limit, with average values of 46% and 30%, respectively. The compaction test gave a maximum dry density (ρd) of 1.858 Mg/m³ at an optimum moisture content of 12.50%. The results of the hydrometer and sieve analysis tests are shown in Figure 2.

The compressive strength results after 7, 21 and 28 days show that the earth block mixed with cement and silica fume is stronger than both the control and the oil palm fiber block. The average strengths were 0.66, 1.30 and 1.47 MPa for the control; 0.62, 1.71 and 1.81 MPa for the oil palm fiber block; and 0.80, 2.20 and 2.38 MPa for the block mixed with cement and silica fume. After 21 and 28 days the difference becomes much larger, because the cement and silica fume mixed with the soil increase the density and strength of the earth block. Figure 3 shows the difference between the control sample and the stabilized mixes at 7, 21 and 28 days.
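The size of the differences described above can be quantified as the percentage change of each mix relative to the control at the same age. The sketch below uses only the average strengths reported in the text; the mix labels are illustrative names.

```python
AGES_DAYS = (7, 21, 28)
# Average compressive strengths (MPa) reported in the text
STRENGTH_MPA = {
    "control": (0.66, 1.30, 1.47),
    "oil palm fiber": (0.62, 1.71, 1.81),
    "cement + silica fume": (0.80, 2.20, 2.38),
}


def percent_change(values, reference):
    """Percentage change of each value relative to the reference at the same age."""
    return [100.0 * (v - r) / r for v, r in zip(values, reference)]


control = STRENGTH_MPA["control"]
for name, values in STRENGTH_MPA.items():
    if name == "control":
        continue
    changes = percent_change(values, control)
    print(name + ": " + ", ".join(f"{age} d {c:+.1f}%" for age, c in zip(AGES_DAYS, changes)))
```

On these figures the cement and silica fume block is about 21% stronger than the control at 7 days and about 62% stronger at 28 days, while the oil palm fiber block is slightly weaker than the control at 7 days but stronger at 21 and 28 days.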

A low water absorption rate indicates a good block, whereas a high rate indicates a poorer one. After 7, 21 and 28 days, the average water absorption was 2.0%, 7.6% and 8.1% for the control sample; 2.7%, 9.4% and 9.7% for the oil palm fiber block; and 1.9%, 7.4% and 7.8% for the block mixed with cement and silica fume. The absorption of the silica fume blocks is therefore lower than that of the control and oil palm fiber blocks, because silica fume bonds the laterite particles together, reducing the pores through which water can flow into the blocks. The percentage of water absorbed by the blocks is shown in Figure 4.
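The ranking claimed above can be checked directly against the reported averages: at each age, the mixes are sorted from lowest to highest absorption, and each stabilized mix is compared with the control in percentage points. The sketch uses only the values given in the text; the mix labels are illustrative names.

```python
AGES_DAYS = (7, 21, 28)
# Average water absorption (%) reported in the text
ABSORPTION_PCT = {
    "control": (2.0, 7.6, 8.1),
    "oil palm fiber": (2.7, 9.4, 9.7),
    "cement + silica fume": (1.9, 7.4, 7.8),
}


def rank_by_absorption(age_index: int) -> list:
    """Mix names ordered from lowest to highest absorption at one test age."""
    return sorted(ABSORPTION_PCT, key=lambda name: ABSORPTION_PCT[name][age_index])


for i, age in enumerate(AGES_DAYS):
    control = ABSORPTION_PCT["control"][i]
    ranked = rank_by_absorption(i)
    diffs = ", ".join(
        f"{n}: {ABSORPTION_PCT[n][i] - control:+.1f} pp" for n in ranked if n != "control"
    )
    print(f"{age} d: {' < '.join(ranked)} | vs control {diffs}")
```

At every age the order is the same (cement and silica fume, then control, then oil palm fiber), although the margin between the silica fume block and the control is small, at most 0.3 percentage points.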

Conclusion