#23 million pixels

Text

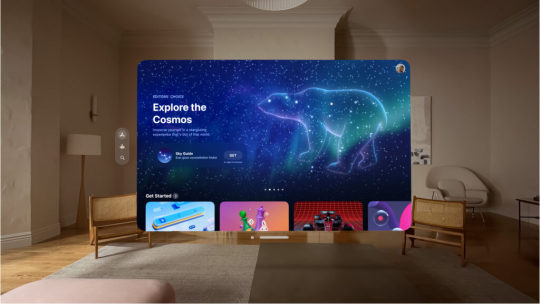

Apple Vision Pro 2024: The Ultimate Augmented Reality Headset for Gaming, Education, Work, and Entertainment

Apple has unveiled its first augmented reality headset, the Apple Vision Pro. The Vision Pro is a high-end device that promises to revolutionize the way we interact with the world around us.

The Vision Pro features a high-resolution display, a powerful processor, and a variety of sensors that allow users to interact with digital content in a natural way. The headset is expected to be released in…


#10 times faster#23 million pixels#apple vision pro#augmented reality#augmented reality games#collaborate with colleagues#complex designs#depth sensor#education#entertainment#gaming#historical landmarks#lidar scanner#m2 chip#motion sensor#scientific concepts#virtual theater#virtual worlds#work

3 notes


Text

I'm using simblr gratitude day as an excuse to take a leaf outta @akitasimblr's book n' do a sunday faves/crushes style post to appreciate some of my fave blogs i scour for updates on the reg 👀 believe me, the list is 100x longer than this but it's 23:40 the night before cos i ran outta time and y'girl is tired AND stuck in the office tomorrow sdkjskdj...

@simlit - for the one of the most unique takes on a "bc" i've come across, with interactive polls and all! great character building too.. and impeccable screenies/worldbuilding 🤤

@lynzishell - for the cutest, realest characters i feel like i could be neighbours with, i bet i could barge in and join em for dinner and they prolly wouldn't mind 🤭 but also 'cause ur so sweet and supportive and ily <3

@citylighten - for a grittier story with complex characters, amazing shots and a dash of crime-.. oh and lots of hot, hairy men (not u, Ben) and strong women that tickle my pickle.. i need to see more Pietro soon or i may cease to exist skdsk

@rainymoodlet - for the hottest man tiddies on simblr and the most ridiculously ambitious bachelor challenge EVER, idk how ur keeping it together Shan but we love u for it and i can't wait to see who wins Dan's heart and the journey's all the lil pixels you've adopted have along the way ;-;

@akitasimblr - not only for the wealth of positivity you bring to simblr in general but your various gameplay/challenges that regularly liven my dash, and your unbridled love for my pixels too.. it's very special to me and so are you! 🤧

@zosa95 - for the queen of the cookies!! 🍪 and also because you created Edith, the legend.. and because Gemma & Markus are back and i love them as much as i love youuu.. your insightful comments (among some others in this list that may or may not result in a slightly chaotic comments section more often than not 👀) give me so much joy and so many of you make my day with ur support :')

@natolesims - i love ur approach to storytelling via the nsb challenge and the way you combine the silliness of the sims with the real emotions of the characters u create with it.. i always find myself checking in on Grey to see what he's been up to 🤭

@treason-and-plot - one of my faaave ts3 blogs, i'm obsessed with your characters and your writing! i'm forever on my toes waiting to see what happens next 👀 and the fact that u and those that follow/read along still have so much fun with ur story gives me so much hope that i'll still be here long after.. whatever the hell comes after ts4 😌

like i said.. there are so many of u i could list here so pls just know that ily all and could find a million and one amazing things to say abt you all if i only had the time! <33333

#ts4#sims 4#ts3#?!#simblr#simblrgratitudeday#truly go could on forever#always love doin stuff like this but also don't do it very often cos it makes me feel bad for those i “missed out” ;-;#pls don't feel left out tho!! ILY ALL SM#<3

93 notes


Text

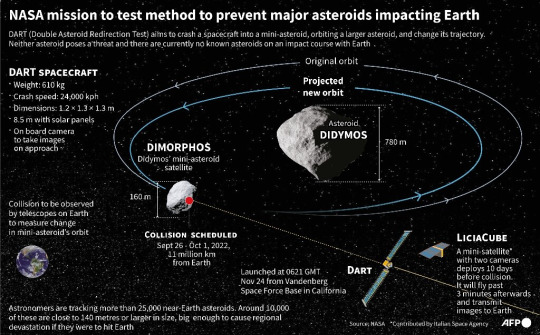

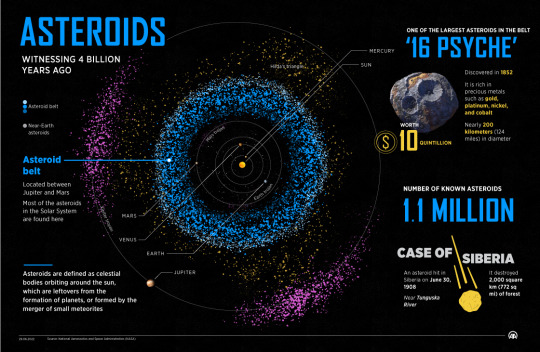

DART All Set To Crash Into Asteroid Dimorphos

youtube

This animation depicts DART approaching the asteroid moonlet Dimorphos and then crashing into it.

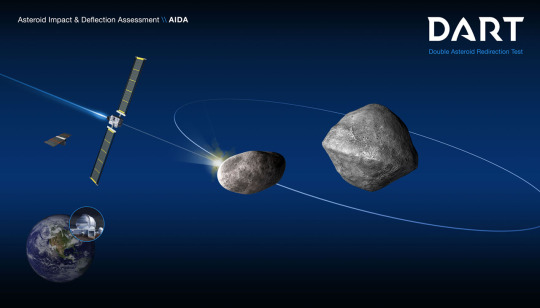

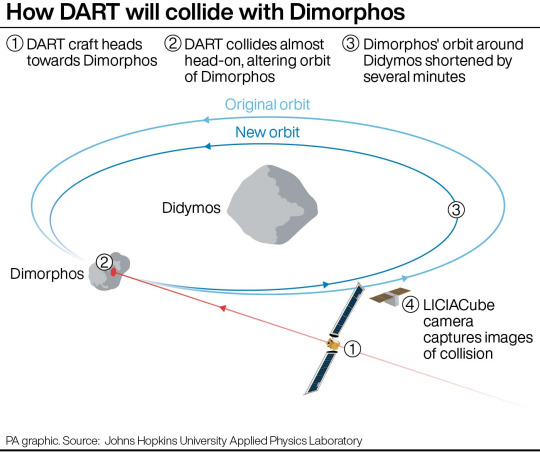

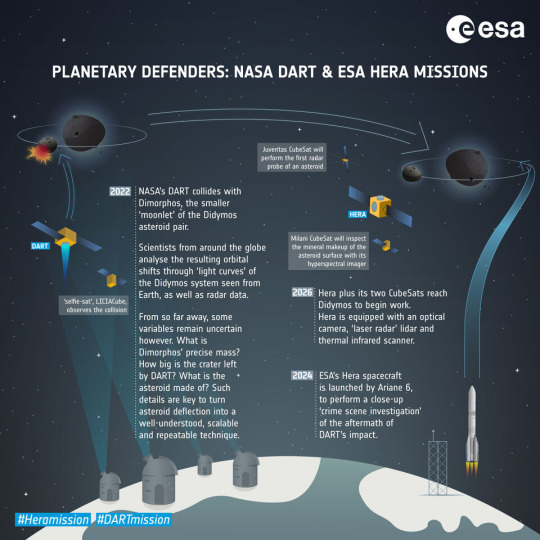

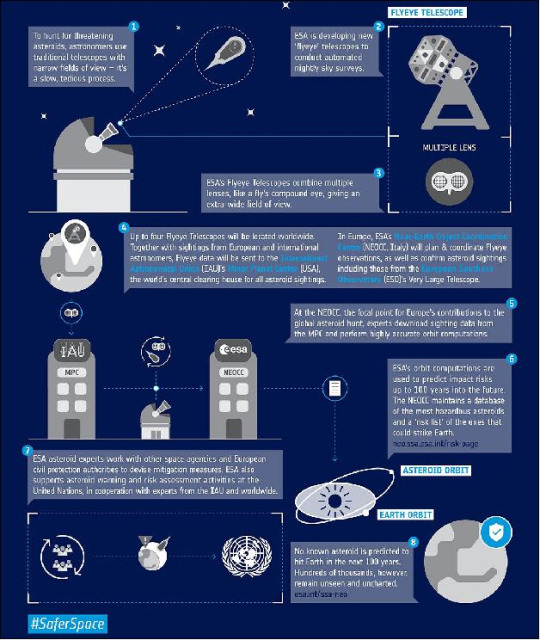

To demonstrate a method for deflecting potentially deadly space rocks headed for Earth, NASA's Double Asteroid Redirection Test (DART) mission will deliberately crash a spacecraft into an asteroid on Monday.

DART will collide with the asteroid moon Dimorphos on September 26 at 7:14 p.m. EDT (23:14 UTC). NASA TV will begin airing live coverage at 6:00 p.m. EDT.

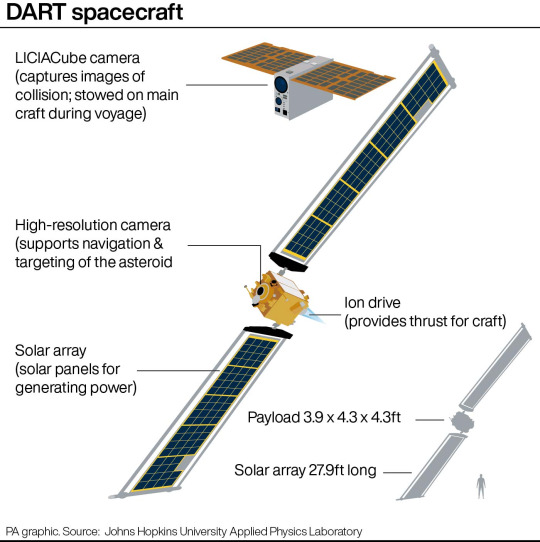

DART will transmit roughly one photograph per second throughout the last hour of the mission, as Dimorphos grows from a point of light into an object that fills the entire camera frame.

Dimorphos' orbit around Didymos, its bigger parent asteroid, will change as a result of DART's collision. This alteration will be visible to telescopes on Earth, providing proof that the test was successful.

DART's CubeSat will also observe the impact, and the European Space Agency's Hera probe will travel to Dimorphos in 2026 to examine the crater left behind.

For billions of years, asteroids have been colliding with Earth. Finally, mankind is responding, starting with DART.

Why is DART crucial?

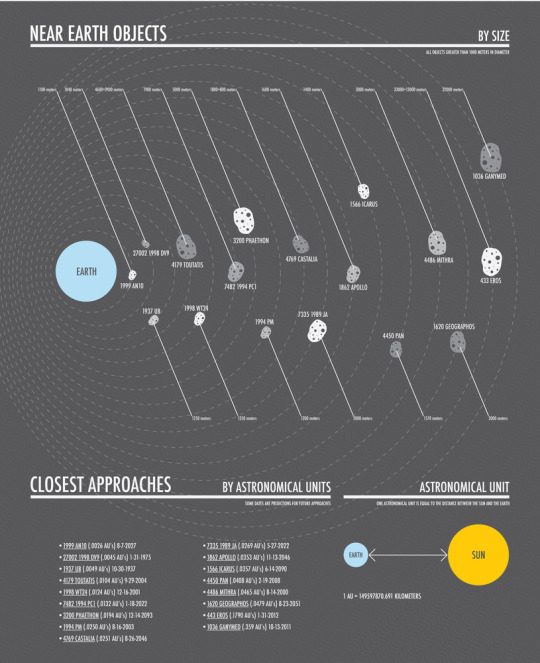

An asteroid 6 miles (10 kilometers) wide that struck Earth 66 million years ago wiped out the dinosaurs and caused a worldwide extinction. Although some may evade detection, we believe we have located the majority of asteroids this large, and none are headed directly for Earth.

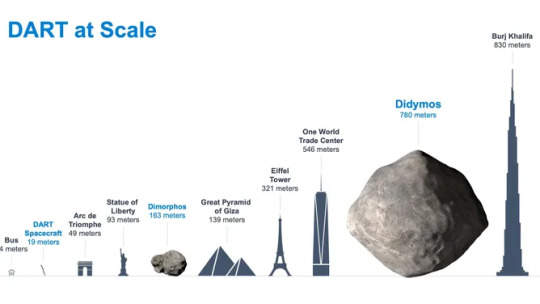

But it doesn't take anything that big to wreak havoc. An asteroid just 50 meters (160 feet) wide has the potential to kill a lot of people under the right circumstances.

The small asteroid that exploded near Chelyabinsk, Russia, in 2013 was only around 20 meters wide, yet it injured 1,500 people and damaged 7,200 structures in six towns.

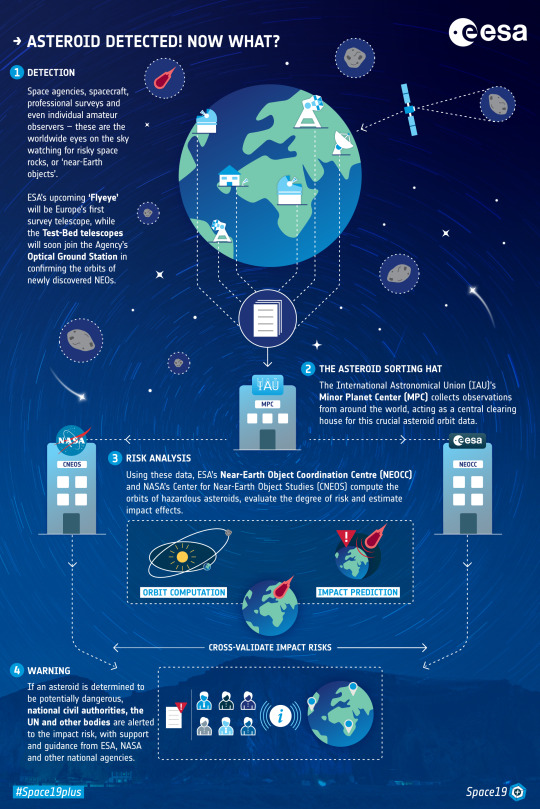

Near-Earth asteroids, also known as near-Earth objects (NEOs), are asteroids that pass by Earth on their orbits. If we discover a dangerous NEO on a path to strike Earth with enough time to spare, we may be able to gently push it off course.

This is known as the kinetic impactor method. By crashing into the asteroid Dimorphos, DART will put this strategy to the test and demonstrate how a spacecraft can change an asteroid's orbit.

When will DART impact Dimorphos?

youtube

DART will impact Dimorphos at 7:14 p.m. EDT (23:14 UTC) on September 26, 2022. NASA TV will begin airing live coverage at 6:00 p.m. The Johns Hopkins Applied Physics Laboratory in Laurel, Maryland, is in charge of running the mission.

DART's final transmissions will take 38 seconds to reach Earth at the speed of light. The spacecraft launched on November 24, 2021, and its 10-month voyage will end with the impact.
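The 38-second signal delay implies a distance between DART and Earth at impact. A minimal sketch of that arithmetic, assuming the 38 seconds is a one-way light travel time (the function name is illustrative, not taken from any mission software):

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre


def light_time_distance_km(delay_s: float) -> float:
    """Distance covered by a radio signal during a one-way delay."""
    return delay_s * SPEED_OF_LIGHT_KM_S


distance_km = light_time_distance_km(38)
print(f"{distance_km / 1e6:.1f} million km")  # prints "11.4 million km"
```

In other words, the collision happens roughly 11 million kilometers from Earth.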

What will the closing minutes of DART entail?

We won't see much until the very last hour, and particularly the very last five minutes.

Didymos and Dimorphos are already visible to DART, although they are still only a single point of light. DART is moving fast, and Didymos and Dimorphos are both rather small. Whereas previous asteroid missions like Hayabusa2 at Ryugu and OSIRIS-REx at Bennu featured leisurely approaches and plenty of beautiful photos, DART will approach Dimorphos at a blistering 6.6 kilometres (4 miles) per second.

Didymos is between Ryugu and Bennu in size, at around 780 metres (2,560 feet) wide. Dimorphos, at just 160 metres (525 feet) wide, is smaller than both.

Four hours before impact, the DRACO telescopic camera on board the spacecraft will begin sending photos to a set of navigation algorithms called SMART Nav. SMART Nav's task is to locate Dimorphos and steer the spacecraft into a collision on its own.

One of those photos will be sent to Earth every second or so. Although DRACO's detector has a resolution of 2560 × 2160 pixels, the pictures are reduced in size so that DART can relay them quickly—to only 512 by 512 pixels.
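To get a feel for how much the downsizing saves, here is a quick pixel-count comparison. It is only a sketch: it assumes the data volume scales with the number of pixels and ignores compression and bit depth, which the article does not describe.

```python
# Detector and downlinked image sizes quoted in the article
full_width, full_height = 2560, 2160
small_width, small_height = 512, 512

full_pixels = full_width * full_height      # 5,529,600
small_pixels = small_width * small_height   # 262,144
reduction = full_pixels / small_pixels

print(f"{full_pixels:,} -> {small_pixels:,} pixels ({reduction:.1f}x fewer)")
```

Each downlinked frame therefore carries about one twenty-first of the pixels the detector captures.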

The DART team and NASA TV will both see these photographs at the same time. What can we expect to see?

With an hour till impact, Dimorphos should finally be visible to DART as a distinct point of light, albeit one that is just a few pixels wide.

Both asteroids should still be visible in the camera frame four minutes before collision. Dimorphos will be roughly 20 pixels wide, whereas Didymos will be about 100 pixels wide.

Two minutes before impact: Dimorphos will be about 45 pixels wide, and it will be obvious that DART is approaching.

Terik Daly, the DRACO deputy instrument scientist, predicted that DART will be able to observe stones and rocks on Dimorphos as large as vending machines 20 seconds before impact. If everything goes as planned, Daly told The Planetary Society, "the last few milliseconds before impact will enable us to observe features on the surface with a pixel size of around ten centimeters (about 4 inches) per pixel."
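The countdown above can be turned into rough distances from Dimorphos. A minimal sketch, assuming DART closes at a constant 6.6 km/s throughout the final hour (a simplification; the function name is illustrative):

```python
CLOSING_SPEED_KM_S = 6.6  # approach speed quoted in the article


def distance_to_impact_km(seconds_before_impact: float) -> float:
    """Distance still to cover, assuming a constant closing speed."""
    return CLOSING_SPEED_KM_S * seconds_before_impact


for label, seconds in [("1 hour", 3600), ("4 minutes", 240),
                       ("2 minutes", 120), ("20 seconds", 20)]:
    distance = distance_to_impact_km(seconds)
    print(f"{label:>10} before impact: {distance:,.0f} km")
```

Halving the distance roughly doubles the apparent size of the target, which is broadly in line with Dimorphos growing from about 20 to about 45 pixels between the four-minute and two-minute marks.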

DART will be destroyed when it collides with Dimorphos. Depending on the makeup of Dimorphos, the resulting crater might be as large as 20 meters (66 ft) across. Because signals traveling at the speed of light take 38 seconds to reach Earth, the impact will already be 38 seconds in the past by the time we see DART's transmissions stop.

How will we know if DART worked?

DART's success will eventually be determined by measurements made using ground-based telescopes.

From Earth, Didymos and Dimorphos appear as a single point of light. As the two asteroids pass in front of one another, the brightness of that point of light changes. This makes it possible to calculate Dimorphos' orbit around Didymos.

Dimorphos's orbital period should be shortened by the impact of DART from about 11.9 hours to 11.8 hours, demonstrating the effectiveness of the kinetic impactor method.
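To put the expected change in perspective, the two periods quoted above can be compared directly. This is only arithmetic on the article's rounded figures, not a prediction of the measured result:

```python
period_before_h = 11.9  # orbital period before impact, from the article
period_after_h = 11.8   # expected period after impact, from the article

change_min = (period_before_h - period_after_h) * 60
change_pct = (period_before_h - period_after_h) / period_before_h * 100

print(f"{change_min:.0f} minutes shorter ({change_pct:.2f}% of the original period)")
```

A shift of a few minutes per orbit is small, but it accumulates with every orbit, which helps ground-based telescopes detect it.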

The Lowell Discovery Telescope in Arizona, Las Campanas Observatory in Chile, the worldwide network of Las Cumbres Observatory, and the Magdalena Ridge Observatory in New Mexico are just a few of the telescopes that the DART team uses for its studies.

What will the CubeSat for DART do?

DART will release a CubeSat called LICIACube, the Light Italian Cubesat for Imaging of Asteroids, about 10 days before impact.

The Italian Space Agency's LICIACube was built to observe DART's impact, the plume it produces, and possibly the resulting crater. It will use two cameras called LUKE and LEIA to do so from a distance of around 50 kilometers (31 miles).

Don't expect LICIACube's photographs right away: the CubeSat can only communicate with Earth at low data rates, so sending them back might take months.

How will Hera look into the effects of DART?

The European Space Agency will launch a mission called Hera in 2024 to thoroughly investigate what happened when DART collided with Dimorphos. Hera will arrive in 2026 with a suite of instruments to explore Didymos and Dimorphos, particularly the impact crater that DART created. The spacecraft is equipped with a laser altimeter to produce 3D maps and an infrared camera to measure the asteroids' temperatures and learn more about their surface characteristics.

Additionally, Hera will release two of its own CubeSats. They will investigate the asteroids' interiors before trying to land on Didymos, Dimorphos, or both.

What distinguishes DART from Deep Impact?

Planetary defense was not the goal of Deep Impact. The spacecraft, launched in January 2005, hit Comet Tempel 1 on July 4, 2005. The collision showed the comet to be around 75% empty space, suggesting that it likely originated from the Oort cloud, a region of icy debris that surrounds our solar system.

After completing its mission at Tempel 1, Deep Impact was given the new designation Deep Impact (EPOXI) and dispatched to Comet Hartley 2. Extrasolar Planet Observations (EPOCh) and Deep Impact Extended Investigation (DIXI) make up the double-nested acronym EPOXI.

After Hartley 2, NASA planned to send Deep Impact (EPOXI) to a third comet, but contact with the probe was lost in August 2013.

What is the cost of DART?

The DART mission costs $324.5 million. NASA's planetary defense program spent $308 million on the spacecraft itself, as well as $68.8 million for launch services and $16.5 million for operations and data analysis.

How else might asteroid strikes be avoided?

DART is only one piece of the much larger puzzle of preventing harmful asteroids from striking Earth.

First, we must find near-Earth objects. The fraction of asteroids we've discovered will increase significantly with the launch of the NEO Surveyor mission.

Once discovered, an asteroid must be tracked and studied.

It is important to support asteroid-hunting astronomers through grants, since the world's professional sky surveys cannot do all of this work on their own.

By locating, tracking, and characterizing asteroids, and then testing deflection methods, we can significantly lower the likelihood that our planet will be hit by a hazardous space rock.

~ Jai Krishna Ponnappan

Find Jai on Twitter | LinkedIn | Instagram

#NASA#DART#Double Asteroid Redirection Test#Asteroid#Dimorphos#Asteroid Diversion#NEO#Near Earth Objects#Eart Protection#Space Weather

7 notes


Text

also HERE

this episode is almost all about OJ. follow the 2nd link above HERE to skip ahead, if the OJ story doesn't interest you.

00:00 Open / OJ Simpson in Review

02:51 The OJ story begins long before the trials

05:46 Johnnie Cochran as the deciding factor

08:55 Behind the scenes of The OJ Simpson Civil Trial (Original sketch)

12:12 Mark Fuhrman: The subplot of the OJ Simpson trial

14:17 Differences between the criminal and civil trials

16:32 OJ Simpson's Testimony (Original sketch)

23:52 'Lies' by The Knickerbockers

26:36 The Verdict, The Wrap Party, and The End of the Road

30:06 'Rachmaninoff: Symphony No. 2 in E Minor, Op. 27: II. Allegro molto' by Eugene Ormandy & The Philadelphia Orchestra

38:03 One final thought: Races of the juries

38:34 The Apologies of the Week: Canada, Shohei Ohtani's former interpreter Ippei Mizuhara, State Rep. Don Wilson

43:05 News of Crypto Winter: Two Australian cryptocurrency companies have collapsed owing more than $150 million

45:34 'Pixelated (F Minor)' by Tom McDermott

46:56 'Bad Kids To The Back' by Snarky Puppy

52:40 News of the Olympic Movement: Track and field becomes the first sport to pay prize money at the Olympics

54:47 'Olympic Fanfare and Theme' by Boston Pops Orchestra & John Williams / Close

57:00 'I Got Rhythm' by Oscar Peterson

1 note


Text

The Definitive Faceoff: Meta Quest 3 versus Apple Vision Pro

The Meta Quest 3 and Apple Vision Pro stand at the forefront of the virtual and augmented reality realm, each offering unique features and capabilities. This comparative analysis aims to simplify the intricate landscape of spatial computing, aiding in your decision-making process.

1. Hardware and Performance:

Meta Quest 3:

Boasting a formidable Qualcomm Snapdragon XR2 Gen 2 processor, the Meta Quest 3 delivers enhanced processing power. Its 4K+ Infinite Display, with a resolution of 2064 x 2208 per eye, promises crisp visuals. The device offers a 90 Hz refresh rate, with a planned upgrade to 120 Hz, and features a compact design, 40% smaller than its predecessor. Additionally, its AR passthrough enriches the mixed reality experience.

Apple Vision Pro:

Powered by Apple's M2 and R1 chips, the Apple Vision Pro features Micro-OLED displays offering 4K HDR per eye. With 23 million pixels in total, it promises exceptional sharpness and detail. Functionalities such as spatial audio and eye-tracking further enhance its appeal.
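The resolution figures quoted for the two headsets can be compared directly. A small sketch, assuming the Vision Pro's 23 million pixels (a rounded marketing figure) are split evenly across its two displays, and using 3840 x 2160 as the reference size of a 4K UHD television:

```python
# Meta Quest 3: per-eye resolution quoted in the comparison
quest3_per_eye = 2064 * 2208

# Apple Vision Pro: assumed even split of the rounded 23 million total
vision_pro_per_eye = 23_000_000 // 2

# Reference point: a 4K UHD television panel
uhd_4k = 3840 * 2160

ratio = vision_pro_per_eye / quest3_per_eye

print(f"Quest 3 per eye:    {quest3_per_eye:,}")
print(f"Vision Pro per eye: {vision_pro_per_eye:,} (approx.)")
print(f"4K UHD panel:       {uhd_4k:,}")
print(f"Vision Pro / Quest 3: {ratio:.1f}x")
```

Under these assumptions, each Vision Pro display has roughly two and a half times the pixels of a Quest 3 display and more than a 4K television.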

2. Style and Convenience:

Meta Quest 3:

The Meta Quest 3 is praised for its improved comfort and sleek design. Its built-in adjustability allows for a personalized fit, although some users may experience motion sickness during prolonged usage.

Apple Vision Pro:

Aligning with Apple’s aesthetics, the Vision Pro features a sleek and innovative design. It offers intuitive controls and motions, though some users find it heavy and uncomfortable for extended periods.

3. User Immersion and Experience:

Meta Quest 3:

Adaptable to various users, the Meta Quest 3 delivers a high-powered GPU and display, enhancing the gaming experience. However, concerns regarding fit and comfort may deter some users, alongside its premium price point.

Apple Vision Pro:

Revolutionizing the industry with its immersive AR/VR experience, the Vision Pro incorporates innovative features like spatial audio and eye-tracking. Nonetheless, its high price tag and battery life concerns may limit accessibility.

4. Accessibility and Price:

Meta Quest 3:

Positioned as a more budget-friendly option, the Meta Quest 3 may still pose affordability challenges due to its premium features.

Apple Vision Pro:

While offering revolutionary technology, the Vision Pro comes at a high cost, appealing predominantly to tech enthusiasts and early adopters.

In conclusion, the Meta Quest 3 caters to a wider demographic with its gaming capabilities, adaptability, and reasonable price, whereas the Apple Vision Pro targets those who seek cutting-edge AR/VR experiences and are willing to invest. Ultimately, the choice between the two devices hinges on individual preferences and priorities in the realm of spatial computing.

0 notes

Text

What is Vision OS? The source of Apple Vision Pro

Apple Vision Pro, which includes visionOS, the world's first spatial operating system, enables users to engage with digital information as if it were physically present in their surroundings. The groundbreaking design of Apple Vision Pro includes an ultra-high-resolution display system with 23 million pixels over two screens, as well as Apple silicon in a unique dual-chip architecture to ensure that every experience seems like it's happening in front of the user's eyes in real time.

Read more: https://www.mindstick.com/articles/335289/what-is-vision-os-the-source-of-apple-vision-pro

0 notes

Text

Vision Pro Users will Soon be Able to Use Their Headset for Zoom Calls

While it's not the first headset on the market to incorporate mixed-reality elements into its featureset, Apple's Vision Pro is certainly one of the more eye-catching tech products that we've seen in recent years, thanks to the possibilities that it offers in terms of software experiences. This will no doubt include support for entertainment platforms, games, and more importantly productivity apps.

With that in mind, Zoom recently announced that it will be launching a special app designed for the Vision Pro, which brings major features from the mainline app to a more unique form factor for users, in addition to some new add-ons for the app. The app launches on February 2nd, but what exactly can we expect with it?

Zoom Features for Vision Pro

First up are personas, which are spatial representations of users within the Vision Pro. This also allows other meeting participants to view their facial and hand movements while using the app. There's also the "Spatial Zoom Experience," which allows an immersive user setup that can be scaled to a user's desired size without the need for additional gear or setup.

Zoom adds that it will bring over more features later this spring. These include 3D object sharing, which lets users share 3D files with others for better viewing in a spatial computing scenario and is ideal for media and design projects. Zoom Team Chat, a staple feature of Zoom in general, is also scheduled to come to the app. There's also "Real-world Pinning," which allows users to pin up to five Zoom Meeting participants anywhere in their physical space, with the option to remove the background of pinned participants.

Vision Pro Specs

Announced back in June last year, the Vision Pro is Apple's first attempt at a premium MR headset, although the company has frequently leaned towards the term "spatial computing." In terms of design, the Vision Pro comes with a curvy look that incorporates aesthetic elements from other Apple products, with a laminated glass visor that joins into an alloy frame.

Inside is a micro-OLED setup that packs 23 million pixels across two displays, as well as a custom Apple M2 SoC, allowing it to be used for an array of different applications as well as hardware, with planned connectivity for other Apple products such as the Magic Keyboard and Trackpad, in addition to natively-built gesture and motion controls. FaceTime support will also be available soon, for more immersive video calls.

The App Fiasco

While Zoom has pledged to provide exclusive software support for the Vision Pro, other major platforms have yet to warm up to Apple's fancy new headset - for example, Netflix announced that it won't be launching an app for the Vision Pro, essentially citing the need for additional time and effort for a relatively-small user base.

On the one hand, users can still watch their favourite Netflix programming with the Vision Pro's web browser, although one could argue that this isn't the most seamless method overall. Other big names such as Spotify and YouTube have also stated that they won't be bringing over exclusive apps for the Vision Pro.

Going back to Zoom support however, it is pretty interesting to see how work calls will be handled on the Apple Vision Pro, and this does provide users with a sneak peek as to how spatial computing will work on the new headset.


0 notes

Text

wow. thats all i have to say for now.

It does everything i wanted and so so so so much more.

They thought of it all and solved our brief. Great.

...

or have they.

two screens with 23 million pixels for each eye. which means it is close to your eye. Will this pose a problem for the future of eye diseases?

youtube

due to be released in late 2024

0 notes

Video

youtube

What is Spam Score | How to Reduce Spam Score Of Website

Spam Score shows the percentage of sites with features similar to the site you are researching that have been penalized or banned by Google. Spam Score is based on our machine learning model, which identified 27 common characteristics among millions of banned or penalized sites.

A low spam score is defined as a score of 1%-30%.

Medium spam scores range from 31% to 60%.

A high spam score ranges from 61% to 100%.
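The three bands above map naturally onto a small lookup function. This is an illustrative sketch, not part of any Spam Score tool: the function name is made up, and treating a score of 0% as "low" is an assumption, since the ranges above start at 1%.

```python
def spam_score_band(score_percent: int) -> str:
    """Return 'low', 'medium', or 'high' for a Spam Score percentage.

    Assumption: 0% is treated as 'low', although the quoted range starts at 1%.
    """
    if not 0 <= score_percent <= 100:
        raise ValueError("Spam Score must be between 0 and 100")
    if score_percent <= 30:
        return "low"
    if score_percent <= 60:
        return "medium"
    return "high"


for score in (12, 45, 78):
    print(f"{score}% -> {spam_score_band(score)}")
```

The band is only a prompt to look more closely at a site; it does not by itself say that the site is spammy.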

A high spam score for your site or the site you are considering does not necessarily mean that it is spammy. It is a sign that you should look more closely at the quality and relevance of the site.

The 27 characteristics are:

1. Low number of pages found

2. TLD correlated with spam domains

3. Domain name length

4. Domain name contains numerals

5. Google Font API Present

6. Google Tag Manager

7. Doubleclick Present

8. Phone Number Present

9. Links to LinkedIn

10. Email Address Present

11. Defaults to HTTPS

12. Use of Meta Keywords

13. Visit Rank

14. Rel Canonical

15. Length of Title Element

16. Length of Meta Description

17. Length of Meta Keywords

18. Browser Icon

19. Facebook Pixel

20. Number of External Outlinks

21. Number of Domains Linked-To

22. Ratio of External Links to Content

23. Vowels/Consonants in Domain Name

24. Hyphens in Domain Name

25. URL Length

26. Presence of Poison Words

27. Uses High CPC Anchor Text

#spam #whatisspam #spamscore #wesbitespam #mozspamscore #bannedwebsite #canonical #googletagmanager #internallinnk #externallink #metakeyword #content #domainname #domainname #anchortext #facebookpixel

0 notes

Text

[00:27:38]

Dan Smigrod: - Let me just try and ask that question again. If we're talking about a virtual reality experience, a VR experience meets Matterport. That seems like it fell flat. It fizzled. You called it a VR winter. Paolo called it a VR winter.

How important is it that Apple has come out and said that the Apple M2 chip + the Apple R1 chip make for a latency-free viewing experience?

There are 64 pixels for every pixel of an iPhone, meaning there's 23 million pixels on a postage-stamp-size screen in each eye, or the equivalent of 4K for each eye. I remember when I was using the Samsung Gear VR with my Samsung phone, it looked like "burlap" in terms of the screen resolution.

It was, of course, and it sounds like Apple's about to come out with a silky smooth viewing experience, as you described it, Emily.

Also the fact that you can come in and out of the experience of what I think of being in the real-world versus immersed in a virtual world. Are these factors super-important in terms of the potential success of Matterport + Apple Vision Pro?

[00:29:01]

Emily Olman: - Go ahead, Paolo.

[00:29:06]

Paolo Tosolini: - I'm thinking that, in general, for the success of the Apple Vision Pro, having a 4K resolution for each eye is very important because it opens up the possibility to do actual work: to read text; to spend more time on the device and do something meaningful other than just gaming.

Gaming, if it's low resolution, I can bear with it, but I cannot do actual work on a low resolution screen. Now, how will Matterport benefit from the combination of this hi-res? Well, photography will be better. The tour will look better.

However, I'm thinking of something else. Matterport as a company is hosting all of these virtual tours and so on, but it's becoming a data company. They just released a week ago, Matterport Genesis AI [WGAN.INFO/mpgenesisai].

[00:30:10]

Dan Smigrod: - June 14, 2023 -- Matterport Genesis AI -- like ChatGPT4 for inside of a Matterport tour. Isn't that crazy and exciting? Relevant to our conversation for Matterport?

[00:30:23]

Paolo Tosolini: - I think so.

[00:30:25]

Dan Smigrod: Matterport tours + Matterport Genesis generative AI + Apple Vision Pro?

[00:30:32]

Paolo Tosolini: AI, these keywords, perfect. ;-)

[00:30:37]

Dan Smigrod: Just to pause for our viewers, that means AI. You're looking at a house, and you type in, "show me the house without any furniture." Boom! It's clutter-free. Type in, "show me the house with contemporary furniture." Boom! It's filled in.

How significant is Matterport Genesis generative AI in a Matterport tour + Apple Vision Pro experience, Paolo?

[00:30:55]

Paolo Tosolini: To me, it is significant. For example, a simple use case. I do a Matterport scan of an empty office. I load it into Apple Vision Pro.

Then, through Matterport Genesis generative AI, I'm standing in the middle of this office and I can pre-visualize what this office might look like if we subdivided this open space into individual rooms. This is what Emily already did with her app.

[00:31:28]

Dan Smigrod: Yes. Is this better than holding up an Apple iPhone or an Apple iPad?

[00:31:33]

Paolo Tosolini: Totally.

[00:31:33]

Emily Olman: It's going to be better, but also, it's not as universal yet. That's one of its problems is that the price point is intended right now for developers and for early adopters. And it's going to show you what the future will be. Yeah, Paolo is right.

This is totally relevant. Matterport Genesis AI -- being able to have Matterport combined with AI and to be able to with a verbal prompt, if you will, pull a model off of a URL, pull it out, grab the model and bring it into wherever you want it to be.

You can put it around you, or you can hold it and probably rotate it. Paolo and I could be standing around it and looking at it, like we already do with other AR experiences.

0 notes

Text

Apple Vision Pro is an upcoming mixed reality headset developed by Apple Inc. It was announced on June 5, 2023, at its 2023 Worldwide Developers Conference and is set to be available for purchase in 2024 in the United States.

Here are some of the key features of Apple Vision Pro:

Ultra-high-resolution display: The headset features two micro-OLED displays with a combined resolution of 23 million pixels, which is more than a 4K TV for each eye.

Spatial Audio: The headset has an advanced Spatial Audio system that creates the feeling that sounds are coming from the environment around the user.

R1 chip: Alongside the M2 processor, the headset includes the new R1 chip, which is designed for high-performance spatial computing.

Hand gesture and voice control: The headset can be controlled using hand gestures and voice commands.

visionOS: The headset runs on a new operating system called visionOS, which is specifically designed for mixed reality.

Apple Vision Pro is expected to be a major player in the mixed reality market. It has the potential to revolutionize the way we work, play, and communicate.

Here are some of the potential uses for Apple Vision Pro:

Virtual reality gaming: The headset's high-resolution display and Spatial Audio system will make virtual reality gaming more immersive than ever before.

Productivity apps: The headset can be used to run productivity apps like Microsoft Office and Adobe Photoshop in a virtual environment.

Communication apps: The headset can be used to make video calls and collaborate with others in a virtual space.

Education: The headset can be used to learn about different subjects in a more immersive way.

Entertainment: The headset can be used to watch movies, TV shows, and play games in a virtual environment.

Apple Vision Pro is still in development, so it is not yet clear how it will be used in the real world. However, the potential uses for this headset are vast. It has the potential to change the way we interact with the world around us.

0 notes

Text

iPhone 15 Series Set to Integrate Built-in Support for Apple Vision Pro Headset

Rumors have been swirling about the upcoming iPhone 15 series, and the latest reports suggest that these new iPhones will feature built-in support for Apple's cutting-edge Vision Pro headset. Apple enthusiasts can expect the release of a lineup comprising the iPhone 15, iPhone 15 Plus, iPhone 15 Pro, iPhone 15 Pro Max, and the rumored iPhone 15 Ultra in September. These speculations come from none other than the well-regarded Apple analyst, Ming-Chi Kuo, who has an impressive track record when it comes to predicting Apple's future moves.

Seamless Integration: iPhone 15 Series to Enhance User Experience with Apple Vision Pro Headset

According to Kuo, Apple has ambitious plans to integrate the iPhone into the Vision Pro ecosystem, and this integration will commence with the iPhone 15 series. As part of this development, Apple intends to upgrade the ultrawideband (UWB) chip found in the iPhone 15 series to support Vision Pro. Since the launch of the iPhone 11, Apple has been utilizing the U1 ultrawideband chip in its devices. This chip is also present in other Apple products, including AirTag, Apple Watch Series 6 and later models, AirPods Pro charging case, and more. By transitioning to a 7nm fabrication process for the iPhone 15 series, Apple aims to reduce power consumption while enhancing overall performance.

For those unfamiliar with the capabilities of the Apple U1 chip, it enables features such as Find My, AirDrop, Handoff, and Precision Finding. With an enhanced UWB chip in the iPhone 15 series, the reliability and performance of these features will be further improved, enabling seamless compatibility with the Vision Pro headset. This integration will facilitate effortless interaction between Apple's highly advanced wearable and its other products.

Critics may argue that the Apple Vision Pro headset is prohibitively expensive for the average consumer, rendering the integration unnecessary. However, recent rumors suggest that Apple is also working on a more affordable mixed-reality headset, potentially named Apple Vision. The news of Apple adding support for the headset indicates that the Apple Vision may indeed become a reality. To ensure cost-effectiveness, the Apple Vision is rumored to feature lower-resolution displays, older processors, fewer sensors, and fewer cameras. This approach will help keep the price within a reasonable range. The Apple Vision Pro, on the other hand, is slated for release in 2024 with a price tag of $3,499 (~Rs 2,88,000). It boasts impressive features, including two micro OLED displays with a combined resolution of 23 million pixels, Spatial Computing, EyeSight, an M2 processor, a custom media R1 processor, and much more.

At present, details of the exact specifications of the Vision Pro headset and the iPhone 15 series remain scarce. However, with the release date drawing near, more information is expected to surface in the coming days. Apple fans and technology enthusiasts alike eagerly await the unveiling of these products, which promise to deliver an exceptional user experience and push the boundaries of innovation.


Exploring Apple Vision Pro: A Fresh Perspective on Spatial Computing

Quick Summary

The new Apple Vision Pro is a revolutionary spatial computer that seamlessly blends digital content with the physical world. This blending of the digital and physical worlds allows users to stay present and connected to others in their daily lives. The system introduces a fully three-dimensional user interface controlled by the most natural and intuitive inputs possible — a user’s eyes, hands, and voice.

Introduction to Apple Vision Pro

Groundbreaking technology: visionOS, which Apple describes as the world’s first spatial operating system, lets users interact with digital content in real time. To accomplish this, Apple combined an ultra-high-resolution display system that packs 23 million pixels across two displays with custom Apple silicon in a unique dual-chip design, rethinking how a device senses and interacts with the world.

The Vision Pro is a headset with a bold design and big promises. With its $3,499 price tag, it is easy to picture the headset on display in high-end technology stores such as Apple’s own. With its premium design, the Vision Pro deserves that kind of reputation.

Apple’s new visionOS aims to reduce the development time of applications and games within the AR ecosystem. RealityKit and Reality Composer Pro, meanwhile, are meant to make it easier to design AR content for an existing or new AR application. Reality Composer Pro lets developers assemble and preview 3D content and entire scenes. Vision Pro development builds on existing Apple technology, with full support for Apple’s SwiftUI and Xcode; one example shown was voice recognition triggering the execution of code.

The headset pairs a laminated glass front with an aluminum alloy frame, in a polished finish reminiscent of the Apple Watch, and is held in place by a fabric headband. The first units will come in two sizes, one bigger than the other. An array of sensors including a gyroscope, an accelerometer, a magnetometer, and a barometer will provide orientation tracking and health monitoring. Display resolution is about twice that of the current Rift prototype shown to developers.

Extraordinary New Experiences

Apple Vision Pro is a stunning technology that brings elements of augmented reality, 3D, advanced gesture controls, and a powerful new interface to personal computing.

An infinite canvas for apps at work and at home: visionOS features a three-dimensional interface that frees apps from the boundaries of a display so they can appear side by side at any size. Apple says that this makes it easier to multitask and use more apps simultaneously. The headset has been designed with productivity in mind and, as such, supports accessories such as Magic Keyboard and Magic Trackpad.

Engaging entertainment experiences: Apple Vision Pro is the most advanced entertainment system Apple has ever created. It’s built to deliver an authentic and intensely immersive viewing experience, with two ultra-high-resolution displays (a combined total of 23 million pixels) that can present a screen that feels 100 feet wide, along with advanced Spatial Audio. Users can watch movies and TV shows, or enjoy stunning three-dimensional movies. And Apple Immersive Video offers 180-degree high-resolution recordings with Spatial Audio, transporting users to entirely new places.

Immersive Environments: Environments bring the power of spatial computing to Vision Pro. With them, users can choose virtual environments and adjust them in myriad ways. This lets a user’s world grow beyond the dimensions of a physical room with dynamic, beautiful landscapes that can help them focus or reduce clutter in busy spaces.

Memories come alive: Apple’s commitment to the highest standards of image capture and processing, combined with decades of experience delivering consumer experiences, makes Apple Vision Pro an exceptional choice for everyone from casual photographers to industry professionals. The result is an ever-expanding collection of photos and videos to enjoy anytime, anywhere, on iPhone or Mac, all accessible across Apple devices.

FaceTime becomes spatial: With Apple Vision Pro, FaceTime calls take advantage of the room around the user. To reflect real-world environments in FaceTime, we looked to see how people are communicating today — taking in the whole scene and interacting with everyone within it.

A Revolutionary Operating System and User Interface

visionOS is a new operating system designed to take personal computing to a new level. Built from the ground up, it delivers powerful spatial computing experiences that can take advantage of the space around the user, unleashing new opportunities at work and at home. Apple Vision Pro also features EyeSight, an innovation that helps users stay connected with those around them. When a person approaches someone wearing Vision Pro, the device feels transparent, letting the user see them while also displaying the user’s eyes, providing a reassuring and welcoming experience. When a user is immersed in an environment or using an app, EyeSight gives visual cues to others about what the user is focused on.

What will Apple Vision Pro Bring to App Development?

Apple Vision Pro is the new spatial computing platform that allows Apple to market its augmented reality glasses. The new platform is poised to change the way software developers, software testers, and cybersecurity experts create applications.

For App Developers: With iOS 11, Apple introduced ARKit, its augmented reality (AR) framework, alongside the Vision framework for computer vision, opening up the world of AR to developers and designers across the globe. Paired with the Xcode 9 beta, these gave developers tools to build even better AR experiences for iOS devices. The Vision framework places a range of computer vision APIs at developers’ disposal, helping them quickly build AR experiences.

For Cybersecurity Professionals: Vision Pro is a new mobile app that was recently released for iOS devices. It allows users to perform eye-based facial recognition scans using their device’s front camera. This type of authentication is often used by enthusiasts in the cybersecurity community for fun, but also by some enterprises to identify employees and gain access to secure areas. Personal use of this type of application has increased considerably since its introduction on Snapchat where it is used to prevent users from taking screenshots.

For Software Testers: Vision Pro was developed by Exploratory Testing Software. The software company provides the leading testing platform for QA teams to collaborate in real-time and produce more reliable apps. Vision Pro is changing the way developers and testers work together and is designed to improve the way quality assurance processes are conducted.

For UI/UX Designers: Vision Pro claims to be the world’s most advanced authoring tool for creating spatial user interfaces. It facilitates the creation of applications with features supporting all of the senses, providing a much more natural and intuitive way to interact with computers. The San Diego-based software company that builds Vision, called Immersion Corporation, thinks this new technology will change the way applications are developed in the future.

Apple Vision Pro: Pioneering a New Era of AR-VR Wearable Technology

Apple launched ARKit to introduce the world to augmented reality. Along with a number of patents, it has been suggested that the new technology will be incorporated into all of their new iPhone models. Some developers are already porting their apps to this exciting new development. Many organizations are researching how to make better use of this technology for building engaging experiences and systems. Whatever your vision, or necessity, at Brain Inventory we’re experienced at leveraging our Virtual and Augmented Reality expertise to elevate the capabilities of your digital solutions.


Everything you need to know about Apple Vision Pro

Apple has just unveiled Apple Vision Pro, a revolutionary spatial computer that seamlessly blends digital content with the physical world, while allowing users to stay present and connected to others. Vision Pro creates an infinite canvas for apps that scales beyond the boundaries of a traditional display and introduces a fully three-dimensional user interface controlled by the most natural and intuitive inputs possible — a user’s eyes, hands, and voice.

Featuring visionOS, the world’s first spatial operating system, Vision Pro lets users interact with digital content in a way that feels like it is physically present in their space. The breakthrough design of Vision Pro features an ultra-high-resolution display system that packs 23 million pixels across two displays, and custom Apple silicon in a unique dual-chip design to ensure every experience feels like it’s taking place in front of the user’s eyes in real time.

“Today marks the beginning of a new era for computing,” said Tim Cook, Apple’s CEO. “Just as the Mac introduced us to personal computing, and iPhone introduced us to mobile computing, Apple Vision Pro introduces us to spatial computing. Built upon decades of Apple innovation, Vision Pro is years ahead and unlike anything created before — with a revolutionary new input system and thousands of groundbreaking innovations. It unlocks incredible experiences for our users and exciting new opportunities for our developers.”

“Creating our first spatial computer required invention across nearly every facet of the system,” said Mike Rockwell, Apple’s vice president of the Technology Development Group. “Through a tight integration of hardware and software, we designed a standalone spatial computer in a compact wearable form factor that is the most advanced personal electronics device ever.”

Apple Vision Pro starts at $3,499 (U.S.), and will be available early next year on apple.com and at Apple Store locations in the U.S., with more countries coming later next year. Customers will be able to learn about, experience, and personalise their fit for Vision Pro at Apple Store locations.

Check out the video below, which explains in a bit more detail how the Vision Pro works.

https://youtu.be/TX9qSaGXFyg


Meet the Vision Pro, Apple's first Official VR Headset

The rumours were indeed true - Apple's foray into VR technology has finally been made official in the form of the Apple Vision Pro, the company's first-ever VR headset. It's not available for several more months, although that didn't stop Apple from revealing the headset, giving fans (and folks curious enough about VR) a reason to wait just a bit longer.

In terms of design, the Vision Pro comes with a curvy look, which conjures up mental images of snowboarding goggles and snorkels. It makes for a streamlined appearance, although this will ultimately be a subjective matter. The design does incorporate aesthetic elements from other Apple products though, with a laminated glass visor that joins into an alloy frame.

The Vision Pro features speakers positioned externally from a user's ear, control buttons on the sides of the headset frame, as well as a rather-obvious strap that secures the Vision Pro on a user's head. Apple says that the Vision Pro comes with Light Seal, which wraps and conforms to a user's particular head and face shape allowing for a more secure and comfortable fit.

The Vision Pro runs on visionOS, which Apple describes as "the world's first spatial operating system" (we'll leave you guys to decide on that one). It is a clever approach to software though, allowing users to interact with digital content as if it were present in their immediate surroundings.

Inside the Vision Pro is a micro-OLED setup that packs 23 million pixels across two displays, as well as a custom Apple M2 SoC. The company intends the headset to be used for an array of different applications as well as hardware, with planned connectivity for other Apple products such as the Magic Keyboard and Trackpad, in addition to natively-built gesture and motion controls. FaceTime support will also be available soon, for more immersive video calls.

Of course, entertainment is a key element in the Vision Pro's usefulness - Apple says that the device will be able to deliver movies and TV shows to users in an immersive manner, in addition to gameplay access with over a hundred Apple Arcade titles. There's even built-in camera functionality for capturing and rendering what Apple calls spatial photos and spatial videos, letting users experience memories up close and personal. Building on Touch ID and Face ID, Apple says that the device will feature Optic ID for biometrics, which will scan a user's eyes to unlock and use the headset.

As for battery life, don't expect to go walking around à la Ready Player One just yet - at the moment, the device is limited to a rather unimpressive two hours of battery life per charge when unplugged, so users might want to opt for wired indoor use instead.

If you're planning on grabbing one for yourself, then it should be said that the Apple Vision Pro is a bit on the expensive side. The headset starts at $3,499, and it won't be available until early next year. Whether or not the Vision Pro will elevate VR into mainstream adoption is still unknown, but at the moment there's a lot riding on Apple's promises with the device.


War in Ukraine and ICBMs: The Untold Story of How They Could Blow Up the World February 23, 2023

Ever since Russia invaded Ukraine a year ago, media coverage of the war hasn’t included even the slightest mention of intercontinental ballistic missiles (ICBMs). Yet the war has boosted the chances that ICBMs will set off a global holocaust. Four hundred of them — always on hair-trigger alert — are fully armed with nuclear warheads in underground silos scattered across Colorado, Montana, Nebraska, North Dakota and Wyoming, while Russia deploys about 300 of its own. Former Defense Secretary William Perry has called ICBMs “some of the most dangerous weapons in the world,” warning that “they could even trigger an accidental nuclear war.”

"Because they’re uniquely vulnerable as land-based strategic weapons — with the military precept of “use them or lose them” — ICBMs are set to launch on warning. So, as Perry explained, “If our sensors indicate that enemy missiles are en route to the United States, the president would have to consider launching ICBMs before the enemy missiles could destroy them. Once they are launched, they cannot be recalled. The president would have less than 30 minutes to make that terrible decision.”

What is at stake? In an interview after publication of his landmark 2017 book “The Doomsday Machine: Confessions of a Nuclear War Planner,” Daniel Ellsberg explained that nuclear war “would loft into the stratosphere many millions of tons of soot and black smoke from the burning cities. It wouldn’t be rained out in the stratosphere. It would go around the globe very quickly and reduce sunlight by as much as 70 percent, causing temperatures like that of the Little Ice Age, killing harvests worldwide and starving to death nearly everyone on Earth. It probably wouldn’t cause extinction. We’re so adaptable. Maybe 1 percent of our current population of 7.4 billion could survive, but 98 or 99 percent would not.” But rather than openly discuss — and help to reduce — such dangers, U.S. mass media and officials downplay or deny them with silence. The best scientific research tells us that a nuclear war would result in “nuclear winter,” causing the deaths of about 99 percent of the planet’s human population."

"One corporate-media favorite, Timothy Snyder, churns out bellicose bravado under the guise of solidarity with the Ukrainian people, issuing declarations such as his recent claim that “the most important thing to say about nuclear war” is that “it’s not happening.” Which just goes to show that a prominent Ivy League historian can be as dangerously blinkered as anyone else."

To be clear: There is no valid excuse for Russia’s invasion of Ukraine and its horrific ongoing war on that country. At the same time, continually pouring in vast quantities of higher and higher tech weaponry qualifies as what Martin Luther King Jr. called “the madness of militarism.” During his Nobel Peace Prize speech, King declared: “I refuse to accept the cynical notion that nation after nation must spiral down a militaristic stairway into the hell of thermonuclear destruction.”

"In the coming days, reaching a crescendo Friday on the first anniversary of the Ukraine invasion, media assessments of the war will intensify. Upcoming protests and other actions in dozens of U.S. cities – many calling for genuine diplomacy to “stop the killing” and “avert nuclear war” — are unlikely to get much ink, pixels or airtime. But without real diplomacy, the future offers ongoing slaughter and escalating risks of nuclear annihilation."

READ MORE https://www.counterpunch.org/2023/02/23/war-in-ukraine-and-icbms-the-untold-story-of-how-they-could-blow-up-the-world/
