LRT vs BRT for value, utilization, emissions-savings: the two modes couldn’t be different

BRT, or bus rapid transit, has been described as light rail transit (LRT) on the cheap. If that is true, would this not be the same as saying LRT is nothing more than enhanced BRT?

On Jan. 11, 2014, a similar investigation compared passenger rail with roadway travel in general. By the end of the analysis the answer was clear: rail won, hands down. (See: “Rails vs roads for value, utilization, emissions-savings: difference like night and day” here.)

On the other hand, when comparing LRT to BRT, there doesn’t appear to be any clear winner. So, between LRT and BRT, does one stand above the other?

Baltimore Light Rail, Baltimore

What say we further investigate?

If what we’re after here is an apples-to-apples comparison, we’re probably not going to get one. There is, however, a case that comes close: the Confederation Line operated by OC Transpo in Ottawa, Ontario, Canada.

Before exploring that corridor and service further, it should be pointed out that successful rapid-bus and light rail transit systems can be found seemingly in all corners of the world. Such operations exist in Curitiba, Brazil (BRT); Cleveland, Ohio (BRT); Stockton, California (BRT); Portland, Oregon (LRT); Phoenix, Arizona (LRT); Salt Lake City, Utah (LRT); and, of course, Ottawa, which now has both.

The backstory – Ottawa

Ottawa, an early adopter of both modes, first installed BRT in 1983. The city also embraced LRT, with its original line, the Trillium Line, coming into being in 1999. Both have served the city well. The bus rapid transit, if anything, did too well: it became so popular that capacity could not keep up with demand. Something had to be done, and something was.

In 2012, Ottawa leaders decided that BRT was no longer going to cut it and opted for LRT in its place.

With that decision ruling, if not saving, the day, all that was left to do was build what is today known as the Confederation Line: a 7.8-mile light rail line running mostly east-west, with 13 stations along its length. At one of those stations it is joined by the north-south Trillium Line. As for the trains, they have been branded the “O-Train.” Service was inaugurated on Sept. 14, 2019, and daily ridership from then until year’s end was 159,000.

In the meantime, plans are afoot to extend not just the Confederation Line (both east and west), but the Trillium Line (south) as well.

So the question becomes: did this northern North American city make the wrong choice when it decided to implement BRT in the first place?

Here is what The Transport Politic blogger Yonah Freemark had to say about the shift away from buses in his post “Ottawa, Closer than Ever to Replacing Bus Rapid Transit with Light Rail”: “With expenses like that – practically equivalent to building a new rail line from scratch – one wonders whether there was ever any fiscal advantage to using buses first along the rapidway. Did the city lose out by not choosing rail when the transitway first opened in 1983?”

Offering more perspective, Freemark added, “The transitway has so many riders that it puts 2,600 daily buses onto two downtown streets, and by 2018, the system will have literally no more capacity. By 2030, Ottawa would have to get a bus downtown every eighteen seconds to accommodate all of its riders – an impossible feat.”
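A headway is simply the interval between successive vehicles, so Freemark’s figure can be turned around: one bus every 18 seconds means 3,600 / 18 = 200 buses per hour. The short Python sketch below does that conversion and also shows why larger vehicles relieve the pressure. The per-vehicle capacities (80 riders per bus, 600 per train) and the demand of 16,000 riders per hour are hypothetical round numbers chosen for illustration, not Ottawa data.

```python
SECONDS_PER_HOUR = 3600


def vehicles_per_hour(headway_s: float) -> float:
    """Vehicles passing a point each hour at a given headway (seconds between vehicles)."""
    return SECONDS_PER_HOUR / headway_s


def headway_for_demand(riders_per_hour: float, riders_per_vehicle: float) -> float:
    """Headway in seconds needed to carry a given hourly demand.

    Assumes every vehicle runs full, which real service never quite achieves.
    """
    vehicles_needed = riders_per_hour / riders_per_vehicle
    return SECONDS_PER_HOUR / vehicles_needed


# Freemark's 18-second headway, expressed as buses per hour
print(vehicles_per_hour(18))  # 200.0

# Hypothetical demand and vehicle capacities, for illustration only
DEMAND = 16_000
print(headway_for_demand(DEMAND, riders_per_vehicle=80))   # bus: about 18 s
print(headway_for_demand(DEMAND, riders_per_vehicle=600))  # train: about 135 s
```

Under these assumptions, a 600-rider train would need to arrive only about every two and a quarter minutes to carry the same demand that requires a bus every 18 seconds.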

So the problem is plain to see.

That said, it is important to note that Ottawa’s BRT, as wildly popular as it was, was the exception, not the rule.

Breaking new ground

It can be argued that the main function of public transit, in whatever form, is to provide an affordable mobility service to the masses: one that gets riders where they need or want to go; treads lightly, taking up as small a footprint as is practicable; is not unduly disruptive to other travel modes, ideally complementing them; and is efficient, fast, frequent, reliable and safe. If a system does all this, and the daily mobility needs of the transit-riding public are satisfactorily met, then the transit provider has done the job it was called on to do. Anything beyond that is what’s called gravy.

Baltimore, Maryland traffic jam

Speaking to that very point, on Oct. 26, 2015, the post “Transit as density facilitator, traffic and emissions inhibitor, report finds” was published here on the Air Quality Matters blog.

That post examined what introducing light rail transit to cities that had not previously had it would mean for development and population densification, for air pollution and greenhouse gas (GHG) emissions mitigation, and for ridership, the last of which bears directly on traffic reduction and changes in travel patterns. It is important to note that whereas BRT buses powered by internal combustion engines emit harmful pollutants into the atmosphere, electrified LRT trains produce no such tailpipe emissions.

Moreover, other important factors that might not immediately come to mind must not be overlooked: the number of operating employees that must be hired, the capital outlay required, and the cost of procuring fuel or energy, which depends on whether the mode is LRT or BRT. Over time, all of this could make a huge difference.

With all of this in mind, areas weighing one mode against the other may find it instructive to treat the Ottawa BRT/LRT experience as a model against which others can be measured.

Images: AndrewHorne, Wikimedia Commons (upper); U.S. Census Bureau (second); Clean Energy Canada (third); Alstom (lower)

Published by Alan Kandel

source https://alankandel.scienceblog.com/2020/11/01/lrt-vs-brt-for-value-utilization-emissions-savings-the-two-modes-couldnt-be-different/

Designing A Better Battery

Two researchers from the Artie McFerrin Department of Chemical Engineering at Texas A&M University are making strides in developing the next generation of batteries.

Professor Perla Balbuena and Professor Jorge Seminario are developing protective layers that make batteries safer and longer-lived. Balbuena and Seminario’s research is currently in Phase 2 of the Battery 500 project, which is supported by the U.S. Department of Energy (DOE). The DOE’s continuous support has resulted in significant developments in the understanding and design of advanced battery materials that will further the progress of battery technologies for electric vehicles.

For their research, the pair received a 2020 Texas A&M Engineering Experiment Station Research Impact Award, which recognizes research that has had an impact, broadly defined as leading to outcomes that extend beyond conventional boundaries, including opening new lines of research, solving a long-standing problem, or producing tools or products that have become widely adopted in practice by industry and/or government.

The researchers’ work, which focuses on the mechanisms of electron and ion transport and on the degradation reactions of electrolyte materials, has had a profound effect on the development of advanced materials for high-energy-density batteries that go beyond current lithium-ion (Li-ion) technologies.

Going beyond Li-ion technologies means replacing one of the electrodes with Li-metal, which provides a much higher theoretical capacity. Typical Li-ion batteries instead use layered intercalation materials, relying on the movement of lithium ions between the layered materials of the anode and cathode during charge and discharge.
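To see why Li-metal promises so much more capacity, the theoretical specific capacity of an electrode material can be estimated from Faraday’s law as nF / (3.6 M) in mAh/g, where n is the number of electrons transferred per formula unit, F is the Faraday constant and M is the molar mass of the host material. The Python sketch below compares lithium metal with graphite (LiC6), the usual Li-ion anode. It is a textbook estimate that ignores everything a real cell adds (electrolyte, current collectors, excess lithium), and the graphite comparison is an illustrative choice rather than one drawn from the research described.

```python
FARADAY = 96485.33212  # Faraday constant, coulombs per mole of electrons


def theoretical_capacity_mah_per_g(electrons: int, molar_mass_g_per_mol: float) -> float:
    """Theoretical specific capacity n*F / (3.6 * M); 1 mAh equals 3.6 C."""
    return electrons * FARADAY / (3.6 * molar_mass_g_per_mol)


# Lithium metal: one electron per Li atom, M(Li) is about 6.94 g/mol
li_metal = theoretical_capacity_mah_per_g(1, 6.94)

# Graphite anode (LiC6): one Li per six carbon atoms, counting only the carbon host mass
graphite = theoretical_capacity_mah_per_g(1, 6 * 12.011)

print(f"Li metal: {li_metal:.0f} mAh/g")  # about 3,860 mAh/g
print(f"Graphite: {graphite:.0f} mAh/g")  # about 372 mAh/g
```

By this simple measure, lithium metal stores roughly ten times more charge per gram than the graphite it would replace.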

However, stable, long-lived Li-metal batteries are not yet possible due to multiple issues. One of these issues has to do with the interaction between Li-metal and the electrolyte solution – the substance through which the lithium ions pass during charging and discharging. The extreme reactivity of lithium metal causes the formation of microscopic fibers of lithium (called dendrites), which sprout from the electrode and eventually may short circuit the battery.

To prevent or mitigate dendritic growth, one of the most important factors, yet also one of the least understood, is the formation and properties of solid-electrolyte interphase (SEI) layers. The continuous passing of electrons between the Li-metal anode and a liquid or solid electrolyte causes the electrolyte to degrade, and this degradation produces multicomponent SEI layers on the electrodes. To be effective, the SEI films need to act as barriers that stop the transfer of electrons while letting lithium ions pass through and deposit smoothly on the metal surface.

The research conducted by Balbuena and Seminario has had a strong impact on the development of protective layers for safer, longer-life batteries. In particular, the team was able to explain for the first time the sustained growth of SEI layers on anode surfaces in Li-ion batteries: once the layers grow too thick for electrons to tunnel through, the degradation reactions proceed via radical species.
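The role of thickness follows from how quantum tunneling scales: for a simple rectangular energy barrier, the probability that an electron tunnels through falls off roughly as exp(-2κd), with κ = sqrt(2mφ)/ħ, where d is the barrier thickness, m the electron mass and φ the barrier height. The Python sketch below uses that textbook approximation, not the authors’ simulations, and the 1 eV barrier height is a hypothetical placeholder rather than a measured SEI value.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
EV = 1.602176634e-19              # joules per electronvolt


def decay_constant(barrier_ev: float) -> float:
    """Decay constant kappa (1/m) for an electron facing a barrier of the given height."""
    return math.sqrt(2 * ELECTRON_MASS * barrier_ev * EV) / HBAR


def tunneling_probability(thickness_nm: float, barrier_ev: float = 1.0) -> float:
    """Approximate transmission exp(-2*kappa*d); only meaningful when the result is small."""
    kappa = decay_constant(barrier_ev)
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


# Hypothetical 1 eV barrier; compare a few layer thicknesses
for d in (0.5, 1.0, 2.0, 3.0):
    print(f"{d:.1f} nm -> {tunneling_probability(d):.1e}")
```

With these assumptions, each extra nanometer cuts the tunneling probability by a factor of roughly 28,000, which illustrates why a layer that has grown past a few nanometers can no longer be sustained by tunneling electrons alone.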

The team also contributed ideas for tuning and designing protective layers by examining the nucleation and growth mechanisms of SEI layers derived from the battery’s electrolyte. Further work discussed the development of a practical device based on these ideas and other new concepts related to ionic transport through the interfacial layer. The mechanisms revealed by Balbuena and Seminario’s theoretical and simulation work were confirmed by experimental observations and applied to practical devices.

source https://scienceblog.com/519376/designing-a-better-battery/

Skilled surgeons boost colon cancer survival by 70%

Patients of more technically skilled surgeons, as assessed by review of operative video, have better long-term survival after surgery for the treatment of colon cancer, reports a new Northwestern Medicine study.

Patients whose surgery was performed by a highly skilled surgeon had a 70 percent lower risk of dying over five years than patients of a lower-skilled surgeon, the study found.
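A “70 percent lower risk” is a relative measure: it corresponds to a risk ratio (or, in survival analyses, a hazard ratio) of about 0.30. The Python sketch below uses made-up counts, not the study’s data, to show how the relative reduction and the absolute difference in risk are computed from two groups.

```python
def risk(events: int, patients: int) -> float:
    """Proportion of patients who experienced the event (here, death within five years)."""
    return events / patients


def compare_groups(events_a: int, n_a: int, events_b: int, n_b: int):
    """Compare group A against reference group B.

    Returns (risk ratio, relative risk reduction, absolute risk difference).
    """
    risk_a, risk_b = risk(events_a, n_a), risk(events_b, n_b)
    ratio = risk_a / risk_b
    return ratio, 1 - ratio, risk_b - risk_a


# Hypothetical cohorts of 100 patients each (illustrative numbers only)
ratio, rrr, abs_diff = compare_groups(6, 100, 20, 100)
print(f"risk ratio {ratio:.2f}, relative reduction {rrr:.0%}, absolute difference {abs_diff:.0%}")
# risk ratio 0.30, relative reduction 70%, absolute difference 14%
```

The same 70 percent relative reduction would translate into a smaller absolute difference if the baseline risk were lower, which is why both figures are worth knowing.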

“This is pretty mind blowing,” said lead author Dr. Karl Bilimoria, professor of surgery at Northwestern University Feinberg School of Medicine and a Northwestern Medicine surgical oncologist. “The long-term effect is huge.”

The paper was published October 30 in JAMA Oncology and presented virtually at the Commission on Cancer Annual Meeting.

Although the importance of surgical skill has long been presumed, this is the first study, to the authors’ knowledge, to demonstrate a surgeon’s skill is important to long-term outcomes among patients undergoing cancer treatment surgery.

“It may seem common sense in some ways, but we didn’t realize the magnitude of the effect,” Bilimoria said. In a prior study the team conducted, there were 25% fewer short-term complications — such as a colon leak or bleeding — when a highly skilled surgeon performed the colon cancer operation.

Colon cancer is one of the most common in the U.S.

Colon cancer is one of the most common cancers, with over 100,000 new cases diagnosed annually in the United States. In many cases, surgery is the primary treatment.

Bilimoria also is director of the Surgical Outcomes and Quality Improvement Center of Northwestern and a member of the Robert H. Lurie Comprehensive Cancer Center of Northwestern University.

One possible reason for the difference is that a skilled surgeon may be more likely to remove lymph nodes that contain colon cancer, said study first author Dr. Brian Brajcich, a Northwestern surgical resident and research fellow.

“A less skilled surgeon may not remove as many lymph nodes, potentially leaving behind cancer,” Brajcich said. “Patients who would benefit from chemotherapy after surgery won’t receive it if we don’t know that the cancer has spread to the lymph node.”

How do you find a highly skilled cancer surgeon?

Ask about the number of similar procedures they have done, Bilimoria said.

“Lots of experience with the procedure is a good marker of skill,” Bilimoria said. “General surgeons, colorectal surgeons and surgical oncologists all do colon cancer surgery, and there can be excellent cancer surgeons in each of those fields.”

In the study, a surgeon’s technical skill was scored based on review of video footage of colon surgery by other surgeons, including peers and expert reviewers. The outcomes of patients treated by surgeons with higher and lower skill scores were then compared.

Improving outcomes after surgery, especially cancer surgery, should include efforts targeting surgeons’ technical skill. Comprehensive approaches to help surgeons improve their technical skill could include surgical coaching and the ability to review and discuss surgical video footage with peers and experts in the field.

Further research is needed to determine whether efforts to improve surgical skill lead to improved technical skills and patient outcomes. Additionally, the actual methods to improve surgical skill need to be advanced and studied to determine whether they are effective at improving patient outcomes.

Bilimoria acknowledged the small study size but said, “Even in this size study, we see a large impact.”

Other Northwestern authors include Dr. Jonah Stulberg, Jeanette Chung and Reiping Huang.

This study was funded by a grant from the Agency for Healthcare Research and Quality (5R01HS024516), a grant from the National Cancer Institute (T32CA247801) of the National Institutes of Health and a grant from the Health Care Services Corporation.

source https://scienceblog.com/519374/skilled-surgeons-boost-colon-cancer-survival-by-70/

Ultra-thin, energy efficient photodetectors on glass

Though we may not always realize it, photodetectors contribute greatly to the convenience of modern life. Also known as photosensors, photodetectors convert light energy into electrical signals to complete tasks such as opening automatic sliding doors and automatically adjusting a cell phone’s screen brightness in different lighting conditions.

A new paper, published by a team of Penn State researchers in ACS Nano, seeks to further advance photodetectors’ use by integrating the technology with durable Gorilla glass, the Corning Incorporated material used for smartphone screens.

The integration of photodetectors with Gorilla glass could lead to the commercial development of “smart glass,” or glass equipped with automatic sensing properties. Smart glass has a number of applications ranging from imaging to advanced robotics, according to the researchers.

“There are two problems to address when attempting to manufacture and scale photodetectors on glass,” said principal investigator Saptarshi Das, assistant professor of engineering science and mechanics (ESM). “It must be done using relatively low temperatures, as the glass degrades at high temperatures, and must ensure the photodetector can operate on glass using minimal energy.”

To overcome the first challenge, Das, along with ESM doctoral student Joseph R. Nasr, determined that the chemical compound molybdenum disulfide was the best material to use as a coating on the glass.

Then Joshua Robinson, professor of materials science and engineering (MatSE), and MatSE doctoral student Nicholas Simonson used a chemical reactor at 600 degrees Celsius, a temperature low enough not to degrade the Gorilla glass, to fuse the compound to the glass. The next step was to turn the coated glass into a photodetector by patterning it with a conventional electron beam lithography tool.

“We then tested the glass using green LED lighting, which mimics a more natural lighting source unlike laser lighting, which is commonly used in similar optoelectronics research,” Nasr said.
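A common figure of merit for tests like this is responsivity: the photocurrent produced per watt of incident light. For a detector without internal gain it is bounded by R = ηqλ/(hc), where η is the fraction of photons converted into collected electrons. The Python sketch below evaluates that bound. The 530 nm wavelength is an assumed value for a typical green LED, the efficiency and optical power are hypothetical, and devices with internal gain, as some MoS2 phototransistors have, can exceed this bound, so it is a reference point rather than a prediction for the Penn State detectors.

```python
PLANCK = 6.62607015e-34              # Planck constant, J*s
LIGHT_SPEED = 2.99792458e8           # m/s
ELEMENTARY_CHARGE = 1.602176634e-19  # C


def ideal_responsivity(wavelength_nm: float, efficiency: float = 1.0) -> float:
    """Responsivity in A/W for a detector without internal gain: eta * q * lambda / (h * c)."""
    wavelength_m = wavelength_nm * 1e-9
    return efficiency * ELEMENTARY_CHARGE * wavelength_m / (PLANCK * LIGHT_SPEED)


def photocurrent(optical_power_w: float, wavelength_nm: float, efficiency: float = 1.0) -> float:
    """Photocurrent in amperes for a given incident optical power."""
    return ideal_responsivity(wavelength_nm, efficiency) * optical_power_w


# Assumed green LED wavelength; power and efficiency are hypothetical
print(ideal_responsivity(530))                  # upper bound at unit efficiency, ~0.43 A/W
print(photocurrent(1e-6, 530, efficiency=0.1))  # 1 microwatt of light at 10% efficiency
```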

The ultra-thin body of the molybdenum disulfide photodetectors allows for better electrostatic control and ensures they can operate at low power, a critical need for the smart glass technology of the future.

“The photodetectors need to work in resource-constrained or inaccessible locations that by nature do not have access to sources of unrestricted electricity,” Das said. “Therefore, they need to rely on pre-storing their own energy in the form of wind or solar energy.”

If developed commercially, smart glass could lead to technology advances in wide-ranging sectors of industry including in manufacturing, civil infrastructure, energy, health care, transportation and aerospace engineering, according to the researchers. The technology could be applied in biomedical imaging, security surveillance, environmental sensing, optical communication, night vision, motion detection and collision avoidance systems for autonomous vehicles and robots.

“Smart glass on car windshields could adapt to oncoming high-beam headlights when driving at night by automatically shifting its opacity using the technology,” Robinson said. “And new Boeing 757 planes could utilize the glass on their windows for pilots and passengers to automatically dim sunlight.”

Mark W. Horn, professor of ESM, and Aaryan Oberoi, ESM doctoral student, also participated in this project.

Corning Incorporated and the Center for Atomically Thin Multifunctional Coatings, housed in Penn State’s Materials Research Institute, funded this research.

source https://scienceblog.com/519372/ultra-thin-energy-efficient-photodetectors-on-glass/

When Algorithms Compete, Who Wins?

Companies like Netflix and Hulu compete for subscribers to make sure their businesses thrive. But there’s another type of competition at work that receives far less attention – the competition among the machine learning algorithms used by these kinds of competitor companies.

James Zou, Stanford assistant professor of biomedical data science and an affiliated faculty member of the Stanford Institute for Human-Centered Artificial Intelligence, says that as algorithms compete for clicks and the associated user data, they become more specialized for subpopulations that gravitate to their sites. And that, he finds in a new paper with graduate student Antonio Ginart and undergraduate Eva Zhang, can have serious implications for both companies and consumers.

Perhaps consumers don’t mind if Hulu recommendations seem intended for urban teenagers or Netflix offers better choices for middle-aged rural men, but when it comes to predicting who should receive a bank loan or whose resume should reach a hiring manager, these algorithms have real-world repercussions.

“The key insight is that this happens not because the businesses are choosing to specialize for a specific age group or demographic,” Ginart says. “This happens because of the feedback dynamics of the competition.”

Inevitable Specialization

Before they started their research, Zou’s team recognized that there’s a feedback dynamic at play when companies’ machine learning algorithms compete for users or customers while also using customer data to train their models. “By winning customers, they’re getting a new set of data from those customers, and then by updating their models on this new set of data, they’re actually then changing the model and biasing it toward the new customers they’ve won over,” Ginart says.
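The feedback loop Ginart describes can be reproduced in a deliberately tiny simulation. In the Python sketch below, which is a toy model and not the one used in the paper, each user has a single numeric preference drawn from one of two subpopulations, each competing “model” predicts one number, users pick whichever model’s prediction is closest to their preference, and each model then retrains on data from only the users it won. The function name, group means, spread and starting predictions are all hypothetical.

```python
import random
import statistics


def simulate_competition(predictions, group_means, rounds=10,
                         users_per_round=1000, spread=0.1, seed=0):
    """Toy competition: users choose the closest model, models refit on their own users."""
    rng = random.Random(seed)
    predictions = list(predictions)
    for _ in range(rounds):
        won = [[] for _ in predictions]  # data each model collects this round
        for _ in range(users_per_round):
            mean = rng.choice(group_means)        # which subpopulation this user belongs to
            preference = rng.gauss(mean, spread)  # the user's true preference
            # The user goes with whichever model currently predicts best for them
            best = min(range(len(predictions)),
                       key=lambda i: (predictions[i] - preference) ** 2)
            won[best].append(preference)
        # Feedback step: each model retrains only on the users it won
        predictions = [statistics.mean(data) if data else old
                       for old, data in zip(predictions, won)]
    return predictions


groups = [0.0, 1.0]  # two hypothetical subpopulations with different tastes
print(simulate_competition([0.45, 0.55], groups))  # two competitors: roughly [0.0, 1.0]
print(simulate_competition([0.5], groups))         # a single provider: roughly [0.5]
```

Two nearly identical competitors end up each serving one subpopulation almost perfectly, while a lone provider settles on a compromise that is off by about 0.5 for everyone. Nothing in the code tells either model to specialize; the split comes entirely from who chooses whom and what each model then learns from.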

The team wondered: How might that feedback affect the algorithms’ ability to provide quality recommendations? To get at an answer, they analyzed algorithmic competition mathematically and simulated it using some standard datasets. In the end, they found that when machine learning algorithms compete, they eventually (and inevitably) specialize, becoming better at predicting the preferences of a subpopulation of users.

“It doesn’t matter how much data you have, you will always see these effects,” Zou says. Moreover, “The disparity gets larger and larger over time – it gets amplified because of the feedback loops.”

In addition, the team showed that beyond a certain mathematically calculable number of competitors, the quality of predictions declines for the general population. “There’s actually a sweet spot – an optimal number of competitors that optimizes the user experience,” Ginart says. Beyond that number, each AI agent has access to data from a smaller fraction of users, reducing their ability to generate quality predictions.

The team’s mathematical theorems apply whenever an online digital platform is competing to provide users with predictions, Ginart says. Examples in the real world include companies that use machine learning to predict users’ entertainment preferences (Netflix, Hulu, Amazon) or restaurant tastes (Yelp, TripAdvisor), as well as companies that specialize in search, such as Google, Bing, and DuckDuckGo.

“If we go to Google or Bing and type in a search query, you could say that what Google is trying to do is predict what links we will consider most relevant,” Ginart says. And if Bing does a better job of making those predictions, maybe we’ll be more inclined to use that platform, which in turn alters the input into that machine learning system and changes the way it makes predictions in the future.

The theorems also apply to companies that predict users’ credit risk or even the likelihood that they will jump bail. For example, a bank may become very good at predicting the creditworthiness of a very specific cohort of people – say, people over the age of 45 or people of a specific income bracket – simply because they’ve gathered a lot of data for that cohort. “The more data they have for that cohort, the better they can service them,” Ginart says. And although these algorithms get better at making accurate predictions for one subpopulation, the average quality of service actually declines as their predictions for other groups become less and less accurate.

Imagine a bank loan algorithm that relies on data from white, middle-aged customers and therefore becomes adept at predicting which members of that population should receive loans. That company is actually missing an opportunity to accurately identify members of other groups (Latinx millennials, for example) who would also be a good credit risk. That failure, in turn, sends those customers elsewhere, reinforcing the algorithm’s data specialization, not to mention compounding structural inequality.

Seeking Solutions

In terms of next steps, the team is looking at the effect that buying datasets (rather than collecting data only from customers) might have on algorithmic competition. Zou is also interested in identifying some prescriptive solutions that his team can recommend to policymakers or individual companies. “What do we do to reduce these kinds of biases now that we have identified the problem?” he says.

“This is still very new and quite cutting-edge work,” Zou says. “I hope this paper sparks researchers to study competition between AI algorithms, as well as the social impact of that competition.”

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.

source https://scienceblog.com/519368/when-algorithms-compete-who-wins/

Scientists launch quest to develop quantum sensors for probing quantum materials

When it comes to fully understanding the hidden secrets of quantum materials, it takes one to know one, scientists say: Only tools that also operate on quantum principles can get us there.

A new Department of Energy research center will focus on developing those tools. Based at the University of Illinois at Urbana-Champaign, the Center for Quantum Sensing and Quantum Materials brings together experts from UIUC, DOE’s SLAC National Accelerator Laboratory, Stanford University and the University of Illinois-Chicago.

They’ll work on developing three cutting-edge quantum sensing devices: a scanning qubit microscope, a spectroscopy instrument that takes advantage of pairs of entangled electrons and another instrument that will probe materials with pairs of photons from SLAC’s X-ray free-electron laser, the Linac Coherent Light Source, which has recently reopened after an upgrade.

These new techniques will allow researchers to see in much greater detail why quantum materials do the weird things they do, paving the way to discovering new quantum materials and inventing even more sensitive probes of their behavior.

The work will focus on understanding the atomic-level processes behind unconventional superconductors that conduct electricity with no resistance at relatively high temperatures; topological insulators, which carry current with no loss along their edges; and strange metals, which superconduct when chilled but have strange properties at higher temperatures.

“What is exciting is that this center gives us a chance to create some really new quantum measurement techniques for studying energy-relevant quantum materials,” center Director Peter Abbamonte, a professor of physics at UIUC, said in a press release.

“We often get trapped in the cycle of using the same old measurements – not because we don’t need new kinds of information or knowledge, but because developing techniques is expensive and time consuming,” Abbamonte said. The new center, he said, will allow scientists to push the envelope of quantum measurement by tackling bigger problems.

Exotic entangled states

Quantum materials get their name from the fact that their exotic properties stem from the cooperative behavior of electrons and other phenomena that obey the rules of quantum mechanics, rather than the familiar Newtonian laws of physics that govern our everyday world. These materials could eventually have a huge impact on future energy technologies – for instance, by allowing people to transmit power with essentially no loss over long distances and making transportation much more energy efficient.

But a quantum material may contain a confounding mixture of exotic, overlapping states of matter that are hard to sort out with conventional tools.

“In the quantum world everything becomes entangled, so the boundaries of one object start to overlap with the boundaries of another,” said SLAC Professor Thomas Devereaux, one of six SLAC and Stanford researchers collaborating in the new center. “We’ll be probing this entanglement using various tools and techniques.”

Quantum sensors are nothing new. They include superconducting quantum interference devices, or SQUIDs, invented half a century ago to detect extremely small magnetic fields, and superconducting transition edge sensors, which incorporate SQUIDs to detect exquisitely small signals in astronomy, nuclear non-proliferation, materials analysis and homeland defense.

At a basic level, they operate by putting the sensor into a known quantum state and allowing it to interact with the object of interest. The interaction changes the state of the quantum system, and measuring the new state of the system reveals information about the object that could not be obtained with conventional approaches.
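The prepare-interact-measure cycle described above is the basis of, for example, Ramsey interferometry with a spin qubit. In the idealized Python sketch below, a spin prepared in an equal superposition picks up a phase φ = γBt while it sits in a magnetic field B for a time t, and the probability of reading it back in its starting state is (1 + cos φ)/2, so measuring that probability reveals B. The electron gyromagnetic ratio is a standard constant; the field strength and interaction time are hypothetical, and the sketch ignores decoherence and assumes φ stays between 0 and π so the inversion is unambiguous. It illustrates the general principle, not the specific instruments the center plans to build.

```python
import math

GAMMA_ELECTRON = 1.76085963e11  # electron gyromagnetic ratio, rad / (s * T)


def return_probability(field_t: float, time_s: float, gamma: float = GAMMA_ELECTRON) -> float:
    """Probability of measuring the spin back in its initial state after free evolution."""
    phase = gamma * field_t * time_s
    return (1 + math.cos(phase)) / 2


def infer_field(probability: float, time_s: float, gamma: float = GAMMA_ELECTRON) -> float:
    """Invert the Ramsey signal; assumes the accumulated phase lies in [0, pi]."""
    cos_phase = max(-1.0, min(1.0, 2 * probability - 1))  # guard against rounding
    return math.acos(cos_phase) / (gamma * time_s)


# Hypothetical 1 microtesla field sensed for 10 microseconds
p = return_probability(1e-6, 10e-6)
print(p, infer_field(p, 10e-6))  # the inferred field recovers roughly 1e-6 T
```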

Qubits on a tip

In one of the technologies under development, the scanning qubit microscope, the quantum sensor would consist of one or more qubits placed on the tip of a probe and moved over the surface of a material. A qubit is the basic unit of quantum information. Like the bits of ordinary computer memory, which flip back and forth between 0 and 1, it has two basic states, but a qubit can exist as a superposition of both 0 and 1 at once. The scanner’s qubit might consist of a single hydrogen atom, for instance, with the spin of its single electron simultaneously existing as up, down and all possible states in between.
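The superposition described here can be written with two complex amplitudes whose squared magnitudes give the probabilities of measuring 0 or 1. The Python sketch below uses the common Bloch-sphere parametrization cos(θ/2)|0⟩ + e^{iφ} sin(θ/2)|1⟩; the function names and angles are illustrative, and θ = π/2 corresponds to an equal superposition, like a spin pointing sideways, halfway between up and down.

```python
import cmath
import math
from typing import Tuple


def qubit_state(theta: float, phi: float) -> Tuple[complex, complex]:
    """Amplitudes (a0, a1) of cos(theta/2)|0> + e^(i*phi) sin(theta/2)|1>."""
    return complex(math.cos(theta / 2)), cmath.exp(1j * phi) * math.sin(theta / 2)


def measurement_probabilities(state: Tuple[complex, complex]) -> Tuple[float, float]:
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    a0, a1 = state
    return abs(a0) ** 2, abs(a1) ** 2


print(measurement_probabilities(qubit_state(0.0, 0.0)))          # definitely 0 ("up")
print(measurement_probabilities(qubit_state(math.pi / 2, 0.3)))  # 50/50 superposition
print(measurement_probabilities(qubit_state(math.pi / 3, 1.0)))  # 75% chance of 0
```

The phase φ does not change these two probabilities, but it matters when the qubit interacts with something else, which is what a sensor exploits.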

“You can try to entangle the qubit sensor with the quantum state of the material you’re studying so you can actually sense the entanglement of quantum states within the material,” said Kathryn Moler, Stanford’s vice provost and dean of research. “If we can do that, it will be really cool.”

Other SLAC and Stanford researchers collaborating in research for the new center are Professors Zhi-Xun Shen and David Reis, Assistant Professor Ben Feldman and staff scientist Mariano Trigo.

The center is one of 10 Energy Frontier Research Centers that together were awarded $100 million by the DOE Office of Science.

source https://scienceblog.com/519370/scientists-launch-quest-to-develop-quantum-sensors-for-probing-quantum-materials/

Researchers develop a new way to create a spectrum of natural-looking hair colors

We’ve long been warned of the risks of dyeing hair at home and in salons. Products used can cause allergies and skin irritation — an estimated 1% of people have an allergy to dye. Furthermore, repeated use of some dyes has been linked to cancer.

But there soon may be a solution for the growing list of salons and hair color enthusiasts searching for natural alternatives to dyes and cosmetics.

Northwestern University researchers have developed a new way to create a spectrum of natural-looking hair colors, ranging from blond to black, by using enzymes to catalyze synthetic melanin.

The research was published Oct. 30 in the journal Chemistry of Materials.

Melanin is an enigmatic and ubiquitous material often found in the form of brown or black pigment. Northwestern’s Nathan Gianneschi, the research lead and associate director for the International Institute for Nanotechnology, said every type of organism produces melanin, making it a readily available and versatile material to use in the lab.

“In humans, it’s in the back of our eye to help with vision, it’s in our skin to help with protecting skin cells from UV damage,” Gianneschi said. “But birds also use it as a spectacular color display — peacock feathers are made of melanin entirely.”

Gianneschi is the Jacob and Rosalind Cohn Professor of Chemistry in Northwestern’s Weinberg College of Arts and Sciences and a professor of materials science and engineering and biomedical engineering in the McCormick School of Engineering. Claudia Battistella, a postdoctoral fellow in Gianneschi’s lab, is the paper’s first author.

In the typical process of coloring hair, stylists use bleach to strip melanin from hair, then add ammonia and dye to open and penetrate the hair cuticles for permanent color. Work being done with melanin in other parts of the lab inspired Battistella to see how it applied to hair dye.

Battistella thought that by replacing melanin rather than removing it, and by depositing color on the surface of hair rather than within it, she could create a more sustainable way to achieve lasting color. In this process, researchers dye hair by combining mushroom enzymes with an amino acid, triggering a process that mimics reactions that occur naturally in the body. Preliminary studies suggested the colored layer could persist through several washes.

“The dyeing process is similar from a stylist’s point of view, but these conditions are milder, so they take a little longer,” Battistella said. “Though it could be combined with a base, it’s not necessary to use one, and there is no need for chemical pigments. Because we already have melanin in our bodies, we believe we won’t have allergic reactions to it.”

Because melanin in hair also fades with sun exposure and aging, the researchers wondered how the sun would affect the dye. Besides being a milder process than traditional dyeing, coating hair in synthetic melanin may help protect it from the sun damage that can cause whitening. Studies showed that, compared to untreated hair, hair treated with melanin pigment sustained less damage when exposed to ultraviolet rays.

The paper identifies four key advantages to using synthetic melanin instead of traditional dyes:

Synthetic melanin avoids the use of ammonia as a base layer.

The precursors to treating hair with melanin are less toxic.

The process uses safer, more scalable chemicals.

There is vast potential in future cosmetic translations of synthetic melanin.

Given the industry’s desire to move away from carcinogens and other toxic chemicals, Gianneschi believes his lab’s dye will be able to make it through the regulatory process. The team then hopes to find a partner willing to develop the dye on a larger scale. For Gianneschi, though, the main motivation for the research isn’t just excitement over melanin’s potentially vast commercial use.

“From a biomedical perspective, there’s a huge market of people with a hair dye allergy,” Gianneschi said. “Our first thought was it would be great to have a solution to help those people.”

source https://scienceblog.com/519366/researchers-develop-a-new-way-to-create-a-spectrum-of-natural-looking-hair-colors/

Touch and taste? It’s all in the suckers

Octopuses have captured the human imagination for centuries, inspiring sagas of sea monsters from Scandinavian kraken legends to TV’s “Voyage to the Bottom of the Sea” and, most recently, Netflix’s less-threatening “My Octopus Teacher.” With their eight suction-cup-covered arms, their very appearance is unique, and their ability to use those appendages to touch and taste while foraging further sets them apart.

In fact, scientists have wondered for decades how those arms, or more specifically the suction cups on them, do their work, prompting a number of experiments into the biomechanics. But very few have studied what is happening on a molecular level. In a new report, Harvard researchers got a glimpse into how the nervous system in the octopus’ arms (which operates largely independently of its centralized brain) manages this feat. The work was published Thursday in Cell.

The scientists identified a novel family of sensors in the first layer of cells inside the suction cups that have adapted to react to and detect molecules that don’t dissolve well in water. The research suggests these sensors, called chemotactile receptors, use these molecules to help the animal figure out what it’s touching and whether that object is prey.

“We think because the molecules do not solubilize well, they could, for instance, be found on the surface of octopuses’ prey and [whatever the animals touch],” said Nicholas Bellono, an assistant professor of molecular and cellular biology and the study’s senior author. “So, when the octopus touches a rock versus a crab, now its arm knows, ‘OK, I’m touching a crab [because] I know there’s not only touch but there’s also this sort of taste.’”

In addition, scientists found diversity in what the receptors responded to and the signals they then transmitted to the cell and nervous systems.

“We think that this is important because it could facilitate complexity in what the octopus senses and also how it can process a range of signals using its semi-autonomous arm nervous system to produce complex behaviors,” Bellono said.

The scientists believe this research can help uncover similar receptor systems in other cephalopods, the class of invertebrates that also includes squids and cuttlefish. The hope is to determine how these systems work on a molecular level and answer some relatively unexplored questions about how these creatures’ capabilities evolved to suit their environment.

“Not much is known about marine chemotactile behavior and with this receptor family as a model system, we can now study which signals are important for the animal and how they can be encoded,” said Lena van Giesen, a postdoctoral fellow in the Bellono Lab and lead author of the paper. “These insights into protein evolution and signal coding go far beyond just cephalopods.”

Along with van Giesen, other co-authors from the lab include Peter B. Kilian, an animal technician, and Corey A.H. Allard, a postdoctoral fellow.

“The strategies they have evolved in order to solve problems in their environment are unique to them and that inspires a great deal of interest from both scientists and non-scientists alike,” Kilian said. “People are drawn to octopuses and other cephalopods because they are wildly different from most other animals.”

The team set out to uncover how the receptors are able to sense chemicals and detect signals in what they touch, like an arm around a snail, to help them make choices.

Octopus arms are distinct and complex. About two-thirds of an octopus’s neurons are located in its arms. Because the arms operate partially independently from the brain, if one is severed it can still reach for, identify, and grasp items.

The team started by identifying which cells in the suckers actually do the detecting. After isolating and cloning the touch and chemical receptors, they inserted them in frog eggs and in human cell lines to study their function in isolation. Nothing like these receptors exists in frog or human cells, so the cells act essentially like closed vessels for the study of these receptors.

The researchers then exposed those cells to molecules such as extracts from octopus prey and other items to which these receptors are known to react. Some test molecules were water-soluble, such as salts, sugars and amino acids; others do not dissolve well and are not typically considered relevant to aquatic animals. Surprisingly, only the poorly soluble molecules activated the receptors.

Researchers then went back to the octopuses in their lab to see whether they, too, responded to those molecules, putting the same extracts on the floors of their tanks. They found that the only odorants the octopuses’ receptors responded to were a poorly soluble class of naturally occurring chemicals known as terpenoid molecules.

“[The octopus] was highly responsive to only the part of the floor that had the molecule infused,” Bellono said. This led the researchers to believe that the receptors they identified pick up on these types of molecules and help the octopus distinguish what it’s touching. “With the semi-autonomous nervous system, it can quickly make this decision: ‘Do I contract and grab this crab or keep searching?’”

While the study provides a molecular explanation for this aquatic touch-taste sensation in octopuses through their chemotactile receptors, the researchers suggest further study is needed, given that a great number of unknown natural compounds could also stimulate these receptors to mediate complex behaviors.

“We’re now trying to look at other natural molecules that these animals might detect,” Bellono said.

This research was supported by the New York Stem Cell Foundation, the Searle Scholars Program, the Sloan Foundation, the Klingenstein-Simons Fellowship, the National Institutes of Health, and the Swiss National Science Foundation.

source https://scienceblog.com/519359/touch-and-taste-its-all-in-the-suckers/

Asteroid’s Scars Tell Stories of its Past

By studying impact marks on the surface of asteroid Bennu – the target of NASA’s OSIRIS-REx mission – a team of researchers led by the University of Arizona has uncovered the asteroid’s past and revealed that despite forming hundreds of millions of years ago, Bennu wandered into Earth’s neighborhood only very recently.

The study, published in the journal Nature, provides a new benchmark for understanding the evolution of asteroids, offers insights into a poorly understood population of space debris hazardous to spacecraft, and enhances scientists’ understanding of the solar system.

The researchers used images and laser-based measurements taken during a two-year surveying phase in which the OSIRIS-REx spacecraft, about the size of a 15-passenger van, orbited Bennu, setting the record for the smallest body ever orbited by a spacecraft.

Presented at the opening day of the American Astronomical Society’s Division for Planetary Sciences meeting on Oct. 26, the paper details the first observations and measurements of impact craters on individual boulders on an airless planetary surface since the Apollo missions to the moon 50 years ago, according to the authors.

The publication comes just a few days after a major milestone for NASA’s University of Arizona-led OSIRIS-REx mission. On Oct. 20, the spacecraft successfully descended to asteroid Bennu to grab a sample from its boulder-scattered surface – a first for NASA. The sample has now been successfully stowed and will be returned to Earth for study in 2023, and it could give scientists insight into the earliest stages of the formation of our solar system.

Impact Craters on Rocks Tell a Story

Although Earth is being pelted with more than 100 tons of space debris each day, it is virtually impossible to find a rockface pitted by impacts from small objects at high velocities. Courtesy of our atmosphere, we get to enjoy any object smaller than a few meters as a shooting star rather than having to fear being struck by what essentially amounts to a bullet from outer space.

Planetary bodies lacking such a protective layer, however, bear the full brunt of a perpetual cosmic barrage, and they have the scars to show for it. High-resolution images taken by the OSIRIS-REx spacecraft during its two-year survey campaign allowed researchers to study even tiny craters, with diameters ranging from a centimeter to a meter, on Bennu’s boulders.

The team found boulders of 1 meter or larger to be scarred, on average, by anywhere from one to 60 pits – impacted by space debris ranging in size from a few millimeters to tens of centimeters.

“I was surprised to see these features on the surface of Bennu,” said the paper’s lead author, Ronald Ballouz, a postdoctoral researcher in the UArizona Lunar and Planetary Laboratory and a scientist with the OSIRIS-REx regolith development working group. “The rocks tell their history through the craters they accumulated over time. We haven’t observed anything like this since astronauts walked on the moon.”

For Ballouz, who grew up during the 1990s in post-civil war Beirut, Lebanon, the image of a rock surface pitted with small impact craters evoked childhood memories of building walls riddled with bullet holes in his war-torn home country.

“Where I grew up, the buildings have bullet holes all over, and I never thought about it,” he said. “It was just a fact of life. So, when I looked at the images from the asteroid, I was very curious, and I immediately thought these must be impact features.”

The observations made by Ballouz and his team bridge a gap between previous studies of space debris larger than a few centimeters, based on impacts on the moon, and studies of objects smaller than a few millimeters, based on observations of meteors entering Earth’s atmosphere and impacts on spacecraft.

“The objects that formed the craters on Bennu’s boulders fall within this gap that we don’t really know much about,” Ballouz said, adding that rocks in that size range are an important field of study, mainly because they represent hazards for spacecraft in orbit around Earth. “An impact from one of these millimeter to centimeter-size objects at speeds of 45,000 miles per hour can be dangerous.”

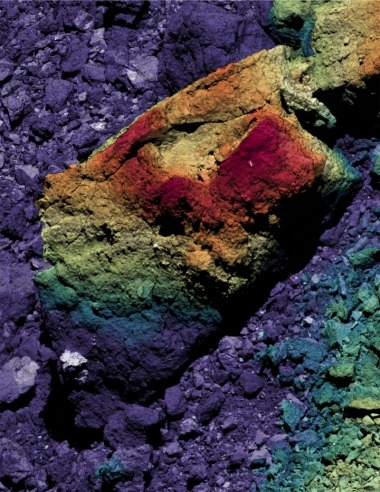

This composite image of a boulder on Bennu’s surface shows the cascading rim of one of the asteroid’s ancient craters that originated while Bennu resided in the asteroid belt. The image combines photos from OSIRIS-REx and reconstructed shape models built from the OSIRIS-REx laser altimeter instrument. The overlaid colors highlight the topography of the boulder (warmer colors are higher elevation). Credit: University of Arizona/Johns Hopkins APL/York University

Ballouz and his team developed a technique to quantify the strength of solid objects using remote observations of craters on the surfaces of boulders – a mathematical formula that allows researchers to calculate the maximum impact energy that a boulder of a given size and strength could endure before being smashed. Because of this, the crater distribution found on Bennu today keeps a historical record of the frequency, size and velocity of impact events the asteroid has experienced throughout its history.

“The idea is actually pretty simple,” Ballouz said, using a building exposed to artillery fire as an analogy to boulders on an asteroid. “We ask, ‘What is the largest crater you can make on that wall before the wall disintegrates?’ Based on observations of multiple walls of the same size, but with different sized craters, you can get some idea of the strength of that wall.”

The same holds true for a boulder on an asteroid or other airless body, said Ballouz, who added that the approach could be used on any other asteroid or airless body that astronauts or spacecraft may visit in the future.

“If a boulder gets hit by something larger than an object that would leave a certain size crater, it would just disappear,” he explained. In other words, the size distribution of boulders that have persisted on Bennu serves as a silent witness to its geologic past.

A Newcomer to Earth’s Neighborhood

Applying the technique to boulders ranging in size from pebbles to parking garages, the researchers were able to make inferences about the sizes and type of impactors to which the boulders were exposed, and for how long.

The authors conclude that the largest craters on Bennu’s boulders were created while Bennu resided in the asteroid belt, where impacts occur at lower speeds than in the near-Earth environment but are more frequent and often near the limit of what the boulders could withstand. Smaller craters, on the other hand, were acquired more recently, during Bennu’s time in near-Earth space, where impact speeds are higher but potentially disruptive impactors are much less common.

Based on these calculations, the authors determine that Bennu is a relative newcomer to Earth’s neighborhood. Although it is thought to have formed in the main asteroid belt more than 100 million years ago, it is estimated that it was kicked out of the asteroid belt and migrated to its current territory only 1.75 million years ago. Extending the results to other near-Earth objects, or NEOs, the researchers also suggest that these objects likely come from parent bodies that fall in the category of asteroids, which are mostly rocky with little or no ice, rather than comets, which have more ice than rock.

While theoretical models suggest that the asteroid belt is the reservoir for NEOs, no observational evidence of their provenance was available other than meteorites that fell to Earth and were collected, Ballouz said. With these data, researchers can validate their models of where NEOs come from, according to Ballouz, and get an idea of how strong and solid these objects are – crucial information for any potential missions targeting asteroids in the future for research, resource extraction or protecting Earth from impact.

source https://scienceblog.com/519361/asteroids-scars-tell-stories-of-its-past/

Aprotinin: a new drug candidate for the treatment of COVID-19

Researchers from the University of Kent, the Goethe-University in Frankfurt am Main (Germany), and the Hannover Medical School (Germany) have identified a drug with the potential to provide a treatment for COVID-19.

The international team led by Professor Martin Michaelis, Dr Mark Wass (both School of Biosciences, University of Kent), and Professor Jindrich Cinatl (Institute of Medical Virology, Goethe-University) found that the approved protease inhibitor aprotinin displayed activity against SARS-CoV-2, the coronavirus that causes COVID-19, in concentrations that are achieved in patients. Aprotinin inhibits the entry of SARS-CoV-2 into host cells and may compensate for the loss of host cell protease inhibitors that are downregulated upon SARS-CoV-2 infection.

Aprotinin aerosols are approved in Russia for the treatment of influenza and could be readily tested for the treatment of COVID-19.

Professor Martin Michaelis said: “The aprotinin aerosol has been reported to be tolerated extremely well in influenza patients. Hence, it may have a particular potential to prevent severe COVID-19 disease when applied early after diagnosis.”

source https://scienceblog.com/519360/aprotinin-a-new-drug-candidate-for-the-treatment-of-covid-19/

Individuals may legitimise hacking when angry with system or authority

University of Kent research has found that when individuals feel that a system or authority is unresponsive to their demands, they are more likely to legitimise hacker activity at an organisation’s expense.

Individuals are more likely to experience anger when they believe that systems or authorities have failed to pursue justice on their behalf or to listen to their demands. In turn, the study found that if the systems or authorities in question were victims of hacking, individuals would be more likely to legitimise the hackers’ disruptive actions as a way to express their own anger against the organisation.

With more organisations at risk of cyber security breaches, and more elements of individuals’ social lives taking place online, this research is timely in highlighting how hackers are perceived by individuals seeking justice.

The research, led by Maria Heering and Dr Giovanni Travaglino in the School of Psychology, was carried out with British undergraduate students and with participants recruited through the academic survey crowdsourcing platform Prolific Academic. The participants were presented with fictional scenarios of unfair treatment from authorities, with complaints either dismissed or pursued, before they were told that hackers had defaced the authorities’ websites. Participants were then asked to indicate how much they disagreed or agreed with the hackers’ actions. Participants supported the hackers predominantly when they perceived them as a way to ‘get back at’ systems that do not listen to their demands.

Maria Heering said: ‘When individuals perceive a system as unjust, they are motivated to participate in political protest and collective action to promote social change. However, if they believe they will not have voice, they will legitimise groups and individuals who disrupt the system on their behalf. While this study explored individuals’ feelings of anger, there is certainly more to be explored in this research area. For example, there might be important differences between the psychological determinations of individuals’ support for humorous, relatively harmless forms of hacking, and more serious and dangerous ones.’

Their research paper ‘“If they don’t listen to us, they deserve it”: The effect of external efficacy and anger on the perceived legitimacy of hacking’ is published in Group Processes & Intergroup Relations. DOI: https://doi.org/10.1177/1368430220937777

source https://scienceblog.com/519357/individuals-may-legitimise-hacking-when-angry-with-system-or-authority/

Bridges With Limb-Inspired Architecture Could Withstand Earthquakes, Cut Repair Costs

Structural damage to ailing bridges can mean billions of dollars in repairs. New bridge designs promise more damage-resistant structures and lower restoration costs. But when these designs have not yet been built in the real world, how they might be damaged and which repair strategies should be used remain open questions.

In a study published in the Journal of Structural Engineering, researchers from Texas A&M University and the University of Colorado, Boulder conducted a comprehensive damage and repair assessment of a not-yet-implemented bridge design using a panel of experts from academia and industry. The researchers said the expert feedback method offers a unique and robust technique for evaluating the feasibility of bridge designs that are still in early research and development phases.

“Bridges, particularly those in high-seismic regions, are vulnerable to damage and will need repairs at some point. But now the question is what kind of repairs should be used for different types and levels of damage, what will be the cost of these repairs and how long will the repairs take — these are all unknowns for new bridge designs,” said Petros Sideris, assistant professor in the Zachry Department of Civil and Environmental Engineering. “We have answered these questions for a novel bridge design using an approach that is seldomly used in structural engineering.”

Most bridges are monolithic systems made of concrete poured over forms that give the bridges their shape. These bridges are strong enough to support their own weight and other loads, such as traffic. However, Sideris said if there is an unexpected occurrence of seismic activity, these structures could crack, and remedying the damage would be exorbitantly expensive.

The hybrid sliding-rocking bridge column tested in the Center for Infrastructure Renewal’s large-scale experimental facilities. Credit: Justin Baetge/Texas A&M Engineering

To overcome these shortcomings, Sideris and his team have developed a new design called a hybrid sliding-rocking bridge. Rather than being monolithic, these bridges use columns built from limb-inspired joints and segments. In the event of an earthquake, the joints allow some of the energy from the ground motion to dissipate while the segments move slightly, sliding over one another rather than bending or cracking. Despite the overall appeal of the hybrid sliding-rocking bridge design, little is known about how the bridges will behave in real-world situations.

“To find the correct repair strategy, we need to know what the damages look like,” Sideris said. “Our bridge design is relatively new and so there is little scientific literature that we could refer to. And so, we took an unconventional approach to fill our gap in knowledge by recruiting a panel of experts in bridge damage and repair.”

For their study, Sideris and the team, including Abbie Liel, a professor at the University of Colorado, Boulder, recruited a panel of eight experts from industry and academia to determine the damage states of experimentally tested columns built with the hybrid sliding-rocking segmental design. Based on their evaluations of the observed damage, the panel provided repair strategies and estimated repair costs. The researchers then used that information to fix the damaged columns, retested them under the same initial damage-causing conditions and, through computational investigations, compared the repaired columns’ behavior to that of the original columns.

The panel found that columns built with the new design sustained less damage overall than bridges built with conventional designs. In fact, the columns showed very little damage even when subjected to motions reminiscent of a powerful once-in-a-few-thousand-years earthquake. Furthermore, the damage could be repaired relatively quickly with grout and carbon fibers, suggesting that no special strategy was required for restoration.

“Fixing bridges is a slow process and costs a significant amount of money, which then indirectly affects the community,” Sideris said. “Novel bridge designs that may have a bigger initial cost for construction can be more beneficial in the long run because they are sturdier. The money saved can then be used for helping the community rather than repairing infrastructure.”

This work is funded by the National Science Foundation.

Other contributors include Jakub Valigura, a former graduate student researcher at the University of Colorado, Boulder, and Mohammad Salehi, a former graduate student in the civil and environmental engineering department at Texas A&M.

source https://scienceblog.com/519350/bridges-with-limb-inspired-architecture-could-withstand-earthquakes-cut-repair-costs/

Face mask aims to deactivate virus to protect others

In the pandemic, people wear face masks to respect and protect others — not merely to protect themselves, says a team of Northwestern University researchers.

With this in mind, the researchers developed a new concept for a mask that aims to make the wearer less infectious. The central idea, which received support from the National Science Foundation through a RAPID grant, is to modify mask fabrics with anti-viral chemicals that can sanitize exhaled, escaped respiratory droplets.

By simulating inhalation, exhalation, coughs and sneezes in the laboratory, the researchers found that non-woven fabrics used in most masks work well to demonstrate the concept. A lint-free wipe with just 19% fiber density, for example, sanitized up to 82% of escaped respiratory droplets by volume. Such fabrics do not make breathing more difficult, and the on-mask chemicals did not detach during simulated inhalation experiments.

The research was published today (Oct. 29) in the journal Matter.

Importance of protecting others

“Masks are perhaps the most important component of the personal protective equipment (PPE) needed to fight a pandemic,” said Northwestern’s Jiaxing Huang, who led the study. “We quickly realized that a mask not only protects the person wearing it, but much more importantly, it protects others from being exposed to the droplets (and germs) released by the wearer.

“There seems to be quite some confusion about mask wearing, as some people don’t think they need personal protection,” Huang added. “Perhaps we should call it public health equipment (PHE) instead of PPE.”

Huang is a professor of materials science and engineering in Northwestern’s McCormick School of Engineering. Graduate student Haiyue Huang and postdoctoral fellow Hun Park, both members of Huang’s laboratory, are co-first authors of the paper.

“Where there is an outbreak of infectious respiratory disease, controlling the source is most effective in preventing viral spread,” said Haiyue Huang, a 2020 Ryan Fellowship Awardee. “After they leave the source, respiratory droplets become more diffuse and more difficult to control.”

The goal and results

Although masks can block or reroute exhaled respiratory droplets, many droplets (and their embedded viruses) still escape. From there, virus-laden droplets can infect another person directly or land on surfaces to indirectly infect others. Huang’s team aimed to chemically alter the escaped droplets so that the viruses would be inactivated more quickly.

To accomplish this, Huang sought to design a mask fabric that (1) would not make breathing more difficult, (2) could be loaded with molecular antiviral agents, such as acids and metal ions, that readily dissolve in escaped droplets, and (3) would not contain volatile chemicals or easily detachable materials that could be inhaled by the wearer.

After performing multiple experiments, Huang and his team selected two well-known antiviral chemicals: phosphoric acid and copper salt. These non-volatile chemicals were appealing because neither can be vaporized and then potentially inhaled. And both create a local chemical environment that is unfavorable for viruses.

“Virus structures are actually very delicate and ‘brittle,’” Huang said. “If any part of the virus malfunctions, then it loses the ability to infect.”

Huang’s team grew a layer of the conducting polymer polyaniline on the surface of the mask fabric fibers. The material adheres strongly to the fibers, acting as a reservoir for acid and copper salts. The researchers found that even loose fabrics with low fiber-packing densities of about 11%, such as medical gauze, still altered 28% of exhaled respiratory droplets by volume. For tighter fabrics, such as lint-free wipes (the type of fabric typically used in the lab for cleaning), 82% of respiratory droplets were modified.

Working through shutdown

Huang’s team scrambled to assemble resources to start this new line of research, right before Northwestern entered the “essential-only” state. Several team members received “essential” designation to work throughout Illinois’ “Stay-at-Home” order.

“As an adviser, I must admit that I did not see all the technical challenges coming,” Huang said. “But I am very pleased that my team members have exhibited amazing drive and creativity to tackle those difficulties and get the work done.”

One unexpected difficulty was how to measure and quantify the degree of chemical modification of escaped droplets. “For acid-modified droplets, I thought we could do this simply by collecting them on a pH paper and seeing the color changes of the landed droplets,” Huang said. “I insisted that Haiyue and Hun should try this first, and only to realize that it wasted a few weeks of their time.”

Park noted yet another problem: “The droplets tend to spread out on pH papers, leaving barely detectable drying marks, and the pH dyes do not have enough color intensity to generate a clear contrast of the dried droplets under optical microscope. So it was very difficult to even ‘see’ the droplets, let alone determine the acid content in them.”

Then Park and Haiyue Huang realized that polyaniline — the polymer they used to coat the fabrics — is a great color indicator for acid itself as it turns from dark blue to green.

“I was laughing at myself when I found out their new idea — I should have thought about it much earlier,” Huang said. “I was even more impressed that Haiyue and Hun quickly invented a new way to make ultrasmooth and uniform polyaniline films that work perfectly as a colorimetric indicator for microscopy observation of acidic droplets.”

With this breakthrough, the team was able to generate hundreds of microscopy images. Next, Yihan Liu, a master’s student in Huang’s lab, joined the project remotely, working from home to develop image analysis algorithms to quantify how much the escaped droplets were modified.

“I was stuck home in the spring and summer anyway, so I was very glad that I can make some good use of myself to help out the project,” Liu said. “My parents were pleased to learn that I was not only safe, and also doing something meaningful. I have lived up to their support of my tuition!”

Earlier this year, Huang engaged a team of clinical and biomedical researchers overseas with frontline COVID-19 experience to discuss his hypotheses, identify research needs and propose potential solutions. Together, the researchers published their ideas in the journal ACS Nano.

Huang hopes the current work provides a scientific foundation for other researchers, particularly in other parts of the world, to develop their own versions of this chemical modulation strategy and test it further with viral samples or even with patients.

“Our research has become an open knowledge, and we will love to see more people joining this effort to develop tools for strengthening public health responses,” Huang said. “The work is done nearly entirely in lab during campus shutdown. We hope to show researchers in non-biological side of science and engineering and those without many resources or connections that they can also contribute their energy and talent.”

This work was mainly supported by the National Science Foundation (RAPID DMR-2026944). Huang is a member of Northwestern’s International Institute for Nanotechnology.

source https://scienceblog.com/519347/face-mask-aims-to-deactivate-virus-to-protect-others/

Study: Republicans and Democrats hate the other side more than they love their own side

The bitter polarization between the Republican and Democratic parties in the U.S. has been on the rise since Newt Gingrich’s partisan combat against President Bill Clinton in the 1990s. But according to a new Northwestern University-led study, disdain for the opposing political party now — and for the first time on record — outweighs affection for one’s own party.

The study, titled “Political sectarianism in America,” will be published Oct. 30 by the journal Science. The authors draw on a broad survey of the scientific literature to interpret the current state of politics.

The paper introduces the construct of “political sectarianism” to describe the phenomenon. Political sectarianism has the hallmarks of religious fervor, such as sin, public shaming and apostasy. But unlike traditional sectarianism, where political identity is secondary to religion, political identity is primary.

“The current state of political sectarianism produces prejudice, discrimination and cognitive distortion, undermining the ability of government to serve its core functions of representing the people and solving the nation’s problems,” said lead author Eli Finkel. “Along the way, it makes people increasingly willing to support candidates who undermine democracy and to favor violence in support of their political goals.”

Finkel is professor of social psychology with appointments in Northwestern’s Weinberg College of Arts and Sciences and the Kellogg School of Management.

To ensure that their synthesis captured the collective knowledge base, Finkel recruited coauthors from six academic disciplines: political science, psychology, sociology, economics, management and computational social science.

A systematic review of dozens of published research studies led the authors to identify three key ingredients of political sectarianism, which, when combined, form the “poisonous cocktail” seen today. They include seeing the other side as different (othering), as dislikeable (aversion) and as immoral (moralization).

Using nationally representative survey data since the 1970s, the authors calculated the difference between Americans’ warm feelings toward their fellow partisans and their cold feelings toward opposing partisans. While feelings toward fellow partisans have remained consistently warm, feelings toward opposing partisans have chilled further from tepid to frosty. Indeed, those feelings have grown so frigid that they now exceed warm feelings toward fellow partisans, turning out-party hate into the dominant feeling in American politics.

“Things have gotten much more severe in the past decade, and there is no sign we’ve hit bottom,” said co-author James Druckman, Payson S. Wild Professor of political science and Institute for Policy Research fellow at Northwestern. “As much as the parties differ from one another, partisans perceive even greater differences, believing, for example, that the other party is ideologically extreme, engaged and hostile. Correcting these types of misperceptions could partially vitiate sectarianism.”

The researchers identify the multiple causes of political sectarianism and suggest potential approaches to address and mitigate it. The three causes include:

Identity alignment, meaning political party identities have sorted into a “mega-identity” separated along racial, religious, educational and geographic lines;

The rise of partisan media, impacted by the termination of the FCC “fairness doctrine” in 1987, which required broadcasters to discuss controversial topics in an unbiased way; and

Elite ideological polarization, with Republican politicians moving further right and Democratic politicians moving further left — and politicians in both parties becoming increasingly reliant on ideologically extreme donors.

A proposed behavioral intervention includes correcting misperceptions of opposing partisans and encouraging people to engage in cross-party interactions.

“If the differences between Democrats and Republicans really were as extreme as Americans believe, that could help to explain the contempt,” Finkel observes. “But these differences exist more in people’s heads than in reality. There’s a whole lot of common ground, but Americans struggle to see it.”

Finkel offers that simply reminding people of what they have in common reduces out-party hate.

The researchers advise that structural fixes are also required, including tweaking social media algorithms to limit the reach of false or hyperpartisan content and incentivizing politicians to appeal to a broader proportion of Americans. Reforms around campaign finance and partisan gerrymandering are suggested as ways to reduce sectarianizing behaviors and to generate more robust competition in the marketplace of ideas.

Additional co-authors include Christopher A. Bail, Duke University; Mina Cikara, Harvard University; Peter H. Ditto, University of California-Irvine; Shanto Iyengar, Stanford University; Samara Klar, University of Arizona, Tucson; Lilliana Mason, University of Maryland; Mary C. McGrath, Northwestern University; Brendan Nyhan, Dartmouth College; David G. Rand, Massachusetts Institute of Technology; Linda J. Skitka, University of Illinois–Chicago; Joshua A. Tucker, New York University; Jay J. Van Bavel, New York University; and Cynthia S. Wang, Northwestern University.

source https://scienceblog.com/519346/study-republicans-and-democrats-hate-the-other-side-more-than-they-love-their-own-side/

Most isolated massive stars are kicked out of their clusters

A pair of University of Michigan studies reveals how some massive stars—stars eight or more times the mass of our sun—become isolated in the universe: most often, their star clusters kick them out.

Massive stars typically reside in clusters. Isolated massive stars are called field massive stars. The papers, published by U-M students, examined most of these stars in the Small Magellanic Cloud, a dwarf galaxy near the Milky Way.

The studies, appearing in the same issue of The Astrophysical Journal, reveal how these field massive stars originate, or become so isolated. Understanding how field massive stars become isolated—whether they form in isolation or whether they become isolated by being ejected from a star cluster—will help astronomers probe the conditions in which massive stars are formed. Understanding this and cluster formation is critical for understanding how galaxies evolve.

“About a quarter of all massive stars appear to be isolated, and that’s our big question,” said recent undergraduate Johnny Dorigo Jones. “How they’re found to be isolated, and how they got there.”

Dorigo Jones shows in his paper that the vast majority of field massive stars are “runaways,” or stars ejected from clusters. Graduate student Irene Vargas-Salazar looked for field massive stars that may have formed in relative isolation by searching for evidence of tiny clusters of smaller stars around them. Finding such a cluster would suggest that the relatively isolated massive star formed together with those smaller stars. But she found very few of these faint clusters.

“Because massive stars require a lot of material to form, there are usually a lot of smaller stars around them,” Vargas-Salazar said. “My project asks specifically how many of these field massive stars could have formed in the field.”

Dorigo Jones examined how field massive stars are ejected from clusters. He looked at the two different mechanisms that produce runaways: dynamical ejection and binary supernova ejection. In the first, massive stars are ejected from their clusters, at up to half a million miles per hour, because of unstable orbital configurations of stellar groups. In the second, a massive star is ejected when one star in a binary pair explodes and shoots its companion out into space.

“By having the velocities and the masses of our stars, we’re able to compare the distributions of those parameters to the model predictions to determine the certain contributions from each of the ejection mechanisms,” Dorigo Jones said.

He found that dynamical ejections—ejections caused by unstable orbital configurations—were about 2 to 3 times more numerous than supernova ejections. But Dorigo Jones also found the first observational data that shows a large fraction of the field massive stars came from a combination of both dynamical and supernova ejections.

“These have been studied in the past but we have now set the first observational constraints on the numbers of these two-step runaways,” he said. “The way we reach that conclusion is we’re essentially seeing that the stars that trace the supernova ejections in our sample are a bit too numerous and too fast compared to the model predictions. You can imagine this being remedied by these stars being reaccelerated upon a supernova kick, having first been dynamically ejected.”

The researchers found that potentially up to half of the stars first thought to be from supernova ejections were first dynamically ejected.

Vargas-Salazar’s findings also support the idea that most field massive stars are runaways, but she approached the question from the opposite direction: she looked for field massive stars that formed in relative isolation within tiny clusters of smaller stars, in which the massive target star is the “tip of the iceberg,” known as TIB clusters. She did this using two algorithms, “friends-of-friends” and “nearest neighbors,” to search for such clusters around 310 field massive stars in the Small Magellanic Cloud.

The “friends-of-friends” algorithm measures the number density of stars by counting how many stars lie within a specific distance of the target star and then doing the same for each of those stars in turn. The more tightly packed the stars are, the more likely the group is to be a cluster. The “nearest neighbors” algorithm measures the number density of stars between the target star and its nearest 20 companions. The more compact and denser the group, the more likely it is to be a cluster, Vargas-Salazar said.
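The two density measures described above can be sketched in a few lines. This is a minimal illustration, not the authors’ code: it assumes stars are given as 2D (projected sky) positions, uses the common k-nearest-neighbor estimator k / (π r_k²) with k = 20 as mentioned in the text, and treats the friends-of-friends linking length as a free parameter. The function names `knn_density` and `friends_of_friends` are hypothetical.

```python
import math
from collections import deque
from typing import List, Set, Tuple

Point = Tuple[float, float]  # assumed: an (x, y) position, e.g. projected sky coordinates


def knn_density(target: Point, others: List[Point], k: int = 20) -> float:
    """Surface number density around `target` from its k nearest neighbors.

    Uses the common estimator k / (pi * r_k**2), where r_k is the distance to
    the k-th nearest star. This estimator is an assumption, not the paper's.
    """
    if len(others) < k:
        raise ValueError("need at least k other stars")
    r_k = sorted(math.dist(target, p) for p in others)[k - 1]
    return k / (math.pi * r_k ** 2)


def friends_of_friends(points: List[Point], start: int, linking_length: float) -> Set[int]:
    """Indices of all stars chained to points[start] by links shorter than
    linking_length: friends, friends of friends, and so on (breadth-first)."""
    group = {start}
    queue = deque([start])
    while queue:
        i = queue.popleft()
        for j, p in enumerate(points):
            # Add any star not yet in the group that lies within the linking length.
            if j not in group and math.dist(points[i], p) < linking_length:
                group.add(j)
                queue.append(j)
    return group


if __name__ == "__main__":
    stars = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (5.0, 5.0)]
    print(sorted(friends_of_friends(stars, 0, 0.6)))  # [0, 1, 2]
    print(knn_density((0.0, 0.0), stars[1:], k=2))    # about 0.6366 (= 2 / pi)
```

This brute-force search is quadratic in the number of stars; for a real catalog, a spatial index such as a k-d tree would be the usual substitute.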

Using statistical tests, Vargas-Salazar compared these observations with three random-field datasets and compared the known runaway massive stars to nonrunaways. She found that only a few of the field massive stars appeared to have TIB clusters around them, suggesting that very few actually formed in the field. The balance of the field stars must have originated as runaways.

“In the end, we showed that 5% or less of the stars had TIB clusters. Instead, our findings imply that the majority of stars in field samples could be runaways,” Vargas-Salazar said. “Our findings are actually supporting the result that Johnny found, wrapped in a neat little bow.”

Vargas-Salazar’s findings provide part of the answer to the question of how massive stars form, says Sally Oey, senior author on both of the papers and professor of astronomy at U-M.

“Johnny and Irene’s work are flip sides of the same coin,” Oey said. “Irene’s numbers are consistent with Johnny’s in that the vast majority of field massive stars are runaways, but that a few are not. This is a critical finding for understanding how massive stars and clusters form, and in what conditions.”

The work of both Dorigo Jones and Vargas-Salazar was supported by the National Science Foundation.

source https://scienceblog.com/519342/most-isolated-massive-stars-are-kicked-out-of-their-clusters/

Cellular networks vulnerable to wildfires across U.S.

About one in four people in the United States lives in an area served by cellular phone towers at risk of an outage caused by wildfires, according to researchers at the University of Wisconsin–Madison.

Their study, presented today to a meeting of internet network researchers, found more than 430,000 cell network transceivers covering approximately 85 million people are in areas the U.S. Forest Service considers at moderate or high wildfire risk. The research suggests ways to make the sites more fire-hardy.

“This is a kind of roadmap for cell service providers to make assessments, allocate resources and take steps to make their infrastructure more resilient in the face of this threat,” says Paul Barford, a UW–Madison professor of computer science.

Paul Barford and Carol Barford, who directs UW–Madison’s Center for Sustainability and the Global Environment and is a co-author of the wildfire study, also collaborated on a 2018 project describing thousands of miles of fiber optic cable in the U.S. likely to be inundated by rising sea levels. Wildfire danger has a distinctly different footprint and presents a complex set of prediction problems.

“Fires are a fact of life for many ecosystems,” says graduate student Scott Anderson, first author of the study, which was supported by the National Science Foundation and the U.S. Department of Homeland Security and presented at the ACM Internet Measurement Conference. “Assessing the risk of fire, though, is complex. It varies a lot over time based on the amount of fuel available, how dry that fuel can get as climate changes, and almost unpredictable factors like the source of ignition.”

The researchers worked with historical records of wildfires, and matched the Forest Service’s map of Wildfire Hazard Potential to a crowdsourced database of cellular network equipment locations from OpenCelliD.

Six states — California, Florida, Texas, South Carolina, Georgia and North Carolina — each have at least 5,000 transceivers in high-risk areas.
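The overlay described above, checking each transceiver location against the hazard class of the map cell it falls in and tallying high-risk sites per state, can be sketched as follows. This is a toy illustration under stated assumptions, not the study’s pipeline: the hazard map is modeled as a regular longitude/latitude grid with five assumed classes from “very low” to “very high,” and `HazardGrid`, `Transceiver`, `high_risk_counts` and `states_at_or_above` are hypothetical names. With real data, calling `states_at_or_above(counts, 5000)` would mirror the 5,000-transceiver threshold quoted above.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional

# Assumed hazard classes, ordered from lowest to highest risk.
HAZARD_LEVELS = ["very low", "low", "moderate", "high", "very high"]


@dataclass
class HazardGrid:
    """Toy stand-in for a gridded hazard map: regular lon/lat cells,
    row 0 along the northern edge (an assumed layout)."""
    west: float
    north: float
    cell_size: float   # cell width and height in degrees
    classes: list      # classes[row][col] is one of HAZARD_LEVELS

    def class_at(self, lon: float, lat: float) -> Optional[str]:
        col = int((lon - self.west) // self.cell_size)
        row = int((self.north - lat) // self.cell_size)
        if 0 <= row < len(self.classes) and 0 <= col < len(self.classes[row]):
            return self.classes[row][col]
        return None  # location lies outside the mapped area


@dataclass
class Transceiver:
    lon: float
    lat: float
    state: str


def high_risk_counts(grid: HazardGrid, sites: List[Transceiver],
                     min_level: str = "high") -> Counter:
    """Count transceivers per state whose map cell is at or above min_level."""
    threshold = HAZARD_LEVELS.index(min_level)
    counts = Counter()
    for t in sites:
        cls = grid.class_at(t.lon, t.lat)
        if cls is not None and HAZARD_LEVELS.index(cls) >= threshold:
            counts[t.state] += 1
    return counts


def states_at_or_above(counts: Counter, minimum: int) -> List[str]:
    """States whose high-risk count meets the given minimum, sorted by name."""
    return sorted(state for state, n in counts.items() if n >= minimum)


if __name__ == "__main__":
    grid = HazardGrid(west=-100.0, north=40.0, cell_size=1.0,
                      classes=[["low", "high"], ["very high", "moderate"]])
    sites = [Transceiver(-99.5, 39.5, "CA"), Transceiver(-98.5, 39.5, "CA"),
             Transceiver(-99.5, 38.5, "FL"), Transceiver(-98.5, 38.5, "TX")]
    print(states_at_or_above(high_risk_counts(grid, sites), 1))  # ['CA', 'FL']
```

A production version would read the real hazard raster and tower coordinates with a geospatial library rather than a hand-built grid; the per-cell lookup is kept explicit here only to show the matching step.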

Figure: UW–Madison researchers mapped the cellular network coverage of cell phone towers in the continental U.S. at moderate, high and very high risk of outages caused by wildfires (panels: moderate, high and very high risk). Images courtesy of Scott Anderson.

“We think of the wildfire threat as being concentrated only in the western part of the United States,” Anderson says. “But we did see that there are areas that are at risk outside of the West, including the southeastern United States — especially in Florida — and even up around Philadelphia.”