#free website classification API


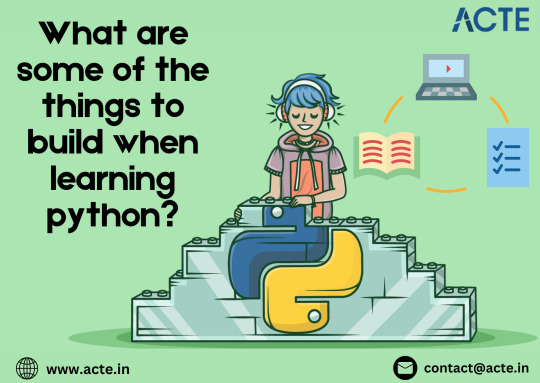

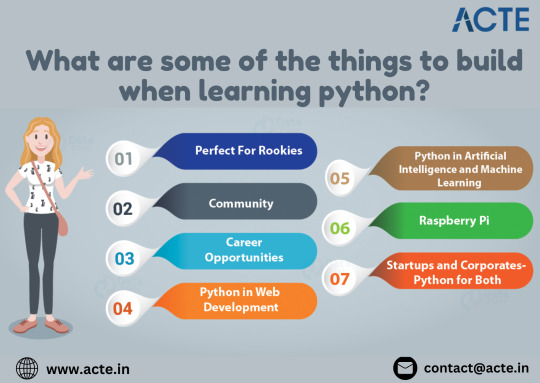

Power Up Your Python Skills: 10 Exciting Projects to Master Coding

Forget textbooks and lectures – the most epic way to learn Python is by doing! This guide unveils 10 thrilling projects that will transform you from a programming rookie to a coding champion. Prepare to conquer these quests and unleash your creativity and problem-solving prowess.

With the help of the Learn Python Course in Hyderabad, studying Python becomes a lot more exciting, whether you're a beginner or moving from another programming language.

Mission 1: Command Line Masters

Your quest begins with mastering the fundamentals. Build simple command-line applications – think math wizards, unit converters, or random password generators. These projects are the stepping stones to Pythonic greatness!
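Here is a concrete starting point for Mission 1: a minimal sketch of the random password generator mentioned above. It uses only the standard library's `secrets` and `string` modules. The function name is illustrative. So is the rule that every password must contain a lowercase letter, an uppercase letter, a digit, and a punctuation character; neither is a requirement.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Return a random password drawn from letters, digits and punctuation."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure random source
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until the candidate contains at least one of each character class
        if (any(c.islower() for c in password)
                and any(c.isupper() for c in password)
                and any(c.isdigit() for c in password)
                and any(c in string.punctuation for c in password)):
            return password


if __name__ == "__main__":
    print(generate_password())
```

Using `secrets` rather than `random` is the main design point. The `random` module is meant for simulations, not for anything security-related.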

Mission 2: Text-Based Games – Level Up

Time to challenge yourself! Create captivating text-based games like Hangman, Tic-Tac-Toe, or a trivia extravaganza. Craft engaging gameplay using loops, conditionals, and functions, while honing your Python skills in the process.

Mission 3: Web Scraper – Unearthing Web Data

The vast web holds secrets waiting to be discovered! Build web scrapers to extract valuable information from websites. Employ libraries like BeautifulSoup and Requests to navigate the HTML jungle, harvest data, and unlock hidden insights.
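Below is a minimal sketch of the scraping idea. It assumes the page HTML has already been downloaded, for example with `requests.get(url).text`. To keep the example dependency-free, it swaps BeautifulSoup for the standard library's `html.parser.HTMLParser`; with BeautifulSoup, the equivalent loop would run over `soup.find_all("a")`. The `LinkExtractor` class and `extract_links` function are hypothetical names.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, link text) pairs for every <a href=...> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag we are currently inside
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html: str) -> list[tuple[str, str]]:
    """Parse an HTML string and return its links in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```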

Mission 4: Data Analysis Detectives

Become a data analysis extraordinaire! Craft scripts to manipulate and analyze data from diverse sources – CSV files, spreadsheets, or databases. Calculate statistics, then use matplotlib or seaborn to create eye-catching data visualizations that reveal hidden truths.
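Here is a small sketch of the "calculate statistics" step, using the standard library's `csv` and `statistics` modules. The `summarize_column` function and its column name are illustrative. With a real file, read its contents first (or adapt the function to take a file opened with `newline=""`), then pass the resulting numbers to matplotlib or seaborn for plotting.

```python
import csv
import io
import statistics


def summarize_column(csv_text: str, column: str) -> dict:
    """Compute basic statistics for one numeric column of CSV data."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Skip blank cells; float() raises ValueError on non-numeric text
    values = [float(row[column]) for row in reader if row[column].strip()]
    if not values:
        raise ValueError(f"no numeric values in column {column!r}")
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        # Sample standard deviation needs at least two data points
        "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
    }
```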

Mission 5: GUI Gurus – Building User-Friendly Interfaces

Take your Python mastery to the next level by crafting user-friendly graphical interfaces (GUIs) with Tkinter or PyQt. From to-do list managers to weather apps, these projects will teach you how to design intuitive interfaces and conquer user interactions.

Mission 6: API Alliances – Connecting to the World

Expand your horizons by building clients for web APIs. Interact with services like Twitter, Reddit, or weather APIs to retrieve and display data. Master the art of making HTTP requests, parsing JSON responses, and handling authentication, which are invaluable skills for any programmer. Enrolling in the Best Python Certification Online can help you understand Python's intricacies and use it to its full potential.
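Below is a minimal sketch of an API client. It assumes a hypothetical weather service at `https://api.example.com/v1/weather` that:

- takes a `city` query parameter,
- expects a bearer token in the `Authorization` header, and
- returns JSON with `city`, `temp_c`, and `conditions` fields.

Real APIs such as Reddit's or a commercial weather API use their own URLs, field names, and authentication schemes, so check their documentation.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint, for illustration only.
BASE_URL = "https://api.example.com/v1/weather"


def build_request(city: str, api_key: str) -> urllib.request.Request:
    """Build an authenticated GET request for one city's weather."""
    query = urllib.parse.urlencode({"city": city, "units": "metric"})
    return urllib.request.Request(
        f"{BASE_URL}?{query}",
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
    )


def parse_weather(body: str) -> str:
    """Turn the (hypothetical) JSON response into a one-line summary."""
    data = json.loads(body)
    return f"{data['city']}: {data['temp_c']:.1f} °C, {data['conditions']}"


def fetch_weather(city: str, api_key: str) -> str:
    """Send the request and summarize the response (performs network I/O)."""
    req = build_request(city, api_key)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return parse_weather(resp.read().decode("utf-8"))
```

Keeping request building and response parsing separate from the network call makes both easy to test without hitting the real service.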

Mission 7: Automation Army – Streamlining Workflows

Say goodbye to repetitive tasks! Write automation scripts to handle tedious processes like file management, data processing, or email sending. Utilize libraries like os, shutil, and smtplib to free up your time and boost productivity.
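Here is a sketch of a typical file-management automation with `os` and `shutil`: it sorts the files in a folder into subfolders named after their extensions. The function name and the `no_extension` folder name are illustrative assumptions. Try it on a copy of a folder first, because it moves files.

```python
import os
import shutil


def organize_by_extension(folder: str) -> dict[str, int]:
    """Move each file in `folder` into a subfolder named after its extension.

    Returns a count of moved files per extension.
    """
    moved: dict[str, int] = {}
    for name in os.listdir(folder):  # listing is taken once, before any moves
        src = os.path.join(folder, name)
        if not os.path.isfile(src):
            continue  # leave existing subdirectories alone
        ext = os.path.splitext(name)[1].lstrip(".").lower() or "no_extension"
        dest_dir = os.path.join(folder, ext)
        os.makedirs(dest_dir, exist_ok=True)
        shutil.move(src, os.path.join(dest_dir, name))
        moved[ext] = moved.get(ext, 0) + 1
    return moved
```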

Mission 8: Machine Learning Marvels – Unveiling AI Power

Enter the fascinating world of machine learning! Build basic classification or regression models using scikit-learn. Start with beginner-friendly projects like predicting housing prices or classifying flowers, then explore more complex algorithms as you progress.

Mission 9: Web Development Warriors – Forge Your Online Presence

Immerse yourself in the thrilling world of web development. Construct simple websites or web applications using frameworks like Flask or Django. Whether it's a personal portfolio site, a blog, or a data-driven application, these projects will teach you essential skills like routing, templating, and database interactions.

Mission 10: Open Source Odyssey – Join the Coding Community

Become a valued member of the open-source community! Contribute to projects on platforms like GitHub. Tackle beginner-friendly issues, fix bugs, or improve documentation. Gain real-world experience and collaborate with fellow developers to make a lasting impact.

These 10 Python quests aren't just about acquiring coding skills – they're a gateway to a world of exploration and innovation. Each project offers a unique opportunity to learn, grow, and create something amazing. So, grab your virtual sword and shield (aka your code editor) and embark on this epic Python adventure!


What are the top 10 free AI tools you must use in 2024?

As of 2024, the landscape of AI tools continues to evolve, with many new advancements and innovations. Here's a list of the top 10 free AI tools that you must consider using:

TensorFlow: Google's TensorFlow remains one of the most popular and versatile deep learning frameworks. It offers a comprehensive ecosystem for building and deploying machine learning models across various platforms.

PyTorch: Developed by Facebook's AI Research lab (FAIR), PyTorch is known for its dynamic computational graph construction and ease of use. It's widely adopted in both academia and industry for deep learning projects.

Scikit-learn: Scikit-learn is a powerful and user-friendly library for classical machine learning algorithms in Python. It provides simple and efficient tools for data mining, data analysis, and model evaluation.

Keras: Keras is an open-source deep learning library written in Python. It's renowned for its user-friendly interface and runs on top of backend frameworks such as TensorFlow (earlier versions also supported Theano; Keras 3 adds JAX and PyTorch).

IBM Watson Studio: IBM Watson Studio offers a suite of tools for data scientists, developers, and domain experts to collaborate on AI projects. It provides a variety of capabilities for data preparation, model training, and deployment in a cloud-based environment.

Google Colab (Colaboratory): Google Colab is a free cloud-based Jupyter notebook environment that allows you to write and execute Python code, particularly for machine learning and deep learning tasks. It provides access to free GPU and TPU resources for faster model training.

Hugging Face Transformers: Hugging Face's Transformers library provides a wide range of pre-trained natural language processing (NLP) models, including state-of-the-art transformers like BERT, GPT, and RoBERTa. It's a go-to resource for NLP practitioners and researchers.

Fast.ai: Fast.ai offers high-level deep learning APIs built on top of PyTorch. It provides easy-to-use interfaces and pre-built models for various tasks like image classification, object detection, and natural language processing.

OpenAI Gym: OpenAI Gym (now maintained as Gymnasium by the Farama Foundation) is a toolkit for developing and comparing reinforcement learning algorithms. It provides a collection of environments where you can train and evaluate RL agents, making it invaluable for research and experimentation.

NLTK (Natural Language Toolkit): NLTK is a leading platform for building Python programs to work with human language data. It offers a suite of libraries and tools for tasks like tokenization, stemming, tagging, parsing, and semantic reasoning.

These tools cover a broad spectrum of AI and machine learning tasks and are widely used by practitioners and researchers worldwide. They provide a solid foundation for developing innovative AI solutions without the barrier of high costs.

For more information about artificial intelligence and the Internet, please visit our technology website:


5 Tips About Small SEO Tools You Can Use Today

Google Search Console is a free tool that helps website owners monitor their site's performance in Google Search. It provides information about the site's rankings, number of clicks, and impressions.

Track your desktop, mobile, and local rankings in more than 150 countries, keep an eye on your competitors, and get daily updated reports.

Although there is some debate about how actionable Google's Natural Language API is for SEO, there is no denying that it is an impressive tool with plenty of advanced analysis.

The tool also provides a content score that measures the quality of your content against the top-ranking pages for a given keyword. MarketMuse is helpful for creating content that is relevant, informative, and engaging.

By the end of this article, you should have a better understanding of which tool(s) would be most suitable for your business.

Technical SEO is the core step of search engine optimization, so use our small SEO tools to find on-page errors and backend errors.

Ahrefs Backlink Checker is another popular backlink analysis tool that provides a detailed view of a website's backlink profile.

In addition, Clearscope's keyword discovery tool uses "smart language algorithms" to find possible keyword options based on topic ideas or URLs (https://cuteseotools.com/webtools/youtube-channel-search).

In a world of very similar SEO tools, Letterdrop is in a category of its own. Part content management solution, part SEO auditor, part keyword research tool, it's a unique product that does a little of several different things. But one really distinctive feature is how it integrates content automation capabilities.

Claiming your business profile forms the foundation of most other local SEO activities, so it's an essential step.

Welcome to the future of landing page personalization. Instapage makes it easier than ever to build, test, and optimize your pages with the power of artificial intelligence.

The plugin is beginner-friendly yet capable of delivering the results that SEO professionals expect. So it's unsurprising that companies and big brands also use this plugin.

The SERP Analyzer is also miles ahead of similar tools from many competitors. I love the interactive chart that shows what the pages in the top 48 positions average for metrics like word count, character count, keyword density, and partial keywords.
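Keyword density, one of the metrics mentioned above, is usually computed as the share of a page's words taken up by the keyword. Tools differ on the exact formula. This sketch assumes density = (occurrences × words in the keyword) / total words × 100, with a simple regex tokenizer, so its numbers may not match any particular SEO tool.

```python
import re

# Simple tokenizer: lowercase letters, digits and apostrophes form words
WORD = re.compile(r"[a-z0-9']+")


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in `text` covered by occurrences of `keyword`."""
    words = WORD.findall(text.lower())
    phrase = WORD.findall(keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the keyword phrase starts
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    # Each hit covers n words of the text
    return 100.0 * hits * n / len(words)
```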

Can Letterdrop fully automate your entire content calendar? No. Is it a truly all-in-one SEO tool? Also no. But as a hybrid content creation platform with neat automation features designed to help you create great content, it's one of the most interesting tools on this list.


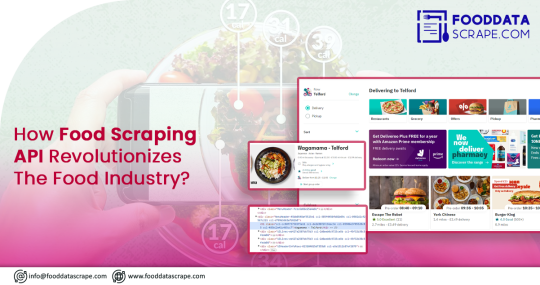

How Food Scraping API Revolutionizes the Food Industry?


Food Scraping API is a game-changing tool for food enthusiasts, chefs, and businesses in the culinary world. This API can provide easy access to a treasure trove of food-related data, from recipes and ingredient lists to nutritional information and cooking tips. Whether you're a home cook looking for inspiration, a professional chef seeking to streamline your menu planning, or a food-related business aiming to stay competitive, the Food Scraping API is your gateway to a world of culinary possibilities. Let's explore how this API can revolutionize your approach to food in exciting and innovative ways.

List of Data Fields

Recipes

Ingredient Lists

Nutritional Information

Cuisine Type

Cooking Time

Cooking Tips

Dish Ratings

Reviews

Restaurant Menus

Food Images

Dietary Information

Food Allergen Information

Ingredient Prices

Overview of Food Scraping API

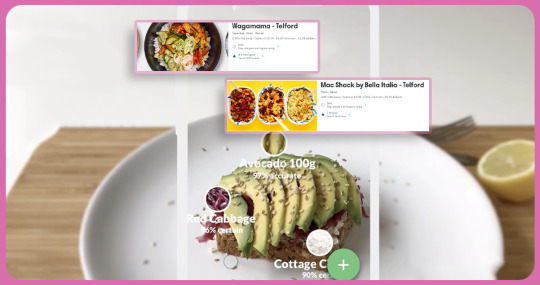

A Food Scraping API is a specialized tool that provides users with the capability to extract a wealth of food-related data from a variety of online sources, including recipe websites, food blogs, restaurant listings, and more. These APIs can meet the needs of individuals, chefs, food enthusiasts, and businesses within the culinary and hospitality industries. They empower users to access a diverse range of culinary information, such as recipes, ingredient lists, nutritional details, cooking tips, restaurant menus, and user-generated reviews. This data can be invaluable for meal planning, recipe development, menu optimization, dietary analysis, and market research. Food data scraping streamlines the data collection process, offering a convenient and efficient means to access and utilize culinary insights, ultimately enhancing the culinary experience and enabling businesses to make data-driven decisions in the ever-evolving world of food and dining.

Key Features of Food Scraping API

Data Extraction: The API is capable of accessing and collecting data from a wide range of online sources, including websites that feature food-related content, recipe databases, restaurant listings, and other culinary resources. It does so by scanning web pages, extracting the HTML content, and parsing the relevant data for various applications.

Recipe Information: The API can retrieve comprehensive information about recipes, from the list of ingredients and their quantities to step-by-step preparation instructions. Users can also access serving sizes and dietary classifications (e.g., vegetarian, gluten-free) to cater to specific culinary preferences.

Nutritional Data: The API can retrieve vital nutritional information for recipes, including calorie counts, macronutrients (carbohydrates, fats, proteins), and micronutrients. This data is essential for individuals looking to make informed dietary choices and for businesses aiming to provide accurate nutritional information to customers.

Cuisine and Dietary Filters: Users can apply filters to the scraped data to narrow down their search based on specific criteria. For instance, they can filter recipes by cuisine type, such as Italian, Mexican, or Asian. Filters for dietary preferences such as vegan, gluten-free, or keto ensure that the content aligns with specific dietary needs.
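Here is a sketch of cuisine and dietary filtering applied to records that have already been scraped. The `Recipe` fields (`name`, `cuisine`, `diets`) are a hypothetical schema; a real Food Scraping API will return its own field names. The filter is case-insensitive, and a recipe must carry every requested diet tag to match.

```python
from dataclasses import dataclass, field


@dataclass
class Recipe:
    """Hypothetical shape of one scraped recipe record."""
    name: str
    cuisine: str
    diets: set[str] = field(default_factory=set)


def filter_recipes(recipes, cuisine=None, diets=()):
    """Keep recipes matching `cuisine` (if given) and *all* requested diet tags."""
    wanted = {d.lower() for d in diets}
    matches = []
    for recipe in recipes:
        if cuisine and recipe.cuisine.lower() != cuisine.lower():
            continue
        # Subset test: every wanted tag must appear on the recipe
        if not wanted <= {d.lower() for d in recipe.diets}:
            continue
        matches.append(recipe)
    return matches
```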

Cooking Tips: This feature provides users with practical guidance and tips related to cooking techniques and food preparation. It can offer advice on various aspects of cooking, such as proper knife skills, cooking temperatures, and time-saving tricks to help users improve their culinary skills.

Restaurant Menu Details: For the hospitality industry, the restaurant data scraper can scrape restaurant menu data. It includes information about the dishes offered, their prices, and their availability. This feature is invaluable for restaurants, enabling them to keep their online menus updated and accurate.

User Reviews: Accessing user-generated content, the API can retrieve ratings, reviews, and comments associated with recipes and restaurant listings. This user feedback provides insights into the popularity of certain dishes, dining experiences, and the overall reputation of restaurants. Users can make more informed choices based on the feedback of others.

Image Retrieval: This feature lets users obtain food-related images associated with recipes, ingredients, or restaurant dishes. Visuals are a crucial part of the culinary experience, and these images enhance the presentation and visual appeal of culinary content, which matters for individuals and businesses that need to showcase their food offerings online.

How to Collect Real-Time Food Delivery Data with Food Scraping API

Collecting real-time food delivery data using a Food data scraper involves several steps:

Select a Food Scraping API: Choose a reliable one that suits your data collection needs. Ensure that it provides the features required to access real-time food delivery information from the specific sources you are interested in.

Define Data Sources: Identify the online platforms or sources where the real-time food delivery data is available. Common sources include food delivery apps, restaurant websites, and third-party aggregators like Uber Eats, GrubHub, DoorDash, or Postmates.

API Configuration: Set up the Food Scraping API to target the chosen data sources. Configure the API to collect real-time data, specifying the information you want to extract. It may include restaurant menus, delivery times, pricing, and availability.

Scheduling: Depending on the API's capabilities, you can schedule data extraction at regular intervals to maintain real-time updates. Run it hourly, daily, or more frequently to keep the data fresh.

Data Extraction: The API will initiate data scraping from the selected sources according to your configuration. It will access the web, collect the required data, and return it in a structured format helpful for analysis or display.

Data Storage: Store the scraped data in a secure database or cloud storage to ensure its accessibility and preservation. Keep real-time data up to date and readily available for use.
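Here is a minimal storage sketch for the Data Storage step. It assumes scraped menu items arrive as dictionaries with hypothetical `restaurant`, `dish`, `price`, and `available` keys, and it uses the standard library's `sqlite3`. `INSERT OR REPLACE`, keyed on (restaurant, dish), keeps one current row per dish. Repeated scheduled runs therefore update prices and availability instead of piling up duplicates. A production system would more likely use a server database or cloud storage.

```python
import sqlite3
from datetime import datetime, timezone

SCHEMA = """
CREATE TABLE IF NOT EXISTS menu_items (
    restaurant TEXT NOT NULL,
    dish       TEXT NOT NULL,
    price      REAL,
    available  INTEGER NOT NULL,
    scraped_at TEXT NOT NULL,
    PRIMARY KEY (restaurant, dish)
)
"""


def save_menu_items(conn: sqlite3.Connection, items: list[dict]) -> None:
    """Insert or refresh scraped menu items, stamping them with the scrape time."""
    conn.execute(SCHEMA)
    scraped_at = datetime.now(timezone.utc).isoformat()
    rows = [
        (i["restaurant"], i["dish"], i["price"], int(i["available"]), scraped_at)
        for i in items
    ]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO menu_items VALUES (?, ?, ?, ?, ?)", rows
        )
```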

Data Analysis: Utilize the collected real-time data for analysis, reporting, or visualization. You can use this information to track restaurant availability, delivery times, popular dishes, pricing trends, and more.

Integration: If you are building an application or service that relies on real-time food delivery data, integrate the API into your software to automate data collection and processing.

Monitoring: Continuously monitor the API's performance to ensure that it is consistently retrieving real-time data. Set up alerts for any issues or anomalies in the data collection process.

Compliance and Ethics: Ensure that your data scraping practices comply with the terms of service of the websites or platforms from which you collect data. Respect their robots.txt files and follow ethical scraping practices.
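For the robots.txt part of the compliance step, Python's standard library includes `urllib.robotparser`. This sketch parses robots.txt text you have already fetched; in practice you can call `set_url()` and `read()` instead. It returns the URLs a given user agent may fetch, plus any `Crawl-delay`. The `check_robots` helper and the `MenuBot` agent name are hypothetical. Note that robots.txt does not replace reading a site's terms of service.

```python
from urllib import robotparser


def check_robots(robots_txt: str, user_agent: str, urls):
    """Return (urls the agent may fetch, crawl delay in seconds or None)."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    allowed = [u for u in urls if rp.can_fetch(user_agent, u)]
    return allowed, rp.crawl_delay(user_agent)
```

Honoring the returned crawl delay between requests (for example with `time.sleep`) is part of scraping politely.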

Data Visualization: Consider using data visualization tools to present real-time food delivery data in a user-friendly and informative manner, making it accessible and actionable for your intended audience.

Why Scrape Food Delivery Data?

Scraping food delivery data serves various essential purposes. For consumers, it provides real-time access to a wide range of menu options, prices, and delivery times, facilitating informed decisions when ordering meals. It allows individuals to discover new dishes, compare restaurant offerings, and find the best deals. For businesses operating in the food delivery industry, scraping data is invaluable for market research, enabling them to stay competitive by monitoring trends, pricing strategies, and popular menu items. It also supports optimizing delivery services, as accurate and up-to-date data helps in efficient order fulfillment. Overall, scraping food delivery data empowers consumers and businesses by providing a comprehensive and real-time view of the dynamic and ever-evolving food delivery landscape.

To access comprehensive insights, don't hesitate to contact Food Data Scrape. We offer a comprehensive suite of services, including Food Data Aggregator and Mobile Restaurant App Scraping. Our advanced insights and analytics can elevate your decision-making processes and take your business strategies to new heights. Contact us today to unlock success powered by data!

Know more: https://www.fooddatascrape.com/food-scraping-api-revolutionizes-the-food-industry.php



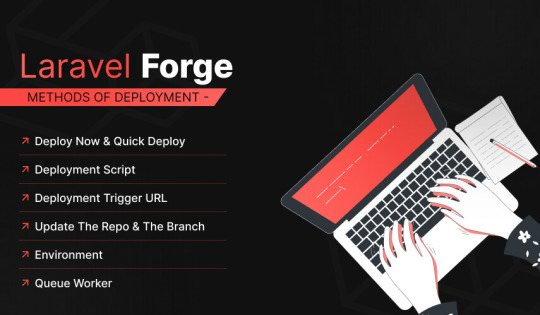

Auto Deployment with Laravel using Forge/Envoyer

We know most readers love to build web applications with the Laravel framework, and deploying these applications is a crucial step. However, while many know server management, only some are particularly fond of configuring and maintaining servers. Luckily, Laravel Forge and Envoyer are available to assist everyone!

When you are ready to deploy your Laravel application to production, there are some essential steps you can take to ensure your application runs as efficiently as possible. This blog covers the key topics for deploying a Laravel application properly.

Before getting into the details, here is a brief overview of what Envoyer and Forge do for deployment.

Envoyer is a deployment tool for PHP applications, and its best feature is zero-downtime deployment. This means your application's users won't notice when a new version has been pushed.

The basic overview of the Laravel framework:

The Laravel framework is a free, open-source PHP framework that provides a set of tools and resources for building modern PHP applications. It forms a complete ecosystem built on its core features, a range of extensions, and compatible packages. Laravel's growth and popularity have escalated in recent years, with many developers adopting it as their framework of choice for a streamlined development process.

What is deployment in the context of Laravel?

A deployment is the process of pulling your code from your source control provider onto your server, making it ready for the world to access.

Laravel Forge makes it easy to manage servers. A new website can be online quickly, queues and cron jobs are easy to set up, and more advanced setups using a network of servers and load balancers can be configured. Envoyer, meanwhile, manages deployments. This blog gives you an insight into both Forge and Envoyer.

Laravel Forge

Laravel Forge is a tool for managing your servers, and the first step is creating an account. Next, you connect a server provider; several cloud providers are supported out of the box, including Linode, Vultr, Hetzner, and Amazon. You can also manage a custom VPS.

Deploy Now and Quick Deploy

The Quick Deploy feature of Forge allows you to quickly deploy your projects when you push to your source control provider. When you push to the configured quick deploy branch, Laravel Forge will pull your latest code from source control and run the application’s configured deployment script.

Deployment Trigger URL

This option lets you integrate your app with a third-party service or a custom deployment script: when the URL receives a request, the deployment script is triggered.
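Here is a minimal sketch of calling a deployment trigger URL from a script or third-party service, using only `urllib.request`. It rests on three assumptions:

- the full URL, including its token, has been copied from the site's settings in Forge;
- that URL is stored in a hypothetical `DEPLOY_TRIGGER_URL` environment variable; and
- a plain POST request is accepted.

Check Forge's documentation for the exact behavior. Keep the URL out of source control, because anyone holding it can start a deployment.

```python
import os
import urllib.request


def build_trigger_request(trigger_url: str) -> urllib.request.Request:
    """Build an empty-bodied POST request to the deployment trigger URL."""
    return urllib.request.Request(trigger_url, data=b"", method="POST")


def trigger_deployment(trigger_url: str, timeout: float = 30.0) -> int:
    """Send the request and return the HTTP status code (performs network I/O)."""
    with urllib.request.urlopen(build_trigger_request(trigger_url), timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    url = os.environ.get("DEPLOY_TRIGGER_URL")  # hypothetical variable name
    if url:
        print("Deployment request returned HTTP", trigger_deployment(url))
    else:
        print("Set DEPLOY_TRIGGER_URL to your site's deployment trigger URL.")
```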

Update the Repo and the Branch

You can use these options if you need to install a newer version of the same project from a different repository or change the branch in Laravel Forge. If you change the branch, you may also have to update the branch name in the deployment script.

Environment

Laravel Forge automatically generates an environment file for the application, and some details, such as database credentials, are added to it automatically. If the app uses an API, you can safely place the API key in the environment. Even when running a generic PHP web app, you can access environment variables using the getenv() function.

Queue Worker

Starting a queue worker in Forge is the same as running the queue:work Artisan command. Laravel Forge manages queue workers with a process monitor called Supervisor, which keeps the process running permanently. You can create multiple queues based on queue priority or any other classification you find helpful.

Project Creation in Envoyer

The first step is to create an account on Envoyer and log in; a free trial is available. From your profile page > Integrations, you can link a source control system such as Bitbucket or GitHub. Enter the access token for the service you are using, and you're ready to create your first project.

First Deployment:

Envoyer needs to be able to communicate with your Forge-managed server, which is done via an SSH key. You will find the SSH key under the 'Key' button on the Servers tab in Envoyer. Enter this key in the SSH tab for your server in Laravel Forge.

The final step is to add the environment file. Click the 'Manage Environment' button on the Servers tab in Envoyer. You will then enter an 'encryption key', which Envoyer uses to encrypt your environment file, since that file contains access tokens and passwords.

Conclusion

This blog gave you an exclusive insight into the Laravel framework and deployment with Forge and Envoyer.

Laravel Forge and Envoyer are incredible tools that make deployment a cakewalk. Both tools have tons of features and an easy-to-use UI that lets you create and provision servers and deploy applications without hassle.

Numerous web app development companies are available in the market. However, clients turn to Lucenta Solutions to build their customized apps. We code your imagination with the 4D technique: Determine, Design, Develop, and Deliver. With proficient team members, we can overcome every obstacle on our path to success. Your satisfaction is our prime mantra!


US Address Verification API

A US address verification API helps ensure that the addresses entered on your website are correct and free of errors. By leveraging the world's most powerful address databases (USPS, Canada Post, and others) along with IPQS proprietary technology, you can quickly standardize and format user input, correct typos and abbreviations, and verify that an address exists. This improves package delivery success rates and prevents expensive complications such as failed deliveries, returned merchandise, or chargebacks.

The service validates an address and provides data about it, including information about the location (latitude/longitude), postal code, state/province, and city/borough. In addition, the API verifies that an address is a valid address in its entirety and checks that it conforms to localized formatting rules. The API can also help you correct misspellings in the street name and suffix, add missing elements like full city/state/postal code, and detect whether an address is a P.O. Box or rural route.
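Format checks are not verification, but they are a cheap first filter before calling a verification API. This sketch flags P.O. Box lines and checks the ZIP or ZIP+4 pattern with regular expressions. The patterns and function names are illustrative assumptions, and only the API's reference data can confirm that an address actually exists.

```python
import re

# Matches "PO Box", "P.O. Box", "P. O. Box", "Post Office Box" (case-insensitive)
PO_BOX = re.compile(r"\b(?:p\.?\s*o\.?|post\s+office)\s*box\b", re.IGNORECASE)
# Five-digit ZIP code, optionally followed by a four-digit ZIP+4 extension
ZIP_CODE = re.compile(r"^\d{5}(?:-\d{4})?$")


def looks_like_po_box(address_line: str) -> bool:
    return bool(PO_BOX.search(address_line))


def valid_zip_format(zip_code: str) -> bool:
    return bool(ZIP_CODE.match(zip_code.strip()))
```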

The API can also determine whether an address is a business or a residence, and can even identify the type of business at that location, such as a restaurant or retail store. This classification is based on reference data that the API compares against the submitted address. USPS makes this reference data available to third parties through the APIs and web forms that are part of the Coding Accuracy Support System (CASS). The API can optionally enable CASS to validate an address for mailing purposes by setting enableUspsCass to true.


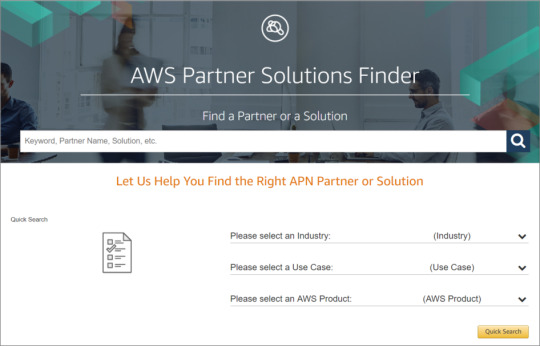

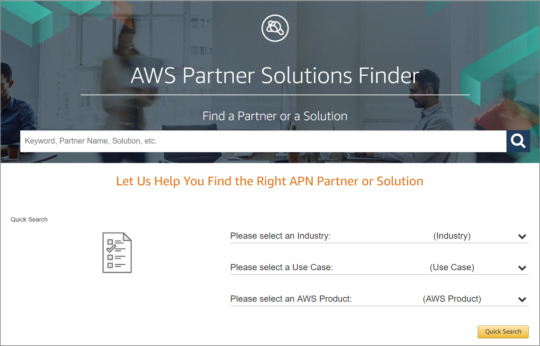

How to Launch a Successful Business on Amazon Web Services

AWS is a reputable platform that's almost sure to meet your business's requirements once you understand its advantages.

Trouble-free Operation

As soon as you sign up for Amazon Web Services, you'll be greeted by the AWS Management Console, a very user-friendly graphical interface. Applications and services can be accessed through this interface. The platform is easy to use because the company provides well-documented web service APIs.

The Tools Offered Are Exceptionally Extensive

Originally intended for cloud storage and computing, AWS has since grown to include more than 70 additional services. Databases, software, mobile, analytics, and marketing are all included. Even if you have only the most basic IT needs, you can't go wrong with this platform. Using a single platform to handle all your requirements saves you from having to keep track of many service providers. It is a practical choice that could save you a lot of time and money.

Limitless Server Resources

What do you need from your cloud server? Unlimited bandwidth for high-traffic websites, or perhaps an email service that's secure and reliable, may be the priority. AWS can take care of it all, handling virtually every IT-related problem your business might have. Better still, you won't have to worry about service interruptions if you decide to grow or expand. Amazon Web Services (AWS) reigns in the cloud because of its virtually limitless capacity. In fact, it is currently used by more than a million businesses around the world.

A Trustworthy Encryption and Security System

When it comes to protecting your data, AWS is a big advantage. WordPress sites accounted for around 90% of compromised websites last year.

AWS Is a Cost-Effective and Flexible Choice

With AWS, you have a great deal of control over your resources. When it comes to using its infrastructure on demand, there's practically no limit, and you have a wide range of options.


What is an Address Validation API?

An Address Validation API is a tool for verifying addresses. It uses reference data to determine an address's classification. The API also uses operational feedback to confirm a specific address. When an address matches its reference data, it is called a resolved address. If the address does not have a postal code, a state code or city is enough to identify it as a valid address. The API also attempts to normalize the address, replacing common roadway identifiers and reordering components. Once the address validation process is complete, it can return the address to its input state.
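To illustrate the normalization step (replacing roadway identifiers), here is a toy sketch that maps a few street suffixes and directionals to USPS-style abbreviations. The lookup tables are a small hypothetical subset. A real validation API works against full reference data, whereas this naive token mapping would, for example, also abbreviate a street actually named "North".

```python
import re

# Small illustrative subset of USPS-style abbreviations (not the full table)
SUFFIXES = {"street": "ST", "avenue": "AVE", "boulevard": "BLVD",
            "road": "RD", "drive": "DR", "lane": "LN"}
DIRECTIONS = {"north": "N", "south": "S", "east": "E", "west": "W"}


def normalize_street(line: str) -> str:
    """Uppercase an address line and abbreviate known suffixes and directions."""
    tokens = re.sub(r"[.,]", " ", line).split()  # drop periods and commas
    out = []
    for tok in tokens:
        low = tok.lower()
        out.append(SUFFIXES.get(low) or DIRECTIONS.get(low) or tok.upper())
    return " ".join(out)
```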

Address verification API services can help companies ensure that addresses are correct before sending out mailers to customers. They can help prevent spam by verifying addresses against standard postal data and ensuring that the addresses are valid. With address verification, an organization can save money by avoiding the cost of sending mailers to the wrong addresses.

An Address Verification API should be easy to use. It should have an intuitive interface and an accurate database. Look for a service that offers free trials, demos, and training. Also, consider your budget and business needs before investing in address verification software. It's an important investment in the long run.

Address validation services validate physical addresses either through a real-time API call or a data file upload. They can also correct typos, score addresses, and analyze user information. In addition, an Address Verification API can be used to process requests from websites.


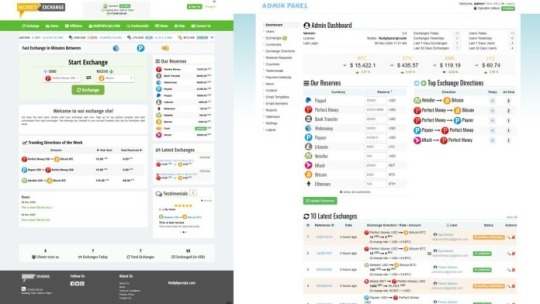

Not Known Facts About PHP currency Exchange Script

I'd a short while ago obtained a script from Up coming Hyip. The script fitted me pretty nicely and was precisely as explained on their web site. Thank you Up coming hyip for that superb encounter!

An prolonged license enables an item to be used in limitless assignments for possibly personal or industrial use. The merchandise can't be made available for resale "as-is". It really is permitted to distribute/sublicense the supply data files as portion of a larger undertaking. Near

Then it can vanish soon after a while for it to return soon after some time and wishes once again one click. You should don't react using a duplicate previous concept but produce down your method and how you're thinking that you can reach it as part of your reaction or to me in chat. Many thanks! CSS HTML JavaScript Python Website Scraping $33 (Avg Bid) $33 Avg Bid 23 bids

This tutorial will evaluation The fundamental code snippets necessary for use with PHP, Python, Ruby, and Java Script apps. RapidAPI supports 19 common programming languages with code automobile-technology facilities geared toward software developers.

Exchange Rix is effective and characteristics rich php script which will help you to run your personal bitcoin or other cryptocurrencies exchange internet site. Basically make order and start it currently. It’s uncomplicated.

We will likely be employing PHP like a scripting language and interpreter that is mainly utilized on any World-wide-web server, such as xamp, wamp, and so on. It's being used to any common Web-sites, and it's got a contemporary know-how that can certainly be utilized by the following era.

Our currency converter is SEO optimized and ready to be uploaded in minutes. No coding knowledge is needed; it is well documented and easy to set up, install, and operate.

VERIFIED: I need to scrape simple search results of mom Ondo services. I do not need to extract details; I need just the HTML after JavaScript execution. I'll extract the data myself.

Category: MLM. Cryptocurrency has become the future for miners, but it is impossible to mine cryptocurrency within the given time frame; only powerful, full-server miners can mine much faster than others. So here is the solution for cryptocurrency miners: buy or rent a powerful server to mine cryptocurrency.

The primary API command is the "GET exchange" call, which is used to specify the currencies for the exchange rates. Developers need to include both their RapidAPI key and the two parameters for the currency pair in every API call.
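As a minimal sketch of what such a call might look like in Python, the function below only builds the request so it can be inspected before sending. It assumes the RapidAPI "Currency Exchange" listing served from the host `currency-exchange.p.rapidapi.com` with `from` and `to` query parameters; the host, path, and parameter names are assumptions to check against the listing's own generated snippet, and `build_exchange_request` is a hypothetical helper name. The `X-RapidAPI-Key` and `X-RapidAPI-Host` headers are the ones RapidAPI uses to authenticate calls.

```python
import urllib.parse
import urllib.request

# Assumed host of the RapidAPI "Currency Exchange" listing; verify it
# against the code snippet shown on the listing page.
API_HOST = "currency-exchange.p.rapidapi.com"


def build_exchange_request(api_key: str, from_currency: str, to_currency: str) -> urllib.request.Request:
    """Build (but do not send) a GET /exchange request for one currency pair."""
    query = urllib.parse.urlencode({"from": from_currency, "to": to_currency})
    url = f"https://{API_HOST}/exchange?{query}"
    headers = {
        "X-RapidAPI-Key": api_key,    # your personal RapidAPI key
        "X-RapidAPI-Host": API_HOST,  # tells RapidAPI which API to route to
    }
    return urllib.request.Request(url, headers=headers, method="GET")
```

To actually send it, pass the request to `urllib.request.urlopen(req)` and read the response body. Keeping request construction separate from sending makes it easy to check the URL and headers without spending API quota.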

Notice that we're using the setParam method, which specifies the parameter and its value, here a comma-separated list of currency codes. The currency codes can be found by following the link for supported currencies given above.

As you can see, we can do a lot with our free access to the currencylayer services, because we can get both live and historical data. If you need more than 1,000 API calls a month, you need to choose the premium plan that best fits your needs.
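The comma-separated currency list and the live/historical split described above can be sketched as a small URL builder. This assumes currencylayer's `live` and `historical` endpoints with `access_key`, `currencies`, and `date` (YYYY-MM-DD) query parameters at `http://api.currencylayer.com`; treat the base URL and parameter names as assumptions to verify against the provider's documentation. `currencylayer_url` is a hypothetical helper, not part of any official client.

```python
from urllib.parse import urlencode

# Assumed base URL for currencylayer (the free plan has historically used plain HTTP).
BASE_URL = "http://api.currencylayer.com"


def currencylayer_url(access_key, currencies, date=None):
    """Return a 'live' URL, or a 'historical' URL when a YYYY-MM-DD date is given."""
    params = {
        "access_key": access_key,
        # The currencies parameter is one comma-separated list of codes.
        "currencies": ",".join(currencies),
    }
    endpoint = "live"
    if date is not None:
        endpoint = "historical"
        params["date"] = date
    # safe="," keeps the commas readable instead of encoding them as %2C.
    return f"{BASE_URL}/{endpoint}?{urlencode(params, safe=',')}"
```

For example, `currencylayer_url("KEY", ["EUR", "GBP"])` targets the live endpoint, while adding `date="2020-01-01"` switches to the historical one.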

The website admin can control all activity in the PHP currency exchange script, such as adding and removing funds, managing payment gateways, editing the user interface, and more. In addition, all of the following stats are available:

Brightery Currency Converter: the best currency exchange script you can find in 2020. Get the current currency rate, dollar exc. https://www.exchangerix.com/


What are Web 2.0 submission sites? What are its benefits in SEO?

Web 2.0 is a term that describes a wide variety of interactive and collaborative aspects of the internet. The term appeared in 2004-2005 and is also called the "Social Web". Basically, it represents the second generation of web development: pages that facilitate communication, offer services, share information securely, and enable collaboration on the internet.

Visit Seotoolskit for more exciting and free SEO Content.

The benefits of Web 2.0

The benefits of Web 2.0 include:

Free classification of information: users can collectively classify and find information (e.g., "tagging" of websites, images, videos, or links)

Rich user experience – dynamic content that is responsive to user input (e.g., a user can “click” on an image to enlarge it or find out more information)

User participation – information flows two ways between site owner and site users by means of evaluation, review, and online commenting. Site users also typically create user-generated content for others to see (e.g., Wikipedia, an online encyclopedia that anyone can write articles for or edit)

Software as a service (SaaS): Web 2.0 sites developed APIs to allow automated usage, such as by a Web "app" (software application) or a mashup

Mass participation – near-universal web access leads to differentiation of concerns, from the traditional Internet user base (who tended to be hackers and computer hobbyists) to a wider variety of users
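The "free classification" idea in the list above (users tagging items so that everyone can find them) can be sketched as a tiny tag index. This is a hypothetical illustration of a folksonomy, not the implementation of any particular Web 2.0 site; the class name `TagIndex` and the example URLs are made up.

```python
from collections import defaultdict


class TagIndex:
    """Hypothetical folksonomy index: any user can attach any tag to any item."""

    def __init__(self):
        self._items_by_tag = defaultdict(set)  # tag -> set of item URLs

    def tag(self, item, *tags):
        for t in tags:
            # Normalise so "Python" and "python " count as the same tag.
            self._items_by_tag[t.strip().lower()].add(item)

    def find(self, *tags):
        """Return items carrying every requested tag, sorted for stable output."""
        sets = [self._items_by_tag.get(t.strip().lower(), set()) for t in tags]
        if not sets:
            return []
        return sorted(set.intersection(*sets))
```

Usage: after `index.tag("https://example.com/a", "python", "tutorial")` and `index.tag("https://example.com/b", "Python")`, a search for `"python"` returns both URLs, while `"python", "tutorial"` narrows it to the first. Because classification comes from many users' tags rather than a fixed taxonomy, the index grows as people use it.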


Top 10 Python Libraries for Machine Learning

With the growing markets for smart products such as autopilot cars, the ML industry is on the rise. Machine learning is also one of the most prominent cost-cutting tools in almost every industry sector today.

ML libraries are available in many programming languages, but Python, being the most user-friendly and easy-to-manage language and having a large developer community, is best suited for machine learning, which is why many ML libraries are written in Python.

Also, Python works seamlessly with C and C++, so libraries already written in C/C++ can easily be extended to Python. In this tutorial, we discuss the most useful machine learning libraries in the Python programming language.

1. TensorFlow:

Website: https://www.tensorflow.org/

GitHub Repository: https://github.com/tensorflow/tensorflow

Developed By: Google Brain Team

Primary Purpose: Deep Neural Networks

TensorFlow is a library developed by the Google Brain team for the primary purpose of Deep Learning and Neural Networks. It allows easy distribution of work onto multiple CPU cores or GPU cores, and can even distribute the work to multiple GPUs. TensorFlow uses Tensors for this purpose.

Tensors can be defined as containers that store N-dimensional data along with their linear operations. Although TensorFlow is production-ready and supports reinforcement learning as well as neural networks, it is not commercially supported, which means bugs or defects can be resolved only with community help.

2. NumPy:

Website: https://numpy.org/

Github Repository: https://github.com/numpy/numpy

Developed By: Community Project (originally authored by Travis Oliphant)

Primary purpose: General Purpose Array Processing

Built on top of an older library, Numeric, NumPy is used for handling multi-dimensional data and complex mathematical functions. NumPy is a fast computational library that can handle tasks ranging from basic algebra to Fourier transforms, random simulations, and shape manipulation. Its core is written in C, which gives it an edge over Python's built-in sequences.

NumPy arrays are better than pandas Series in terms of indexing, and NumPy works better when the number of records is below 50k. NumPy operations run on a single CPU, which can make processing slower than newer alternatives like TensorFlow, Dask, or JAX. Still, NumPy is very easy to learn, and it is one of the most popular libraries for entering the machine learning world.
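A minimal sketch of the array work described above: an N-dimensional array, vectorised arithmetic, shape manipulation, a matrix product, and a Fourier transform. It assumes NumPy is installed (`pip install numpy`); the values are arbitrary examples.

```python
import numpy as np

# A 2x3 array, one of NumPy's N-dimensional containers.
a = np.arange(6).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]

# Vectorised arithmetic: the loop runs in compiled code, not in Python.
doubled = a * 2                  # [[0, 2, 4], [6, 8, 10]]

# Shape manipulation: transpose the 2x3 array into a 3x2 array.
transposed = a.T

# Basic linear algebra: (2x3) @ (3x2) gives a 2x2 matrix.
gram = a @ a.T                   # [[5, 14], [14, 50]]

# Discrete Fourier transform of a short signal.
signal = np.array([1.0, 0.0, -1.0, 0.0])
spectrum = np.fft.fft(signal)    # approximately [0, 2, 0, 2]
```

Each of these would take an explicit Python loop with plain lists; with NumPy, they are single expressions over whole arrays.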

3. Natural Language Toolkit (NLTK):

Website: https://www.nltk.org/

Github Repository: https://github.com/nltk/nltk

Developed By: Team NLTK

Primary Purpose: Natural Language Processing

NLTK is a widely used library for text classification and natural language processing. It performs word stemming, lemmatization, tokenization, and keyword search in documents. The library can further be used for sentiment analysis, understanding movie and food reviews, text classification, detecting and censoring vulgar words in comments, text mining, and many other human-language operations.

The wider scope of its uses includes AI-powered chatbots, which need text processing to train their models to recognize and generate sentences, an ability that will be important for machine-human interaction in the future.

4. Pandas:

Website: https://pandas.pydata.org/

Github Repository: https://github.com/pandas-dev/pandas

Developed By: Community Developed (Originally Authored by Wes McKinney)

Primary Purpose: Data Analysis and Manipulation

The library is written in Python, Cython, and C and is used for manipulating numerical data and time series. It uses DataFrames and Series to represent two-dimensional and one-dimensional data, respectively. It also provides indexing options for quick searches in large datasets. It is well known for its capabilities in data reshaping, pivoting on user-defined axes, handling missing data, merging and joining datasets, and filtering data. Pandas is very useful and very fast with large datasets; its speed exceeds that of NumPy when there are more than 50k records.

It is the best library for data cleaning because it offers Excel-like interactivity with NumPy-like speed. It is also one of the few ML libraries that can handle DateTime data without help from any external library, and with a bare minimum of code. Since the most significant parts of data analysis and ML are data cleaning, processing, and analysis, Pandas helps very effectively.
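The following minimal sketch exercises the features listed above on a small, made-up table: handling missing data, merging, filtering, pivoting, and built-in datetime handling. It assumes pandas is installed; the column names and values are hypothetical.

```python
import pandas as pd

# Hypothetical sales records; store B's first count is missing.
sales = pd.DataFrame({
    "date": pd.to_datetime(["2021-01-01", "2021-01-01", "2021-01-02", "2021-01-02"]),
    "store": ["A", "B", "A", "B"],
    "units": [10, None, 7, 5],
})

# Handle missing data: treat a missing unit count as zero.
sales["units"] = sales["units"].fillna(0)

# Merge with a second table of store metadata (left join keeps every sale).
stores = pd.DataFrame({"store": ["A", "B"], "region": ["North", "South"]})
merged = sales.merge(stores, on="store", how="left")

# Filter rows: keep only records that actually sold something.
busy = merged[merged["units"] > 0]

# Pivot: one row per date, one column per store.
table = merged.pivot(index="date", columns="store", values="units")

# Built-in datetime handling through the .dt accessor, no extra library needed.
merged["weekday"] = merged["date"].dt.day_name()
```

Each step is a single method call, which is the "bare minimum of code" the paragraph refers to.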

5. Scikit-Learn:

Website: https://scikit-learn.org/

Github Repository: https://github.com/scikit-learn/scikit-learn

Developed By: Community Project (originally started by David Cournapeau)

Primary Purpose: Predictive Data Analysis and Data Modeling

Scikit-learn focuses mostly on data modeling concepts like regression, classification, clustering, model selection, etc. The library is built on top of NumPy, SciPy, and Matplotlib. It is an open-source, commercially usable library that is also very easy to understand.

It integrates easily with other libraries, such as NumPy and Pandas for analysis and Plotly for plotting data as graphs for visualization. The library supports both supervised and unsupervised learning.

6. Keras:

Website: https://keras.io/

Github Repository: https://github.com/keras-team/keras

Developed By: various Developers, initially by Francois Chollet

Primary purpose: Focused on Neural Networks

Keras provides a Python interface to the TensorFlow library, focused especially on AI neural networks. Earlier versions also supported other backends such as Theano, Microsoft Cognitive Toolkit, and PlaidML.

Keras contains standard building blocks for commonly used neural networks, as well as tools that make image and text processing faster and smoother. Apart from these standard blocks, it also provides recurrent neural networks.

7. PyTorch:

Website: https://pytorch.org/

Github Repository: https://github.com/pytorch/pytorch

Developed By: Facebook AI Research lab (FAIR)

Primary purpose: Deep learning, Natural language Processing, and Computer Vision

PyTorch is a Facebook-developed ML library based on the Torch library (an open-source ML library written in the Lua programming language). The project is written in Python, C++, and CUDA. Along with Python, PyTorch has extensions in both C and C++. It is a competitor to TensorFlow, as both libraries use tensors, but it is easier to learn and integrates better with Python. Although it supports NLP, the library's main focus is on developing and training deep learning models.

8. MlPack:

Github Repository: https://github.com/mlpack/mlpack

Developed By: Community, supported by Georgia Institute of Technology

Primary purpose: Multiple ML Models and Algorithms

MlPack is a mostly C++-based ML library with bindings to Python and other languages, including R, Julia, and Go. It is designed to support almost all well-known ML algorithms and models, such as GMMs, k-means, least angle regression, linear regression, etc. The main emphasis in developing this library was to make it fast, scalable, and easy to understand and use, so that even a coder new to programming can use it without any problem. It is released under a BSD license, so it can be used in both open-source and proprietary software as needed.

9. OpenCV:

Website: https://opencv.org/

Github Repository: https://github.com/opencv/opencv

Developed By: initially by Intel Corporation

Primary purpose: Computer Vision

OpenCV is an open-source library dedicated to computer vision and image processing. It has more than 2,500 algorithms for computer vision and ML. It can track human movements, detect moving objects, extract 3D models, stitch images together to create a high-resolution image, and explore AR possibilities.

It is used in various CCTV monitoring activities by many governments, especially in China and Israel. Also, major camera companies around the world use OpenCV to make their technology smart and user-friendly.

10. Matplotlib:

Website: https://matplotlib.org/

Github Repository: https://github.com/matplotlib/matplotlib

Developed By: John D. Hunter (original author), Michael Droettboom, Community

Primary purpose: Data Visualization

Matplotlib is a Python library for graphical representation, used to understand data before moving on to data processing and training for machine learning. It provides an object-oriented API for embedding plots into applications built with general-purpose GUI toolkits.

Matplotlib also provides a MATLAB-like interface (pyplot), so users can perform tasks similar to those in MATLAB. The library is free and open-source, and many extension toolkits build on the Matplotlib API.

Conclusion:

In this blog, you learned about the best Python libraries for machine learning. Every library has its own strengths and weaknesses. Weigh these before selecting a library for a machine learning task, and check each model's accuracy after training and testing so that you choose the best model in the best library for your task.


Group Buy Seo Tools

Most SEO practitioners provide onsite optimization, with 91% of SEOs practicing it. Google+ optimization is also widely offered: 86% of SEOs said they provide this service to their clients. Only a small percentage (11%) of SEOs offer affiliate marketing to their clients.

Other services provided by SEOs:

Content creation/optimization

Link building

Citation building

Social media marketing

Website development

PPC

Mobile site development

Video marketing and mobile advertising

The service most demanded by SEO clients is onsite optimization, while the least demanded is affiliate marketing. The other services are in moderate demand. However, most small and medium businesses do not understand the opportunities that video and mobile marketing can unlock for them, and therefore tend not to use these services. SEOs therefore need to educate their clients about these marketing channels so that they can opt for them.

When asked which online marketing services they find effective, 82% of SEOs said general search is the most reliable for generating leads. Local search appears to be second-best, with 62% of the respondents in an SEO survey voting in its favor. PPC is third with 53%, while social media is fourth with 26%. Daily deals interest few SEOs: only 1% voted in their favor.

Laborious SEO Tasks

55% of today's SEOs find link building to be the most tedious and time-consuming task. By contrast, only 15% think content writing is the most tiresome and time-consuming of all the tasks they do. It therefore appears that, for the 55% who find link building laborious, the task is also boring.

Future Projections

This is a summary of what we expect in the near future as far as the SEO business is concerned.

Growth of SEO Businesses

Compared to previous years, SEO businesses have been growing and expanding steadily. Most SEOs (about 93%) are not only optimistic about their businesses but also expect to grow them. In what seems to be a determined move to expand, most SEOs (82%) want to hire more staff to reach their desired levels of growth.

Social media is also expected to grow and become even more effective than local directories. This is because many SEOs believe social networks perform better thanks to referrals from friends. Mobile usage is also expected to grow further and become relevant for local businesses.

The Upsurge of Confidence in SEO Services

Unlike other industries, the SEO business is showing growing confidence among its players. Although this is a dynamic and innovative sector, most SEOs are still ready to hire more staff to help drive their businesses forward. 84% of the SEOs surveyed in the SEO study are also confident that the SEO business will be more profitable starting this year, 2013. Confidence is also running high that if SEO players raise awareness of SEO/social among the business owners they serve, they will not only boost those owners' return on investment but also lead them to move their marketing budgets from other channels to digital channels. Read more about cheap SEO tools.

1. Google Analytics

Google Analytics is to the online marketer what air is to a person. You can't survive without it.

If you're not yet using Google Analytics, I suggest you start as soon as possible. No other data source provides such high-quality, detailed data as Google Analytics. In a Google-dominated era, it's what we need to work with. Most other data tools essentially piggyback on Google's data through the API.

Appraisal

You can't ignore Google Analytics. Virtually all the important data about site visitors, traffic, and so on comes from this basic source.

More Information

Look at a discussion of how Analytics helps drive high-converting search traffic.

Learn a few Google Analytics reports that can help generate organic traffic.

2. Google Search Console

If Google Analytics is the air an online marketer breathes, then Google Search Console (formerly Webmaster Tools) is the food the online marketer eats.

"Webmaster" in the previous name was something of a misnomer. The tool is for more than webmasters; it's for SEOs. The very core of the tool is about "search" and "optimization."

Some of GSC's best insights come from simply looking at the dashboard. SEOs and marketers need to keep an eye on the dashboard all the time. Google Search Console provides some of the same data as Analytics, but in a simpler and more direct way. There's less tweaking you can do with the numbers, but easier access to them.

3. Ubersuggest

Ubersuggest is an all-in-one SEO tool designed to help you win the game of SEO. A big part of SEO success is knowing what you're doing, what your competitors are doing, and how to adjust your strategy to take advantage of openings in the market.

Getting started with Ubersuggest is as simple as typing a domain or keyword into the search bar. From there, you get instant access to a report for your chosen domain or keyword. For this post, I want to focus on Ubersuggest's keyword search capabilities. It's a great alternative to the Google Keyword Planner tool, which has lost some of its luster over the years. After running a search for your primary keyword, here's how you can use Ubersuggest to uncover related keywords to include in your content.

Step #1: Click "Keyword Ideas"

Located in the left sidebar, "Keyword Ideas" takes you to a comprehensive report.

4. MozBar

Data is the name of the game with the MozBar. When you think about it, SEO has a lot to do with knowing the secret sauce, and the MozBar helps you find it at a glance. The MozBar button sits right in your browser toolbar, where all the action happens. Click the MozBar, and you generate an instant report on the site you're visiting.

5. SEO Workers Analysis Tool

The Analysis Tool lets you plug in a site URL (any site, even if it's not yours) and generate a report. SEO Workers doesn't provide anything new, but it saves a lot of time in the analysis. It also provides background information on the data. Some of the data, like the HTTP Headers Check, is under-the-hood stuff you wouldn't normally think to check. Here is SEO Workers' unique feature: they teach you SEO in addition to showing you the SEO data that matters. Within each analyzed category, the report provides a detailed discussion, and often a video (ft. Matt Cutts).

6. WooRank

At first, WooRank appears to be just another freemium site analyzer. On closer examination, however, it provides handy insights into some not-so-obvious things. WooRank first shows a "Main concerns" section. Unfortunately, here's one of the tool's drawbacks: somehow, it thinks I have a 404 error, lack a favicon, and don't have a robots.txt file. These are incorrect. Read more about Group Buy SEO tools.

7. Neil Patel SEO Analyzer

I'm assuming you're already aware of my free site analyzer. I believe it's worth mentioning, however, as a final free tool at your disposal. One feature I've worked hard on is the competitive analysis. Part of the success of online marketing is knowing how your competitors are doing.

1 note

·

View note

Text

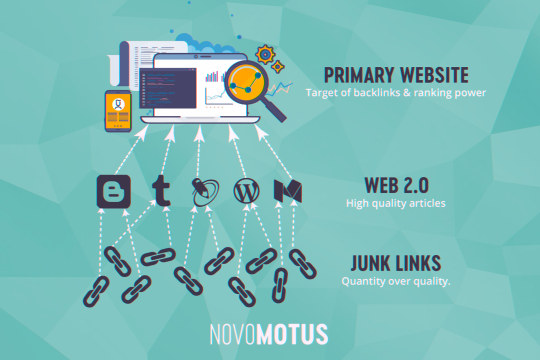

The Next 5 Things You Should Do For Web 2.0 Sites Success

8 Guilt Free Benefits Of Using Web 2.0 Tips


At DBurns Style, we're an internet marketing company focused on delivering results for our clients. Recently, we worked with a large chemical supply company called Shepard Bros. They've been in business for over 40 years and thought it was time to redesign their website. We know that a company website is very important.

With that in mind, we began redesigning their website with the goal of creating something that generated more traffic from different sources. One of the keys to this successful SEO project was web 2.0 link building. If you don't know what this is, let us explain in this article.

To round off, we'll show you how we used this strategy in the Shepard Bros case. Link building is one of the oldest SEO tactics around and is all about building backlinks to a site. The more links you have pointing people back to a website, the higher it will rank.


The value of this SEO method is that it allows you to build links on sites with high domain authority. All web 2.0 sites have this, which means you'll get backlinks from authority websites like Twitter, Facebook, and so on. When a site has high-authority backlinks, they only serve to boost it up the rankings.

The key idea of web 2.0 link building is that you create your own web 2.0 sites to build links from. You can set up blogs on different platforms like WordPress or Tumblr, produce unique content, and link back to the target website naturally. There are many benefits to this method, and we have listed the key ones below. Cost-effective: web 2.0s are completely free to create, which makes this technique very easy on your budget.

Don't Just Sit There! Start Getting More Using Web 2.0


Safe: web 2.0 link building is completely safe and follows all of Google's link building rules, meaning you'll never get penalized. Multiple links: perhaps the best advantage of web 2.0 link building is that you can link to your site many times across different authority websites. You can also link to it on the same website, just in a different piece of content.

At DBurns Style, we used it as best we could to help Shepard Bros build a new and better website. To do this, we began by structuring the site so that each page targets its own set of highly relevant keywords. Then, through web 2.0 link building, we made the most of these keywords.

We worked keywords into the content, making it look natural from a user's point of view. We made sure to link the keywords, thereby creating high-authority backlinks to the Shepard Bros website. The more links we built, the further the website rose in the rankings. After just four months, we saw some remarkable website statistics that showed the success of our SEO campaign: site sessions increased by 45.08%; users increased by 18.77%; page views increased by 42.15%; session duration increased by 37.5%; organic traffic increased by 29%; direct traffic increased by 50%; referral traffic increased by 85%; and social traffic increased by 344%. While SEO is a long-term strategy, the fact that we saw such great data after just four months speaks volumes about the effectiveness of our work.


5 Tips To Reinvent Your Search Engine Optimization And Win

"Terrific service from Paco - helped us get a brand-new launch with widely used words in the title onto the first page of Google, beyond our expectations. Will definitely use again." Reviewed by siazzah

msshaikh: "Great. Highly recommended."

cityquick: "5 Star service as always." "Fantastic service, eagerly anticipating the results." "Great service, looking forward to the results." "Fantastic and fast service, I look forward to the results :-)"

harrywalker: "As always, Paco - a 5 star rated service, always on time and more than helpful. Would recommend to anyone looking for a reliable company - get this one!"

It's common knowledge that Web 2.0 backlinks are an important part of any (effective) Private Blog Network, right? Web 2.0 backlinks are not only FREE, but also super helpful when trying to increase your PBN's authority and link juice. Despite all this goodness, it's still very hard to find good information on how to set up a successful Web 2.0 PBN link farm.

Look: Web 2.0s are simple; they are profiles created on free subdomains with plenty of quality posts. How? Here is an example: I built this Web 2.0 last year for a small project; it ranked pretty well for my desired keyword... I have since taken the project down, as I am rebuilding the platform on Node.js.

Glad you asked! Lately, I have been experimenting a lot with Web 2.0s, and the following checklist works best for me: publish a minimum of 10 and a maximum of 20 posts per Web 2.0 over a period of 3-4 months. Build each Web 2.0 blog very slowly, grow it, and make it look like a genuine blog.

Target LSI and long-tail keywords; you may naturally rank for a number of terms. The organic traffic will give your blog more authority and make it look natural. Set up the required social media profiles. Upload a video to YouTube; you can make a quick video using Animoto (I started adding videos to my Web 2.0 blogs earlier this year).

5 Things You Have In Common With Link Building

You can use a Web 2.0 blog network in two possible ways: 1) Use it as Tier 1 (buffer sites). Buffer sites? Here is the definition: a buffer site acts as an intermediary between your money site and your private blog network. Why do we need this? If your link building strategy includes links from other high-authority blog networks (owned by someone else), it's a good idea to use a buffer site.


2) Use it as Tier 2. Look: the above image speaks a million words! Numerous Web 2.0 blogs are created that point to your Tier 1 links. If you plan to use the above setup, make sure to spam your Web 2.0s with GSA Search Engine Ranker to pass as much link juice to them as possible.

Before we start finding the desired Web 2.0 domains, here is what we will need. I will look for some quality expired Web 2.0s in the hosting niche. First, we need a few keywords to start with. For this tutorial, we will use Ubersuggest. You can also use Keywordtool.io; their Pro Plus version is excellent but comes with a price tag of $88/month, which can be pricey if you want to keep your costs low.

The above number of keywords is good enough to get us going. Now, click View As Text and copy all the keywords. Next, my favourite fun part begins! Head over to your Windows VPS and start ScrapeBox, then load your proxies and paste your keywords from Ubersuggest into the ScrapeBox keyword list field. Once everything is set as in the above image, hit the Start Harvesting button and you will see this: select Google, Bing, MyWebSearch, and DuckDuckGo. You can experiment and choose others.

I highly recommend checking out my parent company's official ScrapeBox-approved Windows VPS to speed up the scraping process. Before you proceed further, let's trim the URLs and remove the duplicates. Once the process is finished, click Addons > Show Available Addons. Now download the ScrapeBox Alive Check addon. Click Addons > ScrapeBox Alive Check, and you will be greeted with the above screen.
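Outside ScrapeBox, the "trim the URLs and remove the duplicates" step can be approximated in a few lines of standard-library Python. This is a hypothetical sketch of the idea (reduce each harvested URL to its root and keep the first occurrence of each), not ScrapeBox's own logic; `trim_to_root` is an illustrative name.

```python
from urllib.parse import urlsplit


def trim_to_root(urls):
    """Reduce each URL to scheme://host/ and drop duplicates, keeping first-seen order."""
    seen = set()
    roots = []
    for url in urls:
        parts = urlsplit(url.strip())
        if not parts.scheme or not parts.netloc:
            continue  # skip lines that are not absolute URLs
        # Host names are case-insensitive, so lowercase them before comparing.
        root = f"{parts.scheme}://{parts.netloc.lower()}/"
        if root not in seen:
            seen.add(root)
            roots.append(root)
    return roots
```

Keeping a `set` for membership tests alongside a `list` for output preserves the harvest order while making each duplicate check constant time.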


Next, click Setup and set the success code to 404, as shown in the screenshot above. Remove everything else! Check Use Proxies! Hit the START button and get some popcorn while the process completes. The addon checks whether each scraped domain still exists; if it does NOT, we have found a gold mine in our niche.
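The idea behind the Alive Check step can be sketched in Python as follows. The sketch assumes a plain HTTP HEAD request made without proxies, whereas the addon can route checks through your proxies and this code does not. A 404 is treated as the "success" case: the blog is gone, so its name may be free to register. The function names are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Optional


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status code for url, or None if the host could not be reached."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses are raised as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def dead_blogs(statuses: dict[str, Optional[int]]) -> list[str]:
    """Keep only URLs that answered 404, i.e. Web 2.0 blogs that no longer exist."""
    return [url for url, status in statuses.items() if status == 404]


# Usage (performs real network requests; root_urls is your trimmed list):
# statuses = {url: http_status(url) for url in root_urls}
# print(dead_blogs(statuses))
```

Some platforms may answer a missing blog with a redirect or a custom page rather than a 404, so it is worth spot-checking the results by hand.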

Click Check Indexed and then select Google Indexed. You will now see the screen below. Once you are done, export the list. Next, go to Addons -> Show Available Addons and download the Scrapebox Vanity Name Checker. Download that addon and open it from the Addons tab. Now load the URLs from the Harvester and click START. Wait.

Lastly, let's check the Domain Authority and Page Authority for our list of domains. We will use CheckMoz as our bulk domain stats checker. Paste all the URLs you just gathered with Scrapebox into CheckMoz and click the FIND MOZ METRICS button. Here are the results: out of all that work, we found six good Web 2.0 blogs with great stats that we can restore on Tumblr.
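Once CheckMoz has returned the metrics, shortlisting is a simple filter-and-sort. The sketch below assumes you have the results as (URL, DA, PA) rows. The thresholds `min_da=20` and `min_pa=25` and the sample rows are made-up values for illustration, not recommendations from this tutorial.

```python
from typing import NamedTuple


class DomainStats(NamedTuple):
    url: str
    domain_authority: int
    page_authority: int


def shortlist(rows: list[DomainStats], min_da: int = 20, min_pa: int = 25) -> list[DomainStats]:
    """Keep rows that meet both (hypothetical) thresholds, highest Domain Authority first."""
    keep = [r for r in rows if r.domain_authority >= min_da and r.page_authority >= min_pa]
    return sorted(keep, key=lambda r: (r.domain_authority, r.page_authority), reverse=True)


rows = [
    DomainStats("https://blog-a.tumblr.com/", 35, 30),  # hypothetical metrics
    DomainStats("https://blog-b.tumblr.com/", 12, 28),
    DomainStats("https://blog-c.tumblr.com/", 41, 22),
    DomainStats("https://blog-d.tumblr.com/", 27, 33),
]
for row in shortlist(rows):
    print(row.url, row.domain_authority, row.page_authority)
# https://blog-a.tumblr.com/ 35 30
# https://blog-d.tumblr.com/ 27 33
```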

You can repeat the process with other Web 2.0 platforms. Before you begin the setup process, make sure to grab .NET Framework 4.0. Once you start the software, you will be greeted with the following screen. (P.S. I am using their Pro version for this tutorial.) Next, click the Web 2.0 Hunter button and you will be greeted with the following screen. First, let's look at a few settings before starting the software.

Here, we will select all the search options and leave the custom date range unchecked. Next, click the Setup and Help tab at the top, import your proxy files and use them as your main proxies. Make sure to check the Auction Searching, Quality Checking, Searching, and Expired Domain and Web 2.0 Checking options.

#Asia Virtual Solutions#web2.0 backlinks#Search Engine Optimization#web 2.0#Web 2.0 Backlinks#web 2.0 sites#Benefits Of Using Web 2.0#using web 2.0#link building


The coronavirus outbreak is taking over headlines. Due to the spread of COVID-19, remote work is suddenly an overnight requirement for many. You might be working from home as you are reading this article.

With millions working from home for many weeks now, we should seize this opportunity to improve our skills in the domain we are focusing on.

Here is my strategy for learning data science while working from home, with a few personal, real-life projects.

"So what should we do?"

"Where should we start learning?"

Grab your coffee as I explain the process of how you can learn data science sitting at home. This blog is for everyone, from beginners to professionals.


Prerequisites

To start this journey, you will need to cover some prerequisites. Whichever specific field you are in, you will need to learn the following for data science.

Logic/Algorithms:

It’s important to know why we need a particular prerequisite before learning it. An algorithm is a set of step-by-step instructions given to a computer to make it perform a specific task.

Machine learning is built on many complex algorithms, so you need to understand how algorithms and logic work at a basic level before jumping into the complex algorithms used in machine learning.

If you can write the logic for a given puzzle as a clear sequence of steps, it will be easy for you to understand how these algorithms work, and you will be able to write your own.

Resources: Some awesome free resources to learn data structures and algorithms in depth.
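As a small illustration of writing step-by-step logic for a puzzle, here is a Python sketch of the classic "guess the number" puzzle (1 to 100) solved with binary search. The puzzle and the function name `guess_number` are our own example, not taken from the linked resources.

```python
def guess_number(secret: int, low: int = 1, high: int = 100) -> list[int]:
    """Find `secret` in [low, high] by binary search, returning every guess made."""
    guesses = []
    while low <= high:
        mid = (low + high) // 2  # always guess the middle of the remaining range
        guesses.append(mid)
        if mid == secret:
            return guesses
        if mid < secret:
            low = mid + 1  # secret is higher: discard the lower half
        else:
            high = mid - 1  # secret is lower: discard the upper half
    raise ValueError("secret is outside the range")


print(guess_number(37))  # [50, 25, 37]
```

Each wrong guess halves the remaining range, so no secret between 1 and 100 needs more than 7 guesses (2^7 = 128 ≥ 100).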

Statistics:

Statistics is a collection of tools that you can use to get answers to important questions about data.

Machine learning and statistics are two tightly related fields of study, so much so that some statisticians refer to machine learning as “applied statistics” or “statistical learning”.


The following topics should be covered by aspiring data scientists before they start machine learning.

Measures of Central Tendency — mean, median, mode, etc

Measures of Variability — variance, standard deviation, z-score, etc

Probability — probability density function, conditional probability, etc

Accuracy — true positive, false positive, sensitivity, etc

Hypothesis Testing and Statistical Significance — p-value, null hypothesis, etc

Resources: Learn college-level statistics in this free 8-hour course.
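Several of the measures listed above can be computed with Python's built-in `statistics` module. The sketch uses population (not sample) variance. The data values and the confusion-matrix counts are made up for illustration.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]

# Measures of central tendency
mean = statistics.mean(data)      # 5
median = statistics.median(data)  # 4.5
mode = statistics.mode(data)      # 4

# Measures of variability (population versions)
variance = statistics.pvariance(data)  # 4
std_dev = statistics.pstdev(data)      # 2.0
z_scores = [(x - mean) / std_dev for x in data]  # distance from the mean in standard deviations

# Accuracy measures from hypothetical confusion-matrix counts
tp, fp, fn, tn = 40, 10, 5, 45
sensitivity = tp / (tp + fn)                # true positive rate, about 0.889
precision = tp / (tp + fp)                  # 0.8
accuracy = (tp + tn) / (tp + fp + fn + tn)  # 0.85

print(mean, median, mode, variance, std_dev)
print(z_scores)
print(round(sensitivity, 3), precision, accuracy)
```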

Business:

This depends on which domain you want to focus on. It involves understanding that domain and building domain expertise before you get into a data science project. This is important because it helps you define the problem accurately.

Resources: Data science for business

Brush up your basics

This sounds pretty easy, but we tend to forget some important basic concepts. Without a solid foundation in the basics, it is difficult to learn more complex concepts and the latest technologies in a specific domain.

Here are a few concepts you can start revising:

Python programming language

Python is widely used in data science. Check out this collection of great Python tutorials and these helpful code samples to get started.


You can also check out this Python 3 cheatsheet, which will help you learn the new syntax introduced in Python 3 and brush up on the basic syntax.

And if you want a great free course, check out this Python for Everybody course from Dr. Chuck.

General data science skills

Want to take a great course on data science concepts? Here is a collection of online data science courses, ranked using thousands of data points.

Resources: Data science for beginners - free 6-hour course, What languages should you learn for data science?

Data Collection

Now it is time for us to explore all the ways you can collect your data. You never know where your data might be hiding. Following are a few ways you can collect your data.

Web scraping

Web scraping lets you gather structured data from the web, select the parts you need, and keep them for whatever use you require.

You can start by learning BeautifulSoup4, which helps you scrape websites and build your own datasets.

Advanced tip: You can use Selenium to automate browsers and get data from interactive web pages, such as pages that load their content from Firebase. Selenium is useful for automating web applications for testing, as well as boring web-based administration tasks.

Resources: Web Scraping 101 in Python
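BeautifulSoup4 is the library suggested above. To keep this sketch dependency-free, it instead uses Python's built-in `html.parser` module to show the core idea of scraping: walk the HTML and keep only the pieces you care about, here every link and its text. The HTML snippet and the `LinkCollector` class are made up for illustration. With BeautifulSoup you would typically reach the same tags with `find_all("a")`.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> tag we are currently inside, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)  # text inside the link, including nested tags

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


page = '<ul><li><a href="/a">First</a></li><li><a href="/b">Second <b>item</b></a></li></ul>'
parser = LinkCollector()
parser.feed(page)
print(parser.links)  # [('/a', 'First'), ('/b', 'Second item')]
```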

Cloud servers

If your data is stored on cloud servers such as Amazon S3, you will need to get familiar with retrieving it from there. The following resources will help you get started with Amazon S3.

Resources: Getting started with Amazon S3, How to deploy your site or app to AWS S3 with CloudFront

APIs

Many websites, such as Facebook and Twitter, provide data through APIs, so it is important to learn how APIs are used and to have a good idea of how they are implemented.

Resources: What is an API? In English, please, How to build a JSON API with Python, and Getting started with Python API.
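To see both sides of a JSON API at once, here is a minimal sketch that uses only Python's standard library. It runs a tiny local HTTP server exposing one hypothetical `/status` endpoint, plus a client function that calls the endpoint and decodes the JSON. Real APIs such as Twitter's add authentication, rate limits and their own URL schemes, none of which is modelled here.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen


class StatusHandler(BaseHTTPRequestHandler):
    """Serve a single hypothetical endpoint, GET /status, that returns JSON."""

    def do_GET(self):
        if self.path != "/status":
            self.send_error(404)
            return
        body = json.dumps({"status": "ok", "items": 3}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def fetch_json(url: str) -> dict:
    """Call a JSON API endpoint and decode the response body."""
    with urlopen(url, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


def start_server() -> HTTPServer:
    """Start the server on a free local port (port 0) in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), StatusHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_server()
    port = server.server_address[1]
    print(fetch_json(f"http://127.0.0.1:{port}/status"))  # {'status': 'ok', 'items': 3}
    server.shutdown()
    server.server_close()
```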

Data Preprocessing

This topic covers everything from data cleaning to feature engineering. It takes a lot of time and effort, so we need to dedicate plenty of time to learning it properly.


Data cleaning involves different techniques depending on the problem and the data type. The data needs to be cleaned of irrelevant entries, syntax errors, inconsistencies and missing values. The following guide will get you started with data cleaning.

Resources: Ultimate guide to data cleaning
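A few of the cleaning steps just described can be sketched in plain Python: fixing inconsistencies, removing duplicates, and handling missing or invalid values. The records are made up. Filling missing ages with the median of the known ages is one common choice, not the only correct one. In practice a library such as pandas is typically used for this work.

```python
from statistics import median

raw = [
    {"name": " Alice ", "age": "34", "city": "delhi"},
    {"name": "Bob", "age": "", "city": "Mumbai"},
    {"name": "alice", "age": "34", "city": "Delhi"},     # duplicate once normalized
    {"name": "Carol", "age": "thirty", "city": "PUNE"},  # invalid age
    {"name": "Dave", "age": "28", "city": "Chennai"},
]


def clean(records: list[dict]) -> list[dict]:
    seen = set()
    cleaned = []
    for rec in records:
        # Fix inconsistencies: stray whitespace and mixed casing.
        name = rec["name"].strip().title()
        city = rec["city"].strip().title()
        # Treat empty or non-numeric ages as missing.
        try:
            age = int(rec["age"])
        except ValueError:
            age = None
        key = (name, age, city)
        if key in seen:  # drop rows that are duplicates after normalization
            continue
        seen.add(key)
        cleaned.append({"name": name, "age": age, "city": city})

    # Fill missing ages with the median of the known ages.
    fill = median(r["age"] for r in cleaned if r["age"] is not None)
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill
    return cleaned


for row in clean(raw):
    print(row)
```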

Data Preprocessing is an important step in which the data gets transformed, or encoded, so that the machine can easily parse it. It requires time as well as effort to preprocess different types of data which include numerical, textual and image data.

Resources: Data Preprocessing: Concepts, All you need to know about text preprocessing for NLP and Machine Learning, Preprocessing for deep learning.
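As a small illustration of turning raw values into numbers a model can parse, here are three common preprocessing steps in plain Python. They cover one-hot encoding a categorical column, min-max scaling a numerical column to the range [0, 1], and a deliberately simple lowercase-and-split tokenizer for text. The function names and inputs are our own examples. Libraries such as scikit-learn provide hardened versions of these transforms.

```python
import re


def one_hot(values: list[str]) -> tuple[list[str], list[list[int]]]:
    """Encode each category as a vector with a single 1 in that category's column."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def min_max_scale(values: list[float]) -> list[float]:
    """Rescale numbers linearly so the minimum maps to 0.0 and the maximum to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid dividing by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep runs of letters and digits as tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


print(one_hot(["red", "green", "red"]))      # (['green', 'red'], [[0, 1], [1, 0], [0, 1]])
print(min_max_scale([10, 20, 30]))           # [0.0, 0.5, 1.0]
print(tokenize("Data Science, from HOME!"))  # ['data', 'science', 'from', 'home']
```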

Machine Learning

Finally, we reach our favourite part of data science: Machine Learning.


My suggestion here would be to first brush up on the basic algorithms.

Classification — Logistic Regression, RandomForest, SVM, Naive Bayes, Decision Trees

Resources: Types of classification algorithms in Machine Learning, Classification Algorithms in Machine Learning
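To make the first of these concrete, here is a from-scratch sketch of logistic regression for a single feature, trained with batch gradient descent on the log-loss. The feature is standardized first so plain gradient descent behaves well. The toy data (hours studied versus pass/fail), learning rate and epoch count are made up. Libraries such as scikit-learn implement this with better optimizers and regularization.

```python
import math
from statistics import mean, pstdev


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(xs, ys, lr=0.1, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w * x_std + b) by batch gradient descent on the log-loss.

    Returns a function that maps a raw x to the predicted probability of class 1.
    """
    mu, sigma = mean(xs), pstdev(xs)
    zs = [(x - mu) / sigma for x in xs]  # standardized feature
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for z, y in zip(zs, ys):
            error = sigmoid(w * z + b) - y  # derivative of the log-loss w.r.t. the logit
            grad_w += error * z
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return lambda x: sigmoid(w * (x - mu) / sigma + b)


hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 0, 1, 1, 1, 1]
predict_proba = train_logistic_regression(hours, passed)
print([int(predict_proba(x) >= 0.5) for x in hours])  # [0, 0, 0, 0, 1, 1, 1, 1]
```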

Regression — Linear Regression, RandomForest, Polynomial Regression

Resources: Introduction to Linear Regression, Use Linear Regression models to predict quadratic, root, and polynomial functions, 7 Regression Techniques you should know, Selecting the best Machine Learning algorithm for your regression problem
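For regression, the simplest case is ordinary least-squares linear regression with one feature, which has a closed-form solution. The slope is Σ(x - x̄)(y - ȳ) / Σ(x - x̄)², and the intercept is ȳ - slope·x̄. The sketch below implements this formula. It also shows that feeding the model x² instead of x lets a linear model fit a quadratic relationship. The data points are made up.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


xs = [1, 2, 3, 4]
print(fit_line(xs, [3, 5, 7, 9]))                    # (2.0, 1.0): y = 2x + 1
print(fit_line([x * x for x in xs], [1, 4, 9, 16]))  # (1.0, 0.0): y = 1 * x^2 + 0
```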

Clustering — K-Means Clustering, DBSCAN, Agglomerative Hierarchical Clustering

Resources: Clustering algorithms
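K-means, the first clustering algorithm listed, alternates two steps until nothing changes. First it assigns every point to its nearest centroid, then it moves each centroid to the mean of its points. This plain-Python sketch initializes the centroids with the first k points so results are reproducible. Common implementations use random or k-means++ initialization instead. The 2-D points are made up.

```python
import math


def kmeans(points, k, max_iter=100):
    """Cluster points into k groups with Lloyd's algorithm (the standard k-means loop)."""
    centroids = [tuple(p) for p in points[:k]]  # simple deterministic initialization
    labels = []
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for every point.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                new_centroids.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep the old centroid if a cluster empties
        if new_centroids == centroids:  # converged: assignments will not change again
            break
        centroids = new_centroids
    return labels, centroids


points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (9, 8.5), (8.5, 9)]
labels, centroids = kmeans(points, 2)
print(labels)     # [0, 0, 0, 1, 1, 1]
print(centroids)
```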

Gradient Boosting — XGBoost, Catboost, AdaBoost

Resources: Gradient boosting from scratch, Understanding Gradient Boosting Machines
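The core idea of gradient boosting for regression with squared-error loss fits in a few lines. The model starts by predicting the mean. It then repeatedly fits a small tree (here a one-split "stump") to the current residuals, which are the negative gradient of that loss, and adds a shrunken copy of the tree to the model. This is a teaching sketch on made-up data, not how XGBoost or CatBoost are implemented. XGBoost, for example, also uses second-order gradient information and regularization.

```python
def fit_stump(xs, residuals):
    """Return the one-split regression stump on x that best fits the residuals (least squares)."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = sum(left) / len(left), sum(right) / len(right)
        error = sum((r - left_value) ** 2 for r in left) + sum((r - right_value) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared-error regression using stumps as weak learners."""
    base = sum(ys) / len(ys)  # initial model: predict the mean everywhere
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For squared error, the negative gradient is just the residual y - F(x).
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, 1, 5, 5, 5]
model = gradient_boost(xs, ys)
print(round(model(1), 2), round(model(6), 2))  # 1.01 4.99
```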

I urge you all to understand the math behind these algorithms so you have a clear idea of how they actually work. You can refer to this blog post where I implemented XGBoost from scratch: Implementing XGBoost from scratch

Now you can move on to Neural Networks and start your Deep Learning journey.

Resources: Deep Learning for Developers, Introduction to Deep Learning with Tensorflow, How to develop neural networks with Tensorflow, Learn how deep neural networks work

You can then dive deeper into how LSTMs, Siamese networks, CapsNet and BERT work.

Hackathons:


Now we need to apply these algorithms at a competitive level. You can start by looking for online data science hackathons. Here is a list of websites where I compete with other data scientists:

Analytics Vidhya — https://datahack.analyticsvidhya.com/contest/all/

Kaggle — https://www.kaggle.com/competitions

Hackerearth — https://www.hackerearth.com/challenges/

MachineHack — https://www.machinehack.com/

TechGig — https://www.techgig.com/challenge

Dare2compete — https://dare2compete.com/e/competitions/latest

Crowdanalytix — https://www.crowdanalytix.com/community

For an example of a winning solution, here is a link to my winning entry for an online hackathon on Analytics Vidhya: https://github.com/Sid11/AnalyticsVidhya_DataSupremacy

Projects:

Many people practice on dummy data and never get a feel for what actual data looks like. In my opinion, working on real-life data gives you a very clear idea of what data in the real world looks like. Cleaning real-life data alone takes about 70% of a project’s time and effort.

Here are some of the best free, open data sources anyone can use:

Open Government Data — https://data.gov.in/

Data about real contributed by thousands of users and organizations across the world — https://data.world/datasets/real

19 public datasets for Data Science Project — https://www.springboard.com/blog/free-public-data-sets-data-science-project/

Business Intelligence

After you get the results from your project, it is now time to make business decisions from those results. Business Intelligence is a suite of software and services that helps transform data into actionable intelligence and knowledge.

This can be done by creating a dashboard from the output of our model. Tableau is a powerful, fast-growing data visualization tool used in the Business Intelligence industry. It helps turn raw data into an easily understandable format. Data analysis with Tableau is fast, and the visualizations are created in the form of dashboards and worksheets.

http://damianfallon.blogspot.com/2020/03/how-to-improve-your-data-science-skills_31.html


How to Launch a Great Business on Amazon Web Services

AWS is a reputable platform that is almost certain to meet your business's requirements once you understand its advantages.

Trouble-free Operation

As soon as you sign up for Amazon Web Services, you are greeted by the AWS Management Console, a very user-friendly graphical interface. All of the platform's applications and services can be reached through this interface. The platform is easy to use because Amazon provides well-documented web services APIs.

The Range of Tools Offered Is Extensive

Initially focused on cloud storage and computing, AWS has since grown to include more than 70 services, covering databases, software, mobile, analytics and marketing. Even if you have only the most basic IT needs, you cannot go wrong with this platform. Using a single platform to handle all of your requirements saves you from having to manage many service providers. It is a practical decision that can save you a lot of time and money.

Limitless Web Server Resources