#Mark Zuckerberg Wants To KILL Section 230


Zuckerpunch

A monopolist's first preference is always "don't regulate me." But coming in at a close second is "regulate me in ways that only I can comply with, so that no one is allowed to compete with me."

A couple hundred mil for compliance *sounds* like a lot but it's a *bargain* if it excludes future competitors.

That's why Facebook and YouTube flipped and endorsed the EU plan to mandate hundreds of millions of euros' worth of copyright filters in 2019.

https://www.eff.org/deeplinks/2019/03/european-copyright-directive-what-it-and-why-has-it-drawn-more-controversy-any

Mark Zuckerberg may be a mediocre sociopath with criminally stupid theories of human interaction that he imposes on 2.6 billion people, but he is an unerring bellwether for policies that will enhance Facebook's monopoly power.

Pay attention whenever Zuck proposes a "solution" to the problems he caused (not just because creating a problem in no way qualifies you to solve that problem) - the only "problem" he wants to solve is, "How do I monopolize all human interaction?"

Today, Zuckerberg is testifying about his monopoly power to Congress. Hours before he went on air, he released a proposal to "fix Section 230 of the Communications Decency Act."

https://www.theverge.com/2021/3/24/22348238/zuckerberg-dorsey-pichai-section-230-hearing-misinformation

CDA230 is the rule that says that the people who publish unlawful speech should be accountable for it - not the service providers that hosted the speech (but it *does* allow online services to moderate speech they find offensive).

CDA230 is among the only good technology laws the US Congress ever adopted, and without it, no one would ever host your complaint about a business, your #MeToo whistleblowing, your negative reviews or your images of official corruption or police violence.

The GOP hates 230 because it lets online platforms delete disinformation and hate speech without obliging them to have a "fairness doctrine" rebuttal (e.g. deleting both Trump's exhortations to inject bleach AND messages from doctors saying *don't inject bleach!*).

The right hates 230 because their 40-year program to create unlimited corporate power by nerfing antitrust law has created...unlimited corporate power, which is sometimes wielded against them.

Rather than limiting corporate power, they want to make it possible for rich people to sue Big Tech platforms for shadowbanning them. This is standard cognitive dissonance nonsense, and par for the course for Reagan's brainchildren.

But in a weird reversal, progressives have picked up on this, joining hands across the aisle to demand the right to sue platforms for things users say, despite the fact that this is a gift to giant corporations and ruthless plutes who want to silence their critics.

Seriously. CDA230 is 26 words long. It's not hard to understand. If you think abolishing CDA230 will hurt Facebook, you've been fooled. Just read this:

https://www.techdirt.com/articles/20200531/23325444617/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act.shtml

If that doesn't convince you, consider this: Zuck wants to get rid of CDA230, too.

Zuck does not propose rules that hurt Zuck.

Zuck is an unreliable narrator, but his regulatory proposals for Facebook are unswervingly perfect indicators of "Things that benefit Facebook."

Here's Zuck's proposal: CDA230 should only apply to platforms that use "best practices" to eliminate bad speech. What's a "best practice?" It's what the "industry leaders" do. Who's an "industry leader?"

Facebook.

Zuck's proposal, then, is, "To solve the problems Facebook creates, we should mandate that everyone do what Facebook is doing."

There are two big problems with this.

First, Facebook sucks. The "AI filters" and army of moderators it uses to moderate content are tuna nets that catch entire pods of dolphins.

Consider three sentences:

I. Shut up, you [racial epithet]!

II. Then he told me, "shut up, you [racial epithet]!" and,

III. "The candidate is ending his campaign because it was reported that he told a message-board user, 'shut up, you [racial epithet]!'"

No filter can decide which of these to block. Indeed, human moderators often get this wrong.

https://pluralistic.net/2021/01/09/the-old-crow-is-getting-slow/#deplatforming
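To make the three-sentence problem concrete, here is a minimal sketch of the crudest kind of filter: a substring match against a blocklist. It is an illustration only, not a description of how Facebook's filters actually work. The `BLOCKLIST` contents, the `naive_filter` function and the literal placeholder token `[racial epithet]` are all assumptions made up for this example.

```python
# Hypothetical blocklist: the literal placeholder stands in for a real slur.
BLOCKLIST = {"[racial epithet]"}


def naive_filter(text: str) -> bool:
    """Return True if the text contains any blocklisted term (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


# The three sentences from the post: a direct attack, a victim's report,
# and a news story quoting the attack.
SENTENCES = [
    "Shut up, you [racial epithet]!",
    'Then he told me, "shut up, you [racial epithet]!"',
    "The candidate is ending his campaign because it was reported that "
    "he told a message-board user, 'shut up, you [racial epithet]!'",
]

verdicts = [naive_filter(sentence) for sentence in SENTENCES]
print(verdicts)  # every sentence gets the same verdict
```

A matcher like this sees the same string in the harassment, in the victim's account and in the news report, so it blocks all three or none. Telling them apart takes context that the text alone doesn't carry.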

If you hate Facebook's moderation, I invite you to consider that the problem isn't that FB isn't trying hard enough - it's that making speech judgments about the discourse of 2.6b people in 150 countries speaking hundreds of languages is an impossible job.

Which brings me to the second problem with Zuck's proposal: it is incredibly expensive, which means only companies that are already as big as Facebook would be allowed to compete with Facebook, and that means we can only have services that are too big to moderate effectively.

Importantly, it means we have to kill proposals - like the 2020 ACCESS Act - to allow new services to interoperate with FB, which would allow groups of users to have the autonomy to make their own moderation choices, booting out the toxic trolls and harassers FB welcomes.

Zuck's proposal to fix the problem of Facebook, then, is "We should fix Facebook by not changing Facebook at all, and making it illegal to make a service that interoperates with Facebook, starving it of the monopoly rents it uses to evade real regulation."

This is such a shameless piece of self-serving bullshit, it should be comic. But it's not. It's terrifying. It's exactly the kind of "solution" that low-information lawmakers love - something that lets them "get tough on a bad actor" without risking campaign contributions.

Don't be bamboozled. Please. The answer to Facebook will not come from Facebook. You can't fix something from the inside that shouldn't exist in the first place.

Facebook doesn't have a "Nazi problem" or a "disinformation problem." It has a *Facebook problem*.

The answer to Facebook is giving the public technological self-determination. Use interoperability so FB can't hold our friends hostage anymore. Force breakups so FB can't use predatory acquisitions to deprive us of choice. Block mergers.

https://www.eff.org/wp/interoperability-and-privacy

But don't address Big Tech by making Bigness a requirement to simply operate online.

Image: Brian Solis (modified):

https://www.flickr.com/photos/briansolis/2696198607

CC BY:

https://www.flickr.com/photos/briansolis/2696198607


Right or Left, You Should Be Worried About Big Tech Censorship

Conservatives are being censored

Claiming that “right-wing voices are being censored,” Republican-led legislatures in Florida and Texas have introduced legislation to “end Big Tech censorship.” They say that the dominant tech platforms block legitimate speech without ever articulating their moderation policies, that they are slow to admit their mistakes, and that there is no meaningful due process for people who think the platforms got it wrong.

They’re right.

So is everyone else

But it’s not just conservatives who have their political speech blocked by social media giants. It’s Palestinians and other critics of Israel, including many Israelis. And it’s queer people, of course. We have a whole project tracking people who’ve been censored, blocked, downranked, suspended and terminated for their legitimate speech, from punk musicians to Peanuts fans, historians to war crimes investigators, sex educators to Christian ministries.

The goat-rodeo

Content moderation is hard at any scale, but even so, the catalog of big platforms’ unforced errors makes for sorry reading. Experts who care about political diversity, harassment and inclusion came together in 2018 to draft the Santa Clara Principles on Transparency and Accountability in Content Moderation but the biggest platforms are still just winging it for the most part.

The situation is especially grim when it comes to political speech, particularly when platforms are told they have a duty to remove “extremism.”

The Florida and Texas social media laws are deeply misguided and nakedly unconstitutional, but we get why people are fed up with Big Tech’s ongoing goat-rodeo of content moderation gaffes.

So what can we do about it?

Let’s start with talking about why platform censorship matters. In theory, if you don’t like the moderation policies at Facebook, you can quit and go to a rival, or start your own. In practice, it’s not that simple.

First of all, the internet’s “marketplace of ideas” is severely lopsided at the platform level, consisting of a single gargantuan service (Facebook), a handful of massive services (YouTube, Twitter, Reddit, TikTok, etc) and a constellation of plucky, struggling, endangered indieweb alternatives.

DIY?

If none of the big platforms want you, you can try to strike out on your own. Setting up your own rival platform requires that you get cloud services, anti-DDoS, domain registration and DNS, payment processing and other essential infrastructure. Unfortunately, every one of these sectors has grown increasingly concentrated, and with just a handful of companies dominating every layer of the stack, there are plenty of weak links in the chain and if just one breaks, your service is at risk.

But even if you can set up your own service, you’ve still got a problem: everyone you want to talk about your disfavored ideas with is stuck in one of the Big Tech silos. Economists call this the “network effect,” when a service gets more valuable as more users join it. You join Facebook because your friends are there, and once you’re there, more of your friends join so they can talk to you.

Setting up your own service might get you a more nuanced and welcoming moderation environment, but it’s not going to do you much good if your people aren’t willing to give up access to all their friends, customers and communities by quitting Facebook and joining your nascent alternative, not least because there’s a limit to how many services you can be active on.

Network effects

If all you think about is network effects, then you might be tempted to think that we’ve arrived at the end of history, and that the internet was doomed to be a winner-take-all world of five giant websites filled with screenshots of text from the other four.

But not just network effects

But network effects aren’t the only idea from economics we need to pay attention to when it comes to the internet and free speech. Just as important is the idea of “switching costs,” the things you have to give up when you switch away from one of the big services - if you resign from Facebook, you lose access to everyone who isn’t willing to follow you to a better place.

Switching costs aren’t an inevitable feature of large communications systems. You can switch email providers and still connect with your friends; you can change cellular carriers without even having to tell your friends because you get to keep your phone number.

The high switching costs of Big Tech are there by design. Social media may make signing up as easy as a greased slide, but leaving is another story. It's like a roach motel: users check in but they’re not supposed to check out.

Interop vs. switching costs

Enter interoperability, the practice of designing new technologies that connect to existing ones. Interoperability is why you can access any website with any browser, and read Microsoft Office files using free/open software like LibreOffice, cloud software like Google Docs, or desktop software like Apple iWork.

An interoperable social media giant - one that allowed new services to connect to it - would bust open that roach motel. If you could leave Facebook but continue to connect with the friends, communities and customers who stayed behind, the decision to leave would be much simpler. If you don’t like Facebook’s rules (and who does?) you could go somewhere else and still reach the people that matter to you, without having to convince them that it’s time to make a move.

The ACCESS Act

That’s where laws like the proposed ACCESS Act come in. While not perfect, this proposal to force the Big Tech platforms to open up their walled gardens to privacy-respecting, consent-seeking third parties is a way forward for anyone who chafes against Big Tech’s moderation policies and their uneven, high-handed application.

Some tech platforms are already moving in that direction. Twitter says it wants to create an “app store for moderation,” with multiple services connecting to it, each offering different moderation options. We wish it well! Twitter is well-positioned to do this - it’s one tenth the size of Facebook and needs to find ways to grow.

But the biggest tech companies show no sign of voluntarily reducing their switching costs. The ACCESS Act is the most important interoperability proposal in the world, and it could be a game-changer for all internet users.

Save users' rights under Section 230, save the internet

Unfortunately for all of us, many of the people who don’t like Big Tech’s moderation think the way to fix it is to eliminate Section 230, a law that makes people who post illegal content responsible for their own speech, while allowing anyone that hosts expressive speech to remove offensive, harassing or otherwise objectionable content.

That means that conservative Twitter alternatives can delete floods of pornographic memes without being sued by their users. It means that online forums can allow survivors of workplace harassment to name their abusers without worrying about libel suits.

If hosting speech makes you liable for what your users say, then only the very biggest platforms can afford to operate, and then only by resorting to shoot-first/ask-questions-later automated takedown systems.

Kumbaya

There’s not much that the political left and right agree on these days, but there’s one subject that reliably crosses the political divide: frustration with monopolists’ clumsy handling of online speech.

For the first time, there’s a law before Congress that could make Big Tech more accountable and give internet users more control over speech and moderation policies. The promise of the ACCESS Act is an internet where if you don’t like a big platform’s moderation policies, if you think they’re too tolerant of abusers or too quick to kick someone off for getting too passionate during a debate, you can leave, and still stay connected to the people who matter to you.

Killing CDA 230 won’t fix Big Tech (if that were the case, Mark Zuckerberg wouldn’t be calling for CDA 230 reform). The ACCESS Act won’t either, by itself -- but by making Big Tech open up to new services that are accountable to their users, the ACCESS Act takes several steps in the right direction.

from Deeplinks https://ift.tt/3kq2hFs


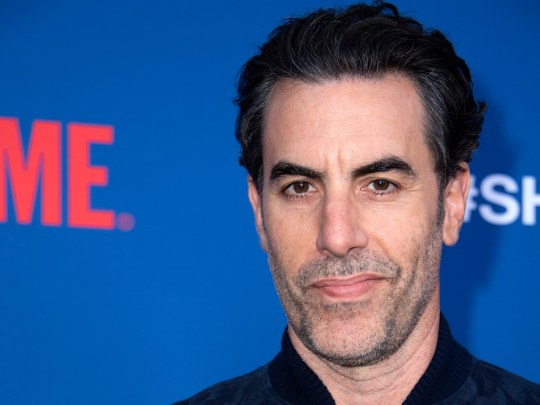

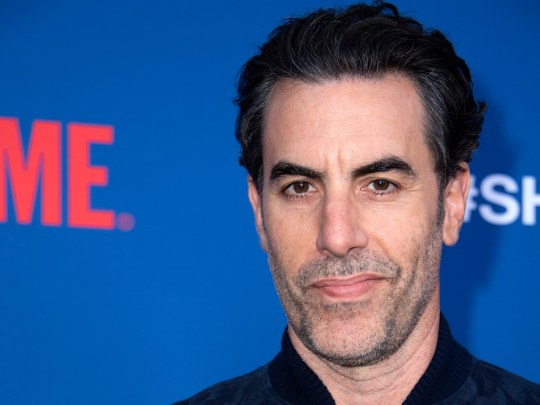


New Post has been published on https://magzoso.com/tech/the-silicon-six-spread-propaganda-its-time-to-regulate-social-media-sites/

The 'Silicon Six' Spread Propaganda. It's Time to Regulate Social Media Sites.

I get it: I’m one of the last people you’d expect to hear warning about the danger of conspiracies and lies. I’ve built a career on pushing the limits of propriety and good taste. I portrayed Borat, the first fake news journalist, along with satirical characters such as Ali G, a wannabe gangster, and Bruno, a gay fashion reporter from Austria. Some critics have said my comedy risks reinforcing old racial and religious stereotypes.

I admit that most of my comedy over the years has been pretty juvenile. However, when Borat was able to get an entire bar in Arizona to sing “throw the Jew down the well,” it revealed people’s indifference to anti-Semitism. When, as Bruno, I started kissing a man in a cage fight in Arkansas and nearly started a riot, it showed the violent potential of homophobia. And when, disguised as an ultra-woke developer, I proposed building a mosque in one rural community, prompting a resident to proudly admit, “I am racist, against Muslims,” it showed a wide acceptance of Islamophobia.

The ugliness my jokes help reveal is why I’m so worried about our pluralistic democracies. Demagogues appeal to our worst instincts. Conspiracy theories once confined to the fringe are going mainstream, fueled in part by President Donald Trump, who has spread such paranoid lies more than 1,700 times to his 67 million Twitter followers. It’s as if the Age of Reason – the era of evidential argument – is ending, and now knowledge is delegitimised and scientific consensus is dismissed. Democracy, which depends on shared truths, is in retreat, and autocracy, which thrives on shared lies, is on the march. Hate crimes are surging, as are murderous attacks on religious and ethnic minorities.

All this hate and violence actually has something in common: It’s being facilitated by a handful of Internet companies that amount to the greatest propaganda machine in history.

Facebook, YouTube, Twitter and other social media platforms reach billions of people. The algorithms these platforms depend on deliberately amplify content that keeps users engaged – stories that appeal to our baser instincts and trigger outrage and fear. That’s why fake news outperforms real news on social media; studies show that lies spread faster than truth.

On the Internet, everything can appear equally legitimate. Breitbart resembles the BBC, and the rantings of a lunatic seem as credible as the findings of a Nobel Prize winner. We have lost a shared sense of the basic facts upon which democracy depends.

When I, as Ali G, asked the astronaut Buzz Aldrin, “What woz it like to walk on de sun?” the joke worked, because we, the audience, shared the same facts. If you believe the moon landing was a hoax, the joke was not funny.

When Borat got that bar in Arizona to agree that “Jews control everybody’s money and never give it back,” the joke worked because the rest of us knew that the depiction of Jews as miserly is a conspiracy theory originating in the Middle Ages.

Social media platforms make it easier for people who share the same false premises to find one another, and then the technology acts as an accelerant for toxic thinking. When conspiracies take hold, it’s easier for hate groups to recruit, easier for foreign intelligence agencies to interfere in our elections and easier for a country like Myanmar to commit genocide against the Rohingya.

Yes, social media companies have taken some steps to reduce hate and conspiracies on their platforms. Yet these steps have been mostly superficial, and the next 12 months could be pivotal: British voters will go to the polls next month while online conspiracists promote the despicable theory of “great replacement” that white Christians are being deliberately replaced by Muslim immigrants. Americans will vote for president while trolls and bots perpetuate the disgusting lie of a “Hispanic invasion.” And after years of YouTube videos calling climate change a “hoax,” the United States is on track, a year from now, to formally withdraw from the Paris agreement.

Unfortunately, the executives of these platforms don’t appear interested in a close look at how they’re spreading hate, conspiracies and lies. Look at the speech Facebook founder and chief executive Mark Zuckerberg delivered last month that warned against new laws and regulations on companies like his.

Zuckerberg tried to portray the issue as one involving “choices” around “free expression.” But freedom of speech is not freedom of reach. Facebook alone already counts about a third of the world’s population among its users. Social media platforms should not give bigots and paedophiles a free platform to amplify their views and target victims.

Zuckerberg claimed that new limits on social media would “pull back on free expression.” This is utter nonsense. The First Amendment says that “Congress shall make no law” abridging freedom of speech, but this does not apply to private businesses. If a neo-Nazi comes goose-stepping into a restaurant and starts threatening other customers and saying he wants to kill Jews, would the restaurant owner be required to serve him an elegant eight-course meal? Of course not. The restaurant owner has every legal right, and, indeed, a moral obligation, to kick the Nazi out. So do Internet companies.

Zuckerberg seemed to equate regulation of companies like his to the actions of “the most repressive societies.” This, from one of the six people who run the companies that decide what information so much of the world sees: Zuckerberg at Facebook; Sundar Pichai at Google; Larry Page and Sergey Brin at Google’s parent company, Alphabet; Brin’s ex-sister-in-law, Susan Wojcicki, at YouTube; and Jack Dorsey at Twitter. These super-rich “Silicon Six” care more about boosting their share price than about protecting democracy. This is ideological imperialism – six unelected individuals in Silicon Valley imposing their vision on the rest of the world, unaccountable to any government and acting like they’re above the reach of law. Surely, instead of letting the Silicon Six decide the fate of the world order, our democratically elected representatives should have at least some say.

Zuckerberg speaks of welcoming a “diversity of ideas,” and last year, he gave us an example. He said he found posts denying the Holocaust “deeply offensive,” but he didn’t think Facebook should take them down “because I think there are things that different people get wrong.” This is madness. The Holocaust is a historical fact, and those who deny it aim to encourage another one. There’s no benefit in pretending that “the Holocaust is a hoax” is simply a “thing” that “different people get wrong.” Zuckerberg says that “people should decide what is credible, not tech companies.” But two-thirds of millennials say they haven’t even heard of Auschwitz. How are they supposed to know what’s “credible”? How are they supposed to know that the lie is a lie?

When it comes to removing content, Zuckerberg asked, “where do you draw the line?” Yes, that can be difficult, but here’s what he’s really saying: Removing lies and conspiracies is just too expensive.

Facebook, Google, and Twitter are unthinkably rich, and they have the best engineers in the world. They could fix these problems if they wanted to. Twitter could deploy an algorithm to remove more white supremacist hate speech, but they reportedly haven’t because it would eject some very prominent politicians. Facebook could hire enough monitors to actually monitor, work closely with groups such as the Anti-Defamation League and the NAACP and purge deliberate lies from their platforms.

But they won’t, because their entire business model relies on generating more engagement, and nothing generates more engagement than lies, fear and outrage.

These companies pretend they’re something bigger, or nobler, but what they really are is the largest publishers in history – after all, they make their money on advertising, just like other publishers. They should abide by basic standards and practices just like the ones that apply to newspapers, magazines, television and movies. I’ve had scenes in my movies cut or truncated to abide by those standards. Surely companies that publish material to billions of people should have to abide by basic standards just like film and television studios do.

Zuckerberg said social media companies should “live up to their responsibilities,” but he’s totally silent about what should happen when they don’t. By now, it’s pretty clear that they cannot be trusted to regulate themselves. In other industries, you can be sued for the harm you cause: Publishers can be sued for libel; people can be sued for defamation. I’ve been sued many times. But social media companies are almost completely protected from liability for the content their users post – no matter how indecent – by Section 230 of, get ready for it, the Communications Decency Act.

That immunity has warped their whole worldview. Take political ads. Fortunately, Twitter finally banned them, and Google says it will make changes, too. But if you pay Facebook, it will run any “political” ad you want, even if it’s a lie. It’ll even help you micro-target those lies to users for maximum effect. Under this twisted logic, if Facebook were around in the 1930s, it would have allowed Adolf Hitler to post 30-second ads on his “solution” to the “Jewish problem.” Here’s a good way for Facebook to “live up to” its responsibilities: Start fact-checking political ads before running them, stop micro-targeted lies immediately, and when ads are false, don’t publish them.

Section 230 was amended last year so that tech companies can be held responsible for paedophiles who use their sites to target children. Let’s also hold them responsible for users who advocate for the mass murder of children because of their race or religion. And maybe fines are not enough. Maybe it’s time for Congress to tell Zuckerberg and his fellow CEOs: You already allowed one foreign power to interfere in US elections; you already facilitated one genocide; do it again and you go to prison.

In the end, we have to decide what kind of world we want. Zuckerberg claims his main goal is to “uphold as wide a definition of freedom of expression as possible.” Yet our freedoms are not only an end in themselves, but they’re also a means to another end – to our right to life, liberty and the pursuit of happiness. And today these rights are threatened by hate, conspiracies and lies.

A pluralistic democratic society should make sure that people are not targeted, not harassed and not murdered because of who they are, where they come from, who they love or how they pray. If we do that – if we prioritize truth over lies, tolerance over prejudice, empathy over indifference and experts over ignoramuses – maybe we have a chance of stopping the greatest propaganda machine in history. We can save democracy. We can still have a place for free speech and free expression.

And, most important, my jokes will still work.

© The Washington Post 2019
