#nvidia drive

Text

I tried to watch a DVD tonight and, after a few failed attempts, counted and determined that I have at least 11 distinct configurations of technology that could play a DVD. They all range from 50-80% functional, and while I definitely could have gotten one of them working eventually, we gave up after attempt three.

This is a pretty accurate summation of my relationship with technology, I think.

#technology#I have so many ways of doing things#and they are all a little bit broken#yes of course I am a linux user#I got halfway through reinstalling my Nvidia Optimus drivers before remembering I had a whole-ass Bluray player in a cabinet#but by then it was bedtime#also this doesn't count trivially distinct configurations#dvd drive connected through SATA to USB adapter only counts once even though I have several bare DVD drives at hand#I am very annoying to try to do technology with sometimes

6 notes


Text

Me casually uninstalling BG3 so I have more room for TS4 stuff dfdkfdk

#[ OOC ] ── * MUN ( 𝘪 𝘯𝘦𝘦𝘥 𝘢 𝘤𝘶𝘱𝘱𝘢 𝘵𝘦𝘢 )#( I may end up putting it on my external hard drive instead )#( or eventually get an internal hard drive that fits in the laptop )#( or third option. install it on my other pc /but/ it doesn't have nvidia so that could be a problem despite the space )#( 'cos 123 GBs from BG3 is so much and my gaming laptop has only 475 gb sobs )#( also plan on playing bg1 and bg2 eventually for the lore )#( and reading more books. like the novelization and get some good ol' bhaalspawn vibes )

3 notes


Text

i really can't think of a better way to make fun of libertarian internet toughguys than the trump coke tweet

2 notes


Photo

prompto killed it today

bonus:

#that framing tho#ffxv#i am looking forward to getting an nvidia drive in the future so i can play with ansel and not rely on prompto#but for now. hot dang dude#cruddy rambles

4 notes


Video

youtube

Need for Speed Underground Remastered - Top 3 Best Graphics Mods

#youtube#need for speed#playstation#steam#xbox#race#racing#games#gamingpc#gaming#gamingcommunity#steamdeck#drive#supercars#reshade#pcgaming#nvidia#rtx

1 note


Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable - errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th-century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

701 notes


Photo

Nvidia has a car computer that performs 254 trillion operations per second

Chipmaker Nvidia has shown a high-performance automotive computer designed to run advanced processes in the infotainment system and in the vehicle's automated-driving systems. Nvidia's new technology premiered last night at an event the company organized in San Jose, California. Analysts believe the computer will give Nvidia an advantage, […]

0 notes

Text

hi rendoc enjoyers i have a gift for you (ren describing the first time he met doc irl)

[bonus and transcript under cut]

chat summarized the vibes best:

[ IMG ID: A screenshot from Rendog's Twitch chat timestamped 1:47:04, reading: "Sproxite: Wow Ren was y/n for a second". END ID.]

[ VIDEO TRANSCRIPT:

Rendog is placing red terracotta and quartz blocks along the edge of Blue River Raceway.

Ren: How did I meet Doc, then, at this tournament? Well, Doc was also invited to this tournament, and Doc was playing, uh, of course, for the German team. And Nvidia had put us all up in the same hotel together, and-- well, not all in the same hotel but, like, I think most of us were in the same hotel. And, when I got to the hotel, uh, by-- like, a taxi picked us-- picked up a bunch of us from the airport and then took us to that hotel. When I got there, got out the taxi, everybody else went into the hotel. I hadn't really, like-- it was kind of an awkward taxi drive, because none of us really knew each other. We were from different countries, y'know? We were all Youtubers and streamers and stuff from different countries, so we didn't really know each other. So they, like-- the rest of the people from the taxi just, like, went into the hotel, and I got my suitcase and started walking to the hotel. And there, leaning against the glass wall of the hotel-- like the hotel has this massive glass wall that looked into the lobby-- was a ridiculously tall, very good-looking German fella, wearing a hat, of course, with a bunch of like, festival arm bands, or, like, armbands from conventions, I guess. Just looking like, basically, the coolest cat I had ever seen. I was like, 'Oh my god, I know this person. This is- This is Doc.' (laughs) And, uh, at the time, like, I was a pretty small channel, I was maybe, like, I dunno, 50 thousand subs, or something? And Doc was, like, in the millions. (laughs) So I was immediately, like, quite intimidated and quite starstruck, y'know? Uh, 'cause this is somebody that I'd been watching for a really long time. Plus, he was massive, u-r-uh, insanely handsome, and, like, super cool. (laughs) I was, like, just this ner- this Minecraft nerd, y'know?

END TRANSCRIPT.]

1K notes


Text

Researchers release open-source photorealistic simulator for autonomous driving | MIT News


Hyper-realistic virtual worlds have been heralded as the best driving schools for autonomous vehicles (AVs), since they’ve proven fruitful test beds for safely trying out dangerous driving scenarios. Tesla, Waymo, and other self-driving companies all rely heavily on data to enable expensive and proprietary photorealistic simulators, since testing and gathering nuanced I-almost-crashed data…


#Alexander Amini#autonomous driving#Autonomous vehicles#Daniela Rus#Drive AGX Pegasus#event-based cameras#LIDAR#Massachusetts Institute of Technology#MIT#MIT CSAIL#MIT News#MIT research#multi-agency#NVidia#photorealistic simulator#Sertac Karaman#Song Han#Tesla#Toyota Research Institute#Tsun-Hsuan Wang#VISTA 2.0#Waymo#Wilko Schwarting#Zhijian Liu

0 notes

Text

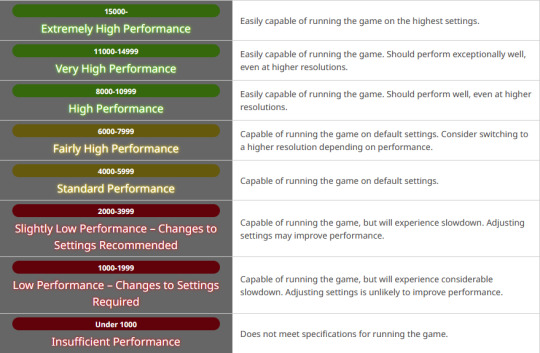

Benchmark Tech Notes

Running the Benchmark

If your Benchmark isn't opening, the problem is with the executable file: something didn't complete properly during either the download or the extraction of the Zip file. The Benchmark is designed to run and give you scores for your potato computer, I promise.

I actually saved my Benchmark to my external drive, and it still pulls and saves data and runs as it should. Make sure you allowed the download to complete before extracting the zip.

Resolution

Check your Settings; in Display, it may be defaulting your monitor Resolution to something other than what you would normally use if you aren't on standard 1920x1080.

To check your monitor Resolution, minimize everything on your screen and right click anywhere on your Desktop. Go to Display Settings and scroll down to find Resolution and what it's set at.

You can set the Graphic Settings 1 tab to Maximum, or choose to Import your game settings. The Display Settings tab is where you set it to be Windowed, Bordered, or Full Screen, as well as select the Resolution that matches your monitor in the dropdown (or customize it if needed). I mention Resolution because some folks in my FC noticed it changed how their characters looked.

The Other tab in Settings is where you can change the text output, or even check a box to disable the logo and score; I do this on subsequent plays, once I have my scores at various settings, to get the clean screenshots.

@calico-heart has a post about fixing graphics settings, with screenshots of the settings tab. Basically, change graphics upscaling from AMD to NVIDIA, and/or uncheck Enable Dynamic Resolution. Also check the Framerate Threshold dropdown.

Screenshots

The benchmark auto-saves 5 screenshots each playthrough. In the Benchmark folder there is a Screenshots folder where you can find the auto-images taken of your characters.

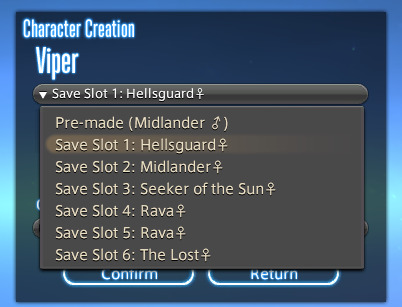

Character Appearance

If you want to get your current in-game appearance, including non-standard hairstyles, make sure to load up the live game, right click and "Save Character Settings."

Then go to Documents/My Games/Final Fantasy XIV: A Realm Reborn (this is the default location in Windows 10, so your mileage may vary). The file will show the date you last updated their settings and will be named FFXIV_CHARA_01.dat (or a higher number, depending on how many saves you've made).

Grab those newly updated DAT files for your character(s) and copy them, then in the same base folder, go to Final Fantasy XIV: A Realm Reborn (Benchmark).

Paste the copied DAT files in there, and rename to FFXIV_CHARA_BENCH01.dat (the number doesn't matter, and you may have more).
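If you have a bunch of characters, the copy-and-rename steps above can be scripted. This is a minimal Python sketch, assuming your saves follow the FFXIV_CHARA_NN.dat naming described above; the function name copy_chara_saves is made up for illustration. You pass in both folder paths yourself, because the folder names on disk may not match exactly how they're written in this post (Windows doesn't allow a colon in folder names), so check yours in File Explorer first.

```python
import shutil
import sys
from pathlib import Path


def copy_chara_saves(game_dir: Path, bench_dir: Path) -> list[Path]:
    """Copy FFXIV_CHARA_NN.dat saves into the benchmark folder as FFXIV_CHARA_BENCHNN.dat.

    Hypothetical helper: both folder paths are supplied by you, nothing is guessed.
    """
    bench_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(game_dir.glob("FFXIV_CHARA_*.dat")):
        number = src.stem[len("FFXIV_CHARA_"):]  # e.g. "01"
        if number.startswith("BENCH"):
            continue  # already a benchmark-style save, leave it alone
        dest = bench_dir / f"FFXIV_CHARA_BENCH{number}.dat"
        shutil.copy2(src, dest)  # copy2 keeps the file's modified date
        copied.append(dest)
    return copied


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python copy_saves.py "<live game folder>" "<benchmark folder>"
    for path in copy_chara_saves(Path(sys.argv[1]), Path(sys.argv[2])):
        print("copied", path.name)
```

It copies rather than moves, so your live-game saves stay where they are, and it keeps each save's original number, since the benchmark doesn't care which number you use.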

When running Benchmark Character Creation, use the dropdown menu.

If you do Create a Custom Character and Load Appearance Data, it will give you default hairstyles again. Meteor's Dawntrail hairstyle is a new default.

In Char Gen I am finding that a very pale hrothgal reflects the green scenery around her, giving her white skin/fur a green tinge. The other zones do not have this problem, or at least not to the same degree.

They added a Midday vs Evening setting in outdoor areas as well to test lighting. The lighting in the Gridanian innroom is better; not as bright as outdoors, to be expected, but not completely useless.

There are also new voice type icons to clarify the sounds you make.

Remember we're getting a free fantasia with the expansion, so some tweaking may be needed; for Iyna, I felt like I needed to adjust her jaw. Other colors (skin, hair, eyes, tattoos, etc.) are showing differently in the various kinds of lighting.

Uncertain if the limit on hairstyles for the Hrothgals so far is just a Benchmark thing; they do have set styles for different head options. Everyone gets Meteor's hair though, so it may be a temporary/Benchmark limit. But which clan and face you choose drastically alters what hair and facial feature options you have access to.

Check your settings, tweak them a bit, play around with chargen, and remember this is still a Benchmark; they always strike me as a little less polished than the finished game, but so far I'm actually pretty pleased with having defined fingers and toes, the irises in the eyes, scars looking cut into the skin, and other improvements.

163 notes


Text

the other console generation poll was mostly just a "what generation of gaming did you grow up on" so new idea

223 notes


Text

Extremely Quick and Dirty Distro Chooser for Complete Beginners

You are a person who might be interested in using Linux, but there are literally thousands of distros out there and you have no idea where to start. Luckily for you, I am a weirdo who likes to test-drive Linux distros for fun. I have not tested literally every distro out there, but I have tried a fair number (35 according to my notes), and I think I have a decent shortlist of distros for people who haven’t used Linux before.

Note that this is: a) not an Objective List of the Best Linux Distros Out There; and b) not really an objective list at all. These are just the ones that I’ve tested and that I think would be good for people new to Linux.

My recommendations are as follows:

If your computer is more than a few years old or underpowered/slow, I would recommend MX Linux or SpiralLinux.

If your computer has an NVIDIA GPU, I would recommend Pop!_OS.

Everyone else, I would recommend Linux Mint or potentially MX Linux.

Honorable mentions: Manjaro, Nobara, AV Linux, Trisquel

I would NOT recommend Ubuntu or any of its variants, Linux Lite, or Fedora at this time.

My reasoning for each of these is below the cut, if you’re interested. If not, then that’s the post, have a great day!

All of my recommended distros are mainly or fully GUI-controllable (no messing around in the command line unless you want to, or for a few specific things), have a full complement of the software you need pre-installed, and a software center for anything else you might want. They’re also very stable and customizable, albeit some of them require more fiddling than others to get things the way you want.

Linux Mint is probably the best all-arounder for new Linux users at the current moment. It’s what I use as my daily driver on my main laptop, and with very few exceptions (mainly caused by having an NVIDIA GPU and/or trying to run ancient finicky software), I’m able to do everything I want and need to do on it without a lot of drama. If you don’t want Linux to be your hobby and you just need a computer that works, Linux Mint is currently your best bet. Use the standard Cinnamon edition if your computer is fairly new and/or beefy, or try the MATE or XFCE editions if it’s a little older.

Pop!_OS is another one that shows up on “good for beginners” lists. It’s based off of Ubuntu, the same as Linux Mint, and it’s got a lot of similar features going for it. I haven’t tested this one as extensively as I have some of the others on this list, so I can’t speak on it as in-depth, but I would 100% recommend it if your computer has an NVIDIA GPU. NVIDIA and Linux do not tend to play nicely together, and Pop!_OS has built-in NVIDIA support. Had I known what a pain it would be getting my GPU to work, I likely would have started with Pop.

MX Linux is another good all-arounder, though it doesn’t tend to be recommended for beginners, and I’m not sure why. It has a very friendly tour that shows you the various features of the OS, and a huge suite of tools so you can manage everything from the GUI. It bills itself as a “midweight” distro, but I have personally found it to be much faster on my older laptop than many so-called “lightweight” distros, so it’s a good choice for older hardware. It can be set up to run off of a USB thumb drive with persistence, and I believe there are still programs for managing dial-up connections and old mp3 players/iPods in the repositories as well, if you were to need those. Use the main XFCE edition if you’re installing it on an older computer.

SpiralLinux is the kind of extremely small distro that probably shouldn’t be on this type of list, except it’s “Literally Just Debian But More User-Friendly” so that makes it perfect. The main reason it’s on here is because I am using it right now to write this post on my 12-year-old Dell, and it’s running like a charm. SpiralLinux is the fastest distro I have used on this laptop, except for Trisquel (more on that one later). If you have an older Windows computer that you want to breathe new life into, SpiralLinux is a good choice. Use the XFCE, MATE, or LXQT editions for old hardware.

Honorable mentions: people who know Linux will notice that all of the distros above are based off of Debian and/or Ubuntu. Do I have something against Fedora, Arch, etc? Nope. I just think the Debian/Ubuntu lineage has a real knack for making beginner-friendly distros, which is the focus of this post. That said, Manjaro is also said to be fairly beginner-friendly, though I haven’t tested it extensively, and Nobara might be a good place to start for a Fedora-based distro. Nobara is a little more niche than I would usually recommend, but it is really nice and pretty user-friendly.

If you do a lot of video or audio work, AV Linux may be worth a look. It’s based off of MX Linux, and has the same features, but it also has a lot of audio and video tools built in, and uses a different kernel.

Trisquel also makes the honorable mention list for being incredibly fast and having an interesting premise. Trisquel and all of the software in its repositories are 100% open-source. Normally I wouldn’t recommend this kind of niche distro for newer users, but it is based off of Debian so it’s pretty stable, and it runs surprisingly fast on my old Dell laptop. It’s also a fun crash-course in open-source software and what it can do. I would not necessarily recommend it as a daily driver, but if you want to get started in the Linux and FLOSS worlds and have an old laptop, you could do a lot worse than Trisquel.

What I DON’T recommend:

Ubuntu has historically been considered THE user-friendly Linux distro, and also one of the most popular. I don’t recommend it (or any of its official variants: Kubuntu, Xubuntu, Lubuntu, etc) because of some really poor decisions and doubling-down on those decisions by Canonical, the company that develops Ubuntu. As you’re currently reading this on Tumblr, I doubt I have to tell you why the company in charge making decisions their users hate and then refusing to budge on them is a bad time. That said, it’s not a terrible OS in and of itself. If you really don’t like any of the distros listed above, or can’t get them to work, you could give Ubuntu a shot...but personally, I wouldn’t, when there are so many other options.

I am anti-recommending Linux Lite, even though it often gets touted as a good “lightweight” beginner-friendly distro. In my experience, it’s very slow compared to something like MX or Spiral, and it’s faithful to its Ubuntu base to a fault. Also the app store is terrible. I think the Debian-based “light” distros are almost always better than the Ubuntu ones. If you feel like you absolutely NEED an Ubuntu-based “light” distro, consider Bodhi Linux as an alternative. It’s still pretty slow, but it has some clever features (the app center is browser-based so it’s much faster) and lacks some of the anti-features that Linux Lite has.

Fedora is my final anti-recommendation, less because of the OS itself than, again, because of the organization behind it: in this case, Red Hat, which is owned by IBM. IBM/Red Hat has been pulling some Typical Corporate Fuckery by restricting public access to the source code for Red Hat Enterprise Linux (RHEL), which Fedora feeds into. Needless to say, this is...bad. Again, assuming that Tumblr users aren’t big fans of Corporate Fuckery, I would advise people to steer clear of Fedora for now. If you want or need a RHEL-compatible distro, I would consider AlmaLinux instead.

So that’s it for my recommendations. If none of these are to your liking, check out DistroWatch. It lists hundreds of different Linux distros, plus a few other OSes. If you use Ventoy, you can easily test-drive live versions of many different distros without a lot of fuss...but that’s another post ;) Have a great day!

39 notes

Text

Annnnd also, very important update on Aislynn's Borked Up Computer...

Still borked up but Georgette (the computer) and I are working out a system:

Turn computer on

Pray it does not blow up

Thank God when it does not blow up

Check to make sure that all of the drivers are still correct and haven't been "helpfully" rolled back by Windows, Dell, the demon who apparently infected a bunch of Dells at the factory level, etc.

Try not to stare at the Nvidia light every time it comes on

Remind self it's okay for the Nvidia light to be on because it's, you know, the graphics card and does have legit things to do

Check Windows Reliability Monitor

Check the health and temperature of the C drive

Check the time

Those last two are important because after about one to two hours, Georgette will begin to feel off in some way. When that happens, go back and check health and temp. Completely shut down the computer if the temp has risen (which, to be fair, I think the temp going up, like, 1 degree is normal with use, but it's something I can look at, and it makes me feel like I have some sort of control).

If we make it to 2 hours up time without a problem, shut down anyway.

Leave it off for a while. Switch to Fire tablet with its awesome, new $9 Bluetooth keyboard \o/ 🥳🤷‍♀️😉 and spend some time writing the next chapter of whatever fic I'm working on (currently "15 Minutes" chapter 9) in a Tumblr draft.

Eventually come back to the computer and turn it on again. Weirdly, it should now feel a little steadier than during the first "session" and can run as long as 10 hours without feeling like it's about to fall over, explode, or all of the above. I do not understand why this is but... it is. 🤷‍♀️

Try not to keep looking at the Nvidia light every time it comes on but, for Pete's sake, that looked so cool when I first got Georgette, like she was some Fancy Gaming PC™️ and now it just gives me anxiety.

Eventually shut down and feel like it was a Good Computer Day if we made it through without a blue screen event.

I'd ideally like to get an entire week with no blue screening, then please, Dear Lord, a whole month, then I want to try installing Steam again. This may end very badly because I know the computer is NOT stable but she's more stable than before. I just want to play Halo again, friends. I miss it so, so much.

I also want this computer, which has so much potential to be a genuinely amazing gaming/vidding rig, to live up to that one day. 64 GB of RAM should not go into vapors and die because I'm watching a video on YouTube while checking my email. That's just wrong. 😭 I know Georgette can be a great PC and can make up for these incredibly rough first 7 weeks of her life. We just need to get this dang BIOS/OS/driver/whatever Incompatibility Demon out of her.

Hope you're all doing well. Love you, frens. 🤗💖

#ageless aislynn#computer problems#computer issues#i believe in georgette#she's a good pc i just know it#she just has an incompatibility demon#anybody have the number of a pc exorcist? 🤷♀️😉

13 notes

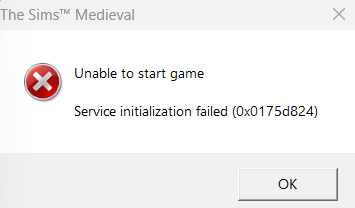

Text

Does anybody have any suggestions for why I can't play TSM? I've not touched it at all since the last time I played in September so I don't know why it's suddenly not starting. That's the error message.

Everything I'm finding on Google says to repair the game via Origin/the EA app, but I installed it from the disc, so there's no repair option.

Nvidia GeForce did push a driver update today 🤔 so maybe that broke it?

I don't want to uninstall and reinstall unless I have to, because it's a pain to do that without a disc drive... I'd need to go track down my dad's portable thingymajig. And then reapply whatever fixes I had to do to get it to start in the first place when I got this laptop in Dec 2022.

Since I panic whenever anything like this happens, I did boot up TS2 and TS3, and they're both fine. Not that that's relevant.

12 notes

Video

Need for Speed Underground 2 Legendary Edition - Ultra Realistic Textur...

#youtube#need for speed#playstation#steam#xbox#race#racing#games#gamingpc#gaming#gamingcommunity#steamdeck#drive#supercars#reshade#pcgaming#nvidia#rtx

1 note

Text

here's a question I'm not sure this is the best place to talk about:

if you were going to build a set of DOS/Windows machines to play ALL PC GAMES, how many would you need?

Like, the boring answer is "ONE," but that's terrible, and no, not really. Yes, you can run DOSBox and such on a modern computer, but there are plenty of games from the hard-to-emulate era that just won't run on a modern computer without a lot of patching and hacks, and even then, maybe not.

So a slightly less boring answer is "TWO": one for the Hard-To-Emulate Era and one for NOW-GAMES. So like a 600 MHz Pentium III with a Voodoo 3 and Windows 98, and a Modern Boring Machine. You play nearly everything on the modern one, including DOS games (using DOSBox and similar), but games from the hard-to-emulate era, you play on the Old Machine.

BUT if you want to have a set of computers for most of the eras of PC gaming, you need more than that. Like if you were populating a museum of PC games. How many? I'm gonna say... FIVE. Maybe SIX.

An IBM XT. This is the best system for the earliest era of PC games: booters (games that boot straight off the floppy), early DOS games, and anything without speed control (yes, there are turbo buttons, but they can only do so much). This is like a 4.77 MHz 8088 (or an 8 MHz "turbo" clone) with a 20 MB hard drive, 640 KB of RAM, and PC speaker only. You definitely need a joystick.

High-end 486/Pentium. This will play the rest of the DOS games and nearly all Windows 3.1 games. 16 MB of RAM is more than enough. A hard drive of ~500 MB (right around the old 504 MB BIOS limit, one of the first size limits), a Sound Blaster, and maybe a Gravis UltraSound (GUS) or a MIDI device. You'll want a CD-ROM drive, for sure, even though most games won't need it.

Pentium II/III or AMD K6: most games from the early Windows 9x era. You'll need a PCI sound card and a 3dfx card, probably a Voodoo 3 or above. You could go with an early Nvidia card like a GeForce 2/3, but then you don't get Glide games. Something like 128 MB of RAM and a couple of gigs of hard drive. Nearly all games will be on CD-ROM, so you want a FAST drive, and probably an old copy of Daemon Tools/CloneCD and a pile of no-CD cracks, because that drive will be NOISY.

Late single-core era: Pentium 4 or AMD Athlon 64. This is for games that are relatively recent (2000s and onward) but don't run well on modern systems because they don't handle multi-core CPUs, 64-bit Windows, or versions of Windows newer than XP. So obviously this is going to run Windows XP, maybe a tweaked Vista at a push, and it will 100% be the 32-bit version. You're gonna have like 1-2 gigabytes of RAM, anywhere from a few hundred gigabytes to a terabyte of hard drive space, and a video card like a GeForce 8-series or a Radeon HD 3000.

Boring modern machine. Fastest CPU/GPU, most RAM, biggest HD, etc. that you can afford. Run Windows 10 or 11; it doesn't matter.

And the only reason I say "five or six" is because you might want to stuff another computer in, call it PC 3.5: basically a fast Win9x machine, but this one uses an Nvidia/ATI GPU instead of a 3dfx GPU. This'll be a machine for high-end Win9x/ME games that need more graphics power than a 3dfx card can manage but don't work on an XP machine. I don't know for certain that there are games in that area, but I wouldn't be at all surprised.

143 notes