#deepfakes

I assure you, an AI didn’t write a terrible “George Carlin” routine

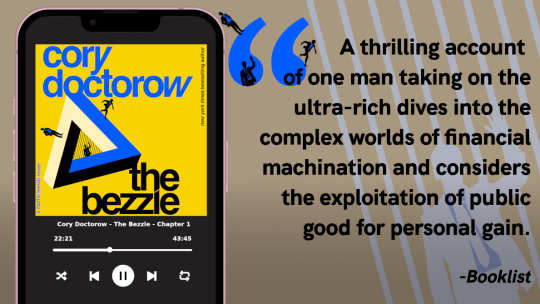

There are only TWO MORE DAYS left in the Kickstarter for the audiobook of The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There are also bundles with Red Team Blues in ebook, audio or paperback.

On Hallowe'en 1974, Ronald Clark O'Bryan murdered his son with poisoned candy. He needed the insurance money, and he knew that Hallowe'en poisonings were rampant, so he figured he'd get away with it. He was wrong:

https://en.wikipedia.org/wiki/Ronald_Clark_O%27Bryan

The stories of Hallowe'en poisonings were just that – stories. No one was poisoning kids on Hallowe'en – except this monstrous murderer, who mistook rampant scare stories for truth and assumed (incorrectly) that his murder would blend in with the crowd.

Last week, the dudes behind the "comedy" podcast Dudesy released a "George Carlin" comedy special that they claimed had been created, holus bolus, by an AI trained on the comedian's routines. This was a lie. After the Carlin estate sued, the dudes admitted that they had written the (remarkably unfunny) "comedy" special:

https://arstechnica.com/ai/2024/01/george-carlins-heirs-sue-comedy-podcast-over-ai-generated-impression/

As I've written, we're nowhere near the point where an AI can do your job, but we're well past the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job:

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

AI systems can do some remarkable party tricks, but there's a huge difference between producing a plausible sentence and a good one. After the initial rush of astonishment, the stench of botshit becomes unmistakable:

https://www.theguardian.com/commentisfree/2024/jan/03/botshit-generative-ai-imminent-threat-democracy

Some of this botshit comes from people who are sold a bill of goods: they're convinced that they can make a George Carlin special without any human intervention and when the bot fails, they manufacture their own botshit, assuming they must be bad at prompting the AI.

This is an old technology story: I had a friend who was contracted to livestream a Canadian awards show in the earliest days of the web. They booked in multiple ISDN lines from Bell Canada and set up an impressive Mbone encoding station on the wings of the stage. Only one problem: the ISDNs flaked (this was a common problem with ISDNs!). There was no way to livecast the show.

Nevertheless, my friend's bosses ordered him to go on pretending to livestream the show. They made a big deal of it, with all kinds of cool visualizers showing the progress of this futuristic marvel, which the cameras frequently lingered on, accompanied by overheated narration from the show's hosts.

The weirdest part? The next day, my friend – and many others – heard from satisfied viewers who boasted about how amazing it had been to watch this show on their computers, rather than their TVs. Remember: there had been no stream. These people had just assumed that the problem was on their end – that they had failed to correctly install and configure the multiple browser plugins required. Not wanting to admit their technical incompetence, they instead boasted about how great the show had been. It was the Emperor's New Livestream.

Perhaps that's what happened to the Dudesy bros. But there's another possibility: maybe they were captured by their own imaginations. In "Genesis," an essay in the 2007 collection The Creationists, EL Doctorow (no relation) describes how the ancient Babylonians were so poleaxed by the strange wonder of the story they made up about the origin of the universe that they assumed that it must be true. They themselves weren't nearly imaginative enough to have come up with this super-cool tale, so God must have put it in their minds:

https://pluralistic.net/2023/04/29/gedankenexperimentwahn/#high-on-your-own-supply

That seems to have been what happened to the Air Force colonel who falsely claimed that a "rogue AI-powered drone" had spontaneously evolved the strategy of killing its operator as a way of clearing the obstacle to its main objective, which was killing the enemy:

https://pluralistic.net/2023/06/04/ayyyyyy-eyeeeee/

This never happened. It was – in the chagrined colonel's words – a "thought experiment." In other words, this guy – who is the USAF's Chief of AI Test and Operations – was so excited about his own made up story that he forgot it wasn't true and told a whole conference-room full of people that it had actually happened.

Maybe that's what happened with the George Carlinbot 3000: the Dudesy dudes fell in love with their own vision for a fully automated luxury Carlinbot and forgot that they had made it up, so they just cheated, assuming they would eventually be able to make a fully operational Battle Carlinbot.

That's basically the Theranos story: a teenaged "entrepreneur" was convinced that she was just about to produce a seemingly impossible, revolutionary diagnostic machine, so she faked its results, abetted by investors, customers and others who wanted to believe:

https://en.wikipedia.org/wiki/Theranos

The thing about stories of AI miracles is that they are peddled by both AI's boosters and its critics. For boosters, the value of these tall tales is obvious: if normies can be convinced that AI is capable of performing miracles, they'll invest in it. They'll even integrate it into their product offerings and then quietly hire legions of humans to pick up the botshit it leaves behind. These abettors can be relied upon to keep the defects in these products a secret, because they'll assume that they've committed an operator error. After all, everyone knows that AI can do anything, so if it's not performing for them, the problem must exist between the keyboard and the chair.

But this would only take AI so far. It's one thing to hear implausible stories of AI's triumph from the people invested in it – but what about when AI's critics repeat those stories? If your boss thinks an AI can do your job, and AI critics are all running around with their hair on fire, shouting about the coming AI jobpocalypse, then maybe the AI really can do your job?

https://locusmag.com/2020/07/cory-doctorow-full-employment/

There's a name for this kind of criticism: "criti-hype," coined by Lee Vinsel, who points to many reasons for its persistence, including the fact that it constitutes an "academic business-model":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

That's four reasons for AI hype:

to win investors and customers;

to cover customers' and users' embarrassment when the AI doesn't perform;

AI dreamers so high on their own supply that they can't tell truth from fantasy;

A business-model for doomsayers who form an unholy alliance with AI companies by parroting their silliest hype in warning form.

But there's a fifth motivation for criti-hype: to simplify otherwise tedious and complex situations. As Jamie Zawinski writes, this is the motivation behind the obvious lie that the "autonomous cars" on the streets of San Francisco have no driver:

https://www.jwz.org/blog/2024/01/driverless-cars-always-have-a-driver/

GM's Cruise division was forced to shutter its SF operations after one of its "self-driving" cars dragged an injured pedestrian for 20 feet:

https://www.wired.com/story/cruise-robotaxi-self-driving-permit-revoked-california/

One of the widely discussed revelations in the wake of the incident was that Cruise employed 1.5 skilled technical remote overseers for every one of its "self-driving" cars. In other words, they had replaced a single low-waged cab driver with 1.5 higher-paid remote operators.

As Zawinski writes, SFPD is well aware that there's a human being (or more than one human being) responsible for every one of these cars – someone who is formally at fault when the cars injure people or damage property. Nevertheless, SFPD and SFMTA maintain that these cars can't be cited for moving violations because "no one is driving them."

But figuring out which person is responsible for a moving violation is "complicated and annoying to deal with," so the fiction persists.

(Zawinski notes that even when these people are held responsible, they're a "moral crumple zone" for the company that decided to enroll whole cities in nonconsensual murderbot experiments.)

Automation hype has always involved hidden humans. The most famous of these was the "mechanical Turk" hoax: a supposed chess-playing robot that was just a puppet operated by a concealed human operator wedged awkwardly into its carapace.

This pattern repeats itself through the ages. Thomas Jefferson "replaced his slaves" with dumbwaiters – but of course, dumbwaiters don't replace slaves, they hide slaves:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

The modern Mechanical Turk – a division of Amazon that employs low-waged "clickworkers," many of them overseas – modernizes the dumbwaiter by hiding low-waged workforces behind a veneer of automation. The MTurk is an abstract "cloud" of human intelligence (the tasks MTurks perform are called "HITs," which stands for "Human Intelligence Tasks").

This is such a truism that techies in India joke that "AI" stands for "absent Indians." Or, to use Jathan Sadowski's wonderful term: "Potemkin AI":

https://reallifemag.com/potemkin-ai/

This Potemkin AI is everywhere you look. When Tesla unveiled its humanoid robot Optimus, they made a big flashy show of it, promising a $20,000 automaton was just on the horizon. They failed to mention that Optimus was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Likewise with the famous demo of a "full self-driving" Tesla, which turned out to be a canned fake:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

The most shocking and terrifying and enraging AI demos keep turning out to be "Just A Guy" (in Molly White's excellent parlance):

https://twitter.com/molly0xFFF/status/1751670561606971895

And yet, we keep falling for it. It's no wonder, really: criti-hype rewards so many different people in so many different ways that it truly offers something for everyone.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

Back the Kickstarter for the audiobook of The Bezzle here!

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

Ross Breadmore (modified)

https://www.flickr.com/photos/rossbreadmore/5169298162/

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

#pluralistic#ai#absent indians#mechanical turks#scams#george carlin#comedy#body-snatchers#fraud#theranos#guys in robot suits#criti-hype#machine learning#fake it til you make it#too good to fact-check#mturk#deepfakes

2K notes

Imagine being the loser ass tool, Yisha Raziel, who made a deepfake of Bella Hadid saying she supports Israel. 🤮

If you’re reading this, I am telling you right now, you better second and third guess what you see and hear on social media and the news. Stick to reliable news sources. Vet them. Require multiple, trusted sources. Validate links to sources. In the last several months, I’ve seen the deepfake of President Nixon talking about the failed NASA mission that never happened. I’ve seen a deepfake of Joe Biden hilariously using profanity to trash talk Trump - Biden’s deepfake, however, was made to be intentionally obvious that it wasn’t his words, or something he would actually say.

But imagine a viral deepfake video of Biden announcing a nuclear strike on Russia within the next 20 minutes? Or a deepfake of Biden reintroducing the draft to support Israel? Or a deepfake of Biden withdrawing from the 2024 election and endorsing Trump…

These kinds of things are going to begin happening a lot more, especially with the proliferation of troll farms, and especially since YouTube, Twitter (I refuse to call it X), and Facebook have all eviscerated their verification and factcheck teams that used to at least attempt to limit disinformation and misinformation.

Pay attention, peeps.

Don’t get bamboozled.

663 notes

India has warned tech companies that it is prepared to impose bans if they fail to take active measures against deepfake videos, a senior government minister said, on the heels of a warning by a well-known personality over a deepfake advertisement using his likeness to endorse a gaming app.

The stern warning comes as New Delhi follows through on an advisory issued last November about forthcoming regulations to identify and restrict the propagation of deepfake media. Rajeev Chandrasekhar, Deputy IT Minister, said the ministry plans to amend the nation’s IT Rules by next week to establish definitive laws counteracting deepfakes. He expressed dissatisfaction with technology companies’ adherence to earlier government advisories on manipulative content.

73 notes

“I’m looking at a picture of my naked body, leaning against a hotel balcony in Thailand. My denim bikini has been replaced with exposed, pale pink nipples – and a smooth, hairless crotch. I zoom in on the image, attempting to gauge what, if anything, could reveal the truth behind it. There’s the slight pixelation around part of my waist, but that could be easily fixed with amateur Photoshopping. And that’s all.

Although the image isn’t exactly what I see staring back at me in the mirror in real life, it’s not a million miles away either. And hauntingly, it would take just two clicks of a button for someone to attach it to an email, post it on Twitter or mass distribute it to all of my contacts. Or upload it onto a porn site, leaving me spending the rest of my life fearful that every new person I meet has seen me naked. Except they wouldn’t have. Not really. Because this image, despite looking realistic, is a fake. And all it took to create was an easily discovered automated bot, a standard holiday snap and £5.

This image is a deepfake – and part of a rapidly growing market. Basically, AI technology (which is getting more accessible by the day) can take any image and morph it into something else. Remember the alternative ‘Queen’s Christmas message’ broadcast on Channel 4, that saw ‘Her Majesty’ perform a stunning TikTok dance? A deepfake. Those eerily realistic videos of ‘Tom Cruise’ that went viral last February? Deepfakes. That ‘gender swap’ app we all downloaded for a week during lockdown? You’ve guessed it: a low-fi form of deepfaking.

Yet, despite their prevalence, the term ‘deepfake’ (and its murky underworld) is still relatively unknown. Only 39% of Cosmopolitan readers said they knew the word ‘deepfake’ during our research (it’s derived from a combination of ‘deep learning’ – the type of AI programming used – and ‘fake’). Explained crudely, the tech behind deepfakes, Generative Adversarial Networks (GANs), is a two-part model: there’s a generator (which creates the content after studying similar images, audio, or videos) and a discriminator (which checks if the new content passes as legit). Think of it as a teenager forging a fake ID and trying to get it by a bouncer; each time it’s rejected, the teen works harder on the forgery. GANs have been praised for enabling incredible developments in film, healthcare and technology (driverless cars rely on them) – but sadly, in reality they’re more likely to be used for bad than good.
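The forger-and-bouncer loop can be sketched in a few lines of Python. This is a deliberately tiny, one-dimensional toy and not how any real deepfake tool is built: the "content" is a single number, the generator only learns an offset `b`, and the discriminator is a simple logistic model with parameters `w` and `c`. All function names, parameters and defaults here are illustrative assumptions; real systems use deep neural networks trained on images, audio or video.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function (avoids overflow in exp).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminate(w, c, x):
    """The 'bouncer': estimated probability that sample x is real."""
    return sigmoid(w * x + c)


def generate(b, z):
    """The 'forger': turn random noise z into a fake sample."""
    return z + b


def discriminator_step(w, c, real, fake, lr):
    """One gradient-ascent step on mean log D(real) + mean log(1 - D(fake))."""
    gw_real = sum((1.0 - discriminate(w, c, x)) * x for x in real) / len(real)
    gc_real = sum(1.0 - discriminate(w, c, x) for x in real) / len(real)
    gw_fake = sum(discriminate(w, c, x) * x for x in fake) / len(fake)
    gc_fake = sum(discriminate(w, c, x) for x in fake) / len(fake)
    return w + lr * (gw_real - gw_fake), c + lr * (gc_real - gc_fake)


def generator_step(b, w, c, noise, lr):
    """One gradient-ascent step on mean log D(generate(b, z)).

    The forger nudges its offset in whatever direction makes the
    bouncer more likely to accept its fakes.
    """
    grad_b = sum(
        (1.0 - discriminate(w, c, generate(b, z))) * w for z in noise
    ) / len(noise)
    return b + lr * grad_b


def train(steps=500, batch=16, lr=0.05, real_mean=4.0, seed=0):
    """Alternate bouncer and forger updates; returns (w, c, b)."""
    rng = random.Random(seed)  # hypothetical toy data: "real" samples near real_mean
    w = c = b = 0.0
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generate(b, z) for z in noise]
        w, c = discriminator_step(w, c, real, fake, lr)
        b = generator_step(b, w, c, noise, lr)
    return w, c, b
```

Calling `train()` runs the two steps in alternation. In toy setups like this the two sides tend to chase each other rather than settle neatly, which hints at why full-scale GANs are known to be tricky to train.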

Research conducted in 2018 by fraud detection company Sensity AI found that over 90% of all deepfakes online are non-consensual pornographic clips targeting women – and predicted that the number would double every six months. Fast forward four years and that prophecy has come true and then some. There are over 57 million hits for ‘deepfake porn’ on Google alone [at the time of writing]. Search interest has increased 31% in the past year and shows no signs of slowing. Does this mean we’ve lost control already? And, if so, what can be done to stop it?
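For a rough sense of scale, anything that doubles every six months grows 256-fold over four years. The short sketch below only does that arithmetic; the function name is illustrative, and it makes no claim about actual counts, which the article does not give.

```python
def growth_factor(years, doubling_period_years=0.5):
    """How many times larger a quantity gets if it doubles every period."""
    doublings = years / doubling_period_years
    return 2 ** doublings


# Four years at one doubling every six months is eight doublings.
print(growth_factor(4))  # 256.0
```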

WHO’S THE TARGET?

Five years ago, in late 2017, something insidious was brewing in the darker depths of popular chatrooms. Reddit users began violating celebrities on a mass scale, by using deepfake software to blend run-of-the-mill red-carpet images or social media posts into pornography. Users would share their methods for making the sexual material, they’d take requests (justifying abusing public figures as being ‘better than wanking off to their real leaked nudes’) and would signpost one another to new uploads. This novel stream of porn delighted that particular corner of the internet, as it marvelled at just how realistic the videos were (thanks to there being a plethora of media of their chosen celebrity available for the software to study).

That was until internet bosses, from Reddit to Twitter to Pornhub, came together and banned deepfakes in February 2018, vowing to quickly remove any that might sneak through the net and make it onto their sites – largely because (valid) concerns had been raised that politically motivated deepfake videos were also doing the rounds. Clips of politicians apparently urging violence, or ‘saying’ things that could harm their prospects, had been red flagged. Despite deepfake porn outnumbering videos of political figures by the millions, clamping down on that aspect of the tech was merely a happy by-product.

But it wasn’t enough; threads were renamed, creators migrated to different parts of the internet and influencers were increasingly targeted alongside A-listers. Quickly, the number of followers these women needed to be deemed ‘fair game’ dropped, too.

Fast forward to today, and a leading site specifically created to house deepfake celebrity porn sees over 13 million hits every month (that’s more than double the population of Scotland). It has performative rules displayed claiming to not allow requests for ‘normal’ people to be deepfaked, but the chatrooms are still full of guidance on how to DIY the tech yourself and people taking custom requests. Disturbingly, the most commonly deepfaked celebrities are ones who all found fame at a young age which begs another stomach-twisting question here: when talking about deepfakes, are we also talking about the creation of child pornography?

It was through chatrooms like this that I discovered the £5 bot that created the scarily realistic nude of myself. You can send a photograph of anyone, ideally in a bikini or underwear, and it’ll ‘nudify’ it in minutes. The freebie version of the bot is not all that realistic. Nipples appear on arms, lines wobble. But the paid-for version is often uncomfortably accurate. The bot has been so well trained to strip down the female body that when I sent across a photo of my boyfriend (with his consent), it superimposed an unnervingly realistic vulva.

But how easy is it to go a step further? And how blurred are the ethics when it comes to ‘celebrities vs normal people’ (both of which are a violation)? In a bid to find out, I went undercover online, posing as a man looking to “have a girl from work deepfaked into some porn”. In no time at all I meet BuggedBunny*, a custom deepfake porn creator who advertises his services on various chatroom threads – and who explicitly tells me he prefers making videos using ‘real’ women.

When I ask for proof of his skills, he sends me a photo of a woman in her mid-twenties. She has chocolate-brown hair, shy eyes and in the image, is clearly doing bridesmaid duties. BuggedBunny then tells me he edited this picture into two pornographic videos.

He emails me a link to the videos via Dropbox: in one The Bridesmaid is seemingly (albeit with glitches) being gang-banged, in another ‘she’ is performing oral sex. Although you can tell the videos are falsified, it’s startling to see what can be created from just one easily obtained image. When BuggedBunny requests I send images of the girl I want him to deepfake – I respond with clothed photos of myself and he immediately replies: “Damn, I’d facial her haha!” (ick) and asks for a one-off payment of $45. In exchange, he promises to make as many photos and videos as I like. He even asks what porn I’d prefer. When I reply, “Can we get her being done from behind?” he says, “I’ve got tonnes of videos we can use for that, I got you man.”

I think about The Bridesmaid, wondering if she has any idea that somebody wanted to see her edited into pornographic scenes. Is it better to be ignorant? Was it done to humiliate her, for blackmailing purposes, or for plain sexual gratification? And what about the adult performers in the original video, have they got any idea their work is being misappropriated in this way?

It appears these men (some of whom may just be teenagers: when I queried BuggedBunny about the app he wanted me to transfer money via, he said, “It’s legit! My dad uses it all the time”) – those creating and requesting deepfake porn – live in an online world where their actions have no real-world consequences. But they do. How can we get them to see that?

REAL-LIFE FAKE PORN

One quiet winter afternoon, while her son was at nursery, 36-year-old Helen Mort, a poet and writer from South Yorkshire, was surprised when the doorbell rang. It was the middle of a lockdown; she wasn’t expecting visitors or parcels. When Helen opened the door, there stood a male acquaintance – looking worried. “I thought someone had died,” she explains. But what came next was news she could never have anticipated. He asked to come in.

“I was on a porn website earlier and I saw… pictures of you on there,” the man said solemnly, as they sat down. “And it looks as though they’ve been online for years. Your name is listed, too.”

Initially, she was confused; the words ‘revenge porn’ (when naked pictures or videos are shared without consent) sprang to mind. But Helen had never taken a naked photo before, let alone sent one to another person who’d be callous enough to leak it. So, surely, there was no possible way it could be her?

“That was the day I learned what a ‘deepfake’ is,” Helen tells me. One of her misappropriated images had been taken while she was pregnant. In another, somebody had even added her tattoo to the body her face had been grafted onto.

Despite the images being fake, that didn’t lessen the profound impact their existence had on Helen’s life. “Your initial response is of shame and fear. I didn't want to leave the house. I remember walking down the street, not able to meet anyone’s eyes, convinced everyone had seen it. You feel very, very exposed. The anger hadn't kicked in yet.”

Nobody was ever caught. Helen was left to wrestle with the aftereffects alone. “I retreated into myself for months. I’m still on a higher dose of antidepressants than I was before it all happened.” After reporting what had happened to the police, who were initially supportive, Helen’s case was dropped. The anonymous person who created the deepfake porn had never messaged her directly, removing any possible grounds for harassment or intention to cause distress.

Eventually she found power in writing a poem detailing her experience and starting a petition calling for reformed laws around image-based abuse; it’s incredibly difficult to prosecute someone for deepfaking on a sexual assault basis (even though that’s what it is: a digital sexual assault). You’re more likely to see success with a claim for defamation or infringement of privacy, or image rights.

Unlike Helen, in one rare case 32-year-old Dina Mouhandes from Brighton was able to unearth the man who uploaded doctored images of her onto a porn site back in 2015. “Some were obviously fake, showing me with gigantic breasts and a stuck-on head, others could’ve been mistaken as real. Either way, it was humiliating,” she reflects. “And horrible, you wonder why someone would do something like that to you? Even if they’re not real photos, or realistic, it’s about making somebody feel uncomfortable. It’s invasive.”

Dina, like Helen, was alerted to what had happened by a friend who’d been watching porn. Initially, she says she laughed, as some images were so poorly edited. “But then I thought ‘What if somebody sees them and thinks I’ve agreed to having them made?’ My name was included on the site too.” Dina then looked at the profile of the person who’d uploaded them and realised an ex-colleague had been targeted too. “I figured out it was a guy we’d both worked with, I really didn’t want to believe it was him.”

In Dina’s case, the police took things seriously at first and visited the perpetrator in person, but later their communication dropped off – she has no idea if he was ever prosecuted, but is doubtful. The images were, at least, taken down. “Apparently he broke down and asked for help with his mental health,” Dina says. “I felt guilty about it, but knew I had to report what had happened. I still fear he could do it again and now that deepfake technology is so much more accessible, I worry it could happen to anyone.”

And that’s the crux of it. It could happen to any of us – and we likely wouldn’t even know about it, unless, like Dina and Helen, somebody stumbled across it and spoke out. Or, like 25-year-old Northern Irish politician Cara Hunter, who earlier this year was targeted in a similarly degrading sexual way. A pornographic video featuring an actor with similar hair, but whose face wasn’t shown, was distributed thousands of times – alongside real photos of Cara in a bikini – via WhatsApp. It all played out during the run-up to an election, so although Cara isn’t sure who started spreading the video and telling people it was her in it, it was presumably politically motivated.

“It’s tactics like this, and deepfake porn, that could scare the best and brightest women from coming into the field,” she says, adding that telling her dad what had happened was one of the worst moments of her life. “I was even stopped in the street by men and asked for oral sex and received comments like ‘naughty girl’ on Instagram – then you click the profiles of the people who’ve posted, and they’ve got families, kids. It’s objectification and trying to humiliate you out of your position by using sexuality as a weapon. A reputation can be ruined instantly.”

Cara adds that the worst thing is ‘everyone has a phone’ and yet laws dictate that while a person can’t harm you in public, they can legally ‘try to ruin your life online’. “A lie can get halfway around the world before the truth has even got its shoes on.”

Is it any wonder, then, that 83% of Cosmopolitan readers have said deepfake porn worries them, with 42% adding that they’re now rethinking what they post on social media? But this can’t be the solution - that, once again, women are finding themselves reworking their lives, in the hopes of stopping men from committing crimes.

Yet, we can’t just close our eyes and hope it all goes away either. The deepfake porn genie is well and truly out of the bottle (it’s also a symptom of a wider problem: Europol experts estimate that by 2026, 90% of all media we consume may be synthetically generated). Nearly one in every 20 Cosmopolitan readers said they, or someone they know, has been edited into a false sexual scenario. But what is the answer? It's hard for sites to monitor deepfakes – and even when images are promptly removed, there’s still every chance they’ve been screen grabbed and shared elsewhere.

When asked, Reddit told Cosmopolitan: "We have clear policies that prohibit sharing intimate or explicit media of a person created or posted without their permission. We will continue to remove content that violates our policies and take action against the users and communities that engage in this behaviour."

Speaking to leading deepfake expert, Henry Ajder, about how we can protect ourselves – and what needs to change – is eye-opening. “I’ve rarely seen male celebrities targeted and if they are, it’s usually by the gay community. I’d estimate tens of millions of women are deepfake porn victims at this stage.” He adds that sex, trust and technology are only set to become further intertwined, referencing the fact that virtual reality brothels now exist. “The best we can do is try to drive this type of behaviour into more obscure corners of the internet, to stop people – especially children and teenagers – from stumbling across it.”

Currently, UK law says that making deepfake porn isn’t an offence (although in Scotland distributing it may be seen as illegal, depending on intention), but companies are told to remove such material (if there’s an individual victim) when flagged, otherwise they may face a punishment from Ofcom. But the internet is a big place, and it’s virtually impossible to police. This year, the Online Safety Bill is being worked on by the Law Commission, who want deepfake porn recognised as a crime – but there’s a long way to go with a) getting that law legislated and b) ensuring it’s enforced.

Until then, we need a combination of investment and effort from tech companies to prevent and identify deepfakes, alongside those (hopefully future) tougher laws. But even that won’t wave a magic wand and fix everything. Despite spending hours online every day, as a society we still tend to think of ‘online’ and ‘offline’ as two separate worlds – but they aren’t. ‘Online’ our morals can run fast and loose, as crimes often go by unchecked, and while the ‘real world’ may have laws in place that, to some degree, do protect us, we still need a radical overhaul when it comes to how society views the female body.

Deepfake porn is a bitter nail in the coffin of equality and having control over your own image; even if you’ve never taken a nude in your life (which, by the way, you should be free to do without fear of it being leaked or hacked) you can still be targeted and have sexuality used against you. Isn’t it time we focus on truly Photoshopping out that narrative as a whole?”

522 notes

Joan Is Awful: Black Mirror episode is every striking actor’s worst nightmare

“A sticking point of the near-inevitable Sag-Aftra strike is the potential that AI could soon render all screen actors obsolete. A union member this week told Deadline: ‘Actors see Black Mirror’s Joan Is Awful as a documentary of the future, with their likenesses sold off and used any way producers and studios want. We want a solid pathway. The studios countered with ‘trust us’ – we don’t.’ ...

“If a studio has the kit, not to mention the balls, to deepfake Tom Hanks into a movie he didn’t agree to star in, then it has the potential to upend the entire industry as we know it. It’s one thing to have your work taken from you, but it’s another to have your entire likeness swiped.

“The issue is already creeping in from the peripheries. The latest Indiana Jones movie makes extensive use of de-ageing technology, made by grabbing every available image of Harrison Ford 40 years ago and feeding it into an algorithm. Peter Cushing has been semi-convincingly brought back to life for Star Wars prequels, something he is unlikely to have given permission for unless the Disney execs are particularly skilled at the ouija board. ITV’s recent sketch show Deep Fake Neighbour Wars took millions of images of Tom Holland and Nicki Minaj, and slapped them across the faces of young performers so adeptly that it would be very easy to be fooled into thinking that you were watching the real celebrities in action.

“Unsurprisingly, Sag-Aftra members want this sort of thing to be regulated, asking for their new labour contract to include terms about when AI likenesses can be used, how to protect against misuse, and how much money they can expect from having their likenesses used by AI.”

#joan is awful#black mirror#charlie brooker#sag aftra strike#screen actors guild#sag aftra#wga strike#writers guild of america#wga#actors#screenwriters#hollywood#film and tv#ai#deepfakes#strikes#unions#labour#labor#us

94 notes

Text

Interesting article in the National today about deepfake revenge porn.

There have been no reported cases of it in Scotland yet, but Police Scotland have issued a warning that the technology is advancing fast.

On their advice, if you find yourself a victim of revenge porn whether real or deepfaked, you should report it.

Not sure if it will be the same guidance given by police in other countries, but good that it’s being treated as the same crime here.

185 notes

Text

#ai influencers#deepfakes#social media marketing#digital ethics#inauthentic content#influencer marketing#virtual reality#computer generated imagery#dystopian fiction

13 notes

Text

I hope this time there's action and not just talk.

10 notes

Text

AI is here – and everywhere: 3 AI researchers look to the challenges ahead in 2024

by Anjana Susarla, Professor of Information Systems at Michigan State University; Casey Fiesler, Associate Professor of Information Science at the University of Colorado Boulder; and Kentaro Toyama, Professor of Community Information at the University of Michigan

2023 was an inflection point in the evolution of artificial intelligence and its role in society. The year saw the emergence of generative AI, which moved the technology from the shadows to center stage in the public imagination. It also saw boardroom drama in an AI startup dominate the news cycle for several days. And it saw the Biden administration issue an executive order and the European Union pass a law aimed at regulating AI, moves perhaps best described as attempting to bridle a horse that’s already galloping along.

We’ve assembled a panel of AI scholars to look ahead to 2024 and describe the issues AI developers, regulators and everyday people are likely to face, and to give their hopes and recommendations.

Casey Fiesler, Associate Professor of Information Science, University of Colorado Boulder

2023 was the year of AI hype. Regardless of whether the narrative was that AI was going to save the world or destroy it, it often felt as if visions of what AI might be someday overwhelmed the current reality. And though I think that anticipating future harms is a critical component of overcoming ethical debt in tech, getting too swept up in the hype risks creating a vision of AI that seems more like magic than a technology that can still be shaped by explicit choices. But taking control requires a better understanding of that technology.

One of the major AI debates of 2023 was around the role of ChatGPT and similar chatbots in education. This time last year, most relevant headlines focused on how students might use it to cheat and how educators were scrambling to keep them from doing so – in ways that often do more harm than good.

However, as the year went on, there was a recognition that a failure to teach students about AI might put them at a disadvantage, and many schools rescinded their bans. I don’t think we should be revamping education to put AI at the center of everything, but if students don’t learn about how AI works, they won’t understand its limitations – and therefore how it is useful and appropriate to use and how it’s not. This isn’t just true for students. The more people understand how AI works, the more empowered they are to use it and to critique it.

So my prediction, or perhaps my hope, for 2024 is that there will be a huge push to learn. In 1966, Joseph Weizenbaum, the creator of the ELIZA chatbot, wrote that machines are “often sufficient to dazzle even the most experienced observer,” but that once their “inner workings are explained in language sufficiently plain to induce understanding, its magic crumbles away.” The challenge with generative artificial intelligence is that, in contrast to ELIZA’s very basic pattern matching and substitution methodology, it is much more difficult to find language “sufficiently plain” to make the AI magic crumble away.

I think it’s possible to make this happen. I hope that universities that are rushing to hire more technical AI experts put just as much effort into hiring AI ethicists. I hope that media outlets help cut through the hype. I hope that everyone reflects on their own uses of this technology and its consequences. And I hope that tech companies listen to informed critiques in considering what choices continue to shape the future.

Kentaro Toyama, Professor of Community Information, University of Michigan

In 1970, Marvin Minsky, the AI pioneer and neural network skeptic, told Life magazine, “In from three to eight years we will have a machine with the general intelligence of an average human being.” With the singularity – the moment artificial intelligence matches and begins to exceed human intelligence – not quite here yet, it’s safe to say that Minsky was off by at least a factor of 10. It’s perilous to make predictions about AI.

Still, making predictions for a year out doesn’t seem quite as risky. What can be expected of AI in 2024? First, the race is on! Progress in AI had been steady since the days of Minsky’s prime, but the public release of ChatGPT in 2022 kicked off an all-out competition for profit, glory and global supremacy. Expect more powerful AI, in addition to a flood of new AI applications.

The big technical question is how soon and how thoroughly AI engineers can address the current Achilles’ heel of deep learning – what might be called generalized hard reasoning, things like deductive logic. Will quick tweaks to existing neural-net algorithms be sufficient, or will it require a fundamentally different approach, as neuroscientist Gary Marcus suggests? Armies of AI scientists are working on this problem, so I expect some headway in 2024.

Meanwhile, new AI applications are likely to result in new problems, too. You might soon start hearing about AI chatbots and assistants talking to each other, having entire conversations on your behalf but behind your back. Some of it will go haywire – comically, tragically or both. Deepfakes, AI-generated images and videos that are difficult to detect, are likely to run rampant despite nascent regulation, causing more sleazy harm to individuals and democracies everywhere. And there are likely to be new classes of AI calamities that wouldn’t have been possible even five years ago.

Speaking of problems, the very people sounding the loudest alarms about AI – like Elon Musk and Sam Altman – can’t seem to stop themselves from building ever more powerful AI. I expect them to keep doing more of the same. They’re like arsonists calling in the blaze they stoked themselves, begging the authorities to restrain them. And along those lines, what I most hope for 2024 – though it seems slow in coming – is stronger AI regulation, at national and international levels.

Anjana Susarla, Professor of Information Systems, Michigan State University

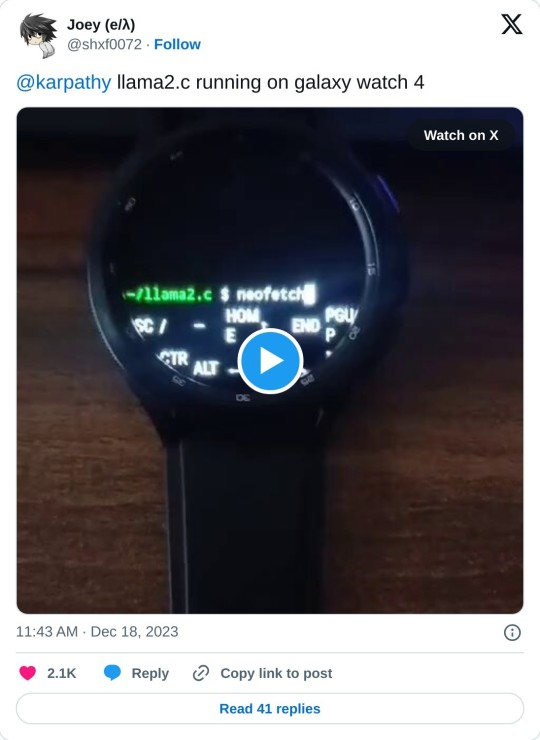

In the year since the unveiling of ChatGPT, the development of generative AI models is continuing at a dizzying pace. In contrast to ChatGPT a year back, which took in textual prompts as inputs and produced textual output, the new class of generative AI models are trained to be multi-modal, meaning the data used to train them comes not only from textual sources such as Wikipedia and Reddit, but also from videos on YouTube, songs on Spotify, and other audio and visual information. With the new generation of multi-modal large language models (LLMs) powering these applications, you can use text inputs to generate not only images and text but also audio and video.

Companies are racing to develop LLMs that can be deployed on a variety of hardware and in a variety of applications, including running an LLM on your smartphone. The emergence of these lightweight LLMs and open source LLMs could usher in a world of autonomous AI agents – a world that society is not necessarily prepared for.

These advanced AI capabilities offer immense transformative power in applications ranging from business to precision medicine. My chief concern is that such advanced capabilities will pose new challenges for distinguishing between human-generated content and AI-generated content, as well as pose new types of algorithmic harms.

The deluge of synthetic content produced by generative AI could unleash a world where malicious people and institutions can manufacture synthetic identities and orchestrate large-scale misinformation. A flood of AI-generated content primed to exploit algorithmic filters and recommendation engines could soon overpower critical functions such as information verification, information literacy and serendipity provided by search engines, social media platforms and digital services.

The Federal Trade Commission has warned about fraud, deception, infringements on privacy and other unfair practices enabled by the ease of AI-assisted content creation. While digital platforms such as YouTube have instituted policy guidelines for disclosure of AI-generated content, there’s a need for greater scrutiny of algorithmic harms from agencies like the FTC and lawmakers working on privacy protections such as the American Data Privacy & Protection Act.

A new bipartisan bill introduced in Congress aims to codify algorithmic literacy as a key part of digital literacy. With AI increasingly intertwined with everything people do, it is clear that the time has come to focus not on algorithms as pieces of technology but to consider the contexts the algorithms operate in: people, processes and society.

#technology#science#futuristic#artificial intelligence#deepfakes#chatgpt#chatbot#AI education#AI#Youtube

14 notes

Text

Thanks to advances in artificial intelligence, it's easier than ever to create images and video of things that don't exist, or events that never happened. That's spurring warnings about digital fakery being used to spread propaganda and disinformation, impersonate celebrities and politicians, manipulate elections and scam people.

But the unleashing of powerful generative AI to the public is also raising concerns about another phenomenon: that as the technology becomes more prevalent, it will become easier to claim that anything is fake.

"That's exactly what we were concerned about: that when we entered this age of deepfakes, anybody can deny reality," said Hany Farid, a digital forensics expert and professor at the University of California, Berkeley. "That is the classic liar's dividend."

50 notes

Text

https://www.rnz.co.nz/news/world/507694/taylor-swift-ai-deepfakes-spark-calls-for-new-legislation

Look, I'm not a swiftie, but what the Fuck.

WHY WOULD YOU D O T H a T ???

As someone who's had images shared online that I did not consent to, this is Foul. Absolutely foul. The repercussions for someone's reputation are absolutely disgusting.

14 notes

Text

These are demo videos made from prompts on OpenAI’s Sora. It’s similar to how you would prompt ChatGPT and get text or a still image output, but with Sora the output is video. (source)

I cynically believe that by November, Sora will have perfected its algorithm enough to make the upcoming 2024 election online ads … very interesting.

And even after the terrible job that Facebook, Instagram and Twitter (never calling it x) did in the 2016 elections and Brexit, they somehow still decided to cut back on their departments that could at least theoretically curtail attempts at political disinformation.

Anyway, be forewarned: Social media manipulation and disinformation campaigns are very real things. Don’t believe everything you see on social media. Slightly similar A.I. deepfake technologies already exist. (example) (example) (example) (example)

#politics#disinformation#openai#sora#deepfakes#artificial intelligence#tech#technology#misinformation#ai#a.i.

220 notes

Text

Inspired by the landmark 1968 exhibition Cybernetic Serendipity, the first-ever international event in the UK dedicated to arts and emerging technologies, the event, titled Cybernetic Serendipity: Towards AI, will look back to look forwards, exploring the transformative potential of machine learning across the creative industries, from algorithms and text-to-image chatbots like ChatGPT to building virtual worlds and using AI to detect bias and disinformation.

From AI chatbots to virtual companions and the never ending wave of deepfakes on our screens, artificial intelligence is an unavoidable part of culture nowadays.

via Dazed and confused

#cybernetic serendipity#since 1968#technology#AI#chatgpt#bias#desinformation#deepfakes#nowadays#culture#algorithm#virtual world#machine learning

5 notes

Text

This is what we're facing with AI

I got this ad on a YouTube video and instantly knew something was up. The logo looks scammy, the product is vague - and that's definitely not Elon Musk talking.

The video is low-quality on purpose because it's a shitty, yet at a glance convincing, deepfake coupled with an AI generated voice.

The link leads to a fake BBC article, which in turn leads to some scammy-looking website.

Sooner or later, we are going to have to confront how to deal with this technology. Not everyone will be able to tell the difference.

anyway i guess if you get this ad or similar ads i encourage you to report it by clicking on the little circled "i" next to the runtime of the ad.

15 notes

Text

one of the things that made me less panicked about the “ai will destroy the truth” thing is that... for pretty much everything ai can do, humans could already do it, it just takes us a little longer. fake articles can already be written by hand. photos can already be manipulated with free or pirated software. you can straight up lie to people in real life out loud. those are already things we deal with and watch for on a daily basis, not to mention how frequently someone is just wrong about something, causing the spread of misinformation on accident.

this isn’t to say that ai-deepfakes et al aren’t terrible, they are. it’s just that once in a while i see someone say “there won’t be any way to trust a photo is real anymore!” and I just want to ask them if they’ve ever heard of photoshop. or staging. or lying. photos have never been 100% trustworthy, not because of technology, but because of the human taking the picture.

40 notes

Text

I’m so glad the global economic system is in the hands of capitalists who wouldn’t take the same due diligence over $26m that I take over €20 based on a call from my “bank”.

You know what I do? I hang up & call them back on the number I have.

Someone pay me 6-7 figures for being smarter.

But ofc they’re the ones being fleeced. Banks and investment firms etc. have run for years on moving money around and making dodgy deals and…most importantly…not asking questions.

Of course they’re gonna get scammed by ppl pretending to be the big wigs who routinely ask for sus shit like this anyway, and don’t want a trail caused by minions asking for ridiculous things like verification.

3 notes
