#cognitive bias

Text

The Curse finale interpretation

Ash's ascent/death, parallels to pregnancy, and "lived experience" in The Curse

There was a parallel between the way nobody believed or understood Asher when he was stuck in the tree and the way pregnant people are treated while they are in labor (or even how women are treated in medical settings in general).

Ash's ascent/death

Nobody except Whitney, who witnessed him floating inside the house, and Moses, who saw him float up into the tree, would believe Ash when he said he couldn't come down. Everyone who sees him projects their own interpretation of Ash's experience and intentions.

Dougie thinks Ash is running from his responsibilities because Dougie's dad did the same, and (from Dougie's POV), some men panic or even run away from their responsibilities once their partner is in labor or gives birth.

The neighbors from the community think that Ash and Dougie must be filming something because that's their experience with these outsiders; they are TV people and act strangely, which can be explained by assuming that any weird behavior is a part of making a TV show. This explanation is also the best that they have for how Ash could possibly defy physical law (because it really isn't reasonable to assume that he just is breaking physical law in some way).

Ash repeatedly tells them that he will fly up. He tries his best to explain what he is going through, and he isn't doing the best job, probably because he's extremely afraid that he might die. He repeatedly tells Dougie and the first responders what he needs from them, and nobody listens. They think that Ash is delusional and that everyone else has a better understanding of the situation and therefore knows what to do.

Connection to pregnancy

I think some of Ash's experience can be seen as analogous to what pregnant women (and women in medical settings in general) experience. Historically, doctors have been male, and they obviously have never been pregnant or gone into labor, and studies show that even women healthcare providers dismiss women and minorities in medical settings (it has to do with socialized biases in everyone, which I will come back to).

These professionals often dismiss a pregnant person's self-report of needing help, and a CDC report shows that 1 in 5 women report medical mistreatment while giving birth:

Approximately one in five (20.4%) respondents reported experiencing at least one type of mistreatment. The most commonly reported experiences of mistreatment were being ignored by health care providers, having requests for help refused, or not responded to (9.7%); being shouted at or scolded by health care providers (6.7%); having their physical privacy violated (5.1%); and being threatened with withholding of treatment or being forced to accept treatment they did not want (4.6%).

The same report found that the poorer the woman or more marginalized her background, the more risk of facing mistreatment:

Overall, 28.9% of respondents reported experiencing at least one form of discrimination during maternity care (Table 3), with highest prevalences reported by Black (40.1%), multiracial (39.4%), and Hispanic (36.6%) respondents. Overall, the most commonly reported reasons for discrimination were age (10.1%), weight (9.7%), and income (6.5%); reasons varied by race and ethnicity.

Initially Whitney planned to go to what was implied to be a better hospital. It feels like the show maybe wanted the viewer to expect that Whitney would die due to being at a "poor" hospital (and maybe she did; the finale went full magical realism, imo). Benny and Nathan probably expected that viewers would immediately think or even assume that this would happen (drawing from our own biases, even if they are informed by statistics), which makes me think that Ash's experience is analogous to pregnant people's medical mistreatment.

In these medical settings, doctors frequently ignore a pregnant person's self-reports or requests for help, and instead, the doctors and medical staff (regardless of gender) tend to think that they know better or that the pregnant person is delusional/hormonal/emotional/etc. They dismiss their lived experience. Doctors have historically been male, so they have NO experience being pregnant, but they think they know better than the pregnant person, and even women who have been pregnant cannot speak for every woman. It is not rational to take your own experiences and extrapolate them to everyone else (which has been a common theme in the show: making assumptions based off of limited experience or socialized biases).

Like pregnant people facing medical mistreatment, Ash was ignored by Dougie and the first responders (standing in for the health care providers in the report) and had requests for help refused or not responded to; he was shouted at or scolded by Dougie for running from the responsibilities of becoming a father; and he had treatment withheld (the anchored net that he repeatedly begged for) and was forced to accept treatment he did not want (the tree branch cut off, sending him to his death).

Lived experience, hermeneutical gaps, and epistemic injustice

OKAY. So this comes back to (what I have taken to be) the overall recurrent theme of The Curse: lived experience, hermeneutical gaps/injustice, and testimonial injustice (which are forms of epistemic injustice, for anyone who is interested in learning more about this).

Hermeneutical gaps occur when a person or group lacks the concepts or terminology to describe their experience. Such gaps lead to hermeneutical injustice; Miranda Fricker describes hermeneutical injustice as occurring

when a gap in collective interpretive resources puts someone at an unfair disadvantage when it comes to making sense of their social experiences. An example of the first might be that the police do not believe you because you are black; an example of the second might be that you suffer sexual harassment in a culture that still lacks that critical concept . . . hermeneutical injustice is caused by structural prejudice in the economy of collective hermeneutical resources.

Before the term 'sexual harassment' came to be, people impacted by such harassment didn't have the concepts or terminology to be able to describe their experience or what they were going through; they were often dismissed as just being flirted with, or they didn't even discuss their experience because, even though they felt like something was wrong, they didn't have the concepts to articulate their experience, particularly to groups who do not have such experiences.

Here, Fricker describes hermeneutical injustice as:

. . . someone has a significant area of their social experience obscured from understanding owing to prejudicial flaws in shared resources for social interpretation . . . The wrong is analysed in terms of a situated hermeneutical inequality: the prejudicial flaws in shared interpretive resources prevent the subject from making sense of an experience which it is strongly in her interests to render intelligible.

So hermeneutical gaps (lack of conceptual resources [words or formed concepts] to describe experience) lead to hermeneutical injustice (where a person's experience is misinterpreted in a way that leads to harm or testimonial injustice).

Testimonial injustice occurs when one party (person or group) dismisses the credibility of another group (basically treating the marginalized person as though they are not a true knower).

An example might be Fernando trying to be heard about his knowledge of the community violence. Whitney dismisses him, thinking that she knows more about systemic issues. Ash takes advantage of this kind of injustice when he tries to cover his lie that Abshir, Nala, and Hani live in transitional housing once they bought the property they live in. Whitney called out the inconsistency, and Ash decided to exploit the lack of credibility marginalized people are usually extended. He says something like "honestly I don't know with them they say one thing then another," implying that they are dishonest.

Connecting Ash's ascent/death and medical mistreatment of pregnant people with overall themes in The Curse

ANYWAY. Pregnant people in labor go through a unique experience, and sometimes they lack the concepts necessary to explain their experience in a way that medical professionals will "understand" or take seriously (hermeneutical gap leading to hermeneutical injustice). Further, medical professionals dismiss a pregnant person's testimony and treat them like they are not credible while the medical professionals work from their own assumptions or formal medical knowledge (testimonial injustice).

Asher does not have the concepts to describe what he's going through. Nobody has experienced what he experienced, and the experience is new to him, so he doesn't know how to convey what he experiences in a way that Dougie and the First Responders will understand. Further, Dougie and the First Responders dismiss Ash's testimony and treat him like he's not credible while they work from their own assumptions or ascriptions of Ash's intentions.

Throughout the show, our main characters have made assumptions about poor people, natives, and their own employees. Many of these assumptions arise out of dismissing or discrediting the experiences of others in favor of their own interpretation of events or others' intentions. Whitney (and Ash) thinks she knows what's best for Española, even though she lacks the lived experience or even the proper educational experience to understand the complex nature of amending systemic injustice. She is like the medical professionals and First Responders who do not listen to the lived experiences (self-reports) of what people want or need.

This behavior necessarily implies that the people she's helping don't know what's best for themselves, which implies that Whitney has some kind of expertise that qualifies her to intervene on their behalf. She actually doesn't; she has no qualification other than she happens to have rich parents, which doesn't really qualify a person for any kind of job, especially one as complicated as amending economic or social injustice.

I didn't expect there to be growth on behalf of the characters (largely because people have pointed out that Safdie brothers projects rarely involve any kind of meaningful growth or resolution; they have bleak outcomes), but in the finale, I thought that Whitney (and Ash) had grown. She expresses jealousy and bitterness that Cara was receiving national attention for leaving the art scene while her and Ash's show wasn't even aired; it ended up being direct-to-app content. She uncharitably criticizes Cara for disliking exploitative collectors, and Whit says that she thinks that Cara quit because no one bought her work. Ash jokes that if Whit quits her project to work in a massage parlor, maybe people will write about her too. Whit bitterly jokes back that she would need some kind of cultural sob story, like saying she was making a statement on the Holocaust. Ash says he knows that she's making a joke that selling her art retraumatized her, but he goes on to point out that native people have gone through a lot, says that he fully understands where Cara is coming from, and adds that people process tragedy in their own way (discussing Mel Brooks). Whitney finally concedes that she probably shouldn't be talking the way she is and that she doesn't have that lived experience. He assures her that he considers her Jewish (and that she can make such jokes), but I think the takeaway is that, on some level, Whitney has gained some self-awareness and realizes that her experiences shouldn't inform the way she interprets other people's choices and intentions.

The concepts I discussed here might also be connected to the Dunning-Kruger Effect, which is the phenomenon that people (at any level of intelligence or education) learn something and think that they have a better understanding of what is going on than they actually do. When people (like Whitney) decide to act on such false assumptions of self-evaluation, they are likely to make mistakes or perpetuate injustice.

tl;dr: the real curse (imo) is the insidious implicit biases that are socialized into us and lead us to make assumptions about others' experiences and intentions. These assumptions ultimately create barriers that limit social understanding and social progress. If we all take a moment to examine why we reason as we do or where we get our ideas about people who don't share our ethnic, economic, gendered, religious, etc. background, we might find that we are missing the necessary lived experience (a hermeneutical gap) to understand where they might be coming from. Instead of assuming intent or competency or dismissing or being suspicious, we should all charitably interpret others to try to assume the best in and most of other people. It is what we would want others to do for us. Performing this kind of empathy will ultimately lead to developing the necessary empathy to overcome such biases by habit alone, which will create a more compassionate, empathetic, and understanding world, while also deepening and enriching our own lives and the lives of others by celebrating our plurality.

When we allow certain ideas into our head, they become very real to us, and when we act on those ideas without examining them carefully, those false beliefs can cause real harm.

#the curse#nathan fielder#benny safdie#emma stone#a24#showtime#epistemic injustice#hermeneutical gap#hermeneutical injustice#lived experience#spoilers#the curse explained#the curse finale explained#green queen#the curse s1e10#implicit bias#cognitive bias#dunning kruger

72 notes


Note

I would love if you got started on the Benjamin Franklin effect, please fill me in

Oh dear how did I miss this lil prod from days ago to ramble on about something I mentioned in my tags?! I never miss a chance to ramble! No time like the present I suppose.

As anyone who’s followed me too long will well know, I love a cognitive bias or lil human psychology fun fact. This is yet another one I’ve collected over the years. In its shortest form, the Benjamin Franklin Effect is a cognitive bias that “causes people to like someone more after they do that person a favor, especially if they previously disliked that person or felt neutral toward them.”

I’m sure the science behind it is dense but I’m fairly unfamiliar with all that 😂 For me, this is just one of those interpersonal skills I strategically deploy when needed in life and work. As a nonprofit fundraiser/someone who works in philanthropy, I’m constantly asking very “important” or “fancy” people for large sums of money and before said large sums can be asked for (to employ 20 people in feminist work in Texas year round) you usually have to build a positive and trusting relationship. It’s extremely useful to be able to fast track that process, so I ask for the favor of getting a chance to “pick their brains” about something they have expertise in or I ask them to speak at an event, etc. It’s effective!

But this tool has usefulness in SO MANY ways beyond work, too. And if you know about it, you can even be aware when someone is using it on you. The very person who taught me about it…let’s call her Becky, years later, used it on me. Becky and I had experienced a falling out and she had reached out and apologized. We eventually met up to catch up over coffee and the next thing I knew, I had agreed to help give advice to her friend who is also a nonprofit fundraiser. Becky’s friend had a work conundrum and she needed to troubleshoot it with someone like me and there I was agreeing to do Becky a favor and I couldn’t resist being like “I know what you’re doing bitch and it IS working” 😂

As someone who is not naturally the most adept at navigating social situations but who made creating positive relationships w/ other human beings a lifelong special interest approx 30 years ago, it’s so helpful for me. I just keep on collecting ways that you can tangibly build rapport with others to have ready in my back pocket and I can say with certainty this is an effective one when used well.

Clearly I’m not saying to be a manipulative, calculating asshole in life, but understanding how to utilize tools like these can be a huge help when/if you need them.

Anyway, use wisely and enjoy 😘

#Benjamin Franklin effect#cognitive bias#resources#healthy relationships#positive relationships#career stuff

84 notes


Text

Chart of 20 Cognitive Biases That Affect Decision-Making

#psychology#cognition#cognitive bias#bias#biases#cognitive biases#chart#infographic#self help#self care#critical thinking#thinking#problem solving#analysis#life#business#motivation

174 notes


Text

This is your Friendly Reminder to make sure you're actually observing trends in data and not just applying one of the many human cognitive biases that plague everyday discourse and discussion

139 notes


Text

Cognitive Biases

Fundamental attribution error

(FAE, aka correspondence bias)

Tendency to overemphasize personality-based explanations for behaviors observed in others. At the same time, individuals under-emphasize the role and power of situational influences on the same behavior. Edward E. Jones and Victor A. Harris' (1967) classic study illustrates the FAE. Despite being made aware that the target's speech direction (pro-Castro/anti-Castro) was assigned to the writer, participants ignored the situational pressures and attributed pro-Castro attitudes to the writer when the speech represented such attitudes.

Implicit bias

(aka implicit stereotype, unconscious bias)

Tendency to attribute positive or negative qualities to a group of individuals. It can be fully non-factual or be an abusive generalization of a frequent trait in a group to all individuals of that group.

Priming bias

Tendency to be influenced by the first presentation of an issue to create our preconceived idea of it, which we then can adjust with later information.

Confirmation bias

Tendency to search for or interpret information in a way that confirms one's preconceptions, and discredit information that does not support the initial opinion. Related to the concept of cognitive dissonance, in that individuals may reduce inconsistency by searching for information which reconfirms their views (Jermias, 2001, p. 146).

Affinity bias

Tendency to be favorably biased toward people most like ourselves.

Self-serving bias

Tendency to claim more responsibility for successes than for failures. It may also manifest itself as a tendency for people to evaluate ambiguous information in a way beneficial to their interests.

Belief bias

Tendency to evaluate the logical strength of an argument based on current belief and perceived plausibility of the statement's conclusion.

Framing

Tendency to narrow the description of a situation in order to guide to a selected conclusion. The same primer can be framed differently and therefore lead to different conclusions.

Hindsight bias

Tendency to view past events as being predictable. Also called the "I-knew-it-all-along" effect.

Embodied cognition

Tendency to have selectivity in perception, attention, decision making, and motivation based on the biological state of the body.

Anchoring bias

The inability of people to make appropriate adjustments from a starting point in response to a final answer. It can lead people to make sub-optimal decisions. Anchoring affects decision making in negotiations, medical diagnoses, and judicial sentencing.

Status quo bias

Tendency to hold to the current situation rather than an alternative situation, to avoid risk and loss (loss aversion). In status quo bias, a decision-maker has the increased propensity to choose an option because it is the default option or status quo. Has been shown to affect various important economic decisions, for example, a choice of car insurance or electrical service.

Overconfidence effect

Tendency to overly trust one's own capability to make correct decisions. People tended to overrate their abilities and skills as decision makers. See also the Dunning–Kruger effect.

Physical attractiveness stereotype

The tendency to assume people who are physically attractive also possess other desirable personality traits.

(via: Cognitive bias - Wikipedia)

14 notes


Text

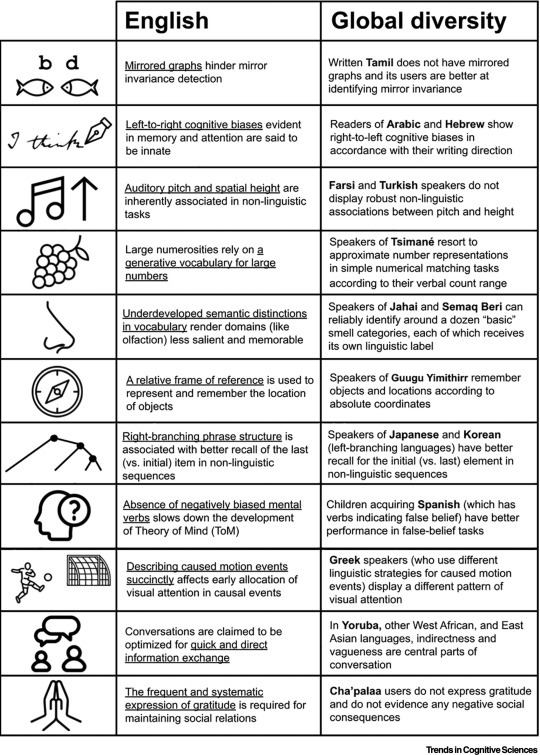

Over-reliance on English hinders cognitive science: Trends in Cognitive Sciences

Here we integrate a diverse body of recent evidence to highlight the theoretical and practical limitations stemming from this Anglocentric bias. We do not presume to be exhaustive, but rather aim to showcase a range of phenomena where over-reliance on English has led cognitive scientists to premature claims of universality (due to the over-sampling of English speakers) or has limited the cognitive constructs being examined (due to the use of English as a meta-language in scientific endeavors).

We consider examples of English use in its broadest possible sense, including the specific nature of its representational format (spoken, written), as well as its vocabulary and grammar, and the interactional style of its users. For each, we illustrate how the default presumptions stemming from English are hampering progress in various areas of cognitive science which, although including the study of language itself, go far beyond it into the cognitive and neuroscientific study of perception, memory, reasoning, social cognition, and more.

107 notes


Note

Hello, I realize I unconsciously blame others for my feelings. I'm trying to better understand the concept that my feelings come from me and not from the outside. I read your guide and you said that what triggers an emotion is different from what causes it. Can you please explain more about the difference between the trigger and the cause? To me, if something triggered something else to happen, it is the same as that thing causing it. Thank you

I'll use a common example. Let's say that you grew up with hot/cold parents. Sometimes they were caring, other times they were mean and harsh. In the end, you never knew what to expect from them, and you came to understand that they were unreliable sources of love. You were always uncertain of whether they truly loved you. How do you think this history would affect your future relationships?

Fast forward to adulthood. You meet a new person and they seem somewhat reserved. To lighten the mood, you start joking around more. They respond with polite smiles, but never laughter. You'd be quick to assume they don't like you. Why? Your negative childhood experiences have led you to adopt skeptical, suspicious, or even cynical beliefs about people.

When your underlying beliefs cause you to view situations in a distorted way, not grounded in reality, it is called a cognitive bias. Cognitive biases are persistent because they make you blind to counter-evidence that would prove your beliefs wrong. People are often unaware of their cognitive biases because they believe that what they are seeing is reality.

Whenever anyone behaves in a way that seems to resemble your parents, cognitive bias leads you to feel the same insecurity you felt as a child. You might be quick to blame people whenever they behave in a way that makes you feel insecure. For example, you might call this new person cold or uncaring when, in fact, they are merely a stoic person. You misinterpret their behavior and then fret about whether they like you even though, objectively, they have done nothing but behave as they normally do, with no negative thoughts or intentions toward you whatsoever. If I had a completely different relationship history than you, a healthier one, I'd have no problem with this new person at all.

When something "triggers" you, it is not causing your problem, but just reminding you of a preexisting problem that remains buried and unresolved. Your feelings of insecurity with this new person are actually caused, at their root, by your own negative memories, distorted perception of reality, faulty beliefs about people, and/or cognitive biases that produced flawed judgment.

21 notes


Text

Book of the Day -

Today’s Book of the Day is Thinking, Fast and Slow, written by Daniel Kahneman and first published in 2011 by Farrar, Straus and Giroux.

Daniel Kahneman is an Israeli-American psychologist whose main research topics have been the psychology of judgment and decision-making, and behavioural economics, for which he was awarded the 2002 Nobel Memorial Prize in Economic Sciences (shared with Vernon L.…


#availability heuristic#behavioural economics#behavioural psychology#Book Of The Day#book recommendation#book review#bookstagram#booktok#cognitive bias#cognitive science#Daniel Kahneman#decision-making#Economics#endowment effect#loss aversion#Neuroscience#prospect theory#Psychology#Raffaello Palandri

12 notes


Text

I have been methodically, slowly working my way through The Talos Principle 2 and it's reminded me why I love puzzle games so much.

Most of the puzzles in Talos 2 aren't a big deal (at least for me). But every so often I get stuck, and it's always one of two reasons.

Finicky positioning - these are annoying, because I know what I need to do, it just takes me a while to get the positioning just right. Rare in Talos. I encountered this much more frequently in The Entropy Centre, and to some degree in Portal 2.

Mental Block - These are the interesting ones, where my preconception of how a puzzle element works just straight-up blinds me to the solution.

The mental blocks are honestly fascinating, because it starts from flat fury at an apparently impossible task, and then--once I convince myself to walk away for a bit--I eventually realize that some basic assumption I had was just wrong.

It's a weird feeling in the brain. And not entirely a good one? But it's not bad, it's just... "oh that would have been obvious had I not been convinced I already knew how that worked."

7 notes


Text

Have you ever learned about something – be it a new product or a certain phrase – and suddenly started to notice it everywhere, all the time? If so, you may have encountered the Baader–Meinhof phenomenon, also known as the frequency illusion.

Though these sorts of terms are usually named after the person who coined them, this one was named after a German terrorist group, the Baader-Meinhof gang. In 1994, Terry Mullen sent a letter to a Minnesotan newspaper explaining that he had been talking about the gang one day to a friend. Despite not having heard anything about them for years, the next day the friend found an article about the gang in that day's papers and started seeing information about them all the time.

You may have had similar experiences, with a common one being the 11:11 illusion, where people notice that when they look at a clock, the time is 11 minutes past 11 more often than they'd expect it to be. We know there aren't more than two 11:11s in a day, so why would you feel you are seeing that specific time more than any others?

Well, you probably aren't, and it's likely just a cognitive bias at play. Say someone tells you that you're more likely to see 11:11 – you will then be more likely to actually notice it when you happen to look at the clock and find it's 11:11. You may look at the clock many times during the day, but think nothing of all the other times you glance at it, while focusing on the times when you did see 11:11. As a result, you may think you see it more frequently.
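To make the selective-noticing argument concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the number of glances per day, the idea that an 11:11 glance is always remembered, and the made-up `recall_other` probability that an unremarkable glance is remembered at all. None of these figures come from the article.

```python
import random

MINUTES_PER_DAY = 24 * 60
# On a 12-hour clock face, 11:11 shows up twice a day (11:11 and 23:11).
TARGET_MINUTES = {11 * 60 + 11, 23 * 60 + 11}


def simulate(glances_per_day=50, days=365, recall_other=0.02, seed=0):
    """Return (true share, remembered share) of glances that landed on 11:11.

    recall_other is a hypothetical probability of remembering an unremarkable
    glance; glances at 11:11 are assumed to always be remembered.
    """
    rng = random.Random(seed)
    total = hits = remembered = 0
    for _ in range(glances_per_day * days):
        minute = rng.randrange(MINUTES_PER_DAY)  # glance at a random time of day
        total += 1
        if minute in TARGET_MINUTES:
            hits += 1
            remembered += 1  # salient glance: always sticks
        elif rng.random() < recall_other:
            remembered += 1  # forgettable glance: rarely sticks
    true_share = hits / total
    remembered_share = hits / remembered if remembered else 0.0
    return true_share, remembered_share


true_share, remembered_share = simulate()
print(f"true share of glances at 11:11:  {true_share:.4f}")
print(f"share among remembered glances:  {remembered_share:.4f}")
```

Under these assumptions the clock shows 11:11 no more often than chance predicts (roughly 2 minutes out of 1,440), but because the forgettable glances mostly drop out of memory, 11:11 makes up a much larger share of what is remembered.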

Academics aren't immune to the bias, with linguist Arnold Zwicky coining the term "frequency illusion" in a 2005 blog post.

"Here at Stanford we have a group working on innovative uses of all, especially the quotative use, as in the song title 'I'm like 'yeah' and she's all 'no'," Zwicky wrote in the blog post, explaining that the group believed this usage was common, especially amongst young Californian women.

"The undergraduates working on the project reported that they had friends who used it 'all the time'. But in fact, when the undergrads engage these friends in (lengthy) conversation, tape the conversations, transcribe them, and then extract occurrences of quotatives, the frequency of quotative all is very low (quotative like is really really big)," he continued. "There are several interpretations for this annoying finding, but we're inclined to think that part of it is the Frequency Illusion on our part."

One fun example could be the "Mariko Aoki Phenomenon", where you need to poop shortly after entering a bookstore. Though there are other explanations, one is that after Mariko Aoki wrote about her own experiences of needing to poop in bookstores (itself probably because of the frequency illusion) others began to notice when they needed to go too, of course disregarding instances where this didn't happen.
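The counting exercise described in the quoted blog post above (transcribe conversations, then extract quotative "all" and "like") can be roughly sketched in code. This is only an illustration, not the Stanford group's method: the transcript lines are invented, and the pattern is a deliberately crude assumption (a form of "be", then "all" or "like", then a comma) that would miss or misclassify plenty of real speech.

```python
import re
from collections import Counter

# Hypothetical transcript lines, invented for illustration only.
TRANSCRIPT = [
    "I'm like, yeah, and she's all, no.",
    "He was like, what are you doing?",
    "We ate all the pizza.",
    "And they were like, fine.",
]

# Crude assumption: a form of "be" followed by "all" or "like" and then a comma.
QUOTATIVE = re.compile(r"(?:'m|'s|'re|\bwas|\bwere)\s+(all|like),", re.IGNORECASE)


def count_quotatives(lines):
    """Count quotative 'all' and 'like' matches across transcript lines."""
    counts = Counter()
    for line in lines:
        for match in QUOTATIVE.finditer(line):
            counts[match.group(1).lower()] += 1
    return counts


counts = count_quotatives(TRANSCRIPT)
print(counts)
```

The point of counting rather than recalling is the same one the quote makes: an impression that something is said "all the time" can be checked against an actual tally.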

4 notes


Text

MCX Rants: Burden of Proof (general), specifically: religious/magical claims

Not everyone cares about that level of skepticism, and that's the reality of humans being unique!! I'm not one to gatekeep, as I hope is clear with everything I do in life... hahaha. So what you choose to believe in or not is of course all you, and I support you! My problem is more with people who use their magical thinking to inform their voting choices, which often has measurable negative consequences for minorities and people of color.

I am accepting of what has been said (in a redacted convo previous to this about belief in paganism), except I must press on this specifically: burden of proof isn't something you can ignore or not believe in.

The burden of proof is the foundation of the American legal system… “innocent until proven guilty” is burden of proof (i.e., if I claim you committed a crime against me, I must prove it; otherwise you could go to jail based on my claim alone and would have to defend yourself even if I have no evidence).

Without burden of proof, we'd just have a country of accusations without evidence, and anyone could say anyone committed a crime and they'd be charged for it.

Burden of proof is the obligation to provide evidence to support one's argument or claim in a discussion or legal context. It rests on the party making the assertion to substantiate their position.

While perspectives on burden of proof may vary, it's a crucial concept in discussions. It ensures a fair and rational exchange by assigning the responsibility of supporting claims. Without it, it becomes challenging to distinguish between valid and baseless arguments.

#logic#reasoning#fallacy#cognitive bias#burden of proof#truth#claim#mycology#magic mushies#microbiology#mold#60s psychedelia#lgbtqia#lgbtqia2s#lgbtqia2s+#myc#enby#queer#trans#non binary

2 notes


Text

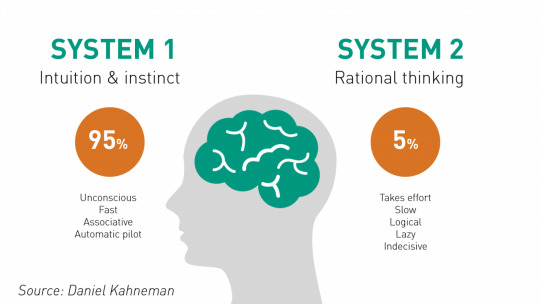

Systems of thinking

There are 2 systems that are responsible for our thinking processes. System 1 is a near-instantaneous, quick process that happens automatically and is based on intuition and previous knowledge. System 2, on the other hand, is slower and requires a mental effort in order to produce the answer.

What are cognitive biases?

While system 1 is very efficient, its intuitive nature makes it prone to errors. It is often based on assumptions (often called heuristics) that do not match the complexity of the real world. Heuristics are mental shortcuts that generate a probable answer that would fit the overall picture, but they are rarely accurate. The patterns that are formed due to heuristics are referred to as cognitive biases. A cognitive bias is a systematic error in thinking that occurs when information is being processed and interpreted. Some cognitive biases are independent of heuristics.

#brain#neuroscience#science#biology#psychology#cognition#cognitive bias#cognitive biases#systems of thinking#system 1#system 2#system 1 vs system 2

116 notes

Text

Be Aware of #CognitiveDistortions

Every day I have a reminder on my phone set to go off that says “I am Aware of Cognitive Distortions”. It only goes off once per day. I could probably use another two or three…dozen reminders over the course of the day, as could most people.

Cognitive distortion, or perceiving reality inaccurately, is as natural and normal and easy and automatic as breathing. Is it possible for people to not…

View On WordPress

#cognitive bias#cognitive distortion#health#human nature#life#mental health#personal development#psychology

2 notes

Text

Okay I know I said I wasn't gonna talk about Simon but like, this is what's written on the Satanic Panic Wikipedia page.

The lack of credible evidence for the more extreme interpretations often being seen as evidence of an effective conspiracy rather than an indication that the allegations are unfounded.

I was never able to express exactly what the problem with his "experiment" was. It's this.

8 notes

Link

By: Steven Pinker

Published: Jan 10, 2023

When I tell people that I teach and write about human rationality, they aren’t curious about the canons of reason like logic and probability, nor the classic findings from the psychology lab on how people flout them. They want to know why humanity appears to be losing its mind.

Why do people believe in outlandish conspiracy theories, such as that Covid-19 was a plot by Bill Gates to implant trackable microchips in our bodies? Or in blatant fake news, such as that Joe Biden called Trump supporters “dregs of society”? Or in paranormal woo-woo, like astrology, extra-sensory perception (ESP), and spiritual energy in pyramids and crystals?

It won’t do to give the Spockian answer that humans are simply irrational. Our hunter-gatherer ancestors lived by their wits, outsmarting animals with traps, poisons, and ambushes, while protecting themselves from the elements with fire, clothing, and shelter. It was this ingenuity that allowed mankind, according to the definition by Ambrose Bierce, to “infest the whole habitable earth and Canada.” Since then our species has plumbed the nature of matter, life, and mind, has slipped the surly bonds of Earth to explore other planets, and has blunted the scourges of war, pestilence and famine, doubling our life expectancy. Even the everyday feats of holding a job, keeping food in the fridge, and getting the kids clothed, fed, and off to school on time require feats of reasoning that are beyond the ken of our best AI.

How, then, can we explain the pandemic of poppycock? My best answer comes in four parts.

The first is rooted in the very nature of rationality. Reason, almost by definition, is inference deployed in service of a goal; no one gets rationality credit merely for enumerating true but useless propositions. But that goal need not be an objective understanding of the world. It can also be to win an argument in which the stakes matter to you. As Upton Sinclair pointed out, “It is difficult to get a man to understand something when his salary depends upon his not understanding it.”

Or reason can be used to show how wise and moral your group is—your religion, tribe, your political sect—and how stupid and evil a rival one is. This “myside bias,” explained in Keith Stanovich’s The Bias That Divides Us, appears to be the most pervasive bias among the hundreds documented by cognitive science and social psychology. And there’s a perverse rationality behind it. It’s far from irrational for an individual to endorse a belief that gains them status as a hero for their side while avoiding ostracism as a traitor. The problem is that it’s irrational for a society as a whole to lurch between one faction’s dogmas and another’s rather than collectively arrive at the most accurate understanding of reality. These conflicting incentives place us in a tragedy of the rationality commons.

-

A second contributor to irrationality is that human reasoning is guided by deeply rooted folk intuitions, the evolutionary legacy of having to figure out the hidden laws of reality before the scientific revolution gave us a sound method for doing so. Those intuitions, while indispensable for navigating everyday human life, are incommensurate with our best modern understanding of the world. The mismatch makes us vulnerable to superstition and pseudoscience.

For example, we are intuitive dualists: we feel that people are composed of two things, a body and a mind. We don’t treat others as robots or meat puppets, but assume they have beliefs and desires and sentience like our own, which we ascribe to an intangible mind or soul, a ghost in the machine. (This contrasts with the dominant scientific understanding that mental life arises from neurons firing in patterns.) From there it’s a short step to believing that minds can part company with bodies, and so to believe in spirits, souls, ghosts, an afterlife, reincarnation, and ESP.

We are also intuitive essentialists, sensing that living things contain an invisible essence or lifeblood that gives them their form and powers. This instinct allowed our ancestors to discern the continuity beneath the differing appearances of a single species (such as its eggs, seeds, flowers, or larvae) and to extract foods, medicines, and poisons from their tissues. But from essentialist inklings it’s a short step to intuit that disease must be caused by an adulteration of one’s essence by a foreign contaminant. That in turn makes it natural to recoil from vaccines, which, after all, introduce a bit of an infectious agent deep into the tissues of a healthy body. It invites homeopathy and herbal remedies, which infuse us with tinctures that seem to carry the essence of a healthful living thing. And it inspires the many forms of quackery in which people are subjected to purging, bloodletting, fasting, sweating, cupping, and other means of “getting rid of toxins.”

Finally, we are intuitive teleologists, aware that our own plans, tools, and artifacts are designed with a purpose. As the 19th-century theologian William Paley pointed out, when you find a watch lying on the ground, you correctly infer that it was designed by a watchmaker. But from there it’s a short step to inferring that the universe has a purpose, and so to believe in astrology, creationism, synchronicity, and the philosophy that “everything happens for a reason.” That mentality can also lead to conspiracy theorizing, abetted by the truth that our enemies really may plot out ways to harm us, and that in being vigilant against them, false positives may be less costly than false negatives.

-

The third key to public irrationality is to consider how we unlearn these folk intuitions and acquire a more sophisticated understanding. It’s certainly not by each of us exercising our inner genius. It’s by trusting legitimate expertise: scientists, journalists, historians, government record-keepers, and responsible, fact-checked authors. After all, few of us can really justify our beliefs by ourselves, including the true ones. Surveys have shown that creationists and climate change deniers are, on average, no less scientifically literate than believers (many of whom attribute warming to the ozone hole, toxic waste dumps, or plastic straws in the ocean). The difference is political tribalism: the farther to the right, the more denial.

For my part, I’ve been vaccinated against Covid five times, but my understanding of how the vaccines work extends little deeper than “Something something mRNA antibodies immune system.” I basically trust the people in the white coats who say they work. Flaky beliefs, in contrast, persist in people who don’t trust the public health establishment—who see it as just another faction, one that competes against their trusted preachers, politicians, and celebrities. In other words, we all have to trust authorities; the difference between believers who are probably right and those who are almost certainly wrong is that the authorities the first group listens to engage in practices and belong to institutions that are explicitly designed to sift truths from falsehoods.

-

The last piece of the puzzle of why people believe outlandish things is: It depends on what you mean by “believe.” George Carlin observed, “Tell people there’s an invisible man in the sky who created the universe, and the vast majority will believe you. Tell them the paint is wet, and they have to touch it to be sure.” The distinction was drawn more formally by the social psychologist Robert Abelson in his classic article “Beliefs Are Like Possessions,” which distinguished between “distal” and “testable” beliefs.

Touching the paint and other empirical probing is how we navigate the zone of reality in which we live our lives: the physical objects around us, the people we deal with face to face, the memory of our interactions. Beliefs in this zone are testable, and the compos mentis among us hold them only if they are likely to be true. They have no choice: reality, which doesn’t go away when you stop believing in it, punishes people for delusions.

But people also hold beliefs in spheres of existence that are far from their everyday experience: the distant past, the unknowable future, faraway peoples and places, remote corridors of power, the microscopic, the cosmic, the counterfactual, the metaphysical. Our lived experience gives us no grounds for beliefs about how the universe began, or when humans first appeared, or what makes it rain, or why bad things happen to good people, or what really goes on in the Oval Office or Microsoft headquarters or the dining rooms of Davos. Nor, except for a few movers and shakers and deciders, do our beliefs on these matters make any difference.

But that doesn’t mean that people abstain from believing things about these imponderables at all. They can adopt beliefs about them that are entertaining, or uplifting, or empowering, or morally edifying. Whether beliefs in this mythology zone are objectively “true” or “false” is, to them, unknowable and hence irrelevant.

Here again there’s a kind of historical rationality to this insouciance. Until modern times—the scientific revolution, the Enlightenment, systematic historiography and journalism, public records and datasets—the truth about these remote realms really was unknowable, and mythology was as good a kind of belief as any. This, I suggest, is the default human intuition when it comes to beliefs about these recondite matters. To appreciate the naturalness of this mythological mindset, you don’t have to invoke hunter-gatherers on the Pleistocene savannah. You just have to think about humanity during the vast majority of its existence, and about the vast majority of people today who have not signed on to the Enlightenment conviction that all of reality is in principle knowable by the scientific-analytical mindset.

-

The result is that there are as many kinds of mythological belief as there are questions about the world beyond our senses. The most obvious is religion, which most of its adherents cheerfully concede is a matter of faith, rather than of reason or evidence.

Another consists of national myths about the glorious martyrs and heroes who founded our great nations. The real historians who expose their feet of clay are as popular as a skunk at a garden party.

Many conspiracy theories, too, are entertained as engrossing myths rather than credible hypotheses. Believers in vast nefarious conspiracies, such as 9/11 “truthers,” hold their meetings and publish their exposés openly, apparently unconcerned that the omnipotent regime will crack down on brave truth-tellers like them.

QAnon might be likened to a live action role-playing game, with fans avidly trading clues and following leads. Its progenitor, Pizzagate (according to which Hillary Clinton ran a child sex ring out of the basement of a DC pizzeria), also had a make-believe quality. As the cognitive scientist Hugo Mercier has pointed out, virtually none of the adherents took steps commensurate with such an atrocity, like calling the police. (One of them did leave a one-star review on Google.) With the exception of the fanatic who burst into the restaurant with his guns blazing to rescue the children, among Pizzagate believers the proposition “I believe that Hillary Clinton ran a child sex ring” can really be translated as “I believe that Hillary Clinton is so depraved that she is capable of running a child sex ring”—or, perhaps even more accurately, “Hillary…Boo!” Beliefs outside our immediate experience, then, can be expressions of moral and political commitments rather than assertions of factual states of affairs.

Many of us are nonplussed by this way of thinking. It’s one thing to believe that Hillary Clinton is a morally compromised person—everyone is entitled to an opinion—but it’s quite another thing, and completely unacceptable, to express that opinion as a fabricated factual assertion.

But it’s our mindset that is exotic and unnatural. For many of us, it’s the dividend of a higher education which has imparted the sense that there is a fact of the matter about states of the world; that even if we don’t know it, there are ways of finding out; and that, as Bertrand Russell put it, “It is undesirable to believe a proposition when there is no ground whatsoever for supposing it is true.” Indeed, one could argue that this mindset is the most important dividend of higher education.

-

Or at least, it used to be. Here's another candidate for a mythology zone: the sacred creeds of academic and intellectual elites. These include the belief that we are born blank slates, that sex is a social construction, that every difference in the social statistics of ethnic groups is caused by racism, that the source of all problems in the developing world is European and American imperialism, and that repressed abuse and trauma are ubiquitous.

Many observers have been taken aback by the repression of dissent from these beliefs in contemporary universities—the deplatformings, the cancelings, the heckler’s vetoes, the defenestrations, the multi-signatory denunciations, the memory-holing of journal articles. Universities, after all, are supposed to be the place in which propositions are interrogated and challenged and complexified and deconstructed, not criminalized. Yet these beliefs are treated not as empirical hypotheses but as axioms that decent members of the community may not challenge.

Academic cancel culture may be a regression to the default human intuition that distal beliefs are no more than moral expressions—in this case, opposition to bigotry and oppression. But the default intuition has also been intellectualized and fortified by the doctrines of relativism, postmodernism, critical theory, and social constructionism, according to which claims to objectivity and truth are mere pretexts to power. This marriage of intuition and theory may help us make sense of the mutual unintelligibility between Enlightenment liberal science, according to which beliefs are things about which decent people may be mistaken, and critical postmodern wokeism, according to which certain beliefs are self-incriminating.

-

Can anything be done? Explicit instruction in “critical thinking” is a common suggestion. These curricula try to impart an awareness of fallacies such as arguments from anecdote and authority, and of cognitive biases such as motivated reasoning. They try to inculcate habits of active open-mindedness, namely to seek disconfirmatory as well as confirmatory evidence and to change one’s mind as the evidence changes.

But jaded teachers know that lessons tend to be forgotten as soon as the ink is dry on the exam. It’s hard, but vital, to embed active open-mindedness in our norms of intellectual exchange wherever it takes place. It should be conventional wisdom that humans are fallible and misconceptions ubiquitous in human history, and that the only route to knowledge is to broach and then evaluate hypotheses. Arguing ad hominem or by anecdote should be as mortifying as arguing from horoscopes or animal entrails; repressing honest opinion should be seen as risible as the doctrines of biblical inerrancy or Papal infallibility.

But of course we can no more engineer norms of discourse than we can dictate styles of hairstyling or tattooing. The norms of rationality must be implemented as the ground rules of institutions. It’s such institutions that resolve the paradox of how humanity has mustered feats of great rationality even though every human is vulnerable to fallacies. Though each of us is blind to the flaws in our own thinking, we tend to be better at spotting the flaws in other people’s thinking, and that is a talent that institutions can put to use. An arena in which one person broaches a hypothesis and others can evaluate it makes us more rational collectively than any of us is individually.

Examples of these rationality-promoting institutions include science, with its demands for empirical testing and peer review; democratic governance, with its checks and balances and freedom of speech and the press; journalism, with its demands for editing and fact-checking; and the judiciary, with its adversarial proceedings. Wikipedia, surprisingly reliable despite its decentralization, achieves its accuracy through a community of editors that correct each other's work, all of them committed to principles of objectivity, neutrality, and sourcing. (The same cannot be said for web platforms that are driven by instant sharing and liking.)

If we are to have any hope of advancing rational beliefs against the riptide of myside bias, primitive intuitions, and mythological thinking, we must safeguard the credibility of these institutions. Experts such as public health officials should be prepared to show their work rather than deliver pronouncements ex cathedra. Fallibility should be acknowledged: we all start out ignorant about everything, and whenever changing evidence calls for changing advice, that should be touted as a readiness to learn rather than stifled as a concession of weakness.

Perhaps most important, the gratuitous politicization of our truth-seeking institutions should be halted, since it stokes the cognitively crippling myside bias. Universities, scientific societies, scholarly journals, and public-interest nonprofits have increasingly been branding themselves with woke boilerplate and left-wing shibboleths. The institutions should not be surprised when they are then blown off by the center and right which make up the majority of the population. The results have been disastrous, including resistance to climate action and vaccination.

The defense of freedom of speech and thought must not be allowed to suffer that fate. Its champions should have at their fingertips the historical examples in which free speech has been indispensable to progressive causes such as the abolition of slavery, women’s suffrage, civil rights, opposition to the war in Vietnam, and gay rights. They should go after the censors on the right as vigorously as those on the left, and should not give a pass to conservative intellectuals or firebrands who are no friends to free speech, but are merely enemies of their enemies.

The creed of universal truth-seeking is not the natural human way of believing. Submitting all of one’s beliefs to the trials of reason and evidence is cognitively unnatural. The norms and institutions that support this radical creed are constantly undermined by our backsliding into tribalism and magical thinking, and must constantly be cherished and secured.

This essay is adapted from a presentation given to the Stanford Academic Freedom Conference in November 2022.

#Steven Pinker#rationality#rational thought#irrationality#religious irrationality#irrational#wokeness#cult of woke#woke activism#woke#wokeism#wokeness as religion#cognitive bias#conspiracy theory#conspiracy theorists#conspiracy theories#academic corruption#ideological capture#religion is a mental illness

15 notes
