#big data and analytics

Mastering HR Analytics for Workforce Management & Productivity

HR analytics is crucial for monitoring and improving the workforce's contribution to business success. Organizations are always looking for skilled employees to achieve their goals, and they rely heavily on human resource teams or staffing agencies to hire the best ones.

Beyond talent acquisition, HR teams work with company administrators to make informed workforce planning and management decisions. They use people management software and big data analytics to view and analyze key metrics related to employee productivity.

If you are curious about how data science empowers HR professionals worldwide, read on for an overview of human resource data analytics.

What is Human Resource Analytics?

HR analytics is also called people analytics, workforce analytics, or talent analytics.

It involves gathering, analyzing, and managing data to make informed decisions about talent acquisition, retention, and overall team productivity.

Enterprises that use business automation tools for leave and attendance management, employee retention, and recruitment often deploy data analytics in human resource management.

What are the Types of HR Analytics?

There are four types of HR analytics techniques: descriptive analytics, diagnostic analytics, predictive analytics, and prescriptive analytics.

Descriptive analytics: It involves analyzing historical data, such as leave taken, attendance, and active hours, often through data visualization, to identify patterns and gain insights. It helps HR teams understand past events without making forecasts about the future.

Diagnostic analytics: This technique goes beyond descriptive analytics to uncover the underlying reasons behind issues identified in historical data. It covers metrics such as payouts, leave, skill training, and net income per employee.

Predictive analytics: It helps anticipate the outcomes of processes based on past and present data, leading to more efficient recruitment and employee engagement strategies. Many recruitment consultants even hire data science experts for predictive modeling and insights.

Prescriptive analytics: It provides recommendations for future actions based on predictive analytics and other data-related insights.

What are the Benefits of Data Analytics in HR?

HR analytics enhances talent acquisition by leveraging big data trends. It contributes to workforce planning, employee experience, and training.

Here are a few other advantages of HR analytics for recruitment consultants and enterprises working with them:

Employee Retention Rates

Data analytics provides insights to improve recruiting and onboarding processes. It helps HR teams understand employee satisfaction, turnover rates, and misconduct.
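Turnover is usually tracked as a rate. Below is a minimal sketch of one common definition (separations during a period divided by the average headcount over that period, times 100) alongside a simple retention rate. The function names and the sample figures are hypothetical, chosen only for illustration:

```python
def turnover_rate(separations: int, headcount_start: int, headcount_end: int) -> float:
    """Return turnover for a period as a percentage.

    Common definition: separations / average headcount * 100, where the
    average headcount is the mean of the start and end counts.
    """
    average_headcount = (headcount_start + headcount_end) / 2
    if average_headcount <= 0:
        raise ValueError("average headcount must be positive")
    return separations / average_headcount * 100


def retention_rate(stayed: int, headcount_start: int) -> float:
    """Percentage of employees present at the start who are still employed at the end."""
    if headcount_start <= 0:
        raise ValueError("starting headcount must be positive")
    return stayed / headcount_start * 100


# Hypothetical year: 200 staff in January, 220 in December, 21 people left,
# and 185 of the original 200 were still employed at year end.
print(f"Turnover:  {turnover_rate(21, 200, 220):.1f}%")  # 21 / 210 * 100 = 10.0%
print(f"Retention: {retention_rate(185, 200):.1f}%")     # 185 / 200 * 100 = 92.5%
```

Organizations define the period and the headcount basis differently, so it is worth fixing one definition before comparing figures across teams or years.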

Optimized Allocation of Resources

With big data engineering services and analytics, HR managers can easily visualize staff utilization and allocate resources more precisely.

Unified Employee Support

People analytics offers transparency in employer-employee relationships. It uses cloud data engineering solutions to introduce a flexible support channel for employees to connect with HR, share concerns, and get direct updates.

Accelerated Business Growth

HR teams that use big data and analytics are more likely to retain a valuable workforce, which leads to higher productivity. As a result, they can contribute significantly to business growth.

At BluEnt, our data analysts use advanced machine learning algorithms to match the needs of growing organizations.

For HR analytics, we bring certified and experienced industry experts to assist recruitment agencies and HR professionals in decision-making.

Whether you want to elevate your workforce productivity or improve your talent-hunting techniques, enterprise-grade data solutions and analytics can be a game changer for your business. Get in touch to implement big data in your human resource operations.

Nvidia has announced the availability of DGX Cloud on Oracle Cloud Infrastructure. DGX Cloud is a fast, easy and secure way to deploy deep learning and AI applications. It is the first fully integrated, end-to-end AI platform that provides everything you need to train and deploy your applications.

#AI#Automation#Data Infrastructure#Enterprise Analytics#ML and Deep Learning#AutoML#Big Data and Analytics#Business Intelligence#Business Process Automation#category-/Business & Industrial#category-/Computers & Electronics#category-/Computers & Electronics/Computer Hardware#category-/Computers & Electronics/Consumer Electronics#category-/Computers & Electronics/Enterprise Technology#category-/Computers & Electronics/Software#category-/News#category-/Science/Computer Science#category-/Science/Engineering & Technology#Conversational AI#Data Labelling#Data Management#Data Networks#Data Science#Data Storage and Cloud#Development Automation#DGX Cloud#Disaster Recovery and Business Continuity#enterprise LLMs#Generative AI

Big data analytics is at the core of some of the world's most significant industry developments, whether in health care, government, finance, or another field. Continue reading to learn more about big data analytics and its numerous advantages. Big data analytics is the application of advanced analytics to enormous collections of both structured and unstructured data in order to generate important insights for businesses.

#pgdm#big data and analytics#top college in delhi ncr#best management college in greater noida#top business institute in delhi ncr

#bigdata#bigdataanalytics#big data and analytics#bigdataservices#bigdataservicesusa#bigdataservicesindia#bigdataservicesuk

Big Data Analytics Services - Ray Business Technologies

Ray Business Technologies helps clients leverage data analytics using automated cause-effect modeling, decision trees, and data validation techniques that turn vast amounts of data into a pool of strategic information, helping stakeholders and partners make informed decisions.

We help businesses leverage data science for greater efficiency, with intelligent, real-time data derived from BI and OLAP (Online Analytical Processing) tools that surface crucial performance indicators. Ray Business Technologies offers a range of specialized services across the spectrum of data analytics, with a special focus on disciplines such as Data Mining, Predictive Analytics, and Enterprise Data Migration.

Read more at: https://raybiztech.com/solutions/artificial-intelligence/data-analytics

Data observability is a collection of technologies and activities that allows data science teams to prevent problems from becoming severe business issues.
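In practice, observability tooling runs automated checks every time data is loaded. Here is a minimal sketch of three common checks (freshness, volume, and null rate). The `check_table` helper, the thresholds, and the column names are hypothetical and do not represent any particular product's API:

```python
from datetime import datetime, timedelta, timezone


def check_table(rows, loaded_at, expected_min_rows, max_age, max_null_rate, column):
    """Return a list of human-readable problems found in one table load."""
    problems = []
    # Freshness: the latest load should be recent enough.
    age = datetime.now(timezone.utc) - loaded_at
    if age > max_age:
        problems.append(f"stale: last load was {age} ago")
    # Volume: a sudden drop in row count often signals a broken upstream job.
    if len(rows) < expected_min_rows:
        problems.append(f"low volume: {len(rows)} rows < {expected_min_rows}")
    # Completeness: too many missing values in a key column.
    if rows:
        nulls = sum(1 for r in rows if r.get(column) is None)
        null_rate = nulls / len(rows)
        if null_rate > max_null_rate:
            problems.append(f"null rate for {column!r} is {null_rate:.0%}")
    return problems


# Hypothetical load: three orders, one missing amount, loaded 30 hours ago.
orders = [{"order_id": 1, "amount": 20.0},
          {"order_id": 2, "amount": None},
          {"order_id": 3, "amount": 35.5}]
issues = check_table(orders,
                     loaded_at=datetime.now(timezone.utc) - timedelta(hours=30),
                     expected_min_rows=100,
                     max_age=timedelta(hours=24),
                     max_null_rate=0.05,
                     column="amount")
for issue in issues:
    print(issue)
```

Catching these signals at load time is what lets a team fix a broken pipeline before a stale or incomplete table reaches a dashboard.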

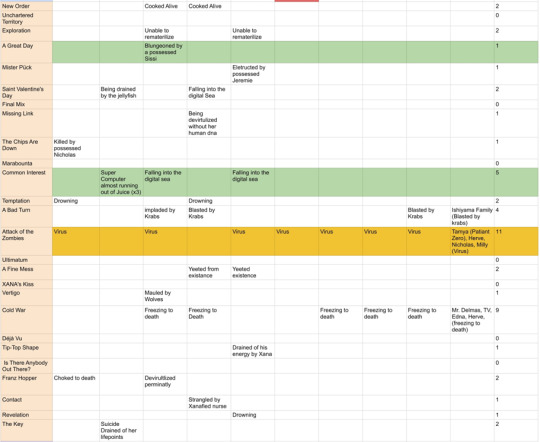

Code Lyoko is notorious for placing its main cast in constant danger. The fandom has made plenty of jokes about certain characters being more prone to danger than others. Out of curiosity, I decided to find out exactly which character nearly died the most and which attack was the deadliest, so I went through the show from episode 1 ‘Teddygozilla’ to episode 95 ‘Echoes’ and logged each ‘death’ in a Google spreadsheet. To keep things simple, I logged the main characters plus William, Sissi, and Jim. I also added two additional categories: Background Characters and Special Mentions. Background Characters are characters who don’t have a prominent role in either the episode or the series but still get caught up in one of Xana’s attacks, while Special Mentions are characters who hold a prominent or semi-prominent role within the episode but are not important enough to get their own category.

One issue I came across right away was determining exactly what counted as a ‘near-death.’ For example, in episode 7 ‘Image Problem’ Jeremie is thrown down a hatch connecting the scanner room to the computer’s mainframe. Depending on a combination of factors, from where he lands on the mainframe, to where on his body he lands, to the height he fell from, an argument could be made that he might survive, but with severe injuries. To avoid the headache, I treated each case with a ‘live or die’ mindset. For Background Characters, I counted each incident as a single death rather than estimating the number of casualties; calculating the exact toll would require more time and effort than I am willing to put in. With the parameters set, allow me to showcase the results.

The season opens with ‘Teddygozilla,’ in which Ulrich and Aelita get the first two deaths of the series: Ulrich is nearly crushed to death, and Aelita almost falls into the digital sea. Jeremie, Odd, Yumi, Sissi, and Jim join the roster in episode 2, when a nuclear power plant nearly explodes. Episode 11 becomes the first episode where a character almost dies twice: Aelita almost falls into the digital sea and is later stabbed by a Krabe, and Odd almost falls into the digital sea twice. Episode 17 ‘Amnesia’ becomes the first episode of the series without a single death. The season averages 3.2 deaths per episode. Episode 11 ‘Plagued’ and episode 16 ‘Claustrophobia’ tie for the most at 8 deaths each, while episode 17 ‘Amnesia’ and episode 25 ‘Code: Earth’ tie for the fewest at 0. At the end of season 1, the total count comes to 83, with the personal counts as follows: Jeremie-8, Aelita-5, Ulrich-13, Yumi-9, Odd-8, Sissi-10, and Jim-10.

Yumi and Ulrich open season 2 as the first deaths, nearly dying of heat stroke in the season opener ‘New Order.’ Episode 30 ‘A Great Day’ marks the first death at the hands of someone possessed by Xana, with Ulrich nearly being bludgeoned to death by a xanafied Sissi. Aelita sets the record for the most deaths by a single character in one episode in episode 37 ‘Common Interest,’ where she almost dies three times because the Supercomputer’s battery is nearly drained. Season 2 also holds the record for the most deaths in a single episode, with 11 in episode 40 ‘Attack of the Zombies.’ That episode also marks William’s first death. Season 2 ends with an average of 1.9 deaths per episode, with episode 40 at 11 and episodes 28, 33, 36, 41, 43, and 48 all tied for the fewest at 0. Season 2 has 51 deaths in total, with the personal counts as follows: Jeremie-4, Aelita-5, Ulrich-6, Yumi-8, Odd-7, William-1, Sissi-2, and Jim-2.

I included the two-part prequel ‘Xana Awakens’ as part of season 3 to make formatting easier. The first deaths of the season happen in the opening episode ‘Lyoko Minus One,’ with Ulrich and Yumi nearly being electrocuted by their xanafied teachers and classmates, and Aelita and Odd nearly falling into the digital sea. Jeremie has his only near-death experience of the season in the prequel ‘Xana Awakens,’ which is also the chronologically first near-death of the series. William almost dies twice this season, both times in episode 59 ‘The Secret’: once by drowning and once in a bomb blast. Unlike the past two seasons, Xana’s focus this season was mostly on the Lyoko warriors, so collateral damage was quite low. The season ends with 27 deaths in total and an average of 2 deaths per episode. Episode 59 ‘The Secret’ has the most deaths at 5, while episodes 53, 57, 60, and 65 each have 0. At the end of the season, the personal counts are: Jeremie-1, Aelita-4, Ulrich-6, Yumi-5, Odd-5, William-2, Sissi-1, and Jim-1.

Season 4 begins with episode 66 ‘William Returns,’ with Yumi nearly falling into the digital sea. Ulrich gets his first death of the season in episode 69 ‘Wreck Room,’ when he and Sissi nearly fall off a roof. Odd joins in episode 73 ‘Replika,’ falling into the digital sea. Jeremie, Aelita, and Jim join after a meteor crashes into the earth in episode 75 ‘Hot Shower.’ Episode 81 ‘A Lack of Goodwill’ is the first time the entire team nearly dies in a single episode. The final deaths come in episode 94 ‘Fight to the Finish,’ with Yumi and Ulrich nearly killed by a xanafied William, Franz Hopper sacrificing himself, and Xana being destroyed by Jeremie’s antivirus program. There are 61 deaths in total in season 4, an average of 2 deaths per episode. Episodes 75 ‘Hot Shower’ and 86 ‘Canine Conundrum’ tie for the most deaths at 9 each. Episodes 70, 71, 73, 74, 78, 79, 83, 85, 87, 89, 92, and 95 all have 0 deaths, tying for the fewest in the season. The individual counts for the season are: Jeremie-5, Aelita-8, Ulrich-7, Yumi-12, Odd-6, William-0, Sissi-5, and Jim-3.

By the end of the series, there are 222 deaths in total. The episode with the highest count is episode 40 ‘Attack of the Zombies’ at 11. For the personal counts, from lowest to highest: William is at the bottom with a total of 3, Jim is next at 16, then Jeremie and Sissi tie at 18, and Aelita is at 23. Odd takes third place at 26. Yumi takes second place with 34 deaths in total. Ulrich claims first place with 35 deaths, beating Yumi by 1.
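For anyone who wants to try a similar count, the season and series figures above come down to a few simple tallies over a log of rows. Here is a minimal Python sketch using a handful of made-up rows, not the real spreadsheet data; the main thing to watch is that zero-death episodes still count toward the per-episode average:

```python
from collections import Counter

# Made-up log for illustration: one row per near-death, as (episode, character).
log = [
    (1, "Ulrich"), (1, "Aelita"),
    (2, "Jeremie"), (2, "Odd"), (2, "Yumi"),
    (4, "Ulrich"),
]
episodes = [1, 2, 3, 4]  # every episode watched, including ones with no deaths

per_character = Counter(name for _, name in log)
per_episode = Counter(ep for ep, _ in log)

# Divide by all episodes, so zero-death episodes pull the average down.
average = len(log) / len(episodes)
deadliest = max(episodes, key=lambda ep: per_episode[ep])
no_deaths = [ep for ep in episodes if per_episode[ep] == 0]

print(per_character.most_common())
print(f"average per episode: {average:.1f}")
print(f"deadliest episode: {deadliest}, zero-death episodes: {no_deaths}")
```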

In the beginning, I set out to find which character and which episode had the highest ‘death count.’ I was thoroughly surprised, as the series went on, to see Ulrich, who I had originally thought would have the lowest count, come out in first place. Discovering the total death count at the end of each season was also fascinating. For example, I knew the first season was one of the deadliest, but seeing the actual number, 83, was still surprising. As I said, these are not exact numbers: it is very possible that I miscounted, or that I made a judgment call you don’t agree with. At the end of the day, I did this out of curiosity and for fun, and I hope you enjoyed reading my findings as much as I enjoyed collecting them.

If you would like to see the full graph, here is the link:

https://docs.google.com/spreadsheets/d/1jEUk5lypN37hHpK2pzbA95euYr68clNryzQFvgzbVqQ/edit?usp=sharing

#code lyoko#aelita schaeffer#aelita stones#jeremie belpois#yumi ishiyama#ulrich stern#odd della robbia#william dunbar#big data#data analytics

For anyone currently in school or recently in school

I am doing a few guest lectures at some of the universities in my state and am working on my presentation. I'm curious: does anyone have recommendations for topics they really enjoyed from a past guest speaker that weren't specific to a major or field?

#college#college student#studying#studyblr#school#university#accounting#finance#consulting#data analytics#data analysis#data visualization#big data#data

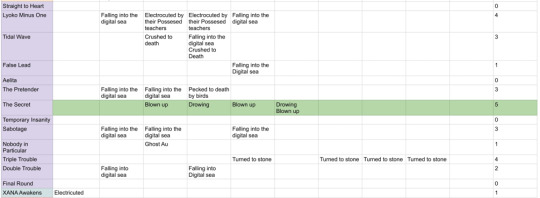

In data analytics, these are the core concepts that everyone needs to understand. The ability to draw insightful conclusions from data is a highly sought-after skill in today's data-driven environment. Data analytics is essential to this process because it gives businesses a competitive edge, enabling them to find hidden patterns, make informed decisions, and gain insight. Whether you're a business professional trying to improve your decision-making or a data enthusiast eager to explore the world of analytics, this guide will take you step by step through the fundamentals of data analytics.

Step 1: Data Collection - Building the Foundation

Identify Data Sources: Begin by pinpointing the relevant sources of data, which could include databases, surveys, web scraping, or IoT devices, aligning them with your analysis objectives.

Define Clear Objectives: Clearly articulate the goals and objectives of your analysis to ensure that the collected data serves a specific purpose.

Include Structured and Unstructured Data: Collect both structured data, such as databases and spreadsheets, and unstructured data like text documents or images to gain a comprehensive view.

Establish Data Collection Protocols: Develop protocols and procedures for data collection to maintain consistency and reliability.

Ensure Data Quality and Integrity: Implement measures to ensure the quality and integrity of your data throughout the collection process.

Step 2: Data Cleaning and Preprocessing - Purifying the Raw Material

Handle Missing Values: Address missing data through techniques like imputation to ensure your dataset is complete.

Remove Duplicates: Identify and eliminate duplicate entries to maintain data accuracy.

Address Outliers: Detect and manage outliers using statistical methods to prevent them from skewing your analysis.

Standardize and Normalize Data: Bring data to a common scale, making it easier to compare and analyze.

Ensure Data Integrity: Ensure that data remains accurate and consistent during the cleaning and preprocessing phase.
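The cleaning steps above can be sketched with nothing but Python's standard library. This minimal example works on a small made-up list of records and assumes three common (but not the only) choices: median imputation for missing values, the 1.5 × IQR rule for outliers, and min-max scaling for normalization:

```python
import statistics

# Made-up raw records: (customer_id, monthly_spend); None marks a missing value.
raw = [("c1", 120.0), ("c2", None), ("c3", 95.0), ("c1", 120.0),
       ("c4", 110.0), ("c5", 2500.0), ("c6", 105.0)]

# 1. Remove exact duplicate records while keeping the original order.
deduped = list(dict.fromkeys(raw))

# 2. Impute missing values with the median of the observed values.
observed = [v for _, v in deduped if v is not None]
median = statistics.median(observed)
imputed = [(cid, median if v is None else v) for cid, v in deduped]

# 3. Drop outliers that fall outside 1.5 * IQR beyond the quartiles.
q1, _, q3 = statistics.quantiles([v for _, v in imputed], n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
kept = [(cid, v) for cid, v in imputed if low <= v <= high]

# 4. Min-max normalize the remaining values to the 0-1 range.
values = [v for _, v in kept]
vmin, vmax = min(values), max(values)
normalized = [(cid, (v - vmin) / (vmax - vmin)) for cid, v in kept]
print(normalized)
```

Order matters here: imputing before removing outliers means an extreme value can shift the imputed median, so some teams handle outliers first. Either way, record which choice was made so the cleaning is reproducible.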

Step 3: Exploratory Data Analysis (EDA) - Understanding the Data

Visualize Data with Histograms, Scatter Plots, etc.: Use visualization tools like histograms, scatter plots, and box plots to gain insights into data distributions and patterns.

Calculate Summary Statistics: Compute summary statistics such as means, medians, and standard deviations to understand central tendency and spread.

Identify Patterns and Trends: Uncover underlying patterns, trends, or anomalies that can inform subsequent analysis.

Explore Relationships Between Variables: Investigate correlations and dependencies between variables to inform hypothesis testing.

Guide Subsequent Analysis Steps: The insights gained from EDA serve as a foundation for guiding the remainder of your analytical journey.

Step 4: Data Transformation - Shaping the Data for Analysis

Aggregate Data (e.g., Averages, Sums): Aggregate data points to create higher-level summaries, such as calculating averages or sums.

Create New Features: Generate new features or variables that provide additional context or insights.

Encode Categorical Variables: Convert categorical variables into numerical representations to make them compatible with analytical techniques.

Maintain Data Relevance: Ensure that data transformations align with your analysis objectives and domain knowledge.
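A minimal standard-library sketch of the three transformations above: aggregation, feature creation, and one-hot encoding of a categorical column. The order records and field names are hypothetical:

```python
from collections import defaultdict

# Hypothetical order records.
orders = [
    {"region": "North", "channel": "web", "revenue": 120.0, "items": 3},
    {"region": "North", "channel": "store", "revenue": 80.0, "items": 2},
    {"region": "South", "channel": "web", "revenue": 200.0, "items": 4},
    {"region": "South", "channel": "phone", "revenue": 50.0, "items": 1},
]

# Aggregate: total and average revenue per region.
totals = defaultdict(float)
counts = defaultdict(int)
for o in orders:
    totals[o["region"]] += o["revenue"]
    counts[o["region"]] += 1
averages = {r: totals[r] / counts[r] for r in totals}

# New feature: revenue per item for each order.
for o in orders:
    o["revenue_per_item"] = o["revenue"] / o["items"]

# One-hot encode the categorical "channel" column into 0/1 indicator columns.
channels = sorted({o["channel"] for o in orders})
for o in orders:
    for ch in channels:
        o[f"channel_{ch}"] = 1 if o["channel"] == ch else 0

print(dict(totals), averages)
print(orders[0])
```

One-hot encoding avoids implying an order between categories, which a simple 0/1/2 label encoding would do.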

Step 5: Statistical Analysis - Quantifying Relationships

Hypothesis Testing: Conduct hypothesis tests to determine the significance of relationships or differences within the data.

Correlation Analysis: Measure correlations between variables to identify how they are related.

Regression Analysis: Apply regression techniques to model and predict relationships between variables.

Descriptive Statistics: Employ descriptive statistics to summarize data and provide context for your analysis.

Inferential Statistics: Make inferences about populations based on sample data to draw meaningful conclusions.

Step 6: Machine Learning - Predictive Analytics

Algorithm Selection: Choose suitable machine learning algorithms based on your analysis goals and data characteristics.

Model Training: Train machine learning models using historical data to learn patterns.

Validation and Testing: Evaluate model performance using validation and testing datasets to ensure reliability.

Prediction and Classification: Apply trained models to make predictions or classify new data.

Model Interpretation: Understand and interpret machine learning model outputs to extract insights.
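A minimal sketch of the train, validate, and predict loop. It assumes a k-nearest-neighbors classifier written by hand so the example needs only the standard library; a real project would more likely use a library such as scikit-learn. The churn data is made up:

```python
import math
import random
from collections import Counter

# Made-up customers: (monthly_visits, support_tickets) -> churned (1) or stayed (0).
data = [((12, 0), 0), ((10, 1), 0), ((11, 0), 0), ((9, 1), 0), ((14, 0), 0),
        ((13, 1), 0), ((2, 4), 1), ((1, 5), 1), ((3, 3), 1), ((2, 6), 1),
        ((4, 4), 1), ((1, 3), 1)]


def knn_predict(train, point, k=3):
    """Classify `point` by majority vote among its k nearest training examples."""
    nearest = sorted(train, key=lambda row: math.dist(row[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hold out a test set so performance is measured on data the model has not seen.
rng = random.Random(0)
shuffled = data[:]
rng.shuffle(shuffled)
split = int(len(shuffled) * 0.75)
train, test = shuffled[:split], shuffled[split:]

correct = sum(knn_predict(train, x) == y for x, y in test)
print(f"test accuracy: {correct}/{len(test)}")

# Apply the trained model to a new, unlabeled customer.
print("new customer churn prediction:", knn_predict(train, (3, 5)))
```

With a dataset this small, a single holdout split gives a noisy accuracy estimate; cross-validation is the usual remedy.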

Step 7: Data Visualization - Communicating Insights

Chart and Graph Creation: Create various types of charts, graphs, and visualizations to represent data effectively.

Dashboard Development: Build interactive dashboards to provide stakeholders with dynamic views of insights.

Visual Storytelling: Use data visualization to tell a compelling and coherent story that communicates findings clearly.

Audience Consideration: Tailor visualizations to suit the needs of both technical and non-technical stakeholders.

Enhance Decision-Making: Visualization aids decision-makers in understanding complex data and making informed choices.

Step 8: Data Interpretation - Drawing Conclusions and Recommendations

Recommendations: Provide actionable recommendations based on your conclusions and their implications.

Stakeholder Communication: Communicate analysis results effectively to decision-makers and stakeholders.

Domain Expertise: Apply domain knowledge to ensure that conclusions align with the context of the problem.

Step 9: Continuous Improvement - The Iterative Process

Monitoring Outcomes: Continuously monitor the real-world outcomes of your decisions and predictions.

Model Refinement: Adapt and refine models based on new data and changing circumstances.

Iterative Analysis: Embrace an iterative approach to data analysis to maintain relevance and effectiveness.

Feedback Loop: Incorporate feedback from stakeholders and users to improve analytical processes and models.

Step 10: Ethical Considerations - Data Integrity and Responsibility

Data Privacy: Ensure that data handling respects individuals' privacy rights and complies with data protection regulations.

Bias Detection and Mitigation: Identify and mitigate bias in data and algorithms to ensure fairness.

Fairness: Strive for fairness and equitable outcomes in decision-making processes influenced by data.

Ethical Guidelines: Adhere to ethical and legal guidelines in all aspects of data analytics to maintain trust and credibility.
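One simple bias check compares selection rates across groups. This minimal sketch computes each group's selection rate and the ratio of the lowest rate to the highest. The 0.8 threshold is the "four-fifths rule" from US employment-selection guidance; it is one heuristic among many fairness metrics, not a complete audit. The decisions are made up:

```python
from collections import defaultdict

# Made-up model decisions: (group, selected?)
decisions = [("A", True), ("A", True), ("A", False), ("A", True), ("A", False),
             ("B", True), ("B", False), ("B", False), ("B", False), ("B", True)]

selected = defaultdict(int)
total = defaultdict(int)
for group, was_selected in decisions:
    total[group] += 1
    selected[group] += was_selected  # True counts as 1, False as 0

rates = {g: selected[g] / total[g] for g in total}
ratio = min(rates.values()) / max(rates.values())
print(rates, f"impact ratio = {ratio:.2f}")
if ratio < 0.8:
    print("Possible adverse impact: investigate features and training data.")
```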

Data analytics is an exciting and profitable field that enables people and companies to use data to make wise decisions. Understanding the fundamentals described in this guide will prepare you to start your data analytics journey. To become a skilled data analyst, keep in mind that practice and ongoing learning are essential. If you need help implementing data analytics in your organization or want to learn more, consider consulting professionals or signing up for specialized courses. The ACTE Institute offers comprehensive data analytics training courses that can provide you with the knowledge and skills necessary to excel in this field, along with job placement and certification. So put on your work boots, explore the resources, and start transforming data into decisions.

From Curious Novice to Data Enthusiast: My Data Science Adventure

I've always been fascinated by data science, a field that seamlessly blends technology, mathematics, and curiosity. In this article, I want to take you on a journey—my journey—from being a curious novice to becoming a passionate data enthusiast. Together, let's explore the thrilling world of data science, and I'll share the steps I took to immerse myself in this captivating realm of knowledge.

The Spark: Discovering the Potential of Data Science

The moment I stumbled upon data science, I felt a spark of inspiration. Witnessing its impact across various industries, from healthcare and finance to marketing and entertainment, I couldn't help but be drawn to this innovative field. The ability to extract critical insights from vast amounts of data and uncover meaningful patterns fascinated me, prompting me to dive deeper into the world of data science.

Laying the Foundation: The Importance of Learning the Basics

To embark on this data science adventure, I quickly realized the importance of building a strong foundation. Learning the basics of statistics, programming, and mathematics became my priority. Understanding statistical concepts and techniques enabled me to make sense of data distributions, correlations, and significance levels. Programming languages like Python and R became essential tools for data manipulation, analysis, and visualization, while a solid grasp of mathematical principles empowered me to create and evaluate predictive models.

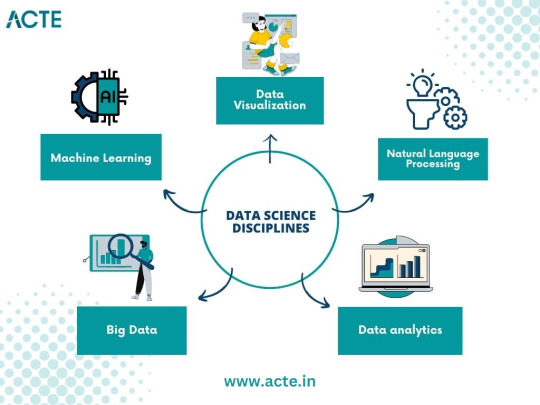

The Quest for Knowledge: Exploring Various Data Science Disciplines

A. Machine Learning: Unraveling the Power of Predictive Models

Machine learning, a prominent discipline within data science, captivated me with its ability to unlock the potential of predictive models. I delved into the fundamentals, understanding the underlying algorithms that power these models. Supervised learning, where data with labels is used to train prediction models, and unsupervised learning, which uncovers hidden patterns within unlabeled data, intrigued me. Exploring concepts like regression, classification, clustering, and dimensionality reduction deepened my understanding of this powerful field.

B. Data Visualization: Telling Stories with Data

In my data science journey, I discovered the importance of effectively visualizing data to convey meaningful stories. Navigating through various visualization tools and techniques, such as creating dynamic charts, interactive dashboards, and compelling infographics, allowed me to unlock the hidden narratives within datasets. Visualizations became a medium to communicate complex ideas succinctly, enabling stakeholders to understand insights effortlessly.

C. Big Data: Mastering the Analysis of Vast Amounts of Information

The advent of big data challenged traditional data analysis approaches. To conquer this challenge, I dived into the world of big data, understanding its nuances and exploring techniques for efficient analysis. Uncovering the intricacies of distributed systems, parallel processing, and data storage frameworks empowered me to handle massive volumes of information effectively. With tools like Apache Hadoop and Spark, I was able to mine valuable insights from colossal datasets.
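Frameworks like Hadoop and Spark scale out by splitting data into chunks, processing each chunk independently, and merging the partial results. Below is a minimal single-machine sketch of that map/reduce pattern for a word count in plain Python. It illustrates the idea only and is not Hadoop or Spark code:

```python
from collections import Counter
from functools import reduce

# Made-up "chunks" of a larger dataset.
documents = [
    "big data needs big tools",
    "spark and hadoop process big data",
    "data tools process data",
]


def map_chunk(text):
    """Map step: count words in one chunk (on a cluster, each runs on its own worker)."""
    return Counter(text.split())


def reduce_counts(left, right):
    """Reduce step: merge two partial counts into one."""
    return left + right


# Each chunk is processed independently, so this map step could run in parallel.
partial_counts = map(map_chunk, documents)
word_counts = reduce(reduce_counts, partial_counts, Counter())
print(word_counts.most_common(3))
```

Because the map step never looks at other chunks and the merge is associative, the same logic can be spread across many machines.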

D. Natural Language Processing: Extracting Insights from Textual Data

Textual data surrounds us in the digital age, and the realm of natural language processing fascinated me. I delved into techniques for processing and analyzing unstructured text data, uncovering insights from tweets, customer reviews, news articles, and more. Understanding concepts like sentiment analysis, topic modeling, and named entity recognition allowed me to extract valuable information from written text, using techniques that are transforming areas like customer service and content recommendation systems.

Building the Arsenal: Acquiring Data Science Skills and Tools

Acquiring essential skills and familiarizing myself with relevant tools played a crucial role in my data science journey. Programming languages like Python and R became my companions, enabling me to manipulate, analyze, and model data efficiently. Additionally, I explored popular data science libraries and frameworks such as TensorFlow, Scikit-learn, Pandas, and NumPy, which expedited the development and deployment of machine learning models. The arsenal of skills and tools I accumulated became my assets in the quest for data-driven insights.

The Real-World Challenge: Applying Data Science in Practice

Data science is not just an academic pursuit but rather a practical discipline aimed at solving real-world problems. Throughout my journey, I sought to identify such problems and apply data science methodologies to provide practical solutions. From predicting customer churn to optimizing supply chain logistics, the application of data science proved transformative in various domains. Sharing success stories of leveraging data science in practice inspires others to realize the power of this field.

Cultivating Curiosity: Continuous Learning and Skill Enhancement

Embracing a growth mindset is paramount in the world of data science. The field is rapidly evolving, with new algorithms, techniques, and tools emerging frequently. To stay ahead, it is essential to cultivate curiosity and foster a continuous learning mindset. Keeping abreast of the latest research papers, attending data science conferences, and engaging in data science courses nurtures personal and professional growth. The journey to becoming a data enthusiast is a lifelong pursuit.

Joining the Community: Networking and Collaboration

Being part of the data science community is a catalyst for growth and inspiration. Engaging with like-minded individuals, sharing knowledge, and collaborating on projects enhances the learning experience. Joining online forums, participating in Kaggle competitions, and attending meetups provides opportunities to exchange ideas, solve challenges collectively, and foster invaluable connections within the data science community.

Overcoming Obstacles: Dealing with Common Data Science Challenges

Data science, like any discipline, presents its own set of challenges. From data cleaning and preprocessing to model selection and evaluation, obstacles arise at each stage of the data science pipeline. Strategies and tips to overcome these challenges, such as building reliable pipelines, conducting robust experiments, and leveraging cross-validation techniques, are indispensable in maintaining motivation and achieving success in the data science journey.
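Cross-validation, one of the techniques mentioned above, is straightforward to sketch. This minimal standard-library example generates k-fold splits and evaluates a deliberately simple model (predict the training mean) so the focus stays on the splitting logic. The folds here are contiguous; real projects usually shuffle the data first. The target values are made up:

```python
import statistics


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs for k roughly equal, contiguous folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


# Made-up target values; the "model" simply predicts the training mean.
y = [3.0, 4.0, 5.0, 4.0, 6.0, 5.0, 7.0, 6.0, 5.0, 4.0]
errors = []
for train_idx, test_idx in k_fold_indices(len(y), k=5):
    prediction = statistics.mean(y[i] for i in train_idx)
    fold_mae = statistics.mean(abs(y[i] - prediction) for i in test_idx)
    errors.append(fold_mae)

print([round(e, 2) for e in errors])
print(f"mean MAE across folds: {statistics.mean(errors):.2f}")
```

Every observation is used for testing exactly once, which gives a steadier error estimate than a single train/test split.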

Balancing Act: Building a Career in Data Science alongside Other Commitments

For many aspiring data scientists, the pursuit of knowledge and skills must coexist with other commitments, such as full-time jobs and personal responsibilities. Effectively managing time and developing a structured learning plan is crucial in striking a balance. Tips such as identifying pockets of dedicated learning time, breaking down complex concepts into manageable chunks, and seeking mentorships or online communities can empower individuals to navigate the data science journey while juggling other responsibilities.

Ethical Considerations: Navigating the World of Data Responsibly

As data scientists, we must navigate the world of data responsibly, being mindful of the ethical considerations inherent in this field. Safeguarding privacy, addressing bias in algorithms, and ensuring transparency in data-driven decision-making are critical principles. Exploring topics such as algorithmic fairness, data anonymization techniques, and the societal impact of data science encourages responsible and ethical practices in a rapidly evolving digital landscape.

Embarking on a data science adventure, from curious novice to passionate data enthusiast, is an exhilarating and rewarding journey. By laying a foundation of knowledge, exploring various data science disciplines, acquiring essential skills and tools, and engaging in continuous learning, one can conquer challenges, build a successful career, and have a positive influence on the data science community. It's a journey that never truly ends, as data continues to evolve and offer exciting opportunities for discovery and innovation. So start your own data science adventure, and let the exploration begin!

#data science#data analytics#data visualization#big data#machine learning#artificial intelligence#education#information

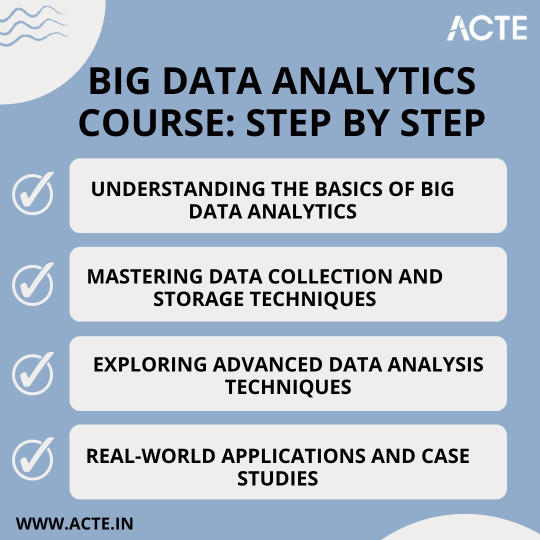

From Beginner to Pro: A Game-Changing Big Data Analytics Course

Are you fascinated by the vast potential of big data analytics? Do you want to unlock its power and become a proficient professional in this rapidly evolving field? Look no further! In this article, we will take you on a journey to traverse the path from being a beginner to becoming a pro in big data analytics. We will guide you through a game-changing course designed to provide you with the necessary information and education to master the art of analyzing and deriving valuable insights from large and complex data sets.

Step 1: Understanding the Basics of Big Data Analytics

Before diving into the intricacies of big data analytics, it is crucial to grasp its fundamental concepts and methodologies. A solid foundation in the basics will empower you to navigate through the complexities of this domain with confidence. In this initial phase of the course, you will learn:

The definition and characteristics of big data

The importance and impact of big data analytics in various industries

The key components and architecture of a big data analytics system

The different types of data and their relevance in analytics

The ethical considerations and challenges associated with big data analytics

By comprehending these key concepts, you will be equipped with the essential knowledge needed to kickstart your journey towards proficiency.

Step 2: Mastering Data Collection and Storage Techniques

Once you have a firm grasp on the basics, it's time to dive deeper and explore the art of collecting and storing big data effectively. In this phase of the course, you will delve into:

Data acquisition strategies, including batch processing and real-time streaming

Techniques for data cleansing, preprocessing, and transformation to ensure data quality and consistency

Storage technologies, such as Hadoop Distributed File System (HDFS) and NoSQL databases, and their suitability for different types of data

Understanding data governance, privacy, and security measures to handle sensitive data in compliance with regulations
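As a minimal sketch of the cleansing and transformation step, the same cleaning function can run over a whole batch or over records one at a time as they stream in. The record shape (hypothetical `user_id`, `event`, and `amount` fields) and the quality rules are assumptions chosen for illustration, not part of any particular platform:

```python
from typing import Iterable, Iterator, List, Optional

# Hypothetical raw record shape: {"user_id": str, "event": str, "amount": str}
Record = dict


def clean_record(raw: Record) -> Optional[Record]:
    """Normalize one raw record; return None if it fails basic quality checks."""
    user_id = (raw.get("user_id") or "").strip()
    event = (raw.get("event") or "").strip().lower()
    if not user_id or not event:
        return None  # drop records missing required fields
    try:
        amount = float(raw.get("amount", "0"))
    except (TypeError, ValueError):
        return None  # drop records whose amount is not numeric
    return {"user_id": user_id, "event": event, "amount": amount}


def clean_stream(records: Iterable[Record]) -> Iterator[Record]:
    """Streaming style: yield cleaned, de-duplicated records one at a time."""
    seen = set()
    for raw in records:
        rec = clean_record(raw)
        if rec is None:
            continue
        key = (rec["user_id"], rec["event"], rec["amount"])
        if key in seen:
            continue  # skip exact duplicates after normalization
        seen.add(key)
        yield rec


def clean_batch(records: Iterable[Record]) -> List[Record]:
    """Batch style: materialize the whole cleaned data set at once."""
    return list(clean_stream(records))


raw = [
    {"user_id": " u1 ", "event": "Click", "amount": "2.5"},
    {"user_id": "u1", "event": "click", "amount": "2.5"},  # duplicate once normalized
    {"user_id": "", "event": "view", "amount": "1"},        # missing user_id
    {"user_id": "u2", "event": "buy", "amount": "n/a"},     # non-numeric amount
    {"user_id": "u3", "event": "buy", "amount": "10"},
]
print(clean_batch(raw))
```

In a real streaming system the `seen` set would grow without limit, so de-duplication state is usually bounded, for example to a time window.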

By honing these skills, you will be well-prepared to handle large and diverse data sets efficiently, which is a crucial step towards becoming a pro in big data analytics.
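One common privacy measure for sensitive fields is pseudonymization: replacing a direct identifier with a keyed hash so records can still be joined without exposing the raw value. The sketch below uses HMAC-SHA256 from Python's standard library; the field name and the key are hypothetical, and in practice the key would live in a secrets manager. Pseudonymized data can often still be linked back to individuals, so it generally remains sensitive and is not the same as full anonymization.

```python
import hashlib
import hmac
from typing import Dict, List


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with its HMAC-SHA256 hex digest.

    The same identifier and key always produce the same token, so joins
    across tables still work. The hash cannot be inverted, but anyone who
    holds the key could still test guesses, so the key must stay secret.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_rows(rows: List[Dict], field: str, secret_key: bytes) -> List[Dict]:
    """Return copies of rows with `field` replaced by its pseudonym."""
    out = []
    for row in rows:
        masked = dict(row)  # copy so the original rows are untouched
        masked[field] = pseudonymize(str(row[field]), secret_key)
        out.append(masked)
    return out


# Hypothetical key and data, for illustration only.
KEY = b"hypothetical-secret-from-a-vault"
rows = [
    {"email": "ana@example.com", "leave_days": 3},
    {"email": "ben@example.com", "leave_days": 5},
    {"email": "ana@example.com", "leave_days": 1},
]
masked = pseudonymize_rows(rows, "email", KEY)
```

Using a keyed HMAC rather than a plain SHA-256 of the value matters here: unkeyed hashes of emails or IDs can be reversed by hashing a list of likely candidates.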

Step 3: Exploring Advanced Data Analysis Techniques

Now that you have developed a solid foundation and acquired the necessary skills for data collection and storage, it's time to unleash the power of advanced data analysis techniques. In this phase of the course, you will dive into:

Statistical analysis methods, including hypothesis testing, regression analysis, and cluster analysis, to uncover patterns and relationships within data

Machine learning algorithms, such as decision trees, random forests, and neural networks, for predictive modeling and pattern recognition

Natural Language Processing (NLP) techniques to analyze and derive insights from unstructured text data

Data visualization techniques, ranging from basic charts to interactive dashboards, to effectively communicate data-driven insights
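To make the regression item concrete, here is a minimal ordinary least squares fit of a straight line, y = intercept + slope · x, using the closed-form estimates slope = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and intercept = ȳ − slope · x̄. It is a plain-Python sketch for a single predictor; the example data is made up for illustration:

```python
from typing import Sequence, Tuple


def fit_simple_linear_regression(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (intercept, slope).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Sum of cross-deviations and sum of squared x-deviations.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Hypothetical data lying exactly on y = 1 + 2x.
intercept, slope = fit_simple_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])
print(intercept, slope)  # 1.0 2.0
```

On Python 3.10 and later, `statistics.linear_regression` in the standard library returns the same slope and intercept; for many predictors, libraries such as NumPy or scikit-learn are the usual choice.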

By mastering these advanced techniques, you will be able to extract meaningful insights and actionable recommendations from complex data sets, transforming you into a true big data analytics professional.
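Cluster analysis, mentioned among the statistical methods above, is often introduced through k-means. The sketch below is a plain Lloyd's-algorithm implementation on 2-D points: assign each point to its nearest centroid, move each centroid to the mean of its members, and repeat until nothing moves. Initializing with the first k points is a naive, deterministic choice made only for illustration (production libraries use smarter seeding such as k-means++), and `math.dist` requires Python 3.8 or later:

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def k_means(points: Sequence[Point], k: int, max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's k-means on 2-D points. Returns (centroids, labels)."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids: List[Point] = [tuple(p) for p in points[:k]]  # naive init: first k points
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids: List[Point] = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append((
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                ))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, labels


# Hypothetical data with two obvious groups.
pts = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, labels = k_means(pts, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```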

Step 4: Real-world Applications and Case Studies

To solidify your learning and gain practical experience, it is crucial to apply your newfound knowledge in real-world scenarios. In this final phase of the course, you will:

Explore various industry-specific case studies, showcasing how big data analytics has revolutionized sectors like healthcare, finance, marketing, and cybersecurity

Work on hands-on projects, where you will solve data-driven problems by applying the techniques and methodologies learned throughout the course

Collaborate with peers and industry experts through interactive discussions and forums to exchange insights and best practices

Stay updated with the latest trends and advancements in big data analytics, ensuring your knowledge remains up-to-date in this rapidly evolving field

By immersing yourself in practical applications and real-world challenges, you will not only gain valuable experience but also hone your problem-solving skills, making you a well-rounded big data analytics professional.

Through a comprehensive and game-changing course at ACTE institute, you can gain the necessary information and education to navigate the complexities of this field. By understanding the basics, mastering data collection and storage techniques, exploring advanced data analysis methods, and applying your knowledge in real-world scenarios, you can become a proficient professional capable of extracting valuable insights from big data.

Remember, the world of big data analytics is ever-evolving, with new challenges and opportunities emerging each day. Stay curious, seek continuous learning, and embrace the exciting journey ahead as you unlock the limitless potential of big data analytics.

Text

This is the first time I'm seeing in practice why Python is so widely used in data analysis. I have an Excel sheet with 8 million rows that's impossible to open; I can't analyze anything without the program crashing.

I have even more respect for Python now.
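A single Excel worksheet holds at most 1,048,576 rows, so data on this scale usually lives in a CSV export. One reason Python copes where the spreadsheet crashes is that it can stream a file row by row and keep only running totals in memory. The sketch below uses the standard-library `csv` module; the file path and the `region`/`sales` column names are hypothetical. pandas offers the same pattern through `pd.read_csv(path, chunksize=...)`, which yields the file in DataFrame chunks.

```python
import csv
from collections import defaultdict
from typing import Dict


def sum_by_group(csv_path: str, group_col: str, value_col: str) -> Dict[str, float]:
    """Stream a CSV row by row and total value_col for each group_col value.

    Only the per-group totals are held in memory, so the file can be far
    larger than what a spreadsheet will open.
    """
    totals: Dict[str, float] = defaultdict(float)
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            value = row.get(value_col)
            if not value:
                continue  # skip blank cells
            totals[row[group_col]] += float(value)
    return dict(totals)


# Usage, with a hypothetical file name:
# totals = sum_by_group("sales_8m_rows.csv", "region", "sales")
```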

Text

sldkslfdk

#I'VE BEEN WORKING ON AWS FOR 3+ HOURS RRRRRRAAAAH#yumi does grad stuff#hi hello if you are new here i am a grad student and i yell about amazon web services every now and then#because i am taking big data analytics as a class rn

Text

one of the most effective means of ending the digitization of socioeconomic systems of discrimination in the U.S. would have been to boycott Citigroup and its subsidiaries + Citadel LLC and its subsidiaries.

#employment discrimination#direct action#ai#big data#data analytics#gild#microeconomics#studyblr#studyspo#imperial core

Text

Mastering Data Analytics: Your Path to Success Starts at Corpus Digital Hub

Corpus Digital Hub is more than just a training institute—it's a hub of knowledge, innovation, and opportunity. Our mission is simple: to empower individuals with the skills and expertise needed to thrive in the fast-paced world of data analytics. Located in the vibrant city of Calicut, our institute serves as a gateway to endless possibilities and exciting career opportunities.

A Comprehensive Approach to Learning

At Corpus Digital Hub, we believe that education is the key to unlocking human potential. That's why we offer a comprehensive curriculum that covers a wide range of topics, from basic data analysis techniques to advanced machine learning algorithms. Our goal is to provide students with the tools and knowledge they need to succeed in today's competitive job market.

Building Strong Foundations

Success in data analytics begins with a strong foundation. That's why our courses are designed to provide students with a solid understanding of core concepts and principles. Whether you're new to the field or a seasoned professional, our curriculum is tailored to meet your unique needs and aspirations.

Hands-On Experience

Theory is important, but nothing beats hands-on experience. That's why we place a strong emphasis on practical learning at Corpus Digital Hub. From day one, students have the opportunity to work on real-world projects and gain valuable experience that will set them apart in the job market.

A Supportive Learning Environment

At Corpus Digital Hub, we believe that learning is a collaborative effort. That's why we foster a supportive and inclusive learning environment where students feel empowered to ask questions, share ideas, and explore new concepts. Our experienced faculty members are dedicated to helping students succeed and are always available to provide guidance and support.

Cultivating Future Leaders

Our ultimate goal at Corpus Digital Hub is to cultivate the next generation of leaders in data analytics. Through our rigorous curriculum, hands-on approach, and supportive learning environment, we provide students with the tools and confidence they need to excel in their careers and make a positive impact on the world.

Join Us on the Journey

Are you ready to take the next step towards a brighter future? Whether you're a recent graduate, a mid-career professional, or someone looking to make a career change, Corpus Digital Hub welcomes you with open arms. Join us on the journey to mastery in data analytics and unlock your full potential.

Contact Us Today

Ready to get started? Contact Corpus Digital Hub to learn more about our programs, admissions process, and scholarship opportunities. Your journey towards success starts here!

Stay connected with Corpus Digital Hub for the latest news, updates, and success stories from our vibrant community of learners and educators. Together, we'll shape the future of data analytics and make a difference in the world!

#data analytics#data science#machinelearning#Data Visualization#Business Intelligence#big data#Data Mining#Business Analytics#Data Exploration#Data Analysis Techniques#Data Analytics Certification#Data Analytics Training#Data Analyst Skills#Data Analytics Careers#Data Analytics Jobs#Data Analytics Industry

Text

Top 5 Benefits of Low-Code/No-Code BI Solutions

Low-code/no-code Business Intelligence (BI) solutions offer a paradigm shift in analytics, providing organizations with five key benefits. Firstly, rapid development and deployment empower businesses to swiftly adapt to changing needs. Secondly, these solutions enhance collaboration by enabling non-technical users to contribute to BI processes. Thirdly, cost-effectiveness arises from reduced reliance on IT resources and streamlined development cycles. Fourthly, accessibility improves as these platforms democratize data insights, making BI available to a broader audience. Lastly, agility is heightened, allowing organizations to respond promptly to market dynamics. Low-code/no-code BI solutions thus deliver efficiency, collaboration, cost savings, accessibility, and agility in the analytics landscape.

#newfangled#polusai#etl#nlp#data democratization#business data#big data#ai to generate dashboard#business dashboard#bi report#generativeai#business intelligence tool#artificialintelligence#machine learning#no code#data analytics#data visualization#zero coding
