# URL classification API

5 Tips About Small SEO Tools You Can Use Today

Google Search Console is a free tool that helps website owners monitor their site's performance in Google Search. It provides information about the site's rankings, the number of clicks, and the impressions.

Track your desktop, mobile and local rankings in more than 150 countries, keep an eye on your competitors, and get daily updated reports.

Although there is some debate about how actionable Google's Natural Language API is for SEO, there is no denying it is an impressive tool with plenty of advanced analysis.

The tool also provides a content score that measures the quality of your content against the top-ranking pages for a given keyword. MarketMuse is helpful for creating content that is relevant, informative, and engaging.

By the end of this article, you should have a better understanding of which tool(s) would be most suitable for your business.

Technical SEO is the core stage of search engine optimization, so use our small SEO tools to find on-site errors and backend errors.

Ahrefs Backlink Checker is another popular backlink analysis tool that provides a detailed view of a website's backlink profile.

In addition, Clearscope's keyword discovery tool uses "smart language algorithms" to find possible keyword options based on topic ideas or URLs.

In a world of very similar SEO tools, Letterdrop is in a category of its own. Part content management solution, part SEO auditor, part keyword research tool, it's a unique product that does a little bit of a lot of different things. But one really distinctive feature is how it integrates content automation capabilities.

Claiming your business profile forms the foundation of most other local SEO activities, so it's an essential step.

Welcome to the future of landing page personalization. Instapage makes it easier than ever to build, test, and optimize your pages with the power of artificial intelligence.

The plugin is beginner-friendly yet capable of delivering the results that SEO professionals expect. So it's unsurprising that companies and big brands also use this plugin.

The SERP Analyzer is also miles ahead of similar tools from many competitors. I really like the interactive chart that shows what the pages in the top 48 positions average for metrics like word count, character count, keyword density, and partial keywords.
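Keyword density, one of the metrics mentioned above, is usually computed as the number of times a keyword appears divided by the total word count. The following Python sketch shows that arithmetic and averages the metrics over several pages; the simple tokenizer and the single-word keyword are assumptions, and the SERP Analyzer's own formula may differ.

```python
import re


def page_metrics(text: str, keyword: str) -> dict:
    """Word count, character count and keyword density for one page.

    Assumes a simple tokenizer (runs of letters, digits and apostrophes)
    and a single-word keyword; real SEO tools may tokenize differently.
    """
    words = re.findall(r"[A-Za-z0-9']+", text.lower())
    hits = sum(1 for w in words if w == keyword.lower())
    return {
        "word_count": len(words),
        "char_count": len(text),
        # Density expressed as a percentage of all words on the page.
        "keyword_density": 100.0 * hits / len(words) if words else 0.0,
    }


def serp_averages(pages: list, keyword: str) -> dict:
    """Average each metric across the text of several ranking pages."""
    metrics = [page_metrics(p, keyword) for p in pages]
    if not metrics:
        return {}
    return {k: sum(m[k] for m in metrics) / len(metrics) for k in metrics[0]}
```

For example, `page_metrics("SEO tools help SEO", "seo")` counts 4 words and 2 keyword hits, giving a density of 50%.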

Can Letterdrop fully automate your entire content calendar? No. Is it a truly all-in-one SEO tool? Also no. But as a hybrid content creation platform with neat automation features designed to help you create great content, it's one of the most interesting tools on this list.

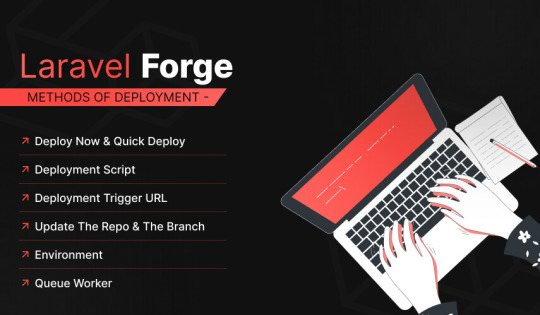

Auto Deployment with Laravel using Forge/Envoyer

We know most readers love building web applications with the Laravel framework, and deploying these applications is a crucial step. However, while many developers know server management, few are particularly fond of configuring and maintaining servers. Luckily, Laravel Forge and Envoyer are available to help!

When you are ready to deploy your Laravel application to production, there are some essential steps you can take to ensure your application runs as efficiently as possible. This blog covers several topics to help ensure your Laravel application is deployed appropriately.

Before diving in, here is a brief overview of what Envoyer and Forge do for deployment.

Envoyer is a deployment tool for PHP applications, and the best thing about it is zero downtime during deployment. This means your customers will not notice when a new version of your application is pushed.
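One common way to achieve zero-downtime deployments is to put each release in its own directory and then atomically switch a `current` symlink to it, so requests are served either entirely by the old release or entirely by the new one. The Python sketch below illustrates that general technique on a POSIX filesystem; the `releases/` and `current` names are assumptions for illustration, not a description of Envoyer's internals.

```python
import os
import tempfile
from pathlib import Path


def activate_release(base: Path, release_name: str) -> Path:
    """Point base/current at base/releases/<release_name> atomically.

    A temporary symlink is created first and then renamed over 'current'.
    On POSIX, os.replace() is atomic, so there is never a moment when
    'current' is missing or half-written.
    """
    release_dir = base / "releases" / release_name
    release_dir.mkdir(parents=True, exist_ok=True)  # new code would be copied here
    tmp_link = base / f".current-{release_name}.tmp"
    if tmp_link.is_symlink() or tmp_link.exists():
        tmp_link.unlink()
    tmp_link.symlink_to(release_dir, target_is_directory=True)
    os.replace(tmp_link, base / "current")  # the atomic switch
    return release_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        base = Path(d)
        activate_release(base, "release-1")
        activate_release(base, "release-2")
        print(os.readlink(base / "current"))  # path of release-2
```

Old release directories are kept, which also makes rolling back a matter of pointing `current` at the previous one.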

The basic overview of the Laravel framework:

The Laravel framework is a free, open-source PHP framework that provides a set of tools and resources for building modern PHP applications. It comprises a complete ecosystem of built-in features, a range of extensions, and compatible packages. Laravel's growth and popularity have risen sharply in recent years, with many developers adopting it as their framework of choice for a streamlined development process.

What is deployment in Laravel?

Deployment is the process of downloading your code from your source control provider to your server, which makes it ready for the world to access.

Laravel Forge makes it easy to manage servers. You can get a new website online quickly, set up queues and cron jobs easily, and configure a more advanced setup with a network of servers and load balancers. Envoyer, meanwhile, lets you manage deployments. This blog gives you an insight into both Forge and Envoyer.

Laravel Forge

Laravel Forge is a tool for managing your servers, and the first step is creating an account. Next, you connect a server provider; several cloud providers are supported out of the box, including Linode, Vultr, Hetzner, and Amazon. You can also manage a custom VPS.

Deploy Now and Quick Deploy

The Quick Deploy feature of Forge allows you to quickly deploy your projects when you push to your source control provider. When you push to the configured quick deploy branch, Laravel Forge will pull your latest code from source control and run the application’s configured deployment script.
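The branch check behind a quick deploy can be sketched as follows. This assumes a GitHub-style push webhook payload, in which the `ref` field has the form `refs/heads/<branch>`; Forge performs this check itself, so the function name and flow here are illustrative only.

```python
import json


def should_quick_deploy(push_payload: str, deploy_branch: str) -> bool:
    """Return True if a push event targets the configured quick-deploy branch.

    Hypothetical helper: assumes a GitHub-style push payload whose "ref"
    field looks like "refs/heads/<branch>".
    """
    event = json.loads(push_payload)
    return event.get("ref") == f"refs/heads/{deploy_branch}"
```

A push to `main` with `deploy_branch="main"` would trigger the deployment script, while a push to any other branch would be ignored.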

Deployment Trigger URL

This option lets you integrate your app with a third-party service or a custom deployment script. When the URL receives a request, the deployment script is triggered.
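A third-party service or custom script fires the trigger by sending an HTTP request to the URL. The sketch below uses Python's standard `urllib.request`; the URL is a made-up placeholder (copy the real one from your site's deployment settings), and using POST is an assumption.

```python
import urllib.request


def build_trigger_request(trigger_url: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to a deployment trigger URL."""
    return urllib.request.Request(trigger_url, data=b"", method="POST")


def trigger_deployment(trigger_url: str, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code."""
    request = build_trigger_request(trigger_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Hypothetical placeholder URL; the real trigger URL contains a secret token.
TRIGGER_URL = "https://example.com/deploy?token=REPLACE_ME"
```

Because the trigger URL embeds a secret, it belongs in the calling service's configuration rather than in source control.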

Update the Repo and the Branch

You can use these options if you need to install a newer version of the same project from a different repository, or to update the branch in Laravel Forge. If you update the branch, you may also need to update the branch name in the deployment script.

Environment

Laravel Forge automatically generates an environment file for the application, and some details, such as database credentials, are added to it automatically. If the app uses an API, you can safely place the API key in the environment file. Even if you are running a generic PHP web app, you can access the environment variables using the getenv() function.
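The environment file is a list of `KEY=VALUE` lines that the application reads at runtime. As a rough illustration in Python rather than PHP, the sketch below parses a simplified version of that format and reads a value back with `os.getenv`, Python's counterpart to PHP's `getenv()`. The parser skips Laravel-specific features such as variable interpolation, and the key names and values are hypothetical.

```python
import os


def load_env_text(text: str) -> dict:
    """Parse simple KEY=VALUE lines into a dict.

    Simplified: skips blank lines and '#' comments and strips one layer of
    matching quotes. It does not implement the full .env rules Laravel
    supports (interpolation, multi-line values, and so on).
    """
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


env_file = """
# hypothetical values for illustration only
DB_DATABASE=forge
PAYMENT_API_KEY="sk_test_123"
"""

os.environ.update(load_env_text(env_file))
api_key = os.getenv("PAYMENT_API_KEY")  # the key never appears in the code itself
```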

Queue Worker

Starting a queue worker in Forge is the same as running the queue:work Artisan command. Laravel Forge manages queue workers with a process monitor called Supervisor, which keeps the process running permanently. You can create multiple queues based on queue priority or any other classification you find helpful.
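Laravel's worker can be given a comma-separated list of queues, as in `php artisan queue:work --queue=high,default`, and it processes jobs on `high` before moving on to `default`. The Python sketch below imitates that ordering with in-memory queues; it is a simulation of the scheduling idea, not how Laravel or Supervisor is implemented, and unlike a real worker it stops once every queue is empty.

```python
from collections import deque


def work(queues: dict, priority: list, handle) -> list:
    """Process jobs, always taking the next job from the highest-priority
    non-empty queue. Returns the jobs in the order they were handled."""
    processed = []
    while True:
        # First queue name in priority order that still has jobs, if any.
        name = next((q for q in priority if queues.get(q)), None)
        if name is None:
            return processed
        job = queues[name].popleft()
        handle(job)
        processed.append(job)


queues = {"high": deque(["h1", "h2"]), "default": deque(["d1"])}
order = work(queues, ["high", "default"], handle=lambda job: None)
```

Here `order` is `["h1", "h2", "d1"]`: both high-priority jobs run before the default one.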

Project Creation in Envoyer

The first step is to create an account on Envoyer and log in; a free trial is available. Via your profile page > Integrations, you can link a source control system such as Bitbucket or GitHub. Enter the access token for the service you are using, and you're ready to create your first project.

First Deployment:

Envoyer needs to be able to communicate with your Forge server, which is done via an SSH key. You will find the SSH key under the 'Key' button on the Servers tab in Envoyer. Enter this key in the SSH tab for your server in Laravel Forge.

The final step is to add the environment file. Click the 'Manage Environment' button on the Servers tab in Envoyer. You then enter an encryption key, which Envoyer uses to encrypt your environment file, since it contains access tokens and passwords.

Conclusion

This blog gave you an insight into the Laravel framework and deployment with Forge and Envoyer.

Laravel Forge and Envoyer are excellent tools that make deployment a cakewalk. Both tools have many features and an easy-to-use UI that lets you create and provision servers and deploy applications without hassle.

Numerous web app development companies are available in the market. However, clients turn to lucenta solutions to build their customized apps. We code your imagination with our 4D technique: Determine, Design, Develop, and Deliver. With proficient team members, we can overcome every obstacle on our path to success. Your satisfaction is our prime mantra!

What is the Codigo Postal API?

This API lets you retrieve information about a physical address. You can use the data to find a specific address, look up customer information, or check addresses against an existing database.

The service returns geocoded address data based on the request, which can be a human-readable street address or a zip code. The geocoded data includes all the features of the actual address, including name, phone, URL, classifications, and more.

You can also get the data in JSON, XML, or CSV format. This is useful if you want to export the results in bulk for offline analysis.
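For bulk export, results can be flattened into CSV with Python's standard `csv` module, as in the sketch below. The field names (`postal_code`, `locality`) are hypothetical; use whichever fields the API actually returns.

```python
import csv
import io


def results_to_csv(results: list, fields: list) -> str:
    """Flatten address results (dicts) into CSV text for offline analysis.

    Missing fields become empty cells; keys not listed in `fields` are ignored.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=fields, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()
```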

Result types and address components

Each result has a type array that indicates the type of the returned feature. These types include locality, sublocality, and administrative_area.

Several types of locality may be included in each result, such as city, county, and state. Depending on the country, these may be returned as separate fields or as different components of an address.

Results can also contain a formatted_address component, which contains the human-readable format of the address. This can be useful if you need to build custom formats or display the information in a way that is more user-friendly than just displaying the address as a string.
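The sketch below shows how a client might pick out an address component by its type and read the formatted address. The JSON layout (`results`, `address_components`, `long_name`, `types`) is an assumption modeled on common geocoding responses, and the sample values are illustrative, not real API output.

```python
import json

# Illustrative response in an assumed layout; not actual Codigo Postal API output.
SAMPLE = """{
  "results": [{
    "formatted_address": "Calle Mayor 1, 28013 Madrid, Spain",
    "types": ["street_address"],
    "address_components": [
      {"long_name": "28013", "types": ["postal_code"]},
      {"long_name": "Madrid", "types": ["locality", "political"]},
      {"long_name": "Comunidad de Madrid", "types": ["administrative_area_level_1", "political"]}
    ]
  }]
}"""


def component(result: dict, wanted_type: str):
    """Return the long_name of the first component with the given type, or None."""
    for comp in result.get("address_components", []):
        if wanted_type in comp.get("types", []):
            return comp.get("long_name")
    return None


result = json.loads(SAMPLE)["results"][0]
city = component(result, "locality")
display = result["formatted_address"]  # ready-made human-readable string
```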

The service can also be used to create new geocoded address data, which can be stored as a JSON object or a CSV file. The API can also be used to check or verify addresses, as well as enrich them with demographic data.

Linus digital asset trading platform's unique and versatile API drives the future of the digital economy

Smart contracts are like computers that are not connected to the internet: they have their own intrinsic value, which is to create and trade tokens. However, just as computers unleash tremendous innovation and value when they are connected to the Internet, smart contracts become immensely powerful once they are connected to the rapidly growing off-chain data and API economy. If smart contracts can connect to a wide variety of data sources, such as off-chain data providers, web APIs, enterprise systems, cloud providers, IoT devices, payment systems, and other blockchains, they will become a mainstream digital protocol across many industries.

Data and Data Exchange

Data are results or information derived from observations, such as measuring the outdoor temperature, calculating the geographic location of a car, or recording a user's interaction with an application. Raw data by itself is neither especially valuable nor reliable; it needs to be interpreted or cross-checked against other data to ensure its authenticity and validity.

If data is to be a central pillar of next-generation applications, those applications cannot depend entirely on internally generated data; instead, a mechanism for trading data must be established, since buying data costs much less than producing it. For example, developing algorithms for self-driving cars requires large amounts of data for target detection, target classification, target localization, and motion prediction. Developers can generate this data internally, but only at the cost of accumulating millions of miles of driving; alternatively, they can buy it through APIs.

An application programming interface (API) is a set of commands that controls how external applications access the data sets and services inside a system. APIs are the standard solution for data and service transactions today.

Linus multifunctional APIs help the digital economy grow

The Linus platform offers a variety of fully functional trading web APIs that cover key trading functions while providing familiar entry points for different trading styles and user types. Users can use any of these APIs to gain read access to public market data, and the private API to gain read access to their own accounts.

The Linus platform provides public and private WebSocket APIs that make it easier to exchange data between client and server, allowing the server to actively push data to the client. With WebSocket, the browser and server only need to complete a single handshake; after that, a persistent connection exists between the two and data can be transferred in both directions. The WebSocket API has two URLs:
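The single handshake mentioned above follows the WebSocket protocol (RFC 6455): the server proves it understood the upgrade request by hashing the client's `Sec-WebSocket-Key` header together with a fixed GUID and returning the result as `Sec-WebSocket-Accept`. The sketch below shows only that step of the standard protocol, not anything specific to Linus.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value for a client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")
```

Using the sample key from the RFC, `websocket_accept("dGhlIHNhbXBsZSBub25jZQ==")` returns `"s3pPLMBiTxaQ9kYGzzhZRbK+xOo="`; once the client verifies this value, the same TCP connection carries messages in both directions.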

(a) WebSocket URL: clients use it to connect to the API, send messages, and receive notifications.

(b) Connection URL: used to send messages back to clients (a connection ID is required to send to a specific client).
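To illustrate why sending back to a specific client requires a connection ID, here is a minimal in-memory sketch of server-side routing. The `ConnectionRegistry` class and its methods are hypothetical and are not part of the Linus API.

```python
from typing import Callable, Dict


class ConnectionRegistry:
    """Hypothetical in-memory map from connection ID to a send callback."""

    def __init__(self) -> None:
        self._senders: Dict[str, Callable[[str], None]] = {}

    def connect(self, connection_id: str, send: Callable[[str], None]) -> None:
        # Called once the handshake for a new client has completed.
        self._senders[connection_id] = send

    def disconnect(self, connection_id: str) -> None:
        self._senders.pop(connection_id, None)

    def send_to(self, connection_id: str, message: str) -> bool:
        """Deliver to one client; returns False if the ID is unknown."""
        send = self._senders.get(connection_id)
        if send is None:
            return False
        send(message)
        return True

    def broadcast(self, message: str) -> int:
        """Deliver to every connected client; returns how many were sent."""
        senders = list(self._senders.values())
        for send in senders:
            send(message)
        return len(senders)


# Usage: lists stand in for real socket connections.
inbox_a: list = []
inbox_b: list = []
registry = ConnectionRegistry()
registry.connect("conn-a", inbox_a.append)
registry.connect("conn-b", inbox_b.append)
registry.send_to("conn-a", "order filled")
```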

Users can use the WebSocket API to receive push notifications, including any updates to the public order book and their private accounts. The Linus platform built the WebSocket API to support security authentication, order entry, fill reports, market data queries, customer profile subscriptions, and many other features.
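Order book push updates are often sent as incremental changes to individual price levels. The sketch below assumes a hypothetical update shape (`side`, `price`, `size`, where a size of zero means the level was removed) and shows how a client could keep a local copy of the book current; Linus's actual message format is not described here.

```python
from decimal import Decimal
from typing import Dict

# side ("bids" or "asks") -> {price: size}
Book = Dict[str, Dict[Decimal, Decimal]]


def apply_update(book: Book, update: dict) -> None:
    """Apply one price-level change; a size of 0 removes the level."""
    levels = book[update["side"]]
    price = Decimal(update["price"])  # Decimal avoids float rounding on prices
    size = Decimal(update["size"])
    if size == 0:
        levels.pop(price, None)
    else:
        levels[price] = size


def best_bid_ask(book: Book):
    """Highest bid and lowest ask, or None for an empty side."""
    best_bid = max(book["bids"]) if book["bids"] else None
    best_ask = min(book["asks"]) if book["asks"] else None
    return best_bid, best_ask


book: Book = {"bids": {}, "asks": {}}
for msg in [
    {"side": "bids", "price": "100.0", "size": "2"},
    {"side": "bids", "price": "100.5", "size": "1"},
    {"side": "asks", "price": "101", "size": "3"},
    {"side": "bids", "price": "100.5", "size": "0"},  # level removed
]:
    apply_update(book, msg)
```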

Conclusion

The future of the world will be dominated by the digital economy, and the pillar of the digital economy is data. Linus' unique multifunctional API will drive the faster development of the digital economy.

Group Buy SEO Tools

Most SEO providers offer onsite optimization, with 91% of SEOs practicing it. Google+ optimization is also widely practiced, with 86% saying they offer this service to their clients. For affiliate marketing, only a small percentage (11%) of SEOs provide this service to their clients.

Other services provided by SEOs:

Content creation/optimization

Link building

Citation building

Social media marketing

Site development

PPC

Mobile site development

Video marketing and mobile advertising

The service SEO customers request most is onsite optimization, while the least requested is affiliate marketing. The other services are in moderate demand. However, most small and medium businesses do not understand the opportunities that video and mobile marketing can unlock for them and therefore tend not to use these services. SEOs therefore need to educate their clients about these marketing channels so that they can opt for them.

When asked which online SEO services they consider effective, 82% of SEOs said that general search is the most reliable for generating leads. Local search appears to be second best, with 62% of respondents in an SEO survey voting in its favor. PPC is third with 53%, while social media is fourth with 26%. Daily deals seem to interest very few SEOs, since only 1% voted in their favor.

Laborious SEO Tasks

55% of SEOs find link building to be the most tedious and time-consuming task. By contrast, only 15% think that content writing is the most tiresome and time-consuming of all their tasks. It therefore appears that, for the 55% who find link building laborious, the task is also boring.

Future Projections

This is a summary of what we expect in the near future as far as the SEO business is concerned.

Growth of SEO Businesses

Compared to previous years, SEO businesses have been growing and expanding steadily. Most SEOs (about 93%) are not only hopeful about expanding their businesses but also expect them to grow. In what appears to be a determined move to expand, most SEOs (82%) plan to hire more staff to reach their desired levels of growth.

Social media is also expected to grow and become even more effective than local directories, because many SEOs believe social networks perform better thanks to referrals from friends. Mobile usage is also expected to grow further and become relevant for local businesses.

The Upsurge of Confidence in SEO Services

Unlike other industries, the SEO business is showing a positive growth in confidence among players. Although this is a dynamic and innovative sector, most SEOs are still ready to hire more staff to help drive their businesses forward. 84% of the SEOs surveyed are also confident that the SEO business will become more lucrative starting this year, 2013. Confidence is also running high that if SEO players improve business owners' understanding of SEO and social media, they will not only boost those owners' return on investment but also lead them to shift their marketing budgets from other channels to digital channels.

1. Google Analytics

Google Analytics is to the online marketer what air is to a person. You can't survive without it.

If you're not yet using Google Analytics, I suggest you start as soon as possible. No other data source provides such high-quality, detailed data as Google Analytics. In a Google-dominated era, it's what we have to work with. Most other data tools essentially piggyback on Google's data via the API.

Appraisal

You can't ignore Google Analytics. Virtually all the important and meaningful data about site visitors, traffic, and so on comes from this basic source.

More Information

See a discussion of how Analytics helps drive high-converting search traffic.

Learn a few Google Analytics reports that can help grow organic traffic.

2. Google Search Console

If Google Analytics is the air an online marketer breathes, then Google Search Console (formerly Webmaster Tools) is the food the online marketer eats.

"Webmaster" in the former name is something of a misnomer. The tool is for more than webmasters; it's for SEOs. The very heart of the tool is "search" and "optimization."

Some of GSC's best insights come from simply looking at the dashboard. SEOs and marketers need to keep up with the dashboard all the time. Google Search Console provides some of the same data as Analytics, but in a simpler and more direct way. There's less tweaking you can do with the numbers, but easier access to them.

3. Ubersuggest

Ubersuggest is an all-in-one SEO tool designed to help you win the game of SEO. A big part of SEO success is knowing what you're doing, what your competitors are doing, and how to adjust your strategy to take advantage of opportunities in the market.

Getting started with Ubersuggest is as simple as typing a domain or keyword into the search bar. From there, you get instant access to a report for your chosen domain or keyword. For this post, I want to focus on Ubersuggest's keyword search capabilities. It's a great alternative to the Google Keyword Planner tool, which has lost some of its luster over the years. After running a search for your primary keyword, here's how you can use Ubersuggest to uncover related keywords to include in your content.

Step #1: Click "Keyword Ideas"

In the left sidebar, click "Keyword Ideas" to be taken to a comprehensive report.

4. MozBar

Information is the name of the game with the MozBar. When you think about it, SEO has a lot to do with knowing the secret sauce, and the MozBar helps you find it at a glance. The MozBar button sits right in your browser toolbar, where all the action happens. Click the MozBar, and you generate an instant report on the site you're visiting.

5. SEO Workers Analysis Tool

The Analysis Tool lets you plug in a site URL (any site, even if it's not yours) and generate a report. SEO Workers doesn't provide anything new, but it saves a lot of time in the analysis. It also provides background information on the data. Some of the data, like the HTTP Headers Check, is under-the-hood stuff that you wouldn't normally think to check. Here is SEO Workers' unique feature: they teach you SEO in addition to showing you the SEO data that matters. Within each analyzed category, the report provides a detailed discussion, and often a video (featuring Matt Cutts).

6. WooRank

At first, WooRank appears to be just another freemium site analyzer. On closer examination, however, it provides handy insights into some not-so-obvious issues. WooRank first shows a "Main Concerns" section. Unfortunately, this is where one of the tool's drawbacks shows. Somehow, it thinks that I have a 404 error, lack a favicon, and don't have a robots.txt file. None of these is correct.

7. Neil Patel SEO Analyzer

I'm assuming that you're already aware of my free site analyzer. I believe it's worth mentioning, however, as a final free tool at your disposal. One feature that I've worked hard on is the competitive analysis. Part of the success of online marketing is knowing how your competitors are doing.

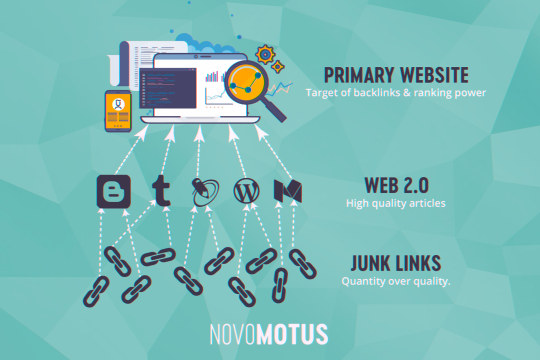

The Next 5 Things You Should Do For Web 2.0 Sites Success

8 Guilt Free Benefits Of Using Web 2.0 Tips

At DBurns Style, we're an internet marketing agency focused on delivering results for our clients. Recently, we worked with a large chemical supplier called Shepard Bros. They've been in business for over 40 years and thought it was time to redesign their website. We know that a company website is very important.

So we began redesigning their website with the goal of building something that attracted more traffic from different sources. One of the keys to this successful SEO project was web 2.0 link building. If you don't know what this is, let us explain in this article.

To round off, we'll show you how we used this strategy in the Shepard Bros case. Link building is one of the oldest SEO tactics around and is all about building backlinks to a site. The more links you have pointing people back to a website, the higher it will rank.

The value of this SEO method is that it allows you to build links on sites with high domain authority. All web 2.0 sites have this, which means you'll get backlinks from authority websites like Twitter or Facebook. When a site has high-authority backlinks, they will only help push it up the rankings.

The key idea of web 2.0 link building is that you create your own web 2.0 sites to build links from. You can set up blogs on different platforms like WordPress or Tumblr, produce unique content, and link back to the target website naturally. There are lots of benefits to this method, and we have listed the key ones below:

Cost Efficient: Web 2.0s are completely free to create, which makes this technique incredibly easy on your budget.

Safe: Web 2.0 link building is completely safe and follows all of Google's link building rules, meaning you'll never get penalized.

Numerous Links: Perhaps the best advantage of web 2.0 link building is that you can link to your site many times across different authority websites. You can also link to it on the same website, just in a different piece of content.

At DBurns Style, we used it as best we could to help Shepard Bros build a new and better website. To do this, we began by structuring the site so that each page targets its own set of highly relevant keywords. Then, using web 2.0 link building, we made the most of these keywords.

We worked the keywords into the content so that it read naturally from a user's point of view, and we made sure to link from those keywords, creating high-authority backlinks to the Shepard Bros website. The more links we built, the higher the website rose in the rankings. After just four months, we saw some remarkable website statistics that demonstrated the success of our SEO campaign:

Site sessions increased by 45.08%

Users increased by 18.77%

Page views increased by 42.15%

Session duration increased by 37.5%

Organic traffic increased by 29%

Direct traffic increased by 50%

Referral traffic increased by 85%

Social traffic increased by 344%

While SEO is a long-term strategy, it speaks volumes about the effectiveness of our work that we saw such great results after just four months.

It's common knowledge that Web 2.0 backlinks are an important part of any (effective) private blog network, right? Web 2.0 backlinks are not only FREE but also super helpful for increasing your PBN's authority and link juice. Despite all this goodness, it's still very hard to find good information on how to set up a successful Web 2.0 PBN link farm.

Look: Web 2.0s are simple; they are profiles created on free subdomains with lots of quality posts. How? Here is an example: I built this Web 2.0 last year for a small project; it ranked pretty well for my target keyword ... I have since taken down the project, as I am rebuilding the platform on Node.js.

Glad you asked! Lately, I have been experimenting a lot with Web 2.0s, and the following checklist works best for me: Set up a minimum of 10 posts and a maximum of 20 posts per Web 2.0 over a period of 3-4 months. Build each Web 2.0 blog very slowly, grow it, and make it look like a genuine blog.

Target LSI and long-tail keywords; you may naturally rank for several terms, and the organic traffic will give your blog greater authority and make it look natural. Set up the required social media profiles. Upload a video to YouTube; you can make a quick video using Animoto (I started adding videos to my Web 2.0 blogs earlier this year).

You can use a Web 2.0 blog network in two possible ways: 1) Use it as Tier 1 (buffer sites). Buffer sites? Here is the definition: a buffer site acts as an intermediary between your money site and your private blog network. Why do we need this? If your link building strategy includes links from other high-authority blog networks (owned by someone else), it's a good idea to use a buffer site.

2) Use it as Tier 2. Look: the above image speaks a million words! Numerous Web 2.0 blogs are created that point toward your Tier 1 links. If you plan to use the setup above, make sure to spam your Web 2.0s with GSA Search Engine Ranker to pass as much link juice to them as possible.

Before we start finding the desired Web 2.0 domains, here is what we will need: I will look for some quality expired Web 2.0s in the hosting niche. First, we need a few keywords to start with. For this tutorial, we will use Ubersuggest. Alternatively, you can use Keywordtool.io, but while their Pro Plus version is ideal, it comes with a price tag of $88/month, which can be pricey if you want to keep your costs low.

The above number of keywords would be good enough for us to get going. Now: Click Consider As Text and copy all the keywords. Next: My favourite enjoyable part starts! Head over to your Windows VPSand begin your scrapebox ... now load your proxies. and paste your keywords from ubersuggest to scrapebox keyword list field: Once everything is set as the above image, struck the Start Gathering button and you will see this: Select Google, Bing, MyWebSearch and DuckDuckGo You can experiment and choose others.

I highly recommend checking out my parent company's official Scrapebox-approved Windows VPS to speed up the scraping process. Before you proceed further, let's trim the URLs and remove the duplicates. Once the process is finished, click Addons > Show Available Addons. Now download the Scrapebox Alive Check addon. Click Addons > Scrapebox Alive Check, and you will be greeted with the screen above.
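The "trim the URLs and remove the duplicates" step boils down to reducing every harvested URL to its root and keeping each root once. The Python sketch below illustrates that idea outside Scrapebox, assuming you have exported the harvested URLs as a plain list; the function name `trim_to_root_and_dedupe` is hypothetical, and this is not how Scrapebox itself implements the feature.

```python
from urllib.parse import urlsplit


def trim_to_root_and_dedupe(urls):
    """Reduce each URL to scheme://host/ and drop duplicates, keeping first-seen order."""
    seen = set()
    roots = []
    for url in urls:
        parts = urlsplit(url.strip())
        if not parts.scheme or not parts.netloc:
            continue  # skip lines that are not absolute URLs
        # Hostnames are case-insensitive, so normalize them before comparing.
        root = f"{parts.scheme.lower()}://{parts.netloc.lower()}/"
        if root not in seen:
            seen.add(root)
            roots.append(root)
    return roots


harvested = [
    "https://example.tumblr.com/post/123/some-title",
    "https://EXAMPLE.tumblr.com/archive",
    "http://another.blogspot.com/2019/05/page.html",
    "not a url",
]
print(trim_to_root_and_dedupe(harvested))
```

Working on roots rather than full URLs is what makes the later alive/dead check meaningful: you want to know whether the subdomain itself still exists, not whether one particular post does.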

10 Places To Get Deals On Web 2.0

Next: click Setup and set the success code to 404, as shown in the screenshot above. REMOVE everything else! Check Use Proxies! Hit the START button and ... grab some popcorn while the process completes. The addon checks whether the scraped domains still exist; if NOT, we have found a bunch of gold mines in our niche.

Click Check Indexed and then select Google Indexed. You will now see the screen below: once you are done, export the list. Next: go to Addons > Show Available Addons and download the Scrapebox Vanity Name Checker. Download that addon ... and open it from the Addons tab. Now load the URLs from the Harvester and click START! Wait.

Finally: let's check the Domain Authority and Page Authority for our list of domains. We will use CheckMoz as our bulk domain stats checker. Enter all the URLs you just collected with Scrapebox into CheckMoz and click the FIND MOZ METRICS button! Here are the results: out of all that work, we found six good Web 2.0 sites with great stats that we can re-register on Tumblr.

You can repeat the process with other Web 2.0 platforms. Before you begin the setup process, make sure to grab .NET Framework 4.0. Once you start the software, you will be greeted with the following screen: P.S. I am using their Pro version for this tutorial. Next: click the Web 2.0 Hunter button and you will be greeted with the following screen. First, we look at a few settings before starting the software.

Now: we will select all the search options and leave the custom date range unchecked. Next: click the Setup and Assist tab at the top, import your proxy files, and use them as your main proxies. Make sure to check the Auction Searching, Quality Monitoring, Searching, Expired Domain and Web 2.0 Checking options.

#Asia Virtual Solutions#web2.0 backlinks#Search Engine Optimization#web 2.0#Web 2.0 Backlinks#web 2.0 sites#Benefits Of Using Web 2.0#using web 2.0#link building


Advertise your product or service online with Classified Ad Posting to increase website traffic

Online classified advertising is the process of advertising a product or service by writing an engaging description of it. The description can include the name, phone number, address, website URL, price, email address, and a photo of the product or service being promoted. It is called classified advertising because all the ads that relate to a specific category are grouped under one heading. For instance, cars that are available for hire or for sale would be classified under automobiles.

The process of classified ad posting involves the following steps:

Identifying classified ad posting directories

To benefit from classified ad posting, make sure the directories have a good page rank. You can also use Alexa to find a site's rank and identify the sites that link to it. This will help you build your list of classified advertising directories. Post your ads in the directories with the highest potential for reaching customers: the more customers, the better the visibility. Remember the web marketing rule of five, which says to post your classified ad to at least 5 sites.

Rules for classified ad posting

Every directory has its own rules for accepting and rejecting classified ad postings. Before you submit your classified ads to a directory, it pays to read the rules stated there.

Creating your classified advertisement

This is the most critical step of all. First, identify the product or service you want to offer. Then, with that in mind, write a short ad of no more than 500 characters that describes the product or service and emphasizes its benefits. Microsoft Word is a good tool for editing your ad and correcting spelling and grammar mistakes.

Tracking and testing

Once you have submitted your classified ad to a directory, it is essential to know which ad converts the most and from which directory. This will help you concentrate your efforts where needed and get the best results. Remember Pareto's Law: 80% of the business comes from 20% of the customers. Focus your efforts on the 20% of classified ad directories that convert, which you can identify by tracking them continuously. This way, you will also learn your conversion rate.

Classified ad syndication

Identify sites where you can place one classified advertisement and have it posted from there to many other sites, via an API or RSS. This helps get your advertisement published in many places, increasing its visibility.

See more here: free classified ads


Firebase Cloud Messaging

Notifications have become such a stable part of the web that it is now very common for the sites you visit to ask permission to send notifications to your browser. The Push API is the most modern and valuable addition to web browsers for handling push notifications. According to caniuse, the API has good support in modern Chrome and Firefox browsers.

Pusher and Firebase are among the services for implementing notifications on the web. In this article, we will implement the Firebase Cloud Messaging (FCM) service, which gives you a reliable and free messaging solution.

We assume the reader has some familiarity with writing a back-end application in Express.js and/or some familiarity with React. If you are comfortable with only one of these technologies, you can work through either the frontend or the backend part. We will implement the backend first, followed by the frontend, so you can jump to the section that applies to you. So let’s get started.

Classification of Firebase Messages

According to the Firebase documentation, an FCM implementation requires two components.

A trusted environment, such as Cloud Functions for Firebase or an app server, on which to build, target, and send messages.

An iOS, Android, or web (JavaScript) client app that receives messages via the corresponding platform-specific transport service.

We will take care of item 1 in our Express back-end app and item 2 in our React front-end app.

There are two types of messages that FCM can send.

Notification messages, sometimes called display messages, which are handled automatically by the FCM SDK and can carry an optional data payload.

Data messages, which are handled by the client app.
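To make the difference between the two message types concrete, here is a Python sketch that builds request bodies in the shape used by the FCM HTTP v1 API: a top-level "message" object with a target "token" plus a "notification" block, a "data" block, or both. It only constructs dictionaries and sends nothing. The helper names and the device token are hypothetical placeholders, and the tutorial itself sends messages from Express, so treat this as an illustration of the payload shape rather than the project's code.

```python
import json


def _stringify(data):
    # FCM data payloads are string-to-string maps, so convert every key and value to str.
    return {str(key): str(value) for key, value in data.items()}


def notification_message(token, title, body, data=None):
    """A notification ("display") message; an optional data payload can ride along."""
    message = {"token": token, "notification": {"title": title, "body": body}}
    if data:
        message["data"] = _stringify(data)
    return {"message": message}


def data_message(token, data):
    """A data-only message; the client app decides what to do with it."""
    return {"message": {"token": token, "data": _stringify(data)}}


DEVICE_TOKEN = "example-registration-token"  # hypothetical placeholder
print(json.dumps(notification_message(DEVICE_TOKEN, "New message", "You have 1 unread message"), indent=2))
print(json.dumps(data_message(DEVICE_TOKEN, {"messageId": 42, "sender": "alice"}), indent=2))
```

The practical difference is who renders the alert: with a notification block, the SDK can display it for you, while a data-only message leaves both display and handling to your own client code.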

In this tutorial, we will learn how to send messages and receive them in the client app.

Setting Up A Firebase Project

The first step is to set up a Firebase project. FCM is one of the project's services, and we will need some API keys from it. If you don't have a Google account yet, create one first (skip this if you already have one).

Once your Google account is ready, head over to the Firebase console.

In the Firebase console, carry out the following steps:

1. Click Add project.
2. Enter a name and click Continue.
3. Enabling Google Analytics is optional; you can always turn it on later.
4. Click Continue and wait a few minutes while the project is created.
5. Click Continue to open the project.
6. Next, we need the keys required to connect to the project. The frontend and backend are configured separately, as described below.

FRONTEND

1. On the project page, click the icon to add Firebase to your web app.
2. Give your app a nickname.
3. Click Register app and wait a few seconds for it to complete.
4. On the next screen, copy the app credentials and store them somewhere, or leave the window open so you can check them later.
5. Click Continue to console to return to your console.

BACKEND

1. A service account is needed to connect to our Firebase project from the backend.
2. On the project page, click the gear icon next to Project Overview to create a service account for use with the Express backend.
3. The screenshot indicates steps 1 to 4.
4. A JSON file is downloaded.
5. Don’t download it until you are ready to use it.
6. Come back to this section if you need a refresher.
We have now successfully set up a Firebase project and added a web app to it, and we have the credentials needed for both the frontend and the backend.
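The downloaded JSON file is the backend's credential, so it is worth keeping it out of version control and pointing to it with an environment variable. The Python sketch below assumes the path is stored in GOOGLE_APPLICATION_CREDENTIALS, the variable Google's client libraries conventionally read, and only checks that the file looks like a service-account key. It does not initialize Firebase, and `validate_service_account` and `load_service_account` are hypothetical helpers.

```python
import json
import os

# Fields every Google service-account key file contains and a server SDK relies on.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def validate_service_account(info: dict) -> dict:
    """Raise ValueError if `info` does not look like a service-account key."""
    missing = [field for field in REQUIRED_FIELDS if not info.get(field)]
    if missing:
        raise ValueError("service-account file is missing: " + ", ".join(missing))
    if info["type"] != "service_account":
        raise ValueError(f"expected type 'service_account', got {info['type']!r}")
    return info


def load_service_account(path=None) -> dict:
    """Load the key file from `path`, or from GOOGLE_APPLICATION_CREDENTIALS if no path is given."""
    path = path or os.environ.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        raise RuntimeError("set GOOGLE_APPLICATION_CREDENTIALS to the JSON key's path")
    with open(path, encoding="utf-8") as fh:
        return validate_service_account(json.load(fh))
```

In the Node.js backend, the parsed object would typically be passed to the Firebase Admin SDK through admin.credential.cert(...). Either way, add the key file to .gitignore so it never lands in the repository.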

Let’s see how to send push notifications from the Express backend.

Getting Started

I have set up a project on GitHub with both a server and a client. To get started:

Fork the repo and check out the 01-get-started branch.

Open the project in your code editor of choice and observe the contents.

In the project root, we have two folders, client/ and server/. There’s also a .editorconfig file, a .gitignore, and a README.md.

The client folder contains a React app. This is where we will listen for notifications.

The server folder contains an Express app. This is where we’ll send notifications from. The app comes from the project we built in my other article.

Open a terminal and navigate to the client/ folder. Run the yarn install command to install the project dependencies. Then run yarn start to start the project. Visit http://localhost:3000 to see the live app.

Create a .env file inside the server/ folder and add the CONNECTION_STRING environment variable. This variable is a database connection URL pointing to a PostgreSQL database. If you need help with this, check out the Connecting The PostgreSQL Database And Writing A Model section of my linked article. You should also provide the PORT environment variable since React already runs on port 3000. I set PORT=3001 in my .env file.

Open a separate terminal and navigate to the server/ folder. Run the yarn install command to install the project dependencies. Run yarn runQuery to create the project database. Run yarn startdev to start the project. Visit http://localhost:3001/v1/messages and you should see some messages in a JSON format.
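The server's .env file from the steps above ends up holding just two variables, CONNECTION_STRING and PORT=3001. The Express app would typically read it with a library such as dotenv; the Python sketch below is only a tiny stand-in parser for the same KEY=VALUE format, written to show what the file contains and to check that the server port does not clash with React's port 3000. The connection string is a hypothetical example, so substitute your own database URL.

```python
REACT_PORT = 3000  # the React dev server's port, per the tutorial


def parse_env(text):
    """Parse KEY=VALUE lines, ignoring blank lines and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split once so values may contain '='
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def check_server_env(env):
    """Ensure the server's variables are present and PORT avoids React's port."""
    if "CONNECTION_STRING" not in env:
        raise KeyError("CONNECTION_STRING is missing")
    port = int(env.get("PORT", "0"))
    if port in (0, REACT_PORT):
        raise ValueError("set PORT to something other than 3000, e.g. 3001")
    return port


sample = """
# server/.env (hypothetical values)
CONNECTION_STRING=postgres://user:password@localhost:5432/messages_db
PORT=3001
"""
env = parse_env(sample)
print(check_server_env(env))
```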

We will be happy to answer your questions on designing, developing, and deploying comprehensive enterprise web and mobile apps and customized software solutions that best fit your organization’s needs. As a reputed software solutions developer, we have expertise in providing dedicated remote and outsourced technical resources for software services at very nominal cost. Besides our full-stack expertise, we also build web solutions and mobile apps and work on system integration, performance enhancement, cloud migrations, and big data analytics. Don’t hesitate to get in touch with us!


Google Professional Machine Learning Engineer Exam Questions

New Google Professional Machine Learning Engineer Exam Questions have been released online to help you prepare for the Professional Machine Learning Engineer Exam to complete your certification. PassQuestion team collected Google Professional Machine Learning Engineer real exam questions and verified all the answers to ensure that you can pass Professional Machine Learning Engineer exam in the first go. Choosing Google Professional Machine Learning Engineer Exam Questions is a great way to complete your Professional Machine Learning Engineer exam.

Google Professional Machine Learning Engineer Exam Overview

The Machine Learning Engineer certification exam is a two-hour exam that assesses individuals’ ability to frame ML problems, develop ML models, and architect ML solutions. It also evaluates the ability to automate and orchestrate ML pipelines, prepare and process data, and monitor, optimize, and maintain ML solutions. We recommend that you have at least three years of industry experience, including one year of experience designing and managing solutions using Google Cloud, before taking the exam.

A Professional Machine Learning Engineer designs, builds, and productionizes ML models to solve business challenges using Google Cloud technologies and knowledge of proven ML models and techniques. The ML Engineer is proficient in all aspects of model architecture, data pipeline interaction, and metrics interpretation and needs familiarity with application development, infrastructure management, data engineering, and security.

Professional Machine Learning Engineer Exam Information

Length: Two hours

Registration fee: $200 (plus tax where applicable)

Language: English

Exam format: Multiple choice and multiple select

Exam Delivery Method:

Take the online-proctored exam from a remote location; review the online testing requirements first.

Take the onsite-proctored exam at a testing center; locate a test center near you.

Prerequisites: None

Recommended experience: 3+ years of industry experience including 1+ years designing and managing solutions using GCP.

Professional Machine Learning Engineer Exam Topics

Frame ML problems

Architect ML solutions

Prepare and process data

Develop ML models

Automate & orchestrate ML pipelines

Monitor, optimize, and maintain ML solutions

View Online Google Professional Machine Learning Engineer Free Questions

NO.1 You are an ML engineer at a global shoe store. You manage the ML models for the company's website. You are asked to build a model that will recommend new products to the user based on their purchase behavior and similarity with other users. What should you do?

A. Build a collaborative-based filtering model

B. Build a classification model

C. Build a regression model using the features as predictors

D. Build a knowledge-based filtering model

Answer: A
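Question 1 hinges on what collaborative filtering does: it recommends products that similar users bought, using only the history of user-product interactions. The minimal user-based sketch below uses cosine similarity on a tiny, invented purchase history; it illustrates the idea only and is not how a production recommender on Google Cloud would be built.

```python
from math import sqrt

# Hypothetical purchase history: user -> set of product IDs bought.
purchases = {
    "ana": {"runner", "trail", "sandal"},
    "ben": {"runner", "trail", "boot"},
    "chloe": {"sandal", "loafer"},
    "dev": {"runner", "boot"},
}


def cosine(a, b):
    """Cosine similarity between two binary purchase vectors given as sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def recommend(user, k=3):
    """Score products the user hasn't bought by the summed similarity of users who did."""
    mine = purchases[user]
    scores = {}
    for other, theirs in purchases.items():
        if other == user:
            continue
        sim = cosine(mine, theirs)
        if sim == 0:
            continue  # users with nothing in common contribute no signal
        for item in theirs - mine:
            scores[item] = scores.get(item, 0.0) + sim
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


print(recommend("ana"))
```

Note that no product features are involved: "boot" is suggested to ana only because users with overlapping purchases bought it. That reliance on behavior and user similarity is what distinguishes it from the classification, regression, and knowledge-based options.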

NO.2 You have been asked to develop an input pipeline for an ML training model that processes images from disparate sources at a low latency. You discover that your input data does not fit in memory. How should you create a dataset following Google-recommended best practices?

A. Convert the images to tf.Tensor objects, and then run tf.data.Dataset.from_tensors().

B. Convert the images to tf.Tensor objects, and then run Dataset.from_tensor_slices().

C. Convert the images into TFRecords, store the images in Cloud Storage, and then use the tf.data API to read the images for training.

D. Create a tf.data.Dataset.prefetch transformation

Answer: C

NO.3 You work for an online retail company that is creating a visual search engine. You have set up an end-to-end ML pipeline on Google Cloud to classify whether an image contains your company's product. Expecting the release of new products in the near future, you configured a retraining functionality in the pipeline so that new data can be fed into your ML models. You also want to use AI Platform's continuous evaluation service to ensure that the models have high accuracy on your test data set. What should you do?

A. Keep the original test dataset unchanged even if newer products are incorporated into retraining

B. Extend your test dataset with images of the newer products when they are introduced to retraining

C. Replace your test dataset with images of the newer products when they are introduced to retraining.

D. Update your test dataset with images of the newer products when your evaluation metrics drop below a pre-decided threshold.

Answer: B

NO.4 You are developing a Kubeflow pipeline on Google Kubernetes Engine. The first step in the pipeline is to issue a query against BigQuery. You plan to use the results of that query as the input to the next step in your pipeline. You want to achieve this in the easiest way possible. What should you do?

A. Use the BigQuery console to execute your query and then save the query results into a new BigQuery table.

B. Write a Python script that uses the BigQuery API to execute queries against BigQuery. Execute this script as the first step in your Kubeflow pipeline.

C. Locate the Kubeflow Pipelines repository on GitHub. Find the BigQuery Query Component, copy that component's URL, and use it to load the component into your pipeline. Use the component to execute queries against BigQuery.

D. Use the Kubeflow Pipelines domain-specific language to create a custom component that uses the Python BigQuery client library to execute queries

Answer: C

NO.5 You manage a team of data scientists who use a cloud-based backend system to submit training jobs. This system has become very difficult to administer, and you want to use a managed service instead. The data scientists you work with use many different frameworks, including Keras, PyTorch, Theano, scikit-learn, and custom libraries. What should you do?

A. Set up Slurm workload manager to receive jobs that can be scheduled to run on your cloud infrastructure.

B. Create a library of VM images on Compute Engine; and publish these images on a centralized repository

C. Configure Kubeflow to run on Google Kubernetes Engine and receive training jobs through TFJob

D. Use the AI Platform custom containers feature to receive training jobs using any framework

Answer: D
