#Speech Recognition AI

Photo

AI is outpacing human performance in reading comprehension, language understanding as well as image, handwriting, and speech recognition.

(via AI vs. human – which one is better in major benchmarks? (chart))

9 notes

·

View notes

Text

we invite you to explore the limitless possibilities that AI unlocks in the world of video production. The future is here, and it’s intelligent, creative, and boundless. Embrace it with Creative Splash!

#AI#AI can analyse trends#AI-powered voice and speech#recognition audience behaviour#future of Video#production role of AI#Video production#social media#data creation#technology advancement#video production

0 notes

Text

AI for Speech Recognition Market is Set To Fly High in Years to Come

Advance Market Analytics published a new research publication on "AI for Speech Recognition Market Insights, to 2028" with 232 pages and enriched with self-explained Tables and charts in presentable format. In the Study you will find new evolving Trends, Drivers, Restraints, Opportunities generated by targeting market associated stakeholders. The growth of the AI for Speech Recognition market was mainly driven by the increasing R&D spending across the world.

Get Free Exclusive PDF Sample Copy of This Research @ https://www.advancemarketanalytics.com/sample-report/113270-global-ai-for-speech-recognition-market

Some of the key players profiled in the study are: Nuance Communications (United States), Google LLC (United States), Amazon.com, Inc (United States), Apple, Inc. (United States), IBM Corporation (United States), Microsoft Corporation (United States), Baidu (China), iFLYTEK (China), Sensory, Inc. (United States), LumenVox LLC (United States).

Scope of the Report of AI for Speech Recognition

Speech recognition is a type of authentication technology that aids in receiving and comprehending human voice commands as well as carrying them out. This technique works by transforming commands into electronic signals, changing them into coding patterns, and transmitting them back to the device in digital format for final execution. Due to increases in processing capacity and improvements in internet connection, this technology has lately been adopted in mobile devices and other consumer goods, despite the fact that it was invented several decades ago. This tendency, combined with the growing popularity of artificial intelligence (AI) and virtual assistants like Apple Siri, Amazon Alexa, and Google Assistant, has fueled demand for this application in the electronics industry.

The titled segments and sub-section of the market are illuminated below:

by Type (Software, Hardware), Application (Automotive, Marketing, Automotive, Healthcare), Devices (Smartphones, Tablets, PCs and laptops), Deployment (Cloud base, On premises)

Market Trends:

Increasing Usage Of The AI-Based Voice Recognition Systems Develop Region

Opportunities:

Rising Adoption Of Speech Recognition In Various Sectors

Rising Digitization And Government Policies Favoring Incentives For Digitization

Market Drivers:

Increasing Demand Of Smart Devices Among People

Region Included are: North America, Europe, Asia Pacific, Oceania, South America, Middle East & Africa

Country Level Break-Up: United States, Canada, Mexico, Brazil, Argentina, Colombia, Chile, South Africa, Nigeria, Tunisia, Morocco, Germany, United Kingdom (UK), the Netherlands, Spain, Italy, Belgium, Austria, Turkey, Russia, France, Poland, Israel, United Arab Emirates, Qatar, Saudi Arabia, China, Japan, Taiwan, South Korea, Singapore, India, Australia and New Zealand etc.

Have Any Questions Regarding Global AI for Speech Recognition Market Report, Ask Our Experts@ https://www.advancemarketanalytics.com/enquiry-before-buy/113270-global-ai-for-speech-recognition-market

Strategic Points Covered in Table of Content of Global AI for Speech Recognition Market:

Chapter 1: Introduction, market driving force product Objective of Study and Research Scope the AI for Speech Recognition market

Chapter 2: Exclusive Summary – the basic information of the AI for Speech Recognition Market.

Chapter 3: Displayingthe Market Dynamics- Drivers, Trends and Challenges & Opportunities of the AI for Speech Recognition

Chapter 4: Presenting the AI for Speech Recognition Market Factor Analysis, Porters Five Forces, Supply/Value Chain, PESTEL analysis, Market Entropy, Patent/Trademark Analysis.

Chapter 5: Displaying the by Type, End User and Region/Country 2015-2020

Chapter 6: Evaluating the leading manufacturers of the AI for Speech Recognition market which consists of its Competitive Landscape, Peer Group Analysis, BCG Matrix & Company Profile

Chapter 7: To evaluate the market by segments, by countries and by Manufacturers/Company with revenue share and sales by key countries in these various regions (2023-2028)

Chapter 8 & 9: Displaying the Appendix, Methodology and Data Source

finally, AI for Speech Recognition Market is a valuable source of guidance for individuals and companies.

Read Detailed Index of full Research Study at @ https://www.advancemarketanalytics.com/reports/113270-global-ai-for-speech-recognition-market

Thanks for reading this article; you can also get individual chapter wise section or region wise report version like North America, Middle East, Africa, Europe or LATAM, Southeast Asia.

#Global AI for Speech Recognition Market#AI for Speech Recognition Market Demand#AI for Speech Recognition Market Trends#AI for Speech Recognition Market Analysis#AI for Speech Recognition Market Growth#AI for Speech Recognition Market Share#AI for Speech Recognition Market Forecast#AI for Speech Recognition Market Challenges

0 notes

Link

0 notes

Text

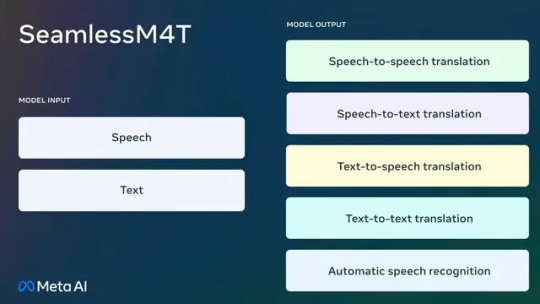

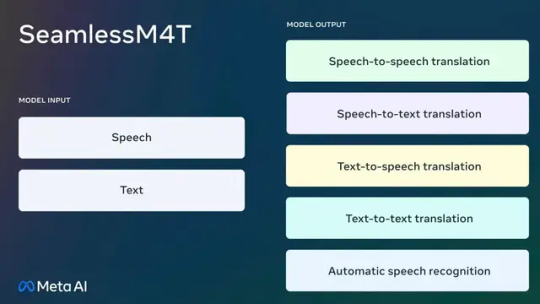

Meta Releases SeamlessM4T Translation AI for Text and Speech

Meta took a step towards a universal language translator on Tuesday with the release of its new Seamless M4T AI model, which the company says can quickly and efficiently understand language from speech or text in up to 100 languages and generate translation in either mode of communication. Multiple tech companies have released similar advanced AI translation models in recent months.

In a blog…

View On WordPress

#Applications of artificial intelligence#Computational linguistics#Creative Commons#Gizmodo#Human Interest#Internet#Large language model#Machine translation#Massively Multilingual#Massively Multilingual Speech system#META#Meta AI#Multilingualism#Paco#Paco Guzmán#Speech recognition#Speech synthesis#Technology#Translation

0 notes

Text

ngl it's driving me a little bit fucking insane that the whole conversation about image scraping for AI has settled on copyright and legality as a primary concern, and not consent. my shit should not be used without my consent. I will give it away for free, but I want to be asked.

I don't want to be included in studies without my knowledge or consent. I don't want my face captured for the training of facial recognition models without my knowledge or consent. I don't want my voice captured for the training of speech recognition models without my consent. I don't want my demographic or interest profile captured without my consent. I don't want my art harvested for visual model training without my consent. It's not about 'theft' (fake idea) or 'ownership' (fake idea) or 'inherent value' (fake idea). It's about my ability to opt out from being used as a data point. I object to being a commodity by default.

31K notes

·

View notes

Text

How to Use Large Language Models for Speech Recognition ?

Large language models (LLMs) are a type of artificial intelligence (AI) that can be used for a variety of tasks, including speech recognition. LLMs are trained on massive datasets of text and code, which allows them to learn the statistical relationships between words and phrases. This knowledge can then be used to predict the next word in a sequence, even if the audio is noisy or distorted.

There are two main ways to use LLMs for speech recognition:

First-pass recognition: In this approach, the LLM is used to generate a list of possible transcriptions for the audio. This list is then passed to a traditional speech recognition system, which uses its own algorithms to select the most likely transcription.

Second-pass rescoring: In this approach, the LLM is used to rescore the output of a traditional speech recognition system. This means that the LLM is used to evaluate the likelihood of each transcription, and the transcription with the highest likelihood is selected as the final output.

LLMs have been shown to be effective for both first-pass recognition and second-pass rescoring. In particular, LLMs can be used to improve the performance of speech recognition systems in noisy environments and for speakers with accents.

Here are some of the benefits of using LLMs for speech recognition:

Improved accuracy: LLMs can improve the accuracy of speech recognition systems by up to 20% in noisy environments.

Robustness to accents: LLMs are more robust to accents than traditional speech recognition systems. This is because LLMs are trained on a massive dataset of text from a variety of speakers.

Scalability: LLMs can be scaled to handle large volumes of audio data. This makes them ideal for use in applications such as call centers and customer service.

Here are some of the challenges of using LLMs for speech recognition:

Data requirements: LLMs require a large amount of training data to be effective. This can be a challenge for some applications, such as medical transcription, where there is limited data available.

Computational complexity: LLMs can be computationally expensive to train and use. This can be a challenge for some applications, such as real-time speech recognition.

Interpretability: LLMs are not always easy to interpret. This can make it difficult to debug and improve LLM-based speech recognition systems.

Overall, LLMs are a promising technology for speech recognition. They have the potential to improve the accuracy, robustness, and scalability of speech recognition systems. However, there are still some challenges that need to be addressed before LLMs can be widely adopted for speech recognition applications.

https://astconsulting.in/artificial-intelligence/nlp-natural-language-processing/how-to-use-large-language-models-speech-recognition/?utm_source=Tumblr&utm_medium=social&utm_campaign=promotion

0 notes

Text

Delve into the inner workings of ML-driven speech recognition systems, gaining insights into the impressive algorithms that have reshaped human-computer communication.

#ML Techniques for Speech Recognition#MachineLearningTech#technology#machine learning#ai tools#automation#VoiceControlledSystems

0 notes

Link

0 notes

Text

What is the audio datasets for machine learning, and how it helps different industries?

What is Audio Datasets?

An audio dataset for machine learning is a collection of audio recordings that have been labeled and organized for use in training machine learning algorithms. These datasets are typically used for tasks such as speech recognition, audio classification, and audio segmentation.

There are a variety of audio datasets available for machine learning, including the Common Voice dataset, the Speech Commands dataset, and the UrbanSound8K dataset. These datasets vary in size, scope, and quality, and are often designed for specific applications or use cases.

Audio datasets can be used in a variety of industries to improve efficiency, accuracy, and productivity. In the healthcare industry, audio datasets can be used to analyze patient recordings to identify patterns and diagnose conditions. In the finance industry, audio datasets can be used to transcribe earnings calls and other financial reports. In the entertainment industry, audio datasets can be used to improve speech recognition for virtual assistants and improve the accuracy of voice recognition for video game characters.

Overall, the use of audio datasets for machine learning is becoming increasingly important as more industries recognize the value of audio data and the potential it holds for improving their operations and services.

What are datasets used for in machine learning?

Datasets are used in machine learning to train, evaluate, and test machine learning algorithms. A dataset is a collection of data that is used to represent a particular problem or phenomenon. It is a critical component of machine learning, as the quality and size of the dataset can have a significant impact on the performance of the resulting machine learning model.

Machine learning algorithms use datasets to learn patterns and relationships within the data. The datasets are typically divided into two parts: the training dataset and the testing dataset. The training dataset is used to train the machine learning model, while the testing dataset is used to evaluate the performance of the trained model.

Datasets can be collected from various sources, including databases, online sources, and sensors. They can be labeled, meaning that each data point is associated with a specific output, or they can be unlabeled, meaning that there is no output associated with each data point.

Commonly used datasets in machine learning include the MNIST dataset for image recognition, the Iris dataset for classification, and the Boston Housing dataset for regression. In addition to these, there are many other publicly available datasets that researchers and developers can use to train and test machine learning models.

What machine learning models are used in the industry?

Linear Regression — used for predicting continuous numerical values.

Logistic Regression — used for classification problems where the output is binary.

Decision Trees — used for both classification and regression problems, based on hierarchical decisions.

Random Forest — an ensemble learning method that combines multiple decision trees to make more accurate predictions.

Support Vector Machines (SVM) — used for classification, regression and outlier detection by defining a hyperplane in a high-dimensional space.

Gradient Boosting Machines (GBM) — a boosting technique that builds models in a slow learning and iterative way, with each new model improving upon the errors of the previous ones.

Neural Networks — a highly flexible and powerful approach that can be used for a wide range of tasks, including image and speech recognition, natural language processing, and game playing.

Convolutional Neural Networks (CNN) — a type of neural network commonly used for image and video analysis.

Recurrent Neural Networks (RNN) — a type of neural network commonly used for sequential data analysis such as natural language processing, speech recognition and time series data.

Long Short-Term Memory (LSTM) — a type of RNN that can learn long-term dependencies and is useful for time series analysis.

These are just a few examples of the machine learning models used in the industry, and there are many other models and variations of these models that are also used.

What is an Audio Datasets?

An audio dataset is a collection of audio recordings that have been labeled for specific purposes. These recordings can be of any type, such as music, speech, environmental sounds, or any other type of sound. The dataset is used to train machine learning algorithms to recognize and classify these sounds automatically. This process is known as audio recognition.

The audio dataset comprises both raw audio recordings and metadata that describe the characteristics of the sound. For example, if the dataset contains speech recordings, the metadata might include the gender of the speaker, their accent, and the language spoken. This information helps the machine learning algorithm to learn to recognize and classify sounds based on their specific features.

How Does an Audio Datasets Help Different Industries?

Speech Recognition:

Speech recognition dataset is one of the most common applications of audio datasets. It is used in various industries such as healthcare, customer service, and banking. An audio dataset is used to train speech recognition systems to recognize speech and convert it into text. This technology is used in various applications, such as virtual assistants, dictation software, and call center automation.

Music Classification:

The music industry has been using audio datasets for a long time to classify music. These datasets are used to train machine learning algorithms to recognize different genres of music, such as rock, pop, jazz, and classical. The music classification technology is used in various applications such as music recommendation systems, personalized playlists, and music search engines.

Environmental Sound Recognition:

Environmental sound recognition is another application of audio datasets. It is used in various industries such as surveillance, security, and traffic management. An audio dataset is used to train machine learning algorithms to recognize different sounds, such as sirens, car horns, gunshots, and explosions. This technology is used to detect abnormal sounds and alert the authorities in case of any emergency.

Emotional Recognition:

Emotional recognition is a new application of audio datasets. It is used in the entertainment industry and mental health research. An audio dataset is used to train machine learning algorithms to recognize emotions in speech. This technology is used in various applications, such as virtual assistants, chatbots, and mental health assessment tools.

Conclusion:

Audio datasets are essential in AI training datasets for machine learning algorithms to recognize and classify different types of sounds. They are used in various industries such as healthcare, customer service, banking, music, surveillance, security, traffic management, entertainment, and mental health research. The development of audio recognition technology has brought a new wave of innovation in the industry, and we can expect more applications in the future.

0 notes

Text

The Future Unleashed: The Role of AI in Video Production

Step into the future of video production, where technology and creativity converge in unprecedented ways. At Creative Splash, we’re at the forefront of innovation, and today, we’re unravelling the transformative influence of Artificial Intelligence (AI) in reshaping the landscape of video creation. Join us as we explore the dynamic role AI plays in revolutionizing the art and science of video production.

Automated Editing: Redefining Efficiency Witness the evolution of the editing room, where AI algorithms are streamlining the post-production process. Dive into how automated editing tools analyse footage, identify key moments, and seamlessly piece together a visual narrative. Explore how this not only enhances efficiency but also provides editors with more time for creative refinement.

Personalization at Scale: AI is unlocking the potential for personalized video content on an unprecedented scale. Delve into how algorithms analyse user data to tailor video content based on individual preferences. From personalized product recommendations to dynamically generated content, discover how AI is reshaping the way brands connect with their diverse audience.

Enhancing Visual Effects with AI: Experience the fusion of AI and visual effects, elevating the cinematic experience to new heights. Explore how AI-driven tools contribute to realistic CGI, facial recognition, and scene analysis. Uncover the ways in which AI is not just a tool for efficiency but a catalyst for pushing the boundaries of visual storytelling.

Predictive Analytics for Audience Engagement: AI isn’t just about post-production; it’s also a guide for pre-production decisions. Discover how predictive analytics powered by AI can analyse trends, audience behaviour, and social media data to inform content creation strategies. Learn how this data-driven approach helps in crafting videos that resonate with the target audience.

Voice and Speech Recognition: The Dialogue Revolution: Uncover the impact of AI-powered voice and speech recognition on video production. Explore how these technologies enable automated transcription, language translation, and even voice-controlled editing. Dive into the possibilities of breaking language barriers and creating more accessible and engaging content.

Streamlining Workflow and Efficiency: Explore how AI is acting as a virtual assistant for video production teams. From organizing files to automating repetitive tasks, AI is enhancing workflow efficiency. Learn how Creative Splash integrates AI tools to create a seamless production process, allowing our team to focus on the creative aspects of video production.

Ethical Considerations and Creative Collaboration: As AI becomes more integrated into video production, it brings forth ethical considerations. Delve into the importance of balancing automation with human creativity. Learn how Creative Splash approaches ethical considerations, emphasizing the collaboration between AI tools and human ingenuity to maintain the authenticity of creative expression.

Conclusion: The infusion of AI into video production is not just a technological advancement; it’s a revolution. At Creative Splash, we embrace the symbiotic relationship between AI and creativity, harnessing the power of technology to elevate our storytelling capabilities. As we ride the wave of innovation, we invite you to explore the limitless possibilities that AI unlocks in the world of video production. The future is here, and it’s intelligent, creative, and boundless. Embrace it with Creative Splash!

#AI#AI canalyse trends#AI-powered voice and speech recognition#audience behaviour#future of Video production#role of AI in Video production#social media#data creation technology#advancement video production

0 notes

Text

This is not a drill

This is IMPORTANT especially if you live in the USA or use the internet REGULATED by the USA!!!!

Do not scroll. Signal boost. Reblog.

Reblog WITHOUT reading if you really can't right now, I promise all the links and proof are here. People NEED to know this.

( I tried to make this accessible but you can't cater to EVERYONE so please just try your best to get through this or do your own research 🙏)

TLDR: Homeland Security has been tying our social media to our IPs, licenses, posts, emails, selfies, cloud, apps, location, etc through our phones without a warrant using Babel X and will hold that information gathered for 75 years. Certain aspects of it were hushed because law enforcement will/does/has used it and it would give away confidential information about ongoing operations.

This gets renewed in September.

Between this, Agincourt (a VR simulator for cops Directly related to this project), cop city, and widespread demonization of abortions, sex workers, & queer people mixed with qanon/Trumpism, and fascism in Florida, and the return of child labor, & removed abortion rights fresh on our tails it's time for alarms to be raised and it's time for everyone to stop calling us paranoid and start showing up to protest and mutual aid groups.

🚨🚨🚨🚨🚨🚨🚨🚨🚨🚨

These are the same feds who want to build cop city and recreate civilian houses en masse and use facial recognition. The same feds that want cop city to also be a training ground for police across the country. Cop city where they will build civilian neighborhoods to train in.

Widespread mass surveillance against us.

Now let's cut to some parts of the article. May 17th from Vice:

Customs and Border Protection (CBP) is using an invasive, AI-powered monitoring tool to screen travelers, including U.S. citizens, refugees, and people seeking asylum, which can in some cases link their social media posts to their Social Security number and location data, according to an internal CBP document obtained by Motherboard.

Called Babel X, the system lets a user input a piece of information about a target—their name, email address, or telephone number—and receive a bevy of data in return, according to the document. Results can include their social media posts, linked IP address, employment history, and unique advertising identifiers associated with their mobile phone. The monitoring can apply to U.S. persons, including citizens and permanent residents, as well as refugees and asylum seekers, according to the document.

“Babel data will be used/captured/stored in support of CBP targeting, vetting, operations and analysis,” the document reads. Babel X will be used to “identify potential derogatory and confirmatory information” associated with travelers, persons of interest, and “persons seeking benefits.” The document then says results from Babel X will be stored in other CBP operated systems for 75 years.

"The U.S. government’s ever-expanding social media dragnet is certain to chill people from engaging in protected speech and association online. And CBP’s use of this social media surveillance technology is especially concerning in connection with existing rules requiring millions of visa applicants each year to register their social media handles with the government. As we’ve argued in a related lawsuit, the government simply has no legitimate interest in collecting and retaining such sensitive information on this immense scale,” Carrie DeCell, senior staff attorney at the Knight First Amendment Institute, told Motherboard in an email.

The full list of information that Babel X may provide to CBP analysts is a target’s name, date of birth, address, usernames, email address, phone number, social media content, images, IP address, Social Security number, driver’s license number, employment history, and location data based on geolocation tags in public posts.

Bennett Cyphers, a special advisor to activist

organization the Electronic Frontier Foundation, told Motherboard in an online chat “the data isn’t limited to public posts made under someone’s real name on Facebook or Twitter.”

The document says CBP also has access to AdID information through an add-on called Locate X, which includes smartphone location data. AdID information is data such as a device’s unique advertising ID, which can act as an useful identifier for tracking a phone and, by extension, a person’s movements. Babel Street obtains location information from a long supply chain of data. Ordinary apps installed on peoples’ smartphones provide data to a company called Gravy Analytics, which repackages that location data and sells it to law enforcement agencies via its related company Venntel. But Babel Street also repackages Venntel’s data for its own Locate X product."

The PTA obtained by Motherboard says that Locate X is covered by a separate “commercial telemetry” PTA. CBP denied Motherboard’s FOIA request for a copy of this document, claiming it “would disclose techniques and/or procedures for law enforcement investigations or prosecutions”.

A former Babel Street employee previously told Motherboard how users of Locate X can draw a shape on a map known as a geofence, see all devices Babel Street has data on for that location, and then follow a specific device to see where else it has been.

Cyphers from the EFF added “most of the people whose location data is collected in this way likely have no idea it’s happening.”

CBP has been purchasing access to location data without a warrant, a practice that critics say violates the Fourth Amendment. Under a ruling from the Supreme Court, law enforcement agencies need court approval before accessing location data generated by a cell phone tower; those critics believe this applies to location data generated by smartphone apps too.

“Homeland Security needs to come clean to the American people about how it believes it can legally purchase and use U.S. location data without any kind of court order. Americans' privacy shouldn't depend on whether the government uses a court order or credit card,” Senator Ron Wyden told Motherboard in a statement. “DHS should stop violating Americans' rights, and Congress should pass my bipartisan legislation to prohibit the government's purchase of Americans' data." CBP has refused to tell Congress what legal authority it is following when using commercially bought smartphone location data to track Americans without a warrant.

Neither CBP or Babel Street responded to a request for comment. Motherboard visited the Babel X section of Babel Street’s website on Tuesday. On Wednesday before publication, that product page was replaced with a message that said “page not found.”

Do you know anything else about how Babel X is being used by government or private clients? Do you work for Babel Street? We'd love to hear from you. Using a non-work phone or computer, you can contact Joseph Cox securely on Signal on +44 20 8133 5190, Wickr on josephcox, or email [email protected].

Wow that sounds bad right.

Be a shame if it got worse.

.

.

It does.

The software (previously Agincourt Solutions) is sold by AI data company Babel Street, was led by Jeffrey Chapman, a former Treasury Department official,, Navy retiree & Earlier in his career a White House aide and intelligence officer at the Department of Defense, according to LinkedIn.

🙃

So what's Agincourt Solutions then right now?

SO FUCKING SUS IN RELATION TO THIS, THATS WHAT

In essence, synthetic BATTLEVR training is a mixture of all three realities – virtual, augmented and physical. It is flexible enough to allow for mission rehearsals of most types and be intuitive enough to make training effective.

Anyway the new CEO of Babel Street (Babel X) as of April is a guy named Michael Southworth and I couldn't find much more on him than that tbh, it's all very vague and missing. That's the most detail I've seen on him.

And the detail says he has a history of tech startups that scanned paperwork and sent it elsewhere, good with numbers, and has a lot of knowledge about cell networks probably.

Every inch more of this I learn as I continue to Google the names and companies popping up... It gets worse.

Monitor phone use. Quit photobombing and filming strangers and for the love of fucking God quit sending apps photos of your actual legal ID to prove your age. Just don't use that site, you'll be fine I swear. And quit posting your private info online. For activists/leftists NO personally identifiable info at least AND DEFINITELY leave your phone at home to Work™!!!

#current events#capitalism#USA#politics#facism#public surveillance#anarchists#democrats#lgbt#direct action#leftist#ndn#indigenous#lesbian#gay#trans#bisexual#neurodivergent#Feminism#privacy rights#human rights#internet#tumblr#signal boost#desantis#florida#queer#refugees#immigration#threads

7K notes

·

View notes

Photo

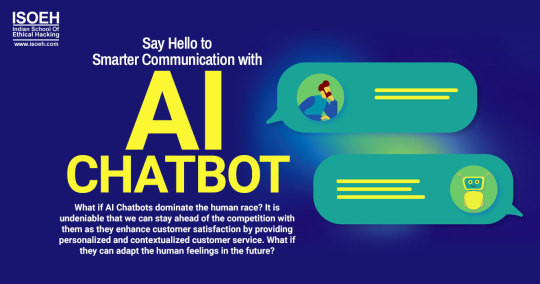

Smart AI Chatbots understands customers' language and expressions and has real-time solving power with accurate answers. Explore its benefits and limitations.

#AI Chatbot#Natural language processing (NLP)#Machine learning (ML)#Artificial intelligence (AI)#Conversational interface#Virtual assistant#Customer service#Chatbot development#Personalization#Automation#User experience (UX)#Human-like interactions#Sentiment analysis#Multilingual support#Text-to-speech (TTS)#Voice recognition#Data analytics#Omnichannel support#Dialog management#Intent recognition#Active Learning#Maximizing efficiency#Customers' value#Chatbots

0 notes

Link

Throughout the projection period, there will likely be a greater need for AI speech recognition solutions as additional digital assistants with artificial...

0 notes

Text

Natural Language Processing (NLP) is a part of artificial intelligence that allows computers to recognize natural language – the words and sentences that humans use to communicate – to generate value. While machines are excellent at operating with and understanding structured data (such as spreadsheets and database tables), they’re not so great at deciphering unstructured data, for instance, raw text in English, Polish, Chinese, or any other human language. To know more about browse: https://teksun.com/ Contact us ID: [email protected]

#Deep Learning#Machine Translation#Sentiment Analysis#Text Classification#Text Summarization#Word Embeddings#Chatbots#Speech Recognition#product engineering services#product engineering company#iot and ai solutions#digital transformation#technology solution partner#product engineering solutions#Teksun Teksuninc

0 notes

Text

Meta Releases SeamlessM4T Translation AI for Text and Speech

Meta took a step towards a universal language translator on Tuesday with the release of its new Seamless M4T AI model, which the company says can quickly and efficiently understand language from speech or text in up to 100 languages and generate translation in either mode of communication. Multiple tech companies have released similar advanced AI translation models in recent months.

In a blog…

View On WordPress

#Applications of artificial intelligence#Computational linguistics#Creative Commons#Gizmodo#Human Interest#Internet#Large language model#Machine translation#Massively Multilingual#Massively Multilingual Speech system#META#Meta AI#Multilingualism#Paco#Paco Guzmán#Speech recognition#Speech synthesis#Technology#Translation

0 notes