# Section 230

Hey, remember when Tumblr banned porn and everyone fled to Twitter and the internet by and large became that much more insufferable?

Yeah, well, we’re about to get the sequel no one’s been waiting for.

A recent lawsuit is calling into question the very law that makes user-generated content possible on the internet, and it’s going before a bunch of old people who have no idea how the internet works.

If Section 230 gets repealed or modified, the internet as we know it will radically change forever. Content policing will get that much worse: either the guidelines will be so restrictive they’ll squeeze the life out of sites, or sites will close their doors altogether because they can’t meet the moderation demand and don’t want to risk being held liable.

Unlike SOPA way back in the day, this one is quietly flying under everyone’s radar, so there’s no big pushback like last time, and there is a very real possibility this goes down in the worst way possible.

So

Yeah

Y’all may want to start saving your favorite content if you think it’s going to go bye-bye.

33K notes

STOP KOSA (AND CO.) MASTERPOST 2024

Both KOSA and the Earn It Act are dangerous for the future of the internet.

In a nutshell, KOSA would allow states to sue any website that hosts content deemed “harmful” to minors. With such vague wording, it’s expected that any NSFW material, educational resources, or LGBT content will immediately be taken down if governments don’t approve of it.

Plus, it would make it mandatory to use IDs to confirm your age when going online, supposedly to “protect kids,” but all that will do is create a huge potential data breach and endanger more kids.

As for the Earn It Act, it would allow the government to spy on and filter out anything it doesn’t like in any user’s private DMs, as well as blow a hole in Section 230: the law that prevents websites from being held directly liable when a user posts something illegal, so that the user is the one held responsible instead.

The comics I made about KOSA and the Earn It Act are old but sadly still relevant. All the info you need is in this post.

•KOSA Comic

•Earn It Act comic

•KOSA UPDATE + CALL-IN DAY ON APRIL 16TH!

(Made April 11, 2024)

• When contacting your reps, you can also tell them they should support better bills that would make kids (and everyone) safer by focusing on data privacy legislation instead of KOSA.

• Contact Congress through here https://www.stopkosa.com/

• Members of the House Energy and Commerce Committee are the best people to contact for the April 17, 2024 hearing:

https://energycommerce.house.gov/representatives

(The link doesn't work properly, so you'll need to head to the site and select "Members" to find them.)

• Find all your Congresspeople here http://badinternetbills.com/

• Find your House representative here https://www.house.gov/representatives/find-your-representative

• Never forget to make tweets, posts, TikToks, or use any social media you can think of to talk about this: spreading the word will be crucial.

As always, if you wish to help us fight bad internet bills and get the latest info about KOSA, consider joining our Discord server

(if not, please just share it around)

• https://discord.gg/pwTSXZMxnH

#kosa#kids online safety act#internet censorship#us politics#supreme court#section 230#earn it act#net neutrality

739 notes

HELP FIGHT AGAINST KOSA!

Sadly, this bill is still a thing. The Senate opens back up on January 8th, 2024, and it’s HIGHLY probable KOSA will be brought back. We need to keep the pressure up!!

Site with good resources:

https://www.badinternetbills.com/

My previous posts about bad bills (old but relevant):

-Old post

-Kosa comic

-Earn it comic

COME JOIN OUR SERVER TO HELP FIGHT AGAINST BAD BILLS!

We organize call-in days and faxing campaigns to senators, along with scripts and resources to work with!

https://discord.gg/pwTSXZMxnH

LET’S KEEP OUR INTERNET PROTECTED!

PLEASE REBLOG TO SPREAD THE WORD!

419 notes

Internet censorship is coming... (again)

There are two well-known censorship bills: KOSA and the EARN IT Act.

Both bills promise to protect children, but unfortunately they are misguided: they claim to do one thing but would do the opposite or make things worse.

Both of these bills are a serious threat to the LGBT community and would censor a lot of content, especially LGBT content.

The EARN IT Act would also get rid of NSFW content and deem it illegal, and would also get rid of Section 230.

KOSA would let attorneys general from states like Florida and Texas take control, decide what people can and cannot watch, and sue websites over anything they don’t like. Rather than protecting children, it would put many vulnerable teens and children at risk, going as far as censoring important information like sex education, health issues, suicide prevention hotlines, and much more.

We have stopped these bills from passing before, but co-sponsor Richard Blumenthal is clearly not giving up and keeps trying again and again to push these bills back through Congress.

Last year, more than 90 human rights groups urged lawmakers not to pass KOSA in the omnibus bill, and it got shelved; the same thing happened to EARN IT last year around February/March.

But now he is trying a third time, using and manipulating grieving parents and young people into supporting and lobbying for his bills, while even accepting support for his legislation from anti-trans and anti-LGBT groups. He seizes on any scandal a platform is currently facing and twists it to serve his agenda.

He says he supports abortion rights and the LGBT community, but his bills would censor the very things he claims to support. He can’t have it both ways.

But he has stubbornly ignored every criticism and all the scrutiny his legislation gets, being childish and all.

Not to mention that both bills are privacy nightmares for everyone, globally too.

That’s why it’s important that you call and email your representatives and lawmakers and urge them to drop KOSA and the EARN IT Act.

Let any human rights group you trust know, and tell anyone you trust about it, whether it’s a friend or a family member.

For more information, click these links below ⬇️

You can also help us by joining our Discord server about stopping internet censorship.

There’s also a recent petition from Fight for the Future about KOSA.

(Update #2)

Hey guys, I’m back to warn everyone about yet another bad internet bill: it’s called the SAFE TECH Act.

This act is supported by 7 Democratic senators, including Blumenthal, which is never a good sign when it comes to internet bills.

This is a misguided Section 230 reform, and reading it shows that these people have no understanding of 230 whatsoever.

It’s just another dangerous censorship bill that threatens everyone’s free speech. Its creators claim that it won’t hurt free speech, but it does, and they do not understand how important 230 is in its current form!

Here is a good article that explains the SAFE TECH Act really well and why it’s dangerous:

Also, talk to your representatives about this and why it’s bad, and if you can, try to explain to them why Section 230 is important. Support digital advocacy groups, human rights groups, and any other groups that support a free internet and free expression, and let them know about these legislators and their bad ideas!

(Update #3)

The EARN IT Act is sadly coming back after failing twice; now they are trying a third time.

This legislation is dangerous for privacy and free expression. It would bring lots of surveillance and is just as bad as the RESTRICT Act.

https://act.eff.org/action/the-earn-it-act-is-back-seeking-to-scan-us-all

It’s now being reintroduced by two senators and two representatives. If you don’t know what this bill actually does, there is more information about it here: https://www.eff.org/deeplinks/2023/04/earn-it-bill-back-again-seeking-scan-our-messages-and-photos

The second one is called KOSA (Kids Online Safety Act).

This bill failed to pass last year because of strong opposition from more than 90 human rights groups.

It claims it would protect kids, but it actually enables a lot of censorship and is very dangerous to LGBT/trans kids and many other kids in abusive households. It would hurt them instead of protecting them.

If that’s not bad enough, it’s tragically gaining momentum and traction with child advocacy groups and is being endorsed by Dove and Lizzo. There have also been petitions supporting this unconstitutional bill, one of them with somewhere around 30k signatures…

I really wish I could say I’m joking but this is sadly true.

If you want more info on KOSA, here it is:

https://www.fightforthefuture.org/actions/censorship-wont-make-kids-safe/

Please, everyone, call your senators and representatives and tell them to oppose these bills. We really need help fighting off these bills so we can keep a free and open internet!

#stop kosa#earn it act#im sorry#fuck censorship#net neutrality#lgbt rights#fight for your right#i’m so tired#trans rights#lgbtq#transgender#lgbt#safe tech act#section 230#important#censorship#technology#politics#stop censorship#anti censorship#antifascism#government#congress#lgbtqia#activism#human rights

1K notes

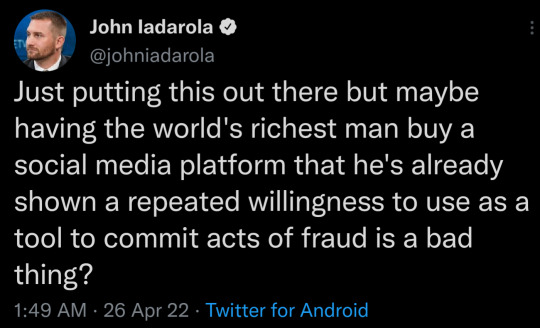

#us politics#news#twitter#tweet#2022#john iadarola#elon musk#free speech#section 230#billionaires#fuck billionaires#memes#tyt#the young turks#the serfs#big tech

1K notes

What the fediverse (does/n't) solve

No matter how benevolent a dictatorship is, it’s still a dictatorship, and subject to the dictator’s whims. We must demand that the owners and leaders of tech platforms be fair and good — but we must also be prepared for them to fail at this, sometimes catastrophically.

That is, even if you trust Tim Cook to decide what apps you are and aren’t allowed to install — including whether you are allowed to install apps that block Apple’s own extensive, nonconsensual, continuous commercial surveillance of its customers — you should also be prepared for Cook to get hit by a bus and replaced by some alt-right dingleberry.

What happens next is a matter of technology and law. It’s a matter of whether you have to give up your media and your apps and your data to escape the no-longer-benevolent dictatorship. It depends on whether the technology is designed to let you move those things, and whether the law protects you from tech companies, or whether it protects tech companies from *you*, by criminalizing jailbreaking, reverse engineering, scraping, etc.

As thorny as this is, it’s even harder when we’re talking about social media, because it’s social. Sociability adds a new and pernicious switching cost, when we hold each other hostage because we can’t agree on when/whether to go, and if we do, where to go next. When the management of your community goes septic, it can be hard to leave, because you have to leave behind the people who matter to you if you do.

We’ve all been there: do you quit your writers’ circle because one guy is being a jerk? Do you stop going to a con because the concom tolerates a predator? Do you stop going to family Thanksgiving because your racist Facebook uncle keeps trying to pick a fight with you? Do you accompany your friends to dinner at a restaurant whose owners are major donors to politicians who want to deport you?

This collective action problem makes calamity of so long life. At the outer extreme, you have the families who stay put even as their governments slide into tyranny, risking imprisonment or even death, because they can’t bear to be parted from one another, and they all have different views of how bad the situation really is:

https://www.theatlantic.com/books/archive/2022/12/the-oppermanns-book-holocaust-nazi-fascism/672505/

The corporate person is a selfish narcissist, a paperclip-maximizing artificial lifeform forever questing after its own advantage. It is an abuser. Like all abusers, it is keenly attuned to any social dynamic that it can use to manipulate its victims, and so social media is highly prized by these immortal colony-organisms.

You can visit all manner of abuses upon a social network and it will remain intact, glued together by the interpersonal bonds of its constituent members. Like a kidnapper who takes your family hostage, abusers weaponize our love of one another and use it to make us do things that are contrary to our own interests.

In “Stop Talking to Each Other and Start Buying Things: Three Decades of Survival in the Desert of Social Media,” Cat Valente is characteristically brilliant about this subject. It is one of the best essays you’ll read this month:

https://catvalente.substack.com/p/stop-talking-to-each-other-and-start

Valente is on the leading edge of creators who were born digital — whose social life was always online, and whose writing career grew out of that social life. In 2009, she posted her debut novel, “The Girl Who Circumnavigated Fairyland in a Ship of Her Own Making” to the web for free. Two years, and many awards, later, Macmillan brought it out in hardcover:

https://memex.craphound.com/2011/05/10/valentes-girl-who-circumnavigated-fairyland-sweet-fairytale-shot-through-with-salty-tears-magic/

“Stop Talking to Each Other” is a memoir wrapped around a trenchant, take-no-prisoners critique of all the robber-barons who’ve made us prisoners to one another and fashioned whips out of our own affection for one another and the small pleasures we give each other.

It begins with Valente’s girlhood in the early 1990s, where Prodigy formed a lifeline for her lonely, isolated existence. Valente — a precocious writer — made penpals with other Prodigy users, including older adults who assumed they were talking to a young adult. These relationships expanded her world, uplifting and enriching her.

Then, one day, she spotted a story about Prodigy in her dad’s newspaper: “PRODIGY SAYS: STOP TALKING TO EACH OTHER AND START BUYING THINGS.” The headline floored her. Even if Valente wanted to buy the weird grab-bag of crap for sale at Prodigy in 1991, she was a 12-year-old and had no way to send internet money to Prodigy. Also, she had no money of any sort.

For her, the revelation that the owners of Prodigy would take away “this one solitary place where I felt like I mattered” if she “didn’t figure out how to buy things from the screen” was shocking and frightening. It was also true. Prodigy went away, and took with it all those human connections a young Cat Valente relied on.

This set the pattern for every online community that followed: “Stop talking to each other and start buying things. Stop providing content for free and start paying us for the privilege. Stop shining sunlight on horrors and start advocating for more of them. Stop making communities and start weaponizing misinformation to benefit your betters.”

Or, more trenchantly: “Stop benefitting from the internet, it’s not for you to enjoy, it’s for us to use to extract money from you. Stop finding beauty and connection in the world, loneliness is more profitable and easier to control. Stop being human. A mindless bot who makes regular purchases is all that’s really needed.”

Valente traces this pathology through multiple successive generations of online community, lingering on Livejournal, whose large community of Russian dissidents attracted Russian state-affiliated investors who scooped up the community and then began turning the screws on it, transforming it into a surveillance and control system for terrorizing the mutual hostages of the Russian opposition.

Valente and her friends on the service were collateral damage in the deliberate enshittification of LJ, and the Russian dissidents had it worse than they did, but it was still a painful experience. LJ was home to innumerable creators who “grew audiences through connections and meta-connections you already trusted.”

Most importantly, the poisoning of LJ formed a template for how to “[take] apart a minor but culturally influential community and develop techniques to do it again, more efficiently, more quickly, with less attention.”

It’s a template that has been perfected by the alt-right, by the Sad Puppies and the Gamergaters and their successor movements. These trolls aren’t motivated by the same profit-seeking sociopathy of the corporate person, but they are symbiotic with it.

Valente lays out the corporate community’s lifecycle:

Be excited about the internet, make a website!

Discover that users are uninterested in your storefront, add social features.

Add loss-leaders to “let users make their own reasons to use the site” (chat, blogs, messaging, etc), and moderate them “to make non-monster humans feel safe expressing themselves and feel nice about site.”

The site works, and people “[use] free tools to connect with each other and learn and not be lonely and maybe even make a name for themselves sometimes.”

The owners demand that users “stop talking and start buying things.”

Users grow disillusioned with a site whose sociability is an afterthought to the revenue-generation that is supposed to extract all surplus value from the community they themselves created.

The owners get angry, insult users, blanket the site with ads, fire moderators, stoke controversy that creates “engagement” for the ads. They sell user data. They purge marginalized community that advertisers don’t like. They raise capital, put the community features behind a paywall, and focus so hard on extraction that they miss the oncoming trends.

“Everyone is mad.”

“Sell the people you brought together on purpose to large corporation, trash billionaire, or despotic government entity who hates that the site’s community used those connective tools to do a revolution.”

The people who “invested their time, heart, labor, love, businesses and relationships” are scattered to the winds. Corporate shareholders don’t care.

Years later, the true story of how the site disintegrated under commercial pressures comes out. No one cares.

The people who cashed out by smashing the community that created their asset are now wealthy, and they spend that wealth on “weird right-wing shit…because right-wing shit says no taxes and new money hates taxes.”

This pattern recurs on innumerable platforms. Valente’s partial list includes “Prodigy, Geocities, collegeclub.com, MySpace, Friendster, Livejournal, Tumblr,” and, of course, Twitter.

Twitter, though, is different. First, because it is the largest and most structurally important platform to be enshittified. Second, because it was enshittified so much more quickly than the smaller platforms that preceded it.

But third, and most importantly, because Twitter’s enshittification is not solely about profit. Whereas the normal course of a platform’s decline involves a symbiosis between corporate extraction and trollish cruelty, the enshittification of Twitter is being driven by an owner who is both a sociopathic helmsman for a corporate extraction machine and a malignant, vicious narcissist.

Valente describes Musk’s non-commercial imperatives: “the yawning, salivating need to control and hurt. To express power not by what you can give, but by what you can take away…[the] viral solipsism that cannot bear the presence of anything other than its own undifferentiated self, propagating not by convincing or seduction or debate, but by the eradication of any other option.”

Not every platform has been degraded this way. Valente singles out Diaryland, whose owner, Andrew, has never sold out his community of millions of users, not in all the years since he created it in 1999, when he was a Canadian kid who “just like[d] making little things.” Andrew charges you $2/month to keep the lights on.

https://diaryland.com/

Valente is right to lionize Diaryland and Andrew. In fact, she’s right about everything in this essay. Or, nearly everything. “Almost,” because at the end, she says, “the minute the jackals arrive is the same minute we put down the first new chairs in the next oasis.”

That’s where I think she goes wrong. Or at least, is incomplete. Because the story of the web’s early diversity and its focus on its users and their communities isn’t just about a natural cycle whereby our communities became commodities to be tormented to ruination and sold off for parts.

The early web’s strength was in its interoperability. The early web wasn’t just a successor to Prodigy, AOL and other walled gardens — it was a fundamental transformation. The early web was made up of thousands of small firms, hobbyists, and user groups that all used the same standard protocols, which let them set up their own little corners of the internet — but also connected those communities through semi-permeable membranes that joined everything, but not in every way.

The early web let anything link to anything, but not always, which meant that you could leave a community but still keep tabs on it (say, by subscribing to the RSS feeds of the people who stayed behind), but it also meant that individuals and communities could also shield themselves from bad actors.

The right of exit and the freedom of reach (the principle that anyone can talk to anyone who wants to talk to them) are both key to technological self-determination. They are both imperfect and incomplete, but together, they are stronger, and form a powerful check on both greed and cruelty-based predation:

https://pluralistic.net/2022/12/19/better-failure/#let-my-tweeters-go

Small wonder that, from the beginning, the internet has been a fight between those who want to build a commons and those who wish to enclose it. Remember when we were all angry that the web was disappearing into Flash, the unlinkable proprietary blobs that you couldn’t ad-block or mute or even pause unless they gave you permission?

Remember when Microsoft tried, over and over again, to enclose the internet, first as a dial-up service, then as a series of garbage Windows-based Flash-alikes? Remember Blackbird?

https://en.wikipedia.org/wiki/Blackbird_(online_platform)

But standard protocols exert powerful network effects on corporations. When everyone is adhering to a standard, when everything can talk to everything else, then it’s hard to lure users into a walled garden. Microsoft coerced users into it by striking bargains with buyers at large companies to force its products on all their employees, and then by breaking compatibility with rival products, which made it hard for those employees to use another vendor’s products in their personal lives. Not being able to access your company email or edit your company documents on your personal device is a powerful incentive to use the same product your company uses.

Apple, meanwhile, seduced users into its walled garden, promising that it would keep them safe and that everything would just work, and then using its power over those customers to gouge them on dongles and parts and repair and apps.

Both companies — like all corporations — are ferocious rent-seekers, but both eventually capitulated to the internet — bundling TCP and, eventually, browsers with their OSes. They never quit trying to enclose the web, via proprietary browser extensions and dirty tricks (Microsoft) or mobile lock-in and dirty tricks (Apple). But for many years, the web was a truly open platform.

The enclosure of online communities can’t be understood without also understanding the policy choices that led to the enclosure of tech more broadly. The decision to stop enforcing antitrust law (especially GWB’s decision not to appeal in the Microsoft antitrust case) let the underlying platforms grow without limits, by buying any serious rival, or by starving it out of existence by selling competing products below cost, cross-subsidizing them with rents extracted from their other monopoly lines.

These same policies let a few new corporate enclosers enter the arena, like Google, which is virtually incapable of making a successful product in-house, but which was able to buy others’ successes and cement its web dominance: mobile, video, server management, ad-tech, etc.

These firms provide the substrate for community abusers: apps, operating systems and browser “standards” that can’t be legally reverse-engineered, and lobbying that strengthens and expands those “Felony Contempt of Business Model” policies:

https://www.eff.org/deeplinks/2017/09/open-letter-w3c-director-ceo-team-and-membership

Without these laws and technologies, corporations wouldn’t be able to block freedom of exit and freedom of reach. These laws and technologies let these corporations demand that the state obliterate anyone who gives users the tools to set their own terms for the communities they built.

These are the laws and technologies that transform network effects from a tool for openness — where even the largest, most vicious corporations must seek to pervert, rather than ignore, standards — into a tool for enclosure, where we are all under mounting pressure to move inside a walled garden.

This digital feudalism is cloaked in the language of care and safety. The owners of these walled gardens insist that they are benevolent patriarchs who have built fortresses to defend us from external threats, but inevitably they are revealed as warlords who have built prisons to keep us from escaping from them:

https://locusmag.com/2021/01/cory-doctorow-neofeudalism-and-the-digital-manor/

Which brings me to the Fediverse. The Fediverse’s foundation is a standard called ActivityPub, which was designed by weirdos who wanted to make a durably open, interoperable substrate that could support nearly any application. This was something that large corporations were both uninterested in building and which they arrogantly dismissed as a pipe dream. This means that ActivityPub is actually as good as its architects could make it, free from boobytraps laid by scheming monopolists.

The best-known Fediverse application is Mastodon, which has experienced explosive growth from people who found Musk’s twin imperatives to cruelty and extraction sufficiently alarming that they have taken their leave of Twitter and the people they cared about there. This is not an easy decision, and Musk is bent on making it harder by sabotaging ex-Twitter users’ ability to find one another elsewhere. He wants the experience of leaving Twitter to be like the final scene of Fiddler On the Roof, where the villagers of Anatevka are torn from one another forever:

https://doctorow.medium.com/how-to-leave-dying-social-media-platforms-9fc550fe5abf

With Mastodon’s newfound fame comes new scrutiny, and a renewed debate over the benefits and drawbacks of decentralized, federated systems. For example, there’s an ongoing discussion about the role of quote-tweeting, which Mastodon’s core devs have eschewed as conducive to antisocial dunks, but which some parts of Black Twitter describe as key to a healthy discourse:

https://www.tbray.org/ongoing/When/202x/2022/12/21/Mastodon-Ethics

But quote tweeting wasn’t initially a part of Twitter. Instead, users kludged it, pasting in text and URLs for others’ tweets to make it work. Eventually, Twitter saw the utility of quote-tweeting and adopted it, making it an official feature.

There is a possibility that Mastodon’s core devs will do the same, adding quote-tweet to the core codebase for Mastodon. But if they don’t, the story isn’t over. Because Mastodon is free software, and because it is built on an open standard, anyone can add this feature to their Mastodon instance. You can do this yourself, or you can hire someone else to do it for you.

Now, not everyone has money or coding skills — but also, not everyone has the social clout to convince a monolithic, for-profit corporation to re-engineer its services to better suit their needs. And while there is a lot of overlap between “people who can code,” and “people who can afford to pay coders” and “people whom a tech company listens to,” these are not the same population.

In other words: Twitter is a place where you get quote-tweeting if the corporation decides you need it, and Mastodon is a place where you get quote-tweeting if the core devs decide you need it, or if you have the skills or resources to add it yourself.

What’s more, if Mastodon’s core devs decide to take away a feature you like, you and your friends can stand up your own Mastodon server that retains that feature. This is harder than using someone else’s server — but it’s way, way easier than convincing Twitter it was wrong to take away the thing you loved.

The perils of running your own Mastodon server have also become a hot topic of debate. To hear the critics warn of it, anyone who runs a server that’s open to the public is painting a huge target on their back and will shortly be buried under civil litigation and angry phone-calls from the FBI.

This is: Just. Not. True. The US actually has pretty good laws limiting intermediary liability (that is, the responsibility you bear for what your users do). You know all that stuff about how CDA230 is “a giveaway to Big Tech?” That’s only true if the internet consists solely of Big Tech companies. However, if you decide to spend $5/month hosting a Mastodon instance for you and your community, that same law protects you.

Indeed, while running a server that’s open to the public does involve some risk, most of that risk can be contained by engaging in a relatively small, relatively easy set of legal compliance practices, which EFF’s Corynne McSherry lays out in this very easy-to-grasp explainer:

https://www.eff.org/deeplinks/2022/12/user-generated-content-and-fediverse-legal-primer

Finally, there’s the ongoing debate over whether Mastodon can (and should) replace Twitter. This week on the Canadaland Short Cuts podcast, Jesse Brown neatly summarized (and supported, alas) the incorrect idea that using Mastodon was no different from using Gab or Parler or Post.

https://www.canadaland.com/podcast/843-god-save-the-tweets/

This is very, very wrong. The thing is, even if you like and trust the people who run Gab or Parler or Post, you face exactly the same risk you face with Twitter or Facebook: that the leadership will change, or have a change of heart, and begin to enshittify your community there. When they do, your only remedy will be the one that Valente describes, to scatter to the winds and try and reform your community somewhere else.

But that’s not true of the Fediverse. On Mastodon, you can export all your followers, and all the people who follow you, with two clicks. Then you can create an account on another server and again, with just two clicks, you can import those follows and followers and be back up and running, your community intact, without being under the thumb of the server manager who decided to sell your community down the river (you can also export the posts you made).

https://codingitwrong.com/2022/10/10/migrating-a-mastodon-account.html

Now, it’s also true that a particularly vindictive Mastodon server owner could summarily kick you off the server without giving you a chance to export your data. Doing so would arguably run afoul of the GDPR and state laws like the CCPA.

Strengthening these privacy laws would actually improve user rights — unlike abolishing CDA 230, which would simultaneously make the corporate owners of big services more trigger-happy when it comes to censoring content from marginalized groups, and make it all but impossible for those groups to safely run their own servers to decamp to when this happens.

Letting people set up their own communities, responsible to one another, is the tonic for Valente’s despair that the cycle of corporate predation and enshittification is eternal, and that people who care for one another and their communities are doomed to be evicted again and again and again and again.

And federating these communities — creating semi-permeable membranes between them, blocking the servers for people who would destroy you, welcoming messages from the like-minded, and taking intermediate steps for uneasy allies — answers Brown’s concern that Twitter is the only way we can have “one big conversation.”

This “one conversation” point is part of Brown’s category error in conflating federated media with standalone alternatives to Twitter like Post. Federated media is one big conversation, but smeared out, without the weak signal amplification of algorithms that substitute the speech of the people you’ve asked to hear from with people who’ve paid to intrude on your conversation, or whom the algorithm has decided to insert in it.

Federation is an attractive compromise for people like Valente, who are justly angry at and exhausted by the endless cycle of “entrepreneurs” building value off of a community’s labor and then extracting that value and leaving the community as a dried-out husk.

It’s also a promising development for antitrust advocates like me, who are suspicious of corporate power overall. But federation should also please small-government libertarian types. Even if you think the only job of the state is to enforce contracts, you still need a state that is large and powerful enough to actually fulfill that role. The state can’t hold a corporation to its promises if it is dwarfed by that corporation — the bigger the companies, the bigger the state has to be to keep them honest.

The stakes are high. As Valente writes, the digital communities that flourished online, only to be eradicated by cruelty and extraction, were wonderful oases of care and passion. As she says, “Love things. Love people. Love the small and the weird and the new.”

“Be each other’s pen pals. Talk. Share. Welcome. Care. And just keep moving. Stay nimble. Maybe we have to roll the internet back a little and go back to blogs and decentralized groups and techy fiddling and real-life conventions and idealists with servers in their closets.”

“Protect the vulnerable. Make little things. Wear electric blue eyeshadow. Take a picture of your breakfast. Overthink Twin Peaks. Get angry. Do revolutions. Find out what Buffy character you are. Don’t get cynical. Don’t lose joy. Be us. Because us is what keeps the light on when the night comes closing in.”

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

Heisenberg Media (modified)

https://commons.wikimedia.org/wiki/File:Elon_Musk_-_The_Summit_2013.jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

[Moses confronting the Pharaoh, demanding that he release the Hebrews. Pharaoh's face has been replaced with Elon Musk's. Moses holds a Twitter logo in his outstretched hand. Moses's head has been replaced with the head of Tusky, the Mastodon mascot. The faces embossed in the columns of Pharaoh's audience hall have been replaced with the menacing red eye of HAL9000 from 2001: A Space Odyssey. The wall over Pharaoh's head has been replaced with a Matrix 'code waterfall' effect.]

#pluralistic#prodigy#standards#activitypub#tumblr#federation#fediverse#mastodon#jesse brown#canadaland#cat valente#twitter#yasnses#social media#freedom of exit#section 230#cda 230#intermediary liability

142 notes

Text

Guys, does anyone know what to do for the new PF topic? I’m actually struggling so hard. WTF are we supposed to do for statistics?

I spent like 2 hours trying to find any amount of statistics on how it could maybe impact those who work for social media companies but I couldn’t find anything.

Resolution: The United States federal government should repeal Section 230 (or something like that)

#speech and debate#Colorado speech and debate#public forum#resolution#debate#send help#helpppp#section 230

19 notes

Text

March 2023 Newsletter, Volume 176

A new issue of TWC drops, Open Doors announces two new archive imports, and Legal joins allies in support of Section 230 in our March newsletter. Read more at https://otw.news/51ecb4

58 notes

Text

Goldman, of Santa Clara University, agreed. Twitter, he said, could prevent all users without blue verification checks from posting on the platform or prevent their posts from appearing on the timeline.

"It's inevitable that services will move away from user-generated content and toward a model like Netflix," he said. "It'll be professionally produced, it won't have the diversity it has, it won't give speech platforms to as many people and to compensate professional producers, it's more likely to be paywalled."

#section 230#net neutrality#internet#we are really witnessing the end of the internet as we know it#supreme court

132 notes

Text

This article talks about some misinformation about Section 230.

3 notes

Note

Do you think Section 230 is pretty much going to be passed? I've been thinking about leaving the internet completely over this.

...Well, like many things, the answer is "It's Complicated."

Firstly, for the most part, efforts to screw up Section 230 aren't about directly repealing all of it so much as carving out exceptions that majorly weaken it, in ways that could still deeply screw up free speech.

The Kids Online Safety Act and the EARN IT Act are being pushed again. While they're not in committee yet, the former was sent to the Commerce Committee last time and the latter to the Judiciary Committee, so they'll probably be sent there again. If your senators sit on either committee, you're probably going to want to call them and tell them to kill those bills.

The membership of the Commerce Committee:

Maria Cantwell, Washington, Chair

Amy Klobuchar, Minnesota

Brian Schatz, Hawaii

Ed Markey, Massachusetts

Gary Peters, Michigan

Tammy Baldwin, Wisconsin

Tammy Duckworth, Illinois

Jon Tester, Montana

Kyrsten Sinema, Arizona

Jacky Rosen, Nevada

Ben Ray Luján, New Mexico

John Hickenlooper, Colorado

Raphael Warnock, Georgia

Peter Welch, Vermont

Ted Cruz, Texas, Ranking Member

John Thune, South Dakota

Roger Wicker, Mississippi

Deb Fischer, Nebraska

Jerry Moran, Kansas

Dan Sullivan, Alaska

Marsha Blackburn, Tennessee

Todd Young, Indiana

Ted Budd, North Carolina

Eric Schmitt, Missouri

J.D. Vance, Ohio

Shelley Moore Capito, West Virginia

Cynthia Lummis, Wyoming

The membership of the Judiciary Committee:

Dick Durbin, Illinois, Chairman

Dianne Feinstein, California

Sheldon Whitehouse, Rhode Island

Amy Klobuchar, Minnesota

Chris Coons, Delaware

Richard Blumenthal, Connecticut

Mazie Hirono, Hawaii

Cory Booker, New Jersey

Alex Padilla, California

Jon Ossoff, Georgia

Peter Welch, Vermont

Lindsey Graham, South Carolina, Ranking Member

Chuck Grassley, Iowa

John Cornyn, Texas

Mike Lee, Utah

Ted Cruz, Texas

Josh Hawley, Missouri

Tom Cotton, Arkansas

John Kennedy, Louisiana

Thom Tillis, North Carolina

Marsha Blackburn, Tennessee

So yeah.

I may as well add: if you've got the misfortune to be calling a Republican, be sure to bring up how KOSA will be used as a way for Big Government to spy on people via mandated age verification, and how EARN IT will be used to censor conservative speech.

That'll get the bastards' attention. And no matter what you do, don't shut up about it, because silence means the fuckers win. Just look at FOSTA/SESTA...

...Tho, in better news, the questioning in those Supreme Court suits tackling Section 230 seems to show that the justices are at least reluctant to try and do much to 230, very specifically because of how much it could fuck up.

Which begs the question, if even these fucking demons know why fucking with Section 230 is a godawful idea, what excuse do these senators have?

Point is, the efforts to undermine it aren't all at once so much as gradual and insidious. Call your senators, folks, and stay vigilant.

26 notes

Text

RED ALERT FOR THE INTERNET: KOSA AND EARN IT ACT ARE RETURNING (2024)

These two bills are very bad for the future of the Internet. Like... very bad.

In a nutshell, KOSA would allow states to sue any websites that host content deemed “harmful” to minors. With such vague wording, it’s expected that any NSFW or LGBT content will immediately be taken down.

Plus, it will be made mandatory to use IDs to confirm your age when going online, supposedly to “protect kids,” but all that will cause is a huge potential data breach.

As for the EARN IT Act, it would allow the government to spy on and filter out anything it doesn’t like in any user’s private DMs, as well as blow a hole in Section 230: the law that prevents websites from being held directly liable when a user posts something illegal, so that the user is the one held responsible instead.

I also made comics about these in the past. While the “contact your senators” bits may be outdated, I strongly encourage you to spread the word about this, as the linktrees are still very interesting and useful, especially for call scripts.

KOSA Comic here

Earn It Act comic here

New post here

KOSA UPDATE !!! (16/02/2024)

PLEASE REBLOG !

ALSO, IF YOU WANNA HELP, DO JOIN OUR DISCORD SERVER AGAINST THESE BILLS

https://discord.gg/pwTSXZMxnH

3K notes

Text

Kati Morton was a reluctant adopter of YouTube.

When Morton was a therapist working toward her license in California, it was her then-boyfriend, now husband, who first suggested that she explore posting videos on the platform as a way to disseminate mental health information.

The year was 2011, and Morton, like many others, thought YouTube primarily consisted of videos of cats playing the piano and make-up tutorials. But after seeing other content posted on the site, Morton decided to give it a shot.

Her audience started small, with her videos garnering a handful of views. But in the more than a decade since then, Morton's YouTube channel has grown to more than 1.2 million subscribers.

Crucial to the growth of Morton's audience is YouTube's system for recommending content to users, which the company began building in 2008. It relies on a highly complex algorithm to predict what videos will interest viewers and keep them watching. Today, half of Morton's views come from recommendations, she said.

"If you could see the entire life of the channel, it was really, really slow and steady," Morton told CBS News. "And then through recommendations, as well as collaborations, things have grown as you're able to reach a broader audience and YouTube is better able to understand the content."

YouTube's recommendations algorithm, and those used by platforms like TikTok, Facebook and Twitter, are now at the heart of a legal dispute that will go before the Supreme Court on Tuesday, in a case that involves the powerful legal shield that helped the internet grow.

"We're talking about rewriting the legal rules that govern the fundamental architecture of the internet," Aaron Mackey, senior staff attorney at the Electronic Frontier Foundation, told CBS News of what's at stake in the case, known as Gonzalez v. Google.

"A BACKBONE OF ONLINE ACTIVITY"

Section 230 of the Communications Decency Act immunizes internet companies from liability over content posted by third parties and allows platforms to remove content considered obscene or objectionable. The dispute before the Supreme Court marks the first time the court will consider the scope of the law, and the question before the justices is whether Section 230's protections for platforms extend to targeted recommendations of information.

The court fight arose after terrorist attacks in Paris in November 2015, when 129 people were murdered by ISIS members. Among the victims was 23-year-old Nohemi Gonzalez, an American college student studying abroad who was killed at a bistro in the city.

Gonzalez's parents and other family members filed a civil lawsuit in 2016 against Google, which owns YouTube, alleging that the tech company aided and abetted ISIS in violation of a federal anti-terrorism statute by recommending videos posted by the terror group to users.

Google moved to dismiss the complaint, claiming that it was immune from the claims under Section 230. A federal district court in California agreed and, regarding YouTube's recommendations, found that Google was protected under the law because the videos at issue were produced by ISIS.

The U.S. Court of Appeals for the 9th Circuit affirmed the district court's ruling, and Gonzalez's family asked the Supreme Court to weigh in. The high court said in October it would take up the dispute.

The court fight has elicited input from a range of parties, many of which are backing Google in the case. Platforms like Twitter, Meta and Reddit — all of which rely on Section 230 and its protections — argue algorithmic recommendations allow them to organize the millions of pieces of third-party content that appear on their sites, enhancing the experience for users who would otherwise be forced to sift through a mammoth amount of posts, articles, photos and videos.

"Given the sheer volume of content on the internet, efforts to organize, rank, and display content in ways that are useful and attractive to users are indispensable," lawyers for Meta, the parent company of Facebook and Instagram, told the court.

Even the company that operates online dating services Match and Tinder pointed to Section 230 as "vital" to its efforts to connect singles, as the law allows "its dating platforms to provide recommendations to its users for potential matches without having to fear overwhelming litigation."

But conservatives are using the case as a vehicle to rail against "Big Tech" firms and amplify claims that platforms censor content based on political ideology.

Citing lower court decisions they believe have led to a "broad grant of immunity," a group of Republican Senators and House members told the Supreme Court that platforms "have not been shy about restricting access and removing content based on the politics of the speaker, an issue that has persistently arisen as Big Tech companies censor and remove content espousing conservative political views, despite the lack of immunity for such actions in the text of" Section 230.

The case has presented the Justices with a rare opportunity to hear directly from the co-authors of the legislation at issue. Ron Wyden, now a Democratic Senator from Oregon, and Chris Cox, a former GOP congressman from California, crafted Section 230 in the House in 1996. The bipartisan pair filed a friend-of-the-court brief explaining the plain meaning of their law and the policy balance they sought to strike.

"Section 230 protects targeted recommendations to the same extent that it protects other forms of content curation and presentation," they wrote. "Any other interpretation would subvert Section 230's purpose of encouraging innovation in content moderation and presentation. The real-time transmission of user-generated content that Section 230 fosters has become a backbone of online activity, relied upon by innumerable internet users and platforms alike."

Google, they argued, is entitled to liability protection under Section 230, since the platform's recommendation algorithm is merely responding to user preferences by pairing them with the types of content they seek.

"The algorithm functions in a way that is not meaningfully different from the many curatorial decisions that platforms have always made in deciding how to present third-party content," Wyden and Cox said.

The battle also highlights competing views about the internet today and how Section 230 has shaped it. For tech companies, the law has laid the groundwork for new platforms to come online, an industry of online creators to form and free expression to flourish. For Gonzalez's family and others, the algorithmic recommendations have proven deadly and harmful.

Like the Gonzalezes, Taiwanna Anderson, too, has fought to hold a social media platform responsible over content it recommends to users.

Last May, Anderson sued TikTok and its parent company, China-based ByteDance, after her 10-year-old daughter Nylah died in late 2021 while attempting the dangerous "Blackout Challenge," in which users are pushed to strangle themselves until they pass out and then share videos of the experience.

The challenge, which went viral on TikTok, was recommended to Nylah through her account's "For You" page, a curated feed of third-party content powered by TikTok's algorithmic recommendation system.

Anderson's lawsuit sought to hold TikTok accountable for deliberately funneling dangerous content to minors through the challenges and encouraging behavior that put their lives in danger. TikTok asked the federal district court in Pennsylvania to dismiss the suit, invoking Section 230.

U.S. District Judge Paul Diamond tossed out the case in October, writing that the law shielded TikTok from liability because it was promoting the work of others. But he acknowledged in a brief order that TikTok made the Blackout Challenge "readily available on their site" and said its algorithm "was a way to bring the challenge to the attention of those likely to be most interested in it."

"The wisdom of conferring such immunity is something properly taken up with Congress, not the courts," Diamond wrote.

Mackey, of the Electronic Frontier Foundation, noted that if people disagree with the reach of Section 230 as the courts have interpreted it, the right remedy is for Congress, not the Supreme Court, to rewrite the law.

"When they passed it, they set this balance and said not that they didn't believe there wouldn't be harmful content, but they believed on balance the creation of opportunities and forums for people to speak, for the growth of the internet and development of a tool that became central to our lives, commerce, political expression — that was what they valued more," Mackey said. "Congress is free to rewrite that balance."

A NEW CREATOR ECONOMY

In the 27 years since Section 230 became law, the explosive growth of the internet has fueled a multi-billion-dollar industry of independent online creators who rely on large tech platforms to reach new audiences and monetize their content.

In Morton's case, her YouTube channel has allowed her to expand beyond her office in Santa Monica, California, and reach patients around the country, including in areas where mental health resources may be scarce.

"The ability for me to get over a million views on YouTube means that I'm able to reach so many more people, and mental health information isn't held behind a paywall," she said.

Alex Su, a lawyer by training who runs the TikTok account LegalTechBro, first began sharing content on LinkedIn in 2016 as a way to drive awareness of his employer, a technology company. After building up a following of lawyers and others in the legal industry on LinkedIn, Su began experimenting with TikTok in 2020.

His TikTok videos, which touch on insider experiences of working at a law firm, resonated with other lawyers and people with ties to the profession. He said LinkedIn's recommendation system has been instrumental in helping him reach his target audience and market his company's services.

"These algorithms let me go viral among people who can relate to my jokes," he told CBS News. "If I put this type of content in front of a general audience, they probably wouldn't find it as funny."

Internet companies and supporters of Section 230 note the law has allowed for new and emerging companies to grow into industry leaders without incurring significant litigation costs fighting frivolous claims.

Su, an early adopter of LinkedIn and TikTok for those in the legal field, noted that creators are often quick to take advantage of new platforms, where they can reach new audiences.

"I think it's no accident that there are these shifts where new entrants come in and you can take advantage of it as a content creator because then you can go viral on that platform with a new audience quickly," he said. "Without those different platforms, I would not have been able to grow in the way that I did."

FEW CLUES FROM THE COURT

The Supreme Court has given little indication of how it may approach Section 230. Only Justice Clarence Thomas has written about lower courts' interpretations of the legal shield.

"Courts have long emphasized non-textual arguments when interpreting [Section] 230, leaving questionable precedent in their wake," Thomas wrote in a 2020 statement urging the court to consider whether the law's text "aligns with the current state of immunity enjoyed by internet platforms."

The Supreme Court could issue a ruling that affirms how Section 230 has been interpreted by lower courts, or narrow the law's immunity.

But internet companies warned the court that if it limits the scope of Section 230, it could drastically change how they approach content posted to their sites. With a greater risk of costly litigation with fewer protections, companies may be more cautious about letting content appear on their sites that may be problematic, and only allow content that has been vetted and poses little legal risk.

"If you're concerned about censorship, the last thing you want is a legal regime that is going to punish platforms for keeping things online," Mackey said. "It's going to be increased censorship, more material will be taken down, a lot won't make it online in the first place."

A decision from the Supreme Court is expected by the summer.

#us politics#news#cbs news#2023#us supreme court#scotus#Google v. Gonzalez#section 230#Communications Decency Act#tiktok#facebook#twitter#YouTube#google#meta#instagram#social media#censorship#9th circuit court of appeals#reddit#algorithmic recommendations#match#tinder#big tech#sen. Ron Wyden#Chris Cox#Judge Paul Diamond#LinkedIn#justice clarence thomas#the internet

21 notes

Text

The Section 230 case in the Supreme Court in the USA is the most important thing to pay attention to right now. It’s honestly scaring me how much this could impact me and other content creators. Right now I’m panicking over what my only career path will look like in 4 months if the Supreme Court does what it always does and fucks everything up…

If you haven’t heard what’s going on, I’d suggest watching what LegalEagle or Philip DeFranco posted about the Supreme Court and the future of the internet. Basically, they’re debating whether a content platform can be sued for the content its users post on it, treating YouTube’s suggestion algorithm as YouTube “creating” the content and making it liable for everything its algorithm recommends… This could destroy YouTube’s ability to host videos the way we know it, limiting search and recommendation functions, or at worst forcing YouTube to limit who can even post to its platform. It’s scary to even think about what might happen to small creators without an established subscriber base… no one new would see your videos, only people who actively search them out or who already follow you…

TL;DR: If you live in the USA, please pay attention to the Supreme Court and do whatever you can to influence them to protect these platforms’ freedoms!! Sign petitions, call your reps, please do whatever you can to keep platforms like YouTube and Twitch alive!!

#announcerofgames#lgbtqia+#safespace#twitch#twitch streamer#gaming#lgbtqia#youtuber#youtube#lgbtq#memes#supreme court#supremecourt#usa#politics#save the internet#savetheinternet#section 230#section230

26 notes

Text

As a member of Congress, New Jersey Democrat Tom Malinowski led a push to open tech companies up to lawsuits if they promote content that leads to offline harm, a proposal that zeroed in on cases pertaining to international terrorism or civil rights abuses.

The effort has become more timely as the Supreme Court takes up that issue in Gonzalez v. Google, a case over whether platforms can bear responsibility for boosting terrorist content.

Read more.

12 notes

Text

Solving the Moderator's Trilemma with Federation

The classic trilemma goes: “Fast, cheap or good, pick any two.” The Moderator’s Trilemma goes, “Large, diverse userbase; centralized platforms; don’t anger users — pick any two.” The Moderator’s Trilemma is introduced in “Moderating the Fediverse: Content Moderation on Distributed Social Media,” a superb paper from Alan Rozenshtein of U of Minnesota Law, forthcoming in the journal Free Speech Law, available as a prepub on SSRN:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4213674#maincontent

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/03/04/pick-all-three/#agonism

Rozenshtein proposes a solution (of sorts) to the Moderator’s Trilemma: federation. De-siloing social media, breaking it out of centralized walled gardens and recomposing it as a bunch of small servers run by a diversity of operators with a diversity of content moderation approaches. The Fediverse, in other words.

In Albert Hirschman’s classic treatise Exit, Voice, and Loyalty, stakeholders in an institution who are dissatisfied with its direction have two choices: voice (arguing for changes) or exit (going elsewhere). Rozenshtein argues that Fediverse users (especially users of Mastodon, the most popular part of the Fediverse) have more voice and more “freedom of exit”:

https://en.wikipedia.org/wiki/Exit,_Voice,_and_Loyalty

Large platforms — think Twitter, Facebook, etc — are very unresponsive to users. Most famously, Facebook polled its users on whether they wanted to be spied on. Faced with overwhelming opposition to commercial surveillance, Facebook ignored the poll result and cranked the surveillance dial up to a million:

https://www.nbcnews.com/tech/tech-news/facebook-ignores-minimal-user-vote-adopts-new-privacy-policy-flna1c7559683

A decade later, Musk performed the same stunt, asking users whether they wanted him to fuck all the way off from the company, then ignored the vox populi, which, in this instance, was not vox Dei:

https://apnews.com/article/elon-musk-twitter-inc-technology-business-8dac8ae023444ef9c37ca1d8fe1c14df

Facebook, Twitter and other walled gardens are designed to be sticky-traps, relying on high switching costs to keep users locked within their garden walls which are really prison walls. Internal memos from the companies reveal that this strategy is deliberate, designed to keep users from defecting even as the service degrades:

https://www.eff.org/deeplinks/2021/08/facebooks-secret-war-switching-costs

By contrast, the Fediverse is designed for ease of exit. With one click, users can export the list of the accounts they follow, block and mute, as well as the accounts that follow them. With one more click, users can import that data into any other Fediverse server and be back up and running with almost no cost or hassle:

https://pluralistic.net/2022/12/23/semipermeable-membranes/
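To make the “export, then import” step concrete, here is a toy Python sketch of the round trip: one server writes a user’s follow list out as CSV, and another server reads it back in. The one-column “Account address” layout and the helper names `export_follows` and `import_follows` are assumptions made up for illustration; this is not Mastodon’s actual export format or code.

```python
import csv
import io

# Hypothetical helpers: a toy model of moving a follow list between servers.
# The one-column "Account address" CSV layout is an assumption for illustration.


def export_follows(follows: list[str]) -> str:
    """Serialize account addresses (user@server) into CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["Account address"])
    for address in follows:
        writer.writerow([address])
    return buf.getvalue()


def import_follows(csv_text: str) -> list[str]:
    """Parse CSV text produced by export_follows back into account addresses."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["Account address"] for row in reader]


if __name__ == "__main__":
    old_server_follows = ["alice@example.social", "bob@another.example"]
    exported = export_follows(old_server_follows)  # the file you download
    new_server_follows = import_follows(exported)  # the file you upload elsewhere
    print(new_server_follows == old_server_follows)  # True
```

The point of the sketch is that the follow list is plain, portable data: nothing in it is tied to the server that produced it, which is what keeps switching costs low.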

Last month, “Nathan,” the volunteer operator of mastodon.lol, announced that he was pulling the plug on the server because he was sick of his users’ arguments about the new Harry Potter game. Many commentators pointed to this as a mark against federated social media, “You can’t rely on random, thin-skinned volunteer sysops for your online social life!”

https://mastodon.lol/@nathan/109836633022272265

But the mastodon.lol saga demonstrates the strength of federated social media, not its weakness. After all, 450 million Twitter users are also at the mercy of a thin-skinned sysop — but when he enshittifies his platform, they can’t just export their data and re-establish their social lives elsewhere in two clicks.

Mastodon.lol shows us how, if you don’t like your host’s content moderation policies, you can exercise voice — even to the extent of making him so upset that he shuts off his server — and where voice fails, exit steps in to fill the gap, providing a soft landing for users who find the moderation policies untenable:

https://doctorow.medium.com/twiddler-1b5c9690cce6

Traditionally, centralization has been posed as beneficial to content moderation. As Rozenshtein writes, a company that can “enclose” its users and lock them in has an incentive to invest in better user experience, while companies whose users can easily migrate to rivals are less invested in those users.

And centralized platforms are more nimble. The operators of centralized systems can add hundreds of knobs and sliders to their back end and twiddle them at will. They act unilaterally, without having to convince other members of a federation to back their changes.

Centralized platforms claim that their most powerful benefit to users is extensive content moderation. As Tarleton Gillespie writes, “Moderation is central to what platforms do, not peripheral… [it] is, in many ways, the commodity that platforms offer”:

https://yalebooks.yale.edu/book/9780300261431/custodians-of-the-internet/

Centralized systems claim that their enclosure keeps users safe — from bad code and bad people. Though Rozenshtein doesn’t say so, it’s important to note that this claim is wildly oversold. Platforms routinely fail at preventing abuse:

https://www.nbcnews.com/nbc-out/out-news/sexual-assault-harassment-bullying-trans-students-say-targeted-school-rcna7803

And they also fail at blocking malicious code:

https://www.scmagazine.com/news/threats/apple-bugs-ios-macos_new_class

But even where platforms do act to “keep users safe,” they fail, thanks to the Moderator’s Trilemma. Setting speech standards for millions or even billions of users is an impossible task. Some users will always feel like speech is being underblocked — while others will feel it’s overblocked (and both will be right!):

https://www.eff.org/deeplinks/2021/07/right-or-left-you-should-be-worried-about-big-tech-censorship

And platforms play very fast and loose with their definition of “malicious code” — as when Apple blocked OG App, an Instagram ad-blocker that gave you a simple feed consisting of just the posts from the people you followed:

https://pluralistic.net/2023/02/05/battery-vampire/#drained

To resolve the Moderator’s Trilemma, we need to embrace subsidiarity: “decisions should be made at the lowest organizational level capable of making such decisions.”

https://pluralistic.net/2023/02/07/full-stack-luddites/#subsidiarity

For Rozenshtein, “content-moderation subsidiarity devolves decisions to the individual instances that make up the overall network.” The fact that users can leave a server and set up somewhere else means that when a user gets pissed off enough about a moderation policy, they don’t have to choose between leaving social media or tolerating the policy — they can simply choose another server that’s part of the same federation.

Rozenshtein asks whether Reddit is an example of this, because moderators of individual subreddits are given broad latitude to set their own policies and anyone can fork a subreddit into a competing community with different moderation norms. But Reddit’s devolution is a matter of policy, not architecture — subreddits exist at the sufferance of Reddit’s owners (and Reddit is poised to go public, meaning those owners will include activist investors and large institutions that might not care about your little community). You might be happy about Reddit banning /r/The_Donald, but if they can ban that subreddit, they can ban any subreddit. Policy works well, but fails badly.

By moving subsidiarity into technical architecture, rather than human policy, the fediverse can move from antagonism (the “zero-sum destructiveness” that dominates current online debate) to agonism, where your opponent isn’t an enemy — they are a “political adversary”:

https://www.yalelawjournal.org/article/the-administrative-agon

Here, Rozenshtein cites Aymeric Mansoux and Roel Roscam Abbing’s “Seven Theses On The Fediverse And The Becoming Of Floss”:

https://test.roelof.info/seven-theses.html

For this to happen, different ideologies must be allowed to materialize via different channels and platforms. An important prerequisite is that the goal of political consensus must be abandoned and replaced with conflictual consensus…

So your chosen Mastodon server “may have rules that are far more restrictive than those of the major social media platforms.” But the whole Fediverse “is substantially more speech protective than are any of the major social media platforms, since no user or content can be permanently banned from the network and anyone is free to start an instance that communicates both with the major Mastodon instances and the peripheral, shunned instances.”

A good case-study here is Gab, a Fediverse server by and for far-right cranks, conspiratorialists and white nationalists. Most Fediverse servers have defederated (that is, blocked) Gab, but Gab is still there, and Gab has actually defederated from many of the remaining servers, leaving its users to speak freely — but only to people who want to hear what they have to say.

This is the true meaning of “freedom of speech isn’t freedom of reach.” Willing listeners aren’t blocked from willing speakers, but you don’t have the right to be heard by people who don’t want to talk to you:

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

Fediverse servers are (thus far) nonprofits or hobbyist sites, and don’t have the same incentives to drive “engagement” to maximize the opportunities to show advertisements. Fediverse applications are frequently designed to be antiviral: that is, to prevent spectacular spreads of information across the system.

It’s possible — likely, even — that future Fediverse servers will be operated by commercial operators seeking to maximize attention in order to maximize revenue — but the users of these servers will still have the freedom of exit that they enjoy on today’s Jeffersonian volunteer-run servers — and so commercial servers will have to either curb their worst impulses or lose their users to better systems.

I’ll note here that this is a progressive story of the benefits of competition — not the capitalist’s fetishization of competition for its own sake, but rather, competition as a means of disciplining capital. It can be readily complemented by discipline through regulation — for example, extending today’s burgeoning crop of data-protection laws to require servers to furnish users with exports of their follow/follower data so they can go elsewhere.

There’s another dimension to decentralized content moderation that exit and voice don’t address — moderating “harmful” content. Some kinds of harm can be mitigated through exit — if a server tolerates hate speech or harassment, you can go elsewhere, preferably somewhere that blocks your previous server.

But there are other kinds of speech that must not exist — either because they are illegal or because they enact harms that can’t be mitigated by going elsewhere (or both). The most spectacular version of this is Child Sex Abuse Material (CSAM), a modern term-of-art to replace the more familiar “child porn.”

Rozenshtein says there are “reasons for optimism” when it comes to the Fediverse’s ability to police this content, though as he unpacked this idea, I found it much weaker than his other material. Rozenshtein proposes that Fediverse hosts could avail themselves of PhotoDNA, Microsoft’s automated scanning tool, to block and purge themselves of CSAM, while noting that this is “hardly foolproof.”

If automated scanning fails, Rozenshtein allows that this could cause “greater consolidation” of Mastodon servers to create the economies of scale to pay for more active, human moderation, which he compares to the consolidation of email that arose as a result of the spam-wars. But the spam-wars have been catastrophic for email as a federated system and produced all kinds of opportunities for mischief by the big players:

https://doctorow.medium.com/dead-letters-73924aa19f9d

Rozenshtein: “There is a tradeoff between a vibrant and diverse communication system and the degree of centralized control that would be necessary to ensure 100% filtering of content. The question, as yet unknown, is how stark that tradeoff is.”

The situation is much simpler when it comes to servers hosted by moderators who are complicit in illegal conduct: “the Fediverse may live in the cloud, its servers, moderators, and users are physically located in nations whose governments are more than capable of enforcing local law.” That is, people who operate “rogue” servers dedicated to facilitating assassination, CSAM, or what-have-you will be arrested, and their servers will be seized.

Fair enough! But of course, this butts up against one of the Fediverse’s shortcomings: it isn’t particularly useful for promoting illegal speech that should be legal, like the communications of sex workers who were purged from the internet en masse following the passage of SESTA/FOSTA. When sex workers tried to establish a new home in the fediverse on a server called Switter, it was effectively crushed.

This simply reinforces the idea that code is no substitute for law, and while code can interpret bad law as damage and route around it, it can only do so for a short while. The best use of speech-enabling code isn’t to avoid the unjust suppression of speech — it’s to organize resistance to that injustice, including, if necessary, the replacement of the governments that enacted it:

https://onezero.medium.com/rubber-hoses-fd685385dcd4

Rozenshtein briefly addresses the question of “filter bubbles,” and notes that there is compelling research that filter bubbles don’t really exist, or at least, aren’t as important to our political lives as once thought:

https://sciendo.com/article/10.2478/nor-2021-0002

Rozenshtein closes by addressing the role policy can play in encouraging the Fediverse. First, he proposes that governments could host their own servers and use them for official communications, as the EU Commission did following Musk’s Twitter takeover:

https://social.network.europa.eu

He endorses interoperability mandates that would require dominant platforms to connect to the fediverse (facilitating their users’ departure), like the ones in the EU’s DSA and DMA and those proposed in US legislation like the ACCESS Act:

https://www.eff.org/deeplinks/2022/04/eu-digital-markets-acts-interoperability-rule-addresses-important-need-raises

To get a sense of how that would work, check out “Interoperable Facebook,” a video and essay I put together with EFF to act as a kind of “design fiction,” in the form of a user manual for a federated, interoperable Facebook:

https://www.eff.org/interoperablefacebook

He points out that this kind of mandatory interop is a preferable alternative to the unconstitutional (and unworkable!) speech bans proposed by Florida and Texas, which limit the ability of platforms to moderate speech. Indeed, this is an either-or proposition — under the terms proposed by Florida and Texas, the Fediverse couldn’t operate.

This is likewise true of proposals to eliminate Section 230, the law that largely immunizes platforms from civil liability for speech by their users (it expressly does not shield them from federal criminal law). While this law is incorrectly smeared as a gift to Big Tech, it is most needed by small services that can’t possibly afford to monitor everything their users say:

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

One more recommendation from Rozenshtein: treat interop mandates as an alternative (or adjunct) to antitrust enforcement. Competition agencies could weigh whether big platforms interoperate with the Fediverse when deciding whether to enforce against them, and enforcement orders could include mandates to interoperate with the Fediverse. This is a much faster remedy than break-ups, which Rozenshtein is dubious of because they are “legally risky” and “controversial.”

To this, I’d add that even for people who would welcome break-ups (like me!) they are sloooow. The breakup of AT&T took 69 years. By contrast, interop remedies would give relief to users right now:

https://onezero.medium.com/jam-to-day-46b74d5b1da4

On Tue (Mar 7), I’m doing a remote talk for TU Wien.

On Mar 9, you can catch me in person in Austin at the UT School of Design and Creative Technologies, and remotely at U Manitoba’s Ethics of Emerging Tech Lecture.

On Mar 10, Rebecca Giblin and I kick off the SXSW reading series.

[Image ID: A trilemma Venn diagram, showing three ovoids in a triangular form, which intersect at their tips, but not in the middle. The ovoids are labeled 'Avoid angering users,' 'Diverse userbase,' 'Centralized platforms.' In the center of the ovoids is the Mastodon mascot. The background is composed of dead Twitter birds on their backs with exes for eyes.]

#pluralistic #cda #cda230 #section 230 #content moderation #federation #intermediary liability #mastodon #fediverse #protocols not platforms #activitypub #moderator's trilemma #agonism #subsidiary