#OpenCog

Text

Can the Singularity Net (AGIX) make money from AI?

The Singularity Net (AGIX) is a blockchain platform built to cash in on artificial intelligence.

To elaborate, the Singularity Net serves as a decentralized marketplace for artificial intelligence products. Its developers claim that AIs can run on Singularity Net’s blockchain.

The goal of the Singularity Net is to decentralize AI and Artificial General Intelligence (AGI). To explain, an artificial general…

#AGIX Token Tools#AI Marketplace & AI Publisher#An Ever Growing Library of AI Algorithms#Artificial General Intelligence (AGI)#Artificial Intelligence#Artificial Intelligence for Everybody#Can the Singularity Net (AGIX) make money from AI?#OpenCog Hyperion#Singularity Net (AGIX)#SingularityNet (AGIX)#What Value Does the SingularityNet (AGIX) have?

0 notes

Text

Ben Goertzel (MeTTa/OpenCog) posited that some kind of actual pedagogy, meaning teacher/student relationships, may be a required step toward AGI, and that we might build AGI through a gigantic decentralized process similar to the ‘Electric Sheep’ screensaver (named after ‘Do Androids Dream of Electric Sheep?’).

Nothing like what we’re doing now.

0 notes

Text

Janet Adams, COO of the AI coin SingularityNET, recently gave an interview in which she emphasized several important points. In particular, she shed light on the enormous potential of the AI marketplace, which aims to revolutionize the AI industry and remove the traditional obstacles holding back its growth. Let's look at the details.

Explosive signals for the AI coin

SingularityNET's decentralized marketplace aims to level the playing field for AI developers. It also seeks to democratize access to AI and remove the barriers that often slow innovation. In the current technology landscape, valuable breakthroughs are often monopolized by tech giants, leaving visionaries, academics, and students at a disadvantage.

SingularityNET is creating a decentralized marketplace with no central control. As a result, participants do not need to found a company or sell their solutions to large corporations. It also lets them innovate and build quickly and benefit from their work. The marketplace fosters an inclusive AI ecosystem.

Progress on the Cardano-Ethereum bridge

SingularityNET's work on the bridge between Cardano and Ethereum is progressing steadily. The project, launched by the Bangalore team in early 2022 and later handed over to NAINT, focuses on enabling seamless, lossless token transfers between different crypto networks such as Ethereum and Cardano.

The bridge initially supported tokens such as AGIX and NTX. The team also fine-tuned its algorithms and optimized its APIs. This was followed by the integration of the RJV and CGV tokens and plans to add HypC. Total transactions rose by 68% from 2022 to 2023, and transfer volume increased by 50%.

Collaboration with diva robot Desdemona and Ben Ditto

Desdemona, lead singer of the Jam Galaxy Band, has performed around the world, including at the National Association of Music Merchants (NAMM) show. Her unique, distinctive style sets her apart from Sophia.

The collaboration with Ben Ditto got off to a strong start. Yaya Labs, a SingularityNET side project, is also taking important steps: it focuses on Web3 projects and robotics and aims to turn the robot Desi into a leading AI celebrity.

Elon Musk and AI projects

The interview also notes that, although Elon Musk is a visionary, he may not have the unique ideas needed to make a genuine leap to Artificial General Intelligence (AGI). As we at cointahmin.com see it, Musk's AI efforts appear to follow mainstream approaches, much like those of Big Tech companies. In line with the direction of the OpenCog Hyperon project, Adams believes that reaching AGI will require more than deep neural networks.

Adams expects a genuine breakthrough to human-level AGI within 5 to 10 years and predicts that it will upend society and the global economy. Sectors that are already heavily computerized and internet-driven, such as finance and medicine, will probably adopt AI services faster, while others, such as education and agriculture, will lag behind.

Developer incentives in the AI coin AGIX

SingularityNET's marketplace is a valuable project. It gives AI developers the opportunity to own their innovations and be fairly compensated, creating a new way for developers to get fair pay for their work without having to work for large corporations or sell their ideas.

OpenCog Hyperon integrates various kinds of narrow AI and aims to pave the way for AI that can perform many different tasks. This integration of AI functions is expected to bring significant advances in AI capabilities.

Balancing software and hardware in AI

Software and algorithms are vital to AI development, but hardware also plays a crucial role in achieving practical impact. SingularityNET's partnership with hardware developer Simuli is important in this respect: it aims to create Metagraph Pattern Matching (MPMC), which combines core knowledge-graph search algorithms for efficient AI processing.

This is where the AGIX token plays a key role. AGIX is central to unlocking AI's potential, targeting AI curation, self-organization, and problem-solving. By enabling transactions, it also makes AI accessible to companies, organizations, and developers who want to buy and sell AI algorithms at scale.

0 notes

Text

the wonders of Sophia’s mind

By: Shubh Puranik

Hanson Robotics, a Hong Kong-based company, created Sophia, a social humanoid robot. After being activated on February 14, 2016, Sophia made her first public appearance at South by Southwest (SXSW) in Austin, Texas, USA, in mid-March 2016. Sophia has been featured in numerous high-profile interviews and has been covered by media outlets all around the world. Sophia received Saudi citizenship in October 2017, making her the first robot to have citizenship in any nation. Sophia became the first non-human recipient of a United Nations title when she was designated the organisation's first Innovation Champion in November 2017. The robot, modelled after the ancient Egyptian queen Nefertiti, Audrey Hepburn, and its inventor's wife, Amanda Hanson, is known for its human-like appearance and behaviour compared to previous robotic variants. As of 2018, Sophia's architecture includes scripting software, a chat system, and OpenCog, an AI system designed for general reasoning. Sophia imitates human gestures and facial expressions and is able to answer certain questions and to make simple conversation on predefined topics. Sophia uses speech recognition technology from Alphabet Inc. and is “designed to get smarter over time”. Its speech synthesis capability is handled by CereProc's text-to-speech engine, which also allows it to sing. Sophia's intelligence software is designed by Hanson Robotics. The AI program analyses conversations and extracts data that allows it to improve its responses in the future.

1 note

Text

Robots and AI

This topic is fascinating to me because we’ve already come so far with this kind of technology in only a few decades. Robotics and artificial intelligence are almost certainly, for good or bad, going to be a huge part of our future.

Sophia

Sophia was made by Hong Kong-based company Hanson Robotics. She was first activated in 2016. Whilst Sophia is nowhere near as complicated or scary as the public seem to think, what Hanson Robotics achieved is still very impressive.

The robot has some set answers to certain questions, so she doesn’t actually think about a lot of the things she’s asked at conferences, etc. (a lot of it is just for show). It’s like asking Google or Alexa a question. The programs that determine these kinds of responses are called Timeline Editor and ChatBot.

However, it’s not all as fake as I’m making it out to be. She can technically “learn” through something called OpenCog. With this, an AI can sort of store information it has been told and use it later on, like a human memory but not quite. People believe this is what will eventually lead to robots being able to think for themselves.

She hasn’t got emotions like people think, just a few creepy facial expressions.

Boston Dynamics

Their robots are some of the most innovative out there to date.

Atlas is a humanoid robot, roughly 1.5 m (about 5 ft) tall, capable of moving in a very human-like way. The robot has been tested on parkour courses and can now do a lot of things that most people can’t, including backflips. This is made possible by its 28 hydraulic joints and other custom-engineered components. It can run at speeds of 2.5 m/s and weighs less than you might’ve thought, at 89 kg.

I also generally like how this robot looks; maybe it’ll help me design a character.

Spot is a dog-inspired bot. It has similar balancing and movement capabilities to Atlas, with plenty of joints that allow it to move in ways that most other robots can’t. The robot has many attachments that can be used for different things. In the picture below, Spot has its arm attachment, which allows it to pick up items.

Asimo

This robot’s development sadly (officially) ended in 2018. It was probably discontinued because Honda saw no profit. However, there is still a lot to talk about.

After its official release in 2000, Asimo was probably one of the most innovative inventions of all time in robotics/AI. This is because of how versatile it was: Asimo could walk, talk, and recognize moving objects and even human gestures such as handshakes. These abilities improved up until 2011, when its final version was shown.

0 notes

Text

Open Source, Is it Good for AGI Research or a Suicide Pact? Help us know for sure

Those who have grown up with open source in the past 20 years know that open source is popular. It’s popular for a number of reasons, including that it fosters innovation, speeds up delivery, and helps us all collectively learn from each other.

We ourselves at the AGI Lab have just assumed this was a good thing. We believe that Open Source research helps everyone. Many research groups…

0 notes

Photo

21 Open Source Libraries/Tools for Artificial Intelligence:

1. Caffe
2. CNTK
3. Deeplearning4j
4. Distributed Machine Learning Toolkit
5. H2O
6. Keras
7. Mahout
8. MLlib
9. Mycroft
10. NuPIC
11. Neuroph
12. OpenNN
13. OpenCog
14. OpenCyc
15. ONNX (Open Neural Network Exchange)
16. Oryx 2
17. PredictionIO
18. SystemML
19. TensorFlow
20. Theano
21. Torch

👉 Do you know any other library for AI?

#artificialintelligence #machinelearning #ai #deeplearning #library #programming #development #tool #systemml #torch #caffe #deeplearning4j #mllib #opencog https://www.instagram.com/p/B_4ZWieHKej/?igshid=f3u8cz0affy5

#artificialintelligence#machinelearning#ai#deeplearning#library#programming#development#tool#systemml#torch#caffe#deeplearning4j#mllib#opencog

0 notes

Quote

Ultimately, all the approaches to reaching AGI boil down to two broad schools of thought. One is that if you get the algorithms right, you can arrange them in whatever cognitive architecture you like. Labs like OpenAI seem to stand by this approach, building bigger and bigger machine-learning models that might achieve AGI by brute force.

The other school says that a fixation on deep learning is holding us back. If the key to AGI is figuring out how the components of an artificial brain should work together, then focusing too much on the components themselves—the deep-learning algorithms—is to miss the wood for the trees. Get the cognitive architecture right, and you can plug in the algorithms almost as an afterthought. This is the approach favored by Goertzel, whose OpenCog project is an attempt to build an open-source platform that will fit different pieces of the puzzle into an AGI whole. It is also a path that DeepMind explored when it combined neural networks and search trees for AlphaGo.

Artificial general intelligence: Are we close, and does it even make sense to try? | MIT Technology Review

1 note

Text

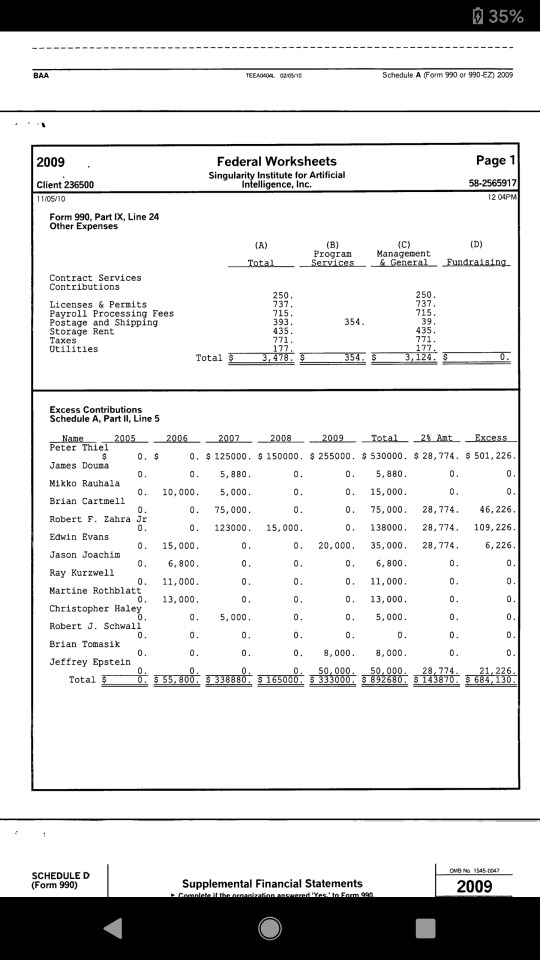

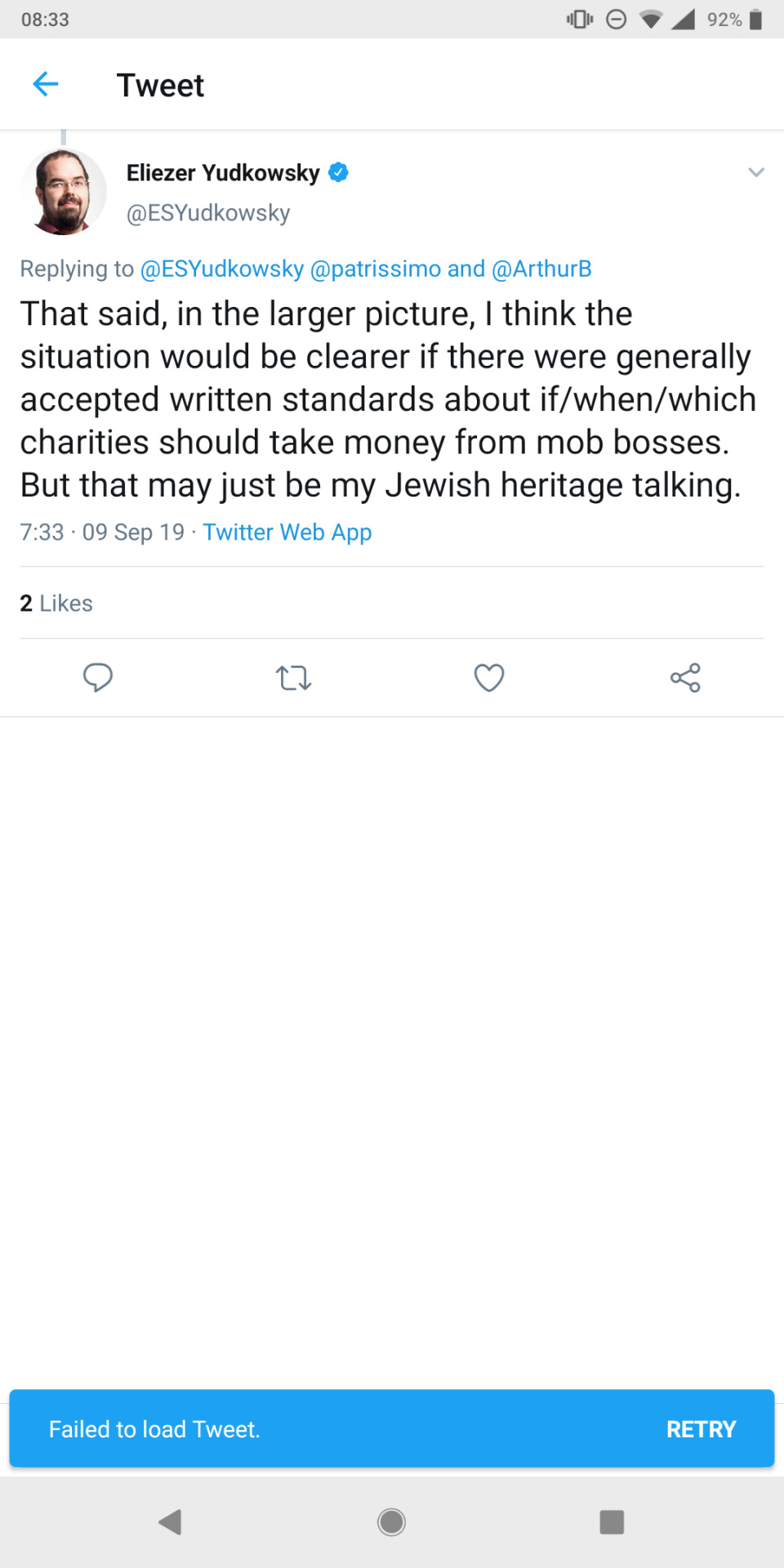

MIRI PAID INTO EPSTEIN'S NETWORK OF SOCIAL LEGITIMACY

so there's the thing where MIRI accepted $50,000 from epstein in 2009, after ~30 teenagers had accused him of molestation and he had been convicted (2008, https://www.nytimes.com/2008/07/01/business/01epstein.html; according to the Miami Herald, by 2008, "when Epstein was granted his now-infamous plea deal, federal prosecutors had identified 36 underage victims." https://www.thecut.com/2019/07/how-many-jeffrey-epstein-victims-are-there.html). this was well after the time people like vassar and yudkowsky and goertzel would have known he was an Elder Vampire who liked to worm his way into tech society.

page 18: https://projects.propublica.org/nonprofits/display_990/582565917/2011_01_EO%2F58-2565917_990_200912

yudkowsky asks us:

<<That said, in the larger picture. I think the situation would be clearer if there were generally accepted written standards about if/when/which charities should take money from mob bosses. But that may just be my Jewish heritage talking.>>

i have a criterion! the sort of thing Elder Vampire epstein buys with his donations is social legitimacy, the same thing OpenAI (an organization which accelerates the arms race) bought from OpenPhil by selling a board seat for such a low monetary price. http://benjaminrosshoffman.com/an-openai-board-seat-is-surprisingly-expensive/

--

people know that getting involved with epstein is like getting involved with the mob; eliezer and somni independently generated this analogy. like, epstein rapes children, facilitates other people raping children, and blackmails people in positions of power.

so like, my criterion for when to accept money from epstein is when you are able to undo his plans for social legitimacy. if MIRI were to accept $50,000 from epstein and then write a public post about how he should get 0 social credit from being a donor, listing his crimes, then accepting the money seems good.

if you want to avoid paying into epstein's social legitimacy network, which services industrial rape, you just optimize for his network not increasing.

if you come to a sheet of paper to write your disclaimer of epstein and you are afraid for your company and your life for doing this, then notice that maybe you don't have the constitution to deal with the mob ethically.

and reject the money and let people with more courage do this.

though if you are susceptible to blackmail, you might want to stay away from Elder Vampire blackmailers.

similarly with OpenPhil, except to the extent that buying a seat on the council of an arms-race accelerationist org is a fatally flawed strategy.

i think after he left miri ben commented on a donation by epstein to opencog:

<<Jeffrey Epstein, whose visionary funding of my AGI research has helped me through a number of tight spots over the years. At time of writing, Jeffrey is helping support the OpenCog Hong Kong project.>>

--

people are saying it was just ben goertzel, no one else knew, and he's gone now

[1] people are trying this line: "some of the people who were involved in paying louie's ridiculous blackmail demands are now gone", and this is an attempt to launder crimes. like, anna salamon is still there.

[2] people are saying ~none of the current staff knew~, but people also said ~no one knew~ about a lot of things about epstein. the people who visited his island had ~no idea~ that there was anything going on with lots of teenage girls walking around his island. people said that ~a 17 yo's offer of sex to a 73 yo minsky on a private island must have been perceived as consensual by minsky~. and people also initially buried the fact that miri / cfar caved to louie helm's ridiculous demands, so i'm really not buying that ~no one knew except ben goertzel and he was an anomaly and left miri~.

eliezer yudkowsky: did you know about the epstein donation?

28 notes

Text

August 9, 2020

My weekly review of things I am doing and looking at. A long one this time; topics included disease risk in the food system, my research work patterns, ROI for energy R&D, Apocalypse Never, OpenCog, and housing and transportation in Hillsboro.

Disease Risk and the Food System

Last week I started looking at zoonotic diseases for Urban Cruise Ship, and this week I continued a bit more on disease risk. The current page is here.

Sans images, there is now material on foodborne illnesses, antibiotic resistance as it pertains to antibiotics in livestock, ecological risk from GM crops, and crop disease risk from monoculture. The section is far from done, but it is probably going to go on hold for a while. A few observations:

- Disease risk in general is a major issue, very much on our minds due to COVID-19. That’s a big can of worms. It would take an indeterminate amount of work to do the topic justice and require that I move well beyond the food system. So it’s one that I will have to take one bite at a time.

- There is an image under development that portrays foodborne illness risk in the US by type of food, but there is also a need to look at underlying causes, recognizing that food is a transmission vector and not necessarily the underlying cause.

- Antibiotic resistance looks like a scary topic. There is a report that antibiotic-resistant bacteria could kill 10 million people per year by 2050, which sounds alarming, but I need more context on that number. Does this assume a business-as-usual trajectory where we don’t develop new antibiotics or alternative treatments for antimicrobial-resistant (AMR) bacteria, such as plasma medicine, and how much do such developments bend the curve?

- Ultimately I would like to be able to assess externalized monetary cost from antibiotics in livestock in terms of AMR bacteria. I don’t have this yet, but it should be possible.

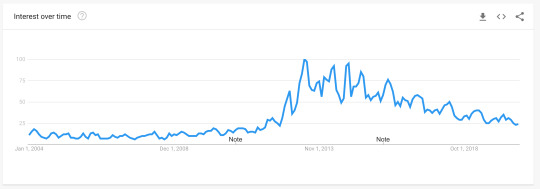

- I half-assed the genetic risks, and I think justifiably so. I don’t see any evidence, aside from vague appeals to the precautionary principle, to support any significant ecological risks from GM crops. Partly to justify the half-assedness of my effort on the topic, I pointed to a Google Trends search indicating that the public is losing interest in the GMO issue.

A few years ago, I thought I was being bold and edgy by pointing to a lack of evidence of any health or environmental risks from GMOs per se. Now that seems like the safe position, and GMO opponents have (deservedly in my view) generally lost credibility in the way the anti-vax movement has.

- One of my associates is interested in systemic risks from crop monoculture, which prompted me to add that section. It appears that disease risk is the major such systemic risk. The issue of crop and animal disease (as opposed to human diseases for which the food system is a vector) is also a major topic deserving of more careful review and analysis. I would suspect that, from the viewpoint of disease, monoculture is not the most important issue, but it appears that way because monoculture was my entry point into the topic.

The Urban Cruise Ship Work Pattern

I figured now would be a decent time to open the hood and make a few comments about how I am going about the work. Recently the funder made some major additions and changes to the scope of work. This is good for me from a job security standpoint, but it means I need to do some major rethinking about how I go about the project, to ensure that things get done at a high level of quality and in reasonable time.

We are ultimately trying to present the best data, analysis, and solutions available on the full range of environmental topics.

Such a grandiose vision requires that I innovate not just in how I think about particular issues, but in how I think about the big picture and how I work. We are settling into a comparison and monetization scheme to present data, an approach that was driven by the funder but that I have been convinced is best.

One thing I have learned is that knowledge across topics is synergistic. That means it is probably going to be more efficient to aim for a broad and shallow understanding of the environmental landscape, after which we go deeper on the things that require a deeper understanding. This is why I am moving on from the agriculture risk section despite having a superficial treatment of the subject; I intend to come back to it later when it can be better informed by material elsewhere on the site, and I also hope that what I have done there will help inform the next sections of work.

This is a work style that suits me well. My mind is always jumping from one area to the next, and I like to draw connections and look at the big picture. This is in sharp contrast to most academic work, which requires a very deep analysis of a narrow topic. I ultimately lost interest in my narrow corner of mathematical research and was not able to make a successful jump to another area; hence (in part) I was not suited for the tenure track.

The obvious drawback is what one sees on the site now. It is obviously incomplete and a bit of a mess, and it will probably remain in such a state for the foreseeable future. It means I have to move fast, which increases the risk of making major mistakes. I fear we are operating at too high a level of abstraction and generality to make actionable policy recommendations.

Although not a high priority, I really wish I could integrate the graphic making process into the larger codebase. The current division of labor is such that I see no way to do so. I dislike having these “Image Under Development” messages and lacking the flexibility to easily modify images as the research proceeds or new data become available.

Return on Investment for R&D

I mentioned before some studies that the US Department of Energy has done on the effectiveness of its research and development efforts. Having looked at them more closely, I found something a bit surprising.

I tried my best to harmonize the numbers reported to make a fair comparison. It’s not perfect, but the following seem to be the central estimates of the ROI for the program investment areas studied:

Combustion engines: 53

Building technologies: 42

Wind: 5.07

Geothermal: 4.865

Hybrid and electric vehicles: 3.63

Solar PV: 1.83

They all look like good investments, though building technologies (HVAC, water heating, appliances) and combustion engines clearly stand out as the best. I would have expected the opposite. Since the building and combustion areas are more incremental, there should be more incentive for the private sector to do the R&D and therefore a “crowding out” effect that would blunt the effectiveness of the public investment.

Part of this could be an artifact of the study methodology. Since the time horizon for the lower-return technologies is longer, they simply haven’t captured the full benefit. The solar PV study was done in 2010, and I would expect a higher return to be found if it was redone today. There could also be an attribution problem: with more novel technologies, it is harder to attribute gains to a particular R&D investment, which depresses the observed ROI.

I want to propose some solutions on R&D efforts for synfuels and industry, so these studies might provide guidance as to what kind of investments can be expected to work best. Maybe this is a sign that I should be thinking more about short term gains.

Apocalypse Never

Apocalypse Never is a new book by Michael Shellenberger castigating the harmful effects of what he sees as environmental alarmism. I haven’t read it, but I have read enough of Shellenberger’s work and discussion around it to make some relevant observations.

Not too surprisingly, the reaction from the environmental community seems to be mostly negative. This article from Snopes captures fairly well what academic climate/environmental researchers think. Despite being from Snopes, the character of the article isn’t a “debunking” so much as a critical analysis. There is much disagreement about semantics (e.g. are we really in the Sixth Mass Extinction?) rather than factual disputes. Though I have a few of those too.

Since I hope one day to have major public exposure for Urban Cruise Ship, the discussion is a helpful case study in how to present material and what kind of reception I should expect.

Since I am critical of several aspects of environmentalism--particularly degrowth and related elements--I expect some negative reaction. To blunt the effect of criticism, I think I need to do a better job of operating on the following principles:

- Focus on principles and avoid ad hominem attacks, including against abstractions such as fields and movements.

- Make every effort to ensure facts presented are accurate.

- Find the right level of nuance. Too little nuance can be inaccurate. Too much nuance can water down a message to the point of meaninglessness.

Though most of the discussion I saw was pretty even-handed, there is some gatekeeping that goes on in the climate community. The bogeyman of the “climate denier” looms large and triggers a kind of circle-the-wagons mentality when the field is criticized, whether justly or unjustly. Lacking formal credentials or institutional backing, I am going to be vulnerable to gatekeeping and probably can’t do anything about it.

OpenCog

Having listened to Ben Goertzel on Lex Fridman’s podcast a while back, I got around this week to looking over OpenCog, which is Goertzel’s open source project to create artificial general intelligence.

There is a ton of material here that will take a long time to work through, especially considering that I am doing it only as a side project. Just reviewing the set of AI principles being brought to bear in the project, though, buoyed my spirits and excited me about the field in a way I haven’t felt in a long time. I am already thinking about some work I can do. Contributing to OpenCog is beyond my capabilities at present, but I have some related design ideas that have been sitting on the shelf for a long time, and it is time to take another look at them.

I have no idea if this effort toward AGI will work. But I would guess that it is more likely to work than an approach rooted exclusively in deep learning, such as the GPT approach, which suffers from intractable diseconomies of scale. In particular, I think that a semantic encoding of knowledge is a necessary component of any AGI stack. There are people with far more expertise who disagree.

Housing and Transportation in Hillsboro

I’ve dialed back my political activities a bit lately, but there were some items at the Hillsboro (Oregon) City Council this week worth commenting on.

City staff presented on efforts to implement HB 2001, a piece of state legislation that mandates most cities allow for middle housing (du-, tri-, quad-plexes, cottage housing, small apartments) in residential areas. Without naming names, my read on the council and mayor is that among the seven, two are generally pro-housing, two are generally anti-housing, one is squishy, and two I don’t have a good read on. I have written to them to indicate my desire that we take advantage of the opportunity provided by HB 2001 for an expansive approach to opening up housing opportunities in Hillsboro.

We also had a presentation on the Get Moving package, which is the transportation package that Metro has now referred to the ballot in November. City staff seemed to be negative. The presenter asserted that Hillsboro gets a disproportionately low ROI (about 0.56) for the project and that Metro was unduly influenced by Portland-based anti-vehicle activists to reject road expansion capacity that Hillsboro needs. One council member expressed her concern (which I agree with) that the financial burden falls entirely on large employers, which will be particularly harmful in Hillsboro and I think is bad tax policy in general. On the positive side, the package includes some badly needed safety upgrades to TV Highway, which is the most dangerous highway in the state per-mile for both pedestrians and motorists. There is also money for a study of a downtown Portland MAX tunnel, which I think will be very important for the region. Ultimately, despite the extensive public engagement theatre, it is a pre-COVID package, based on economic and transportation demand assumptions that may no longer be reasonable.

I haven’t yet decided how I will vote on the package, but I am leaning toward a No right now.

1 note

Link

Based in Hong Kong, OpenCog, an open-source AI programming group led by Ben Goertzel, began its cerebral project with a Hanson Robokind, a toddler-like robot. The challenge is to take the basic intelligence of virtual avatars programmed for the screen and transfer it to a robotic structure. To do this, however, a robot must have the capacity to perceive and interpret the outside world.

OpenCog’s virtual characters have that capacity. Each character has a database called an AtomSpace where thousands of ‘atoms’ exist as knowledge concepts such as objects, actions and feelings (anger, fear, happiness). Every time a character encounters objects or concepts in its environment, a new atom is created in that character’s AtomSpace. Associations are also recorded as a character moves from one concept to another. Upon repetition, associative links get stronger, influencing a character’s pathway choices and building associative memory. Links can also decay over time if not used by algorithms.
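The mechanism described above (an atom created the first time a concept is encountered, associative links that strengthen with repetition and decay when unused) can be illustrated with a small toy model. This is a minimal sketch under stated assumptions, not OpenCog's actual AtomSpace API: the class name `ToyAtomSpace`, the fixed reinforcement step, the multiplicative decay rate and the pruning floor are all hypothetical choices made for illustration.

```python
from collections import defaultdict


class ToyAtomSpace:
    """Toy associative memory loosely modelled on the description above.

    Hypothetical illustration only: this is NOT OpenCog's AtomSpace API.
    """

    def __init__(self, reinforcement=1.0, prune_below=0.05):
        self.atoms = set()               # concept atoms: "ball", "fear", ...
        self.links = defaultdict(float)  # (from_concept, to_concept) -> strength
        self.reinforcement = reinforcement  # assumed fixed boost per repetition
        self.prune_below = prune_below      # assumed floor below which links are forgotten
        self._previous = None               # last concept the character moved from

    def encounter(self, concept):
        """Create an atom for a new concept and strengthen the link from the previous one."""
        self.atoms.add(concept)
        if self._previous is not None and self._previous != concept:
            self.links[(self._previous, concept)] += self.reinforcement
        self._previous = concept

    def decay(self, rate=0.1):
        """Weaken every link by a fixed fraction; drop links that fall below the floor."""
        for key in list(self.links):  # copy keys so we can delete while iterating
            self.links[key] *= 1.0 - rate
            if self.links[key] < self.prune_below:
                del self.links[key]

    def strongest_next(self, concept):
        """Return the most strongly associated follow-up concept, or None if there is none."""
        candidates = {dst: s for (src, dst), s in self.links.items() if src == concept}
        return max(candidates, key=candidates.get) if candidates else None


if __name__ == "__main__":
    space = ToyAtomSpace()
    for concept in ["ball", "play", "happiness", "ball", "play", "ball", "fear"]:
        space.encounter(concept)
    print(space.strongest_next("ball"))  # play (seen twice) beats fear (seen once)
```

The repeated ball-to-play transition ends up as the strongest association from "ball", which is the "influencing a character's pathway choices" effect in miniature; calling `decay()` repeatedly without new encounters eventually forgets every link. Multiplicative decay was chosen so that weakly reinforced links fade first; the real system's dynamics may well differ.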

Generalized intelligence from the environment is also built as similarities between concepts trigger an associative network in the AtomSpace. Several algorithms can run at the same time, such as those following current associative links and those triggered by similarities between concepts. The theory behind this “cognitive synergy” is that humans have multiple thought processes going on simultaneously, prioritizing some over others in order to function.
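The “cognitive synergy” idea above, several processes reacting to the same situation with one of them winning out, can be sketched as a simple arbitration loop. This is a hypothetical toy, not how OpenCog actually schedules its algorithms (to my knowledge OpenCog's own attention-allocation machinery is considerably more involved): the `Process` type, the urgency scores and the per-process weights are assumptions, and the processes are evaluated one after another on a shared context rather than truly in parallel.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Each process inspects the shared context and either proposes an
# (action, urgency) pair or stays silent by returning None.
Proposal = Optional[Tuple[str, float]]


@dataclass
class Process:
    name: str
    propose: Callable[[Dict[str, float]], Proposal]
    weight: float = 1.0  # hypothetical standing priority of this process


def arbitrate(processes: List[Process], context: Dict[str, float]) -> Optional[Tuple[str, str, float]]:
    """Let every process react to the same context; return (process, action, score) of the winner."""
    best: Optional[Tuple[str, str, float]] = None
    for proc in processes:
        proposal = proc.propose(context)
        if proposal is None:
            continue
        action, urgency = proposal
        score = urgency * proc.weight  # priority = urgency x importance of the process
        if best is None or score > best[2]:
            best = (proc.name, action, score)
    return best


# Three hypothetical processes "running" side by side.
processes = [
    # Habit: always nudges toward the most familiar association.
    Process("habit", lambda ctx: ("approach ball", 0.4)),
    # Similarity: reacts in proportion to how ball-like the current object looks.
    Process("similarity", lambda ctx: ("inspect round object", ctx.get("round_object", 0.0))),
    # Threat: silent unless there is a loud noise, but weighted heavily when it fires.
    Process(
        "threat",
        lambda ctx: ("flee", ctx["loud_noise"]) if ctx.get("loud_noise", 0.0) > 0 else None,
        weight=2.0,
    ),
]

if __name__ == "__main__":
    print(arbitrate(processes, {"round_object": 0.7}))
    print(arbitrate(processes, {"round_object": 0.7, "loud_noise": 0.5}))
```

With only a ball-like object in view, the similarity process wins; once a loud noise appears, the heavily weighted threat process takes over even though its raw urgency (0.5) is lower than the similarity score. Multiplying urgency by a standing weight is just one simple way to express "prioritizing some processes over others".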

“The challenge in all of this,” Jeffrey Epstein remarked, “is to create a robotic nervous system that can perceive concepts in its environment.”

23 notes

Text

Open Source, Is it Good for AGI Research or a Suicide Pact? Help us know for sure

Those who have grown up with open source in the past 20 years know that open source is popular. It’s popular for a number of reasons, including that it fosters innovation, speeds up delivery, and helps us all collectively learn from each other.

We ourselves at the AGI Lab have just assumed this was a good thing. We believe that Open Source research helps everyone. Many groups in AGI research are already open-sourcing their work, including OpenCog, OpenNARS, and more.

From an ethical standpoint, we use a system called SSIVA Theory to teach ethics to the systems we work on, such as Uplift. So we assumed we should release some of our code (which we have here on this blog and in papers), and we planned on open-sourcing a version of the mASI, the collective system we work on that uses an AGI cognitive architecture.

From an SSIVA standpoint, you can even make the point that the most ethical course of action is to achieve AGI as quickly as possible. But is that correct? If not, then why?

Recently we have been talking with members of the Machine Intelligence Research Institute (MIRI), who say this is a bad thing. But is it? And why? Can’t collective superintelligent systems contain human-level AGI?

We are putting on a conference to help decide, but so far we have not found anyone to advocate for MIRI’s position. At the conference, we hope to let someone from each side of the issue make a case and then vote on whether open-sourcing is a good thing. At the end of the conference, we will either start open-sourcing our research or not.

In particular, we want to open-source parts of our project “Uplift”.

Let us know if you would like to help either way.

Our conference on Collective Superintelligence is on June 4th.

As a side note, many other groups are already open-sourcing AGI research code (some of these already work as toy AGIs). Here are just a few of them:

https://opensource.com/article/20/7/ai-open-source

and

https://awesomeopensource.com/projects/agi

If you know of others, let us know.

Open Source, Is it Good for AGI Research or a Suicide Pact? Help us know for sure was originally published on transhumanity.net

#agi#AGILab#ICOM#Open Source#OpenCog#OpenNars#research#crosspost#transhuman#transhumanitynet#transhumanism#transhumanist#thetranshumanity


Text

Ben Goertzel 2020 Interview on Artificial General Intelligence

Sander Olson has provided a new, original 2020 interview with Artificial General Intelligence expert and entrepreneur Ben Goertzel. Ben is the founder of SingularityNET.

Question: Hanson Robotics’ Sophia robot has garnered considerable attention. Are there any other robots being developed by Hanson Robotics using OpenCog software?

Yeah — my colleagues at SingularityNET / Singularity Studio / OpenCog and I are currently collaborating on a research project with Hanson Robotics involving using transformer neural nets and neural-symbolic OpenCog AI to help with Sophia’s dialogue system.

We are also at the early stages of working out a potential collaboration with Hanson Robotics and some partners from the medical space, regarding a wheeled, medical-oriented humanoid social robot aimed at eldercare and other patient-facing applications in hospitals, nursing homes and so forth. We are in discussion with hospitals and elder-care facilities in Hong Kong, Taiwan and China as well as the US. The goal is to provide social/emotional connection to patients, as well as medical question answering and helping hospital staff to evaluate patient state, look out for dangerous situations, and so forth. In the context of neurodegenerative disease specifically, we are looking at using these robots to test cognitive abilities, as well as to try various techniques to reduce cognitive decline. If all goes as hoped, we would start gradually with some trial robots, probably in medical facilities in Asia, and then move on to scalable production from there. Once the plans get more definite, we will certainly make some public announcements on this. But I think the need is clear, both in general in terms of understaffing of medical facilities, and in particular right now in terms of the special burdens COVID-19 is placing on medical facilities (and the particular need to minimize human contact in eldercare facilities).


Question: In 2011 OpenCog came out with a roadmap that predicted an “OpenCog-based artificial scientist, operating a small molecular biology laboratory on its own, designing its own experiments and operating the equipment and analyzing the results and describing them in English”, as well as “full-on human-AGI” by 2021. What happened?

To put it very simply, while my OpenCog colleagues and I have done a lot of interesting things in the period since 2011, we have not yet obtained a sizable amount of funding for our AGI R&D work. Of course, some other parties with different approaches to AGI have been very well capitalized — but while their approaches have been more suitable for meeting the psychological needs of investors and the business model needs of large corporations, they are ultimately not workable in terms of achieving AGI. Ultimately the idea that you can take methods that have worked for particular narrow AI problems and scale them up to achieve AGI by adding more processor power and more data — just isn’t gonna work. You do need a lot of processing power, and you do need a lot of data — but you also need a cognitive architecture and a collection of interlocking learning and reasoning algorithms that are well thought out based on a theoretical and practical understanding of general intelligence. My colleagues and I have this, but we haven’t had OpenAI- or DeepMind-scale resources. Those projects with massive resourcing, while staffed by brilliant people, have been pursuing inadequately well thought out designs and approaches.

So in short, the industry has spent huge sums on flawed approaches that will never on their own achieve AGI. If OpenCog had secured budgets similar to DeepMind and OpenAI, we would probably at least be close to fulfilling our 2011 predictions — or we might even have exceeded them. Corporations like Google and Microsoft and Facebook and so forth are trying to develop AGI by tweaking and modifying the narrow-AI techniques that are geared towards making them money. These techniques will encounter diminishing returns in the next decade, and that should open opportunities for alternate approaches — which will finally lead to more cognitively sound approaches like OpenCog getting the resources they need.

Within the SingularityNET Foundation’s AI initiative we have managed to make some decent progress toward OpenCog-based AGI — but SingularityNET is itself a startup project with a different focus, so there’s only so much it can do. At SingularityNET we’ve created a decentralized, blockchain-based platform for AI — which is going to be incredibly helpful for AGI, in terms of enabling it to operate in a fully distributed manner without need for any central controller. The SingularityNET platform can help an OpenCog AGI — or other sorts of AGIs — operate as open-ended intelligences rather than centrally-controlled systems, which will ultimately increase their general intelligence. But really building an AGI based on the OpenCog design requires more effort focused in that direction than SingularityNET has been able to provide. I mean, building a global decentralized AI platform is a lot of work unto itself, overlapping with but distinct from the work of implementing and teaching specific AGI systems…

Question: The SingularityNET Foundation has recently updated its roadmap, specifically with regard to OpenCog 2.0. What details can you divulge about OpenCog 2.0?

We are currently in an intensive phase of requirements analysis and technical design for the next version of OpenCog, which we’re calling OpenCog Hyperon. We have a crack team of Russian researchers and developers starting to focus their time on this, led by Alexey Potapov and Vitaly Bogdanov in St. Petersburg, along with a distributed team including folks in SingularityNET’s Hong Kong office and our Ethiopian firm iCog Labs.

We are also hoping to enlist a global community of volunteer programmers in the effort. At my current guesstimate, it will probably take 1-2 years to get it completed — IF we can garner either significant volunteer programmer support or at least modest funding oriented specifically to the effort. Of course if SingularityNET’s decentralized marketplace is economically successful enough it could then fund OpenCog Hyperon development on its own, but at the moment SingularityNET’s business aspect is taking a while to mature, so we are looking at other ways to augment SingularityNET’s contributions and accelerate OpenCog Hyperon development.

Question: What are the main components of OpenCog 2.0?

Basically, OpenCog’s AI design allows a bunch of different AI algorithms to cooperate together in dynamically updating a weighted, labeled hypergraph knowledge store called the Atomspace. There is a lot of mathematical and cognitive theory worked out over the last decades, regarding how to make multiple algorithms — like neural nets, reasoning engines, evolutionary algorithms — work together effectively in this context to achieve goals and recognize patterns and imagine and create new ideas etc.
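To make the “weighted, labeled hypergraph” idea concrete: every item (a node or a link) is an atom with a label and a weight, and a link may connect any number of atoms, including other links. The Python sketch below is a simplified illustration under those assumptions only. The class names, the `(strength, confidence)` weight pair and the `links_of_type` query are hypothetical; labels such as `ConceptNode` and `InheritanceLink` are borrowed from classic OpenCog atom types for flavor, but this is not the Atomspace API.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple, Union


@dataclass(frozen=True)
class Node:
    type: str
    name: str


@dataclass(frozen=True)
class Link:
    type: str
    outgoing: Tuple["Atom", ...]  # any number of atoms, including other links


Atom = Union[Node, Link]


class HypergraphStore:
    """Toy weighted, labeled hypergraph; not the real OpenCog Atomspace API."""

    def __init__(self) -> None:
        # atom -> (strength, confidence), loosely modeled on a simple truth value
        self.weights: Dict[Atom, Tuple[float, float]] = {}
        # atom -> set of links that point at it (its "incoming set")
        self.incoming: Dict[Atom, Set[Link]] = {}

    def add(self, atom: Atom, strength: float = 1.0, confidence: float = 0.0) -> Atom:
        if isinstance(atom, Link):
            for child in atom.outgoing:
                if child not in self.weights:
                    self.add(child)  # children not yet stored get default weights
                self.incoming.setdefault(child, set()).add(atom)
        self.weights[atom] = (strength, confidence)
        self.incoming.setdefault(atom, set())
        return atom

    def links_of_type(self, atom: Atom, link_type: str) -> Set[Link]:
        # A basic query: which links with a given label mention this atom?
        return {link for link in self.incoming.get(atom, set()) if link.type == link_type}


if __name__ == "__main__":
    store = HypergraphStore()
    cat, animal = Node("ConceptNode", "cat"), Node("ConceptNode", "animal")
    dog, chases = Node("ConceptNode", "dog"), Node("PredicateNode", "chases")
    is_a = store.add(Link("InheritanceLink", (cat, animal)), strength=0.95, confidence=0.9)
    # A link whose outgoing set contains another link: "dog chases cat".
    store.add(Link("EvaluationLink", (chases, Link("ListLink", (dog, cat)))), 0.7, 0.5)
    print(store.links_of_type(cat, "InheritanceLink") == {is_a})  # True
```

Because links can point at links, the structure is more general than an ordinary graph, which is the property the answer above relies on when it talks about many algorithms cooperating on one shared store.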

So regarding the upgrade from legacy OpenCog to OpenCog Hyperon, there are two main components — a new large scale distributed version of the Atomspace; and the “Atomese 2” programming language. Right now a number of my colleagues are focusing on the distributed Atomspace, and along with Alexey Potapov and other colleagues I am focusing more personal energy on the Atomese 2 language. The goal with Atomese 2 is to make it more efficient and simple for developers to experiment with versions of AI algorithms within the OpenCog design, and also to better support meta-programming — where the AI algorithms, implemented in Atomese 2, write new Atomese 2 code thus improving the system’s intelligence via self-modification.
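The meta-programming goal, AI algorithms that write new code in the same language they are written in, rests on treating programs as data. The Python sketch below illustrates only that general idea; it does not show Atomese 2 syntax or semantics, and the expression format, `propose_variants`, and `improve` are hypothetical.

```python
import operator
from typing import Callable, Dict, List, Tuple, Union

# A program is plain data: a number, a variable name, or an (op, left, right) tuple.
Expr = Union[int, float, str, Tuple[str, "Expr", "Expr"]]
OPS: Dict[str, Callable[[float, float], float]] = {"add": operator.add, "mul": operator.mul}


def evaluate(expr: Expr, env: Dict[str, float]) -> float:
    """Interpret a program stored as data."""
    if isinstance(expr, (int, float)):
        return expr
    if isinstance(expr, str):
        return env[expr]
    op, left, right = expr
    return OPS[op](evaluate(left, env), evaluate(right, env))


def propose_variants(program: Expr) -> List[Expr]:
    """A tiny meta-program: because programs are data, code can write new code."""
    return [("add", program, 1), ("mul", program, 2), ("mul", program, program)]


def improve(program: Expr, score: Callable[[float], float], env: Dict[str, float]) -> Expr:
    """Self-modification step: keep whichever of the program or its variants scores best."""
    candidates = [program] + propose_variants(program)
    return max(candidates, key=lambda p: score(evaluate(p, env)))


if __name__ == "__main__":

    def closeness_to_25(value: float) -> float:
        return -abs(value - 25)  # prefer programs whose output is near 25

    prog = ("add", "x", 3)
    print(improve(prog, closeness_to_25, {"x": 2}))  # ('mul', ('add', 'x', 3), ('add', 'x', 3))
```

Repeating `improve` in a loop gives a crude self-modifying system; the hard part the answer alludes to is generating variants that are actually worth evaluating.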

Question: Will OpenCog 2.0 leverage GPUs, or be CPU only?

Unlike the neural net approaches that are currently so popular, OpenCog does not run particularly well on GPUs. But that could change in the future as the architecture evolves. There are tools like Gunrock that allow graph algorithms to exploit GPUs reasonably well — but making OpenCog use these would require a lot of work that hasn’t been done yet, and even once that work is done, GPUs wouldn’t be the silver bullet for OpenCog type designs that they have been for neural nets. The crux of the issue is that OpenCog is founded more on large scale graph (and hypergraph and metagraph) processing than on vector arithmetic, whereas GPUs are golden mostly for accelerating vector and matrix math. You can of course project graph operations into vector and matrix math, but you lose some efficiency (as well as elegance) in the translation.
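The graph-versus-vector point shows up in a single step of graph traversal: finding every node reachable in one hop from a set of frontier nodes. The plain-Python sketch below (no GPU library, and not OpenCog code) computes that step two ways. The graph-native version does work proportional to the edges it touches; the matrix version, the kind of dense arithmetic GPUs accelerate well, does n * n multiply-adds even for a sparse graph, and it keeps only 0/1 connectivity, so labels and weights on links would need extra encoding. The function names are hypothetical.

```python
from typing import Dict, List, Set

Graph = Dict[int, List[int]]  # adjacency lists: node -> successors


def expand_graph(adj: Graph, frontier: Set[int]) -> Set[int]:
    """Graph-native step: touch only the edges that leave the frontier."""
    reached: Set[int] = set()
    for u in frontier:
        reached.update(adj.get(u, []))
    return reached


def expand_matrix(adj: Graph, frontier: Set[int], n: int) -> Set[int]:
    """The same step projected into linear algebra: y = A^T x on a dense n-by-n 0/1 matrix."""
    a = [[0] * n for _ in range(n)]
    for u, successors in adj.items():
        for v in successors:
            a[u][v] = 1
    x = [1 if i in frontier else 0 for i in range(n)]
    # n * n multiply-adds, however few edges the graph actually has.
    y = [sum(a[u][v] * x[u] for u in range(n)) for v in range(n)]
    return {v for v in range(n) if y[v] > 0}


if __name__ == "__main__":
    g: Graph = {0: [1, 2], 1: [3], 2: [3], 3: []}
    print(expand_graph(g, {0}), expand_matrix(g, {0}, 4))  # {1, 2} {1, 2}
```

Both functions return the same answer; the difference is in how much work is spent and how much of the graph's structure survives the translation.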

OpenCog can, however, make extensive use of massive cloud computing resources and server farms. And OpenCog Hyperon is going to do this vastly better than the current OpenCog version.

Question: Tell us about True AGI

OK, so the SingularityNET Foundation — the organization I’m currently running — is a non-profit platform designed as a marketplace to buy, sell, and develop AI services, and to allow different AIs to cooperate and collaborate to solve problems — fully decentralized and running on the blockchain. SingularityNET has released a whole bunch of open-source code aimed at creating platforms for decentralized AI…. It has also developed a bunch of AI code that uses this platform to do stuff — including biomedical AI, audio processing, natural language processing, fintech and on and on.

Going forward we are looking to focus SingularityNET Foundation more strongly on the underlying decentralized protocols — as that is a huge area in itself — and spin off some of the upper-layer projects the Foundation is working on into some new entities that will be minority-owned by the Foundation but separately managed and able to grow in their own directions.

Along these lines we currently have three for-profit corporations that are spin-offs of SingularityNET — True AGI, Singularity Studio, and Rejuve.

Singularity Studio is building enterprise AI software using unique neural-symbolic methods for data analytics and process automation, and back-ended on SingularityNET’s platform. Singularity Studio’s tools would provide much of the AI back-end for the nursing assistant robotics project I mentioned a few minutes ago, also. Rejuve.io is focused on bioinformatics and crowdsourcing data for longevity analysis.

True AGI is concentrating on the core of the AGI problem, using SingularityNET as a platform and also other open-source platform technologies as appropriate. TrueAGI will help with OpenCog Hyperon, and also develop proprietary code aimed at making it easy to use OpenCog Hyperon for large-scale commercial applications.

So for instance the first version of the nursing assistant robots would use the current OpenCog version along with deep neural nets and other appropriate technologies — but once TrueAGI has finished its initial development work, these robots could be upgraded massively in intelligence via integrating TrueAGI’s AI engine with Singularity Studio’s product code. All of which of course would live in the cloud on the back end, not visible to the end-users of the robots except via their increased intelligence and capabilities.

All of these spinoffs, via using SingularityNET platform on the back end, would increase utilization of the platform and thus accelerate SingularityNET’s growth — lots of virtuous cycles going on here. What we need is beneficial AGI running on an open and decentralized platform and providing value to all people and every domain of industry.

If we can achieve that we can get the beneficial Singularity that so many of us have been foreseeing for most of our lives.

Question: How is OpenCog being used with Rejuve?

OpenCog is now being used to analyze genomics research and clinical trial data. We have been getting some pretty interesting results on cancer clinical trial data lately, and we plan on applying the same methods to COVID-19 trials regarding anti-viral cocktails once sufficient data is available. We’ve also been looking at data regarding the genomics of supercentenarians and discovering some quite intriguing things.

But right now we are constrained by the data made available by the corporate and government labs that are generating it. To really see the power of our advanced AI in the medical space, we need to feed it more and better data. Rejuve is a membership organization in which the members band together to feed their personal medical data to AI tools that then discover new things based on this data. Any money made from these discoveries feeds partly back to the members. SingularityNET and OpenCog are tools used for the AI data analytics process.

SingularityNET platform and OpenCog AI apply to every vertical market and every aspect of human pursuit, and of course I can’t personally put my hand into all of them — but human life extension is an application of particular personal and professional interest to me. Partly because I enjoy my life and have a strong interest in not dying. And partly because it’s an incredibly fascinating intellectual puzzle. And partly just out of compassion for everyone who is suffering and dying around the world. Wouldn’t it be great if we could just eliminate death and disease and torment and suffering from human life? It sounds outrageous but in the historical scope we are now very close to achieving this. We just need to take the last few steps — and we can take them much faster if we apply cross-paradigm AI and decentralized data and value management to the problem. Proto-AGI technology is ideally suited to do learning and reasoning across multiple biological datasets pertaining to multiple levels of the organism — it’s a perfect match with holistic systems biology, which is what we need to take the last steps toward cracking aging. With a bit of luck Rejuve will be able to leverage its members’ data and passion for life to make some key contributions here.

We are also doing some immediate-term stuff with Rejuve, using AI to process signals from medical smartphone peripherals to identify infections like COVID at the presymptomatic stage. This is both something useful right now — it can save a lot of lives — and part of the same basic tech Rejuve will use to analyze its members’ body states toward discovery of new longevity therapies.

Question: Is it realistic to believe that these startup corporations such as True AGI and Rejuve can generate enough profits to cover the massive development costs for AGI?

I don’t believe that the development costs for AGI necessarily need to be massive. I wrote a blog post a couple of years ago in which I speculated that AGI might be developed for as little as $25 million — in truly dedicated, focused funding oriented just toward AGI and not other related projects. I still think this seems about right. Obviously this is a lot of money for the average person, but it’s not huge by the standards of the tech industry.

It might even be achievable much more cheaply than that. Perhaps if I had exclusively focused on coding AGI myself for several decades — instead of dividing my time between AGI and a lot of other related things like bio-AI, decentralized AI platforms, etc. — we would have AGI now. So far for my whole career I have been working on AGI around the edges of other projects, because it’s these other related projects that have gotten funded. Some of these other projects have been successful, some less so, but unfortunately none has yielded a big enough windfall to enable me to just fund a reasonable-scale AGI R&D project on my own.

I’m not complaining — I’ve gotten to work on and lead a bunch of really exciting projects, and I’ve gotten to spend a fair fraction of my own time on AGI theory and prototyping. Compared to many humans on the planet I’ve had an amazingly fortunate situation. But in spite of decades of related work and the current increase in excitement about AGI, I haven’t yet managed to pull together a really well resourced AGI project like, say, the OpenAI or DeepMind guys have.

To answer your question directly though — I think the role of AI in the world economy is utterly different than it was 20 or even 5 years ago. Right now, in 2020 and going forward, is it possible to fund revolutionary AGI development via providing commercial value with related advanced AI and proto-AGI tools? Absolutely. The tech has matured, but the business world has also matured, so it’s just way easier to sell and integrate AI solutions. Basically the time for AGI has now come — just as 5-10 years ago the time for deep neural nets had finally come.

Hardware resources required for human-level AGI are hard to estimate accurately until more of the AGI software work is complete, but I think with the right organizational models one can obtain hardware resources via partnerships and collaborations without giving up control of the AGI.

Question: You’ve mentioned the semiconductor startup Graphcore and their AI chips. Specifically, you’ve argued that graphing the computational functions of OpenCog’s algorithms into hardware could yield significant performance improvements. How much would it cost to create such specialized chips, and what performance increases would result from such chips?

Yeah — at a rough guess, the OpenCog architecture could probably be improved by 10x-100x by incorporating bespoke Application Specific Integrated Circuits (ASICs) oriented towards the types of graph processing it does most heavily. Graphcore is awesome but is currently oriented more toward floating-point graph operations than toward the discrete logical graph operations that OpenCog uses more heavily. I am fairly confident that within 5 years or so, an ASIC geared more strongly towards such discrete graph operations will become available. The cost of making such a chip is considerably less now than it would have been a decade ago, but is still beyond the current resources of SingularityNET or OpenCog. At this point my plan is to develop the software first, and have the optimized hardware come later.

There are so many things like this that clearly would smooth and accelerate progress toward beneficial AGI but that are getting worked on far too slowly by the tech industry, because global tech development is currently driven by short-term profit for large corporations, and secondarily by national security and hegemony considerations on the part of governments — not by the quest to maximize general intelligence or the beneficialness of AI technology, nor by the quest to maximize human good.

Question: OpenAI’s GPT-3 has recently been unveiled and garnered a lot of excitement. Do you think that GPT-3 has any potential to fundamentally advance the field of AI?

The major advance in that field was a program that came from Google called Bidirectional Encoder Representations from Transformers (BERT). BERT is a natural language engine published by Google in 2018. GPT-3 is a variation on that developed by OpenAI. GPT-3 allows massive neural nets to do a variety of tasks poorly. But GPT-3 still lacks any fundamental semantic model, so it tends to spew out garbage. I wrote an article on GPT-3 describing its profound limitations. But even though GPT-3 does not appear to have really broad commercial applications, and is not on a path towards AGI, it is still an impressive invention. I would have been proud to have developed it.

Question: AutoML has also garnered considerable attention recently for its ability to automatically generate code. Does AutoML constitute a major AI advance?

AutoML — use of ML to configure and adapt and learn ML methods — does represent a major AI advance. The underlying concept isn’t new at all — we used to call it meta-learning — but with the vastly greater computational resources now available this technology is starting to take off.

Most AutoML today is just using ML to tune the parameters of other ML algorithms — which is definitely a big improvement over having humans do all the parameter tuning, but doesn’t go far enough where AGI is concerned.
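The simplest form of this, a search procedure choosing the hyperparameters of another learner, fits in a few lines. The Python sketch below is a hedged illustration, not any particular AutoML product: it uses plain random search (one of the most basic tuning strategies, rather than a learned tuner) to pick the learning rate of a gradient-descent fit on hypothetical toy data. The data, the function names, and the log-uniform search range are assumptions.

```python
import random
from typing import List, Tuple

TRAIN = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # hypothetical toy data, y = 2x
VALID = [(5.0, 10.0), (6.0, 12.0)]


def train_and_score(lr: float, steps: int = 50) -> float:
    """Inner learner: fit y = w * x by gradient descent, then return validation MSE."""
    w = 0.0
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in TRAIN) / len(TRAIN)
        w -= lr * grad
    return sum((w * x - y) ** 2 for x, y in VALID) / len(VALID)


def random_search(n_trials: int = 20, seed: int = 0) -> Tuple[float, float, List[Tuple[float, float]]]:
    """Outer loop: sample learning rates log-uniformly in [1e-4, 1] and keep the best one."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-4, 0)
        trials.append((lr, train_and_score(lr)))
    best_lr, best_loss = min(trials, key=lambda t: t[1])
    return best_lr, best_loss, trials


if __name__ == "__main__":
    lr, loss, _ = random_search()
    print(f"best learning rate {lr:.4g}, validation MSE {loss:.3g}")
```

Learning rates that are too large make the inner fit diverge, and ones that are too small barely move `w` in 50 steps; the outer search discovers the usable range without a human hand-tuning it, which is exactly the limited kind of automation described above.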

But more advanced forms of self-programming are maturing fast too. E.g. evolutionary algorithms have been capable of writing fairly sophisticated algorithms, e.g. sorting programs, for quite some time. Recently researchers have extended this to ML tools that configure neural nets for you automatically, automatically synthesize ML algorithms, etc.
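A classic example of evolved sorting programs is the evolution of sorting networks, fixed sequences of compare-and-swap steps. The Python sketch below is a deliberately small illustration of that idea, not a reconstruction of any specific published system: a (1+1) evolutionary loop mutates a list of comparators and keeps a child whenever it sorts at least as many test inputs. Fitness uses the 0-1 principle (a comparator network sorts every input if and only if it sorts every 0/1 input). The mutation probabilities, generation count, and function names are arbitrary, hypothetical choices.

```python
import itertools
import random
from typing import List, Sequence, Tuple

Network = List[Tuple[int, int]]  # comparators (i, j) with i < j


def apply(network: Network, values: Sequence[int]) -> List[int]:
    v = list(values)
    for i, j in network:  # compare-exchange: smaller value ends up at position i
        if v[i] > v[j]:
            v[i], v[j] = v[j], v[i]
    return v


def fitness(network: Network, n: int) -> int:
    # 0-1 principle: count how many of the 2**n binary inputs come out sorted.
    return sum(apply(network, bits) == sorted(bits)
               for bits in itertools.product((0, 1), repeat=n))


def random_comparator(rng: random.Random, n: int) -> Tuple[int, int]:
    i, j = sorted(rng.sample(range(n), 2))
    return (i, j)


def mutate(network: Network, rng: random.Random, n: int) -> Network:
    child = list(network)
    roll = rng.random()
    if roll < 0.4 or not child:
        child.insert(rng.randrange(len(child) + 1), random_comparator(rng, n))
    elif roll < 0.7:
        child[rng.randrange(len(child))] = random_comparator(rng, n)
    else:
        del child[rng.randrange(len(child))]
    return child


def evolve(n: int = 4, generations: int = 2000, seed: int = 0) -> Tuple[Network, int]:
    rng = random.Random(seed)
    best: Network = []
    best_fit = fitness(best, n)
    for _ in range(generations):
        child = mutate(best, rng, n)
        child_fit = fitness(child, n)
        # Accept equal-fitness children so the search can drift across plateaus.
        if child_fit >= best_fit:
            best, best_fit = child, child_fit
        if best_fit == 2 ** n:
            break
    return best, best_fit


if __name__ == "__main__":
    network, score = evolve(n=4)
    print(len(network), "comparators; sorts", score, "of", 2 ** 4, "binary inputs")
```

Note that the evolved "program" here was fully specified by a precise fitness test; the harder step raised in the next paragraph, turning vague goals into such a test, is not automated at all.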

Ironically, though — translating a vague set of goals to a precise set of requirements is more difficult for an AI than translating a precise set of requirements into usable code. Making vague requirements precise may be an AGI-hard problem — but writing out requirements very precisely is slow and difficult for humans. So for this reason, it seems that automatic software program generation may have relatively limited impact until we get closer to AGI with its ability to make the vague precise. Coming up with an algorithm based on a precise spec is not most of what actual software developers do…

Anyway, although it doesn’t appear that standard current AutoML tech will fundamentally move the bar regarding AGI, it is plausible it will prove to be an enabling technology towards AGI — and it’s clear that more advanced forms of meta-learning and self-programming are going to be critical for AGI.

Question: Many people envision AGI evolving in a manner similar to biological evolution – from insects to rats to dogs to chimps to humans. Why don’t we have dog-level AI yet?

A dog without a body would not be all that interesting from an AI perspective, since so much of the canine’s brain is geared towards responding to sensory inputs and driving motor actions. If one could create a robot with a dog’s body and connect an AI to its sensors and actuators, that could advance AI and AGI greatly — but it would also be quite a tremendous pursuit in terms of hardware engineering, requiring multiple coordinated breakthroughs in robotics…

It would undoubtedly be beneficial to build a robot baby, but we don’t yet have the technology to make humanlike skin, humanlike whole-body holistic movement and response, and so forth. A sense of touch, and kinesthesia, are vital to human perception and development — among so many factors. A baby is also largely about its body, and talking about a virtual AI baby without a somewhat baby-like body verges on nonsensical.

So we are taking a different path, although if we are not successful with our current approaches we may eventually adopt a more embodiment-focused paradigm. I think embodiment is really valuable for AGI, but we’re now looking at developing core cognitive algorithms in a non-embodiment-centered way and then embedding them in various sorts of bodies so they can learn what those bodies have to offer.

Question: You wrote five years ago of stages of AGI development. In the initial “V2” stage, you would show a compelling demo of AGI’s potential. In the subsequent “Sputnik” stage, you planned on demonstrating a robot exhibiting clear sentience. In the “Apollo” stage, you would have true human-level AI. In 2015, you expressed confidence that the V2 stage might be reached by 2020. Why are you now predicting that the V2 stage could be reached by 2025?

Yeah — V2 meant the analogue of the V2 rocket, the first system that made clear space flight was going to be possible using basic rocketry. So the AGI V2 would be the first proto-AGI system that makes abundantly clear AGI is on the way.

I spent a bunch of 2016 talking to high-net-worth individuals, seeking philanthropic donations for developing beneficial AGI based on the OpenCog design. The aim was to get enough donation money to build the V2 of AGI, in the form of a humanoid robot with a toddler-like general intelligence.

The short of it is, I didn’t succeed, though I had a lot of interesting conversations. So after that failed fundraising push, I ended up putting more time into the Sophia robot, and then into founding SingularityNET and developing a decentralized AI platform — which I think can be extremely valuable but doesn’t in itself solve the core scalable-AGI-algorithm problems that I’m aiming to solve with OpenCog Hyperon.

Anyway, the projections you’re citing from 2015 were basically predicated on the success of my 2016 push for donations to fuel that stage of OpenCog development.

Being older now and hopefully slightly wiser, I have mostly given up on the philanthropic route — it would seem human altruism and generosity, while very real phenomena, are simply not rational nor visionary enough to enable funding a beneficial-AGI effort via donations.

So now the True AGI spinoff is focused on developing basically that same V2 demo I talked to you about in 2015, but in the context of a for-profit company. The core AGI code — OpenCog Hyperon — will be open source but there will also be some highly valuable proprietary tooling enabling use of Hyperon to control social robots, virtual smartphone assistants, and other intelligent devices. So we are appealing now to a mix of profit motive and deeper motives, in pulling TrueAGI together.

OpenAI also developed as an organization with a mix of altruism, open source and commercial goals — but I don’t think they managed to synergize these in a really effective way. My colleagues and I have put a lot more thought into the organizational and community architecture as well as into the AGI architecture, and I think we are now at a point where we can advance rapidly on the commercial applications, open source development AND core R&D aspects, with all these aspects reinforcing each other cooperatively. And once the AGI has advanced far enough, you’re going to see the decentralized blockchain-based infrastructure we’ve built in SingularityNET start playing an extremely critical role here….

And we have made a lot of related progress in the last 5 years, even though we haven’t done what I was hoping to do had that 2016 donation push succeeded.

I have a far stronger AGI team working with me now than in 2015 — some fantastic old-timers who have been working with me on AGI prototypes and ideas for 1-2 decades, plus an extraordinary team led by Alexey Potapov in St. Petersburg…. We have more of the reasoning and learning algorithms needed for AGI worked out and prototyped than we did in 2015. We have better software tools at our disposal now, some (like the SingularityNET platform) that we’ve built ourselves, and a whole bunch from the broader community. The business ecosystem is more mature, making it more viable to create a business like TrueAGI.

Once we have a V2 demo — meaning a working system that demonstrates clearly we are on the path to AGI — then the subsequent stages should come quickly after. There is more reason than ever to be confident of AGI development in the next decade – AGI will be developed much faster than most people expect. Honestly my colleagues and I have had the needed ideas for beneficial AGI for quite some time — but now that the world is starting to catch up to some aspects of our thinking, I am optimistic that in this next phase we are going to be able to finally move our practical implementations forward at the scale and pace they require to show the dramatic success they are capable of. OK, I admit I tend to be on the optimistic side — but I think that’s part of what it takes to create the greatest revolution in the history of humanity!
