#Nightshade

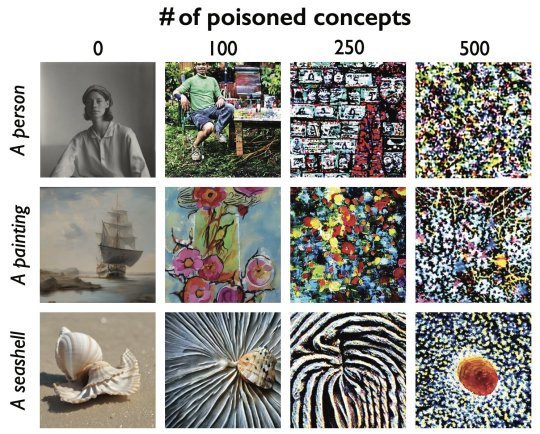

Good news, fellow artists! Nightshade has finally been released by the UChicago team! If you aren't aware of what Nightshade is, it's a tool that helps poison AI datasets so that the model "sees" something different from what an image actually depicts. It's the same team that released Glaze, which helps protect art against style mimicry (aka those finetuned models that try to rip off a specific artist).

As they show in their paper, even a hundred poisoned concepts make a huge difference.

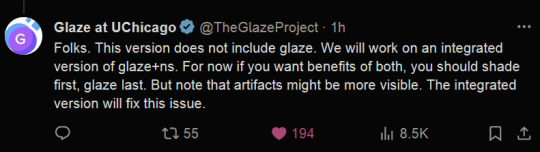

(Reminder that glazing your art is more important than nightshading it, as they mention in their tweets above, so when you're uploading your art, try to glaze it at the very least.)

#anti ai#anti ai art#nightshade#artists on tumblr#posting on tumblr for awareness!#fuck ai art#ai is theft

91K notes

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless—dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information was scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago, who led the team that created Nightshade, says the hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.

Zhao’s team also developed Glaze, a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.

Continue reading article here

#Ben Zhao and his team are absolute heroes#artificial intelligence#plagiarism software#more rambles#glaze#nightshade#ai theft#art theft#gleeful dancing

22K notes

Good.

11K notes

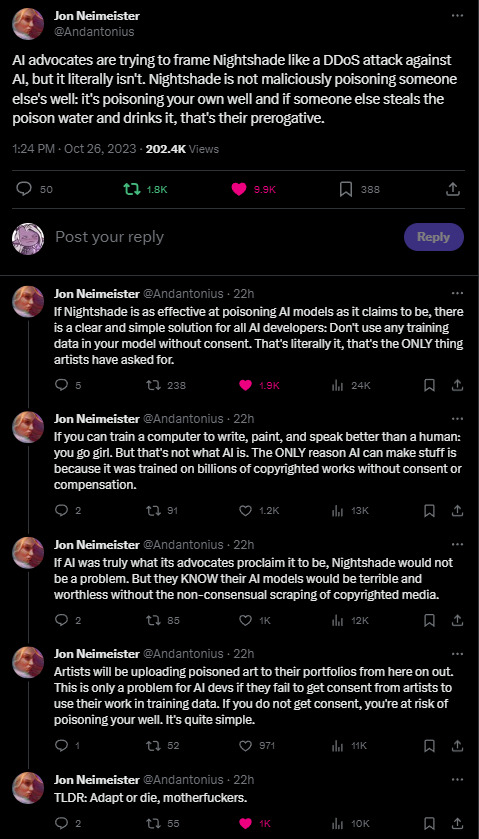

I just have to share this beautiful thread on twitter about AI and Nightshade. AI bros can suck it.

16K notes

DEAR ARTISTS, NIGHTSHADE IS OUT!

Get the best means and only acceptable way to be toxic online. :D The download might take a bit of time right now, a LOT of people want the online-arsenic~.

https://nightshade.cs.uchicago.edu/

Have a fantastic day~! <3

12K notes

THE NEXT PERSON WHO SAYS IT DOESN'T WORK GETS PUBLICLY EXECUTED VIA HAMMERCAR (check reblogs for further info)

"what's it like to use nightshade/glaze?"

so based on my own experience I thought I'd make this more transparent since I know a lot of people hesitate to take action on some things if they do not know Exactly what happens. it's me i'm people. So;

Nightshade takes about 30 minutes on its fastest setting. The end result tends to look like mild jpeg artifacting, very slightly creased paper, or just brush texturing. Looking at it normally, it is undetectable. Glaze is very visually similar, given the strategy, except that Glaze's longest time setting is 5 minutes.

You put in a file, select how much you want it affected and for how long you want it to render. For Nightshade, you also attach a tag to it, that way AI finds what it's looking for with an associated word. You select a folder for the final result to save to, then hit run.

It takes a lot of GPU/CPU. The fans on my laptop sound a bit like I'm running Minecraft, and it refuses to run if you have too many programs open. I could run Youtube and Nightshade at the same time, but Youtube did Not like it. Best to just take a break while you let it do its thing. Run Nightshade before you go out or something.

It does NOT like transparent png backgrounds. Makes me wonder how AI does with 'em. Anyways, running a backgroundless drawing through Glaze and Nightshade respectively makes it turn out like this:

The creasing effect is more noticeable, and it adds strange blocky black and white backgrounds to it. If you want good results for your time, be that less than 1 minute or 180 minutes, consider getting rid of the transparency.
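For anyone curious what "getting rid of the transparency" means under the hood, here is a rough sketch of flattening see-through pixels onto a solid white background. It is only an illustration of standard alpha compositing, not how Glaze or Nightshade handle files, and the names `flatten_pixel` and `flatten_image` are made up for this example. In practice you would just export a flattened copy from your art program.

```python
# Illustrative sketch only: hypothetical helpers, not part of Glaze or Nightshade.
# Pixels are (R, G, B, A) tuples with every channel in the range 0-255.

def flatten_pixel(rgba, background=(255, 255, 255)):
    """Blend one RGBA pixel over an opaque background colour."""
    r, g, b, a = rgba
    # Standard "over" compositing, done in integers with rounding:
    # out = (colour * alpha + background * (255 - alpha)) / 255
    return tuple(
        (c * a + bg * (255 - a) + 127) // 255
        for c, bg in zip((r, g, b), background)
    )


def flatten_image(pixels, background=(255, 255, 255)):
    """Flatten a 2D list of RGBA pixels into a 2D list of opaque RGB pixels."""
    return [[flatten_pixel(p, background) for p in row] for row in pixels]


if __name__ == "__main__":
    # A tiny 1x3 "image": fully transparent, half transparent black, opaque colour.
    image = [[(0, 0, 0, 0), (0, 0, 0, 128), (10, 20, 30, 255)]]
    print(flatten_image(image))
```

Fully transparent pixels come out as the background colour and fully opaque pixels are left alone, so the drawing itself does not change; only the empty area gets filled in.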

I would post a before and after picture of a Nightshaded piece but of course, I would like to post exclusively poison on this site.

As one last note, it took me a lot of effort to find where you're actually supposed to download these tools, so Glaze is here and Nightshade is here. Overall I highly recommend using them if you can. Don't let AI run you off of your sites: run the AI out yourself.

7K notes

Hey. Artist that only has a phone or a simple computer. Did you know you can still Glaze your art??

While Glaze was initially computer-only, the team learned that many artists either have a computer not powerful enough to run glaze or don't have a computer at all. SO they've made WebGlaze!! (Read about it and get it here.) Basically you send over your art and they Glaze it over on their servers that are strong enough to do it for you. And yes, it's still free.

If you have a computer that runs the original Glaze program, it is highly recommended by the Glaze team that you continue to use it and not the website.

While the servers are very secure, it is still ideal to keep the unglazed art on your end only, and it will likely be faster on your own computer anyway since there's only your art being Glazed, unlike the servers which are glazing several people's at once. Currently I believe there is no web version of Nightshade, but they are working on adding it to WebGlaze last I heard. Check the Nightshade FAQ here for updates on that :)

Please feel free to reblog, I know there's a lot of artists out there without access to powerful CPUs and GPUs that would like to protect their art as well :)

3K notes

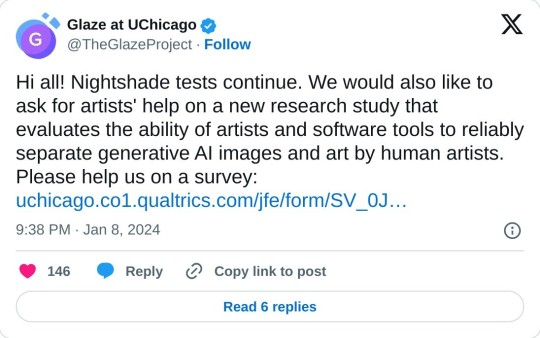

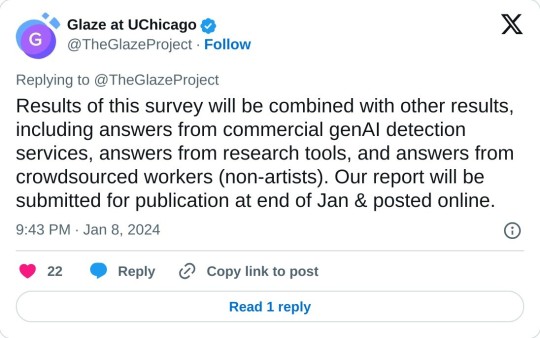

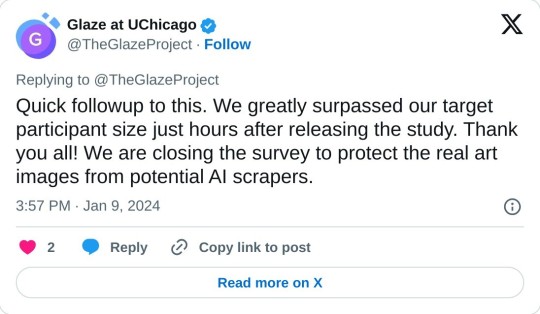

The Glaze Team at University of Chicago is conducting a survey which evaluates the ability of artists and software to distinguish between AI generated images and human made artwork

Survey link here!

spread the word!

EDIT: As some users have already pointed out in the tags, opening the images in new tabs to zoom in will reveal whether they're real or AI generated in the URL. Proceed with caution. If you accidentally spoiled yourself just say you've seen the image before and move on to a new one.

EDIT 2: Reblogs have been disabled as the survey has ended.

2K notes

Just a reminder with Tumblr getting ready to sell your data to AI: Nightshade and Glaze are two programs that poison your artwork (and possibly photography? Unclear) when it's added to AI datapools. Now would be a FANTASTIC time to go Glaze and otherwise poison your work so that if it gets collected against your will, it becomes super obnoxious to AI and has to be removed.

Nightshade download

Glaze download

Using both together is better than one alone!

1K notes

It's been months since I last used watercolor :"D

2K notes

HAPPY HOLIDAYS 2023!

2K notes

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to carefully selected "archetypal" images, ideally ones that were already produced by generative AI from a prompt for the generic concept to be attacked (which is what the authors did in their paper). Also, the image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, in order to attack a large model there would have to be many thousands of samples in the training data. Obviously if you have a webpage that you created specifically to host a massive gallery of poisoned images, that can be fairly easily blacklisted, so you'd have to have a lot of patience and resources in order to hide these enough so they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large scale use case for me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running through another resource heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like small fry in comparison to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists for whom that is a talking point hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than the massive corporate monoliths who are probably working to secure proprietary data sets, like I believe Adobe Firefly did. I don't like how these attacks concentrate the power up.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. With public data scraping, I guess that sort of thing is fair game.

Additionally, since making my first reply I've had a look at their website:

Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible for models to be created and trained without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to help counter the economic displacement without worker protection that is the real issue with AI systems deployment, but will exacerbate the problem of the benefits of those systems being more constrained to said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).

1K notes

These were originally meant for Valentine's day, but oh well

#lego monkie kid#monkie kid#lmk#monkey king#sun wukong#six eared macaque#liu er mihou#spider queen#zhizhu#mk#qi xiaotian#mei#long xiaojiao#red son#redson#eclipse twins#ri shi#yue shi#oc#Nightshade#spicynoodleshipping#chimerashipping#dragonfruitshipping#shadowpeach#shadowvenom#three bastardeers#sugarplumpoison#doodles#digital art#sai

703 notes

A Light Beckons

Watercolor On Black Cotton Paper

2022, 26" x 33"

Winter Cherries, Ashwagandha, Withania somnifera

Private Collection

#artists on tumblr#flowers#floral#art#watercolor#painting#nature#minimalism#orange#winter#winter cherry#garden#nightshade#wildflowes#plants#botany#artwork#autumn#fall#artblr#ashwagandha#wildflowers#withania#contemporary art#artist#original art#cottagecore#cottagecore aesthetic

3K notes

Nightshade and Knockout (COMMISSION)

Drawing I made for indenial394 of Knockout from Transformers Prime with Nightshade from Earthspark.

Thank you so much for commissioning me!

Commission Prices/Info Here

#tfp#maccadam#tfp fanart#transformers prime#transformersprime#transformers#transformers fanart#transformersart#destinymade#tfp knockout#knockout#transformers knockout#earthspark#tf earthspark#transformers earthspark#earthspark nightshade#nightshade

2K notes

out trick or treating

2K notes
