#Big Data Analytics Systems & Solutions

This article discusses how hotels can harness big data analytics to gain a competitive edge in their marketing, and the steps you can take right away to start using big data analytics in your hotel’s branding strategy.

#Big Data Analytics#phonesuite direct#phonesuite pbx#phonesuite dealers#PBX System#VoIP Phone#VoIP Advantages#Hotel Phone System#IP telephony#Ip Pbx System#hotel phone system#texas phone syste#Cloud Pbx System#Pbx System in Texas#VoIP Phone System#VoIP technology#Telephony solutions#PBX Communications#SIP Trunks#Voice call#Voice Calls#Modern Telephone#VoIP Telephone#hotel hospitality#SIP phones#hospitality communication#Voiceware PBX#innovative solutions

Java's Lasting Impact: A Deep Dive into Its Wide Range of Applications

Java programming stands as a towering pillar in the world of software development, known for its versatility, robustness, and extensive range of applications. Since its inception, Java has played a pivotal role in shaping the technology landscape. In this comprehensive guide, we will delve into the multifaceted world of Java programming, examining its wide-ranging applications, discussing its significance, and highlighting how ACTE Technologies can be your guiding light in mastering this dynamic language.

The Versatility of Java Programming:

Java programming is synonymous with adaptability. It's a language that transcends boundaries and finds applications across diverse domains. Here are some of the key areas where Java's versatility shines:

1. Web Development: Java has long been a favorite choice for web developers. Robust and scalable, it powers dynamic web applications, allowing developers to create interactive and feature-rich websites. Java-based web frameworks like Spring and JavaServer Faces (JSF) simplify the development of complex web applications.

2. Mobile App Development: Android, the most widely used mobile operating system in the world, has long relied on Java for app development, alongside Kotlin, which Google now recommends for new apps. Java's portability makes it a solid choice for creating Android applications that run on a wide range of devices.

3. Desktop Applications: Java's Swing and JavaFX libraries enable developers to craft cross-platform desktop applications with sophisticated graphical user interfaces (GUIs). This cross-platform compatibility ensures that your applications work on Windows, macOS, and Linux.

4. Enterprise Software: Java's strengths in scalability, security, and performance make it a preferred choice for developing enterprise-level applications. Customer Relationship Management (CRM) systems, Enterprise Resource Planning (ERP) software, and supply chain management solutions often rely on Java to deliver reliability and efficiency.

5. Game Development: Java isn't limited to business applications; it's also a contender in the world of gaming. Game developers use Java, along with libraries like LibGDX, to create both 2D and 3D games. The language's versatility allows game developers to target various platforms.

6. Big Data and Analytics: Java plays a significant role in the big data ecosystem. Apache Hadoop is written in Java, and Apache Spark runs on the Java Virtual Machine (JVM) and offers a Java API, so both can be used from Java code to process and analyze massive datasets. Java's performance makes it a natural fit for data-intensive tasks.

7. Internet of Things (IoT): Java's ability to run on embedded devices positions it well for IoT development. It is used to build applications for smart homes, wearable devices, and industrial automation systems, connecting the physical world to the digital realm.

8. Scientific and Research Applications: In scientific computing and research projects, Java's performance and libraries for data analysis make it a valuable tool. Researchers leverage Java to process and analyze data, simulate complex systems, and conduct experiments.

9. Cloud Computing: Java is a popular choice for building cloud-native applications and microservices. It is compatible with cloud platforms such as AWS, Azure, and Google Cloud, making it integral to cloud computing's growth.

Why Java Programming Matters:

Java programming's enduring significance in the tech industry can be attributed to several compelling reasons:

Platform Independence: Java's "write once, run anywhere" philosophy allows code to be executed on different platforms without modification. This portability enhances its versatility and cost-effectiveness.

Strong Ecosystem: Java boasts a rich ecosystem of libraries, frameworks, and tools that expedite development and provide solutions to a wide range of challenges. Developers can leverage these resources to streamline their projects.

Security: Java places a strong emphasis on security. Features like sandboxing and automatic memory management enhance the language's security profile, making it a reliable choice for building secure applications.

Community Support: Java enjoys the support of a vibrant and dedicated community of developers. This community actively contributes to its growth, ensuring that Java remains relevant, up-to-date, and in line with industry trends.

Job Opportunities: Proficiency in Java programming opens doors to a myriad of job opportunities in software development. It's a skill that is in high demand, making it a valuable asset in the tech job market.

Java is a versatile language that finds applications in web and mobile development, enterprise software, IoT, big data, cloud computing, and much more. Its enduring relevance and the multitude of opportunities it offers in the tech industry make it a valuable asset in a developer's toolkit.

As you embark on your journey to master Java programming, consider ACTE Technologies as your trusted partner. Their comprehensive training programs, expert guidance, and hands-on experiences will equip you with the skills and knowledge needed to excel in the world of Java development.

Unlock the full potential of Java programming and propel your career to new heights with ACTE Technologies. Whether you're a novice or an experienced developer, there's always more to discover in the world of Java. Start your training journey today and be at the forefront of innovation and technology with Java programming.

Unpredictable path of sales: Virtual Reality

A company's success often depends on the success of its new products. It is not only the sales and marketing teams that are hooked on new-product sales numbers; production capacity, supply chain planning, and working capital estimates also depend on them. Traditional methods such as conjoint analysis are available to test market adoption of a new product. However, getting an accurate forecast of a new product's sales volume is still a big challenge.

VR Game Changer :-

We have seen examples of products that looked very promising at launch but never achieved great success, for example the Tata Nano, Google Glass, and the Apple Newton (a personal digital assistant). Perhaps they were ahead of their time, or perhaps their makers simply could not gauge consumers' interest in the product's features and attributes. Times have changed: we now have Virtual Reality tools that can give us consumer insight into a product much more accurately than traditional methods of predicting product adoption. Research by Harz and team recommends a novel solution: having potential customers interact with the planned product using Virtual Reality. Combined with the right statistical model, VR-based interactions yield more reliable data for product analytics and improvement. With VR, consumers can experience a product in a much more immersive way and interact with a computer to generate a seamless flow of information.

Hurdles for implementation :-

The biggest hurdle is designing a highly immersive VR system for product testing. A three-dimensional, 360-degree VR experience can cost as much as $10,000 per minute, but a less immersive VR system can cost a fraction of this. Popular VR systems range in price from a $10 display that attaches to a smartphone to a few hundred US dollars. Though the cost may be a roadblock, the benefits are immense. The results obtained from VR product testing are the gold standard compared with traditional market research techniques. In some product-testing scenarios, VR-based outcomes have been observed to be more than 50% more accurate than traditional tests. The error in projecting post-launch sales was only 2% in VR-based models, far lower than the 40%-60% figure cited for traditional methods of product testing.
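To make the error figures above concrete, here is a minimal sketch of how a forecast error percentage could be computed. The function name and all sales numbers are hypothetical, chosen only to illustrate what a 2% error and a 50% error look like; they do not come from the research mentioned above.

```python
def forecast_error_pct(forecast: float, actual: float) -> float:
    """Absolute percentage error of a sales forecast relative to actual sales."""
    if actual == 0:
        raise ValueError("actual sales must be non-zero")
    return abs(forecast - actual) / abs(actual) * 100


# Hypothetical post-launch figures (units sold), for illustration only.
actual_units = 10_000
vr_forecast = 9_800           # assumed VR-based forecast
traditional_forecast = 5_000  # assumed traditional-method forecast

print(round(forecast_error_pct(vr_forecast, actual_units), 1))           # 2.0
print(round(forecast_error_pct(traditional_forecast, actual_units), 1))  # 50.0
```

With these made-up numbers, a forecast that misses by 200 units out of 10,000 is a 2% error, while one that misses by 5,000 units is a 50% error.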

When to use VR Decision :-

It is important to understand when to use VR-based models, rather than traditional methods, to predict a product's sales. For an FMCG product, for example, it is much cheaper to test-market sales by interacting with consumers in reality. The Procter & Gambles and Unilevers of the world, whose products cost much less, can afford real-life interactions with consumers. However, as we move up to consumer durables, and even more so to costly products like automobiles, where producing a prototype is much more expensive, VR-based product testing becomes much more useful. VR-based techniques are also well suited to complex products whose different attributes hold different value for consumers. Proper analysis of VR-based product testing and sales prediction will also help minimize the cost of establishing a product line and distribution, and will help in customizing product features.

In a nutshell: virtual is becoming real! At least in the arena of product testing.

Idol's MBTI Types pt 2

A short list of kpop idols and their likely MBTI types. I know most have taken a test by using 16personalities, but it does not actually use MBTI. This half is focused on judging function doms (Te, Ti, Fe, Fi).

These are just brief explanations as I did not want to make this post too long. I will provide more in depth analysis later.

Not an expert. Based on my opinion and observations. May change later.

Pt 1

___

Functions:

Ni (Introverted Intuition) Ne (Extroverted Intuition)

Te (Extroverted Thinking) Ti (Introverted Thinking)

Fe (Extroverted Feeling) Fi (Introverted Feeling)

Se (Extroverted Sensing) Si (Introverted Sensing)

___

ExTJ (Te - Pi - Pe - Fi)

Te doms are task and goal oriented, always seeking efficiency and control. ExTJs do whatever it takes to achieve their goals, even if it means disregarding their own needs and wants (inferior Fi). This gives them their cold heartless stereotype. Te doms are solution providers and advice givers rather than emotional comforters. They prefer to use established facts and systems to work with.

ENTJ (Te - Ni - Se - Fi)

SHINee Key, Loona Heejin, ENHYPEN Ni-Ki

ENTJs value efficiency and accomplishments, completing one goal after another to achieve their desired future. Almost everything they do is for achievement.

Key is very strategic and organized (Te-Ni). He planned his Bad Love album for years, making sure it absolutely matched his vision (Ni).

I am not too familiar with Heejin or Ni-Ki but I needed 2 more examples lol...And from reading a bit about them from others, they seem to utilize ENTJ's functions well.

___

ESTJ (Te - Si - Ne - Fi)

TWICE Jihyo, NCT Doyoung, (G)I-DLE Soyeon

ESTJs are the enforcers and organizers. They have set routines and procedures they prefer for everyone to follow. If needed, they can be creative with solutions for new/unusual situations since they're so determined to push through things.

Jihyo uses her Te to get tasks done. She's always been a natural at organizing her environment and pushing others to reach goals.

Doyoung is straightforward and honest. He always decides what's best for the situation and for the team. He has good leadership skills, which is natural for many Te doms.

Soyeon seems to be very goal oriented, pushing herself and others to achieve new and big things.

___

IxTP (Ti - Pe - Pi - Fe)

Ti doms are analytical and logical, using subjective knowledge to decide what course of action makes the most sense. They look for consistencies in information to put into their own set of data. It's like piecing a puzzle for them. They have a tendency to be honest and blunt, as Ti does not see the need to sugarcoat. This is also due to their inferior Fe - they may want social harmony but do not know how to obtain it. They can also struggle to express their own emotions as they find it awkward. They may also fear the conflict it can bring, which is something Fe dislikes.

INTP (Ti - Ne - Si - Fe)

ATEEZ Yeosang, BTS Jin, ONEUS Seoho

INTPs are analytical and logical, with Ne trying to come up with better, more polished ideas. They have a stored system for all the ideas and information they have analyzed. They like to rearrange their framework to what is the most logical.

Yeosang is known for his bluntness and even the members are scared of it. He has the wit and pattern recognition of Ti - Ne. My analysis of him.

Jin has always been quite private and internalized, seemingly a bit awkward in social settings. He's good at deduction and good at such games. He also does not give up easily when arguing.

Seoho has an interest in sciences, was even planning to work in the field. He's blunt and straightforward and does not care enough to contribute to a peaceful environment.

___

ISTP (Ti - Se - Ni - Fe)

ONEUS Leedo, NCT Jeno, GOT7 Mark

ISTPs are often very independent and sensory seeking. They don't really have a preference for people, most often choosing to do activities alone. It allows them to ponder without any interruption.

Leedo does not seem to care much for social harmony - he's always honest and does not hesitate to get mad at his members.

Jeno enjoys complex things such as science and sensory activities. He has said he has no interest in others, showing inferior Fe.

Mark is very rational and logical, always thinks through his decisions. He's quite reactive and does not mind conflict, especially when he was younger (Se-Fe).

___

ExFJ (Fe - Pi - Pe - Ti)

Fe doms seek social harmony and place importance on others' values. They want to bring people together in order to achieve a shared goal. They generally put other people before themselves. Fe doms can influence others' feelings - if not careful, they can be manipulative. They may have a hard time expressing their own opinions and arguments, fearing conflict and/or not feeling confident enough to word their analysis correctly.

ENFJ (Fe - Ni - Se - Ti)

SF9 Zuho, BTS Jimin, DAY6 Sungjin

ENFJs are aware of their emotional environment, always putting a lot of focus into others' needs and wants. Their observation of others' feelings can help them use the right words and actions to reach a set goal. They want to be a good influence and make meaningful change for people.

Zuho clearly values being with people, easily connecting with others (Fe). He has the natural charm that is common with Fe doms.

Jimin definitely cares a lot about others' perception of him and even wants to make meaningful change for others (Fe - Ni).

Sungjin is quite people oriented, even saying he wanted to be a counselor. This shows a preference for connecting with and understanding others' emotions and minds (Fe-Ni).

___

ESFJ (Fe - Si - Ne - Ti)

SF9 Jaeyoon, TWICE Dahyun, BTS J-Hope

ESFJs are aware of others' feelings and strive for connection. Fe - Si wants harmony and peace, with Si keeping track of valuable information about people. This helps to keep everyone and everything in check.

Jaeyoon is very caring and attentive towards others, gains energy from people. It's why and how he is very active on socials, always connecting with friends and fans. My analysis of him.

Dahyun is great at using her Fe - Ne to be an entertainer. She's also conflict avoidant and prefers to go with the flow (Fe - Si).

J-Hope is a social chameleon who naturally takes on the energy of others (Fe). He's very detail oriented and perfectionistic due to his Si.

___

IxFP (Fi - Pe - Pi - Te)

Fi doms are very internalized and private. They're concerned with authenticity, placing importance on their own values and morals. They want to be genuine and true to themselves. They deeply value individualism. Because of this, they can be honest and strongly opinionated. They're more concerned with their own feelings and identity as they're the only things IxFPs feel confident controlling.

INFP (Fi - Ne - Si - Te)

SF9 Hwiyoung, DAY6 Wonpil, P1Harmony Soul

INFPs are often considered daydreamers due to their internalized nature of Fi and idealism of aux Ne. They're prone to reminiscing about their identities and experiences. These experiences serve as lessons though, shaping their characters and beliefs.

Hwiyoung has admitted to being very sensitive and emotional, is strongly opinionated (Fi). He also tends to have his head in the clouds (Ne).

Wonpil places a lot of value on authenticity and is able to create ideas from connections in the environment.

Soul is...unique and is not shy to express it (Fi). He also has some out of the box ideas sometimes (Ne).

___

ISFP (Fi - Se - Ni - Te)

SF9 Youngbin, ATEEZ San, BLACKPINK Jennie

ISFPs are quite down to earth and realistic due to auxiliary Se. They still have a focus on expressing their individuality (Fi) and will often show this through physical means (Se). This can be seen through fashion, different art forms, performing, etc. With aux Se, they're a lot quicker to react and pursue things without hesitation. Passionate people, nothing will stop them from getting what they want.

Youngbin is known for his passionate nature, always giving pep talks with his members. He has even made them stay up very late despite having busy schedules just bc he had so much fiery energy lol. My analysis of him.

San always talks about being genuine and authentic. No matter what the person's status is, he always judges them by their heart, which is very Fi.

Jennie has stated that staying true to herself is the most important thing for her. She also expresses her style through her fashion, makeup, and music.

___

Individual idol profile analysis list

___

Other blog:

Kpop + astrology side blog @rainy-astrology

#mbti#kpop#sf9#typology#cognitive functions#myers briggs type indicator#myers briggs#ateez#shinee#twice#loona#enhypen#bts#nct#(g)i dle#got7#oneus#day6#entj#estj#enfj#esfj#infp#isfp#intp#istp#i hope those 2 anons see this 🫶💙 spite fueled this post#im sure they love the esfj portion#Kpop mbti

Reasons To Prefer Operational Analytics Software For Enterprise Solutions

For every business, operational analytics offers a way to reach a new level of integration between data and day-to-day operations. Whether you are an owner or a member of staff, operational analytics software helps you put your data to work. It should make the data easy to secure and genuinely useful for gathering the information your operations depend on. Good software gives you a clear-cut view of what is happening and helps you run the business successfully.

It can also handle big data and analyze it thoroughly with a business-focused approach. The customer experience improves as it is aligned with the company's operational goals, and because performance stays high, work gets done in less time.

Why Does An Enterprise Or Business Need Operational Analytics Software?

Enhance Performance And Margins

Most businesses today aim to meet their goals while keeping costs down. Operational analytics makes it easier for business owners to streamline their processes, which saves money and improves efficiency. Using data to improve operations can in turn increase profits and provide customer analytics tailored to your requirements. Higher profits then help the business grow.

Better Decision Making

Good decisions keep an organization moving steadily toward its goals. Operational analytics plays an important role here: it surfaces results as they come in, so decisions can be grounded in business value. Depending on their requirements, teams can use operational analytics to handle day-to-day issues and to explore new solutions.

Competitive Advantage And Handling Inefficiencies

Enterprise operational analytics offers many benefits. It helps you understand how efficient your processes are and focuses attention on where analysis adds value. Combining customer data with operational data can uncover savings that can be reinvested in more profitable pursuits. Evaluating the data against the operational process, and examining both the results and the time taken, shows where inefficiencies lie and makes each process more valuable.

Gain 100% Customer Satisfaction

Furthermore, enterprise operational analytics gives business owners access to counterintuitive insights and helps them improve the customer experience. Data can be accessed in line with the organization's goals and used to address customer needs. Operational analytics also helps identify the root cause of performance issues so that they can be fixed in the right way. As a result, the business gains more insight into its customers and can make decisions with customer satisfaction in mind.

Streamlining Data

Security is a primary concern for enterprise-level businesses. Data should be validated and covered by backup and recovery so that it stays secure. Operational analytics software plays a vital role here, managing data efficiently and helping to spot system problems before they cause trouble. You can easily gain customer insights, and employees will be more satisfied in their work.

#enterprise operational analytics#operational analytics#operational analytics software#it operational analytics#advanced operational analytics

Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that cater to the specific requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
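The core idea behind this kind of stream processing, grouping events into fixed time windows and aggregating each window, can be sketched in plain Python. This is not Azure Stream Analytics code (the service is queried with its own SQL-like language); the event shape, the `tumbling_window_avg` function, and the 10-second window are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Iterable


def tumbling_window_avg(
    events: Iterable[tuple[float, float]], window_seconds: int = 10
) -> dict[int, float]:
    """Average event values per fixed, non-overlapping time window.

    Each event is (timestamp_in_seconds, value). The result maps each
    window's start time to the mean of the values that fell inside it.
    """
    sums: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for timestamp, value in events:
        # Round the timestamp down to the start of its window.
        window_start = int(timestamp // window_seconds) * window_seconds
        sums[window_start] += value
        counts[window_start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}


# Hypothetical sensor readings: (seconds since start, temperature)
readings = [(1, 20.0), (4, 22.0), (12, 30.0), (19, 34.0), (25, 18.0)]
print(tumbling_window_avg(readings))  # {0: 21.0, 10: 32.0, 20: 18.0}
```

A real streaming engine would emit each window's result as soon as the window closes instead of waiting for all events, but the grouping logic is the same.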

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed service for the open-source PostgreSQL database, designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers superior security, performance optimization through AI, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps

Classes: 180+ hours of live classes

Lectures: 300 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 67% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

Career Opportunities for Non-Tech Professionals in Data Science

The field of data science is not limited to technical professionals alone.

Here are just a few of the many career opportunities available for non-tech professionals in data science:

Data Analyst

Data analysts are responsible for collecting, cleaning, and analysing data. They use their skills to extract insights from data and help businesses make better decisions.

Non-tech professionals with strong analytical and problem-solving skills can also be successful in this role.

Business Analyst

Business analysts work with businesses to understand their needs and identify opportunities for improvement. They use data to help businesses make better decisions about their products, services, and processes.

Non-tech professionals with a strong understanding of business can be successful in this role. They should also be able to communicate effectively with technical teams.

Data Visualization Specialist

Data visualization specialists create visual representations of data. They use charts, graphs, and other visuals to help people understand complex data sets.

Non-tech professionals with a strong eye for design and an understanding of data can be successful in this role. They should also be able to use data visualization tools.

Data Engineer

Data engineers build and maintain the systems that collect, store, and process data. They work with a variety of technologies, including databases, cloud computing, and big data platforms.

While some data engineers have a strong technical background, many do not. Non-tech professionals with a strong understanding of data and systems can also be successful in this role.

Data Scientist

Data scientists are responsible for developing and using data-driven solutions to business problems. They use their skills in statistics, machine learning, and programming to extract insights from data and build models that can predict future outcomes.

Data scientists typically have a strong technical background, but there are now many programs that can help non-tech professionals learn the skills they need to become data scientists.

Here are some tips for non-tech professionals who want to pursue a career in data science:

Start by learning the basics of data science. This includes learning about statistics, machine learning, and programming. There are many online resources and courses that can help you with this.

Gain experience working with data. This could involve volunteering for a data science project, taking on a data-related internship, or working with a data science team at your current job.

Network with data scientists. Attend data science meetups and conferences, and connect with data scientists on LinkedIn. This will help you learn more about the field and build relationships with people who can help you in your career.

Don't be afraid to start small. You don't need to be an expert in data science to get started. Start by working on small projects and gradually build your skills and experience.

Surveillance Systems for Early Lumpy Skin Disease Detection and Rapid Response

Introduction

Lumpy Skin Disease (LSD) is a highly contagious viral infection that primarily affects cattle and has the potential to cause significant economic losses in the livestock industry. Rapid detection and effective management of LSD outbreaks are essential to prevent its spread and mitigate its impact. In recent years, advancements in surveillance systems have played a crucial role in early LSD detection and rapid response, leading to improved LSD care and control strategies.

The Threat of Lumpy Skin Disease

Lumpy Skin Disease is caused by the LSD virus, a member of the Poxviridae family. It is characterized by fever, nodules, and skin lesions on the animal's body, leading to reduced milk production, weight loss, and decreased quality of hides. The disease can spread through direct contact, insect vectors, and contaminated fomites, making it a major concern for livestock industries globally.

Surveillance Systems for Early Detection

Traditional methods of disease detection relied on visual observation and clinical diagnosis. However, these methods can delay the identification of LSD cases, allowing the disease to spread further. Modern surveillance systems leverage technology to enhance early detection. These systems utilize a combination of methods, including:

Remote Sensing and Imaging: Satellite imagery and aerial drones equipped with high-resolution cameras can monitor large livestock areas for signs of skin lesions and changes in animal behavior. These images are analyzed using machine learning algorithms to identify potential LSD outbreaks.

IoT and Wearable Devices: Internet of Things (IoT) devices such as temperature sensors, accelerometers, and RFID tags can be attached to cattle. These devices continuously collect data on vital parameters and movement patterns, allowing for the early detection of abnormalities associated with LSD infection.

Data Analytics and Big Data: Surveillance data from various sources, including veterinary clinics, abattoirs, and livestock markets, can be aggregated and analyzed using big data analytics. This enables the identification of patterns and trends that may indicate the presence of LSD.

Health Monitoring Apps: Mobile applications allow farmers and veterinarians to report suspected cases of LSD and track disease progression. These apps facilitate real-time communication and coordination, aiding in early response efforts.
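As a rough illustration of the wearable-sensor idea above, the sketch below flags body-temperature readings that sit far above an animal's own baseline. The function name `flag_fever_readings`, the z-score threshold, and all temperature values are hypothetical choices made for this example, not part of any specific surveillance product.

```python
from statistics import mean, stdev


def flag_fever_readings(readings, baseline, z_threshold=3.0):
    """Return indices of readings far above the baseline mean.

    A reading is flagged when it lies more than z_threshold sample
    standard deviations above the mean of the baseline readings.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A flat baseline gives no scale for comparison, so flag nothing.
        return []
    return [
        index
        for index, value in enumerate(readings)
        if (value - mu) / sigma > z_threshold
    ]


# Illustrative values only (degrees Celsius), not real field data.
baseline_temps_c = [38.4, 38.6, 38.5, 38.7, 38.5, 38.6]
todays_temps_c = [38.5, 38.6, 40.9, 38.7]

flagged = flag_fever_readings(todays_temps_c, baseline_temps_c)
print(flagged)
```

A real system would likely combine several signals (movement, feeding behavior, location) rather than rely on a single threshold, but the same compare-against-baseline pattern applies.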

Rapid Response and LSD Care

Early detection is only half the battle; a rapid and coordinated response is equally crucial. Surveillance systems are not only capable of identifying potential outbreaks but also play a pivotal role in implementing effective LSD care strategies:

Isolation and Quarantine: Detected infected animals can be isolated and quarantined promptly, preventing the further spread of the disease. Surveillance data helps identify high-risk areas and individuals for targeted quarantine measures.

Vaccination Campaigns: Based on surveillance data indicating disease prevalence in specific regions, targeted vaccination campaigns can be initiated to immunize susceptible animals and halt the spread of LSD.

Vector Control: Surveillance systems can track insect vectors responsible for transmitting the LSD virus. This information enables the implementation of vector control measures to reduce disease transmission.

Resource Allocation: Effective response requires proper resource allocation. Surveillance data helps authorities allocate veterinary personnel, medical supplies, and equipment to affected areas efficiently.

Challenges and Future Directions

While surveillance systems offer promising solutions, challenges remain. Limited access to technology, particularly in rural areas, can hinder the implementation of these systems. Data privacy concerns and the need for robust cybersecurity measures are also crucial considerations.

In the future, the integration of artificial intelligence (AI) and machine learning can further enhance the accuracy of disease prediction models. Real-time genetic sequencing of the virus can provide insights into its mutations and evolution, aiding in the development of more effective vaccines.

Conclusion

Surveillance systems have revolutionized the way we detect, respond to, and manage Lumpy Skin Disease outbreaks. The ability to identify potential cases early and respond rapidly has significantly improved LSD care and control strategies. As technology continues to advance, these systems will play an increasingly vital role in safeguarding livestock industries against the threat of Lumpy Skin Disease and other contagious infections. Effective collaboration between veterinary professionals, farmers, researchers, and technology developers will be key to successfully harnessing the potential of surveillance systems for the benefit of animal health and the global economy.

Data Engineering Concepts, Tools, and Projects

Organizations all over the world hold large amounts of data. If it is not processed and analyzed, this data does not amount to anything. Data engineers are the ones who make this data fit for analysis. Data engineering is the process of developing, operating, and maintaining software systems that collect, process, and store an organization's data. In modern data analytics, data engineers build data pipelines, which form the underlying infrastructure.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL, PostgreSQL, and NoSQL databases such as MongoDB or Apache Cassandra. Knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques, is also valuable.

Data engineering tools recommendations:

Data engineering relies on a variety of languages and tools to accomplish its objectives. These tools allow data engineers to carry out tasks like building pipelines and algorithms more easily and effectively.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

Amazon Redshift provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make it easier to interpret results. With its scalability and flexibility, it has become one of the go-to solutions for data engineering tasks.

2. BigQuery: Just like Redshift, BigQuery is a cloud data warehouse, in this case fully managed by Google. It's especially favored by companies that have experience with the Google Cloud Platform. BigQuery not only scales well but also has robust machine learning features that make data analysis much easier.

3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards.

4. Looker: An essential BI software, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed a LookML layer, which is a language for describing data, aggregates, calculations, and relationships in a SQL database. Spectacles is a newly released tool that assists in deploying the LookML layer, ensuring non-technical personnel have a much simpler time when utilizing company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large data sets. It also offers great distribution and runs easily alongside other distributed computing programs, making it essential for data mining and machine learning.

6. Airflow: With Airflow, workflows can be programmed and scheduled quickly and accurately, and users can monitor them through the built-in UI. It is the most used workflow solution, as 25% of data teams reported using it.

7. Apache Hive: Another data warehouse project on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. This language enables MapReduce tasks to be executed on Hadoop and is mainly used for data summarization, analysis, and query.

8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, and also makes the entire process much more manageable.

9. Snowflake: This cloud data warehouse has become very popular lately due to its capabilities in storing and computing data. Snowflake’s unique shared data architecture allows for a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science.

10. DBT: A command-line tool that uses SQL to transform data, DBT is the perfect choice for data engineers and analysts. DBT streamlines the entire transformation process and is highly praised by many data engineers.

Data Engineering Projects:

Data engineering is an important process for businesses to understand and utilize to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they are running optimally. There are many tools available for data engineers to use in their work, such as MySQL, SQL Server, Oracle RDBMS, OpenRefine, Trifacta, Data Ladder, Keras, Watson, and TensorFlow. Each tool has its strengths and weaknesses, so it's important to research each one thoroughly before recommending which ones should be used for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the volume of data ingested at high velocity is growing at an alarming rate. This creates challenges for companies regarding storage, analysis, and visualization.

Data Ingestion:

Data ingestion is the process of moving data from one or more sources to a target destination for further preparation and analysis. This target is generally a data warehouse, a specialized database designed for efficient reporting.
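A minimal sketch of that idea, assuming a CSV export as the source and an in-memory SQLite database standing in for the warehouse. The table name `readings`, the column names, and the function `ingest_csv` are all hypothetical and chosen only for illustration.

```python
import csv
import io
import sqlite3


def ingest_csv(csv_text, conn):
    """Parse CSV text and load its rows into a 'readings' table.

    Returns the number of rows loaded.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    # Convert each source row into the shape the target table expects.
    rows = [(row["sensor_id"], float(row["temperature_c"])) for row in reader]
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings (sensor_id TEXT, temperature_c REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


# Hypothetical source data; a real pipeline would read from files, APIs, or queues.
source = "sensor_id,temperature_c\na1,21.5\na2,22.0\n"
conn = sqlite3.connect(":memory:")
loaded = ingest_csv(source, conn)
print(loaded, "rows ingested")
```

In practice the target would be a warehouse such as the ones listed earlier, but the extract, convert, and load steps keep the same shape.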

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.
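As one possible starting point, the sketch below runs two common checks over a batch of records: required fields must be present, and a key field must be unique. The function `check_quality` and the sample records are hypothetical; dedicated data quality tools offer far richer rule sets.

```python
def check_quality(rows, required, unique_key):
    """Return a list of (row_index, problem) tuples found in rows."""
    problems = []
    seen_keys = set()
    for index, row in enumerate(rows):
        # Completeness check: every required field must have a value.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append((index, f"missing {field}"))
        # Uniqueness check: the key must not repeat across rows.
        key = row.get(unique_key)
        if key in seen_keys:
            problems.append((index, f"duplicate {unique_key}"))
        seen_keys.add(key)
    return problems


# Hypothetical records for illustration.
records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "c@example.com"},
]
issues = check_quality(records, required=["id", "email"], unique_key="id")
print(issues)
```

Checks like these are typically run as automated tests inside the pipeline, so bad batches are caught before they reach reporting.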

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.
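One practical exercise is to implement a windowed aggregation by hand before reaching for a framework. The sketch below counts events per fixed (tumbling) time window in plain Python. It does not use any Kafka or Flink API; the function name and sample events are hypothetical.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key within fixed, non-overlapping time windows.

    events is an iterable of (timestamp_seconds, key) pairs.
    Returns a dict mapping window start time to {key: count}.
    """
    windows = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        # Align each event to the start of the window it falls into.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in windows.items()}


# Hypothetical event stream: (seconds since start, event type).
events = [(0, "click"), (3, "view"), (9, "click"), (10, "click"), (14, "view")]
counts = tumbling_window_counts(events, window_seconds=10)
print(counts)
```

Streaming frameworks add what this sketch leaves out, such as handling late or out-of-order events, distributing state across machines, and recovering from failures.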

Conclusion:

Data engineers use these tools to build data systems. Working with MySQL, SQL Server, and Oracle RDBMS involves collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies, including databases, programming languages, and machine learning algorithms, to create powerful applications that help businesses make better decisions based on their collected data.

What is Solr – Comparing Apache Solr vs. Elasticsearch

In the world of search engines and data retrieval systems, Apache Solr and Elasticsearch are two prominent contenders, each with its strengths and unique capabilities. These open-source, distributed search platforms play a crucial role in empowering organizations to harness the power of big data and deliver relevant search results efficiently. In this blog, we will delve into the fundamentals of Solr and Elasticsearch, highlighting their key features and comparing their functionalities. Whether you're a developer, data analyst, or IT professional, understanding the differences between Solr and Elasticsearch will help you make informed decisions to meet your specific search and data management needs.

Overview of Apache Solr

Apache Solr is a search platform built on top of the Apache Lucene library, known for its robust indexing and full-text search capabilities. It is written in Java and designed to handle large-scale search and data retrieval tasks. Solr follows a RESTful API approach, making it easy to integrate with different programming languages and frameworks. It offers a rich set of features, including faceted search, hit highlighting, spell checking, and geospatial search, making it a versatile solution for various use cases.

Overview of Elasticsearch

Elasticsearch, also based on Apache Lucene, is a distributed search engine that stands out for its real-time data indexing and analytics capabilities. It is known for its scalability and speed, making it an ideal choice for applications that require near-instantaneous search results. Elasticsearch provides a simple RESTful API, enabling developers to perform complex searches effortlessly. Moreover, it offers support for data visualization through its integration with Kibana, making it a popular choice for log analysis, application monitoring, and other data-driven use cases.

Comparing Solr and Elasticsearch

Data Handling and Indexing

Both Solr and Elasticsearch are proficient at handling large volumes of data and offer excellent indexing capabilities. Solr uses XML and JSON formats for data indexing, while Elasticsearch relies on JSON, which is generally considered more human-readable and easier to work with. Elasticsearch's dynamic mapping feature allows it to automatically infer data types during indexing, streamlining the process further.

Querying and Searching

Both platforms support complex search queries, but Elasticsearch is often regarded as more developer-friendly due to its clean and straightforward API. Elasticsearch's support for nested queries and aggregations simplifies the process of retrieving and analyzing data. On the other hand, Solr provides a range of query parsers, allowing developers to choose between traditional and advanced syntax options based on their preference and familiarity.
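To make the difference in query style concrete, here is a sketch that expresses the same search in both forms: an Elasticsearch JSON request body using a `bool` query with a `terms` aggregation, and the equivalent Solr query-string parameters (`q`, `fq`, `facet`, `facet.field`). The field names `title`, `category`, and `brand` are hypothetical, and the sketch assumes `category` and `brand` are indexed as exact-match fields. It only builds the requests; it does not send them to a server.

```python
import json
from urllib.parse import urlencode


def elasticsearch_body(text, category):
    """Build an Elasticsearch request body: full-text match, filter, facet counts."""
    return {
        "query": {
            "bool": {
                "must": [{"match": {"title": text}}],
                "filter": [{"term": {"category": category}}],
            }
        },
        # A terms aggregation returns a count of documents per brand.
        "aggs": {"by_brand": {"terms": {"field": "brand"}}},
    }


def solr_params(text, category):
    """Build the query string for a comparable Solr search with faceting."""
    return urlencode(
        {
            "q": f"title:{text}",
            "fq": f"category:{category}",
            "facet": "true",
            "facet.field": "brand",
        }
    )


print(json.dumps(elasticsearch_body("laptop", "electronics"), indent=2))
print(solr_params("laptop", "electronics"))
```

The Elasticsearch body would typically be sent to an index's `_search` endpoint and the Solr parameters to a collection's `select` handler.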

Scalability and Performance

Elasticsearch is designed with scalability in mind from the ground up, making it relatively easier to scale horizontally by adding more nodes to the cluster. It excels in real-time search and analytics scenarios, making it a top choice for applications with dynamic data streams. Solr, while also scalable, may require more effort for horizontal scaling compared to Elasticsearch.

Community and Ecosystem

Both Solr and Elasticsearch boast active and vibrant open-source communities. Solr has been around longer and, therefore, has a more extensive user base and established ecosystem. Elasticsearch, however, has gained significant momentum over the years, supported by the Elastic Stack, which includes Kibana for data visualization and Beats for data shipping.

Document-Based vs. Schema-Free

Solr traditionally follows a schema-driven approach, where documents are organized into fields that are defined in a schema up front (a schemaless mode is also available). While this provides better control over data, it may become restrictive when dealing with dynamic or constantly evolving data structures. Elasticsearch is often described as schema-free because dynamic mapping lets it accept documents without a predefined schema, which allows for more flexible data handling and makes it more suitable for projects with varying data structures.

Conclusion

In summary, Apache Solr and Elasticsearch are both powerful search platforms, each excelling in specific scenarios. Solr's robustness and established ecosystem make it a reliable choice for traditional search applications, while Elasticsearch's real-time capabilities and seamless integration with the Elastic Stack are perfect for modern data-driven projects. Choosing between the two depends on your specific requirements, data complexity, and preferred development style. Regardless of your decision, both Solr and Elasticsearch can supercharge your search and analytics endeavors, bringing efficiency and relevance to your data retrieval processes.

Whether you opt for Solr, Elasticsearch, or a combination of both, the future of search and data exploration remains bright, with technology continually evolving to meet the needs of next-generation applications.

Difference between data analysis and data analytics and data science

Data Analysis, Data Analytics, and Data Science are closely related fields but have distinct differences in their focus and methodologies. Here's an overview of each:

Data Analysis: Data analysis involves the process of inspecting, cleaning, transforming, and modeling data to derive meaningful insights and make informed decisions. It focuses on examining datasets to identify patterns, trends, and relationships. Data analysis often employs statistical techniques and tools to extract information from the data.

Data analysis typically involves:

Descriptive Analysis: Summarizing and describing data using statistical measures, charts, and graphs.

Exploratory Analysis: Exploring data to discover patterns, relationships, and outliers.

Inferential Analysis: Making inferences and drawing conclusions based on sample data to make predictions about a larger population.
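As a small example of the descriptive step, the sketch below summarizes a list of numbers using Python's standard `statistics` module. The function name `describe` and the sample values are hypothetical.

```python
from statistics import mean, median, stdev


def describe(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "count": len(values),
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


# Hypothetical daily order counts.
daily_orders = [12, 15, 11, 18, 14]
summary = describe(daily_orders)
print(summary)
```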

Data Analytics: Data analytics expands on data analysis by incorporating advanced techniques and technologies to gain deeper insights into data. It involves the use of statistical modeling, machine learning, and data visualization tools to extract knowledge and draw conclusions. Data analytics focuses on answering specific business questions and solving problems.

Data analytics typically involves:

Predictive Analytics: Using historical data and statistical models to forecast future outcomes and trends.

Prescriptive Analytics: Recommending actions and strategies based on data insights to optimize business processes and decision-making.

Data Visualization: Presenting data in visual formats like charts, graphs, and dashboards to facilitate understanding and communication.
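To illustrate predictive analytics in its simplest form, the sketch below fits a straight line to historical values with ordinary least squares and extrapolates one step ahead. The function names and the sales figures are hypothetical, and real forecasting usually needs richer models and validation.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(xs, ys, next_x):
    """Predict the value at next_x from the fitted line."""
    slope, intercept = fit_line(xs, ys)
    return slope * next_x + intercept


# Hypothetical monthly sales history.
months = [1, 2, 3, 4]
sales = [10, 12, 14, 16]
print(forecast(months, sales, 5))
```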

Data Science: Data science encompasses a broader set of skills and techniques that combine data analysis and analytics with elements of computer science and programming. It involves extracting knowledge and insights from complex and large-scale datasets through various methodologies, including statistical modeling, machine learning, data mining, and programming.

Data science typically involves:

Data Cleaning and Preprocessing: Preparing and transforming raw data for analysis.

Machine Learning: Developing and deploying algorithms to automatically learn from data and make predictions or decisions.

Big Data Analytics: Handling and analyzing large volumes of data that traditional methods may not be able to handle efficiently.

Data Engineering: Designing and implementing data pipelines, storage solutions, and database systems.

Deep Learning: Applying advanced neural networks to analyze and extract insights from complex data structures.

In summary, data analysis focuses on exploring and understanding data using statistical techniques, while data analytics involves using advanced tools and methodologies to gain actionable insights for decision-making. Data science encompasses a broader range of skills and techniques, combining data analysis and analytics with computer science to extract knowledge from complex datasets and solve complex problems.

Sources- https://us.sganalytics.com/data-management-analytics-services/

Unveiling Java's Multifaceted Utility: A Deep Dive into Its Applications

In software development, Java stands out as a versatile and ubiquitous programming language with many applications across diverse industries. From empowering enterprise-grade solutions to driving innovation in mobile app development and big data analytics, Java's flexibility and robustness have solidified its status as a cornerstone of modern technology.

Let's embark on a journey to explore the multifaceted utility of Java and its impact across various domains.

Powering Enterprise Solutions

Java is the backbone for developing robust and scalable enterprise applications, facilitating critical operations such as CRM, ERP, and HRM systems. Its resilience and platform independence make it a preferred choice for organizations seeking to build mission-critical applications capable of seamlessly handling extensive data and transactions.

Shaping the Web Development Landscape

Java is pivotal in web development, enabling dynamic and interactive web applications. With frameworks like Spring and Hibernate, developers can streamline the development process and build feature-rich, scalable web solutions. Java's compatibility with diverse web servers and databases further enhances its appeal in web development.

Driving Innovation in Mobile App Development

As the foundation for Android app development, Java remains a dominant force in the mobile app ecosystem. Supported by Android Studio, developers leverage Java's capabilities to craft high-performance and user-friendly mobile applications for a global audience, contributing to the ever-evolving landscape of mobile technology.

Enabling Robust Desktop Solutions

Java's cross-platform compatibility and extensive library support make it an ideal choice for developing desktop applications. With frameworks like Java Swing and JavaFX, developers can create intuitive graphical user interfaces (GUIs) for desktop software, ranging from simple utilities to complex enterprise-grade solutions.

Revolutionizing Big Data Analytics

In big data analytics, Java is a cornerstone for various frameworks and tools to process and analyze massive datasets. Platforms like Apache Hadoop, Apache Spark, and Apache Flink leverage Java's capabilities to unlock valuable insights from vast amounts of data, empowering organizations to make data-driven decisions.

Fostering Innovation in Scientific Research

Java's versatility extends to scientific computing and research, where it is utilized to develop simulations, modeling tools, and data analysis software. Its performance and extensive library support make it an invaluable asset in bioinformatics, physics, and engineering, driving innovation and advancements in scientific research.

Empowering Embedded Systems

Thanks to its runtime environment, the Java Virtual Machine (JVM), Java also finds applications in embedded systems development. From IoT devices to industrial automation systems, Java's flexibility and reliability make it a preferred choice for building embedded solutions that require seamless performance across diverse hardware platforms.

In summary, Java's multifaceted utility and robustness make it an indispensable tool in the arsenal of modern software developers. Whether powering enterprise solutions, driving innovation in mobile app development, or revolutionizing big data analytics, Java continues to shape the technological landscape and drive advancements across various industries. As a versatile and enduring programming language, Java remains at the forefront of innovation, paving the way for a future powered by cutting-edge software solutions.

Introduction to AI Platforms

AI Platforms are powerful tools that allow businesses to automate complex tasks, provide real-time insights, and improve customer experiences. With their ability to process massive amounts of data, AI platforms can help organizations make more informed decisions, enhance productivity, and reduce costs.

These platforms incorporate advanced algorithms such as machine learning, natural language processing (NLP), and computer vision to analyze data through neural networks and predictive models. They offer a broad range of capabilities such as chatbots, image recognition, sentiment analysis, and recommendation engines.

Choosing the right AI platform is imperative for businesses that wish to stay ahead of the competition. Each platform has its strengths and weaknesses which must be assessed when deciding on a vendor. Moreover, an AI platform’s ability to integrate with existing systems is critical in effectively streamlining operations.

The history of AI platforms dates back to the 1950s, with the development of early artificial intelligence research. However, over time these technologies have evolved considerably – thanks to advancements in computing power and big data analytics. While still in their infancy stages just a few years ago – today’s AI platforms have matured into complex and feature-rich solutions designed specifically for business use cases.

Ready to have your mind blown and your workload lightened? Check out the best AI platforms for businesses and say goodbye to manual tasks:

Popular Commercial AI Platforms

To explore the top AI platforms and make informed decisions, you need to know the benefits each platform offers. With IBM Watson, Google Cloud AI Platform, Microsoft Azure AI Platform, and Amazon SageMaker in focus, this section shows the unique advantages each platform provides for various industries and cognitive services.

IBM Watson

The Innovative AI Platform by IBM:

Transform your business with the dynamic cognitive computing technology of IBM Watson. Enhance decision-making, automate operations, and accelerate the growth of your organization with this powerful tool.

Additional unique details about the platform:

IBM Watson’s artificial intelligence streamlines workflows and personalizes experiences while enhancing predictive capabilities. The open-source ecosystem allows developers and businesses alike to integrate their innovative applications seamlessly.

Suggested implementation strategies:

1) Leverage Watson’s data visualization tools to clearly understand complex data sets and analyze them.

2) Utilize Watson’s natural language processing capabilities for sentiment analysis, identifying keywords, or contextual understanding.

By incorporating IBM Watson’s versatile machine learning functions into your operations, you can gain valuable insights into customer behavior patterns, track industry trends, improve decision-making abilities, and eventually boost revenue.

Google’s AI platform is so powerful, it knows what you’re searching for before you do.

Google Cloud AI Platform

The AI platform provided by Google Cloud is an exceptional tool for businesses that specialize in delivering machine learning services. It provides a broad array of functionalities tailored to meet the diverse demands of clients all over the world.

The following table summarizes the features and capabilities offered by the Google Cloud AI Platform:

| Features | Capabilities |
| --- | --- |
| Data Management & Pre-processing | Large-scale data processing; data integration and analysis tools; deep learning frameworks; data versioning tools |
| Model Training | Scalable training; AutoML tools; advanced tuning configurations; distributed training on CPU/GPU/TPU |
| Prediction | High-performance responses within seconds; accurate predictions resulting from models trained using large-scale datasets |
| Monitoring | Real-time model supervision and adjustment; comprehensive monitoring, management, and optimization of models across various stages, including deployment |

One unique aspect of the Google Cloud AI platform is its prominent role in enabling any developer, regardless of their prior experience with machine learning, to build sophisticated models. This ease of use accelerates experimentation and fosters innovation.

Finally, it is worth noting that according to a study conducted by International Business Machines Corporation (IBM), brands that adopted AI for customer support purposes experienced 40% cost savings while improving customer satisfaction rates by 90%.

Amazon Web Service & Adobe Experience Manager:- A Journey together (Part-1)

In the world of digital marketing today, providing a quick, secure, and seamless experience is crucial. A quicker time to market might be a differentiation, and it is crucial to reach a larger audience across all devices. Businesses are relying on cloud-based solutions to increase corporate agility, seize new opportunities, and cut costs.

Managing your marketing content and assets is simple with AEM. There are many advantages to using AWS to run AEM, including improved business agility, better flexibility, and lower expenses.

AEM & AWS a Gift for you:-

We know AEM as a market leader in digital marketing, and AWS has an answer for almost every architectural concern: global capacity, security, reliability, fault tolerance, programmability, and usability.

So AEM becomes more powerful with the power of AWS, and this setup is gaining more popularity than on-premises infrastructure.

Limitless Capacity

This combination gives you the freedom to scale all AEM environments quickly and cost-effectively, so adding capacity is now much easier.

During peak traffic, when request volumes are very high or unpredictable, AEM instances need more power or scaling. This is where our friend AWS comes to the rescue: its on-demand capacity lets you scale all workloads. During the holiday season, sporting events, and sales events such as Thanksgiving, AWS holds AEM's hand and says:

"Hey, don't worry, I am here for you. I will not leave you alone in these peak scenarios."

When AEM needs an upgrade and you are worried about things like downtime and backups, AWS again steps in as a friend. Its cloud capabilities streamline AEM upgrades and deployments.

Spinning up a parallel environment is now a cakewalk, so migration and testing become much easier without worrying about infrastructure.

QA can run performance tests in a production-like AEM environment without disturbing production. Performing the actual production upgrade can then be as simple as changing a domain name system (DNS) entry.

Sky is no limit for AEM with AWS features and Capabilities :

As a market leader, AEM is used by customers as the foundation of their digital marketing platform. Together, AWS and AEM open up many third-party integration opportunities, such as blogs, and provide additional tools for supporting mobile delivery, analytics, and big data management.

New capabilities can be built by combining AWS and AEM. With services like Amazon Simple Notification Service (Amazon SNS), Amazon Simple Queue Service (Amazon SQS), and AWS Lambda, AEM functionality can easily be integrated with third-party APIs in a decoupled manner. AWS can provide a clean, manageable, and auditable approach to decoupled integration with back-end systems such as CRM and e-commerce systems.

24*7 Global Availability of AEM with Buddy AWS

Moving to the cloud makes it possible to be more agile and innovative. In a traditional on-premises environment, any innovation required new infrastructure and more capital expenditure (Capex). Here again, the golden combination of AWS and AEM makes things easier and more agile. The AWS Cloud model gives you the agility to quickly spin up new instances on AWS and the ability to try out new services without investing in large upfront costs. The AWS pay-for-what-you-use pricing model is a savior in these activities.

The AWS Global Infrastructure is available across 24 geographic regions around the globe, enabling customers to deploy on a global footprint quickly and easily.

Major Security concerns handled with High-Compliance

Security is a major concern for any AEM website. AWS gives you the control and confidence you need to run safely and flexibly in a secure cloud computing environment.

AWS provides a comprehensive set of services and features that improve your ability to meet core security and compliance requirements. Compliance certifications and attestations are assessed by a third-party, independent auditor.

Running AEM on AWS provides customers with the benefits of leveraging the compliance and security capabilities of AWS, along with the ability to monitor and audit access to AEM using AWS Security, Identity and Compliance services.

Continued in part 2.

Text

Digital Twin Market Size 2023-2030: ABB, AVEVA Group plc, Dassault Systemes

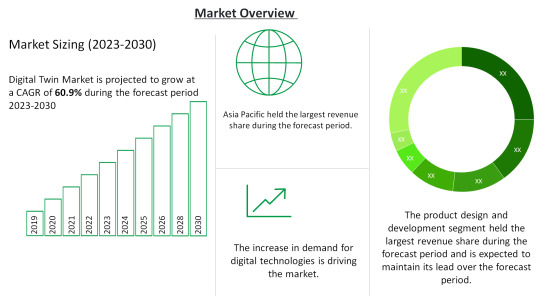

Digital Twin Market by Enterprise (Large Enterprises, Small & Medium Enterprises), Application (Product Design & Development, Predictive Maintenance, Business Optimization, Performance Monitoring, Inventory Management, Other Applications), Industry (Automotive & Transportation, Energy & Utilities, Infrastructure, Healthcare, Aerospace, Oil & Gas, Telecommunications, Agriculture, Retail, Other Industries), and Region (North America, Europe, Asia-Pacific, Middle East and Africa, and South America). The global Digital Twin Market size was USD 11.12 billion in 2022 and is projected to grow at a CAGR of 60.9% from 2023 to 2030.
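For readers who want to see what a compound annual growth rate implies year by year, the sketch below applies the standard compounding formula, value = base × (1 + CAGR)^years, to the base-year figure and growth rate quoted above. It assumes annual compounding starting from the 2022 base year; the report itself does not publish this year-by-year series, so treat the numbers as an illustration and not as the report's forecast.

```python
def project_value(base_value: float, cagr: float, years: int) -> float:
    """Compound base_value forward by `years` periods at annual rate `cagr`."""
    return base_value * (1.0 + cagr) ** years


BASE_YEAR = 2022
BASE_VALUE_USD_BN = 11.12   # market size quoted for 2022
CAGR = 0.609                # 60.9% quoted for 2023-2030

# Assumption: the rate compounds once per year from the 2022 base.
projections = {
    year: project_value(BASE_VALUE_USD_BN, CAGR, year - BASE_YEAR)
    for year in range(2023, 2031)
}

for year, value in projections.items():
    print(f"{year}: USD {value:,.2f} billion")
```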

Click Here For a Free Sample + Related Graphs of the Report at: https://www.delvens.com/get-free-sample/digital-twin-market-trends-forecast-till-2030

Digital twin technology has allowed businesses in end-use industries to generate digital equivalents of objects and systems across the product lifecycle. The potential use cases of digital twin technology have expanded rapidly over the years, anchored in the increasing trend of integration with internet-of-things (IoT) sensors. Coupled with AI and analytics, the capabilities of digital twins are enabling engineers to carry out simulations before a physical product is developed. As a result, digital twins are being deployed by manufacturing companies to shorten time-to-market. Digital twin technology is also showing its potential in optimizing maintenance costs and timelines, and has thus attracted colossal interest among manufacturing stalwarts, notably in discrete manufacturing.

The shift to interconnected environments across industries is driving the demand for digital twin solutions across the world. Massive adoption of IoT is being witnessed, with over 41 billion connected IoT devices expected to be in use by 2030. For the successful implementation and functioning of IoT, increasing the throughput for every part or “thing” is necessary, which is made possible by digital twin technology. Since the behavior and performance of a system over its lifetime depend on its components, the demand for digital twin technology is increasing across the world for system improvement. The emergence of digitalization in manufacturing is driving the global digital twin market. Manufacturing units across the globe are investing in digitalization strategies to increase their operational efficiency, productivity, and accuracy. These digitalization solutions including digital twin are contributing to an increase in manufacturer responsiveness and agility through changing customer demands and market conditions.

On the other hand, digital technologies such as artificial intelligence, IoT, cloud, and big data are being implemented ever more widely across business units. Market solutions help integrate the IoT sensors and technologies that virtualize the physical twin. As connectivity grows, so do the associated risks, such as security, data protection, regulation, and compliance.

During the COVID-19 pandemic, the use of digital twin technologies to manage industrial and manufacturing assets increased significantly across production facilities to mitigate the risks associated with the outbreak. Amid the lockdown, the U.S. implemented a National Digital Twin Program, which was expected to leverage the digital twin blueprint of major cities of the U.S. to improve smart city infrastructure and service delivery. The COVID-19 pandemic positively impacted market demand for digital twin technology.

Delvens Industry Expert’s Standpoint

The use of solutions like digital twins is predicted to be fueled by the rapid uptake of 3D printing technology, rising demand for digital twins in the healthcare and pharmaceutical sectors, and the growing tendency for IoT solution adoption across multiple industries. With pre-analysis of the actual product, while it is still in the creation stage, digital twins technology helps to improve physical product design across the full product lifetime. Technology like digital twins can be of huge help to doctors and surgeons in the near future and hence, the market is expected to grow.

Market Portfolio

Key Findings

The enterprise segment is further segmented into Large Enterprises and Small & Medium Enterprises. Small & Medium Enterprises are expected to dominate the market during the forecast period. It is further expected to grow at the highest CAGR from 2023 to 2030.

The industry segment is further segmented into Automotive & Transportation, Energy & Utilities, Infrastructure, Healthcare, Aerospace, Oil & Gas, Telecommunications, Agriculture, Retail, and Other Industries. The automotive & transportation industry is expected to account for the largest share of the digital twin market during the forecast period. The growth can be attributed to the increasing usage of digital twins for designing, simulation, MRO (maintenance, repair, and overhaul), production, and after-service.

The market is also divided into various regions such as North America, Europe, Asia-Pacific, South America, and Middle East and Africa. North America is expected to hold the largest share of the digital twin market throughout the forecast period. North America is a major hub for technological innovations and an early adopter of digital twins and related technologies.

Regional Analysis

North America to Dominate the Market

North America is expected to hold the largest share of the digital twin market throughout the forecast period. North America is a major hub for technological innovations and an early adopter of digital twins and related technologies.

North America has an established ecosystem for digital twin practices and the presence of large automotive & transportation, aerospace, chemical, energy & utilities, and food & beverage companies in the US. These industries are replacing legacy systems with advanced solutions to improve performance efficiency and reduce overall operational costs, resulting in the growth of the digital twin technology market in this region.

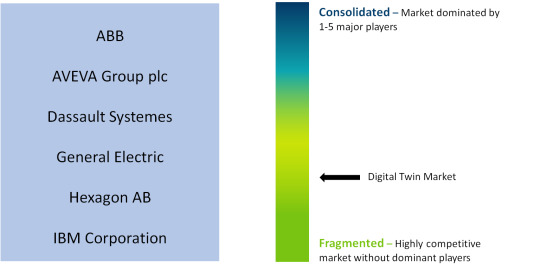

Competitive Landscape

ABB

AVEVA Group plc

Dassault Systemes

General Electric

Hexagon AB

IBM Corporation

SAP

Microsoft

Siemens

ANSYS

PTC

Recent Developments

In April 2022, GE Research (US) and GE Renewable Energy (France), subsidiaries of GE, collaborated and developed a cutting-edge artificial intelligence (AI)/machine learning (ML) technology that has the potential to save the worldwide wind industry billions of dollars in logistical expenses over the next decade. GE’s AI/ML tool uses a digital twin of the wind turbine logistics process to accurately predict and streamline logistics costs. Based on the current industry growth forecasts, AI/ML might enable a 10% decrease in logistics costs, representing a global cost saving to the wind sector of up to USD 2.6 billion annually by 2030.

In March 2022, Microsoft announced a strategic partnership with Newcrest. The mining business of Newcrest would adopt Azure as its preferred cloud provider globally, as well as work on digital twins and a sustainability data model. Both organizations are working together on projects, including the use of digital twins to improve operational performance and developing a high-impact sustainability data model.

Reasons to Acquire

Increase your understanding of the market for identifying the best and most suitable strategies and decisions on the basis of sales or revenue fluctuations in terms of volume and value, distribution chain analysis, market trends, and factors

Gain authentic and granular data access for Digital Twin Market so as to understand the trends and the factors involved in changing market situations

Qualitative and quantitative data utilization to discover arrays of future growth from the market trends of leaders to market visionaries and then recognize the significant areas to compete in the future

In-depth analysis of the changing trends of the market by visualizing the historic and forecast year growth patterns

Direct Purchase of Digital Twin Market Research Report at: https://www.delvens.com/checkout/digital-twin-market-trends-forecast-till-2030

Report Scope

| Report Feature | Descriptions |
| --- | --- |
| Growth Rate | CAGR of 60.9% during the forecasting period, 2023-2030 |
| Historical Data | 2019-2021 |
| Forecast Years | 2023-2030 |
| Base Year | 2022 |
| Units Considered | Revenue in USD million and CAGR from 2023 to 2030 |
| Report Segmentation | Enterprise, platform, application, and region |
| Report Attribute | Market Revenue Sizing (Global, Regional and Country Level), Company Share Analysis, Market Dynamics, Company Profiling |
| Regional Level Scope | North America, Europe, Asia-Pacific, South America, and Middle East and Africa |
| Country Level Scope | U.S., Japan, Germany, U.K., China, India, Brazil, UAE, and South Africa (50+ Countries Across the Globe) |
| Companies Profiled | ABB; AVEVA Group plc; Dassault Systemes; General Electric; Hexagon AB; IBM Corp.; SAP |
| Available Customization | In addition to the market data for Digital Twin Market, Delvens offers client-centric reports customized according to the company's specific demand and requirement. |

TABLE OF CONTENTS

Large Enterprises

Small & Medium Enterprises

Product Design & Development

Predictive Maintenance

Business Optimization

Performance Monitoring

Inventory Management

Other Applications

Automotive & Transportation

Energy & Utilities

Infrastructure

Healthcare

Aerospace

Oil & Gas

Telecommunications

Agriculture

Retail

Other Industries.

Asia Pacific

North America

Europe

South America

Middle East & Africa

ABB

AVEVA Group plc

Dassault Systemes

General Electric

Hexagon AB

IBM Corporation

SAP

About Us:

Delvens is a strategic advisory and consulting company headquartered in New Delhi, India. The company holds expertise in providing syndicated research reports, customized research reports, and consulting services. Delvens' qualitative and quantitative data is used at every level, from niche to major markets, and serves more than 1,000 prominent companies with information on country, regional, and global business environments. We maintain a database covering more than 45 industries in more than 115 major countries globally.

The Delvens database assists clients by providing in-depth information for crucial business decisions. Delvens offers significant facts and figures across various industries, namely Healthcare, IT & Telecom, Chemicals & Materials, Semiconductor & Electronics, Energy, Pharmaceutical, Consumer Goods & Services, and Food & Beverages. Our company provides an exhaustive and comprehensive understanding of the business environment.

Contact Us:

UNIT NO. 2126, TOWER B,

21ST FLOOR ALPHATHUM

SECTOR 90 NOIDA 201305, IN

+44-20-8638-5055

WEBSITE: https://delvens.com/

#Digital Twin Market#Digital Twin#Digital Twin Market Size#Digital Twin Market Share#Semiconductors & Electronics

Text

sap basis course uk 5

SAP Programs & Training

The SAP SuccessFactors Human Experience Management Suite offers strategic solutions and personalised experiences to help people realise their full potential. Its accessibility settings and options make the SuccessFactors HXM suite simpler to use based on your needs, with features such as high-contrast themes, colour modification, and keyboard navigation. This training session explains all the important concepts of managing human resources within an organisation and customising the software theme settings.

SuccessFactors is the latest module in SAP. It manages all HR-related processes, and the course shows how SAP SuccessFactors applications optimise the activities and tasks carried out in Employee Central and integration. The course methodology explains and demonstrates each important function of the FICO module using a live SAP server, lab work, and practical concepts. You do NOT need any previous experience with SAP, as the instructor will take you through from beginner level and make you comfortable with the internal workings of SAP to increase your proficiency.