#Ansible

Haha

65 notes

As a digital age native, I have no idea what a Le Guin ansible is supposed to look like.

It’s apparently big enough that Genly can use it as a pillow. But that’s a large range. Is it toaster oven size? Microwave? Is Genly lugging a mini-fridge-sized communicator all across Gethen?

67 notes

DevOps tools like Ansible, Chef, and Puppet are revolutionizing the way we work. But which one is the ultimate champion?

Our latest post explores the pros and cons of each tool, helping you choose the best option for your team.

Don't miss out on this informative read!

5 notes

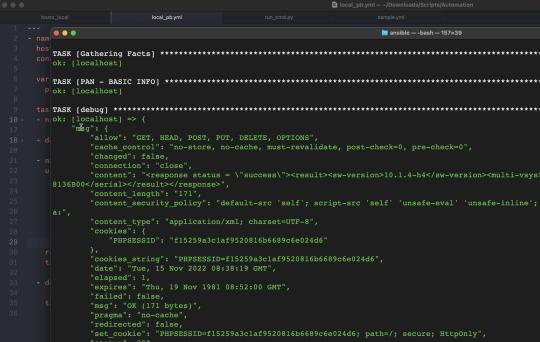

Sending REST API Requests in Playbooks

Hello Networkers,

We have released a new update to our existing ‘Network Automation using Ansible’ course. In the course, we show how you can run Python scripts inside of an Ansible playbook using the shell module. Another type of task we can run is sending REST API requests inside of a playbook. For this example, we will send a request to one of my Palo Alto Networks firewalls to get some basic system information.
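
The course's own playbook is not reproduced in this post, so the following is only a rough sketch of the kind of request involved. It builds the URL for a PAN-OS XML API operational command that asks for system information. The host name and API key are placeholders, and the helper name `build_system_info_url` is made up for illustration. In a playbook, the same URL would typically be passed to a task that uses the `ansible.builtin.uri` module.

```python
from urllib.parse import urlencode


def build_system_info_url(firewall_host: str, api_key: str) -> str:
    """Build a PAN-OS XML API URL for the operational command 'show system info'.

    firewall_host and api_key are placeholders supplied by the caller.
    """
    params = {
        "type": "op",  # operational-mode command
        "cmd": "<show><system><info></info></system></show>",
        "key": api_key,
    }
    # urlencode percent-encodes the XML so it is safe inside a query string.
    return f"https://{firewall_host}/api/?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical host and key, for illustration only.
    print(build_system_info_url("fw.example.com", "REPLACE_WITH_API_KEY"))
```

Keeping the API key out of the playbook itself (for example in a vaulted variable) is advisable, since it would otherwise appear in plain text.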

You can get more details here:

https://www.routehub.net/course/ansible/

8 notes

Goke: re-entry (green wheel of fire)

2 notes

Ansible Interview Questions And Answers for Experienced 2024

Ready to crush your next Ansible interview? This guide unlocks powerful questions and hands-on scenarios to showcase your Ansible mastery and land your dream automation role!

0 notes

Mastering Ansible: Top Interview Questions and Answers

As companies increasingly adopt DevOps practices to streamline their software development and deployment processes, automation tools like Ansible have become indispensable. Ansible, with its simplicity, agentless architecture, and powerful automation capabilities, has emerged as a favorite among DevOps engineers and system administrators.

If you're preparing for an Ansible interview, it's crucial to have a solid understanding of its concepts, architecture, and best practices. To help you in your preparation, we've compiled a list of top Ansible interview questions along with detailed answers.

1. What is Ansible, and how does it differ from other configuration management tools?

Ansible is an open-source automation tool used for configuration management, application deployment, and orchestration. Unlike configuration management tools such as Puppet or Chef, which typically run an agent on each node, Ansible follows an agentless architecture: it doesn't require any agent software to be installed on managed hosts. Instead, Ansible connects to remote machines over SSH, or over WinRM for Windows hosts.

2. What are Ansible playbooks?

Ansible playbooks are files written in YAML format that define a series of tasks to be executed on remote hosts. Playbooks are the foundation of Ansible automation and allow users to define complex automation workflows in a human-readable format. Each playbook consists of one or more plays, and each play contains a list of tasks to be executed on specified hosts.
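
To make the play/task nesting concrete, here is a small sketch that models a one-play playbook as plain Python data and prints it as JSON (which YAML parsers generally accept). The play name, the `webservers` group, and the `nginx` package are illustrative assumptions; `ansible.builtin.package` and `ansible.builtin.service` are real modules.

```python
import json

# A playbook is a list of plays; each play targets hosts and lists tasks.
playbook = [
    {
        "name": "Install and start a web server",
        "hosts": "webservers",  # hypothetical inventory group
        "become": True,
        "tasks": [
            {
                "name": "Install nginx",
                "ansible.builtin.package": {"name": "nginx", "state": "present"},
            },
            {
                "name": "Start nginx",
                "ansible.builtin.service": {"name": "nginx", "state": "started"},
            },
        ],
    }
]


def task_names(plays):
    """Return the task names of every play, in execution order."""
    return [task["name"] for play in plays for task in play["tasks"]]


if __name__ == "__main__":
    print(json.dumps(playbook, indent=2))
```

In practice the same structure is written directly in YAML; the Python form is used here only to show the shape.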

3. Explain Ansible modules.

Ansible modules are small programs that Ansible invokes on remote hosts to perform specific tasks. Modules can be used to manage system resources, install packages, configure services, and more. Ansible ships with a wide range of built-in modules for common tasks, and users can also write custom modules to extend Ansible's functionality.

4. What is an Ansible role?

Ansible roles are a way of organizing and structuring Ansible playbooks. A role encapsulates a set of tasks, handlers, variables, and templates into a reusable unit, making it easier to manage and share automation code. Roles promote modularity and reusability, allowing users to abstract away common configuration patterns and apply them across multiple playbooks.

5. How does Ansible handle idempotence?

Idempotence is a key concept in Ansible: running the same playbook multiple times should have the same effect as running it once. Ansible achieves this through its modules, most of which check the current state of a system and make a change only if the desired state differs from it. Not every module is idempotent by design. The command and shell modules, for example, run every time unless they are guarded with arguments such as creates or removes.
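
The check-then-change pattern can be sketched in a few lines of Python. This is not Ansible's implementation, only a simplified illustration of the idea; the function name `ensure_line` and the example file are assumptions.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file; return True only if a change was made."""
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # desired state already holds: nothing to do
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True  # state differed, so the file was changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "demo.conf"
        print(ensure_line(target, "PermitRootLogin no"))  # first run makes the change
        print(ensure_line(target, "PermitRootLogin no"))  # second run changes nothing
```

The boolean return value plays the role of the "changed" status that Ansible reports for each task.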

6. What is Ansible Tower, and how does it differ from Ansible?

Ansible Tower (now the automation controller component of Red Hat Ansible Automation Platform) provides a web-based UI and a REST API for Ansible. It adds features like role-based access control, job scheduling, and inventory management, making it easier to scale and manage Ansible automation across large organizations. While Ansible Tower offers these additional enterprise features, Ansible itself remains the core automation engine.

7. How does Ansible manage inventory?

Inventory in Ansible refers to the list of managed hosts that Ansible will interact with during playbook execution. Inventory can be defined statically in a file or dynamically using external scripts or cloud providers' APIs. Ansible inventory can also be organized into groups, allowing users to target specific subsets of hosts with their playbooks.
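
As a rough illustration of how a static inventory maps groups to hosts, the sketch below parses a minimal INI-style inventory. It is a deliberately simplified stand-in, not Ansible's parser: it ignores host variables and sections such as `:children` and `:vars`, and the host names are made up.

```python
from collections import defaultdict

# Hypothetical static inventory with two groups.
INVENTORY = """\
[webservers]
web1.example.com
web2.example.com

[dbservers]
db1.example.com
"""


def parse_inventory(text: str) -> dict[str, list[str]]:
    """Map each [group] header to the host names listed under it."""
    groups: dict[str, list[str]] = defaultdict(list)
    current = "ungrouped"  # hosts listed before any header
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            groups[current]  # register the group even if it stays empty
            continue
        groups[current].append(line.split()[0])  # host name is the first token
    return dict(groups)


if __name__ == "__main__":
    for group, hosts in parse_inventory(INVENTORY).items():
        print(group, hosts)
```

A play that sets `hosts: webservers` would then run only against the hosts in that group.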

8. What are Ansible facts?

Ansible facts are pieces of information about remote hosts that Ansible collects during playbook execution. Facts include details such as the operating system, IP addresses, hardware specifications, and more. By default, Ansible gathers facts at the beginning of each play (this can be turned off with gather_facts: false) and makes them available as variables that can be used in playbooks.
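
To give a feel for what fact gathering produces, the sketch below collects a few comparable details about the local machine using only the Python standard library. The dictionary keys are illustrative and are not Ansible's actual fact names.

```python
import platform
import socket


def gather_local_facts() -> dict[str, str]:
    """Collect a handful of fact-like details about the machine running this script."""
    return {
        "hostname": socket.gethostname(),
        "system": platform.system(),      # e.g. the OS family name
        "release": platform.release(),    # kernel or OS release string
        "machine": platform.machine(),    # hardware architecture
        "python_version": platform.python_version(),
    }


if __name__ == "__main__":
    for key, value in gather_local_facts().items():
        print(f"{key}: {value}")
```

In a playbook, the equivalent values would be read from the gathered facts and used in conditions or templates.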

9. Explain the difference between Ansible ad-hoc commands and playbooks.

Ad-hoc commands in Ansible are one-off commands executed from the command line without the need for a playbook. Ad-hoc commands are useful for performing quick tasks or troubleshooting but lack the repeatability and maintainability of playbooks. Playbooks, on the other hand, allow users to define complex automation workflows in a structured and reusable format.

10. How do you handle sensitive data like passwords in Ansible?

Sensitive data such as passwords or API tokens can be stored securely using Ansible's vault feature. Ansible Vault lets users encrypt whole variable files or individual values, so that secrets stay encrypted at rest, for example when they are committed to version control. Vault-encrypted content is decrypted during playbook execution using a vault password, which can be entered interactively or read from a password file or script.

In conclusion, mastering Ansible requires a deep understanding of its core concepts, modules, playbooks, roles, and best practices. By familiarizing yourself with these top Ansible interview questions and answers, you'll be well-equipped to demonstrate your expertise and tackle any Ansible-related challenges that come your way.

If you would like to read more about it, visit analyticsjobs.in

0 notes

Excelling in DevOps: Shaping the Future with Tools and Technologies

In today's swiftly advancing technological landscape, achieving mastery in DevOps is a pivotal necessity for organizations aiming to optimize their software development processes. The focus on Comprehensive DevOps Training in Hyderabad underscores the importance of integrating DevOps practices to ensure seamless and efficient software delivery. This blog delves into the core of DevOps, exploring essential tools and technologies that serve as the foundation for this transformative approach. The comprehension and utilization of these components empower organizations not only to adapt to the current pace of technological evolution but also to shape collaborative and streamlined software development lifecycles.

Exploring the DevOps Technology Landscape

DevOps transcends a mere practice; it represents a dynamic synergy of influential tools and cutting-edge technologies that collectively revolutionize the software development and operational landscape. This section thoroughly explores the essential components constituting the rich tapestry of the DevOps technology landscape, shedding light on their significance and interplay within the ever-evolving technological ecosystem. These components act as building blocks, contributing to a harmonious blend that empowers organizations to achieve enhanced collaboration, continuous integration, and seamless software delivery.

Version Control Systems (VCS): Orchestrating Collaborative Development

Delving into collaborative development, this section immerses us in the critical domain of Version Control Systems (VCS), with a particular emphasis on renowned platforms such as Git and SVN. It unveils their pivotal role in code management and version control, providing valuable insights into how these systems establish a robust foundation for effective teamwork within the development process. The functionality of VCS extends beyond a mere tracking mechanism; it acts as a dynamic facilitator enabling developers to seamlessly collaborate, track changes, and manage versions, fostering a collaborative environment that enhances code quality and project efficiency.

Continuous Integration (CI) Tools: Architects of Streamlined Development Cycles

Embark on a journey to discover the remarkable world of automation with Jenkins, Travis CI, and CircleCI. These tools serve as architects of streamlined development cycles, orchestrating the intricate dance of code integration and build processes with unparalleled efficiency. Jenkins, a venerable figure in the DevOps landscape, orchestrates automation seamlessly, while Travis CI and CircleCI add their prowess, collectively forming a trio of tools that redefine the landscape of continuous integration (CI). Delve into the intricacies of their functionalities, unraveling the intricate threads that bind code integration and build processes together. Witness the transformative impact of CI on the entire spectrum of software delivery, where automation becomes the catalyst for faster development cycles, smoother workflows, and ultimately, a more agile and responsive development environment.

Configuration Management Tools: Streamlining Infrastructure Provisioning and Configuration

Automation takes center stage with Ansible, Puppet, and Chef. Understand how these configuration management tools streamline infrastructure provisioning and configuration, ensuring consistency across diverse environments.

Containerization and Orchestration: Revolutionizing Software Deployment

Dive into the world of Docker and Kubernetes, witnessing their pivotal role in modern software deployment. Learn how containerization ensures consistency, while orchestration optimizes the deployment and scaling of applications.

Continuous Deployment/Continuous Delivery (CI/CD): Streamlining End-to-End Software Delivery Processes

Jenkins, GitLab CI, and Travis CI take the spotlight as we examine their roles in automating end-to-end software delivery processes. Explore how CI/CD practices streamline deployment, making it more efficient and reliable.

Monitoring and Logging Solutions: Indispensable Real-Time Insights

Real-time insights become indispensable with ELK Stack, Prometheus, and Grafana. Uncover their significance in monitoring application and infrastructure performance, ensuring proactive issue identification and resolution.

Cloud Platforms in DevOps: Embracing Scalable and Flexible Infrastructure

Embrace the power of AWS, Azure, and Google Cloud Platform for scalable and flexible infrastructure. Learn how cloud platforms facilitate seamless deployment and management of applications, a key aspect of modern DevOps practices.

Building a Versatile Skill Set: Beyond Technical Proficiency

Recognizing the dynamic requirements of the DevOps field, this section emphasizes the necessity for a versatile skill set that goes beyond technical proficiency alone. Here, the focus extends to acknowledging the crucial role of communication and collaboration skills in attaining mastery in DevOps. Beyond having command over tools and technologies, the holistic approach advocated in this segment acknowledges that proficient DevOps practitioners must possess the ability to communicate seamlessly across cross-functional teams. This involves articulating complex technical concepts, actively participating in collaborative problem-solving, and fostering a culture of shared responsibility. Essentially, achieving mastery in DevOps requires a comprehensive skill development approach that nurtures not only technical expertise but also the interpersonal and collaborative skills essential for navigating the intricate and interdependent landscapes of modern software development and operations. To elevate your DevOps skills and maintain competitiveness in the job market, consider enrolling in a DevOps Online Course. This comprehensive training offers hands-on experience and insights into the latest industry practices, preparing you for a successful career in DevOps across various organizations, including both established companies and startups.

Future Trends in DevOps Technology: Looking Ahead

Cast your gaze into the future, where emerging technologies and trends are poised to shape the trajectory of DevOps. In this forward-looking perspective, the anticipation of industry advancements takes center stage. Recognizing that adaptation is the linchpin of success, staying informed becomes a crucial mandate for sustained mastery within the dynamic DevOps landscape. As technological landscapes evolve, the ability to foresee and embrace transformative trends positions DevOps practitioners at the forefront of innovation. This foresight not only ensures a proactive response to industry shifts but also fosters an environment where continuous learning and adaptation are ingrained as essential practices. In essence, the call to "Look ahead" underscores the importance of being forward-thinking and agile, traits that are fundamental for those aspiring to be leaders in the ever-evolving realm of DevOps.

Excelling in DevOps goes beyond learning tools; it's about understanding their synergy and adapting to the ever-evolving tech landscape. Continuous learning and a proactive approach to technology trends are the keys to staying ahead in the DevOps game. Embrace the tools, embrace the future!

#devops#docker#kubernetes#prometheus#continuous integration#ansible#information technology#tech#cloud computing#aws#azure

0 notes

Who is paying $340K just to write YAML files? Asking for a friend.

15 notes

"RH294: Your gateway to the right job in the tech world."

Visit : https://amritahyd.org/

Enroll Now- 90005 80570

#amrita#amritatechnologies#RH294#rh294#automation#linux#linuxcourse#linuxautomation#linuxplatform#redhat#ansible#linux9#containerregistration#rh294course#openshift#rh188#rhcetraining#rhcetrainingcourse#onlinelearning#applicationdevelopment#linuxuser

0 notes

Developer Environment Presentation 1 Part 9: Mobile Applications Preview (iOS, Android)

Preview of the Jannah iOS and Android mobile applications. Showcase making WorkflowList query and the response data structure.

In the previous video, we had left off showcasing the Web frontend application, pulling data from the Django based middleware application.

I had shown pulling data from the GraphQL API at http://0.0.0.0:8080/graphql.

The mobile apps perform the same GraphQL queries to get data from the middleware.

The iOS…

#ansible#automation#boot#Developer#devOps#DX#Environment#Feedback#github#IDE#jannahio#Jannah_io#middleware#model#molecule#Network#Sites#software#Stack#storage#Variables

0 notes

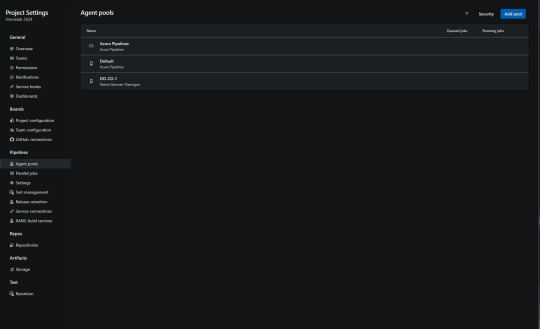

How to Configure an Azure DevOps Self-Hosted Pipeline Agent

Azure DevOps is a tool you can use for free (with an existing business subscription) that allows you to do a lot of things:

Store code in git repos

Plan projects with a kanban style board

Automate deployments with pipelines

The third option is the most interesting. When you push your code to the repo in Azure DevOps, you can set up a pipeline that will automatically take that code and execute steps you define to deploy it.

An example would be my use case. I write a Terraform plan on my iPad, push it to the Azure DevOps git repo, and the pipeline takes that code and uses it to deploy a new virtual machine on the server in my living room.

Pipeline Punch

Pipelines are not free. You do get some free pipeline hours of execution every month, but if you go over the allotted free tier values it gets very expensive.

One solution to this is to host your own pipeline and connect it to Azure DevOps. This means that instead of paying Microsoft to run this code, you have your own server that does it. This has a few benefits:

You can customize your server’s software

You can customize your server’s connectivity (such as a VPN that connects it to your house!)

You have control over every aspect of the pipeline, and can configure it to your needs!

The pipeline agent doesn’t have to be anything super powerful, depending on what you’re doing. But you can beef it up if you need more power, or you can slim it down to the minimum if you just need something lightweight.

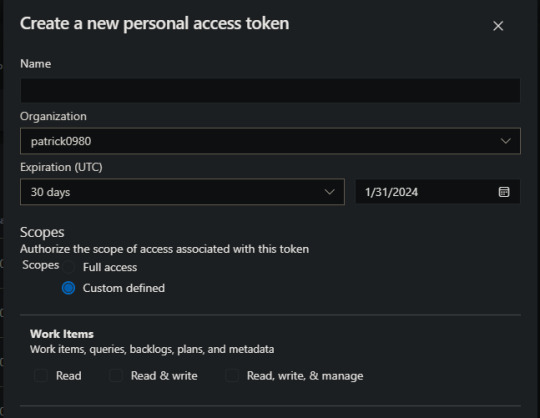

I chose to run my pipeline agent on Digital Ocean. I will now go over the process of configuring a self hosted pipeline agent.

Setting up your own Custom Agent

First, you need a Linux server. I’m using Ubuntu 22.04 hosted on Digital Ocean for $6/month. This guide assumes you know how to install and configure a Linux server, so we won’t be covering that here.

The process is pretty simple. Let’s set up Azure DevOps.

First you will need to navigate to your Azure DevOps instance and click the settings icon in the bottom left hand corner of the screen.

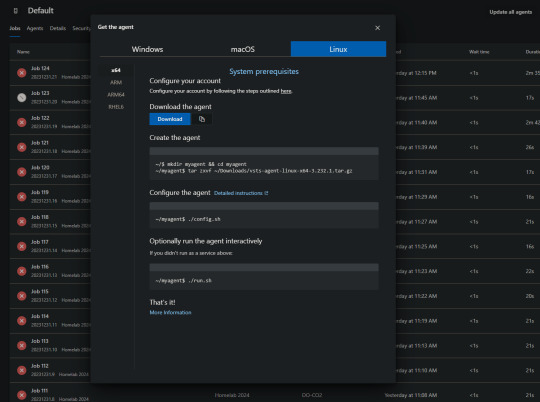

You will then be able to download the agent, or copy the link. If you copy the link, you can simply download the agent on your Linux server using the wget command:

wget https://vstsagentpackage.azureedge.net/agent/3.232.1/vsts-agent-linux-x64-3.232.1.tar.gz

You will need to do all of this from a non-root user with sudo permissions.

Next, execute these commands to set up the server (adjust the archive path if you saved it somewhere other than ~/Downloads):

mkdir myagent && cd myagent
tar zxvf ~/Downloads/vsts-agent-linux-x64-3.232.1.tar.gz

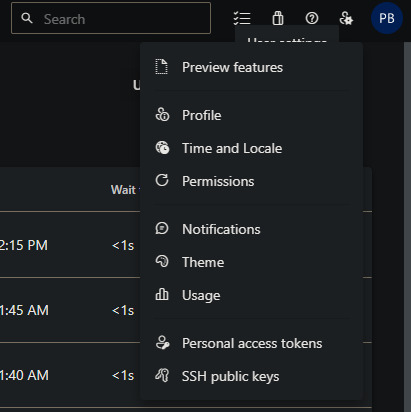

Now you will need to configure your personal access token in Azure DevOps. This PAT is going to be the password that allows your agent to receive code and commands from Azure DevOps.

Note that a PAT will expire after a set amount of time you can define. You can set it to never expire, but you really shouldn’t.

Take note of your PAT; you won’t be able to see it again after this. Also take note of your DevOps URL, which should look like dev.azure.com/patrick0980

Next, connect to your pipeline agent server over SSH and run the following commands:

cd myagent
./config.sh

This will guide you through the install process. You will be asked for your DevOps URL and PAT, so have those ready.
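
If you would rather not answer the prompts by hand, config.sh can also be run unattended. The sketch below only assembles such a command line so it can be reviewed before running. The organization URL, pool name, and agent name are placeholders, and the helper name `build_config_command` is made up for illustration.

```python
import shlex


def build_config_command(org_url: str, pat: str,
                         pool: str = "Default",
                         agent_name: str = "my-agent") -> list[str]:
    """Assemble an unattended config.sh invocation as an argument list.

    All values are placeholders supplied by the caller.
    """
    return [
        "./config.sh",
        "--unattended",          # do not prompt for input
        "--url", org_url,        # your Azure DevOps organization URL
        "--auth", "pat",         # authenticate with a personal access token
        "--token", pat,
        "--pool", pool,          # agent pool to register in
        "--agent", agent_name,   # name shown in Azure DevOps
    ]


if __name__ == "__main__":
    command = build_config_command("https://dev.azure.com/your-org", "REPLACE_WITH_PAT")
    print(shlex.join(command))  # print the command for review; nothing is executed
```

Keep in mind that a token passed on the command line may end up in your shell history, so treat this as a convenience for throwaway setups rather than a recommendation.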

Once you’ve finished filling out the form for the installer, the agent will be present on your system. We’re almost there!

The last thing we need to do is configure the pipeline agent to operate as a systemd service. This will allow it to run in the background on the server, so we won’t have to manually invoke it. To do that, run the svc.sh script in the same directory you were just in, first to install the service and then to start it:

sudo ./svc.sh install
sudo ./svc.sh start

Conclusion

Azure DevOps is a great tool you can utilize to really automate a lot of things. The possibilities are endless – and you don’t have to pay an extra penny for it! I hope you found this guide useful and hope to make more content for you soon.

Have a great rest of your day!

0 notes

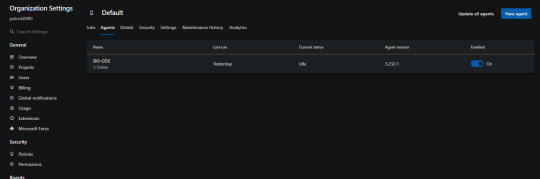

Running Python Scripts in Ansible Playbooks

Hello Networkers,

We have released a new update to our existing ‘Network Automation using Ansible’ course where we will show you how you can run Python scripts as a task inside of an Ansible playbook. This will be a simple Python script that uses the Netmiko Python library to access a network device, run a basic operational command, and display that output when our playbook is run.
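
The course's script is not shown in this post, so what follows is only a minimal sketch of what such a Netmiko script might look like. The device type, address, and credentials are assumptions, and the helper names are made up. Netmiko is a third-party library and is imported inside the function so the rest of the file loads without it.

```python
def build_device(host: str, username: str, password: str,
                 device_type: str = "cisco_ios") -> dict[str, str]:
    """Return the connection parameters Netmiko expects (values are placeholders)."""
    return {
        "device_type": device_type,
        "host": host,
        "username": username,
        "password": password,
    }


def run_command(device: dict[str, str], command: str) -> str:
    """Connect to the device, run one operational command, and return its output."""
    from netmiko import ConnectHandler  # third-party: pip install netmiko

    connection = ConnectHandler(**device)
    try:
        return connection.send_command(command)
    finally:
        connection.disconnect()  # always close the SSH session
```

A script built on these helpers would call something like `run_command(build_device("192.0.2.10", "admin", password), "show version")` and print the result, and a playbook task could then invoke that script and register its output for display.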

You can get more details here:

https://www.routehub.net/course/ansible/

2 notes
