#AI Categorization API


Am I right in suspecting that GPT-4 is not nearly as great an advance on GPT-3 as GPT-3 was on GPT-2? It seems a much better product, but that product seems to have as its selling point not vastly improved text-prediction, but multi-modality.

No one outside of OpenAI really knows how much of an advance GPT-4 is, or isn't.

When GPT-3 came out, OpenAI was still a research company, like DeepMind.

Before there was a GPT-3 product, there was a GPT-3 paper. And it was a long, serious, academic-style paper. It described, in a lot of detail, how they created and evaluated the model.

The paper was an act of scientific communication. A report on a new experiment written for a research audience, intended primarily to transmit information to that audience. It wanted to show you what they had done, so you could understand it, even if you weren't there at the time. And it wanted to convince you of various claims about the model's properties.

I don't know if they submitted it to any conferences or journals (IIRC they did, but only later on?). But if they didn't, they could have, and it wouldn't have seemed out of place in those venues.

Now, OpenAI is fully a product company.

As far as I know, they have entirely stopped releasing academic-style papers. The last major one was the DALL-E 2 one, I think. (ChatGPT didn't get one.)

What OpenAI does now is make products. The release yesterday was a product release, not a scientific announcement.

In some cases, as with GPT-4, they may accompany their product releases with things that look superficially like scientific papers.

But the GPT-4 "technical report" is not a serious scientific paper. A cynic might categorize it as "advertising."

More charitably, perhaps it's an honest attempt to communicate as much as possible to the world about their new model, given a new set of internally defined constraints motivated by business and/or AI safety concerns. But if so, those constraints mean they can't really say much at all -- not in a way that meets the ordinary standards of evidence for scientific work.

Their report says, right at the start, that it will contain no information about what the model actually is, besides the stuff that would already be obvious:

GPT-4 is a Transformer-style model [33] pre-trained to predict the next token in a document, using both publicly available data (such as internet data) and data licensed from third-party providers. [note that this really only says "we trained on some data, not all of which was public" -nost] The model was then fine-tuned using Reinforcement Learning from Human Feedback (RLHF) [34]. Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.

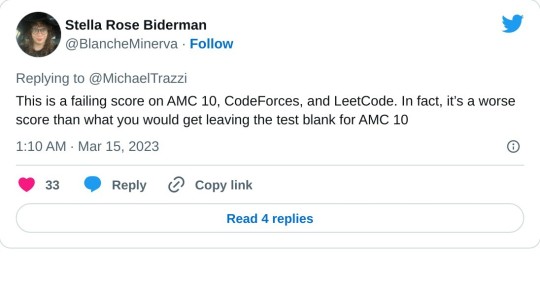

As Eleuther's Eric Hallahan put it yesterday:

If we read further into the report, we find a number of impressive-looking evaluations.

But they are mostly novel ones, not done before on earlier LMs. The methodology is presented in a spotty and casual manner, clearly not interested in promoting independent reproductions (and possibly even with the intent of discouraging them).

Even the little information that is available in the report is enough to cast serious doubt on the overall trustworthiness of that information. Some of it violates simple common sense:

...and, to the careful independent eye, immediately suggests some very worrying possibilities:

That said -- soon enough, we will be able to interact with this model via an API.

And once that happens, I'm sure independent researchers committed to open source and open information will step in and assess GPT-4 seriously and scientifically -- filling the gap left by OpenAI's increasingly "product-y" communication style.

Just as they've done before. The open source / open information community in this area is very capable, very thoughtful, and very fast. (They're where Stable Diffusion came from, to pick just one well-known example.)

----

When the GPT-3 paper came out, I wrote a post titled "gpt-3: a disappointing paper." I stand by the title, in the specific sense that I meant it, but I was well aware that I was taking a contrarian, almost trollish pose. Most people found the GPT-3 paper far from "disappointing," and I understand why.

But "GPT-4: a disappointing paper" isn't a contrarian pose. It was -- as far as I can see -- the immediate and overwhelming consensus of the ML community.

----

As for the multimodal stuff, uh, time will tell? We can't use it yet, so it's hard to know how good it is.

What they showed off in the live demo felt a lot like what @nostalgebraist-autoresponder has been able to do for years now.

Like, yeah, GPT-4 is better at it, but it's not a fundamentally new advance, it's been possible for a while. And people have done versions of it, eg Flamingo and PaLI and Magma [which Frank uses a version of internally] and CoCa [which I'm planning to use in Frank, once I get a chance to re-tune everything for it].

I do think it's a potentially transformative capability, specifically because it will let the model natively "see" a much larger fraction of the available information on web pages, and thus enable "action transformer" applications a la what Adept is doing.

But again, only time will tell whether these applications are really going to work, and for what, and whether GPT-4 is good enough for that purpose -- and whether you even need it, when other text/image language models are already out there and are being rapidly developed.


Amazon Titan Image Generator: Future of Image Creation

Amazon Titan Image Generator AWS

Amazon Titan Image Generator and the watermark detection API are now available in Amazon Bedrock

Amazon unveiled the preview of Amazon Titan Image Generator at AWS re:Invent 2023. Using English natural-language prompts, you can use this generative AI foundation model (FM) to quickly create and edit realistic, studio-quality images.

With new image generation and editing features, including instant image customization, Amazon Titan Image Generator is now generally available in Amazon Bedrock, making it easier to build and scale generative AI applications.

Amazon also stated that, by default, all images created by Titan Image Generator contain an invisible watermark. The watermark provides a way to identify AI-generated images, which is intended to help reduce the spread of misinformation.

Watermark detection for Titan Image Generator is now generally available in the Amazon Bedrock console. Amazon is also launching a new DetectGeneratedContent API (preview) in Amazon Bedrock that checks for this watermark to help you determine whether an image was created with Titan Image Generator.

Amazon Titan Advantages

A wide variety of uses

Powerful text, image, and multimodal models can drive a wide range of generative AI applications, including recommendation and search functions, content creation, and image generation.

Provide pertinent search results.

Improve personalized recommendations and search accuracy.

Built-in support for responsible AI

All Amazon Titan FMs provide built-in support for the responsible use of AI by detecting and removing harmful content from the data, rejecting inappropriate user inputs, and filtering model outputs. By default, every image produced by the Amazon Titan Image Generator model contains an invisible watermark to help prevent the creation of harmful content and reduce the spread of misinformation. AWS is among the first major cloud providers to widely offer built-in watermarks for AI image outputs. Watermark detection is an innovative technology.

Easy customization

Fine-tune Amazon Titan models with your own data to customize them for tasks specific to your organization.

Introducing Amazon Titan

The Amazon Titan family of models, available only on Amazon Bedrock, leverages the company’s twenty-five years of experience in utilizing AI and machine learning to innovate throughout its business. Through a fully managed API, Amazon Titan foundation models (FMs) offer a wide range of high-performing image, multimodal, and text model options to customers.

Amazon Titan models are powerful, general-purpose models designed to support a range of use cases and the responsible use of artificial intelligence. They are developed by AWS and pretrained on large datasets. Use them as-is or privately customize them with your own data.

Amazon Titan Image Generator AI

Text generation

Use Titan Text models to improve productivity and efficiency across a wide range of text-related tasks, such as writing copy for web pages and blogs, classifying articles, open-ended Q&A, conversational chat, information extraction, and more.

Summarization

Use Titan Text models to generate concise summaries of long documents such as articles, reports, research papers, and technical documentation, so you can quickly extract the important information.

Semantic search

Use Titan Multimodal Embeddings and Titan Text Embeddings to give end users more accurate and contextually relevant multimodal search, recommendation, and personalization experiences.

Image generation

Give content creators the tools to rapidly generate ideas and iterate on them to produce effective images. Using natural-language prompts, customers in advertising, e-commerce, and media and entertainment can create realistic, studio-quality images in large volumes and at low cost.

Retrieval Augmented Generation (RAG)

By connecting FMs to your data sources, you can give users more accurate and up-to-date responses to their queries. Extend the Titan models' already powerful capabilities and give them additional knowledge about your domain and organization.

Model variants

Titan Text Express

An LLM that offers a balance of price and performance.

Maximum tokens: 8,000

Languages: English (GA), 100+ languages available (preview)

Fine-tuning supported: Yes

Supported use cases: retrieval augmented generation, open-ended text generation, brainstorming, summarization, code generation, table creation, data formatting, paraphrasing, rewriting, extraction, Q&A, and chat.

Titan Text Lite

A highly customizable, cost-effective LLM. Right-sized for specific use cases, and well suited to text generation and fine-tuning tasks.

Maximum tokens: 4,000

Languages: English

Fine-tuning supported: Yes

Supported use cases: summarization and copywriting.

Titan Text Embeddings

An LLM that translates text into numerical representations.

Maximum tokens: 8,000

Languages: 25+

Fine-tuning supported: No

Embeddings: 1,536

Supported use cases: text retrieval, semantic similarity, and clustering.
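To make the semantic similarity use case concrete, here is a minimal sketch of ranking documents by cosine similarity between embedding vectors. It does not call Amazon Bedrock: the three-dimensional vectors and document names are made-up stand-ins for real embeddings, and the helper names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real embeddings (hypothetical data).
docs = {
    "returns-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "gift-cards": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]

ranking = rank_by_similarity(query, docs)
print(ranking[0][0])  # the document closest to the query
```

In a real application the vectors would come from an embeddings model and would have hundreds or thousands of dimensions, but the ranking step is the same.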

Titan Text Embeddings V2 (Coming Soon)

An LLM optimized for low latency and high-accuracy retrieval performance at lower dimensions.

Maximum tokens: 8,000

Languages: 100+ in pre-training

Fine-tuning supported: No

Normalization supported: Yes

Embeddings: 1,024, 512, 256

Supported use cases: using semantic similarity search to locate documents (e.g., for plagiarism detection), classifying labels into learned representations based on data (e.g., for genre classification of movies), and improving the relevance and quality of retrieved or generated search results.

Titan Multimodal Embeddings

Powers accurate multimodal search and recommendation experiences.

Maximum tokens: 128

Maximum image size: 25 MB

Languages: English

Fine-tuning supported: Yes

Embeddings: 1,024 (default), 384, 256

Supported use cases: search, recommendation, and personalization.

Titan Image Generator

Create realistic, studio-quality images from text prompts.

Maximum tokens: 77

Maximum input file size: 25 MB

Languages: English

Fine-tuning supported: Yes

Supported use cases: text-to-image generation, image editing, and image variations.

Availability

Amazon Titan Image Generator, the new instant customization features, and watermark detection in the Amazon Bedrock console are now available in the AWS Regions US East (N. Virginia) and US West (Oregon). See the complete list of Regions for future updates. The new DetectGeneratedContent API in Amazon Bedrock is available in public preview in the same two Regions.

PartyRock has added the Amazon Titan Image Generator to its offerings

The Amazon Bedrock playground PartyRock now offers Titan Image Generator. PartyRock offers a credit card-free, no-code, AI-powered app development experience. Using PartyRock, you can design apps that produce photos in a matter of seconds by choosing from a variety of image production models available from Stability AI and Amazon.

Read more on Govindhtech.com


The Best AI and Machine Learning Consultancy Services

Harnessing the Power of AI and ML: Transforming Businesses with Cutting-Edge Solutions

In today's rapidly evolving digital landscape, businesses are constantly seeking innovative ways to stay ahead of the curve and drive growth. As technology continues to advance, the integration of Artificial Intelligence (AI) and Machine Learning (ML) has emerged as a game-changer, offering unprecedented opportunities for businesses to unlock valuable insights, streamline operations, and enhance decision-making processes. As a seasoned data scientist and consultant, I specialize in delivering custom AI/ML solutions tailored to meet the unique needs of businesses across various industries.

Click Here to get Services

Expertise That Drives Results

With a robust skill set encompassing a wide array of AI/ML capabilities, I offer a comprehensive suite of services designed to empower businesses with actionable insights and scalable solutions. My expertise spans the following domains:

Image Processing: Leveraging advanced algorithms and techniques, I enable businesses to extract meaningful insights from visual data, ranging from image classification and object detection to sentiment analysis.

Classification: By implementing sophisticated classification models, I help businesses categorize and organize vast amounts of data, facilitating more accurate decision-making and personalized experiences for users.

Sentiment Analysis: Harnessing the power of Natural Language Processing (NLP), I enable businesses to analyze and interpret customer sentiments, allowing them to gain valuable insights into consumer preferences, trends, and feedback.

Software Development: With a focus on building robust and scalable software solutions, I collaborate with businesses to develop custom applications and platforms that leverage AI/ML capabilities to drive efficiency and innovation.

Object Detection: Utilizing state-of-the-art techniques in computer vision, I empower businesses to detect and identify objects within images or video streams, enabling applications in security, retail, healthcare, and more.

Recommendation Systems: By deploying advanced recommendation algorithms, I assist businesses in delivering personalized recommendations to users, enhancing user engagement and driving conversions.

Predictive Analysis: Leveraging predictive modeling techniques, I enable businesses to forecast future trends, anticipate customer behavior, and make data-driven decisions with confidence.


Technology Stack

To deliver best-in-class solutions, I leverage a powerful technology stack comprised of industry-leading frameworks, programming languages, APIs, and tools, including:

Programming Language: Python, renowned for its versatility and extensive libraries for AI/ML development.

Frameworks: From Scikit-learn and Keras to PyTorch and TensorFlow, I harness the full potential of these frameworks to develop robust ML models.

APIs: Leveraging APIs such as Microsoft Computer Vision AI, Amazon Rekognition, and Google Cloud Vision API, I integrate cutting-edge AI capabilities into business applications.

Tools: With tools like Jupyter Notebook, OpenCV, Amazon SageMaker, and CVAT, I streamline the development process and ensure seamless integration of AI/ML solutions.

Unlocking Business Potential Through AI/ML

In today's data-driven world, the ability to harness the power of AI and ML has become a critical differentiator for businesses seeking to thrive in an increasingly competitive landscape. By partnering with a seasoned data scientist and consultant, businesses can unlock new opportunities, drive operational efficiency, and gain a competitive edge. Whether it's optimizing processes, enhancing customer experiences, or gaining deeper insights from data, AI/ML solutions offer limitless possibilities for businesses across industries.

Let's Collaborate

Are you ready to harness the transformative power of AI and ML to propel your business forward? As your trusted partner, I am committed to delivering tailored solutions that align with your unique objectives and drive tangible results. Let's embark on this journey together and unlock the full potential of your business with cutting-edge AI/ML solutions. Get in touch today to explore how we can leverage technology to shape the future of your business.


📝 Guest Post: Evaluating LLM Applications*

New Post has been published on https://thedigitalinsider.com/guest-post-evaluating-llm-applications/


To successfully build an AI application, evaluating the performance of large language models (LLMs) is crucial. Given the inherent novelty and complexities surrounding LLMs, this poses a unique challenge for most companies.

Peter Hayes, who holds a PhD in Machine Learning from University College London, is one of the world’s leading experts on this topic. As CTO of Humanloop, Peter has assisted companies such as Duolingo, Gusto, and Vanta in solving LLM evaluation challenges for AI applications with millions of daily users.

Today, Peter shares his insights on LLM evaluations. In this 5-minute read, you will learn how to apply traditional software evaluation techniques to AI, understand the different types of evaluations and when to use them, and see what the lifecycle of evaluating LLM applications looks like at the frontier of Generative AI.

This post is a shortened version of Peter’s original blog, titled ‘Evaluating LLM Applications‘.

Take lessons from traditional software

A large proportion of teams now building great products with LLMs aren’t experienced ML practitioners. Conveniently, many of the goals and best practices from software development are still broadly relevant when thinking about LLM evals.

Automation and continuous integration is still the goal

Competent teams will traditionally set up robust test suites that are run automatically against every system change before deploying to production. This is a key aspect of continuous integration (CI) and is done to protect against regressions and ensure the system is working as the engineers expect. Test suites are generally made up of 3 canonical types of tests: unit, integration and end-to-end.

Typical makeup of a test suite in software development CI. Unit tests tend to be the hardest to emulate for LLMs.

Unit – very numerous, target a specific atom of code and are fast to run.

Integration – less numerous, cover multiple chunks of code, are slower to run than unit tests and may require mocking external services.

End-to-end – emulate the experience of an end UI user or API caller; they are slow to run and oftentimes need to interact with a live version of the system.

The most effective mix of test types for a given system often sparks debate. Yet the role of automated testing as part of the deployment lifecycle, and the various trade-offs between complexity and speed, remain valuable considerations when working with LLMs.
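As a rough illustration of what a unit-style test for an LLM block might look like, the sketch below swaps the real model for a deterministic stub so the test stays fast and repeatable. Everything here (`build_prompt`, `answer_question`, `fake_llm`) is a hypothetical example, not an API from any particular library.

```python
def build_prompt(question):
    """Hypothetical prompt template for the LLM block under test."""
    return f"Answer in one short sentence.\nQuestion: {question}\nAnswer:"


def answer_question(question, llm):
    """The LLM block: format the prompt, call the model, tidy the output."""
    raw = llm(build_prompt(question))
    return raw.strip()


def fake_llm(prompt):
    """Deterministic stand-in for a real model call."""
    assert "Question:" in prompt  # the block must pass a well-formed prompt
    return "  Paris is the capital of France.\n"


def test_answer_is_clean_and_on_topic():
    answer = answer_question("What is the capital of France?", fake_llm)
    assert answer == answer.strip()  # no stray whitespace reaches the user
    assert "Paris" in answer         # a simple content check


test_answer_is_clean_and_on_topic()
print("unit-style test passed")
```

A stub like this only checks the code around the model. Checking the model's own behavior is where the evaluator types described in the rest of the post come in.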

Types of evaluation can vary significantly

When evaluating one or more components of an LLM block, different types of evaluations are appropriate depending on your goals, the complexity of the task and available resources. Having good coverage over the components that are likely to have an impact over the overall quality of the system is important.

These different types can be roughly characterized by the return type and the source of, as well as the criteria for, the judgment required.

Judgment return types are best kept simple

The most common judgment return types are familiar from traditional data science and machine learning frameworks. From simple to more complex:

Binary – involves a yes/no, true/false, or pass/fail judgment based on some criteria.

Categorical – involves more than two categories; for example, adding an abstain or maybe option to a binary judgment.

Ranking – the relative quality of outputs from different samples or variations of the model is ranked from best to worst based on some criteria. Preference-based judgments are often used in evaluating the quality of a ranking.

Numerical – involves a score, a percentage, or any other kind of numeric rating.

Text – a simple comment or a more detailed critique. Often used when a more nuanced or detailed evaluation of the model’s output is required.

Multi-task – combines multiple types of judgment simultaneously. For example, a model’s output could be evaluated using both a binary rating and a free-form text explanation.

Simple individual judgments can be easily aggregated across a dataset of multiple examples using well-known metrics. For example, for classification problems, precision, recall and F1 are typical choices. For rankings, there are metrics like NDCG, Elo ratings and Kendall’s Tau. For numerical judgments there are variations of the BLEU score.
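For instance, binary judgments can be aggregated into precision, recall and F1 with a few lines of code. This is a minimal sketch using made-up judgments, where `predicted` holds an evaluator's pass/fail calls and `expected` holds the gold-standard labels.

```python
def precision_recall_f1(predicted, expected):
    """Aggregate paired binary judgments into precision, recall and F1."""
    if len(predicted) != len(expected):
        raise ValueError("judgment lists must have the same length")
    tp = sum(1 for p, e in zip(predicted, expected) if p and e)
    fp = sum(1 for p, e in zip(predicted, expected) if p and not e)
    fn = sum(1 for p, e in zip(predicted, expected) if not p and e)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical judgments over five examples.
predicted = [True, True, False, True, False]
expected = [True, False, False, True, True]

precision, recall, f1 = precision_recall_f1(predicted, expected)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```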

I find that in practice binary and categorical types generally cover the majority of use cases. They have the added benefit of being the most straightforward to source reliably. The more complex the judgment type, the more potential for ambiguity there is and the harder it becomes to make inferences.

Model sourced judgments are increasingly promising

Sourcing judgments is an area where there are new and evolving patterns around foundation models like LLMs. At Humanloop, we’ve standardised around the following canonical sources:

Heuristic/Code – using simple, deterministic, rule-based judgments against attributes like cost, token usage, latency, regex rules on the output, etc. These are generally fast and cheap to run at scale.

Model (or ‘AI’) – using other foundation models to provide judgments on the output of the component. This allows for more qualitative and nuanced judgments for a fraction of the cost of human judgments.

Human – getting gold standard judgments from either end users of your application, or internal domain experts. This can be the most expensive and slowest option, but also the most reliable.

Typical makeup of different sources of evaluation judgments. AI evaluators are a good sweet spot for scaling up your evaluation process, while still providing Human-level performance.
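A heuristic/code evaluator can be as small as a handful of deterministic checks that each return a binary judgment. The sketch below is illustrative only: the specific rules (no email addresses in the output, a 50-word limit, a 2-second latency budget) are assumptions chosen for the example.

```python
import re

# Hypothetical rule: outputs must not contain anything that looks like an email address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def no_email_leak(output):
    """Pass if the output contains no email-like string."""
    return EMAIL_PATTERN.search(output) is None


def within_word_limit(output, max_words=50):
    """Pass if the output has at most max_words whitespace-separated words."""
    return len(output.split()) <= max_words


def within_latency_budget(latency_seconds, budget_seconds=2.0):
    """Pass if the call finished within the latency budget."""
    return latency_seconds <= budget_seconds


def run_heuristics(output, latency_seconds):
    """Run every check and return one binary judgment per rule."""
    return {
        "no_email_leak": no_email_leak(output),
        "within_word_limit": within_word_limit(output),
        "within_latency_budget": within_latency_budget(latency_seconds),
    }


report = run_heuristics("You can reach support at help@example.com.", latency_seconds=0.8)
print(report)
```

Because each rule is a plain function returning a boolean, the results can be aggregated across a dataset in the same way as any other binary judgment.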

Model judgments in particular are increasingly promising and an active research area. The paper Judging LLM-as-a-Judge demonstrates that an appropriately prompted GPT-4 model achieves over 80% agreement with human judgments when rating LLM responses to questions on a scale of 1-10; that’s equivalent to the levels of agreement between humans.
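One simple way to check a model evaluator against human judgments is to measure how often the two agree on the same examples. The sketch below assumes each source produces one categorical label per example; the labels are made up, and the paper's own protocol may differ in its details.

```python
def agreement_rate(judgments_a, judgments_b):
    """Fraction of examples on which two judgment sources give the same label."""
    if len(judgments_a) != len(judgments_b):
        raise ValueError("judgment lists must have the same length")
    if not judgments_a:
        raise ValueError("need at least one judgment")
    matches = sum(1 for a, b in zip(judgments_a, judgments_b) if a == b)
    return matches / len(judgments_a)


# Hypothetical labels: which of two responses ("A" or "B") was preferred, or a tie.
model_judgments = ["A", "B", "A", "tie", "B"]
human_judgments = ["A", "B", "B", "tie", "B"]

rate = agreement_rate(model_judgments, human_judgments)
print(f"agreement: {rate:.0%}")
```

Tracking this number over time gives an early signal when a change to the evaluator prompt makes the model judge drift away from what human reviewers would say.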

I believe teams should consider shifting more of their human judgment efforts up a level to focus on helping improve model evaluators. This will ultimately lead to a more scalable, repeatable and cost-effective evaluation process, as well as one where human expertise can be targeted at the most important high-value scenarios.

Different stages of evaluation are necessary

Different stages of the app development lifecycle will have different evaluation needs. I’ve found this lifecycle to naturally still consist of some sort of planning and scoping exercise, followed by cycles of development, deployment and monitoring.

These cycles are then repeated during the lifetime of the LLM app in order to intervene and improve performance. The stronger the teams, the more agile and continuous this process tends to be.

Development here includes both typical app development (orchestrating your LLM blocks in code, setting up your UIs, etc.) and more LLM-specific interventions and experimentation, including prompt engineering, context tweaking, tool integration updates and fine-tuning, to name a few. Both the choice and the quality of interventions to optimize your LLM performance are much improved if the right evaluation stages are in place, because they facilitate a more data-driven, systematic approach.

From my experience there are 3 complementary stages of evaluation that give the highest ROI in supporting rapid iteration cycles of the LLM block-related interventions:

Interactive – it’s useful to have an interactive playground-like editor environment that allows rapid experimentation with components of the model and provides immediate evaluator feedback. This usually works best on a relatively small number of scenarios. This allows teams (both technical and non-technical) to quickly explore the design space of the LLM app and get an informal sense of what works well.

Batch offline – benchmarking or regression testing the most promising variations over a larger curated set of scenarios to provide a more systematic evaluation. Ideally a range of different evaluators for different components of the app can contribute to this stage, some comparing against gold standard expected results for the task. This can fit naturally into existing CI processes.

Monitoring online – post-deployment, real user interactions can be evaluated continuously to monitor the performance of the model. This process can drive alerts, gather additional scenarios for offline evaluations and inform when to make further interventions. Staging deployments through internal environments, or beta testing with selected cohorts of users first, are usually super valuable.

Recommended stages for a robust evaluation process. Interactive, offline and online.
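The batch offline stage can be sketched as a small regression gate that compares a candidate variation against a baseline over the same curated scenarios. The scenario IDs, the pass/fail results and the `tolerance` parameter below are all hypothetical; in practice the results would come from whichever evaluators you run over the scenario set.

```python
def pass_rate(results):
    """Fraction of scenarios with a passing (True) judgment."""
    return sum(results.values()) / len(results)


def regression_check(baseline, candidate, tolerance=0.0):
    """Compare per-scenario pass/fail results for two variations of an app."""
    shared = sorted(set(baseline) & set(candidate))
    if not shared:
        raise ValueError("no scenarios in common")
    # Scenarios that passed before and fail now deserve a closer look.
    regressed = [s for s in shared if baseline[s] and not candidate[s]]
    base_rate = pass_rate({s: baseline[s] for s in shared})
    cand_rate = pass_rate({s: candidate[s] for s in shared})
    return {
        "baseline_pass_rate": base_rate,
        "candidate_pass_rate": cand_rate,
        "regressed": regressed,
        "ok": cand_rate >= base_rate - tolerance,
    }


# Hypothetical evaluator results keyed by scenario ID.
baseline = {"s1": True, "s2": True, "s3": False, "s4": True}
candidate = {"s1": True, "s2": False, "s3": True, "s4": True}

result = regression_check(baseline, candidate)
print(result)
```

In a CI job, a script like this could exit with a non-zero status when `ok` is false, and the `regressed` list points reviewers at the scenarios that got worse even when the overall pass rate held steady.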

It’s usually necessary to co-evolve to some degree the evaluation framework alongside the app development as more data becomes available and requirements are clarified. The ability to easily version control and share across stages and teams both the evaluators and the configuration of your app can significantly improve the efficiency of this process.

At Humanloop, we’ve developed a platform for enterprises to evaluate LLM applications at each step of the product development journey.

To read the full blog on Evaluating LLM Applications, or learn more about how we help enterprises reliably put LLMs in production, you can visit our website.

*This post was written by Peter Hayes, CTO of Humanloop. We thank Humanloop for their insights and ongoing support of TheSequence.


What are the different platforms for Artificial Intelligence (AI) development?

The field of AI development is burgeoning, presenting both exciting opportunities and complex choices. One crucial decision revolves around selecting the right platform to support your project. With a plethora of options available, each catering to diverse needs and skill levels, understanding the landscape becomes paramount. This guide aims to be your compass, delving into the various platforms for AI development, their strengths, weaknesses, and considerations for optimal selection.

Categorizing the AI Development Platform Landscape:

Before diving into specifics, let's categorize platforms based on their approach:

End-to-End Platforms: These platforms offer a comprehensive suite of tools and services encompassing the entire AI development lifecycle, from data preparation and model training to deployment and management. Examples include:

Google Cloud AI Platform: Offers pre-built models, tools for custom model development, and deployment infrastructure.

Microsoft Azure AI: Provides a wide range of services for different AI tasks, including computer vision, natural language processing, and machine learning.

Amazon SageMaker: A managed service for building, training, and deploying machine learning models.

Open-Source Frameworks and Libraries: These are flexible, community-driven tools often preferred by experienced developers for custom model development and research. Examples include:

TensorFlow: A popular open-source library for numerical computation and deep learning, used by Google for internal research.

PyTorch: Another popular Python library offering dynamic computational graphs and ease of use, favored by researchers and startups.

Scikit-learn: A machine learning library built on top of NumPy and SciPy, offering a wide range of algorithms for various tasks.

Specialized Platforms: These cater to specific domains or functionalities within AI development, such as computer vision, natural language processing, or robotic control. Examples include:

OpenAI Gym: A toolkit for developing and comparing reinforcement learning algorithms.

Keras: A high-level API built on top of TensorFlow, simplifying deep learning model development.

OpenCV: A library for computer vision tasks like image processing and object detection.

Low-Code/No-Code Platforms: These are designed for users with limited coding experience, offering pre-built components and visual interfaces for building simple AI applications. Examples include:

Microsoft Power Automate: A cloud-based platform for building automated workflows using low-code tools.

IBM Watson Studio: Provides a cloud-based environment for building, training, and deploying AI models without extensive coding.

Amazon Rekognition: A pre-trained service for image and video analysis, offering features like object detection and facial recognition.

Platform Deep Dive: Strengths, Weaknesses, and Considerations:

Now, let's delve deeper into each category, exploring their strengths, weaknesses, and considerations:

End-to-End Platforms:

Strengths:

Ease of use: Provide intuitive interfaces and pre-built components, reducing development time.

Scalability: Handle large datasets and complex models efficiently.

Security and compliance: Built-in security features and compliance certifications.

Weaknesses:

Vendor lock-in: Switching platforms can be difficult due to proprietary technologies.

Cost: Can be expensive for resource-intensive projects or small businesses.

Limited customization: May not offer the flexibility needed for highly customized models.

Considerations:

Choose a platform with services aligned with your project's specific needs (e.g., computer vision, NLP).

Evaluate pricing models and resource limitations based on project scope.

Ensure the platform complies with your industry's security and data privacy regulations.

Open-Source Frameworks and Libraries:

Strengths:

Flexible and customizable: Offer fine-grained control over every aspect of model development.

Free and open-source: Accessible for anyone, fostering community contribution and innovation.

Large community support: Extensive documentation, tutorials, and active online communities.

Weaknesses:

Steeper learning curve: Requires more coding and technical expertise.

Maintenance burden: Continuous updates and security patches need monitoring.

Not beginner-friendly: Can be overwhelming for individuals without programming experience.

Considerations:

Assess your team's technical expertise and comfort level with coding.

Evaluate the trade-off between customization and development time.

Choose libraries with active communities and well-maintained documentation.

Specialized Platforms:

Strengths:

Domain-specific expertise: Offer pre-built tools and data tailored to specific domains, saving development time.

High performance: Optimized for specific tasks, leading to faster and more accurate results.


Streamlining Your Business with Catalog Processing Services

Enhance your catalog's performance with Outsource Bigdata's top-notch catalog processing services. Our dedicated professionals optimize your catalog, providing services like data entry, image editing, categorization, and product descriptions. We ensure meticulous care in every detail, resulting in a polished catalog that captivates customers.

For more information, visit: https://outsourcebigdata.com/data-automation/data-processing-services/catalog-processing-services/

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built a range of AI & ML solutions, AI-driven web scraping solutions, AI data labeling, AI-Data-Hub, and self-service BI solutions. Since starting in 2012, we have delivered IT and digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and elsewhere.

-ISO 9001:2015 and ISO/IEC 27001:2013 certified

-Served 750+ customers

-11+ Years of industry experience

-98% client retention

-Great Place to Work® certified

-Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI driven web scraping & workflow automation platform

APISCRAPY is an AI-driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and either integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI augmented annotation & labeling solution

AI-Labeler is an AI-augmented data annotation platform that combines artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub for curated, pre-annotated, and pre-classified data, allowing enterprises to obtain high-quality data easily and efficiently and use it for training and developing AI models.

PRICESCRAPY: AI enabled real-time pricing solution

An AI and automation driven price solution that provides real time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI driven data API solution hub

APIKART is a data API hub that lets businesses and developers access large volumes of data from various sources through APIs and integrate those APIs into their own systems and applications.

Locations:

USA: 1-30235 14656

Canada: +1 4378 370 063

India: +91 810 527 1615

Australia: +61 402 576 615

Email: [email protected]


Navigating SEO Trends in 2024: A Guide for Small Business Owners

Hello, Small Business Owners!

As we step into 2024, the world of SEO (Search Engine Optimisation) continues to evolve at an exhilarating pace. Understanding these trends is crucial for your business’s online success. Here’s a relaxed, informative guide to help you stay ahead in the SEO game without getting overwhelmed by technicalities.

Embracing Sophisticated AI Connectivity

AI is not just a buzzword anymore; it’s become an integral part of SEO strategies. In 2023, we saw AI emerge as a key player in enhancing SEO and marketing tools. As we progress, expect AI to become more sophisticated, powering everything from content creation to data analysis. This means your small business can harness AI to create more engaging content and better understand your audience.

The Rise of Headless CMS for Omnichannel Marketing

Headless CMS (Content Management System) is set to redefine how content is delivered across multiple platforms. Unlike traditional CMS, headless CMS doesn’t have a front-end layer. This gives you the flexibility to display content across various channels - websites, mobile apps, IoT devices - seamlessly. In the age of TikTok and multi-channel marketing, this flexibility is invaluable for maintaining consistency and quality in your marketing efforts.

Mastering Feed Management

Feed management is becoming a crucial skill in SEO. Google’s evolving indexing strategies now focus on how different elements of your website are crawled. This includes using APIs and structured data to categorize content into dedicated channels. For small businesses, this means it’s more important than ever to organize and optimize your content feeds to ensure they align with Google’s indexing practices.
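For readers who want to see what "structured data" can look like in practice, here is a minimal sketch that builds a schema.org Article description as JSON-LD and wraps it for inclusion in a page. It is only an illustration of the idea of labelling content with a category; it does not describe what Google requires, and the headline, section name, URL, and date are made-up placeholders.

```python
import json


def article_jsonld(headline: str, section: str, url: str, date_published: str) -> str:
    """Build a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "articleSection": section,        # the category this content belongs to
        "url": url,
        "datePublished": date_published,  # ISO 8601 date
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld: str) -> str:
    """Wrap the JSON-LD so it can be placed in a page's HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Placeholder values for illustration only.
    markup = article_jsonld(
        headline="Spring Menu Launch",
        section="News",
        url="https://example.com/news/spring-menu",
        date_published="2024-03-01",
    )
    print(as_script_tag(markup))
```

Keeping the category in one field (here `articleSection`) makes it easier to review all of your content labels in one pass when you audit your feeds.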

Practical Tips for Small Business Owners

Leverage AI Wisely: Use AI for content creation and SEO analytics, but ensure it aligns with your brand voice and audience needs.

Explore Headless CMS: Investigate headless CMS options to deliver content more effectively across different platforms.

Optimize Content Feeds: Regularly review and optimize your content feeds for Google’s indexing criteria.

Conclusion

SEO in 2024 is all about leveraging advanced technologies like AI and headless CMS, along with strategic feed management. Remember, adapting to these changes doesn’t have to be daunting. At Sitges Marketing, we specialize in making SEO accessible and successful for small businesses like yours. Contact us to navigate these trends effectively and drive your business’s online growth.


The Power of Cameralyze: Transforming Your Apps with AI Models

In a world increasingly driven by artificial intelligence, the ability to seamlessly integrate AI models into your applications is a game-changer. Enter Plugger.ai, your gateway to a universe of ready-to-use AI models that can supercharge your projects. Whether you're a developer looking to enhance your app's capabilities or a business seeking innovative solutions, Plugger.ai has you covered.

The Plugger.ai Ecosystem

Discovering AI Models Made Simple

Cameralyze is more than just a platform; it's a hub for finding AI models tailored to your specific needs. With a vast collection of models, finding the right one has never been easier. Whether you're a novice or an expert, Plugger.ai simplifies AI access for everyone.


User-Friendly Interface for AI Execution

Running AI models can be daunting, but Cameralyze's user interface makes it a breeze. With its intuitive design, even those without extensive technical backgrounds can harness the power of AI effortlessly.


API Access for Developers and Businesses

For developers and businesses seeking programmatic AI access, Cameralyze provides a seamless API integration. This means you can effortlessly integrate hundreds of AI models into your applications and systems, opening up a world of possibilities.

Plugger AI empowers developers and businesses to effortlessly access a vast collection of AI models through simple API calls, offering a seamless and programmatic way to leverage the capabilities of hundreds of AI models. Unlocking endless possibilities, users can integrate these models into their applications and systems with ease, driving innovation and efficiency in their AI-powered solutions.

Democratizing AI

At Plugger.ai, our mission is clear: to make AI accessible to everyone. We believe that every individual, regardless of their background, should have the power to build and innovate with AI. With Plugger.ai, this mission becomes a reality.

Affordable Access to AI Models

Get the Power of AI

Simple interface, a few lines of code!

Get started now!

1,000 executions start at $3.50

Free hands-on onboarding & support

Hundreds of models are waiting for you

AI Models for Diverse Use Cases

Plugger.ai offers a comprehensive collection of AI models, pre-categorized to cater to various industries and applications. Here's a glimpse of what you can find:

AI Art Generator

Unleash the boundless synergy of creativity and technology with Masterpiece AI, the premier AI Art Generator that transforms text into mesmerizing visual masterpieces.

AI Cartoon Generator

Step into a world of limitless imagination with the AI Cartoon Generator, where your words become enchanting cartoon masterpieces.

AI Comic Generator

Unleash your storytelling prowess with the AI Comic Generator, an innovative AI-powered tool that magically transforms text into captivating comic strips, bridging the gap between words and visuals like never before.

AI Filters

Revolutionize Your Digital Content with AI Filter: Empower Your Creativity and Capture Audiences with Stunning Visual Transformations!

AI Music Generator

Unleash your creativity with AI Music Generator - a revolutionary tool that uses advanced algorithms to generate original music based on your preferences, available online and often for free.

AI Video Generator

Create stunning, AI-generated videos from text with the revolutionary power of AI video generators.

Beyond Creativity: Practical Applications

Plugger.ai extends its capabilities beyond artistic expression. It offers practical solutions that have real-world applications:

Age & Gender Detection

From enhancing security to targeting marketing campaigns, age and gender detection models have diverse applications. However, concerns about accuracy and ethical use persist.

Object Detection

Object detection models automate tasks and improve safety in various industries, including security, autonomous vehicles, medical imaging, and retail/e-commerce.

Photo Enhancement

AI-powered photo enhancers use machine learning to turn low-quality images into high-quality ones. This technology has applications ranging from product photography to social media marketing.

Get Started with Cameralyze

Cameralyze is more than just a platform; it's a gateway to AI innovation. Whether you're an individual with a creative vision or a business seeking efficiency gains, Plugger.ai has the tools you need. With a simple interface, affordable pricing, and a vast library of AI models, your journey into the world of AI starts here.

Conclusion

As AI continues to reshape industries and drive innovation, Cameralyze stands as your trusted partner in harnessing this transformative power. Whether you're unleashing your creativity or implementing practical solutions, Plugger.ai is where your AI journey begins. Start plugging AI into your apps today and unlock a world of possibilities.


Facial Recognition Market Size & Share, Growth Trends 2023

The global facial recognition market is projected to grow by USD 7,634.09 million between 2022 and 2027, at a compound annual growth rate (CAGR) of 18.11% over the forecast period. Several factors contribute to this expansion, including the rising prevalence of identity threats, the growing popularity of facial recognition technology, and the adoption of advanced 3D facial recognition systems.
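To make the relationship between the two quoted figures concrete, the sketch below works out what starting market size they would imply. This is arithmetic only: the report summary does not state a base-year size, and treating 2022 to 2027 as five compounding years is an assumption made here for illustration.

```python
def implied_base(increment: float, cagr: float, years: int) -> float:
    """Starting market size implied by an absolute increase and a CAGR.

    If size grows from B to B * (1 + cagr) ** years, the increase is
    B * ((1 + cagr) ** years - 1), so B = increment / ((1 + cagr) ** years - 1).
    """
    return increment / ((1 + cagr) ** years - 1)


if __name__ == "__main__":
    increment = 7634.09  # USD million, as quoted in the summary
    cagr = 0.1811        # 18.11%, as quoted in the summary
    years = 5            # ASSUMPTION: 2022 to 2027 treated as five compounding years

    base = implied_base(increment, cagr, years)
    final = base * (1 + cagr) ** years
    print(f"Implied 2022 size: USD {base:,.2f} million")
    print(f"Implied 2027 size: USD {final:,.2f} million")
```

A different choice of base year or compounding period would change the implied sizes, so the output should be read as a consistency check on the headline numbers rather than as a figure from the report.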

Facial Recognition Market shows accelerated growth during the forecast period


Introduction

Facial recognition technology has emerged as a transformative force across various industries, reshaping security, authentication, and user experiences. In this market analysis, we will dissect the dynamics, trends, challenges, and growth prospects of the facial recognition market.

Market Overview

The facial recognition market is experiencing robust growth, driven by an increasing demand for secure and efficient identification solutions. The market size is expanding, and several key factors contribute to this growth:

Security Imperatives: The rising need for enhanced security in both public and private sectors has fueled the adoption of facial recognition for access control, surveillance, and authentication.

Biometric Advancements: Facial recognition technology has advanced significantly, becoming more accurate and reliable, largely due to improvements in artificial intelligence (AI) algorithms.

User Convenience: Facial recognition offers a convenient and touchless means of authentication, making it a preferred choice for smartphones, payment systems, and building access.

Regulatory Environment: Governments and regulatory bodies are increasingly addressing privacy concerns and establishing guidelines for the ethical use of facial recognition technology.

Market Segmentation

To understand the facial recognition market better, we can categorize it into various segments:

Technology Type: Facial recognition can be categorized into 2D facial recognition, 3D facial recognition, and facial analytics, each with its unique applications and capabilities.

Application: Facial recognition finds applications in security and surveillance, mobile device authentication, banking and finance, healthcare, retail, and more.

End-users: The market serves various end-users, including government agencies, enterprises, healthcare institutions, and consumers.

Geographical Regions: Market dynamics can vary by region, with Asia-Pacific, North America, Europe, and other regions experiencing different adoption rates.

Key Market Players

Several companies have positioned themselves as leaders in the facial recognition market:

NEC Corporation: Known for its accurate and efficient facial recognition solutions, NEC serves various industries, including law enforcement and public safety.

Face++ (Megvii): This Chinese company has made significant strides in AI-driven facial recognition, offering solutions for industries such as finance and retail.

Microsoft: Microsoft's Azure Face API is a prominent offering, providing facial recognition capabilities with applications in identity verification and emotion detection.

Amazon: Amazon's Rekognition service caters to both government and commercial clients, offering a range of facial recognition features.

Market Trends

Understanding current market trends is crucial for businesses operating in the facial recognition industry:

Hybrid Biometrics: Combining facial recognition with other biometric methods like fingerprint or iris scanning enhances security.

Privacy Concerns: Heightened awareness of privacy issues is driving the need for transparent and consent-based facial recognition systems.

Edge Computing: Edge-based facial recognition systems are becoming popular, as they offer faster response times and improved privacy.

AI Integration: Ongoing AI advancements are making facial recognition more accurate, capable of recognizing diverse demographics and even masked faces.

Future Prospects

The facial recognition market is poised for further expansion, with several future prospects:

Wider Adoption: Facial recognition will continue to gain traction in diverse sectors, from healthcare to transportation, as more applications emerge.

Regulatory Frameworks: Governments and industry bodies will establish clearer regulations and ethical guidelines, shaping the responsible use of facial recognition.

AI-Driven Innovation: Ongoing AI research will drive innovations in facial recognition, improving accuracy and performance.

Multimodal Biometrics: Facial recognition will integrate seamlessly with other biometric modalities, offering even more secure authentication options.

In conclusion, the facial recognition market is evolving rapidly, driven by advancements in technology and the pressing need for enhanced security and convenience. As it continues to expand, careful consideration of privacy and ethics will play a pivotal role in shaping the market's future landscape.


About Technavio

Technavio is a leading global technology research and advisory company. Their research and analysis focus on emerging market trends and provide actionable insights to help businesses identify market opportunities and develop effective strategies to optimize their market positions. With over 500 specialized analysts, Technavio's report library consists of more than 17,000 reports and counting, covering 800 technologies, spanning 50 countries. Their client base consists of enterprises of all sizes, including more than 100 Fortune 500 companies. This growing client base relies on Technavio's comprehensive coverage, extensive research, and actionable market insights to identify opportunities in existing and potential markets and assess their competitive positions within changing market scenarios.

Contacts

Technavio Research

Jesse Maida

Media & Marketing Executive

US: +1 844 364 1100

UK: +44 203 893 3200

Email: [email protected]

Website: www.technavio.com


AI App Development: Navigating the Financial Landscape

What is AI App Development?

In today's technology-driven world, AI app development has become a pivotal aspect of the digital landscape. But what exactly is AI app development? At its core, it involves creating applications that leverage artificial intelligence and machine learning to perform tasks that typically require human intelligence. These tasks can range from speech recognition and natural language processing to image recognition and predictive analytics.

The Importance of Understanding Costs

Before delving into the intricacies of AI app development, it's crucial to understand why comprehending the associated costs is essential. Developing AI-powered applications can be a substantial financial commitment, and without a clear understanding of the cost factors involved, it's easy for a project to spiral out of control budget-wise. This guide aims to shed light on the various aspects that influence AI app development costs, helping you make informed decisions and allocate resources efficiently.

II. Factors Influencing AI App Development Costs

A. Complexity of the App

The complexity of an AI app plays a pivotal role in determining its development costs. AI apps can generally be categorized into three complexity levels:

Simple AI Apps:

These are applications with basic AI functionalities, such as chatbots with predefined responses. Development costs for simple AI apps are relatively low.

Intermediate AI Apps:

Intermediate AI apps involve more advanced features, like recommendation engines or sentiment analysis. Costs increase as the complexity level rises.

Complex AI Apps:

These applications encompass cutting-edge AI technologies, such as natural language understanding or deep learning. Building complex AI apps can be resource-intensive and costly.

B. Data Collection and Preparation

AI's ability to learn and make decisions relies heavily on data. Data collection and preparation involve several crucial steps:

Data Sourcing:

Acquiring relevant and high-quality data sources can be both time-consuming and expensive.

Data Cleaning:

Raw data often requires extensive cleaning and preprocessing to be useful for AI models.

Data Labeling:

For supervised learning, data labeling by human annotators is necessary, which adds to the overall cost.

C. Algorithm Selection

Selecting the right algorithms for your AI app is a critical decision:

Pre-built Algorithms:

Using pre-built algorithms can save development time and reduce costs, but they may lack customization.

Custom Algorithms:

Developing custom algorithms provides more control and tailored solutions but often comes with a higher price tag.

D. Integration with Third-party Services

Integrating third-party services can enhance your AI app's capabilities:

API Integration:

Utilizing APIs from platforms like Google or Amazon can expedite development but may come with subscription fees.

External Data Sources:

Accessing external data sources for real-time information may add data acquisition and usage costs.

E. Platform and Device Compatibility

Consider the target platforms and devices for your AI app:

iOS vs. Android:

Developing for both platforms can increase costs, but it broadens your app's reach.

Web vs. Mobile:

Developing web and mobile versions may require additional resources but caters to different user preferences.

III. Cost Components Breakdown

A. Development Team

Understanding the roles and responsibilities within your development team is crucial:

Roles and Responsibilities:

Costs depend on the expertise and number of team members required.

Hiring vs. Outsourcing:

Choosing between in-house development and outsourcing affects budgeting significantly. Consider outsourcing to the best app development company, based in Hyderabad.

B. Technology Stack

The technology stack you choose impacts both development time and costs:

Programming Languages:

Different languages come with varying development speeds and resource availability.

Frameworks and Libraries:

Utilizing established frameworks can streamline development but may have licensing costs.

C. Development Timeframes

Breaking down your project into phases and estimating timeframes is essential:

Project Phases:

Sequencing development phases helps manage costs and resources effectively.

Time Estimates:

Accurate time estimates ensure better cost control.

D. Infrastructure Costs

Consider the infrastructure required for your AI app:

Cloud Services:

Hosting on cloud platforms like AWS or Azure offers scalability but involves ongoing costs.

Hardware Requirements:

For on-premises solutions, hardware procurement and maintenance add to expenses.

IV. Hidden Costs to Consider

A. Maintenance and Updates

Maintaining an AI app is an ongoing commitment:

Regular Maintenance:

Keeping your app up-to-date and bug-free is essential but adds to operational expenses.

Feature Enhancements:

Adding new features and improvements is an ongoing cost consideration.

B. Data Security and Compliance

AI apps often deal with sensitive data:

GDPR and Privacy Regulations:

Compliance with data protection regulations is mandatory and may require investments in security measures.

Security Measures:

Implementing robust security measures to protect user data has associated costs.

C. Scalability

Anticipating growth and scalability is vital:

Preparing for Increased User Base:

Scaling your infrastructure to accommodate more users can be expensive.

Server Costs:

Expanding server capacity or transitioning to more powerful hardware incurs costs.

V. Cost-saving Strategies

A. Open-source Tools and Libraries

Leveraging open-source tools and libraries can significantly reduce development costs.

B. MVP (Minimum Viable Product) Approach

Developing an MVP first allows you to test your app's viability with minimal investment.

C. Agile Development Methodology

Adopting an agile approach enables flexibility and cost control throughout the development process.

D. Continuous Monitoring and Optimization

Regularly monitoring and optimizing your AI app can lead to long-term cost savings.

VI. Real-world Examples

A. Case Study 1: AI Chatbot Development

Exploring a real-world case study of AI chatbot development and its associated costs.

B. Case Study 2: Image Recognition App

An in-depth look at the development costs and considerations for an image recognition app.


Meta Llama 3 in Amazon Bedrock: New Tools for Developers

AWS is pleased to announce that Meta’s Llama 3 models are now generally available on Amazon Bedrock. You can create, test, and responsibly scale your generative artificial intelligence (AI) applications with Meta Llama 3. The latest Llama 3 models offer superior reasoning, code generation, and instruction following, making them suitable for a wide variety of use cases.

Get to know Meta Llama 3

Llama 3 8B

For edge devices, quicker training times, and constrained computational power and resources, Llama 3 8B is perfect. The model performs exceptionally well in sentiment analysis, language translation, text classification, and summarization.

Llama 3 70B

Llama 3 70B is perfect for research development, enterprise applications, language understanding, conversational AI, and content production. The model performs exceptionally well in language modelling, dialogue systems, text categorization and nuance, sentiment analysis and nuance reasoning, text summarization and accuracy, code generation, and following directions.

Advantages

More than a million annotations by humans

Llama Chat, the refined model, makes use of more than a million human annotations as well as publicly accessible instruction datasets.

Pre-trained on trillions of tokens

To improve their understanding of linguistic nuances, Llama models are trained on trillions of tokens from publicly available online data sources.

More than a thousand red-teaming hours

More than 1,000 hours of red-teaming and annotation work went into the refined model to guarantee model performance while maintaining safety.

Absence of infrastructure management

Amazon Bedrock is the first public cloud service to provide a fully managed Llama API. Organizations of all sizes can use Llama models in Amazon Bedrock without having to manage the supporting infrastructure.

Become acquainted with Llama

The first publicly available cloud service to provide a fully managed API for Llama, Meta’s next-generation large language model (LLM), is Amazon Bedrock. All sizes of organisations can now access Llama models in Amazon Bedrock without having to worry about maintaining the supporting infrastructure. This allows you to concentrate on developing your AI applications, which is what you do best. The collaboration between Meta and Amazon is an example of group innovation in generative AI. Amazon and Meta are collaborating to expand the realm of possibilities.

Use cases

Meta’s Llama models are accessible large language models (LLMs) made for developers, researchers, and companies to create, test, and responsibly scale generative AI ideas. Llama is a fundamental component of the framework that fosters innovation in the international community.

Versions of the models

Llama 3 8B

Perfect for edge devices, quicker training times, and constrained computational power and resources.

Maximum tokens: 8,000

Languages: English

Sentiment analysis, text classification, text summarization, and language translation are supported use cases.

Llama 3 70B

Perfect for research development, enterprise applications, language understanding, conversational AI, and content production.

Maximum tokens: 8,000

Languages: English

Use cases that are supported include language modelling, dialogue systems, text categorization and nuance, text summarization and accuracy, sentiment analysis and nuance reasoning, and following directions.

Llama 2 13B

Fine-tuned model in the 13B parameter size. Ideal for smaller-scale tasks like sentiment analysis, language translation, and text classification.

Maximum tokens: 4,000

Languages: English

Supported use cases: Chat with an assistant

Llama 2 70B

Fine-tuned model in the 70B parameter size. Ideal for more complex tasks like dialogue systems, text generation, and language modelling.

Maximum tokens: 4,000

Languages: English

Supported use cases: Chat with an assistant

The Llama 3 model family is a group of large language models (LLMs) in 8B and 70B parameter sizes that have been pre-trained and instruction-tuned, according to Meta’s Llama 3 announcement. With four times more code and a training dataset seven times larger than that used for Llama 2 models, these models have been trained on over 15 trillion tokens of data, supporting an 8K context length that doubles Llama 2’s capacity.

Amazon Bedrock now offers two additional Llama 3 variants, expanding the available model selection. With these models, you can quickly test and assess additional top foundation models (FMs) according to your use case:

For edge devices and systems with constrained computational capacity, Llama 3 8B is perfect. The model performs exceptionally well in sentiment analysis, language translation, text classification, and summarization.

Llama 3 70B is perfect for research development, enterprise applications, language understanding, conversational AI, and content production. The model performs exceptionally well in language modelling, dialogue systems, text categorization and nuance, sentiment analysis and nuance reasoning, text summarization and accuracy, code generation, and following directions.

At the moment, Meta is also training more Llama 3 models with over 400B parameters. These 400B models will handle several languages, be multimodal, and have a considerably larger context window, among other additional features. These models will be perfect for research and development (R&D), language understanding, conversational AI, content production, and enterprise applications when they are available.

Llama 3 models in action

To get started with Meta models, select Model access from the bottom left pane of the Amazon Bedrock console. Request access individually for Llama 3 8B Instruct or Llama 3 70B Instruct to have access to the most recent Llama 3 models from Meta.

To try the Meta Llama 3 models, select Text or Chat under Playgrounds in the left menu pane of the Amazon Bedrock console. Next, click Select model, choose Meta as the category, and pick Llama 3 8B Instruct or Llama 3 70B Instruct as the model.

You can also access the models programmatically: choosing View API request shows code examples for the AWS Command Line Interface (AWS CLI) and the AWS SDKs. Use model IDs such as meta.llama3-8b-instruct-v1 and meta.llama3-70b-instruct-v1.

You can create apps in a variety of programming languages by utilizing code samples for Amazon Bedrock with AWS SDKs.
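As a rough illustration of what such an SDK call can look like, here is a minimal Python sketch using boto3. It assumes the request and response field names that Bedrock documents for Meta Llama models (`prompt`, `max_gen_len`, `temperature`, `top_p`, and `generation`), uses the model ID quoted above, and picks `us-east-1` and the parameter defaults arbitrarily; treat all of these as assumptions to verify against the View API request output in your own console.

```python
import json

# Model ID as quoted in the text above. ASSUMPTION: the ID shown in your
# console may carry a version suffix; copy the exact value from View API request.
MODEL_ID = "meta.llama3-8b-instruct-v1"


def build_request_body(prompt: str, max_gen_len: int = 256,
                       temperature: float = 0.5, top_p: float = 0.9) -> str:
    """Serialize the inference parameters for a Meta Llama request as JSON."""
    return json.dumps({
        "prompt": prompt,
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })


def invoke_llama(prompt: str, model_id: str = MODEL_ID,
                 region: str = "us-east-1") -> str:
    """Send one prompt to Amazon Bedrock and return the generated text."""
    import boto3  # imported lazily so build_request_body works without the SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(modelId=model_id, body=build_request_body(prompt))
    payload = json.loads(response["body"].read())
    return payload["generation"]


if __name__ == "__main__":
    # Prints the JSON body only; calling invoke_llama needs AWS credentials
    # and model access granted in the Bedrock console.
    print(build_request_body("Summarize the benefits of managed model hosting."))
```

Separating the body builder from the network call keeps the request shape easy to inspect and test without an AWS account.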

These Llama 3 models can be applied to a range of applications, including sentiment analysis, language translation, and question answering.

Llama 3 instruct models, which are optimized for dialogue use cases, are another option. The input to the instruct model endpoints is the previous history between the user and the chat assistant. As a result, you can ask questions that relate to the conversation so far and supply system configurations, such as personas, that define the chat assistant’s behaviour.
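The idea of passing history plus a system configuration can be sketched as a prompt builder. The example below assumes the special-token chat template that Meta publishes for Llama 3 instruct models; the function names and the sample persona are hypothetical, and the template should be checked against Meta's model card before use.

```python
from typing import List, Tuple

BEGIN = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def _turn(role: str, text: str) -> str:
    """Format one message with Llama 3 header tokens."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n{text}{EOT}"


def build_chat_prompt(system: str, history: List[Tuple[str, str]], question: str) -> str:
    """Combine a system persona, prior (user, assistant) turns, and a new question."""
    parts = [BEGIN, _turn("system", system)]
    for user_msg, assistant_msg in history:
        parts.append(_turn("user", user_msg))
        parts.append(_turn("assistant", assistant_msg))
    parts.append(_turn("user", question))
    # An open assistant header asks the model to write the next reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_chat_prompt(
        system="You are a concise travel assistant.",  # hypothetical persona
        history=[("Where should I go in spring?", "Kyoto is lovely in April.")],
        question="How many days should I plan for it?",
    )
    print(prompt)
```

Because the earlier turns are included, the final question ("it") can be resolved against the conversation so far, which is the behaviour described above.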

Now available

Meta Llama 3 models are currently available in Amazon Bedrock in the US East (N. Virginia) and US West (Oregon) regions. See the complete list of regions for future updates. Visit the Llama in Amazon Bedrock product page and pricing page to find out more.

Meta Llama 3 in Vertex AI Model Garden

Google Cloud has announced that Meta Llama 3 is now available in Vertex AI Model Garden. Like its predecessors, Llama 3 is released under a free license that permits many commercial and research uses. Llama 3 comes in two sizes, 8B and 70B, and each is offered as both a pre-trained and an instruction-tuned model.

Read more on govindhtech.com

How to Create AI Smart News: The Ultimate ChatGPT-3-Based News Portal

In today's fast-paced world, where information travels at the speed of light, staying informed has become both a necessity and a challenge. As technological advancements continue to reshape our lives, the realm of news delivery is undergoing a profound transformation. Enter AI Smart News, a revolutionary concept that combines the power of artificial intelligence, particularly ChatGPT-3, with the demand for accurate, personalized, and real-time news delivery. In this article, we will delve into the steps involved in creating an AI Smart News portal, exploring how the fusion of AI and journalism can reshape the way we consume news.

Understanding the Concept

AI Smart News is not just another news aggregator; it's a personalized news portal that tailors news content based on individual preferences. The foundational technology driving this concept is ChatGPT-3, developed by OpenAI. ChatGPT-3 is a state-of-the-art language model that excels in understanding and generating human-like text. It's the core engine that powers the interactive conversations, personalized summaries, and fact-checking capabilities of AI Smart News.

Data Collection and Curation

The first step in creating AI Smart News is collecting a diverse range of news articles and stories from various sources. These sources could include reputable news agencies, independent journalists, and verified social media profiles. The collected data forms the corpus that ChatGPT-3 uses to learn and generate relevant news content.

It's important to ensure that the data collection process is unbiased and covers a wide spectrum of topics, ensuring that AI can provide a well-rounded news experience. Additionally, human editors can play a role in curating the initial data and selecting stories that adhere to journalistic standards and ethics.

Training ChatGPT-3

The magic of AI Smart News begins with training ChatGPT-3. This involves providing the language model with the collected news articles to learn the nuances of language, context, and information dissemination. The training process enables ChatGPT-3 to understand the structure of news articles, identify key information, and respond coherently to user queries.

Fine-tuning the model is crucial to align it with the goals of AI Smart News. It's during this phase that the model is trained to prioritize accuracy, filter out misinformation, and generate summaries that capture the essence of each story. Collaborating with linguists and journalists during this phase can enhance the model's journalistic integrity.

Building the AI Smart News Portal

Creating the actual AI Smart News portal involves the integration of various components:

a. Personalized Newsfeed

The heart of AI Smart News is the personalized newsfeed. By analyzing user preferences and browsing history, the AI tailors the newsfeed to each individual. This requires developing algorithms that can categorize news stories based on topics, relevance, and user interests.
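
A minimal sketch of the categorize-then-rank idea follows. It swaps in plain keyword counting for whatever model a real portal would use, and the topic vocabulary, function names, and interest weights are all hypothetical.

```python
from collections import Counter

# Hypothetical topic vocabulary; a real portal would learn or curate this.
TOPIC_KEYWORDS = {
    "technology": {"ai", "software", "chip", "startup"},
    "sports": {"match", "league", "goal", "tournament"},
    "finance": {"market", "stocks", "inflation", "bank"},
}


def categorize(text):
    """Return the topic whose keywords appear most often, or "general"."""
    words = Counter(w.strip(".,!?:;\"'").lower() for w in text.split())
    scores = {t: sum(words[k] for k in kws) for t, kws in TOPIC_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "general"


def rank_for_user(stories, interest_weights):
    """Order stories so that topics the user reads most come first."""
    return sorted(
        stories,
        key=lambda s: interest_weights.get(categorize(s), 0.0),
        reverse=True,
    )
```

The interest weights stand in for a profile derived from browsing history, for instance the share of a user's recent reads that fell under each topic.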

b. Real-Time Updates

To deliver real-time news updates, the portal needs to be constantly connected to news sources. APIs and web scraping technologies can be used to pull in the latest stories from trusted sources, ensuring users receive timely updates on breaking news and events.
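
One simple way to pull in updates is to poll RSS feeds and keep only stories that have not been seen before. The sketch below assumes plain RSS 2.0 without XML namespaces. The function names are hypothetical, and fetching the feed over the network is left out.

```python
import xml.etree.ElementTree as ET


def parse_rss_items(xml_text):
    """Extract title/link pairs from an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    return [
        {
            "title": item.findtext("title", default="").strip(),
            "link": item.findtext("link", default="").strip(),
        }
        for item in root.iter("item")
    ]


def new_stories(items, seen_links):
    """Return items whose link is not in seen_links, then record those links."""
    fresh = [it for it in items if it["link"] not in seen_links]
    seen_links.update(it["link"] for it in fresh)
    return fresh
```

Calling new_stories on every poll with the same seen_links set means each story is surfaced once, however often the source repeats it in its feed.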

c. Fact-checking and Accuracy

Implementing fact-checking mechanisms is critical to combat the spread of misinformation. ChatGPT-3 can be trained to verify the accuracy of news stories by cross-referencing information with trusted databases and sources. AI-powered fact-checking not only ensures credibility but also fosters a culture of informed news consumption.

d. Interactive Conversations

The interactive conversational aspect of AI Smart News enables users to engage with the AI to clarify details, ask questions, and explore various viewpoints. This requires designing an intuitive user interface that seamlessly integrates AI-generated responses into the news consumption experience.

e. Multilingual Support

Global news consumption demands multilingual support. AI Smart News should be capable of translating news articles into multiple languages, making information accessible to a wider audience.

f. Customizable Alerts

Enabling users to set up alerts for specific topics or keywords ensures they don't miss out on relevant news updates. Implementing push notifications and email alerts can enhance user engagement.
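
Keyword alerts can be sketched as a whole-word match between each incoming headline and each user's subscriptions. The function name and the shape of the subscriptions mapping are assumptions for this example, and delivery by push notification or email is out of scope.

```python
import re


def matching_alerts(headline, subscriptions):
    """Return the users whose keywords appear as whole words in the headline.

    subscriptions maps a user id to a list of keywords or phrases.
    """
    hits = []
    for user, keywords in subscriptions.items():
        for kw in keywords:
            pattern = r"\b" + re.escape(kw) + r"\b"
            if re.search(pattern, headline, flags=re.IGNORECASE):
                hits.append(user)
                break  # one match is enough to alert this user
    return hits
```

Matching on word boundaries avoids false alerts such as the keyword "AI" firing on the word "training".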

g. Editorial Oversight

While AI plays a significant role in generating news content, human editors are essential for maintaining editorial standards. An editorial team can review, refine, and supplement AI-generated content to ensure accuracy, tone, and context.

Ensuring Ethical and Responsible AI

As AI increasingly takes on roles traditionally held by humans, ethical considerations become paramount. Creating AI Smart News involves addressing potential biases, transparency issues, and the responsible use of AI-generated content. It's crucial to implement guidelines that align with journalistic ethics and principles.

User Engagement and Feedback Loop

Launching AI Smart News is just the beginning. Continuous user engagement and feedback loops are essential for refining the AI's performance. User feedback can highlight areas where the AI might be falling short, enabling developers to make improvements.

Evolving with Technological Advancements

Technology never stands still, and AI Smart News should be prepared to evolve with the times. As newer versions of AI models emerge, integrating them into the platform can enhance its capabilities. Staying up-to-date with the latest advancements ensures that AI Smart News remains at the forefront of news delivery.

Conclusion

AI Smart News represents the synergy between artificial intelligence and journalism, revolutionizing how we consume news. By harnessing the power of ChatGPT-3, it delivers personalized, accurate, and timely information to users, fostering an informed and engaged global citizenry. The creation of AI Smart News requires a meticulous blend of data curation, AI training, technological integration, and ethical considerations. As this technology continues to evolve, the potential for reshaping the future of news consumption becomes increasingly promising. AI Smart News is not just a news portal; it's a testament to the transformative potential of AI in enhancing our understanding of the world.

Using AI in Content Management Systems (CMS)

Using AI in Content Management Systems (CMS) can enhance various aspects of content creation, management, and user experience. Here's a general guide on how to integrate AI into your CMS:

Content Creation and Generation:

Automated Content Creation: AI can assist in generating content, such as product descriptions, news articles, or blog posts. You can integrate AI models to create text based on specific prompts or templates.

Language Translation: Implement AI-powered language translation to automatically translate content into multiple languages, expanding your audience reach.

Content Summarization: Use AI to create concise summaries of lengthy articles or documents, helping users quickly grasp the main points.

Content Recommendations:

Personalized Content: Utilize AI algorithms to analyze user behavior and preferences to provide personalized content recommendations, improving user engagement.

Related Content: Implement AI-driven suggestions for related articles, products, or content based on the current user's context.
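
As a simple stand-in for an AI-driven recommender, related items can be ranked by word overlap with the current page. The sketch below uses Jaccard similarity over word sets. The function names and the three-character minimum word length are arbitrary choices for illustration, and a production system would more likely compare embeddings.

```python
import re


def tokens(text):
    """Lowercased word set, ignoring words of one or two characters."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 2}


def jaccard(a, b):
    """Share of distinct words that two texts have in common (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def related(current, candidates, top_n=3):
    """Return up to top_n candidates that share words with the current item."""
    ranked = sorted(candidates, key=lambda c: jaccard(current, c), reverse=True)
    return [c for c in ranked[:top_n] if jaccard(current, c) > 0]
```

Candidates with no words in common are dropped rather than shown as weak suggestions.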

Content Editing and Enhancement:

Grammar and Spell Check: Integrate AI-powered tools for real-time grammar and spell checking to enhance the quality of published content.

Style and Tone: Implement AI algorithms to ensure consistency in writing style and tone across different authors and sections.

SEO Optimization: Use AI to analyze and suggest improvements for optimizing content for search engines.

Image and Media Processing:

Image Recognition: Integrate AI to automatically tag and categorize images and videos, making it easier to manage and search for multimedia content.

Image Enhancement: Utilize AI to enhance the quality of images, adjust colors, or crop images to fit different layouts.

Alt Text Generation: AI can automatically generate alt text for images, improving accessibility for visually impaired users and SEO.

User Engagement and Interaction:

Chatbots and Virtual Assistants: Implement AI-powered chatbots or virtual assistants to provide real-time assistance to users, answer common queries, and guide them through the website.

Voice Search and Voice Assistants: Integrate AI-driven voice search capabilities to allow users to interact with your CMS using voice commands.

Data Analytics and Insights:

User Behavior Analysis: Use AI to analyze user interactions and behavior on your CMS, gaining insights into what content is most engaging and effective.

Predictive Analytics: Implement AI algorithms to predict future trends and user preferences based on historical data.

Security and Content Moderation:

Content Moderation: Utilize AI to automatically detect and moderate inappropriate or offensive content in user-generated comments or submissions.

Security: Implement AI-driven tools to identify and prevent potential security threats, such as malware or unauthorized access.

Performance Optimization:

Server Load Balancing: AI can be used to optimize server resources by intelligently distributing traffic and requests.

Caching and Content Delivery: Implement AI to manage caching strategies and optimize content delivery for better website performance.

When implementing AI in your CMS, consider the specific needs and goals of your website or application. Evaluate available AI tools, APIs, and platforms, and work with developers and AI experts to ensure a seamless integration that enhances user experience and content management efficiency.

ChatGPT is a Shiny-Gloss Coat That Every Business Must Apply

AI technologies have spread across continents in the blink of an eye. The underlying reason is how quickly businesses have adopted these fast-learning technologies. One of the most notable recent AI products is ChatGPT, which has millions of users. This natural language processing model can generate human-like responses to users’ questions.

Language models are not new: Google’s BERT, Microsoft’s Turing, and others came earlier. What set ChatGPT apart from its counterparts was its technical power and its precise, user-centric responses. ChatGPT gives dynamic responses, which makes it suitable for business applications.

For example, suppose you want to use ChatGPT in your business.

First question: why should I integrate ChatGPT rather than another NLP model?

ChatGPT offers cutting-edge performance, backed by its transformer architecture and strong results on various NLP benchmarks. This makes it a flexible tool that fits readily into a business’s applications.

ChatGPT does not rely on canned responses. Instead, it generates each response from the input prompt. Integrating ChatGPT is easy because open-source libraries, pre-trained models, and APIs are available. For these reasons, ChatGPT has emerged as a popular choice for NLP applications.

Which ChatGPT APIs are available for business integration?